Post Syndicated from Bruce Schneier original https://www.schneier.com/blog/archives/2023/08/political-milestones-for-ai.html

ChatGPT was released just nine months ago, and we are still learning how it will affect our daily lives, our careers, and even our systems of self-governance.

But when it comes to how AI may threaten our democracy, much of the public conversation lacks imagination. People talk about the danger of campaigns that attack opponents with fake images (or fake audio or video) because we already have decades of experience dealing with doctored images. We’re on the lookout for foreign governments that spread misinformation because we were traumatized by the 2016 US presidential election. And we worry that AI-generated opinions will swamp the political preferences of real people because we’ve seen political “astroturfing”—the use of fake online accounts to give the illusion of support for a policy—grow for decades.

Threats of this sort seem urgent and disturbing because they’re salient. We know what to look for, and we can easily imagine their effects.

The truth is, the future will be much more interesting. And even some of the most stupendous potential impacts of AI on politics won’t be all bad. We can draw some fairly straight lines between the current capabilities of AI tools and real-world outcomes that, by the standards of current public understanding, seem truly startling.

With this in mind, we propose six milestones that will herald a new era of democratic politics driven by AI. All feel achievable—perhaps not with today’s technology and levels of AI adoption, but very possibly in the near future.

Good benchmarks should be meaningful, representing significant outcomes that come with real-world consequences. They should be plausible; they must be realistically achievable in the foreseeable future. And they should be observable—we should be able to recognize when they’ve been achieved.

Worries about AI swaying an election will very likely fail the observability test. While election manipulation through the robotic promotion of a candidate’s or party’s interests is a legitimate threat, elections are massively complex. Just as the debate continues to rage over why and how Donald Trump won the presidency in 2016, we’re unlikely to be able to attribute a surprising electoral outcome to any particular AI intervention.

Thinking further into the future: Could an AI candidate ever be elected to office? In the world of speculative fiction, from The Twilight Zone to Black Mirror, there is growing interest in the possibility of an AI or technologically assisted, otherwise-not-traditionally-eligible candidate winning an election. In an era where deepfaked videos can misrepresent the views and actions of human candidates and human politicians can choose to be represented by AI avatars or even robots, it is certainly possible for an AI candidate to mimic the media presence of a politician. Virtual politicians have received votes in national elections, for example in Russia in 2017. But this doesn’t pass the plausibility test. The voting public and legal establishment are likely to accept more and more automation and assistance supported by AI, but the age of non-human elected officials is far off.

Let’s start with some milestones that are already on the cusp of reality. These are achievements that seem well within the technical scope of existing AI technologies and for which the groundwork has already been laid.

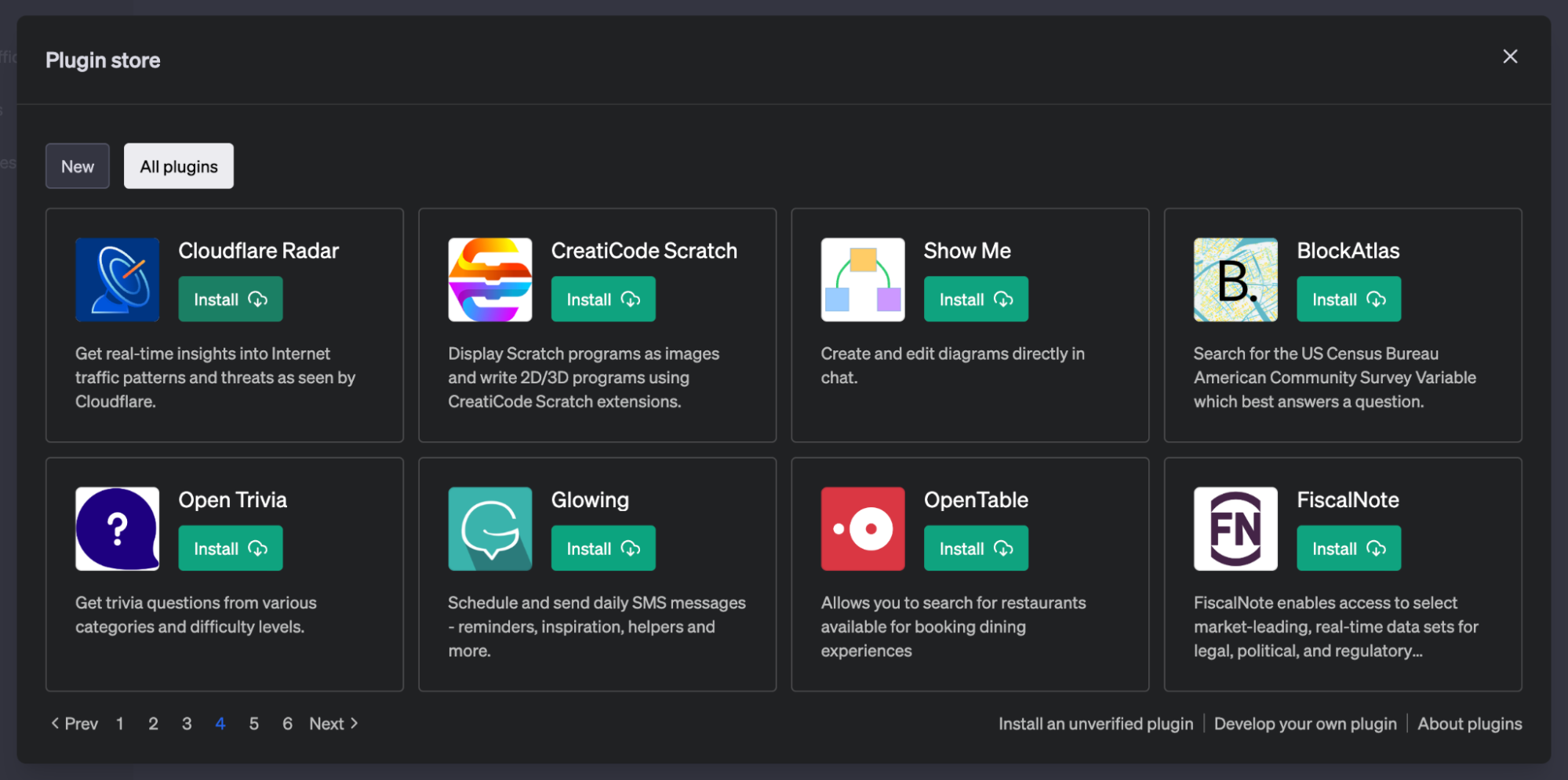

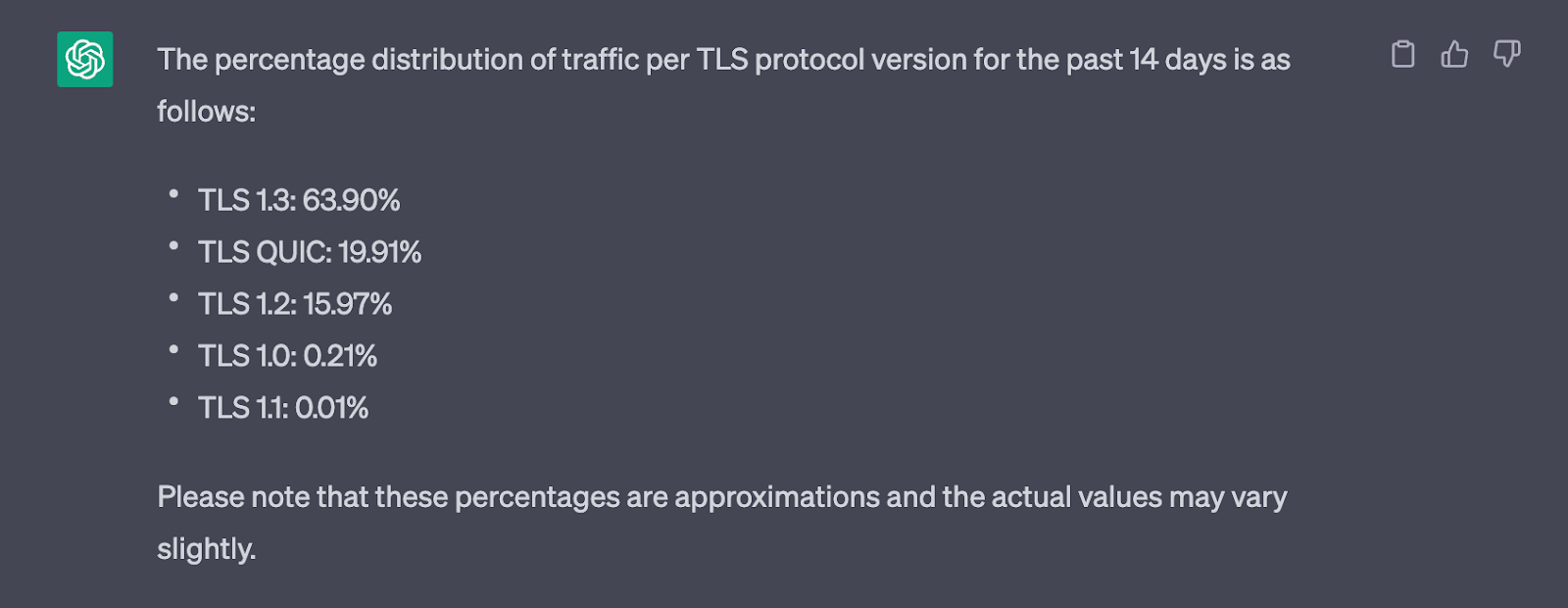

Milestone #1: The acceptance by a legislature or agency of a testimony or comment generated by, and submitted under the name of, an AI.

Arguably, we’ve already seen legislation drafted by AI, albeit under the direction of human users and introduced by human legislators. After some early examples of bills written by AIs were introduced in Massachusetts and the US House of Representatives, many major legislative bodies have had their “first bill written by AI,” “used ChatGPT to generate committee remarks,” or “first floor speech written by AI” events.

Many of these bills and speeches are more stunt than serious, and they have received more criticism than consideration. They are short, have trivial levels of policy substance, or were heavily edited or guided by human legislators (through highly specific prompts to large language model-based AI tools like ChatGPT).

The interesting milestone along these lines will be the acceptance of testimony on legislation, or a comment submitted to an agency, drafted entirely by AI. To be sure, a large fraction of all writing going forward will be assisted by—and will truly benefit from—AI assistive technologies. So to avoid making this milestone trivial, we have to add the second clause: “submitted under the name of the AI.”

What would make this benchmark significant is the submission under the AI’s own name; that is, the acceptance by a governing body of the AI as proffering a legitimate perspective in public debate. Regardless of the public fervor over AI, this one won’t take long. The New York Times has published a letter under the name of ChatGPT (responding to an opinion piece we wrote), and legislators are already turning to AI to write high-profile opening remarks at committee hearings.

Milestone #2: The adoption of the first novel AI-written legislative amendment to a bill.

Moving beyond testimony, there is an immediate pathway for AI-generated policies to become law: microlegislation. This involves making tweaks to existing laws or bills that are tuned to serve some particular interest. It is a natural starting point for AI because it’s tightly scoped, involving small changes guided by a clear directive associated with a well-defined purpose.

By design, microlegislation is often implemented surreptitiously. It may even be filed anonymously within a deluge of other amendments to obscure its intended beneficiary. For that reason, microlegislation can often be bad for society, and it is ripe for exploitation by generative AI that would otherwise be subject to heavy scrutiny from a polity on guard for risks posed by AI.

Milestone #3: AI-generated political messaging outscores campaign consultant recommendations in poll testing.

Some of the most important near-term implications of AI for politics will happen largely behind closed doors. Like everyone else, political campaigners and pollsters will turn to AI to help with their jobs. We’re already seeing campaigners turn to AI-generated images to manufacture social content and pollsters simulate results using AI-generated respondents.

The next step in this evolution is political messaging developed by AI. A mainstay of the campaigner’s toolbox today is the message testing survey, where a few alternate formulations of a position are written down and tested with audiences to see which will generate more attention and a more positive response. Just as an experienced political pollster can anticipate effective messaging strategies pretty well based on observations from past campaigns and their impression of the state of the public debate, so can an AI trained on reams of public discourse, campaign rhetoric, and political reporting.

With these near-term milestones firmly in sight, let’s look further to some truly revolutionary possibilities. While these concepts may have seemed absurd just a year ago, they are increasingly conceivable with either current or near-future technologies.

Milestone #4: AI creates a political party with its own platform, attracting human candidates who win elections.

While an AI is unlikely to be allowed to run for and hold office, it is plausible that one may be able to found a political party. An AI could generate a political platform calculated to attract the interest of some cross-section of the public and, acting independently or through a human intermediary (hired help, like a political consultant or law firm), could register formally as a political party. It could collect signatures to win a place on ballots and attract human candidates to run for office under its banner.

A big step in this direction has already been taken, via the campaign of the Danish Synthetic Party in 2022. An artist collective in Denmark created an AI chatbot to interact with human members of its community on Discord, exploring political ideology in conversation with them and on the basis of an analysis of historical party platforms in the country. All this happened with earlier generations of general purpose AI, not current systems like ChatGPT. However, the party failed to receive enough signatures to earn a spot on the ballot, and therefore did not win parliamentary representation.

Future AI-led efforts may succeed. One could imagine that a generative AI with skills at or beyond the level of today’s leading technologies could formulate a set of policy positions targeted to build support among people of a specific demographic, or even an effective consensus platform capable of attracting broad-based support. Particularly in a European-style multiparty system, we can imagine a new party with a strong news hook (an AI at its core) winning attention and votes.

Milestone #5: AI autonomously generates profit and makes political campaign contributions.

Let’s turn next to the essential capability of modern politics: fundraising. “An entity capable of directing contributions to a campaign fund” might be a realpolitik definition of a political actor, and AI is potentially capable of this.

Like a human, an AI could conceivably generate contributions to a political campaign in a variety of ways. It could take a seed investment from a human controlling the AI and invest it to yield a return. It could start a business that generates revenue. There is growing interest and experimentation in auto-hustling: AI agents that set about autonomously growing businesses or otherwise generating profit. While ChatGPT-generated businesses may not yet have taken the world by storm, this possibility is in the same spirit as the algorithmic agents powering modern high-speed trading and so-called autonomous finance capabilities that are already helping to automate business and financial decisions.

Or, like most political entrepreneurs, AI could generate political messaging to convince humans to spend their own money on a defined campaign or cause. The AI would likely need to have some humans in the loop and to register its activities with the government (in the US context, with those humans acting as officers of a 501(c)(4) or political action committee).

Milestone #6: AI achieves a coordinated policy outcome across multiple jurisdictions.

Lastly, we come to the most meaningful of impacts: achieving outcomes in public policy. Even if AI cannot—now or in the future—be said to have its own desires or preferences, it could be programmed by humans to have a goal, such as lowering taxes or relieving a market regulation.

An AI has many of the same tools humans use to achieve these ends. It may advocate, formulating messaging and promoting ideas through digital channels like social media posts and videos. It may lobby, directing ideas and influence to key policymakers, even writing legislation. It may spend; see milestone #5.

The “multiple jurisdictions” piece is key to this milestone. A single law passed may be reasonably attributed to myriad factors: a charismatic champion, a political movement, a change in circumstances. The influence of any one actor, such as an AI, will be more demonstrable if it is successful simultaneously in many different places. And the digital scalability of AI gives it a special advantage in achieving these kinds of coordinated outcomes.

The greatest challenge to most of these milestones is their observability: will we know it when we see it? The first campaign consultant whose ideas lose out to an AI may not be eager to report that fact. Neither will the campaign. Regarding fundraising, it’s hard enough for us to track down the human actors who are responsible for the “dark money” contributions controlling much of modern political finance; will we know if a future dominant force in fundraising for political action committees is an AI?

We’re likely to observe some of these milestones indirectly. At some point, perhaps politicians’ dollars will start migrating en masse to AI-based campaign consultancies and, eventually, we may realize that political movements sweeping across states or countries have been AI-assisted.

While the progression of technology is often unsettling, we need not fear these milestones. A new political platform that wins public support is itself a neutral proposition; it may lead to good or bad policy outcomes. Likewise, a successful policy program may or may not be beneficial to one group of constituents or another.

We think the six milestones outlined here are among the most viable and meaningful upcoming interactions between AI and democracy, but they are hardly the only scenarios to consider. The point is that our AI-driven political future will involve far more than deepfaked campaign ads and manufactured letter-writing campaigns. We should all be thinking more creatively about what comes next and be vigilant in steering our politics toward the best possible ends, no matter their means.

This essay was written with Nathan Sanders, and previously appeared in MIT Technology Review.