Post Syndicated from Rita Kozlov original https://blog.cloudflare.com/welcome-to-developer-week-2024

It’s time to ship. For us (that’s what Innovation Weeks are all about!), and also for our developers.

Shipping itself is always fun, but getting there is not always easy. Bringing something from idea to life requires many stars to align. That’s what this week is all about — helping developers, including the two million developers already building on our platform, bring their ideas to life.

The full-stack cloud

Building applications requires assembling many different components.

The frontend, the face of the application, must be intuitive, responsive, and visually appealing to engage users effectively. Behind the scenes, you need a backend to handle data processing, storage, and retrieval, ensuring smooth functionality and performance. On top of all that, in the past year AI has entered the chat, so to speak, and increasingly every application requires an element of AI, making it a crucial part of the stack.

The job of a good platform is to provide all these components, and any others you will need, to you, the developer.

There’s nothing more frustrating than coming home from the grocery store and realizing you forgot an ingredient. Realizing a platform is missing a major component or piece of functionality is no different.

We view providing the tooling that developers need as a critical part of our job as a platform, which is why with every Developer Week, we make it our mission to provide you with more and more pieces you may need. This week is no different — you can expect us to announce more tools and primitives from the frontend to backend to AI.

However, our job doesn’t stop there. If a good platform provides the components, a great platform goes a step further than that.

The job of a great platform is not only to provide the components, but also to make sure they play well with each other in a way that makes your job as a developer easier. Our vision for the developer platform is exactly that: to anticipate not just the tools you need, but also how they work with each other and how they integrate into your development flow.

This week, you will see announcements and deep dives that expound on our vision for an integrated platform: pulling back the curtain on the way we expose services in Workers through bindings for an integrated developer experience, talking about our vision for a unified data platform, updating you on framework support, and more.

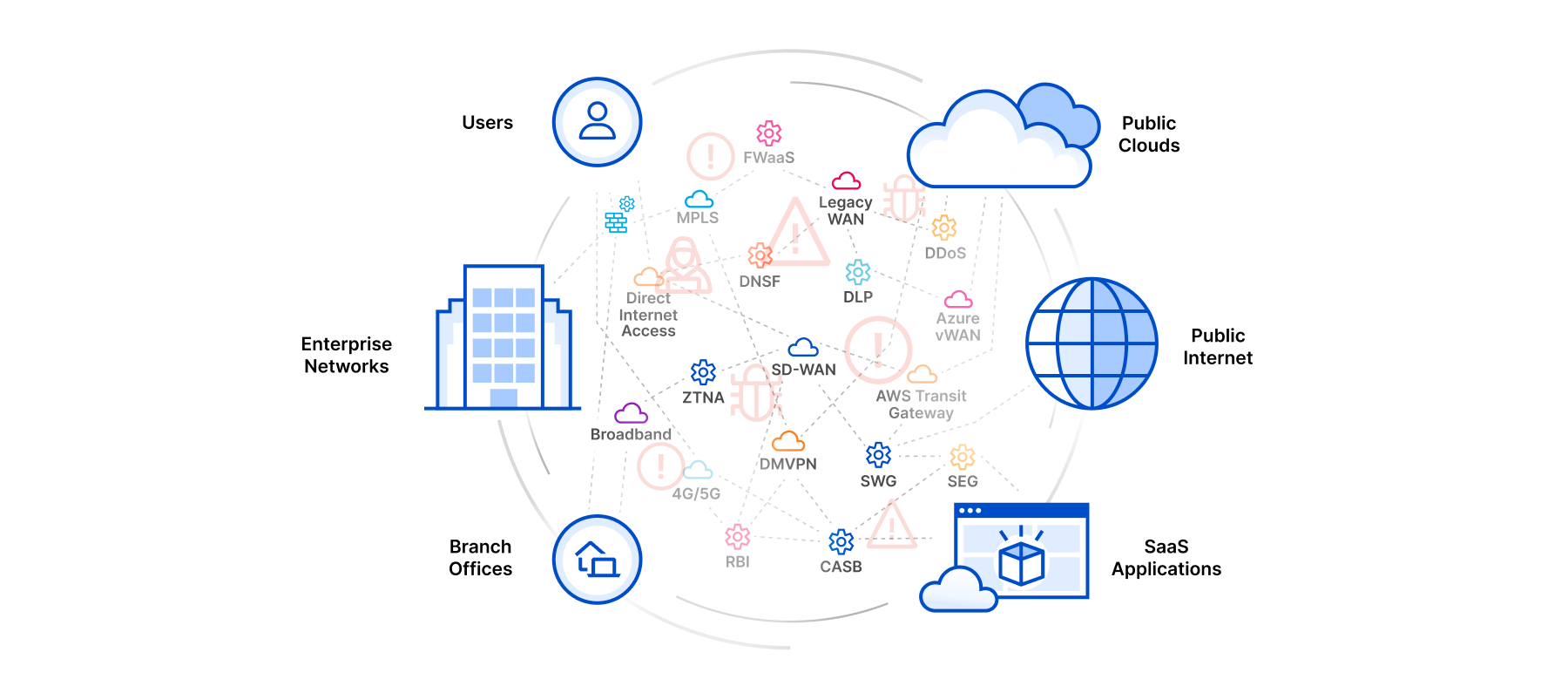

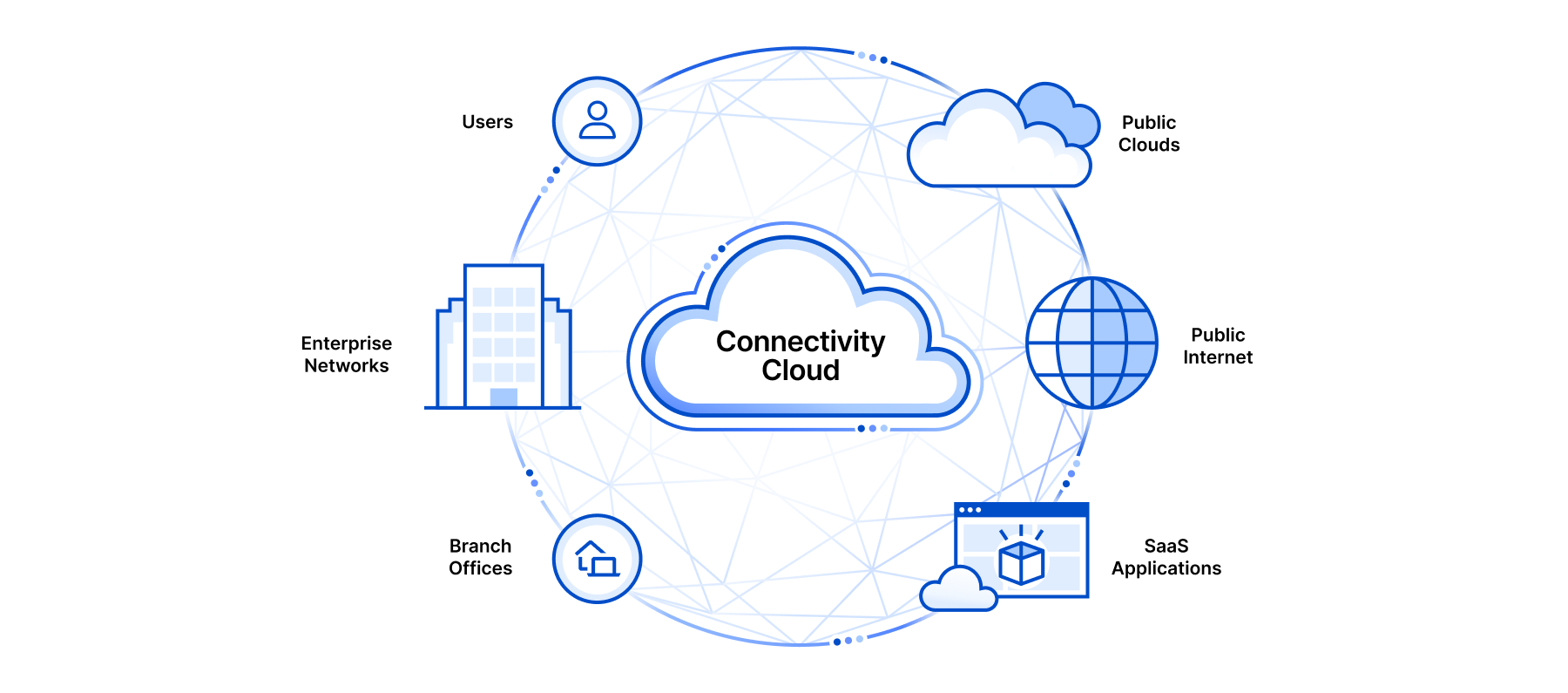

The connectivity cloud

While we’re excited for you to build on us as much as possible, we also realize that development projects are rarely greenfield. If you’ve been at this for a long time, chances are a large portion of your application already lives somewhere, whether on another cloud, or on-prem.

That’s why we’re constantly making it easier for you to connect to existing infrastructure or other providers, and working hard to make sure you can still reap the benefits of building on Cloudflare by making your application feel fast and global, regardless of where your backend is.

And vice versa, if your data is on us, but you need to access it from other providers, it’s not our job to keep it hostage in a captivity cloud by charging a tariff for egress.

The experimentation cloud

Before you start assembling components, or even coming up with a plan or a spec for it, there’s an important but overlooked step to the development process — experimentation.

Experimentation can take many forms. It can mean prototyping an MVP before you spend months developing a product or a feature. If you’ve found yourself rewriting your entire personal website just to try out a new tool or framework, that’s also experimentation.

It’s easy to overlook experimentation as a part of the process, but innovation doesn’t happen without it, which is why it’s something we always want to encourage and support as a part of our platform.

That’s why offering a generous free tier is something that’s been a part of our DNA since the very beginning, and something you can expect to forever be a staple of our platform.

The demo to production cloud

Alright, you’ve got all the tools you need, you’ve had a chance to experiment, and at some point… it’s time to ship.

Shipping is exciting, but shipping is also vulnerable and scary. You’re exposing the thing you’ve been working hard on to the world to criticize. You’re exposing your code to a world of untested edge cases and abuse. You’re exposing your colleagues who are on call to the possibility of getting paged at 1 AM due to the code you released.

Of course, not shipping is the wrong answer.

The right answer is having a platform that supports you and holds your hand through the scary parts. This means a platform that can seamlessly scale from zero to sixty. A platform that gives you the tools to test your code and release it gradually to the world, to help you gain confidence. Or a platform that provides the observability you need when you are trying to figure out what’s gone wrong at 1 AM.

That’s why this week, you can look forward to some announcements from us that we hope will help you sleep better.

The demo to production cloud — for inference

We talked about some of the scary parts of deploying to production, and while all these apply to AI as well, building AI applications today, especially in production, presents its own unique set of challenges.

Almost every day you see a new AI demo go viral — from Sora to Devin, it’s easy and inspiring to imagine our world completely changed by AI. But if you’ve started actually playing with and implementing AI use cases, you know the harsh reality of making AI truly work. It requires a lot of trial and error to get the results you want — choosing a model, RAG, fine-tuning…

And that’s before you even go to production.

That’s when you encounter the real challenge — provisioning enough capacity to stay up, without over-provisioning and overpaying. This is the exact challenge we set out to solve from the early days of Workers — helping developers not worry about infrastructure, just the application they want to build.

With the recent rise of AI, we’ve noticed many of these challenges return. Thankfully, managing loads and infrastructure is what we’re good at here at Cloudflare. It’s what we’ve had practice at for over a decade of running our platform. It’s all just one giant scheduler.

Our vision for our AI platform is to help solve the exact challenges in deploying AI workloads that we’ve been helping developers solve for, well, any other type of workload. Whether you’re deploying directly on us with Workers AI, or on another provider, we’ll help provide the tools you need to access the models you need, without overpaying for idle compute.

Don’t worry, it’s all going to be fine.

So what can you expect this week?

No one in my family can keep a secret: my sister cannot get me a birthday present without spoiling it the week before. For me, the anticipation and the look of surprise are part of the fun! My coworkers seem to have clued in to this.

While I won’t give away too much, we already teased a few things last week (you can find some hints here, here and here), as well as in this blog post if you read closely (because as it turns out, I, too, can’t help myself).

See you tomorrow!

Our series of announcements starts on Monday, April 1st. We look forward to sharing them with you here on our blog, and discussing them with you on Discord and X.