Post Syndicated from Ulysses Kee original https://blog.cloudflare.com/internship-experience-software-development-intern/

Before we dive into my experience interning at Cloudflare, let me quickly introduce myself. I am currently a master’s student at the National University of Singapore (NUS) studying Computer Science. I am passionate about building software that improves people’s lives and making the Internet a better place for everyone. Back in December 2021, I joined Cloudflare as a Software Development Intern on the Partnerships team to help improve the experience that Partners have when using the platform. I was extremely excited about this opportunity and jumped at the prospect of working on serverless technology to build viable tools for our partners and customers. In this blog post, I detail my experience working at Cloudflare and the many highlights of my internship.

Interview Experience

The process began when I was taking a software engineering module at NUS, where one of my classmates shared a job post for an internship at Cloudflare. I already knew about Cloudflare’s DNS service and was excited to learn more about the internship opportunity because I really resonated with the company’s mission to help build a better Internet.

I knew right away that this would be a great opportunity and submitted my application. Soon after, I heard back from the recruiting team and went through the interview process. The entire process was extremely accommodating and is definitely the most enjoyable interview experience I have had. Throughout, I was constantly asked about the kind of things I would like to work on and the relevance of the work that I would be doing. This thorough communication carried on throughout the internship and really was a cornerstone of my experience interning at Cloudflare.

My Internship

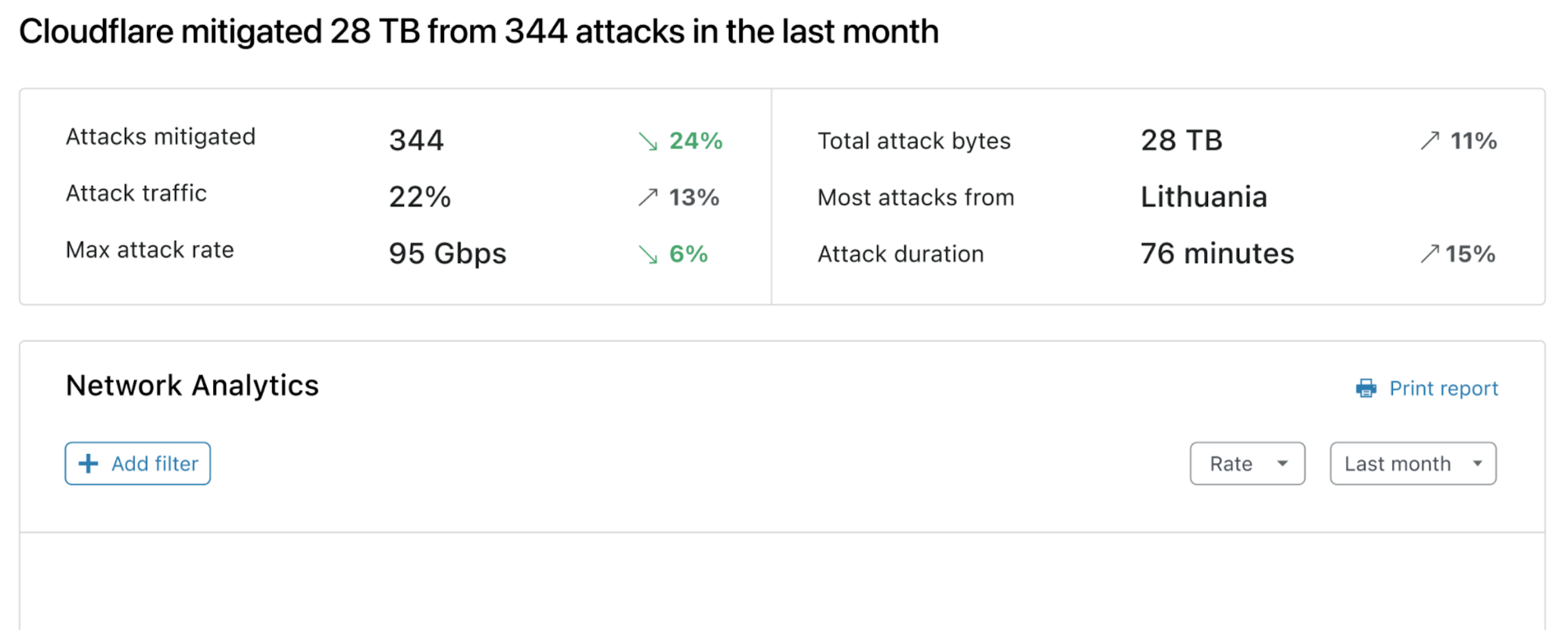

My internship began with onboarding and training. Afterwards, I had discussions with my mentor, Ayush Verma, on the projects we aimed to complete during the internship and the order of objectives. The main issue we wanted to address was the manual process that our internal teams and partners go through when they want to duplicate the configuration settings on a zone, or when they want to compare one zone to other zones to ensure that there are no misconfigurations. As you can imagine, with the number of different configurations offered on the Cloudflare dashboard for customers, it could take significant time to copy over every setting and rule manually from one zone to another. Additionally, this process, when done manually, carries a risk of misconfiguration due to human error. Furthermore, as more and more customers onboard different zones onto Cloudflare, there needs to be a more automated way for them to set up these configurations.

Initially, we discussed using Terraform, as Cloudflare already supports Terraform automation. However, this approach would only cater to customers and users that have more technical resources and, in true Cloudflare spirit, we wanted to keep it simple enough to be used by anyone and everyone. Therefore, we decided to leverage the publicly available Cloudflare APIs and create a browser-based application that interacts with these APIs to display configurations and make changes easily from a simple UI.

With the end goal of simplifying the experience for our partners and customers in duplicating zone configurations, we decided to build a Zone Copier web application built solely on Cloudflare Workers. This tool would, at the click of a button, automatically copy over every setting that can be copied from one zone to another, significantly reducing the amount of time and effort required to make the changes.
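To make "every setting that can be copied" a little more concrete, here is a minimal sketch of how a copy step might split a source zone's settings into those to copy and those to skip. It assumes each setting object carries an `editable` flag, as zone settings returned by the API generally do; the `ZoneSetting`, `CopyPlan`, and `buildCopyPlan` names are hypothetical and are not taken from the actual Zone Copier.

```typescript
// Hypothetical, simplified shape of a zone setting (an assumption for this sketch).
interface ZoneSetting {
  id: string;
  value: unknown;
  editable: boolean;
}

// Hypothetical result type: what to write to the target zone, and what was left out.
interface CopyPlan {
  toCopy: { id: string; value: unknown }[];
  skipped: string[];
}

// Split the source settings into copyable (editable) and skipped (read-only) ones.
function buildCopyPlan(source: ZoneSetting[]): CopyPlan {
  const plan: CopyPlan = { toCopy: [], skipped: [] };
  for (const setting of source) {
    if (setting.editable) {
      plan.toCopy.push({ id: setting.id, value: setting.value });
    } else {
      plan.skipped.push(setting.id);
    }
  }
  return plan;
}
```

Reporting the skipped settings alongside the copied ones lets a user see at a glance what still needs manual attention in the dashboard.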

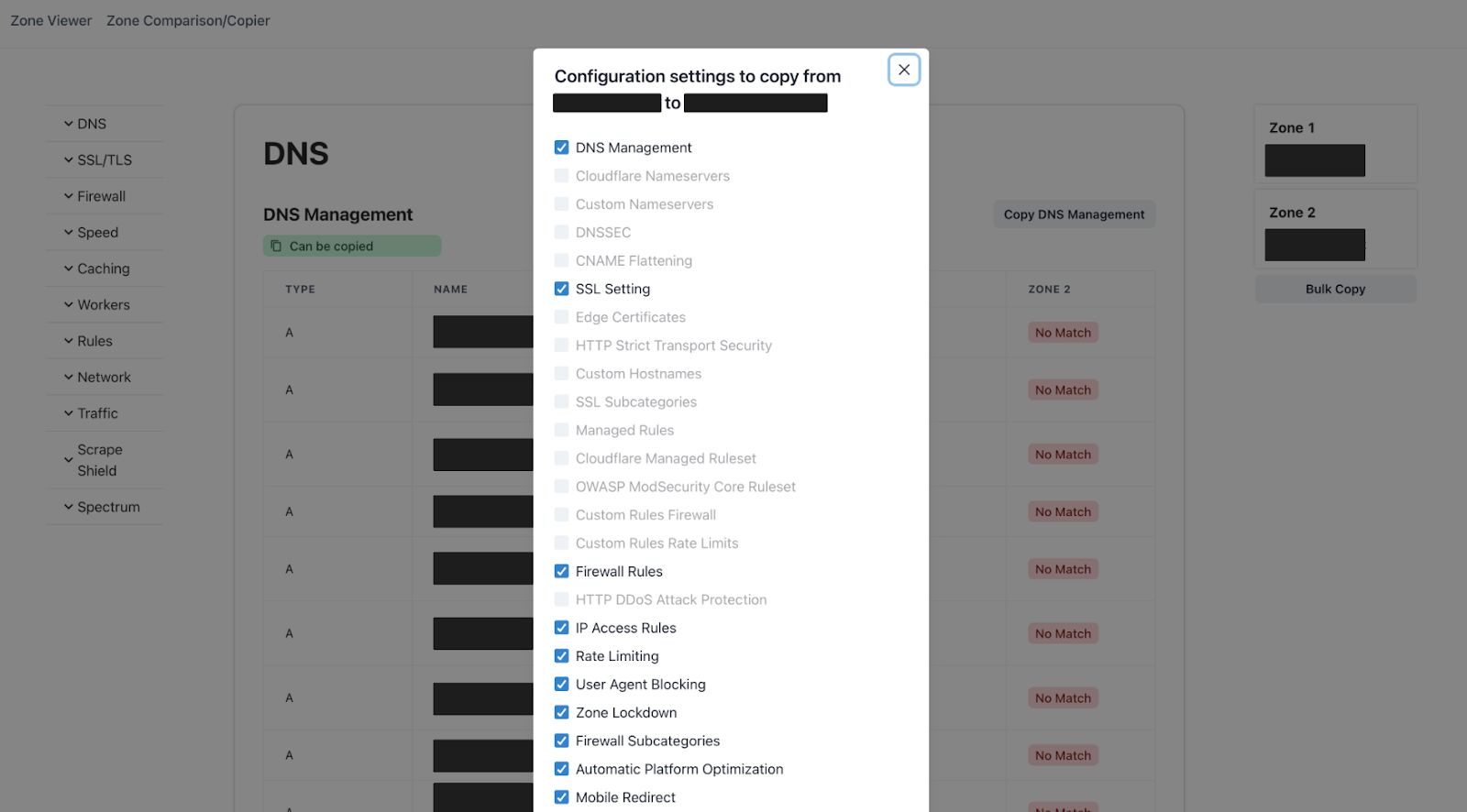

Alongside the Zone Copier, we would have some auxiliary tools, namely a Zone Viewer and a Zone Comparison, where a customer can easily get a full view of their configurations on a single webpage and compare the different zones that they use. These applications improve upon the existing methods through which Cloudflare users can view their zone configurations, and allow for direct comparison between different zones.
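As an illustration of what a zone comparison boils down to, the sketch below diffs two zones' settings and reports only the entries that differ. It assumes each zone's configuration has already been flattened into a map from setting id to value; the `ZoneSettings`, `SettingDiff`, and `compareZones` names, as well as the sample setting ids, are hypothetical and used only for illustration.

```typescript
// Hypothetical shape: a flat map from setting id to its value.
type ZoneSettings = Record<string, unknown>;

interface SettingDiff {
  id: string;
  left: unknown;  // value in the first zone (undefined if the setting is absent)
  right: unknown; // value in the second zone (undefined if the setting is absent)
}

// Compare serialized values so that nested objects are compared by content.
// Caveat: JSON.stringify is sensitive to key order, which is acceptable for a sketch.
function sameValue(a: unknown, b: unknown): boolean {
  return JSON.stringify(a) === JSON.stringify(b);
}

// Return one entry per setting whose value differs between the two zones,
// sorted by setting id so the output is stable.
function compareZones(left: ZoneSettings, right: ZoneSettings): SettingDiff[] {
  const ids = Object.keys({ ...left, ...right }).sort();
  return ids
    .filter((id) => !sameValue(left[id], right[id]))
    .map((id) => ({ id, left: left[id], right: right[id] }));
}

// Usage: only the differing settings are reported.
const example = compareZones(
  { ssl: "full", minify: { js: "on" } },
  { ssl: "strict", minify: { js: "on" } },
);
console.log(example.map((d) => d.id));
```

A setting that exists in only one zone shows up with `undefined` on the other side, which is one way to surface a missing configuration as well as a mismatched one.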

Importantly, these applications are not to replace the Cloudflare Dashboard, but to complement it instead – for deeper dives into a single particular configuration setting, the Cloudflare Dashboard remains the way to go.

To begin building the web application, I spent the first few weeks diving into the publicly available APIs offered by Cloudflare as part of the v4 API to verify the output of each endpoint and the type of data returned in response to a request. This took much longer than expected, as certain endpoints return a different default response when a zone has an empty setting – for example, no Firewall Rules created – while others use a nested structure for their response. These different potential responses had to be examined so that when the web application calls the respective API endpoint, the response is handled appropriately. This process was quite manual, as each endpoint had to be verified individually to ensure the output would work seamlessly with the application.
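One way to cope with these differing response shapes is to normalize every response into the same form before the UI touches it. The sketch below is a minimal example under stated assumptions: it relies on the `success`, `errors`, and `result` fields of the v4 API response envelope, while the `normalizeResult` and `isRecord` helpers and the optional `nestedKey` parameter are hypothetical and are not taken from the actual application.

```typescript
// Subset of the v4 API response envelope used by this sketch.
interface ApiEnvelope {
  success: boolean;
  errors: { code: number; message: string }[];
  result: unknown;
}

// True for plain objects (not null, not arrays).
function isRecord(v: unknown): v is Record<string, unknown> {
  return typeof v === "object" && v !== null && !Array.isArray(v);
}

// Normalize a response into a (possibly empty) list of objects.
// `nestedKey` is a hypothetical way to name the field that holds the data
// when an endpoint wraps its payload in a nested structure.
function normalizeResult(envelope: ApiEnvelope, nestedKey?: string): Record<string, unknown>[] {
  if (!envelope.success) {
    const detail = envelope.errors.map((e) => `${e.code}: ${e.message}`).join("; ");
    throw new Error(`API request failed: ${detail}`);
  }
  let result = envelope.result;
  if (nestedKey !== undefined && isRecord(result)) {
    result = result[nestedKey]; // unwrap the nested payload
  }
  if (result === null || result === undefined) return []; // empty setting
  if (Array.isArray(result)) return result.filter(isRecord); // list of items
  if (isRecord(result)) return [result]; // single object
  return [];
}
```

With a helper like this, a zone without any rules and a zone with many rules both reach the rendering code as an array, so the empty case needs no special handling there.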

Once I completed my research, I was able to start designing the web application. Building the web application was a very interesting experience as the stack rested solely on Workers, a serverless application platform. My prior experience building web applications involved deploying a server built using Express and Node.js, whereas for my internship project, I relied completely on a backend built using the itty-router library on Workers to interface with the publicly available Cloudflare APIs. I found this extremely exciting, as building a serverless application required less overhead than setting up a server and deploying it, and Workers itself has many other benefits such as zero cold starts. This introduction to serverless technology and my experience deep-diving into the capabilities of Workers has really opened my eyes to the possibilities that Workers as a platform can offer. With Workers, you can deploy any application on Cloudflare’s global network like I did!

For the frontend of the web application, I used React and the Chakra-UI library to build the user interface on which the Zone Viewer, Zone Comparison, and Zone Copier are based. The routing between different pages was done using React Router and the application is deployed directly through Workers.

Here is a screenshot of the application:

Presenting the prototype application

As developers will know, the best way to obtain feedback on a tool that you’re building is to have your customers use it directly and tell you what they think of it and what features they want built on top of it. Therefore, once we had a prototype version of the web application for the Zone Viewer and Zone Comparison complete, we presented the application to the Solutions Engineering team to hear their thoughts on the impact the tool would have on their work and the additional features they would like to see. I found this process very enriching, as they collectively mentioned how impactful the application would be for their work and the value this project adds for them.

Some interesting feedback and feature requests I received were:

- The Zone Copier would definitely be very useful for our partners who have to replicate the configuration of one zone to another regularly, and it’s definitely going to help make sure there are fewer human errors in the process of configuring the setups.

- Besides duplicating configurations from zone-to-zone, could we use this to replicate the configurations from a best-in-class setup for different use cases and allow partners to deploy this with a few clicks?

- Can we use this tool to generate quarterly reports?

- The Zone Viewer would be very helpful for us when we produce documentation on a particular zone’s configuration as part of a POC report.

- The Zone Viewer will also give us much deeper insight to better understand the current zone configurations and provide recommendations to improve them.

It was also a very cool experience speaking to the broad Solutions Engineering team as I found that many were very technically inclined and had many valid suggestions for improving the architecture and development of the applications. A special thanks to Edwin Wong for setting up the sharing session with the internal team, and many thanks to Xin Meng, AQ Jiao, Yonggil Choi, Steve Molloy, Kyouhei Hayama, Claire Lim and Jamal Boutkabout for their great insight and suggestions!

Impact of Cloudflare outside of work

While Cloudflare is known for its impeccable transparency throughout the company, and the stellar products it provides in helping make the Internet better, I wanted to take this opportunity to talk about the company’s other endeavors too.

Cloudflare is part of Pledge 1%, where the company dedicates 1% of its products and 1% of its employees’ time to give back to local communities as well as all the communities we support online around the world.

I took part in one of these activities, where we spent a morning cleaning up parts of the East Coast Park beach by picking up trash and litter that had been left behind by other park users. Here’s a picture of us from that morning:

From day one, I have been thoroughly impressed by Cloudflare’s commitment to its culture and the effort everyone at Cloudflare puts in to make the company a great place to work and have a positive impact on the surrounding community.

In addition to giving back to the community, other aspects of company culture include having a good team spirit and a safe working environment where you feel appreciated and taken care of. At Cloudflare, I have found that everyone is very understanding of work commitments. I faced a few challenges during the internship where I had to spend additional time on university-related projects and work, and my manager was always very supportive and understanding whenever I needed additional time to complete parts of the internship project.

Concluding takeaways

My experience interning at Cloudflare has been extremely positive. I have seen firsthand how transparent the company is with not only its employees but also its customers, and it truly is a great place to work. Cloudflare’s collaborative culture gave me access to members of different teams, whose thoughts and assistance helped me with issues that I faced from time to time. I would not have been able to produce an impactful project without the help of the brilliant and motivated people I worked with over the course of the internship, and I am truly grateful for such a rewarding experience.

We are getting ready to open intern roles for this coming Fall, so we encourage you to visit our careers page frequently, to be up-to-date on all the opportunities we have within our teams.