Post Syndicated from Nevi Shah original https://blog.cloudflare.com/pages-function-goes-ga/

Before we launched Pages back in April 2021, we knew it would be the start of something magical – an experience that felt “just right”. We envisioned an experience so simple yet so smooth that any developer could ship a website in seconds and add more to it by using the rest of our Cloudflare ecosystem.

A few months later, when we announced that Pages was a full stack platform in November 2021, that vision became a reality. Creating a development platform for just static sites was not the end of our Pages story, and with Cloudflare Workers already a part of our ecosystem, we knew we were sitting on untapped potential. With the introduction of Pages Functions, we empowered developers to take any static site and easily add in dynamic content with the power of Cloudflare Workers.

In the last year since Functions has been in open beta, we dove into an exploration on what kinds of full stack capabilities developers are looking for on their projects – and set out to fine tune the Functions experience into what it is today.

We’re thrilled to announce that Pages Functions is now generally available!

Functions recap

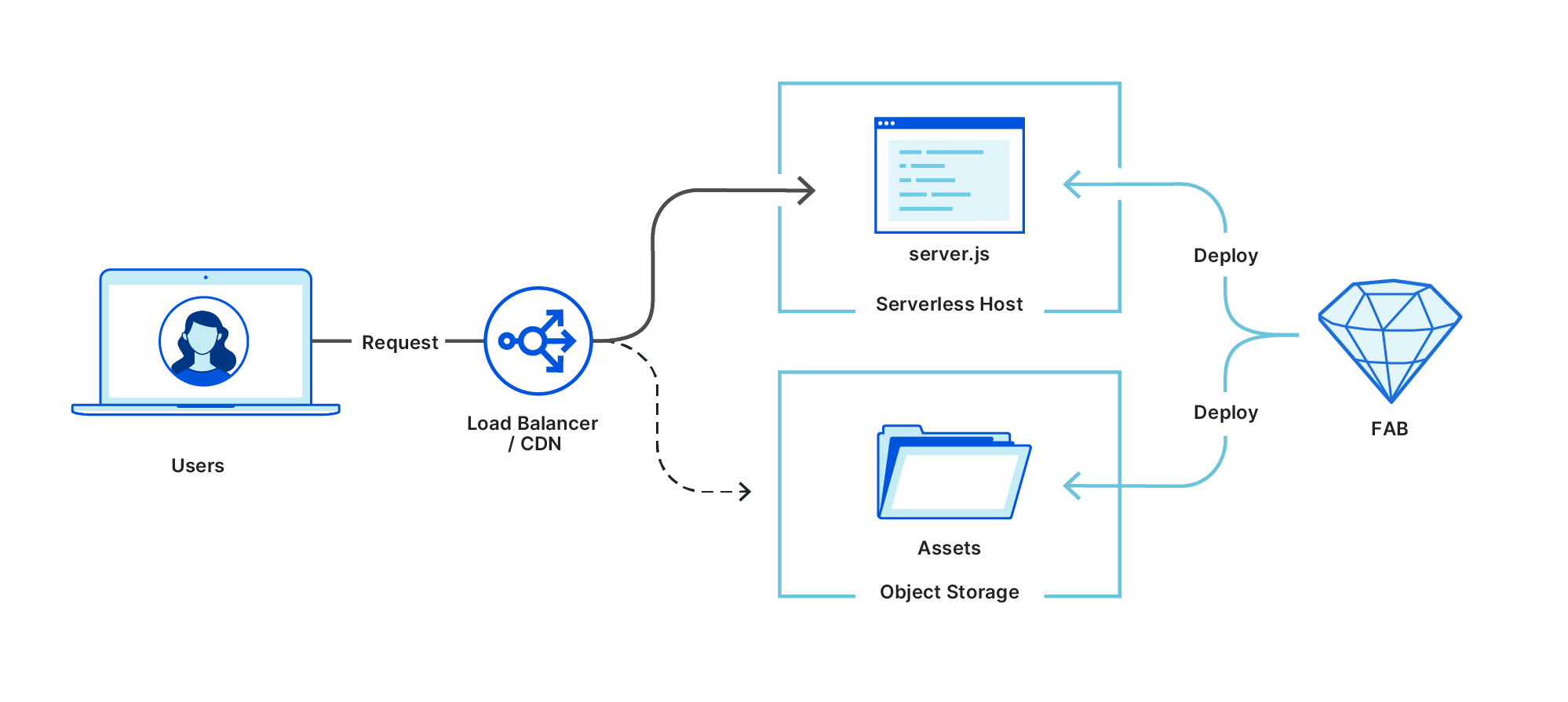

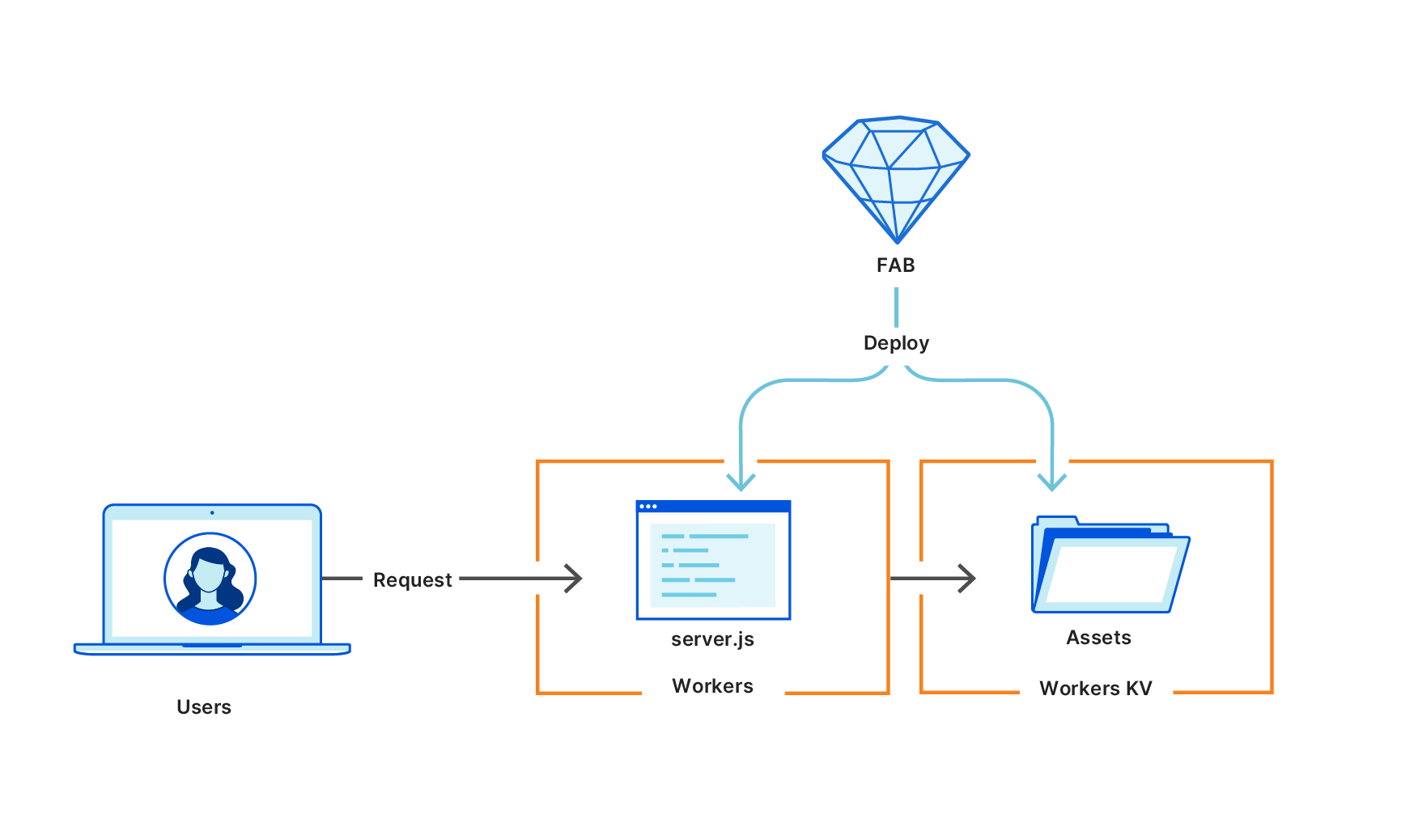

Though called “Functions” in the context of Pages, these functions running on our Cloudflare network are Cloudflare Workers in “disguise”. Pages harnesses the power and scalability of Workers and specializes them to align with the Pages experience our users know and love.

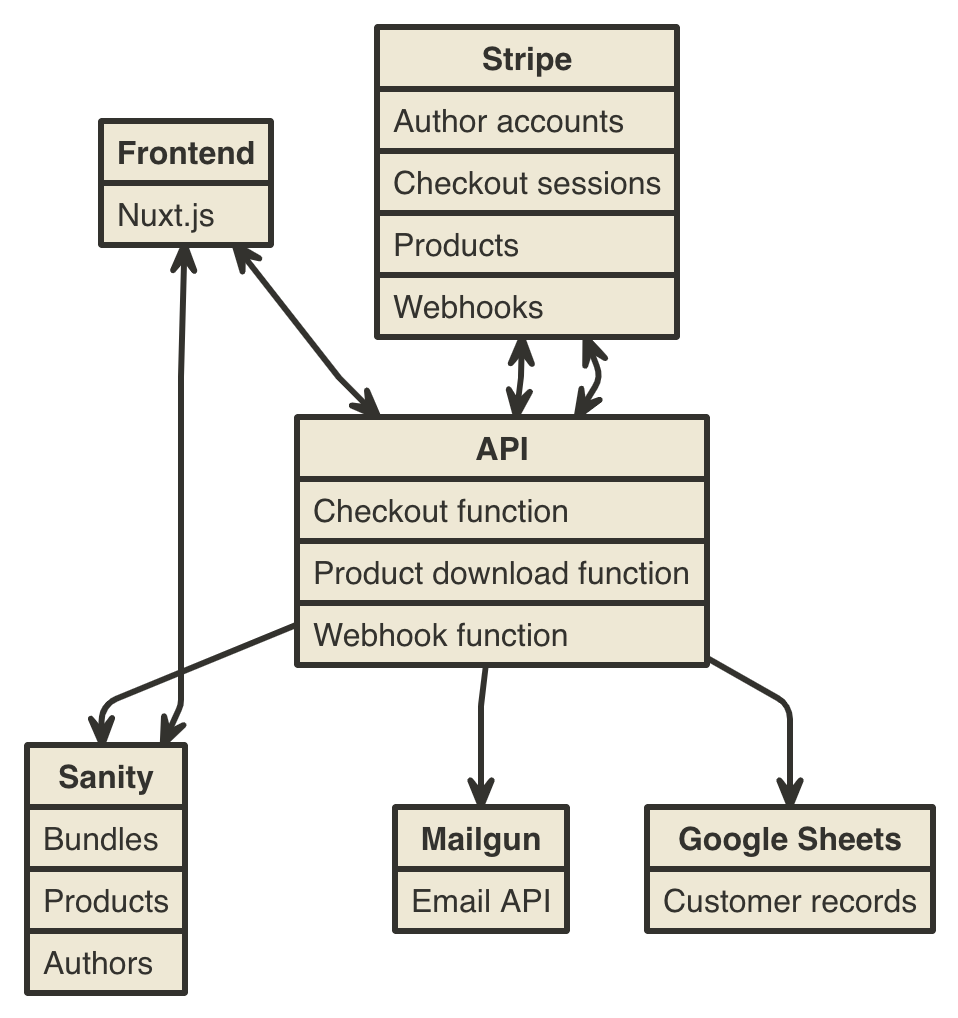

With Functions you can dream up the possibilities of dynamic functionality to add to your site – integrate with storage solutions, connect to third party services, use server side rendering with your favorite full stack frameworks and more. As Pages Functions opens its doors to production traffic, let’s explore some of the exciting features we’ve improved and added on this release.

The experience

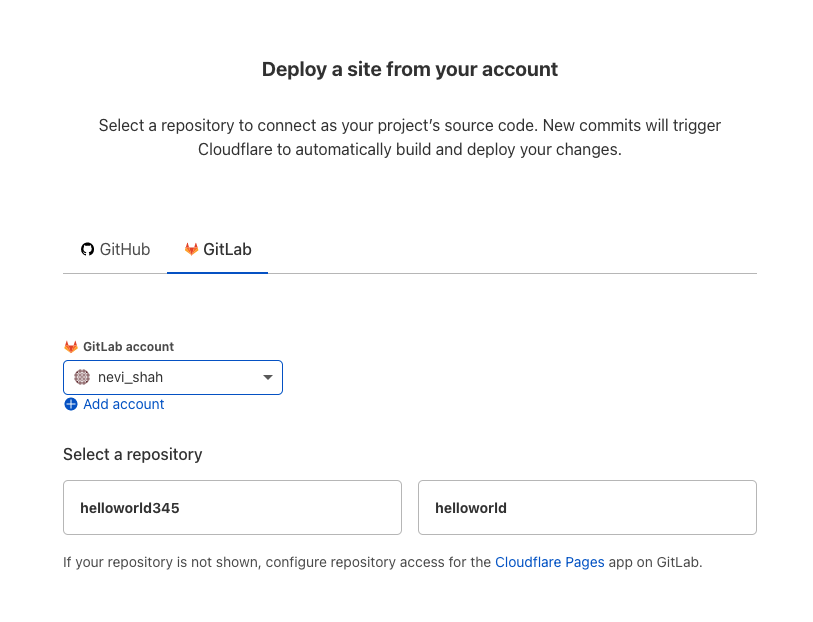

Deploy with Git

Love to code? We’ll handle the infrastructure, and leave you to it.

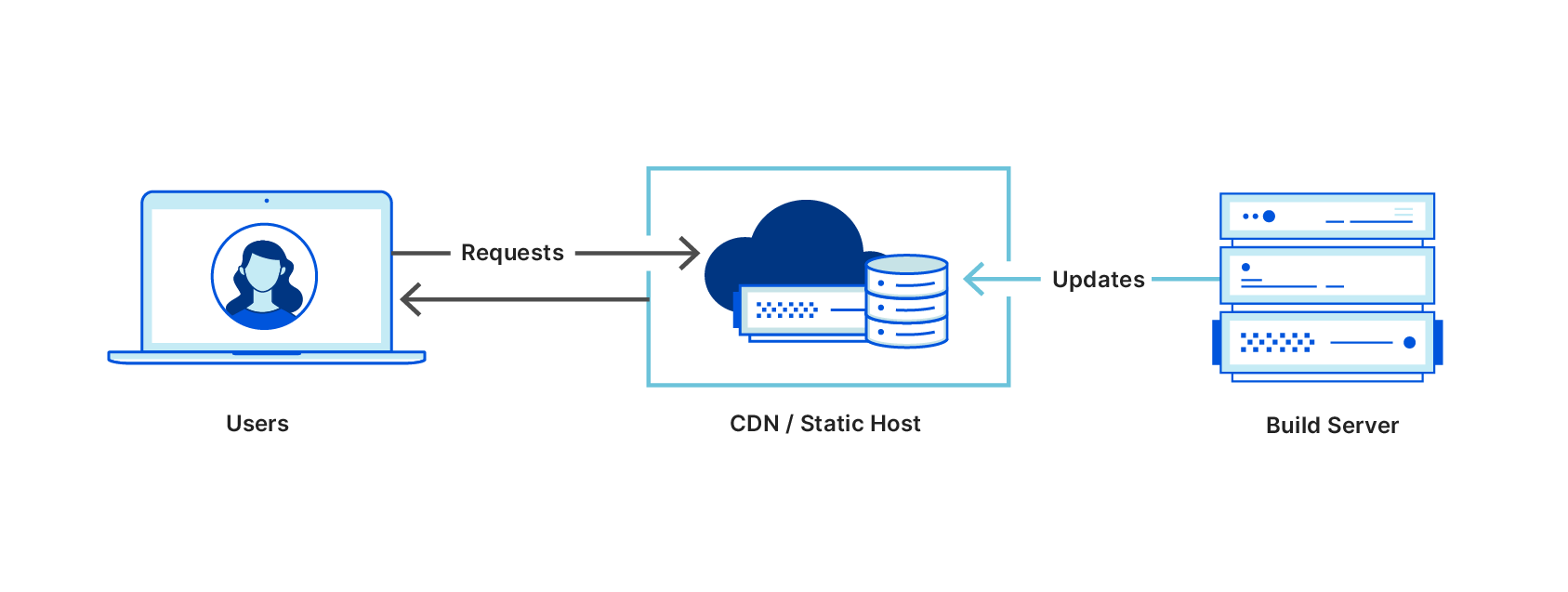

Simply write a JavaScript/Typescript Function and drop it into a functions directory by committing your code to your Git provider. Our lightning fast CI system will build your code and deploy it alongside your static assets.

Directly upload your Functions

Prefer to handle the build yourself? Have a special git provider not yet supported on Pages? No problem! After dropping your Function in your functions folder, you can build with your preferred CI tooling and then upload your project to Pages to be deployed.

Debug your Functions

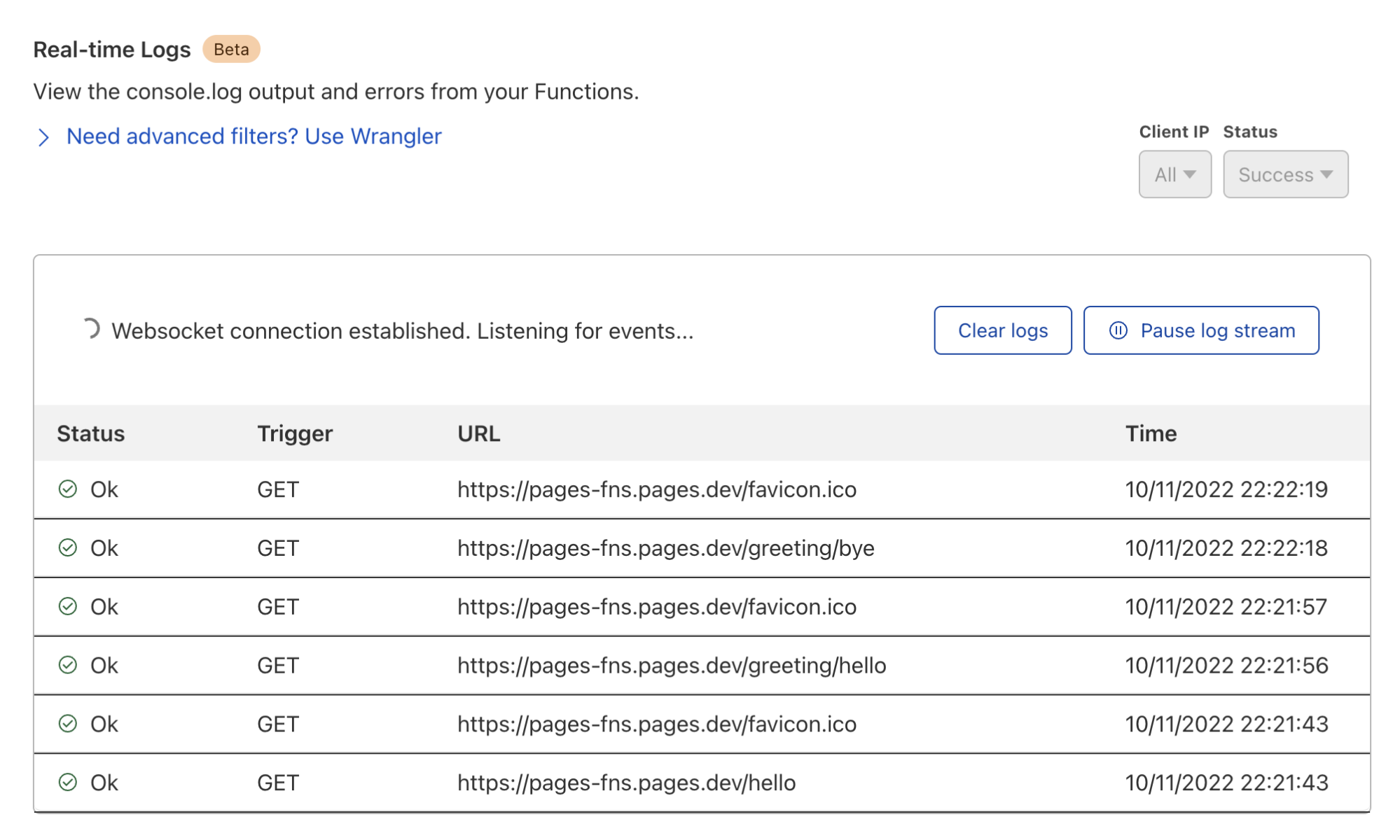

While in beta, we learned that you and your teams value visibility above all. As on Cloudflare Workers, we’ve built a simple way for you to watch your functions as it processes requests – the faster you can understand an issue the faster you can react.

You can now easily view logs for your Functions by “tailing” your logs. For basic information like outcome and request IP, you can navigate to the Pages dashboard to obtain relevant logs.

For more specific filters, you can use

wrangler pages deployment tail

to receive a live feed of console and exception logs for each request your Function receives.

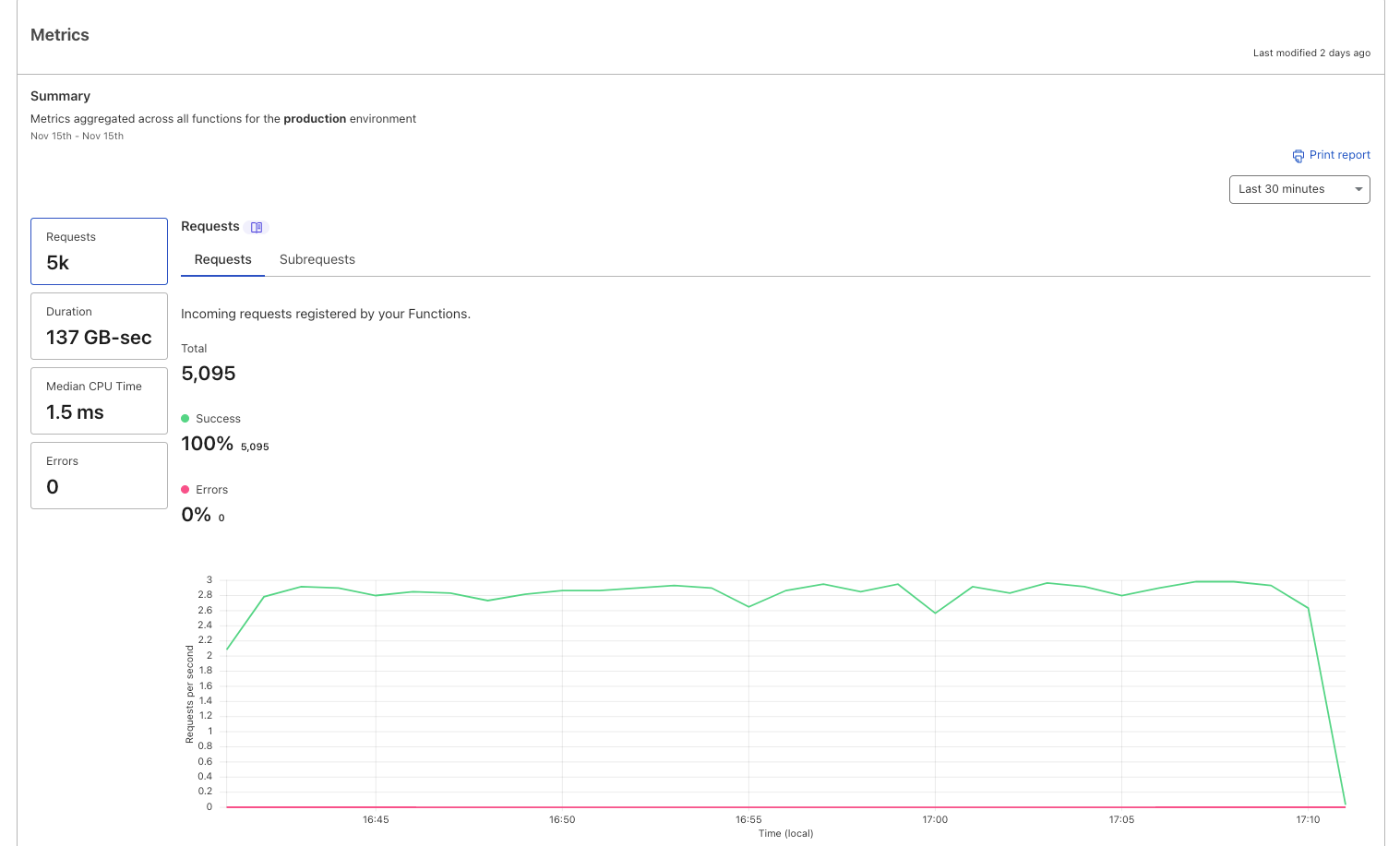

Get real time Functions metrics

In the dashboard, Pages aggregates data for your Functions in the form of request successes/error metrics and invocation status. You can refer to your metrics dashboard not only to better understand your usage on a per-project basis but also to get a pulse check on the health of your Functions by catching success/error volumes.

Quickly integrate with the Cloudflare ecosystem

Storage bindings

Want to go truly full stack? We know finding a storage solution that fits your needs and fits your ecosystem is not an easy task – but it doesn’t have to be!

With Functions, you can take advantage of our broad range of storage products including Workers KV, Durable Objects, R2, D1 and – very soon – Queues and Workers Analytics Engine! Simply create your namespace, bucket or database and add your binding in the Pages dashboard to get your full stack site up and running in just a few clicks.

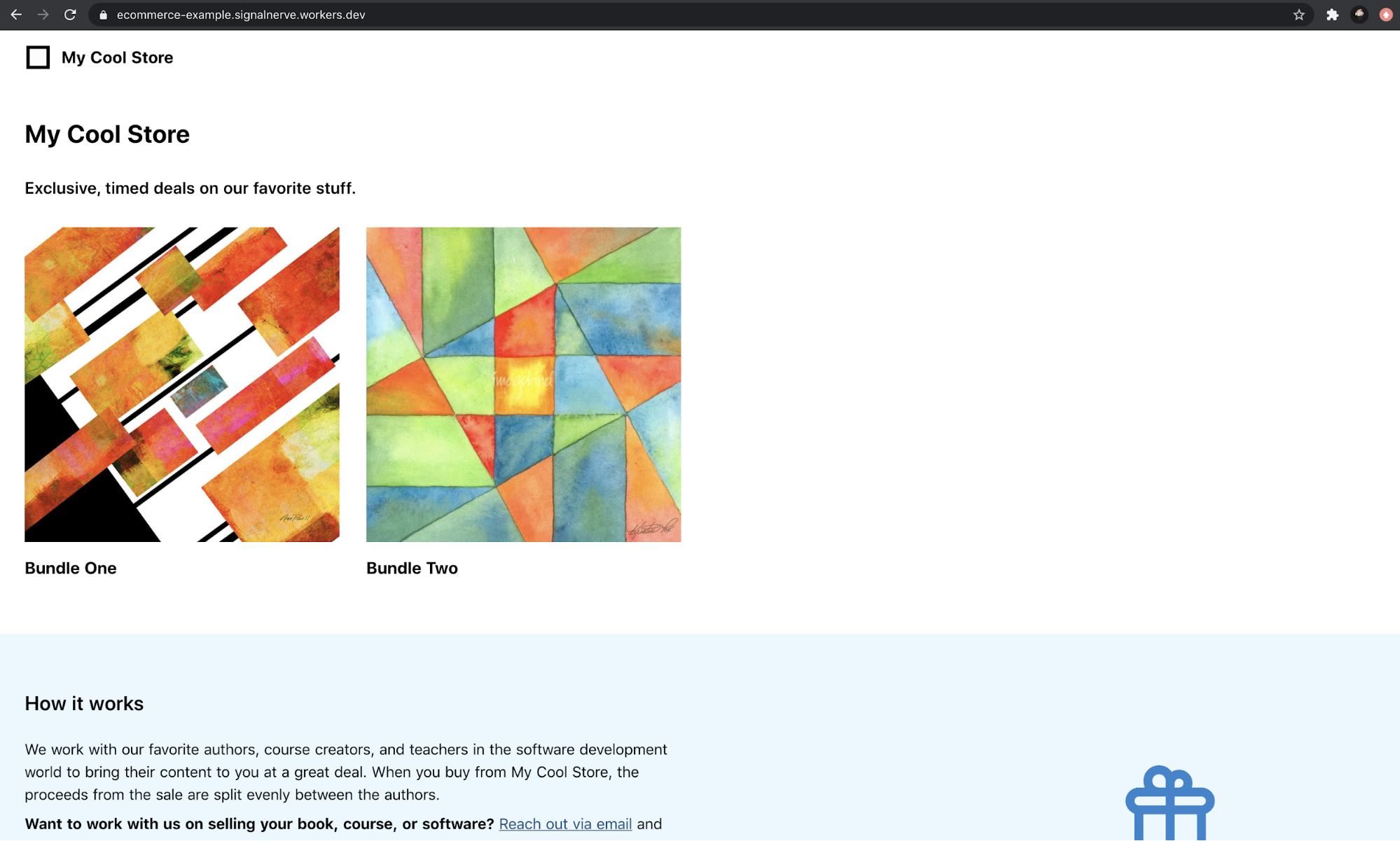

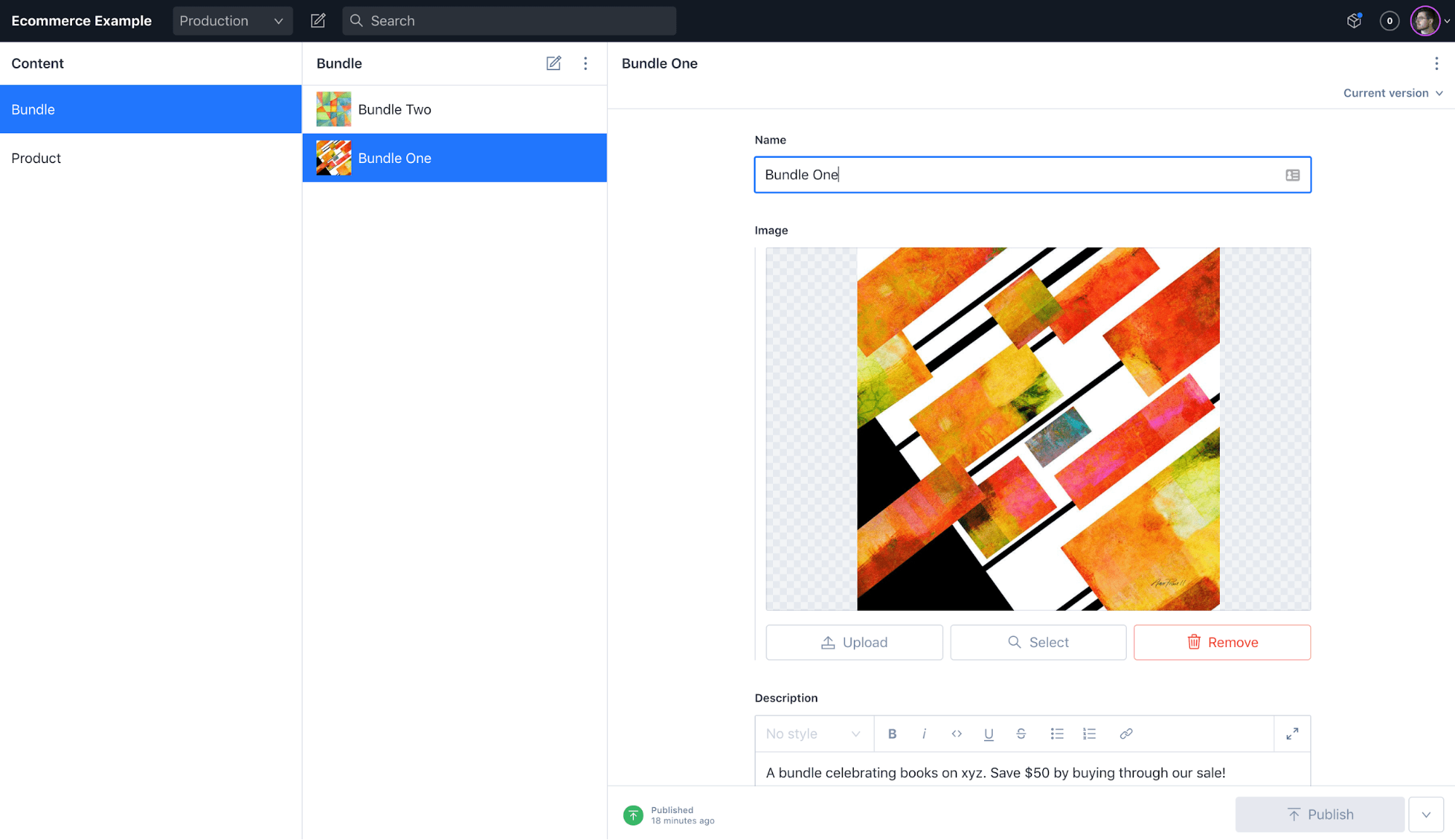

From dropping in a quick comment system to rolling your own authentication to creating database-backed eCommerce sites, integrating with existing products in our developer platform unlocks an exponential set of use cases for your site.

Secret bindings

In addition to adding environment variables that are available to your project at both build-time and runtime, you can now also add “secrets” to your project. These are encrypted environment variables which cannot be viewed by any dashboard interfaces, and are a great home for sensitive data like API tokens or passwords.

Integrate with 3rd party services

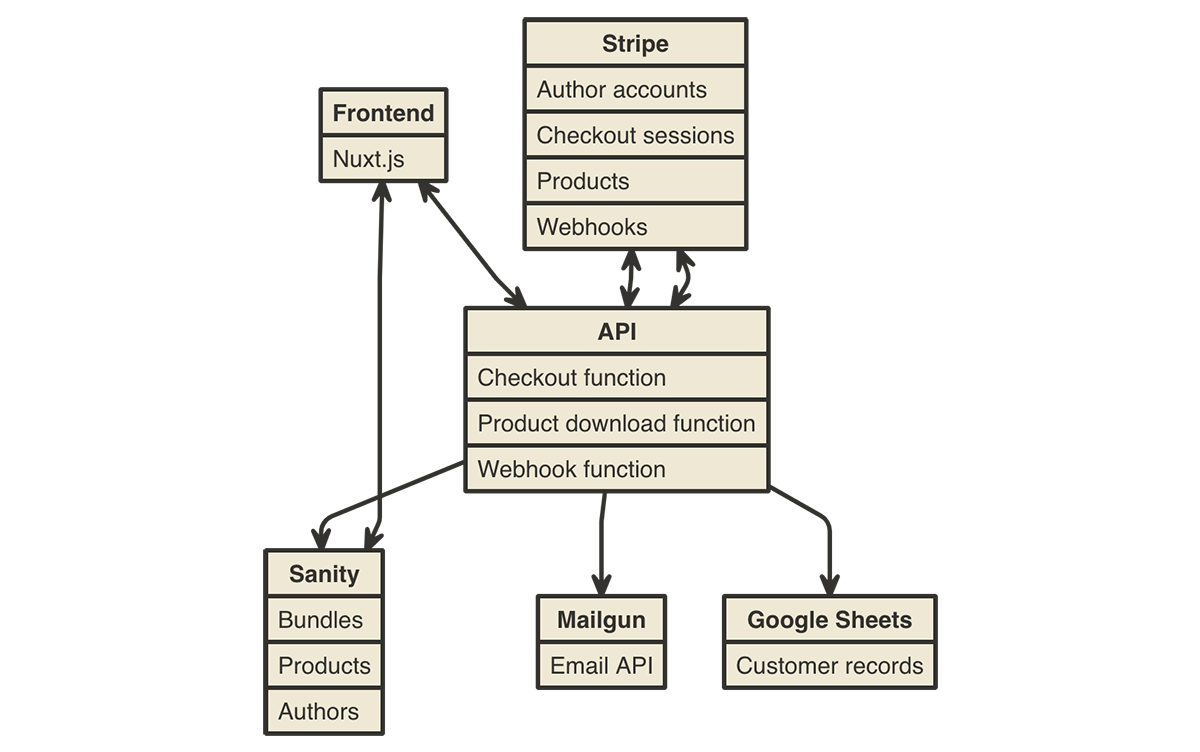

Our goal with Pages is always to meet you where you are when it comes to the tools you love to use. During this beta period we also noticed some consistent patterns in how you were employing Functions to integrate with common third party services. Pages Plugins – our ready-made snippets of code – offers a plug and play experience for you to build the ecosystem of your choice around your application.

In essence, a Pages Plugin is a reusable – and customizable – chunk of runtime code that can be incorporated anywhere within your Pages application. It’s a “composable” Pages Function, granting Plugins the full power of Functions (i.e. Workers), including the ability to set up middleware, parameterized routes, and static assets.

With Pages Plugins you can integrate with a plethora of 3rd party applications – including officially supported Sentry, Honeycomb, Stytch, MailChannels and more.

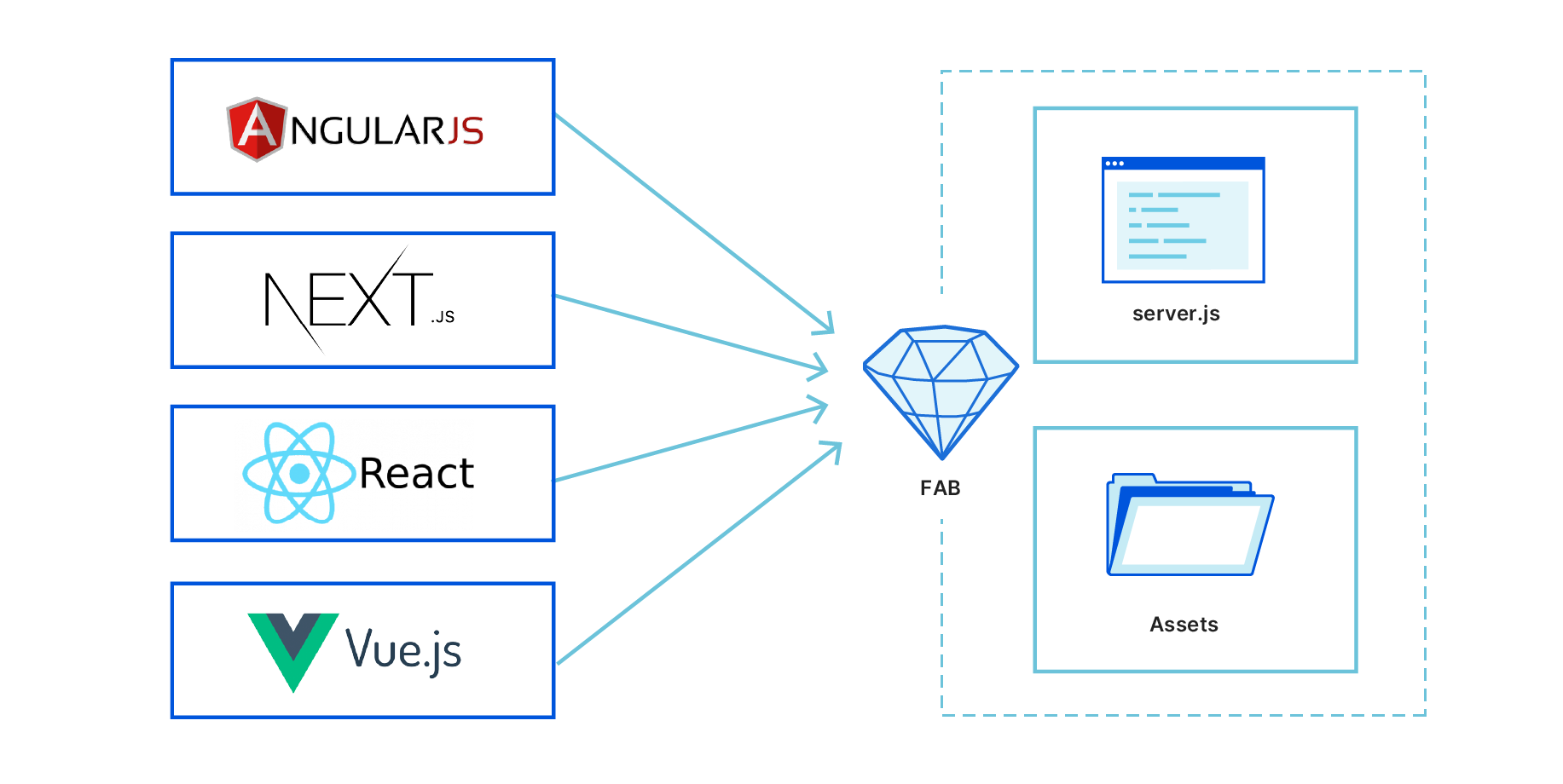

Use your favorite full stack frameworks

In the spirit of meeting developers where they are at, this sentiment also comes in the form of Javascript frameworks. As a big supporter of not only widely adopted frameworks but up and coming frameworks, our team works with a plethora of framework authors to create opportunities for you to play with their new tech and deploy on Pages right out of the box.

Now compatible with Next.js 13 and more!

Recently, we announced our support for Next.js applications which opt in to the Edge Runtime. Today we’re excited to announce we are now compatible with Next.js 13. Next.js 13 brings some most-requested modern paradigms to the Next.js framework, including nested routing, React 18’s Server Components and streaming.

Have a different preference of framework? No problem.

Go full stack on Pages to take advantage of server side rendering (SSR) with one of many other officially supported frameworks like Remix, SvelteKit, QwikCity, SolidStart, Astro and Nuxt. You can check out our blog post on SSR support on Pages and how to get started with some of these frameworks.

Go fast in advanced mode

While Pages Functions are powered by Workers, we understand that at face-value they are not exactly the same. Nevertheless, for existing users who are perhaps using Workers and are keen on trying Cloudflare Pages, we’ve got a direct path to get you started quickly.

If you already have a single Worker and want an easy way to go full stack on Pages, you can use Pages Function’s “advanced mode”. Generate an ES module Worker called _worker.js in the output directory of your project and deploy!

This can be especially helpful if you’re a framework author or perhaps have a more complex use case that does not fit into our file-based router.

Scaling without limits

So today, as we announce Functions as generally available we are thrilled to allow your traffic to scale. During the Open Beta period, we imposed a daily limit of 100,000 free requests per day as a way to let you try out the feature. While 100,000 requests per day remains the free limit today, you can now pay to truly go unlimited.

Since Functions are just “special” Workers, with this announcement you will begin to see your Functions usage reflected on your bill under the Workers Paid subscription or via your Workers Enterprise contract. Like Workers, when on a paid plan, you have the option to choose between our two usage models – Bundled and Unbound – and will be billed accordingly.

Keeping Pages on brand as Cloudflare’s “gift to the Internet”, you will get unlimited free static asset requests and will be billed primarily on dynamic requests. You can read more about how billing with Functions works in our documentation.

Get started today

To start jamming, head over to the Pages Functions docs and check out our blog on some of the best frameworks to use to deploy your first full stack application. As you begin building out your projects be sure to let us know in the #functions channel under Pages of our Cloudflare Developers Discord. Happy building!