Post Syndicated from Duc Nguyen original https://blog.cloudflare.com/logpush-filters-alerts/

Logs are a critical part of every successful application. Cloudflare products and services around the world generate massive amounts of logs upon which customers of all sizes depend. Structured logging from our products are used by customers for purposes including analytics, debugging performance issues, monitoring application health, maintaining security standards for compliance reasons, and much more.

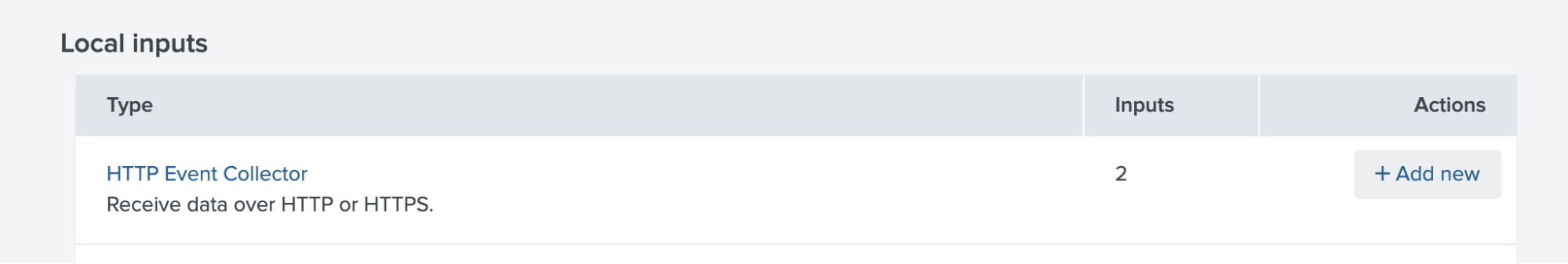

Logpush is Cloudflare’s product for pushing these critical logs to customer systems for consumption and analysis. Whenever our products generate logs as a result of traffic or data passing through our systems from anywhere in the world, we buffer these logs and push them directly to customer-defined destinations like Cloudflare R2, Splunk, AWS S3, and many more.

Today we are announcing three new key features related to Cloudflare’s Logpush product. First, the ability to have only logs matching certain criteria be sent. Second, the ability to get alerted when logs are failing to be pushed due to customer destinations having issues or network issues occurring between Cloudflare and the customer destination. In addition, customers will also be able to query for analytics around the health of Logpush jobs like how many bytes and records were pushed, number of successful pushes, and number of failing pushes.

Filtering logs before they are pushed

Because logs are both critical and generated with high volume, many customers have to maintain complex infrastructure just to ingest and store logs, as well as deal with ever-increasing related costs. On a typical day, a real, example customer receives about 21 billion records, or 2.1 terabytes (about 24.9 TB uncompressed) of gzip compressed logs. Over the course of a month, that could easily be hundreds of billions of events and hundreds of terabytes of data.

It is often unnecessary to store and analyze all of this data, and customers could get by with specific subsets of the data matching certain criteria. For example, a customer might want just the set of HTTP data that had status code >= 400, or the set of firewall data where the action taken was to block the user.

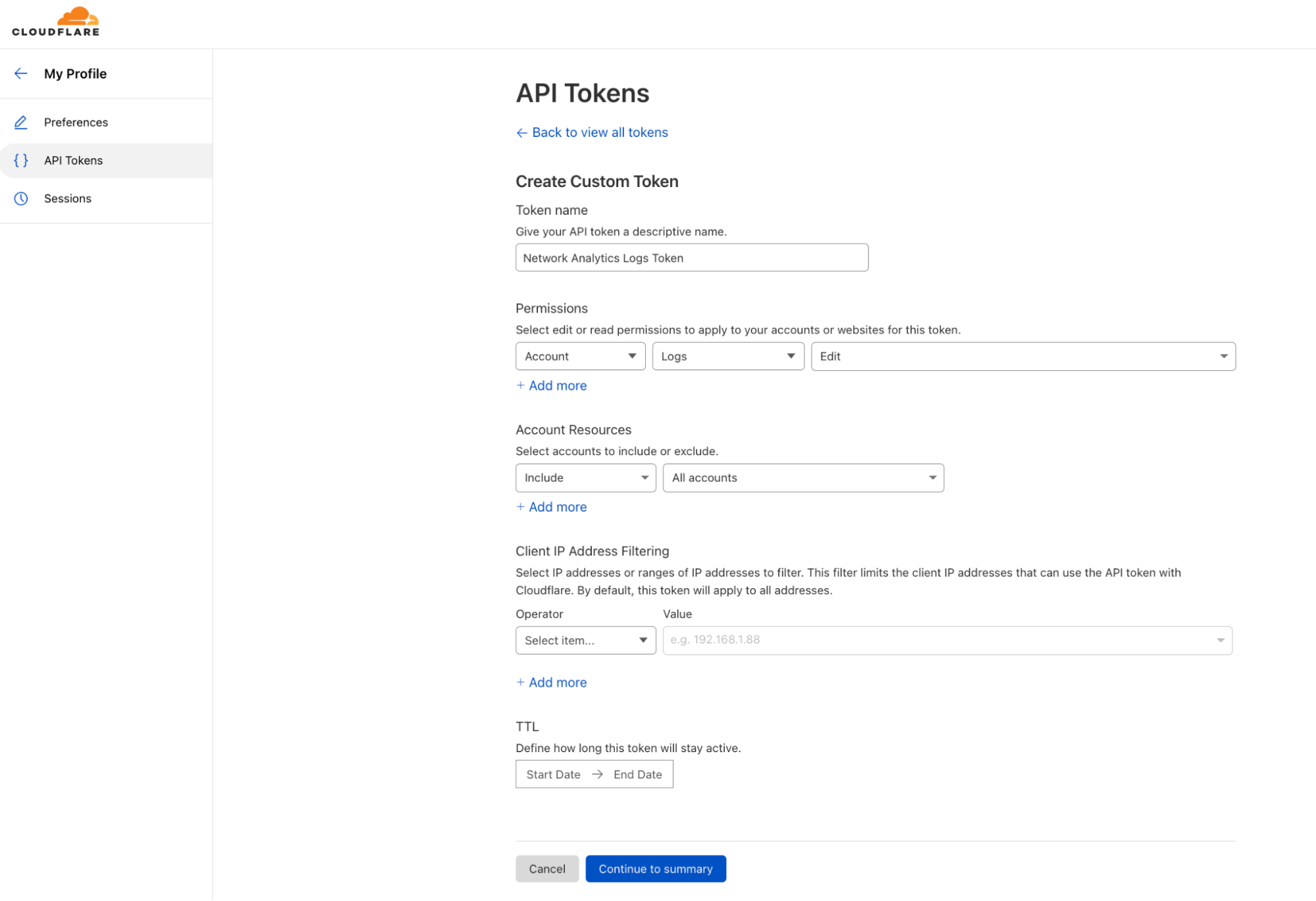

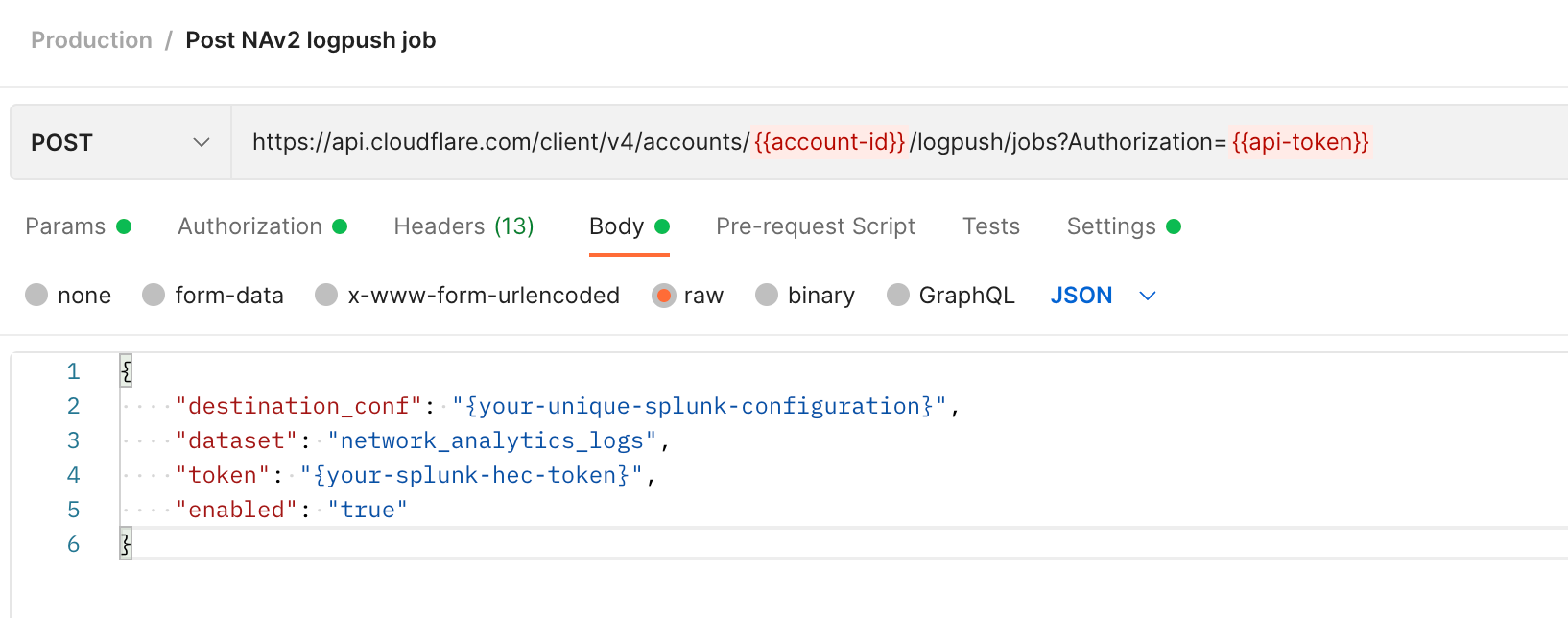

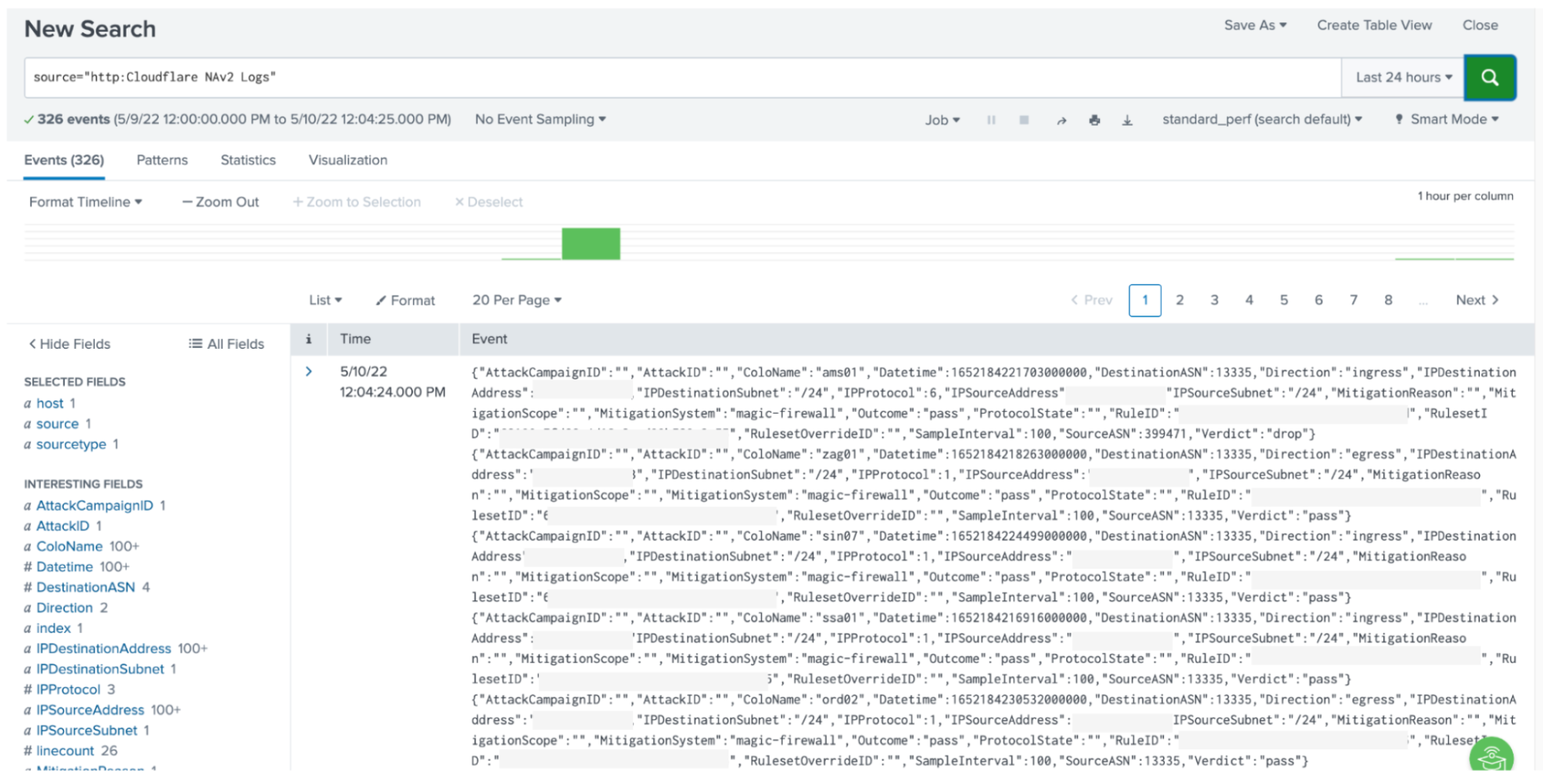

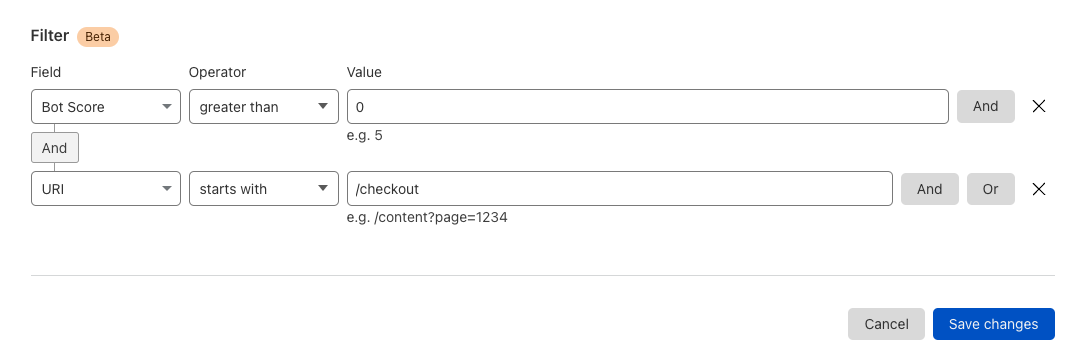

We can now achieve this in our Logpush jobs by setting specific filters on the fields of the log messages themselves. You can use either our API or the Cloudflare dashboard to set up filters.

To do this in the dashboard, either create a new Logpush job or modify an existing job. You will see the option to set certain filters. For example, an ecommerce customer might want to receive logs only for the checkout page where the bot score was non-zero:

Logpush job alerting

When logs are a critical part of your infrastructure, you want peace of mind that logging infrastructure is healthy. With that in mind, we are announcing the ability to get notified when your Logpush jobs have been retrying to push and failing for 24 hours.

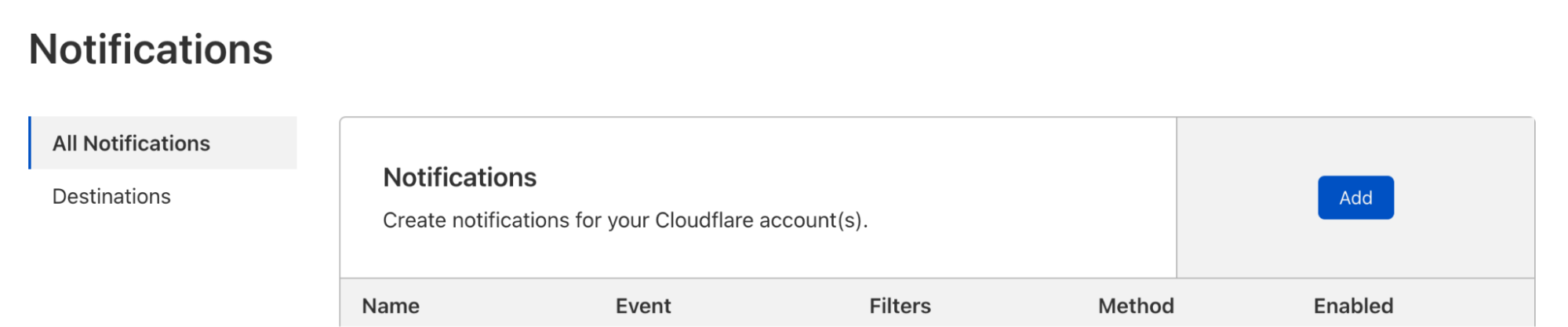

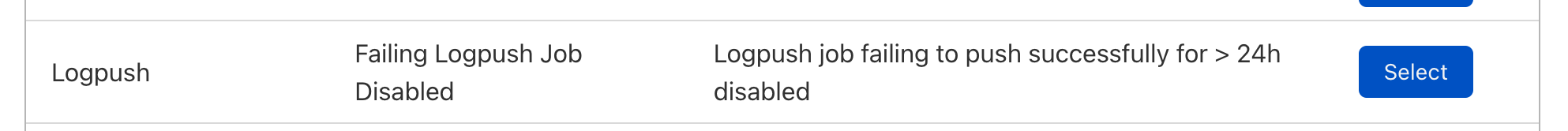

To set up alerts in the Cloudflare dashboard:

1. First, navigate to “Notifications” in the left-panel of the account view

2. Next, Click the “add” button

3. Select the alert “Failing Logpush Job Disabled”

4. Configure the alert and click Save.

That’s it — you will receive an email alert if your Logpush job is disabled.

Logpush Job Health API

We have also added the ability to query for stats related to the health of your Logpush jobs to our graphql API. Customers can now use our GraphQL API to query for things like the number of bytes pushed, number of compressed bytes pushed, number of records pushed, the status of each push, and much more. Using these stats, customers can have greater visibility into a core part of infrastructure. The GraphQL API is self documenting so full details about the new logpushHealthAdaptiveGroups node can be found using any GraphQL client, but head to GraphQL docs for more information.

Below are a couple example queries of how you can use the GraphQL to find stats related to your Logpush jobs.

Query for number of pushes to S3 that resulted in status code != 200

query

{

viewer

{

zones(filter: { zoneTag: $zoneTag})

{

logpushHealthAdaptiveGroups(filter: {

datetime_gt:"2022-08-15T00:00:00Z",

destinationType:"s3",

status_neq:200

},

limit:10)

{

count,

dimensions {

jobId,

status,

destinationType

}

}

}

}

}

Getting the number of bytes, compressed bytes and records that were pushed

query

{

viewer

{

zones(filter: { zoneTag: $zoneTag})

{

logpushHealthAdaptiveGroups(filter: {

datetime_gt:"2022-08-15T00:00:00Z",

destinationType:"s3",

status:200

},

limit:10)

{

sum {

bytes,

bytesCompressed,

records

}

}

}

}

}

Summary

Logpush is a robust and flexible platform for customers who need to integrate their own logging and monitoring systems with Cloudflare. Different Logpush jobs can be deployed to support multiple destinations or, with filtering, multiple subsets of logs.

Customers who haven’t created Logpush jobs are encouraged to do so. Try pushing your logs to R2 for safe-keeping! For customers who don’t currently have access to this powerful tool, consider upgrading your plan.