Post Syndicated from Ashley Whittaker original https://www.raspberrypi.org/blog/robotic-waiter-learning-to-serve-drinks/

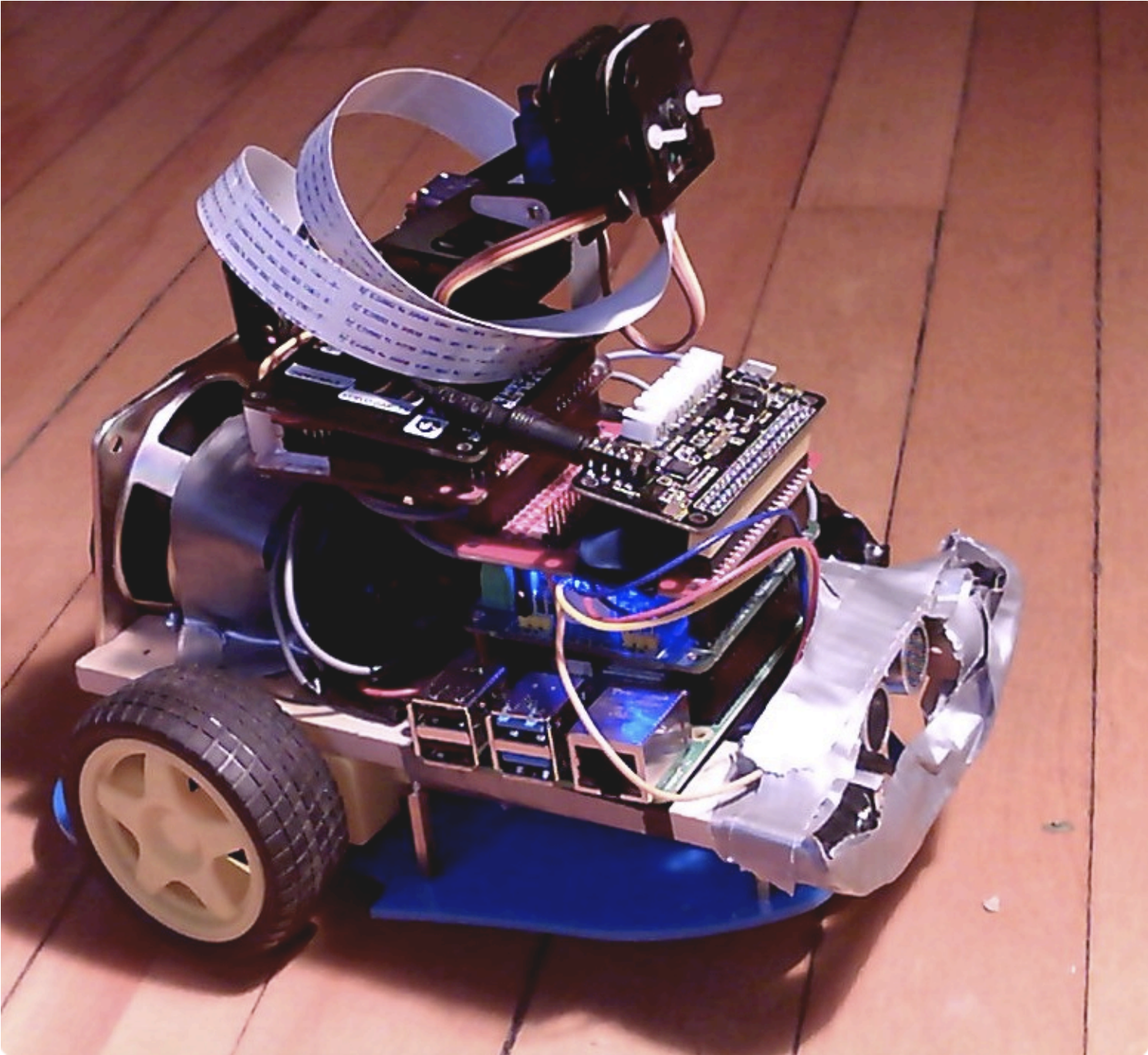

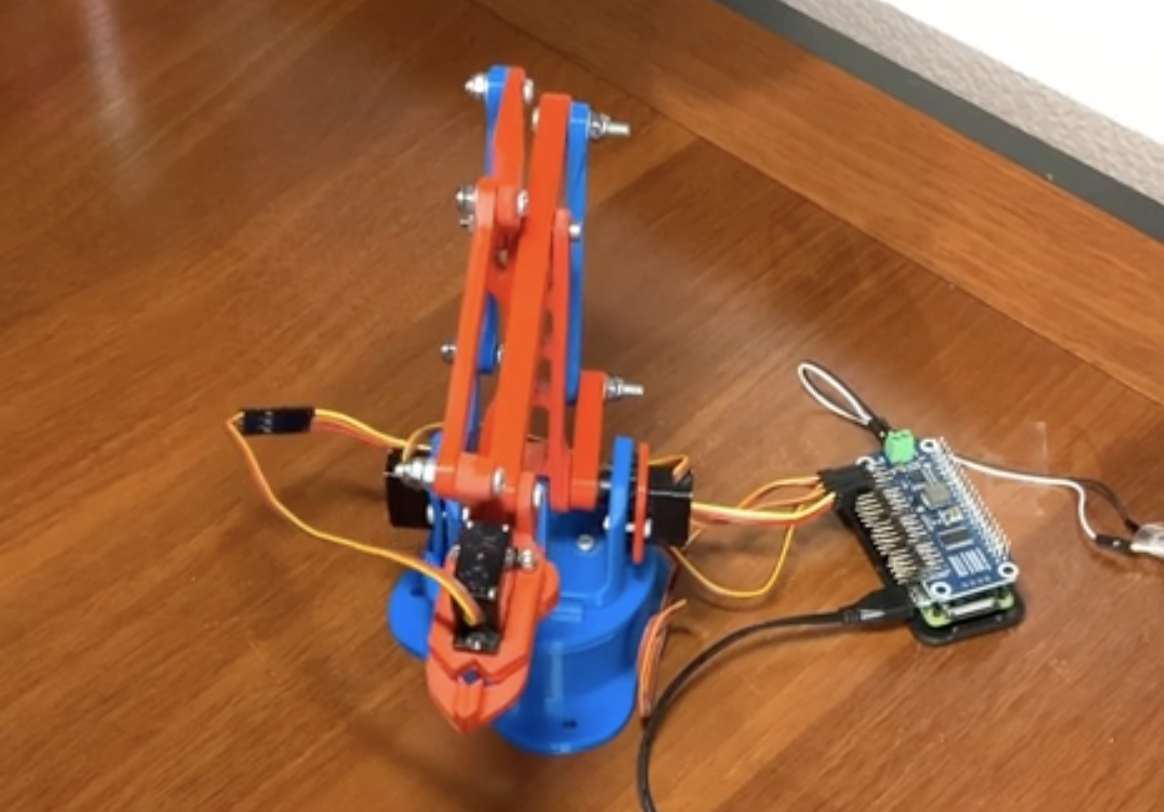

The maker of this robotic waiter had almost all of the parts for this project just sat around collecting dust on a shelf. We’re delighted they decided to take the time to pick up the few extra bits they needed online, then take the extra hour (just an hour?!) to write a program in Python to get this robotic waiter up and running.

We are also thrilled to report (having spotted it in the reddit post we found this project on) that the maker had “so much fun picking up and sometimes crushing small things with this claw.” The line between serving drinks and wanting to crush things is thinner than you might imagine.

And in even better news, all the code you need to recreate this build is on GitHub.

Parts list

- Raspberry Pi Zero

- Servo driver HAT for Raspberry Pi

- PlayStation 4 controller
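If you're wondering how a controller and a servo HAT end up talking to each other, the heart of it is usually a small mapping from stick position to servo angle. The snippet below is a minimal sketch of that idea and not the maker's actual code. It assumes the controller reports each stick axis as a number from -1.0 to 1.0 and that the servo accepts 0 to 180 degrees. The function name `axis_to_angle` and the `deadzone` parameter are our own hypothetical choices for illustration.

```python
def axis_to_angle(axis, min_angle=0.0, max_angle=180.0, deadzone=0.05):
    """Map a joystick axis reading in [-1.0, 1.0] to a servo angle in degrees.

    Hypothetical helper: the ranges and the deadzone are assumptions,
    not values taken from the original project.
    """
    # Clamp out-of-range readings so the servo is never asked to overshoot.
    axis = max(-1.0, min(1.0, axis))

    # Treat tiny readings around the centre as zero so the claw doesn't jitter.
    if abs(axis) < deadzone:
        axis = 0.0

    # Linear map: -1 gives min_angle, 0 gives the midpoint, +1 gives max_angle.
    return min_angle + (axis + 1.0) / 2.0 * (max_angle - min_angle)


if __name__ == "__main__":
    # Print the angle for a few sample stick positions.
    for reading in (-1.0, -0.5, 0.0, 0.5, 1.0):
        print(reading, axis_to_angle(reading))
```

In a real build, the returned angle would be handed to whichever servo library matches your HAT, and the axis reading would come from whichever library you use to read the controller.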

reddit comments bantz

One of our favourite things about finding Raspberry Pi-powered projects on reddit is the comments section. It’s (usually) the perfect mix of light adoration, constructive suggestions, and gateways to tangents we cannot ignore.

Like this one recalling the Rick and Morty sketch in which a cute tiny robot realises their sole purpose is to pass butter:

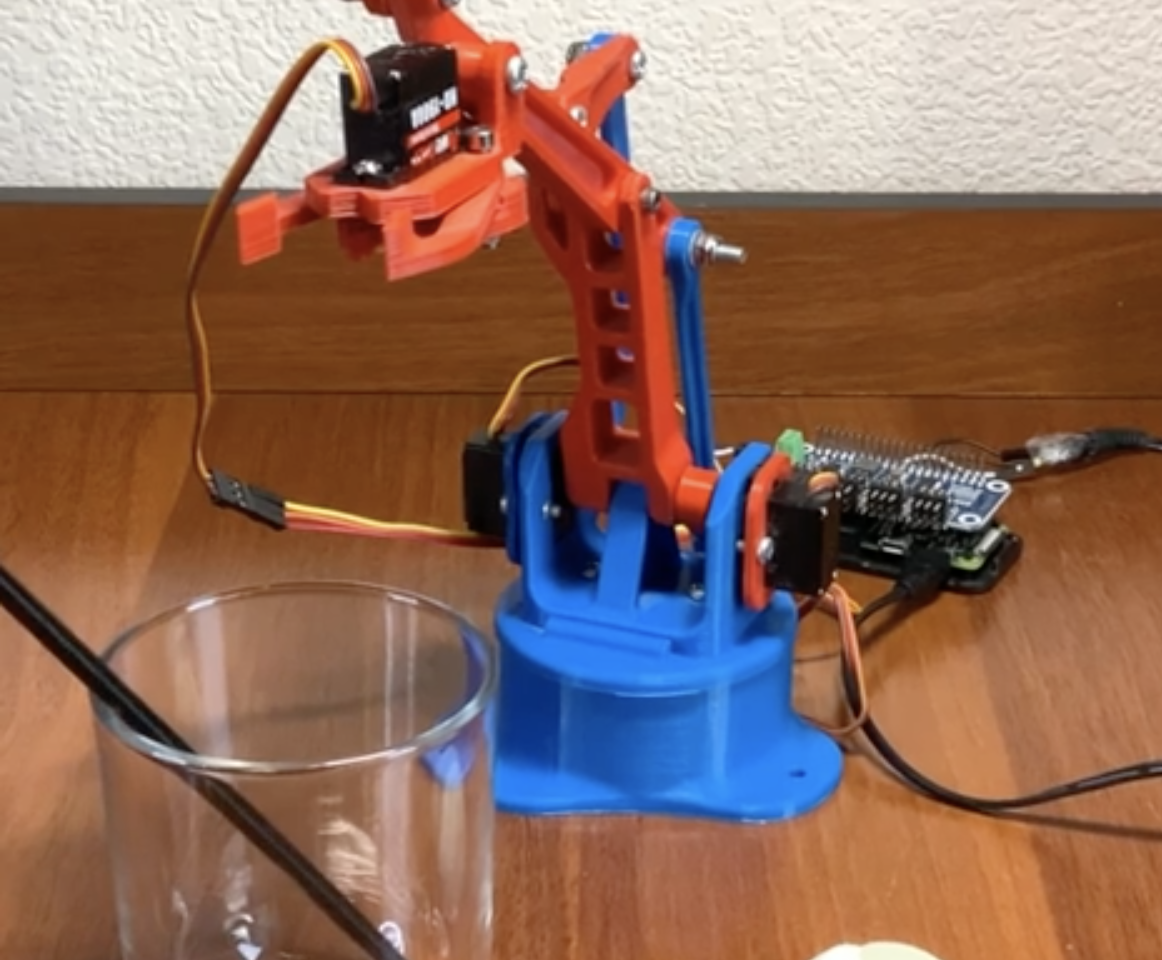

And also this one pointing us to another robotic arm having a grand old time picking up a tiny ball, sending it down a tiny slide, and then doing it all over again. Because it’s important we know how to make our own fun:

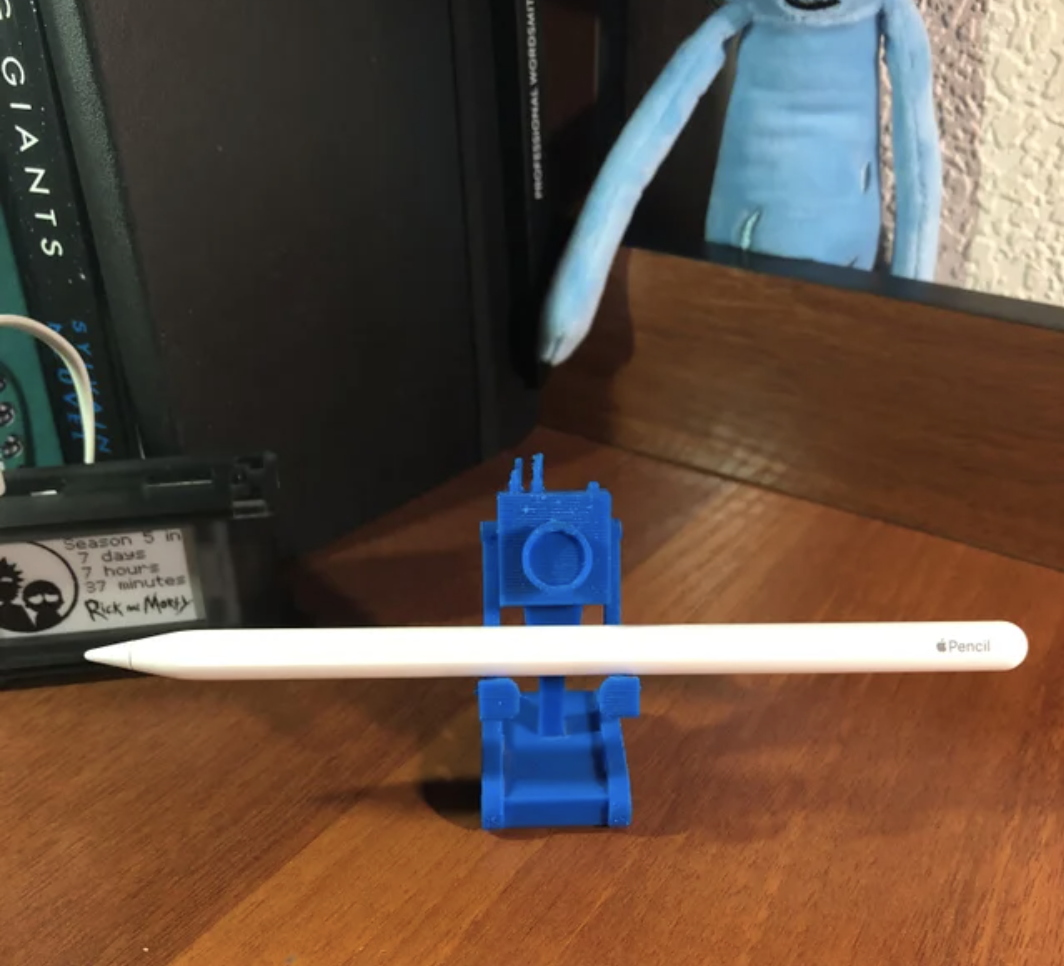

We also greatly enjoyed the fact that the original maker couldn’t use the Rick and Morty “what is my purpose” line to share this project because they are such an uber fan that they already used it for a project they posted just the day before. This cute creation’s sole reason for existing is to hold an Apple Pencil while looking fabulous. And we are HERE for it.

The post Robotic waiter learning to serve drinks appeared first on Raspberry Pi.