Post Syndicated from Bruce Schneier original https://www.schneier.com/blog/archives/2023/05/large-language-models-and-elections.html

Earlier this week, the Republican National Committee released a video that it claims was “built entirely with AI imagery.” The content of the ad isn’t especially novel—a dystopian vision of America under a second term with President Joe Biden—but the deliberate emphasis on the technology used to create it stands out: It’s a “Daisy” moment for the 2020s.

We should expect more of this kind of thing. The applications of AI to political advertising have not escaped campaigners, who are already “pressure testing” possible uses for the technology. In the 2024 presidential election campaign, you can bank on the appearance of AI-generated personalized fundraising emails, text messages from chatbots urging you to vote, and maybe even some deepfaked campaign avatars. Future candidates could use chatbots trained on data representing their views and personalities to approximate the act of directly connecting with people. Think of it like a whistle-stop tour with an appearance in every living room. Previous technological revolutions—railroad, radio, television, and the World Wide Web—transformed how candidates connect to their constituents, and we should expect the same from generative AI. This isn’t science fiction: The era of AI chatbots standing in as avatars for real, individual people has already begun, as the journalist Casey Newton made clear in a 2016 feature about a woman who used thousands of text messages to create a chatbot replica of her best friend after he died.

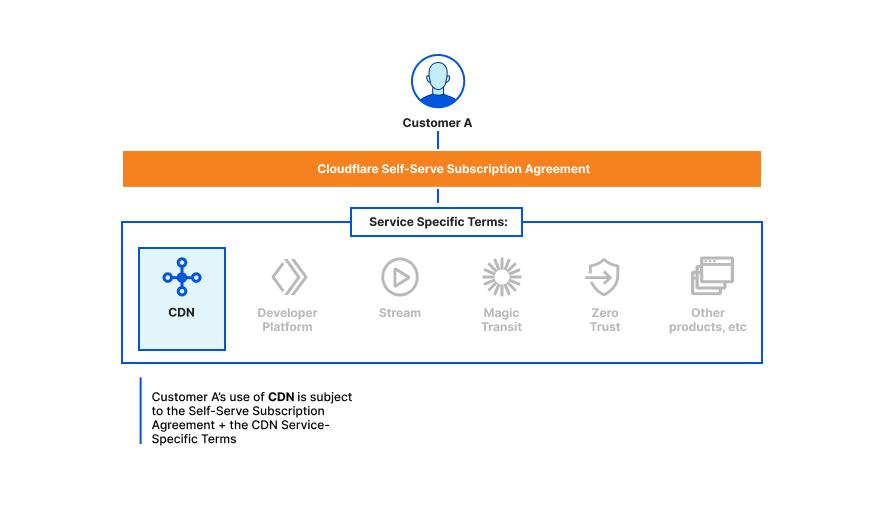

The key is interaction. A candidate could use tools enabled by large language models, or LLMs—the technology behind apps such as ChatGPT and the art-making DALL-E—to do micro-polling or message testing, and to solicit perspectives and testimonies from their political audience individually and at scale. The candidates could potentially reach any voter who possesses a smartphone or computer, not just the ones with the disposable income and free time to attend a campaign rally. At its best, AI could be a tool to increase the accessibility of political engagement and ease polarization. At its worst, it could propagate misinformation and increase the risk of voter manipulation. Whatever the case, we know political operatives are using these tools. To reckon with their potential now isn’t buying into the hype—it’s preparing for whatever may come next.

On the positive end, and most profoundly, LLMs could help people think through, refine, or discover their own political ideologies. Research has shown that many voters come to their policy positions reflexively, out of a sense of partisan affiliation. The very act of reflecting on these views through discourse can change, and even depolarize, those views. It can be hard to have reflective policy conversations with an informed, even-keeled human discussion partner when we all live within a highly charged political environment; this is a role almost custom-designed for an LLM.

In US politics, it is a truism that the most valuable resource in a campaign is time. People are busy and distracted. Campaigns have a limited window to convince and activate voters. Money allows a candidate to purchase time: TV commercials, labor from staffers, and fundraising events to raise even more money. LLMs could provide campaigns with what is essentially a printing press for time.

If you were a political operative, which would you rather do: play a short video on a voter’s TV while they are folding laundry in the next room, or exchange essay-length thoughts with a voter on your candidate’s key issues? A staffer knocking on doors might need to canvass 50 homes over two hours to find one voter willing to have a conversation. OpenAI charges pennies to process about 800 words with its latest GPT-4 model, and that cost could fall dramatically as competitive AIs become available. People seem to enjoy interacting with chatbots; OpenAI’s product reportedly has the fastest-growing user base in the history of consumer apps.

Optimistically, one possible result might be that we’ll get less annoyed with the deluge of political ads if their messaging is more usefully tailored to our interests by AI tools. Though the evidence for microtargeting’s effectiveness is mixed at best, some studies show that targeting the right issues to the right people can persuade voters. Expecting more sophisticated, AI-assisted approaches to be more consistently effective is reasonable. And anything that can prevent us from seeing the same 30-second campaign spot 20 times a day seems like a win.

AI can also help humans effectuate their political interests. In the 2016 US presidential election, primitive chatbots had a role in donor engagement and voter-registration drives: simple messaging tasks such as helping users pre-fill a voter-registration form or reminding them where their polling place is. If it works, the current generation of much more capable chatbots could supercharge small-dollar solicitations and get-out-the-vote campaigns.

And the interactive capability of chatbots could help voters better understand their choices. An AI chatbot could answer questions from the perspective of a candidate about the details of their policy positions most salient to an individual user, or respond to questions about how a candidate’s stance on a national issue translates to a user’s locale. Political organizations could similarly use them to explain complex policy issues, such as those relating to the climate or health care or…anything, really.

Of course, this could also go badly. In the time-honored tradition of demagogues worldwide, the LLM could inconsistently represent the candidate’s views to appeal to the individual proclivities of each voter.

In fact, the fundamentally obsequious nature of the current generation of large language models results in them acting like demagogues. Current LLMs are known to hallucinate—or go entirely off-script—and produce answers that have no basis in reality. These models do not experience emotion in any way, but some research suggests they have a sophisticated ability to assess the emotion and tone of their human users. Although they weren’t trained for this purpose, ChatGPT and its successor, GPT-4, may already be pretty good at assessing some of their users’ traits—say, the likelihood that the author of a text prompt is depressed. Combined with their persuasive capabilities, that means that they could learn to skillfully manipulate the emotions of their human users.

This is not entirely theoretical. A growing body of evidence demonstrates that interacting with AI has a persuasive effect on human users. A study published in February prompted participants to co-write a statement about the benefits of social-media platforms for society with an AI chatbot configured to have varying views on the subject. When researchers surveyed participants after the co-writing experience, those who interacted with a chatbot that expressed that social media is good or bad were far more likely to express the same view than a control group that didn’t interact with an “opinionated language model.”

For the time being, most Americans say they are resistant to trusting AI in sensitive matters such as health care. The same is probably true of politics. If a neighbor volunteering with a campaign persuades you to vote a particular way on a local ballot initiative, you might feel good about that interaction. If a chatbot does the same thing, would you feel the same way? To help voters chart their own course in a world of persuasive AI, we should demand transparency from our candidates. Campaigns should have to clearly disclose when a text agent interacting with a potential voter—through traditional robotexting or the use of the latest AI chatbots—is human or automated.

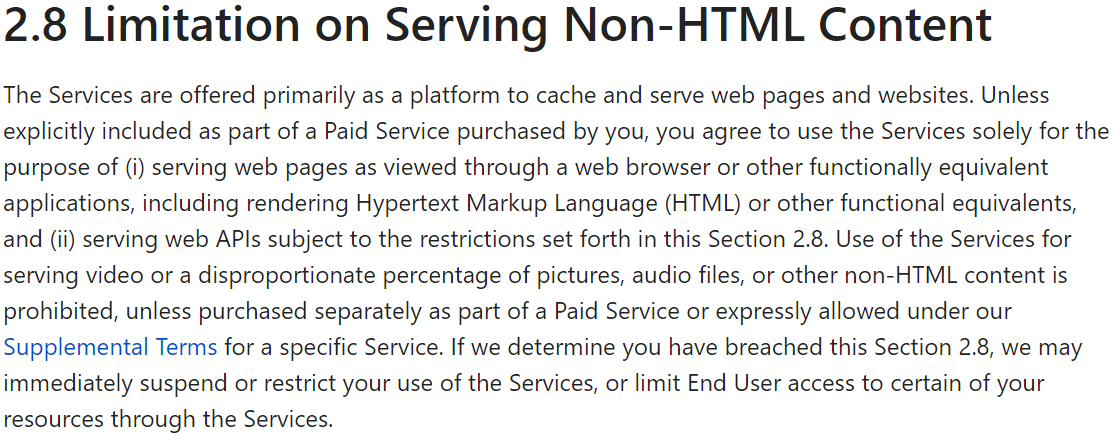

Though companies such as Meta (Facebook’s parent company) and Alphabet (Google’s) publish libraries of traditional, static political advertising, they do so poorly. These systems would need to be improved and expanded to accommodate user-level differentiation in ad copy to offer serviceable protection against misuse.

A public, anonymized log of chatbot conversations could help hold candidates’ AI representatives accountable for shifting statements and digital pandering. Candidates who use chatbots to engage voters may not want to make all transcripts of those conversations public, but their users could easily choose to share them. So far, there is no shortage of people eager to share their chat transcripts, and in fact, an online database exists of nearly 200,000 of them. In the recent past, Mozilla has galvanized users to opt into sharing their web data to study online misinformation.

We also need stronger nationwide protections on data privacy, as well as the ability to opt out of targeted advertising, to protect us from the potential excesses of this kind of marketing. No one should be forcibly subjected to political advertising, LLM-generated or not, on the basis of their Internet searches regarding private matters such as medical issues. In February, the European Parliament voted to limit political-ad targeting to only basic information, such as language and general location, within two months of an election. This stands in stark contrast to the US, which has for years failed to enact federal data-privacy regulations. Though the 2018 revelation of the Cambridge Analytica scandal led to billions of dollars in fines and settlements against Facebook, it has so far resulted in no substantial legislative action.

Transparency requirements like these are a first step toward oversight of future AI-assisted campaigns. Although we should aspire to more robust legal controls on campaign uses of AI, it seems implausible that these will be adopted in advance of the fast-approaching 2024 general presidential election.

Credit the RNC, at least, with disclosing that their recent ad was AI-generated—a transparent attempt at publicity still counts as transparency. But what will we do if the next viral AI-generated ad tries to pass as something more conventional?

As we are all being exposed to these rapidly evolving technologies for the first time and trying to understand their potential uses and effects, let’s push for the kind of basic transparency protection that will allow us to know what we’re dealing with.

This essay was written with Nathan Sanders, and previously appeared in the Atlantic.

EDITED TO ADD (5/12): Better article on the “daisy” ad.