Post Syndicated from Bruce Schneier original https://www.schneier.com/blog/archives/2023/08/decembers-reimagining-democracy-workshop.html

Imagine that we’ve all—all of us, all of society—landed on some alien planet, and we have to form a government: clean slate. We don’t have any legacy systems from the US or any other country. We don’t have any special or unique interests to perturb our thinking.

How would we govern ourselves?

It’s unlikely that we would use the systems we have today. The modern representative democracy was the best form of government that mid-eighteenth-century technology could conceive of. The twenty-first century is a different place scientifically, technically and socially.

For example, the mid-eighteenth-century democracies were designed under the assumption that both travel and communications were hard. Does it still make sense for all of us living in the same place to organize every few years and choose one of us to go to a big room far away and create laws in our name?

Representative districts are organized around geography, because that’s the only way that made sense 200-plus years ago. But we don’t have to do it that way. We can organize representation by age: one representative for the thirty-one-year-olds, another for the thirty-two-year-olds, and so on. We can organize representation randomly: by birthday, perhaps. We can organize any way we want.

US citizens currently elect people for terms ranging from two to six years. Is ten years better? Is ten days better? Again, we have more technology and therefore more options.

Indeed, as a technologist who studies complex systems and their security, I believe the very idea of representative government is a hack to get around the technological limitations of the past. Voting at scale is easier now than it was 200 years ago. Certainly we don’t want to all have to vote on every amendment to every bill, but what’s the optimal balance between votes made in our name and ballot measures that we all vote on?

In December 2022, I organized a workshop to discuss these and other questions. I brought together fifty people from around the world: political scientists, economists, law professors, AI experts, activists, government officials, historians, science fiction writers and more. We spent two days talking about these ideas. Several themes emerged from the event.

Misinformation and propaganda were themes, of course, as was the inability to engage in rational policy discussions when people can’t agree on the facts.

Another theme was the harms of creating a political system whose primary goals are economic. Given the ability to start over, would anyone create a system of government that optimizes the near-term financial interest of the wealthiest few? Or whose laws benefit corporations at the expense of people?

Another theme was capitalism, and how it is or isn’t intertwined with democracy. And while the modern market economy made a lot of sense in the industrial age, it’s starting to fray in the information age. What comes after capitalism, and how does it affect how we govern ourselves?

Many participants examined the effects of technology, especially artificial intelligence. We looked at whether—and when—we might be comfortable ceding power to an AI. Sometimes it’s easy. I’m happy for an AI to figure out the optimal timing of traffic lights to ensure the smoothest flow of cars through the city. When will we be able to say the same thing about setting interest rates? Or designing tax policies?

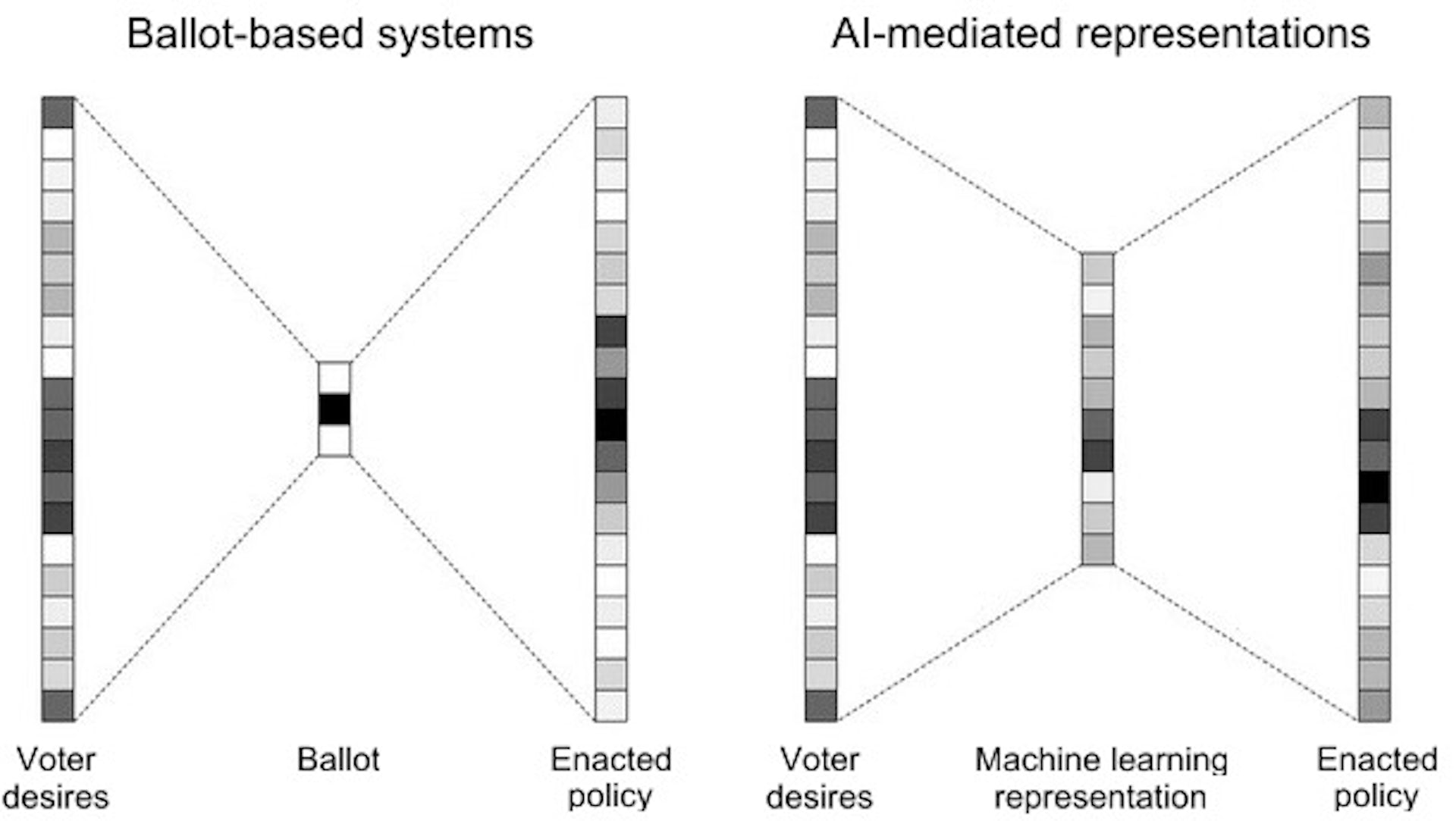

How would we feel about an AI device in our pocket that voted in our name, thousands of times per day, based on preferences that it inferred from our actions? If an AI system could determine optimal policy solutions that balanced every voter’s preferences, would it still make sense to have representatives? Maybe we should vote directly for ideas and goals instead, and leave the details to the computers. On the other hand, technological solutionism regularly fails.

Scale was another theme. The size of modern governments reflects the technology at the time of their founding. European countries and the early American states are a particular size because that’s what was governable in the 18th and 19th centuries. Larger governments—the US as a whole, the European Union—reflect a world in which travel and communications are easier. The problems we have today are primarily either local, at the scale of cities and towns, or global—even if they are currently regulated at state, regional or national levels. This mismatch is especially acute when we try to tackle global problems. In the future, do we really have a need for political units the size of France or Virginia? Or is it a mixture of scales that we really need, one that moves effectively between the local and the global?

As to other forms of democracy, we discussed one from history and another made possible by today’s technology.

Sortition is a system of choosing political officials randomly to deliberate on a particular issue. We use it today when we pick juries, but both the ancient Greeks and some cities in Renaissance Italy used it to select major political officials. Today, several countries—largely in Europe—are using sortition for some policy decisions. We might randomly choose a few hundred people, representative of the population, to spend a few weeks being briefed by experts and debating the problem—and then decide on environmental regulations, or a budget, or pretty much anything.
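To make "randomly choose a few hundred people, representative of the population" concrete, here is a minimal sketch of one way such a draw could work: stratified random sampling with seats allocated in proportion to each group's size. This is only an illustration under stated assumptions, not a description of how any real citizens' assembly selects members. The function name `draw_panel`, the `group_of` callback, and the region labels are all hypothetical.

```python
import random
from collections import defaultdict


def draw_panel(population, panel_size, group_of, seed=None):
    """Draw a random panel whose group mix mirrors the population's.

    `group_of` maps a person to the (hypothetical) group used for
    stratification, such as a region or an age band.
    """
    if panel_size > len(population):
        raise ValueError("panel cannot be larger than the population")

    rng = random.Random(seed)

    # Bucket the population by group.
    groups = defaultdict(list)
    for person in population:
        groups[group_of(person)].append(person)

    # Largest-remainder allocation: each group gets the whole-number part
    # of its proportional share, and leftover seats go to the groups with
    # the largest fractional parts.
    total = len(population)
    quotas = {g: len(members) * panel_size / total for g, members in groups.items()}
    seats = {g: int(q) for g, q in quotas.items()}
    leftover = panel_size - sum(seats.values())
    by_remainder = sorted(quotas, key=lambda g: quotas[g] - seats[g], reverse=True)
    for g in by_remainder[:leftover]:
        seats[g] += 1

    # Within each group, every member is equally likely to be picked.
    panel = []
    for g, members in groups.items():
        panel.extend(rng.sample(members, seats[g]))
    return panel


# Illustrative population: 60 urban, 30 suburban, 10 rural residents.
population = (
    [(i, "urban") for i in range(60)]
    + [(i, "suburban") for i in range(60, 90)]
    + [(i, "rural") for i in range(90, 100)]
)
panel = draw_panel(population, 10, group_of=lambda person: person[1], seed=1)
```

With this 60/30/10 split, a ten-person panel gets six urban, three suburban, and one rural seat; who fills those seats is left to chance. Stratifying on a single attribute is a simplification: a real draw would likely need to balance several attributes at once.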

Liquid democracy does away with elections altogether. Everyone has a vote, and they can keep the power to cast it themselves or assign it to another person as a proxy. There are no set elections; anyone can reassign their proxy at any time. And there’s no reason to make this assignment all or nothing. Perhaps proxies could specialize: one set of people focused on economic issues, another group on health and a third bunch on national defense. Then regular people could assign their votes to whichever of the proxies most closely matched their views on each individual matter—or step forward with their own views and begin collecting proxy support from other people.
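The proxy mechanism described above can be sketched in a few lines. This is a rough illustration, not a specification of any real liquid-democracy system: the names `resolve_vote` and `tally` are hypothetical, and two policy choices are assumptions of this sketch, namely that a direct vote always overrides a delegation and that votes caught in a delegation loop go uncounted.

```python
from collections import Counter


def resolve_vote(voter, delegations, direct_votes):
    """Follow the proxy chain from `voter` until someone votes directly.

    Returns that person's choice, or None if the chain ends with nobody
    voting or loops back on itself (assumption: such votes are uncounted).
    """
    seen = set()
    current = voter
    while current not in seen:
        seen.add(current)
        if current in direct_votes:
            # Assumption: a direct vote overrides any delegation.
            return direct_votes[current]
        if current not in delegations:
            return None  # neither voted nor delegated
        current = delegations[current]
    return None  # delegation cycle


def tally(voters, delegations, direct_votes):
    """Count one vote per voter, cast wherever their proxy chain ends."""
    counts = Counter()
    for voter in voters:
        choice = resolve_vote(voter, delegations, direct_votes)
        if choice is not None:
            counts[choice] += 1
    return counts


# Illustrative data for a single issue, e.g. an economic measure.
voters = ["ana", "ben", "cy", "dee", "eli"]
delegations = {"ana": "ben", "ben": "cy", "dee": "eli", "eli": "dee"}
direct_votes = {"cy": "yes"}

result = tally(voters, delegations, direct_votes)
print(result)  # Counter({'yes': 3})
```

Here ana's vote flows through ben to cy, so cy effectively casts three votes, while dee and eli have delegated to each other and are not counted. Specialized proxies would fit the same shape: keep one `delegations` mapping per topic and tally each issue against the mapping for its topic. Reassigning a proxy "at any time" is just an update to that mapping before the next tally.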

This all brings up another question: Who gets to participate? And, more generally, whose interests are taken into account? Early democracies were really nothing of the sort: They limited participation by gender, race and land ownership.

We should debate lowering the voting age, but even without voting we recognize that children too young to vote have rights—and, in some cases, so do other species. Should future generations get a “voice,” whatever that means? What about nonhumans or whole ecosystems?

Should everyone get the same voice? Right now in the US, the outsize effect of money in politics gives the wealthy disproportionate influence. Should we encode that explicitly? Maybe younger people should get a more powerful vote than everyone else. Or maybe older people should.

Those questions lead to ones about the limits of democracy. All democracies have boundaries limiting what the majority can decide. We all have rights: the things that cannot be taken away from us. We cannot vote to put someone in jail, for example.

But while we can’t vote a particular publication out of existence, we can to some degree regulate speech. In this hypothetical community, what are our rights as individuals? What are the rights of society that supersede those of individuals?

Personally, I was most interested in how these systems fail. As a security technologist, I study how complex systems are subverted—hacked, in my parlance—for the benefit of a few at the expense of the many. Think tax loopholes, or tricks to avoid government regulation. I want any government system to be resilient in the face of that kind of trickery.

Or, to put it another way, I want the interests of each individual to align with the interests of the group at every level. We’ve never had a system of government with that property before—even equal protection guarantees and First Amendment rights exist in a competitive framework that puts individuals’ interests in opposition to one another. But—in the age of such existential risks as climate and biotechnology and maybe AI—aligning interests is more important than ever.

Our workshop didn’t produce any answers; that wasn’t the point. Our current discourse is filled with suggestions on how to patch our political system. People regularly debate changes to the Electoral College, or the process of creating voting districts, or term limits. But those are incremental changes.

It’s hard to find people who are thinking more radically: looking beyond the horizon for what’s possible eventually. And while true innovation in politics is a lot harder than innovation in technology, especially without a violent revolution forcing change, it’s something that we as a species are going to have to get good at—one way or another.

This essay previously appeared in The Conversation.