Post Syndicated from Alex Krivit original http://blog.cloudflare.com/speeding-up-your-website-in-a-few-clicks/

Every day, website visitors spend far too much time waiting for websites to load in their browsers. This waiting is partially due to browsers not knowing which resources are critically important so they can prioritize them ahead of less-critical resources. In this blog we will outline how millions of websites across the Internet can improve their performance by specifying which critical content loads first with Cloudflare Workers and what Cloudflare will do to make this easier by default in the future.

Popular Content Management Systems (CMS) like WordPress have made attempts to influence website resource priority, for example through techniques like lazy loading images. When done correctly, the results are magical. Performance is optimized between the CMS and browser without needing to implement any changes or code new prioritization strategies. However, we’ve seen that these default priorities leave significant room for improvement.

In this co-authored blog with Google’s Patrick Meenan we will explain where the opportunities exist to improve website performance, how to check if a specific site can improve performance, and provide a small JavaScript snippet which can be used with Cloudflare Workers to do this optimization for you.

What happens when a browser receives the response?

Before we dive into where the opportunities are to improve website performance, let’s take a step back to understand how browsers load website assets by default.

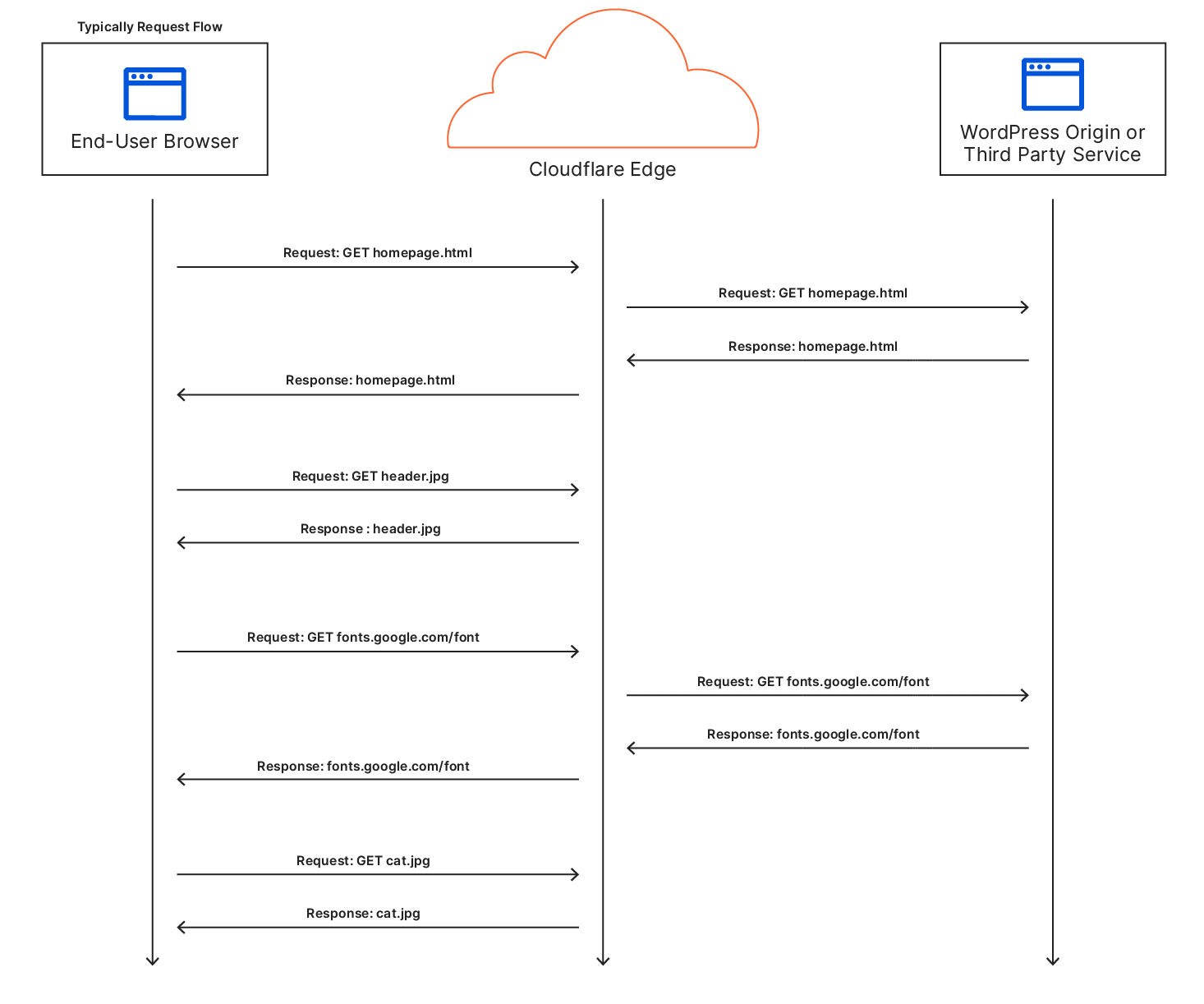

After the browser sends an HTTP request to a server, it receives an HTTP response containing information like status codes, headers, and the requested content. The browser carefully analyzes the response's status code and response headers to ensure proper handling of the content.

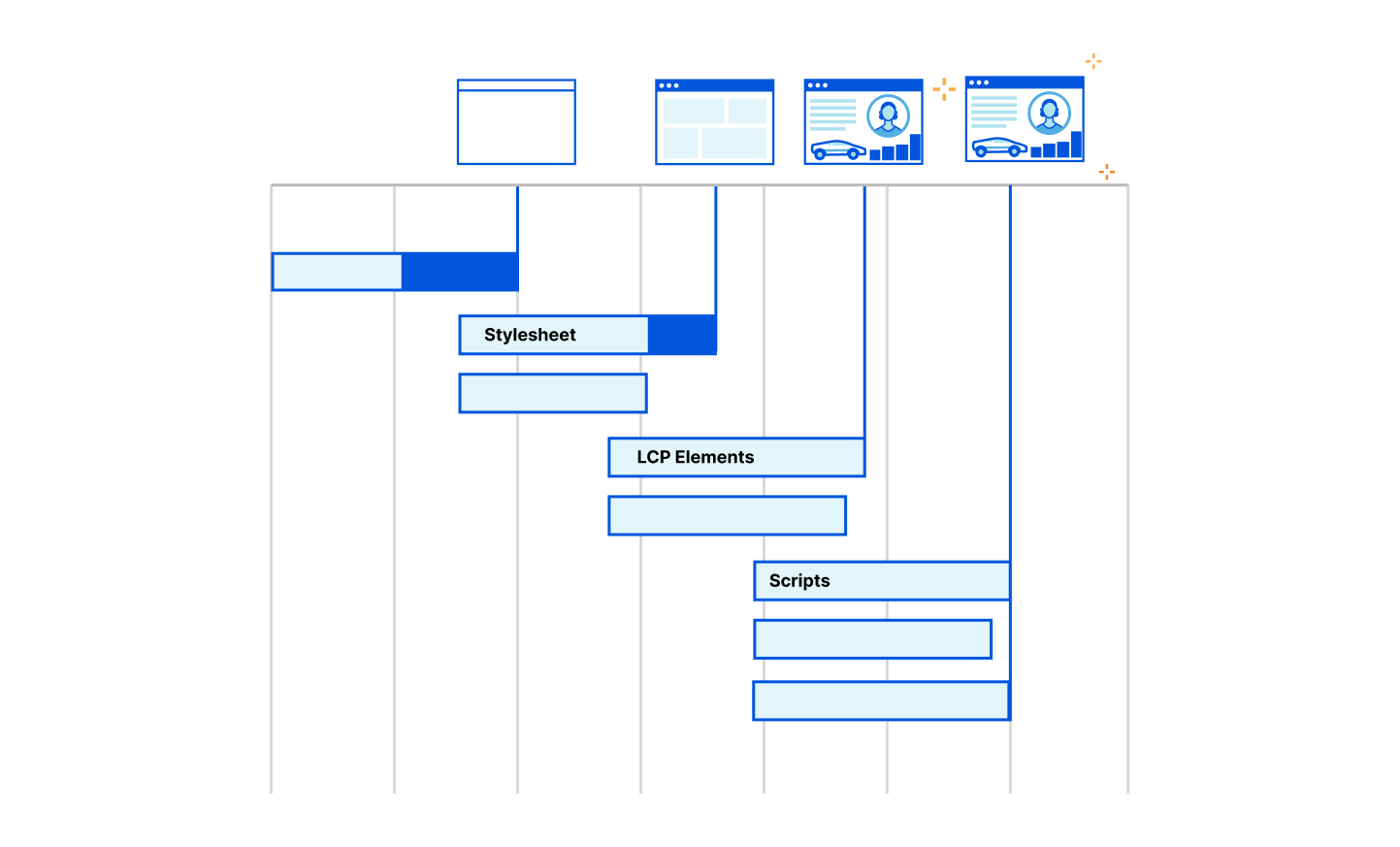

Next, the browser processes the content itself. For HTML responses, the browser extracts important information from the <head> section of the HTML, such as the page title, stylesheets, and scripts. Once this information is parsed, the browser moves on to the response <body> which has the actual page content. During this stage, the browser begins to present the webpage to the visitor.

If the response includes additional 3rd party resources like CSS, JavaScript, or other content, the browser may need to fetch and integrate them into the webpage. Typically, browsers like Google Chrome delay loading images until after the resources in the HTML <head> have loaded. This is also known as “blocking” the render of the webpage. However, developers can override this blocking behavior using fetch priority or other methods to boost other content’s priority in the browser. By adjusting an important image's fetch priority, it can be loaded earlier, which can lead to significant improvements in crucial performance metrics like LCP (Largest Contentful Paint).

Images are so central to web pages that they have become an essential element in measuring website performance from Core Web Vitals. LCP measures the time it takes for the largest visible element, often an image, to be fully rendered on the screen. Optimizing the loading of critical images (like LCP images) can greatly enhance performance, improving the overall user experience and page performance.

But here's the challenge – a browser may not know which images are the most important for the visitor experience (like the LCP image) until rendering begins. If the developer can identify the LCP image or critical elements before it reaches the browser, its priority can be increased at the server to boost website performance instead of waiting for the browser to naturally discover the critical images.

In our Smart Hints blog, we describe how Cloudflare will soon be able to automatically prioritize content on behalf of website developers, but what happens if there’s a need to optimize the priority of the images right now? How do you know if a website is in a suboptimal state and what can you do to improve?

Using Cloudflare, developers should be able to improve image performance with heuristics that identify likely-important images before the browser parses them so these images can have increased priority and be loaded sooner.

Identifying Image Priority opportunities

Simply increasing the fetch priority of every image won't help if the images are lazy-loaded or are not critical/LCP images. Lazy-loading is a method developers use to improve the initial load of a webpage that includes numerous out-of-view elements. For example, as you scroll through Instagram to see more images, it only makes sense to load those images when the user arrives at them; otherwise, the page load would be needlessly delayed by the browser eagerly loading out-of-view images. Instead, the highest priority should be given to the LCP image in the viewport.

So developers are left in a situation where they need to know which images are on users' screens/viewports to increase their priority and which are off their screens to lazy-load them.
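As a rough illustration of that decision, the sketch below maps a guess about where an image sits on the page to the two standard HTML attributes involved, `loading` and `fetchpriority`. The `hintsFor` and `hasConflictingHints` helpers and the `inInitialViewport` / `likelyLcp` inputs are hypothetical names for this example; in practice that knowledge has to come from the developer, the CMS, or a heuristic.

```javascript
// Hypothetical helper: choose loading hints for one <img>.
// `inInitialViewport` and `likelyLcp` are assumed inputs, not values
// that the browser or Cloudflare hands to you directly.
function hintsFor({ inInitialViewport, likelyLcp }) {
  if (!inInitialViewport) {
    // Off-screen: defer the fetch until the visitor scrolls near the image.
    return { loading: 'lazy' };
  }
  if (likelyLcp) {
    // On-screen and probably the LCP element: fetch early, never lazy-load.
    return { fetchpriority: 'high' };
  }
  // On-screen but not critical: leave the browser defaults alone.
  return {};
}

// The two hints work against each other when combined on the same image.
function hasConflictingHints(attrs) {
  return attrs.loading === 'lazy' && attrs.fetchpriority === 'high';
}

console.log(hintsFor({ inInitialViewport: true, likelyLcp: true }));
console.log(hasConflictingHints({ loading: 'lazy', fetchpriority: 'high' }));
```

The helper never returns both hints for one image, which is the combination a blanket "lazy-load everything" default can end up producing once a priority boost is added on top.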

Recently, we’ve seen attempts to influence image priority on behalf of developers. For example, starting in WordPress 5.5, all images with an IMG tag and aspect ratios were lazy-loaded by default. While there are plugins and other methods WordPress developers can use to boost the priority of LCP images, lazy-loading every image by default without knowing which one is the LCP image can introduce artificial delays in page load (they’re working on this though, and have partially resolved this for block themes).

So how do we identify the LCP image and other critical assets before they get to the browser?

To evaluate the opportunity to improve image performance, we turned to the HTTP Archive. Out of the approximately 22 million desktop pages tested in February 2023, 46% had an LCP element with an IMG tag, meaning that the LCP element was an image about half the time. Among these desktop pages, 8.5 million had the image in the static HTML delivered with the page, indicating a total potential improvement opportunity of approximately 39% of the desktop pages within the dataset.

In the case of mobile pages, out of the ~28.5 million tested, 40% had an LCP element as an IMG tag. Among these mobile pages, 10.3 million had the image in the static HTML delivered with the page, suggesting a potential improvement opportunity in around 36% of the mobile pages within the dataset.

However, as previously discussed, prioritizing an image won't be effective if the image is lazy-loaded because the directives are contradictory. In the dataset, approximately 1.8 million LCP desktop images and 2.4 million LCP mobile images were lazy-loaded.

Therefore, across the Internet, the opportunity to improve image performance covers about 30% of pages: those that have an LCP image in the original HTML markup that wasn’t lazy-loaded. With a more advanced Cloudflare Worker, the additional 9% of pages with lazy-loaded LCP images can also be improved by removing the lazy-load attribute.
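The arithmetic behind these shares can be reproduced with a short sketch. The page counts are the HTTP Archive figures quoted above (in millions); the `pct` and `opportunity` helper names are ours, and the calculation assumes that the lazy-loaded LCP images are a subset of the LCP images found in the static HTML.

```javascript
// Page counts in millions, as quoted above from the February 2023 HTTP Archive data.
const dataset = {
  desktop: { pages: 22, lcpImgInHtml: 8.5, lcpImgLazy: 1.8 },
  mobile: { pages: 28.5, lcpImgInHtml: 10.3, lcpImgLazy: 2.4 },
};

// Share of all tested pages, as a percentage rounded to one decimal place.
function pct(part, whole) {
  return Math.round((part / whole) * 1000) / 10;
}

// Assumption: lazy-loaded LCP images are counted within `lcpImgInHtml`.
function opportunity({ pages, lcpImgInHtml, lcpImgLazy }) {
  return {
    inHtml: pct(lcpImgInHtml, pages),                 // LCP image present in static HTML
    lazyLoaded: pct(lcpImgLazy, pages),               // ...and lazy-loaded
    notLazy: pct(lcpImgInHtml - lcpImgLazy, pages),   // ...and not lazy-loaded
  };
}

for (const [device, counts] of Object.entries(dataset)) {
  console.log(device, opportunity(counts));
}
```

With these inputs, desktop works out to roughly 39% of pages with the LCP image in the HTML, about 8% lazy-loaded and about 30% not lazy-loaded; mobile comes to roughly 36%, 8% and 28%. The text rounds these figures slightly differently.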

If you’d like to determine which element on your website serves as the LCP element so you can increase the priority or remove any lazy-loading, you can use browser developer tools, or speed tests like Webpagetest or Cloudflare Observatory.
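If you prefer to check from the browser console, the following sketch uses the standard `PerformanceObserver` API with the `largest-contentful-paint` entry type to report the current LCP candidate. The `describeLcpEntry` helper is a hypothetical name for this example, and the observer only runs in browsers that support this entry type.

```javascript
// Summarise one largest-contentful-paint entry. The entry fields used here
// (element, url, startTime) come from the Largest Contentful Paint API.
function describeLcpEntry(entry) {
  const el = entry.element; // can be null, e.g. if the element was removed
  return {
    tag: el ? el.tagName : null,
    url: entry.url || null, // populated when the LCP element is an image
    lazy: el ? el.getAttribute('loading') === 'lazy' : false,
    startTimeMs: Math.round(entry.startTime),
  };
}

// Guarded so the snippet is a no-op where the entry type is unsupported.
if (
  typeof PerformanceObserver !== 'undefined' &&
  (PerformanceObserver.supportedEntryTypes || []).includes('largest-contentful-paint')
) {
  new PerformanceObserver((list) => {
    const entries = list.getEntries();
    // The most recent entry is the current LCP candidate.
    console.log(describeLcpEntry(entries[entries.length - 1]));
  }).observe({ type: 'largest-contentful-paint', buffered: true });
}
```

If the reported element is an `IMG` with `lazy: true`, that page falls into the lazy-loaded LCP group discussed above.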

An opportunity on 39% of desktop pages is a lot of room to improve image performance. So the next question is: how can Cloudflare determine the LCP image across our network and automatically prioritize it?

Image Index

We thought that how soon the LCP image showed up in the HTML would serve as a useful indicator. So we analyzed the HTTP Archive dataset to see where the cumulative percentage of LCP images are discovered based on their position in the HTML, including lazy-loaded images.

We found that approximately 25% of the pages had the LCP image as the first image in the HTML (around 10% of all pages). Another 25% had the LCP image as the second image. WordPress seemed to arrive at a similar conclusion and recently shipped a change that removes the default lazy-load attribute from the first image on block themes, but there are opportunities to go further.

Our analysis revealed that implementing a straightforward rule like "do not lazy-load the first four images," either through the browser, a content management system (CMS), or a Cloudflare Worker could address approximately 75% of the issue of lazy-loading LCP images (example Worker below).

Ignoring small images

In trying to find other ways to identify likely LCP images, we next turned to the size of the image. To increase the likelihood of getting the LCP image early in the HTML, we looked into ignoring “small” images, as they are unlikely to be big enough to be an LCP element. We explored several sizes, and 10,000 pixels (less than 100×100) was a pretty reliable threshold that didn’t skip many LCP images and avoided a good chunk of the non-LCP images.

By ignoring small images (<10,000px), we found that the first image became the LCP image in approximately 30-34% of cases. Adding the second image increased this percentage to 56-60% of pages.

Therefore, to improve image priority, a potential approach could involve assigning a higher priority to the first four "not-small" images.
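To make the heuristic concrete, here is a minimal sketch of the selection step on its own, separate from any Worker. The `pickImagesToBoost` function and the shape of its input (a list of `<img>` attributes in document order) are assumptions for this example; the limit of four and the 10,000 pixel threshold come from the analysis above.

```javascript
const MAX_BOOSTED = 4;      // "first four" rule from the analysis above
const MIN_AREA_PX = 10000;  // width * height below this counts as "small"

// `images` is an assumed input: <img> attributes in document order,
// e.g. [{ src, width, height }]. Images without dimensions count as "not small".
function pickImagesToBoost(images) {
  const picked = [];
  for (const img of images) {
    if (picked.length === MAX_BOOSTED) break;
    const w = Number.parseInt(img.width, 10);
    const h = Number.parseInt(img.height, 10);
    // NaN comparisons are false, so missing dimensions never mark an image small.
    const isSmall = w === 0 || h === 0 || w * h < MIN_AREA_PX;
    if (!isSmall) picked.push(img.src);
  }
  return picked;
}

const page = [
  { src: 'logo.png', width: '80', height: '40' },      // small: skipped
  { src: 'hero.jpg', width: '1200', height: '600' },
  { src: 'pixel.gif', width: '1', height: '1' },       // tracking pixel: skipped
  { src: 'card-1.jpg', width: '400', height: '300' },
  { src: 'card-2.jpg' },                               // no dimensions: kept
  { src: 'card-3.jpg', width: '400', height: '300' },
  { src: 'card-4.jpg', width: '400', height: '300' },  // fifth candidate: left alone
];
console.log(pickImagesToBoost(page));
```

Skipping the logo and the tracking pixel is what lets the hero image count as the first candidate instead of the second or third.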

Chrome 114 Image Prioritization Experiment

An experiment running in Chrome 114 does exactly what we described above. Within the browser there are a few different prioritization knobs to play with that aren’t web-exposed so we have the opportunity to assign a “medium” priority to images that we want to boost automatically (directly controlling priority with “fetch priority” lets you set high or low). This will let us move the images ahead of other images, async scripts and parser-blocking scripts late in the body but still keep the boosted image priority below any high-priority requests, particularly dynamically-injected blocking scripts.

We are experimenting with boosting the priority of varying numbers of images (2, 5 and 10) and with allowing one of those medium-priority images to load at a time during Chrome’s “tight” mode (when it is loading the render-blocking resources in the head) to increase the likelihood that the LCP image will be available when the first paint is done.

The data is still coming in and no “ship” decisions have been made yet but the early results are very promising, improving the LCP time across the entire web for all arms of the experiment (not by massive amounts but moving the metrics of the whole web is notoriously difficult).

How to use Cloudflare Workers to boost performance

Now that we’ve seen that there is a large opportunity across the Internet for helping prioritize images for performance and how to identify images on individual pages that are likely LCP images, the question becomes, what would the results be of implementing a network-wide rule that could boost image priority from this study?

We built a test Worker and deployed it on some WordPress test sites with our friends at Rocket.net, a WordPress hosting platform focused on performance. This Worker boosts the priority of the first four “not-small” images while removing the lazy-load attribute, if present. When deployed, we saw good performance results and the expected image prioritization.

```javascript
export default {
  async fetch(request) {
    const response = await fetch(request);

    // Only rewrite HTML responses; pass everything else through untouched
    const contentType = response.headers.get('Content-Type');
    if (!contentType || !contentType.includes('text/html')) {
      return response;
    }

    return transformResponse(response);
  },
};

function transformResponse(response) {
  // Create an HTMLRewriter instance and define the image transformation logic
  const rewriter = new HTMLRewriter().on('img', new ImageElementHandler());

  // transform() returns a new Response whose body is rewritten as it streams,
  // so the page is not buffered in the Worker before being sent on
  return rewriter.transform(response);
}

class ImageElementHandler {
  constructor() {
    this.imageCount = 0;
    this.processedImages = new Set();
  }

  element(element) {
    const imgSrc = element.getAttribute('src');

    // Boost the first four distinct images that are not "small"
    if (imgSrc && this.imageCount < 4 && !this.processedImages.has(imgSrc) && !isImageSmall(element)) {
      // Lazy-loading contradicts a high fetch priority, so remove it first
      element.removeAttribute('loading');
      element.setAttribute('fetchpriority', 'high');
      this.processedImages.add(imgSrc);
      this.imageCount++;
    }
  }
}

function isImageSmall(element) {
  // Read the width and height attributes, if the element has them
  const width = element.getAttribute('width');
  const height = element.getAttribute('height');

  // If width or height is 0, consider the image small
  if ((width && parseInt(width, 10) === 0) || (height && parseInt(height, 10) === 0)) {
    return true;
  }

  // If both are present and width * height < 10000, consider the image small
  if (width && height) {
    const area = parseInt(width, 10) * parseInt(height, 10);
    if (area < 10000) {
      return true;
    }
  }

  // Images without usable dimensions are treated as not small
  return false;
}
```

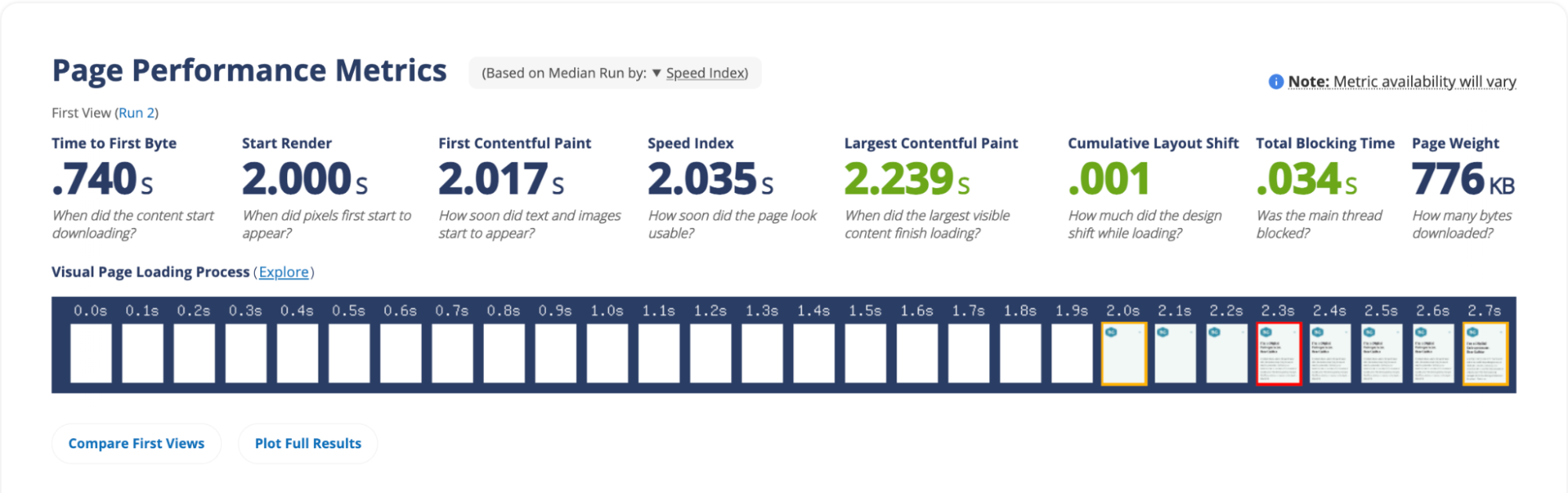

When testing the Worker, we saw that default image priority was boosted into “high” for the first four images and the fifth image remained “low.” This resulted in an LCP range of “good” from a speed test. While this initial test is not a dispositive indicator that the Worker will boost performance in every situation, the results are promising and we look forward to continuing to experiment with this idea.

While we’ve experimented with WordPress sites to illustrate the issues and potential performance benefits, this issue is present across the Internet.

Website owners can help us experiment with the Worker above to improve the priority of images on their websites or edit it to be more specific by targeting likely LCP elements. Cloudflare will continue experimenting using a very similar process to understand how to safely implement a network-wide rule to ensure that images are correctly prioritized across the Internet and performance is boosted without the need to configure a specific Worker.
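One way to make the Worker more specific is to match only the element you already know to be the LCP image, rather than counting images. The sketch below assumes the site marks its hero image with a class; the `img.hero-image` selector and the `LcpImageHandler` / `prioritizeLcpImage` names are hypothetical and would need to be adapted to your own markup.

```javascript
// Hypothetical selector: assumes the theme marks its hero image with this class.
const LCP_SELECTOR = 'img.hero-image';

class LcpImageHandler {
  element(element) {
    // Lazy-loading would cancel out the priority boost, so drop it first.
    element.removeAttribute('loading');
    element.setAttribute('fetchpriority', 'high');
  }
}

// Wiring for a Worker: HTMLRewriter only exists in the Workers runtime, so
// call this from a fetch handler in place of transformResponse() above.
function prioritizeLcpImage(response) {
  return new HTMLRewriter()
    .on(LCP_SELECTOR, new LcpImageHandler())
    .transform(response);
}
```

Because only the matched element is touched, every other image keeps whatever lazy-loading the CMS applied. The trade-off is that the selector has to be kept in sync with the theme.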

But what about APO?

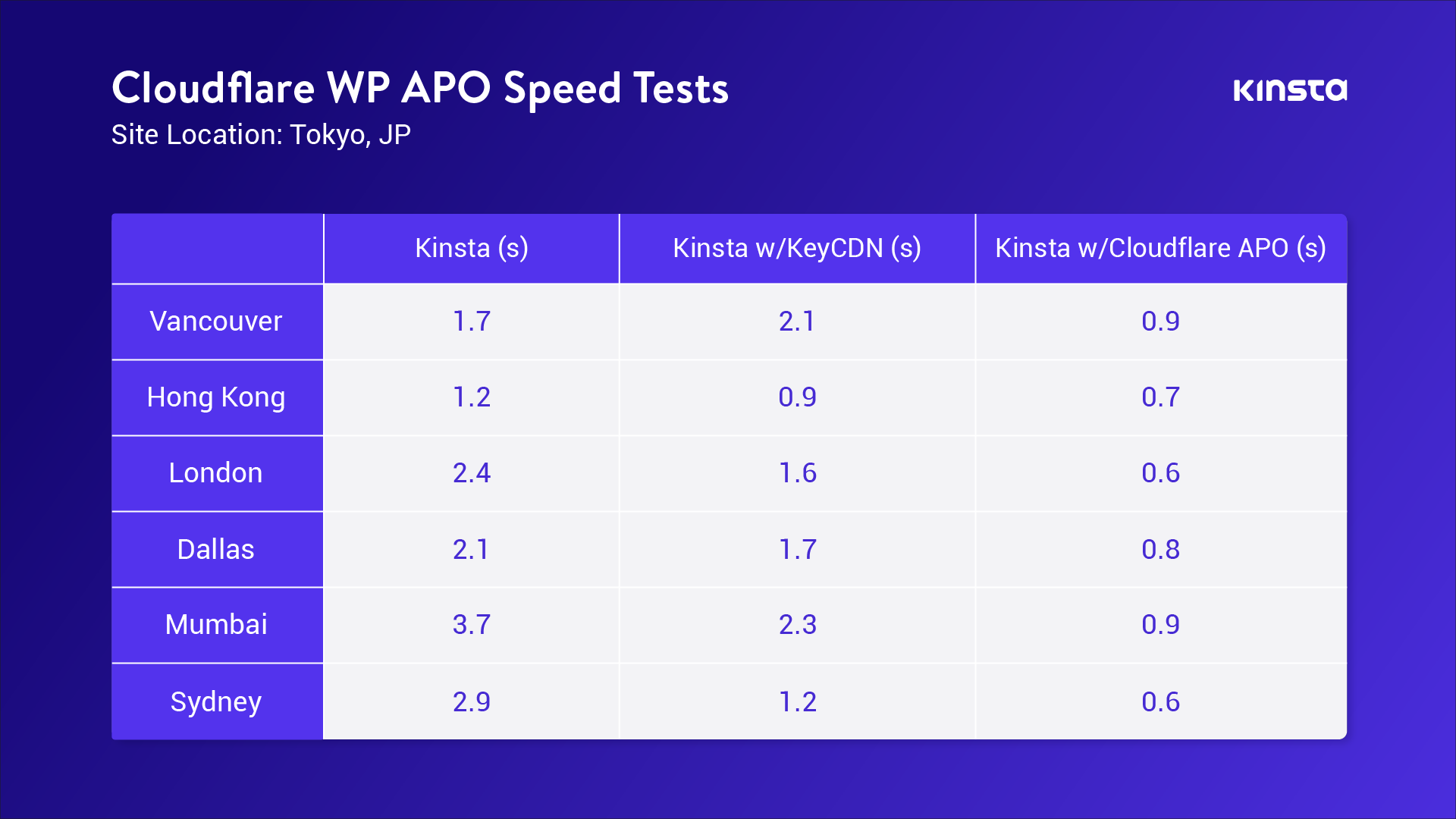

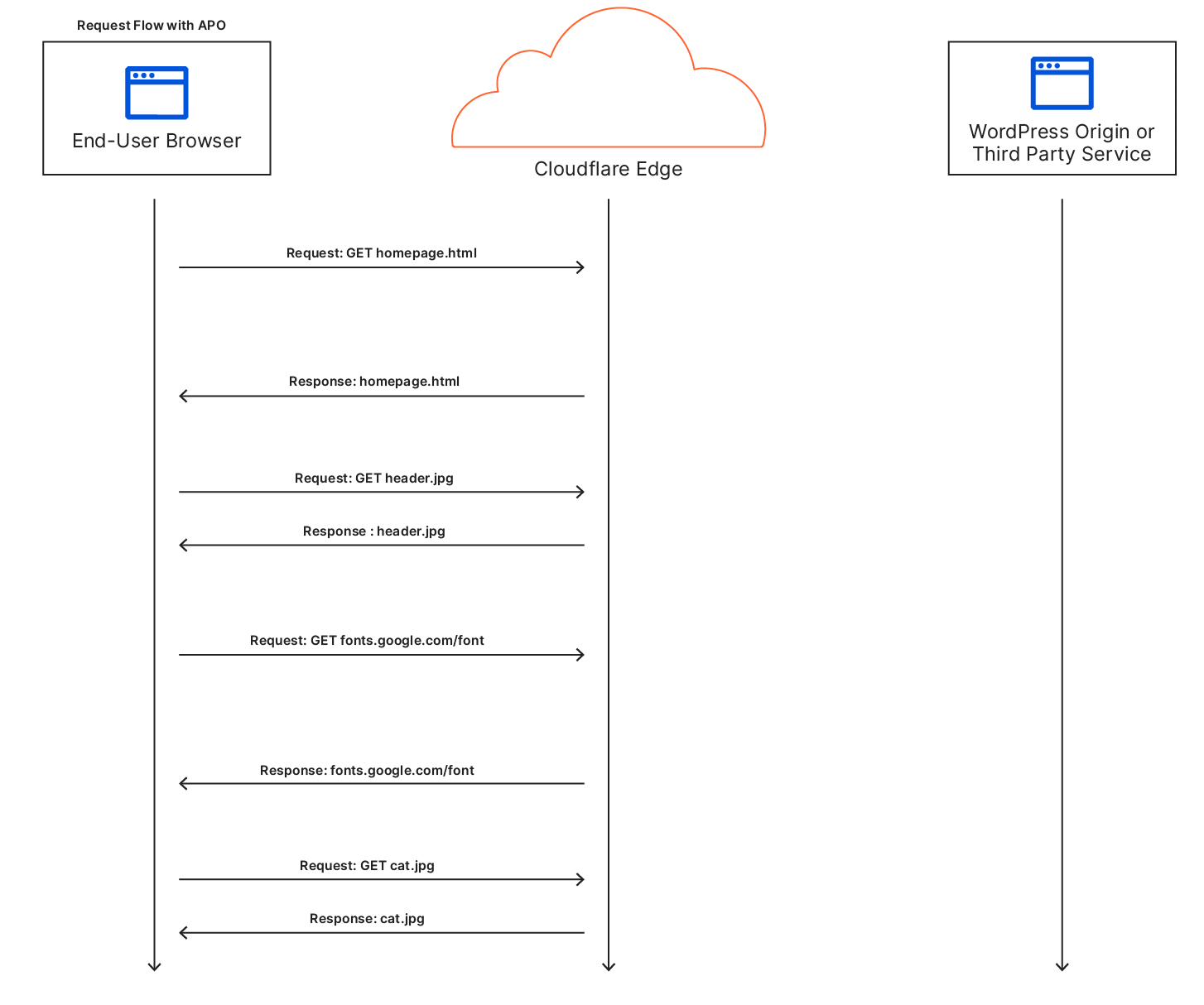

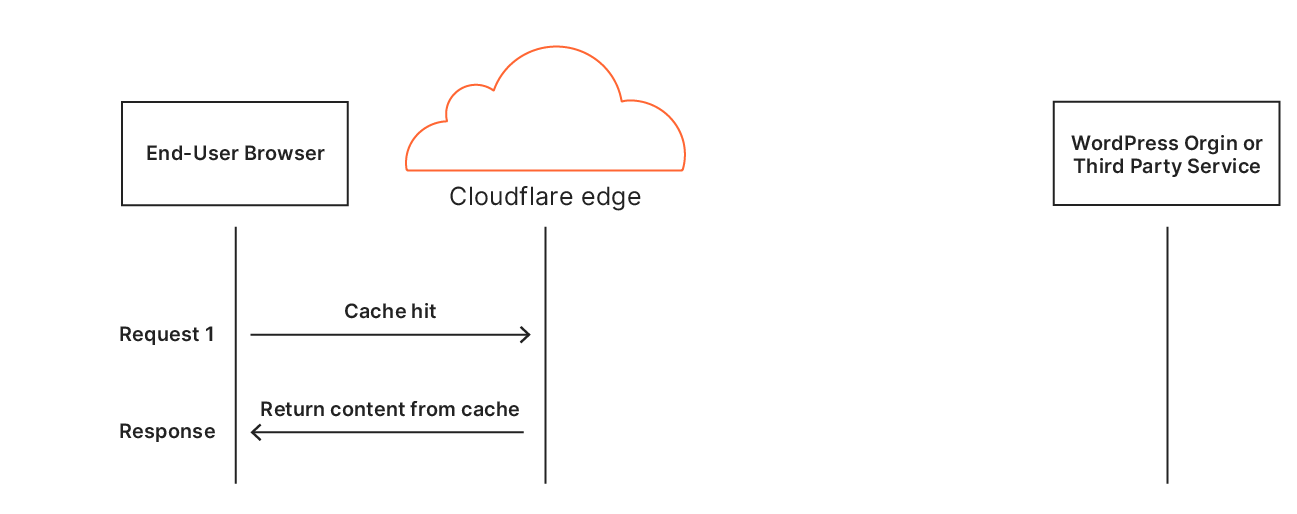

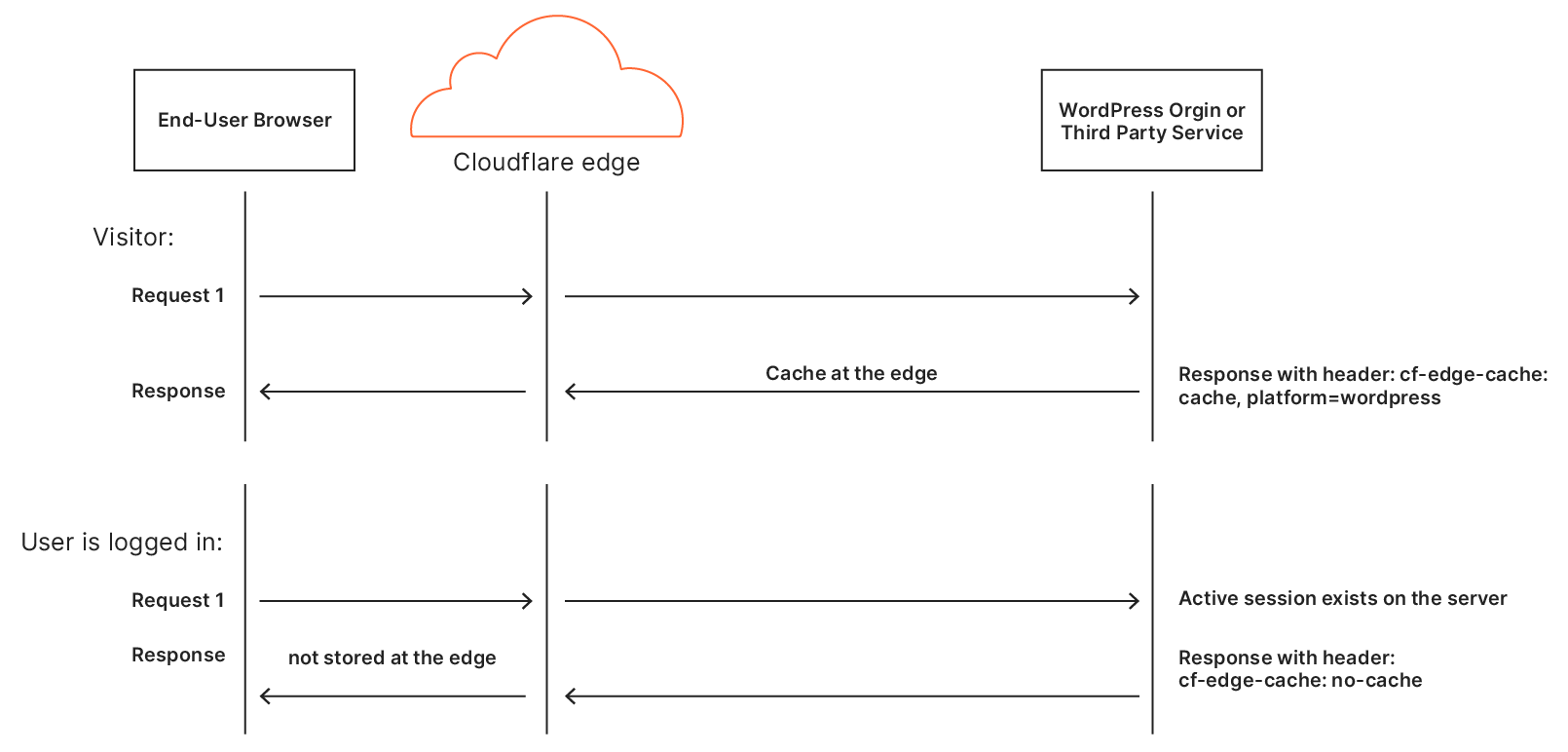

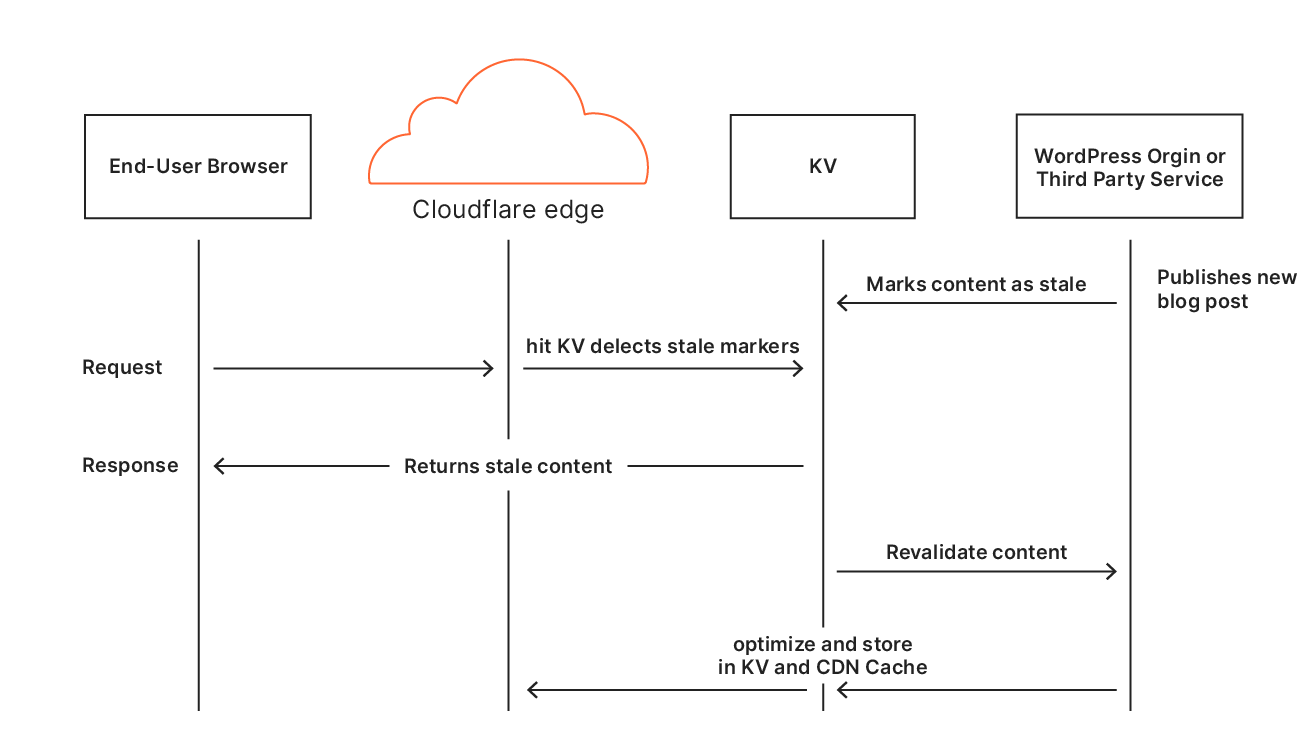

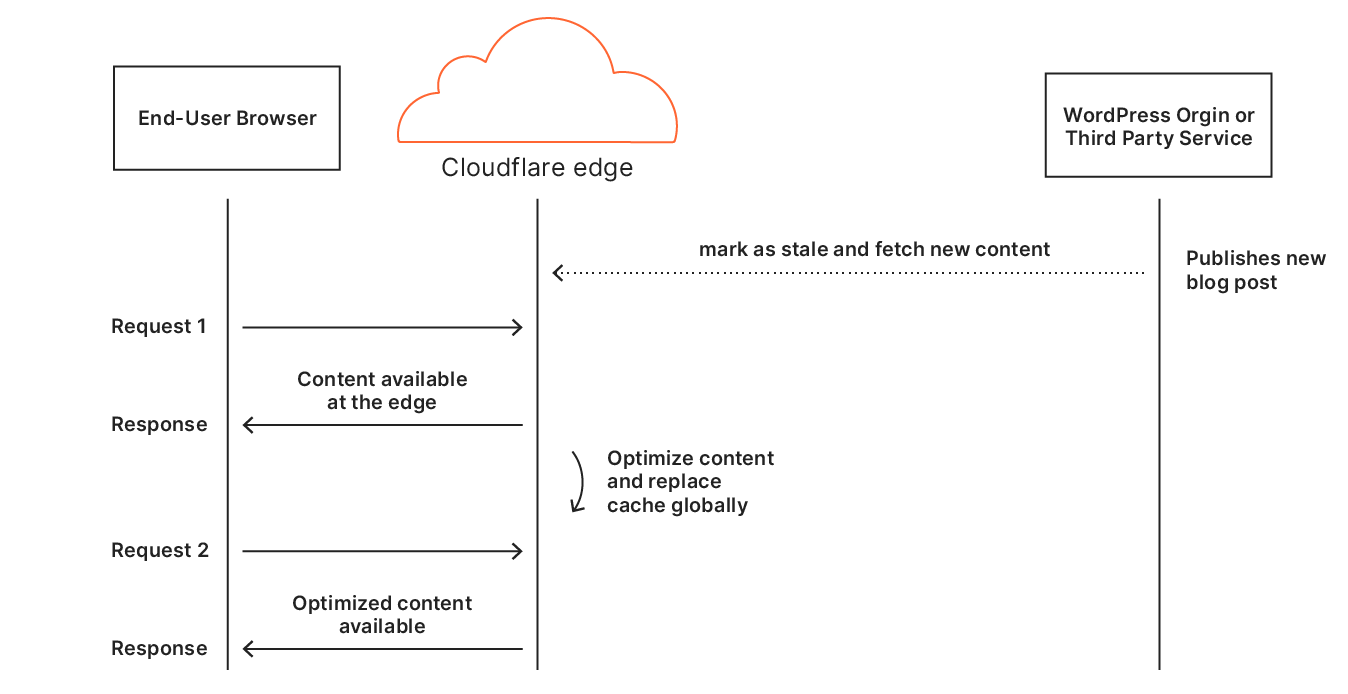

Cloudflare’s Automatic Platform Optimization (APO) is a plugin for WordPress which allows Cloudflare to deliver your entire WordPress site from our network ensuring consistent, fast performance for visitors. By serving cached sites, APO can improve performance metrics. APO does not currently have a way to prioritize images over other assets to improve browser render metrics or dynamically rewrite HTML, techniques we’ve discussed in this post. Although this presents a potential opportunity for future development, it requires thorough testing to ensure safe and reliable support.

In the future, we may look to include the techniques discussed today as part of APO. In the meantime, we recommend using Snippets (and Experiments) to test the code example above and see the performance impact on your website.

Get in touch!

If you are interested in using the JavaScript above, we recommend testing with Workers or using Cloudflare Snippets. We’d love to hear what your results were. Get in touch via social media and share your experiences.