Post Syndicated from Daniela Dorneanu original https://aws.amazon.com/blogs/big-data/build-secure-encrypted-data-lakes-with-aws-lake-formation/

Maintaining customer data privacy, protecting against intellectual property loss, and complying with data protection laws are essential objectives of today’s organizations. To protect data against security threats, vulnerabilities within the organization, malicious software, or cybercrime, organizations are increasingly encrypting their data. Although you can enable server-side encryption in Amazon Simple Storage Service (Amazon S3), you may prefer to manage your own encryption keys. AWS Key Management Service (AWS KMS) makes it easy to create, rotate, and disable cryptographic keys across a wide range of AWS services, including for your data lake in Amazon S3.

AWS Lake Formation is a one-stop service to build and manage your data lake. Among its many features, it allows discovering and cataloging data sources, setting up transformation jobs, configuring fine-grained data access and security policies, and auditing and controlling access from data lake consumers. You can also provide column-level security, which is an essential feature when you want to protect personally identifiable information (PII).

Using AWS KMS with Lake Formation requires several steps, which we discuss in this post. We create a complete solution for processing encrypted data using customer managed keys with Lake Formation, Amazon Athena, AWS Glue, and AWS KMS. We use an S3 bucket registered through Lake Formation, which only accepts encrypted data with customer managed keys. Additionally, we demonstrate how to easily restrict access to PII data for data analysis stakeholders.

To demonstrate the solution, we upload an encrypted document to the S3 bucket and run data transformations using AWS Glue. The processed data is written back to Amazon S3 in encrypted form. We automated this solution using AWS CloudFormation to provide an end-to-end deployment of data lakes supporting encryption.

Solution overview

We use AWS CloudFormation to deploy the data transformation pipeline and explain all the configurations necessary to achieve end-to-end encryption of your data into a data lake.

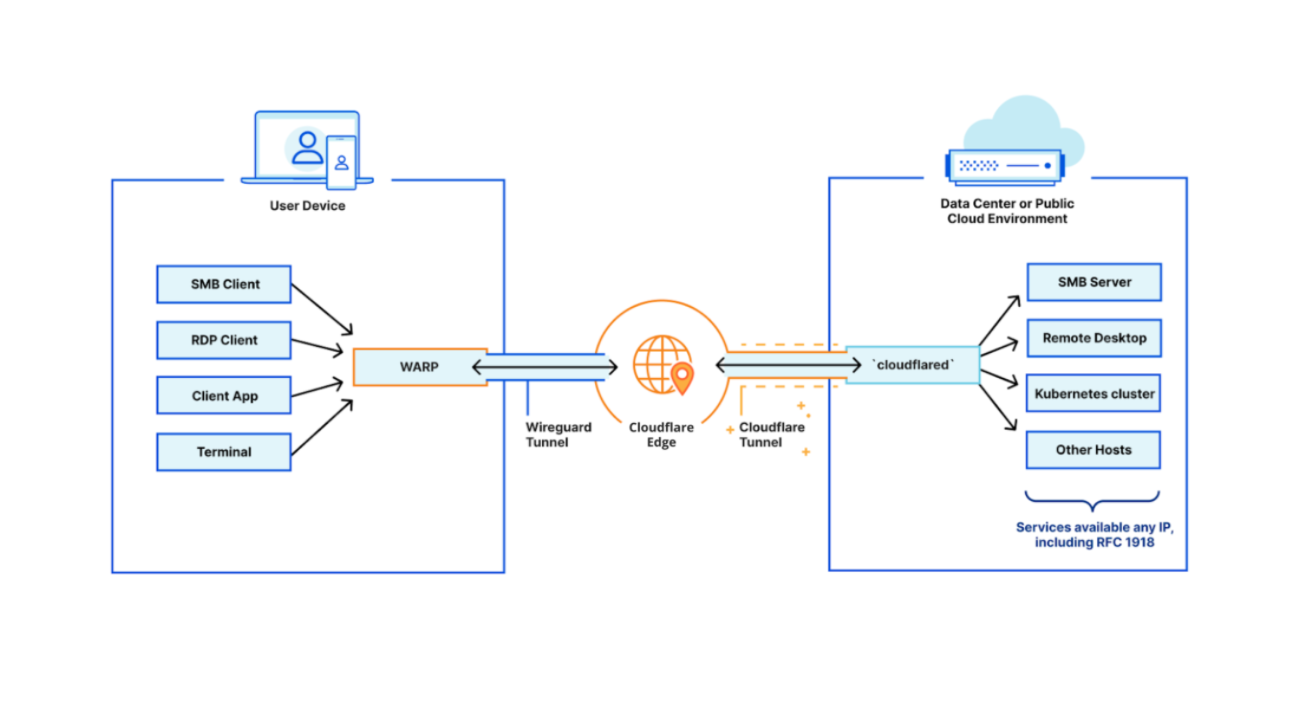

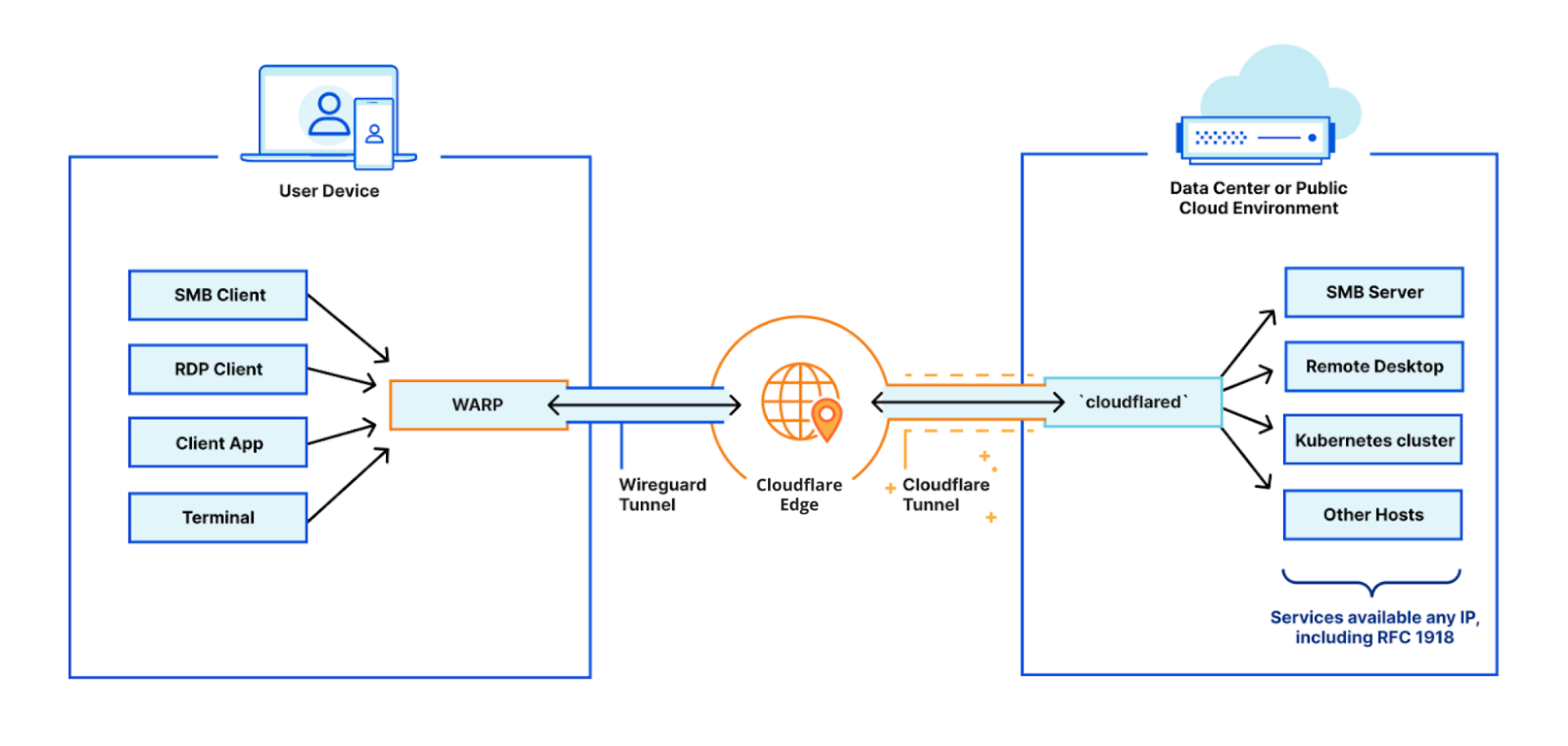

The following diagram shows a generic infrastructure of a serverless data lake enhanced by encryption. Transformations such as removing duplicated or bad data are required. Afterward, we want to automatically catalog the data to use it with our consumers (through SQL querying, analytics dashboards, or machine learning services).

The reproducible pattern to support customer managed key encryption requires the following steps:

- Configure the S3 bucket to use server-side encryption.

- Set up a KMS key policy to allow the AWS Identity and Access Management (IAM) role for Lake Formation to use the key for encryption.

- Create the AWS Glue security configuration to specify the keys to use for encryption with AWS Glue.

Prerequisites

Before getting started, complete the following prerequisites:

- Sign in to the AWS Management Console and choose the US East (N. Virginia) Region for this sample deployment.

- Ensure that Lake Formation has the administrators set up, and the default permissions go through Lake Formation for all newly created databases and tables.
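These Lake Formation settings can also be applied programmatically. The following is a minimal sketch, assuming boto3 is installed and credentials are configured. The function names and the administrator ARNs you pass in are illustrative and are not part of the post's repository. It reads the current data lake settings, adds administrators, and clears the IAM-only defaults for new databases and tables so that Lake Formation permissions apply.

```python
import copy


def with_lake_formation_defaults(current_settings, admin_arns):
    """Return a copy of the settings with extra admins and no IAM-only defaults."""
    settings = copy.deepcopy(current_settings)
    admins = list(settings.get("DataLakeAdmins", []))
    known = {a["DataLakePrincipalIdentifier"] for a in admins}
    for arn in admin_arns:
        if arn not in known:
            admins.append({"DataLakePrincipalIdentifier": arn})
            known.add(arn)
    settings["DataLakeAdmins"] = admins
    # Empty default permissions mean new databases and tables are not
    # opened up through the IAM-only access control defaults.
    settings["CreateDatabaseDefaultPermissions"] = []
    settings["CreateTableDefaultPermissions"] = []
    return settings


def apply_settings(admin_arns, region="us-east-1"):
    """Read, update, and write back the data lake settings (calls AWS)."""
    import boto3  # imported lazily so the helper above works without boto3

    lakeformation = boto3.client("lakeformation", region_name=region)
    current = lakeformation.get_data_lake_settings()["DataLakeSettings"]
    lakeformation.put_data_lake_settings(
        DataLakeSettings=with_lake_formation_defaults(current, admin_arns)
    )
```

Because put_data_lake_settings replaces the whole settings object, the sketch starts from the current settings rather than building a new object from scratch.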

Deploy the solution

To deploy the solution, complete the following steps:

- On the Lake Formation console, choose Add administrators.

- Add your current role and user as an administrator.

- In the navigation pane, under Data catalog, select Settings.

- Deselect Use only IAM access for new databases and Use only IAM access control for new tables in new databases.

This makes sure that both the IAM and Lake Formation permission models are used.

- Choose Save.

- Download the content from the following GitHub repository. The repo should contain the following files:

  - The raw data sample file data.json

  - The AWS Glue script sample script.py

  - The CloudFormation template lakeformation_encryption_demo.yaml

- Create an S3 bucket in us-east-1 and upload the AWS Glue script.

- Record the script path to use as a parameter for the CloudFormation stack.

You now deploy the CloudFormation stack.

- Choose Launch Stack:

- Leave the default location for the template and choose Next.

- On the Specify stack details page, enter a stack name.

- For GlueJobScriptBucketPath, enter the bucket containing the AWS Glue script.

- For DataLakeBucket, enter the name of the bucket that the stack creates.

- On the Configure stack options page, choose Next.

- On the Review page, select the check boxes.

- Choose Create stack.

At this point, you have successfully created the resources for the Data Lake solution supporting end-to-end encryption.
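If you prefer to script the deployment instead of using the console, a sketch along the following lines may work. It assumes boto3 is installed, that the template file lakeformation_encryption_demo.yaml is available locally, and that the two parameters named in the steps above are the only ones you need to set. The requested capabilities are an assumption based on the acknowledgment check boxes on the Review page.

```python
def stack_parameters(script_path, data_lake_bucket):
    """Build the CloudFormation parameter list for the two inputs named in the post."""
    return [
        {"ParameterKey": "GlueJobScriptBucketPath", "ParameterValue": script_path},
        {"ParameterKey": "DataLakeBucket", "ParameterValue": data_lake_bucket},
    ]


def deploy_stack(stack_name, template_path, script_path, data_lake_bucket,
                 region="us-east-1"):
    """Create the stack and wait until creation completes (calls AWS)."""
    import boto3  # imported lazily so stack_parameters works without boto3

    with open(template_path, encoding="utf-8") as handle:
        template_body = handle.read()

    cloudformation = boto3.client("cloudformation", region_name=region)
    cloudformation.create_stack(
        StackName=stack_name,
        TemplateBody=template_body,
        Parameters=stack_parameters(script_path, data_lake_bucket),
        # Assumption: the template creates (named) IAM roles.
        Capabilities=["CAPABILITY_IAM", "CAPABILITY_NAMED_IAM"],
    )
    cloudformation.get_waiter("stack_create_complete").wait(StackName=stack_name)
```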

The stack deploys an S3 bucket in which you upload the file, and registers that bucket within Lake Formation. An AWS Glue job transforms the data into Parquet format, and an AWS Glue crawler detects the schema of the processed data. Additionally, the stack deploys all the AWS KMS resources, which we describe in detail in the next section.

What is happening in the background?

In this section, we describe the encryption and decryption process in more detail. Namely, we discuss how encrypted data is uploaded to the S3 bucket, and the role the AWS Glue security configuration plays in configuring AWS Glue jobs and crawlers to use a particular KMS key.

KMS key

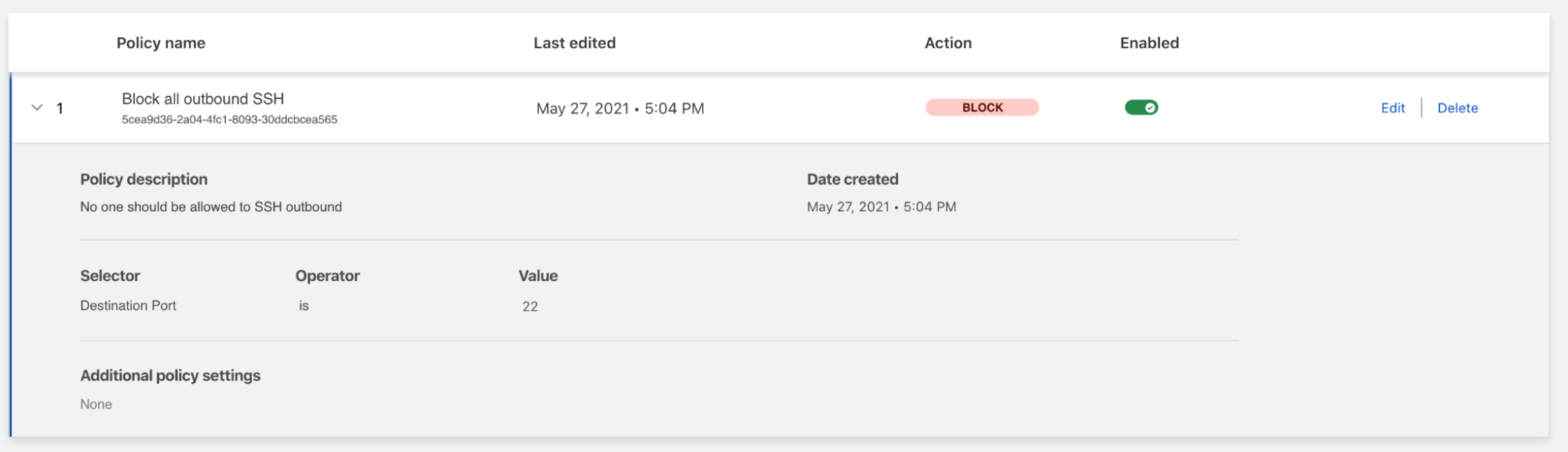

As shown in the following screenshot, the KMS key policy enables access for several IAM roles.

lake-formation-demo-role: Lake Formation is the central service managing access to the data. To enable the Lake Formation service to use the KMS key, the key policy used within this solution includes the IAM role used to register the S3 bucket with Lake Formation.

demo-lake-formation-glue-job-role: The AWS Glue job role also needs to use the KMS key to encrypt the output data after running the ETL job.

demo-lake-formation-glue-crawler-role: Lastly, the AWS Glue crawler uses the KMS key to decrypt the data and infer the schema of the data.

Learn more about registering an S3 location to Lake Formation in the AWS documentation.
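To make the shape of such a key policy concrete, here is a rough sketch that builds a policy document for the three roles named above. The role names come from this post; the list of KMS actions, the statement IDs, and the account administration statement are assumptions, and the policy in the CloudFormation template may differ.

```python
import json

# Role names as listed in the post.
KEY_USER_ROLES = [
    "lake-formation-demo-role",
    "demo-lake-formation-glue-job-role",
    "demo-lake-formation-glue-crawler-role",
]

# Assumption: a typical set of key usage actions for encrypting and decrypting data.
KEY_USAGE_ACTIONS = [
    "kms:Encrypt",
    "kms:Decrypt",
    "kms:ReEncrypt*",
    "kms:GenerateDataKey*",
    "kms:DescribeKey",
]


def build_key_policy(account_id, role_names=KEY_USER_ROLES):
    """Return a KMS key policy that lets the given IAM roles use the key."""
    role_arns = [f"arn:aws:iam::{account_id}:role/{name}" for name in role_names]
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Sid": "AllowAccountAdministration",
                "Effect": "Allow",
                "Principal": {"AWS": f"arn:aws:iam::{account_id}:root"},
                "Action": "kms:*",
                "Resource": "*",
            },
            {
                "Sid": "AllowDataLakeRolesToUseKey",
                "Effect": "Allow",
                "Principal": {"AWS": role_arns},
                "Action": KEY_USAGE_ACTIONS,
                "Resource": "*",
            },
        ],
    }


if __name__ == "__main__":
    # 111122223333 is a placeholder account ID.
    print(json.dumps(build_key_policy("111122223333"), indent=2))
```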

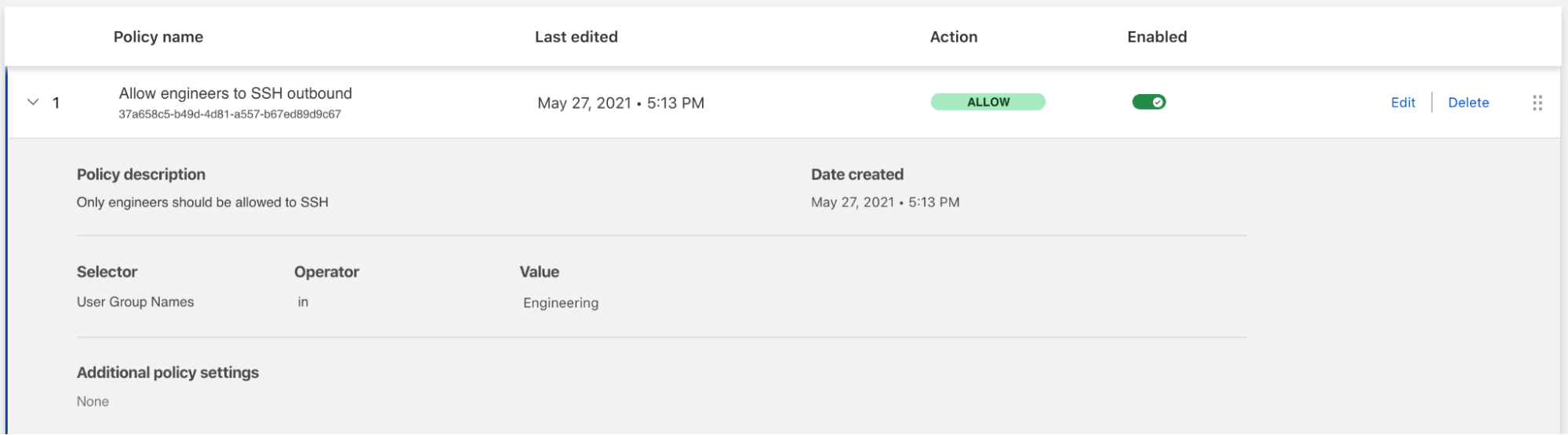

Amazon S3 storage uploads only encrypted data

The data lake S3 bucket has a bucket policy enforcing encryption on all the data uploaded to the bucket with the KMS key. This also allows any user to use their own KMS keys to encrypt the data. Additionally, teams within an organization can use different keys when uploading the data, supporting separation of access within an organization.

The following screenshot shows the S3 bucket policy implemented through the CloudFormation stack. The policy denies Amazon S3 Put API calls for objects that aren’t AWS KMS encrypted.
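A bucket policy of this kind can be sketched as follows. This is an illustration under stated assumptions rather than the exact policy from the template: the statement ID is made up, and the condition simply denies s3:PutObject requests whose server-side encryption header is not aws:kms.

```python
import json


def deny_unencrypted_put_policy(bucket):
    """Return a bucket policy that denies uploads not encrypted with AWS KMS."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Sid": "DenyPutObjectWithoutKmsEncryption",  # illustrative name
                "Effect": "Deny",
                "Principal": "*",
                "Action": "s3:PutObject",
                "Resource": f"arn:aws:s3:::{bucket}/*",
                "Condition": {
                    "StringNotEquals": {
                        "s3:x-amz-server-side-encryption": "aws:kms"
                    }
                },
            }
        ],
    }


def apply_bucket_policy(bucket, region="us-east-1"):
    """Attach the policy to the bucket (calls AWS)."""
    import boto3  # imported lazily so the policy builder works without boto3

    s3 = boto3.client("s3", region_name=region)
    s3.put_bucket_policy(
        Bucket=bucket, Policy=json.dumps(deny_unencrypted_put_policy(bucket))
    )
```

Because the condition checks only the encryption type and not a specific key ID, uploads encrypted with any KMS key pass, which matches the behavior described above where teams can use different keys.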

AWS Glue security configuration

An AWS Glue security configuration contains the properties needed when you read and write encrypted data. To create and view all AWS Glue security configurations, on the AWS Glue console, choose Security configurations in the navigation pane.

A security configuration was added to the AWS Glue job and the crawler to configure what encryption key AWS Glue should use when running a job or a crawler.
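As an illustration, a security configuration that uses a KMS key for Amazon S3 encryption could be created with boto3 roughly as follows. The configuration name and key ARN are placeholders you supply, and this sketch sets only the S3 encryption part; whether the stack also configures Amazon CloudWatch or job bookmark encryption is not stated in this post.

```python
def security_configuration(name, kms_key_arn):
    """Build the arguments for an AWS Glue security configuration using SSE-KMS on S3."""
    return {
        "Name": name,
        "EncryptionConfiguration": {
            "S3Encryption": [
                {"S3EncryptionMode": "SSE-KMS", "KmsKeyArn": kms_key_arn}
            ]
        },
    }


def create_security_configuration(name, kms_key_arn, region="us-east-1"):
    """Create the security configuration in AWS Glue (calls AWS)."""
    import boto3  # imported lazily so the builder above works without boto3

    glue = boto3.client("glue", region_name=region)
    glue.create_security_configuration(**security_configuration(name, kms_key_arn))
```

The job and the crawler then reference the configuration by name, which is how AWS Glue knows which key to use when reading and writing data.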

Test the solution

In this section, we walk through the steps of the end-to-end encryption pipeline:

- Upload sample data to Amazon S3.

- Run the AWS Glue job.

- Give permissions to the AWS Glue crawler to the Amazon S3 location and run the crawler.

- Set up permissions for the current role to query the new table.

- Run an Athena query.

Upload sample data to Amazon S3

Use the following command to upload a sample file to Amazon S3:

For the <LAKE_FORMATION_KMS_DATA_KEY> value, enter the key ID of the KMS key with the alias lakeformation-kms-data-key, which you can find on the AWS KMS console.

In the preceding command, data.json is the file that we upload to Amazon S3, and we specify the prefix raw. While uploading, we provide the KMS key to encrypt the file with this encryption key.
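An equivalent upload can be sketched with boto3. This is not the command from the original post; it assumes boto3 is installed, and the bucket name and key ID are values you supply. The object key places data.json under the raw prefix and requests SSE-KMS encryption with the given key.

```python
import os


def upload_arguments(local_path, bucket, kms_key_id, prefix="raw"):
    """Build upload_file arguments that request SSE-KMS with a specific key."""
    return {
        "Filename": local_path,
        "Bucket": bucket,
        "Key": f"{prefix}/{os.path.basename(local_path)}",
        "ExtraArgs": {
            "ServerSideEncryption": "aws:kms",
            "SSEKMSKeyId": kms_key_id,
        },
    }


def upload_encrypted(local_path, bucket, kms_key_id, region="us-east-1"):
    """Upload the file encrypted with the given KMS key (calls AWS)."""
    import boto3  # imported lazily so upload_arguments works without boto3

    s3 = boto3.client("s3", region_name=region)
    s3.upload_file(**upload_arguments(local_path, bucket, kms_key_id))
```

For example, upload_encrypted("data.json", "<your-data-lake-bucket>", "<LAKE_FORMATION_KMS_DATA_KEY>") would write the object raw/data.json.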

Run the AWS Glue job

We’re now ready to run our AWS Glue job.

- On the AWS Glue console, choose Jobs in the navigation pane.

- Select the job lake-formation-demo-glue-job.

- On the Action menu, choose Run job.

When the job is complete, you should see the processed data in the S3 bucket you configured, under the prefix processed. When you check the properties of the output file, you should see that the data is encrypted using the KMS key lakeformation-kms-data-key.
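The same run and check can be scripted. The following sketch assumes boto3 is installed; the job name comes from this post, while the helper names, polling interval, and the object key you pass to the check are illustrative. It starts the job, polls until a terminal state, and inspects an output object's encryption metadata.

```python
import time

# Job run states after which polling can stop.
TERMINAL_STATES = {"SUCCEEDED", "FAILED", "STOPPED", "TIMEOUT", "ERROR"}


def is_terminal(state):
    """Return True when a Glue job run state means the run has finished."""
    return state in TERMINAL_STATES


def is_encrypted_with(head_object_response, key_id):
    """Check S3 object metadata for SSE-KMS with the expected key ID or ARN."""
    used_key = head_object_response.get("SSEKMSKeyId", "")
    return (
        head_object_response.get("ServerSideEncryption") == "aws:kms"
        and used_key.endswith(key_id)
    )


def run_job_and_wait(job_name="lake-formation-demo-glue-job",
                     region="us-east-1", poll_seconds=30):
    """Start the Glue job and block until it reaches a terminal state (calls AWS)."""
    import boto3  # imported lazily so the helpers above work without boto3

    glue = boto3.client("glue", region_name=region)
    run_id = glue.start_job_run(JobName=job_name)["JobRunId"]
    while True:
        run = glue.get_job_run(JobName=job_name, RunId=run_id)["JobRun"]
        if is_terminal(run["JobRunState"]):
            return run["JobRunState"]
        time.sleep(poll_seconds)


def output_is_encrypted(bucket, object_key, key_id, region="us-east-1"):
    """Fetch object metadata and verify its encryption settings (calls AWS)."""
    import boto3

    s3 = boto3.client("s3", region_name=region)
    return is_encrypted_with(s3.head_object(Bucket=bucket, Key=object_key), key_id)
```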

Give permissions to the AWS Glue crawler and run the crawler

We now give permissions to the AWS Glue crawler to access Amazon S3, and then run the crawler.

- On the Lake Formation console, under Permissions, choose Data locations.

- Choose Grant.

- Select My account.

- For IAM users and roles, choose demo-lake-formation-glue-crawler-role.

- For Storage locations, choose the S3 bucket where your data is stored.

- For Registered account location, enter the current account number.

- Choose Grant.

This step is required for the crawler to have permissions to the Amazon S3 location where the data to be crawled is stored.

- On the AWS Glue console, choose Crawlers.

- Select the configured crawler and choose Run crawler.

The crawler infers the schema of the processed data, and a new table is now visible within the database: lakeformation-glue-catalog-db.

This table is also visible on the Lake Formation console.
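The data location grant and the crawler run can also be expressed with boto3. In this sketch, the role name comes from this post, while the account ID, bucket name, and crawler name are values you supply; the crawler's actual name is not given here, so it is left as a parameter.

```python
def data_location_grant(role_arn, bucket):
    """Build grant_permissions arguments giving a principal access to an S3 location."""
    return {
        "Principal": {"DataLakePrincipalIdentifier": role_arn},
        "Resource": {"DataLocation": {"ResourceArn": f"arn:aws:s3:::{bucket}"}},
        "Permissions": ["DATA_LOCATION_ACCESS"],
    }


def grant_and_crawl(account_id, bucket, crawler_name, region="us-east-1"):
    """Grant the crawler role data location access, then start the crawler (calls AWS)."""
    import boto3  # imported lazily so the builder above works without boto3

    role_arn = (
        f"arn:aws:iam::{account_id}:role/demo-lake-formation-glue-crawler-role"
    )
    lakeformation = boto3.client("lakeformation", region_name=region)
    lakeformation.grant_permissions(**data_location_grant(role_arn, bucket))

    glue = boto3.client("glue", region_name=region)
    glue.start_crawler(Name=crawler_name)
```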

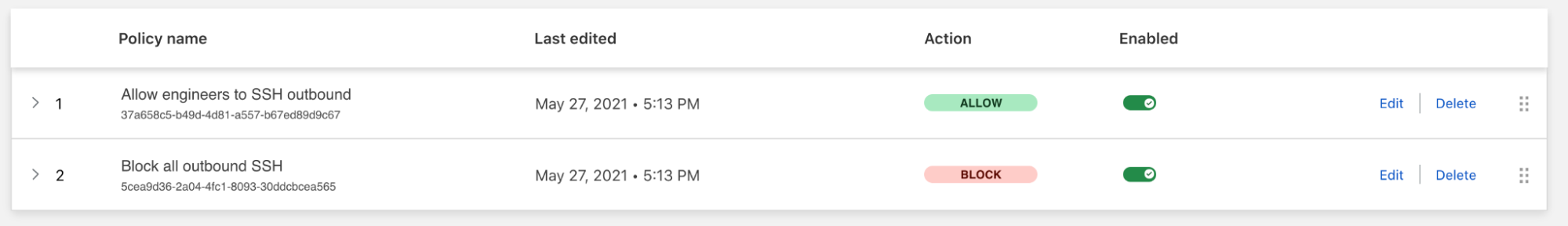

Set up permissions for the current role to query the table

Next, we configure Athena to have the proper rights to query this newly created table over the encrypted data.

One advantage of using Lake Formation to set up permissions is the ability to restrict access to PII in order to stay compliant and protect the privacy of your customers. For this post, we restrict access to all columns in the processed table except symbol.

- On the Lake Formation console, under Data catalog, choose Tables.

- Select the processed table.

- Click on Actions and select Grant.

- Select My account.

- For IAM users and roles, choose the current user/role.

- For Column-based permissions, choose Include columns.

- For Include columns, choose the column symbol.

- For Table permissions, select Select.

- Choose Grant.
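The column-level grant from these steps can be sketched with boto3 as follows. The database, table, and column names come from this post; the principal ARN is whatever user or role you are granting access to, and the function names are illustrative.

```python
def column_select_grant(principal_arn,
                        database="lakeformation-glue-catalog-db",
                        table="processed",
                        columns=("symbol",)):
    """Build grant_permissions arguments for SELECT on specific columns only."""
    return {
        "Principal": {"DataLakePrincipalIdentifier": principal_arn},
        "Resource": {
            "TableWithColumns": {
                "DatabaseName": database,
                "Name": table,
                "ColumnNames": list(columns),
            }
        },
        "Permissions": ["SELECT"],
    }


def grant_column_access(principal_arn, region="us-east-1"):
    """Grant SELECT on the included columns to the principal (calls AWS)."""
    import boto3  # imported lazily so the builder above works without boto3

    lakeformation = boto3.client("lakeformation", region_name=region)
    lakeformation.grant_permissions(**column_select_grant(principal_arn))
```

Listing the columns to include, rather than the columns to exclude, means that any column added to the table later stays hidden from this principal until it is granted explicitly.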

Run an Athena query

We can now query the database with Athena.

- On the Athena console, choose the database lakeformation-glue-catalog-db.

- Choose the options icon next to the processed table and choose Preview table.

- Enter the following query:

- Choose Run query.

The following screenshot shows our output, in which we can see the value of the symbol column. The other columns aren’t visible due to the column-level security configuration.
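To run a comparable query outside the console, a sketch such as the following may help. The query text here is an assumption, not the query from the original post: it selects the symbol column from the processed table with a row limit. The results location is a placeholder S3 URI you supply, and boto3 is assumed to be installed.

```python
import time

DATABASE = "lakeformation-glue-catalog-db"


def preview_query(table="processed", limit=10):
    """Return a simple query over the one column the grant exposes."""
    return f'SELECT symbol FROM "{table}" LIMIT {int(limit)}'


def run_query(output_location, region="us-east-1", poll_seconds=2):
    """Run the query in Athena and return rows as lists of strings (calls AWS)."""
    import boto3  # imported lazily so preview_query works without boto3

    athena = boto3.client("athena", region_name=region)
    query_id = athena.start_query_execution(
        QueryString=preview_query(),
        QueryExecutionContext={"Database": DATABASE},
        ResultConfiguration={"OutputLocation": output_location},
    )["QueryExecutionId"]

    while True:
        status = athena.get_query_execution(QueryExecutionId=query_id)
        state = status["QueryExecution"]["Status"]["State"]
        if state in {"SUCCEEDED", "FAILED", "CANCELLED"}:
            break
        time.sleep(poll_seconds)
    if state != "SUCCEEDED":
        raise RuntimeError(f"Athena query ended in state {state}")

    rows = athena.get_query_results(QueryExecutionId=query_id)["ResultSet"]["Rows"]
    # The first row returned by Athena holds the column headers.
    return [[cell.get("VarCharValue") for cell in row["Data"]] for row in rows]
```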

Further steps

We can also enable encryption at rest for the Athena results, meaning that Athena encrypts the query results in Amazon S3. For more information, see Encrypting Query Results Stored in Amazon S3.
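As a rough illustration, when starting a query through the Athena API, the result configuration can carry an encryption setting. The sketch below builds such a configuration for SSE-KMS; the output location and key ARN are placeholders, and the helper name is hypothetical.

```python
def encrypted_result_configuration(output_location, kms_key_arn):
    """Build an Athena ResultConfiguration that encrypts query results with SSE-KMS."""
    return {
        "OutputLocation": output_location,
        "EncryptionConfiguration": {
            "EncryptionOption": "SSE_KMS",
            "KmsKey": kms_key_arn,
        },
    }
```

The returned dictionary can be passed as the ResultConfiguration argument of the Athena start_query_execution call.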

Summary

In this post, we addressed the use case of customers with strict regulatory restrictions that require end-to-end data encryption to comply with their country regulations. Additionally, we set up a data lake to support column-level security to restrict access to PII within tables. We included a step-by-step guide and automated the solution with AWS CloudFormation to deploy it promptly.

If you need any help in building data lakes, please reach out to AWS Professional Services. If you have questions about this post, let us know in the comments section, or start a new thread on the Lake Formation forum.

About the Authors

Daniela Dorneanu is a Data Lake Architect at AWS. As part of Professional Services, Daniela supports customers hands-on to get more value out of their data. Daniela advocates for inclusive and diverse work environments, and she is co-chairing the Software Engineering conference track at the Grace Hopper Celebration, the largest gathering of women in Computing.

Muhammad Shahzad is a Professional Services consultant who enables customers to implement DevOps by explaining principles, delivering automated solutions and integrating best practices in their journey to the cloud.

Noritaka Sekiyama is a Senior Big Data Architect at AWS Glue and AWS Lake Formation. He is passionate about big data technology and open source software, and enjoys building and experimenting in the analytics area.

Sachet Saurabh is a Senior Software Development Engineer at AWS Glue and AWS Lake Formation. He is passionate about building fault tolerant and reliable distributed systems at scale.

Vikas Malik is a Software Development Manager at AWS Glue. He enjoys building solutions that solve business problems at scale. In his free time, he likes playing and gardening with his kids and exploring local areas with family.

Nitin Aggarwal is a Senior Solutions Architect at AWS, where he helps digital native customers with architecting data analytics solutions and providing technical guidance on various AWS services. He brings more than 16 years of experience in software engineering and architecture roles for various large-scale enterprises.

Gaurav Sharma is a Solutions Architect at AWS. He works with digital native business customers providing architectural guidance on AWS services.

Vivek Kumar is a Solutions Architect at AWS. He works with digital native business customers providing architectural guidance on AWS services.