Post Syndicated from Kabir Sikand original https://blog.cloudflare.com/relational-database-connectors/

At Cloudflare, we’re building the best compute platform in the world. We want to make it easy, seamless, and obvious to build your applications with us. But simply making the best compute platform is not enough — at the heart of your applications are the data they interact with.

Cloudflare has multiple data storage solutions available today: Workers KV, R2, and Durable Objects. All three follow Cloudflare’s design goals for Workers: global by default, infinitely scalable, and delightful for developers to use. We’ve partnered with third-party storage solutions like Fauna, MongoDB and Prisma, who have built data platforms that align beautifully with our design goals and written tutorials for databases that already support HTTP connections.

The one area that’s been sorely missed: relational databases. Cloudflare itself runs on relational databases, and we’re not alone. In April, we asked which Node libraries you wanted us to support, and four of the top five requests were related to databases. For this Full Stack Week, we asked ourselves: how could we support relational databases in a way that aligned with our design goals?

Today, we’re taking a first step towards that world by announcing support for connecting to relational databases, including Postgres and MySQL, from Workers.

Connecting to a database is no simple task — if it were as easy as passing a connection string to a database driver, we would have already done it. We’ve had to overcome several hurdles to reach this point, and have several more still to conquer.

Our goal with this announcement is to work with you, our developers, to solve the unique pain points that come from accessing databases inside Workers. If you’d like to work with us, fill out this form or join us on Discord — this is just the beginning. If you’d just like to grab the code and play around, use this example to get started connecting to your own database, or check out our demo.

Why are Database Connectors so hard to build?

Serverless database connections are challenging to support for several reasons.

Databases are needy — they often require TCP connections, since they assume long-lived connections between an application server and the database. The Workers runtime doesn’t currently support TCP connections, so we’ve only been able to support HTTP-based databases or proxies.

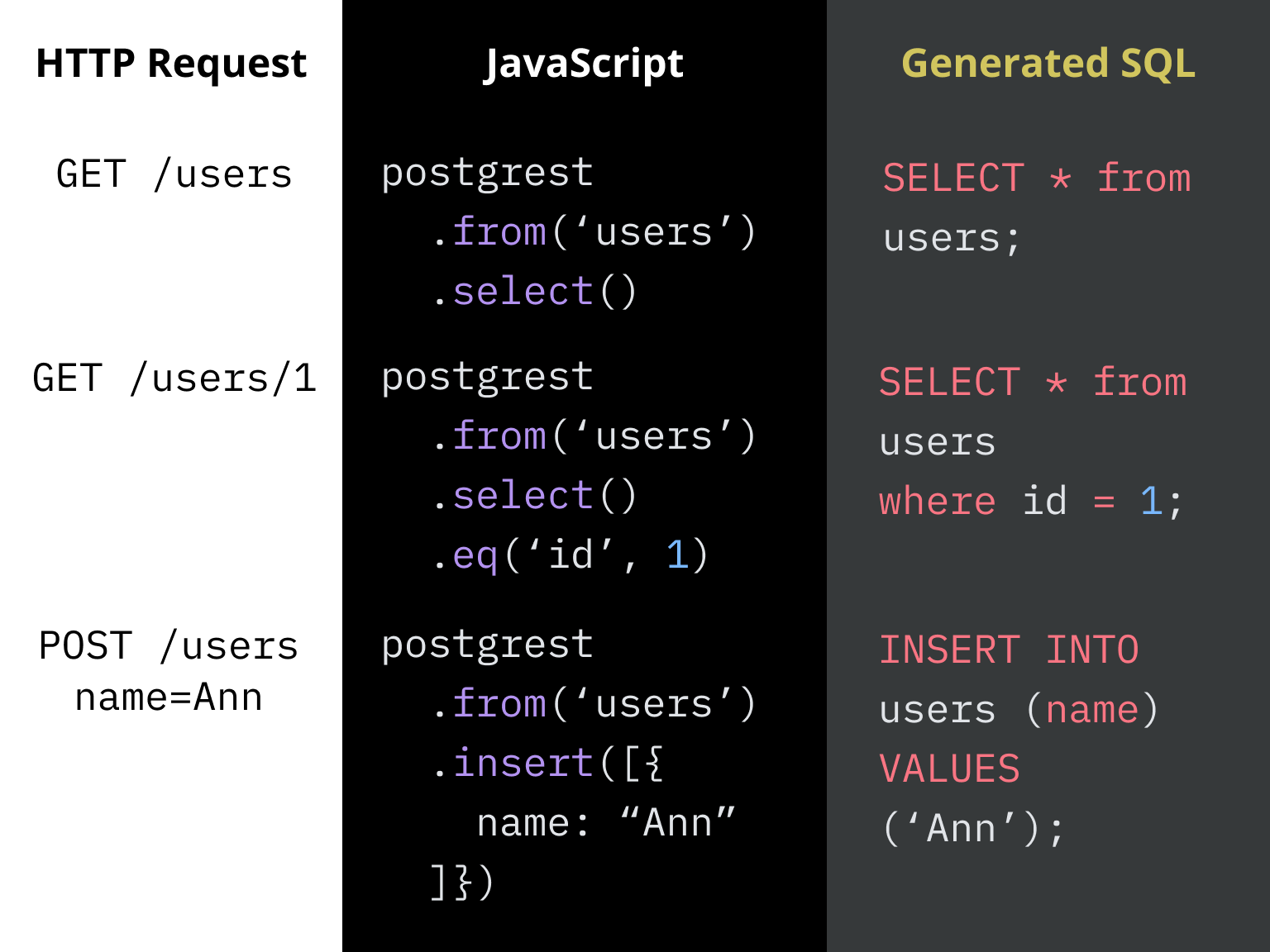

Like a relationship, establishing a connection isn’t quite enough. Developers use client libraries for databases to make submitting queries and managing the responses easy. Since the Workers runtime is not entirely Node.js compatible, we need to either roll our own database library or find one that does not use unsupported built-in libraries.

Finally, databases are sensitive. It often takes external libraries to manage shared connections between an application server and a database, since these connections tend to be expensive to establish.
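As a rough illustration of what those pooling libraries do, here is a minimal sketch of a connection pool in TypeScript. The `ConnectionPool` class and its `create` and `max` parameters are hypothetical names chosen for this example, not part of any Cloudflare API or database driver. The idea is simply that an expensive connection is created once and then handed from one caller to the next.

```typescript
// Hypothetical minimal pool: reuses idle connections and opens at most `max` of them.
class ConnectionPool<T> {
  private idle: T[] = [];                        // connections ready for reuse
  private waiters: Array<(conn: T) => void> = []; // callers waiting for a free connection
  private open = 0;                              // connections created so far
  private create: () => Promise<T>;
  private max: number;

  constructor(create: () => Promise<T>, max: number) {
    this.create = create;
    this.max = max;
  }

  // Returns an idle connection, opens a new one if under the limit, or waits.
  async acquire(): Promise<T> {
    const conn = this.idle.pop();
    if (conn !== undefined) return conn;
    if (this.open < this.max) {
      this.open++;
      try {
        return await this.create();
      } catch (err) {
        this.open--; // the connection never opened, so free its slot
        throw err;
      }
    }
    return new Promise<T>((resolve) => this.waiters.push(resolve));
  }

  // Hands the connection to the next waiter, or parks it for later reuse.
  release(conn: T): void {
    const waiter = this.waiters.shift();
    if (waiter) waiter(conn);
    else this.idle.push(conn);
  }
}
```

A caller would `acquire()` a connection, run its queries, and `release()` it afterwards, so the cost of establishing the connection is paid once rather than on every request.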

Moving past these challenges

Our approach today gives us the foundation to address each of these challenges in creative ways going forward.

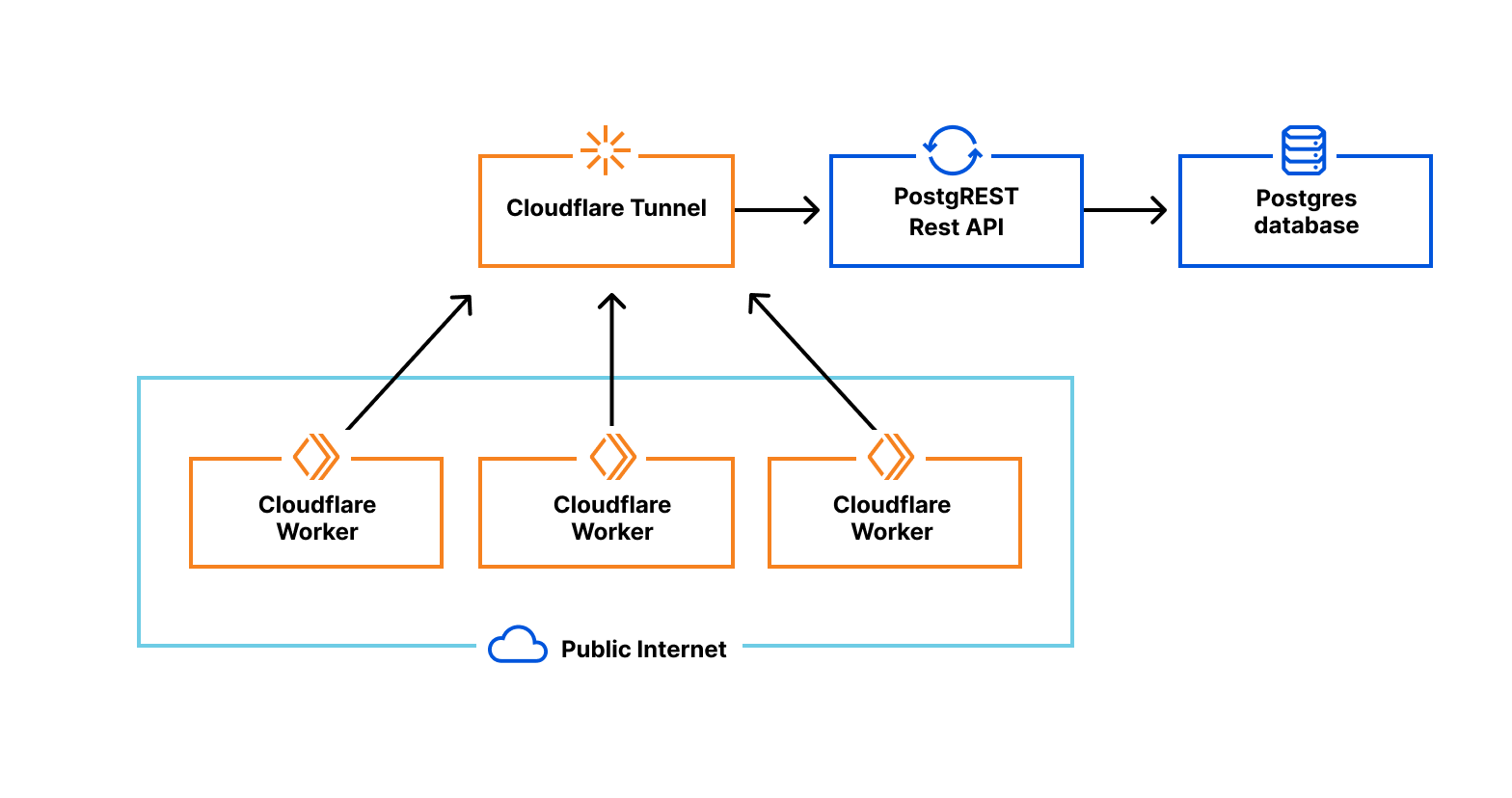

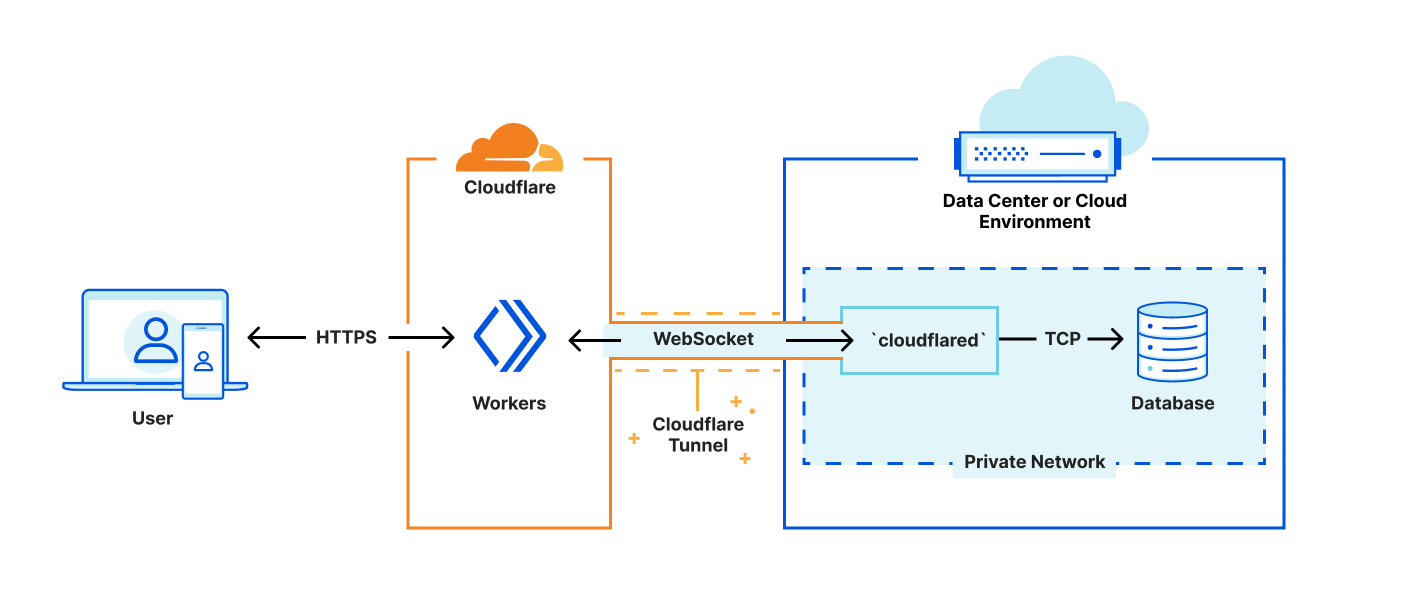

First, we’re leveraging cloudflared to create a secure tunnel between Cloudflare and a private network within your existing infrastructure. Cloudflared already supports proxying HTTP to TCP over WebSockets. Our challenge is providing interfaces that look like the socket interfaces existing libraries expect, while rewiring the implementations to redirect reads and writes to our WebSocket. This method is fast, safe, and secure, but it is limiting in that we lack control over where the final connections are directed. This is a problem we will solve soon, but until then our approach is essential to gathering latency and performance data to see where else we need to improve.

Next, we’ve created a shim layer that adapts the socket API from a popular runtime to connect directly to databases using a WebSocket. This allows us to bundle code as-is, without forking or otherwise making significant changes to the database library. As part of this announcement, we’ve published a tutorial on how to connect to and query a Postgres database from your Workers, using existing Cloudflare technology and a driver from the growing community at Deno. We’re excited to work with the upstream maintainers on expanding support.
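To give a feel for what such a shim involves, here is a minimal sketch, not the actual shim code. It assumes the driver only needs Deno-style `read(buf)` and `write(data)` methods on its connection object. `WsLike` and `WebSocketConn` are hypothetical names introduced for this example, and `WsLike` is a deliberately small stand-in for the parts of the WebSocket API being used.

```typescript
// Minimal subset of a WebSocket that this sketch relies on (hypothetical interface).
// A real WebSocket would need binaryType = "arraybuffer" to deliver ArrayBuffer data.
interface WsLike {
  send(data: Uint8Array): void;
  close(): void;
  onmessage: ((event: { data: ArrayBuffer }) => void) | null;
  onclose: (() => void) | null;
}

// Exposes stream-style read()/write() on top of a message-based WebSocket.
class WebSocketConn {
  private ws: WsLike;
  private chunks: Uint8Array[] = [];       // bytes received but not yet read
  private waiters: Array<() => void> = []; // readers parked until data arrives
  private closed = false;

  constructor(ws: WsLike) {
    this.ws = ws;
    ws.onmessage = (event) => {
      this.chunks.push(new Uint8Array(event.data));
      this.wake();
    };
    ws.onclose = () => {
      this.closed = true;
      this.wake();
    };
  }

  // Resumes every parked reader so it can re-check the buffer.
  private wake(): void {
    const waiters = this.waiters;
    this.waiters = [];
    for (const resolve of waiters) resolve();
  }

  // Copies up to buf.length buffered bytes into buf; resolves to null at end of stream.
  async read(buf: Uint8Array): Promise<number | null> {
    while (this.chunks.length === 0) {
      if (this.closed) return null;
      await new Promise<void>((resolve) => this.waiters.push(resolve));
    }
    const chunk = this.chunks[0]!;
    const n = Math.min(buf.length, chunk.length);
    buf.set(chunk.subarray(0, n));
    if (n === chunk.length) this.chunks.shift();
    else this.chunks[0] = chunk.subarray(n); // keep the unread remainder
    return n;
  }

  // Sends the bytes as a single binary WebSocket message.
  async write(data: Uint8Array): Promise<number> {
    this.ws.send(data);
    return data.length;
  }

  close(): void {
    this.closed = true;
    this.ws.close();
    this.wake();
  }
}
```

The design point is that the database driver keeps calling `read` and `write` as if it held a TCP socket, while the adapter turns discrete WebSocket messages back into a byte stream behind the scenes.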

Finally, we’re most excited for how this approach will let us begin to manage connection pooling and connection establishment overhead. While our current tech demo requires setting up the Cloudflare Tunnel on your own infrastructure, we’re looking for customers who’d like to pilot a model where Cloudflare hosts the tunnel for you.

Where we’re going

We’re just getting started. Our goal with today’s announcement is to find customers who are looking to build new applications or migrate existing applications to Workers while working with data that’s stored in a relational database.

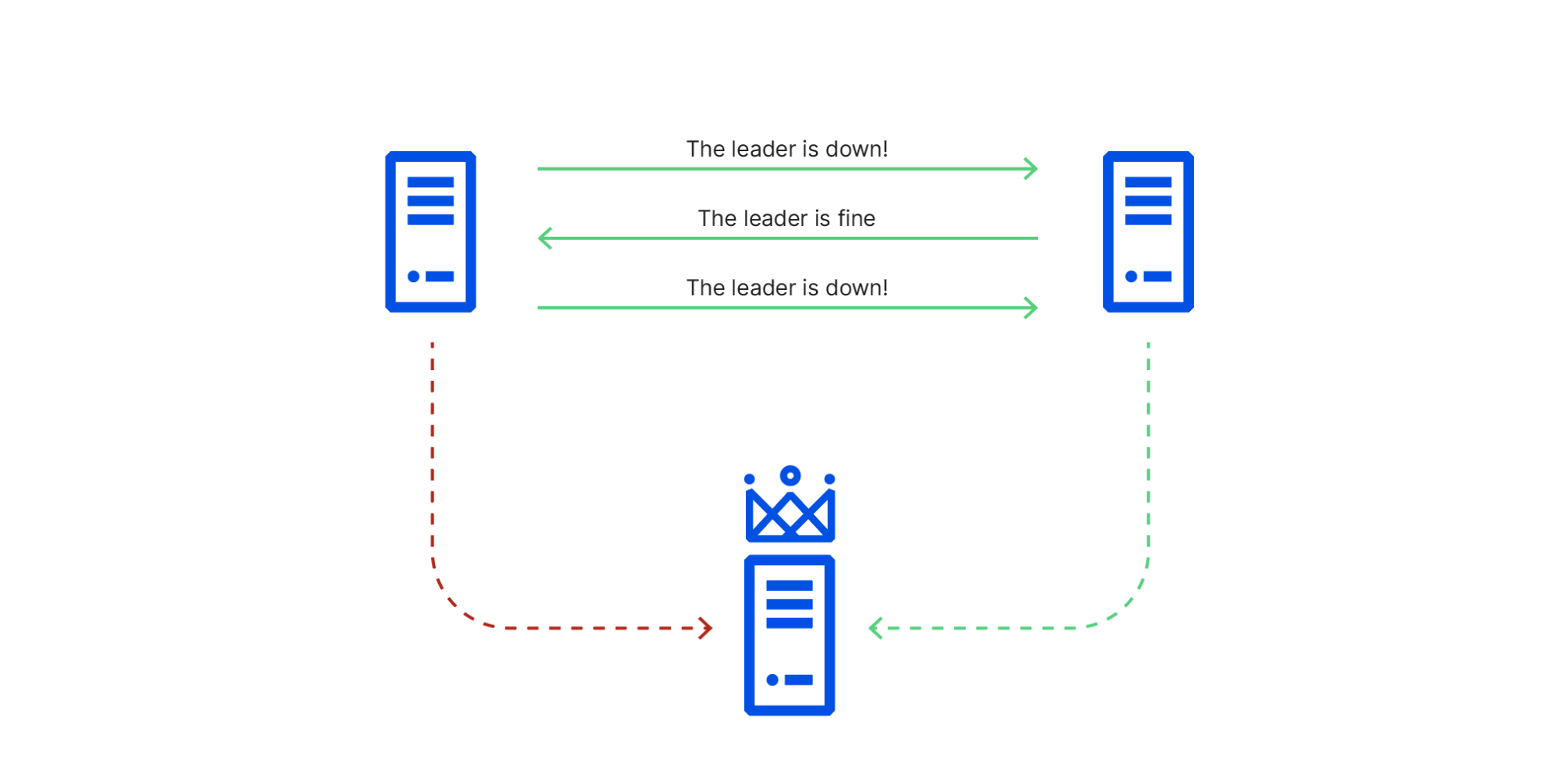

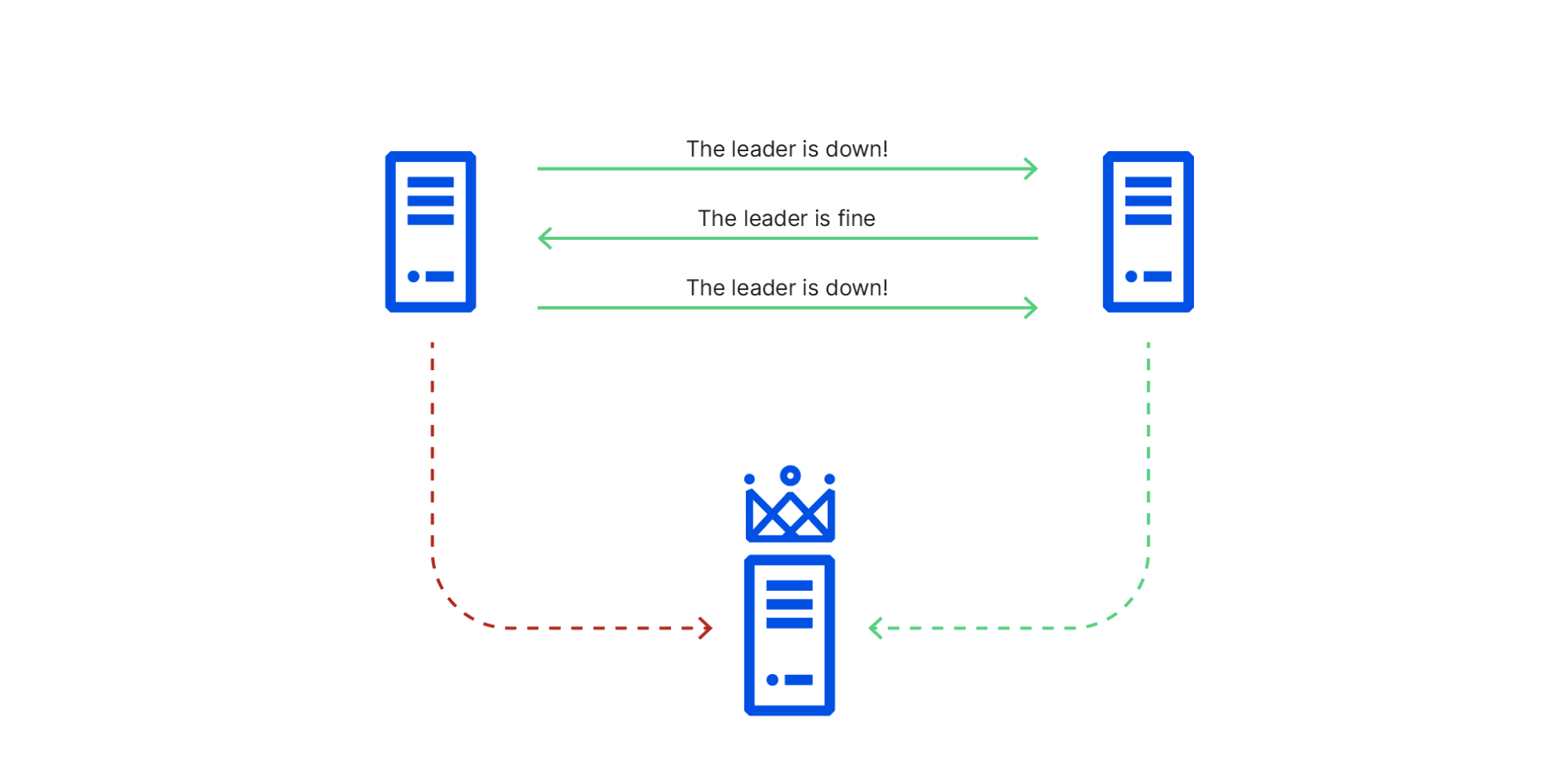

Just as Cloudflare started by providing security, performance, and reliability for customers’ websites, we’re excited about a future where Cloudflare manages database connections, handles replication of data across cloud providers, and provides low-latency access to data globally.

First, we’re looking to add support for TCP into the runtime natively. With native support for TCP we’ll not only have better support for databases, but expand the Workers runtime to work with data infrastructure more broadly.

Next, our position in the network layer of the stack puts performance gains, security benefits, and sharply reduced egress costs for global databases within reach. To get there, we’ll repurpose the HTTP-to-TCP proxy service that we’ve built and run it for developers as a connection pooling service, managing connections to their databases on their behalf.

Finally, our network makes it possible to cache data and serve it globally at low latency. Once we have connections back to your data, making it globally accessible in Cloudflare’s network will unlock fundamentally new architectures for distributed data.

Take our connectors for a spin

Want to check things out? There are three main steps to getting up and running:

- Deploying cloudflared within your infrastructure.

- Deploying a database that connects to cloudflared.

- Deploying a Worker with the database driver that submits queries.

The Postgres tutorial is available here.

When you’re all done, it’ll look a little something like this:

    import { Client } from './driver/postgres/postgres'

    export default {
      async fetch(request: Request, env, ctx: ExecutionContext) {
        try {
          // hostname points at the cloudflared tunnel in front of your database
          const client = new Client({
            user: 'postgres',
            database: 'postgres',
            hostname: 'https://db.example.com',
            password: '',
            port: 5432,
          })
          await client.connect()

          const result = await client.queryArray('SELECT * FROM users WHERE uuid=1;')

          // Close the connection in the background, after the response has been returned
          ctx.waitUntil(client.end())

          return new Response(JSON.stringify(result.rows[0]))
        } catch (e) {
          // Surface connection or query errors in the response body
          return new Response((e as Error).message)
        }
      },
    }

Hit any snags? Fill out this form, join our Discord or shoot us an email and let’s chat!