Post Syndicated from Abe Carryl original https://blog.cloudflare.com/introducing-warp-connector-paving-the-path-to-any-to-any-connectivity-2

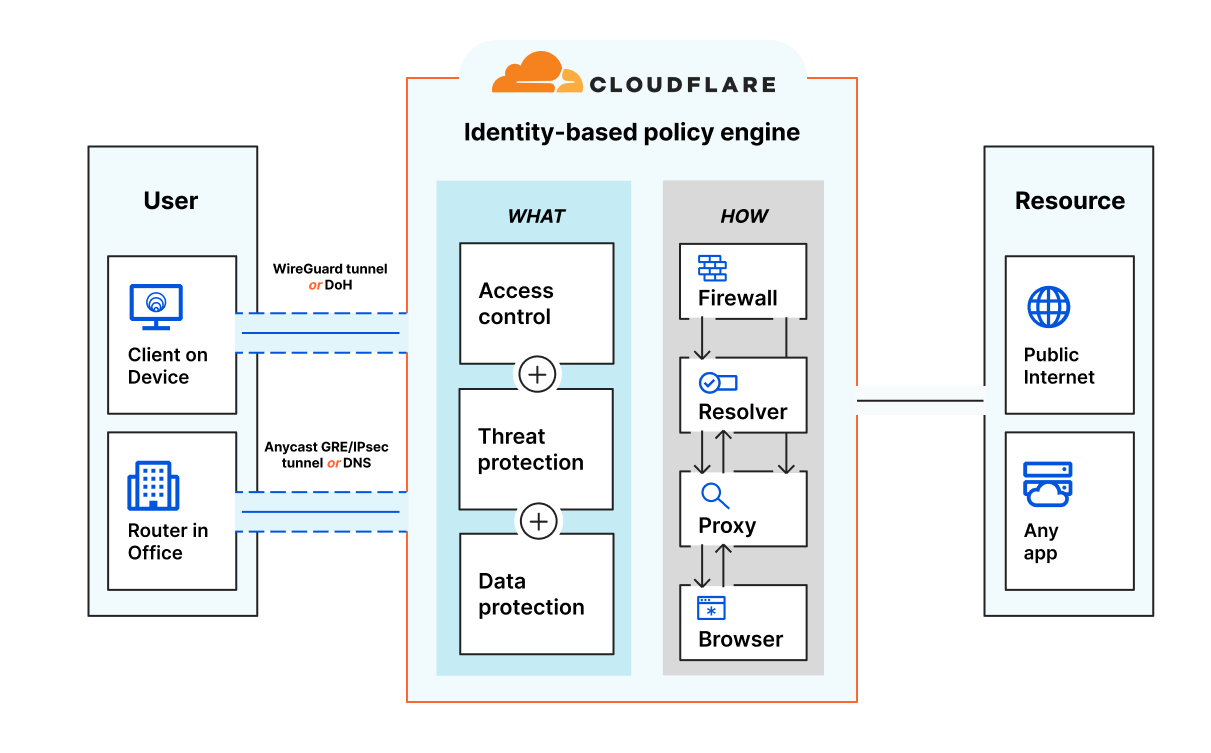

In the ever-evolving domain of enterprise security, CISOs and CIOs have to tirelessly build new enterprise networks and maintain old ones to achieve performant any-to-any connectivity. For their team of network architects, surveying their own environment to keep up with changing needs is half the job. The other half is often unearthing new, innovative solutions which integrate seamlessly into the existing landscape. This continuous cycle of construction and fortification in the pursuit of secure, flexible infrastructure is exactly what Cloudflare’s SASE offering, Cloudflare One, was built for.

Cloudflare One has progressively evolved based on feedback from customers and analysts. Today, we are thrilled to introduce the public availability of the Cloudflare WARP Connector, a new tool that makes bidirectional, site-to-site, and mesh-like connectivity even easier to secure without the need to make any disruptive changes to existing network infrastructure.

Bridging a gap in Cloudflare’s Zero Trust story

Cloudflare’s approach has always been focused on offering a breadth of products, acknowledging that there is no one-size-fits-all solution for network connectivity. Our vision is simple: any-to-any connectivity, any way you want it.

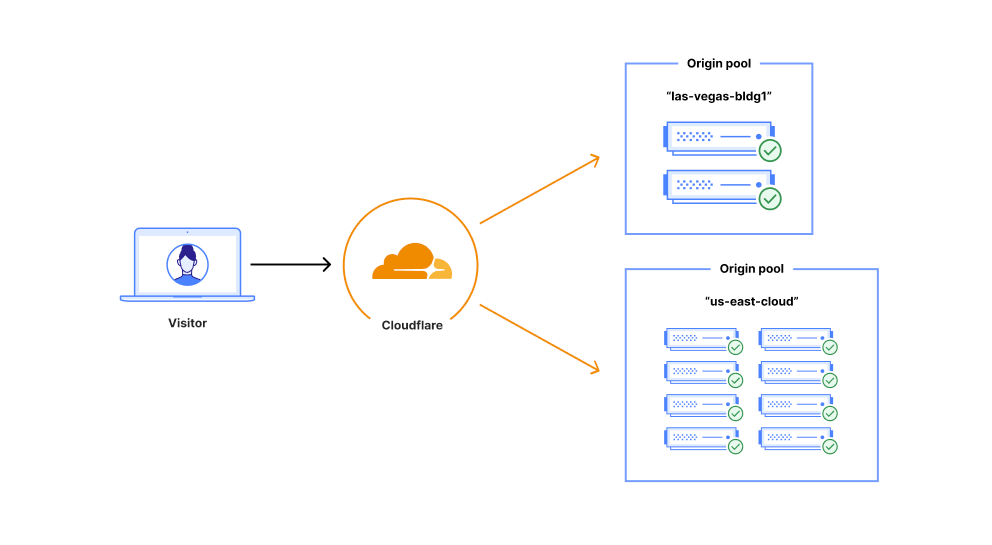

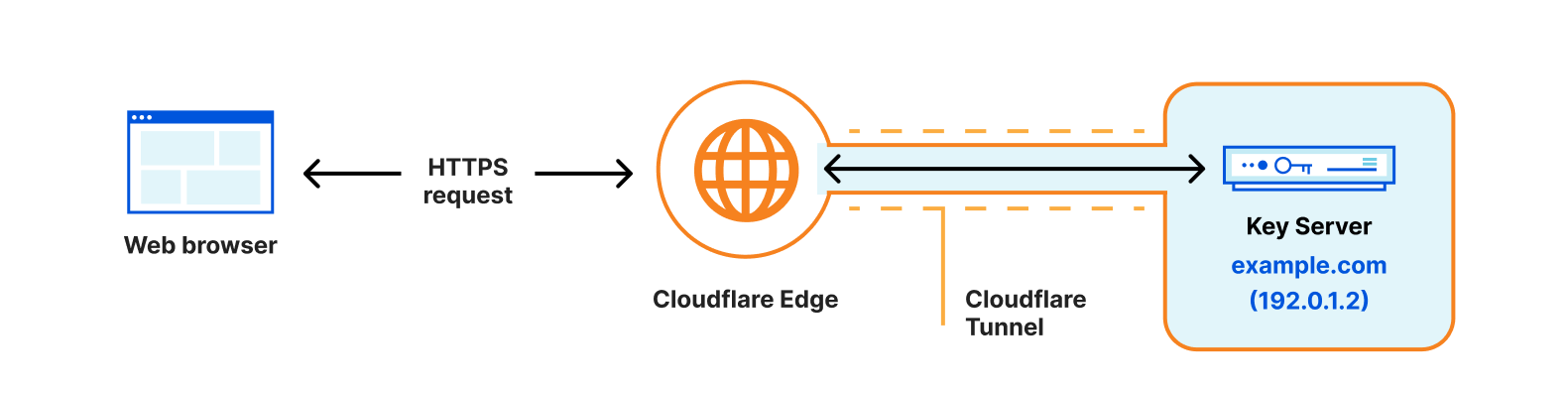

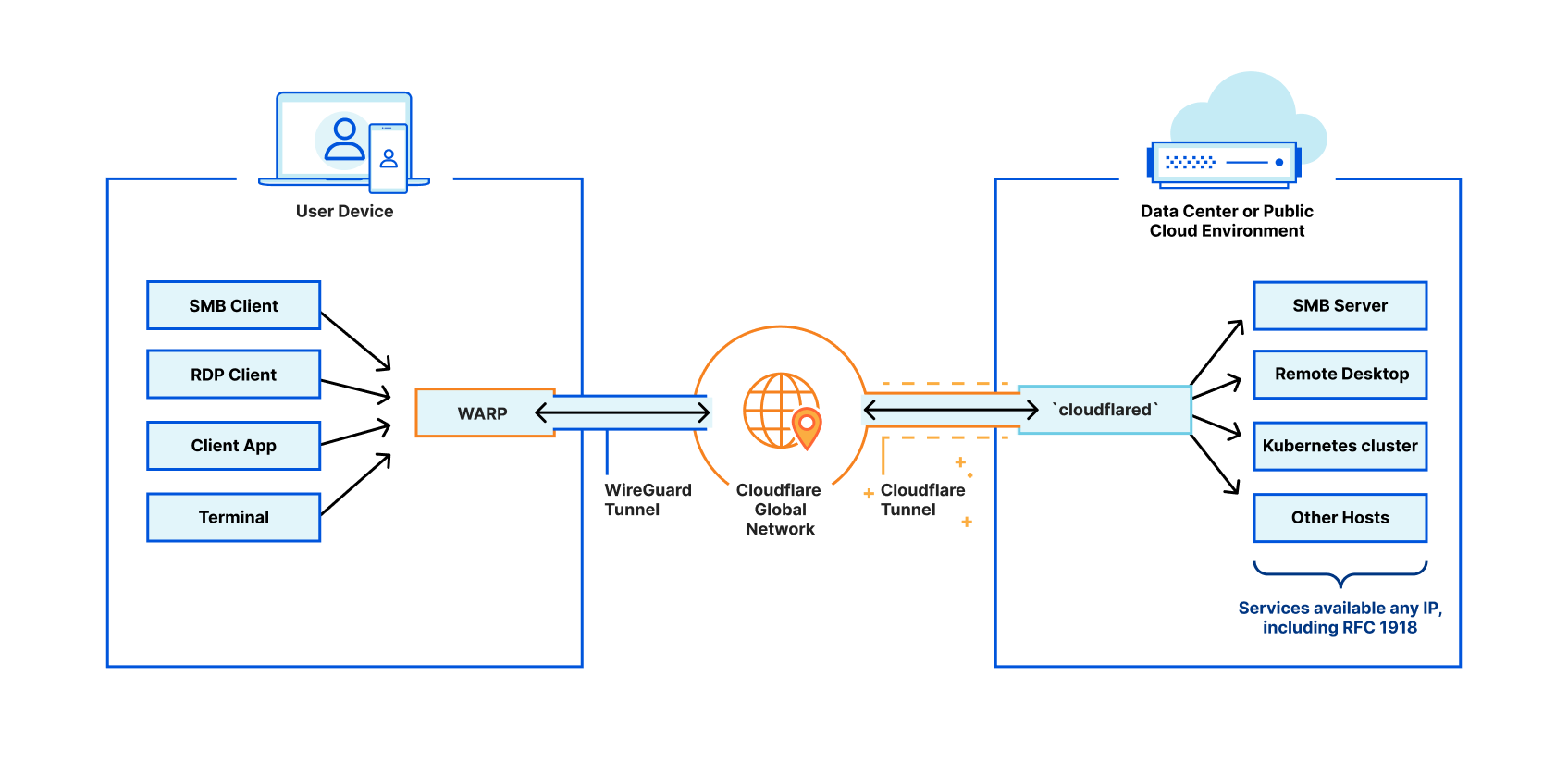

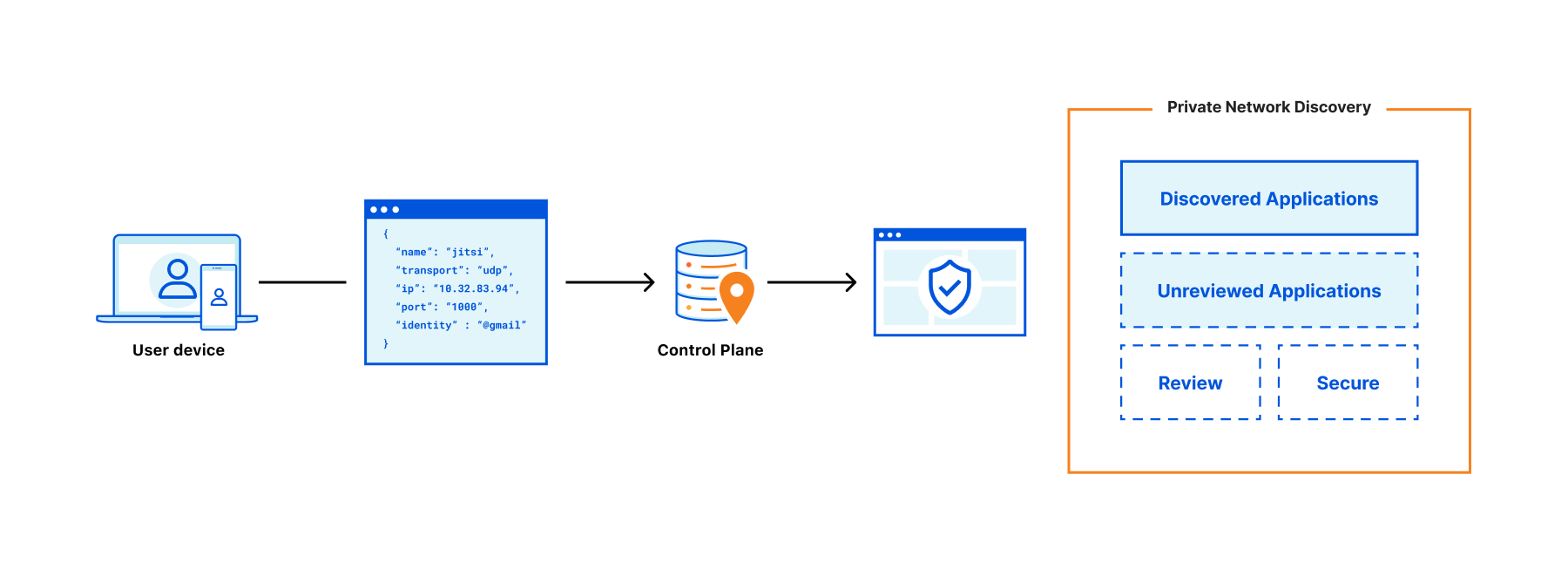

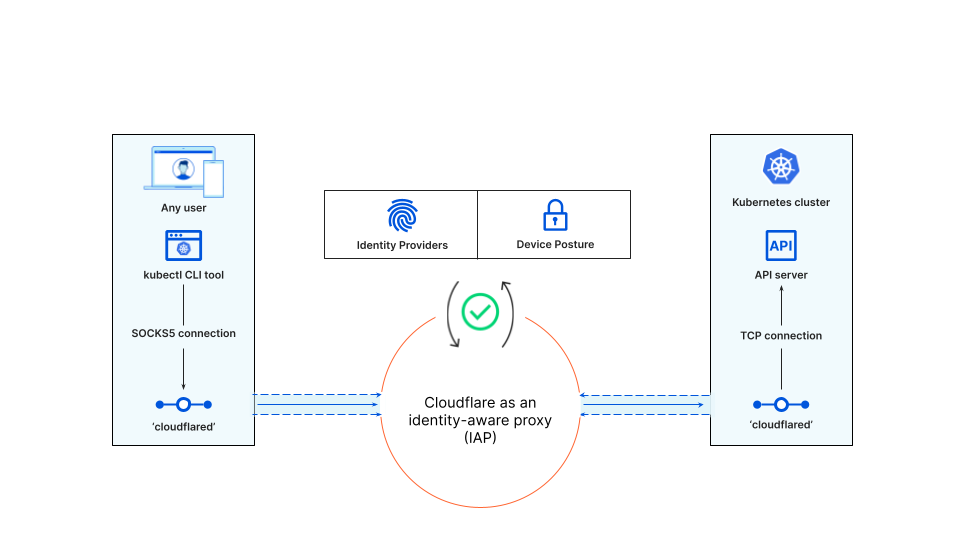

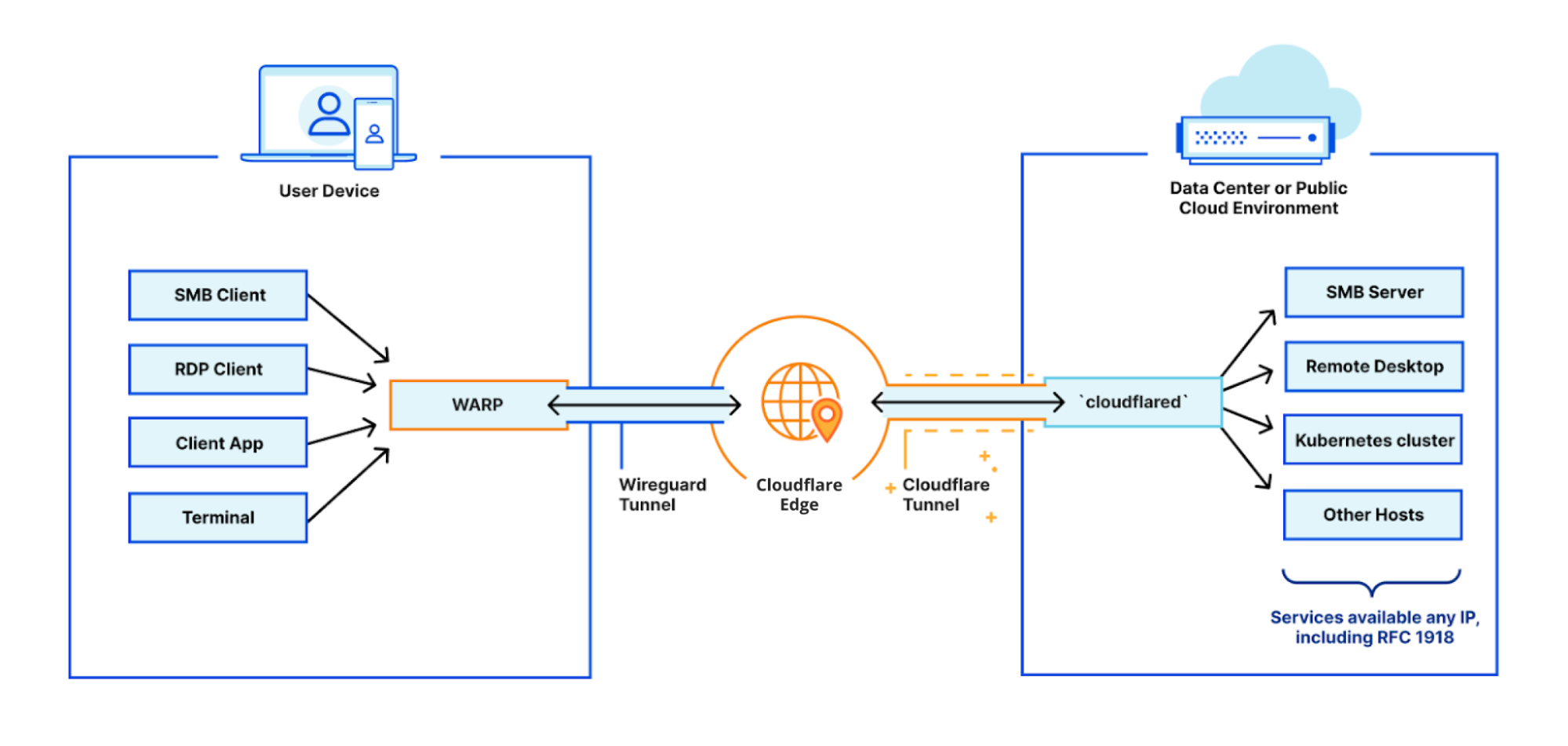

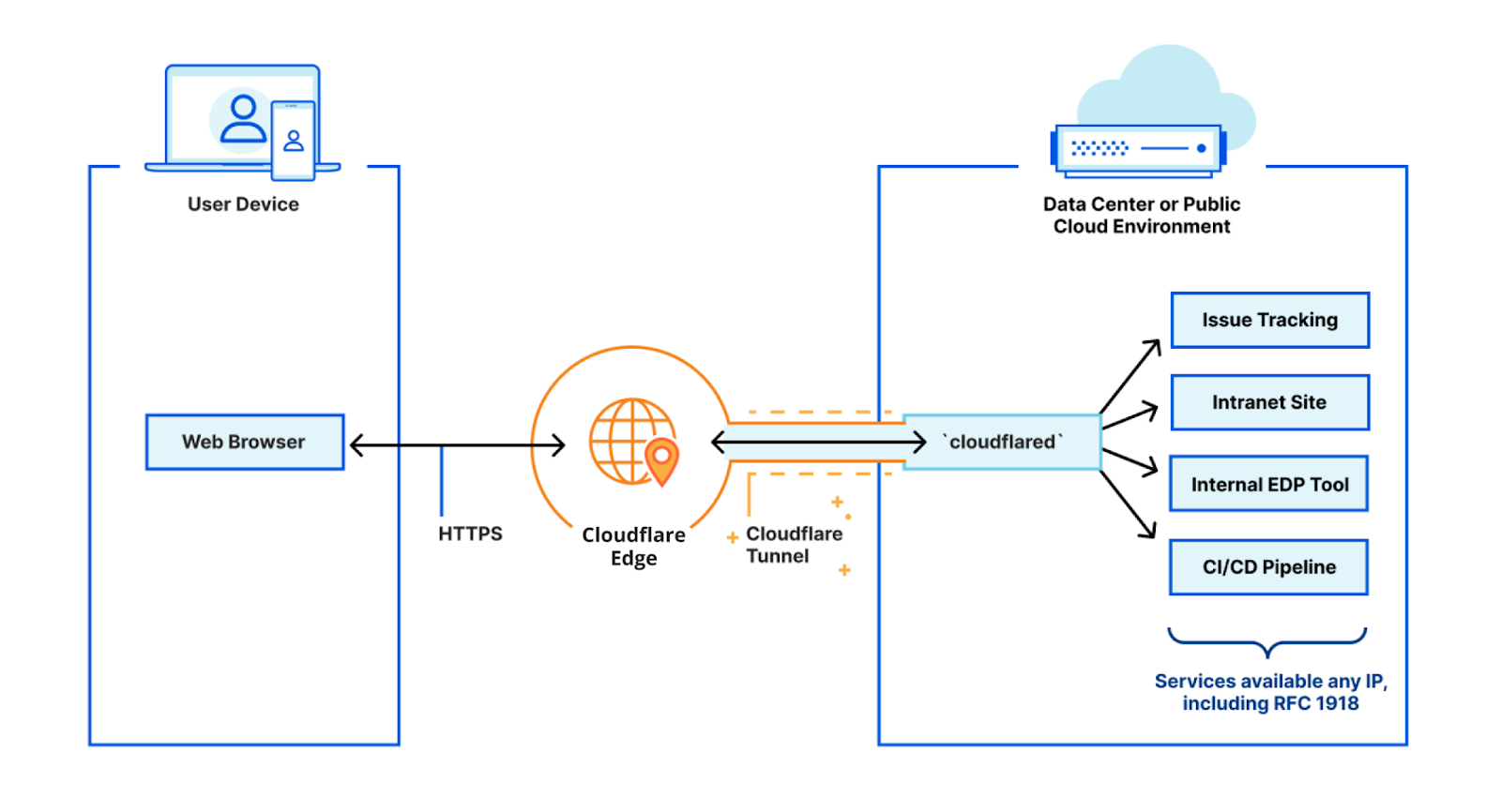

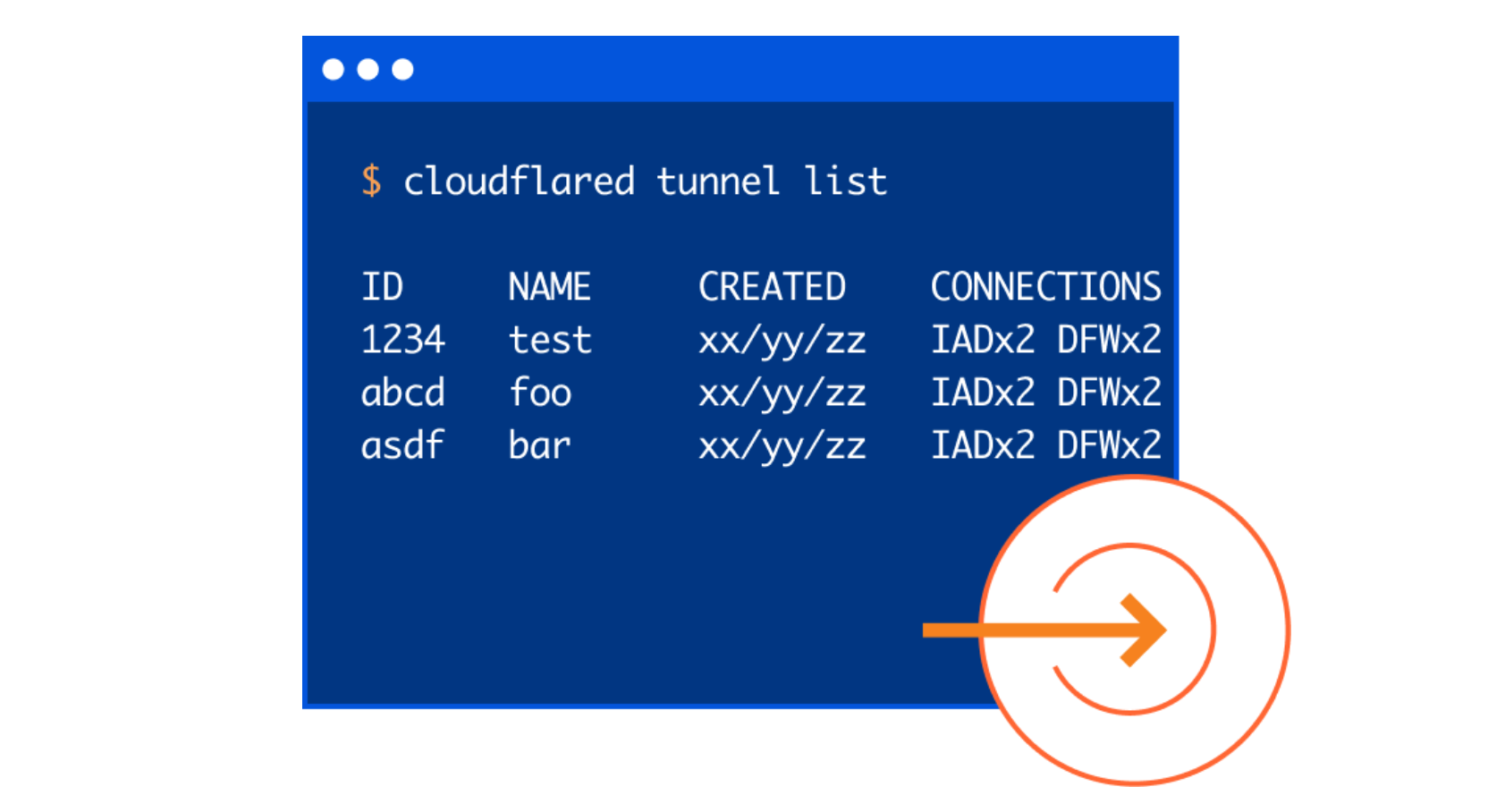

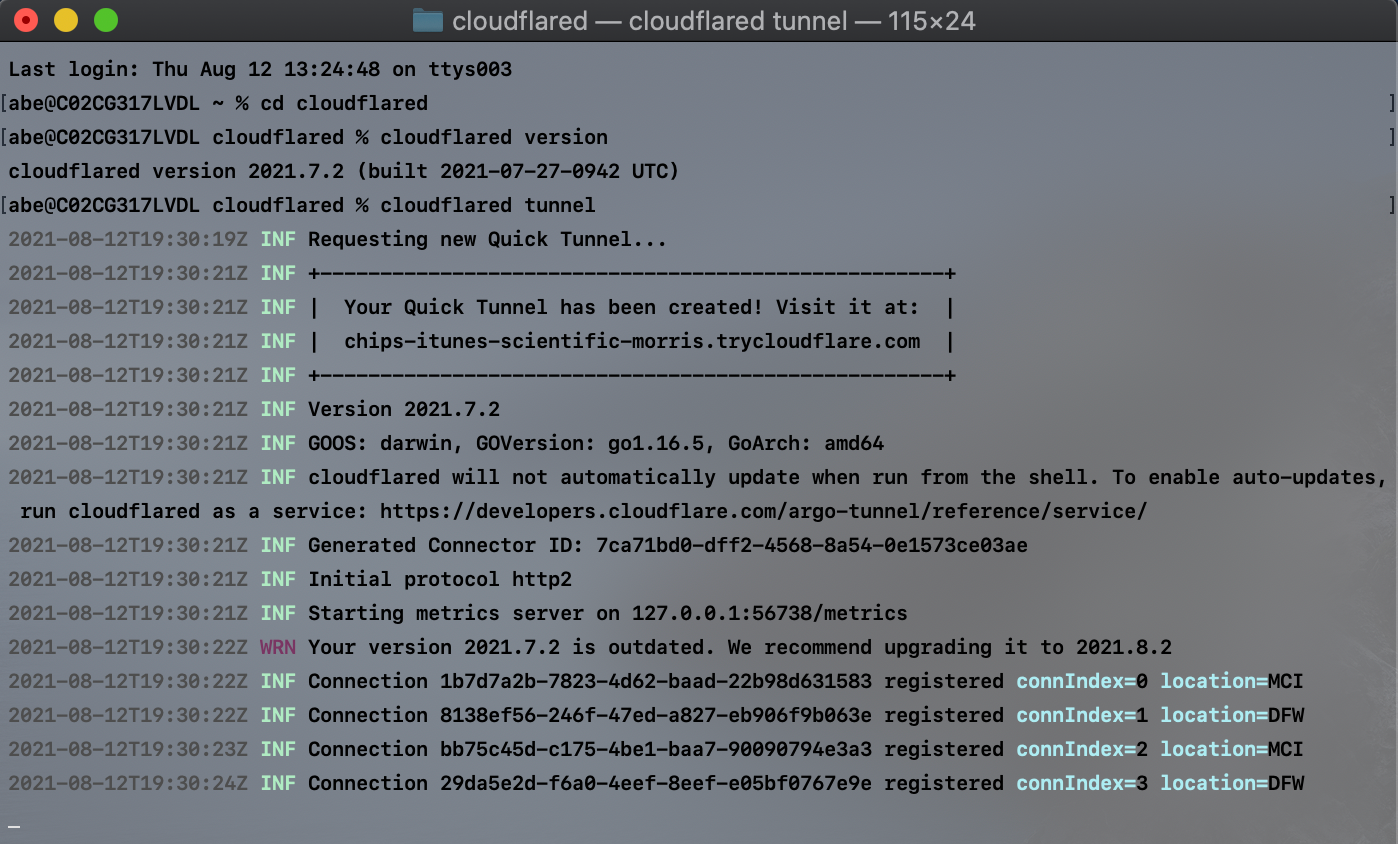

Prior to the WARP Connector, one of the easiest ways to connect your infrastructure to Cloudflare, whether that be a local HTTP server, web services served by a Kubernetes cluster, or a private network segment, was through the Cloudflare Tunnel app connector, cloudflared. In many cases this works great, but over time customers began to surface a long tail of use cases which could not be supported because of the underlying architecture of cloudflared. These include situations where customers use VOIP phones, which require a SIP server to establish outgoing connections to users’ softphones, or where a CI/CD server sends notifications to relevant stakeholders at each stage of the CI/CD pipeline. Later in this blog post, we explore these use cases in detail.

Because cloudflared proxies at Layer 4 of the OSI model, its design was optimized specifically to proxy requests to origin services; it was not designed to be an active listener that handles requests from origin services. This design trade-off means that cloudflared needs to source NAT all requests it proxies to the application server. This setup is convenient because customers don’t need to update routing tables to deploy cloudflared in front of their origin services. However, it also means that customers can’t see the true source IP of the client sending the requests. This matters in scenarios where a network firewall is logging all the network traffic, as the source IP of all the requests will be cloudflared’s IP address, causing the customer to lose visibility into the true client source.
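To make the source NAT trade-off concrete, here is a minimal sketch in Python. It is a toy model rather than how cloudflared or WARP is implemented: the `Packet` type, both forwarding functions, and all IP addresses are hypothetical names and values chosen for illustration.

```python
from dataclasses import dataclass, replace


@dataclass(frozen=True)
class Packet:
    """Toy packet carrying only the two addresses we care about."""
    src: str
    dst: str


def forward_with_source_nat(packet: Packet, proxy_ip: str) -> Packet:
    """Model a proxy that rewrites the source address to its own (source NAT)."""
    return replace(packet, src=proxy_ip)


def forward_routed(packet: Packet) -> Packet:
    """Model a Layer 3 hop that forwards the packet with addresses unchanged."""
    return packet


# Hypothetical addresses: a remote client, an origin server, and a proxy host.
client_packet = Packet(src="100.96.0.12", dst="10.0.0.5")

natted = forward_with_source_nat(client_packet, proxy_ip="10.0.0.2")
routed = forward_routed(client_packet)

# What a firewall in front of the origin would log as the source in each case.
print("source NAT:", natted.src)  # the proxy's address, 10.0.0.2
print("routed:    ", routed.src)  # the client's address, 100.96.0.12
```

In the source NAT case every logged request appears to come from the proxy host, which is the loss of visibility described above.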

Build or borrow

To solve this problem, we identified two potential solutions: start from scratch by building a new connector, or borrow from an existing connector, likely in either cloudflared or WARP.

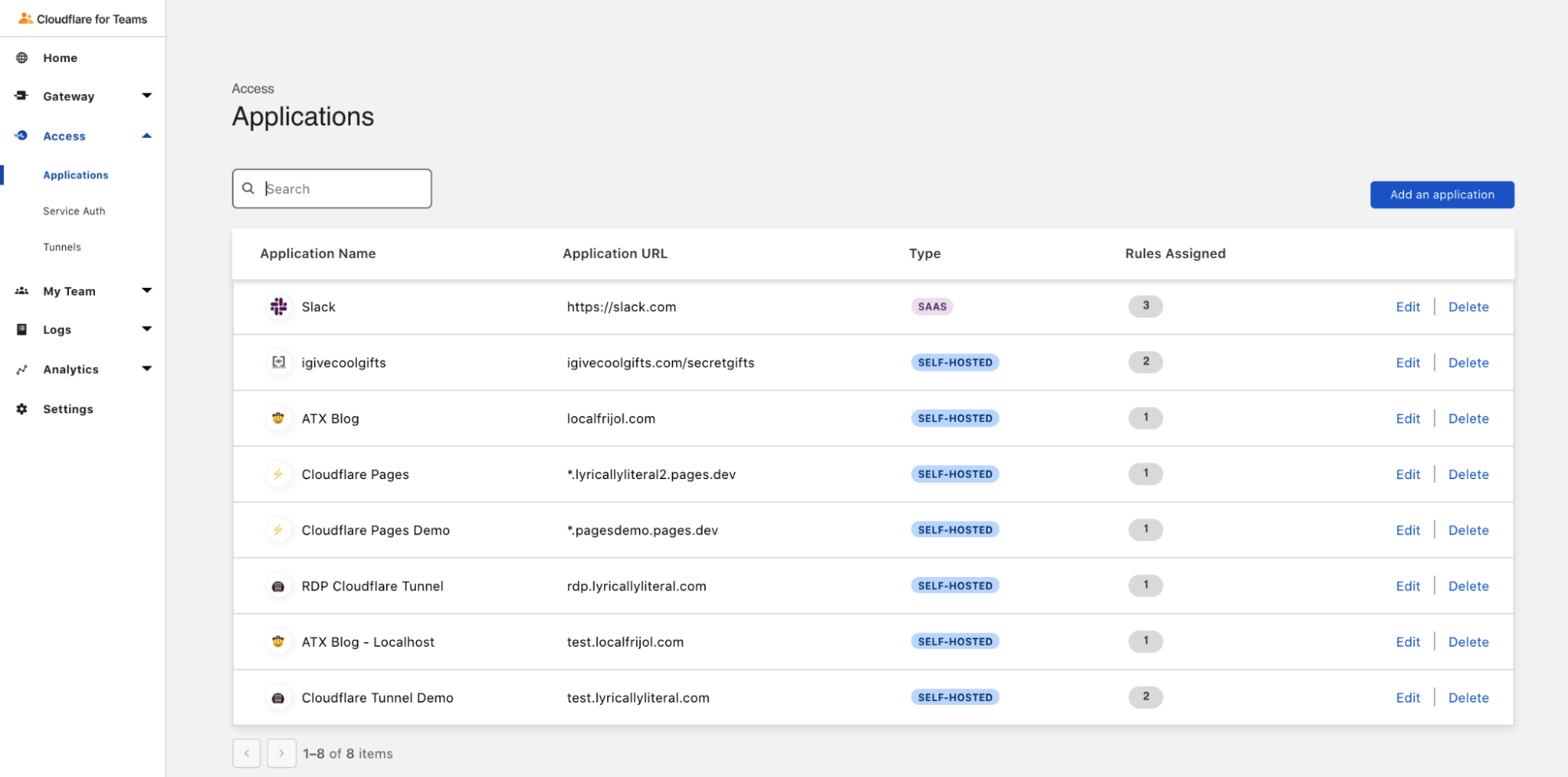

The following table provides an overview of the tradeoffs of the two approaches:

| Features | Build in cloudflared | Borrow from WARP |
| --- | --- | --- |
| Bidirectional traffic flows | As described in the earlier section, limitations of Layer 4 proxying. | This does proxying at Layer 3, because of which it can act as default gateway for that subnet, enabling it to support traffic flows from both directions. |
| User experience | For Cloudflare One customers, they have to work with two distinct products (cloudflared and WARP) to connect their services and users. | For Cloudflare One customers, they just have to get familiar with a single product to connect their users as well as their networks. |
| Site-to-site connectivity between branches, data centers (on-premise and cloud) and headquarters. | Not recommended | For sites where running agents on each device is not feasible, this could easily connect the sites to users running WARP clients in other sites/branches/data centers. This would work seamlessly where the underlying tunnels are all the same. |
| Visibility into true source IP | It does source NATting. | Since it acts as the default gateway, it preserves the true source IP address for any traffic flow. |
| High availability | Inherently reliable by design and supports replicas for failover scenarios. | Reliability specifications are very different for a default gateway use case vs endpoint device agent. Hence, there is opportunity to innovate here. |

Introducing WARP Connector

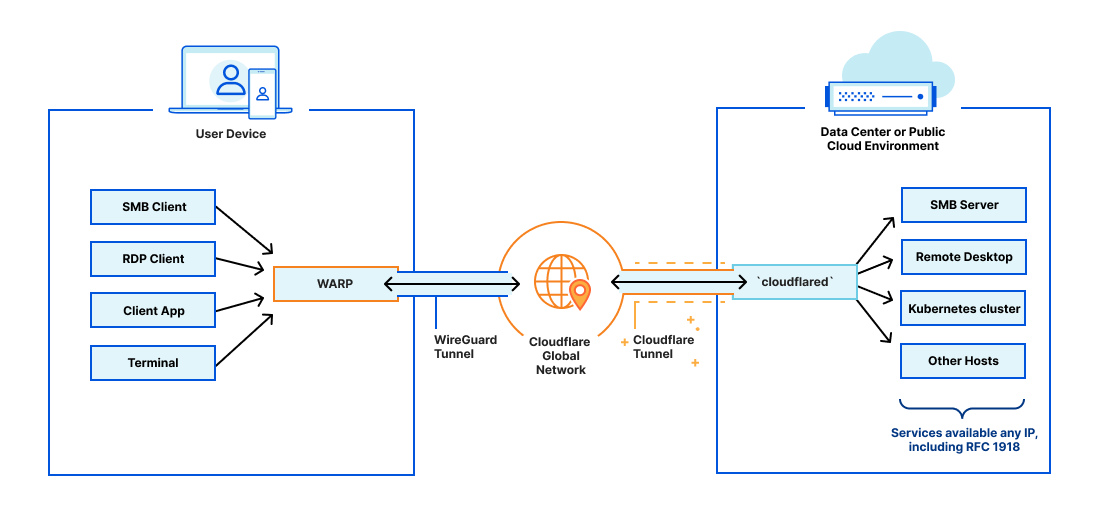

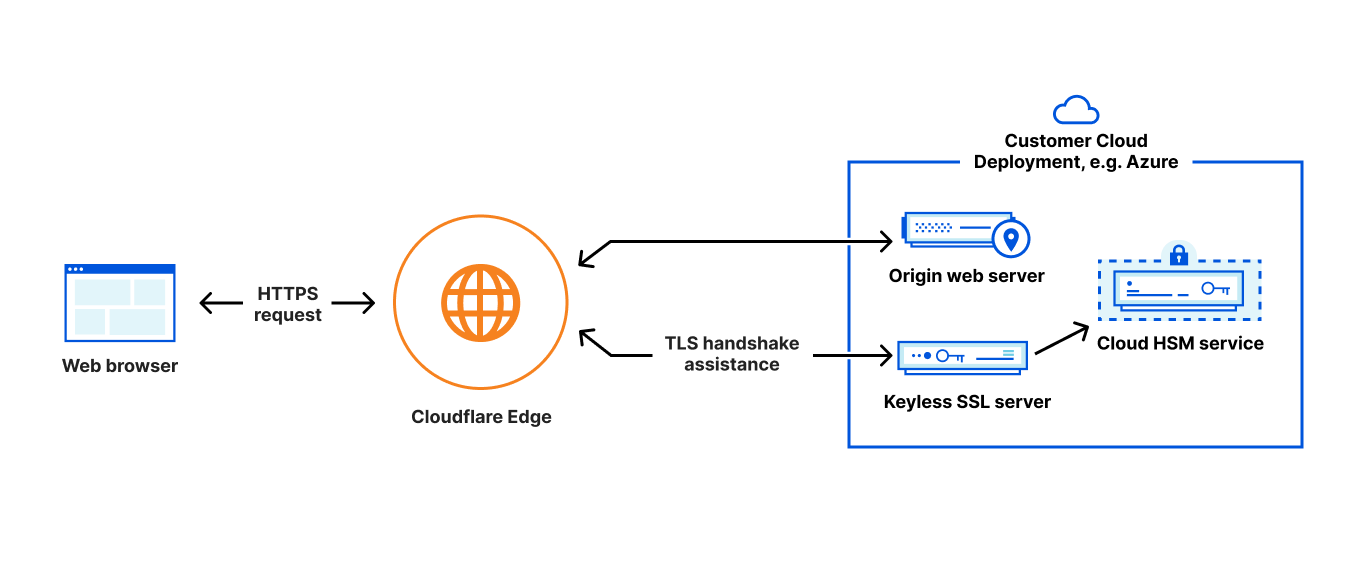

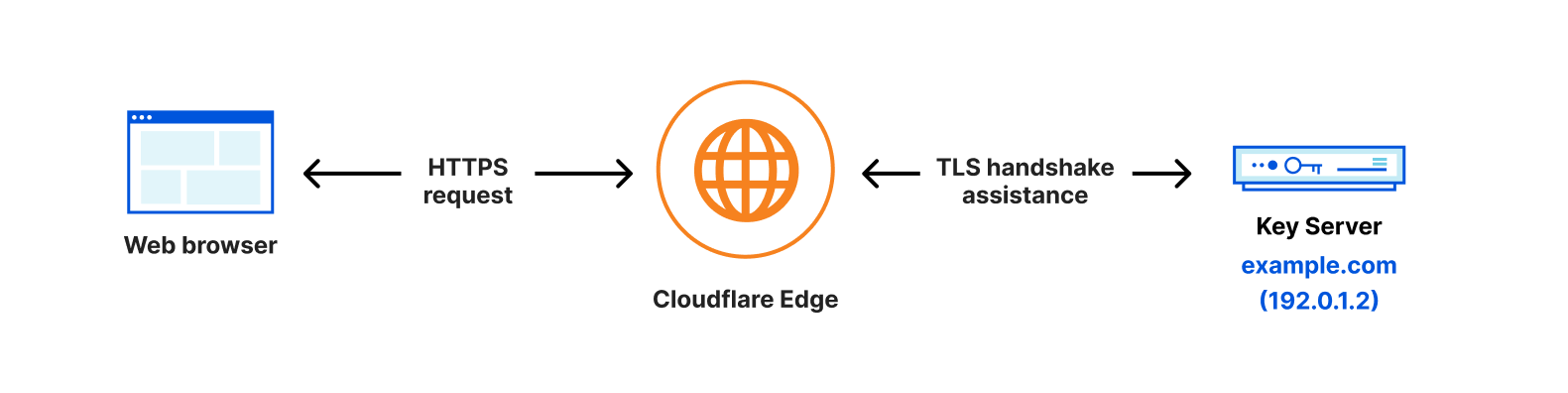

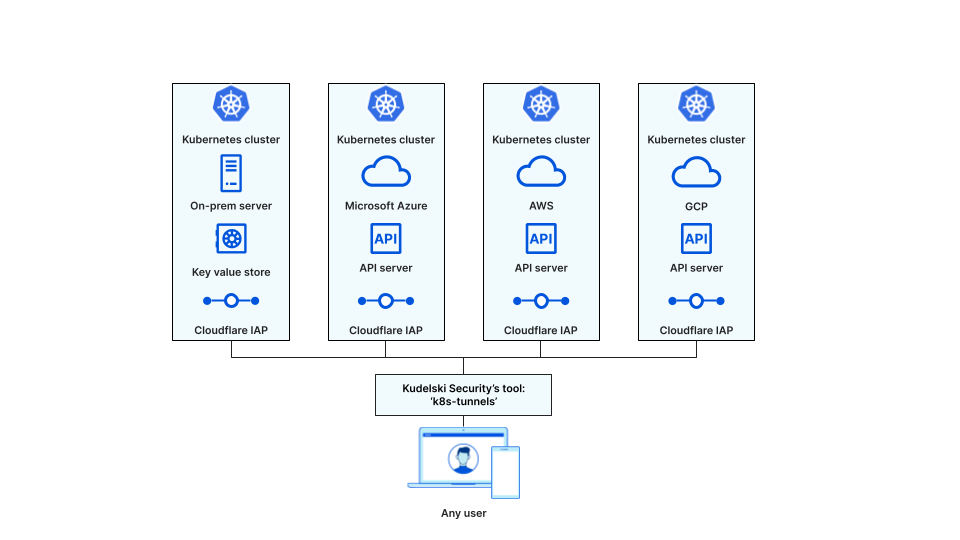

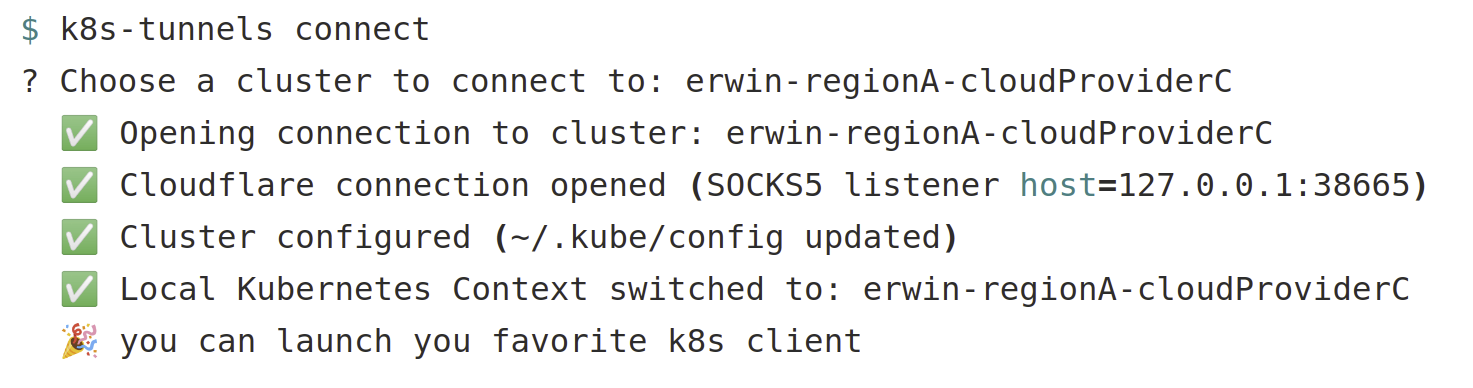

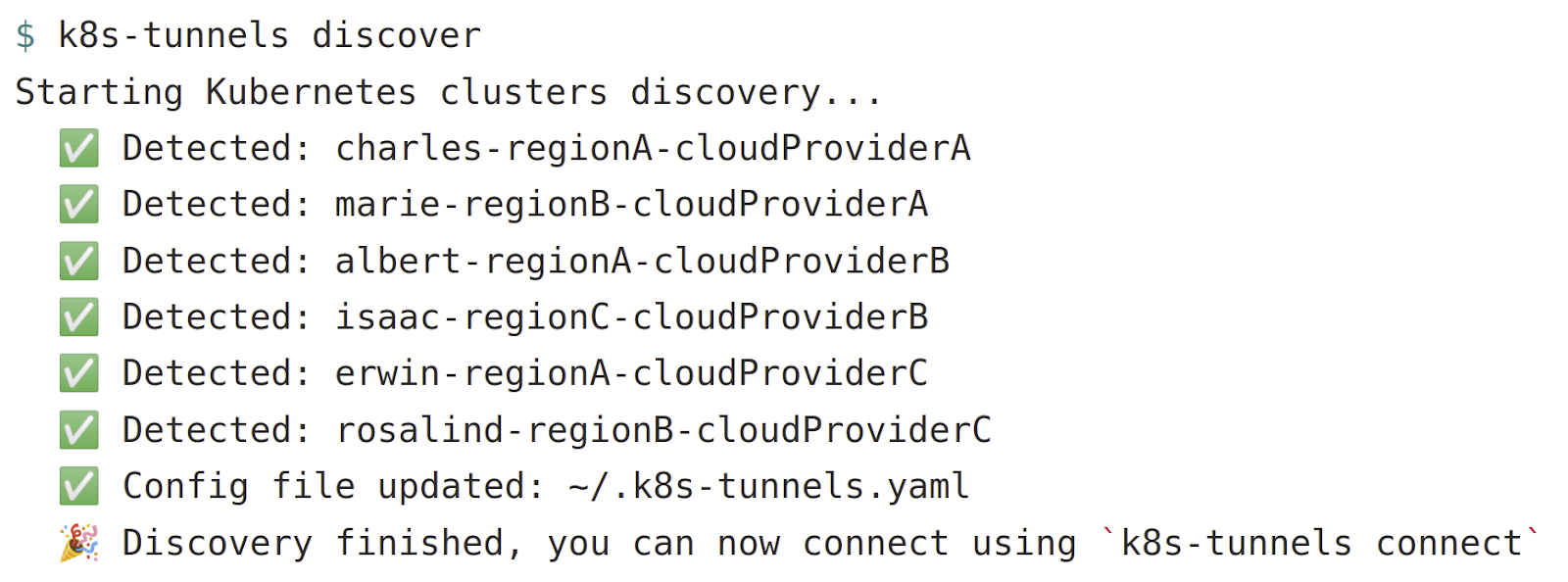

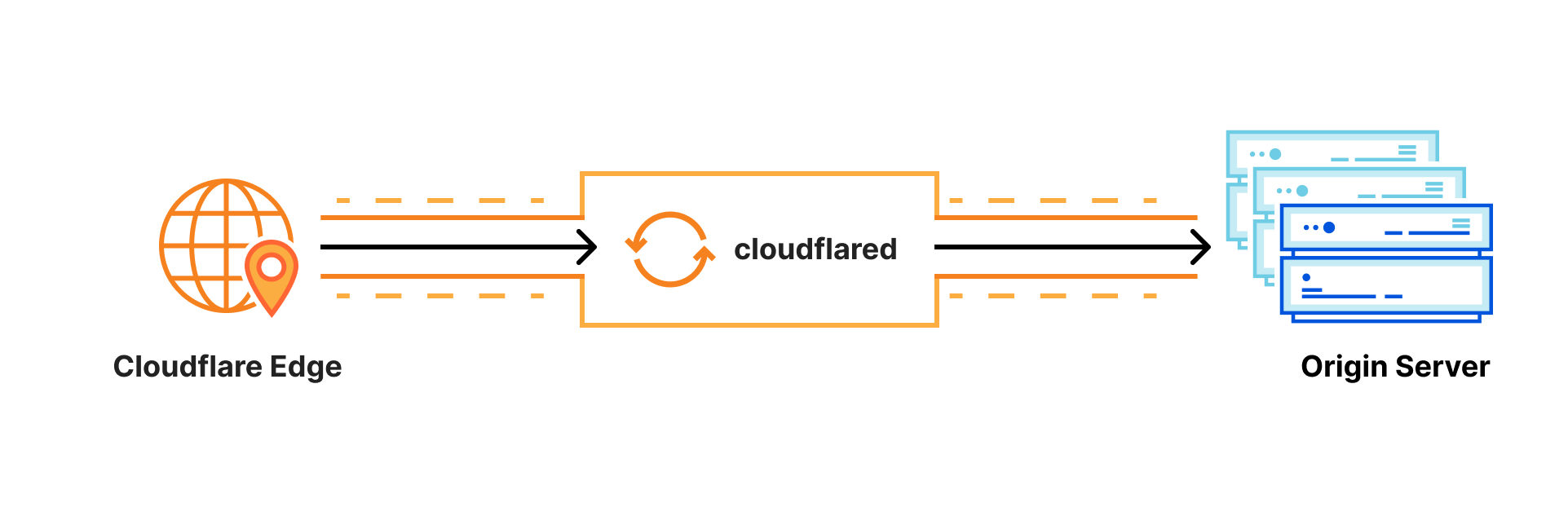

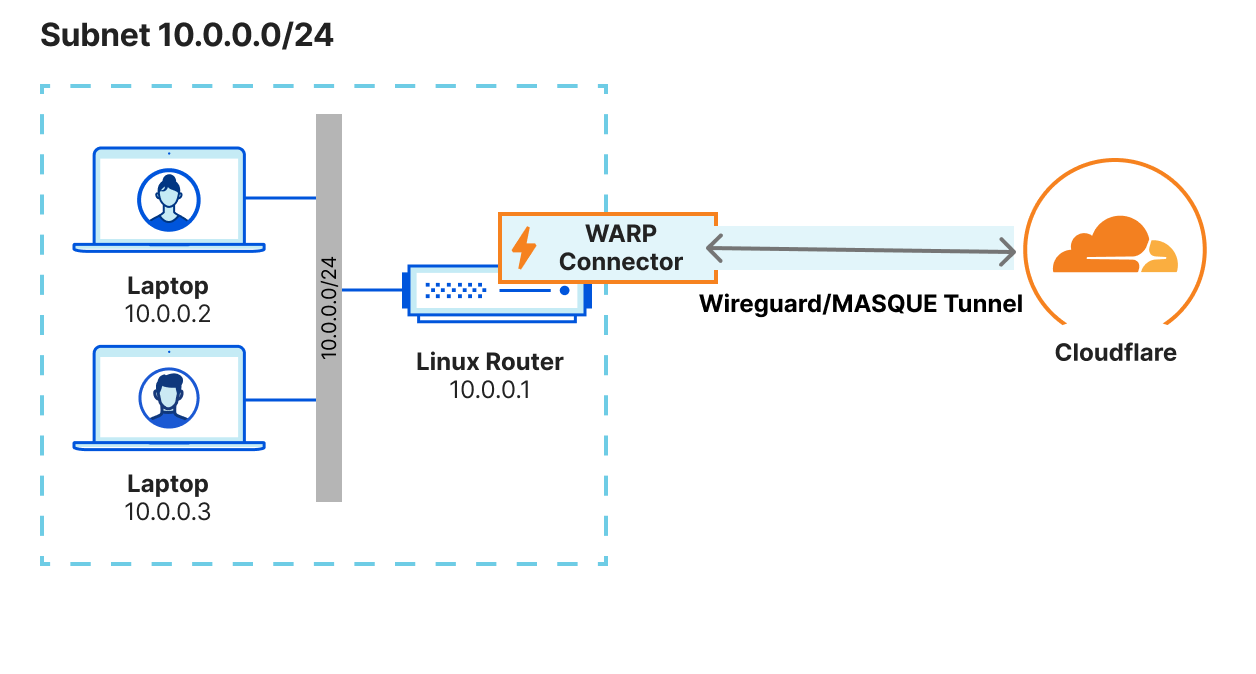

Starting today, the introduction of WARP Connector opens up new possibilities: server-initiated (SIP/VOIP) flows; site-to-site connectivity, connecting branches, headquarters, and cloud platforms; and even mesh-like networking with WARP-to-WARP. Under the hood, this new connector is an extension of warp-client that can act as a virtual router for any subnet within the network to on/off-ramp traffic through Cloudflare.

By building on WARP, we were able to take advantage of its design, where it creates a virtual network interface on the host to logically subdivide the physical interface (NIC) for the purpose of routing IP traffic. This enables us to send bidirectional traffic through the WireGuard/MASQUE tunnel that’s maintained between the host and Cloudflare edge. By virtue of this architecture, customers also get the added benefit of visibility into the true source IP of the client.

WARP Connector can be easily deployed on the default gateway without any additional routing changes. Alternatively, static routes can be configured for specific CIDRs that need to be routed via WARP Connector, and the static routes can be configured on the default gateway or on every host in that subnet.
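As a rough illustration of the static route option, the sketch below builds Linux `ip route add` commands for a list of CIDRs. It assumes the next hop is the LAN address of the host running WARP Connector; the function name, the CIDRs, and the connector address are all hypothetical, and the commands are only printed, not executed.

```python
import ipaddress


def static_route_commands(cidrs, connector_ip):
    """Build one `ip route add` command per CIDR, using the connector as next hop.

    Raises ValueError if a CIDR has host bits set or an address is malformed,
    so typos are caught before anything touches a routing table.
    """
    gateway = ipaddress.ip_address(connector_ip)
    commands = []
    for cidr in cidrs:
        network = ipaddress.ip_network(cidr)
        commands.append(f"ip route add {network} via {gateway}")
    return commands


# Hypothetical remote subnets reachable through the connector at 192.168.1.10.
for command in static_route_commands(["10.1.0.0/16", "172.16.8.0/24"], "192.168.1.10"):
    print(command)
```

The same list of commands could be applied once on the default gateway or on each host in the subnet, matching the two placements described above.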

Private network use cases

Here we’ll walk through a couple of key reasons why you may want to deploy our new connector, but remember that this solution can support numerous services, such as Microsoft’s System Center Configuration Manager (SCCM), Active Directory server updates, VOIP and SIP traffic, and developer workflows with complex CI/CD pipeline interaction. It’s also important to note this connector can either be run alongside cloudflared and Magic WAN, or can be a standalone remote access and site-to-site connector to the Cloudflare Global network.

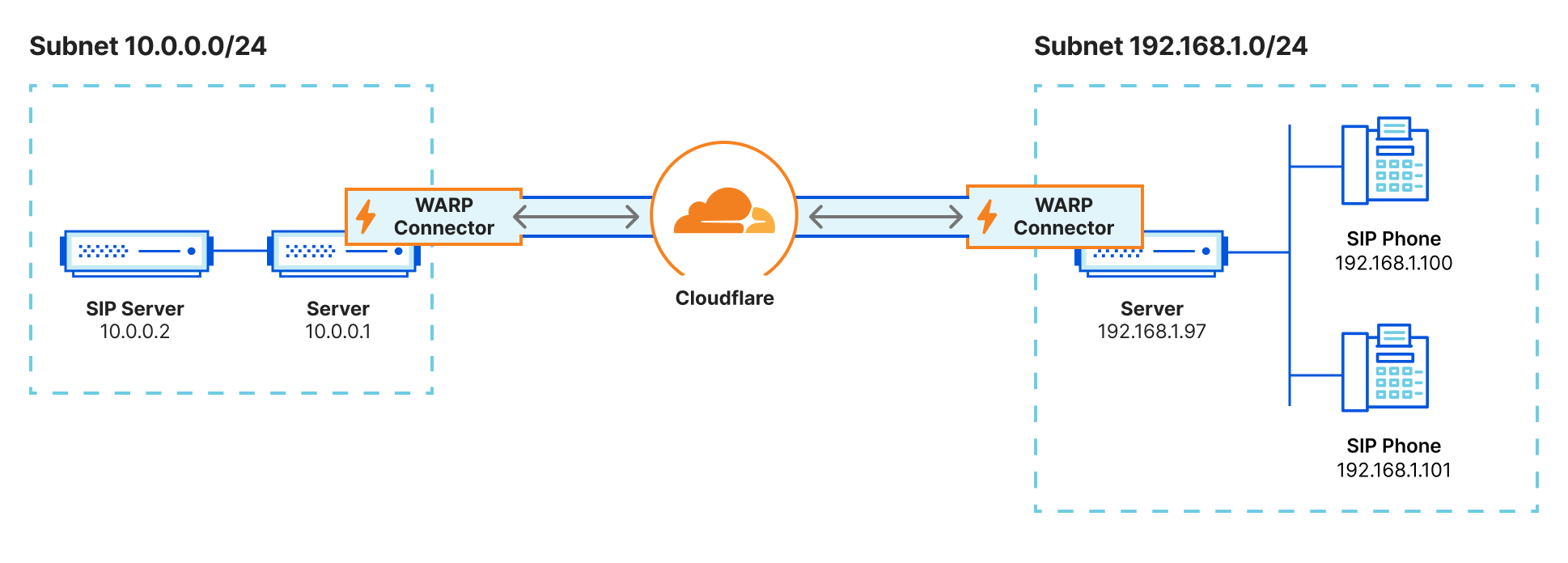

Softphone and VOIP servers

For users to establish a voice or video call over a VOIP software service, typically a SIP server within the private network brokers the connection using the last known IP address of the end-user. However, if traffic is proxied anywhere along the path, this often results in participants only receiving partial voice or data signals. With the WARP Connector, customers can now apply granular policies to these services for secure access, fortifying VOIP infrastructure within their Zero Trust framework.

Securing access to CI/CD pipeline

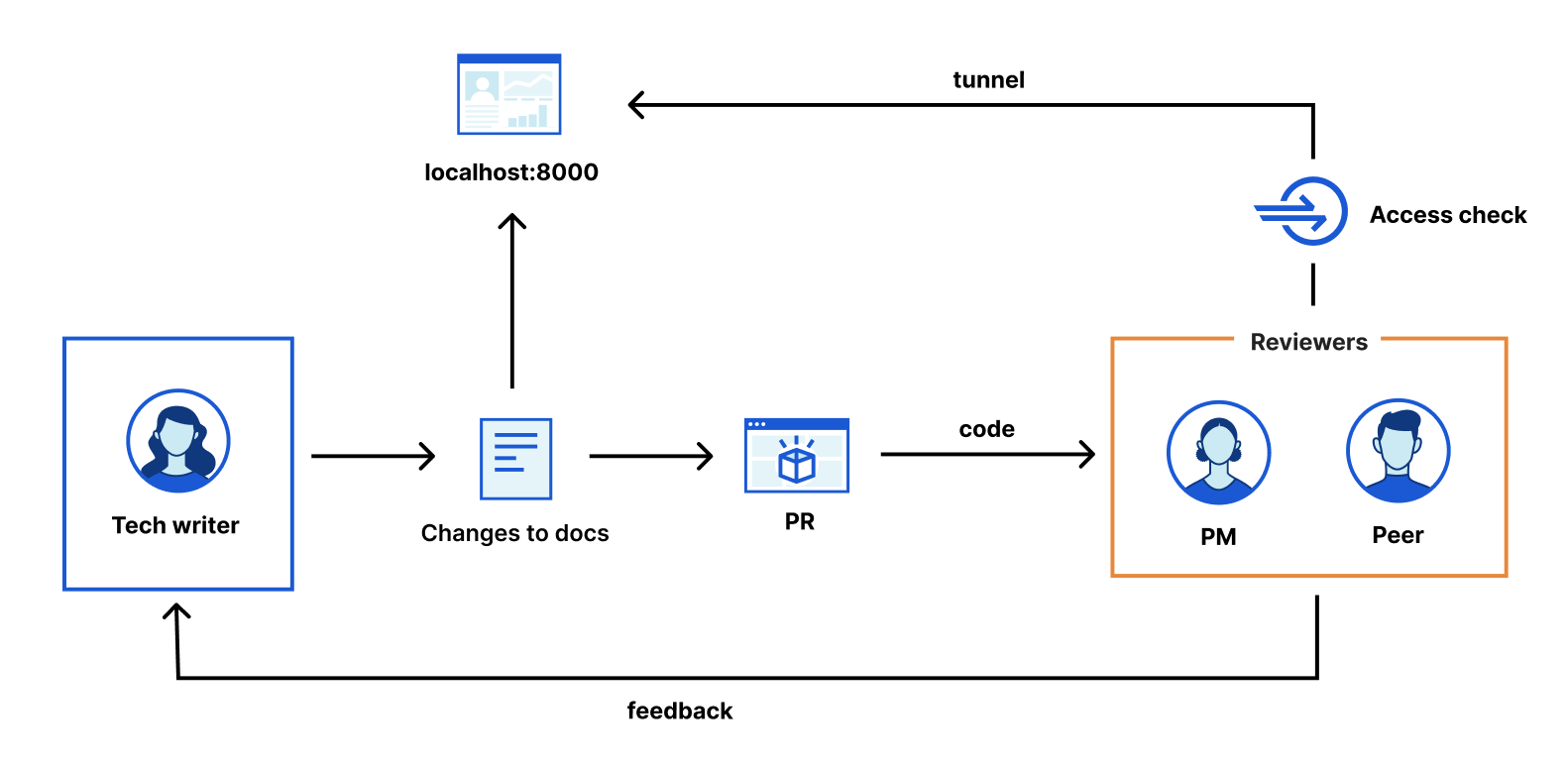

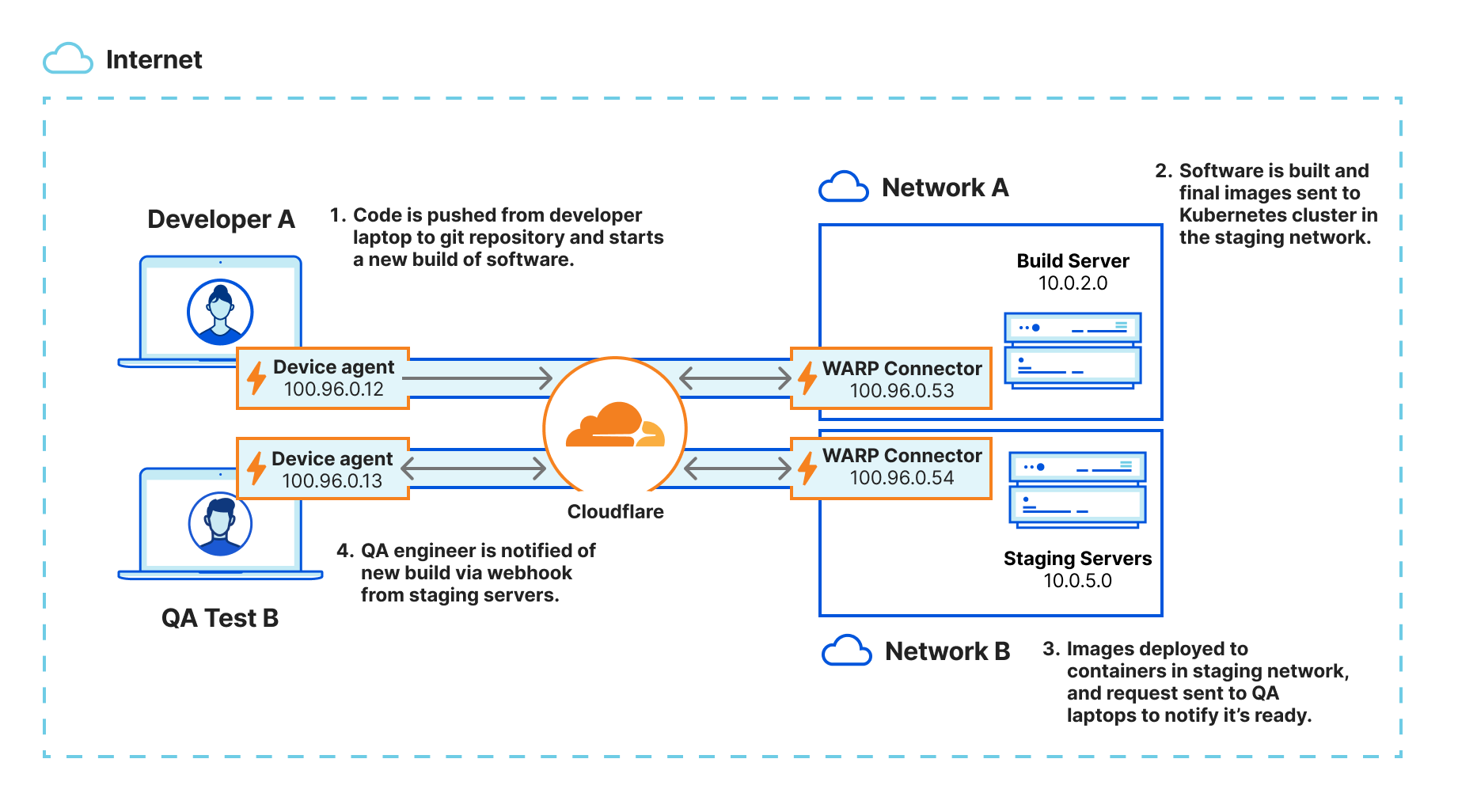

An organization’s DevOps ecosystem is generally built out of many parts, but a CI/CD server such as Jenkins or TeamCity is the epicenter of all development activities. Hence, securing that CI/CD server is critical. With the WARP Connector and WARP Client, organizations can secure the entire CI/CD pipeline and also streamline it easily.

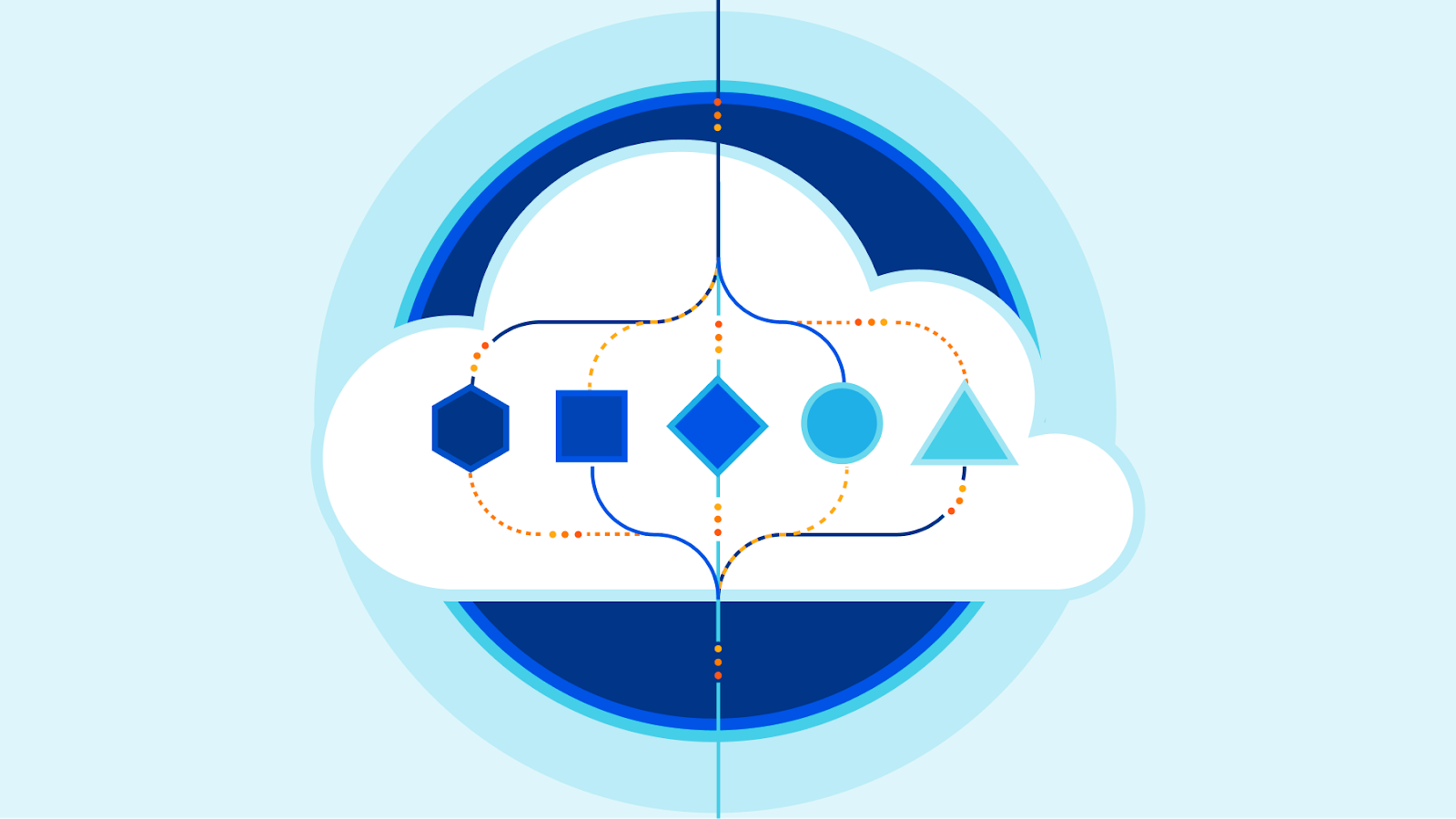

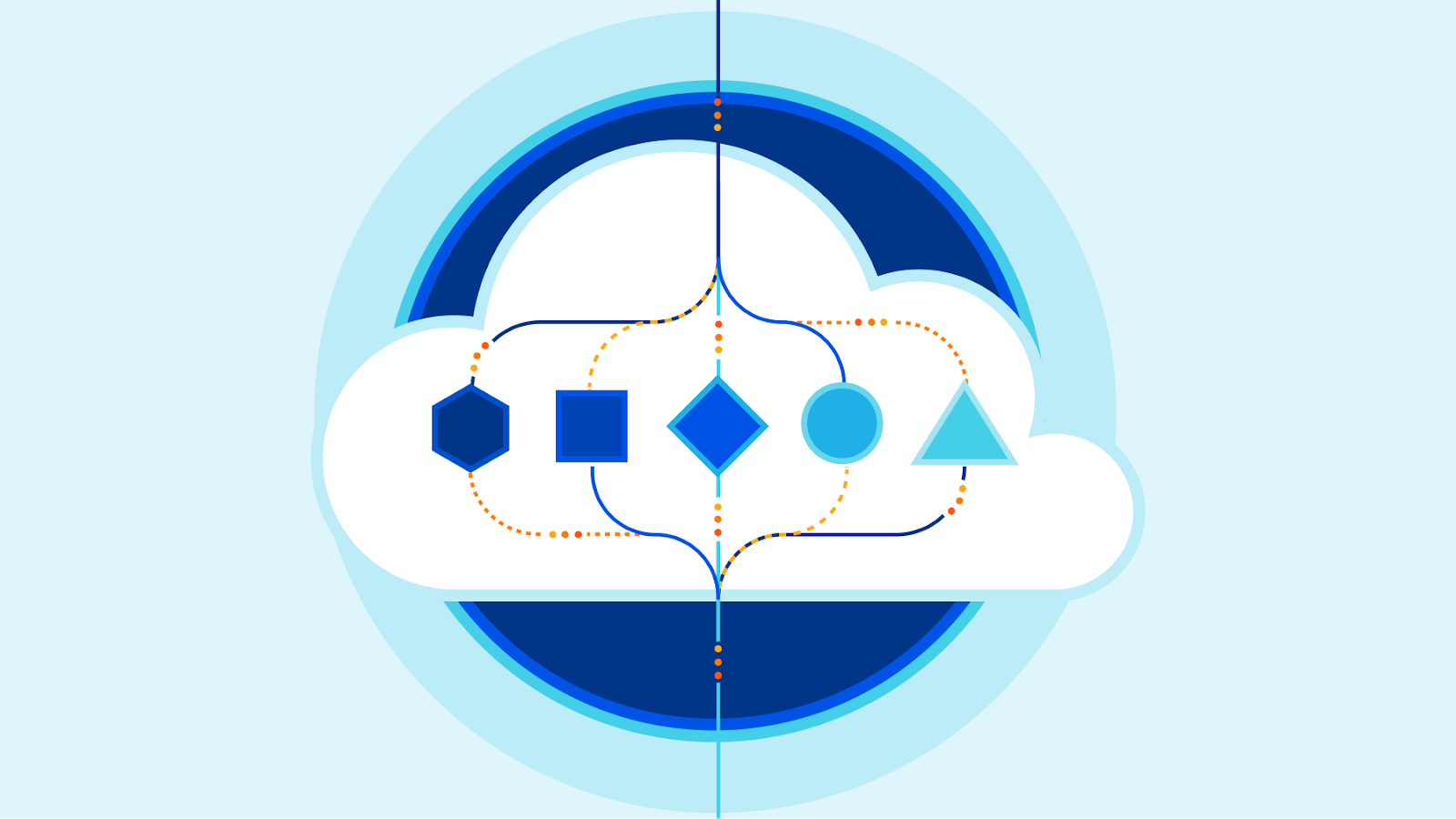

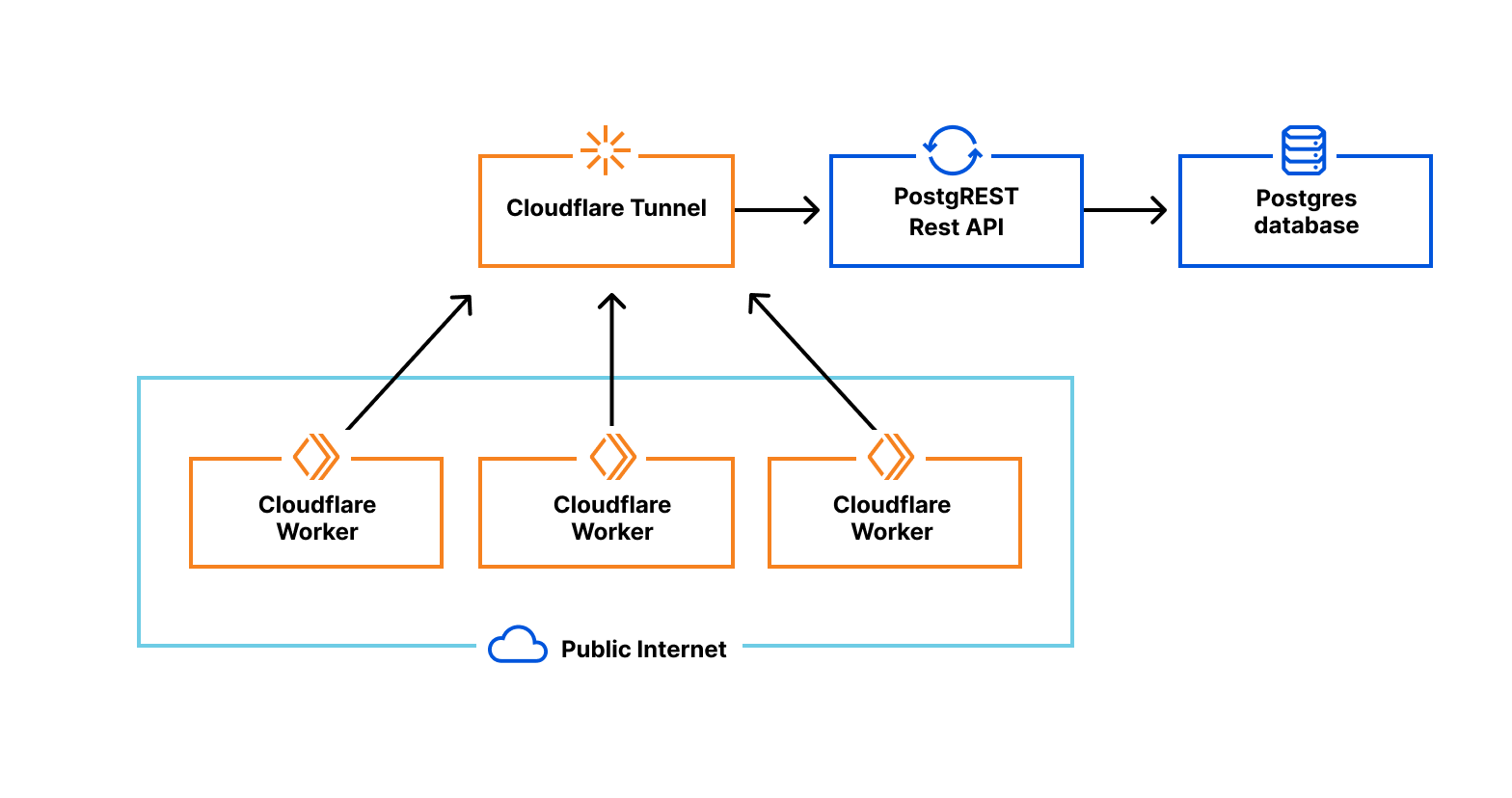

Let’s look at a typical CI/CD pipeline for a Kubernetes application. The environment is set up as depicted in the diagram above, with WARP clients on the developer and QA laptops and a WARP Connector securely connecting the CI/CD server and staging servers on different networks:

- Typically, the CI/CD pipeline is triggered when a developer commits their code change, invoking a webhook on the CI/CD server.

- Once the images are built, it’s time to deploy the code, which is typically done in stages: test, staging and production.

- Notifications are sent to the developer and QA engineer to notify them when the images are ready in the test/staging environments.

- QA engineers receive the notifications via webhook from the CI/CD servers to kick-start their monitoring and troubleshooting workflow.

With WARP Connector, customers can easily connect their developers to the tools in the DevOps ecosystem while keeping that ecosystem private and off the public Internet. Once the DevOps ecosystem is securely connected to Cloudflare, granular security policies can be easily applied to secure access to the CI/CD pipeline.

True source IP address preservation

Organizations running Microsoft AD Servers or non-web application servers often need to identify the true source IP address for auditing or policy application. If these requirements exist, WARP Connector simplifies this, offering solutions without adding NAT boundaries. This can be useful to rate-limit unhealthy source IP addresses, for ACL-based policies within the perimeter, or to collect additional diagnostics from end-users.

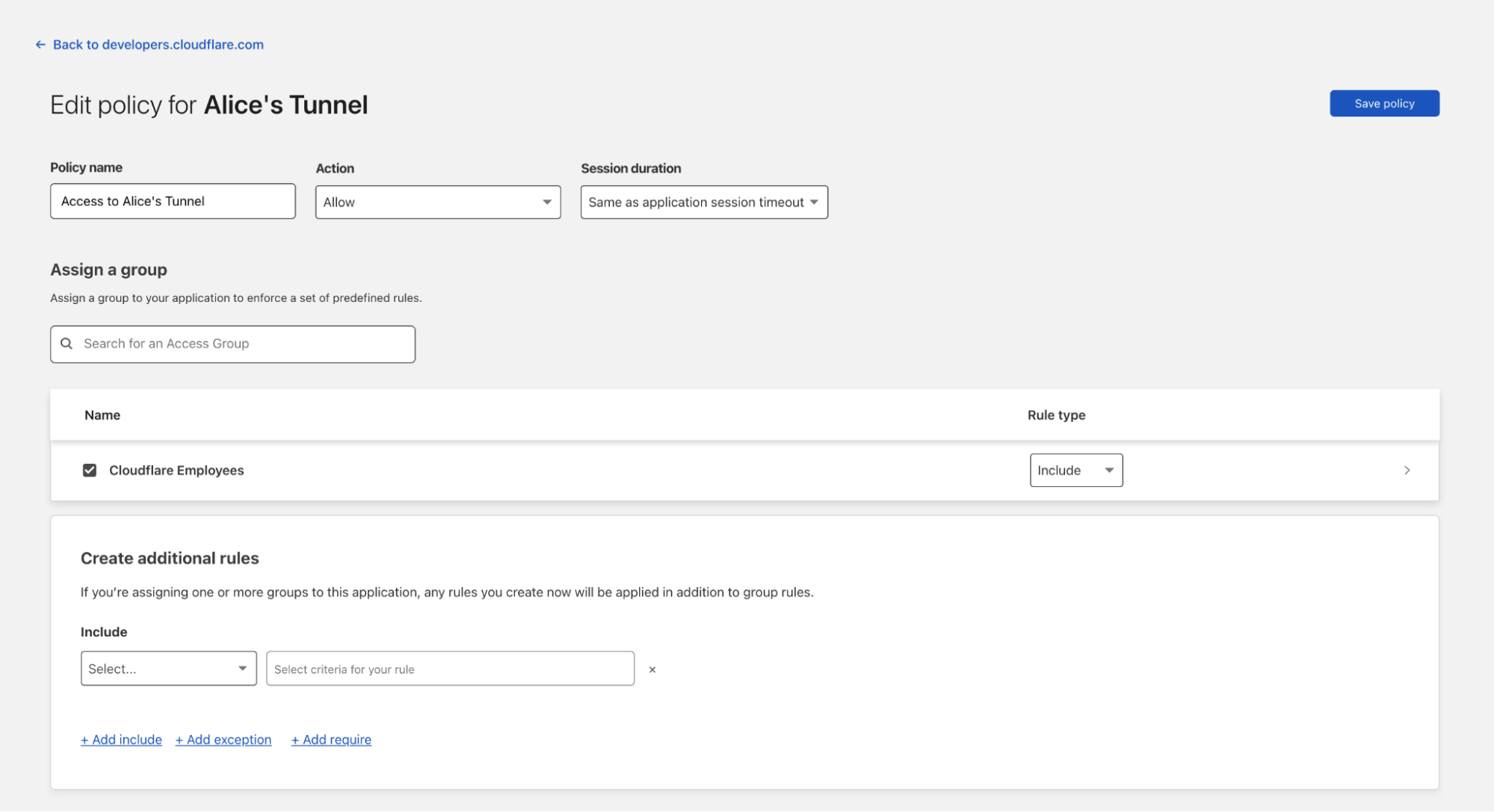

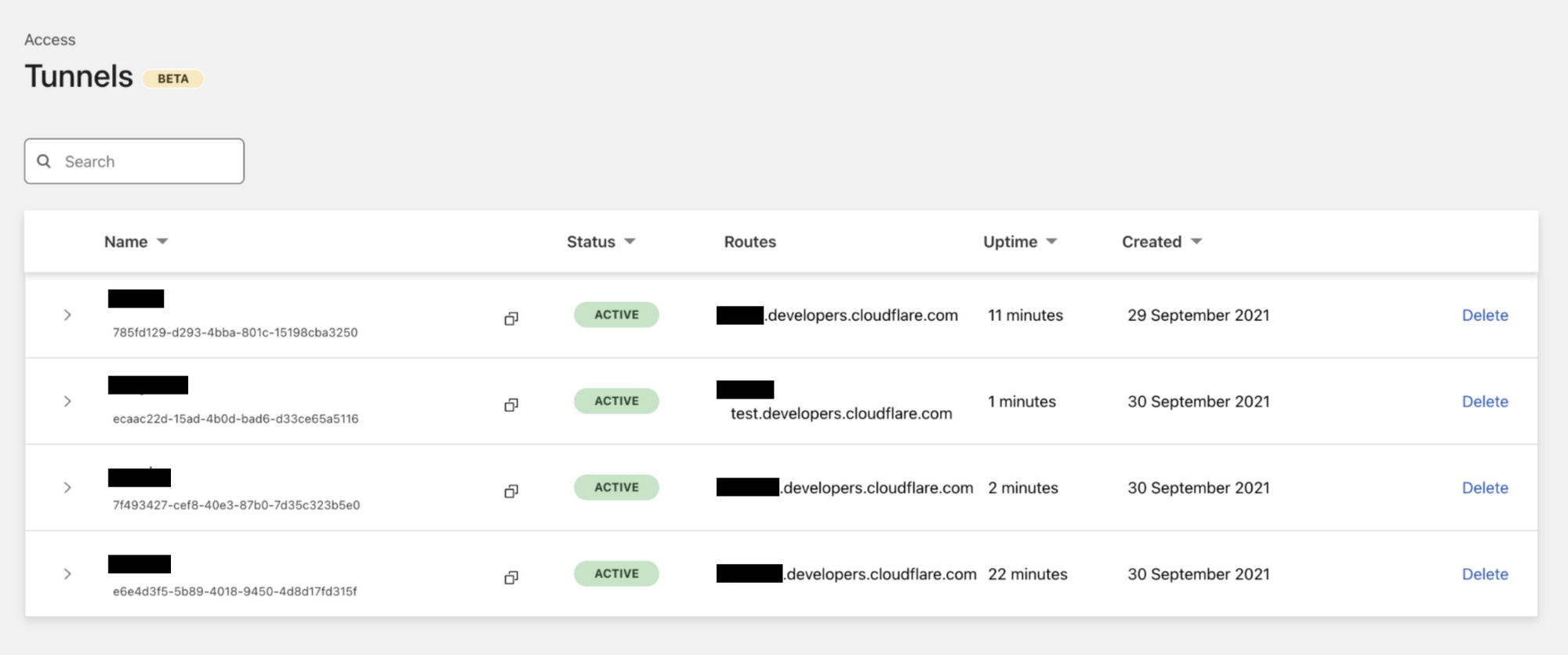

Getting started with WARP Connector

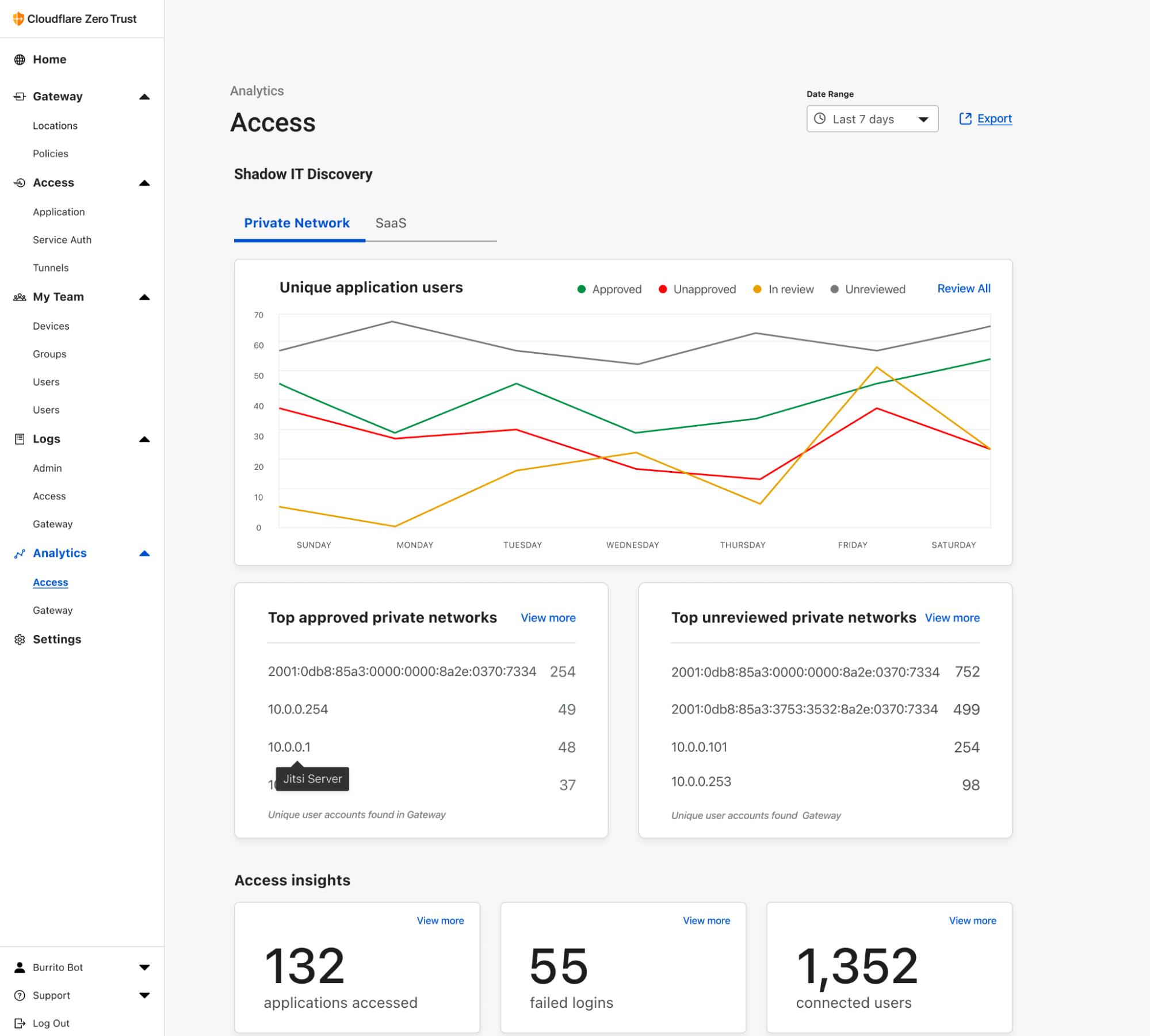

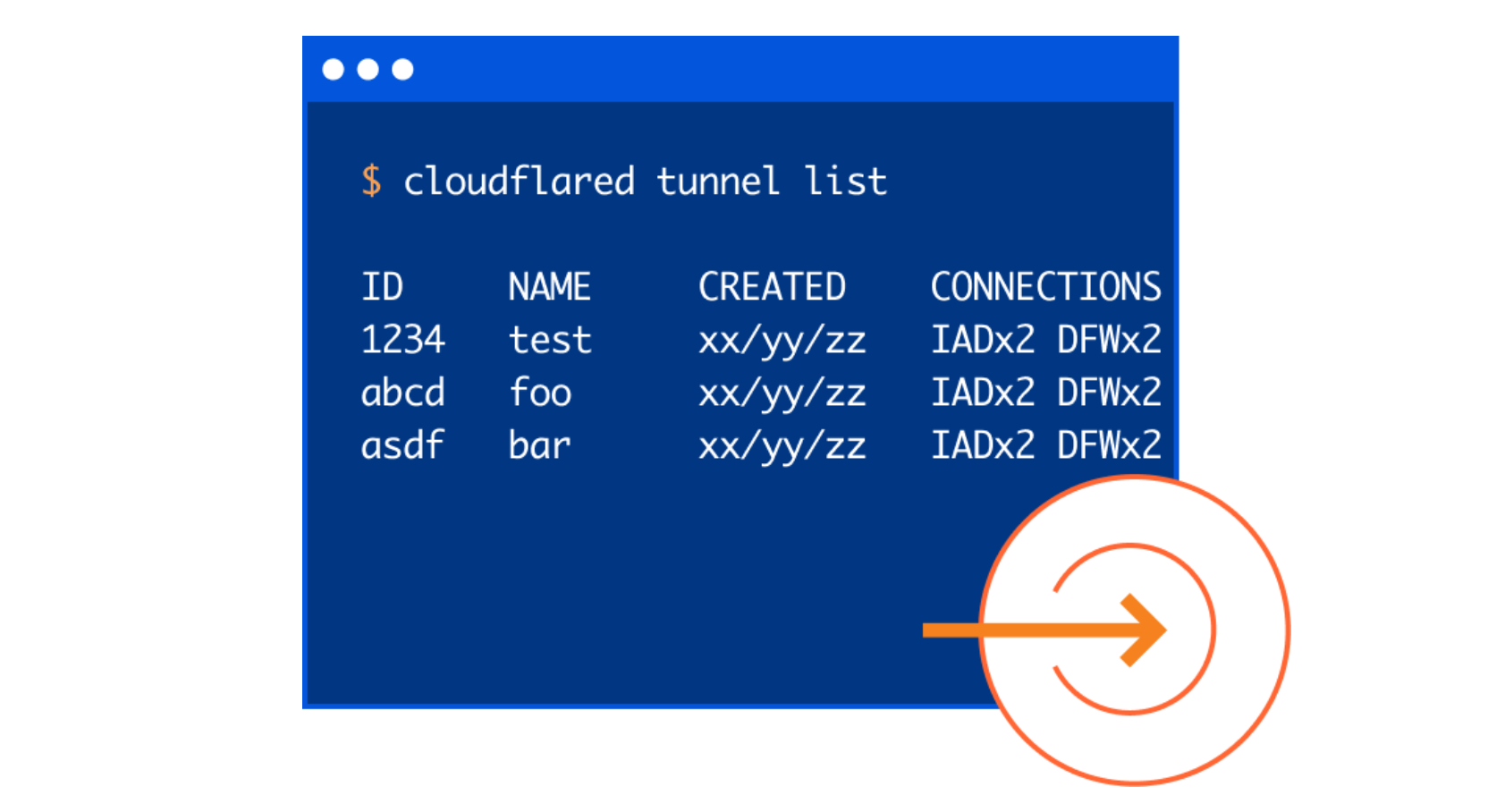

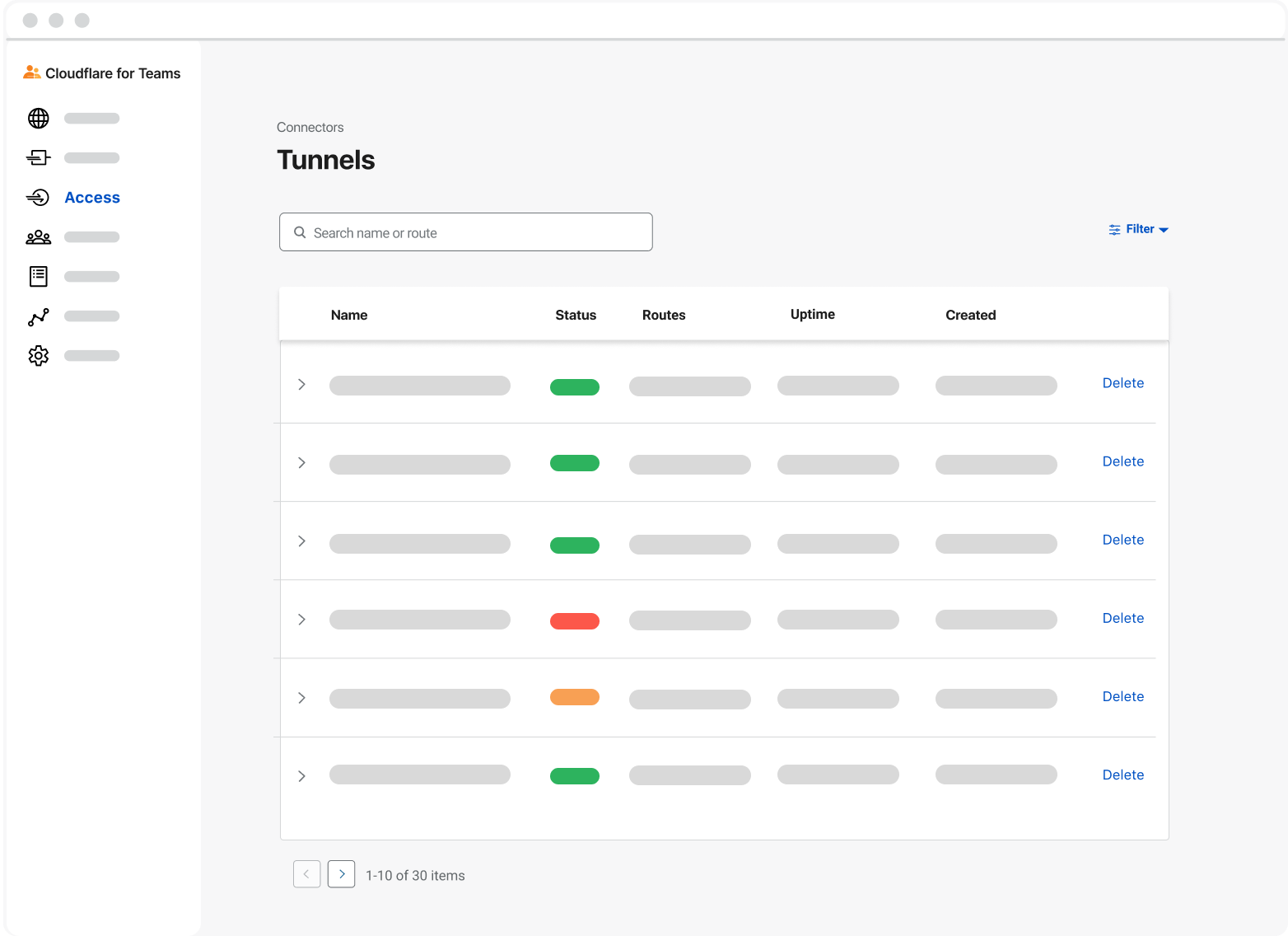

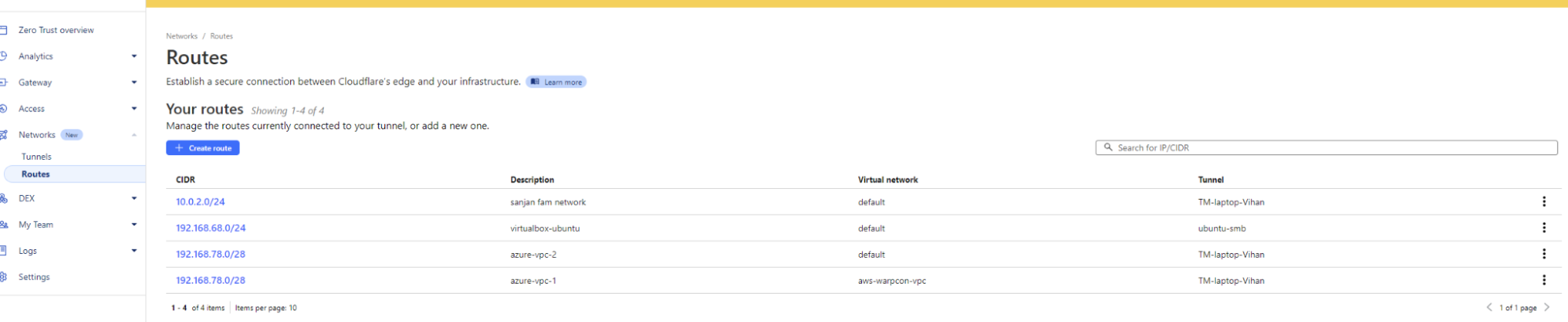

As part of this launch, we’re making some changes to the Cloudflare One Dashboard to better highlight our different network on/off ramp options. As of today, a new “Network” tab will appear on your dashboard. This will be the new home for the Cloudflare Tunnel UI.

We are also introducing the new “Routes” tab next to “Tunnels”. This page will present an organizational view of customers’ virtual networks, Cloudflare Tunnels, and the routes associated with them. This new page helps answer customers’ questions about their network configurations, such as: “Which Cloudflare Tunnel has the route to my host 192.168.1.2?”, “If a route for CIDR 192.168.2.1/28 exists, how can it be accessed?”, or “What are the overlapping CIDRs in my environment and which VNETs do they belong to?”. This is extremely useful for customers with complex enterprise networks who use the Cloudflare dashboard to troubleshoot connectivity issues.
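The questions above boil down to route lookups and overlap checks. The sketch below shows one way to answer them offline with Python’s standard `ipaddress` module. It is not the dashboard’s implementation; the route list, tunnel names, and VNET names are hypothetical. Note that 192.168.2.1/28 has host bits set, so it is parsed with `strict=False` and normalizes to 192.168.2.0/28.

```python
import ipaddress
from itertools import combinations

# Hypothetical route table: (CIDR, tunnel name, virtual network name).
ROUTES = [
    ("192.168.1.0/24", "tunnel-hq", "vnet-default"),
    ("192.168.2.1/28", "tunnel-branch", "vnet-default"),
    ("192.168.1.0/25", "tunnel-lab", "vnet-staging"),
]


def parse(routes):
    """Convert CIDR strings to network objects, tolerating set host bits."""
    return [(ipaddress.ip_network(c, strict=False), t, v) for c, t, v in routes]


def tunnel_for_host(routes, host, vnet):
    """Return the tunnel with the most specific route to host in vnet, or None."""
    addr = ipaddress.ip_address(host)
    matches = [(n, t) for n, t, v in parse(routes) if v == vnet and addr in n]
    if not matches:
        return None
    # Longest prefix wins, as in ordinary IP routing.
    return max(matches, key=lambda m: m[0].prefixlen)[1]


def overlapping_cidrs(routes):
    """Return (cidr_a, vnet_a, cidr_b, vnet_b) for every overlapping pair."""
    return [
        (str(a), vnet_a, str(b), vnet_b)
        for (a, _, vnet_a), (b, _, vnet_b) in combinations(parse(routes), 2)
        if a.overlaps(b)
    ]


print(tunnel_for_host(ROUTES, "192.168.1.2", "vnet-default"))  # tunnel-hq
print(overlapping_cidrs(ROUTES))
```

Scoping the host lookup to a single VNET reflects the fact that the same CIDR can legitimately appear in more than one virtual network, which is also why the overlap check reports the VNET for each side.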

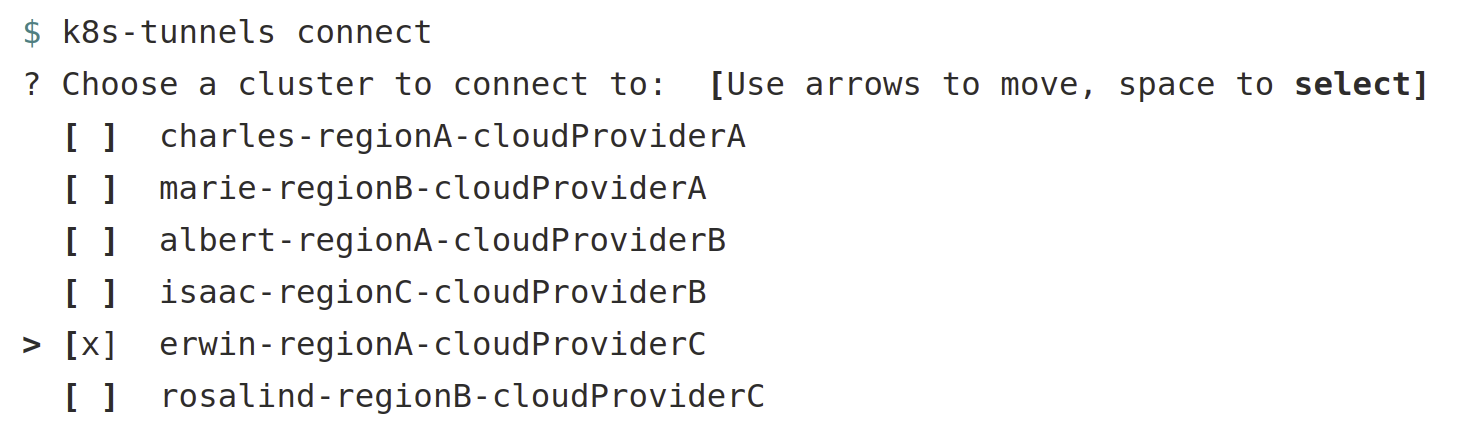

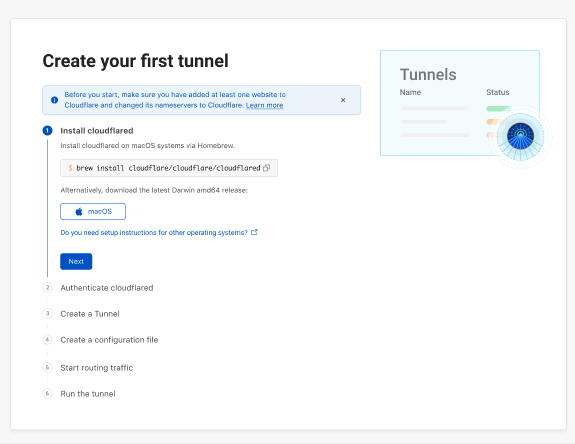

Getting started with WARP Connector is straightforward. It is currently deployable on Linux hosts: select “create a Tunnel” and pick either cloudflared or WARP to deploy straight from the dashboard. Follow our developer documentation to get started in a few easy steps. In the near future, we will add support for more platforms where WARP Connectors can be deployed.

What’s next?

Thank you to all of our private beta customers for their invaluable feedback. Moving forward, our immediate focus in the coming quarters is on simplifying deployment, mirroring that of cloudflared, and enhancing high availability through redundancy and failover mechanisms.

Stay tuned for more updates as we continue our journey in innovating and enhancing the Cloudflare One platform. We’re excited to see how our customers leverage WARP Connector to transform their connectivity and security landscape.