Post Syndicated from Omer Yoachimik original https://blog.cloudflare.com/ddos-threat-report-for-2024-q1

Welcome to the 17th edition of Cloudflare’s DDoS threat report. This edition covers the DDoS threat landscape along with key findings as observed from the Cloudflare network during the first quarter of 2024.

What is a DDoS attack?

But first, a quick recap. A DDoS attack, short for Distributed Denial of Service attack, is a type of cyber attack that aims to take down or disrupt Internet services such as websites or mobile apps and make them unavailable for users. DDoS attacks are usually carried out by flooding the victim’s server with more traffic than it can handle.

To learn more about DDoS attacks and other types of attacks, visit our Learning Center.

Accessing previous reports

Quick reminder that you can access previous editions of DDoS threat reports on the Cloudflare blog. They are also available on our interactive hub, Cloudflare Radar. On Radar, you can find global Internet traffic, attacks, and technology trends and insights, with drill-down and filtering capabilities, so you can zoom in on specific countries, industries, and networks. There’s also a free API allowing academics, data sleuths, and other web enthusiasts to investigate Internet trends across the globe.

To learn how we prepare this report, refer to our Methodologies.

2024 Q1 key insights

Key insights from the first quarter of 2024 include:

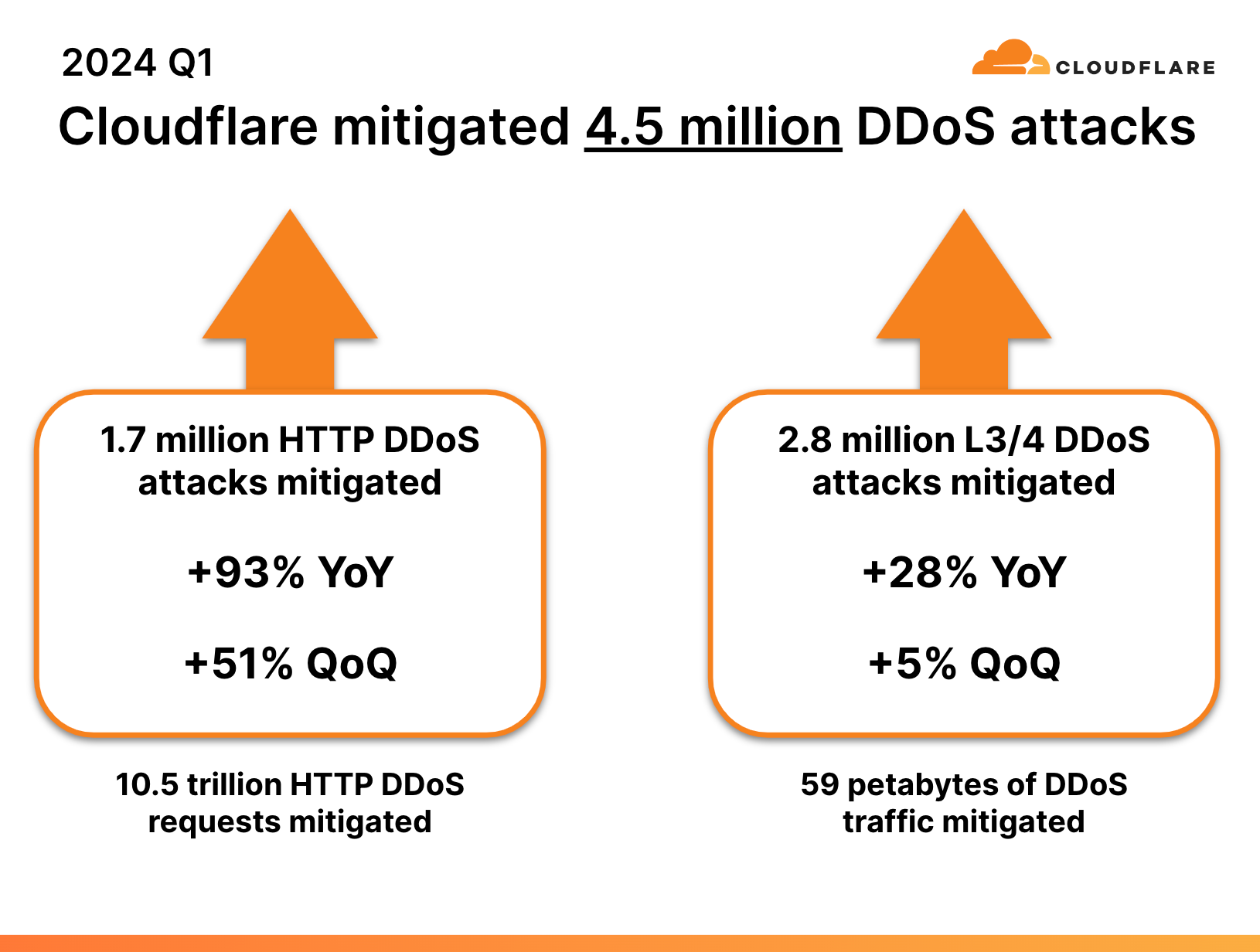

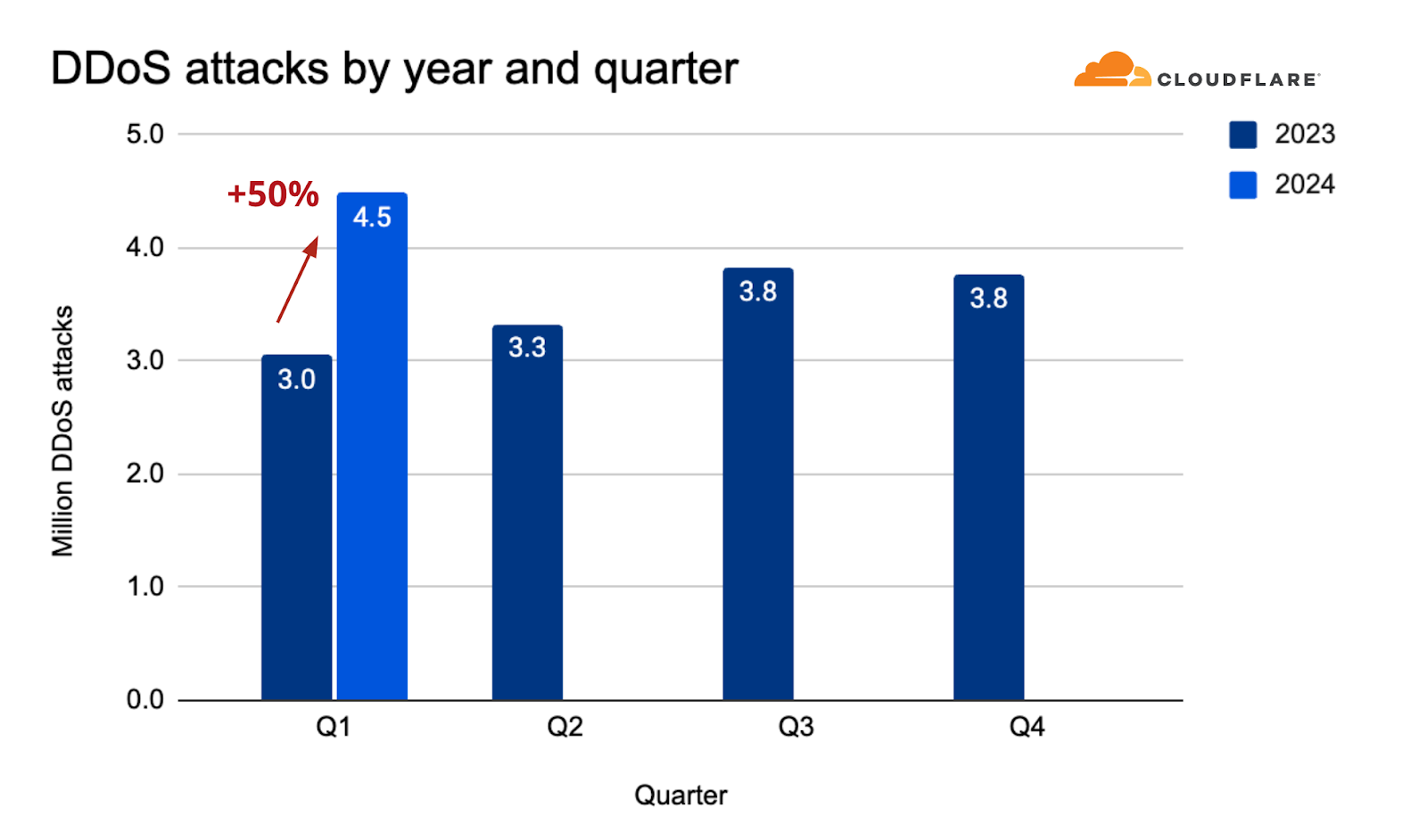

- 2024 started with a bang. Cloudflare’s defense systems automatically mitigated 4.5 million DDoS attacks during the first quarter — representing a 50% year-over-year (YoY) increase.

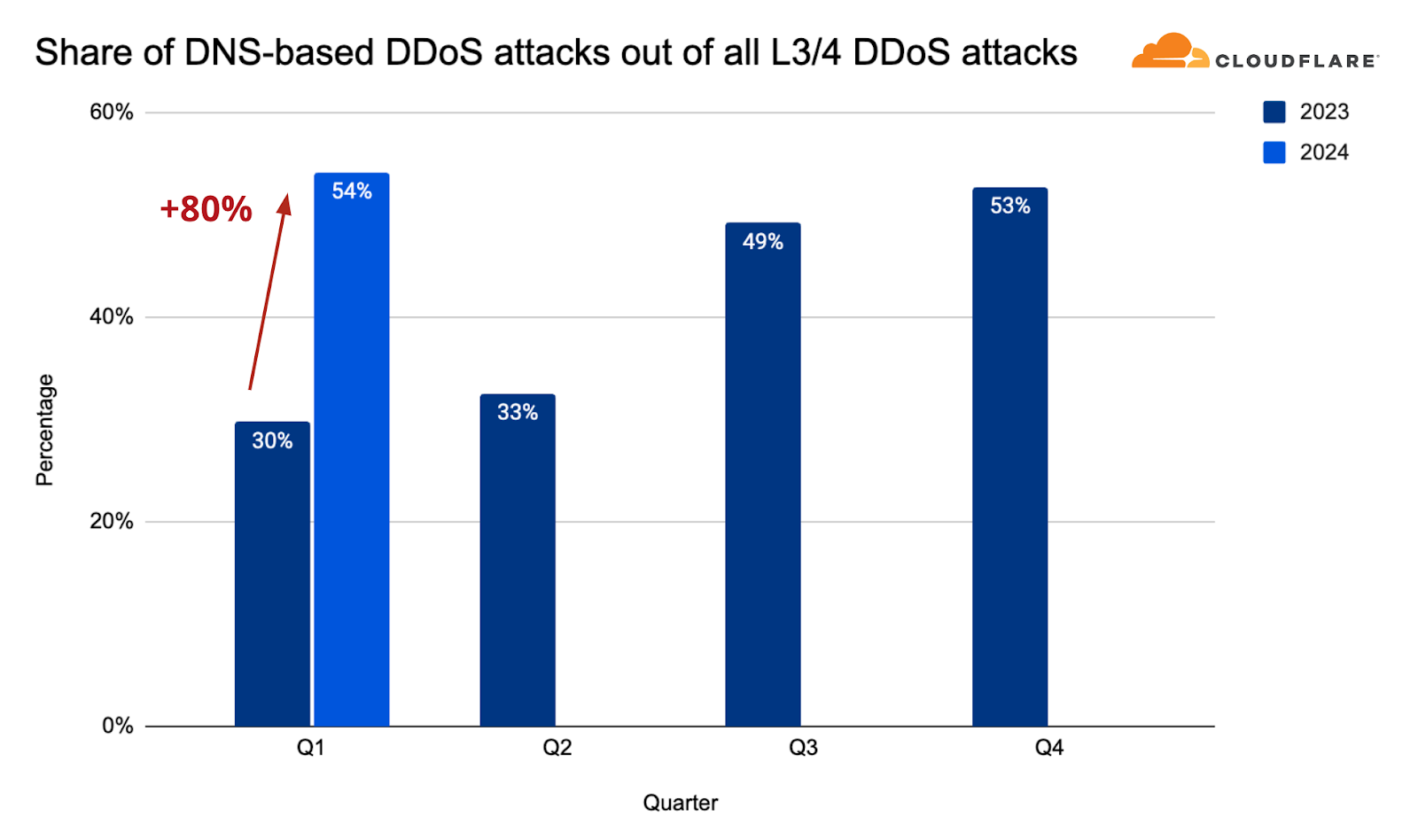

- DNS-based DDoS attacks increased by 80% YoY and remain the most prominent attack vector.

- DDoS attacks on Sweden surged by 466% after its acceptance to the NATO alliance, mirroring the pattern observed during Finland’s NATO accession in 2023.

Starting 2024 with a bang

We’ve just wrapped up the first quarter of 2024, and, already, our automated defenses have mitigated 4.5 million DDoS attacks — an amount equivalent to 32% of all the DDoS attacks we mitigated in 2023.

Breaking it down to attack types, HTTP DDoS attacks increased by 93% YoY and 51% quarter-over-quarter (QoQ). Network-layer DDoS attacks, also known as L3/4 DDoS attacks, increased by 28% YoY and 5% QoQ.

When comparing the combined number of HTTP DDoS attacks and L3/4 DDoS attacks, we can see that, overall, in the first quarter of 2024, the count increased by 50% YoY and 18% QoQ.
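As a quick illustration of how the YoY and QoQ figures are derived, here is a minimal sketch. Only the 4.5 million figure comes from this report. The two earlier-period counts are assumptions, back-computed from the rounded percentages above, and are not reported values.

```python
def pct_change(current: float, previous: float) -> float:
    """Percentage change from a previous period to the current one."""
    if previous == 0:
        raise ValueError("previous period must be non-zero")
    return (current - previous) / previous * 100.0


q1_2024 = 4_500_000  # attacks mitigated in 2024 Q1 (from the report)
q1_2023 = 3_000_000  # ASSUMED: same quarter a year earlier, illustrative only
q4_2023 = 3_800_000  # ASSUMED: previous quarter, illustrative only

yoy = pct_change(q1_2024, q1_2023)  # year-over-year
qoq = pct_change(q1_2024, q4_2023)  # quarter-over-quarter
print(f"YoY: {yoy:.0f}%  QoQ: {qoq:.0f}%")
```

YoY compares a quarter with the same quarter one year earlier, while QoQ compares it with the immediately preceding quarter.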

In total, our systems mitigated 10.5 trillion HTTP DDoS attack requests in Q1. Our systems also mitigated over 59 petabytes of DDoS attack traffic — just on the network-layer.

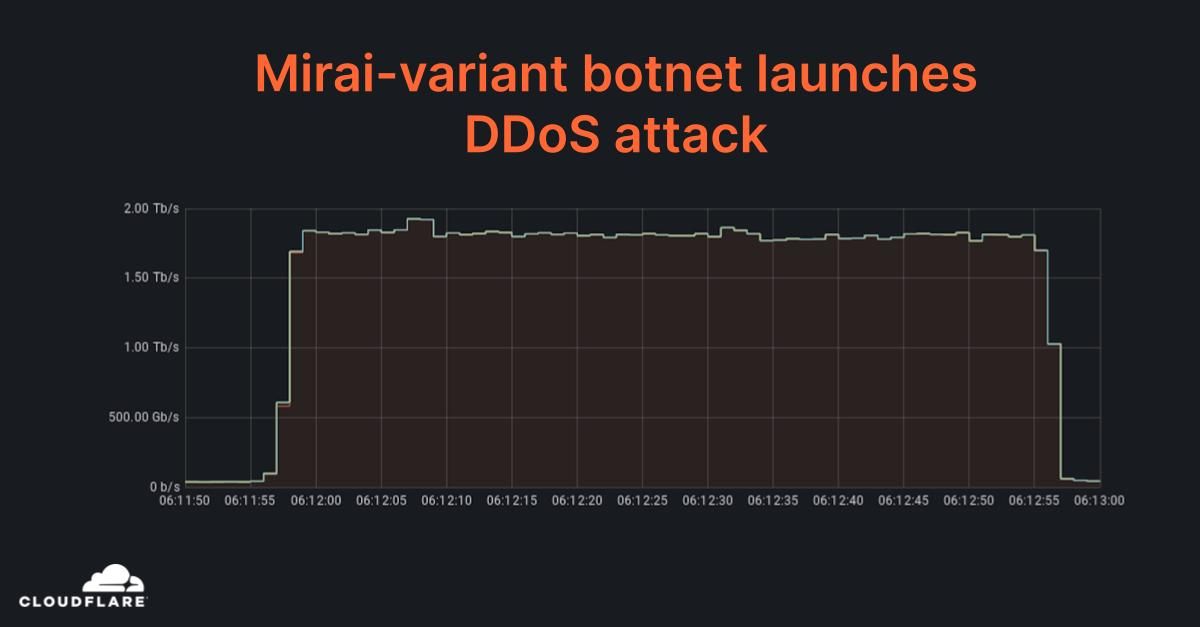

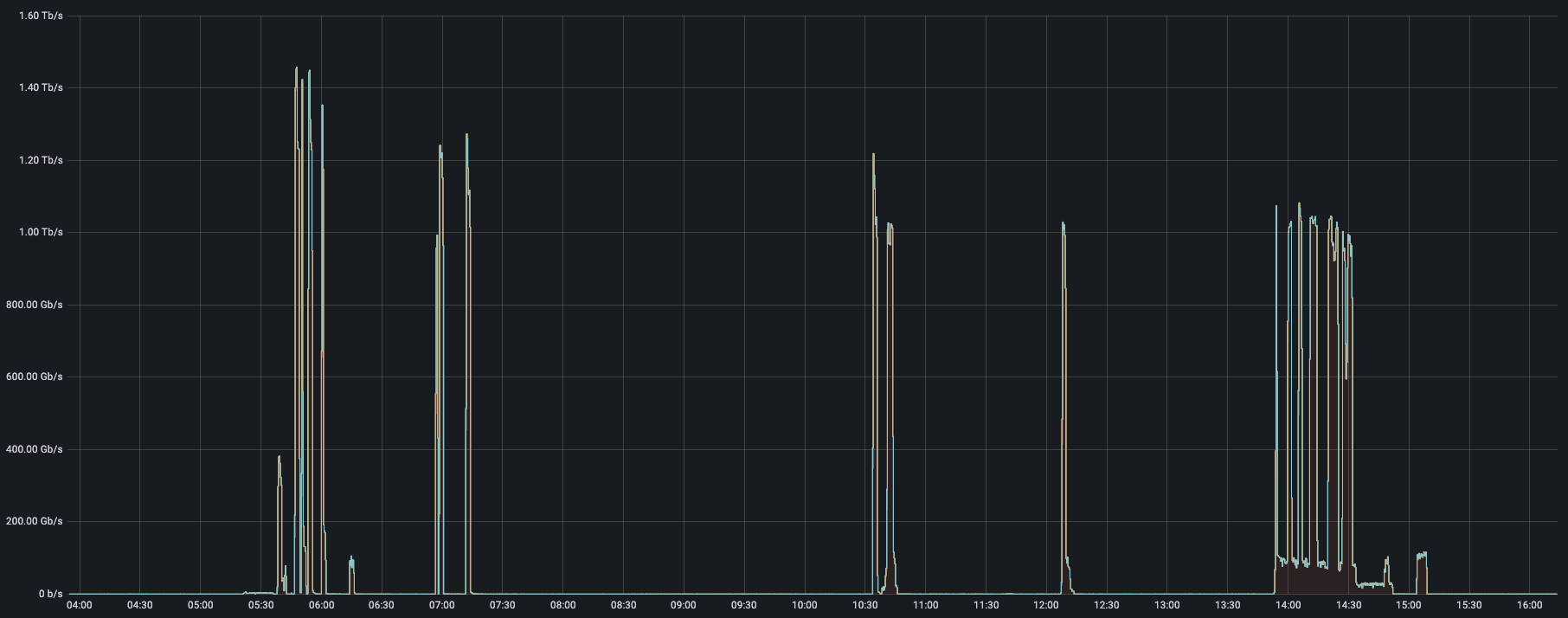

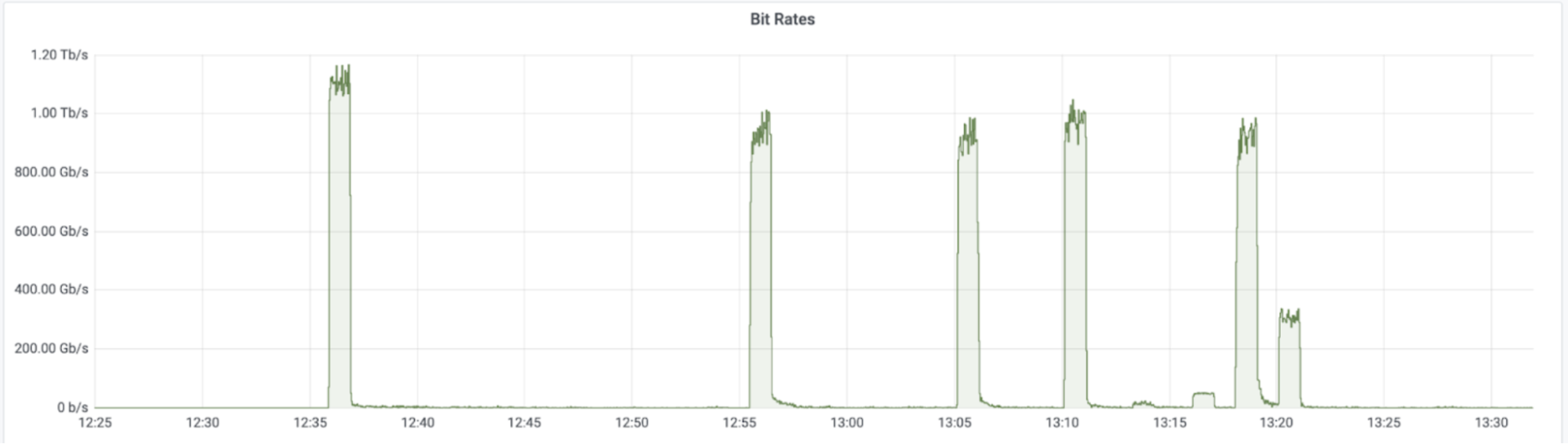

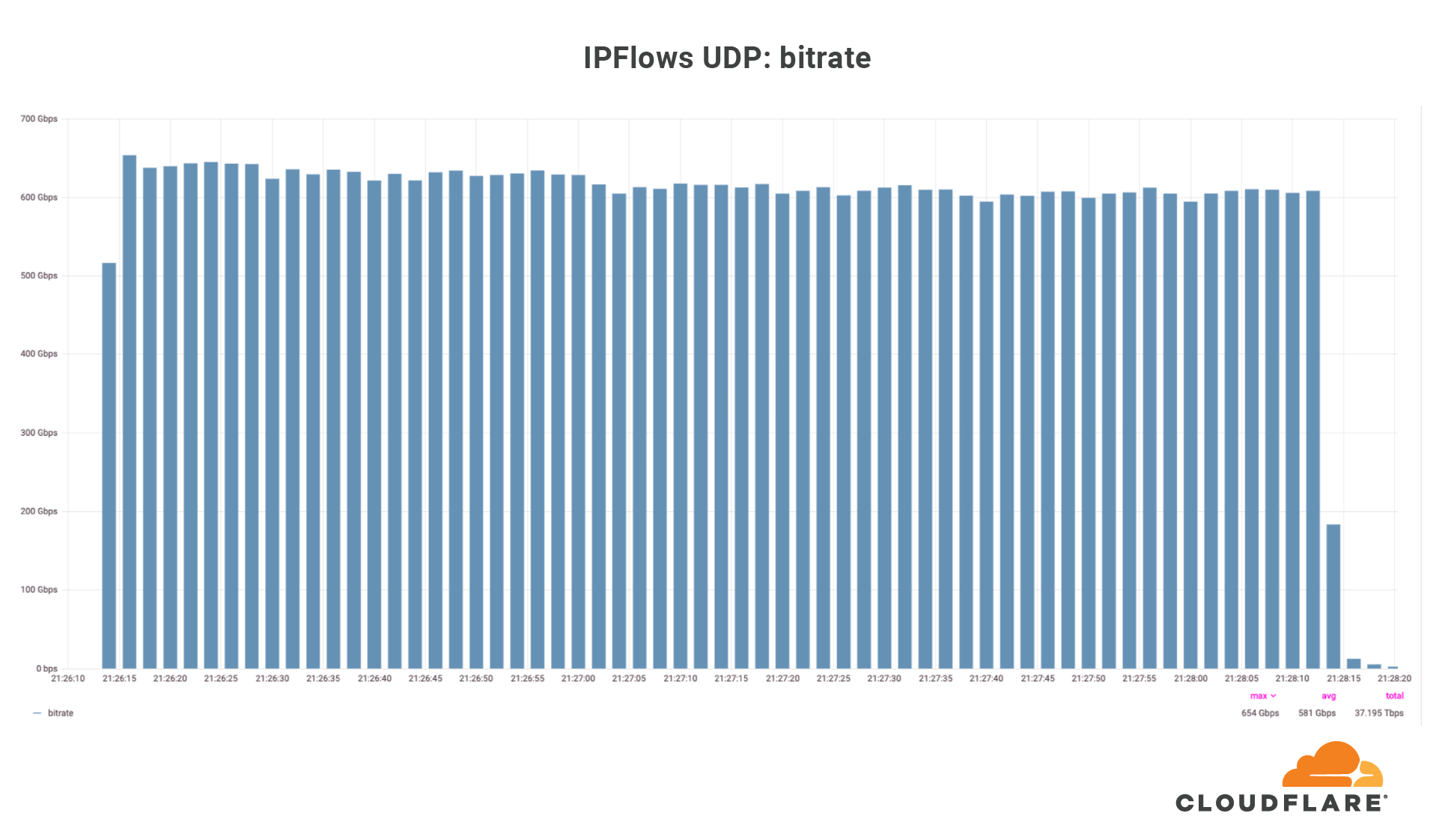

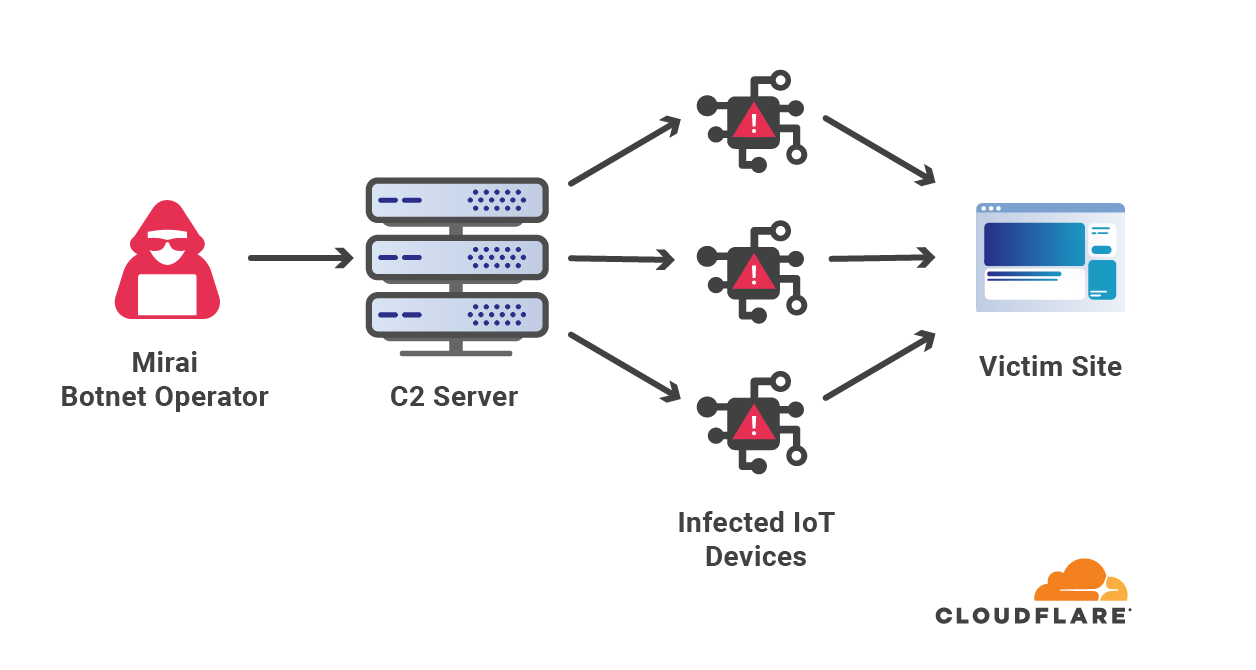

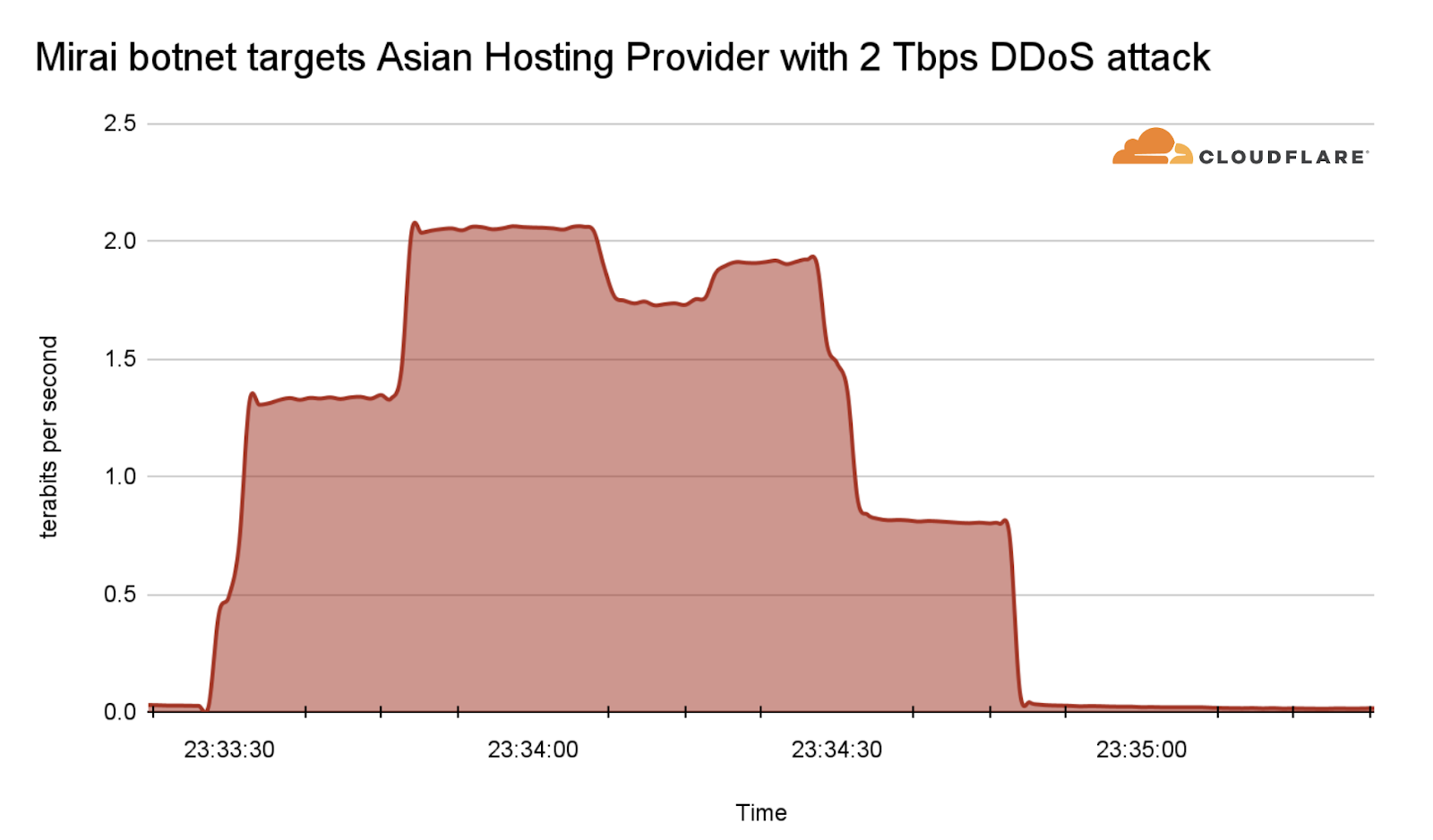

Among those network-layer DDoS attacks, many of them exceeded the 1 terabit per second rate — almost on a weekly basis. The largest attack that we have mitigated so far in 2024 was launched by a Mirai-variant botnet. This attack reached 2 Tbps and was aimed at an Asian hosting provider protected by Cloudflare Magic Transit. Cloudflare’s systems automatically detected and mitigated the attack.

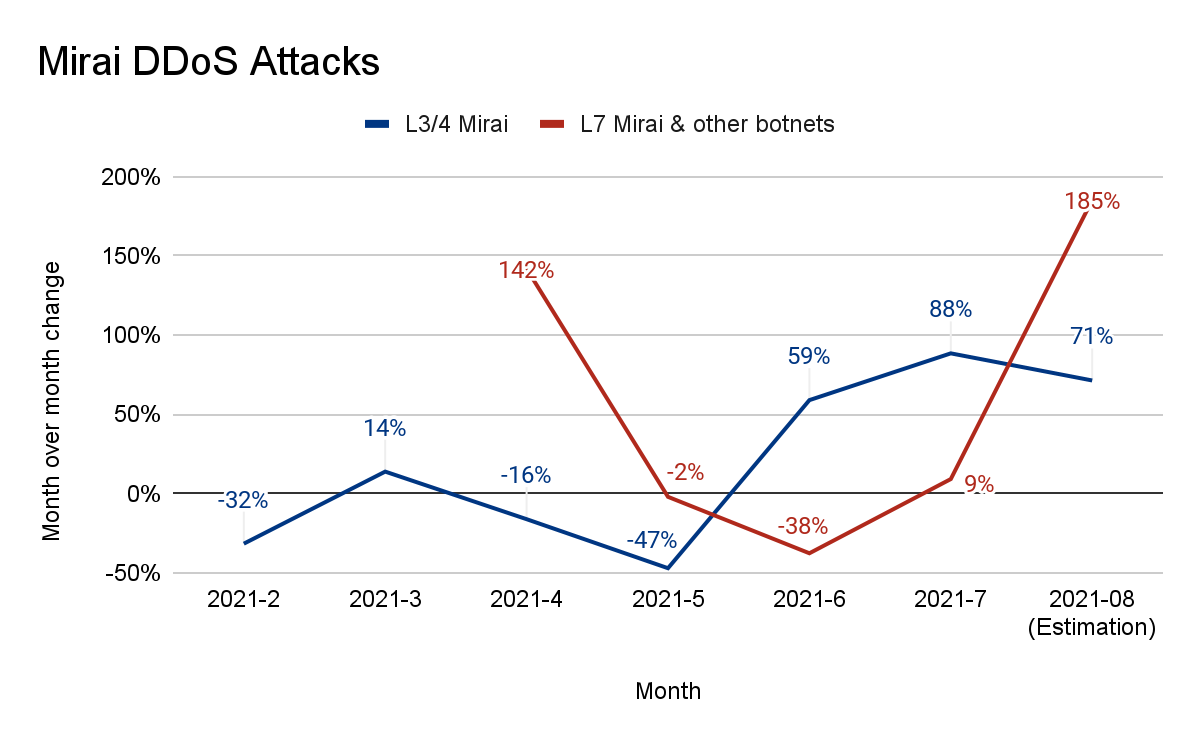

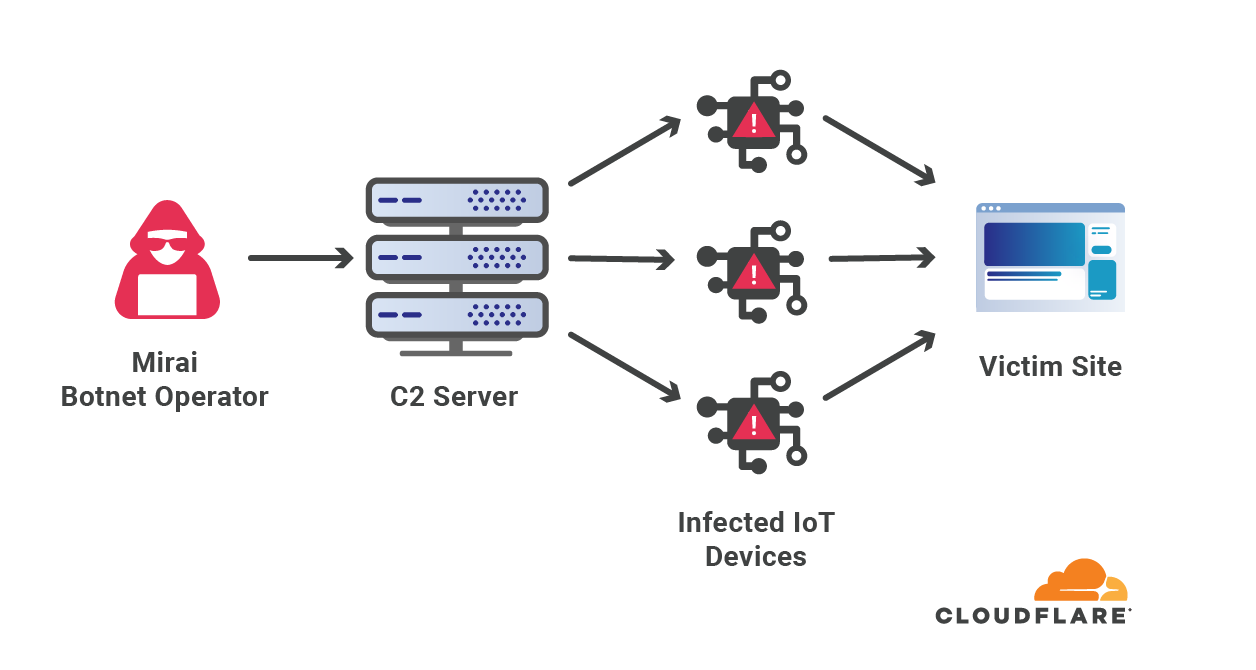

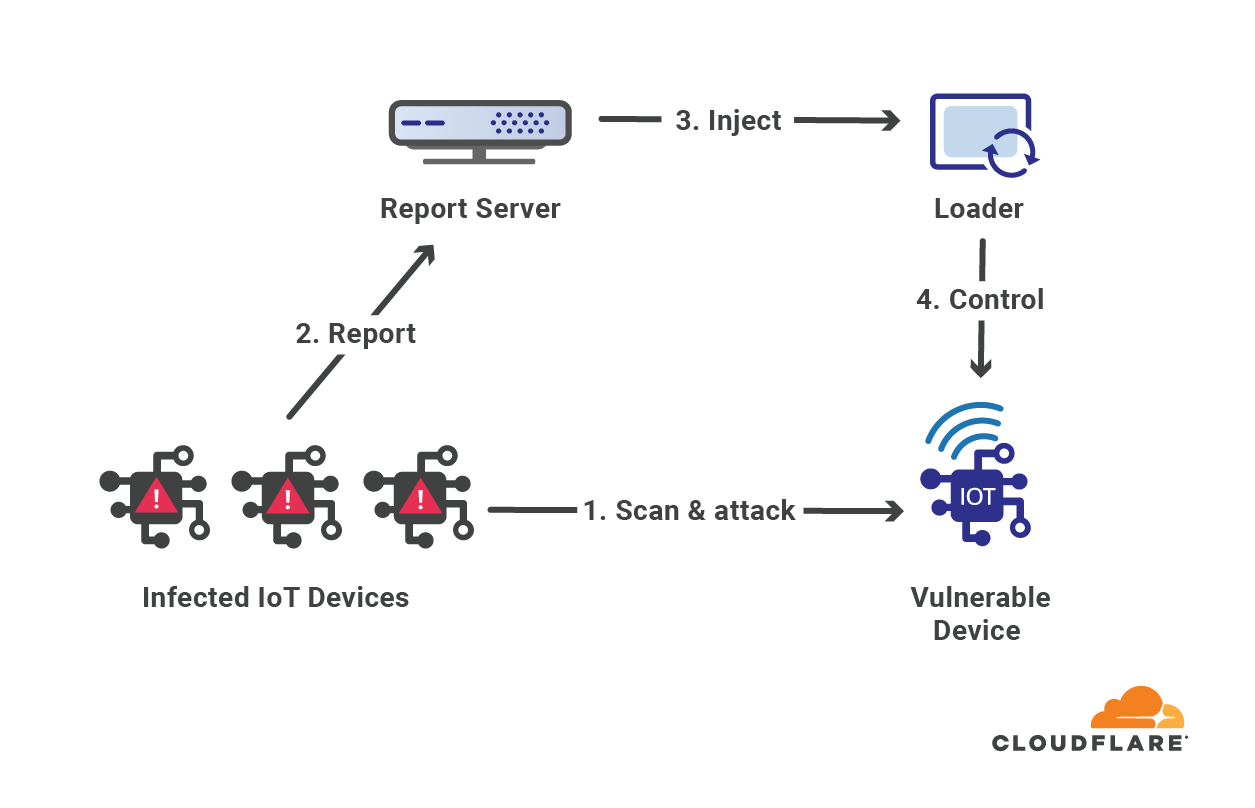

The Mirai botnet, infamous for its massive DDoS attacks, was primarily composed of infected IoT devices. It notably disrupted Internet access across the US in 2016 by targeting DNS service providers. Almost eight years later, Mirai attacks are still very common. Four out of every 100 HTTP DDoS attacks, and two out of every 100 L3/4 DDoS attacks are launched by a Mirai-variant botnet. The reason we say “variant” is that the Mirai source code was made public, and over the years there have been many permutations of the original.

DNS attacks surge by 80%

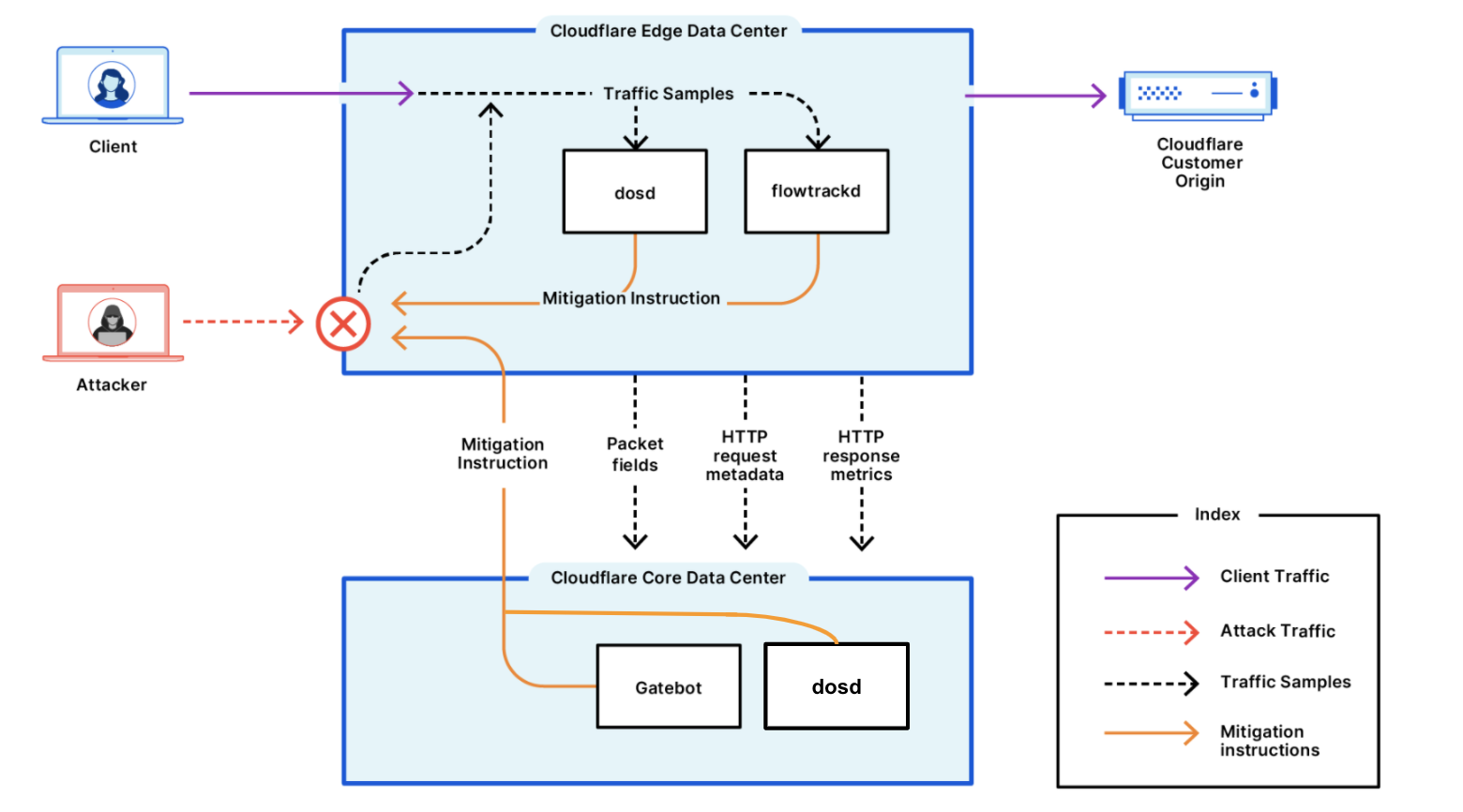

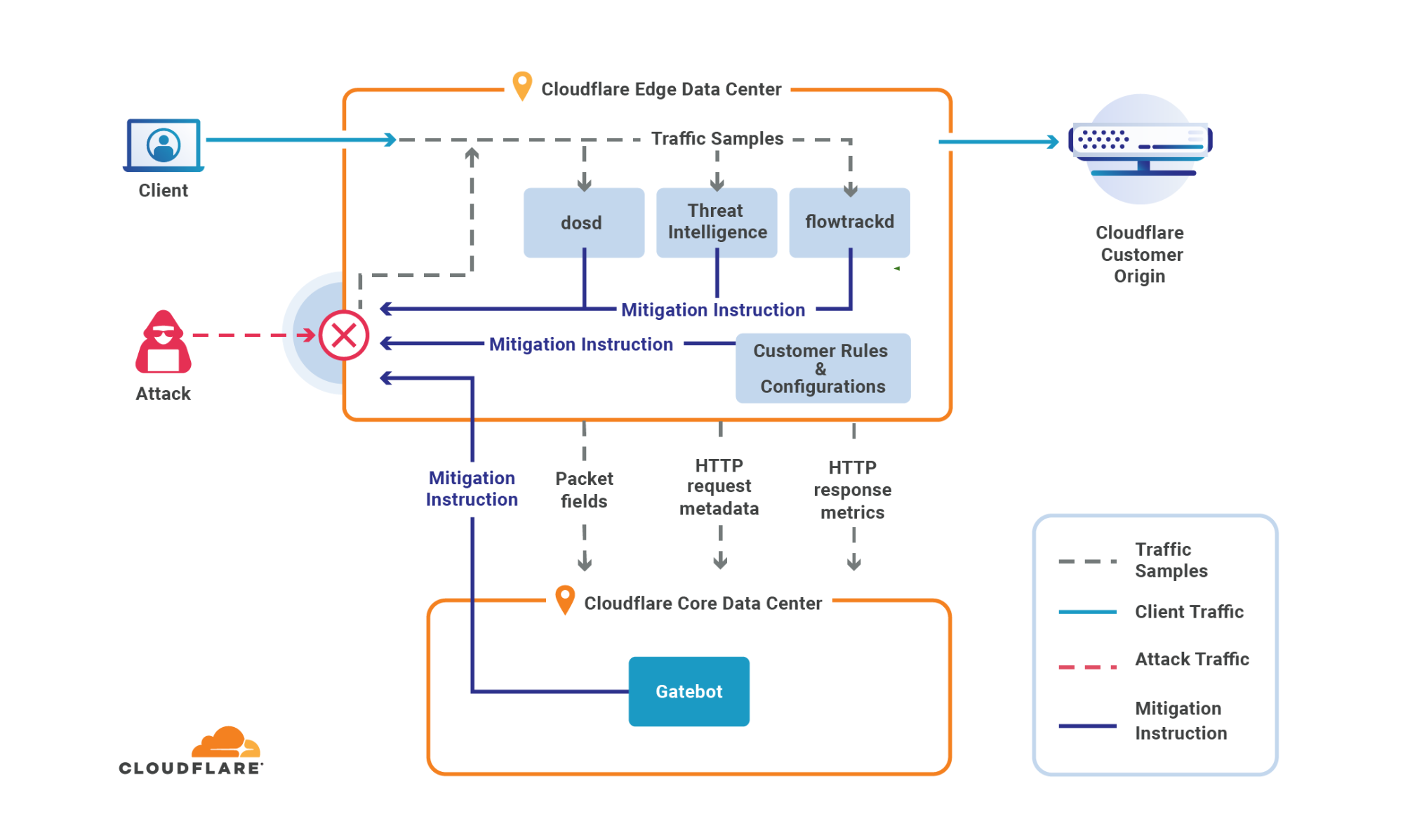

In March 2024, we introduced one of our latest DDoS defense systems, the Advanced DNS Protection system. This system complements our existing systems, and is designed to protect against the most sophisticated DNS-based DDoS attacks.

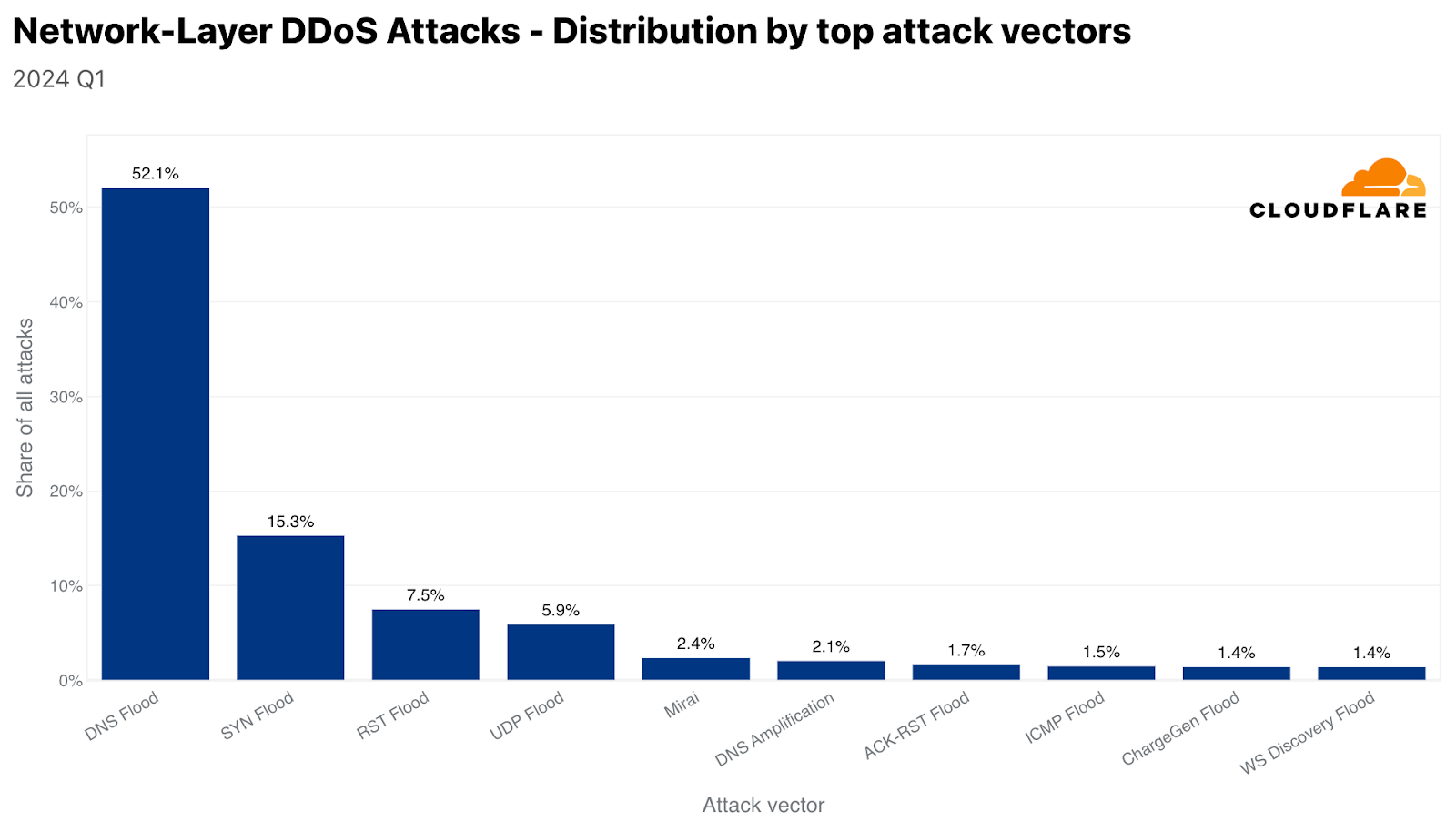

It is not out of the blue that we decided to invest in this new system. DNS-based DDoS attacks have become the most prominent attack vector, and their share among all network-layer attacks continues to grow. In the first quarter of 2024, the share of DNS-based DDoS attacks increased by 80% YoY, growing to approximately 54%.

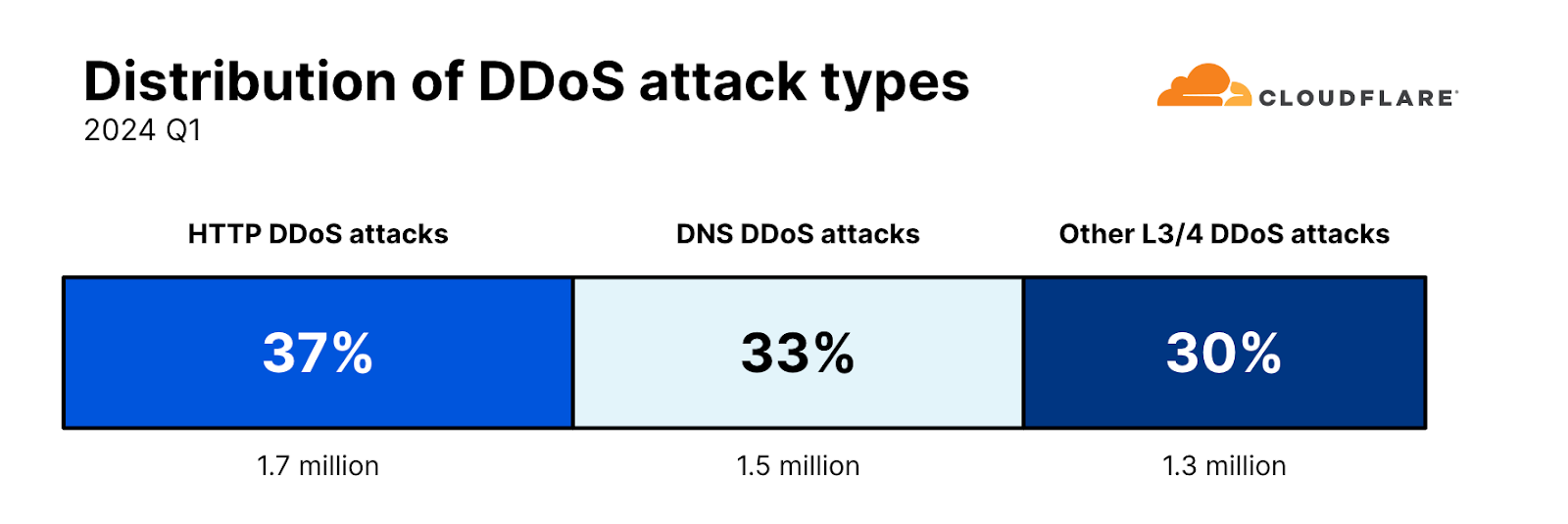

Despite the surge in DNS attacks, and because all types of DDoS attacks increased overall, the share of each attack type, remarkably, remains the same as seen in our previous report for the final quarter of 2023. HTTP DDoS attacks remain at 37% of all DDoS attacks, DNS DDoS attacks at 33%, and the remaining 30% is left for all other types of L3/4 attacks, such as SYN Floods and UDP Floods.

And in fact, SYN Floods were the second most common L3/4 attack. The third was RST Floods, another type of TCP-based DDoS attack. UDP Floods came in fourth with a 6% share.

When analyzing the most common attack vectors, we also check for the attack vectors that experienced the largest growth but didn’t necessarily make it into the top ten list. Among the top growing attack vectors (emerging threats), Jenkins Flood experienced the largest growth of over 826% QoQ.

Jenkins Flood is a DDoS attack that exploits vulnerabilities in the Jenkins automation server, specifically through UDP multicast/broadcast and DNS multicast services. Attackers can send small, specially crafted requests to a publicly facing UDP port on Jenkins servers, causing them to respond with disproportionately large amounts of data. This can amplify the traffic volume significantly, overwhelming the target’s network and leading to service disruption. Jenkins addressed this vulnerability (CVE-2020-2100) in 2020 by disabling these services by default in later versions. However, as we can see, even 4 years later, this vulnerability is still being abused in the wild to launch DDoS attacks.
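To make the amplification idea concrete, the sketch below computes an amplification factor and the resulting reflected traffic rate. The request size, response size, and attacker bandwidth are hypothetical values chosen for readability. They are not measurements of Jenkins Flood traffic.

```python
def amplification_factor(request_bytes: int, response_bytes: int) -> float:
    """Ratio of bytes reflected at the victim to bytes sent by the attacker."""
    if request_bytes <= 0:
        raise ValueError("request_bytes must be positive")
    return response_bytes / request_bytes


# HYPOTHETICAL sizes, for illustration only.
request_bytes = 100       # small crafted UDP request
response_bytes = 10_000   # much larger response sent toward the spoofed source

factor = amplification_factor(request_bytes, response_bytes)

attacker_mbps = 10.0                      # HYPOTHETICAL attacker bandwidth
reflected_mbps = attacker_mbps * factor   # traffic arriving at the victim
print(f"factor: {factor:.0f}x, reflected: {reflected_mbps:.0f} Mbps")
```

The larger the factor, the less bandwidth an attacker needs in order to saturate a target, which is why exposed services that answer small requests with large responses are attractive to abuse.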

HTTP/2 Continuation Flood

Another attack vector that’s worth discussing is the HTTP/2 Continuation Flood. This attack vector is made possible by a vulnerability that was discovered and reported publicly by researcher Bartek Nowotarski on April 3, 2024.

The HTTP/2 Continuation Flood vulnerability targets HTTP/2 protocol implementations that improperly handle HEADERS and multiple CONTINUATION frames. The threat actor sends a sequence of CONTINUATION frames without the END_HEADERS flag, leading to potential server issues such as out-of-memory crashes or CPU exhaustion. HTTP/2 Continuation Flood allows even a single machine to disrupt websites and APIs using HTTP/2, with the added challenge of difficult detection due to no visible requests in HTTP access logs.
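The defensive idea behind most fixes can be sketched as a per-connection guard that caps how many frames and bytes an unfinished header block may accumulate. This is a simplified illustration, not Cloudflare's or any specific server's implementation. The class name, method name, and default limits are assumptions, while the frame type and flag values follow the HTTP/2 specification.

```python
HEADERS = 0x1        # HTTP/2 frame type
CONTINUATION = 0x9   # HTTP/2 frame type
END_HEADERS = 0x4    # flag marking the last frame of a header block


class HeaderBlockTooLarge(Exception):
    """Raised when an unfinished header block exceeds the configured limits."""


class HeaderBlockGuard:
    """Tracks one connection's in-progress header block (hypothetical helper)."""

    def __init__(self, max_bytes: int = 64 * 1024, max_frames: int = 32):
        self.max_bytes = max_bytes      # ASSUMED limit, tune per deployment
        self.max_frames = max_frames    # ASSUMED limit, tune per deployment
        self.open = False
        self.bytes_seen = 0
        self.frames_seen = 0

    def on_frame(self, frame_type: int, flags: int, payload_len: int) -> None:
        if frame_type == HEADERS:
            # A HEADERS frame starts a new header block.
            self.open, self.bytes_seen, self.frames_seen = True, 0, 0
        elif frame_type == CONTINUATION:
            if not self.open:
                raise ValueError("CONTINUATION without an open header block")
        else:
            return  # other frame types are out of scope for this sketch

        self.bytes_seen += payload_len
        self.frames_seen += 1
        if self.bytes_seen > self.max_bytes or self.frames_seen > self.max_frames:
            raise HeaderBlockTooLarge(
                f"{self.frames_seen} frames / {self.bytes_seen} bytes without END_HEADERS"
            )
        if flags & END_HEADERS:
            self.open = False  # header block complete


# Usage: a stream of CONTINUATION frames that never sets END_HEADERS.
guard = HeaderBlockGuard(max_bytes=1024, max_frames=4)
guard.on_frame(HEADERS, 0, 100)
try:
    for _ in range(10):
        guard.on_frame(CONTINUATION, 0, 100)
except HeaderBlockTooLarge as exc:
    print("closing connection:", exc)
```

Because the request is never completed, nothing reaches the access log. A guard like this would therefore typically close the connection and record the event separately.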

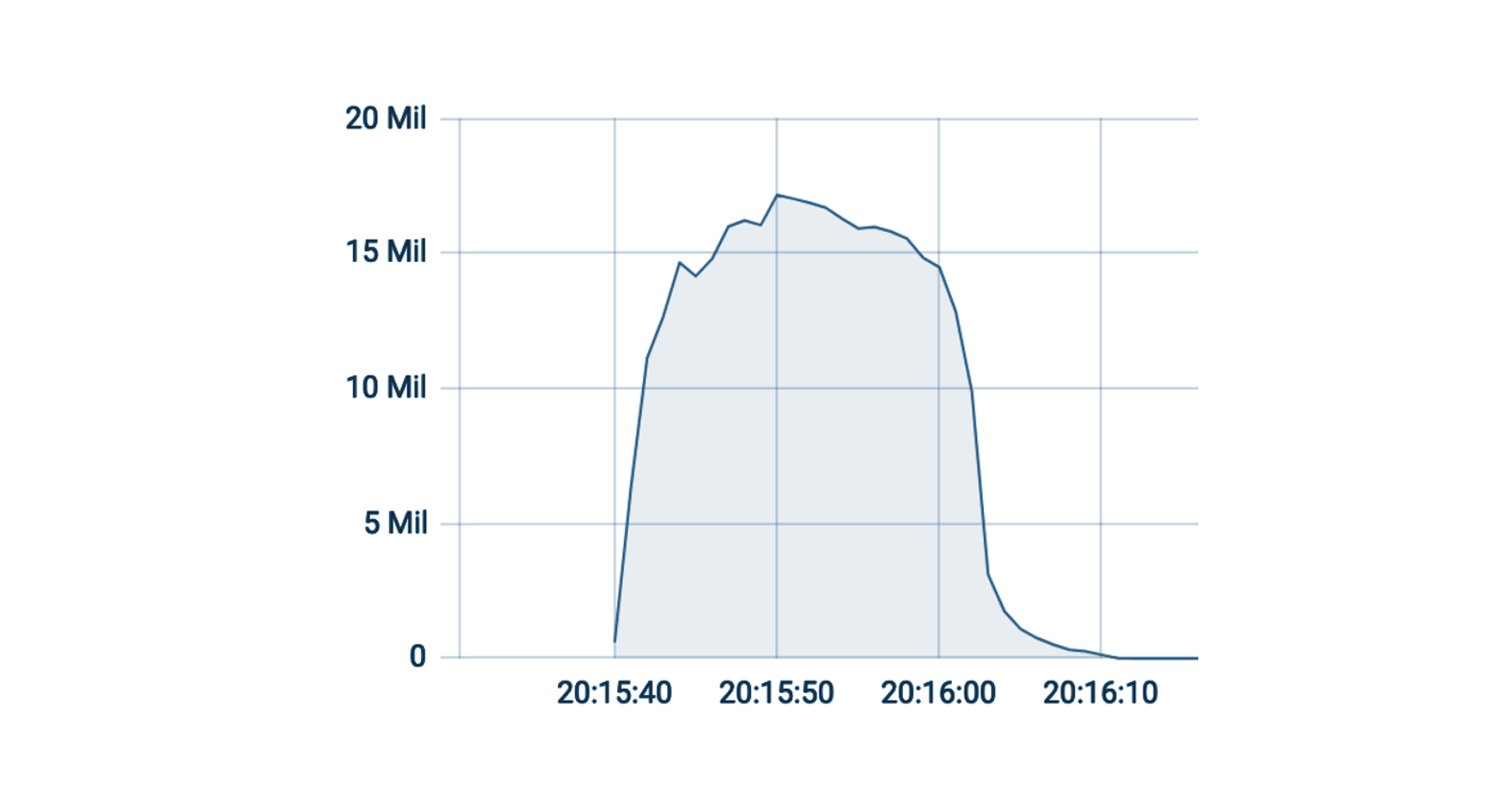

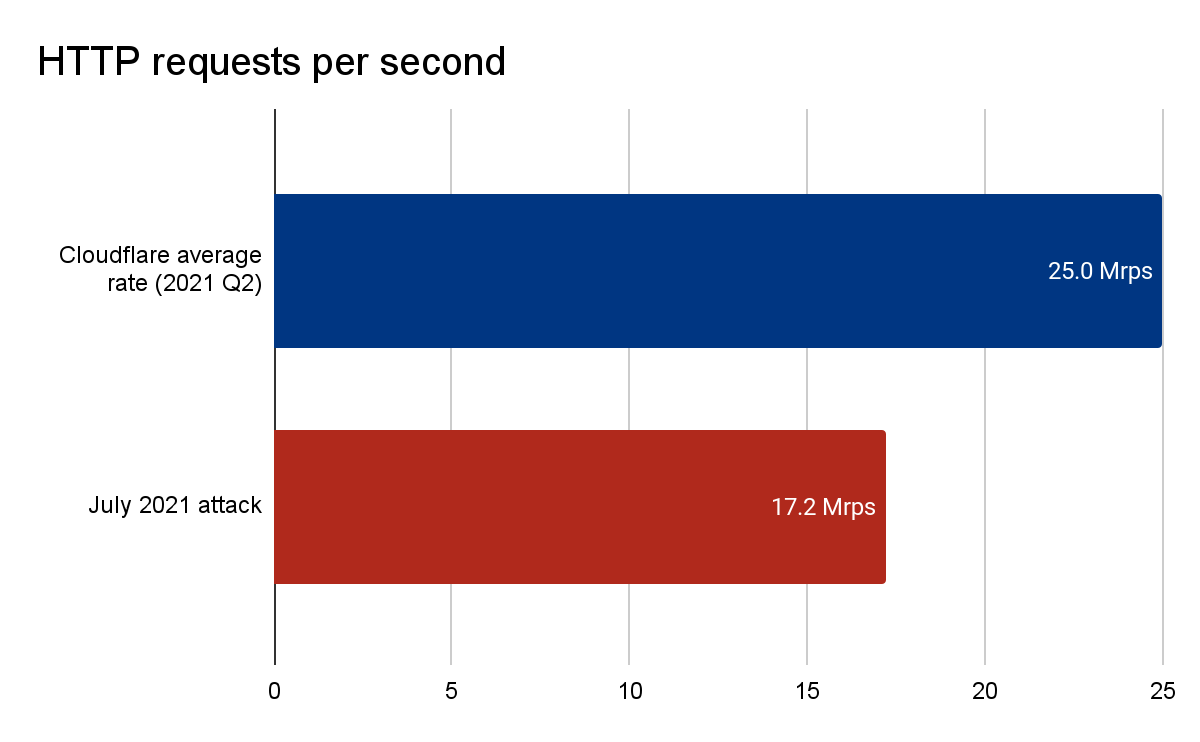

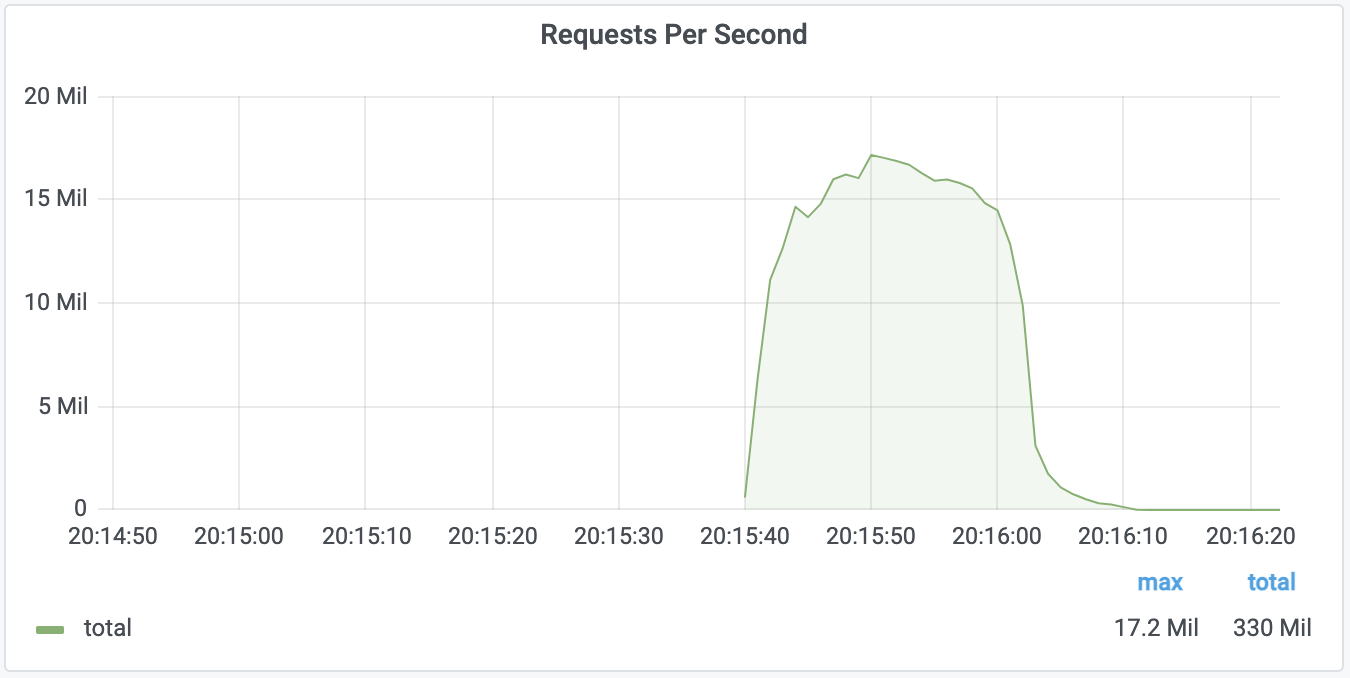

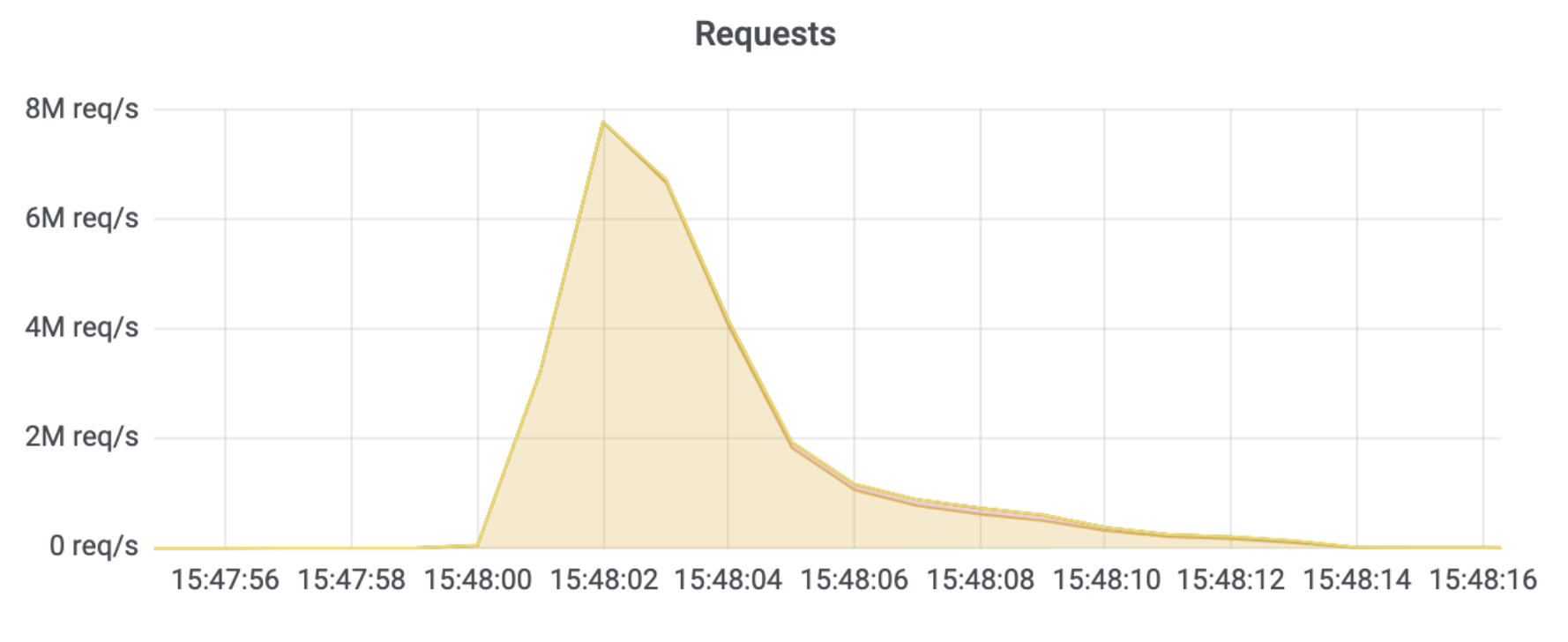

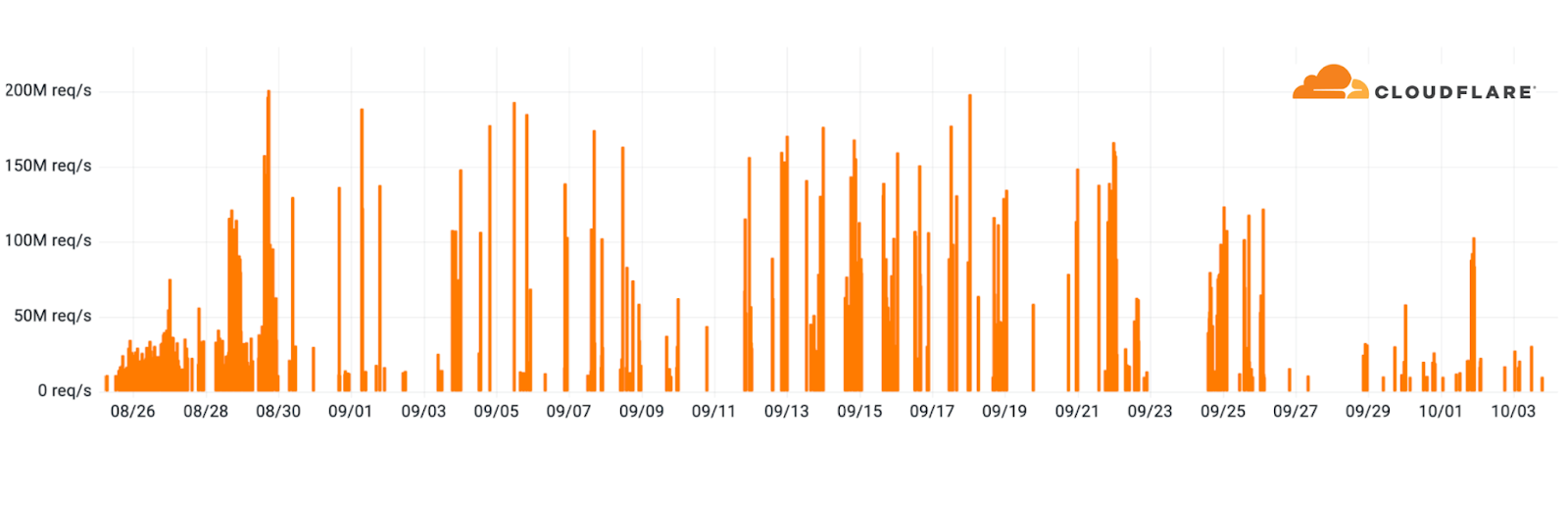

This vulnerability poses a potentially severe threat more damaging than the previously known HTTP/2 Rapid Reset, which resulted in some of the largest HTTP/2 DDoS attack campaigns in recorded history. During that campaign, thousands of hyper-volumetric DDoS attacks targeted Cloudflare. The attacks were multi-million requests per second strong. The average attack rate in that campaign, recorded by Cloudflare, was 30M rps. Approximately 89 of the attacks peaked above 100M rps and the largest one we saw hit 201M rps. Additional coverage was published in our 2023 Q3 DDoS threat report.

Cloudflare’s network, its HTTP/2 implementation, and customers using our WAF/CDN services are not affected by this vulnerability. Furthermore, we are not currently aware of any threat actors exploiting this vulnerability in the wild.

Multiple CVEs have been assigned to the various implementations of HTTP/2 that are impacted by this vulnerability. A CERT alert published by Christopher Cullen at Carnegie Mellon University, which was covered by Bleeping Computer, lists the various CVEs:

| Affected service | CVE | Details |
|---|---|---|
| Node.js HTTP/2 server | CVE-2024-27983 | Sending a few HTTP/2 frames can cause a race condition and memory leak, leading to a potential denial of service event. |
| Envoy’s oghttp codec | CVE-2024-27919 | Not resetting a request when header map limits are exceeded can cause unlimited memory consumption which can potentially lead to a denial of service event. |
| Tempesta FW | CVE-2024-2758 | Its rate limits are not entirely effective against empty CONTINUATION frames flood, potentially leading to a denial of service event. |
| amphp/http | CVE-2024-2653 | It collects CONTINUATION frames in an unbounded buffer, risking an out of memory (OOM) crash if the header size limit is exceeded, potentially resulting in a denial of service event. |
| Go’s net/http and net/http2 packages | CVE-2023-45288 | Allows an attacker to send an arbitrarily large set of headers, causing excessive CPU consumption, potentially leading to a denial of service event. |
| nghttp2 library | CVE-2024-28182 | Involves an implementation using nghttp2 library, which continues to receive CONTINUATION frames, potentially leading to a denial of service event without proper stream reset callback. |
| Apache Httpd | CVE-2024-27316 | A flood of CONTINUATION frames without the END_HEADERS flag set can be sent, resulting in the improper termination of requests, potentially leading to a denial of service event. |
| Apache Traffic Server | CVE-2024-31309 | HTTP/2 CONTINUATION floods can cause excessive resource consumption on the server, potentially leading to a denial of service event. |
| Envoy versions 1.29.2 or earlier | CVE-2024-30255 | Consumption of significant server resources can lead to CPU exhaustion during a flood of CONTINUATION frames, which can potentially lead to a denial of service event. |

Top attacked industries

When analyzing attack statistics, we use our customers’ industry as it is recorded in our systems to determine the most attacked industries. In the first quarter of 2024, the top attacked industry by HTTP DDoS attacks in North America was Marketing and Advertising. In Africa and Europe, the Information Technology and Internet industry was the most attacked. In the Middle East, the most attacked industry was Computer Software. In Asia, the most attacked industry was Gaming and Gambling. In South America, it was the Banking, Financial Services and Insurance (BFSI) industry. Last but not least, in Oceania, it was the Telecommunications industry.

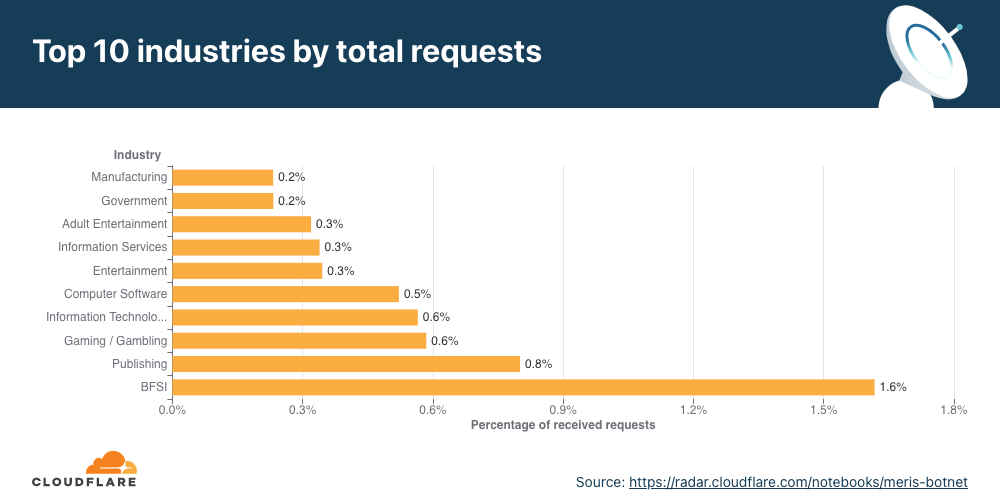

Globally, the Gaming and Gambling industry was the number one most targeted by HTTP DDoS attacks. Just over seven of every 100 DDoS requests that Cloudflare mitigated were aimed at the Gaming and Gambling industry. In second place, the Information Technology and Internet industry, and in third, Marketing and Advertising.

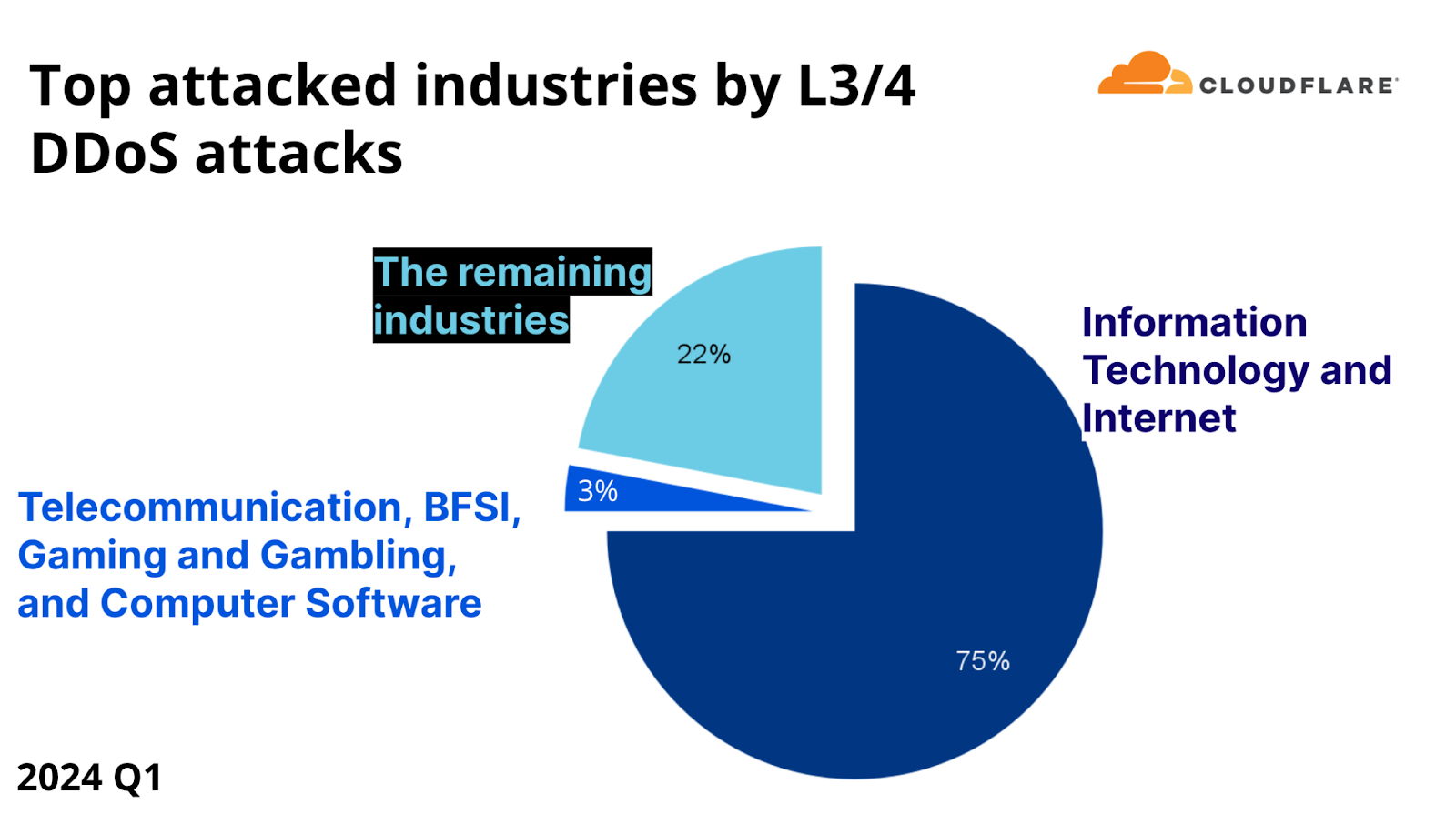

With a share of 75% of all network-layer DDoS attack bytes, the Information Technology and Internet industry was the most targeted by network-layer DDoS attacks. One possible explanation for this large share is that Information Technology and Internet companies may be “super aggregators” of attacks and receive DDoS attacks that are actually targeting their end customers. The Telecommunications industry, the Banking, Financial Services and Insurance (BFSI) industry, the Gaming and Gambling industry and the Computer Software industry accounted for the next three percent.

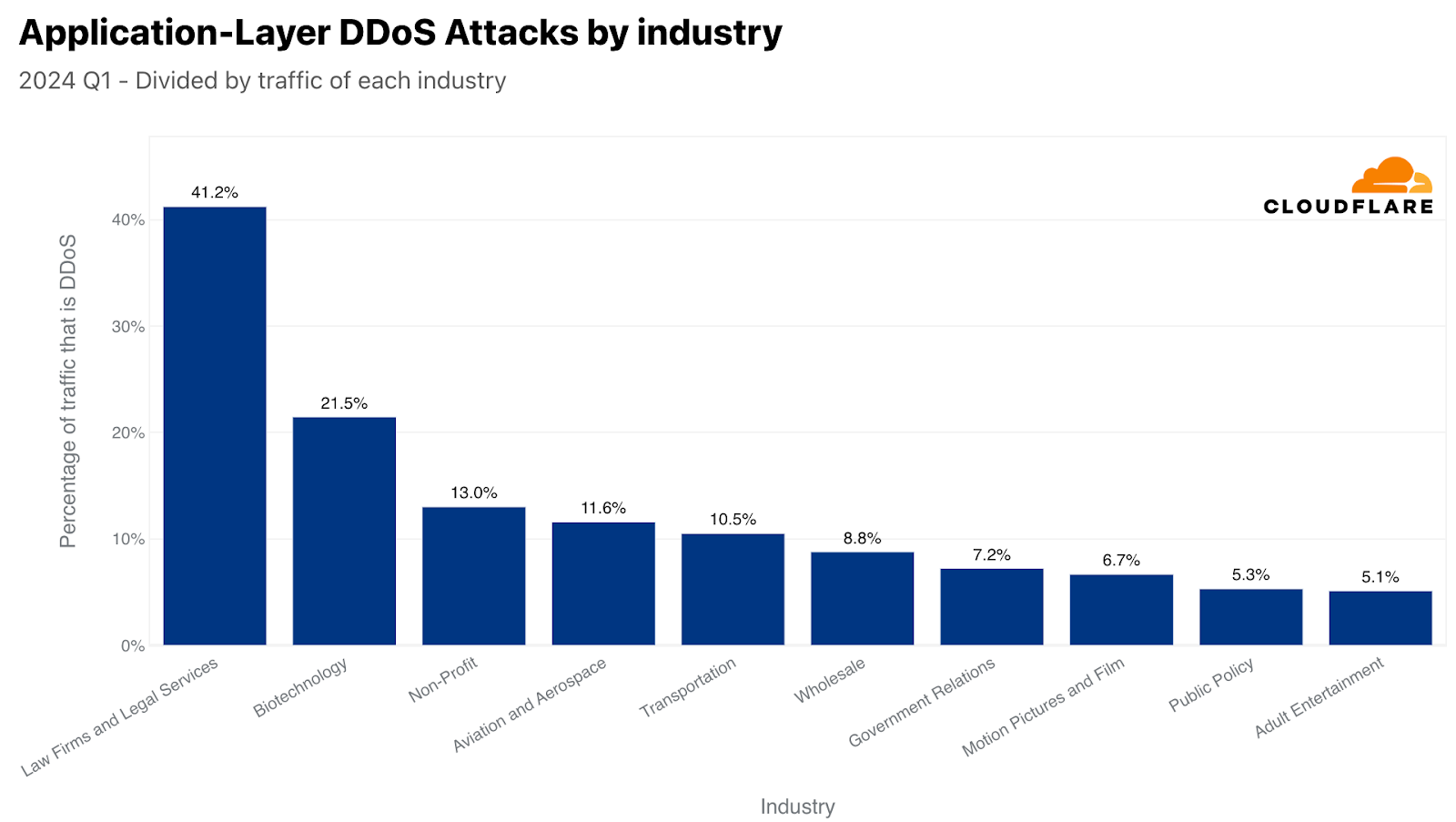

When normalizing the data by dividing the attack traffic by the total traffic to a given industry, we get a completely different picture. On the HTTP front, Law Firms and Legal Services was the most attacked industry, as over 40% of their traffic was HTTP DDoS attack traffic. The Biotechnology industry came in second with a 20% share of HTTP DDoS attack traffic. In third place, Nonprofits had an HTTP DDoS attack share of 13%. In fourth, Aviation and Aerospace, followed by Transportation, Wholesale, Government Relations, Motion Pictures and Film, Public Policy, and Adult Entertainment to complete the top ten.
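The normalization described above can be sketched as follows. The industry names and request counts are placeholders chosen to show how the ranking can flip. They are not Cloudflare data.

```python
def attack_share(attack: float, total: float) -> float:
    """Attack traffic as a percentage of all traffic to an industry."""
    return attack / total * 100.0 if total else 0.0


# HYPOTHETICAL (attack_requests, total_requests) per industry.
traffic = {
    "Industry A": (400, 1_000),
    "Industry B": (5_000, 100_000),
    "Industry C": (200, 1_000),
}

# Ranking by absolute attack volume.
by_volume = sorted(traffic, key=lambda name: traffic[name][0], reverse=True)

# Ranking by normalized share (attack traffic / total traffic).
by_share = sorted(traffic, key=lambda name: attack_share(*traffic[name]), reverse=True)

print("by volume:", by_volume)
print("by share: ", by_share)
```

An industry with modest absolute attack volume can still top the normalized list when its legitimate traffic is small, which is why the two rankings differ so much.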

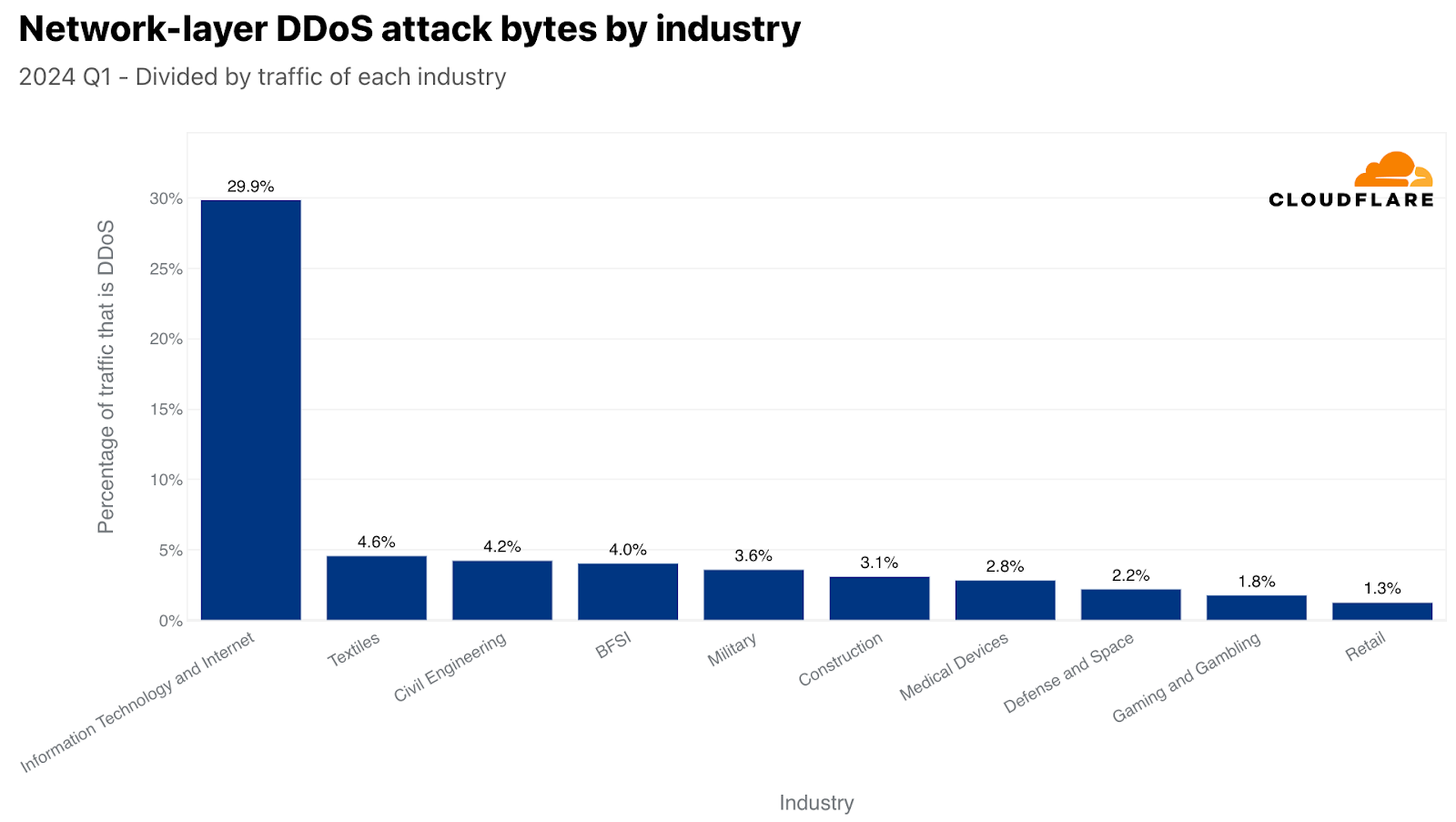

Back to the network layer, when normalized, Information Technology and Internet remained the number one most targeted industry by L3/4 DDoS attacks, as almost a third of their traffic was attack traffic. In second, Textiles had a 4% attack share. In third, Civil Engineering, followed by Banking, Financial Services and Insurance (BFSI), Military, Construction, Medical Devices, Defense and Space, Gaming and Gambling, and lastly Retail to complete the top ten.

Largest sources of DDoS attacks

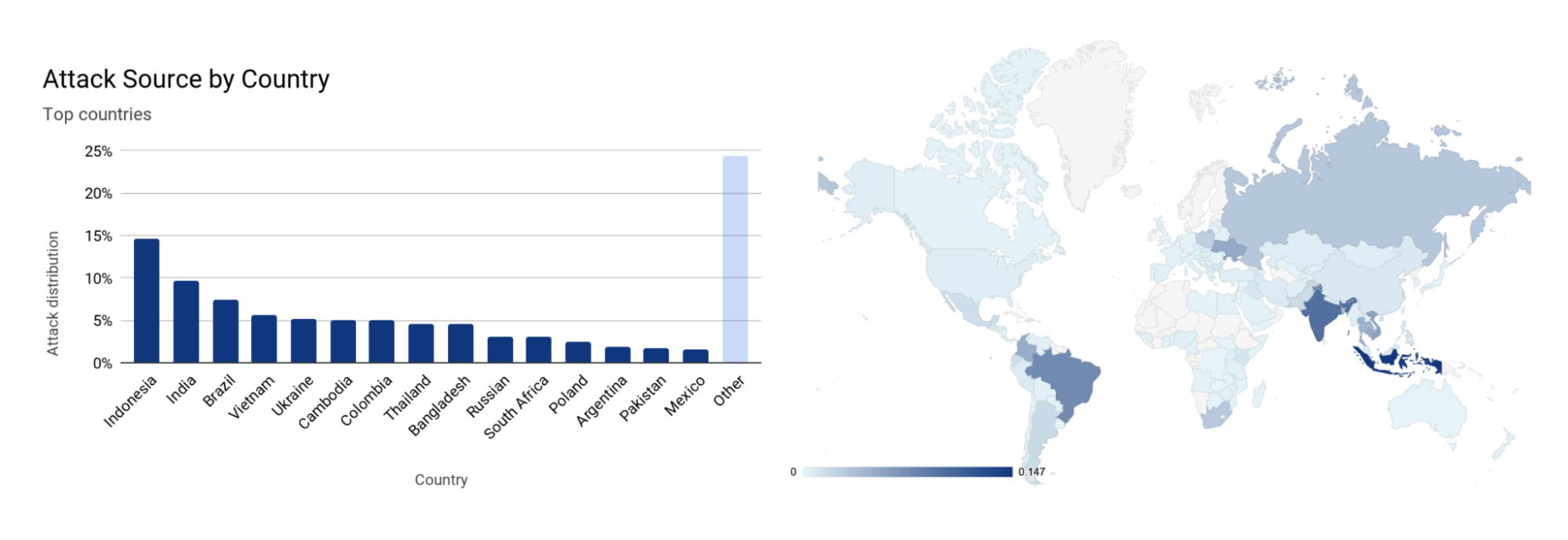

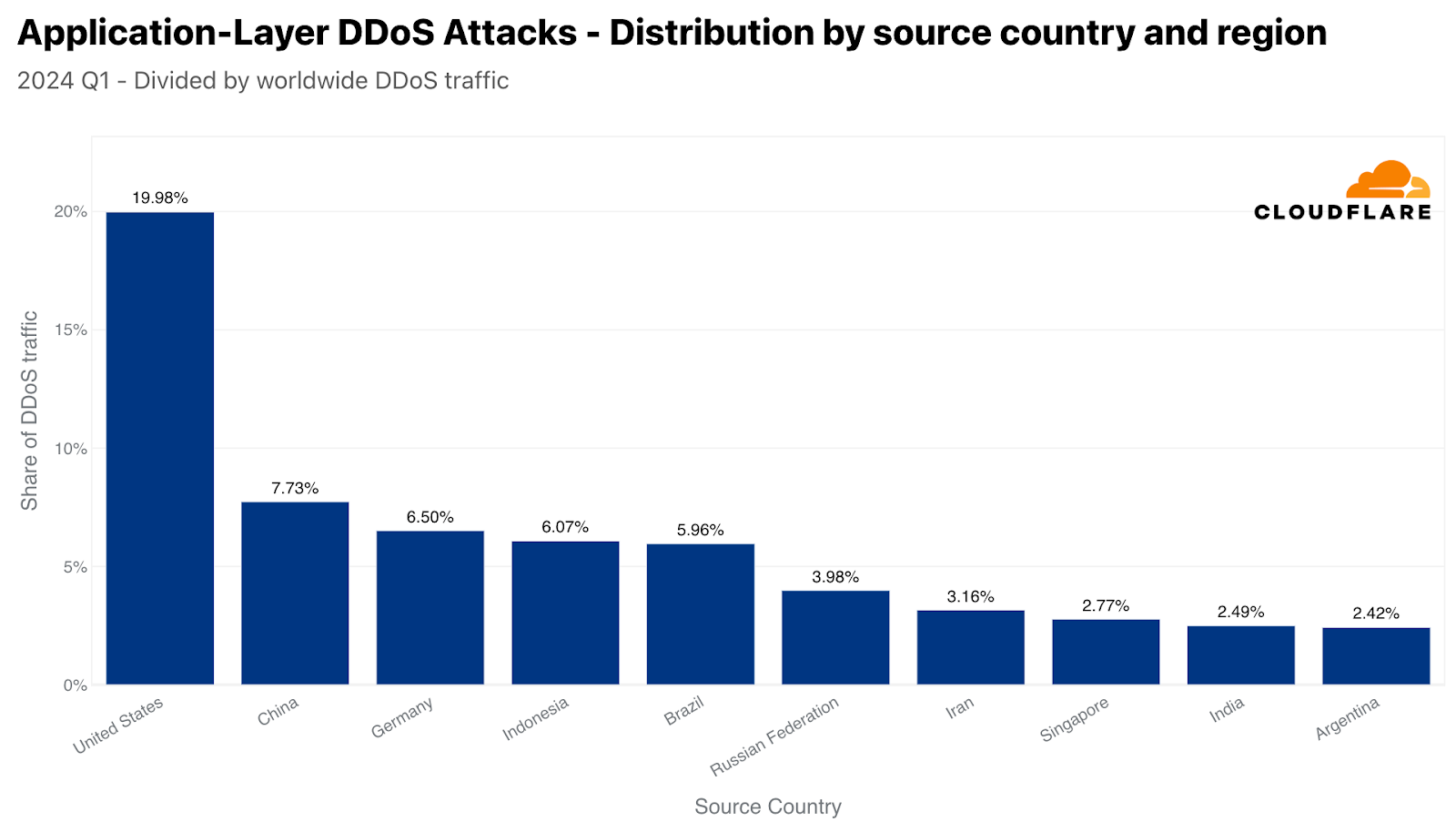

When analyzing the sources of HTTP DDoS attacks, we look at the source IP address to determine the origination location of those attacks. A country/region that’s a large source of attacks indicates that there is most likely a large presence of botnet nodes behind Virtual Private Network (VPN) or proxy endpoints that attackers may use to obfuscate their origin.

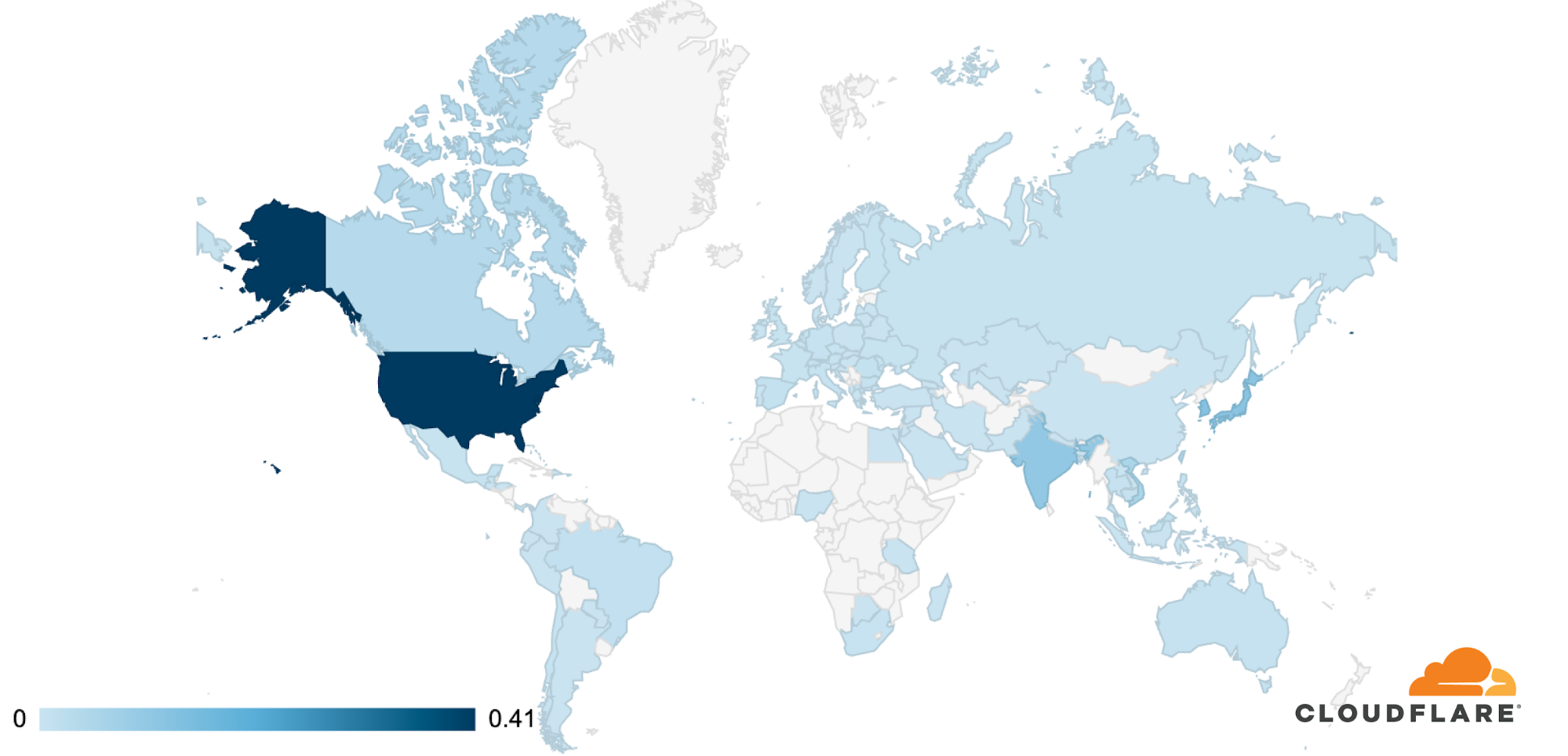

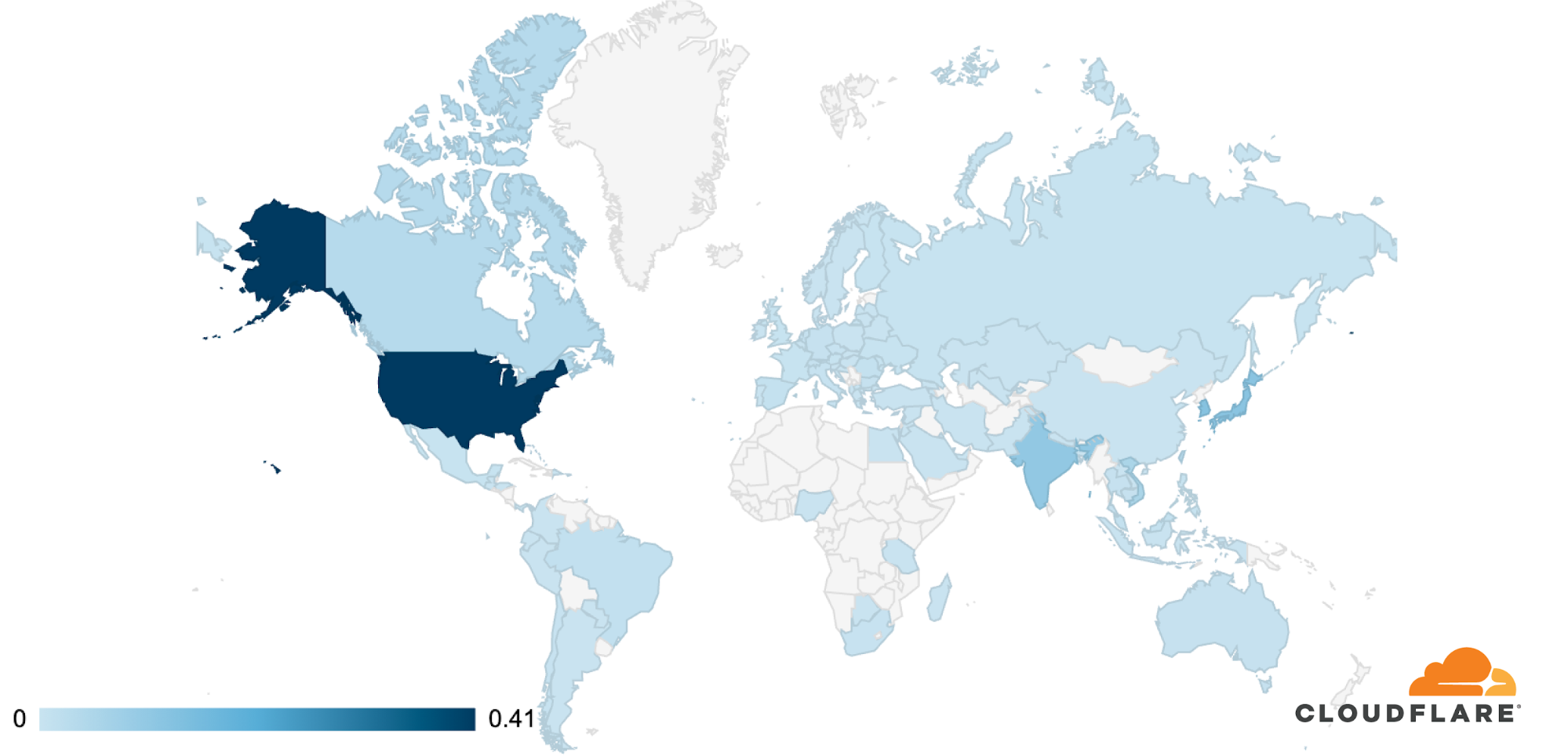

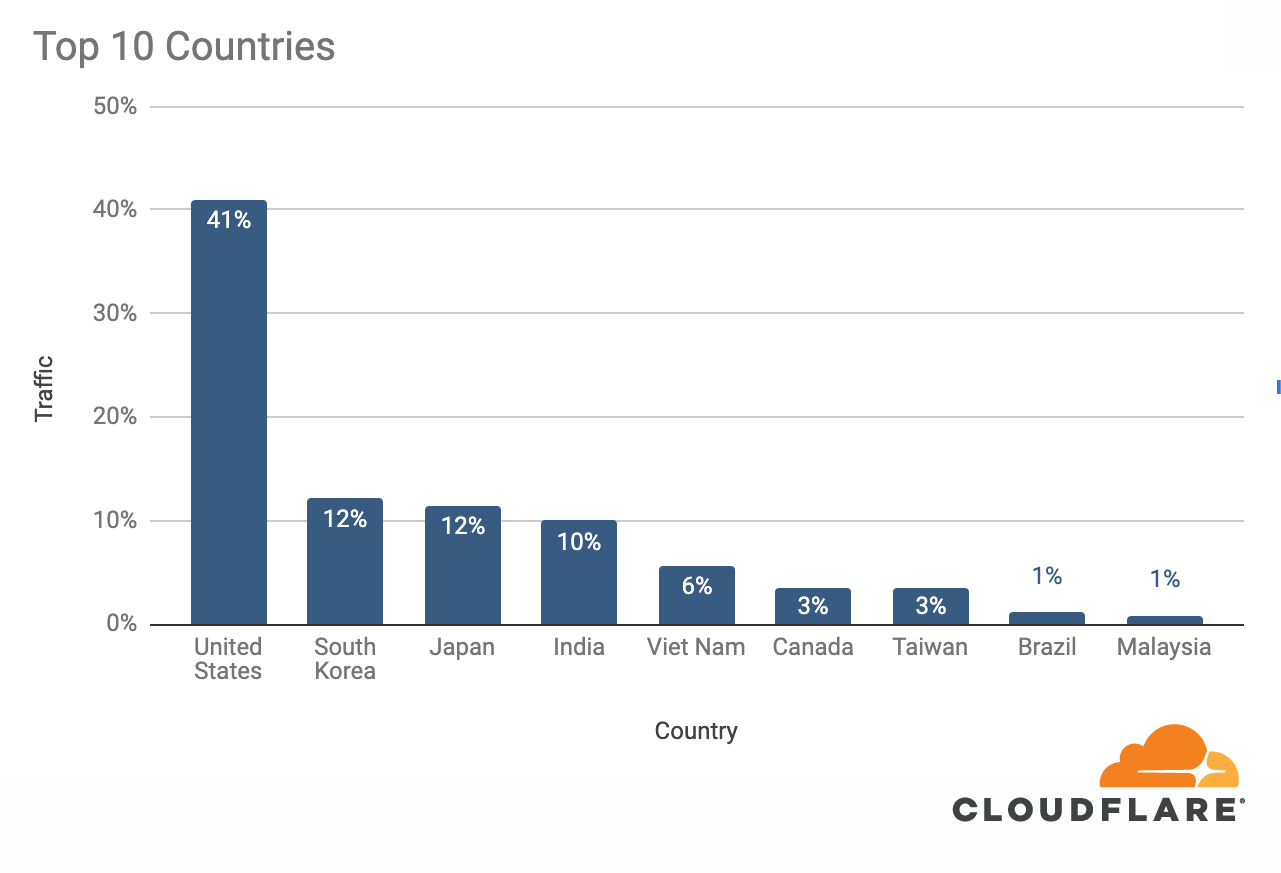

In the first quarter of 2024, the United States was the largest source of HTTP DDoS attack traffic, as a fifth of all DDoS attack requests originated from US IP addresses. China came in second, followed by Germany, Indonesia, Brazil, Russia, Iran, Singapore, India, and Argentina.

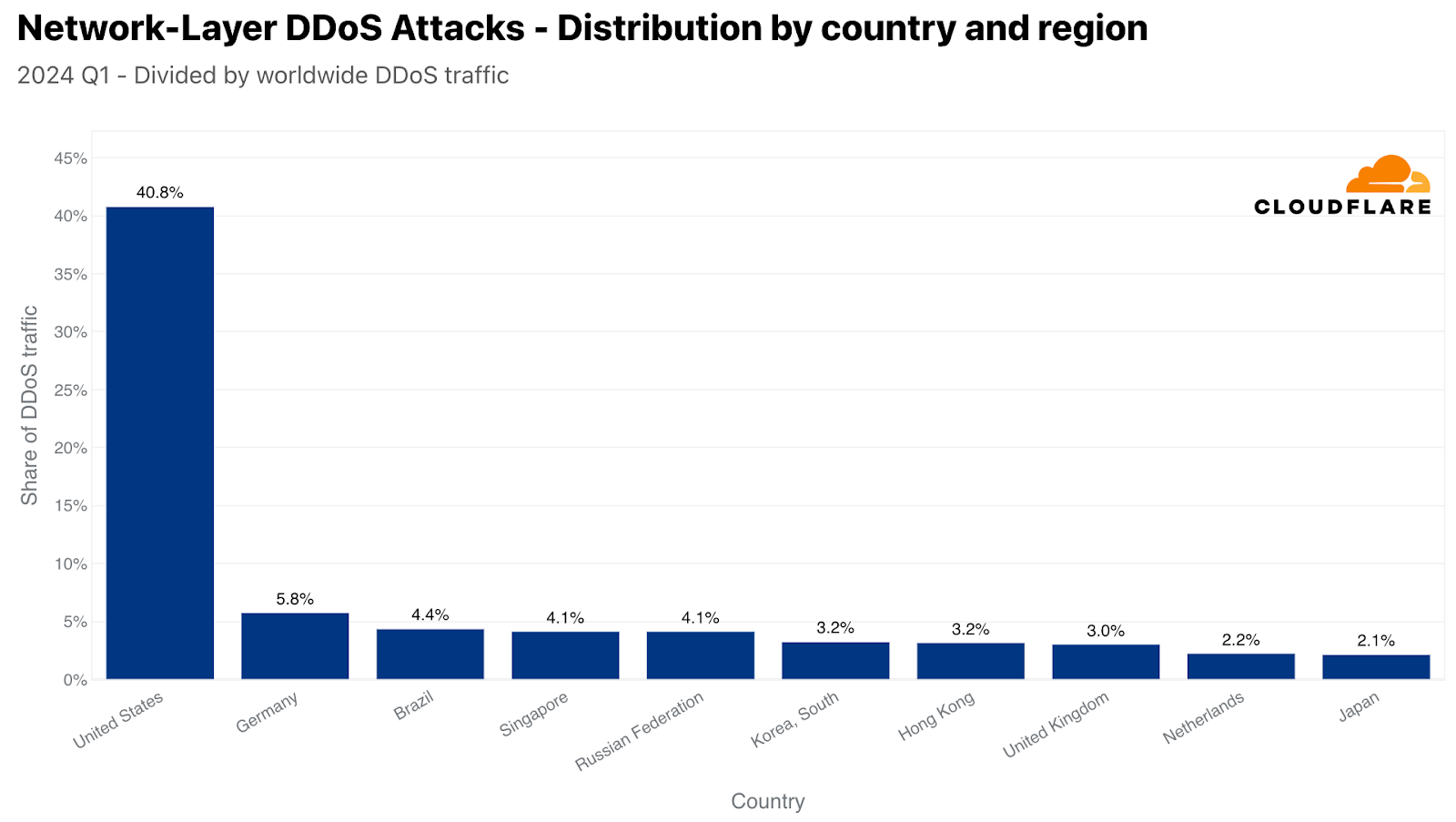

At the network layer, source IP addresses can be spoofed. So, instead of relying on IP addresses to understand the source, we use the location of our data centers where the attack traffic was ingested. We can gain geographical accuracy due to Cloudflare’s large global coverage in over 310 cities around the world.

Using the location of our data centers, we can see that in the first quarter of 2024, over 40% of L3/4 DDoS attack traffic was ingested in our US data centers, making the US the largest source of L3/4 attacks. Far behind, in second, Germany at 6%, followed by Brazil, Singapore, Russia, South Korea, Hong Kong, United Kingdom, Netherlands, and Japan.

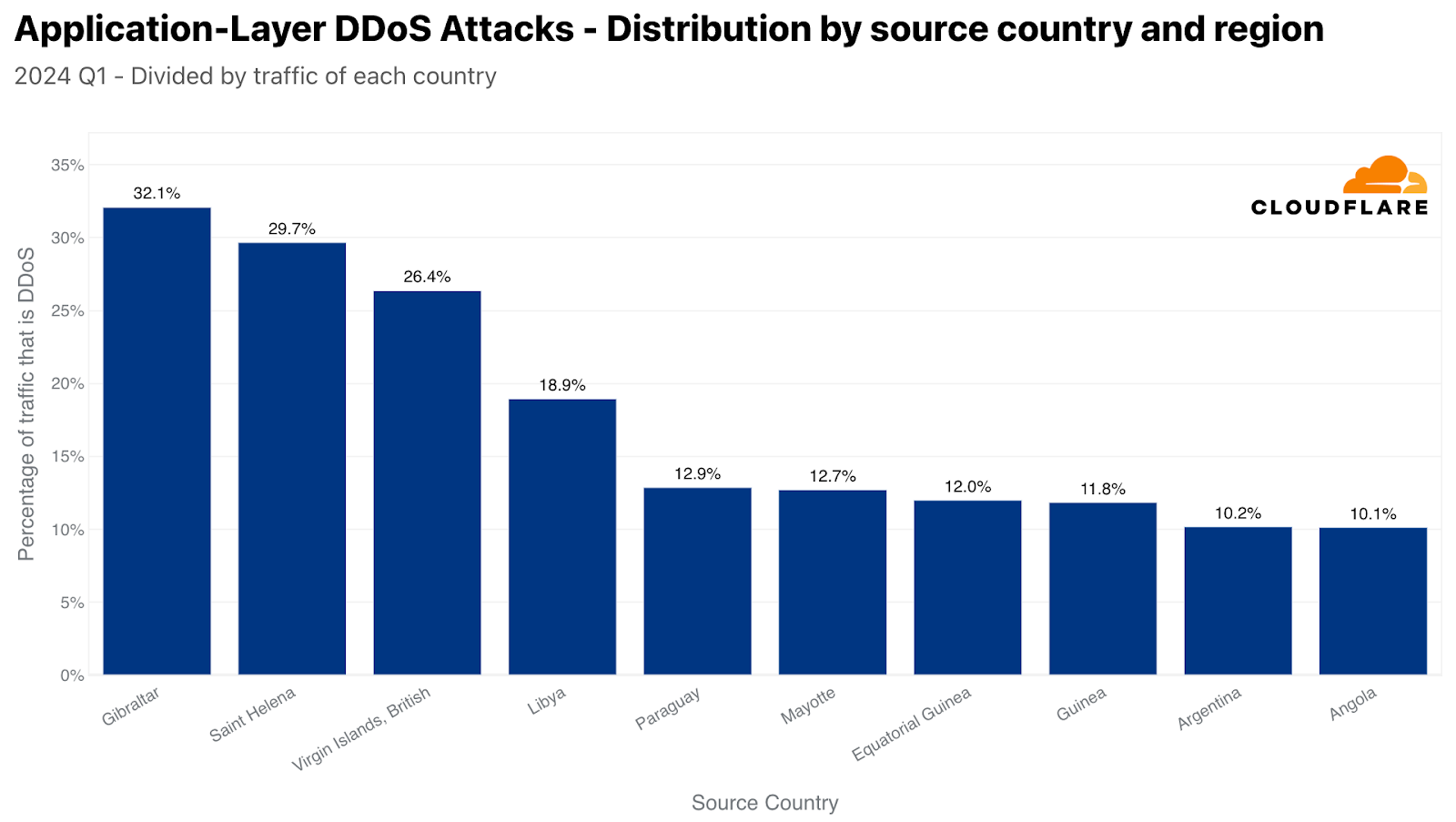

When normalizing the data by dividing the attack traffic by the total traffic to a given country or region, we get a totally different lineup. Almost a third of the HTTP traffic originating from Gibraltar was DDoS attack traffic, making it the largest source. In second place, Saint Helena, followed by the British Virgin Islands, Libya, Paraguay, Mayotte, Equatorial Guinea, Argentina, and Angola.

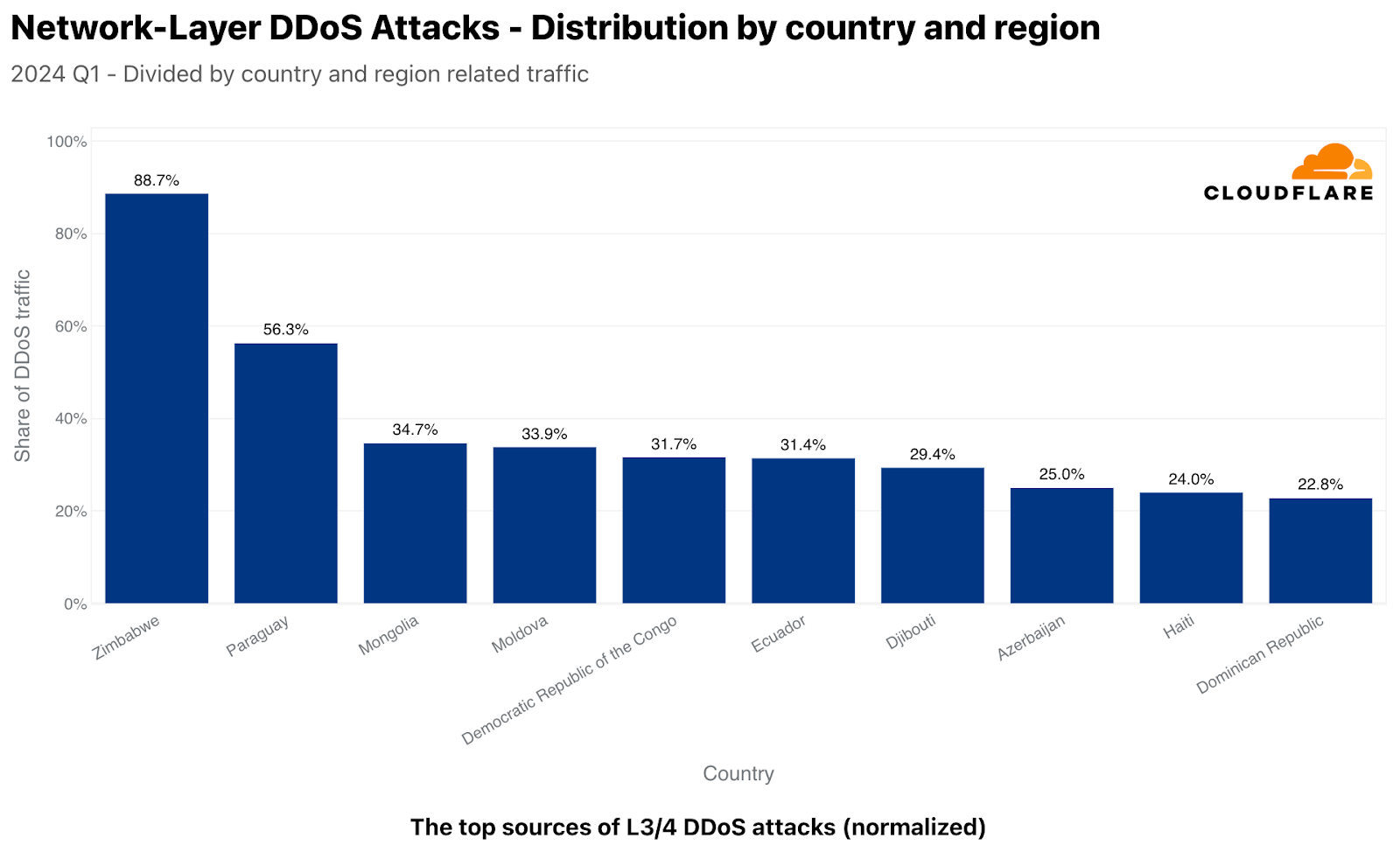

Back to the network layer, normalized, things look rather different as well. Almost 89% of the traffic we ingested in our Zimbabwe-based data centers was L3/4 DDoS attack traffic. In Paraguay, it was over 56%, followed by Mongolia reaching nearly a 35% attack share. Additional top locations included Moldova, Democratic Republic of the Congo, Ecuador, Djibouti, Azerbaijan, Haiti, and Dominican Republic.

Most attacked locations

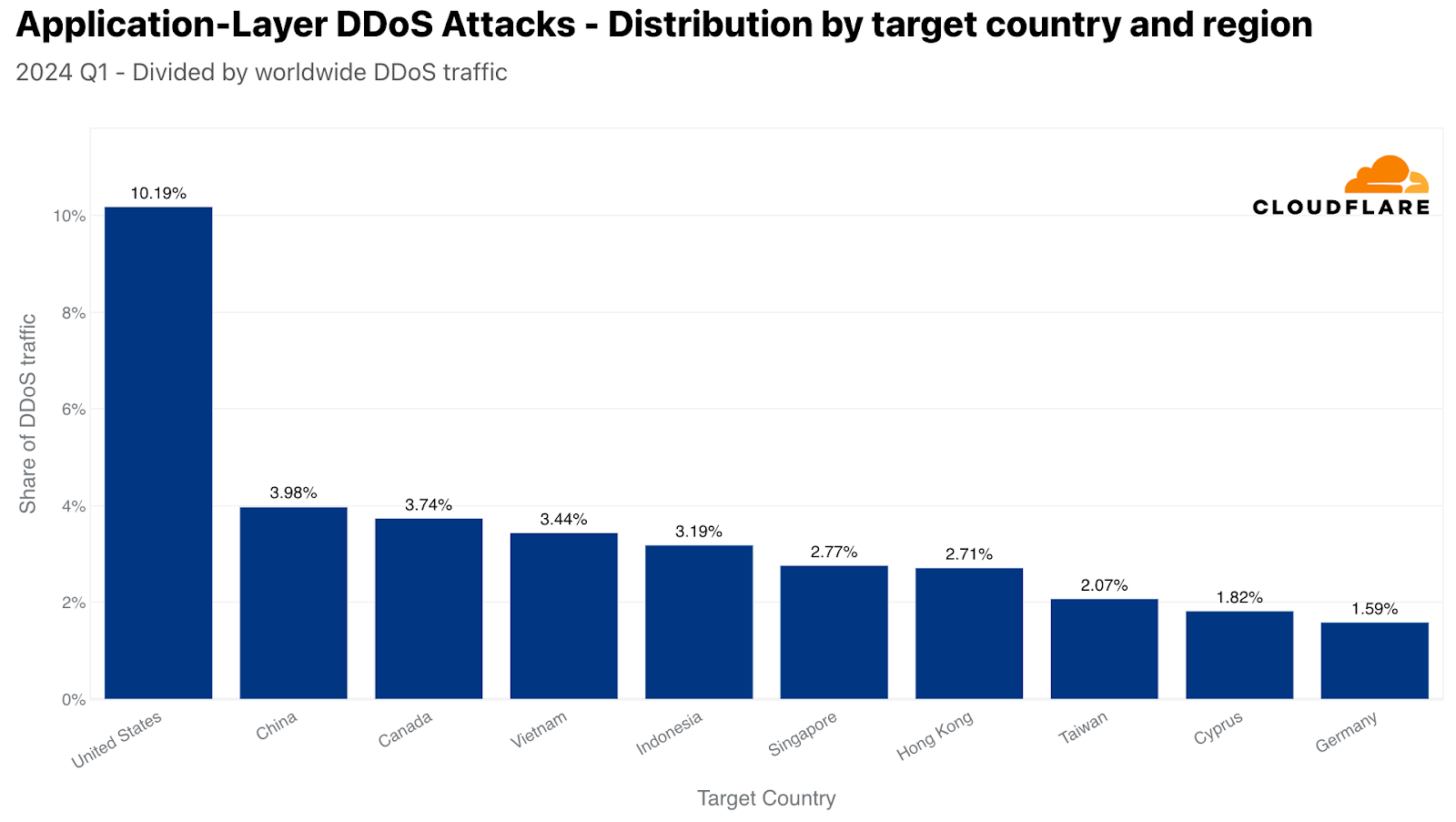

When analyzing DDoS attacks against our customers, we use their billing country to determine the “attacked country (or region)”. In the first quarter of 2024, the US was the most attacked by HTTP DDoS attacks. Approximately one out of every 10 DDoS requests that Cloudflare mitigated targeted the US. In second, China, followed by Canada, Vietnam, Indonesia, Singapore, Hong Kong, Taiwan, Cyprus, and Germany.

When normalizing the data by dividing the attack traffic by the total traffic to a given country or region, the list changes drastically. Over 63% of HTTP traffic to Nicaragua was DDoS attack traffic, making it the most attacked location. In second, Albania, followed by Jordan, Guinea, San Marino, Georgia, Indonesia, Cambodia, Bangladesh, and Afghanistan.

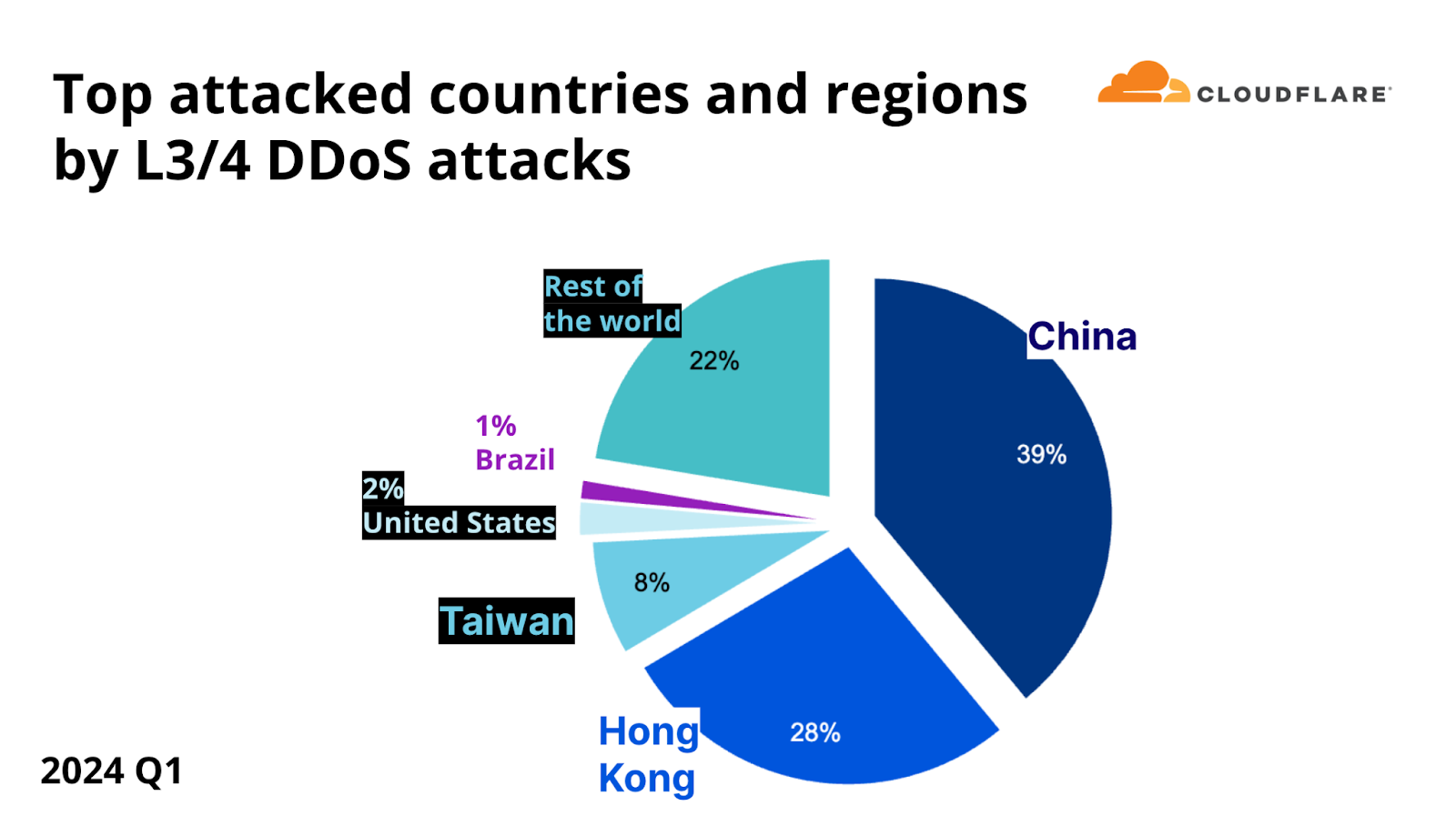

On the network layer, China was the number one most attacked location, as 39% of all DDoS bytes that Cloudflare mitigated during the first quarter of 2024 were aimed at Cloudflare’s Chinese customers. Hong Kong came in second place, followed by Taiwan, the United States, and Brazil.

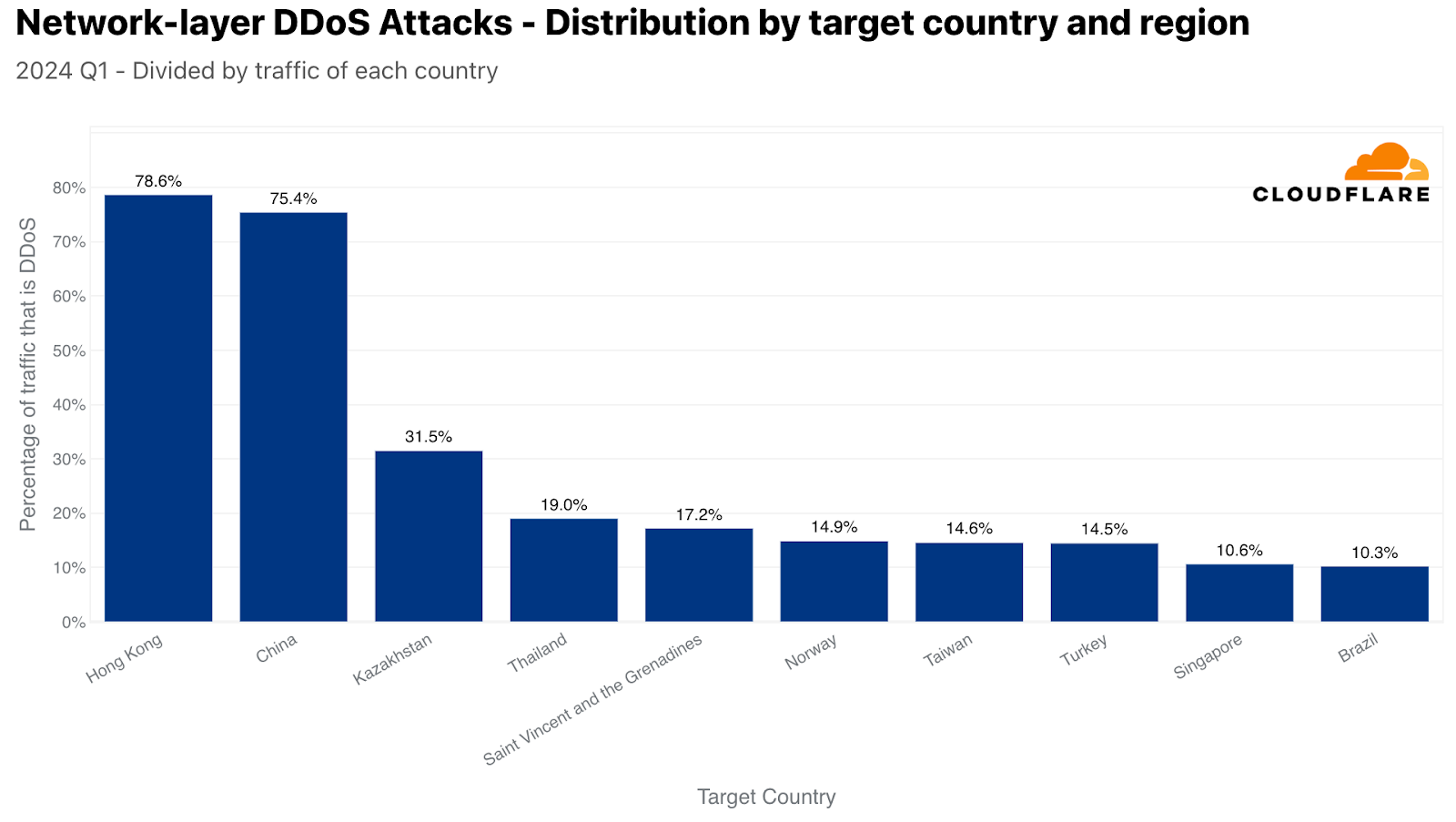

Back to the network layer, when normalized, Hong Kong takes the lead as the most targeted location. L3/4 DDoS attack traffic accounted for over 78% of all Hong Kong-bound traffic. In second place, China with a DDoS share of 75%, followed by Kazakhstan, Thailand, Saint Vincent and the Grenadines, Norway, Taiwan, Turkey, Singapore, and Brazil.

Cloudflare is here to help – no matter the attack type, size, or duration

Cloudflare’s mission is to help build a better Internet, a vision where it remains secure, performant, and accessible to everyone. With four out of every 10 HTTP DDoS attacks lasting over 10 minutes and approximately three out of 10 extending beyond an hour, the challenge is substantial. Yet, whether an attack involves over 100,000 requests per second, as is the case in one out of every 10 attacks, or even exceeds a million requests per second — a rarity seen in only four out of every 1,000 attacks — Cloudflare’s defenses remain impenetrable.

Since pioneering unmetered DDoS Protection in 2017, Cloudflare has steadfastly honored its promise to provide enterprise-grade DDoS protection at no cost to all organizations, ensuring that our advanced technology and robust network architecture do not just fend off attacks but also preserve performance without compromise.