Post Syndicated from Hannes Gerhart original https://blog.cloudflare.com/foundation-dns-launch

We are very excited to announce the official release of Foundation DNS, with new advanced nameservers, even more resilience, and advanced analytics to meet the complex requirements of our enterprise customers. Foundation DNS is one of Cloudflare’s largest leaps forward in our authoritative DNS offering since its launch in 2010, and we know our customers are interested in an enterprise-ready authoritative DNS service with the highest level of performance, reliability, security, flexibility, and advanced analytics.

Starting today, every new enterprise contract that includes authoritative DNS will have access to the Foundation DNS feature set and existing enterprise customers will have Foundation DNS features made available to them over the course of this year. If you are an existing enterprise customer already using our authoritative DNS services, and you’re interested in getting your hands on Foundation DNS earlier, just reach out to your account team, and they can enable it for you. Let’s get started…

Why is DNS so important?

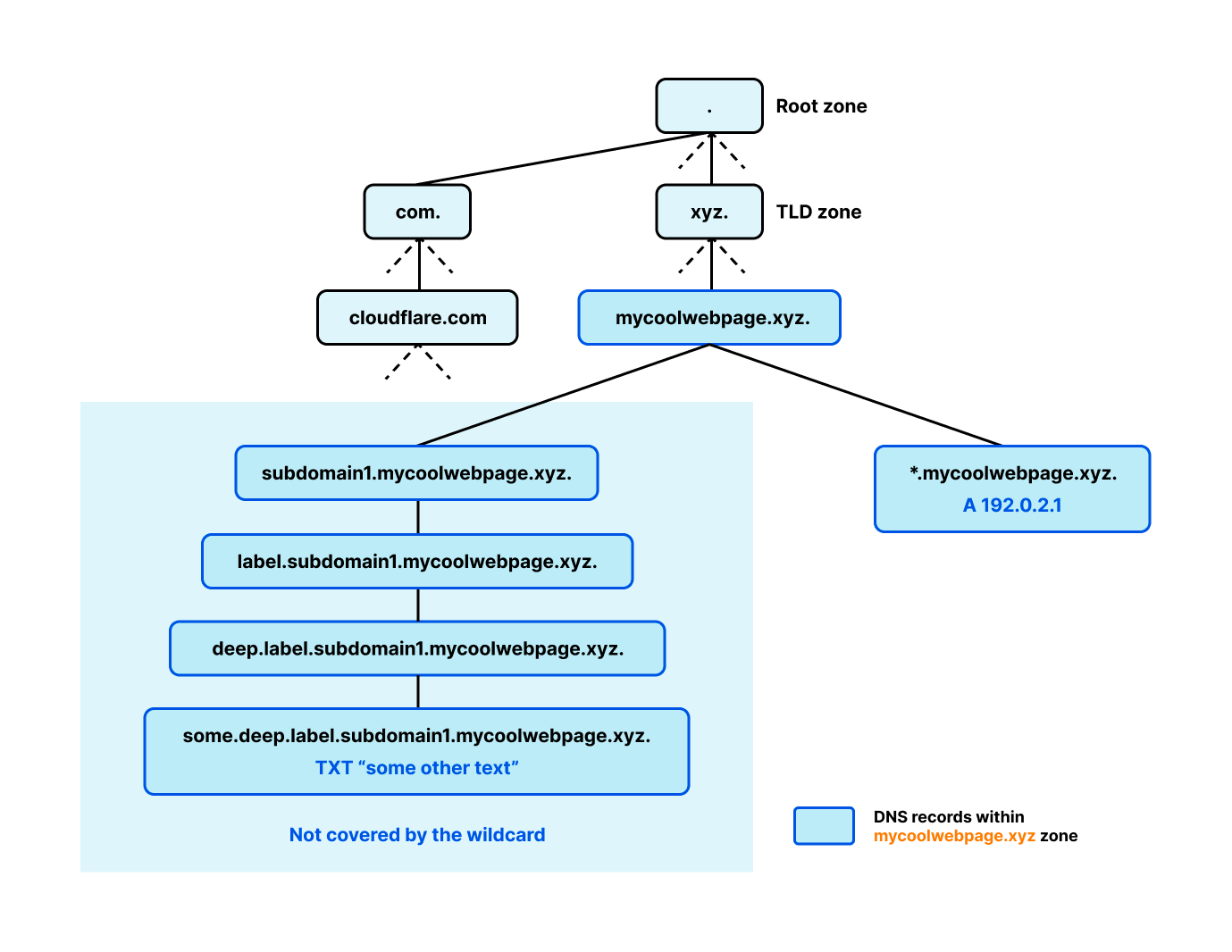

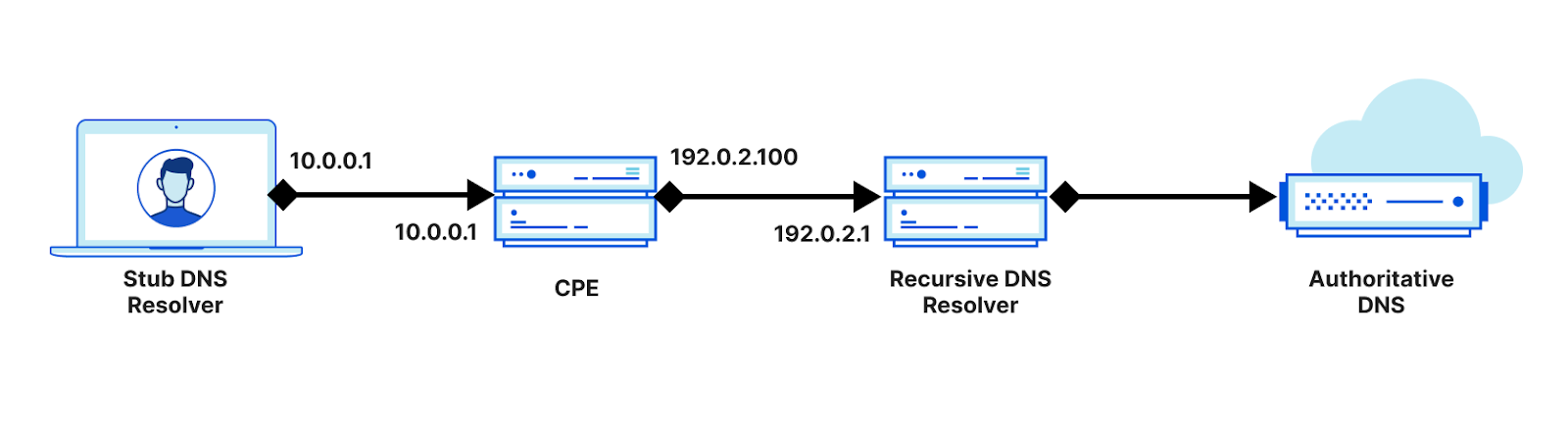

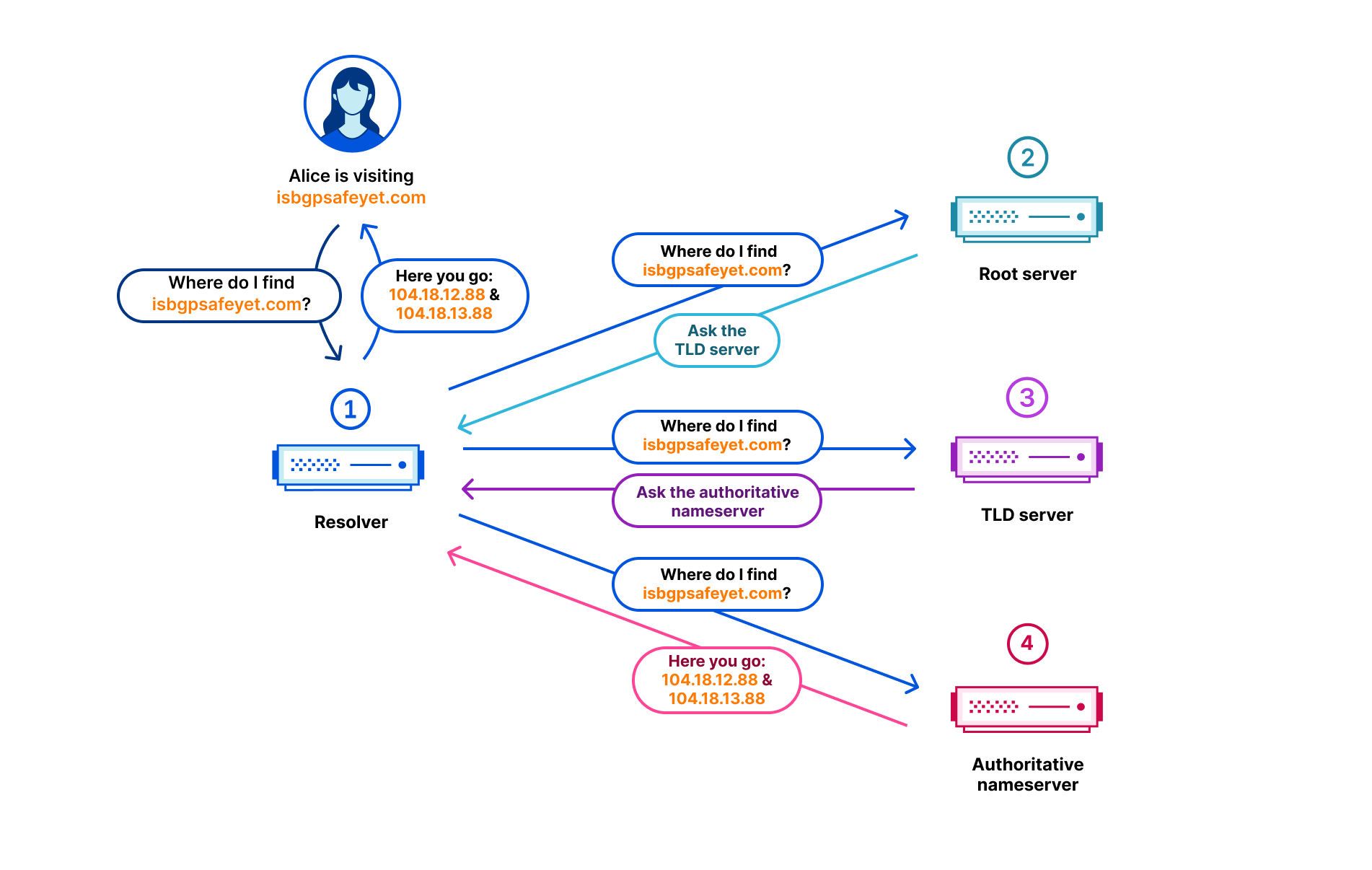

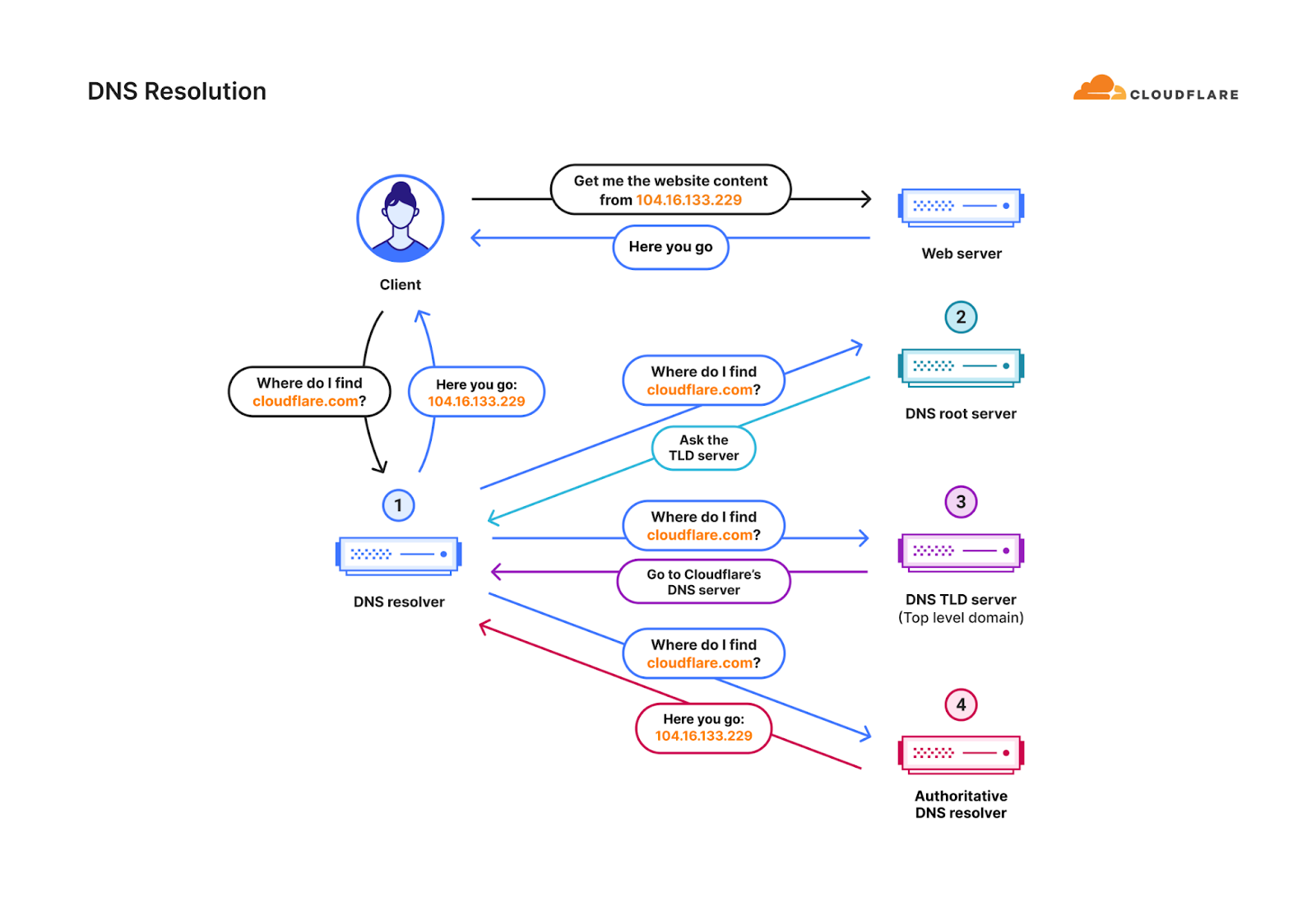

From an end user perspective, DNS makes the Internet usable. DNS is the phone book of the Internet which translates hostnames like www.cloudflare.com into IP addresses that our browsers, applications, and devices use to connect to services. Without DNS, users would have to remember IP addresses like 108.162.193.147 or 2606:4700:58::adf5:3b93 every time they wanted to visit a website on their mobile device or desktop – imagine having to remember something like that instead of just www.cloudflare.com. DNS is used in every end user application on the Internet, from social media to banking to healthcare portals. People’s usage of the Internet is entirely reliant on DNS.

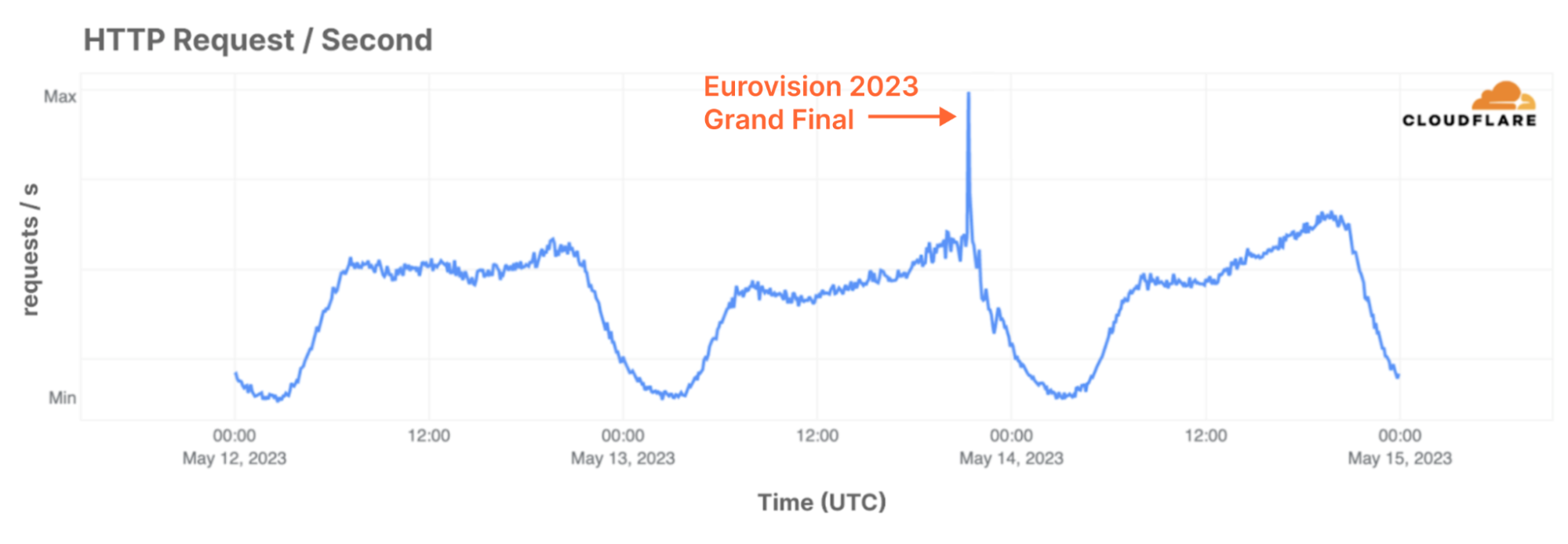

From a business perspective, DNS is the very first step in reaching websites and connecting to applications. Devices need to know where to connect in order to reach services, authenticate users, and provide the information being requested. Resolving DNS queries quickly can be the difference between a website or application being perceived as responsive or not and can have a real impact on user experience.

When DNS outages occur, the impacts are obvious. Imagine your go-to ecommerce site not loading, just like what happened with the outage Dyn experienced in 2016, which took down multiple popular ecommerce sites among others. Or, if you are part of a company, and customers aren’t able to reach your website to purchase the goods or services you are selling, a DNS outage will literally lose you money. DNS is often taken for granted, but make no mistake, you’ll notice it when it’s not working properly. Thankfully, if you use Cloudflare Authoritative DNS, these are problems you don’t worry about very much.

There is always room for improvement

Cloudflare has been providing authoritative DNS services for over a decade. Our authoritative DNS service hosts millions of domains across many different top level domains (TLDs). We have customers of all sizes, from single domains with just a few records to customers with tens of millions of records spread across multiple domains. Our enterprise customers, rightfully, demand the highest level of performance, reliability, security, and flexibility from our DNS service, along with detailed analytics. While our customers love our authoritative DNS, we recognize there is always room for improvement in some of those categories. To that end, we set off to make some major improvements to our DNS architecture, with new features as well as structural changes. We are proudly calling this improved offering Foundation DNS.

Meet Foundation DNS

As our new enterprise authoritative DNS offering, Foundation DNS was designed to enhance the reliability, security, flexibility, and analytics of our existing authoritative DNS service. Before we dive into all the specifics of Foundation DNS, here is a quick summary of what Foundation DNS brings to our authoritative DNS offering:

- Advanced nameservers bring DNS reliability to the next level.

- New zone-level DNS settings provide more flexible configuration of DNS specific settings.

- Unique DNSSEC keys per account and zone provide additional security and flexibility for DNSSEC.

- GraphQL-based DNS analytics provide even more insights into your DNS queries.

- A new release process ensures enterprise customers have the utmost stability and reliability.

- Simpler DNS pricing with more generous quotas for DNS-only zones and DNS records.

Now, let’s dive deeper into each of these new Foundation DNS features:

Advanced nameservers

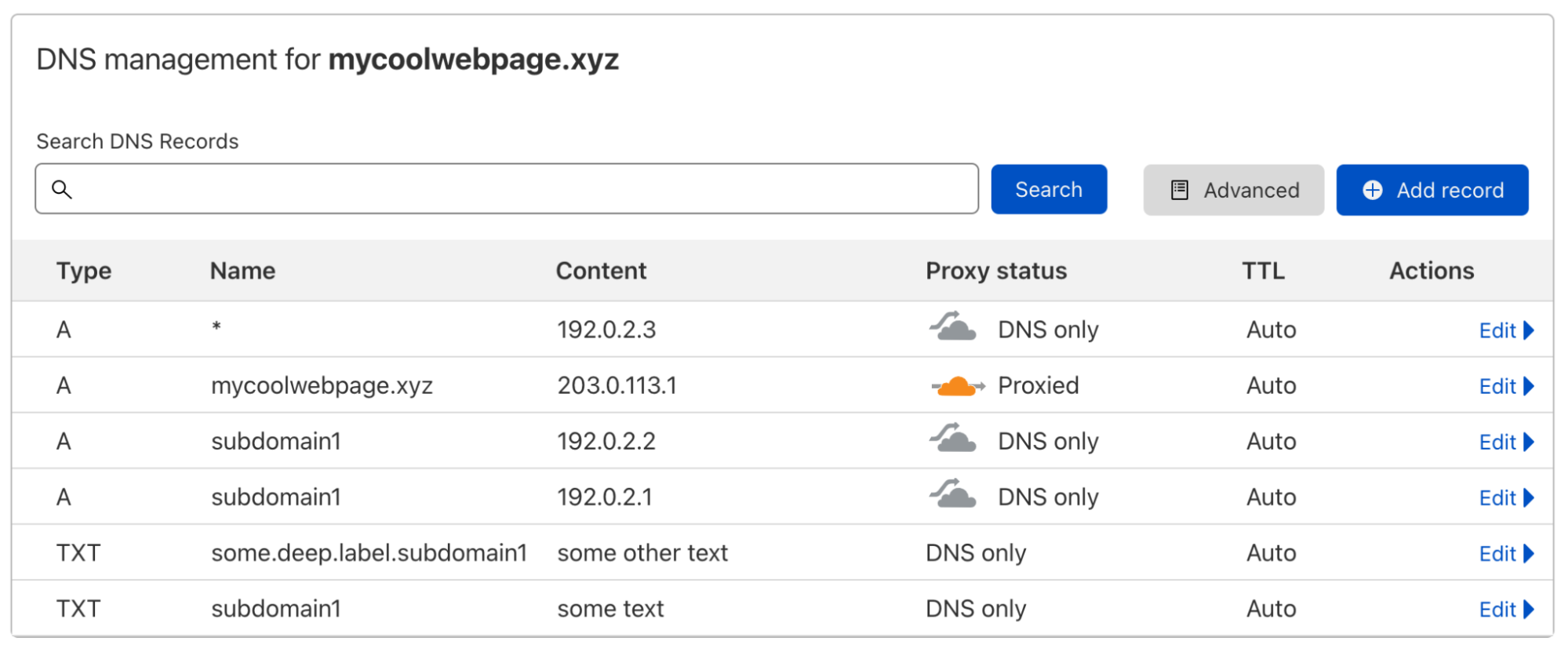

With Foundation DNS, we’re introducing advanced nameservers with a specific focus on enhancing reliability for your enterprise. You might be familiar with our standard authoritative nameservers which come as a pair per zone and use names within the cloudflare.com domain. Here’s an example:

$ dig mycoolwebpage.xyz ns +noall +answer

mycoolwebpage.xyz. 86400 IN NS kelly.ns.cloudflare.com.

mycoolwebpage.xyz. 86400 IN NS christian.ns.cloudflare.com.

Now, let’s look at the same zone using Foundation DNS advanced nameservers:

$ dig mycoolwebpage.xyz ns +noall +answer

mycoolwebpage.xyz. 86400 IN NS blue.foundationdns.com.

mycoolwebpage.xyz. 86400 IN NS blue.foundationdns.net.

mycoolwebpage.xyz. 86400 IN NS blue.foundationdns.org.

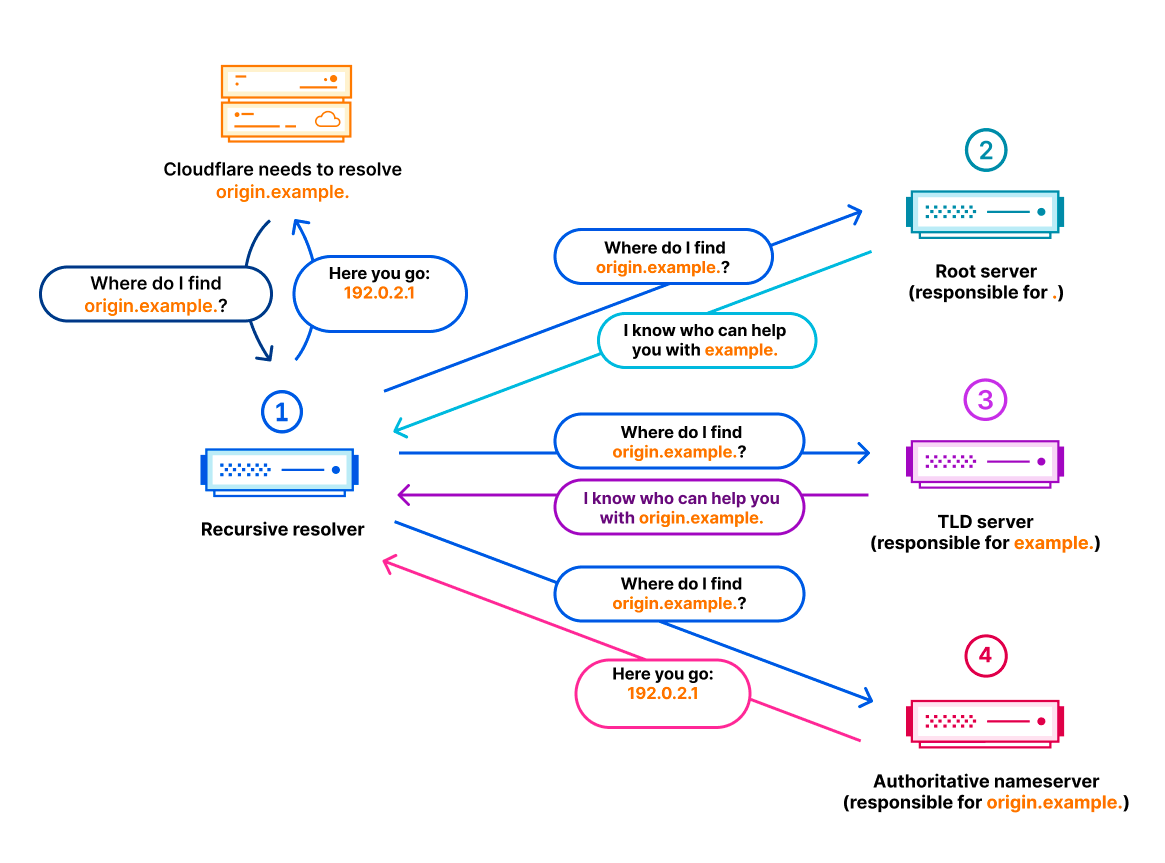

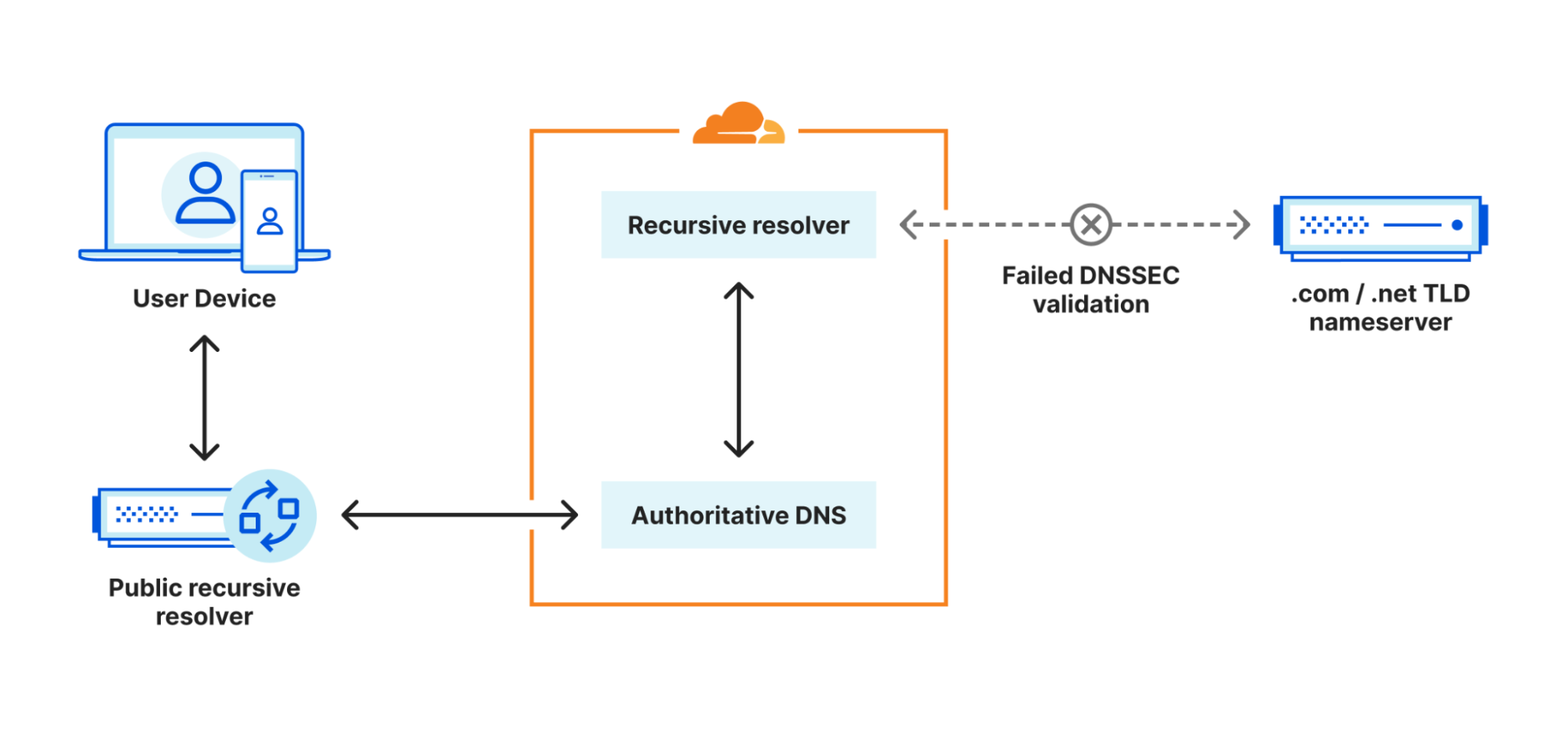

Advanced nameservers improve reliability in a few different ways. The first improvement comes from the Foundation DNS authoritative servers being spread across multiple TLDs. This provides protection from larger scale DNS outages and DDoS attacks that could potentially affect DNS infrastructure further up the tree, including TLD name servers. Foundation DNS authoritative nameservers are now located across multiple branches of the global DNS tree structure, further insulating our customers from these potential outages and attacks.
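To make the TLD point concrete, here is a minimal Python sketch that groups the nameserver names from the two `dig` outputs above by top-level domain. The helper name `tlds_of` is made up for illustration, and treating the last label as the TLD is a simplification that happens to hold for these names.

```python
def tlds_of(nameservers):
    """Return the set of top-level domains used by a list of nameserver names."""
    # Strip the trailing root dot, then take the last label as the TLD.
    return {ns.rstrip(".").rsplit(".", 1)[-1] for ns in nameservers}


standard = ["kelly.ns.cloudflare.com.", "christian.ns.cloudflare.com."]
advanced = [
    "blue.foundationdns.com.",
    "blue.foundationdns.net.",
    "blue.foundationdns.org.",
]

standard_tlds = tlds_of(standard)
advanced_tlds = tlds_of(advanced)
print(sorted(standard_tlds))
print(sorted(advanced_tlds))
```

The standard pair depends on a single TLD, while the advanced set is spread over three, so trouble in one TLD's infrastructure still leaves two delegation paths intact.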

You might also have noticed that there is an additional nameserver listed with Foundation DNS. While this is an improvement, it’s not for the reason you might think it is. If we resolve each one of these nameservers to their respective IP addresses, we can make this a little easier to understand. Let’s do that here starting with our standard nameservers:

$ dig kelly.ns.cloudflare.com. +noall +answer

kelly.ns.cloudflare.com. 86353 IN A 108.162.194.91

kelly.ns.cloudflare.com. 86353 IN A 162.159.38.91

kelly.ns.cloudflare.com. 86353 IN A 172.64.34.91

$ dig christian.ns.cloudflare.com. +noall +answer

christian.ns.cloudflare.com. 86353 IN A 108.162.195.247

christian.ns.cloudflare.com. 86353 IN A 162.159.44.247

christian.ns.cloudflare.com. 86353 IN A 172.64.35.247

There are six total IP addresses for the two nameservers. As it turns out, this is all DNS resolvers actually care about when querying authoritative nameservers. DNS resolvers usually don’t track the actual domain names of authoritative servers; they simply maintain an unordered list of IP addresses that they can use to resolve queries for a given domain. So with our standard authoritative nameservers, we give resolvers six IP addresses to use to resolve DNS queries. Now, let’s look at the IP addresses for our Foundation DNS advanced nameservers:

$ dig blue.foundationdns.com. +noall +answer

blue.foundationdns.com. 300 IN A 108.162.198.1

blue.foundationdns.com. 300 IN A 162.159.60.1

blue.foundationdns.com. 300 IN A 172.64.40.1

$ dig blue.foundationdns.net. +noall +answer

blue.foundationdns.net. 300 IN A 108.162.198.1

blue.foundationdns.net. 300 IN A 162.159.60.1

blue.foundationdns.net. 300 IN A 172.64.40.1

$ dig blue.foundationdns.org. +noall +answer

blue.foundationdns.org. 300 IN A 108.162.198.1

blue.foundationdns.org. 300 IN A 162.159.60.1

blue.foundationdns.org. 300 IN A 172.64.40.1

Would you look at that! Foundation DNS provides the same IP addresses for each of the authoritative nameservers that we provide to a zone. So in this case, we have only provided three IP addresses for resolvers to use to resolve DNS queries. And you might be wondering, “Isn’t six better than three? Isn’t this a downgrade?” It turns out more isn’t always better. Let’s talk about why.
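If resolvers only keep a flat list of IP addresses, the comparison comes down to counting unique addresses. The following sketch does that with the A records from the `dig` outputs above; `resolver_view` is a hypothetical helper name, and a real resolver keeps more state than a plain set.

```python
FOUNDATION_IPS = ["108.162.198.1", "162.159.60.1", "172.64.40.1"]

standard = {
    "kelly.ns.cloudflare.com.": ["108.162.194.91", "162.159.38.91", "172.64.34.91"],
    "christian.ns.cloudflare.com.": ["108.162.195.247", "162.159.44.247", "172.64.35.247"],
}
advanced = {
    "blue.foundationdns.com.": FOUNDATION_IPS,
    "blue.foundationdns.net.": FOUNDATION_IPS,
    "blue.foundationdns.org.": FOUNDATION_IPS,
}


def resolver_view(ns_to_ips):
    """Flatten NS -> A records into the set of unique IPs a resolver could query."""
    return {ip for ips in ns_to_ips.values() for ip in ips}


standard_ips = resolver_view(standard)
advanced_ips = resolver_view(advanced)
print(len(standard_ips), len(advanced_ips))
```

Two nameserver names yield six addresses, while three names yield only three, because every Foundation DNS name points at the same set.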

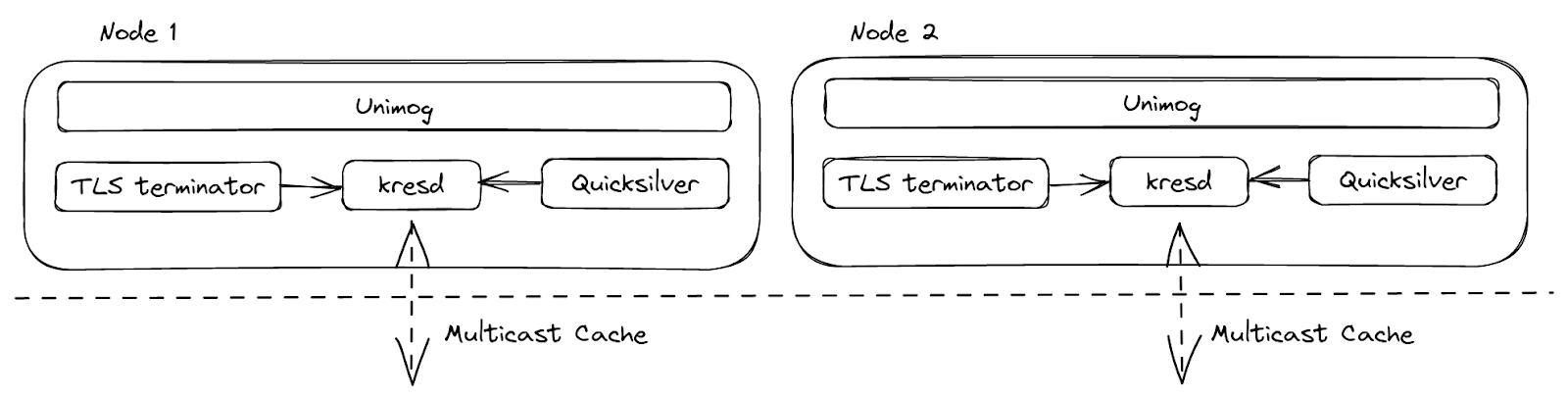

You are probably aware of Cloudflare’s use of Anycast and, as you might assume, our DNS services leverage Anycast to ensure that our authoritative DNS servers are available globally and as close as possible to users and resolvers across the Internet. Our standard nameservers are all advertised out of every Cloudflare data center by a single Anycast group. If we zoom in on Europe, you can see that in a standard nameserver deployment, both nameservers are advertised from every data center.

We can take those six IP addresses from our standard nameservers above and perform a lookup for their “hostname.bind” TXT record which will show us the airport code or physical location of the closest data center where our DNS queries are being resolved from. This output helps explain the reason why more isn’t always better.

$ dig @108.162.194.91 ch txt hostname.bind +short

"LHR"

$ dig @162.159.38.91 ch txt hostname.bind +short

"LHR"

$ dig @172.64.34.91 ch txt hostname.bind +short

"LHR"

$ dig @108.162.195.247 ch txt hostname.bind +short

"LHR"

$ dig @162.159.44.247 ch txt hostname.bind +short

"LHR"

$ dig @172.64.35.247 ch txt hostname.bind +short

"LHR"

As you can see, when queried from near London, all six of those IP addresses route to the same London (LHR) data center. Meaning that when a resolver in London is resolving DNS queries for a domain using Cloudflare’s standard authoritative DNS, no matter which nameserver IP address is being queried, they are always connecting to the same physical location.
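For readers curious what those `dig ... ch txt hostname.bind` lookups look like on the wire, here is a rough sketch that builds an equivalent query using only the Python standard library. The function names are illustrative, and `dig` sets additional flags and options by default that are left out here. `send_query` needs network access, so it is defined but not called.

```python
import socket
import struct

QTYPE_TXT = 16  # TXT record type
QCLASS_CH = 3   # CHAOS class, which hostname.bind lives in


def build_query(name, qtype=QTYPE_TXT, qclass=QCLASS_CH, query_id=0x1234):
    """Build a minimal wire-format DNS query for `name`."""
    # Header: ID, flags (all zero: plain standard query), QDCOUNT=1, other counts 0.
    header = struct.pack("!HHHHHH", query_id, 0, 1, 0, 0, 0)
    # QNAME: each label prefixed by its length, terminated by a zero byte.
    qname = b"".join(
        bytes([len(label)]) + label.encode("ascii")
        for label in name.rstrip(".").split(".")
    ) + b"\x00"
    return header + qname + struct.pack("!HH", qtype, qclass)


def send_query(server_ip, packet, timeout=2.0):
    """Send the packet over UDP port 53 and return the raw response bytes."""
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as sock:
        sock.settimeout(timeout)
        sock.sendto(packet, (server_ip, 53))
        response, _ = sock.recvfrom(4096)
        return response


packet = build_query("hostname.bind")
print(packet.hex())
```

Calling `send_query("108.162.194.91", packet)` from a machine with Internet access would return the raw response, which still has to be parsed to extract the TXT string.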

You might be asking, “So what? What does that mean to me?” Let’s look at an example. If you want to resolve a domain that uses Cloudflare standard nameservers from London, and you are using a public resolver that is also located in London, the resolver will always connect to the Cloudflare LHR data center regardless of which nameserver it’s trying to reach. It doesn’t have any other option, because of Anycast.

Because of Anycast, should the LHR data center go offline completely, all the traffic intended for LHR would be routed to other nearby data centers and resolvers would continue to function normally. However, in the unlikely scenario where the LHR data center is online but our DNS services there aren’t able to respond to DNS queries, the resolver would have no way to resolve these DNS queries, since it can’t reach any other data center. We could have 100 IP addresses, and it would not help us in this scenario. Once cached responses expire, the domain will stop resolving.

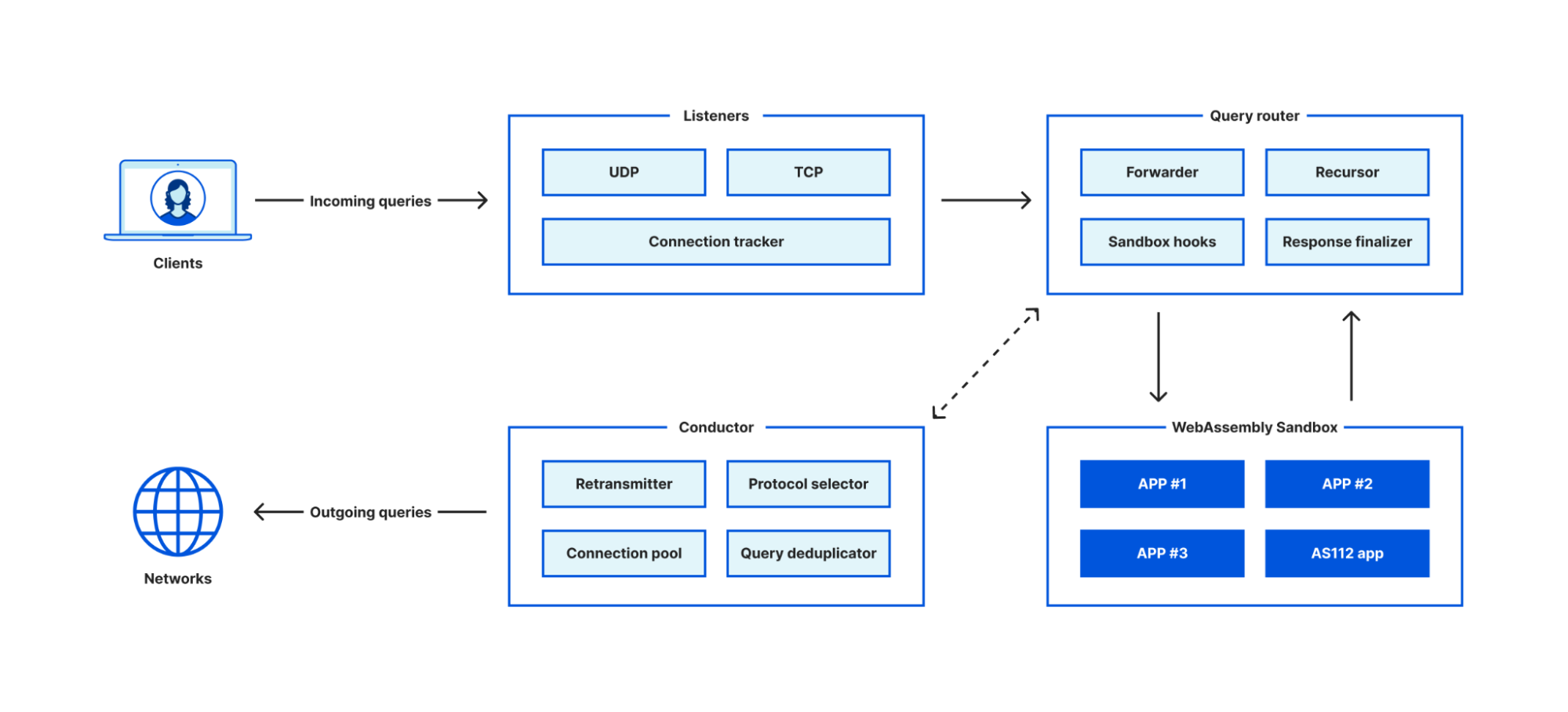

Foundation DNS advanced nameservers are changing the way we use Anycast by leveraging two Anycast groups, which breaks the previous paradigm of every authoritative nameserver IP being advertised from every data center. Using two Anycast groups means that Foundation DNS authoritative nameservers actually have different physical locations from one another, rather than all being advertised from each data center. Here is how that same region would look using two Anycast groups:

Let’s go back and finish our comparison of six authoritative IP addresses for standard authoritative DNS vs three IP addresses for Foundation DNS now that it’s understood that Foundation DNS is using two Anycast groups for advertising nameservers. Let’s see where Foundation DNS servers are being advertised from for our example:

$ dig @108.162.198.1 ch txt hostname.bind +short

"LHR"

$ dig @162.159.60.1 ch txt hostname.bind +short

"LHR"

$ dig @172.64.40.1 ch txt hostname.bind +short

"MAN"

Look at that! One of our three nameserver IP addresses is being advertised out of a different data center, Manchester (MAN), making Foundation DNS more reliable and resilient for the previously mentioned outage scenario. It’s worth mentioning that in some cities, Cloudflare operates out of multiple data centers which will result in all three queries returning the same airport code. While we guarantee that at least one of those IP addresses is being advertised out of a different data center, we understand some customers may want to test for themselves. In those cases, an additional query can show that IP addresses are being advertised out of different data centers.

$ dig @108.162.198.1 +nsid | grep NSID:

; NSID: 39 34 6d 33 39 ("94m39")

In the “94m39” returned in the response, the number before the “m” represents the data center that answered the query. As long as that number is different in one of the three responses, you know that one of your Foundation DNS authoritative nameservers is being advertised out of a different physical location.
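If you want to script that check, the NSID line from the `dig` output above can be decoded in a few lines of Python. This is only a sketch: `parse_nsid` is a hypothetical helper, and it assumes the `; NSID: <hex bytes> ("<text>")` layout shown in the example output.

```python
import re


def parse_nsid(line):
    """Decode the hex bytes of a dig NSID line and split off the data center number."""
    match = re.search(r"NSID:((?:\s+[0-9a-fA-F]{2})+)", line)
    if match is None:
        raise ValueError("no NSID found in line")
    # Remove the spaces between the hex pairs before decoding them.
    hex_digits = "".join(match.group(1).split())
    nsid = bytes.fromhex(hex_digits).decode("ascii")
    # The part before the "m" identifies the data center that answered.
    data_center, _, _ = nsid.partition("m")
    return nsid, data_center


nsid, data_center = parse_nsid('; NSID: 39 34 6d 33 39 ("94m39")')
print(nsid, data_center)
```

Running the same function over the NSID lines for all three nameserver IP addresses and comparing the data center numbers tells you whether at least one of them differs.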

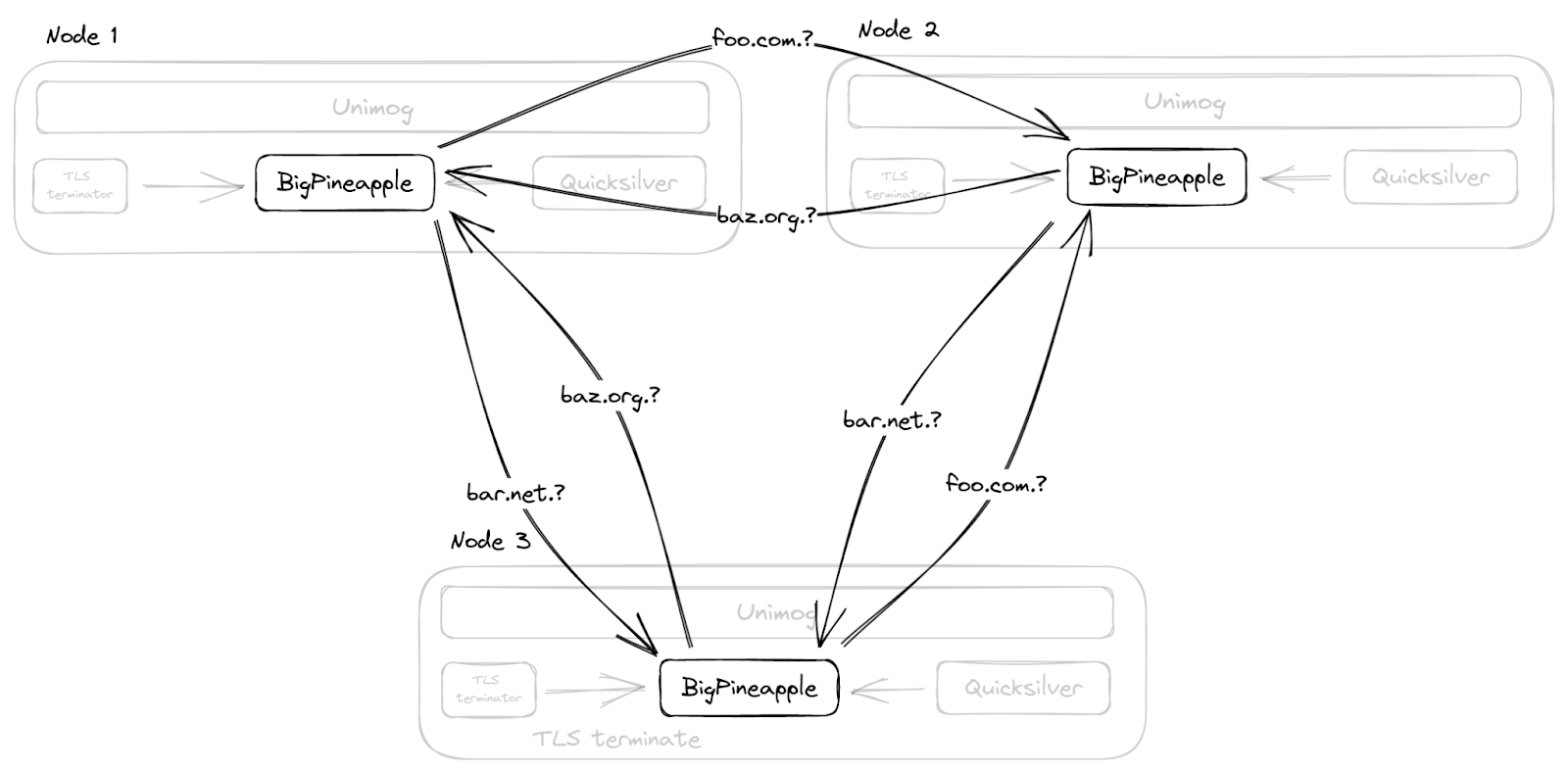

With Foundation DNS leveraging two Anycast groups, the previous outage scenario is handled seamlessly. DNS resolvers monitor requests to all the authoritative nameservers returned for a given domain, but primarily use the nameserver that is providing the fastest responses.

With this configuration, DNS resolvers are able to send requests to two different Cloudflare data centers, so, should a failure happen at one physical location, queries are then automatically sent to the second data center where they can be properly resolved.
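The difference between one and two Anycast groups can be illustrated with a toy simulation. The IP-to-data-center mappings below are the ones returned by the `hostname.bind` lookups from London earlier in this post. The `resolve` function is a deliberately simplified stand-in for resolver behavior (real resolvers weigh response times and retry), not a description of any particular resolver.

```python
# Data center reached for each nameserver IP when querying from near London.
STANDARD = {
    "108.162.194.91": "LHR",
    "162.159.38.91": "LHR",
    "172.64.34.91": "LHR",
    "108.162.195.247": "LHR",
    "162.159.44.247": "LHR",
    "172.64.35.247": "LHR",
}
ADVANCED = {
    "108.162.198.1": "LHR",
    "162.159.60.1": "LHR",
    "172.64.40.1": "MAN",
}


def resolve(ip_to_site, broken_sites):
    """Try each nameserver IP in turn; return the first site that answers, else None."""
    for _ip, site in ip_to_site.items():
        if site not in broken_sites:
            return site
    return None


# LHR is still advertising its routes, but its DNS service is not answering.
broken = {"LHR"}
standard_result = resolve(STANDARD, broken)
advanced_result = resolve(ADVANCED, broken)
print(standard_result)  # None: all six IPs land in the broken data center
print(advanced_result)  # MAN: the third IP is served from a different site
```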

Foundation DNS advanced nameservers are a big step forward in reliability for our enterprise customers. We welcome our enterprise customers to enable advanced nameservers for existing zones today. Migrating to Foundation DNS won’t involve any downtime either because even after Foundation DNS advanced nameservers are enabled for a zone, the previous standard authoritative DNS nameservers will continue to function and respond to queries for the zone. Customers don’t need to plan for a cutover or other service-impacting event to migrate to Foundation DNS advanced nameservers.

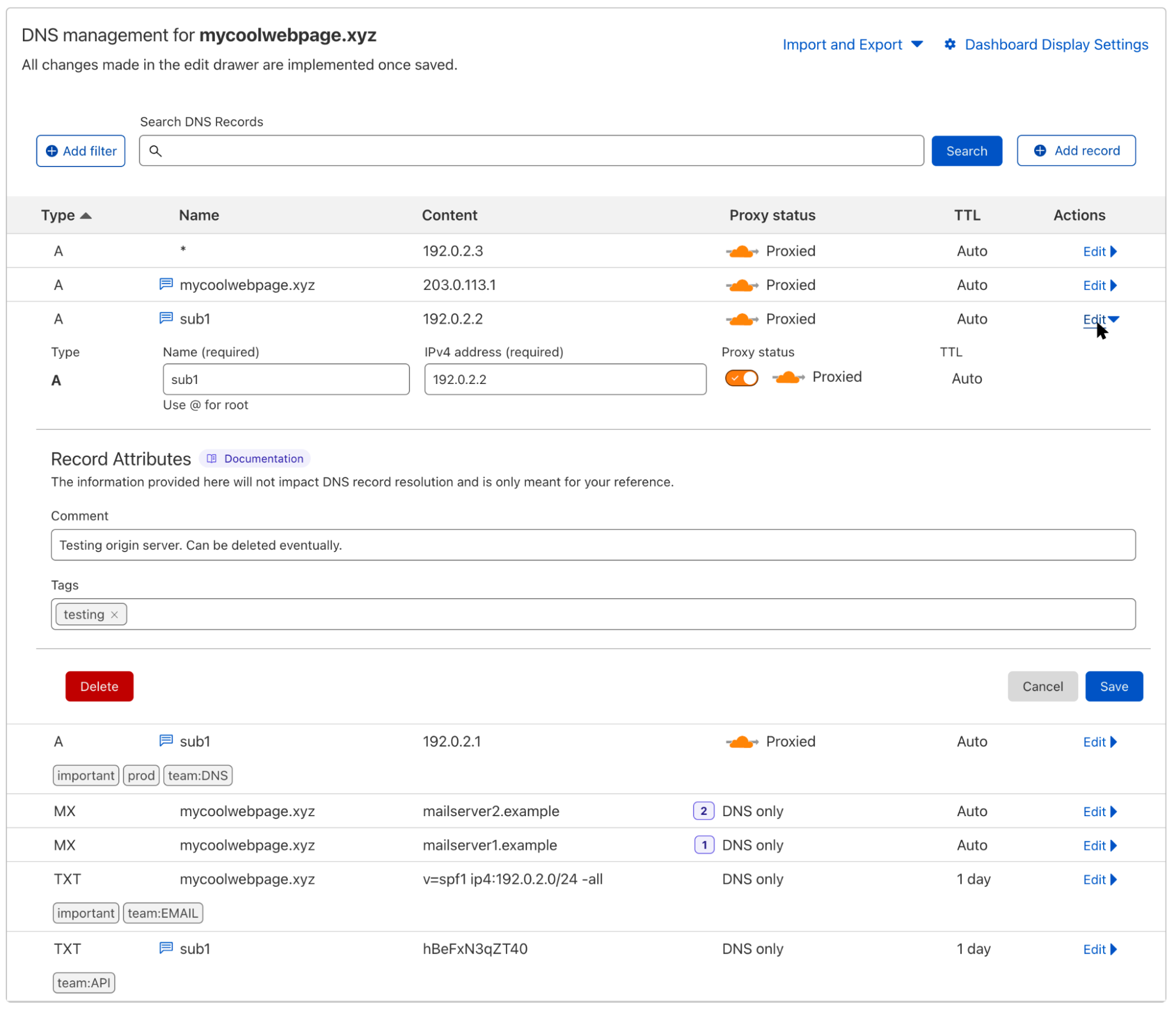

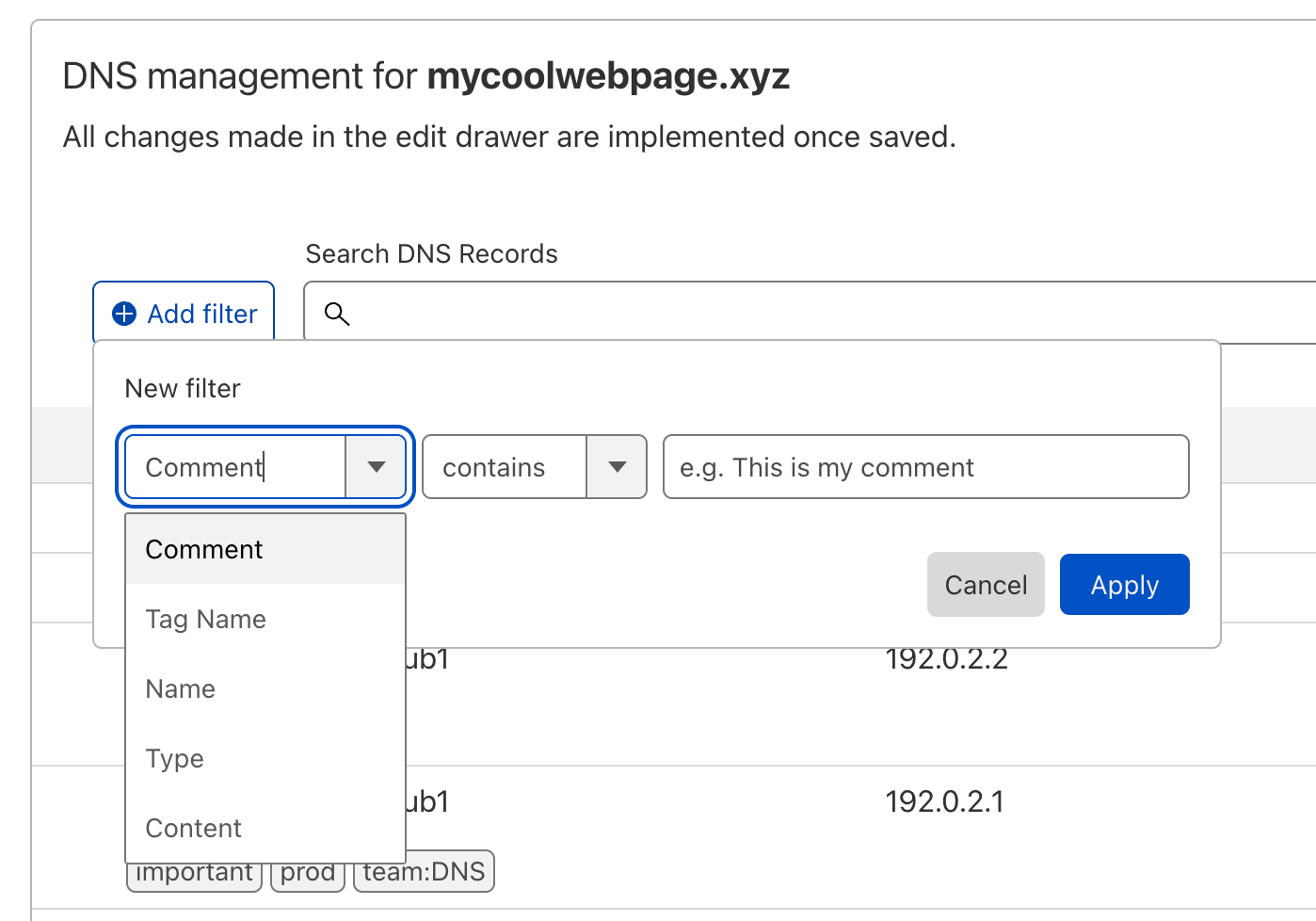

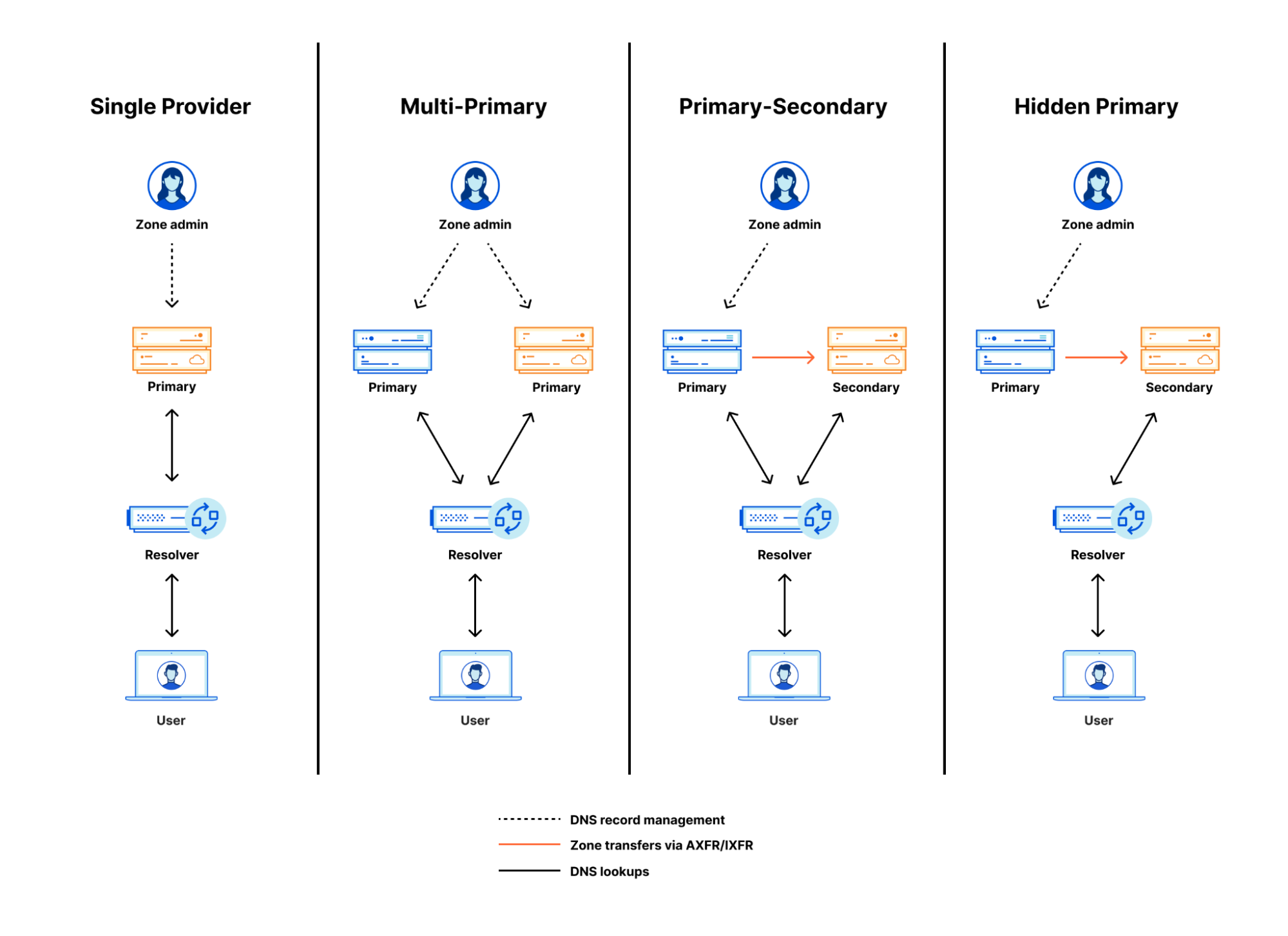

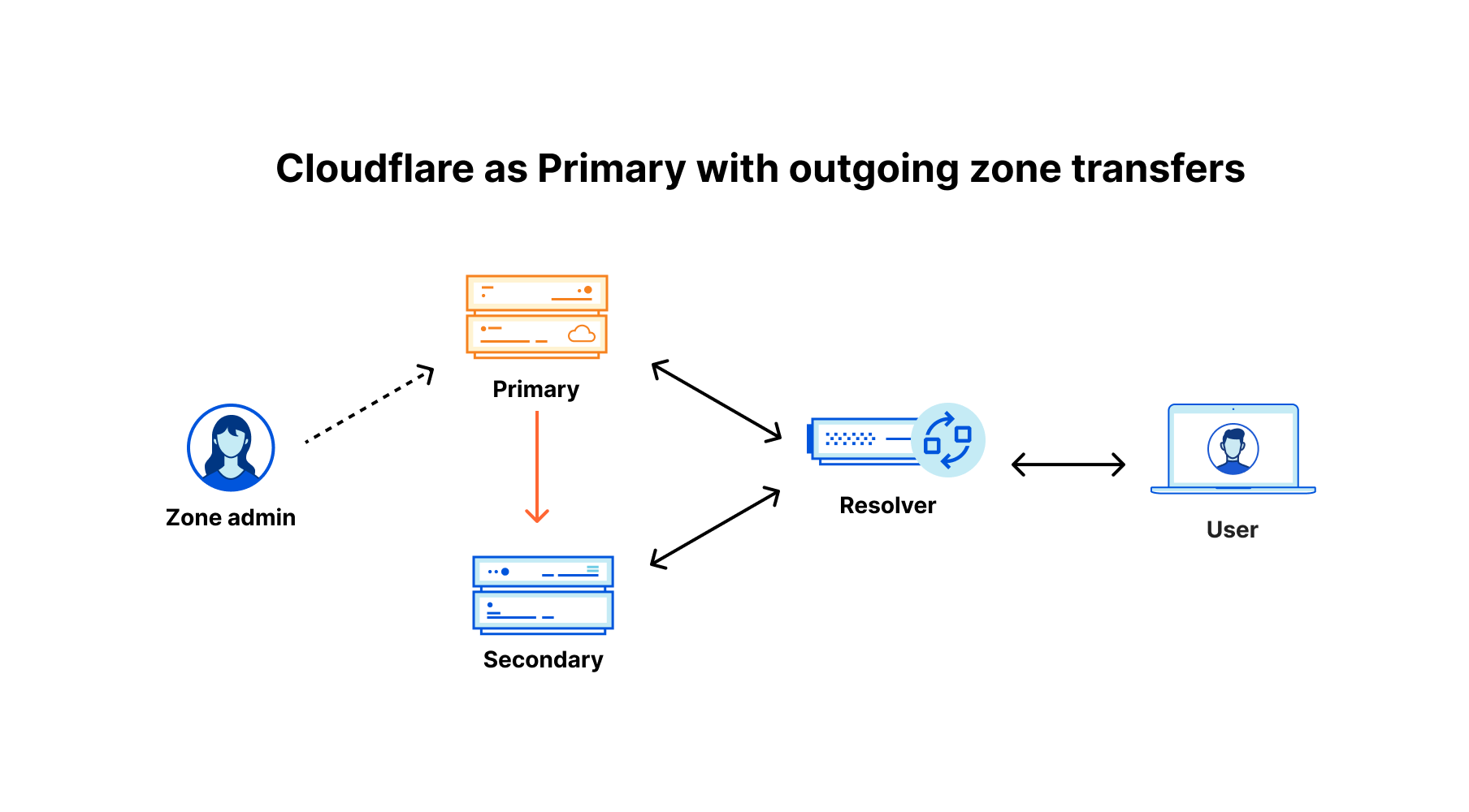

New zone-level DNS settings

Historically, we have received regular requests from our enterprise customers to adjust specific DNS settings that were not exposed via our API or dashboard, such as enabling secondary DNS overrides. When customers wanted these settings adjusted, they had to reach out to their account teams, who would change the configurations. With Foundation DNS, we are exposing the most commonly requested settings via the API and dashboard to give our customers increased flexibility with their Cloudflare authoritative DNS solution.

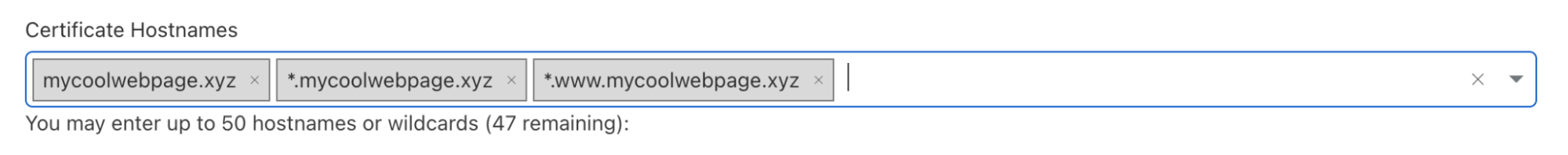

Enterprise customers can now configure the following DNS settings on their zones:

| Setting | Zone Type | Description |
|---|---|---|
| Foundation DNS advanced nameservers | Primary and secondary zones | Allows you to enable advanced nameservers on your zone. |
| Secondary DNS override | Secondary zones | Allows you to enable Secondary DNS Override on your zone in order to proxy HTTP/S traffic through Cloudflare. |
| Multi-provider DNS | Primary and secondary zones | Allows you to have multiple authoritative DNS providers while using Cloudflare as a primary nameserver. |

Unique DNSSEC keys per account and zone

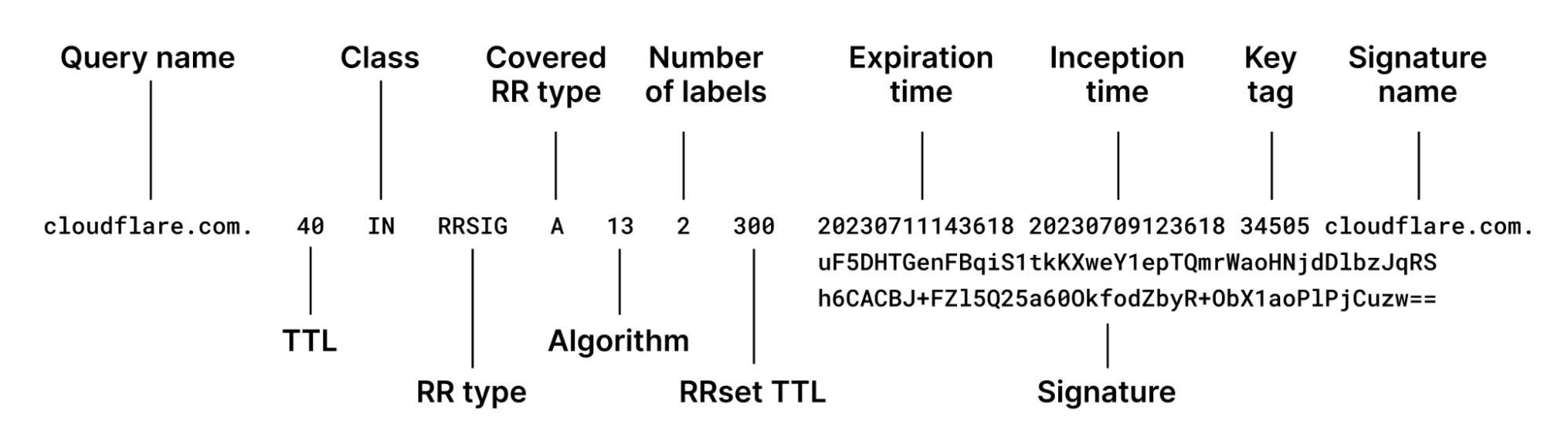

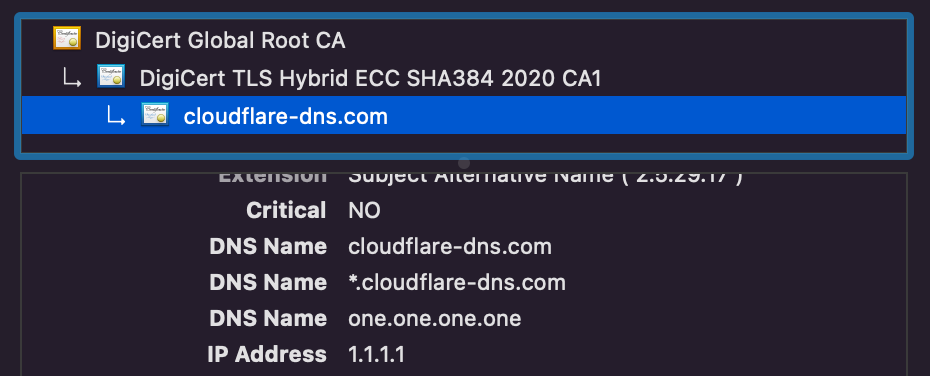

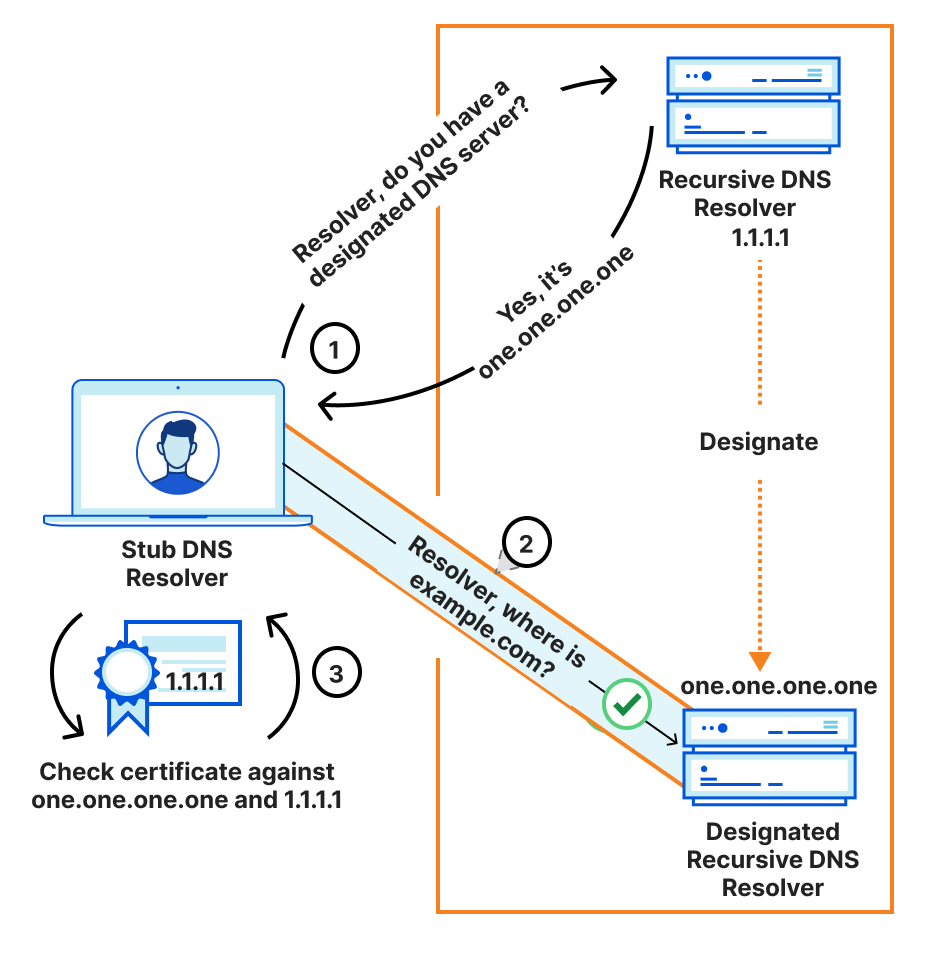

DNSSEC, which stands for Domain Name System Security Extensions, adds security to a domain or zone by providing a way to check that the response you receive for a DNS query is authentic and hasn’t been modified. DNSSEC protects against DNS cache poisoning (DNS spoofing), which helps ensure that DNS resolvers respond to DNS queries with the correct IP addresses.

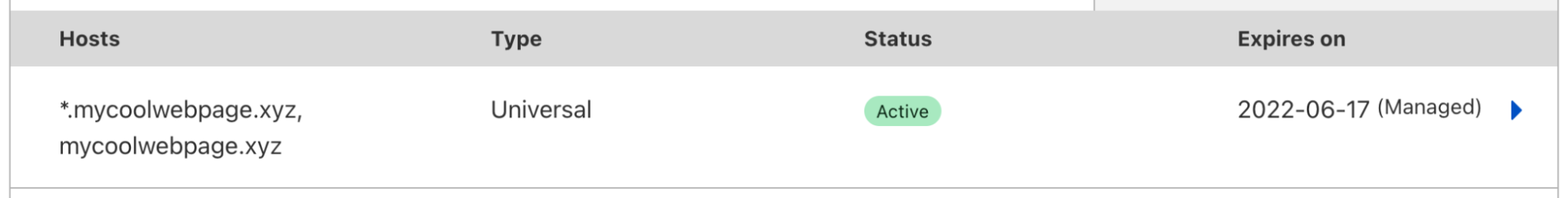

Since we launched Universal DNSSEC in 2015, we’ve made quite a few improvements, like adding support for pre-signed DNSSEC for secondary zones and multi-signer DNSSEC. By default, Cloudflare signs DNS records on the fly (live signing) as we respond to DNS queries. This allows Cloudflare to host a DNSSEC-secured domain while dynamically allocating IP addresses for the proxied origins. It also enables certain load balancing use cases since the IP addresses served in the DNS response in these cases change based on steering.

Cloudflare uses the Elliptic Curve algorithm ECDSA P-256, which is stronger than most RSA keys used today. It uses less CPU to generate signatures, making them more efficient to generate on the fly. Usually two keys are used as part of DNSSEC, the Zone Signing Key (ZSK) and the Key Signing Key (KSK). At the simplest level, the ZSK is used for signing the DNS records that are served in response to queries and the KSK is used to sign the DNSKEYs, including the ZSK to ensure its authenticity.

Today, Cloudflare uses a shared ZSK and KSK globally for all DNSSEC signing. Because we use such a strong cryptographic algorithm, we are confident in the security of this key set and do not believe there is a need to regularly rotate the ZSK or KSK, at least for security reasons. There are customers, however, that have policies that require the rotation of these keys at certain intervals. Because of this, we’ve added the ability for our new Foundation DNS advanced nameservers to rotate both their ZSK and KSK as needed per account or per zone. This will first be available via the API and subsequently through the Cloudflare dashboard. So now, customers with strict policy requirements around their DNSSEC key rotation can meet those requirements with Cloudflare Foundation DNS.

GraphQL-based DNS analytics

For those who are not familiar with it, GraphQL is a query language for APIs and a runtime for executing those queries. It allows clients to request exactly what they need, no more, no less, lets them aggregate data from multiple sources through a single API call, and supports real-time updates through subscriptions.

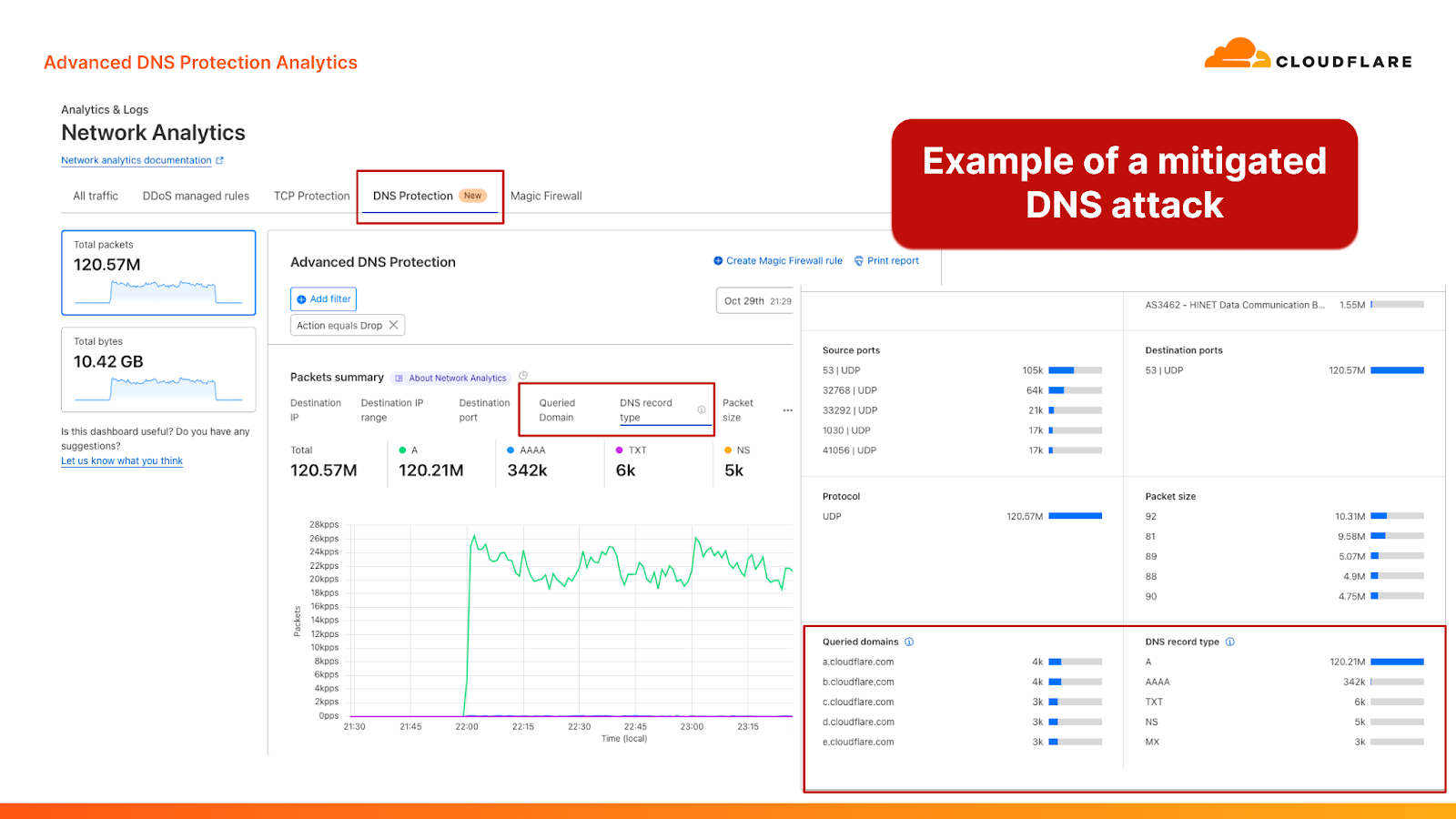

As you might know, Cloudflare has had a GraphQL API for a while now, but as part of Foundation DNS we are adding a new DNS dataset to that API that is only available with our new Foundation DNS advanced nameservers.

The new DNS dataset in our GraphQL API can be used to fetch information about the DNS queries a zone has received. This faster and more powerful alternative to our current DNS Analytics API allows you to query data from large time periods quickly and efficiently without running into limits or timeouts. The GraphQL API is more flexible with regard to which queries it accepts, and exposes more information than the DNS Analytics API.

For example, you can run this query to fetch the mean and 90th percentile processing time of your queries, grouped by source IP address, in 15 minute buckets. A query like this would be useful to see which IPs are querying your records most often for a given time range:

{
  "query": "{
    viewer {
      zones(filter: { zoneTag: $zoneTag }) {
        dnsAnalyticsAdaptiveGroups(
          filter: $filter
          limit: 10
          orderBy: [datetime_ASC]
        ) {
          avg {
            processingTimeUs
          }
          quantiles {
            processingTimeUsP90
          }
          dimensions {
            datetimeFifteenMinutes
            sourceIP
          }
        }
      }
    }
  }",
  "variables": {
    "zoneTag": "<zone-tag>",
    "filter": {
      "datetime_geq": "2024-05-01T00:00:00Z",
      "datetime_leq": "2024-06-01T00:00:00Z"
    }
  }
}

Previously, a query like this wouldn’t have been possible for several reasons. The first is that we have added new fields like sourceIP, which allows us to filter data based on which client IP addresses (usually resolvers) are making DNS queries. The second is that the GraphQL API query is able to process and return data from much larger time ranges. A DNS zone with sufficiently large amounts of queries was previously only able to query across a few days of traffic, while the new GraphQL API can provide data for a period of up to 31 days. We are planning further enhancements to that range, as well as how far back historical data can be stored and queried.
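As a rough illustration of how the example query could be sent from a script, here is a Python sketch using only the standard library. Several things are assumptions not stated in this post: the endpoint URL, the bearer-token authentication, and the helper names `build_payload` and `run_query`. The query text is copied from the example as-is; depending on how strictly the API validates GraphQL, the `$zoneTag` and `$filter` variables may need to be declared in a named operation.

```python
import json
import urllib.request

# Assumption: Cloudflare's public GraphQL endpoint; the post does not spell it out.
GRAPHQL_ENDPOINT = "https://api.cloudflare.com/client/v4/graphql"

# Query text copied from the example in this post.
QUERY = """{
  viewer {
    zones(filter: { zoneTag: $zoneTag }) {
      dnsAnalyticsAdaptiveGroups(
        filter: $filter
        limit: 10
        orderBy: [datetime_ASC]
      ) {
        avg { processingTimeUs }
        quantiles { processingTimeUsP90 }
        dimensions { datetimeFifteenMinutes sourceIP }
      }
    }
  }
}"""


def build_payload(zone_tag, start, end):
    """Assemble the request body with the query text and its variables."""
    return {
        "query": QUERY,
        "variables": {
            "zoneTag": zone_tag,
            "filter": {"datetime_geq": start, "datetime_leq": end},
        },
    }


def run_query(payload, api_token):
    """POST the payload and return the decoded JSON response (needs network access)."""
    request = urllib.request.Request(
        GRAPHQL_ENDPOINT,
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {api_token}",  # assumed auth scheme
            "Content-Type": "application/json",
        },
        method="POST",
    )
    with urllib.request.urlopen(request) as response:
        return json.load(response)


payload = build_payload("<zone-tag>", "2024-05-01T00:00:00Z", "2024-06-01T00:00:00Z")
body = json.dumps(payload)  # line breaks inside the query are escaped here
print(body[:60])
```

Letting `json.dumps` serialize the payload takes care of escaping the line breaks inside the query string, which strict JSON does not allow in raw form.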

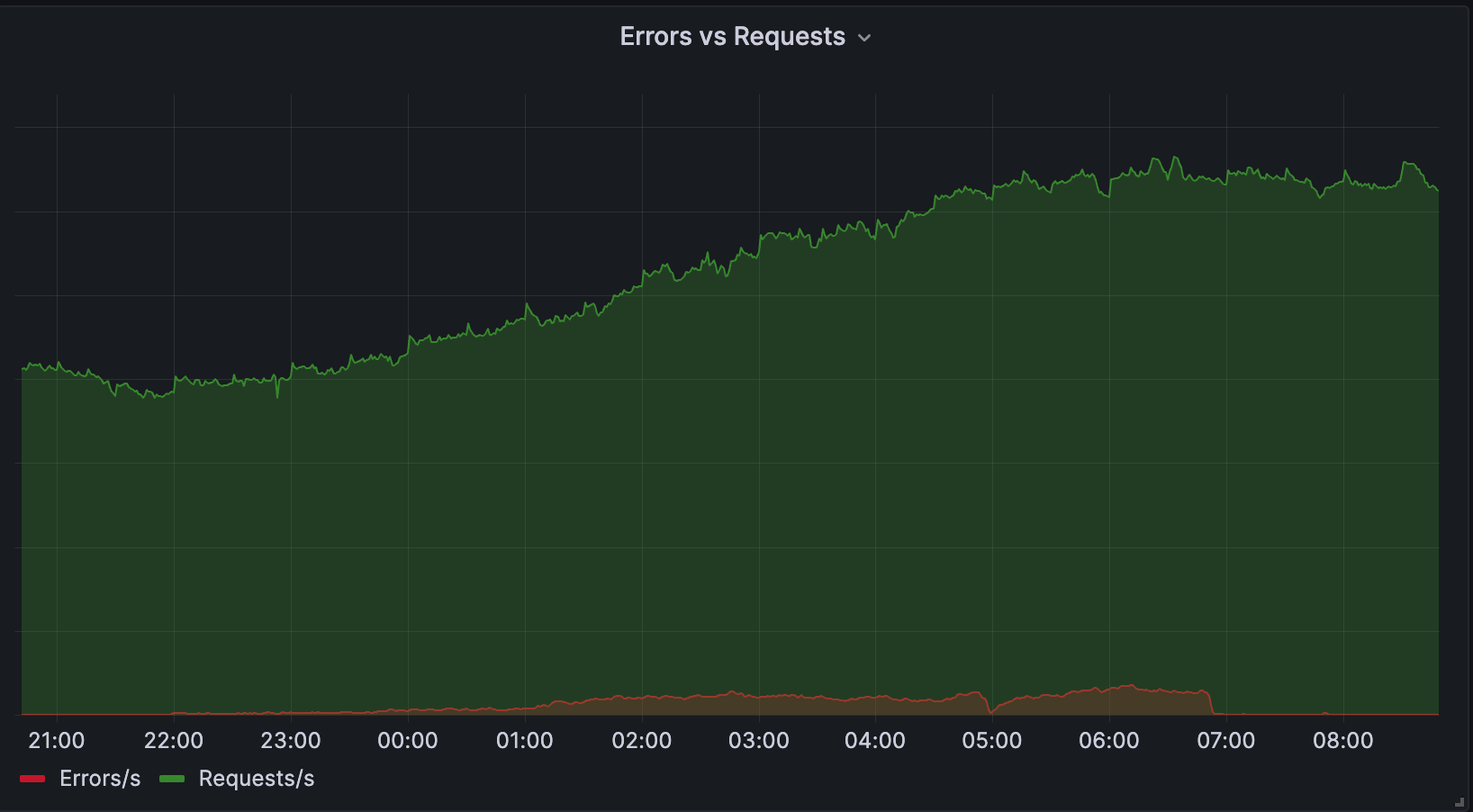

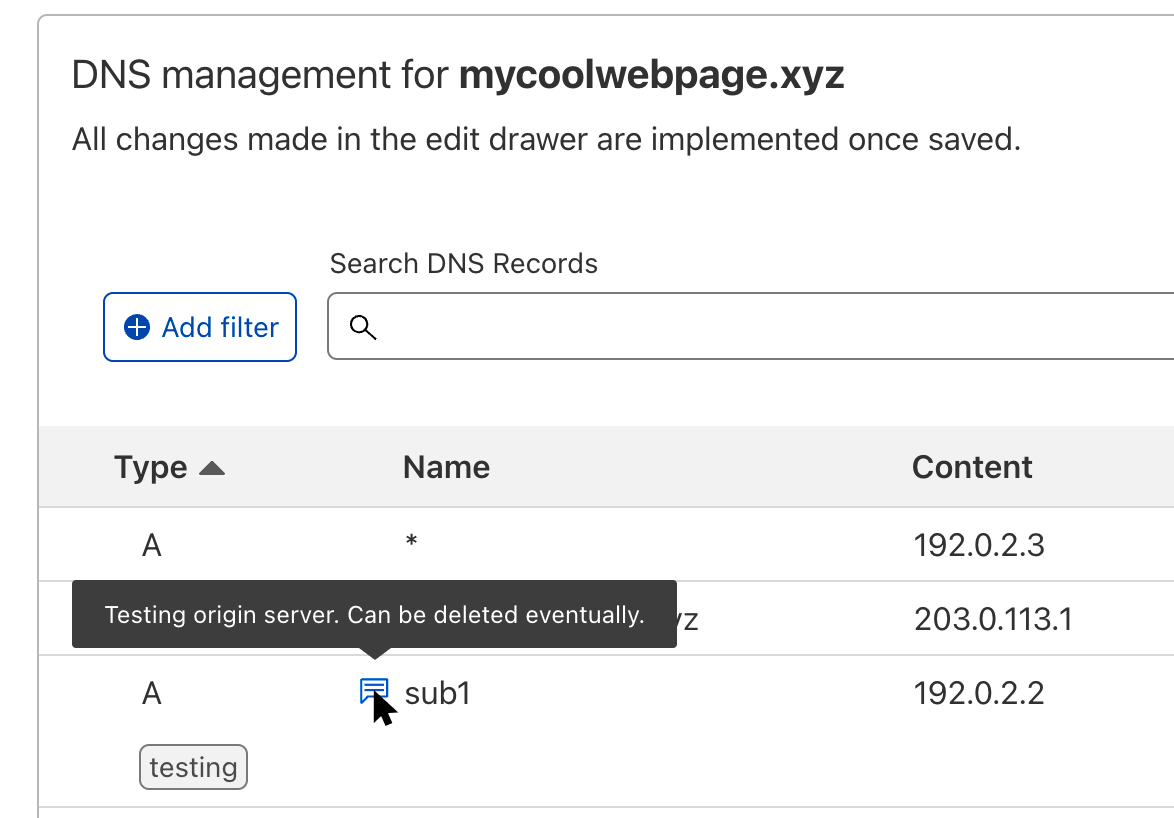

The GraphQL API also allows us to add a new DNS analytics section to the Cloudflare dashboard. Customers will be able to track the most queried records, see which data centers are answering those queries, see how many queries are being made, and much more.

The new DNS dataset in our GraphQL API and the new DNS analytics page work together to help our DNS customers to monitor, analyze, and troubleshoot their Foundation DNS deployments.

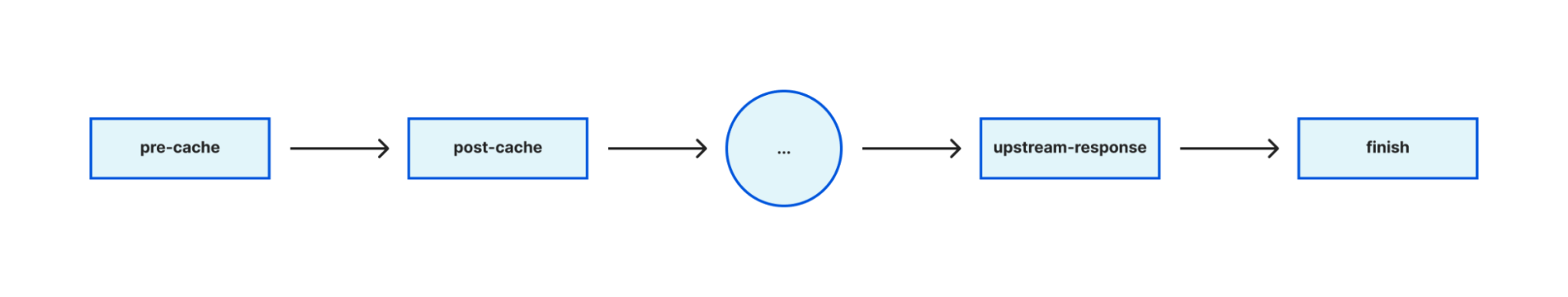

New release process

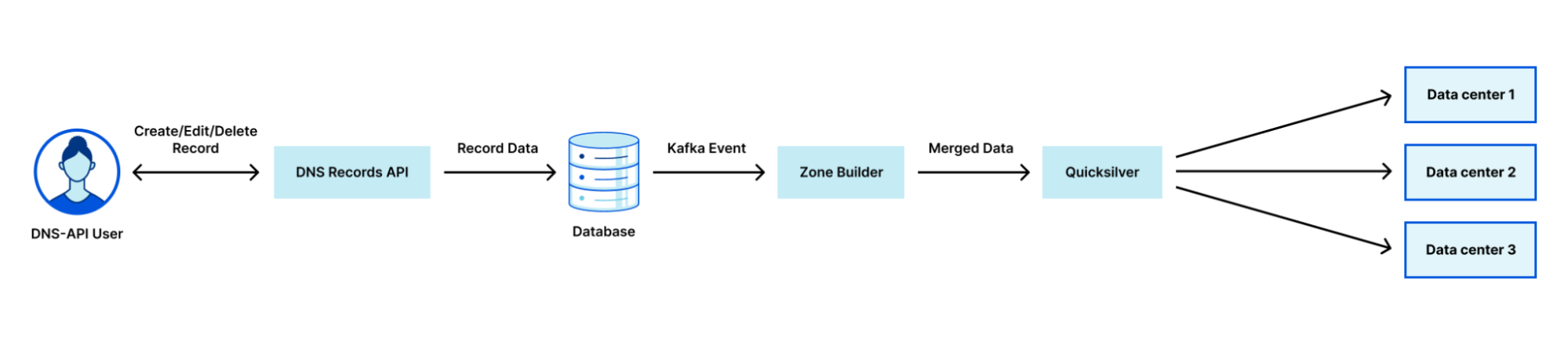

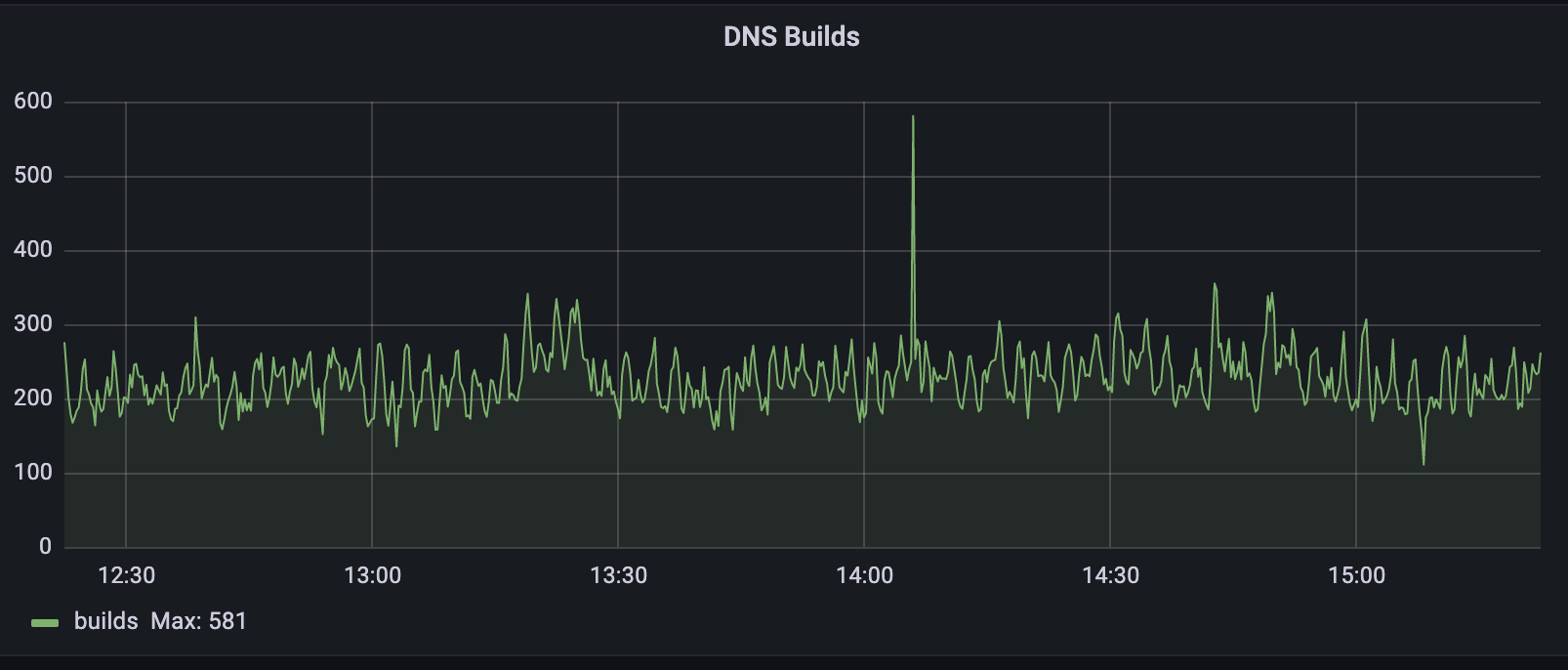

Cloudflare’s Authoritative DNS product receives software updates roughly once a week. Cloudflare has a sophisticated release process that helps prevent regressions from affecting production traffic. While uncommon, it’s possible to have issues only surface once the new release is subject to the volume and uniqueness of production traffic.

Because our enterprise customers desire stability even more than new features, new releases will be subject to a two-week soak time with our standard nameservers before our Foundation DNS advanced nameservers are upgraded. After two weeks with no issues, the Foundation DNS advanced nameservers will be upgraded as well.

Zones using Foundation DNS advanced nameservers will see increased reliability as they are better protected against regressions in new software releases.
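The soak rule described above boils down to a simple gate. The sketch below is purely illustrative and not Cloudflare’s actual release tooling; the function name, its parameters, and the dates are all made up.

```python
from datetime import date, timedelta

SOAK_PERIOD = timedelta(weeks=2)  # the two-week soak time on standard nameservers


def ready_for_advanced(deployed_to_standard, today, issues_found):
    """Return True once a release has soaked on standard nameservers without issues."""
    if issues_found:
        return False
    return today - deployed_to_standard >= SOAK_PERIOD


release_day = date(2024, 4, 1)  # hypothetical release date
print(ready_for_advanced(release_day, date(2024, 4, 10), issues_found=False))  # False
print(ready_for_advanced(release_day, date(2024, 4, 15), issues_found=False))  # True
```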

Simpler DNS pricing

Historically, Cloudflare has charged for Authoritative DNS based on monthly DNS queries and the number of domains in the account. Our enterprise DNS customers are often interested in DNS-only zones, which are DNS zones hosted in Cloudflare that do not use our reverse proxy (layer 7) services such as our CDN, WAF, or Bot Management. With Foundation DNS, we’re making pricing simpler for the vast majority of those customers by including 10,000 DNS-only domains by default. This change means most customers will only pay for the number of DNS queries they consume.

We’re also including 1 million DNS records across all domains in an account. But that doesn’t mean we can’t support more. In fact, the biggest single zone on our platform has over 3.9 million records, while our largest DNS account is just shy of 30 million DNS records spread across multiple zones. With Cloudflare DNS, there is no trouble handling even the largest deployments.

There is more to come

We are just getting started. In the future, we will add more exclusive features to Foundation DNS. One example is a highly requested feature: per-record scoped API tokens and user permissions. This will allow you to configure permissions on an even more granular level. For example, you could specify that a particular member of your account is only allowed to create and manage records of the type TXT and MX, so they don’t accidentally delete or edit address records impacting web traffic to your domain. Another example would be to specify permissions based on subdomain to further restrict the scope of specific users.

If you’re an existing enterprise customer and want to use Foundation DNS, get in touch with your account team to provision Foundation DNS on your account.

Figure 1 – DMARC Flow
