Post Syndicated from Suleman Ahmad original http://blog.cloudflare.com/post-quantum-to-origins/

Quantum computers pose a serious threat to security and privacy of the Internet: encrypted communication intercepted today can be decrypted in the future by a sufficiently advanced quantum computer. To counter this store-now/decrypt-later threat, cryptographers have been hard at work over the last decades proposing and vetting post-quantum cryptography (PQC), cryptography that’s designed to withstand attacks of quantum computers. After a six-year public competition, in July 2022, the US National Institute of Standards and Technology (NIST), known for standardizing AES and SHA, announced Kyber as their pick for post-quantum key agreement. Now the baton has been handed to Industry to deploy post-quantum key agreement to protect today’s communications from the threat of future decryption by a quantum computer.

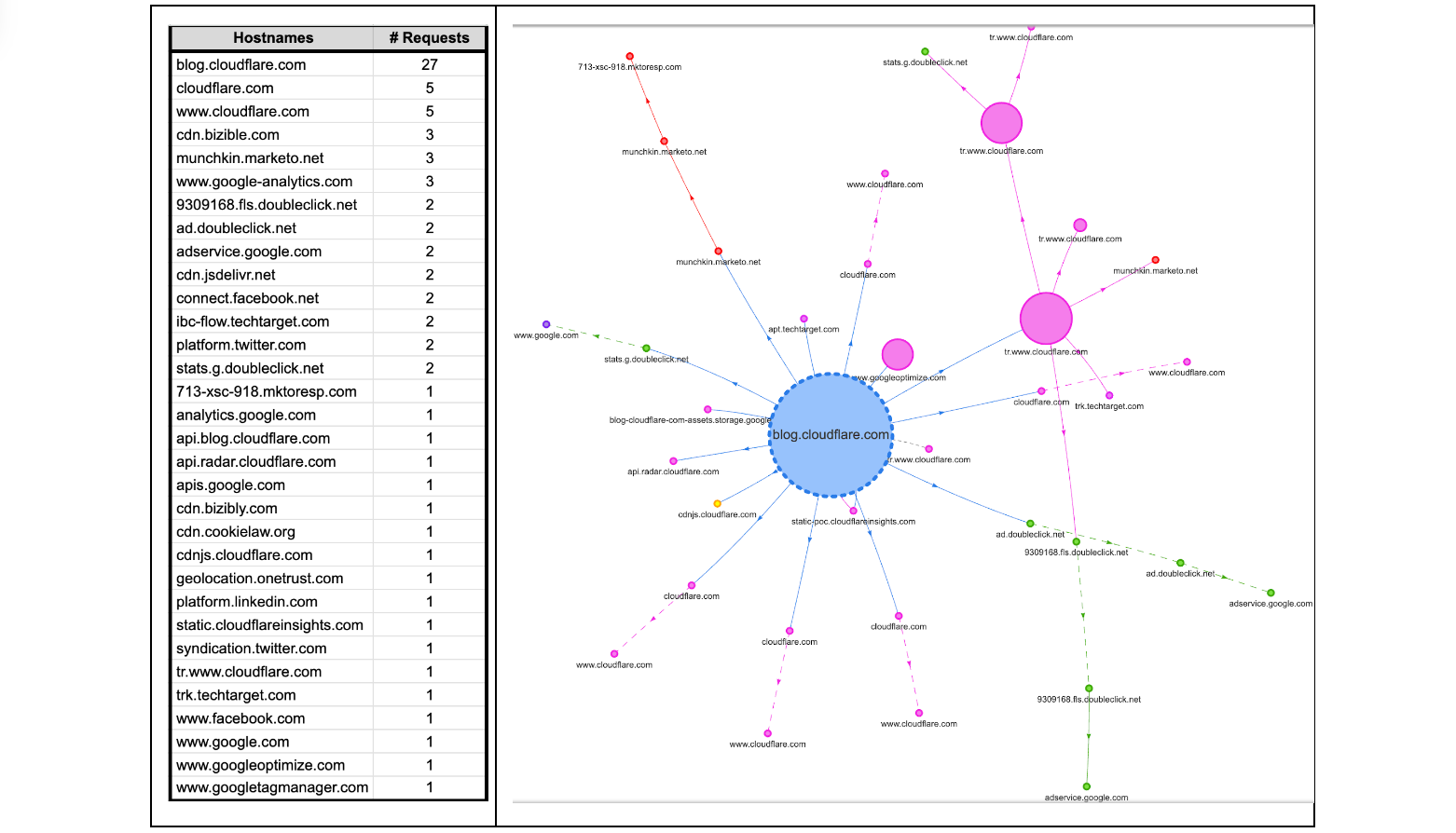

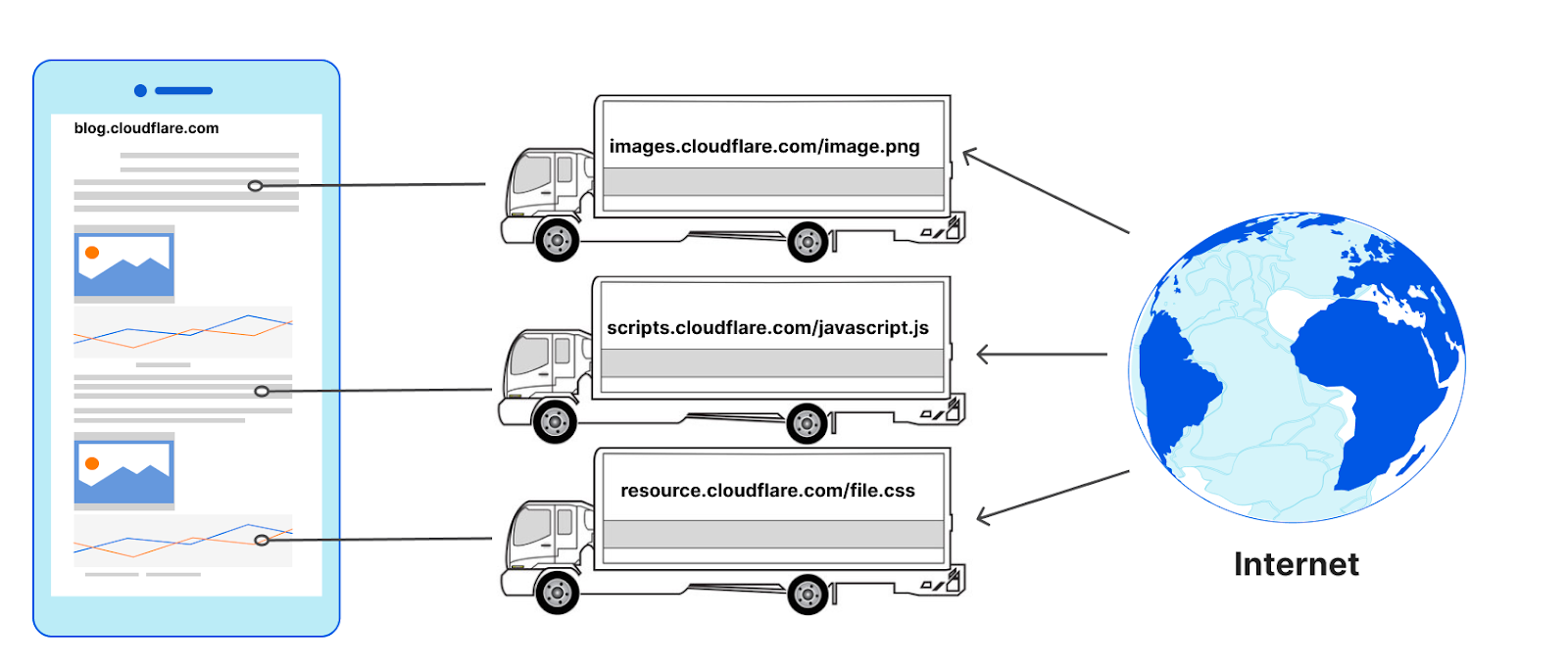

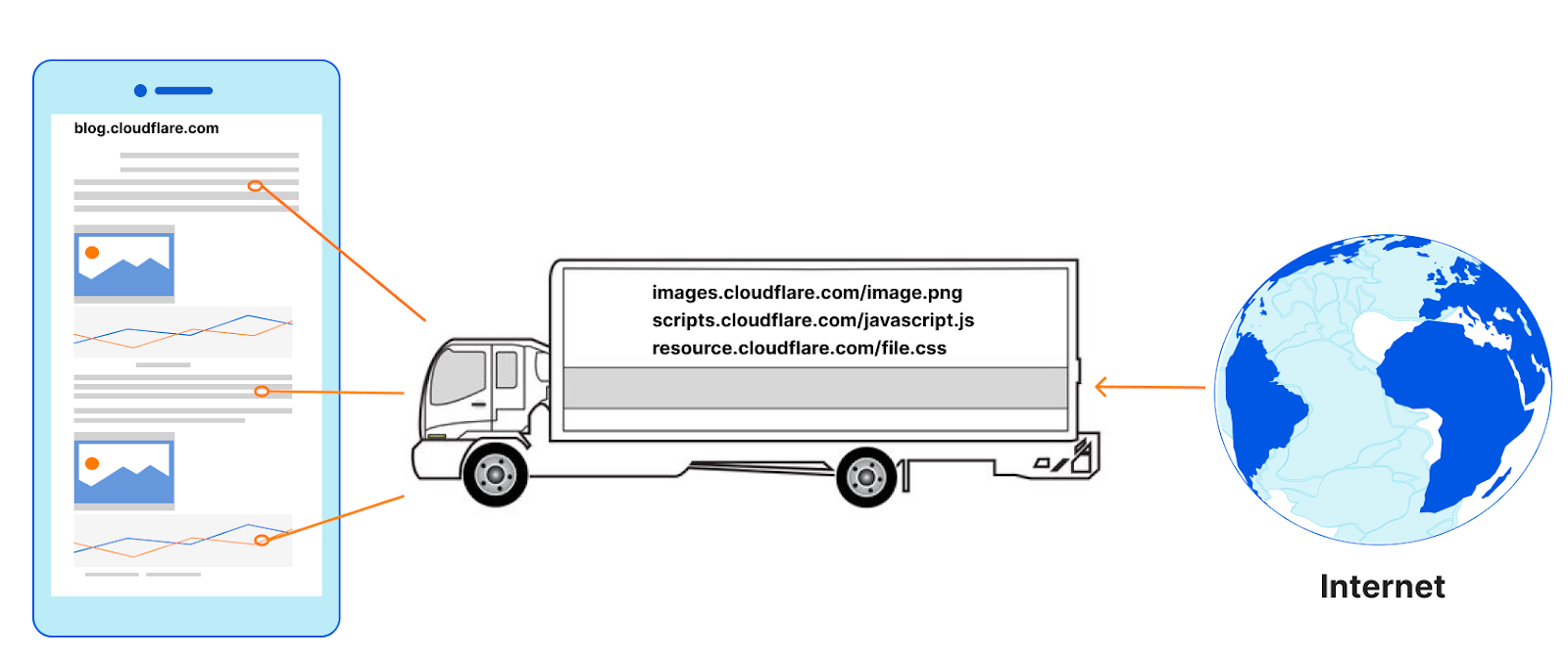

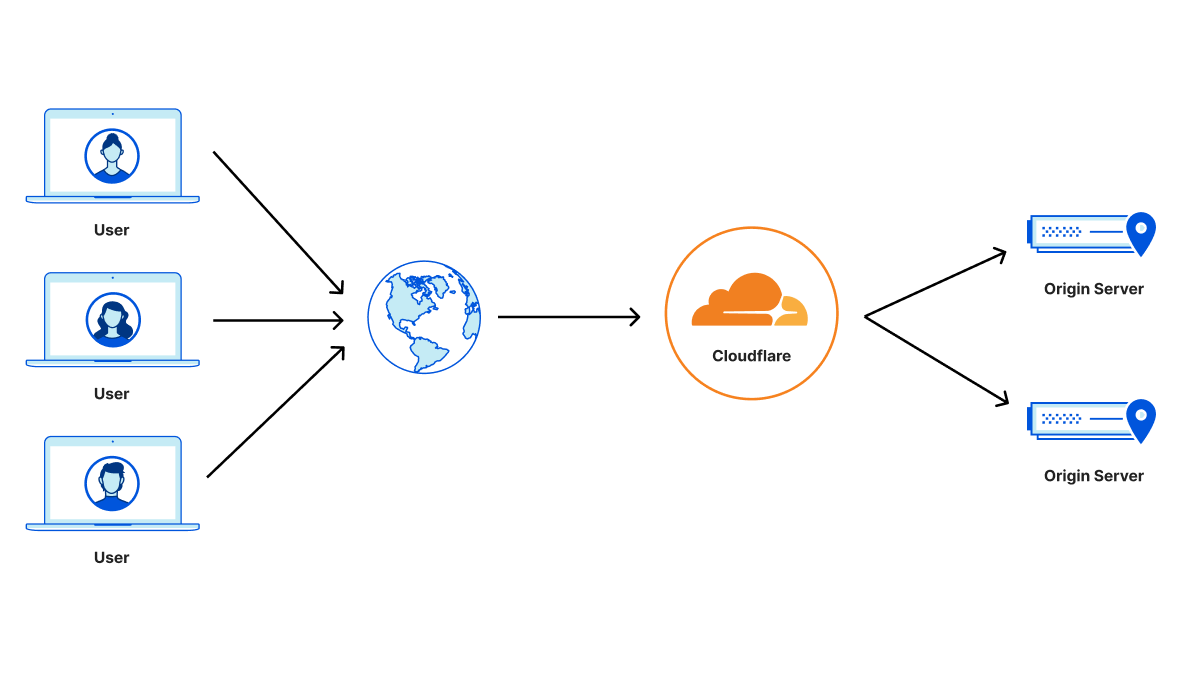

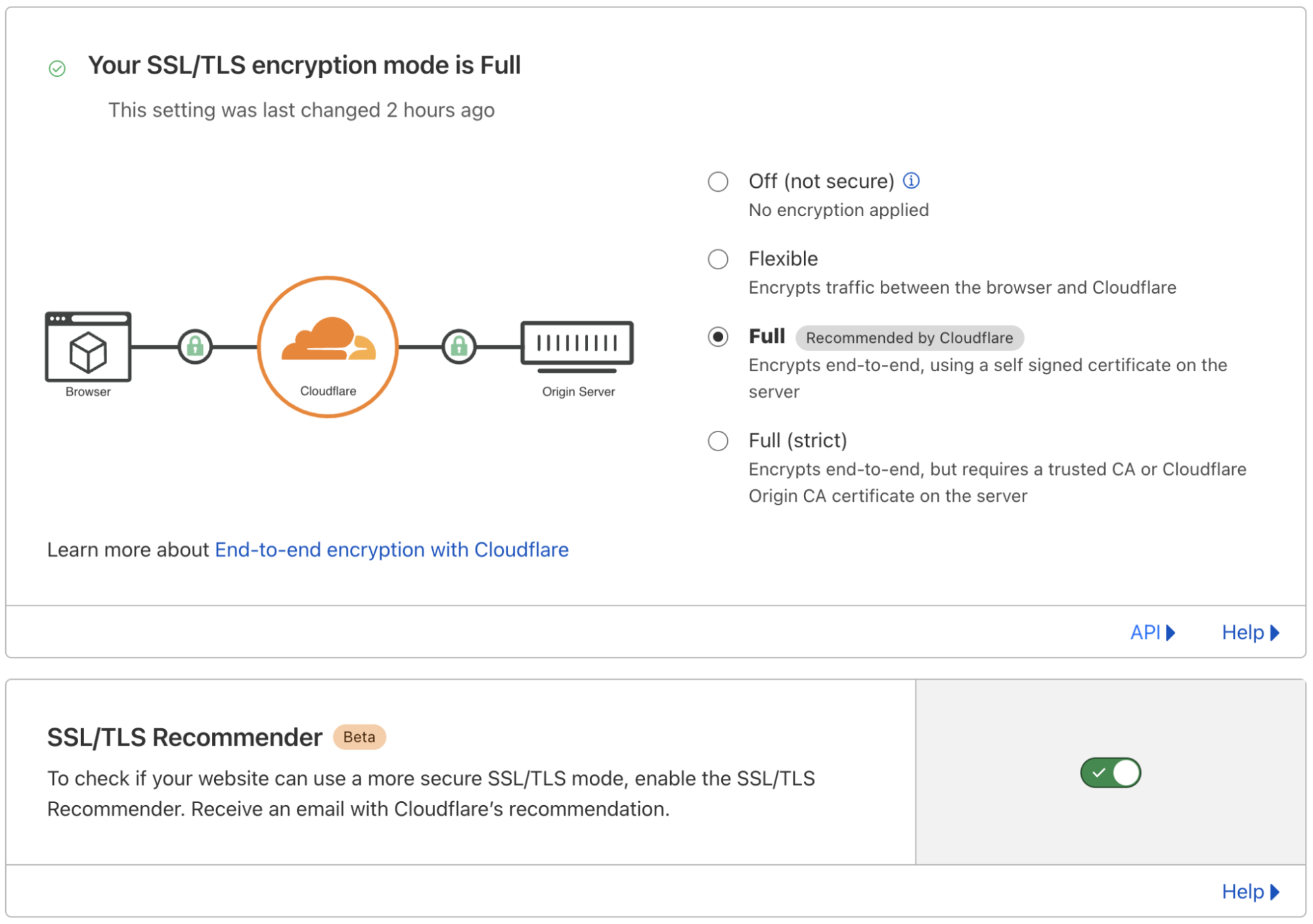

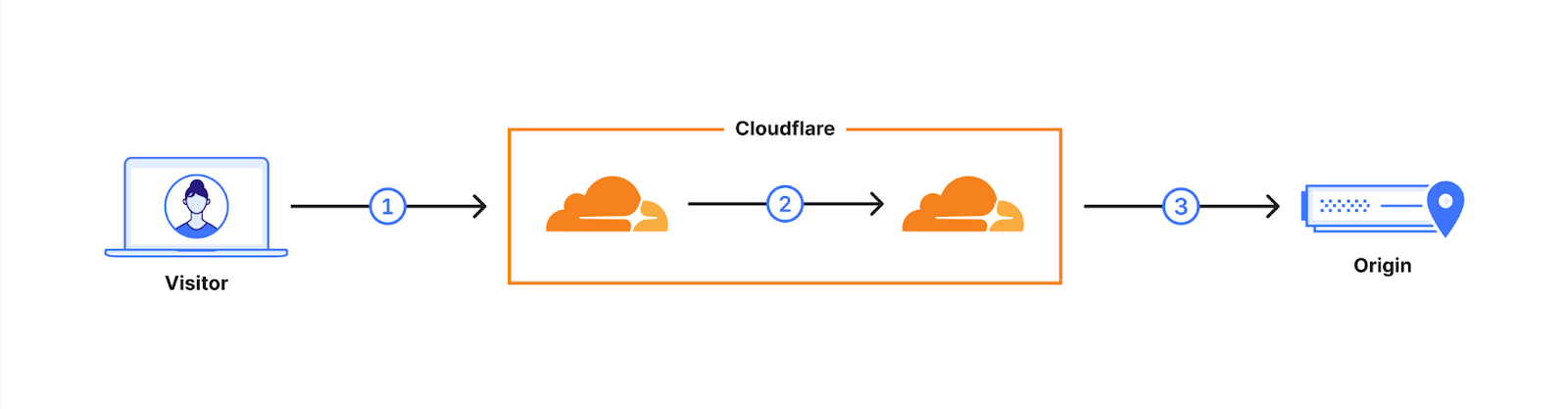

Cloudflare operates as a reverse proxy between clients (“visitors”) and customers’ web servers (“origins”), so that we can protect origin sites from attacks and improve site performance. In this post we explain how we secure the connection from Cloudflare to origin servers. To put that in context, let’s have a look at the connection involved when visiting an uncached page on a website served through Cloudflare.

The first connection is from the visitor’s browser to Cloudflare. In October 2022, we enabled X25519+Kyber as a beta for all websites and APIs served through Cloudflare. However, it takes two to tango: the connection is only secured if the browser also supports post-quantum cryptography. As of August 2023, Chrome is slowly enabling X25519+Kyber by default.

The visitor’s request is routed through Cloudflare’s network (2). We have upgraded many of these internal connections to use post-quantum cryptography, and expect to be done upgrading all of our internal connections by the end of 2024. That leaves as the final link the connection (3) between us and the origin server.

We are happy to announce that we are rolling out support for X25519+Kyber for most outbound connections, including origin servers and Cloudflare Workers fetch() calls.

| Plan | Support for post-quantum outbound connections |

|---|---|

| Free | Started roll-out. Aiming for 100% by the end of the October. |

| Pro and Business | Started roll-out. Aiming for 100% by the end of year. |

| Enterprise | Start roll-out February 2024. 100% by March 2024. |

You can skip the roll-out and opt-in your zone today, or opt-out ahead of time, using an API described below. Before rolling out this support for enterprise customers in February 2024, we will add a toggle on the dashboard to opt out.

In this post we will dive into the nitty-gritty of what we enabled; how we have to be a bit subtle to prevent breaking connections to origins that are not ready yet, and how you can add support to your (origin) server.

But before we dive in, for the impatient:

Quick start

To enable a post-quantum connection between Cloudflare and your origin server today, opt-in your zone to skip the gradual roll-out:

curl --request PUT \

--url https://api.cloudflare.com/client/v4/zones/(zone_id)/cache/origin_post_quantum_encryption \

--header 'Content-Type: application/json' \

--header 'Authorization: Bearer (API token)' \

--data '{"value": "preferred"}'

Then, make sure your server supports TLS 1.3; enable and prefer the key agreement X25519Kyber768Draft00; and ensure it’s configured with server cipher preference. For example, to configure nginx (compiled with a recent BoringSSL) like this, use

ssl_ecdh_curve X25519Kyber768Draft00:X25519;

ssl_prefer_server_ciphers on;

ssl_protocols TLSv1.3;

Replace (zone_id) and (API token) appropriately. Then, make sure your server supports TLS 1.3; enable and prefer the key agreement X25519Kyber768Draft00; and ensure it’s configured with server cipher preference. For example, to configure nginx (compiled with a recent BoringSSL) like this, use

ssl_ecdh_curve X25519Kyber768Draft00:X25519;

ssl_prefer_server_ciphers on;

ssl_protocols TLSv1.3;

To check your server is properly configured, you can use the bssl tool of BoringSSL:

$ bssl client -connect (your server):443 -curves X25519:X25519Kyber768Draft00

[...]

ECDHE curve: X25519Kyber768Draft00

[...]

We’re looking for X25519Kyber768Draft00 for a post-quantum connection as shown above instead of merely X25519.

For more client and server support, check out pq.cloudflareresearch.com. Now, let’s dive in.

Overview of a TLS 1.3 handshake

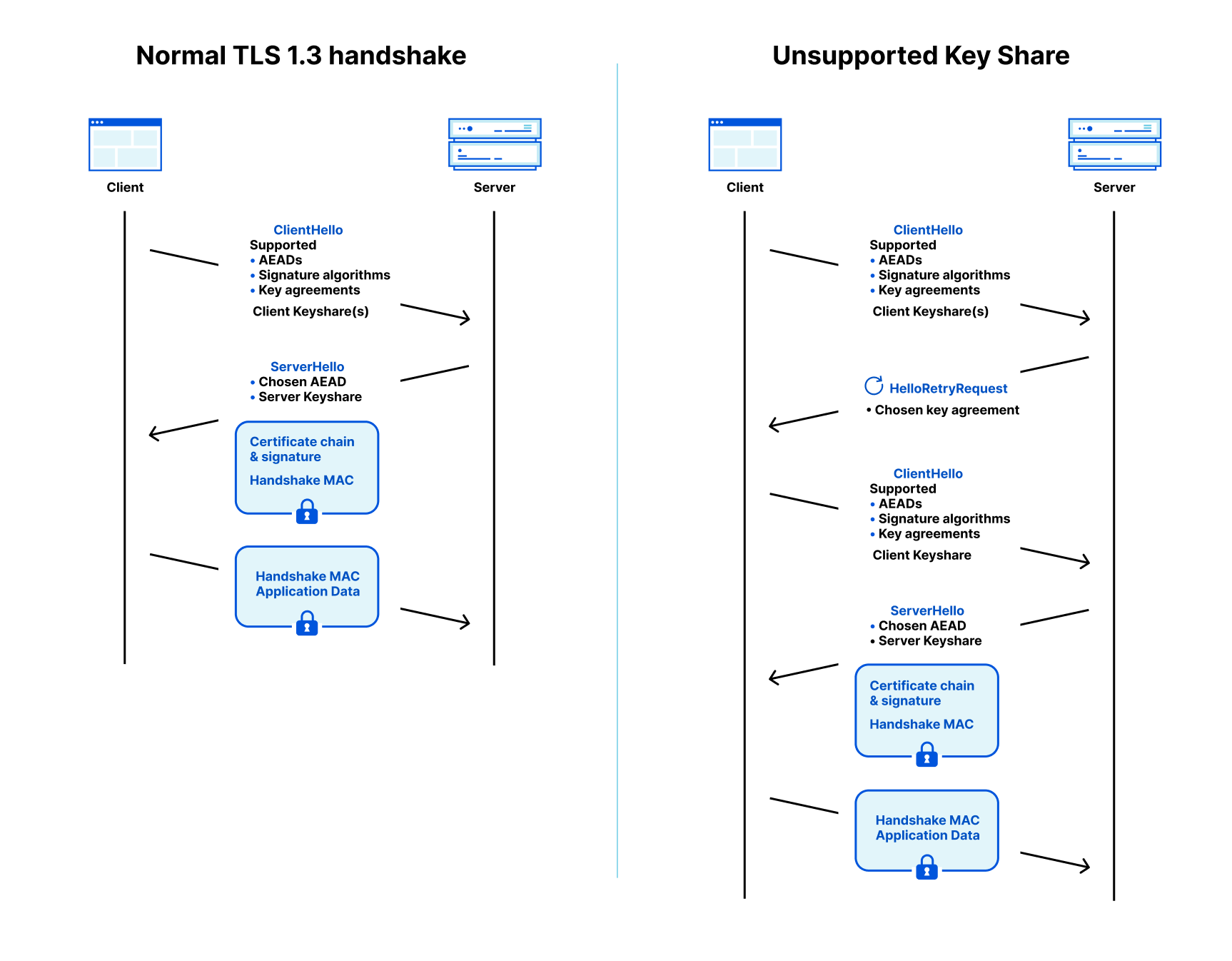

To understand how a smooth upgrade is possible, and where it might go wrong, we need to understand a few basics of the TLS 1.3 protocol, which is used to protect traffic on the Internet. A TLS connection starts with a handshake which is used to authenticate the server and derive a shared key. The browser (client) starts by sending a ClientHello message that contains among other things, the hostname (SNI) and the list of key agreement methods it supports.

To remove a round trip, the client is allowed to make a guess of what the server supports and start the key agreement by sending one or more client keyshares. That guess might be correct (on the left in the diagram below) or the client has to retry (on the right). By the way, this guessing of keyshares is a new feature of TLS 1.3, and it is the main reason why it’s faster than TLS 1.2.

In both cases the client sends a client keyshare to the server. From this client keyshare the server generates the shared key. The server then returns a server keyshare with which the client can also compute the shared key. This shared key is used to protect the rest of the connection using symmetric cryptography, such as AES.

Today X25519 is used as the key agreement in the vast majority of connections. To secure the connection against store-now/decrypt-later in the post-quantum world, a client can simply send a X25519+Kyber keyshare.

Hello! Retry Request? (HRR)

What we just described is the happy flow, where the client guessed correctly which key agreement the server supports. If the server does not support the keyshare that the client sent, then the server picks one of the supported key agreements that the client advertised, and asks for it in a HelloRetryRequest message.

This is not the only case where a server can use a HelloRetryRequest: even if the client sent keyshares that the server supports, the server is allowed to prefer a different key agreement the client advertised, and ask for it with a HelloRetryRequest. This will turn out to be very useful.

HelloRetryRequests are mostly undesirable: they add an extra round trip, and bring us back to the performance of TLS 1.2. We already had a transition of key agreement methods: back in the day P-256 was the de facto standard. When browsers couldn’t assume support for the newer X25519, some would send two keyshares, both X25519 and P-256 to prevent a HelloRetryRequest.

Also today, when enabling Kyber in Chrome, Chrome will send two keyshares: X25519 and X25519+Kyber to prevent a HelloRetryRequest. Sending two keyshares is not ideal: it requires the client to compute more, and it takes more space on the wire. This becomes more problematic when we want to send two post-quantum keyshares, as post-quantum keyshares are much larger. Talking about post-quantum keyshares, let’s have a look at X25519+Kyber.

The nitty-gritty of X25519+Kyber

The full name of the post-quantum key agreement we have enabled is X25519Kyber768Draft00, which has become the industry standard for early deployment. It is the combination (a so-called hybrid, more about that later) of two key agreements: X25519 and a preliminary version of NIST’s pick Kyber. Preliminary, because standardization of Kyber is not complete: NIST has released a draft standard for which it has requested public input. The final standard might change a little, but we do not expect any radical changes in security or performance. One notable change is the name: the NIST standard is set to be called ML-KEM. Once ML-KEM is released in 2024, we will promptly adopt support for the corresponding hybrid, and deprecate support for X25519Kyber768Draft00. We will announce deprecation on this blog and pq.cloudflareresearch.com.

Picking security level: 512 vs 768

Back in 2022, for incoming connections, we enabled hybrids with both Kyber512 and Kyber768. The difference is target security level: Kyber512 aims for the same security as AES-128, whereas Kyber768 matches up with AES-192. Contrary to popular belief, AES-128 is not broken in practice by quantum computers.

So why go with Kyber768? After years of analysis, there is no indication that Kyber512 fails to live up to its target security level. The designers of Kyber feel more comfortable, though, with the wider security margin of Kyber768, and we follow their advice.

Hybrid

It is not inconceivable though, that an unexpected improvement in cryptanalysis will completely break Kyber768. Notably Rainbow, GeMMS and SIDH survived several rounds of public review before being broken. We do have to add nuance here. For a big break you need some mathematical trick, and compared to other schemes, SIDH had a lot of mathematical attack surface. Secondly, even though a scheme participated in many rounds of review doesn’t mean it saw a lot of attention. Because of their performance characteristics, these three schemes have more niche applications, and therefore received much less scrutiny from cryptanalysts. In contrast, Kyber is the big prize: breaking it will ensure fame.

Notwithstanding, for the moment, we feel it’s wiser to stick with hybrid key agreement. We combine Kyber together with X25519, which is currently the de facto standard key agreement, so that if Kyber turns out to be broken, we retain the non-post quantum security of X25519.

Performance

Kyber is fast. Very fast. It easily beats X25519, which is already known for its speed:

| Size keyshares(in bytes) | Ops/sec (higher is better) | ||||

|---|---|---|---|---|---|

| Algorithm | PQ | Client | Server | Client | Server |

| X25519 | ❌ | 32 | 32 | 17,000 | 17,000 |

| Kyber768 | ✅ | 1,184 | 1,088 | 31,000 | 70,000 |

| X25519Kyber768Draft00 | ✅ | 1,216 | 1,120 | 11,000 | 14,000 |

Combined X25519Kyber768Draft00 is slower than X25519, but not by much. The big difference is its size: when connecting the client has to send 1,184 extra bytes for Kyber in the first message. That brings us to the next topic.

When things break, and how to move forward

Split ClientHello

As we saw, the ClientHello is the first message that is sent by the client when setting up a TLS connection. With X25519, the ClientHello almost always fits within one network packet. With Kyber, the ClientHello doesn’t fit anymore with typical packet sizes and needs to be split over two network packets.

The TLS standard allows for the ClientHello to be split in this way. However, it used to be so exceedingly rare to see a split ClientHello that there is plenty of software and hardware out there that falsely assumes it never happens.

This so-called protocol ossification is the major challenge rolling out post-quantum key agreement. Back in 2019, during earlier post-quantum experiments, middleboxes of a particular vendor dropped connections with a split ClientHello. Chrome is currently slowly ramping up the number of post-quantum connections to catch these issues early. Several reports are listed here, and luckily most vendors seem to fix issues promptly.

Over time, with the slow ramp up of browsers, many of these implementation bugs will be found and corrected. However, we cannot completely rely on this for our outbound connections since in many cases Cloudflare is the sole client to connect directly to the origin server. Thus, we must exercise caution when deploying post-quantum cryptography to ensure that we are still able to reach origin servers even in the presence of buggy implementations.

HelloRetryRequest to the rescue

To enable support for post-quantum key agreement on all outbound connections, without risking issues with split ClientHello for those servers that are not ready yet, we make clever use of HelloRetryRequest. Instead of sending a X25519+Kyber keyshare, we will only advertise support for it, and send a non-post quantum secure X25519 keyshare in the first ClientHello.

If the origin does not support X25519+Kyber, then nothing changes. One might wonder: could merely advertising support for it trip up any origins? This used to be a real concern in the past, but luckily browsers have adopted a clever mechanism called GREASE: they will send codepoints selected from unpredictable regions to make it hard to implement any software that could trip up on unknown codepoints.

If the origin does support X25519+Kyber, then it can use the HelloRetryRequest to request a post-quantum key agreement from us.

Things might still break then: for instance a malfunctioning middlebox, load-balancer, or the server software itself might still trip over the large ClientHello with X25519+Kyber sent in response to the HelloRetryRequest.

If we’re frank, the HRR trick kicks the can down the road: we as an industry will need to fix broken hardware and software before we can enable post-quantum on every last connection. The important thing though is that those past mistakes will not hold us back from securing the majority of connections. Luckily, from our experience, breakage will not be common.

So, when you have flipped the switch on your origin server, and things do break against expectation, what could be the root cause?

Debugging and examples

It’s impossible to exhaustively list all bugs that could interfere with the post-quantum connection, but we like to share a few we’ve seen.

The first step is to figure out what pieces of hardware and software are involved in the connection. Rarely it’s just the server: there could be a load-balancer, and even a humble router could be at fault.

One straightforward mistake is to conveniently assume the ClientHello is small by reserving only a small, say 1000 byte, buffer.

A variation of this is where a server uses a single call to recv() to read the ClientHello from the TCP connection. This works perfectly fine if it fits within one packet, but when split over multiple, it might require more calls.

Not all issues that we encountered relate directly to split ClientHello. For instance, servers using the Rust TLS library rustls did not implement HelloRetryRequest correctly before 0.21.7.

If you turned on post-quantum support for your origin, and hit issues, please do reach out: email [email protected].

Opting in and opting out

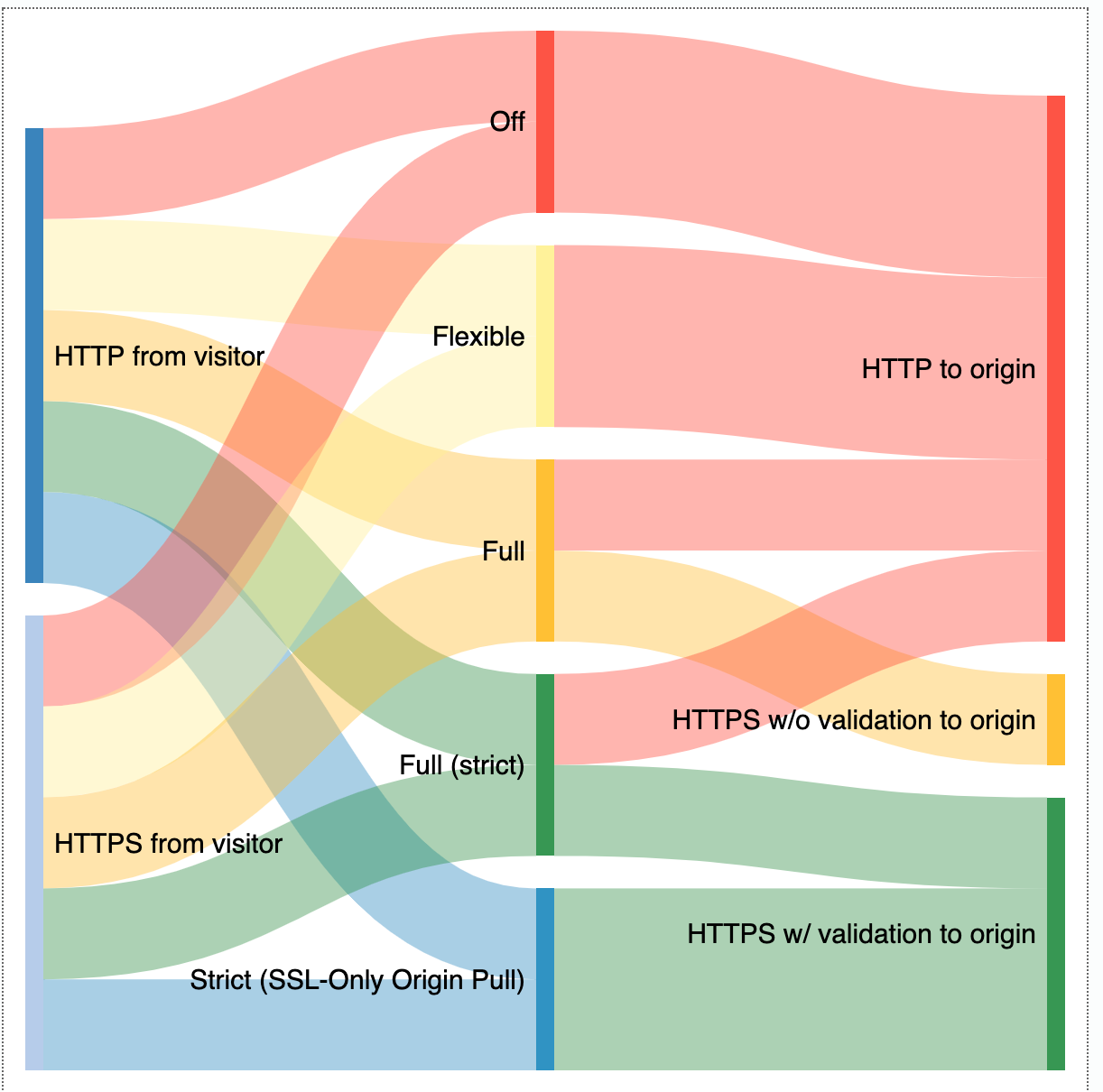

Now that you know what might lie in wait for you, let’s cover how to configure the outbound connections of your zone. There are three settings. The setting affects all outbound connections for your zone: to the origin server, but also for fetch() requests made by Workers on your zone.

| Setting | Meaning |

|---|---|

| supported | Advertise support for post-quantum key agreement, but send a classical keyshare in the first ClientHello.When the origin supports and prefers X25519+Kyber, a post-quantum connection will be established, but it incurs an extra roundtrip.This is the most compatible way to enable post-quantum. |

| preferred | Send a post-quantum keyshare in the first ClientHello.When the origin supports X25519+Kyber, a post-quantum connection will be established without an extra roundtrip.This is the most performant way to enable post-quantum. |

| off | Do not send or advertise support for post-quantum key agreement to the origin. |

| (default) | Allow us to determine the best behavior for your zone. (More about that later.) |

The setting can be adjusted using the following API call:

curl --request PUT \

--url https://api.cloudflare.com/client/v4/zones/(zone_id)/cache/origin_post_quantum_encryption \

--header 'Content-Type: application/json' \

--header 'Authorization: Bearer (API token)' \

--data '{"value": "(setting)"}'

Here, the parameters are as follows.

| Parameter | Value |

|---|---|

| setting | supported, preferred, or off, with meaning as described above |

| zone_id | Identifier of the zone to control. You can look up the zone_id in the dashboard. |

| API token | Token used to authenticate you. You can create one in the dashboard. Use create custom token and under permissions select zone → zone settings → edit. |

Testing whether your origin server is configured correctly

If you set your zone to preferred mode, you only need to check support for the proper post-quantum key agreement with your origin server. This can be done with the bssl tool of BoringSSL:

$ bssl client -connect (your server):443 -curves X25519:X25519Kyber768Draft00

[...]

ECDHE curve: X25519Kyber768Draft00

[...]

If you set your zone to supported mode, or if you wait for the gradual roll-out, you will need to make sure that your origin server prefers post-quantum key agreement even if we sent a classical keyshare in the initial ClientHello. This can be done with our fork of BoringSSL:

$ git clone https://github.com/cloudflare/boringssl-pq

[...]

$ cd boringssl-pq && cmake -B build && make -C build

$ build/bssl client -connect (your server):443 -curves X25519:X25519Kyber768Draft00 -disable-second-keyshare

[...]

ECDHE curve: X25519Kyber768Draft00

[...]

Scanning ahead to remove the extra roundtrip

With the HelloRetryRequest trick today, we can safely advertise support for post-quantum key agreement to all origins. The downside is that for those origins that do support post-quantum key agreement, we’re incurring an extra roundtrip for the HelloRetryRequest, which hurts performance.

You can remove the roundtrip by configuring your zone as preferred, but we can do better: the best setting is the one you shouldn’t have to touch.

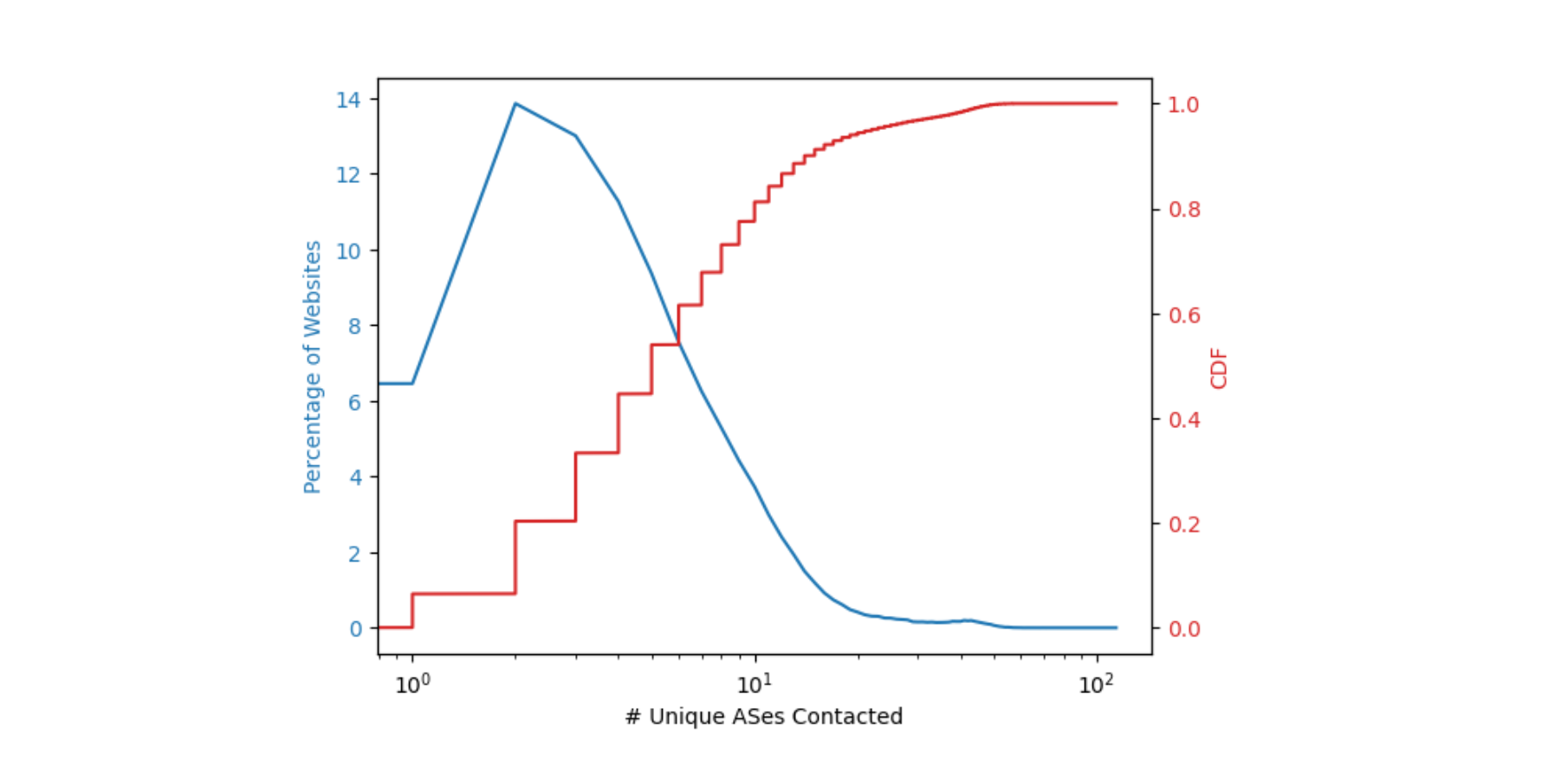

We have started scanning all active origins for support of post-quantum key agreement. Roughly every 24 hours, we will attempt a series of about ten TLS connections to your origin, to test support and preferences for the various key agreements.

Our preliminary results show that 0.5% of origins support a post-quantum connection. As expected, we found that a small fraction (<0.34%) of all origins do not properly establish a connection, when we send a post-quantum keyshare in the first ClientHello, which corresponds to the preferred setting. Unexpectedly the vast majority of these origins do return a HelloRetryRequest, but fail after receiving the second ClientHello with a classical keyshare. We are investigating the exact causes of these failures, and will reach out to vendors to help resolve them.

Later this year, we will start using these scan results to determine the best setting for zones that haven’t been configured yet. That means that for those zones whose origins support it reliably, we will send a post-quantum keyshare directly without extra roundtrip.

Also speeding up non post-quantum origins

The scanner pipeline we built will not just benefit post-quantum origins. By default we send X25519, but not every origin supports or prefers X25519. We find that 4% of origin servers will send us a HelloRetryRequest for other key agreements such as P-384.

| Key agreement | Fraction supported | Fraction preferred |

|---|---|---|

| X25519 | 96% | 96% |

| P-256 | 97% | 0.6% |

| P-384 | 89% | 2.3% |

| P-521 | 82% | 0.1% |

| X25519Kyber768Draft00 | 0.5% | 0.5% |

Also, later this year, we will use these scan results to directly send the most preferred keyshare to your origin removing the need for an extra roundtrip caused by HRR.

Wrapping up

To mitigate the store-now/decrypt-later threat, and ensure the Internet stays encrypted, the IT industry needs to work together to roll out post-quantum cryptography. We’re excited that today we’re rolling out support for post-quantum secure outbound connections: connections between Cloudflare and the origins.

We would love it if you would try and enable post-quantum key agreement on your origin. Please, do share your experiences, or reach out for any questions: [email protected].

To follow the latest developments of our deployment of post-quantum cryptography, and client/server support, check out pq.cloudflareresearch.com and keep an eye on this blog.