Post Syndicated from Luke Valenta original https://blog.cloudflare.com/privacy-preserving-compromised-credential-checking/

Today we’re announcing a public demo and an open-sourced Go implementation of a next-generation, privacy-preserving compromised credential checking protocol called MIGP (“Might I Get Pwned”, a nod to Troy Hunt’s “Have I Been Pwned”). Compromised credential checking services are used to alert users when their credentials might have been exposed in data breaches. Critically, the ‘privacy-preserving’ property of the MIGP protocol means that clients can check for leaked credentials without leaking any information to the service about the queried password, and only a small amount of information about the queried username. Thus, not only can the service inform you when one of your usernames and passwords may have become compromised, but it does so without exposing any unnecessary information, keeping credential checking from becoming a vulnerability itself. The ‘next-generation’ property comes from the fact that MIGP advances upon the current state of the art in credential checking services by allowing clients to not only check if their exact password is present in a data breach, but to check if similar passwords have been exposed as well.

For example, suppose your password last year was amazon20$, and you change your password each year (so your current password is amazon21$). If last year’s password was leaked, MIGP could tell you that your current password is weak and guessable, since it is a simple variant of the leaked password.

The MIGP protocol was designed by researchers at Cornell Tech and the University of Wisconsin-Madison, and we encourage you to read the paper for more details. In this blog post, we provide motivation for why compromised credential checking is important for security hygiene, and how the MIGP protocol improves upon the current generation of credential checking services. We then describe our implementation and the deployment of MIGP within Cloudflare’s infrastructure.

Our MIGP demo and public API are not meant to replace existing credential checking services today, but rather demonstrate what is possible in the space. We aim to push the envelope in terms of privacy and are excited to employ some cutting-edge cryptographic primitives along the way.

The threat of data breaches

Data breaches are rampant. The regularity of news articles detailing how tens or hundreds of millions of customer records have been compromised has made us almost numb to the details. Perhaps we all hope to stay safe just by being a small fish in the middle of a very large school of similar fish that is being preyed upon. But we can do better than just hope that our particular authentication credentials are safe. We can actually check those credentials against known databases of the very same compromised user information we learn about from the news.

Many of the security breaches we read about involve leaked databases containing user details. In the worst cases, user data entered during account registration on a particular website is made available (often offered for sale) after a data breach. Think of the addresses, password hints, credit card numbers, and other private details you have submitted via an online form. We rely on the care taken by the online services in question to protect those details. On top of this, consider that the same (or quite similar) usernames and passwords are commonly used on more than one site. Our information across all of those sites may be as vulnerable as the site with the weakest security practices. Attackers take advantage of this fact to actively compromise accounts and exploit users every day.

Credential stuffing is an attack in which malicious parties use leaked credentials from an account on one service to attempt to log in to a variety of other services. These attacks are effective because of the prevalence of reused credentials across services and domains. After all, who hasn’t at some point had a favorite password they used for everything? (Quick plug: please use a password manager like LastPass to generate unique and complex passwords for each service you use.)

Website operators have (or should have) a vested interest in making sure that users of their service are using secure and non-compromised credentials. Given the sophistication of techniques employed by malevolent actors, the standard requirement to “include uppercase, lowercase, digit, and special characters” really is not enough (and can be actively harmful according to NIST’s latest guidance). We need to offer better options to users that keep them safe and preserve the privacy of vulnerable information. Dealing with account compromise and recovery is an expensive process for all parties involved.

Users and organizations need a way to know if their credentials have been compromised, but how can they do it? One approach is to scour dark web forums for data breach torrent links, download and parse gigabytes or terabytes of archives on a laptop, and then search the dataset for the credentials in question. This approach is not workable for the majority of Internet users and website operators, but fortunately there’s a better way: have someone with terabytes to spare do it for you!

Making compromise checking fast and easy

This is exactly what compromised credential checking services do: they aggregate breach datasets and make it possible for a client to determine whether a username and password are present in the breached data. Have I Been Pwned (HIBP), launched by Troy Hunt in 2013, was the first major public breach alerting site. It provides a service, Pwned Passwords, where users can efficiently check if their passwords have been compromised. The initial version of Pwned Passwords required users to send the full password hash to the service to check if it appears in a data breach. In a 2018 collaboration with Cloudflare, the service was upgraded to allow users to run range queries over the password dataset, leaking only a prefix of the password hash rather than the entire hash. Cloudflare continues to support the HIBP project by providing CDN and security support for organizations to download the raw Pwned Passwords datasets.

The HIBP approach was replicated by Google Password Checkup (GPC) in 2019, with the primary difference that GPC alerts are based on username-password pairs instead of passwords alone, which limits the rate of false positives. Enzoic and Microsoft Password Monitor are two other similar services. This year, Cloudflare also released Exposed Credential Checks as part of our Web Application Firewall (WAF) to help inform opted-in website owners when login attempts to their sites use compromised credentials. In fact, we use MIGP on the backend for this service to ensure that plaintext credentials never leave the edge server on which they are being processed.

Most standalone credential checking services work by having a user submit a query containing a hash prefix of their password or username-password pair. However, this leaks some information to the service, which could be problematic if the service turns out to be malicious or is compromised. In a collaboration with researchers at Cornell Tech published at CCS’19, we showed just how damaging this leaked information can be. Malevolent actors with access to the data shared with most credential checking services can drastically improve the effectiveness of password-guessing attacks. This left open the question: how can you do compromised credential checking without sharing (leaking!) vulnerable credentials to the service provider itself?

What does a privacy-preserving credential checking service look like?

In the aforementioned CCS’19 paper, we proposed an alternative system in which only the hash prefix of the username is exposed to the MIGP server (independent work out of Google and Stanford proposed a similar system). No information about the password leaves the user device, alleviating the risk of password-guessing attacks. These credential checking services help to preserve password secrecy, but still have a limitation: they can only alert users if the exact queried password appears in the breach.

The present evolution of this work, Might I Get Pwned (MIGP), proposes a next-generation similarity-aware compromised credential checking service that supports checking if a password similar to the one queried has been exposed in the data breach. This approach supports the detection of credential tweaking attacks, an advanced version of credential stuffing.

Credential tweaking takes advantage of the fact that many users, when forced to change their password, use simple variants of their original password. Rather than just attempting to log in using an exact leaked password, say ‘password123’, a credential tweaking attacker might also attempt to log in with easily-predictable variants of the password such as ‘password124’ and ‘password123!’.

There are two main mechanisms described in the MIGP paper to add password variant support: client-side generation and server-side precomputation. With client-side generation, the client simply applies a series of transform rules to the password to derive the set of variants (e.g., truncating the last letter or adding a ‘!’ at the end), and runs multiple queries to the MIGP service with each username and password variant pair. The second approach is server-side precomputation, where the server applies the transform rules to generate the password variants when encrypting the dataset, essentially treating the password variants as additional entries in the breach dataset. The MIGP paper describes tradeoffs between the two approaches and techniques for generating variants in more detail. Our demo service includes variant support via server-side precomputation.
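To make client-side generation concrete, here is a minimal Go sketch of a variant generator. The three transform rules (drop the last character, append ‘!’, increment a trailing digit) are illustrative assumptions based on the examples in this post; they are not the rule set from the MIGP paper, and the function name `variants` is hypothetical.

```go
package main

import "fmt"

// variants applies three illustrative transform rules to pw and returns the
// distinct, non-empty results that differ from pw itself.
func variants(pw string) []string {
	r := []rune(pw)
	if len(r) == 0 {
		return nil
	}
	last := r[len(r)-1]
	prefix := string(r[:len(r)-1])

	candidates := []string{
		prefix,   // rule 1: drop the last character
		pw + "!", // rule 2: append '!'
	}
	if last >= '0' && last <= '8' {
		// rule 3: increment a trailing digit (9 is skipped to avoid carrying)
		candidates = append(candidates, prefix+string(last+1))
	}

	// Deduplicate, and drop the original password and the empty string.
	seen := map[string]bool{pw: true, "": true}
	var out []string
	for _, c := range candidates {
		if !seen[c] {
			seen[c] = true
			out = append(out, c)
		}
	}
	return out
}

func main() {
	// With client-side generation, each variant would be sent as its own
	// (username, variant) query to the service.
	for _, v := range variants("password123") {
		fmt.Println(v)
	}
}
```

With server-side precomputation, the same kind of function would instead run once per breach entry while the dataset is being encrypted.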

Breach extraction attacks and countermeasures

One challenge for credential checking services is breach extraction attacks, in which an adversary attempts to learn username-password pairs that are present in the breach dataset (which might not be publicly available) so that they can attempt to use them in future credential stuffing or tweaking attacks. Similarity-aware credential checking services like MIGP can make these attacks more effective, since adversaries can potentially check for more breached credentials per API query. Fortunately, additional measures can be incorporated into the protocol to help counteract these attacks. For example, if it is problematic to leak the number of ciphertexts in a given bucket, dummy entries and padding can be employed, or an alternative length-hiding bucket format can be used. Slow hashing and API rate limiting are other common countermeasures that credential checking services can deploy to slow down breach extraction attacks. For instance, our demo service applies the memory-hard slow hash algorithm scrypt to credentials as part of the key derivation function to slow down these attacks.
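As a rough illustration of the dummy-entry idea, the sketch below pads a bucket with random entries until its length is a multiple of a block size, so that only a coarse size is revealed. It assumes fixed-length ciphertexts that look like random bytes to anyone without the matching key; the function name `padBucket` and its parameters are hypothetical and not taken from the MIGP library.

```go
package main

import (
	"crypto/rand"
	"errors"
	"fmt"
)

// padBucket returns a copy of bucket extended with random dummy entries so
// that its length is a non-zero multiple of blockSize. Each dummy entry is
// entryLen random bytes (assumption: real entries have this length too).
func padBucket(bucket [][]byte, blockSize, entryLen int) ([][]byte, error) {
	if blockSize <= 0 || entryLen <= 0 {
		return nil, errors.New("blockSize and entryLen must be positive")
	}
	// Round the bucket length up to the next multiple of blockSize.
	target := ((len(bucket) + blockSize - 1) / blockSize) * blockSize
	if target == 0 {
		target = blockSize // never publish an empty bucket
	}
	padded := append([][]byte(nil), bucket...)
	for len(padded) < target {
		dummy := make([]byte, entryLen)
		if _, err := rand.Read(dummy); err != nil {
			return nil, err
		}
		padded = append(padded, dummy)
	}
	return padded, nil
}

func main() {
	bucket := [][]byte{[]byte("aaaa"), []byte("bbbb"), []byte("cccc")}
	padded, err := padBucket(bucket, 8, 4)
	if err != nil {
		panic(err)
	}
	fmt.Println(len(bucket), "entries padded to", len(padded))
}
```

Larger block sizes hide more about the true bucket size, at the cost of extra storage and download bandwidth.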

Let’s now get into the nitty-gritty of how the MIGP protocol works. For readers not interested in the cryptographic details, feel free to skip to the demo below!

MIGP protocol

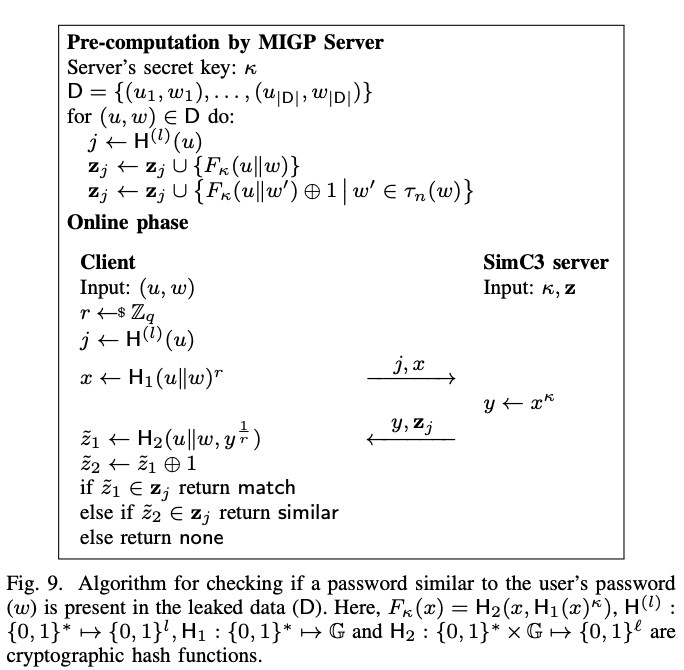

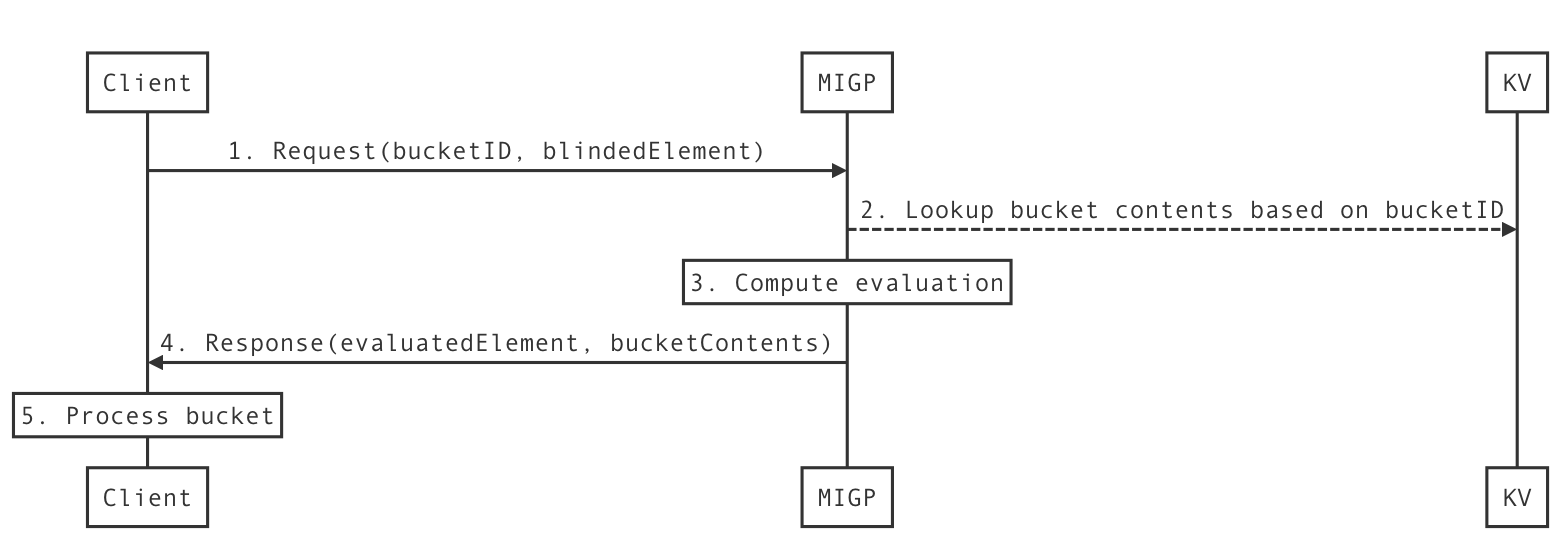

There are two parties involved in the MIGP protocol: the client and the server. The server has access to a dataset of plaintext breach entries (username-password pairs), and a secret key used for both the precomputation and the online portions of the protocol. In brief, the client performs some computation over the username and password and sends the result to the server; the server then returns a response that allows the client to determine if their password (or a similar password) is present in the breach dataset.

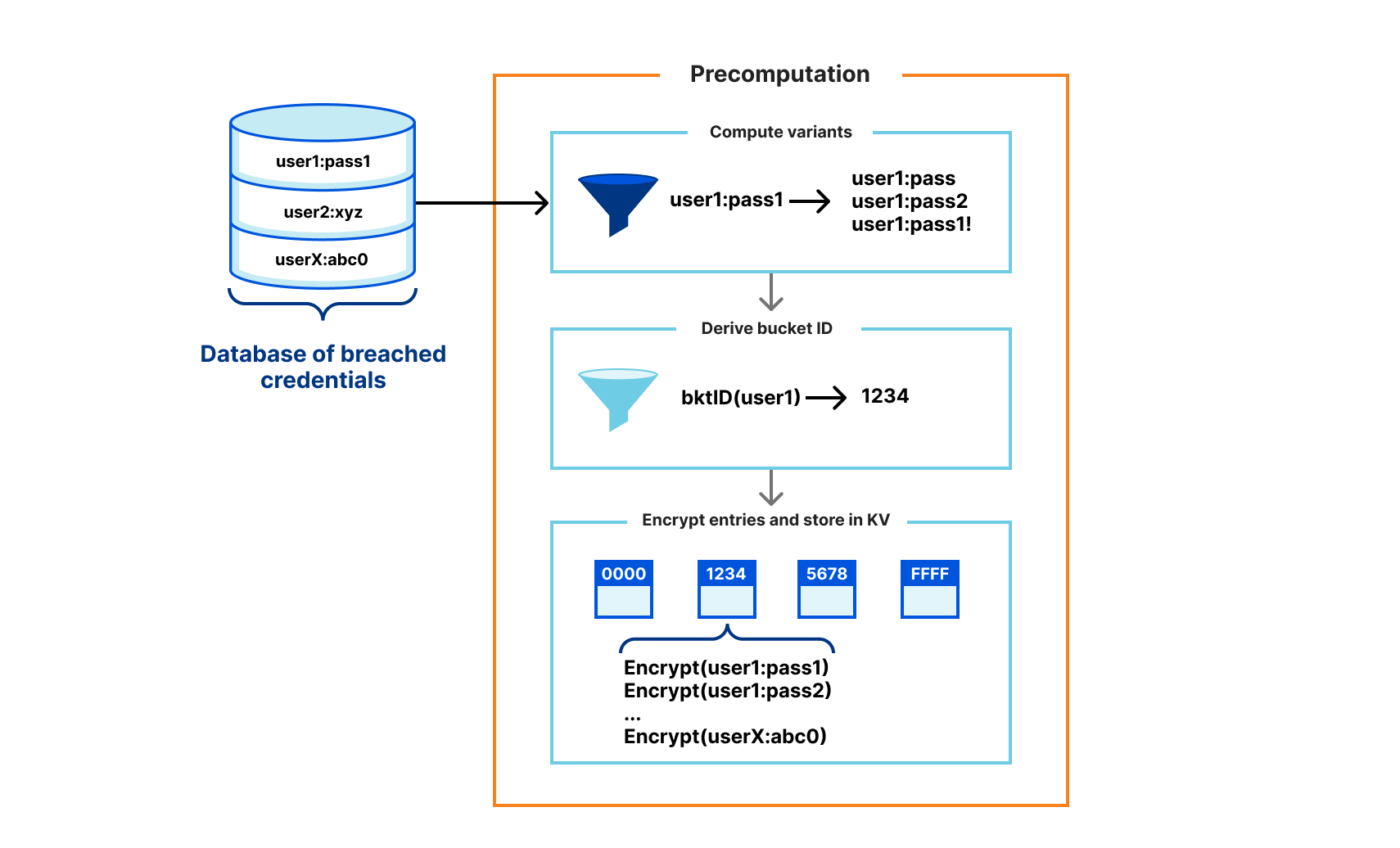

Precomputation

At a high level, the MIGP server partitions the breach dataset into buckets based on the hash prefix of the username (the bucket identifier), which is usually 16-20 bits in length.
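A bucket identifier of this kind can be computed by hashing the username and keeping only the leading bits. The sketch below assumes SHA-256 and a 20-bit prefix; the hash function actually used by the MIGP library may differ, and `bucketID` is a hypothetical name.

```go
package main

import (
	"crypto/sha256"
	"encoding/binary"
	"fmt"
)

// prefixBits is the assumed length of the username hash prefix.
const prefixBits = 20

// bucketID hashes the username and keeps the top prefixBits bits of the
// digest, giving an identifier in the range [0, 2^prefixBits).
func bucketID(username string) uint32 {
	sum := sha256.Sum256([]byte(username))
	return binary.BigEndian.Uint32(sum[:4]) >> (32 - prefixBits)
}

func main() {
	// Only this identifier is revealed to the server; every username that
	// shares the same prefix maps to the same bucket.
	fmt.Printf("bucket %05x\n", bucketID("alice"))
}
```

A shorter prefix puts more usernames in each bucket, which reveals less about the queried username but makes each bucket larger to download.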

We use server-side precomputation as the variant generation mechanism in our implementation. The server derives one ciphertext for each exact username-password pair in the dataset, and an additional ciphertext per password variant. A bucket consists of the set of ciphertexts for all breach entries and variants with the same username hash prefix. For instance, suppose there are n breach entries assigned to a particular bucket. If we compute m variants per entry, counting the original entry as one of the variants, there will be n*m ciphertexts stored in the bucket. This introduces a large expansion in the size of the processed dataset, so in practice it is necessary to limit the number of variants computed per entry. Our demo server stores 10 ciphertexts per breach entry in the input: the exact entry, eight variants (see Appendix A of the MIGP paper), and a special variant for allowing username-only checks.

Each ciphertext is the encryption of a username-password (or password variant) pair along with some associated metadata. The metadata describes whether the entry corresponds to an exact password appearing in the breach, or a variant of a breached password. The server derives a per-entry secret key pad using a key derivation function (KDF) with the username-password pair and server secret as inputs, and uses XOR encryption to derive the entry ciphertext. The bucket format also supports storing optional encrypted metadata, such as the date the breach was discovered.

    Input:
      Secret sk        // Server secret key
      String u         // Username
      String w         // Password (or password variant)
      Byte   mdFlag    // Metadata flag
      String mdString  // Optional metadata string

    Output:
      String C         // Ciphertext

    function Encrypt(sk, u, w, mdFlag, mdString):
      padHdr  = KDF1(u, w, sk)           // pad for the key-check header and flag
      padBody = KDF2(u, w, sk)           // pad for the optional metadata
      zeros   = [0] * KEY_CHECK_LEN      // lets the client detect a successful decryption
      C = XOR(padHdr, zeros || mdFlag) || mdString.length || XOR(padBody, mdString)
      return C
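The Encrypt pseudocode above can be sketched in Go, together with the matching client-side check. This is a minimal sketch under stated assumptions, not the library’s implementation: HMAC-SHA256 in counter mode stands in for KDF1 and KDF2, `keyCheckLen` is set to a hypothetical 4 bytes, the metadata length is stored in a single byte, and the helper names (`pad`, `xor`, `encrypt`, `tryDecrypt`) are invented for illustration.

```go
package main

import (
	"bytes"
	"crypto/hmac"
	"crypto/sha256"
	"errors"
	"fmt"
)

const keyCheckLen = 4 // hypothetical KEY_CHECK_LEN, in bytes

// pad is a stand-in for KDF1/KDF2: HMAC-SHA256 in counter mode, keyed with
// the server secret and domain-separated by label. Supports n <= 8192.
func pad(label string, sk []byte, u, w string, n int) []byte {
	var out []byte
	for ctr := byte(0); len(out) < n; ctr++ {
		mac := hmac.New(sha256.New, sk)
		mac.Write([]byte(label))
		mac.Write([]byte{ctr})
		mac.Write([]byte(u))
		mac.Write([]byte{0}) // separator between username and password
		mac.Write([]byte(w))
		out = mac.Sum(out) // appends the 32-byte tag to out
	}
	return out[:n]
}

// xor returns a XOR b for the first len(a) bytes; b must be at least as long.
func xor(a, b []byte) []byte {
	out := make([]byte, len(a))
	for i := range a {
		out[i] = a[i] ^ b[i]
	}
	return out
}

// encrypt builds XOR(padHdr, zeros || mdFlag) || len(mdString) || XOR(padBody, mdString).
func encrypt(sk []byte, u, w string, mdFlag byte, mdString string) ([]byte, error) {
	if len(mdString) > 255 {
		return nil, errors.New("metadata string longer than 255 bytes")
	}
	hdrPlain := append(make([]byte, keyCheckLen), mdFlag)
	c := xor(hdrPlain, pad("hdr", sk, u, w, keyCheckLen+1))
	c = append(c, byte(len(mdString)))
	return append(c, xor([]byte(mdString), pad("body", sk, u, w, len(mdString)))...), nil
}

// tryDecrypt checks one bucket entry against the client's pads. ok is true
// only if the key-check bytes decrypt to zeros.
func tryDecrypt(padHdr, padBody, c []byte) (mdFlag byte, mdString string, ok bool) {
	if len(padHdr) != keyCheckLen+1 || len(c) < keyCheckLen+2 {
		return 0, "", false
	}
	hdr := xor(c[:keyCheckLen+1], padHdr)
	if !bytes.Equal(hdr[:keyCheckLen], make([]byte, keyCheckLen)) {
		return 0, "", false // entry was encrypted under a different pad
	}
	body := c[keyCheckLen+2:]
	if int(c[keyCheckLen+1]) != len(body) || len(body) > len(padBody) {
		return 0, "", false
	}
	return hdr[keyCheckLen], string(xor(body, padBody[:len(body)])), true
}

func main() {
	sk := []byte("demo-server-secret")
	c, err := encrypt(sk, "alice", "hunter2", 1, "2021-06")
	if err != nil {
		panic(err)
	}
	// Here the pads are computed locally for brevity; in the protocol the
	// client would obtain them without ever seeing sk.
	hdr := pad("hdr", sk, "alice", "hunter2", keyCheckLen+1)
	body := pad("body", sk, "alice", "hunter2", 255)
	flag, md, ok := tryDecrypt(hdr, body, c)
	fmt.Println(ok, flag, md)
}
```

The key-check zeros are what let a client scan a bucket: a ciphertext decrypts to a run of zeros only under the pad derived from the same username-password pair.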

The precomputation phase only needs to be done rarely, such as when the MIGP parameters are changed (in which case the entire dataset must be re-processed), or when new breach datasets are added (in which case the new data can be appended to the existing buckets).

Online phase

The online phase of the MIGP protocol allows a client to check if a username-password pair (or variant) appears in the server’s breach dataset, while only leaking the hash prefix of the username to the server. The client and server engage in an OPRF protocol message exchange to allow the client to derive the per-entry decryption key, without leaking the username and password to the server, or the server’s secret key to the client. The client then computes the bucket identifier from the queried username and downloads the corresponding bucket of entries from the server. Using the decryption key derived in the previous step, the client scans through the entries in the bucket attempting to decrypt each one. If the decryption succeeds, this signals to the client that their queried credentials (or a variant thereof) are in the server’s dataset. The decrypted metadata flag indicates whether the entry corresponds to the exact password or a password variant.
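The blinding step at the heart of an OPRF exchange can be illustrated with a toy example. The sketch below uses modular exponentiation in a deliberately tiny group (p = 2039, q = 1019) so the arithmetic is easy to follow; it is not secure and is not how the MIGP library works, which relies on CIRCL’s OPRF implementation. All names and the server key are hypothetical.

```go
package main

import (
	"crypto/rand"
	"crypto/sha256"
	"fmt"
	"math/big"
)

// Toy group, NOT secure: p = 2q + 1 with p and q prime, so the squares
// mod p form a subgroup of prime order q.
var (
	p         = big.NewInt(2039)
	q         = big.NewInt(1019)
	serverKey = big.NewInt(777) // hypothetical server secret, 1 <= key < q
)

// hashToGroup maps the input to a non-identity element of the order-q subgroup.
func hashToGroup(input []byte) *big.Int {
	sum := sha256.Sum256(input)
	h := new(big.Int).SetBytes(sum[:])
	h.Mod(h, new(big.Int).Sub(p, big.NewInt(3))) // 0 .. p-4
	h.Add(h, big.NewInt(2))                       // 2 .. p-2, so h is not 1 or p-1
	return h.Exp(h, big.NewInt(2), p)             // square into the subgroup
}

// blind is run by the client: it hides H(input) behind a random exponent r.
func blind(input []byte) (blinded, r *big.Int, err error) {
	r, err = rand.Int(rand.Reader, new(big.Int).Sub(q, big.NewInt(1))) // 0 .. q-2
	if err != nil {
		return nil, nil, err
	}
	r.Add(r, big.NewInt(1)) // 1 .. q-1, so r is invertible mod q
	return new(big.Int).Exp(hashToGroup(input), r, p), r, nil
}

// evaluate is run by the server: it applies its key to the blinded element.
func evaluate(key, blinded *big.Int) *big.Int {
	return new(big.Int).Exp(blinded, key, p)
}

// finalize is run by the client: it removes r, leaving H(input)^key.
func finalize(evaluated, r *big.Int) *big.Int {
	rInv := new(big.Int).ModInverse(r, q)
	return new(big.Int).Exp(evaluated, rInv, p)
}

// direct computes H(input)^key without blinding, for comparison only.
func direct(key *big.Int, input []byte) *big.Int {
	return new(big.Int).Exp(hashToGroup(input), key, p)
}

func main() {
	input := []byte("alice|hunter2")
	blinded, r, err := blind(input)
	if err != nil {
		panic(err)
	}
	out := finalize(evaluate(serverKey, blinded), r)
	fmt.Println("matches unblinded evaluation:", out.Cmp(direct(serverKey, input)) == 0)
}
```

Because r is freshly random for each query, the server sees a different blinded element every time, even for identical credentials, while the client always recovers the same output to feed into its key derivation.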

The MIGP protocol addresses many of the shortcomings of existing credential checking services: it avoids leaking any information about the client’s queried password to the server, and it provides a mechanism for checking whether similar passwords have been compromised. Read on to see the protocol in action!

MIGP demo

As the state of the art in attack methodologies evolves with new techniques such as credential tweaking, so must the defenses. To that end, we’ve collaborated with the designers of the MIGP protocol to prototype and deploy the MIGP protocol within Cloudflare’s infrastructure.

Our MIGP demo server is deployed at migp.cloudflare.com, and runs entirely on top of Cloudflare Workers. We use Workers KV for efficient storage and retrieval of buckets of encrypted breach entries, capping each bucket’s size at the current KV value limit of 25MB. In our instantiation, we set the username hash prefix length to 20 bits, so that there are a total of 2^20 (or just over 1 million) buckets.
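For a rough sense of scale, the average bucket load follows directly from these parameters. The Go sketch below assumes usernames hash uniformly across buckets; the dataset size and per-ciphertext length used in `main` are hypothetical inputs, not figures from the demo.

```go
package main

import "fmt"

const (
	prefixBits          = 20 // username hash prefix length used by the demo
	ciphertextsPerEntry = 10 // ciphertexts stored per breach entry in the demo
)

// avgBucketLoad returns the average number of ciphertexts per bucket and the
// resulting average bucket size in bytes, assuming a uniform distribution of
// usernames over buckets and fixed-length ciphertexts.
func avgBucketLoad(breachEntries, ciphertextLen uint64) (perBucket, sizeBytes uint64) {
	buckets := uint64(1) << prefixBits
	perBucket = breachEntries * ciphertextsPerEntry / buckets
	return perBucket, perBucket * ciphertextLen
}

func main() {
	// Hypothetical inputs: one billion breach entries, 16-byte ciphertexts.
	perBucket, sizeBytes := avgBucketLoad(1_000_000_000, 16)
	fmt.Printf("%d ciphertexts (~%d bytes) per bucket on average\n", perBucket, sizeBytes)
}
```

Real buckets will deviate from the average, so the per-bucket size cap still matters for popular prefixes.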

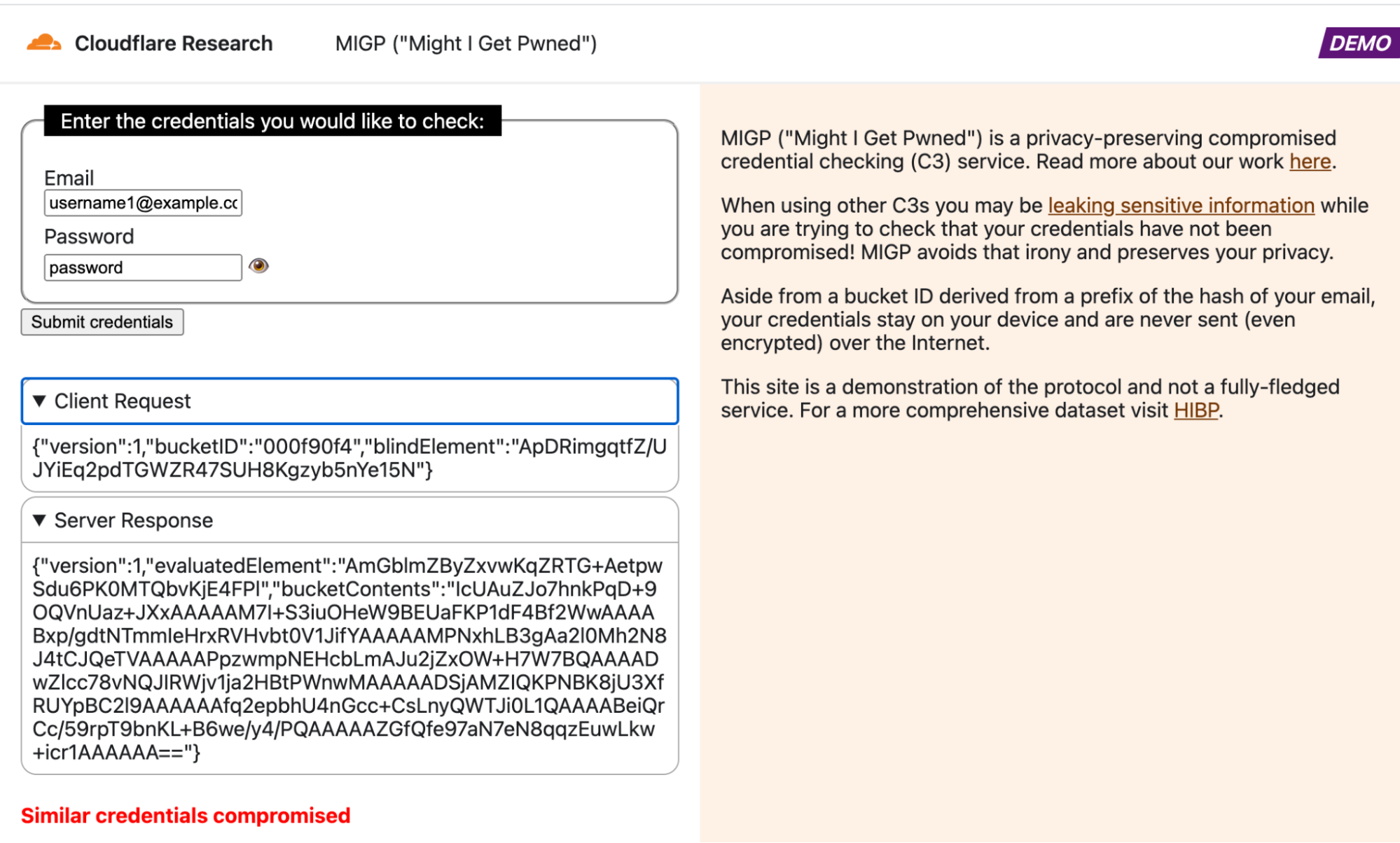

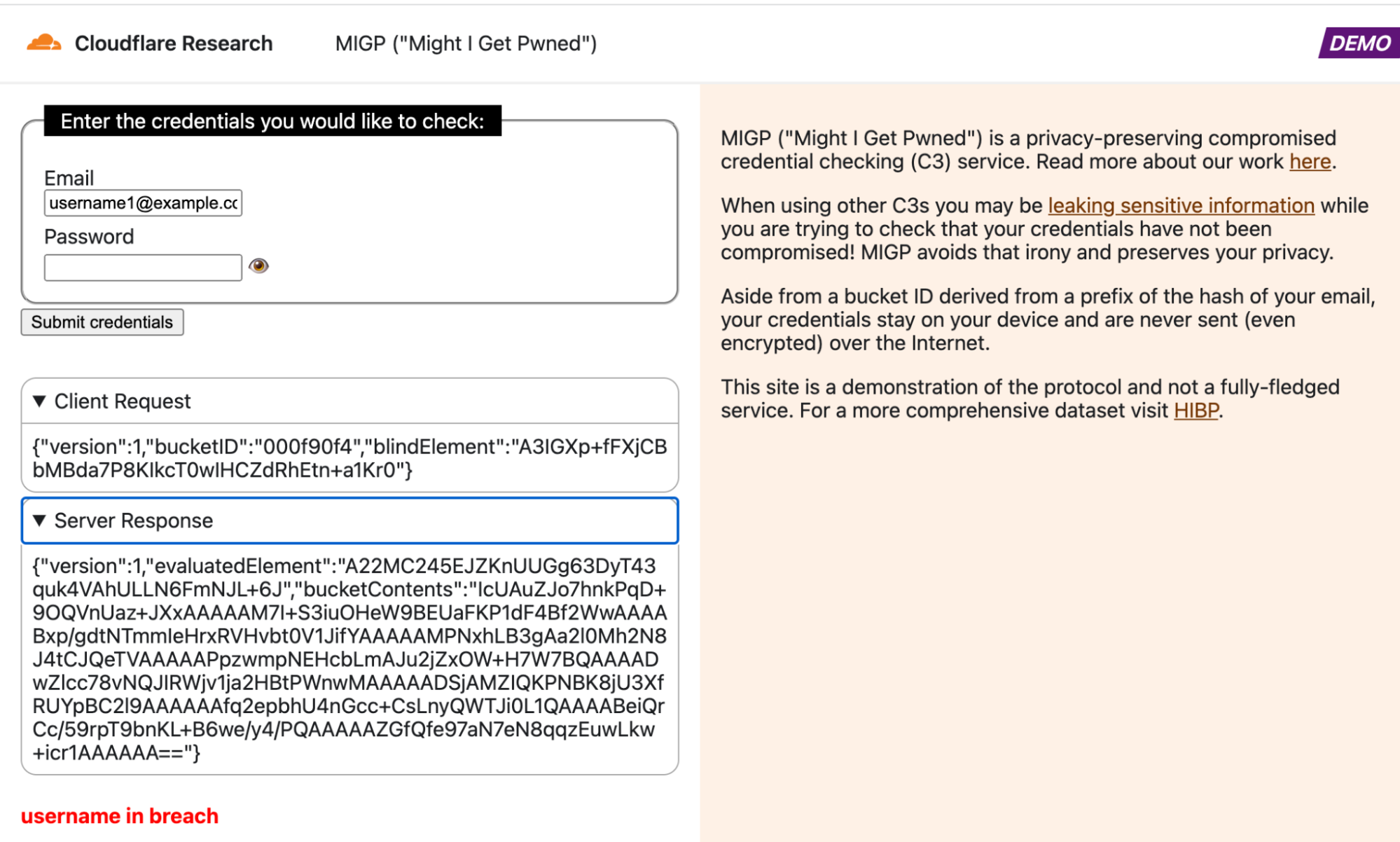

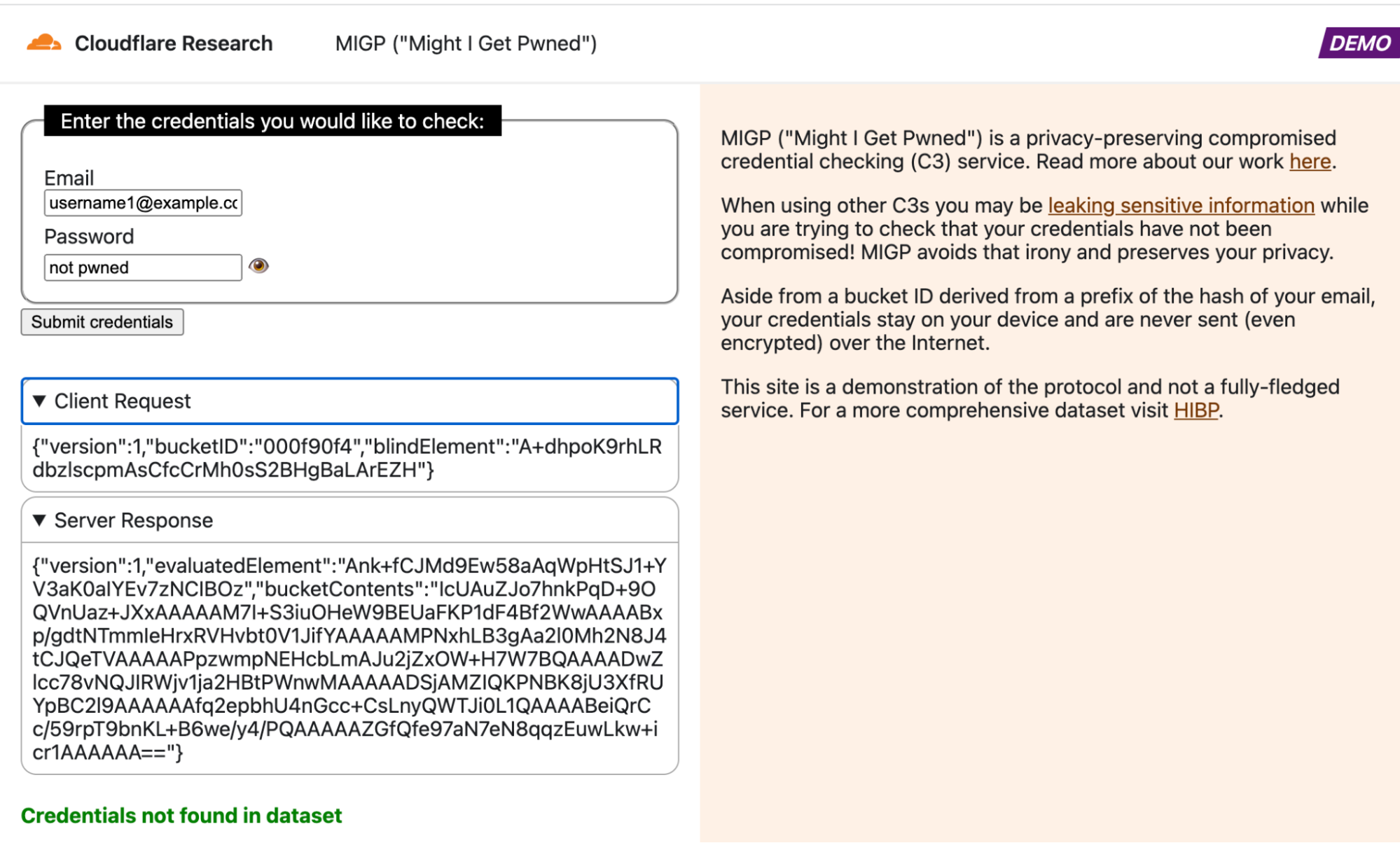

There are currently two ways to interact with the demo MIGP service: via the browser client at migp.cloudflare.com, or via the Go client included in our open-sourced MIGP library. As shown in the accompanying screenshots, the browser client displays the request from your device and the response from the MIGP service. Take care not to enter any sensitive credentials into a third-party service (feel free to use the test credentials [email protected] and password1 for the demo).

Keep in mind that “absence of evidence is not evidence of absence”, especially in the context of data breaches. We intend to periodically update the breach datasets used by the service as new public breaches become available, but no breach alerting service will be able to provide 100% accuracy in assuring that your credentials are safe.

See the MIGP demo in action in the attached screenshots. Note that in all cases, the username ([email protected]) and corresponding username prefix hash (000f90f4) remain the same, so the client retrieves the exact same bucket contents from the server each time. However, the blindElement parameter in the client request differs per request, allowing the client to decrypt different bucket elements depending on the queried credentials.

Open-sourced MIGP library

We are open-sourcing our implementation of the MIGP library under the BSD-3 License. The code is written in Go and is available at https://github.com/cloudflare/migp-go. Under the hood, we use Cloudflare’s CIRCL library for OPRF support and Go’s supplementary cryptography library for scrypt support. Check out the repository for instructions on setting up the MIGP client to connect to Cloudflare’s demo MIGP service. Community contributions and feedback are welcome!

Future directions

In this post, we announced our open-sourced implementation and demo deployment of MIGP, a next-generation breach alerting service. Our deployment is intended to lead the way for other credential compromise checking services to migrate to a more privacy-friendly model, but is not itself currently meant for production use. We have identified several concrete steps that could improve the service in the future:

- Add more breach datasets to the database of precomputed entries

- Increase the number of variants in server-side precomputation

- Add library support in more programming languages to reach a broader developer base

- Hide the number of ciphertexts per bucket by padding with dummy entries

- Add support for efficient client-side variant checking by batching API calls to the server

For exciting future research directions that we are investigating — including one proposal to remove the transmission of plaintext passwords from client to server entirely — take a look at https://blog.cloudflare.com/research-directions-in-password-security.

We are excited to share and build upon these ideas with the wider Internet community, and hope that our efforts bring about positive change in the password security ecosystem. We are particularly interested in collaborating with stakeholders in the space to develop, test, and deploy next-generation protocols to improve user security and privacy. You can reach us with questions, comments, and research ideas at [email protected]. For those interested in joining our team, please visit our Careers Page.