Post Syndicated from Rosa Brown original https://www.raspberrypi.org/blog/young-peoples-projects-for-a-sustainable-future/

This post has been adapted from issue 19 of Hello World magazine, which explored the interaction between technology and sustainability.

We may have had the Coolest Projects livestream, but we are still in awe of the 2092 projects that young people sent in for this year’s online technology showcase! To continue the Coolest Projects Global 2022 celebrations, we’re shining a light on some of the participants and the topics that inspired their projects.

In this year’s showcase, the themes of sustainability and the environment were extremely popular. We received over 300 projects related to the environment from young people all over the world. Games, apps, websites, hardware — we’ve seen so many creative projects that demonstrate how important the environment is to young people.

Here are some of these projects and a glimpse into how kids and teens across the world are using technology to look after their environment.

Using tech to make one simple change

Has anyone ever told you that a small change can lead to a big impact? Check out these two Coolest Projects entries that put this idea into practice with clever inventions to make positive changes to the environment.

Arik (15) from the UK wanted to make something to reduce the waste he noticed at home. Whenever lots of people visited Arik’s house, getting the right drink for everyone was a challenge and often resulted in wasted, spilled drinks. This problem was the inspiration behind Arik’s ‘Liquid Dispenser’ project, which can hold two litres of any desired liquid and has an outer body made from reused cardboard. As Arik says, “You don’t need a plastic bottle, you just need a cup!”

Amrit (13), Kingston (12), and Henry (12) from Canada were also inspired to make a project to reduce waste. ‘Eco Light’ is a light that automatically turns off when someone leaves their house to avoid wasted electricity. For the project, the team used a micro:bit to detect the signal strength and decide whether the LED should be on (if someone is in the house) or off (if the house is empty).

“We wanted to create something that hopefully would create a meaningful impact on the world.”

Amrit, Kingston, and Henry
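
For readers curious how a decision like Eco Light’s might look in code, here is a minimal sketch in plain Python. It is not the team’s program: the threshold values, the function names, and the use of two cut-offs instead of one are all assumptions made for illustration. On a real micro:bit, the signal-strength reading would come from the device’s radio rather than from a list.

```python
# Hypothetical thresholds in dBm; the post does not give the team's values.
PRESENT_RSSI = -70   # at or above this, assume someone is in the house
ABSENT_RSSI = -85    # at or below this, assume the house is empty


def next_led_state(led_on, rssi):
    """Return the new LED state for one signal-strength reading.

    Two thresholds are used so the light does not flicker when the
    reading hovers around a single cut-off value.
    """
    if rssi is None:           # no signal received at all
        return False
    if rssi >= PRESENT_RSSI:   # strong signal: someone is nearby
        return True
    if rssi <= ABSENT_RSSI:    # weak signal: nobody is nearby
        return False
    return led_on              # in between: keep the current state


def run(readings, led_on=False):
    """Apply next_led_state to each reading and return the list of states."""
    states = []
    for rssi in readings:
        led_on = next_led_state(led_on, rssi)
        states.append(led_on)
    return states


if __name__ == "__main__":
    # Someone is home, walks away, then the signal is lost entirely.
    print(run([-60, -75, -90, -80, None]))
```

The gap between the two thresholds is a design choice: with a single cut-off, a reading that wobbles around that value would switch the light on and off repeatedly.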

Projects for local and global positive change

We love to see young people invent things that make positive changes in their communities, at both a local and a global level.

This year, Sashrika (11) from the US shared her ‘Gas Leak Detector’ project, which she designed to help people who heat their homes with diesel. On the east coast of America, many people store their gas tanks in the basement. This means they may not realise if the gas is leaking. To solve this problem, Sashrika has combined programming with physical computing to make a device that can detect if there is a gas leak and send a notification to your phone.

Sashrika’s project has the power to help lots of people and she has even thought about how she would make more changes to her project in the name of sustainability:

“I would probably add a solar panel because there are lots of houses that have outdoor oil tanks. Solar panel[s] will reduce electricity consumption and reduce CO2 emission[s].”

Sashrika

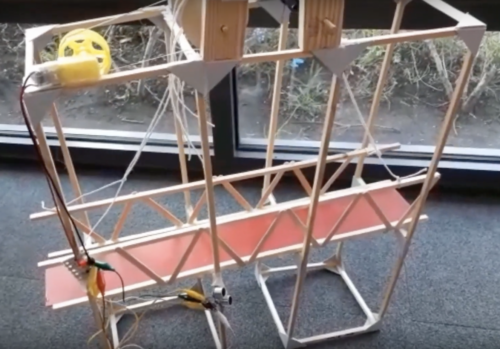

Amr in Syria was also thinking about renewable energy sources when he created his own ‘Smart Wind Turbine’.

The ‘Smart Wind Turbine’ is connected to a micro:bit to measure the electricity generated by a fan. Amr’s tests showed that the turbine generated more electricity when it faced in the direction of the wind. So Amr made a wind vane to determine the wind’s direction and added another micro:bit to communicate the results to the turbine.
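
One way to picture the step between the wind vane and the turbine is as a small calculation: given where the turbine points and where the vane says the wind is, how far should the turbine turn? The sketch below is an illustration only, not Amr’s code. It assumes both directions are compass headings in degrees, and the function names and the 5-degree tolerance are hypothetical.

```python
def shortest_turn(turbine_heading, wind_direction):
    """Return the signed turn in degrees needed to face the wind.

    Positive means clockwise, negative means anticlockwise.
    The result always lies in the range -180 (inclusive) to 180 (exclusive).
    """
    return (wind_direction - turbine_heading + 180) % 360 - 180


def needs_turn(turbine_heading, wind_direction, tolerance=5):
    """Return True if the turbine is more than `tolerance` degrees off.

    The tolerance is an assumed value that stops the turbine from
    constantly making tiny adjustments.
    """
    return abs(shortest_turn(turbine_heading, wind_direction)) > tolerance


if __name__ == "__main__":
    # Turbine points at 350 degrees, vane reports 10 degrees:
    # the short way round is 20 degrees clockwise, not 340 anticlockwise.
    print(shortest_turn(350, 10))
    print(needs_turn(350, 10))
```

The modulo arithmetic handles the wrap-around at north, so headings on either side of 0 degrees are treated as close together.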

Creating projects for the future

We’ve also seen projects created by young people to make the world a better place for future generations.

Naira and Rhythm from India have designed houses that suit both people and the planet. They carried out a survey and used the results to create the ‘Net Zero Home’. Naira and Rhythm’s project offers an idea for homes that are comfortable for people of all abilities and ages, while also being sustainable.

“Our future cities will require a lot of homes, this means we will require a lot of materials, energy, water and we will also produce a lot of waste. So we have designed this net zero home as a solution.”

Naira and Rhythm

Andrea (9) and Yuliana (10) from the US have also made something to benefit future generations. The ‘Bee Counter’ project uses sensors and a micro:bit to record bees’ activity around a hive. Through monitoring the bees, the team hope they can see (and then fix) any problems with the hive. Andrea and Yuliana want to maintain the bees’ home to help them continue to have a positive influence on our environment.

Knowledge is power: projects to educate and inspire

Some young creators use Coolest Projects as an opportunity to educate and inspire people to make environmental changes in their own lives.

Sabrina (13) from the UK created her own website, ‘A Guide to Climate Change’. It includes images, text, graphics of the Earth’s temperature change, and suggestions for people to minimise their waste. Sabrina also received the Broadcom Coding with Commitment award for using her skills to provide vital information about the effects of climate change.

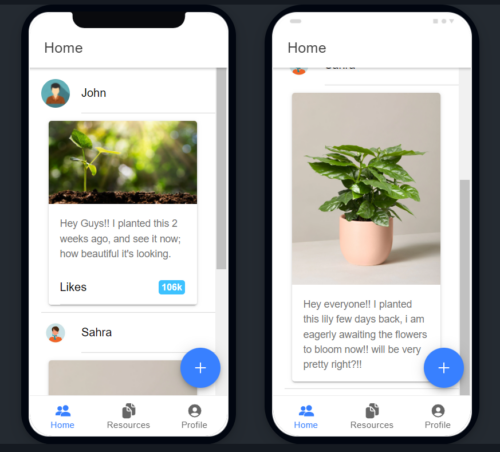

Kushal (12) from India wanted to use tech to encourage people to help save the environment. Kushal had no experience of app development before making his ‘Green Steps’ app. He says, “I have created a mobile app to connect like-minded people who want to do something about [the] environment.”

These projects are just some of the incredible ideas we’ve seen young people enter for Coolest Projects this year. It’s clear from the projects submitted that protecting our planet resonates with so many students. As Sabrina puts it, “Some of us don’t understand how important the earth is to us. And I hope we don’t have to wait until it is gone to realise.”

Check out the Coolest Projects showcase for even more projects about the environment, alongside other topics that have inspired young creators.

The post Young people’s projects for a sustainable future appeared first on Raspberry Pi.