Post Syndicated from Achiel van der Mandele original http://blog.cloudflare.com/turbo-charge-gaming-and-streaming-with-argo-for-udp/

Today, Cloudflare is super excited to announce that we’re bringing traffic acceleration to customers’ UDP traffic. Now, you can improve the latency of UDP-based applications like video games, voice calls, and video meetings by up to 17%. Combining the power of Argo Smart Routing (our traffic acceleration product) with UDP gives you the ability to supercharge your UDP-based traffic.

When applications use TCP vs. UDP

Typically when people talk about the Internet, they think of websites they visit in their browsers, or apps that allow them to order food. This type of traffic is sent across the Internet via HTTP which is built on top of the Transmission Control Protocol (TCP). However, there’s a lot more to the Internet than just browsing websites and using apps. Gaming, live video, or tunneling traffic to different networks via a VPN are all common applications that don’t use HTTP or TCP. These popular applications leverage the User Datagram Protocol (or UDP for short). To understand why these applications use UDP instead of TCP, we’ll need to dig into how these different applications work.

When you load a web page, you generally want to see the entire web page; the website would be confusing if parts of it were missing. For this reason, HTTP uses TCP as a method of transferring website data. TCP ensures that if a packet ever gets lost as it crosses the Internet, that packet will be resent. Having a reliable protocol like TCP is generally a good idea when 100% of the information sent needs to be loaded. It’s worth noting that HTTP/3 moved away from TCP as its transport protocol, but it still ensures delivery: the QUIC protocol it is built on, which runs over UDP, handles packet retransmission itself.

There are other applications that prioritize quickly sending real-time data and are less concerned about perfectly delivering 100% of the data. Let’s explore Real-Time Communications (RTC) like video meetings as an example. If two people are streaming video live, all they care about is what is happening now. If a few packets are lost during the initial transmission, a retransmitted packet usually arrives too late to be rendered in the current video frame. TCP doesn’t really make sense in this scenario.

Instead, RTC protocols are built on top of UDP. TCP is like a formal back and forth conversation where every sentence matters. UDP is more like listening to your friend's stream of consciousness: you don’t care about every single bit as long as you get the gist of it. UDP transfers packet data with speed and efficiency without guaranteeing the delivery of those packets. This is perfect for applications like RTC where reducing latency is more important than occasionally losing a packet here or there. The same applies to gaming traffic; you generally want the most up-to-date information, and you don’t really care about retransmitting lost packets.

Gaming and RTC applications really care about latency. Latency is the length of time it takes a packet to be sent to a server plus the length of time to receive a response from the server (called round-trip time or RTT). In the case of video games, the higher the latency, the longer it will take for you to see other players move and the less time you’ll have to react to the game. With enough latency, games become unplayable: if the players on your screen are constantly blipping around it’s near impossible to interact with them. In RTC applications like video meetings, you’ll experience a delay between yourself and your counterpart. You may find yourselves accidentally talking over each other which isn’t a great experience.
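To make round-trip time concrete, here is a minimal Python sketch that measures it over UDP. It is only an illustration: the echo server runs on the local loopback interface, and the function names `run_echo_server` and `measure_udp_rtt` are made up for this example. Note how a probe that gets no reply is simply skipped rather than retransmitted, which mirrors how UDP-based applications treat lost packets.

```python
import socket
import threading
import time
from typing import List


def run_echo_server(sock: socket.socket, stop: threading.Event) -> None:
    """Echo every datagram back to its sender until `stop` is set."""
    sock.settimeout(0.1)
    while not stop.is_set():
        try:
            data, addr = sock.recvfrom(2048)
        except socket.timeout:
            continue
        sock.sendto(data, addr)


def measure_udp_rtt(samples: int = 5, timeout: float = 1.0) -> List[float]:
    """Return round-trip times in milliseconds; lost probes are skipped."""
    server = socket.socket(socket.AF_INET, socket.SOCK_DGRAM)
    server.bind(("127.0.0.1", 0))  # port 0: let the OS pick a free port
    stop = threading.Event()
    thread = threading.Thread(target=run_echo_server, args=(server, stop))
    thread.start()

    rtts: List[float] = []
    client = socket.socket(socket.AF_INET, socket.SOCK_DGRAM)
    client.settimeout(timeout)
    try:
        for seq in range(samples):
            payload = seq.to_bytes(4, "big")  # sequence number as the probe body
            start = time.perf_counter()
            client.sendto(payload, server.getsockname())
            try:
                reply, _ = client.recvfrom(2048)
            except socket.timeout:
                continue  # UDP gives no delivery guarantee; move on
            if reply == payload:
                rtts.append((time.perf_counter() - start) * 1000.0)
    finally:
        stop.set()
        thread.join()
        client.close()
        server.close()
    return rtts


if __name__ == "__main__":
    for rtt_ms in measure_udp_rtt():
        print(f"RTT: {rtt_ms:.3f} ms")
```

On loopback the measured values will be tiny; pointing the client at a distant echo server (an assumption, since you would need to run one yourself) is where path length and congestion start to show up in the numbers.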

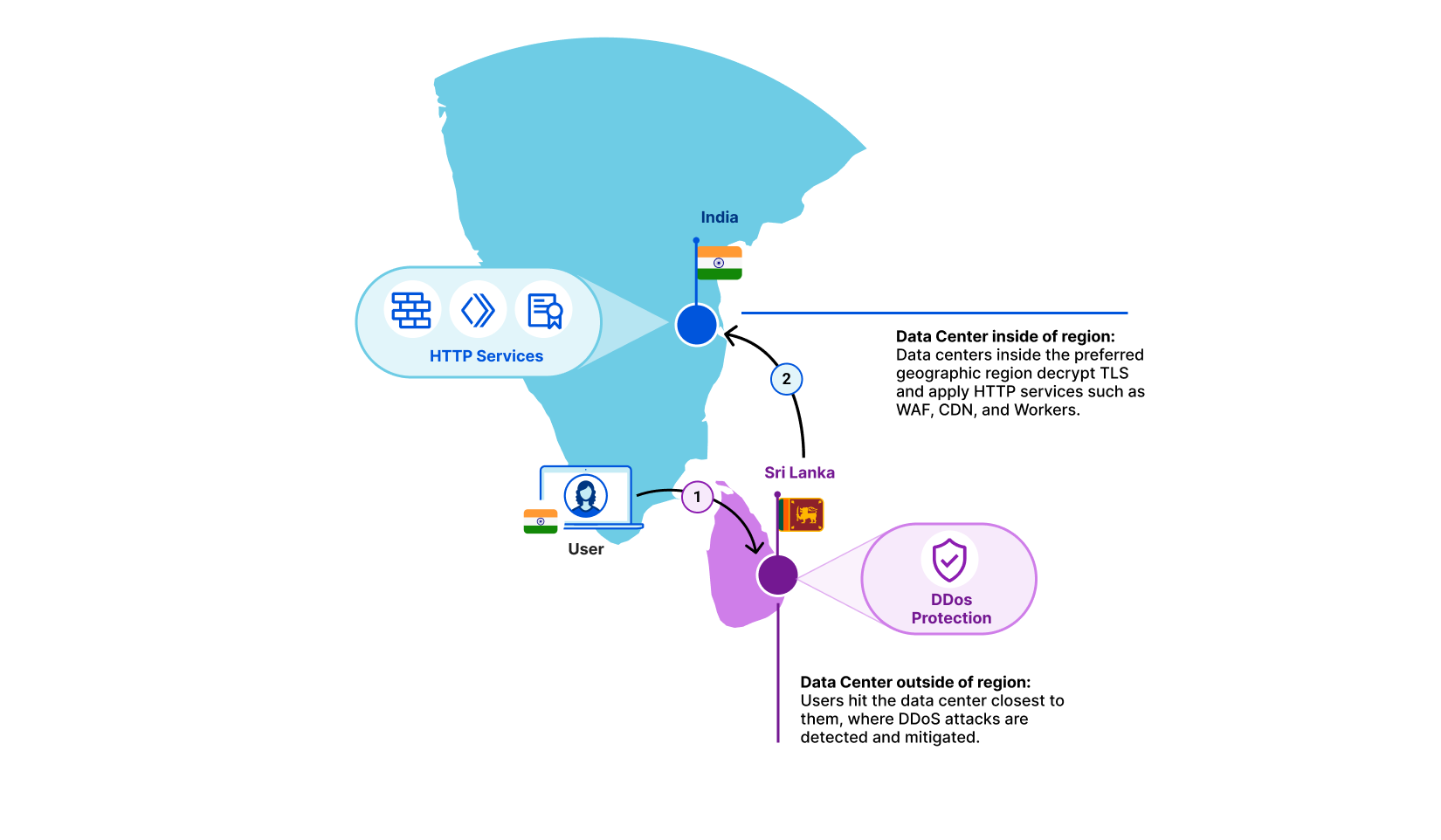

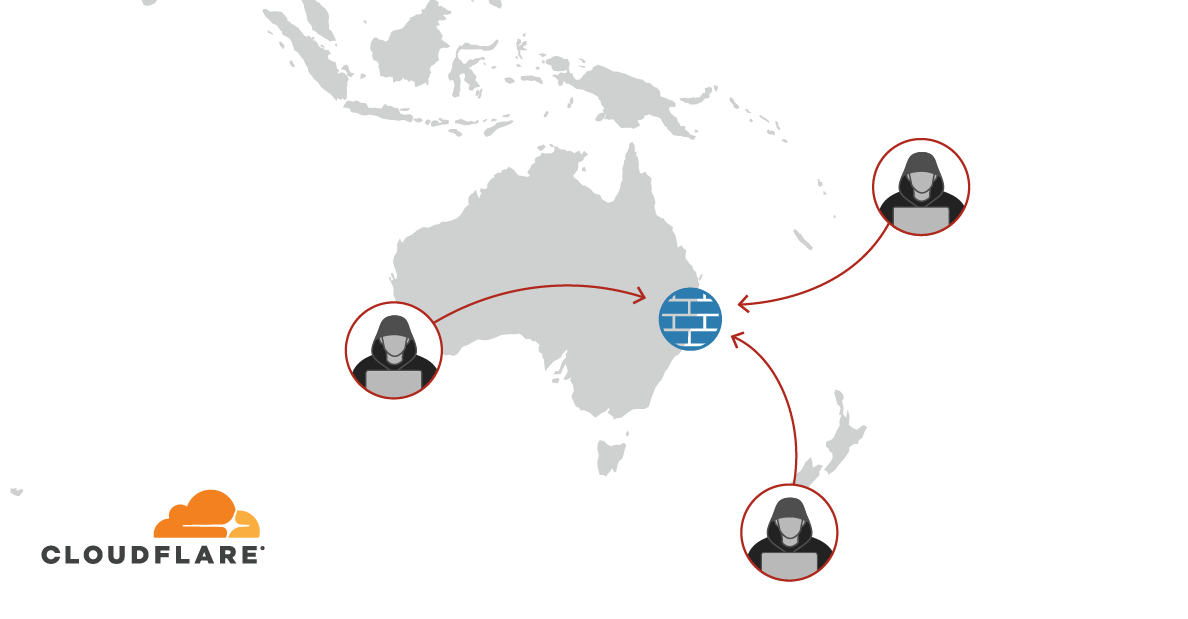

Companies that host gaming or RTC infrastructure often try to reduce latency by spinning up servers that are geographically closer to their users. However, it’s common to have two users that are trying to have a video call between distant locations like Amsterdam and Los Angeles. No matter where you install your servers, that's still a long distance for that traffic to travel. The longer the path, the higher the chances are that you're going to run into congestion along the way. Congestion is just like a traffic jam on a highway, but for networks. Sometimes certain paths get overloaded with traffic. This causes delays and packets to get dropped. This is where Argo Smart Routing comes in.

Argo Smart Routing

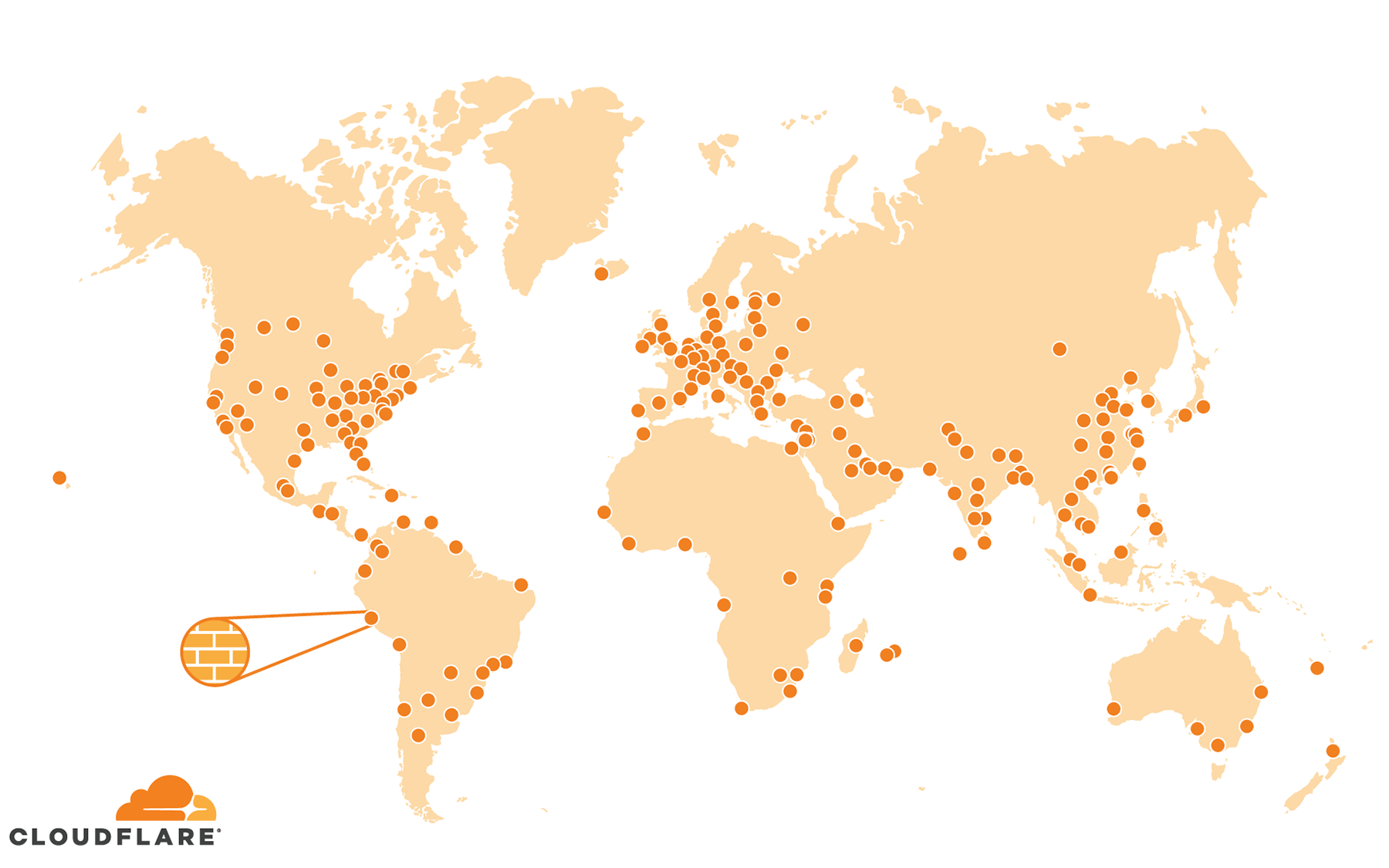

Cloudflare customers that want the best cross-Internet application performance rely on Argo Smart Routing’s traffic acceleration to reduce latency. Argo Smart Routing is like the GPS of the Internet. It uses real-time global network performance measurements to accelerate traffic, actively route around Internet congestion, and increase your traffic’s stability by reducing packet loss and jitter.

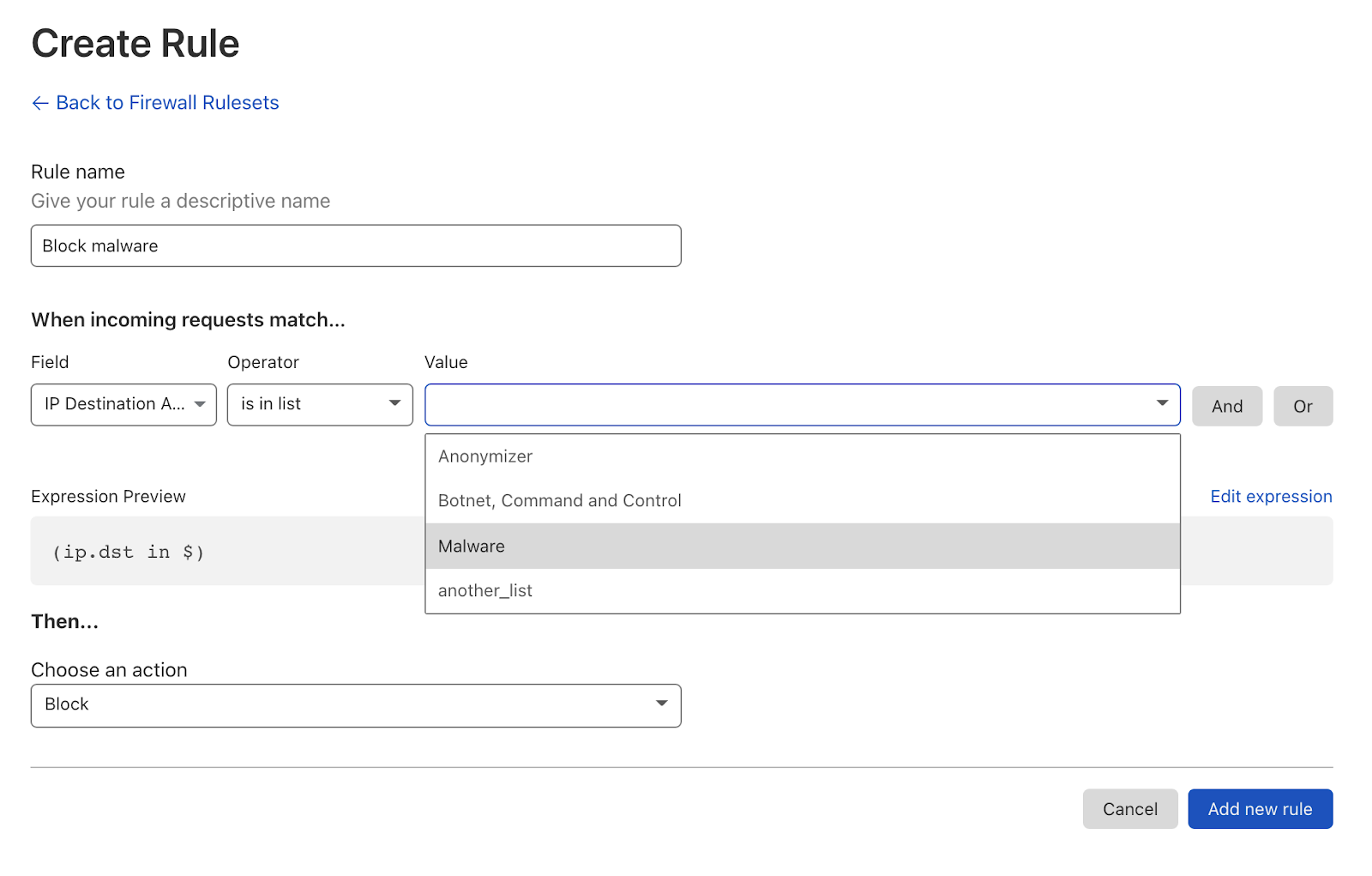

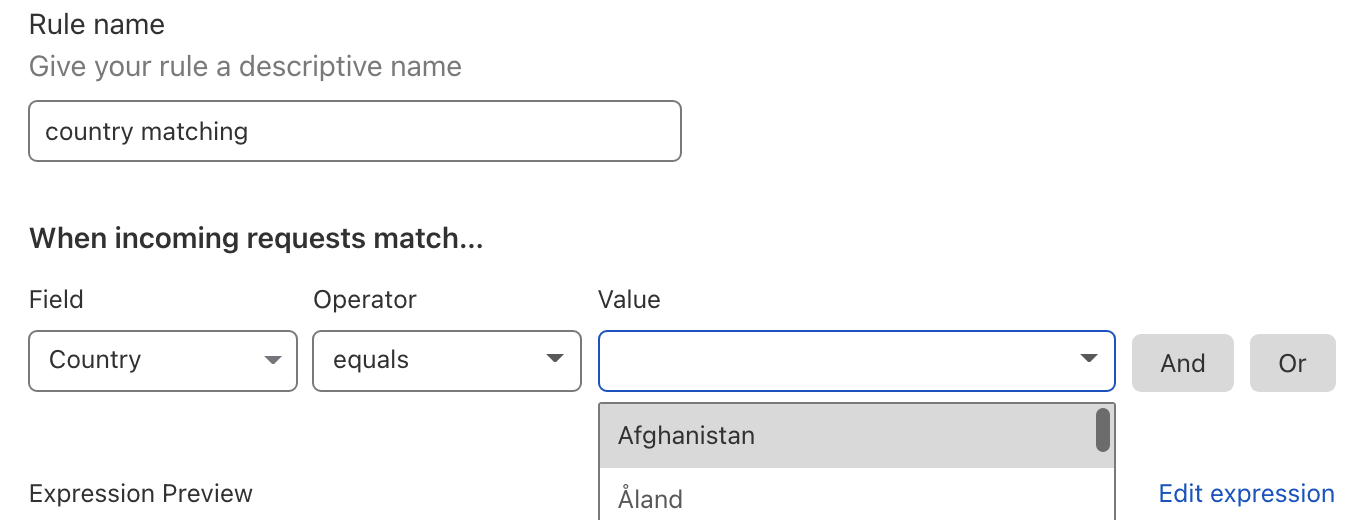

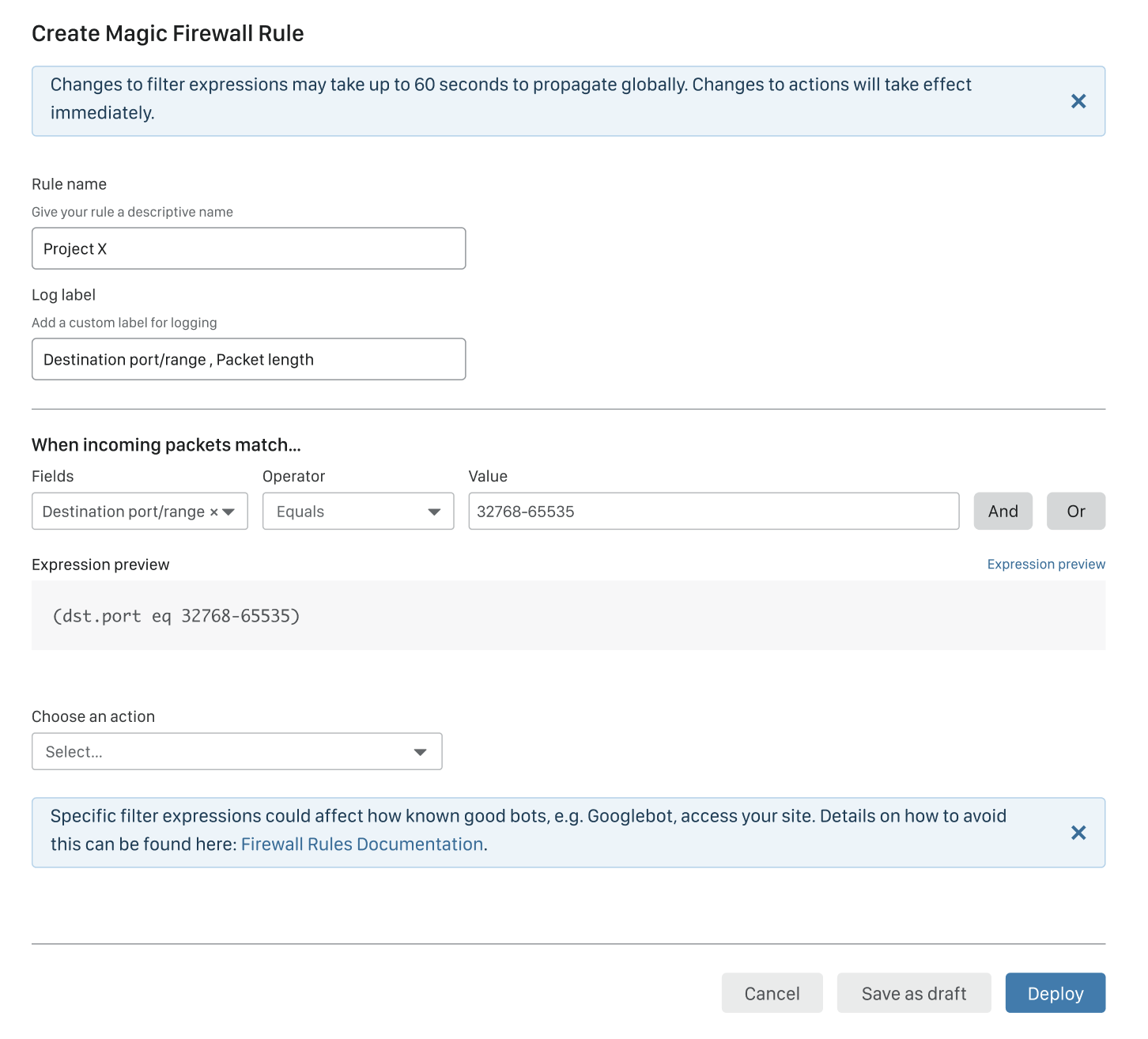

Argo Smart Routing was launched in May 2017, and its first iteration focused on reducing website traffic latency. Since then, we’ve improved Argo Smart Routing and also launched Argo Smart Routing for Spectrum TCP traffic, which reduces latency in any TCP-based protocol. Today, we’re excited to bring the same Argo Smart Routing technology to customers’ UDP traffic, which will reduce latency, packet loss, and jitter in gaming and live audio/video applications.

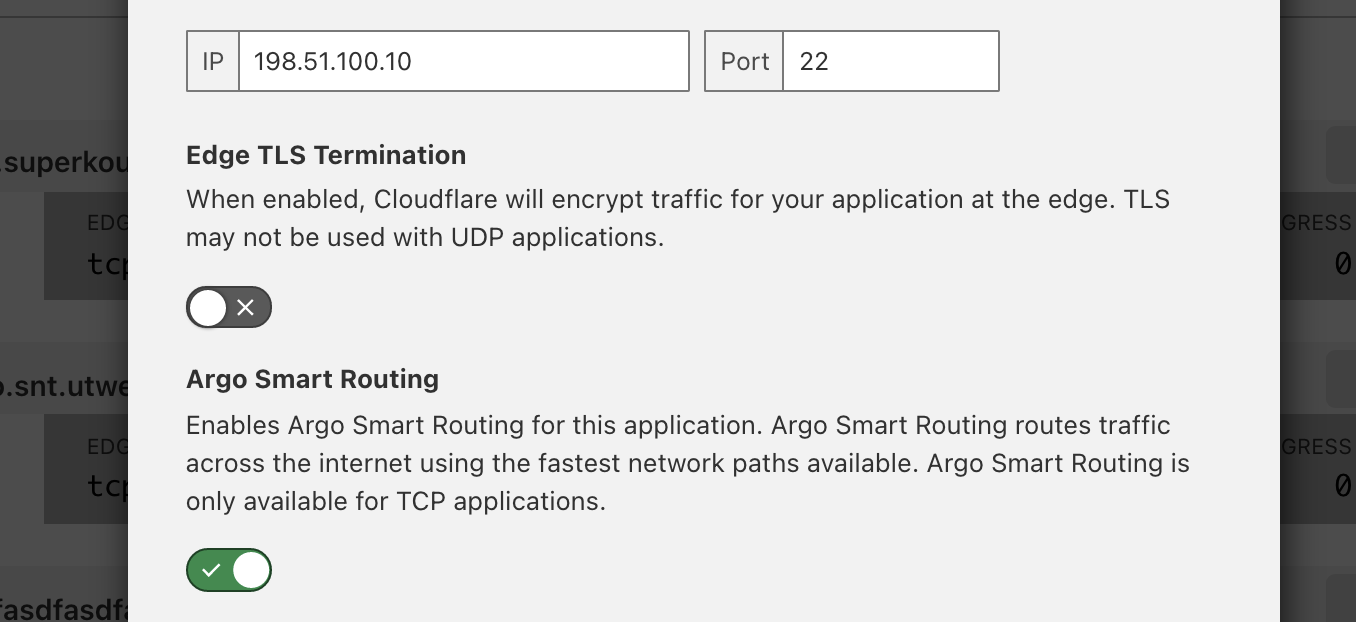

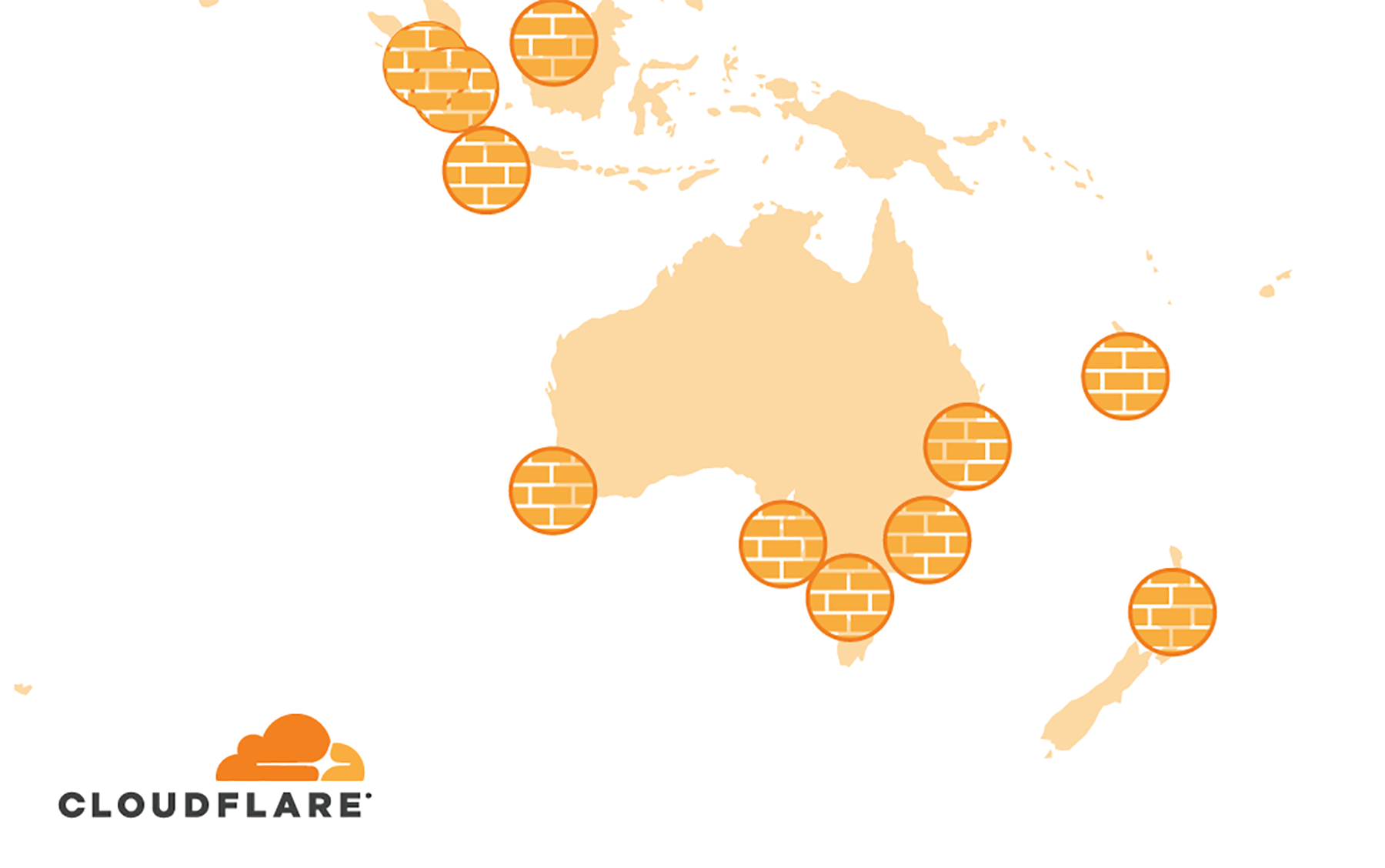

Argo Smart Routing accelerates Internet traffic by sending millions of synthetic probes from every Cloudflare data center to the origin of every Cloudflare customer. These probes measure the latency of all possible routes between Cloudflare’s data centers and a customer’s origin. We then combine that with probes running between Cloudflare’s data centers to calculate possible routes. When an Internet user makes a request to an origin, Cloudflare evaluates the results of our real-time global latency measurements, examines Internet congestion data, and calculates the optimal route for that customer’s traffic. To enable Argo Smart Routing for UDP traffic, Cloudflare extended the route computations typically used for HTTP and TCP traffic and applied them to UDP traffic.
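As a rough illustration of what "calculating the optimal route" from latency measurements can look like, the Python sketch below runs Dijkstra's shortest-path algorithm over a small table of per-link latencies. This is not Cloudflare's actual route computation, which is not described here; the function name `fastest_route`, the location names, and every latency value are hypothetical.

```python
import heapq


def fastest_route(latency_ms, source, target):
    """Dijkstra's shortest path over measured per-link latencies."""
    best = {source: 0.0}   # lowest known total latency to each node
    previous = {}          # node -> node we arrived from on the best path
    queue = [(0.0, source)]
    while queue:
        cost, node = heapq.heappop(queue)
        if node == target:
            break
        if cost > best.get(node, float("inf")):
            continue  # stale queue entry, a better path was already found
        for neighbor, link_ms in latency_ms.get(node, {}).items():
            new_cost = cost + link_ms
            if new_cost < best.get(neighbor, float("inf")):
                best[neighbor] = new_cost
                previous[neighbor] = node
                heapq.heappush(queue, (new_cost, neighbor))
    if target not in best:
        return None, float("inf")
    # Walk back from the target to rebuild the path.
    path = [target]
    while path[-1] != source:
        path.append(previous[path[-1]])
    path.reverse()
    return path, best[target]


# Hypothetical probe results in milliseconds; the direct link is congested.
probes = {
    "tokyo": {"origin": 180.0, "seattle": 90.0, "los-angeles": 100.0},
    "seattle": {"origin": 45.0},
    "los-angeles": {"origin": 50.0},
}
route, total_ms = fastest_route(probes, "tokyo", "origin")
print(route, total_ms)
```

With these made-up numbers the route through `seattle` wins at 135 ms even though a direct link exists, which is the basic idea behind routing around congestion. A real system would also have to refresh the measurements continuously and weigh packet loss and jitter, not just latency.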

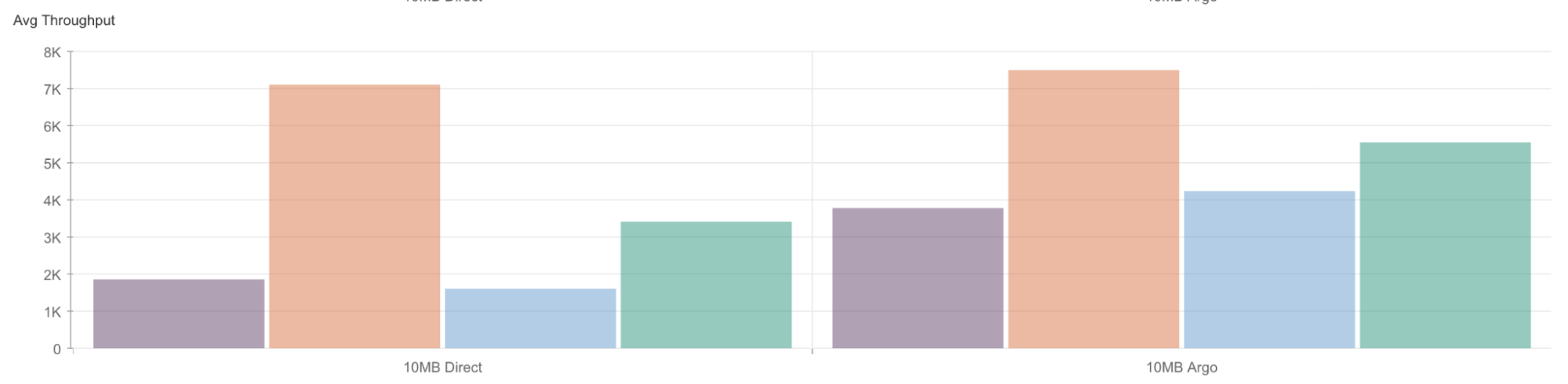

We knew that Argo Smart Routing offered impressive benefits for HTTP traffic, reducing time to first byte by up to 30% on average for customers. But UDP can be treated differently by networks, so we were curious to see if we would see a similar reduction in round-trip time for UDP. To validate, we ran a set of tests. We set up an origin in Iowa, USA and had a client connect to it from Tokyo, Japan. Compared to a regular Spectrum setup, we saw average round-trip time decrease by 17.3%. For the standard setup, Spectrum was able to proxy packets to Iowa in 173.3 milliseconds on average. Comparatively, turning on Argo Smart Routing reduced the average round-trip time to 143.3 milliseconds. The distance between those two cities is 6,074 miles (9,776 kilometers), meaning we’ve effectively moved the two closer to each other by over a thousand miles (or 1,609 km) just by turning on this feature.
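The arithmetic behind those figures can be checked in a few lines of Python. The inputs are the numbers quoted above; the one assumption, flagged in the code, is that round-trip time scales linearly with distance, which is a simplification used only to translate milliseconds into miles.

```python
baseline_rtt_ms = 173.3  # average RTT through Spectrum without Argo Smart Routing
argo_rtt_ms = 143.3      # average RTT with Argo Smart Routing turned on
distance_miles = 6074    # Tokyo to Iowa, as quoted in the text

saved_ms = baseline_rtt_ms - argo_rtt_ms
reduction = saved_ms / baseline_rtt_ms

# Assumption: RTT is proportional to distance, so a 17% faster round trip
# is treated as if the endpoints were 17% closer together.
equivalent_miles = distance_miles * reduction

print(f"{saved_ms:.1f} ms saved, {reduction:.1%} lower RTT")
print(f"roughly {equivalent_miles:.0f} miles closer")
```

The 30 ms saving works out to about 17.3% of the baseline, and under the linear assumption that corresponds to a little over 1,050 miles.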

We're incredibly excited about Argo Smart Routing for UDP and what our customers will use it for. If you're in gaming or real-time communications, or even have a different use case that you think would benefit from speeding up UDP traffic, please contact your account team today. We are currently in closed beta but are excited about accepting applications.