Post Syndicated from Nathan Disidore original http://blog.cloudflare.com/powering-platforms-on-workers/

We launched Workers for Platforms, our Workers offering for SaaS businesses, almost exactly one year ago to the day! We’ve seen a wide array of customers using Workers for Platforms: from e-commerce and CMS to low-code/no-code platforms, plus a new wave of AI businesses running tailored inference models for their end customers!

Let’s take a look back and recap why we built Workers for Platforms, show you some of the most interesting problems our customers have been solving and share new features that are now available!

What is Workers for Platforms?

SaaS businesses are all too familiar with the never-ending need to keep up with their users' feature requests. Thinking back, we introduced Workers at Cloudflare to solve this very pain point. Workers gave our customers the power to program our network to meet their specific requirements!

Need to implement complex load balancing across many origins? Write a Worker. Want a custom set of WAF rules for each region your business operates in? Go crazy, write a Worker.

We heard the same themes coming up with our customers – which is why we partnered with early customers to build Workers for Platforms. We worked with the Shopify Oxygen team early on in their journey to create a built-in hosting platform for Hydrogen, their Remix-based eCommerce framework. Shopify’s Hydrogen/Oxygen combination gives their merchants the flexibility to build out personalized shopping for buyers. It’s an experience that storefront developers can make their own, and it’s powered by Cloudflare Workers behind the scenes. For more details, check out Shopify’s “How we Built Oxygen” blog post.

Oxygen is Shopify's built-in hosting platform for Hydrogen storefronts, designed to provide users with a seamless experience in deploying and managing their ecommerce sites. Our integration with Workers for Platforms has been instrumental to our success in providing fast, globally-available, and secure storefronts for our merchants. The flexibility of Cloudflare's platform has allowed us to build delightful merchant experiences that integrate effortlessly with the best that the Shopify ecosystem has to offer.

– Lance Lafontaine, Senior Developer Shopify Oxygen

Another customer that we’ve been working very closely with is Grafbase. Grafbase started out on the Cloudflare for Startups program, building their company from the ground up on Workers. Grafbase gives their customers the ability to deploy serverless GraphQL backends instantly. On top of that, their developers can build custom GraphQL resolvers to program their own business logic right at the edge. Using Workers and Workers for Platforms means that Grafbase can focus their team on building Grafbase, rather than having to focus on building and architecting at the infrastructure layer.

Our mission at Grafbase is to enable developers to deploy globally fast GraphQL APIs without worrying about complex infrastructure. We provide a unified data layer at the edge that accelerates development by providing a single endpoint for all your data sources. We needed a way to deploy serverless GraphQL gateways for our customers with fast performance globally without cold starts. We experimented with container-based workloads and FaaS solutions, but turned our attention to WebAssembly (Wasm) in order to achieve our performance targets. We chose Rust to build the Grafbase platform for its performance, type system, and its Wasm tooling. Cloudflare Workers was a natural fit for us given our decision to go with Wasm. On top of using Workers to build our platform, we also wanted to give customers the control and flexibility to deploy their own logic. Workers for Platforms gave us the ability to deploy customer code written in JavaScript/TypeScript or Wasm straight to the edge.

– Fredrik Björk, Founder & CEO at Grafbase

Over the past year, it’s been incredible to see how quickly companies both big and small can move when they build on Workers.

New building blocks

Workers for Platforms uses Dynamic Dispatch to give our customers, like Shopify and Grafbase, the ability to run their own Worker before the code written by their developers is executed. With Dynamic Dispatch, Workers for Platforms customers (referred to as platform customers) can authenticate requests, add context to a request or run any custom code before their developers’ Workers (referred to as user Workers) are called.

This is a key building block for Workers for Platforms, but we’ve also heard requests for even more levels of visibility and control from our platform customers. Delivering on this theme, we’re releasing three new highly requested features:

Outbound Workers

Dynamic Dispatch gives platforms visibility into all incoming requests to their users’ Workers, but customers have also asked for visibility into all outgoing requests from their users’ Workers in order to do things like:

- Log all subrequests in order to identify malicious hosts or usage patterns

- Create allow or block lists for hostnames requested by user Workers

- Configure authentication to your APIs behind the scenes (without end developers needing to set credentials)

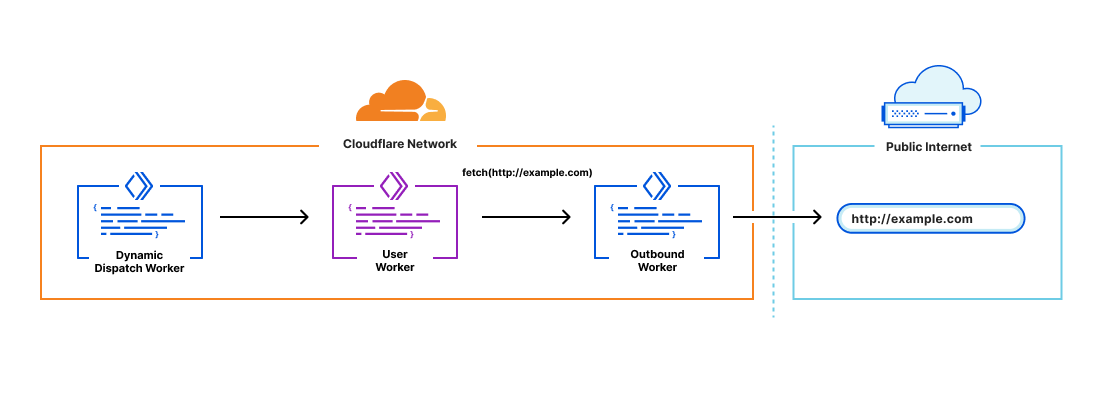

Outbound Workers sit between user Workers and fetch() requests out to the Internet. User Workers will trigger a FetchEvent on the Outbound Worker and from there platform customers have full visibility over the request before it’s sent out.

It’s also important to have context in the Outbound Worker to answer questions like “which user Worker is this request coming from?”. You can declare variables to pass through to the Outbound Worker in the dispatch namespaces binding:

    [[dispatch_namespaces]]
    binding = "dispatcher"
    namespace = "<NAMESPACE_NAME>"
    outbound = {service = "<SERVICE_NAME>", parameters = ["customer_name", "url"]}

From there, the variables declared in the binding can be accessed in the Outbound Worker through env.<VAR_NAME>.
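As a rough sketch of the use cases listed earlier, an Outbound Worker might log each subrequest and enforce a block list before forwarding it. The `BLOCKED_HOSTS` set and the `isAllowed` helper are hypothetical names introduced for illustration, and `env.customer_name` assumes the `customer_name` parameter declared in the binding above.

```javascript
// Hypothetical block list; a real platform would likely load this from storage.
const BLOCKED_HOSTS = new Set(["malicious.example"]);

// Decide whether a subrequest is allowed to leave the platform.
function isAllowed(url, blockedHosts = BLOCKED_HOSTS) {
  return !blockedHosts.has(new URL(url).hostname);
}

const outboundWorker = {
  async fetch(request, env) {
    // customer_name is assumed to be passed in by the Dynamic Dispatch Worker.
    console.log(`subrequest from ${env.customer_name}: ${request.url}`);

    if (!isAllowed(request.url)) {
      return new Response("Blocked by platform policy", { status: 403 });
    }

    // Forward the original request to its destination unchanged.
    return fetch(request);
  },
};

// In a deployed Worker module, this object would be the default export:
// export default outboundWorker;
```

Keeping the policy check in a small pure function makes it easy to test separately from the network call.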

Custom Limits

Workers are really powerful, but, as a platform, you may want guardrails around their capabilities to shape your pricing and packaging model. For example, if you run a freemium model on your platform, you may want to set a lower CPU time limit for customers on your free tier.

Custom Limits let you set usage caps for CPU time and number of subrequests on your customers’ Workers. Custom Limits are set from within your Dynamic Dispatch Worker, which means they can be scripted dynamically. They can also be combined to set limits based on script tags.

Here’s an example of a Dynamic Dispatch Worker that puts both Outbound Workers and Custom Limits together:

    export default {
      async fetch(request, env) {
        try {
          let workerName = new URL(request.url).host.split('.')[0];
          let userWorker = env.dispatcher.get(
            workerName,
            {},
            {
              // outbound arguments
              outbound: {
                customer_name: workerName,
                url: request.url,
              },
              // set limits
              limits: { cpuMs: 10, subRequests: 5 },
            }
          );
          return await userWorker.fetch(request);
        } catch (e) {
          if (e.message.startsWith('Worker not found')) {
            return new Response('', { status: 404 });
          }
          return new Response(e.message, { status: 500 });
        }
      }
    };

They’re both incredibly simple to configure, and the best part – the configuration is completely programmatic. You have the flexibility to build on both of these features with your own custom logic!
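To illustrate the freemium scenario described earlier, limits could be looked up per plan before dispatching. This is a minimal sketch: the plan names, the limit values and the `limitsForPlan` helper are all hypothetical, and how a platform determines a customer's plan is left out.

```javascript
// Hypothetical plan names and limit values, chosen only for illustration.
const PLAN_LIMITS = new Map([
  ["free", { cpuMs: 10, subRequests: 5 }],
  ["pro", { cpuMs: 50, subRequests: 50 }],
]);

// Return the limits for a plan, falling back to the most restrictive tier
// when the plan is unknown.
function limitsForPlan(plan) {
  return PLAN_LIMITS.get(plan) ?? PLAN_LIMITS.get("free");
}

// Inside the Dynamic Dispatch Worker, the result would be passed as the
// `limits` option:
//   env.dispatcher.get(workerName, {}, { limits: limitsForPlan(plan) });
```

Falling back to the free tier keeps an unrecognized plan from running without any cap.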

Tail Workers

Live logging is an essential piece of the developer experience. It allows developers to monitor for errors and troubleshoot in real time. On Workers, giving users real-time logs through wrangler tail is a feature that developers love! Now with Tail Workers, platform customers can give their users the same level of visibility to provide a faster debugging experience.

Tail Worker logs contain metadata about the original trigger event (like the incoming URL and status code for fetches), console.log() messages and any unhandled exceptions. Tail Workers can be added to the Dynamic Dispatch Worker in order to capture logs from both the Dynamic Dispatch Worker and any user Workers that are called.

A Tail Worker can be configured by adding the following to the wrangler.toml file of the producing script:

    tail_consumers = [{service = "<TAIL_WORKER_NAME>", environment = "<ENVIRONMENT_NAME>"}]

From there, events are captured in the Tail Worker using a new tail handler:

    export default {
      async tail(events) {
        // Await the request so it completes before the handler returns
        await fetch("https://example.com/endpoint", {
          method: "POST",
          body: JSON.stringify(events),
        });
      }
    };

Tail Workers are full-fledged Workers, backed by the usual Workers ecosystem. You can send events to any HTTP endpoint, for example a logging service that parses the events and passes on real-time logs to customers.
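Before forwarding events, a Tail Worker could also reduce them to readable log lines. The sketch below assumes each event carries `scriptName`, `logs` and `exceptions` fields. That shape is an assumption for illustration rather than something this post specifies, and `toLogLines` is a hypothetical helper.

```javascript
// Flatten tail events into one line per console message or exception.
// The field names used here (scriptName, logs, exceptions) are assumptions
// about the event shape.
function toLogLines(events) {
  const lines = [];
  for (const event of events) {
    const script = event.scriptName ?? "unknown";
    for (const log of event.logs ?? []) {
      // Accept either a single message or an array of console.log() arguments.
      const text = [].concat(log.message).join(" ");
      lines.push(`[${script}] ${log.level}: ${text}`);
    }
    for (const err of event.exceptions ?? []) {
      lines.push(`[${script}] exception: ${err.name}: ${err.message}`);
    }
  }
  return lines;
}

const tailWorker = {
  async tail(events) {
    // Send the condensed lines to a placeholder logging endpoint.
    await fetch("https://example.com/endpoint", {
      method: "POST",
      body: JSON.stringify(toLogLines(events)),
    });
  },
};

// In a deployed Worker module, this object would be the default export:
// export default tailWorker;
```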

Try it out!

All three of these features are now in open beta for users with access to Workers for Platforms. For more details, and to try them out for yourself, check out our developer documentation.

Workers for Platforms is an enterprise-only product (for now), but we’ve heard a lot of interest from developers. In the latter half of the year, we’ll be bringing Workers for Platforms down to our pay-as-you-go plan! In the meantime, if you’re itching to get started, reach out to us through the Cloudflare Developer Discord (channel name: workers-for-platforms).