Post Syndicated from Crosstalk Solutions original https://www.youtube.com/watch?v=P0ZPtTeVg94

How to automatically build forensic kernel modules for Amazon Linux EC2 instances

Post Syndicated from Jonathan Nguyen original https://aws.amazon.com/blogs/security/how-to-automatically-build-forensic-kernel-modules-for-amazon-linux-ec2-instances/

In this blog post, we will walk you through the EC2 forensic module factory solution to deploy automation to build forensic kernel modules that are required for Amazon Elastic Compute Cloud (Amazon EC2) incident response automation.

When an EC2 instance is suspected to have been compromised, it’s strongly recommended to investigate what happened to the instance. You should look for activities such as:

- Open network connections

- List of running processes

- Processes that contain injected code

- Memory-resident infections

- Other forensic artifacts

When an EC2 instance is compromised, it’s important to take action as quickly as possible. Before you shut down the EC2 instance, you first need to capture the contents of its volatile memory (RAM) in a memory dump because it contains the instance’s in-progress operations. This is key in determining the root cause of compromise.

In order to capture volatile memory in Linux, you can use a tool like Linux Memory Extractor (LiME). This requires you to have the kernel modules that are specific to the kernel version of the instance for which you want to capture volatile memory. We also recommend that you limit the actions you take on the instance where you are trying to capture the volatile memory in order to minimize the set of artifacts created as part of the capture process, so you need a method to build the tools for capturing volatile memory outside the instance under investigation. After you capture the volatile memory, you can use a tool like Volatility2 to analyze it in a dedicated forensics environment. You can use tools like LiME and Volatility2 on EC2 instances that use x86, x64, and Graviton instance types.

Prerequisites

This solution has the following prerequisites:

- The target EC2 instance uses the Amazon Linux 2 operating system.

- An AWS Identity and Access Management (IAM) role with permissions to deploy the required resources in an AWS account. More details about these permissions follow in the next section.

Solution overview

The EC2 forensic module factory solution consists of the following resources:

- One AWS Step Functions workflow

- Two AWS Lambda functions

- One AWS Systems Manager document (SSM document)

Important: The SSM document clones the LiME and Volatility2 GitHub repositories, and these tools use version 2.0 of the GNU General Public License. This SSM document can be updated to include your preferred tools, like fmem or Volatility3, for forensic analysis and capture.

- One Amazon Simple Storage Service (Amazon S3) bucket

- One Amazon Virtual Private Cloud (Amazon VPC)

- One security group for the EC2 instance that is provisioned during the automation

- The solution uses the following VPC endpoints for AWS services:

- ec2_endpoint

- ec2_msg_endpoint

- kms_endpoint

- ssm_endpoint

- ssm_msg_endpoint

- s3_endpoint

Figure 1 shows an overview of the EC2 forensic module factory solution workflow.

Figure 1: Automation to build forensic kernel modules for an Amazon Linux EC2 instance

The EC2 forensic module factory solution workflow in Figure 1 includes the following numbered steps:

- A Step Functions workflow is started, which creates a Step Functions task token and invokes the first Lambda function, createEC2module, to create EC2 forensic modules.

- A Step Functions task token is used to allow long-running processes to complete and to avoid a Lambda timeout error. The createEC2module function runs for approximately 9 minutes. The run time for the function can vary depending on any customizations to the createEC2module function or the SSM document.

- The createEC2module function launches an EC2 instance based on the Amazon Machine Image (AMI) provided.

- Once the EC2 instance is running, an SSM document is run, which includes the following steps:

- If a specific kernel version is provided in step 1, this kernel version will be installed on the EC2 instance. If no kernel version is provided, the default kernel version on the EC2 instance will be used to create the modules.

- If a specific kernel version was selected and installed, the system is rebooted to use this kernel version.

- The prerequisite build tools are installed, as well as the LiME and Volatility2 packages.

- The LiME kernel module and the Volatility2 profile are built.

- The kernel modules for LiME and Volatility2 are put into the S3 bucket.

- Upon completion, the Step Functions task token is sent back to the Step Functions workflow, which then invokes the second Lambda function, cleanupEC2module, to terminate the EC2 instance that was launched in step 2.
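The task token handoff in the steps above can be sketched as follows. This is a minimal illustration, not the solution's actual code: the function names, the output fields, and the `BuildFailed` error name are assumptions, and `sfn_client` is expected to behave like `boto3.client("stepfunctions")`, whose `send_task_success` and `send_task_failure` calls take the parameters shown.

```python
import json


def report_build_finished(sfn_client, task_token, bucket, kernel_version):
    """Resume the waiting Step Functions state after the module build ends.

    sfn_client is assumed to behave like boto3.client("stepfunctions").
    The output fields are hypothetical; the real solution may return others.
    """
    output = {"bucket": bucket, "kernelversion": kernel_version}
    # The state that issued the token stays paused until this call arrives.
    sfn_client.send_task_success(taskToken=task_token, output=json.dumps(output))
    return output


def report_build_failed(sfn_client, task_token, reason):
    """Fail the waiting state so the workflow can still run its cleanup path."""
    # "BuildFailed" is an illustrative error name, not one defined by the solution.
    sfn_client.send_task_failure(taskToken=task_token, error="BuildFailed", cause=reason)
```

Because the workflow waits on the token rather than on the Lambda function itself, the build can run longer than a single Lambda invocation would allow.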

Solution deployment

You can deploy the EC2 forensic module factory solution by using either the AWS Management Console or the AWS Cloud Development Kit (AWS CDK).

Option 1: Deploy the solution with AWS CloudFormation (console)

Sign in to your preferred security tooling account in the AWS Management Console, and choose the following Launch Stack button to open the AWS CloudFormation console pre-loaded with the template for this solution. It will take approximately 10 minutes for the CloudFormation stack to complete.

Option 2: Deploy the solution by using the AWS CDK

You can find the latest code for the EC2 forensic module factory solution in the ec2-forensic-module-factory GitHub repository, where you can also contribute to the sample code. For instructions and more information on using the AWS CDK, see Get Started with AWS CDK.

To deploy the solution by using the AWS CDK

- To build the app, navigate to the project’s root folder and run the following commands.

npm install -g aws-cdk

npm install

- Run the following commands in your terminal while authenticated in your preferred security tooling AWS account. Be sure to replace <INSERT_AWS_ACCOUNT> with your account number, and replace <INSERT_REGION> with the AWS Region that you want the solution deployed to.

cdk bootstrap aws://<INSERT_AWS_ACCOUNT>/<INSERT_REGION>

cdk deploy

Run the solution to build forensic kernel objects

Now that you’ve deployed the EC2 forensic module factory solution, you need to invoke the Step Functions workflow in order to create the forensic kernel objects. The following is an example of manually invoking the workflow, to help you understand what actions are being performed. These actions can also be integrated and automated with an EC2 incident response solution.

To manually invoke the workflow to create the forensic kernel objects (console)

- In the AWS Management Console, sign in to the account where the solution was deployed.

- In the AWS Step Functions console, select the state machine named create_ec2_volatile_memory_modules.

- Choose Start execution.

- At the input prompt, enter the following JSON values.

{

"AMI_ID": "ami-0022f774911c1d690",

"kernelversion":"kernel-4.14.104-95.84.amzn2.x86_64"

}

- Choose Start execution to start the workflow, as shown in Figure 2.

Figure 2: Step Functions step input example to build custom kernel version using Amazon Linux 2 AMI ID
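If you generate the workflow input from a script instead of typing it into the console, a small helper along these lines can build the same JSON. This is a sketch under assumptions: the function name and the AMI ID check are illustrative, and omitting `kernelversion` to request the AMI's default kernel is an assumption about how the workflow treats a missing key.

```python
import json
import re

# AMI IDs are "ami-" followed by 8 or 17 hexadecimal characters.
AMI_ID_PATTERN = re.compile(r"^ami-(?:[0-9a-f]{8}|[0-9a-f]{17})$")


def build_workflow_input(ami_id, kernel_version=None):
    """Return the JSON string passed as the Step Functions execution input."""
    if not AMI_ID_PATTERN.match(ami_id):
        raise ValueError(f"not a valid AMI ID: {ami_id!r}")
    payload = {"AMI_ID": ami_id}
    if kernel_version:
        # Key names match the console example in this post.
        payload["kernelversion"] = kernel_version
    return json.dumps(payload)


if __name__ == "__main__":
    print(build_workflow_input("ami-0022f774911c1d690",
                               "kernel-4.14.104-95.84.amzn2.x86_64"))
```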

Workflow progress

You can use the AWS Management Console to follow the progress of the Step Functions workflow. If the workflow is successful, its status view should look like the example shown in Figure 3.

Figure 3: Step Functions workflow success example

Note: The Step Functions workflow run time depends on the commands that are being run in the SSM document. The example SSM document included in this post runs for approximately 9 minutes. For information about possible Step Functions errors, see Error handling in Step Functions.

To verify that the artifacts are built

- After the Step Functions workflow has successfully completed, go to the S3 bucket that was provisioned in the EC2 forensic module factory solution.

- Look for two prefixes in the bucket for LiME and Volatility2, as shown in Figure 4.

Figure 4: S3 bucket prefix for forensic kernel modules

- Open each tool name prefix in S3 to find the actual module, such as in the following examples:

- LiME example: lime-4.14.104-95.84.amzn2.x86_64.ko

- Volatility2 example: 4.14.104-95.84.amzn2.x86_64.zip

Now that the objects have been created, the solution has successfully completed.
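The example file names above follow directly from the kernel package name given in the workflow input. The helper below is a rough sketch of that mapping, inferred only from the two examples in this post; the function name is hypothetical, and the S3 prefixes are deliberately left out because their exact names are not stated here.

```python
def module_object_names(kernel_package):
    """Derive the expected artifact file names for a kernel package name.

    The naming pattern is inferred from the examples in this post and may
    differ if the SSM document has been customized.
    """
    prefix = "kernel-"
    # "kernel-4.14.104-95.84.amzn2.x86_64" -> "4.14.104-95.84.amzn2.x86_64"
    if kernel_package.startswith(prefix):
        version = kernel_package[len(prefix):]
    else:
        version = kernel_package
    return {
        "lime": f"lime-{version}.ko",
        "volatility2": f"{version}.zip",
    }
```

An external incident response workflow could use the same mapping to decide which objects to look for before it attempts a memory capture.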

Incorporate forensic module builds into an EC2 AMI pipeline

Each organization has specific requirements for allowing application teams to use various EC2 AMIs, and organizations commonly implement an EC2 image pipeline using tools like EC2 Image Builder. EC2 Image Builder uses recipes to install and configure required components in the AMI before application teams can launch EC2 instances in their environment.

The EC2 forensic module factory solution we implemented here makes use of an existing EC2 instance AMI. As mentioned, the solution uses an SSM document to create forensic modules. The logic in the SSM document could be incorporated into your EC2 image pipeline to create the forensic modules and store them in an S3 bucket. S3 also allows additional layers of protection such as enforcing default bucket encryption with an AWS Key Management Service Customer Managed Key (CMK), verifying S3 object integrity with checksum, S3 Object Lock, and restrictive S3 bucket policies. These protections can help you to ensure that your forensic modules have not been modified and are only accessible by authorized entities.
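As one illustration of the integrity check mentioned above, the following sketch computes a base64-encoded SHA-256 digest of a downloaded module and compares it with a value recorded at build time. The function names are hypothetical, and how you record and retrieve the expected value (for example, from S3 object checksum metadata for a single-part upload) is left as an assumption.

```python
import base64
import hashlib
import hmac


def sha256_base64(path, chunk_size=1024 * 1024):
    """Return the base64-encoded SHA-256 digest of the file at path."""
    digest = hashlib.sha256()
    with open(path, "rb") as handle:
        # Read in chunks so large files do not need to fit in memory.
        for chunk in iter(lambda: handle.read(chunk_size), b""):
            digest.update(chunk)
    return base64.b64encode(digest.digest()).decode("ascii")


def module_is_unmodified(path, expected_checksum):
    """Compare the local file's digest with the checksum recorded at build time."""
    return hmac.compare_digest(sha256_base64(path), expected_checksum)
```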

It is important to note that incorporating forensic module creation into an EC2 AMI pipeline will build forensic modules for the specific kernel version used in that AMI. You would still need to employ this EC2 forensic module solution to build a specific forensic module version if it is missing from the S3 bucket where you are creating and storing these forensic modules. The need to do this can arise if the EC2 instance is updated after the initial creation of the AMI.

Incorporate the solution into existing EC2 incident response automation

There are many existing solutions to automate incident response workflow for quarantining and capturing forensic evidence for EC2 instances, but the majority of EC2 incident response automation solutions have a single dependency in common, which is the use of specific forensic modules for the target EC2 instance kernel version. The EC2 forensic module factory solution in this post enables you to be both proactive and reactive when building forensic kernel modules for your EC2 instances.

You can use the EC2 forensic module factory solution in two different ways:

- Ad-hoc – In this post, you walked through the solution by running the Step Functions workflow with specific parameters. You can do this to build a repository of kernel modules.

- Automated – Alternatively, you can incorporate this solution into existing automation by invoking the Step Functions workflow and passing the AMI ID and kernel version. An example could be the following:

- An existing EC2 incident response solution attempts to get the forensic modules to capture the volatile memory from an S3 bucket.

- If the specific kernel version is missing in the S3 bucket, the incident response solution calls StartExecution on the create_ec2_volatile_memory_modules state machine.

- The Step Functions workflow builds the specific forensic modules.

- After the Step Functions workflow is complete, the EC2 incident response solution restarts its workflow to get the forensic modules to capture the volatile memory on the EC2 instance.
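The automated flow above boils down to a check-then-build decision. The sketch below shows that decision with the AWS calls injected as plain callables, so it stays independent of any SDK. The function and parameter names are hypothetical: `object_exists` could wrap an S3 HeadObject call and `start_build` could wrap StartExecution on the state machine.

```python
import json


def ensure_forensic_modules(object_exists, start_build, lime_key, ami_id, kernel_version):
    """Return "ready" if the LiME module exists, otherwise trigger a build.

    object_exists(key) -> bool and start_build(input_json) are supplied by
    the caller; both are assumptions of this sketch, not part of the solution.
    """
    if object_exists(lime_key):
        return "ready"
    # Same input shape as the manual console example in this post.
    start_build(json.dumps({"AMI_ID": ami_id, "kernelversion": kernel_version}))
    return "build_started"
```

When the result is "build_started", the caller would wait for the workflow to finish and then retry fetching the modules, as described in the last step of the list.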

Now that you have the kernel modules, you can both capture the volatile memory by using LiME, and then conduct analysis on the memory dump by using a Volatility2 profile.

To capture and analyze volatile memory on the target EC2 instance (high-level steps)

- Copy the LiME module from the S3 bucket holding the module repository to the target EC2 instance.

- Capture the volatile memory by using the LiME module.

- Stream the volatile memory dump to an S3 bucket.

- Launch an EC2 forensic workstation instance, with Volatility2 installed.

- Copy the Volatility2 profile from the S3 bucket to the appropriate location.

- Copy the volatile memory dump to the EC2 forensic workstation.

- Run analysis on the volatile memory with Volatility2 by using the specific Volatility2 profile created for the target EC2 instance.
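For orientation, the capture and analysis steps typically come down to two commands: loading the LiME module with an output path and format, and running a Volatility2 plugin against the dump with the matching profile. The helpers below only build those command lines. The function names, the `python2 vol.py` invocation, and all paths are assumptions, and this sketch writes the dump to a local path rather than streaming it to S3.

```python
def lime_capture_command(module_path, dump_path):
    """Build the insmod command that loads LiME and writes a memory dump."""
    # LiME takes its settings as module parameters: path and format.
    return ["insmod", module_path, f"path={dump_path}", "format=lime"]


def volatility2_command(dump_path, profile, plugin="linux_pslist"):
    """Build a Volatility2 command for one Linux plugin.

    profile is the name Volatility2 reports for the profile zip built for
    the target kernel; it is passed in rather than guessed here.
    """
    return ["python2", "vol.py", f"--profile={profile}", "-f", dump_path, plugin]
```

Other Linux plugins, such as linux_netstat for open network connections, can be passed as the plugin argument.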

Automated self-service AWS solution

AWS has also released the Automated Forensics Orchestrator for Amazon EC2 solution that you can use to quickly set up and configure a dedicated forensics orchestration automation solution for your security teams. The Automated Forensics Orchestrator for Amazon EC2 allows you to capture and examine the data from EC2 instances and attached Amazon Elastic Block Store (Amazon EBS) volumes in your AWS environment. This data is collected as forensic evidence for analysis by the security team.

The Automated Forensics Orchestrator for Amazon EC2 creates the foundational components to enable the EC2 forensic module factory solution’s memory forensic acquisition workflow and forensic investigation and reporting service. The Automated Forensics Orchestrator for Amazon EC2 and the EC2 forensic module factory are hosted in different GitHub projects, so you will need to reconcile the expected S3 bucket locations for the associated modules:

- Automated Forensics Orchestrator for Amazon EC2 modules: S3 bucket location for LiME and S3 bucket location for Volatility2

- EC2 forensic module factory modules: S3 bucket location for LiME and S3 bucket location for Volatility2

Customize the EC2 forensic module factory solution

The SSM document pulls open-source packages to build tools for the specific Linux kernel version. You can update the SSM document to your specific requirements for forensic analysis, including expanding support for other operating systems, versions, and tools.

You can also update the S3 object naming convention and object tagging, to allow external solutions to reference and copy the appropriate kernel module versions to enable the forensic workflow.

Clean up

If you deployed the EC2 forensic module factory solution by using the Launch Stack button in the AWS Management Console or the CloudFormation template ec2_module_factory_cfn, do the following to clean up:

- In the AWS CloudFormation console for the account and Region where you deployed the solution, choose the Ec2VolModules stack.

- Choose the option to Delete the stack.

If you deployed the solution by using the AWS CDK, run the following command.

cdk destroy

Conclusion

In this blog post, we walked you through the deployment and use of the EC2 forensic module factory solution to use AWS Step Functions, AWS Lambda, AWS Systems Manager, and Amazon EC2 to create specific versions of forensic kernel modules for Amazon Linux EC2 instances.

The solution provides a framework to create the foundational components required in an EC2 incident response automation solution. You can customize the solution to your needs to fit into an existing EC2 automation, or you can deploy this solution in tandem with the Automated Forensics Orchestrator for Amazon EC2.

If you have feedback about this post, submit comments in the Comments section below. If you have any questions about this post, start a thread on re:Post.

Want more AWS Security news? Follow us on Twitter.

Experience AI with the Raspberry Pi Foundation and DeepMind

Post Syndicated from Philip Colligan original https://www.raspberrypi.org/blog/experience-ai-deepmind-ai-education/

I am delighted to announce a new collaboration between the Raspberry Pi Foundation and a leading AI company, DeepMind, to inspire the next generation of AI leaders.

The Raspberry Pi Foundation’s mission is to enable young people to realise their full potential through the power of computing and digital technologies. Our vision is that every young person — whatever their background — should have the opportunity to learn how to create and solve problems with computers.

With the rapid advances in artificial intelligence — from machine learning and robotics, to computer vision and natural language processing — it’s increasingly important that young people understand how AI is affecting their lives now and the role that it can play in their future.

Experience AI is a new collaboration between the Raspberry Pi Foundation and DeepMind that aims to help young people understand how AI works and how it is changing the world. We want to inspire young people about the careers in AI and help them understand how to access those opportunities, including through their subject choices.

Experience AI

More than anything, we want to make AI relevant and accessible to young people from all backgrounds, and to make sure that we engage young people from backgrounds that are underrepresented in AI careers.

The program has two strands: Inspire and Experiment.

Inspire: To engage and inspire students about AI and its impact on the world, we are developing a set of free learning resources and materials including lesson plans, assembly packs, videos, and webinars, alongside training and support for educators. This will include an introduction to the technologies that enable AI; how AI models are trained; how to frame problems for AI to solve; the societal and ethical implications of AI; and career opportunities. All of this will be designed around real-world and relatable applications of AI, engaging a wide range of diverse interests and useful to teachers from different subjects.

Experiment: Building on the excitement generated through Inspire, we are also designing an AI challenge that will support young people to experiment with AI technologies and explore how these can be used to solve real-world problems. This will provide an opportunity for students to get hands-on with technology and data, along with support for educators.

Our initial focus is learners aged 11 to 14 in the UK. We are working with teachers, students, and DeepMind engineers to ensure that the materials and learning experiences are engaging and accessible to all, and that they reflect the latest AI technologies and their application.

As with all of our work, we want to be research-led and the Raspberry Pi Foundation research team has been working over the past year to understand the latest research on what works in AI education.

Next steps

Development of the Inspire learning materials is underway now, and we will release the whole set of resources early in 2023. Throughout 2023, we will design and pilot the Experiment challenge.

If you want to stay up to date with Experience AI, or if you’d like to be involved in testing the materials, fill in this form to register your interest.

The post Experience AI with the Raspberry Pi Foundation and DeepMind appeared first on Raspberry Pi.

[$] BPF for HID drivers

Post Syndicated from original https://lwn.net/Articles/909109/

The Human Interface Device (HID) standard dates back to the Windows 95 era. It describes how devices like mice and keyboards present themselves to the host computer, and has created a world where a single driver can handle a wide variety of devices from multiple manufacturers. Or it would have, if there weren’t actual device manufacturers involved. In the real world, devices stretch and break the standard, each in its own special way. At the 2022 Linux Plumbers Conference, Benjamin Tissoires described how BPF can be used to simplify the task of supporting HID devices.

Security updates for Monday

Post Syndicated from original https://lwn.net/Articles/909439/

Security updates have been issued by Debian (expat and poppler), Fedora (dokuwiki), Gentoo (fetchmail, grub, harfbuzz, libaacplus, logcheck, mrxvt, oracle jdk/jre, rizin, smarty, and smokeping), Mageia (tcpreplay, thunderbird, and webkit2), SUSE (dpdk, permissions, postgresql14, puppet, and webkit2gtk3), and Ubuntu (linux-gkeop and sosreport).

INSANE R44 Helicopter Flight – OG loved it!

Post Syndicated from digiblurDIY original https://www.youtube.com/watch?v=AfOekoyZAoc

Bringing Zero Trust to mobile network operators

Post Syndicated from Mike Conlow original https://blog.cloudflare.com/zero-trust-for-mobile-operators/

At Cloudflare, we’re excited about the quickly-approaching 5G future. Increasingly, we’ll have access to high throughput and low-latency wireless networks wherever we are. It will make the Internet feel instantaneous, and we’ll find new uses for this connectivity such as sensors that will help us be more productive and energy-efficient. However, this type of connectivity doesn’t have to come at the expense of security, a concern raised in this recent Wired article. Today we’re announcing the creation of a new partnership program for mobile networks—Zero Trust for Mobile Operators—to jointly solve the biggest security and performance challenges.

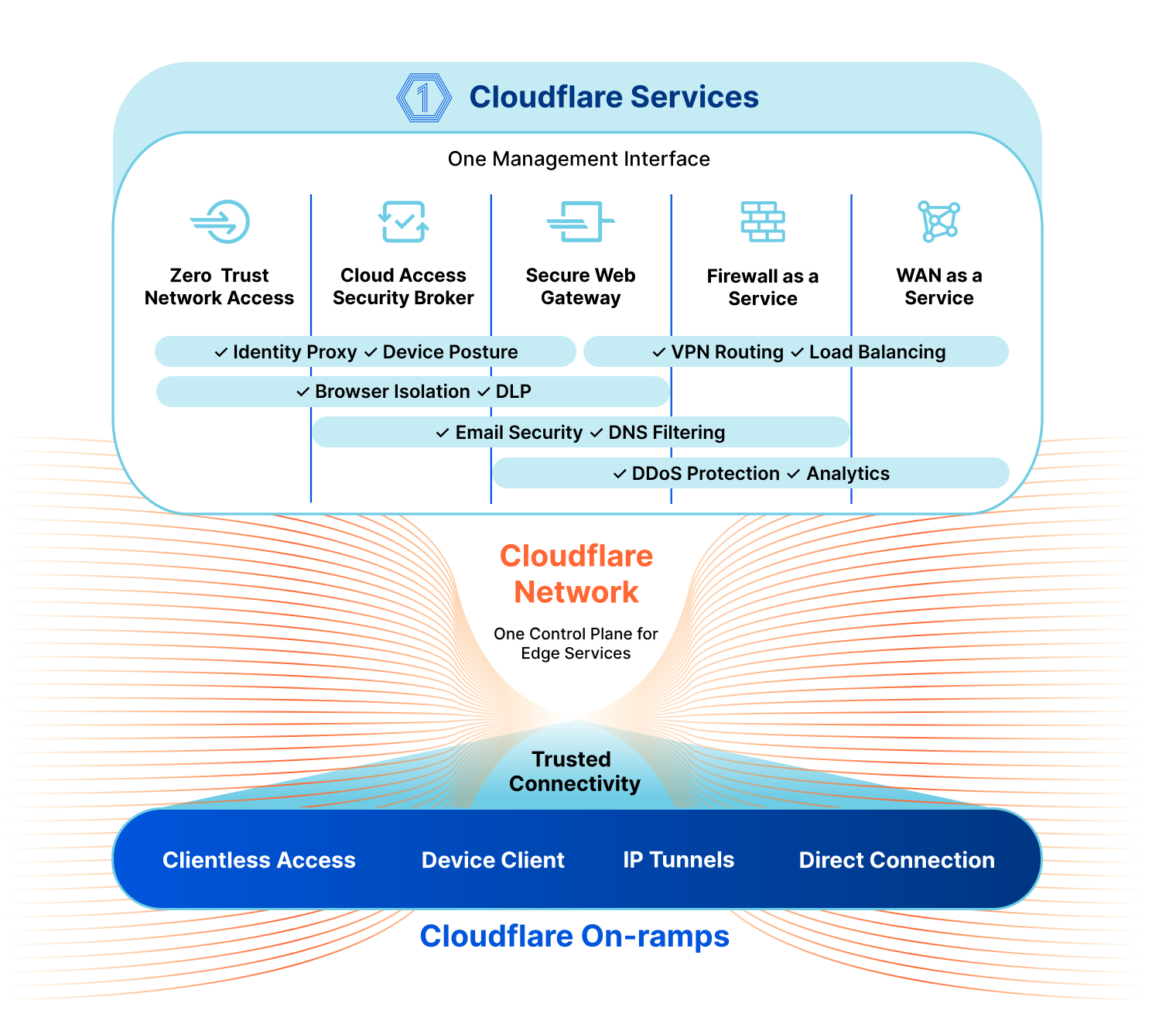

SASE for Mobile Networks

Every network is different, and the key to managing the complicated security environment of an enterprise network is having lots of tools in the toolbox. Most of these functions fall under the industry buzzword SASE, which stands for Secure Access Service Edge. Cloudflare’s SASE product is Cloudflare One, and it’s a comprehensive platform for network operators. It includes:

- Magic WAN, which offers secure Network-as-a-Service (NaaS) connectivity for your data centers, branch offices and cloud VPCs and integrates with your legacy MPLS networks

- Cloudflare Access, which is a Zero Trust Network Access (ZTNA) service requiring strict verification for every user and every device before authorizing them to access internal resources.

- Gateway, our Secure Web Gateway, which operates between a corporate network and the Internet to enforce security policies and protect company data.

- A Cloud Access Security Broker, which monitors the network and external cloud services for security threats.

- Cloudflare Area 1, an email threat detection tool to scan email for phishing, malware, and other threats.

We’re excited to partner with mobile network operators for these services because our networks and services are tremendously complementary. Let’s first think about SD-WAN (Software-Defined Wide Area Network) connectivity, which is the foundation on which much of the SASE framework rests. As an example, imagine a developer working from home developing a solution with a Mobile Network Operator’s (MNO) Internet of Things APIs. Maybe they’re developing tracking software for the number of drinks left in a soda machine, or want to track the routes for delivery trucks.

The developer at home and their fleet of devices should be on the same wide area network, securely, and at reasonable cost. What Cloudflare provides is the programmable software layer that enables this secure connectivity. The developer and the developer’s employer still need to have connectivity to the Internet at home, and for the fleet of devices. The ability to make a secure connection to your fleet of devices doesn’t do any good without enterprise connectivity, and the enterprise connectivity is only more valuable with the secure connection running on top of it. They’re the perfect match.

Once the connectivity is established, we can layer on a Zero Trust platform to ensure every user can only access a resource to which they’ve been explicitly granted permission. Any time a user wants to access a protected resource – via ssh, to a cloud service, etc. – they’re challenged to authenticate with their single-sign-on credentials before being allowed access. The networks we use are growing and becoming more distributed. A Zero Trust architecture enables that growth while protecting against known risks.

Edge Computing

Given the potential of low-latency 5G networks, consumers and operators are both waiting for a “killer 5G app”. Maybe it will be autonomous vehicles and virtual reality, but our bet is on a quieter revolution: moving compute – the “work” that a server needs to do to respond to a request – from big regional data centers to small city-level data centers, embedding the compute capacity inside wireless networks, and eventually even to the base of cell towers.

Cloudflare’s edge compute platform is called Workers, and it does exactly this – execute code at the edge. It’s designed to be simple. When a developer is building an API to support their product or service, they don’t want to worry about regions and availability zones. With Workers, a developer writes code they want executed at the edge, deploys it, and within seconds it’s running at every Cloudflare data center globally.

Some workloads we already see, and expect to see more of, include:

- IoT (Internet of Things) companies implementing complex device logic and security features directly at the edge, letting them add cutting-edge capabilities without adding cost or latency to their devices.

- eCommerce platforms storing and caching customized assets close to their visitors for improved customer experience and great conversion rates.

- Financial data platforms, including new Web3 players, providing near real-time information and transactions to their users.

- A/B testing and experimentation run at the edge without adding latency or introducing dependencies on the client-side.

- Fitness-type devices tracking a user’s movement and health statistics can offload compute-heavy workloads while maintaining great speed/latency.

- Retail applications providing fast service and a customized experience for each customer without an expensive on-prem solution.

The Cloudflare Case Studies section has additional examples from NCR, Edgemesh, BlockFi, and others on how they’re using the Workers platform. While these examples are exciting, we’re most excited about providing the platform for new innovation.

You may have seen last week we announced Workers for Platforms is now in General Availability. Workers for Platforms is an umbrella-like structure that allows a parent organization to enable Workers for their own customers. As an MNO, your focus is on providing the means for devices to send communication to clients. For IoT use cases, sending data is the first step, but the exciting potential of this connectivity is the applications it enables. With Workers for Platforms, MNOs can expose an embedded product that allows customers to access compute power at the edge.

Network Infrastructure

The complementary nature of mobile networks and Cloudflare’s network is another area of opportunity. When a user is interacting with the Internet, one of the most important factors for the speed of their connection is the physical distance from their handset to the content and services they’re trying to access. If the data request from a user in Denver needs to wind its way to one of the major Internet hubs in Dallas, San Jose, or Chicago (and then all the way back!), that is going to be slow. But if the MNO can link to the service locally in Denver, the connection will be much faster.

One of the exciting developments with new 5G networks is the ability of MNOs to do more “local breakout”. Many MNOs are moving towards cloud-native and distributed radio access networks (RANs), which provide more flexibility to move and multiply packet cores. These packet cores are the heart of a mobile network and all of a subscriber’s data flows through one.

For Cloudflare – with a data center presence in 275+ cities globally – a user never has to wait long for our services. We can also take it a step further. In some cases, our services are embedded within the MNO or ISP’s own network. The traffic which connects a user to a device, authorizes the connection, and securely transmits data is all within the network boundary of the MNO – it never needs to touch the public Internet, incur added latency, or otherwise compromise the performance for your subscribers.

We’re excited to partner with mobile networks because our security services work best when our customers have excellent enterprise connectivity underneath. Likewise, we think mobile networks can offer more value to their customers with our security software added on top. If you’d like to talk about how to integrate Cloudflare One into your offerings, please email us at [email protected], and we’ll be in touch!

Securing the Internet of Things

Post Syndicated from Matt Silverlock original https://blog.cloudflare.com/rethinking-internet-of-things-security/

It’s hard to imagine life without our smartphones. Whereas computers were mostly fixed and often shared, smartphones meant that every individual on the planet became a permanent, mobile node on the Internet — with some 6.5B smartphones on the planet today.

While that represents an explosion of devices on the Internet, it will be dwarfed by the next stage of the Internet’s evolution: connecting devices to give them intelligence. Already, Internet of Things (IoT) devices represent somewhere in the order of double the number of smartphones connected to the Internet today — and unlike smartphones, this number is expected to continue to grow tremendously, since they aren’t bound to the number of humans that can carry them.

But the exponential growth in devices has brought with it an explosion in risk. We’ve been defending against DDoS attacks from Internet of Things (IoT) driven botnets like Mirai and Meris for years now. They keep growing, because securing IoT devices still remains challenging, and manufacturers are often not incentivized to secure them. This has driven NIST (the U.S. National Institute of Standards and Technology) to actively define requirements to address the (lack of) IoT device security, and the EU isn’t far behind.

It’s also the type of problem that Cloudflare solves best.

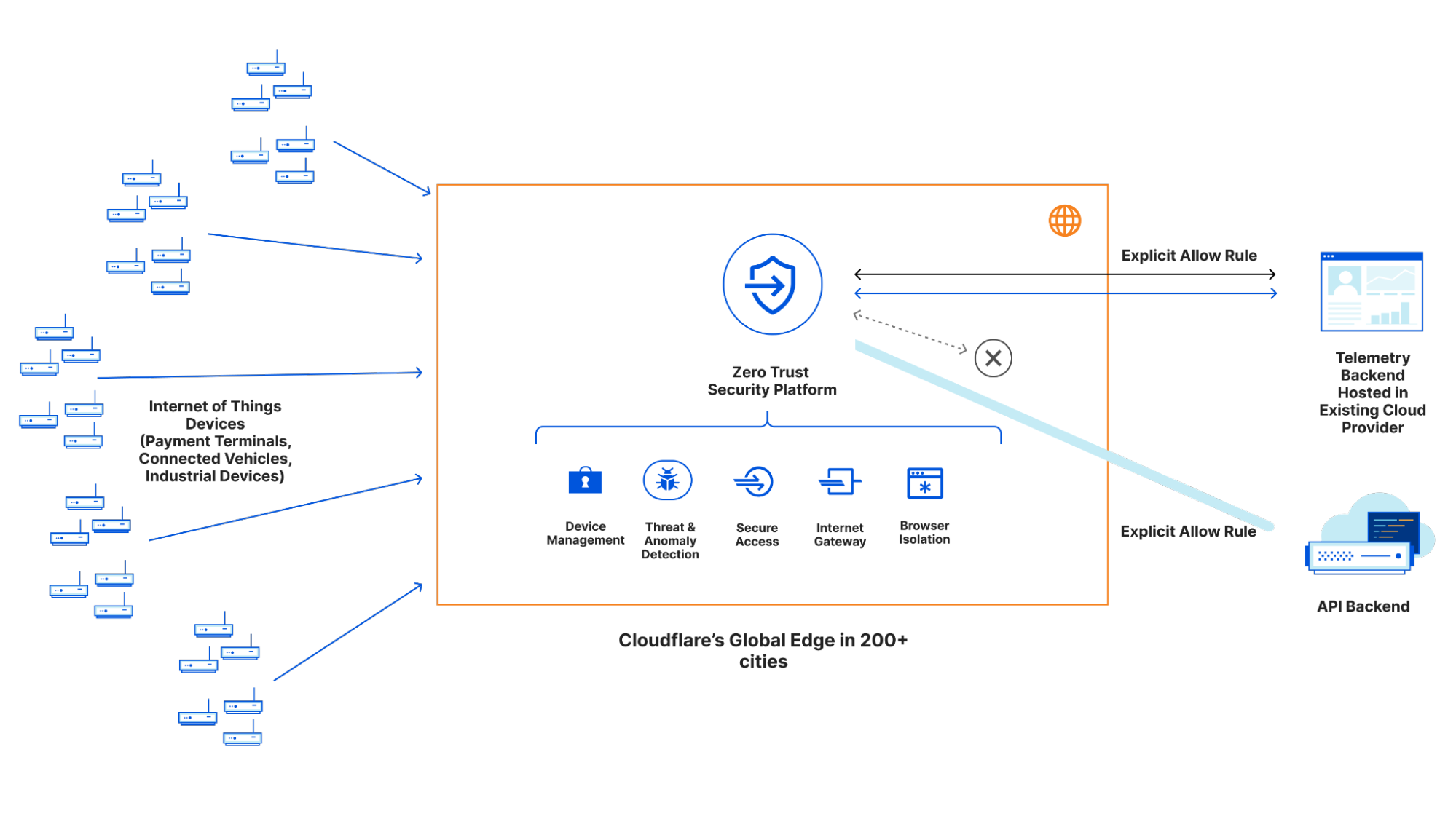

Today, we’re excited to announce our Internet of Things platform: with the goal to provide a single pane-of-glass view over your IoT devices, provision connectivity for new devices, and critically, secure every device from the moment it powers on.

Not just lightbulbs

It’s common to immediately think of lightbulbs or simple motion sensors when you read “IoT”, but that’s because we often don’t consider many of the devices we interact with on a daily basis as an IoT device.

Think about:

- Almost every payment terminal

- Any modern car with an infotainment or GPS system

- Millions of industrial devices that power — and are critical to — logistics services, industrial processes, and manufacturing businesses

You especially may not realize that nearly every one of these devices has a SIM card, and connects over a cellular network.

Cellular connectivity has become increasingly ubiquitous, and if the device can connect independently of Wi-Fi network configuration (and work out of the box), you’ve immediately avoided a whole class of operational support challenges. If you’ve just read our earlier announcement about the Zero Trust SIM, you’re probably already seeing where we’re headed.

Hundreds of thousands of IoT devices already securely connect to our network today using mutual TLS and our API Shield product. Major device manufacturers use Workers and our Developer Platform to offload authentication, compute and most importantly, reduce the compute needed on the device itself. Cloudflare Pub/Sub, our programmable, MQTT-based messaging service, is yet another building block.

But we realized there were still a few missing pieces: device management, analytics and anomaly detection. There are a lot of “IoT SIM” providers out there, but the clear majority are focused on shipping SIM cards at scale (great!) and less so on the security side (not so great) or the developer side (also not great). Customers have been telling us that they wanted a way to easily secure their IoT devices, just as they secure their employees with our Zero Trust platform.

Cloudflare’s IoT Platform will build in support for provisioning cellular connectivity at scale: we’ll support ordering, provisioning and managing cellular connectivity for your devices. Every packet that leaves each IoT device can be inspected, approved or rejected by policies you create before it reaches the Internet, your cloud infrastructure, or your other devices.

Emerging standards like IoT SAFE will also allow us to use the SIM card as a root-of-trust, storing device secrets (and API keys) securely on the device, whilst raising the bar to compromise.

This also doesn’t mean we’re leaving the world of mutual TLS behind: we understand that not every device makes sense to connect solely over a cellular network, be it due to per-device costs, lack of coverage, or the need to support an existing deployment that can’t just be re-deployed.

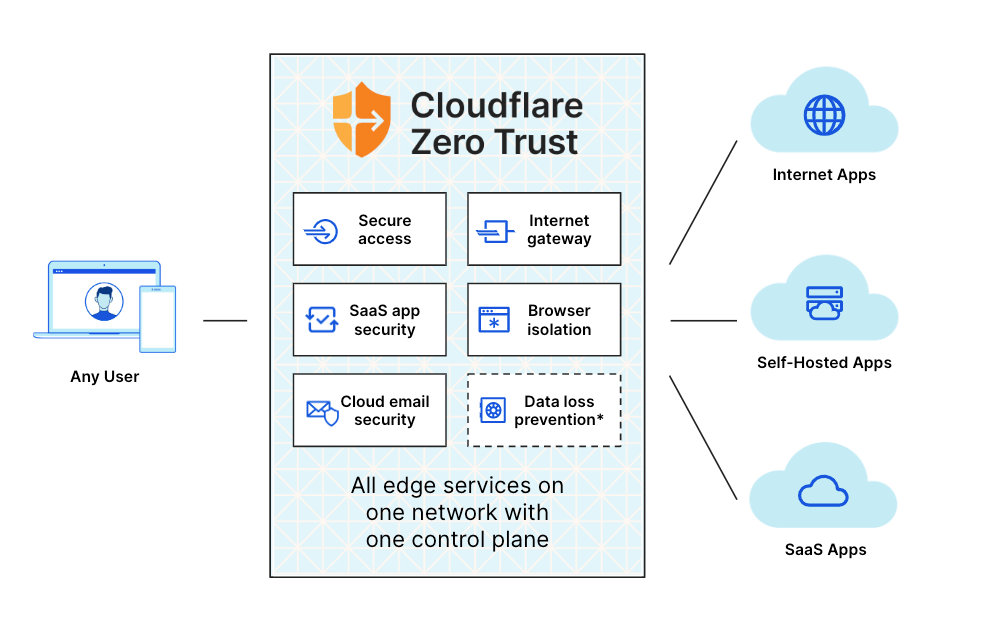

Bringing Zero Trust security to IoT

Unlike humans, who need to be able to access a potentially unbounded number of destinations (websites), the endpoints that an IoT device needs to speak to are typically far more bounded. But in practice, there are often few controls in place (or available) to ensure that a device only speaks to your API backend, your storage bucket, and/or your telemetry endpoint.

Our Zero Trust platform, however, has a solution for this: Cloudflare Gateway. You can create DNS, network or HTTP policies, and allow or deny traffic based not only on the source or destination, but on richer identity- and location- based controls. It seemed obvious that we could bring these same capabilities to IoT devices, and allow developers to better restrict and control what endpoints their devices talk to (so they don’t become part of a botnet).

At the same time, we also identified ways to extend Gateway to be aware of IoT device specifics. For example, imagine you’ve provisioned 5,000 IoT devices, all connected over cellular directly into Cloudflare’s network. You can then choose to lock these devices to a specific geography if there’s no need for them to “travel”; ensure they can only speak to your API backend and/or metrics provider; and even ensure that if the SIM is lifted from the device it no longer functions by locking it to the IMEI (the serial of the modem).
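The three controls in this example (geography lock, destination allowlist, IMEI binding) can be pictured as a small allow/deny check. What follows is a minimal sketch under stated assumptions: `DevicePolicy`, `Connection`, `evaluate`, and the reason strings are hypothetical names invented for illustration, not Cloudflare Gateway's actual policy schema or API.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class DevicePolicy:
    """Hypothetical per-device policy (illustrative, not a real Gateway schema)."""
    allowed_countries: frozenset[str]  # where the device is allowed to operate
    allowed_hosts: frozenset[str]      # the only endpoints it may talk to
    bound_imei: str                    # modem serial the SIM is locked to


@dataclass(frozen=True)
class Connection:
    """One outbound connection attempt as observed at the network layer."""
    imei: str
    country: str
    destination_host: str


def evaluate(policy: DevicePolicy, conn: Connection) -> tuple[bool, str]:
    """Return (allowed, reason) for a single connection attempt."""
    # A SIM lifted into another modem presents a different IMEI.
    if conn.imei != policy.bound_imei:
        return False, "imei_mismatch"
    # Devices that never need to "travel" are pinned to a geography.
    if conn.country not in policy.allowed_countries:
        return False, "outside_geofence"
    # Everything except the named backends is denied by default.
    if conn.destination_host not in policy.allowed_hosts:
        return False, "destination_not_allowed"
    return True, "ok"
```

The check is deny-by-default: a connection is allowed only if it passes every test, so a compromised device that tries to reach an unknown host is rejected without the policy having to list that host.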

Building these controls at the network layer raises the bar on IoT device security and reduces the risk that your fleet of devices becomes the tool of a bad actor.

Get the compute off the device

We’ve talked a lot about security, but what about compute and storage? A device can be extremely secure if it doesn’t have to do anything or communicate anywhere, but clearly that’s not practical.

Simultaneously, doing non-trivial amounts of compute “on-device” has a number of major challenges:

- It requires a more powerful (and thus, more expensive) device. Moderately powerful (e.g. ARMv8-based) devices with a few gigabytes of RAM might be getting cheaper, but they’re always going to be more expensive than a lower-powered device, and that adds up quickly at IoT-scale.

- You can’t guarantee (or expect) that your device fleet is homogenous: the devices you deployed three years ago can easily be several times slower than what you’re deploying today. Do you leave those devices behind?

- The more business logic you have on the device, the greater the operational and deployment risk. Change management becomes critical, and the risk of “bricking” (rendering a device non-functional in a way that you can’t fix remotely) is never zero. It becomes harder to iterate and add new features when you’re deploying to a device on the other side of the world.

- Security continues to be a concern: if your device needs to talk to external APIs, you have to ensure you have explicitly scoped the credentials they use to avoid them being pulled from the device and used in a way you don’t expect.

We’ve heard other platforms talk about “edge compute”, but in practice they either mean “run the compute on the device” or “in a small handful of cloud regions” (introducing latency) — neither of which fully addresses the problems highlighted above.

Instead, by enabling secure access to Cloudflare Workers for compute, Analytics Engine for device telemetry, D1 as a SQL database, and Pub/Sub for massively scalable messaging, IoT developers can keep the compute off the device while still keeping it close to the device thanks to our global network (275+ cities and counting).

On top of that, developers can use modern tooling like Wrangler to both iterate more rapidly and deploy software more safely, avoiding the risk of bricking or otherwise breaking part of your IoT fleet.

Where do I sign up?

You can register your interest in our IoT Platform today: we’ll be reaching out over the coming weeks to better understand the problems teams are facing and working to get our closed beta into the hands of customers in the coming months. We’re especially interested in teams who are in the throes of figuring out how to deploy a new set of IoT devices and/or expand an existing fleet, no matter the use-case.

In the meantime, you can start building on API Shield and Pub/Sub (MQTT) if you need to start securing IoT devices today.

Aliza Knox | The 6 Mindshifts You Need to Rise and Thrive at Work | Talks at Google

Post Syndicated from Talks at Google original https://www.youtube.com/watch?v=dUZOG-Qa3Os

The first Zero Trust SIM

Post Syndicated from Matt Silverlock original https://blog.cloudflare.com/the-first-zero-trust-sim/

The humble cell phone is now a critical tool in the modern workplace; even more so as the modern workplace has shifted out of the office. Given the billions of mobile devices on the planet — they now outnumber PCs by an order of magnitude — it should come as no surprise that they have become the threat vector of choice for those attempting to break through corporate defenses.

The problem you face in defending against such attacks is that for most Zero Trust solutions, mobile is often a second-class citizen. Those solutions are typically hard to install and manage. And they only work at the software layer, such as with WARP, the mobile (and desktop) apps that connect devices directly into our Zero Trust network. And all this is before you add in the further complication of Bring Your Own Device (BYOD) that more employees are using — you’re trying to deploy Zero Trust on a device that doesn’t belong to the company.

It’s a tricky — and increasingly critical — problem to solve. But it’s also a problem which we think we can help with.

What if employers could offer their employees a deal: we’ll cover your monthly data costs if you agree to let us direct your work-related traffic through a network that has Zero Trust protections built right in? And what’s more, we’ll make it super easy to install — in fact, to take advantage of it, all you need to do is scan a QR code — which can be embedded in an employee’s onboarding material — from your phone’s camera.

Well, we’d like to introduce you to the Cloudflare SIM: the world’s first Zero Trust SIM.

In true Cloudflare fashion, we think that combining the software layer and the network layer enables better security, performance, and reliability. By targeting a foundational piece of technology that underpins every mobile device — the (not so) humble SIM card — we’re aiming to bring an unprecedented level of security (and performance) to the mobile world.

The threat is increasingly mobile

When we say that mobile is the new threat vector, we’re not talking in the abstract. Last month, Cloudflare was one of 130 companies that were targeted by a sophisticated phishing attack. Mobile was the cornerstone of the attack — employees were initially reached by SMS, and the attack relied heavily on compromising 2FA codes.

So far as we’re aware, we were the only company to not be compromised.

A big part of that was because we’re continuously pushing multi-layered Zero Trust defenses. Given how foundational mobile is to how companies operate today, we’ve been working hard to further shore up Zero Trust defenses in this sphere. And this is how we think about Zero Trust SIM: another layer of defense at a different level of the stack, making life even harder for those who are trying to penetrate your organization. With the Zero Trust SIM, you get the benefits of:

- Preventing employees from visiting phishing and malware sites: DNS requests leaving the device can automatically and implicitly use Cloudflare Gateway for DNS filtering.

- Mitigating common SIM attacks: an eSIM-first approach allows us to prevent SIM-swapping or cloning attacks, and by locking SIMs to individual employee devices, bring the same protections to physical SIMs.

- Enabling secure, identity-based private connectivity to cloud services, on-premise infrastructure and even other devices (think: fleets of IoT devices) via Magic WAN. Each SIM can be strongly tied to a specific employee, and treated as an identity signal in conjunction with other device posture signals already supported by WARP.
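To make the DNS filtering benefit concrete, here is a minimal sketch of suffix-based domain matching, the general idea behind blocking a phishing domain together with all of its subdomains. The function name `matching_rule` and the example domains are assumptions made for illustration; this is not how Cloudflare Gateway is implemented.

```python
from __future__ import annotations


def matching_rule(qname: str, blocked_domains: set[str]) -> str | None:
    """Return the blocklist entry that covers qname, or None if it is allowed.

    A query matches when it equals a blocked domain or is a subdomain of one.
    Matching is done on whole labels, so "notphish.example" does not match
    a rule for "phish.example".
    """
    labels = qname.rstrip(".").lower().split(".")
    # Try the full name first, then each parent domain in turn.
    for i in range(len(labels)):
        candidate = ".".join(labels[i:])
        if candidate in blocked_domains:
            return candidate
    return None
```

Comparing whole labels rather than raw string suffixes is the design choice that matters here: a plain `endswith` test would wrongly block unrelated domains that merely share trailing characters with a blocked one.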

By integrating Cloudflare’s security capabilities at the SIM-level, teams can better secure their fleets of mobile devices, especially in a world where BYOD is the norm and no longer the exception.

Zero Trust works better when it’s software + on-ramps

Beyond all the security benefits that we get for mobile devices, the Zero Trust SIM transforms mobile into another on-ramp pillar into the Cloudflare One platform.

Cloudflare One presents a single, unified control plane: allowing organizations to apply security controls across all the traffic coming to, and leaving from, their networks, devices and infrastructure. It’s the same with logging: you want one place to get your logs, and one location for all of your security analysis. With the Cloudflare SIM, mobile is now treated as just one more way that traffic gets passed around your corporate network.

Working at the on-ramp rather than the software level has another big benefit — it grants the flexibility to allow devices to reach services not on the Internet, including cloud infrastructure, data centers and branch offices connected into Magic WAN, our Network-as-a-Service platform. In fact, under the covers, we’re using the same software networking foundations that our customers use to build out the connectivity layer behind the Zero Trust SIM. This will also allow us to support new capabilities like Geneve, a new network tunneling protocol, further expanding how customers can connect their infrastructure into Cloudflare One.

We’re following efforts like IoT SAFE (and parallel, non-IoT standards) that enable SIM cards to be used as a root-of-trust, which will enable a stronger association between the Zero Trust SIM, employee identity, and the potential to act as a trusted hardware token.

Get Zero Trust up and running on mobile immediately (and easily)

Of course, every Zero Trust solutions provider promises protection for mobile. But especially in the case of BYOD, getting employees up and running can be tough. To get a device onboarded, there is a deep tour of the Settings app of your phone: accepting profiles, trusting certificates, and (in most cases) a requirement for a mature mobile device management (MDM) solution.

It’s a pain to install.

Now, we’re not advocating the elimination of the client software on the phone any more than we would be on the PC. More layers of defense are always better than fewer. And it remains necessary to secure Wi-Fi connections that are established on the phone. But a big advantage is that the Cloudflare SIM gets employees protected behind Cloudflare’s Zero Trust platform immediately for all mobile traffic.

It’s not just the on-device installation we wanted to simplify, however. It’s companies’ IT supply chains, as well.

One of the traditional challenges with SIM cards is that they have been, until recently, a physical card. A card that you have to mail to employees (a supply chain risk in modern times), that can be lost, stolen, and that can still fail. With a distributed workforce, all of this is made even harder. We know that whilst security is critical, security that is hard to deploy tends to be deployed haphazardly, ad-hoc, and often, not at all.

But nearly every modern phone shipped today has an eSIM, or more precisely an eUICC (Embedded Universal Integrated Circuit Card), that can be re-programmed dynamically. This is a huge advancement, for two major reasons:

- You avoid all the logistical issues of a physical SIM (mailing them; supply chain risk; getting users to install them!)

- You can deploy them automatically, either via QR codes, Mobile Device Management (MDM) features built into mobile devices today, or via an app (for example, our WARP mobile app).

We’re also exploring introducing physical SIMs (just like the ones above): although we believe eSIMs are the future, especially given their deployment & security advantages, we understand that the future is not always evenly distributed. We’ll be working to make sure that the physical SIMs we ship are as secure as possible, and we’ll be sharing more of how this works in the coming months.

Privacy and transparency for employees

Of course, more and more of the devices that employees use for work are their own. And while employers want to make sure their corporate resources are secure, employees also have privacy concerns when work and private life are blended on the same device. You don’t want your boss knowing that you’re swiping on Tinder.

We want to be thoughtful about how we approach this, from the perspective of both sides. We have sophisticated logging set up as part of Cloudflare One, and this will extend to Cloudflare SIM. Today, Cloudflare One can be explicitly configured to log only the resources it blocks — the threats it’s protecting employees from — without logging every domain visited beyond that. We’re working to make this as obvious and transparent as possible to both employers and employees so that, in true Cloudflare fashion, security does not have to compromise privacy.
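The "log only what is blocked" configuration described above can be sketched in a few lines. This is an illustrative assumption about the behavior, not Cloudflare One's logging implementation; `BlockOnlyLog` and its fields are hypothetical names.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class BlockOnlyLog:
    """Keeps a record of blocked requests and nothing else."""
    entries: list[dict] = field(default_factory=list)

    def record(self, hostname: str, blocked: bool, reason: str = "") -> None:
        # Allowed traffic is never written down, so ordinary browsing
        # leaves no trace in the log.
        if not blocked:
            return
        self.entries.append({"hostname": hostname, "reason": reason})
```

Because allowed requests are dropped before anything is stored, the privacy property does not depend on later filtering or retention rules: the data about ordinary browsing simply never exists in the log.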

What’s next?

Like any product at Cloudflare, we’re testing this on ourselves first (or “dogfooding”, to those in the know). Given the services we provide for over 30% of the Fortune 1000, we continue to observe, and be the target of, increasingly sophisticated cybersecurity attacks. We believe that running the service first is an important step in ensuring we make the Zero Trust SIM both secure and as easy to deploy and manage across thousands of employees as possible.

We’re also bringing the Zero Trust SIM to the Internet of Things: almost every vehicle shipped today has an expectation of cellular connectivity; an increasing number of payment terminals have a SIM card; and a growing number of industrial devices across manufacturing and logistics connect over cellular networks. IoT device security is under increasing levels of scrutiny, and ensuring that the only way a device can connect is a secure one, protected by Cloudflare’s Zero Trust capabilities, can directly prevent devices from becoming part of the next big DDoS botnet.

We’ll be rolling the Zero Trust SIM out to customers on a regional basis as we build our regional connectivity across the globe (if you’re an operator: reach out). We’d especially love to talk to organizations who don’t have an existing mobile device solution in place at all, or who are struggling to make things work today. If you’re interested, then sign up here.

Corsairs of the Spanish Main

Post Syndicated from The History Guy: History Deserves to Be Remembered original https://www.youtube.com/watch?v=TFmPR99tYpw

Leaking Passwords through the Spellchecker

Post Syndicated from Bruce Schneier original https://www.schneier.com/blog/archives/2022/09/leaking-passwords-through-the-spellchecker.html

Sometimes browser spellcheckers leak passwords:

When using major web browsers like Chrome and Edge, your form data is transmitted to Google and Microsoft, respectively, should enhanced spellcheck features be enabled.

Depending on the website you visit, the form data may itself include PII—including but not limited to Social Security Numbers (SSNs)/Social Insurance Numbers (SINs), name, address, email, date of birth (DOB), contact information, bank and payment information, and so on.

The solution is to only use the spellchecker options that keep the data on your computer—and don’t send it into the cloud.

Bolsonaro: Last Week Tonight with John Oliver (HBO)

Post Syndicated from LastWeekTonight original https://www.youtube.com/watch?v=uySgklnlX3Y

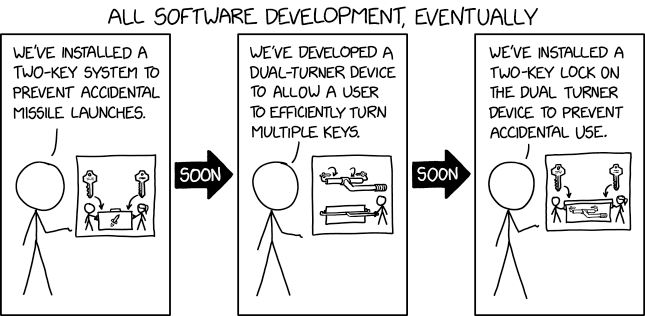

Two Key System

Post Syndicated from original https://xkcd.com/2677/

Kernel prepatch 6.0-rc7

Post Syndicated from original https://lwn.net/Articles/909392/

The 6.0-rc7 kernel prepatch is out for testing.

So I was thinking rc7 might end up larger than usual due to travel hitting rc6, but it doesn’t really seem to have happened.

Yeah, maybe it’s marginally bigger than the historical average for this time of the release cycle, but it definitely isn’t some outlier, and it looks fairly normal. Which is all good, and makes me think that the final release will happen right on schedule next weekend, unless something unexpected happens. Knock wood.

Cloudflare’s 2022 Annual Founders’ Letter

Post Syndicated from Matthew Prince original https://blog.cloudflare.com/cloudflares-annual-founders-letter-2022/

Cloudflare launched on September 27, 2010. This week we’ll celebrate our 12th birthday. As has become our tradition, we’ll be announcing a series of products that we think of as our gifts back to the Internet. In previous years, these have included products and initiatives like Universal SSL, Cloudflare Workers, our Zero Markup Registrar, the Bandwidth Alliance, and R2 — our zero egress fee object store — which went GA last week.

We’re really excited for what we’ll be announcing this year and hope to surprise and delight all of you over the course of the week with the products and features we believe live up to our mission of helping build a better Internet.

Founders’ letter

While this will be our 12th Birthday Week of product announcements, for the last two years, as the cofounders of the company, we’ve also taken this time as an opportunity to write a letter publicly reflecting on the previous year and what’s on our minds as we go into the year ahead.

Since our last birthday, it’s been a tale of two halves of a very different year. At the end of 2021 and into the first two months of 2022, COVID infection rates were falling globally, effective vaccines were getting rolled out, and the world seemed to be returning to a sense of pre-pandemic normalcy.

Internally, we were starting to meet again in person with colleagues and customers. We’d weathered an unprecedented increase in traffic across our network caused by the pandemic and, with a few bumps along the way, used the challenges we’d faced through that time to rebuild our architecture to be more stable and reliable for the long term. We both felt optimistic for the future.

Russia’s invasion of Ukraine

Then, on February 24, Russia invaded Ukraine. While we were fortunate to not have team members working from Russia, Ukraine, or Belarus, we have many employees with families in the region and six offices within a train ride of the front lines. We watched in real time as Internet traffic patterns across Ukraine shifted, a disturbing reflection of what was happening on the ground as cities were bombed and families fled.

At the same time, Russia ratcheted up their efforts to censor their country’s Internet of all non-Russian media. While we had seen some Internet restrictions in Russia over the years, historically Russian citizens were generally able to freely access nearly any resources online. The dramatically increased censorship marked an extreme change in policy and the first time a country of any scale had tried to go from a generally open Internet to one that was fully censored.

Glimmers of hope

But, even as the war continues to rage, there is reason for optimism. In spite of a significant increase in censorship inside Russia, physical links to the rest of the world being cut in Ukraine, cyber attacks targeting Ukrainian infrastructure, and Russian forces actively rerouting BGP in invaded regions, by and large the Internet has continued to flow. As John Gilmore once famously said: “The Internet sees censorship as damage and routes around it.”

The private sector and governments around the world came together to help support Ukraine and render Russian cyberattacks largely moot. Our team provided our services for free to government, financial services, media, and civil society organizations that came under cyber attack, ensuring they stayed online. As the physical Internet links were severed in the country, our network teams worked to route traffic through every possible path to ensure not only could news from outside Ukraine get in but, equally importantly, pictures and news of the war could get out.

Those pictures and news of what is happening inside Ukraine continue to galvanize support. The Ukrainian government continues to function in spite of withering cyber attacks. Voices inside Russia pushing back against the regime are increasingly being heard. And ordinary Russian citizens have increasingly turned to services like Cloudflare’s 1.1.1.1 App to see uncensored news, in record numbers.

Our efforts to keep the Internet on in Russia led the Putin regime to officially sanction one of us (Matthew) — a sign we took that we were making a positive impact. Today we estimate approximately 5% of all households in the country are continuing to access the uncensored Internet using our 1.1.1.1 App, and that number continues to grow.

The Internet’s current battleground

2022 was not the first year in which the Internet became a battleground, but to us, it does feel like a turning point. In the last twelve months, we’ve seen more countries shut down Internet access than in any previous year. Sometimes this is just a misguided and ineffectual effort to keep students from cheating on national exams. Unfortunately, increasingly, it’s about repressive regimes attempting to assert control.

As we write this, the Iranian government is attempting to silence protests in the country through broad Internet censorship. While some may suggest this is business as usual, in fact it is not. The Internet and the broad set of news and opinions it brings have generally been available in places like Iran and Russia, and we shouldn’t accept that full censorship in them is the de facto status quo.

And these efforts to rein in the Internet are unfortunately not limited to Iran and Russia. Even in the liberal, democratic corners of Western Europe, incidents in which court-ordered blocking at the infrastructure layer resulted in massive overblocking spiked dramatically over the last year. Those cases will set a dangerous precedent that a single court in a single country can block access to wide swaths of the Internet.

While it may seem ok to Austrians for an Austrian court to enforce Austrian values for an issue within Austria, if any country’s courts can block content at the core Internet infrastructure level even when it results in the blocking of unrelated sites then it will have a global impact. And, inherently, it will open the door for Afghanistan, Albania, Algeria, Andorra, Angola, Antigua, Argentina, Armenia, Australia, and Azerbaijan to do the same. And that’s just the countries that start with the letter A. If these precedents are upheld then the Internet risks falling to the lowest common denominator of what’s globally acceptable.

An old threat to permissionless innovation

The magic of the early Internet was that it was permissionless. Cloudflare was founded to counter an old threat to that magic, very different from the one we face today. Early in Cloudflare’s history, we used to get asked who we were competing against. We have never thought the answer was Akamai or EdgeCast. While, from a business perspective, we always thought of our business as replacing the vast catalog of Cisco’s hardware boxes with scalable services, that transition seemed inevitable. Instead, the existential competitor we faced was a threat to the permissionless Internet itself: Facebook.

If you find your eyebrow raised as you read that, know you’re not alone. It was the universal reaction we’d get whenever we said that back in 2010, and it remains the universal reaction we get when we say it today. But it has always rung true. In 2010, when Cloudflare launched, it was getting so difficult to be online — between spam, hackers, DDoS, reliability, and performance issues — that many people, organizations, and businesses gave up on the web and sought a safe space in Facebook’s walled garden.

If the challenges of being online weren’t solved in some other way, there was real risk that Facebook would, effectively, become the Internet. The magic of the Internet was that anyone with an idea could put it online and, if it resonated, thrive without having to pass through a gatekeeper. It seemed wrong to us that if those trends continued you’d have to effectively get Facebook’s permission just to be online. Preserving the permissionless Internet was a big part of what motivated us to start Cloudflare.

So we set out to help solve the problems of cyberattacks, outages, and other performance challenges, making sure that the Internet we believed in could continue to thrive. We built a global network able to mitigate the largest DDoS attacks easily, and to make anything connected to the Internet faster, more secure, and more reliable. We created tools to make it easy for developers to build and maintain new platforms, with the ability to deploy serverless code in an instant across the globe. We developed new ways for our customers to protect their internal systems from attack with Zero Trust services. And we made it all as widely available as possible, constantly striving to provide accessible tools not only to the Fortune 1000 but also to the small businesses, nonprofits, and developers with ideas about how to build something new, creative, and good for the world.

It’s not dissimilar to the story of another disruptive tech company that began a few years before we did. Shopify has been a long time Cloudflare customer using a number of our services, including our Workers developer platform. Their unofficial rallying cry of “arming the rebels” has always resonated with us.

In many ways, Shopify is to Amazon.com as Cloudflare is to Facebook. Both of the former provide the key infrastructure you need to innovate and then get out of your way; both of the latter build a walled garden from which they can ultimately extract maximum rents.

A New Hope

Shopify framing their customers as the rebels taking on the Empire of Amazon is, of course, a reference to Star Wars and so it may not be surprising that we often talk internally about the Star Wars movies as a metaphor for the history of the Internet: past, present, and maybe future.

The first movie, Episode IV, was titled “A New Hope.” The plot of that movie feels a lot like how the world experienced the Internet for the 40 years prior to 2016. There was this magical thing called the Force, and it was controlled by these incredible people called Jedi. Except instead of the Force it was the Internet and instead of Jedi it was programmers and network engineers.

It’s easy to forget that it’s the stuff of not-too-long-ago science fiction that you could have a device in your pocket that could access the sum of all human knowledge. And yet, there are now more smartphones in active use than humans on Earth. Neither of us feels all that old, yet we both grew up in a time when if you had an opinion and wanted to get it out to a broad audience you had to write it up, send it in as a letter to the editor, and hope that it would get published.

Today in the world of Twitter and TikTok that is almost unimaginably quaint. The Internet blew that all up, just as Luke blew up the Death Star, and it’s hard to overstate how much that disrupted every traditional source of power and control.

The Empire Strikes Back

But after Episode IV came Episode V: “The Empire Strikes Back.” And make no mistake, the traditional centers of control are working hard to find ways to control the Internet. While we think the shift came somewhere around 2016, it feels like in 2022 the Empire has discovered the rebel base on Hoth and the AT-ATs are closing in.

Episode V is a pretty dark movie. Spoiler alert for the small percentage of you who may not have seen it, but the hero realizes his mortal enemy is his father, loses his hand, his rogue friend is encased in carbonite, and the girl he likes is sold into slug slavery shortly after she declares her love for not him but the about-to-be-carbonite-encased friend. But it’s also the best movie because the stakes are so high.

The stakes are high for the Internet too, and we believe it’s important for us to engage on the hard technology and policy issues. The next several years will be challenging as we rebuild the legacy protocols of the Internet to be more private and secure by design, so they can accommodate what the Internet has become, and wrestle with hard policy issues around respecting local laws and norms on a network that is inherently global. The team at Cloudflare comes to work every day appreciating the challenges and importance of what we need to help do to live up to our mission.

Helping build a better Internet

Our mission is to help build a better Internet, and we are proud that more than 20% of the web and 30% of the Fortune 1000 rely on Cloudflare to be fast, reliable, secure, efficient, and private for whatever they are doing online. Throughout the year we have Innovation Weeks usually dedicated to new products to sell to our customers. But, during our Birthday Week, we give back with products and initiatives that aren’t designed to generate revenue; we provide them because they improve the fundamentals of how the Internet works.

And so this year we’ll be launching new services and partnerships to make the best security practices more affordable and bring them more easily to an increasingly mobile world. We’re helping developers access more resources they need to deliver the next generation of applications. And we’re launching privacy-preserving alternatives to widely used services because we believe a better Internet is a more private Internet.

We’re not ready to declare that it’s time for the Ewoks to start dancing, but we are proud of our continued innovation and the thoughtfulness of our team as we navigate these challenging times. Although the global economy continues to provide uncertain headwinds as we head into the new year, we are confident we have the plan and the team that will make us successful.

Thank you to our team, our customers, and our investors. Happy 12th birthday to Cloudflare. And, as always: we’re just getting started.

Freaky long weekend

Post Syndicated from Oglaf! -- Comics. Often dirty. original https://www.oglaf.com/freakylongweekend/

Perry Zurn & Dani S. Bassett | Curious Minds: The Power of Connection | Talks at Google

Post Syndicated from Talks at Google original https://www.youtube.com/watch?v=bIXlhpnjttk

The State of Storytelling (With Author Tom Perrotta) | The Atlantic Festival 2022

Post Syndicated from The Atlantic original https://www.youtube.com/watch?v=-r2HiVeQkXQ

Is It Art or Artificial? (With Internet Archivist Jason Scott) | The Atlantic Festival 2022

Post Syndicated from The Atlantic original https://www.youtube.com/watch?v=j9YQUo3dUvg