Post Syndicated from Alasdair Allan original https://www.raspberrypi.org/blog/bring-on-the-documentation/

I joined Raspberry Pi eighteen months ago and spent my first year here keeping secrets and writing about Raspberry Silicon, and the chip that would eventually be known as RP2040. This is all (largely) completed work: Raspberry Pi Pico made its way out into the world back in January, and our own Raspberry Silicon followed last month.

The question is then, what have I done for you lately?

The Documentation

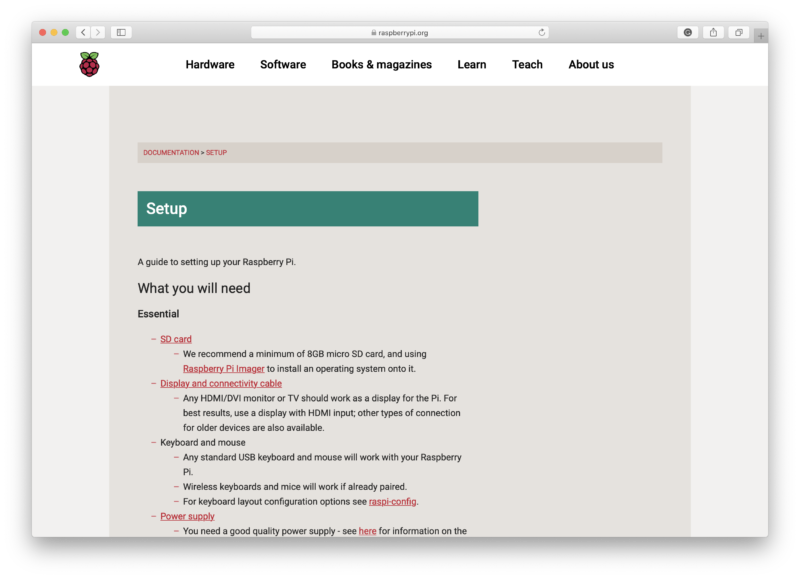

Until today our documentation for the “big” boards (as opposed to Raspberry Pi Pico) lived in a GitHub repository and was written in GitHub-flavoured Markdown. The documentation site was built from that Markdown source, which was pulled periodically from the repository, run through a script written many years ago that turned it into HTML, and then deployed onto our website.

This all worked really rather well in the early days of Raspberry Pi.

The documentation repository itself has been left to grow organically. When I arrived here, it needed to be restructured, and a great deal of non-Raspberry Pi specific documentation needed to be removed, while other areas were underserved and needed to be expanded. The documentation was created when there was a lot less third-party content around to support the Raspberry Pi, so a fair bit of it really isn’t that relevant anymore, and is better dealt with elsewhere on the web. And the structure was a spider’s web that, in places, made very little sense.

Frankly, it was all in a bit of a mess.

Enter the same team of folks that built the excellent PDF-based documentation for Raspberry Pi Pico and RP2040. The PDF documentation was built with an AsciiDoc-based toolchain, and we knew from the outset that we’d want to migrate the Markdown-based documentation to AsciiDoc. It’d offer us more powerful tools going forward, and a lot more flexibility.

After working through the backlog of community pull requests, we took a snapshot of the current Markdown-based repository and built out a toolchain, a lot of which we intended to, and did, throw away after converting the Markdown to AsciiDoc as our “source of truth.” This didn’t happen without a bit of a wrench; nobody throws working code away lightly. But it did mean we’d reached the point of no return.

The next generation of documentation

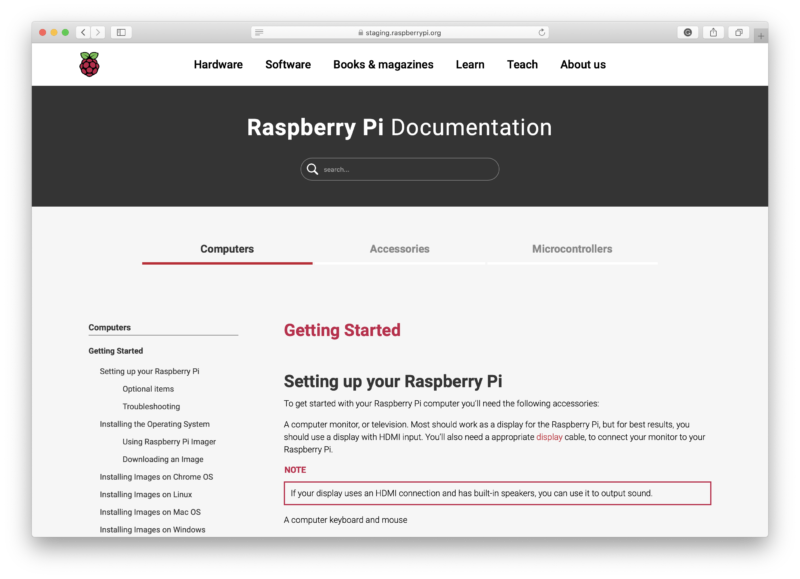

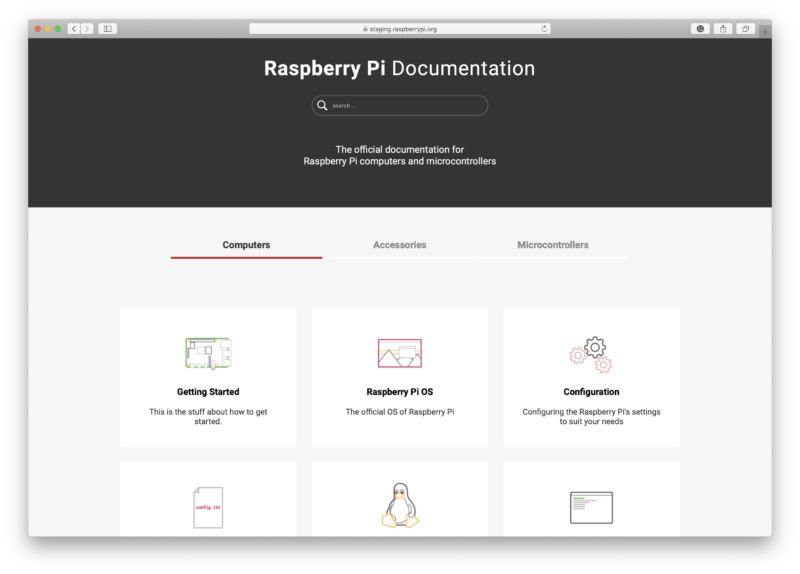

The result of our new documentation project launches today.

The new documentation site is built and deployed directly from the documentation repository using GitHub Actions whenever someone pushes to the master branch. However, we’ll mostly be working on the develop branch in the repository, which is now the default branch you’ll get when you take a fresh checkout, and also the branch you should target for your pull requests.
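The branch rules above are simple enough to write down as a few lines of Python. This is only a minimal sketch of the behaviour described in this post, not our actual GitHub Actions configuration; the function and constant names are made up for illustration.

```python
# Hypothetical sketch of the branch rules described in the post. The names
# below are illustrative and are not taken from the real repository.
DEPLOY_BRANCH = "master"    # pushes here trigger the site build and deploy
DEFAULT_BRANCH = "develop"  # day-to-day work and pull requests land here


def triggers_deploy(pushed_branch: str) -> bool:
    """Return True if a push to this branch would rebuild and deploy the site."""
    return pushed_branch == DEPLOY_BRANCH


def pull_request_target() -> str:
    """Return the branch a contributor should open pull requests against."""
    return DEFAULT_BRANCH


if __name__ == "__main__":
    for branch in ("master", "develop", "my-typo-fix"):
        outcome = "deploy" if triggers_deploy(branch) else "no deploy"
        print(branch, "->", outcome)
    print("open pull requests against:", pull_request_target())
```

In other words, a pull request merged into develop does not change the live site by itself; the site is only rebuilt once changes reach master.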

We’ve always taken pull requests against the Markdown-based source behind our documentation site. Over the years, as the documentation set has grown, there have been hundreds of community contributors, who have made over 1,200 individual pull requests, ranging from small typo fixes to whole new sections.

With the introduction of the new site, we’re going to continue to take pull requests against the new AsciiDoc-based documentation. However, we’re going to be a bit more targeted about what we’ll accept into the documentation, and will be looking to keep the repository focussed on Raspberry Pi-specific things, rather than generic Linux tutorial content.

The documentation itself will remain under a Creative Commons Attribution-ShareAlike (CC BY-SA 4.0) license.

Product Information Portal

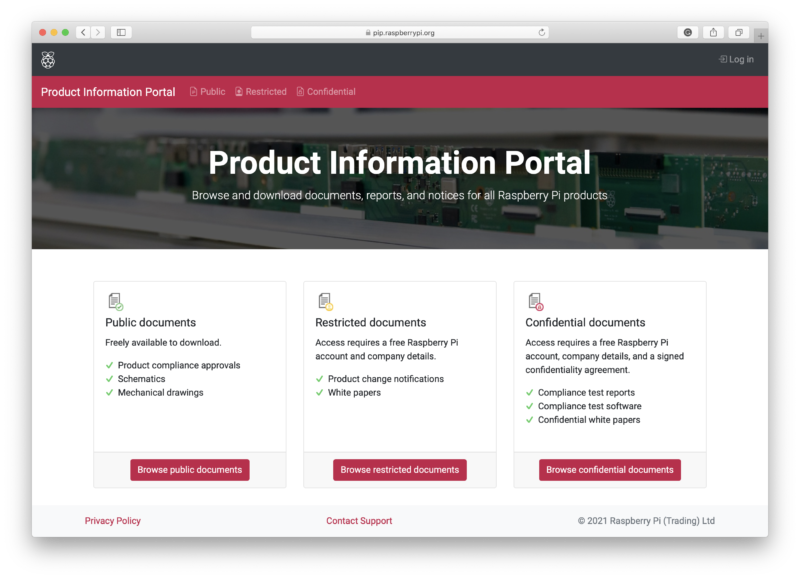

Supporting our customers in the best way we can when they build products around Raspberry Pi computers is important to us. A big part of this is being able to get customers access to the right documents easily. So alongside the new-look documentation, we have revamped how our customers (that’s you) get access to the documents you need for commercial applications of Raspberry Pi.

The Product Information Portal, or PIP as we’ve come to refer to it here at Pi Towers, is where documents such as regulatory paperwork, product change notices, and white papers will be stored and accessed from now on.

PIP has three tiers of document type: those which are publicly available; restricted documents that require a customer to sign up for a free account; and confidential documents which require a customer’s company to enter into a confidentiality agreement with Raspberry Pi.
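If it helps to see the three tiers side by side, here is a minimal Python sketch of the access rules as described above. It is purely illustrative and says nothing about how PIP is actually implemented; the `Tier` enum, the `can_access` function, and its parameters are all hypothetical names, and it assumes the confidentiality agreement is the only check for confidential documents.

```python
from enum import Enum


class Tier(Enum):
    """The three document tiers described in the post (names are illustrative)."""
    PUBLIC = "public"
    RESTRICTED = "restricted"
    CONFIDENTIAL = "confidential"


def can_access(tier: Tier,
               has_account: bool = False,
               company_has_agreement: bool = False) -> bool:
    """Return True if a customer could read a document in the given tier.

    Assumption: only the conditions stated in the post are modelled.
    """
    if tier is Tier.PUBLIC:
        return True                  # publicly available to everyone
    if tier is Tier.RESTRICTED:
        return has_account           # needs a free account
    return company_has_agreement     # needs a confidentiality agreement


if __name__ == "__main__":
    print(can_access(Tier.PUBLIC))
    print(can_access(Tier.RESTRICTED, has_account=True))
    print(can_access(Tier.CONFIDENTIAL, has_account=True))
```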

PIP will also be a way for customers to get updates on products, allowing customers with a user account to subscribe to products, and receive email updates should there be a product change, regulatory update, or white paper release.

The portal can be found at pip.raspberrypi.org and will be constantly updated as new documents become available.

Where next?

I’m hoping that everyone that has contributed to the documentation over the years will see the new site as a big step towards making our documentation more accessible – and, as ever, we accept pull requests. However, if you’re already a contributor, the easiest thing to do is to take a fresh checkout of the repository, because things have changed a lot today.

This isn’t the end. Instead, it’s the beginning of a journey to try and pull together our documentation into something that feels a bit more cohesive. While the documentation set now looks, and feels, a lot better and is (I think) a lot easier to navigate if you don’t know it well, there is still a lot of pruning and re-writing ahead of me. But we’ve reached the stage where I’m happy to, and want to, work on that in public so the community can see how things are changing and can help out.

The post Bring on the documentation appeared first on Raspberry Pi.