Post Syndicated from David Roberts original https://aws.amazon.com/blogs/big-data/keeping-your-data-lake-clean-and-compliant-with-amazon-athena/

With the introduction of CTAS support for Amazon Athena (see Use CTAS statements with Amazon Athena to reduce cost and improve performance), you can not only query but also create tables using Athena with the associated data objects stored in Amazon Simple Storage Service (Amazon S3). These tables are often temporary in nature and used to filter or aggregate data that already exists in another Athena table. Although this offers great flexibility to perform exploratory analytics, when tables are dropped, the underlying Amazon S3 data remains indefinitely. Over time, the accumulation of these objects can increase Amazon S3 costs, become administratively challenging to manage, and may inadvertently preserve data that should have been deleted for privacy or compliance reasons. Furthermore, the AWS Glue table entry is purged so there is no convenient way to trace back which Amazon S3 path was mapped to a deleted table.

This post shows how you can automate deleting Amazon S3 objects associated with a table after dropping it using Athena. AWS Glue is required to be the metadata store for Athena.

Overview of solution

The solution requires that the AWS Glue table record (database, table, Amazon S3 path) history is preserved outside of AWS Glue, because it’s removed immediately after a table is dropped. Without this record, you can’t delete the associated Amazon S3 object entries after the fact.

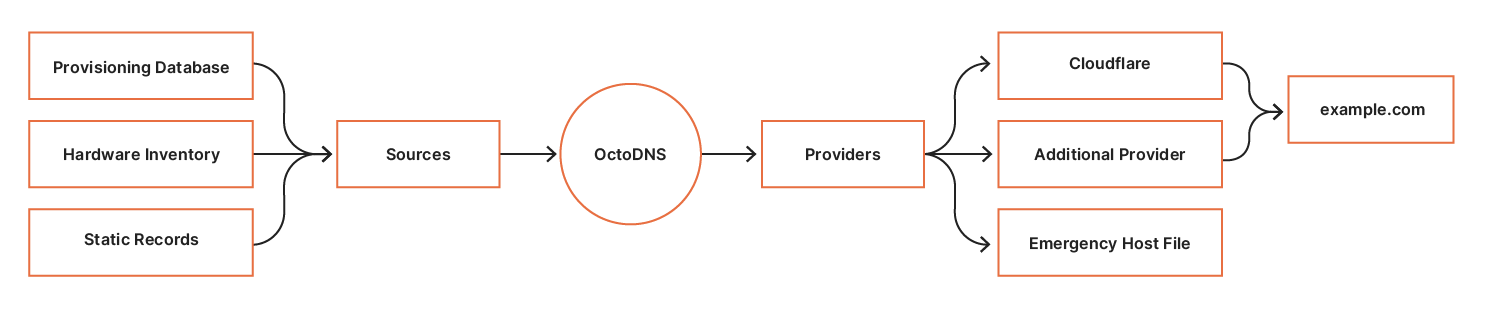

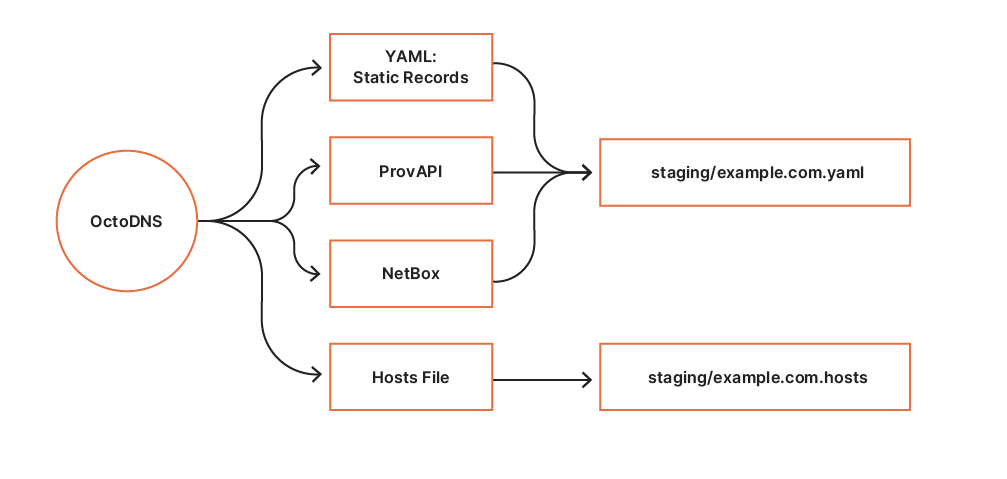

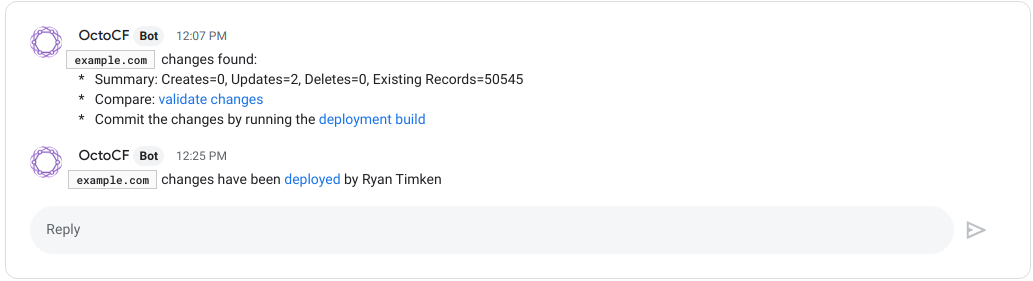

When Athena CTAS statements are issued, AWS Glue generates Amazon CloudWatch events that specify the database and table names. These events are available from Amazon EventBridge and can be used to trigger an AWS Lambda function (autoCleanS3) to fetch the new or updated Amazon S3 path from AWS Glue and write the database, table, and Amazon S3 path into an AWS Glue history table stored in Amazon DynamoDB (GlueHistoryDDB). When Athena drop table queries are detected, CloudWatch events are generated that trigger autoCleanS3 to look up the Amazon S3 path from GlueHistoryDDB and delete all related objects from Amazon S3.

Not all dropped tables should trigger Amazon S3 object deletion. For example, when you create a table using existing Amazon S3 data (not CTAS), it’s not advisable to automatically delete the associated Amazon S3 tables, because other analysts may have other tables referring to the same source data. For this reason, you must include a user-defined comment (--dropstore ) in the Athena drop table query to cause autoCleanS3 to purge the Amazon S3 objects.

Lastly, after objects are successfully deleted, the corresponding entry in GlueHistoryDDB is updated for historical and audit purposes. The overall workflow is described in the following diagram.

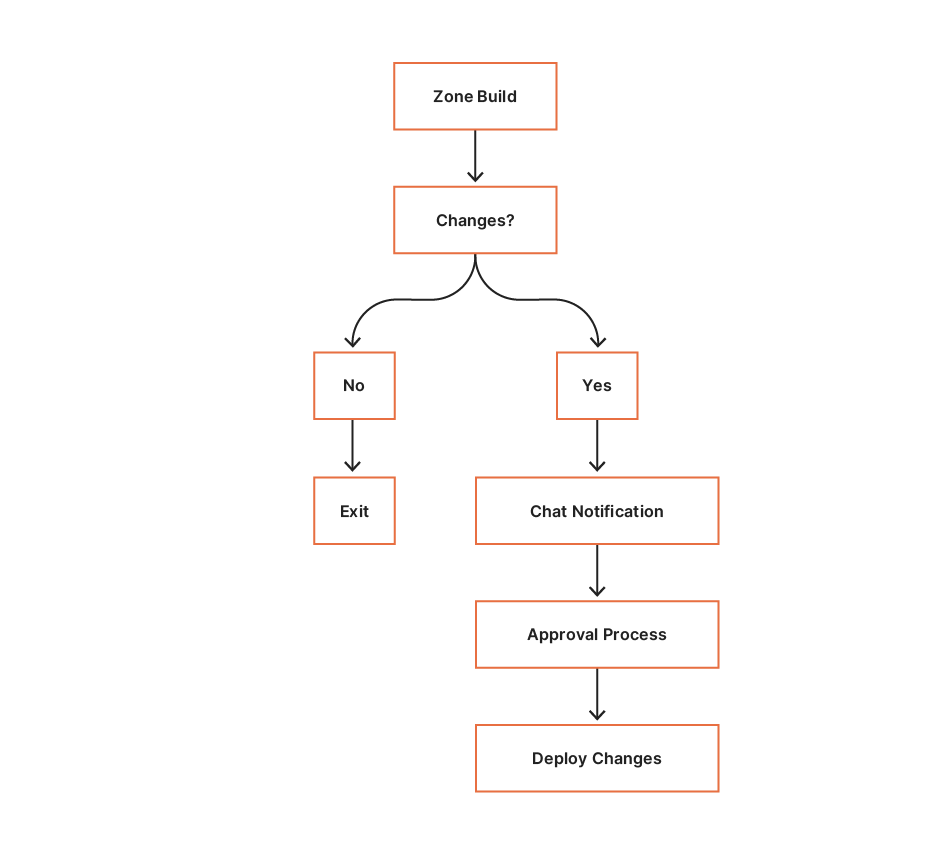

The workflow contains the following steps:

- A user creates a table either via Athena or the AWS Glue console or API.

- AWS Glue generates a CloudWatch event, and an EventBridge rule triggers the Lambda function.

- The function creates an entry in DynamoDB containing a copy of the AWS Glue record and Amazon S3 path.

- The user drops the table from Athena, including the special comment

--dropstore. - The Lambda function fetches the dropped table entry from DynamoDB, including the Amazon S3 path.

- The function deletes data from the Amazon S3 path, including manifest files, and marks the DynamoDB entry as purged.

Walkthrough overview

To implement this solution, we complete the following steps:

- Create the required AWS Identity and Access Management (IAM) policy and role.

- Create the AWS Glue history DynamoDB table.

- Create the Lambda

autoCleanS3function. - Create the EventBridge rules.

- Test the solution.

If you prefer to use a preconfigured CloudFormation template, launch one of the following stacks depending on your Region.

| Region | Launch Button |

| us-east-1 (N. Virginia) | |

| us-west-2 (Oregon) | |

| eu-west-1 (Ireland) |

Prerequisites

Before implementing this solution, create an AWS Glue database and table with the data residing in Amazon S3. Be sure your user has the necessary permissions to access Athena and perform CTAS operations writing in a sample Amazon S3 location.

For more information about building a data lake, see Build a Data Lake Foundation with AWS Glue and Amazon S3.

Creating an IAM policy and role

You need to first create the required IAM policy for the Lambda function role to use to query AWS Glue and write to DynamoDB.

- On the IAM console, choose Policies.

- Choose Create policy.

- On the JSON tab, enter the following code (update the Region, account ID, and S3 bucket accordingly, and the table name GlueHistoryDDB if you choose to change it):

- Choose Review policy.

- For Name, enter

autoCleanS3-LambdaPolicy. - For Description, enter

Policy used by Lambda role to purge S3 objects when an Amazon Athena table is dropped. - Choose Create policy.

Next, you need to create an IAM role and attach this policy.

- On the IAM console, choose Roles.

- Choose Create role.

- Choose AWS service.

- Choose Lambda.

- Choose Next: Permissions.

- For Filter policies, enter

autoCleanS3-LambdaPolicy. - Choose Next: Tags.

- Choose Next: Review.

- For Role name, enter

autoCleanS3-LambdaRole. - For Description, enter

Role used by Lambda to purge S3 objects when an Amazon Athena table is dropped. - Choose Create role.

Creating the AWS Glue history DynamoDB table

You use this DynamoDB table to hold the current and historical list of AWS Glue tables and their corresponding Amazon S3 path. Create the table as follows:

- On the DynamoDB console, choose Dashboard.

- Choose Create table.

- For Table name, enter

GlueHistoryDDB. - For Partition key, enter

database(leave type as String). - Select Add sort key.

- Enter

table_date(leave type as String). - For Table settings, select Use default settings.

- Choose Create.

The following table summarizes the GlueHistoryDDB table attributes that the Lambda function creates.

| Column | Type | Description |

| database | partition key | The name of the AWS Glue database. |

| table_date | sort key | A composite attribute of AWS Glue table name plus date created. Because the same database and table name can be created again, the date must be used to ensure uniqueness. |

| created_by | attribute | The user or Amazon EC2 instance ARN from which the table was created. |

| owner | attribute | The owner of the table or account number. |

| purged | attribute | A boolean indicating whether the Amazon S3 objects have been deleted (True/False). |

| s3_path | attribute | The Amazon S3 path containing objects associated with the table. |

| table | attribute | The AWS Glue table name. |

| update_time | attribute | The last time the table was updated (the Amazon S3 path changed or objects purged). |

| view_sql | attribute | The view DDL if a view was created. |

Creating the Lambda function autoCleanS3

A CloudWatch event triggers the Lambda function autoCleanS3 when a new table is created, updated, or dropped. If the --dropstore keyword is included in the Athena query comments, the associated Amazon S3 objects are also removed.

- On the Lambda console, choose Create function.

- Select Author from scratch.

- For Function name¸ enter

autoCleanS3. - For Runtime, choose Python 3.8.

- Under Permissions, for Execution role, select Use an existing role.

- Choose the role you created (

service-role/autoCleanS3-LambdaRole). - Choose Create function.

- Scroll down to the Function code section.

- If using Region

us-west-2, on the Actions menu, choose Upload a file to Amazon S3.

- Enter the following:

- Choose Save.

If using a Region other than us-west-2, download the Lambda .zip file locally. Then choose Upload a .zip file and choose the file from your computer to upload the Lambda function.

- In the Environment variables section, choose Edit.

- Choose Add environment variable.

- Enter the following key-values in the following table (customize as desired):

| Key | Value | Purpose |

| Athena_SQL_Drop_Phrase | --dropstore |

String to embed in Athena drop table queries to cause associated Amazon S3 objects to be removed |

| db_list |

|

Allows you to limit which databases may contain tables that autoCleanS3 is allowed to purge |

| ddb_history_table | GlueHistoryDDB |

The name of the AWS Glue history DynamoDB table |

| disable_s3_cleanup | False |

If set to True, it disables the Amazon S3 purge, still recording attempts in the history table |

| log_level | INFO |

Set to DEBUG to troubleshoot if needed |

You must use a standard regex expression, which can be a simple comma-separated list of the AWS Glue databases that you want autoCleanS3 to evaluate.

The following table shows example patterns for db_list.

| Example Regex Pattern | Result |

| .* | Default, includes all databases |

clickstream_web, orders_web, default |

Includes only clickstream_web, orders_web, default |

.*_web |

Includes all databases having names ending in _web |

.*stream.* |

Includes all databases containing stream in their name |

For a complete list or supported patterns, see https://docs.python.org/3/library/re.html#re.Pattern.match

- Choose Save.

Creating EventBridge rules

You need to create EventBridge rules that invoke your Lambda function whenever Athena query events and AWS Glue CreateTable and UpdateTable events are generated.

Creating the Athena event rule

To create the Athena query event rule, complete the following steps:

- On the EventBridge console, choose Create rule.

- For Name, enter

autoCleanS3-AthenaQueryEvent. - For Description, enter

Amazon Athena event for any query to trigger autoCleanS3. - For Define pattern, choose Event pattern.

- For Event matching pattern, choose Custom pattern.

- For Event pattern, enter the following:

- Choose Save.

- For Select targets, choose Lambda function.

- For Function¸ choose autoClean3.

- Choose Create.

Creating the AWS Glue event rule

To create the AWS Glue table event rule, complete the following steps:

- On the EventBridge console, choose Create rule.

- For Name, enter

autoCleanS3-GlueTableEvent. - For Description, enter

AWS Glue event for any table creation or update to trigger autoCleanS3. - For Define pattern, choose Event pattern.

- For Event matching pattern, choose Custom pattern.

- For Event pattern, enter the following:

- Choose Save.

- For Select targets, choose Lambda function.

- For Function¸ choose autoClean3.

- Choose Create.

You’re finished!

Testing the solution

Make sure you already have a data lake with tables defined in your AWS Glue Data Catalog and permission to access Athena. For this post, we use NYC taxi ride data. For more information, see Build a Data Lake Foundation with AWS Glue and Amazon S3.

- Create a new table using Athena CTAS.

Next, verify that the entry appears in the new GlueHistoryDDB table.

- On the DynamoDB console, open the

GlueHistoryDDBtable. - Choose Items.

- Confirm the

s3_pathvalue for the table.

You can also view the Amazon S3 table path and objects associated with the table.

- On the Amazon S3 console, navigate to the

s3_pathfound inGlueHistoryDDB. - Confirm the table and path containing the data folder and associated manifest and metadata objects.

- Drop the table using the keyword

--dropstore.

- Check the Amazon S3 path to verify both the table folder and associated manifest and metadata files have been removed.

You can also see the purged attribute for the entry in GlueHistoryDDB is now set to True, and update_time has been updated, which you can use if you ever need to look back and understand when a purge event occurred.

Considerations

The Lambda timeout may need to be increased for very large tables, because the object deletion operations may not complete in time.

To prevent accidental data deletion, it’s recommended to carefully limit which databases may participate (Lambda environment variable db_list) and to enable versioning on the Athena bucket path and set up Amazon S3 lifecycle policies to eventually remove older versions. For more information, see Deleting object versions.

Conclusion

In this post, we demonstrated how to automate the process of deleting Amazon S3 objects associated with dropped AWS Glue tables. Deleting Amazon S3 objects that are no longer associated with an AWS Glue table reduces ongoing storage expense, management overhead, and unnecessary exposure of potentially private data no longer needed within the organization, allowing you to meet regulatory requirements.

This serverless solution monitors Athena and AWS Glue table creation and drop events via CloudWatch, and triggers Lambda to perform Amazon S3 object deletion. We use DynamoDB to store the audit history of all AWS Glue tables that have been dropped over time. It’s strongly recommended to enable Amazon S3 bucket versioning to prevent accidental data deletion.

To restore the Amazon S3 objects for the deleted table, you first identify the s3_path value for the relevant table entry in GlueHistoryDDB and either copy or remove the delete marker from objects in that path. For more information, see How do I undelete a deleted S3 object?

About the Author

David Roberts is a Senior Solutions Architect at AWS. His passion is building efficient and effective solutions on the cloud, especially involving analytics and data lake governance. Besides spending time with his wife and two daughters, he likes drumming and watching movies, and is an avid video gamer.

David Roberts is a Senior Solutions Architect at AWS. His passion is building efficient and effective solutions on the cloud, especially involving analytics and data lake governance. Besides spending time with his wife and two daughters, he likes drumming and watching movies, and is an avid video gamer.

Kalyan Janaki is Senior Technical Account Manager with AWS. Kalyan enjoys working with customers and helping them migrate their workloads to the cloud. In his spare time, he tries to keep up with his 2-year-old.

Kalyan Janaki is Senior Technical Account Manager with AWS. Kalyan enjoys working with customers and helping them migrate their workloads to the cloud. In his spare time, he tries to keep up with his 2-year-old. Kapil Shardha is an AWS Solutions Architect and supports enterprise customers with their AWS adoption. He has background in infrastructure automation and DevOps.

Kapil Shardha is an AWS Solutions Architect and supports enterprise customers with their AWS adoption. He has background in infrastructure automation and DevOps. Aravind Singirikonda is a Solutions Architect at Amazon Web Services. He works with AWS Enterprise customers to provide guidance and technical assistance, helping them improve the value of their solutions when using AWS.

Aravind Singirikonda is a Solutions Architect at Amazon Web Services. He works with AWS Enterprise customers to provide guidance and technical assistance, helping them improve the value of their solutions when using AWS.

Какво пропуснахме в мерките за Ковид-а и защо няма да работят

Какво пропуснахме в мерките за Ковид-а и защо няма да работят