Post Syndicated from John Cosgrove original https://blog.cloudflare.com/protecting-apis-with-jwt-validation

Today, we are happy to announce that Cloudflare customers can protect their APIs from broken authentication attacks by validating incoming JSON Web Tokens (JWTs) with API Gateway. Developers and their security teams need to control who can communicate with their APIs. Using API Gateway’s JWT Validation, Cloudflare customers can ensure that their Identity Provider previously validated the user sending the request, and that the user’s authentication tokens have not expired or been tampered with.

What’s new in this release?

After our beta release in early 2023, we continued to gather feedback from customers on what they needed from JWT validation in API Gateway. We uncovered four main feature requests and shipped updates in this GA release to address them all:

| Old, Beta limitation | New, GA release capability |

|---|---|

| Only supported validating the raw JWT | Support for the Bearer token format |

| Only supported one JWKS configuration | Create up to four different JWKS configs to support different environments per zone |

| Only supported validating JWTs sent in HTTP headers | Validate JWTs if they are sent in a cookie, not just an HTTP header |

| JWT validation ran on all requests to the entire zone | Exclude any number of managed endpoints in a JWT validation rule |

What is the threat?

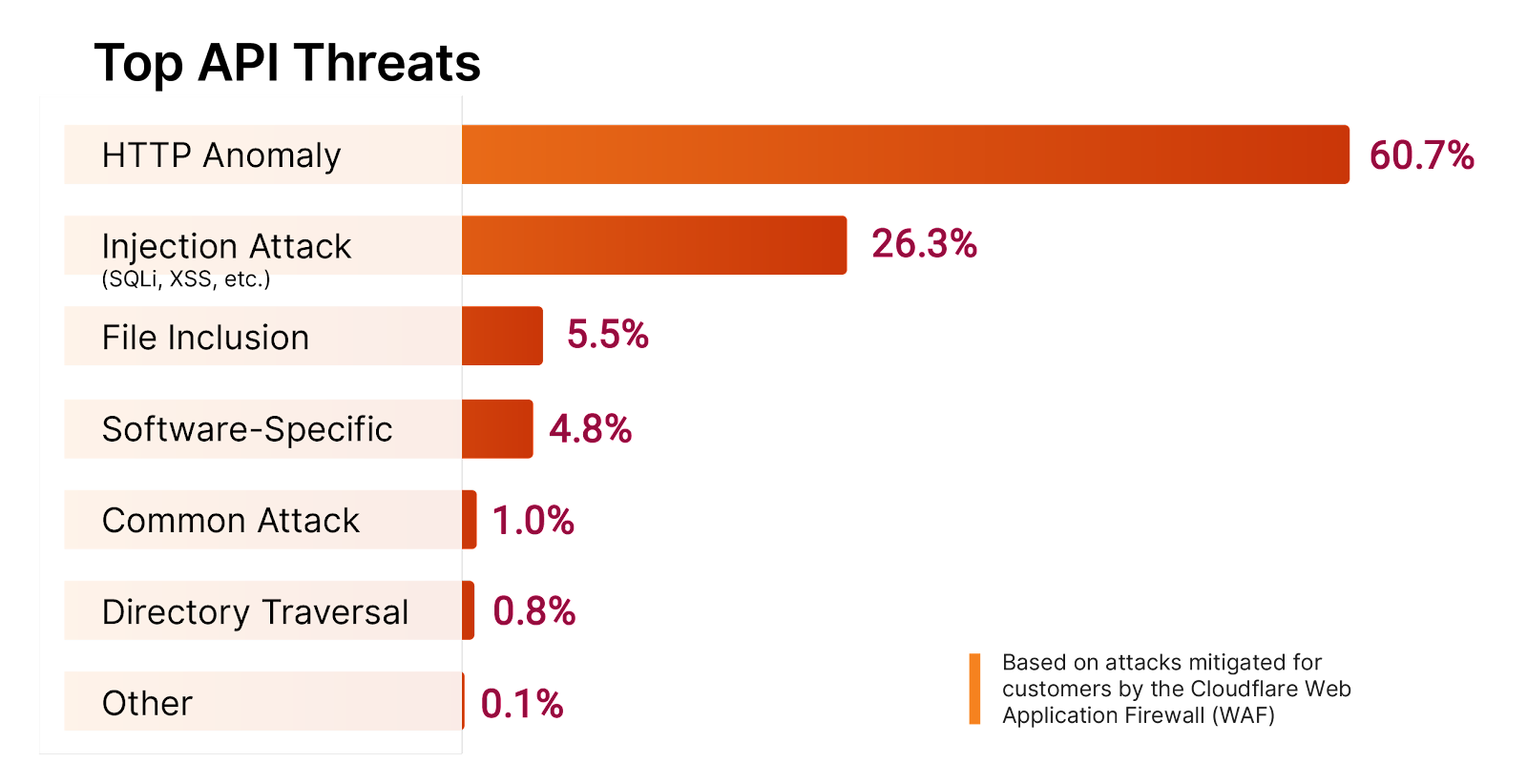

Broken authentication is the #1 threat on the OWASP Top 10 and the #2 threat on the OWASP API Top 10. We’ve written before about how flaws in API authentication and authorization at Optus led to a threat actor offering 10 million user records for sale, and government agencies have warned about these exact API attacks.

According to Gartner®1, “attacks and data breaches involving poorly secured application programming interfaces (APIs) are occurring frequently.” Getting authentication correct for your API users can be challenging, but there are best practices you can employ to cover your bases. JSON Web Token Validation in API Gateway fulfills one of these best practices by enforcing a positive security model for your authenticated API users.

A primer on authentication and authorization

Authentication establishes identity. Imagine you’re collaborating with multiple colleagues and writing a document in Google Docs. When you’re all authors of the document, you have the same privileges, and you can overwrite each other’s text. You can all see each other’s name next to your respective cursor while you’re typing. You’re all authenticated to Google Docs, so Docs can show all the users on a document who everyone is.

Authorization establishes ownership or permissions to objects. Imagine you’re collaborating with your colleague in Docs again, but this time they’ve written a document ahead of time and simply wish for you to review it and add comments without changing the document. As the owner of the document, your colleague sets an authorization policy to only allow you ‘comment’ access. As such, you cannot change their writing at all, but you can still view the document and leave comments.

While the words themselves might sound similar, the differences between them are hugely important for security. It’s not enough to simply check that a user logging in has the correct login credentials (authentication). If you never check their permissions (authorization), they would be free to overwrite, add, or delete other users’ content. When this happens for APIs, OWASP calls it a Broken Object Level Authorization attack.

A primer on API access tokens

Users authenticate to services in many different ways on the web today. Let’s take a look at the history of authentication with username and password authentication, API key authentication, and JWT authentication before we mention how JWTs can help stop API attacks.

In the early days, the web used HTTP Basic Authentication, where browsers transmitted username and password pairs as an HTTP header, posing significant security risks and making credentials visible to any observer when the application failed to adopt SSL/TLS certificates. Basic authentication also complicated API access, requiring hard-coded credentials and potentially giving broad authorization policies to a single user.

The introduction of API access keys improved security by detaching authentication from user credentials and instead sending secret text strings along with requests. This approach allowed for more nuanced access control by key instead of by user ID, though API keys still faced risks from man-in-the-middle attacks and problematic storage of secrets in source code.

JSON Web Tokens (JWTs) address these issues by removing the need to send long-lived secrets on every request, introducing cryptographically verifiable, auto-expiring, short-lived sessions. Think of a JWT like a tamper-evident seal on a bottle of medication. Along with the seal, medication also has an expiration date printed on it. Users notice when the seal is tampered with or missing altogether, and when the medication expires.

These attributes enhance security any time a JWT is used instead of a long-lived shared secret. JWTs are not an end-all-be-all solution, but they do represent an evolution in authentication technology and are widely used for authentication and authorization on the Internet today.

What’s the structure of a JWT?

JWTs are composed of three fields separated by periods. The first field is a header, the second a payload, and the third a signature:

eyJhbGciOiJSUzI1NiIsInR5cCI6IkpXVCJ9.eyJpc3MiOiJNeURlbW9JRFAiLCJzdWIiOiJqb2huZG9lIiwiYXVkIjoiTXlBcHAiLCJpYXQiOjE3MDg5ODU2MDEsImV4cCI6MTcwODk4NjIwMSwiY2xhc3MiOiJhZG1pbiJ9.v0nywcQemlEU4A18QD9UTgJLyH4ZPXppuW-n0iOmtj4x-hWJuExlMKeNS-vMZt4K6n0pDCFIAKo7_VZqACx4gILXObXMU4MEleFoKKd0f58KscNrC3BQqs3Gnq-vb5Ut9CmcvevQ5h9cBCI4XhpP2_LkYcZiuoSd3zAm2W_0LNZuFXp1wo8swDoKETYmtrdTjuF-IlVjLDAxNsWm2e7T5A8HmCnAWRItEPedm_8XVJAOemx_KqIH5w6zHY1U-M6PJkHK6D2gDU5eiN35A4FCrC5bQ1-0HSTtJkLIed2-1mRO1oANWHpscvpNLQBWQLLiIZ_evbcq_tnwh1X1sA3uxQ

If we base64 decode the first two sections, we arrive at the following structure (comments added for clarity):

{

"alg": "RS256", // JWT signature algorithm

"typ": "JWT" // JWT type

}

{

"iss": "MyDemoIDP", // Which identity provider issued this JWT

"sub": "johndoe", // Which user this JWT identifies

"aud": "MyApp", // Which app this JWT is destined for

"iat": 1708985601, // When this JWT was issued

"exp": 1709986201, // When this JWT expires

"class": "admin" // Extra, customer-defined metadata

}

We can then use the algorithm mentioned in the header (RS256) as well as the Identity Provider’s public key (example below) to check the last segment in the JWT, the signature (code not shown).

-----BEGIN PUBLIC KEY-----

MIIBIjANBgkqhkiG9w0BAQEFAAOCAQ8AMIIBCgKCAQEA3exXmNOELAnrtejo3jb2

S6p+GFR5FFlO0AqC4lA4HjNX9stgxX8tcbzv1yl5CT6VWl4kpnBweJzdBsNOauPz

uiCFQ0PtTfS0wDZm3inRPR1bTvJEuqsRTbsCxw/nRLU2+Dvu0zF41Wo4OkAbuKGS

3FwfdKOY/rX5tzjhnTe7uhWTarJG3nVnwmuD03INeNI+fbTgbUrOaVFT06Ussb9L

NNe6BHGQjs6NfG037Jk36dGY1Yiy/rutj6nJ7WkEK5ktQgWrvMMoXW9TfpYHi6sC

mnSEdaxNS8jtFodqpURUaLDIdTOGGgpUZsvzv3jDMYo5IxQK+6y+HUV8eRyDYd/o

rQIDAQAB

-----END PUBLIC KEY-----

The signature is what makes a JWT special. The token issuer, taking into account the claims, generates a signature based on a private secret or a public/private key pair. The public key can be published online, allowing anyone to check if a JWT was legitimately issued by an organization.

Proper authentication and authorization stop API attacks

No developer wants to release an insecure application, and no security team wants their developers to skip secure coding practices, but we know both happen. In the Enterprise Strategy Group report “Securing the API Attack Surface”2, a survey found that 39% of developers skip security processes due to the faster development cycles of continuous integration and continuous delivery (CI/CD). The same survey found more than half (57%) of responding organizations faced multiple security incidents related to insecure APIs in the last 12 months, and 35% of responding organizations faced at least one incident within the last year.

Along with its accompanying database, permissions, and user roles, your origin application is the ultimate security backstop of your API. However, Cloudflare can assist in keeping attacks away from your origin when you configure API Gateway with the correct context. Let’s examine three different API attacks and how to protect against them.

Missing or broken authentication

The ability for a user to send or receive data to an API and entirely bypass authentication falls into ‘broken authentication’. It’s easy to think of the expected use cases your users will take with your application. You may assume that just because a user logs in and your application is written so that users can only access their own data in their dashboard, that all users are logged in and would only access their own data. This assumption fails to account for a user making an HTTP request outside your application requesting or modifying another user’s data and there being nothing in the way to stop your API from replying. In the worst case, a lack of authorization policy checks can enable an API client to change data without an authentication token at all!

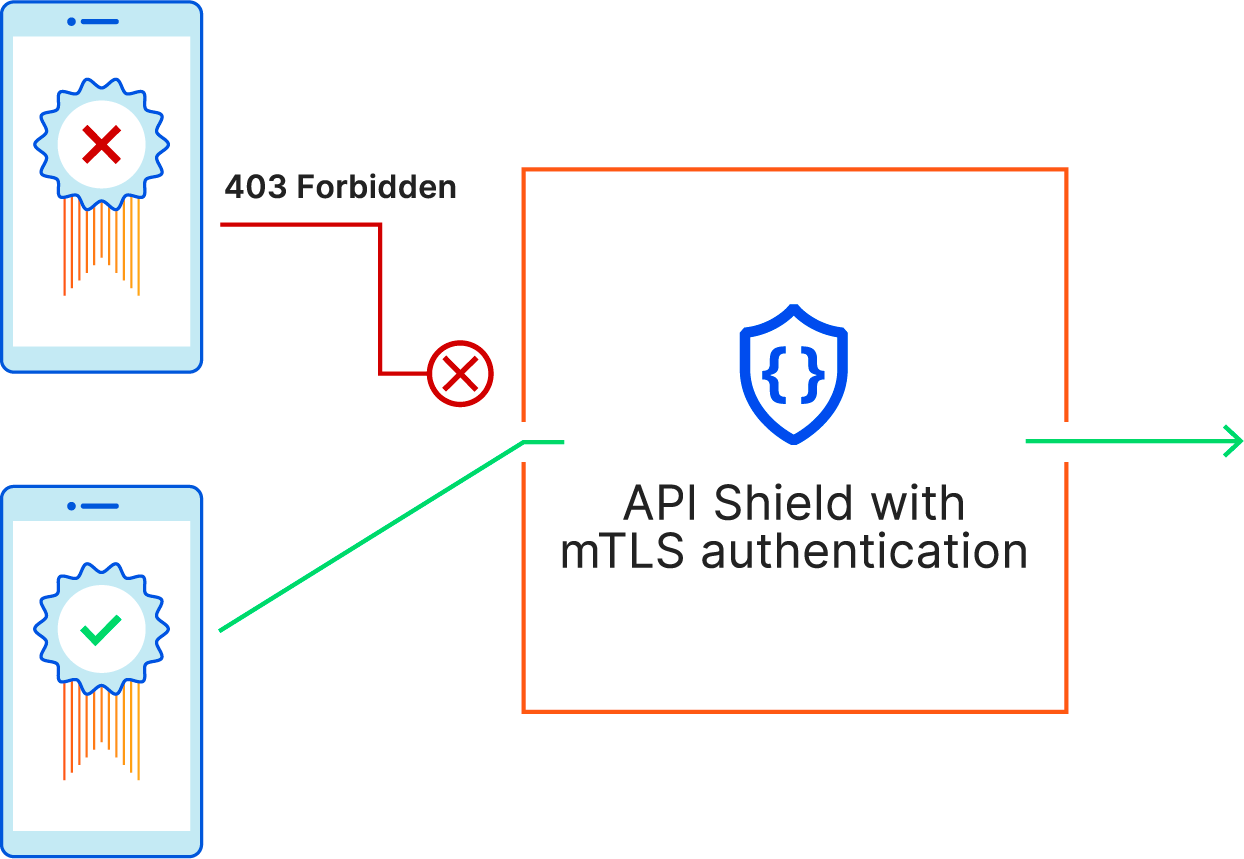

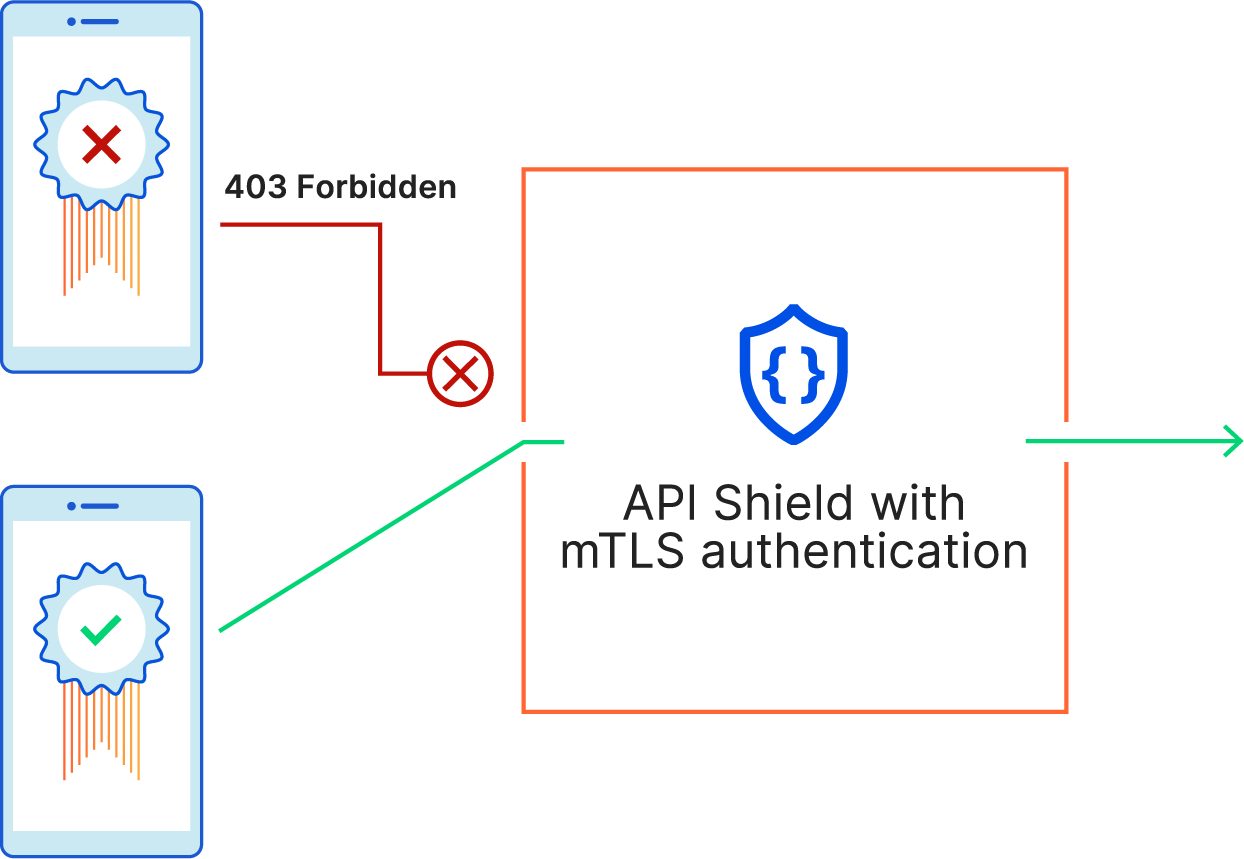

Ensuring that incoming requests have an authentication token attached to them and dropping the requests that don’t is a great way to stop the simplest API attacks.

Expired token reuse

Maybe your application already uses JWTs for user authentication. Your application decodes the JWT and looks for user claims for group membership, and you validate the claims before allowing customers access to your API. But are you checking the JWT expiration time?

Imagine a user pays for your service, but they secretly know they will soon downgrade to a free account. If the user’s tier is stored within the JWT and the application or gateway doesn’t validate the expiration time of the JWT, the user could save an old JWT and replay it to continue their access to their paid benefits. Validating JWT expiration time can prevent this type of replay attack.

Broken Function Level Authorization attacks: Tampering with claims

Let’s say you’re using JWTs for authentication, validating the claims inside them, and also validating expiration time. But do you verify the JWT signature? Practically every JWT is signed by its issuer such that API admins and security teams that know the issuer’s signing key can verify that the JWT hasn’t been tampered with. Without the API Gateway or application checking the JWT signature, a malicious user could change their JWT claims, elevating their privileges to assume an administrator role in an application by starting with a normal, non-privileged user account.

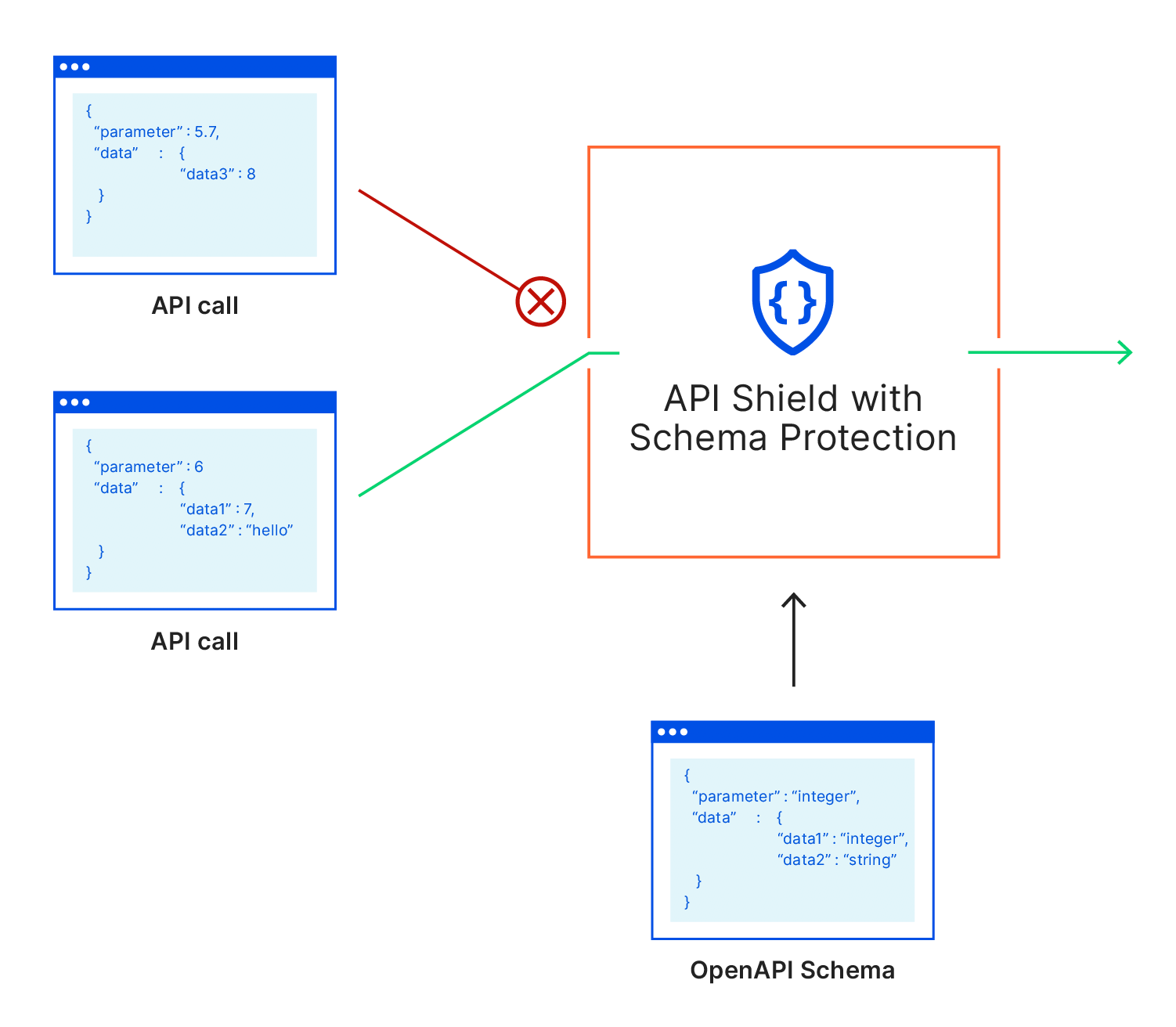

JWT Validation from API Gateway safeguards your API from broken authentication and authorization attacks by checking that JWT signatures are intact, expiry times haven’t yet passed, and that authentication tokens are present to begin with.

Don’t other Cloudflare products do this?

Other Cloudflare products also use JWTs. Cloudflare Access is part of our suite of Zero Trust products, and is meant to tie into your Identity Provider. As a best practice, customers should validate the JWT that Access creates and sends to the origin.

Conversely, JWT Validation for API Gateway is a security layer compatible with any API without changing the setup, management, or expectation of the existing user flow. API Gateway’s JWT Validation is meant to validate pre-existing JWTs that may be used by any number of services at your API origin. You really need both: Access for your internal users or employees and API Gateway for your external users.

In addition, some customers use a custom Cloudflare Worker to validate JWTs, which is a great use case for the Workers platform. However, for straightforward use cases customers may find the JWT Validation experience of API Gateway easier to interact with and manage over the lifecycle of their application. If you are validating JWTs with a Worker and today’s release of JWT Validation isn’t yet at feature parity for your custom Worker, let your account representative know. We’re interested in expanding our capabilities to meet your requirements.

What’s next?

In a future release, we will go beyond checking pre-existing JWTs, and customers will be able to generate and enforce authorization policies entirely within API Gateway. We’ll also upgrade our on-demand developer portal creation with the ability to issue keys and authentication tokens to your development team directly, streamlining API management with Cloudflare.

In addition, stay tuned for future API Gateway feature launches where we’ll use our knowledge of API traffic norms to automatically suggest security policies that highlight and stop Broken Object/Function Level Authorization attacks outside the JWT Validation use case.

Existing API Gateway customers can try the new feature now. Enterprise customers without API Gateway should sign up for the trial to try the latest from API Gateway.

—

1Gartner, “API Security: What You Need to Do to Protect Your APIs”, Analyst(s) Mark O’Neill, Dionisio Zumerle, Jeremy D’Hoinne, January 13, 2023

2Enterprise Strategy Group, “Securing the API Attack Surface”, Analyst, Melinda Marks, May 2023