Post Syndicated from Daniele Molteni original https://blog.cloudflare.com/security-week-2024-wrap-up

The next 12 months have the potential to reshape the global political landscape, with elections occurring in more than 80 nations in 2024, while new technologies such as AI capture our imagination and pose new security challenges.

Against this backdrop, the role of CISOs has never been more important. In the Security Week opening blog, Grant Bourzikas, Cloudflare’s Chief Security Officer, shared his views on the biggest challenges currently facing the security industry.

Over the past week, we announced a number of new products and features that align with what we believe are the most crucial challenges for CISOs around the globe. We released features that span Cloudflare’s product portfolio, ranging from application security to securing employees and cloud infrastructure. We have also published a few stories on how we take a Customer Zero approach to using Cloudflare services to manage security at Cloudflare.

We hope you find these stories interesting and are excited by the new Cloudflare products. In case you missed any of these announcements, here is a recap of Security Week:

Responding to opportunity and risk from AI

| Title | Excerpt |

|---|---|

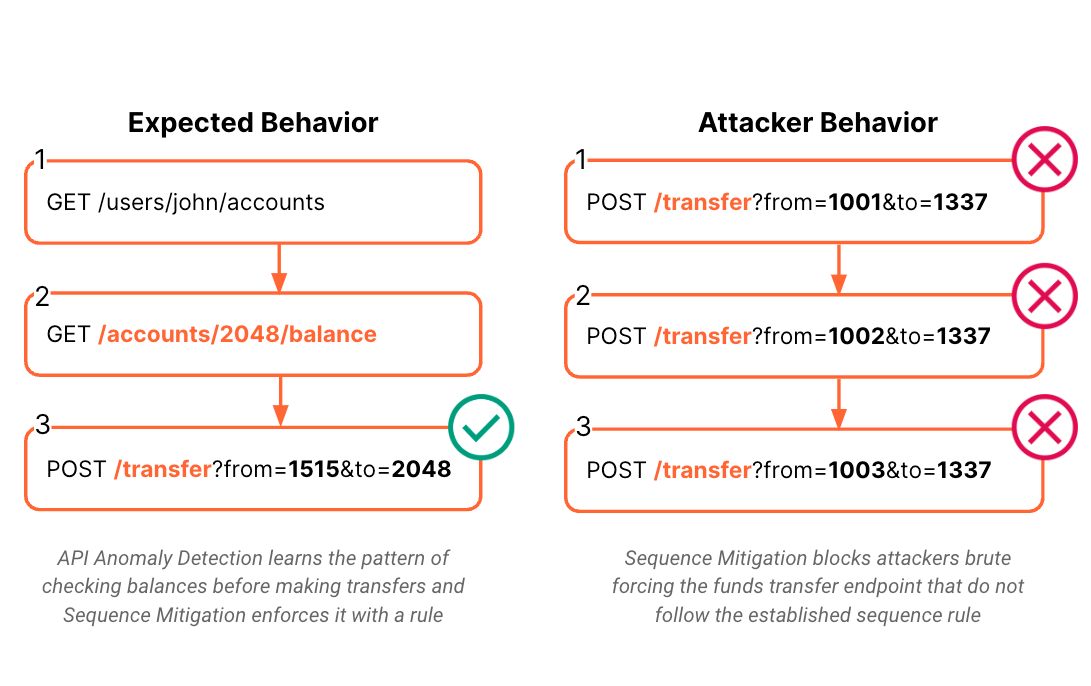

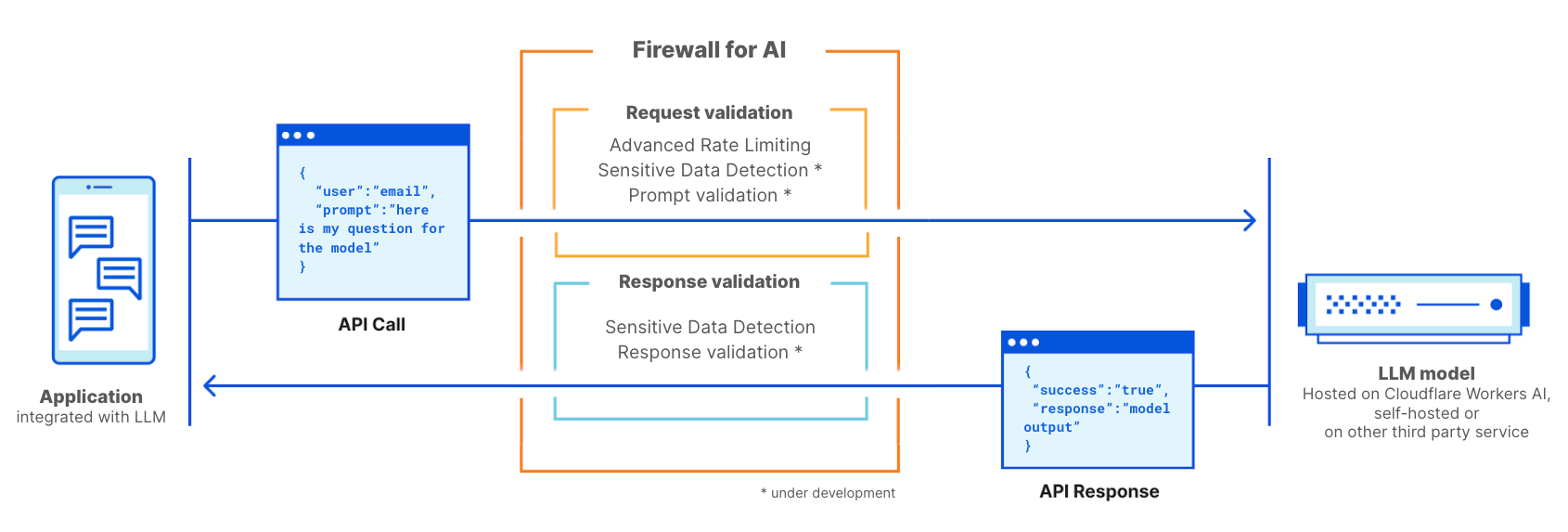

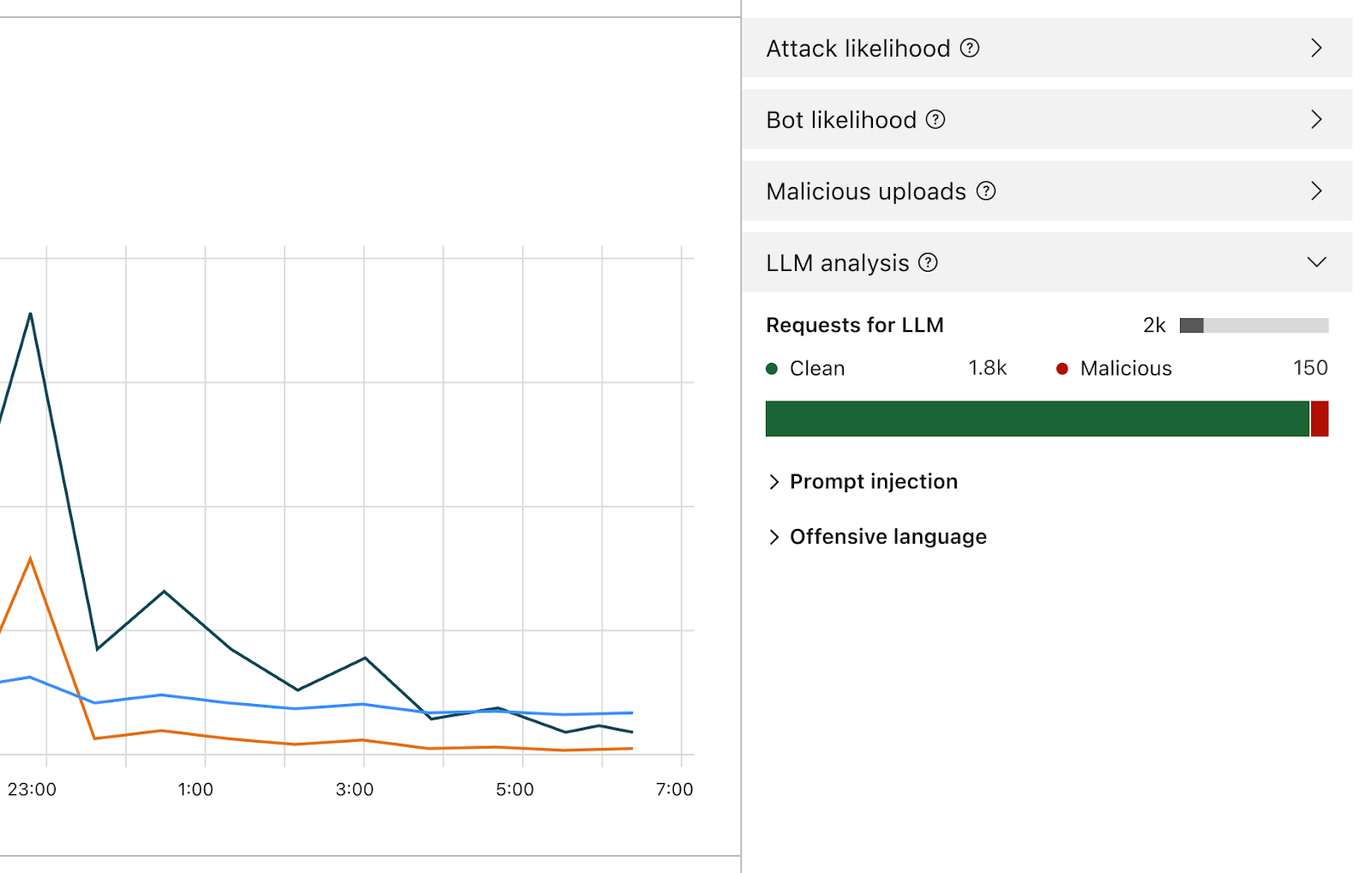

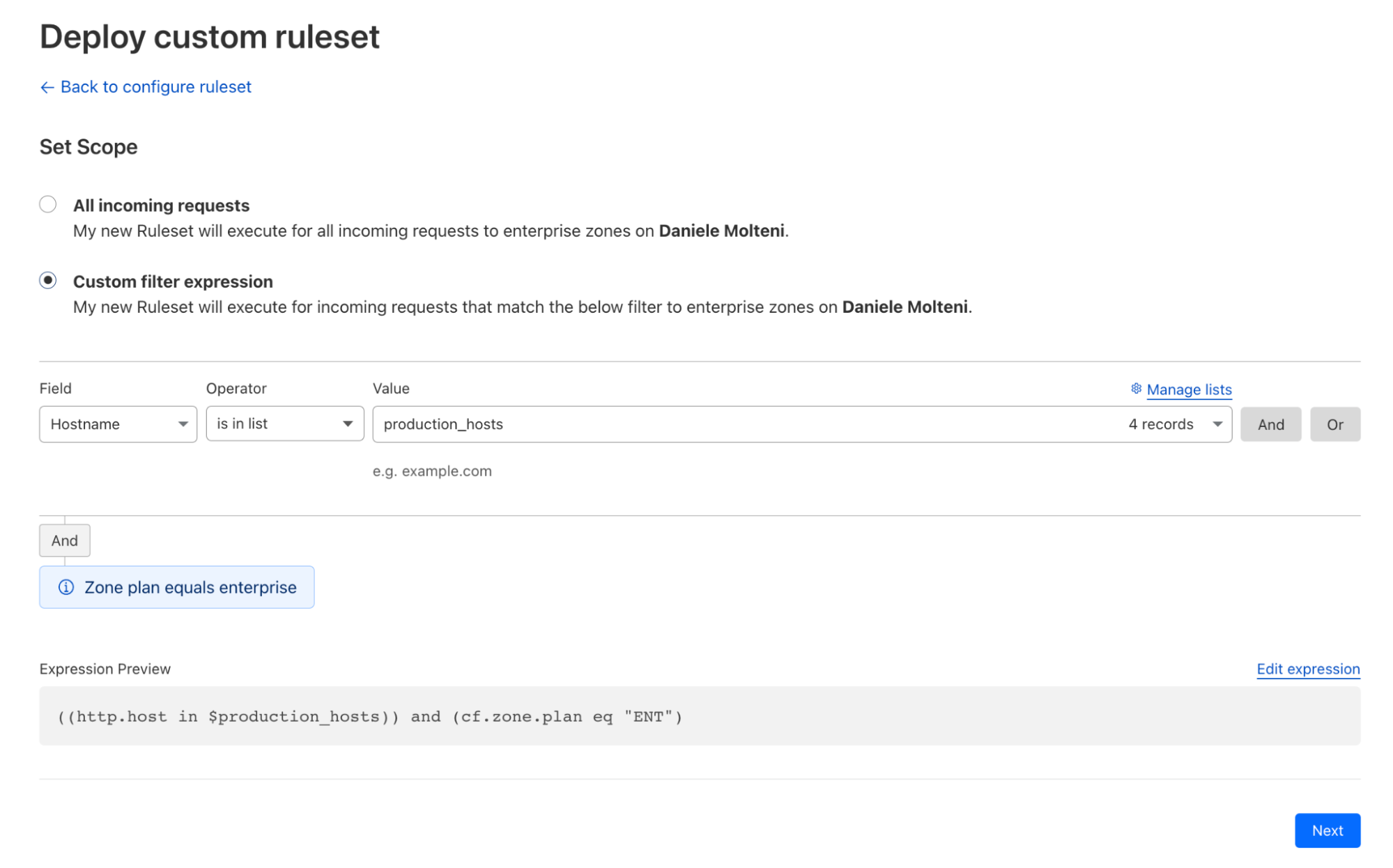

| Cloudflare announces Firewall for AI | Cloudflare announced the development of Firewall for AI, a protection layer that can be deployed in front of Large Language Models (LLMs) to identify abuses and attacks. |

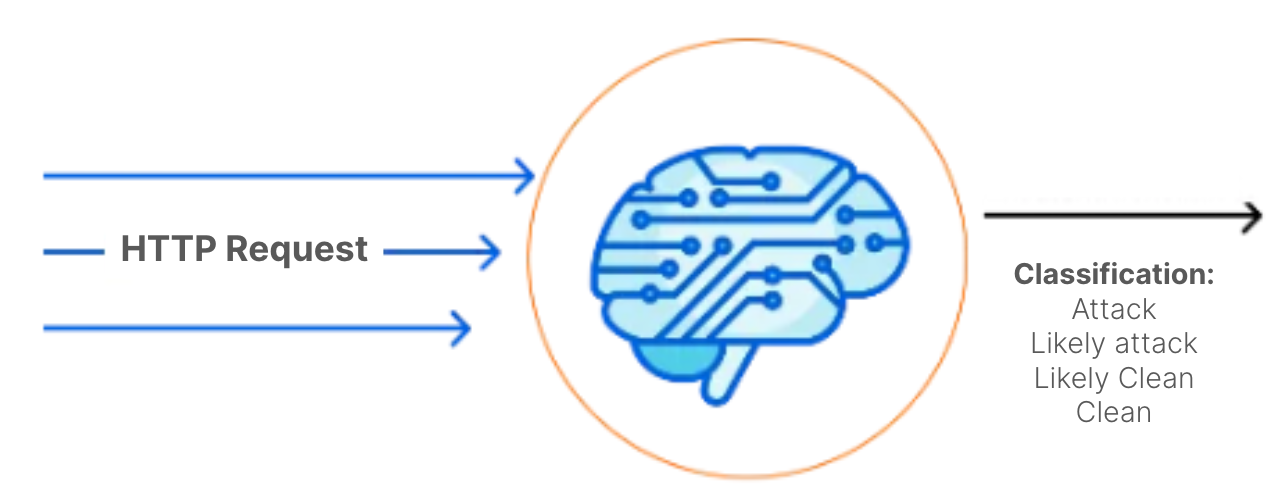

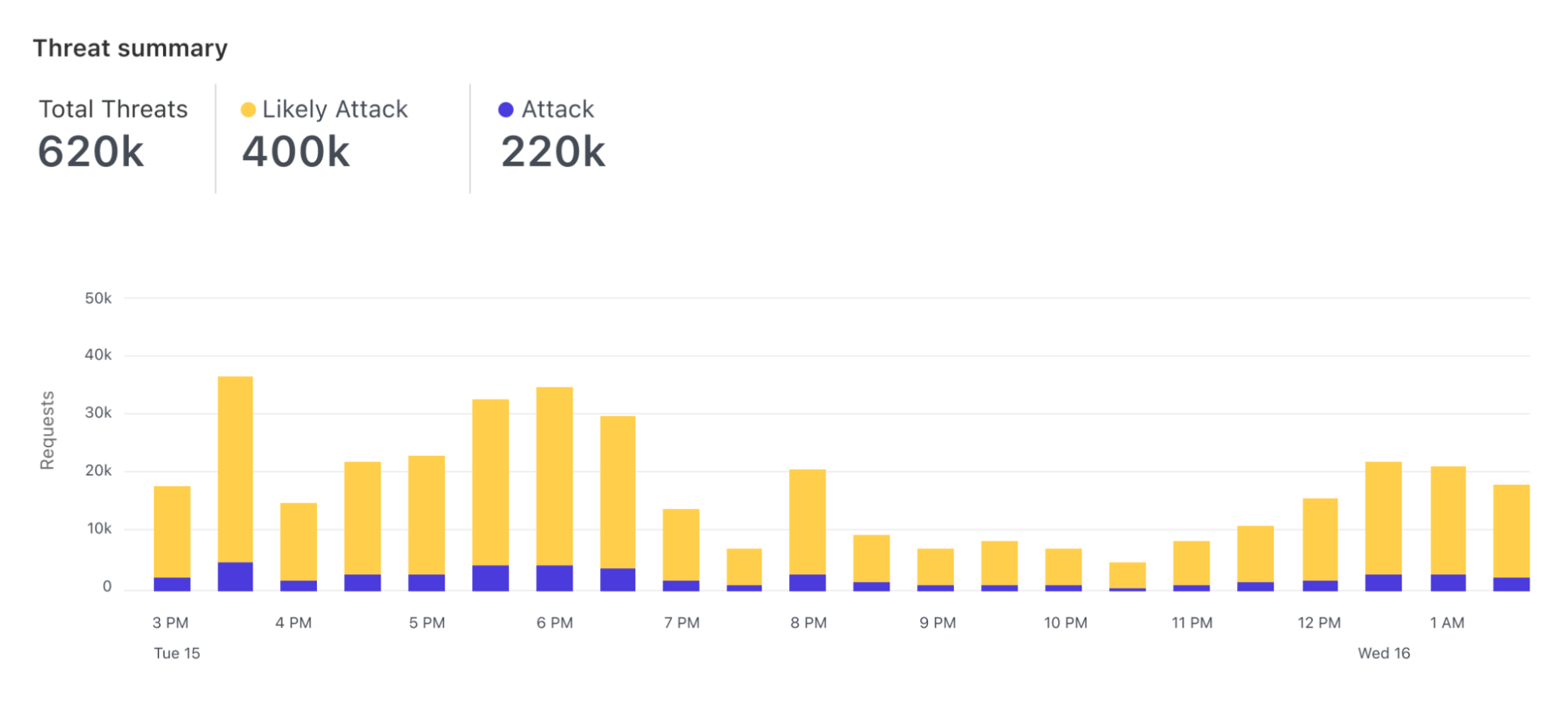

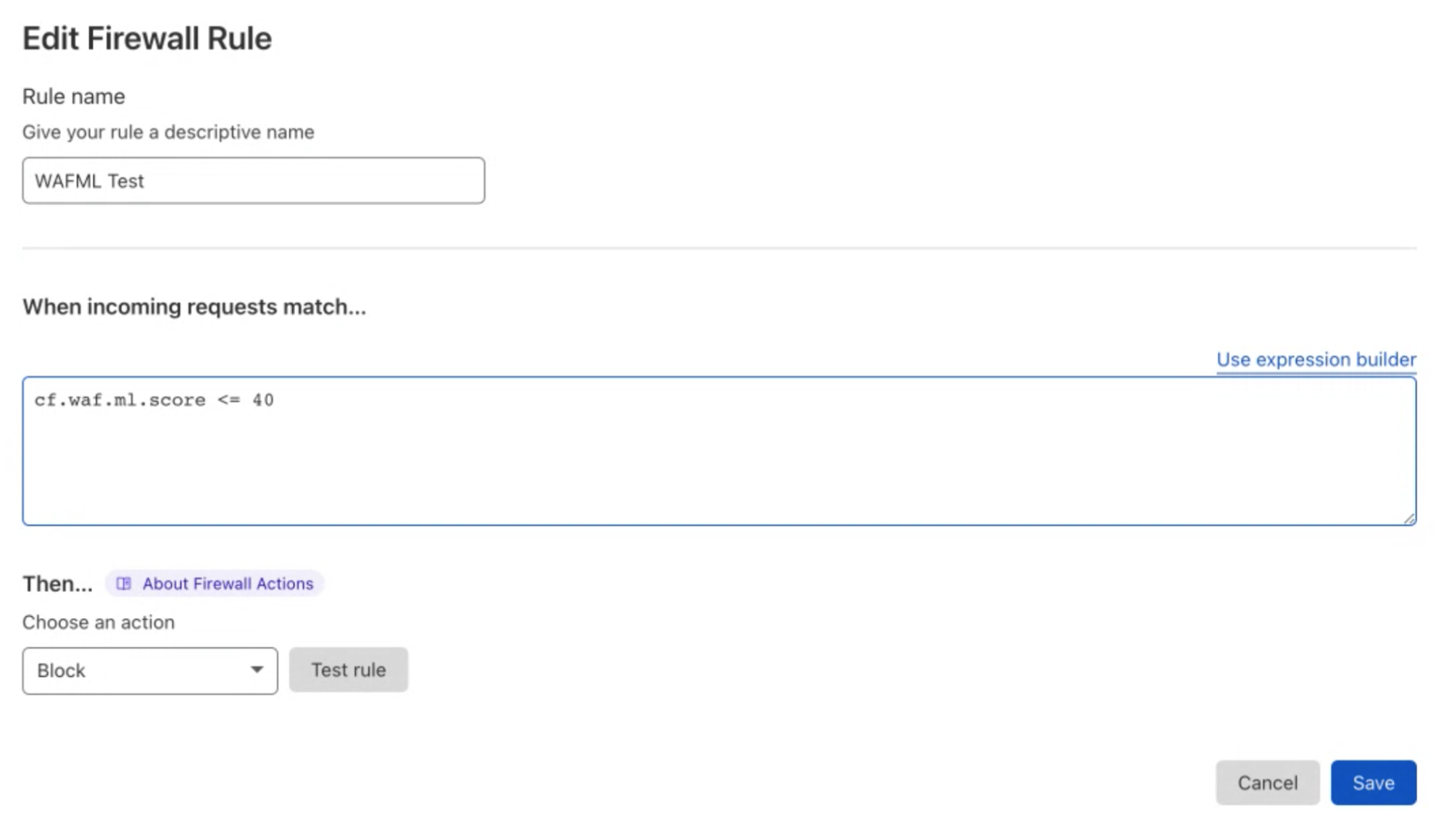

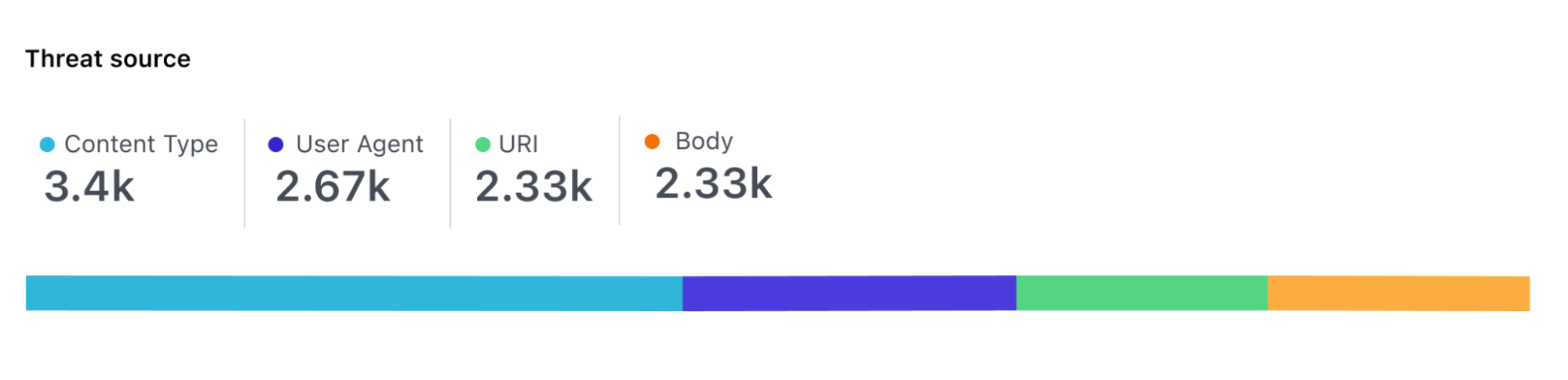

| Defensive AI: Cloudflare’s framework for defending against next-gen threats | Defensive AI is the framework Cloudflare uses when integrating intelligent systems into its solutions. Cloudflare’s AI models look at customer traffic patterns, providing that organization with a tailored defense strategy unique to their environment. |

| Cloudflare launches AI Assistant for Security Analytics | We released a natural language assistant as part of Security Analytics. Now it is easier than ever to get powerful insights about your applications by exploring log and security events using the new natural language query interface. |

| Dispelling the Generative AI fear: how Cloudflare secures inboxes against AI-enhanced phishing | Generative AI is being used by malicious actors to make phishing attacks much more convincing. Learn how Cloudflare’s email security systems are able to see past the deception using advanced machine learning models. |

Maintaining visibility and control as applications and clouds change

| Title | Excerpt |
|---|---|
| Magic Cloud Networking simplifies security, connectivity, and management of public clouds | Introducing Magic Cloud Networking, a new set of capabilities to visualize and automate cloud networks to give our customers easy, secure, and seamless connection to public cloud environments. |
| Secure your unprotected assets with Security Center: quick view for CISOs | Security Center now includes new tools to address a common challenge: ensuring comprehensive deployment of Cloudflare products across your infrastructure. Gain precise insights into where and how to optimize your security posture. |
| Announcing two highly requested DLP enhancements: Optical Character Recognition (OCR) and Source Code Detections | Cloudflare One now supports Optical Character Recognition and detects source code as part of its Data Loss Prevention service. These two features make it easier for organizations to protect their sensitive data and reduce the risk of breaches. |
| Introducing behavior-based user risk scoring in Cloudflare One | We are introducing user risk scoring as part of Cloudflare One, a new set of capabilities to detect risk based on user behavior, so that you can improve security posture across your organization. |
| Eliminate VPN vulnerabilities with Cloudflare One | The Cybersecurity & Infrastructure Security Agency issued an Emergency Directive due to the Ivanti Connect Secure and Policy Secure vulnerabilities. In this post, we discuss the threat actor tactics exploiting these vulnerabilities and how Cloudflare One can mitigate these risks. |
| Zero Trust WARP: tunneling with a MASQUE | This blog discusses the introduction of MASQUE to Zero Trust WARP and how Cloudflare One customers will benefit from this modern protocol. |
| Collect all your cookies in one jar with Page Shield Cookie Monitor | Protecting online privacy starts with knowing what cookies are used by your websites. Our client-side security solution, Page Shield, extends transparent monitoring to HTTP cookies. |
| Protocol detection with Cloudflare Gateway | Cloudflare Secure Web Gateway now supports the detection, logging, and filtering of network protocols using packet payloads without the need for inspection. |
| Introducing Requests for Information (RFIs) and Priority Intelligence Requirements (PIRs) for threat intelligence teams | Our Security Center now houses Requests for Information and Priority Intelligence Requirements. These features are also available via API, and Cloudforce One customers can start leveraging them today for enhanced security analysis. |

Consolidating to drive down costs

| Title | Excerpt |

|---|---|

| Log Explorer: monitor security events without third-party storage | With the combined power of Security Analytics and Log Explorer, security teams can analyze, investigate, and monitor logs natively within Cloudflare, reducing time to resolution and overall cost of ownership by eliminating the need of third-party logging systems. |

| Simpler migration from Netskope and Zscaler to Cloudflare: introducing Deskope and a Descaler partner update | Cloudflare expands the Descaler program to Authorized Service Delivery Partners (ASDPs). Cloudflare is also launching Deskope, a new set of tooling to help migrate existing Netskope customers to Cloudflare One. |

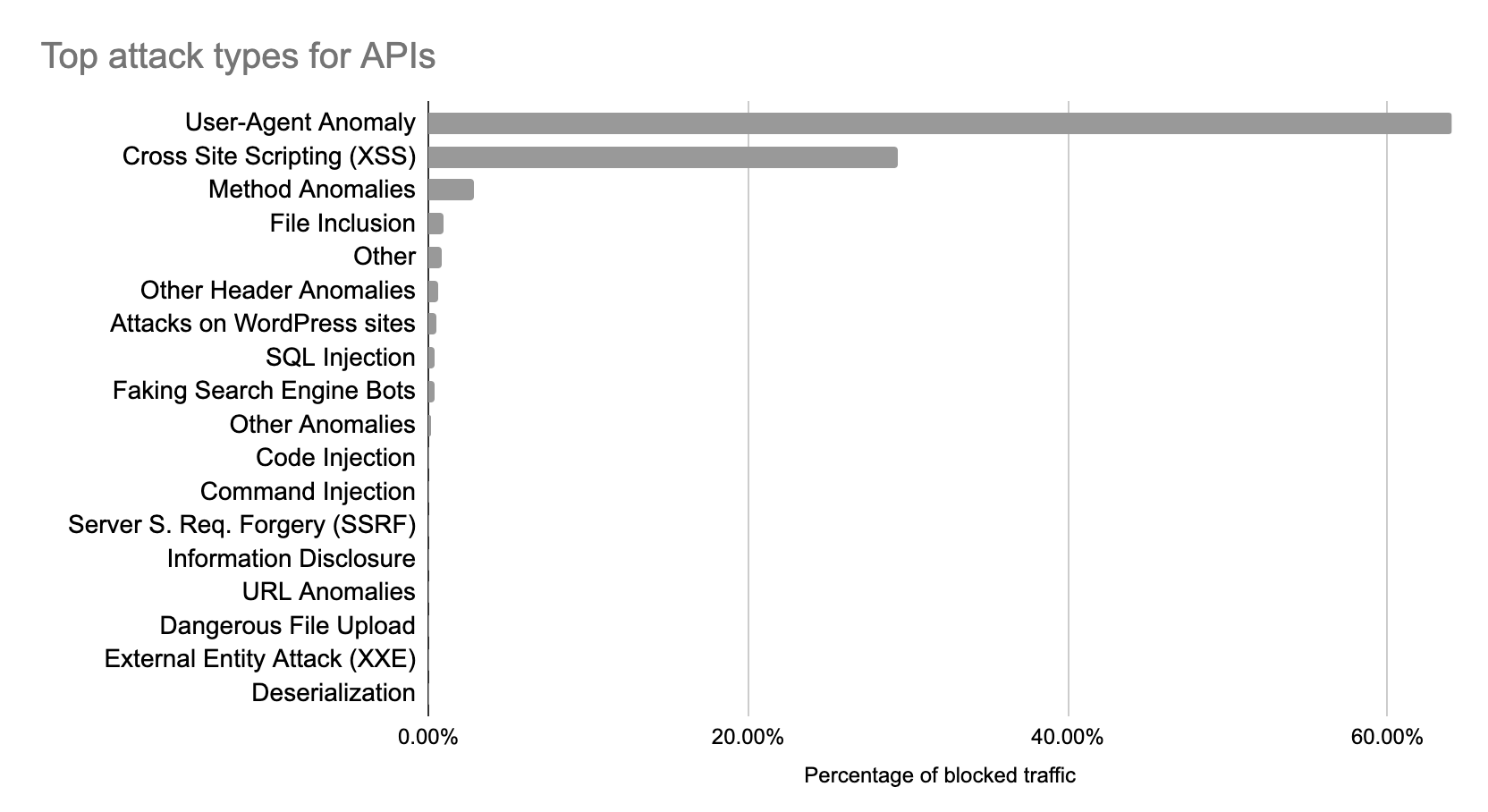

| Protecting APIs with JWT Validation | Cloudflare customers can now protect their APIs from broken authentication attacks by validating incoming JSON Web Tokens with API Gateway. |

| Simplifying how enterprises connect to Cloudflare with Express Cloudflare Network Interconnect | Express Cloudflare Network Interconnect makes it fast and easy to connect your network to Cloudflare. Customers can now order Express CNIs directly from the Cloudflare dashboard. |

| Cloudflare treats SASE anxiety for VeloCloud customers | The turbulence in the SASE market is driving many customers to seek help. We’re doing our part to help VeloCloud customers who are caught in the crosshairs of shifting strategies. |

| Free network flow monitoring for all enterprise customers | Announcing a free version of Cloudflare’s network flow monitoring product, Magic Network Monitoring. Now available to all Enterprise customers. |

| Building secure websites: a guide to Cloudflare Pages and Turnstile Plugin | Learn how to use Cloudflare Pages and Turnstile to deploy your website quickly and easily while protecting it from bots, without compromising user experience. |

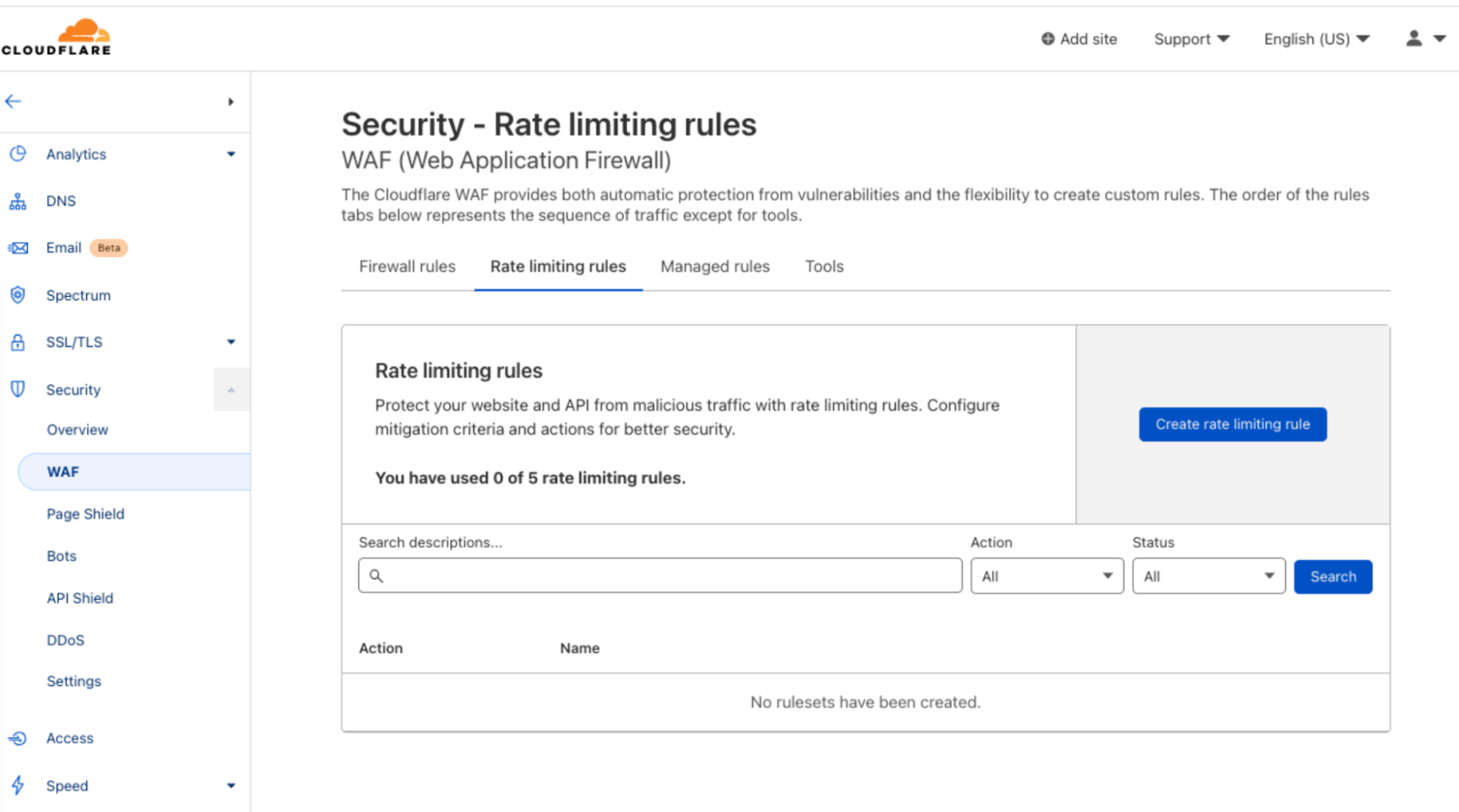

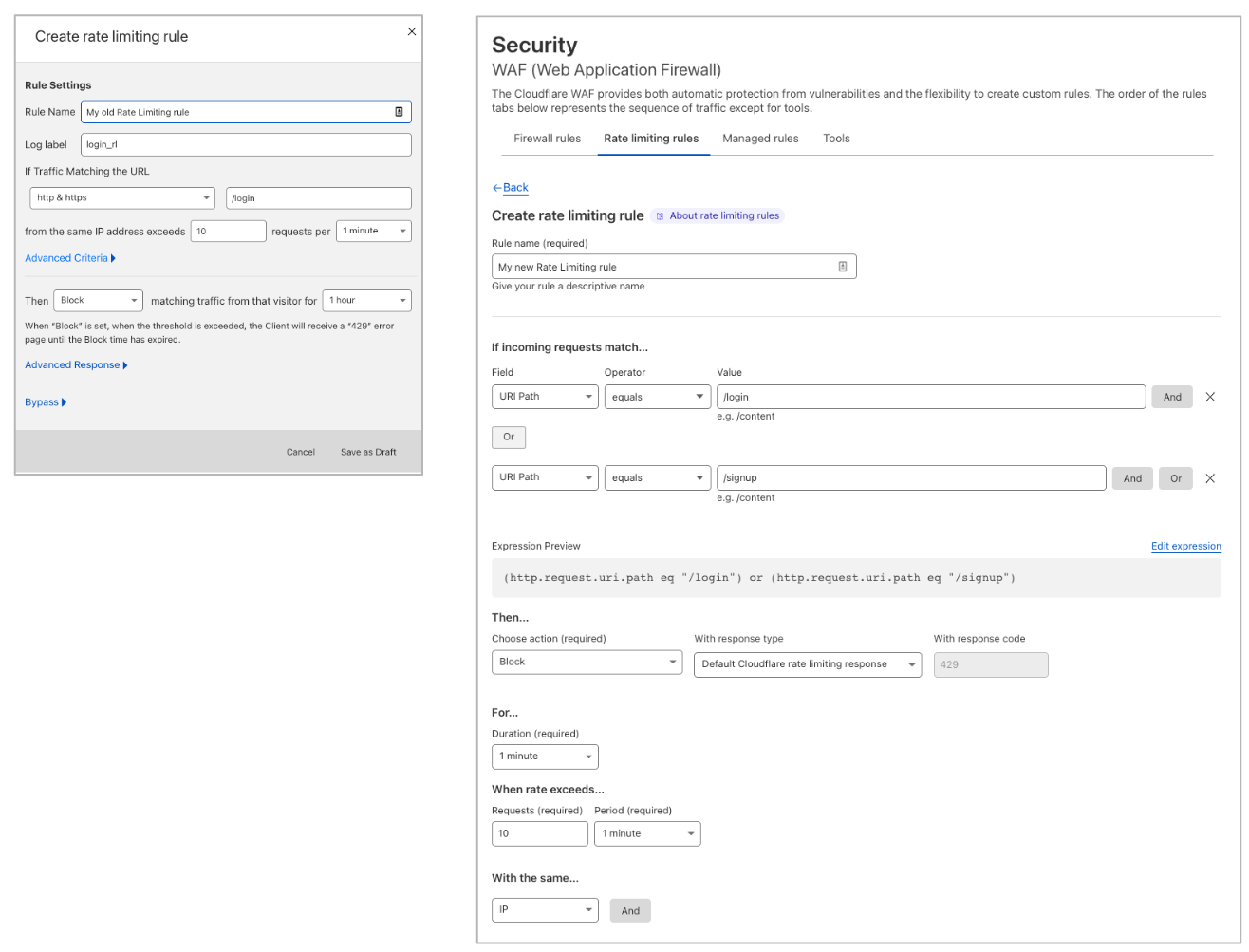

| General availability for WAF Content Scanning for file malware protection | Announcing the General Availability of WAF Content Scanning, protecting your web applications and APIs from malware by scanning files in-transit. |

How can we help make the Internet better?

| Title | Excerpt |
|---|---|
| Cloudflare protects global democracy against threats from emerging technology during the 2024 voting season | At Cloudflare, we’re actively supporting a range of players in the election space by providing security, performance, and reliability tools to help facilitate the democratic process. |
| Navigating the maze of Magecart: a cautionary tale of a Magecart impacted website | Learn how a sophisticated Magecart attack was behind a campaign against e-commerce websites. This incident underscores the critical need for a strong client-side security posture. |
| Cloudflare’s URL Scanner, new features, and the story of how we built it | Discover the enhanced URL Scanner API, now integrated with the Security Center Investigate Portal. Enjoy unlisted scans, multi-device screenshots, and seamless integration with the Cloudflare ecosystem. |
| Changing the industry with CISA’s Secure by Design principles | Security considerations should be an integral part of software’s design, not an afterthought. Explore how Cloudflare adheres to the Cybersecurity & Infrastructure Security Agency’s Secure by Design principles to shift the industry. |
| The state of the post-quantum Internet | Nearly two percent of all TLS 1.3 connections established with Cloudflare are secured with post-quantum cryptography. In this blog post we discuss where we are now in early 2024, what to expect for the coming years, and what you can do today. |
| Advanced DNS Protection: mitigating sophisticated DNS DDoS attacks | Introducing the Advanced DNS Protection system, a robust defense mechanism designed to protect against the most sophisticated DNS-based DDoS attacks. |

Sharing the Cloudflare way

| Title | Excerpt |
|---|---|
| Linux kernel security tunables everyone should consider adopting | This post illustrates some of the Linux kernel features that are helping Cloudflare keep its production systems more secure. We do a deep dive into how they work and why you should consider enabling them. |
| Securing Cloudflare with Cloudflare: a Zero Trust journey | A deep dive into how we have deployed Zero Trust at Cloudflare while maintaining user privacy. |
| Network performance update: Security Week 2024 | Cloudflare is the fastest provider for 95th percentile connection time in 44% of networks around the world. We dig into the data and talk about how we do it. |
| Harnessing chaos in Cloudflare offices | This blog discusses the new sources of “chaos” that have been added to LavaRand and how you can make use of that harnessed chaos in your next application. |
| Launching email security insights on Cloudflare Radar | The new Email Security section on Cloudflare Radar provides insights into the latest trends around threats found in malicious email, sources of spam and malicious email, and the adoption of technologies designed to prevent abuse of email. |

A final word

Thanks for joining us this week, and stay tuned for our next Innovation Week in early April, focused on the developer community.