Post Syndicated from Derek Pitts original https://blog.cloudflare.com/securing-cloudflare-with-cloudflare-zero-trust

Cloudflare is committed to providing our customers with industry-leading network security solutions. At the same time, we recognize that establishing robust security measures involves identifying potential threats by using processes that may involve scrutinizing sensitive or personal data, which in turn can pose a risk to privacy. As a result, we work hard to balance privacy and security by building privacy-first security solutions that we offer to our customers and use for our own network.

In this post, we’ll walk through how we deployed Cloudflare products like Access and our Zero Trust Agent in a privacy-focused way for employees who use the Cloudflare network. Even though global legal regimes generally afford employees a lower level of privacy protection on corporate networks, we work hard to make sure our employees understand their privacy choices because Cloudflare has a strong culture and history of respecting and furthering user privacy on the Internet. We’ve found that many of our customers feel similarly about ensuring that they are protecting privacy while also securing their networks.

So how do we balance our commitment to privacy with ensuring the security of our internal corporate environment using Cloudflare products and services? We start with the basics: We only retain the minimum amount of data needed, we de-identify personal data where we can, we communicate transparently with employees about the security measures we have in place on corporate systems and their privacy choices, and we retain necessary information for the shortest time period needed.

How we secure Cloudflare using Cloudflare

We take a comprehensive approach to securing our globally distributed hybrid workforce with both organizational controls and technological solutions. Our organizational approach includes a number of measures, such as a company-wide Acceptable Use Policy, employee privacy notices tailored by jurisdiction, required annual and new-hire privacy and security trainings, role-based access controls (RBAC), and least privilege principles. These organizational controls let us set expectations for both the company and our employees, which we then implement with technological controls and enforce through logging and other mechanisms.

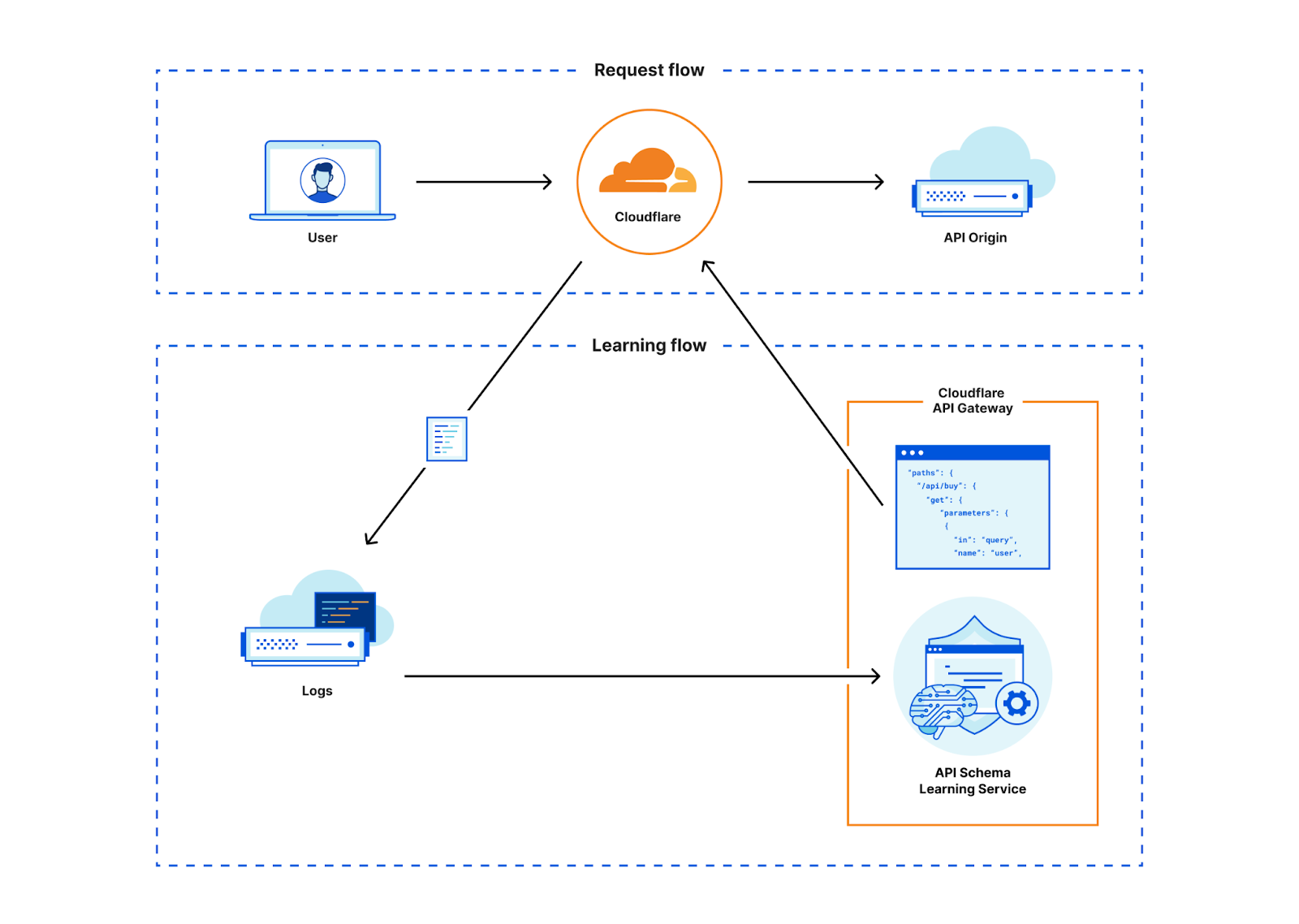

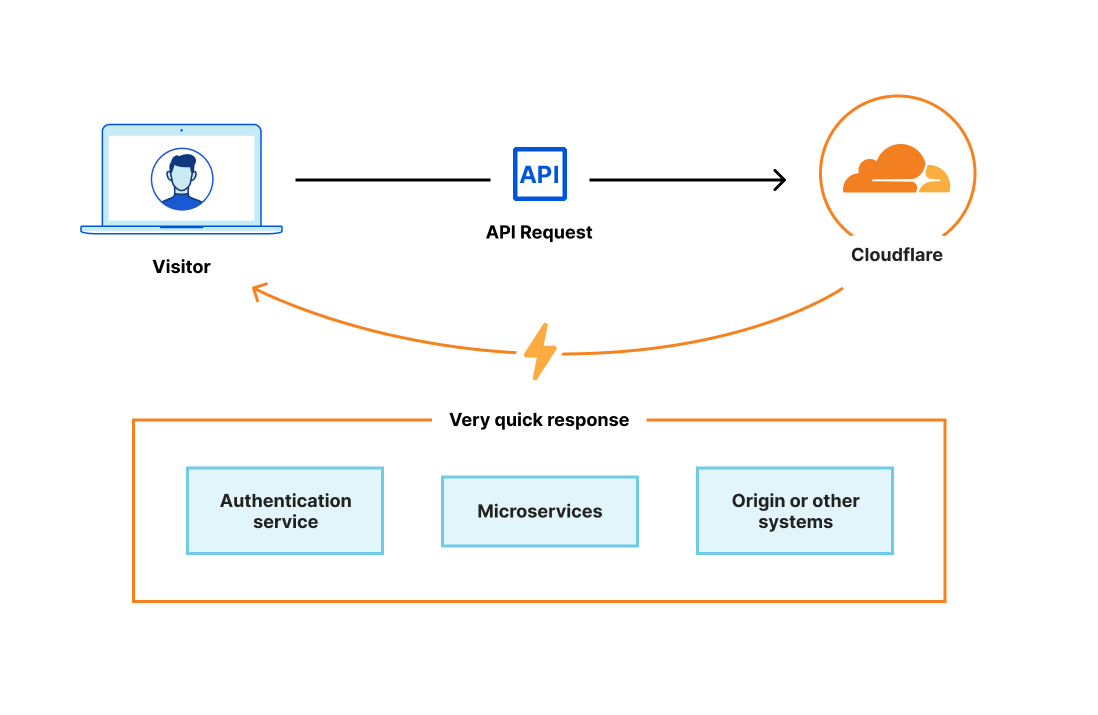

Our technological controls are rooted in Zero Trust best practices and start with a focus on our Cloudflare One services to secure our workforce as described below.

Securing access to applications

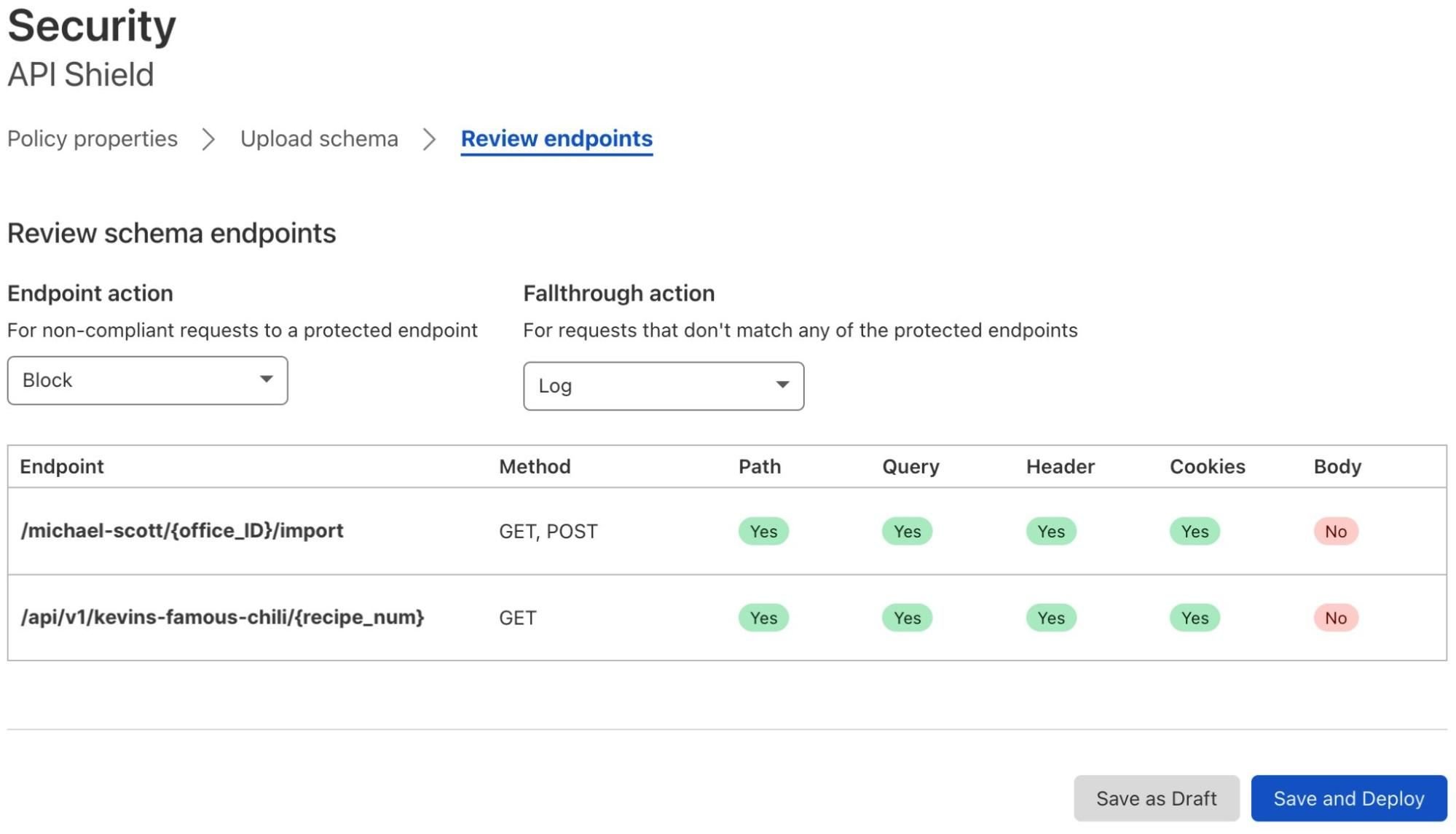

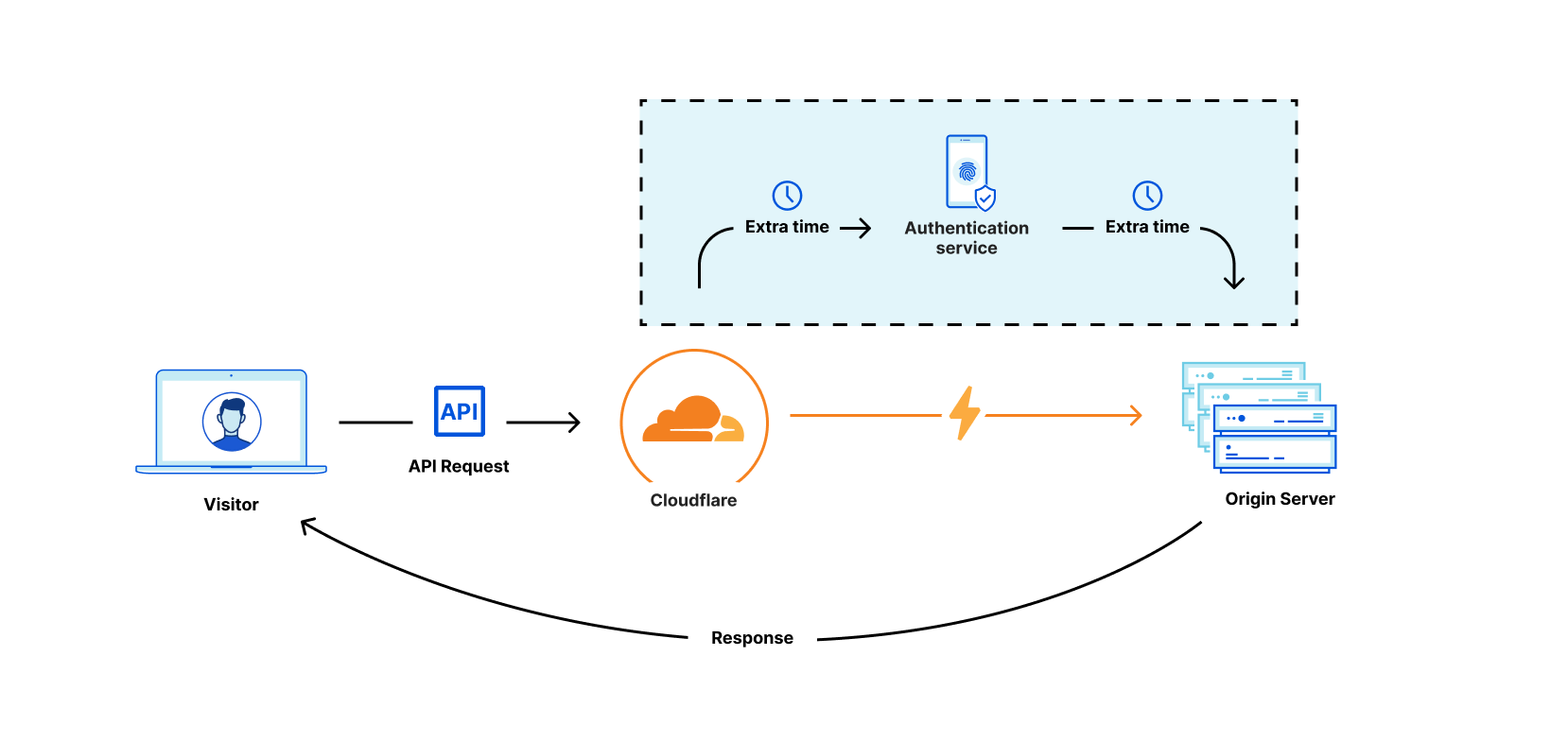

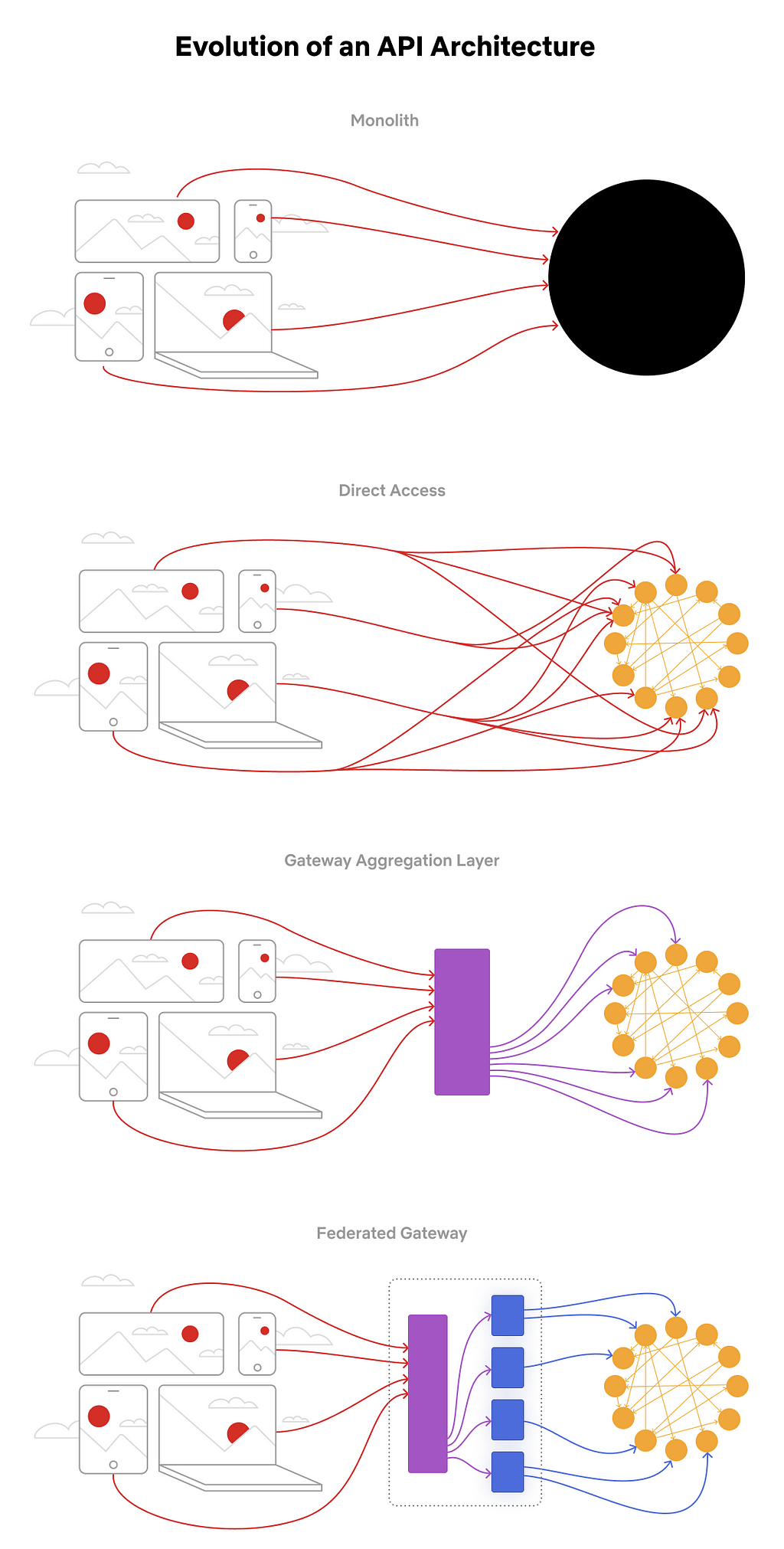

Cloudflare secures access to self-hosted and SaaS applications for our workforce, whether remote or in-office, using our own Zero Trust Network Access (ZTNA) service, Cloudflare Access, to verify identity, enforce multi-factor authentication with security keys, and evaluate device posture using the Zero Trust client for every request. This approach evolved over several years and has enabled Cloudflare to more effectively protect our growing workforce.
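
The per-request checks described above can be sketched as a small decision function. This is only an illustrative model, not Cloudflare Access configuration or its API: the `AccessRequest` fields and the `evaluate_request` function are hypothetical names chosen for this example, and a real deployment would express these rules as policies in the product.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class AccessRequest:
    """Hypothetical view of one request to a protected application."""
    user_email: str
    identity_verified: bool   # the identity provider confirmed the user
    mfa_method: str           # assumed values: "security_key", "totp", "none"
    device_posture_ok: bool   # the device client reported a healthy device


def evaluate_request(req: AccessRequest) -> tuple[bool, str]:
    """Return (allowed, reason). Every check must pass on every request."""
    if not req.identity_verified:
        return False, "identity not verified"
    if req.mfa_method != "security_key":
        return False, "security key MFA required"
    if not req.device_posture_ok:
        return False, "device posture check failed"
    return True, "allowed"


if __name__ == "__main__":
    request = AccessRequest("user@example.com", True, "security_key", True)
    print(evaluate_request(request))
```

The point of the sketch is that no single signal is sufficient: a verified identity with a weaker second factor, or a valid security key on an unhealthy device, is still denied.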

Defending against cyber threats

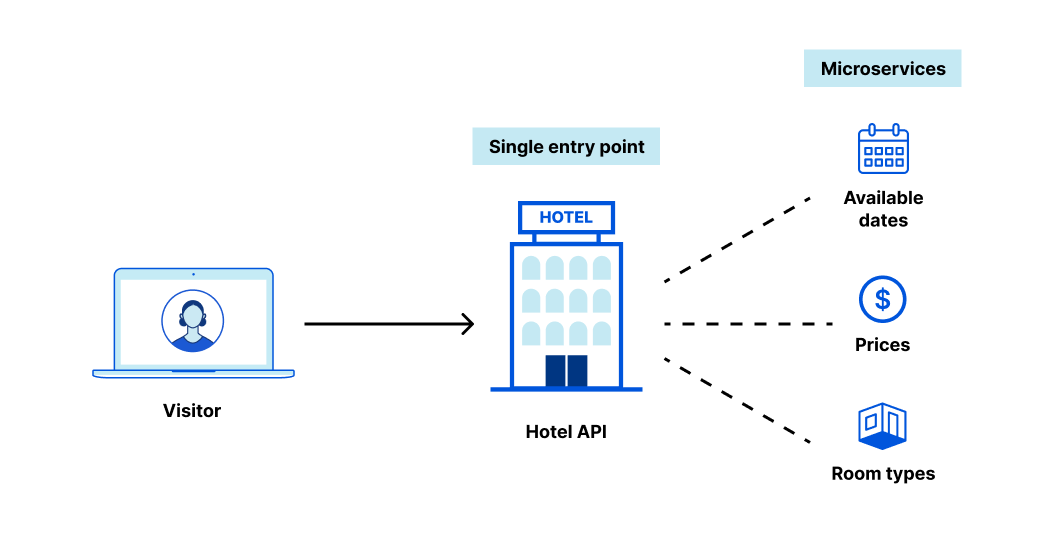

Cloudflare leverages Cloudflare Magic WAN to secure our office networks and the Cloudflare Zero Trust agent to secure our workforce. We use both of these technologies as an onramp to our own Secure Web Gateway (also known as Gateway) to protect our workforce against a rise in online threats.

As we have evolved our hybrid work and office configurations, our security teams have benefited from additional controls and visibility for forward-proxied Internet traffic, including:

- Granular HTTP controls: Our security teams inspect HTTPS traffic to block access to specific websites they have identified as malicious, conduct antivirus scanning, and apply identity-aware browsing policies.

- Selectively isolating Internet browsing: With remote browser isolation (RBI) sessions, all web code is run on Cloudflare’s network far from local devices, insulating users from any untrusted and malicious content. Today, Cloudflare isolates social media, news outlets, personal email, and other potentially risky Internet categories, and we have set up feedback loops for our employees to help us fine-tune these categories.

- Geography-based logging: Seeing where outbound requests originate helps our security teams understand the geographic distribution of our workforce, including our presence in high-risk areas.

- Data Loss Prevention: To keep sensitive data inside our corporate network, this tool allows us to identify data we’ve flagged as sensitive in outbound HTTP/S traffic and prevent it from leaving the network.

- Cloud Access Security Broker: This tool allows us to monitor our SaaS apps for misconfigurations and sensitive data that is potentially exposed or shared too broadly.
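
To make the controls in this list more concrete, here is a minimal sketch of how a forward proxy might combine blocking, data loss prevention, and selective isolation into one decision. It is a simplified model under stated assumptions, not how Gateway is implemented or configured: the host list, category names, sensitive marker, and the `decide` function are all hypothetical.

```python
from dataclasses import dataclass

# All values below are illustrative assumptions.
BLOCKED_HOSTS = {"malicious.example"}
ISOLATED_CATEGORIES = {"social_media", "news", "personal_email"}
SENSITIVE_MARKERS = ("INTERNAL-ONLY",)


@dataclass(frozen=True)
class OutboundRequest:
    """Hypothetical summary of one inspected outbound HTTP/S request."""
    host: str
    category: str
    body: str = ""


def decide(req: OutboundRequest) -> str:
    """Return "block", "isolate", or "allow" for an outbound request."""
    # Known-malicious destinations are blocked outright.
    if req.host in BLOCKED_HOSTS:
        return "block"
    # Data flagged as sensitive must not leave the network.
    if any(marker in req.body for marker in SENSITIVE_MARKERS):
        return "block"
    # Risky categories are rendered in a remote browser instead of locally.
    if req.category in ISOLATED_CATEGORIES:
        return "isolate"
    return "allow"


if __name__ == "__main__":
    print(decide(OutboundRequest("news.example", "news")))
```

Ordering matters in a sketch like this: blocking rules are checked before isolation so that an isolated category cannot be used to move flagged data out.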

Protecting inboxes with cloud email security

Additionally, we have deployed our Cloud Email Security solution to protect our workforce from the increase in phishing and business email compromise attacks, which we have seen directed against our own employees and which are plaguing organizations globally. One key feature we use is email link isolation, which combines RBI with email security functionality to open potentially suspicious links in an isolated browser. This allows us to be slightly more relaxed about blocking suspicious links without compromising security. This is a big win for productivity for both our employees and the security team, as neither group has to deal with large volumes of false positives.

More details on our implementation can be found in our Securing Cloudflare with Cloudflare One case study.

How we respect privacy

The very nature of these powerful security technologies Cloudflare has created and deployed underscores the responsibility we have to use privacy-first principles in handling this data, and to recognize that the data should be respected and protected at all times.

The journey to respecting privacy starts with the products themselves. We develop products that have privacy controls built in at their foundation. To achieve this, our product teams work closely with Cloudflare’s product and privacy counsels to practice privacy by design. A great example of this collaboration is the ability to manage personally identifiable information (PII) in the Secure Web Gateway logs. You can choose to exclude PII from Gateway logs entirely or redact PII from the logs and gain granular control over access to PII with the Zero Trust PII Role.
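
The difference between excluding and redacting PII can be illustrated with a short sketch. This is a conceptual model only and does not reflect the actual Gateway log schema or settings API: the field names in `PII_FIELDS`, the `PiiMode` enum, and both functions are assumptions made for this example. It models "exclude" as never storing PII, and "redact" as storing it but masking it for any viewer who does not hold a PII role.

```python
from enum import Enum

# Assumed PII field names; a real log schema will differ.
PII_FIELDS = ("user_email", "source_ip", "device_id")


class PiiMode(Enum):
    EXCLUDE = "exclude"  # PII is never written to the log
    REDACT = "redact"    # PII is stored but masked for most viewers


def store_entry(event: dict, mode: PiiMode) -> dict:
    """Return the log entry that would be stored for an event."""
    if mode is PiiMode.EXCLUDE:
        return {k: v for k, v in event.items() if k not in PII_FIELDS}
    return dict(event)


def view_entry(entry: dict, viewer_has_pii_role: bool) -> dict:
    """Return the entry as a given viewer would see it."""
    if viewer_has_pii_role:
        return dict(entry)
    return {k: ("[REDACTED]" if k in PII_FIELDS else v) for k, v in entry.items()}


if __name__ == "__main__":
    event = {"user_email": "user@example.com", "url": "https://example.com"}
    stored = store_entry(event, PiiMode.REDACT)
    print(view_entry(stored, viewer_has_pii_role=False))
```

The design choice to note is that exclusion is irreversible (the data simply is not there), while redaction keeps the data available to a small, explicitly authorized group.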

In addition to building privacy-first security products, we are also committed to communicating transparently with Cloudflare employees about how these security products work and what they can – and can’t – see about traffic on our internal systems. This empowers employees to see themselves as part of the security solution, rather than set up an “us vs. them” mentality around employee use of company systems.

For example, while our employee privacy policies and our Acceptable Use Policy provide broad notice to our employees about what happens to data when they use the company’s systems, we thought it was important to provide even more detail. As a result, our security team collaborated with our privacy team to create an internal wiki page that plainly explains the data our security tools collect and why. We also describe the privacy choices available to our employees. This is particularly important for the “bring your own device” (BYOD) employees who have opted for the convenience of using their personal mobile device for work. BYOD employees must install endpoint management (provided by a third party) and Cloudflare’s Zero Trust client on their devices if they want to access Cloudflare systems. We described clearly to our employees what this means about what traffic on their devices can be seen by Cloudflare teams, and we explained how they can take steps to protect their privacy when they are using their devices for purely personal purposes.

For the teams that develop for and support our Zero Trust services, we ensure that data is available only on a strict, need-to-know basis and is restricted to Cloudflare team members that require access as an essential part of their job. Everyone with access is required to take training that reminds them of their responsibility to respect this data and provides them with best practices for handling sensitive data. Additionally, to ensure we have full auditability, we log every query run against this data and who ran it.
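
As a rough illustration of need-to-know access combined with query auditing, the sketch below records every query attempt together with who made it, including attempts that are denied. It is not a description of Cloudflare's internal tooling: the `AuditedStore` class, its method names, and the placeholder result string are all hypothetical.

```python
from datetime import datetime, timezone


class AuditedStore:
    """Hypothetical data store that logs every query and who ran it."""

    def __init__(self, authorized_users):
        self._authorized = set(authorized_users)
        self.audit_log = []  # in a real system this would be tamper-resistant

    def run_query(self, user: str, query: str) -> str:
        allowed = user in self._authorized
        # Record the attempt before acting on it, so denials are audited too.
        self.audit_log.append({
            "at": datetime.now(timezone.utc).isoformat(),
            "user": user,
            "query": query,
            "allowed": allowed,
        })
        if not allowed:
            raise PermissionError(f"{user} is not authorized to query this data")
        return f"results for: {query}"  # placeholder for real query execution


if __name__ == "__main__":
    store = AuditedStore({"analyst@example.com"})
    store.run_query("analyst@example.com", "count events by day")
    print(store.audit_log[-1]["user"])
```

Writing the audit record before the authorization check means the log answers both questions an auditor is likely to ask: who accessed the data, and who tried to.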

Cloudflare has also made it easy for our employees to express any concerns they may have about how their data is handled or what it is used for. We have mechanisms in place that allow employees to ask questions or express concerns about the use of Zero Trust Security on Cloudflare’s network.

In addition, we make it easy for employees to reach out directly to the leaders responsible for these tools. All of these efforts have helped our employees better understand what information we collect and why. This has helped to expand our strong foundation for security and privacy at Cloudflare.

Encouraging privacy-first security for all

We believe firmly that great security is critical for ensuring data privacy, and that privacy and security can co-exist harmoniously. We also know that it is possible to secure a corporate network in a way that respects the employees using those systems.

For anyone looking to secure a corporate network, we encourage focusing on network security products and solutions that build in personal data protections, like our Zero Trust suite of products. If you are curious to explore how to implement these Cloudflare services in your own organization, request a consultation here.

We also urge organizations to make sure they communicate clearly with their users. In addition to making sure company policies are transparent and accessible, it is important to help employees understand their privacy choices. Under the laws of almost every jurisdiction globally, individuals have a lower level of privacy on a company device or a company’s systems than they do on their own personal accounts or devices, so it’s important to communicate clearly to help employees understand the difference. If an organization has privacy champions, works councils, or other employee representation groups, it is critical to communicate early and often with these groups to help employees understand what controls they can exercise over their data.