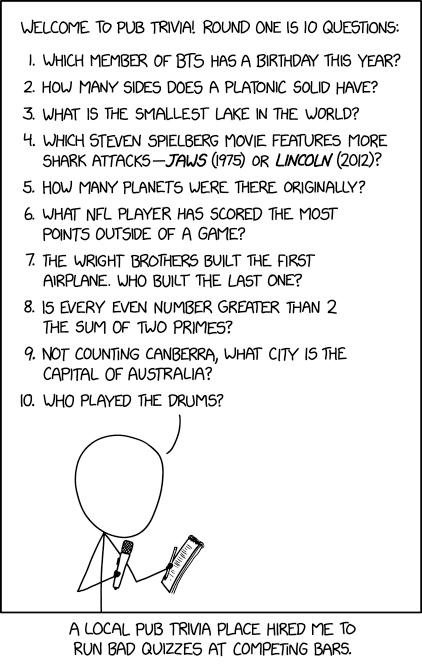

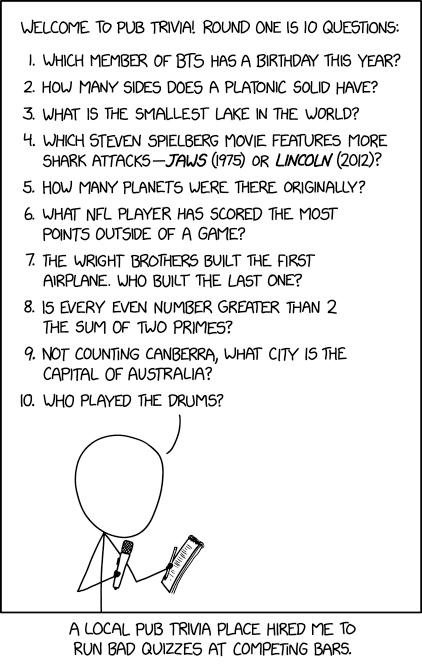

Post Syndicated from xkcd.com original https://xkcd.com/2922/


Post Syndicated from The History Guy: History Deserves to Be Remembered original https://www.youtube.com/watch?v=QI4--VyGijc

Post Syndicated from Michelle Chen original https://blog.cloudflare.com/meta-llama-3-available-on-cloudflare-workers-ai

Workers AI’s initial launch in beta included support for Llama 2, as it was one of the most requested open source models from the developer community. Since that initial launch, we’ve seen developers build all kinds of innovative applications including knowledge sharing chatbots, creative content generation, and automation for various workflows.

At Cloudflare, we know developers want simplicity and flexibility, with the ability to build with multiple AI models while optimizing for accuracy, performance, and cost, among other factors. Our goal is to make it as easy as possible for developers to use their models of choice without having to worry about the complexities of hosting or deploying models.

As soon as we learned about the development of Llama 3 from our partners at Meta, we knew developers would want to start building with it as quickly as possible. Workers AI’s serverless inference platform makes it extremely easy and cost effective to start using the latest large language models (LLMs). Meta’s commitment to developing and growing an open AI ecosystem makes it possible for customers of all sizes to use AI at scale in production. All it takes is a few lines of code to run inference with Llama 3:

    export interface Env {
      // If you set another name in wrangler.toml as the value for 'binding',
      // replace "AI" with the variable name you defined.
      AI: any;
    }

    export default {
      async fetch(request: Request, env: Env) {
        const response = await env.AI.run('@cf/meta/llama-3-8b-instruct', {
          messages: [
            {role: "user", content: "What is the origin of the phrase Hello, World?"}
          ]
        });

        return new Response(JSON.stringify(response));
      },
    };

Llama 3 offers leading performance on a wide range of industry benchmarks. You can learn more about the architecture and improvements on Meta’s blog post. Cloudflare Workers AI supports Llama 3 8B, including the instruction fine-tuned model.

Meta’s testing shows that Llama 3 is the most advanced open LLM today on evaluation benchmarks such as MMLU, GPQA, HumanEval, GSM-8K, and MATH. Llama 3 was trained on a larger corpus of 15T tokens, giving the model a better grasp of language intricacies. Its context window is double that of Llama 2, which lets the model better understand lengthy passages with rich contextual data. Although the model supports a context window of 8k, we currently only support 2.8k but are looking to support 8k context windows through quantized models soon. The new model also introduces an efficient tiktoken-based tokenizer with a vocabulary of 128k tokens, encoding more characters per token and achieving better performance on English and multilingual benchmarks. This means that there are 4 times as many parameters in the embedding and output layers, making the model larger than the previous Llama 2 generation of models.
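The "4 times as many parameters" figure can be checked with quick arithmetic. The sketch below relies on assumptions that are not stated in this post: a hidden size of 4,096 for both Llama 2 7B and Llama 3 8B, vocabularies of 32,000 and 128,256 tokens respectively, and separate (untied) embedding and output matrices.

```typescript
// Assumed model shapes (from the published model configs, not from this post).
const HIDDEN_SIZE = 4096;    // hidden dimension of Llama 2 7B and Llama 3 8B
const LLAMA2_VOCAB = 32000;  // Llama 2 tokenizer vocabulary size
const LLAMA3_VOCAB = 128256; // Llama 3 tokenizer vocabulary size

// Parameters in the input embedding plus the output projection,
// assuming the two matrices are not tied (each is vocab x hidden).
function vocabParams(vocabSize: number, hiddenSize: number): number {
  return 2 * vocabSize * hiddenSize;
}

const llama2Params = vocabParams(LLAMA2_VOCAB, HIDDEN_SIZE);
const llama3Params = vocabParams(LLAMA3_VOCAB, HIDDEN_SIZE);

console.log(llama2Params); // 262144000
console.log(llama3Params); // 1050673152
console.log((llama3Params / llama2Params).toFixed(2)); // 4.01
```

Under these assumptions the vocabulary-dependent layers grow from roughly 0.26B to roughly 1.05B parameters, which accounts for a large share of the size difference between the 7B and 8B models.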

Under the hood, Llama 3 uses grouped-query attention (GQA), which improves inference efficiency for longer sequences and also renders their 8B model architecturally equivalent to Mistral-7B. For tokenization, it uses byte-level byte-pair encoding (BPE), similar to OpenAI’s GPT tokenizers. This allows tokens to represent any arbitrary byte sequence, even those without a valid UTF-8 encoding. This makes the end-to-end model much more flexible in its representation of language, and leads to improved performance.
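To illustrate the byte-level starting point only, here is a minimal sketch. It is not Llama 3's tokenizer: it performs no merges and has no learned vocabulary. The function name `toByteIds` is made up for this example, and the global `TextEncoder` is assumed to be available (recent Node.js or a browser).

```typescript
// Byte-level tokenizers start from UTF-8 bytes, so the base alphabet is
// just the 256 possible byte values; BPE merges are learned on top of that.
function toByteIds(text: string): number[] {
  return Array.from(new TextEncoder().encode(text));
}

// "é" is encoded as two bytes (195, 169); ASCII letters are one byte each.
console.log(toByteIds("héllo")); // [104, 195, 169, 108, 108, 111]
```

Because every input reduces to values between 0 and 255, no string can fall outside the base vocabulary, which is the flexibility the paragraph above describes.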

Along with the base Llama 3 models, Meta has released a suite of offerings with tools such as Llama Guard 2, Code Shield, and CyberSec Eval 2, which we are hoping to release on our Workers AI platform shortly.

Meta Llama 3 8B is available in the Workers AI Model Catalog today! Check out the documentation here and as always if you want to share your experiences or learn more, join us in the Developer Discord.

Post Syndicated from Stacy Conant original https://aws.amazon.com/blogs/messaging-and-targeting/serverless-iot-email-capture-attachment-processing-and-distribution/

Many customers need to automate email notifications to a broad and diverse set of email recipients, sometimes from a sensor network with a variety of monitoring capabilities. Many sensor monitoring software products include an SMTP client to achieve this goal. However, managing email server infrastructure requires specialty expertise and operating an email server comes with additional cost and inherent risk of breach, spam, and storage management. Organizations also need to manage distribution of attachments, which could be large and potentially contain exploits or viruses. For IoT use cases, diagnostic data relevance quickly expires, necessitating retention policies to regularly delete content.

This solution uses the Amazon Simple Email Service (SES) SMTP interface to receive SMTP client messages, then processes each message to replace its attachment with a pre-signed URL in the email delivered to the intended recipients. Attachments are stored separately in an Amazon Simple Storage Service (S3) bucket with a lifecycle policy. This reduces the storage requirements of the recipient email servers that receive the notification emails. Additionally, this solution uses built-in anti-spam and security scanning capabilities to deal with spam and potentially malicious attachments, while also providing a mechanism to revoke pre-signed attachment links should the emails be distributed to unintended recipients.

For this solution you will need an email account where you can receive emails. You’ll also need a (sub)domain for which you control the mail exchanger (MX) record. You can obtain your (sub)domain either from Amazon Route53 or another domain hosting provider.

You’ll need to follow the instructions to Verify an email address for all identities that you use as “From”, “Source”, “Sender”, or “Return-Path” addresses. You’ll also need to follow these instructions for any identities you wish to send emails to during initial testing while your SES account is in the “Sandbox” (see the next section, “Moving out of the SES Sandbox”).

Amazon SES accounts are “in the Sandbox” by default, limiting email sending only to verified identities. AWS does this to prevent fraud and abuse as well as protecting your reputation as an email sender. When your account leaves the Sandbox, SES can send email to any recipient, regardless of whether the recipient’s address or domain is verified by SES. However, you still have to verify all identities that you use as “From”, “Source”, “Sender”, or “Return-Path” addresses.

Follow the Moving out of the SES Sandbox instructions in the SES Developer Guide. Approval is usually within 24 hours.

Follow the workshop lab instructions to set up email sending from your SMTP client using the SES SMTP interface. Once you’ve completed this step, your SMTP client can open authenticated sessions with the SES SMTP interface and send emails. The workshop will guide you through the following steps:

In order to replace email attachments with a pre-signed URL and other application logic, you’ll need to set up SES to receive emails on a domain or subdomain you control.

The solution is implemented using AWS CDK with Python. First clone the solution repository to your local machine or Cloud9 development environment. Then deploy the solution by entering the following commands into your terminal:

Note:

The RecipientEmail CDK context parameter in the cdk deploy command above can be any email address in the domain you verified as part of the Verify the domain step. In other words, if the verified domain is acme-corp.com, then any address at acme-corp.com can be used.

The ConfigurationSetName CDK context value can be obtained by navigating to Identities in the Amazon SES console, selecting the verified domain (same as above), switching to the “Configuration set” tab, and selecting the name of the “Default configuration set”.

After deploying the solution, navigate to Amazon SES Email receiving in the AWS console, edit the rule set, and set it to Active.

Create a small file and generate a base64 encoding so that you can attach it to an SMTP message:

Install openssl (which includes an SMTP client capability) using the following command:

Now run the SMTP client (openssl is used for the proof of concept, be sure to complete the steps in the workshop lab instructions first):

and feed in the commands (replacing the brackets [] and everything between them) to send the SMTP message with the attachment you created.

Note: For the base64 SMTP username and password above, use the values obtained in Set up the SES SMTP interface, step 1. For example, if the username is AKZB3LJAF5TQQRRPQZO1, you can obtain the base64-encoded value using the following command:

This produces the base64-encoded value QUtaQjNMSkFGNVRRUVJSUFFaTzE=. Repeat the same process for the SMTP username and password values in the example above.
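The same encoding can be reproduced in TypeScript as a quick cross-check. This is a sketch, not part of the original solution: it assumes a runtime with the global `btoa` function (recent Node.js or a browser) and ASCII-only credentials. The helper name `encodeSmtpCredential` is made up for this example, and the username is the example value from the note above.

```typescript
// Base64-encode an SMTP credential before sending it during authentication.
// btoa only accepts characters in the Latin-1 range, so this assumes the
// credential is plain ASCII.
function encodeSmtpCredential(value: string): string {
  return btoa(value);
}

console.log(encodeSmtpCredential("AKZB3LJAF5TQQRRPQZO1"));
// QUtaQjNMSkFGNVRRUVJSUFFaTzE=
```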

The openssl command should result in successful SMTP authentication and send. You should receive an email that looks like this:

To clean up, start by navigating to Amazon SES Email receiving in the AWS console and setting the rule set to Inactive.

Once completed, delete the stack:

In Amazon SES Console, select Manage existing SMTP credentials, select the username for which credentials were created in Set up the SES SMTP interface above, navigate to the Security credentials tab and in the Access keys section, select Action -> Delete to delete AWS SES access credentials.

If you are not receiving the email or email is not being sent correctly there are a number of common causes of these errors:

SenderEmail=<verified sender email> email address used in cdk deploy.

Post Syndicated from The History Guy: History Deserves to Be Remembered original https://www.youtube.com/watch?v=aNqIa1QOQKE

Post Syndicated from Atulsing Patil original https://aws.amazon.com/blogs/security/2023-iso-27001-certificate-available-in-spanish-and-french-and-2023-iso-22301-certificate-available-in-spanish/

Amazon Web Services (AWS) is pleased to announce that translated versions of our 2023 ISO 27001 and 2023 ISO 22301 certifications are now available:

Translated certificates are available to customers through AWS Artifact.

These translated certificates will help drive greater engagement and alignment with customer and regulatory requirements across France, Latin America, and Spain.

We continue to listen to our customers, regulators, and stakeholders to understand their needs regarding audit, assurance, certification, and attestation programs at AWS. If you have questions or feedback about ISO compliance, reach out to your AWS account team.

We continue to listen to our customers, regulators, and stakeholders to better understand their needs regarding audit, assurance, certification, and attestation programs at Amazon Web Services (AWS). The 2023 ISO 27001 certificate is now available in Spanish and French. The 2023 ISO 22301 certificate is also now available in Spanish. These translated certificates will help strengthen our engagement and alignment with customer and regulatory requirements in France, Latin America, and Spain.

The translated certificates are made available to customers through AWS Artifact.

If you have feedback about this post, submit it in the Comments section below.

Want more content, news, and feature announcements about AWS Security? Follow us on Twitter.

We continue to listen to our customers, regulators, and stakeholders to understand their needs regarding audit, assurance, certification, and attestation programs at Amazon Web Services (AWS). The 2023 ISO 27001 certificate is now available in Spanish and French. In addition, the 2023 ISO 22301 certificate is now available in Spanish. These translated certificates will help drive greater engagement and alignment with regulatory and customer requirements in France, Latin America, and Spain.

The translated certificates are available to customers in AWS Artifact.

If you have feedback about this post, submit it in the Comments section below.

Want more AWS Security news? Follow us on Twitter.

Post Syndicated from Atul Khare original https://aws.amazon.com/blogs/big-data/how-salesforce-optimized-their-detection-and-response-platform-using-aws-managed-services/

This is a guest blog post co-authored with Atul Khare and Bhupender Panwar from Salesforce.

Headquartered in San Francisco, Salesforce, Inc. is a cloud-based customer relationship management (CRM) software company building artificial intelligence (AI)-powered business applications that allow businesses to connect with their customers in new and personalized ways.

The Salesforce Trust Intelligence Platform (TIP) log platform team is responsible for data pipeline and data lake infrastructure, providing log ingestion, normalization, persistence, search, and detection capability to ensure Salesforce is safe from threat actors. It runs miscellaneous services to facilitate investigation, mitigation, and containment for security operations. The TIP team is critical to securing Salesforce’s infrastructure, detecting malicious threat activities, and providing timely responses to security events. This is achieved by collecting and inspecting petabytes of security logs across dozens of organizations, some with thousands of accounts.

In this post, we discuss how the Salesforce TIP team optimized their architecture using Amazon Web Services (AWS) managed services to achieve better scalability, cost, and operational efficiency.

The main key performance indicator (KPI) for the TIP platform is its capability to ingest a high volume of security logs from a variety of Salesforce internal systems in real time and process them with high velocity. The platform ingests more than 1 PB of data per day, more than 10 million events per second, and more than 200 different log types. The platform ingests log files in JSON, text, and Common Event Format (CEF) formats.

The message bus in TIP’s existing architecture mainly uses Apache Kafka for ingesting different log types coming from the upstream systems. Kafka had a single topic for all the log types before they were consumed by different downstream applications including Splunk, Streaming Search, and Log Normalizer. The Normalized Parquet Logs are stored in an Amazon Simple Storage Service (Amazon S3) data lake and cataloged into Hive Metastore (HMS) on an Amazon Relational Database Service (Amazon RDS) instance based on S3 event notifications. The data lake consumers then use Apache Presto running on Amazon EMR cluster to perform one-time queries. Other teams including the Data Science and Machine Learning teams use the platform to detect, analyze, and control security threats.

Some of the main challenges that TIP’s existing architecture was facing include:

All these challenges motivated the TIP team to embark on a journey to create a more optimized platform that’s easier to scale with less operational overhead and a lower cost to serve (CTS).

The Salesforce TIP log platform engineering team, in collaboration with AWS, started building the new architecture to replace the Kafka-based message bus solution with the fully managed AWS messaging and notification solutions Amazon Simple Queue Service (Amazon SQS) and Amazon Simple Notification Service (Amazon SNS). In the new design, the upstream systems send their logs to a central Amazon S3 storage location, which invokes a process to partition the logs and store them in an S3 data lake. Consumer applications such as Splunk get the messages delivered to their system using Amazon SQS. Similarly, Amazon SQS events for the partitioned log data start a log normalization process that delivers the normalized log data to open source Delta Lake tables on an S3 data lake. One of the major changes in the new architecture is the use of an AWS Glue Data Catalog to replace the previous Hive Metastore. The one-time analysis applications use Apache Trino on an Amazon EMR cluster to query the Delta Tables cataloged in AWS Glue. Other consumer applications also read the data from S3 data lake files stored in Delta Table format. More details on some of the important processes are as follows:

The log partitioner service ingests logs from the central S3 store, driven by Amazon SNS and Amazon SQS notifications, and writes them back to S3 partitioned by log type. Downstream consumers pick up the partitioned output through their own Amazon SNS and Amazon SQS subscriptions. This is the bronze layer of the TIP data lake.
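As a rough sketch of the partitioning step (not Salesforce's implementation), the code below unwraps an S3 event notification that reached an SQS queue through SNS and plans a target key partitioned by log type. Everything named here is an assumption for illustration: the function and interface names, the `log_type=` prefix, the convention that the first path segment of the source key is the log type, and that SNS raw message delivery is disabled, so the S3 event arrives as a JSON string in the SNS `Message` field.

```typescript
// Only the parts of an S3 event notification that this sketch reads.
interface S3EventRecord {
  s3: { bucket: { name: string }; object: { key: string } };
}
interface S3Event {
  Records: S3EventRecord[];
}
// SNS wraps the S3 event as a JSON string in its Message field.
interface SnsEnvelope {
  Message: string;
}
interface PartitionTarget {
  sourceBucket: string;
  sourceKey: string;
  targetKey: string;
}

// Hypothetical convention: the first path segment of the key is the log type.
function logTypeOf(key: string): string {
  const slash = key.indexOf("/");
  return slash > 0 ? key.slice(0, slash) : "unknown";
}

// Turn one SQS message body into a list of planned copy operations.
function planPartitions(sqsBody: string): PartitionTarget[] {
  const envelope = JSON.parse(sqsBody) as SnsEnvelope;
  const event = JSON.parse(envelope.Message) as S3Event;
  return event.Records.map((record) => {
    // S3 URL-encodes object keys in notifications, with spaces sent as "+".
    const key = decodeURIComponent(record.s3.object.key.replace(/\+/g, " "));
    const fileName = key.split("/").pop() ?? key;
    return {
      sourceBucket: record.s3.bucket.name,
      sourceKey: key,
      targetKey: `log_type=${logTypeOf(key)}/${fileName}`,
    };
  });
}

// Example with a made-up bucket and key.
const sampleBody = JSON.stringify({
  Message: JSON.stringify({
    Records: [
      { s3: { bucket: { name: "raw-logs" }, object: { key: "cloudtrail/2024/04/part-0001.json" } } },
    ],
  }),
});
console.log(planPartitions(sampleBody).map((t) => t.targetKey));
// ["log_type=cloudtrail/part-0001.json"]
```

Keeping the planning step a pure function, separate from the actual S3 copy, makes the partitioning rule easy to test without any AWS calls.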

The log normalizer is one of the downstream consumers of the log partitioner (the Splunk Ingestor is another). It ingests data from the partitioned output in S3, using Amazon SNS and Amazon SQS notifications, and enriches it using Salesforce custom parsers and tags. The enriched data then lands in the data lake on S3. This is the silver layer of the TIP data lake.

These consumers consume from the silver layer of the TIP data lake and run analytics for security detection use cases. The earlier Kafka interface is now converted to delta streams ingestion, which concludes the total removal of the Kafka bus from the TIP data pipeline.

The main advantages realized by the Salesforce TIP team based on this new architecture using Amazon S3, Amazon SNS, and Amazon SQS include:

In this blog post, we discussed how the Salesforce Trust Intelligence Platform (TIP) optimized their data pipeline by replacing the Kafka-based message bus solution with fully managed AWS messaging and notification solutions using Amazon SQS and Amazon SNS. Salesforce and AWS teams worked together to make sure this new platform seamlessly scales to ingest more than 1 PB of data per day, more than 10 million events per second, and more than 200 different log types. Reach out to your AWS account team if you have similar use cases and you need help architecting your platform to achieve operational efficiencies and scale.

Atul Khare is a Director of Engineering at Salesforce Security, where he spearheads the Security Log Platform and Data Lakehouse initiatives. He supports diverse security customers by building a robust big data ETL pipeline that is elastic, resilient, and easy to use, providing uniform and consistent security datasets for threat detection and response operations, AI, forensic analysis, analytics, and compliance needs across all Salesforce clouds. Beyond his professional endeavors, Atul enjoys performing music with his band to raise funds for local charities.


Bhupender Panwar is a Big Data Architect at Salesforce and a seasoned advocate for big data and cloud computing. His background encompasses the development of data-intensive applications and pipelines, solving intricate architectural and scalability challenges, and extracting valuable insights from extensive datasets within the technology industry. Outside of his big data work, Bhupender loves to hike, bike, and travel, and is a great foodie.


Avijit Goswami is a Principal Solutions Architect at AWS specialized in data and analytics. He supports AWS strategic customers in building high-performing, secure, and scalable data lake solutions on AWS using AWS managed services and open-source solutions. Outside of his work, Avijit likes to travel, hike in the San Francisco Bay Area trails, watch sports, and listen to music.


Vikas Panghal is the Principal Product Manager leading the product management team for Amazon SNS and Amazon SQS. He has deep expertise in event-driven and messaging applications and brings a wealth of knowledge and experience to his role, shaping the future of messaging services. He is passionate about helping customers build highly scalable, fault-tolerant, and loosely coupled systems. Outside of work, he enjoys spending time with his family outdoors, playing chess, and running.


Post Syndicated from Cliff Robinson original https://www.servethehome.com/intel-foundry-high-na-euv-milestone-readies-for-14a-production-asml/

Intel Foundry hits a High NA EUV milestone as it has a new ASML machine installed in Oregon and is on a path to 14A process in 2025

The post Intel Foundry High NA EUV Milestone Readies for 14A Production appeared first on ServeTheHome.

Post Syndicated from Talks at Google original https://www.youtube.com/watch?v=GbYBYDjql_g

Post Syndicated from Joaquin Manuel Rinaudo original https://aws.amazon.com/blogs/security/integrate-kubernetes-policy-as-code-solutions-into-security-hub/

Using Kubernetes policy-as-code (PaC) solutions, administrators and security professionals can enforce organization policies on Kubernetes resources. Several PaC solutions are publicly available for Kubernetes, such as Gatekeeper, Polaris, and Kyverno.

PaC solutions usually implement two features:

This post presents a solution to send policy violations from PaC solutions using Kubernetes policy report format (for example, using Kyverno) or from Gatekeeper’s constraints status directly to AWS Security Hub. With this solution, you can visualize Kubernetes security misconfigurations across your Amazon Elastic Kubernetes Service (Amazon EKS) clusters and your organizations in AWS Organizations. This can also help you implement standard security use cases—such as unified security reporting, escalation through a ticketing system, or automated remediation—on top of Security Hub to help improve your overall Kubernetes security posture and reduce manual efforts.

The solution uses the approach described in A Container-Free Way to Configure Kubernetes Using AWS Lambda to deploy an AWS Lambda function that periodically synchronizes the security status of a Kubernetes cluster from a Kubernetes or Gatekeeper policy report with Security Hub. Figure 1 shows the architecture diagram for the solution.

Figure 1: Diagram of solution
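To make the synchronization step more concrete, here is a minimal sketch of how failing entries from a Kubernetes policy report could be mapped to findings in the AWS Security Finding Format (ASFF). It is an illustration under stated assumptions, not the code from the solution's repository: the interfaces cover only a subset of the PolicyReport result and ASFF fields, and the function name, finding `Id` scheme, `GeneratorId` prefix, default severity, account ID, cluster name, and timestamp are all made up for this example.

```typescript
type Severity = "critical" | "high" | "medium" | "low" | "info";

// Subset of a Kubernetes PolicyReport result entry.
interface PolicyResult {
  policy: string;
  rule?: string;
  result: "pass" | "fail" | "warn" | "error" | "skip";
  severity?: Severity;
  message?: string;
  resources?: { kind: string; name: string; namespace?: string }[];
}

// Subset of ASFF fields needed to import a finding.
interface AsffFinding {
  SchemaVersion: "2018-10-08";
  Id: string;
  ProductArn: string;
  GeneratorId: string;
  AwsAccountId: string;
  Types: string[];
  CreatedAt: string;
  UpdatedAt: string;
  Severity: { Label: "INFORMATIONAL" | "LOW" | "MEDIUM" | "HIGH" | "CRITICAL" };
  Title: string;
  Description: string;
  Resources: { Type: string; Id: string; Region: string }[];
}

interface ClusterContext {
  accountId: string;
  region: string;
  clusterName: string;
  now: string; // ISO 8601 timestamp
}

const SEVERITY_LABELS: Record<Severity, AsffFinding["Severity"]["Label"]> = {
  critical: "CRITICAL",
  high: "HIGH",
  medium: "MEDIUM",
  low: "LOW",
  info: "INFORMATIONAL",
};

// Emit one finding per (failing result, affected resource) pair.
function toFindings(results: PolicyResult[], ctx: ClusterContext): AsffFinding[] {
  const findings: AsffFinding[] = [];
  for (const r of results) {
    if (r.result !== "fail") continue; // only violations become findings
    for (const res of r.resources ?? []) {
      const resourceId = `${ctx.clusterName}/${res.namespace ?? "-"}/${res.kind}/${res.name}`;
      findings.push({
        SchemaVersion: "2018-10-08",
        Id: `${resourceId}/${r.policy}/${r.rule ?? "-"}`, // hypothetical Id scheme
        ProductArn: `arn:aws:securityhub:${ctx.region}:${ctx.accountId}:product/${ctx.accountId}/default`,
        GeneratorId: `policy-report/${r.policy}`,
        AwsAccountId: ctx.accountId,
        Types: ["Software and Configuration Checks"],
        CreatedAt: ctx.now,
        UpdatedAt: ctx.now,
        Severity: { Label: SEVERITY_LABELS[r.severity ?? "medium"] },
        Title: `${r.policy} violated by ${res.kind} ${res.name}`,
        Description: r.message ?? "Policy violation reported without a message.",
        Resources: [{ Type: "Other", Id: resourceId, Region: ctx.region }],
      });
    }
  }
  return findings;
}

// Example input with made-up policy and resource names.
const sampleResults: PolicyResult[] = [
  {
    policy: "require-labels",
    rule: "check-team",
    result: "fail",
    severity: "high",
    message: "label 'team' is required",
    resources: [{ kind: "Pod", name: "demo", namespace: "default" }],
  },
  { policy: "require-labels", result: "pass" },
];
const sampleFindings = toFindings(sampleResults, {
  accountId: "111122223333",
  region: "us-east-1",
  clusterName: "demo-cluster",
  now: "2024-04-18T00:00:00Z",
});
console.log(sampleFindings.map((f) => f.Severity.Label)); // ["HIGH"]
```

A list produced this way could then be sent to Security Hub in batches. Deriving the `Id` from the cluster, resource, and policy keeps repeated synchronization runs updating the same finding instead of creating duplicates.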

This solution works using the following resources and configurations:

Optional: EKS cluster administrators can raise the limit of reported policy violations by using the --constraint-violations-limit flag in their Gatekeeper audit operation.

You can download the solution from this GitHub repository.

In the walkthrough, I show you how to deploy a Kubernetes policy-as-code solution and forward its findings to Security Hub. We’ll configure Kyverno and a Kubernetes demo environment in an existing EKS cluster, then send the resulting findings to Security Hub.

The code provided includes an example constraint and noncompliant resource to test against.

An EKS cluster is required to set up this solution within your AWS environments. The cluster should be configured with either aws-auth ConfigMap or access entries. Optional: You can use eksctl to create a cluster.

The following resources need to be installed on your computer:

The first step is to install Kyverno on an existing Kubernetes cluster. Then deploy examples of a Kyverno policy and noncompliant resources.

You should see output similar to the following:

The next step is to clone and deploy the solution that integrates with Security Hub.

You should see the following output:

You need to create the Kubernetes Group read-only-group to allow read-only permissions to the IAM role of the Lambda function. If you aren’t using access entries, you will also need to modify the aws-auth ConfigMap of the Kubernetes clusters.

The next step is to verify that the Lambda integration to Security Hub is running.

Figure 2: Sample Kyverno findings in Security Hub

The final step is to clean up the resources that you created for this walkthrough.

In this post, you learned how to integrate Kubernetes policy report findings with Security Hub and tested this setup by using the Kyverno policy engine. If you want to test the integration of this solution with Gatekeeper, you can find alternative commands for step 1 of this post in the GitHub repository’s README file.

Using this integration, you can gain visibility into your Kubernetes security posture across EKS clusters and join it with a centralized view, together with other security findings such as those from AWS Config, Amazon Inspector, and more across your organization. You can also try this solution with other tools, such as kube-bench or Gatekeeper. You can extend this setup to notify security teams of critical misconfigurations or implement automated remediation actions by using AWS Security Hub.

For more information on how to use PaC solutions to secure Kubernetes workloads in the AWS cloud, see Amazon Elastic Kubernetes Service (Amazon EKS) workshop, Amazon EKS best practices, Using Gatekeeper as a drop-in Pod Security Policy replacement in Amazon EKS and Policy-based countermeasures for Kubernetes.

If you have feedback about this post, submit comments in the Comments section below. If you have questions about this post, contact AWS Support.

Post Syndicated from Matt Granger original https://www.youtube.com/watch?v=k6amxpqH2TQ

Post Syndicated from jzb original https://lwn.net/Articles/970072/

Gentoo Council member Michał Górny posted an RFC to the gentoo-dev mailing list in late February about banning “‘AI’-backed (LLM/GPT/whatever) contributions” to the Gentoo Linux project. Górny wrote that the spread of the “AI bubble” indicated a need for Gentoo to formally take a stand on AI tools. After a lengthy discussion, the Gentoo Council voted unanimously this week to adopt his proposal and ban contributions generated with AI/ML tools.

Post Syndicated from BeardedTinker original https://www.youtube.com/watch?v=rNQGsgTUYh0

Post Syndicated from The History Guy: History Deserves to Be Remembered original https://www.youtube.com/watch?v=FoTp0K2H3RM

Post Syndicated from Sam Rhea original https://blog.cloudflare.com/cloudflare-sse-gartner-magic-quadrant-2024

Gartner has once again named Cloudflare to the Gartner® Magic Quadrant™ for Security Service Edge (SSE) report¹. We are excited to share that Cloudflare is one of only ten vendors recognized in this report. For the second year in a row, we are recognized for our ability to execute and the completeness of our vision. You can read more about our position in the report here.

Last year, we became the only new vendor named in the 2023 Gartner® Magic Quadrant™ for SSE. We did so in the shortest amount of time as measured by the date since our first product launched. We also made a commitment to our customers at that time that we would only build faster. We are happy to report back on the impact that has had on customers and the Gartner recognition of their feedback.

Cloudflare can bring capabilities to market quicker, and with greater cost efficiency, than competitors thanks to the investments we have made in our global network over the last 14 years. We believe we were able to become the only new vendor in 2023 by combining existing advantages like our robust, multi-use global proxy, our lightning-fast DNS resolver, our serverless compute platform, and our ability to reliably route and accelerate traffic around the world.

We believe we advanced further in the SSE market over the last year by building on the strength of that network as larger customers adopted Cloudflare One. We took the ability of our Web Application Firewall (WAF) to scan for attacks without compromising speed and applied that to our now comprehensive Data Loss Prevention (DLP) approach. We repurposed the tools that we use to measure our own network and delivered an increasingly mature Digital Experience Monitoring (DEX) suite for administrators. And we extended our Cloud Access Security Broker (CASB) toolset to scan more applications for new types of data.

We are grateful to the customers who have trusted us on this journey so far, and we are especially proud of our customer reviews in the Gartner® Peer Insights™ panel as those customers report back on their experience with Cloudflare One. The feedback has been so consistently positive that Gartner named Cloudflare a Customers’ Choice² for 2024. We are going to make the same commitment to you today that we made in 2023: Cloudflare will only build faster as we continue to build out the industry’s best SSE platform.

A Security Service Edge (SSE) “secures access to the web, cloud services and private applications. Capabilities include access control, threat protection, data security, security monitoring, and acceptable-use control enforced by network-based and API-based integration. SSE is primarily delivered as a cloud-based service, and may include on-premises or agent-based components.”³

The SSE solutions in the market began to take shape as companies dealt with users, devices, and data leaving their security perimeters at scale. In previous generations, teams could keep their organization safe by hiding from the rest of the world behind a figurative castle-and-moat. The firewalls that protected their devices and data sat inside the physical walls of their space. The applications their users needed to reach sat on the same intranet. When users occasionally left the office they dealt with the hassle of backhauling their traffic through a legacy virtual private network (VPN) client.

This concept started to fall apart when applications left the building. SaaS applications offered a cheaper, easier alternative to self-hosting your resources. The cost and time savings drove IT departments to migrate and security teams had to play catch up as all of their most sensitive data also migrated.

At the same time, users began working away from the office more often. The rarely used VPN infrastructure inside an office suddenly struggled to stay afloat with the new demands from more users connecting to more of the Internet.

As a result, the band-aid boxes in an organization failed — in some cases slowly and in other situations all at once. SSE vendors offer a cloud-based answer. SSE providers operate their own security services from their own data centers or on a public cloud platform. Like the SaaS applications that drove the first wave of migration, these SSE services are maintained by the vendor and scale in a way that offers budget savings. The end user experience improves by avoiding the backhaul and security administrators can more easily build smarter, safer policies to defend their team.

The SSE space covers a broad category. If you ask five security teams what an SSE or Zero Trust solution is, you’ll probably get six answers. In general, SSE provides a helpful framing that gives teams guard rails as they try to adopt a Zero Trust architecture. The concept breaks down into a few typical buckets:

The SSE space is a component of the larger Secure Access Service Edge (SASE) market. You can think of the SSE capabilities as the security half of SASE while the other half consists of the networking technologies that connect users, offices, applications, and data centers. Some vendors only focus on the SSE side and rely on partners to connect customers to their security solutions. Other companies just provide the networking pieces. While today’s announcement highlights our SSE capabilities, Cloudflare offers both components as a comprehensive, single-vendor SASE provider.

Customers can rely on Cloudflare to solve the entire range of security problems represented by the SSE category. They also can just start with a single component. We know that an entire “digital transformation” can be an overwhelming prospect for any organization. While all the use cases below work better together, we make it simple for teams to start by just solving one problem at a time.

Most organizations begin that problem-solving journey by attacking their virtual private network (VPN). In many cases, a legacy VPN operates in a model where anyone on that private network is trusted by default to access anything else. The applications and data sitting on that network become vulnerable to any user who can connect. Augmenting or replacing legacy VPNs is one of the leading Zero Trust use cases we see customers adopting, in part to eliminate pains related to the ongoing series of high-impact VPN vulnerabilities in on-premises firewalls and gateways.

Cloudflare provides teams with the ability to build Zero Trust rules that replace the security model of a traditional VPN with one that evaluates every request and connection for trust signals like identity, device posture, location, and multifactor authentication method. Through Zero Trust Network Access (ZTNA), administrators can make applications available to employees and third-party contractors through a fully clientless option that makes traditional tools feel just like SaaS applications. Teams that need more of a private network can still build one on Cloudflare that supports arbitrary TCP, UDP, and ICMP traffic, including bidirectional traffic, while still enforcing Zero Trust rules.
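To make the idea of evaluating every request for trust signals more concrete, here is a minimal sketch of a deny-by-default check over the four signals mentioned above (identity, device posture, location, and MFA method). The types, field names, and the `evaluateRequest` function are hypothetical and exist only for illustration; they are not Cloudflare's policy schema or API.

```typescript
// Hypothetical types for illustration only; not Cloudflare's policy schema.
type MfaMethod = "none" | "totp" | "hardware_key";

interface AccessRequest {
  userEmail: string;
  devicePostureOk: boolean; // e.g. disk encryption and OS version checks passed
  country: string;          // ISO 3166-1 alpha-2 code
  mfaMethod: MfaMethod;
}

interface AccessPolicy {
  allowedEmailDomain: string;
  requireHealthyDevice: boolean;
  allowedCountries: string[];
  acceptedMfa: MfaMethod[];
}

interface Decision {
  allow: boolean;
  reason: string;
}

// Check each signal in turn and deny on the first one that fails.
// Nothing is trusted just because the request reached the application.
function evaluateRequest(req: AccessRequest, policy: AccessPolicy): Decision {
  const at = req.userEmail.lastIndexOf("@");
  const domain = at >= 0 ? req.userEmail.slice(at + 1).toLowerCase() : "";
  if (domain !== policy.allowedEmailDomain) {
    return { allow: false, reason: "identity" };
  }
  if (policy.requireHealthyDevice && !req.devicePostureOk) {
    return { allow: false, reason: "device posture" };
  }
  if (policy.allowedCountries.indexOf(req.country) === -1) {
    return { allow: false, reason: "location" };
  }
  if (policy.acceptedMfa.indexOf(req.mfaMethod) === -1) {
    return { allow: false, reason: "mfa method" };
  }
  return { allow: true, reason: "all signals passed" };
}

// Example values are made up.
const examplePolicy: AccessPolicy = {
  allowedEmailDomain: "example.com",
  requireHealthyDevice: true,
  allowedCountries: ["US", "PT"],
  acceptedMfa: ["hardware_key"],
};

const contractorRequest: AccessRequest = {
  userEmail: "sam@example.com",
  devicePostureOk: true,
  country: "PT",
  mfaMethod: "totp",
};

// Denied: TOTP is not in acceptedMfa for this policy.
const contractorDecision = evaluateRequest(contractorRequest, examplePolicy);
```

The contrast with a legacy VPN is that the decision is made per request from several signals, rather than once at the point of joining the network.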

Cloudflare One can also apply these rules to the applications that sit outside your infrastructure. You can deploy Cloudflare’s identity proxy to enforce consistent and granular policies that determine how team members log into their SaaS applications, as well.

Cloudflare operates the world’s fastest DNS resolver, helping users connect safely to the Internet whether they are working from a coffee shop or operating inside some of the world’s largest networks.

Beyond just DNS filtering, Cloudflare also provides organizations with a comprehensive Secure Web Gateway (SWG) that inspects the HTTP traffic leaving a device or entire network. Cloudflare filters each request for dangerous destinations or potentially malicious downloads. Besides SSE use cases, Cloudflare operates one of the largest forward proxies in the world for Internet privacy used by Apple iCloud Private Relay, Microsoft Edge Secure Network, and beyond.

You can also mix-and-match how you want to send traffic to Cloudflare. Your team can decide to send all traffic from every mobile device or just plug in your office or data center network to Cloudflare’s network. Each request or DNS query is logged and made available for review in our dashboard or can be exported to a third-party logging solution.

SaaS applications relieve IT teams of the burden to host, maintain, and monitor the tools behind their business. They also create entirely new headaches for corresponding security teams.

Any user in an enterprise now needs to connect to an application on the public Internet to do their work, and some users prefer to use their favorite application rather than the ones vetted and approved by the IT department. This kind of Shadow IT infrastructure can lead to surprise fees, compliance violations, and data loss.

Cloudflare offers comprehensive scanning and filtering to detect when team members are using unapproved tools. With a single click, administrators can block those tools outright or control how those applications can be used. If your marketing team needs to use Google Drive to collaborate with a vendor, you can apply a quick rule that makes sure they can only download files and never upload. Alternatively, allow users to visit an application and read from it while blocking all text input. Cloudflare’s Shadow IT policies offer easy-to-deploy controls over how your organization uses the Internet.

Beyond unsanctioned applications, even approved resources can cause trouble. Your organization might rely on Microsoft OneDrive for day-to-day work, but your compliance policies prohibit your HR department from storing files with employee Social Security numbers in the tool. Cloudflare’s Cloud Access Security Broker (CASB) can routinely scan the SaaS applications your team relies on to detect improper usage, missing controls, or potential misconfiguration.

Enterprise users have consumer expectations about how they connect to the Internet. When they encounter delays or latency, they turn to IT help desks to complain. Those complaints only get louder when help desks lack the proper tools to granularly understand or solve the issues.

Cloudflare One provides teams with a Digital Experience Monitoring toolkit that we built based on the tools we have used for years inside of Cloudflare to monitor our own global network. Administrators can measure global, regional, or individual latency to applications on the Internet. IT teams can open our dashboard to troubleshoot connectivity issues with single users. The same capabilities we use to proxy approximately 20% of the web are now available to teams of any size, so they can help their users.

The most pressing concern we have heard from CIOs and CISOs over the last year is the fear around data protection. Whether data loss is malicious or accidental, the consequences can erode customer trust and create penalties for the business.

We also hear that deploying any sort of effective data security is just plain hard. Customers tell us about expensive point solutions that they purchased intending to implement them quickly and keep data safe, but that ultimately didn’t work or slowed their teams down so much that the tools became shelfware.

We have spent the last year aggressively improving our solution to that problem as the single largest focus area of investment in the Cloudflare One team. Our data security portfolio, including data loss prevention (DLP), can now scan for data leaving your organization, as well as data stored inside your SaaS applications, and prevent loss based on exact data matches that you provide or through fuzzier patterns. Teams can apply optical character recognition (OCR) to find potential loss in images, scan for public cloud keys in a single click, and software companies can rely on predefined ML-based source code detections.
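As a rough illustration of the difference between exact data matches and fuzzier patterns, the sketch below scans a piece of text two ways: first for literal values that an administrator supplies, then for anything shaped like a US Social Security number. The `scanForSensitiveData` function, the `Finding` type, and the regular expression are assumptions made for this example; they do not describe how Cloudflare's DLP engine is implemented.

```typescript
// Hypothetical sketch; not Cloudflare's DLP implementation.
interface Finding {
  kind: "exact" | "pattern";
  match: string;
}

// Pattern-based detection: anything shaped like NNN-NN-NNNN.
// A real detector would also validate the number and weigh surrounding context.
const SSN_PATTERN = /\b\d{3}-\d{2}-\d{4}\b/g;

function scanForSensitiveData(text: string, exactValues: string[]): Finding[] {
  const findings: Finding[] = [];

  // Exact data match: literal values the organization provided.
  for (const value of exactValues) {
    if (value.length > 0 && text.indexOf(value) !== -1) {
      findings.push({ kind: "exact", match: value });
    }
  }

  // Pattern match: String.prototype.match with a global regex returns
  // every match, or null when there are none.
  const patternMatches = text.match(SSN_PATTERN) ?? [];
  for (const m of patternMatches) {
    findings.push({ kind: "pattern", match: m });
  }

  return findings;
}

// Example input is made up.
const exampleFindings = scanForSensitiveData(
  "Employee 123-45-6789 was issued badge ACME-0042.",
  ["ACME-0042"]
);
```

Exact matching produces few false positives but only finds values you already know about, while patterns catch unknown values at the cost of more noise, which is one reason to combine the two.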

Data security will continue to be our largest area of focus in Cloudflare One over the next year. We are excited to continue to deliver an SSE platform that gives administrators comprehensive control without interrupting or slowing down their users.

The scope of an SSE solution captures a wide range of the security problems that plague enterprises. We also know that issues beyond that definition can compromise a team. In addition to offering an industry-leading SSE platform, Cloudflare gives your team a full range of tools to protect your organization, to connect your team, and to secure all of your applications.

IT compromise tends to start with email. The majority of attacks begin with some kind of multi-channel phishing campaign or social engineering attack sent to the largest hole in any organization’s perimeter: their employees’ email inboxes. We believe that you should be protected from that too, even before the layers of our SSE platform kick in to catch malicious links or files from those emails, so Cloudflare One also features best-in-class cloud email security. The capabilities just work with the rest of Cloudflare One to help stop all phishing channels — inbox (cloud email security), social media (SWG), SMS (ZTNA together with hard keys), and cloud collaboration (CASB). For example, you can allow team members to still click on potentially malicious links in an email while forcing those destinations to load in an isolated browser that is transparent to the user.

Most SSE solutions stop there, though, and only solve the security challenge. Team members, devices, offices, and data centers still need to connect in a way that is performant and highly available. Other SSE vendors partner with networking providers to solve that challenge while adding extra hops and latency. Cloudflare customers don’t have to compromise. Cloudflare One offers a complete WAN connectivity solution delivered in the same data centers as our security components. Organizations can rely on a single vendor to solve how they connect and how they do so securely. No extra hops or invoices needed.

We also know that security problems do not distinguish between what happens inside your enterprise and the applications you make available to the rest of the world. You can secure and accelerate the applications that you build to serve your own customers through Cloudflare, as well. Analysts have also recognized Cloudflare’s Web Application and API Protection (WAAP) platform, which protects some of the world’s largest Internet destinations.

Tens of thousands of organizations trust Cloudflare One to secure their teams every day. And they love it. Over 200 enterprises have reviewed Cloudflare’s Zero Trust platform as part of Gartner® Peer Insights™. As mentioned previously, the feedback has been so consistently positive that Gartner named Cloudflare a Customers’ Choice for 2024.

We talk to customers directly about that feedback, and they have helped us understand why CIOs and CISOs choose Cloudflare One. For some teams, we offer a cost-efficient opportunity to consolidate point solutions. Others appreciate that our ease-of-use means that many practitioners have set up our platform before they even talk to our team. We also hear that speed matters for a slick end user experience, and we are 46% faster than Zscaler, 56% faster than Netskope, and 10% faster than Palo Alto Networks.

We kicked off 2024 with a week focused on new security features that teams can begin deploying now. Looking ahead to the rest of the year, you can expect additional investment as we add depth to our Secure Web Gateway product. We also have work underway to make our industry-leading access control features even easier to use. Our largest focus areas will include our data protection platform, digital experience monitoring, and our in-line and at-rest CASB tools. And stay tuned for an overhaul to how we surface analytics and help teams meet compliance needs, too.

Our commitment to our customers in 2024 is the same as it was in 2023. We are going to continue to help your teams solve more security problems so that you can focus on your own mission.

Ready to hold us to that commitment? Cloudflare offers something unique among the leaders in this space — you can start using nearly every feature in Cloudflare One right now at no cost. Teams of up to 50 users can adopt our platform for free, whether for their small team or as part of a larger enterprise proof of concept. We believe that organizations of any size should be able to start their journey to deploy industry-leading security.

***

¹ Gartner, Magic Quadrant for Security Service Edge, By Charlie Winckless, Thomas Lintemuth, Dale Koeppen, April 15, 2024

² Gartner, Voice of the Customer for Zero Trust Network Access, By Peer Contributors, 30 January 2024

³ https://www.gartner.com/en/information-technology/glossary/security-service-edge-sse

GARTNER is a registered trademark and service mark of Gartner, Inc. and/or its affiliates in the U.S. and internationally, MAGIC QUADRANT and PEER INSIGHTS are registered trademarks and The GARTNER PEER INSIGHTS CUSTOMERS’ CHOICE badge is a trademark and service mark of Gartner, Inc. and/or its affiliates and is used herein with permission. All rights reserved.

Gartner® Peer Insights content consists of the opinions of individual end users based on their own experiences, and should not be construed as statements of fact, nor do they represent the views of Gartner or its affiliates. Gartner does not endorse any vendor, product or service depicted in this content nor makes any warranties, expressed or implied, with respect to this content, about its accuracy or completeness, including any warranties of merchantability or fitness for a particular purpose.

Gartner does not endorse any vendor, product or service depicted in its research publications and does not advise technology users to select only those vendors with the highest ratings or other designation. Gartner research publications consist of the opinions of Gartner’s research organization and should not be construed as statements of fact. Gartner disclaims all warranties, expressed or implied, with respect to this research, including any warranties of merchantability or fitness for a particular purpose.

Post Syndicated from corbet original https://lwn.net/Articles/969923/

Kernel developers, like conscientious developers for many projects, will often include checks in the code for conditions that are never expected to occur, but which would indicate a serious problem should that expectation turn out to be incorrect. For years, developers have been encouraged (to put it politely) to avoid using assertions that crash the machine for such conditions unless there is truly no alternative. Increasingly, though, use of the kernel’s WARN_ON() family of macros, which developers were told to use instead, is also being discouraged.

Post Syndicated from digiblur DIY original https://www.youtube.com/watch?v=oqObC1b66uM

Post Syndicated from Jack Fults original https://backblazeprod.wpenginepowered.com/blog/moving-670-network-connections/

We’re constantly upgrading our storage cloud, but we don’t always have ways to tangibly show what multi-exabyte infrastructure looks like. When data center manager Jack Fults shared photos from a recent network switch migration, though, it felt like exactly the kind of thing that makes The Cloud real in a physical, visual sense. We figured it was a good opportunity to dig into some of our more recent upgrades.

If your parents ever tried to enforce restrictions on internet time, and in response, you hardwired a secret 120ft Ethernet cable from the router in your basement through the rafters and up into your room so you could game whenever you wanted, this story is for you.

Moving 670 network connections to new switches in a data center is kind of like that, times 1,000. And that’s exactly what we did in our Sacramento data center recently.

I’m a data center manager here at Backblaze, and I’m in charge of making sure our hardware can meet our production needs, interfacing with the data center ownership, and generally keeping the building running, all in service of delivering easy cloud storage and backup services to our customers. I lead an intrepid team of data center technicians who deserve a ton of kudos for making this project happen as well as our entire Cloud Operations team.

We’re constantly looking for ways to make our infrastructure better, faster, and smarter, and in that effort, we wanted to upgrade to new network switches. The new switches would allow us to consolidate connections and mitigate any potential future failures. We have plenty of redundancy and protocols in place in the event that happens, but it was a risk we knew we’d be wise to get ahead of as we continued to grow our data under management.

In order to make the move, we faced a few challenges:

Racking new switches in cabinets that are already fully populated isn’t ideal, but it is totally doable with a little planning (okay, a lot of planning). It’s a good thing I love nothing more than a good Google sheet, and believe me we tracked everything down to the length of the cables (3,272ft to be exact, but more on that later). Here’s a breakdown of our process:

Each 1U Dell had 48 connections, which handled two Backblaze Vaults. We were able to upgrade to 2U switches with the new Aristas, which each had 96 connections, fitting four Backblaze Vaults plus 16 core servers. So, every time we moved to the next four vaults, we’d go through this process until we were through the network switch migration for 27 Vaults plus core servers, comprising the 670 network connections.
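For anyone who likes to check the math, here is a small back-of-the-envelope sketch of the port counts. The switch capacities, the Vault counts per switch, the 16 core servers, the 27 Vaults, and the 670 connections all come from the numbers above. The figure of 20 connections per Vault (one per server) is an assumption made for this sketch and isn't stated in this post, and the resulting switch count is only what the arithmetic implies, not a reported number.

```typescript
// Figures taken from the post.
const OLD_SWITCH_PORTS = 48;
const OLD_SWITCH_VAULTS = 2;
const NEW_SWITCH_PORTS = 96;
const NEW_SWITCH_VAULTS = 4;
const CORE_SERVERS_PER_NEW_SWITCH = 16;
const TOTAL_VAULTS = 27;
const TOTAL_CONNECTIONS = 670;

// ASSUMPTION (not stated in the post): each Vault uses 20 connections,
// one per server.
const ASSUMED_CONNECTIONS_PER_VAULT = 20;

// Ports used on a fully loaded switch of each generation.
const oldPortsUsed = OLD_SWITCH_VAULTS * ASSUMED_CONNECTIONS_PER_VAULT;
const newPortsUsed =
  NEW_SWITCH_VAULTS * ASSUMED_CONNECTIONS_PER_VAULT + CORE_SERVERS_PER_NEW_SWITCH;

// Number of new switches implied by four Vaults per switch.
const impliedNewSwitches = Math.ceil(TOTAL_VAULTS / NEW_SWITCH_VAULTS);
const impliedTotalPorts = impliedNewSwitches * NEW_SWITCH_PORTS;

console.log(`Old switch: ${oldPortsUsed} of ${OLD_SWITCH_PORTS} ports used`);
console.log(`New switch: ${newPortsUsed} of ${NEW_SWITCH_PORTS} ports used`);
console.log(
  `${impliedNewSwitches} new switches give ${impliedTotalPorts} ports ` +
    `for ${TOTAL_CONNECTIONS} connections`
);
```

Under that assumption, four Vaults plus 16 core servers fill a 96-port switch exactly, and the implied port total is enough for all 670 connections.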

Using the transfer switch allowed us to decommission the old switch then rack and configure the new switch so that we only lost a second or two of network connectivity as one of the DC techs moved the connection. That was one of the things we had to be very planful about—making sure the Vault would remain available, with the exception of one server that would be down for a split second during the swap. Then, our DC techs would confirm that connectivity was back up before moving on to the next server in the Vault.

We ran into a wrinkle early on in the project. We had two cabinets side by side where the switches are located, so sometimes we’d rack the temporary switch in one and the new Arista switch in the other. Some of the old cables weren’t long enough to reach the new switches. There’s not much else you can do at that point but run new cables, so we decided to replace all of the cables wholesale—3,272ft of new cable went in.

We had to fine-tune our plans even more to balance decommissioning with racking the new switches in order to make room for the new cables, but it also ended up solving another issue we hadn’t even set out to address. It allowed us to eliminate a lot of slack from cables that were too long. Over time, with the amount of cables we had, the slack made it difficult to work in the racks, so we were happy to see that go away.

While we still have some cable management and decommissioning to be done, migrating to the Arista switches was the mission critical piece to mitigate our risk and plan for ongoing improvements.

As a data center manager, I get to work on the side of tech that takes the abstract internet and makes it tangible, and that’s pretty cool. It can be hard for people to visualize The Cloud, but it’s made up of cables and racks and network switches just like these. Even though my mom loves to bring up that secret Ethernet cable story at family events, I think she’s pretty happy that it led that mischievous kid to a project like this.

While not every project has great pictures to go along with it, we’re always upgrading our systems for performance, security, and reliability. Some other projects we’ve completed in the last few months include reconfiguring much of our space to make it more efficient and ready for enterprise-level hardware, moving our physical media operations, and decommissioning 4TB Vaults as we migrate them to larger Vaults with larger drives. Stay tuned for a longer post about that from our very own Andy Klein.

The post Moving 670 Network Connections appeared first on Backblaze Blog | Cloud Storage & Cloud Backup.

Post Syndicated from jake original https://lwn.net/Articles/970324/

Security updates have been issued by Debian (firefox-esr, jetty9, libdatetime-timezone-perl, tomcat10, and tzdata), Fedora (cockpit, filezilla, and libfilezilla), Red Hat (firefox, gnutls, java-1.8.0-openjdk, java-17-openjdk, kernel, kernel-rt, less, mod_http2, nodejs:18, rhc-worker-script, and shim), Slackware (mozilla), SUSE (kernel), and Ubuntu (apache2, glibc, and linux-xilinx-zynqmp).

Post Syndicated from The History Guy: History Deserves to Be Remembered original https://www.youtube.com/watch?v=X0f0wkme58c
