Post Syndicated from Talks at Google original https://www.youtube.com/watch?v=C8JYxvfqhqs

An LGR Oddware Christmas: The Gift of Tech Nonsense

Post Syndicated from LGR original https://www.youtube.com/watch?v=yAY_GFwmVow

Training teachers and empowering students in Machakos, Kenya

Post Syndicated from Wariara Waireri original https://www.raspberrypi.org/blog/computing-education-machakos-kenya-edtech-hub-launch/

Over the past months, we’ve been working with two partner organisations, Team4Tech and Kenya Connect, to support computing education across the rural county of Machakos, Kenya.

Working in rural Kenya

In line with our 2025 strategy, we have started work to improve computing education for young people in Kenya and South Africa. We are especially eager to support communities that experience educational disadvantage. One of our projects in this area is in partnership with Team4Tech and Kenya Connect. Together we have set up the Dr Isaac Minae EdTech Hub in the community Kenya Connect supports in the rural county of Machakos, and we are training teachers so they can equip their learners with coding and physical computing skills.

“Watching teachers and students find joy and excitement in learning has been tremendous! The Raspberry Pi Foundation’s hands-on approach is helping learners make connections through seeing how technology can be used for innovation to solve problems. We are excited to be partnering with Raspberry Pi Foundation and Team4Tech in bringing technology to our rural community.”

– Sharon Runge, Executive Director, Kenya Connect

We are providing the Wamunyu community with the hardware and the skills and knowledge training they need to use digital technology to create solutions to problems they see. The training will make sure that teachers across Machakos can sustain the EdTech Hub and computing education activities independently. This is important because we want the community to be empowered to solve problems that matter to them and for all the local young people to have opportunities that are open to their peers in Nairobi, Kisumu, Mombasa, and other cities in Kenya.

Launching the Dr Isaac Minae EdTech Hub in Wamunyu

In October this year, we travelled to Wamunyu to help Kenya Connect set up and launch the Dr Isaac Minae EdTech Hub, for which we provided hardware including Raspberry Pi 400 computers and physical computing kits with Raspberry Pi Pico microcontrollers, LEDs, buzzers, buttons, motors and more. We also held a teacher training session to start equipping the local educators with the skills and knowledge they need to teach coding and physical computing. The educators came to the training with a wide range of experience in using computers. Some were unfamiliar with computer hardware, but by the end of the session, they had all designed and created physical computing projects using electronic circuits and code. It was hugely inspiring to work with these teachers and see their enthusiasm and commitment to learning.

Through our two-year partnership with Kenya Connect, we aim to reach at least 1000 learners aged 9 to 14 from 62 schools in Machakos county. We will work with at least 150 teachers to build their knowledge, skills, and confidence to teach coding, digital making, and robotics, and to run after-school Code Clubs. We’ll help teachers offer learning experiences based on our established learning paths to their students, and these experiences will include basic coding skills aligned to Kenya’s Competency Based Curriculum (CBC). We are putting particular focus on adapting our learning content so that teachers in Machakos can offer culturally relevant educational activities in their community.

“Our partnership with the Raspberry Pi Foundation will open up new avenues for teachers to learn coding and physical computing. This is in line with the current Competency Based Curriculum that requires students to start learning coding at an early age. Though coding is entrenched in the curriculum, teachers are ill-prepared and schools lack devices. We are so grateful to the Raspberry Pi Foundation for providing teachers and students access to devices and the Raspberry Pi learning paths.”

– Patrick Munguti, Director of Education and Technology, Kenya Connect

Looking to the future

Next up for our work on this project is to continue supporting Kenya Connect to scale the program in the county.

In all our work in Sub-Saharan Africa, we are committed to strengthening and growing our partnerships with locally led youth and community organisations, the private sector, and the public sector, in line with our mission to open up more opportunities for young people to realise their full potential through the power of computing and digital technologies.

Our work in Sub-Saharan Africa is generously funded by the Ezra Charitable Trust.

The post Training teachers and empowering students in Machakos, Kenya appeared first on Raspberry Pi.

The unintended consequences of blocking IP addresses

Post Syndicated from Alissa Starzak original https://blog.cloudflare.com/consequences-of-ip-blocking/

In late August 2022, Cloudflare’s customer support team began to receive complaints about sites on our network being down in Austria. Our team immediately went into action to try to identify the source of what looked from the outside like a partial Internet outage in Austria. We quickly realized that it was an issue with local Austrian Internet Service Providers.

But the service disruption wasn’t the result of a technical problem. As we later learned from media reports, what we were seeing was the result of a court order. Without any notice to Cloudflare, an Austrian court had ordered Austrian Internet Service Providers (ISPs) to block 11 of Cloudflare’s IP addresses.

In an attempt to block 14 websites that copyright holders argued were violating copyright, the court-ordered IP block rendered thousands of websites inaccessible to ordinary Internet users in Austria over a two-day period. What did the thousands of other sites do wrong? Nothing. They were a temporary casualty of the failure to build legal remedies and systems that reflect the Internet’s actual architecture.

Today, we are going to dive into a discussion of IP blocking: why we see it, what it is, what it does, who it affects, and why it’s such a problematic way to address content online.

Collateral effects, large and small

The craziest thing is that this type of blocking happens on a regular basis, all around the world. But unless that blocking happens at the scale of what happened in Austria, or someone decides to highlight it, it is typically invisible to the outside world. Even Cloudflare, with deep technical expertise and understanding about how blocking works, can’t routinely see when an IP address is blocked.

For Internet users, it’s even more opaque. They generally don’t know why they can’t connect to a particular website, where the connection problem is coming from, or how to address it. They simply know they cannot access the site they were trying to visit. And that can make it challenging to document when sites have become inaccessible because of IP address blocking.

Blocking practices are also widespread. In their Freedom on the Net report, Freedom House recently reported that 40 out of the 70 countries that they examined – which vary from countries like Russia, Iran and Egypt to Western democracies like the United Kingdom and Germany – did some form of website blocking. Although the report doesn’t delve into exactly how those countries block, many of them use forms of IP blocking, with the same kind of potential effects for a partial Internet shutdown that we saw in Austria.

Although it can be challenging to assess the amount of collateral damage from IP blocking, we do have examples where organizations have attempted to quantify it. In conjunction with a case before the European Court of Human Rights, the European Information Society Institute, a Slovakia-based nonprofit, reviewed Russia’s regime for website blocking in 2017. Russia exclusively used IP addresses to block content. The European Information Society Institute concluded that IP blocking led to “collateral website blocking on a massive scale” and noted that as of June 28, 2017, “6,522,629 Internet resources had been blocked in Russia, of which 6,335,850 – or 97% – had been blocked collaterally, that is to say, without legal justification.”

In the UK, overbroad blocking prompted the non-profit Open Rights Group to create the website Blocked.org.uk. The website has a tool enabling users and site owners to report on overblocking and request that ISPs remove blocks. The group also has hundreds of individual stories about the effect of blocking on those whose websites were inappropriately blocked, from charities to small business owners. Although it’s not always clear what blocking methods are being used, the fact that the site is necessary at all conveys the amount of overblocking. Imagine a dressmaker, watchmaker or car dealer looking to advertise their services and potentially gain new customers with their website. That doesn’t work if local users can’t access the site.

One reaction might be, “Well, just make sure there are no restricted sites sharing an address with unrestricted sites.” But as we’ll discuss in more detail, this ignores the large difference between the number of possible domain names and the number of available IP addresses, and runs counter to the very technical specifications that empower the Internet. Moreover, the definitions of restricted and unrestricted differ across nations, communities, and organizations. Even if it were possible to know all the restrictions, the designs of the protocols — of the Internet, itself — mean that it is simply infeasible, if not impossible, to satisfy every agency’s constraints.

Legal and human rights concerns

Overblocking websites is not only a problem for users; it has legal implications. Because of the effect it can have on ordinary citizens looking to exercise their rights online, government entities (both courts and regulatory bodies) have a legal obligation to make sure that their orders are necessary and proportionate, and don’t unnecessarily affect those who are not contributing to the harm.

It would be hard to imagine, for example, that a court in response to alleged wrongdoing would blindly issue a search warrant or an order based solely on a street address without caring if that address was for a single family home, a six-unit condo building, or a high rise with hundreds of separate units. But those sorts of practices with IP addresses appear to be rampant.

In 2020, the European Court of Human Rights (ECHR) – the court overseeing the implementation of the Council of Europe’s European Convention on Human Rights – considered a case involving a website that was blocked in Russia not because it had been targeted by the Russian government, but because it shared an IP address with a blocked website. The website owner brought suit over the block. The ECHR concluded that the indiscriminate blocking was impermissible, ruling that the block on the lawful content of the site “amounts to arbitrary interference with the rights of owners of such websites.” In other words, the ECHR ruled that it was improper for a government to issue orders that resulted in the blocking of sites that were not targeted.

Using Internet infrastructure to address content challenges

Ordinary Internet users don’t think a lot about how the content they are trying to access online is delivered to them. They assume that when they type a domain name into their browser, the content will automatically pop up. And if it doesn’t, they tend to assume the website itself is having problems unless their entire Internet connection seems to be broken. But those basic assumptions ignore the reality that connections to a website are often used to limit access to content online.

Why do countries block connections to websites? Maybe they want to limit their own citizens from accessing what they believe to be illegal content – like online gambling or explicit material – that is permissible elsewhere in the world. Maybe they want to prevent the viewing of a foreign news source that they believe to be primarily disinformation. Or maybe they want to support copyright holders seeking to block access to a website to limit viewing of content that they believe infringes their intellectual property.

To be clear, blocking access is not the same thing as removing content from the Internet. There are a variety of legal obligations and authorities designed to permit actual removal of illegal content. Indeed, the legal expectation in many countries is that blocking is a matter of last resort, after attempts have been made to remove content at the source.

Blocking just prevents certain viewers – those whose Internet access depends on the ISP that is doing the blocking – from being able to access websites. The site itself continues to exist online and is accessible by everyone else. But when the content originates from a different place and can’t be easily removed, a country may see blocking as their best or only approach.

We recognize the concerns that sometimes drive countries to implement blocking. But fundamentally, we believe it’s important for users to know when the websites they are trying to access have been blocked, and, to the extent possible, who has blocked them from view and why. And it’s critical that any restrictions on content should be as limited as possible to address the harm, to avoid infringing on the rights of others.

Brute force IP address blocking doesn’t allow for those things. It’s fully opaque to Internet users. The practice has unintended, unavoidable consequences on other content. And the very fabric of the Internet means that there is no good way to identify what other websites might be affected either before or during an IP block.

To understand what happened in Austria and what happens in many other countries around the world that seek to block content with the bluntness of IP addresses, we have to understand what is going on behind the scenes. That means diving into some technical details.

Identity is attached to names, never addresses

Before we even get started describing the technical realities of blocking, it’s important to stress that the first and best option to deal with content is at the source. A website owner or hosting provider has the option of removing content at a granular level, without having to take down an entire website. On the more technical side, a domain name registrar or registry can potentially withdraw a domain name, and therefore a website, from the Internet altogether.

But how do you block access to a website, if for whatever reason the content owner or content source is unable or unwilling to remove it from the Internet? There are only three possible control points.

The first is via the Domain Name System (DNS), which translates domain names into IP addresses so that the site can be found. Instead of returning a valid IP address for a domain name, the DNS resolver could lie and respond with a code, NXDOMAIN, meaning that “there is no such name.” A better approach would be to use one of the honest error numbers standardized in 2020, including error 15 for blocked, error 16 for censored, 17 for filtered, or 18 for prohibited, although these are not widely used currently.
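The difference between a resolver that "lies" and one that answers honestly can be sketched with a toy lookup function. This is only an illustration, not a real DNS implementation: the names `RESTRICTED`, `ZONE`, and `resolve` are hypothetical, the addresses come from a documentation range, and the response is a plain dictionary rather than a DNS message. The numeric codes are the ones listed in the paragraph above.

```python
# Extended DNS error codes named in the text above.
EDE_CODES = {
    15: "Blocked",
    16: "Censored",
    17: "Filtered",
    18: "Prohibited",
}

# Hypothetical policy table for a toy resolver: name -> error code.
RESTRICTED = {"blocked.example": 16}

# Hypothetical zone data using documentation addresses (192.0.2.0/24).
ZONE = {"allowed.example": "192.0.2.10", "blocked.example": "192.0.2.20"}


def resolve(name, honest=True):
    """Return a dict describing the toy resolver's answer for `name`."""
    if name in RESTRICTED:
        if honest:
            # Tell the client that the name exists but was restricted, and why.
            code = RESTRICTED[name]
            return {"address": None, "ede": code, "reason": EDE_CODES[code]}
        # The "lie": pretend the name does not exist at all.
        return {"status": "NXDOMAIN"}
    if name in ZONE:
        return {"status": "NOERROR", "address": ZONE[name]}
    return {"status": "NXDOMAIN"}


print(resolve("blocked.example"))
print(resolve("blocked.example", honest=False))
```

With the honest answer, a client can at least distinguish "this name was restricted" from "this name does not exist", which the NXDOMAIN response hides.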

Interestingly, the precision and effectiveness of DNS as a control point depends on whether the DNS resolver is private or public. Private or ‘internal’ DNS resolvers are operated by ISPs and enterprise environments for their own known clients, which means that operators can be precise in applying content restrictions. By contrast, that level of precision is unavailable to open or public resolvers, not least because routing and addressing is global and ever-changing on the Internet map, and in stark contrast to addresses and routes on a fixed postal or street map. For example, private DNS resolvers may be able to block access to websites within specified geographic regions with at least some level of accuracy in a way that public DNS resolvers cannot, which becomes profoundly important given the disparate (and inconsistent) blocking regimes around the world.

The second approach is to block individual connection requests to a restricted domain name. When a user or client wants to visit a website, a connection is initiated from the client to a server name, i.e. the domain name. If a network or on-path device is able to observe the server name, then the connection can be terminated. Unlike DNS, there is no mechanism to communicate to the user that access to the server name was blocked, or why.

The third approach is to block access to an IP address where the domain name can be found. This is a bit like blocking the delivery of all mail to a physical address. Consider, for example, if that address is a skyscraper with its many unrelated and independent occupants. Halting delivery of mail to the address of the skyscraper causes collateral damage by invariably affecting all parties at that address. IP addresses work the same way.

Notably, the IP address is the only one of the three options that has no attachment to the domain name. The website domain name is not required for routing and delivery of data packets; in fact it is fully ignored. A website can be available on any IP address, or even on many IP addresses, simultaneously. And the set of IP addresses that a website is on can change at any time. The set of IP addresses cannot definitively be known by querying DNS, which has been able to return any valid address at any time for any reason, since 1995.
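A small, made-up hosting map shows how this plays out when addresses are blocked. The sketch below assumes a hypothetical table, `HOSTING`, of which names are served from which addresses (all addresses are from a documentation range), and computes which untargeted names sit on an address that would be blocked in order to reach one target.

```python
# Hypothetical hosting map: IP address -> domain names served from it.
# Addresses are from the documentation range 203.0.113.0/24.
HOSTING = {
    "203.0.113.1": {"target.example", "bakery.example", "charity.example"},
    "203.0.113.2": {"target.example", "dressmaker.example"},
    "203.0.113.3": {"watchmaker.example"},
}


def ips_for(domains):
    """Every IP address that serves at least one of the given domains."""
    return {ip for ip, names in HOSTING.items() if names & domains}


def collateral(targets):
    """Untargeted domains hosted on at least one address blocked for `targets`."""
    blocked_ips = ips_for(targets)
    affected = set().union(*(HOSTING[ip] for ip in blocked_ips))
    return affected - targets


print(sorted(collateral({"target.example"})))
```

In this toy data, blocking the two addresses that serve one target name also cuts off three unrelated names. In practice the map itself cannot be known in advance, which is the point the paragraph above makes.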

The idea that an address is representative of an identity is anathema to the Internet’s design, because the decoupling of address from name is deeply embedded in the Internet standards and protocols, as is explained next.

The Internet is a set of protocols, not a policy or perspective

Many people still incorrectly assume that an IP address represents a single website. We’ve previously stated that the association between names and addresses is understandable given that the earliest connected components of the Internet appeared as one computer, one interface, one address, and one name. This one-to-one association was an artifact of the ecosystem in which the Internet Protocol was deployed, and satisfied the needs of the time.

Despite the one-to-one naming practice of the early Internet, it has always been possible to assign more than one name to a server (or ‘host’). For example, a server was (and is still) often configured with names to reflect its service offerings such as mail.example.com and www.example.com, but these shared a base domain name. There were few reasons to have completely different domain names until the need to colocate completely different websites onto a single server. That practice was made easier in 1997 by the Host header in HTTP/1.1, a feature preserved by the SNI field in a TLS extension in 2003.
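How the Host header lets unrelated websites share one server can be sketched as follows. This is a simplified illustration, not a real web server: the site table `SITES` and both function names are hypothetical, and the parser ignores details such as port numbers in the Host value.

```python
# Hypothetical sites colocated on one server, and so on one IP address.
SITES = {
    "www.example.com": "Welcome to example.com",
    "shop.example.net": "Welcome to the shop",
}


def host_from_request(raw_request):
    """Extract the Host header value from a raw HTTP/1.1 request string."""
    for line in raw_request.split("\r\n")[1:]:
        if not line:
            break  # a blank line ends the header section
        name, _, value = line.partition(":")
        if name.strip().lower() == "host":
            return value.strip().lower()
    return None


def serve(raw_request):
    """Pick the site by name; the address the request arrived on plays no part."""
    host = host_from_request(raw_request)
    return SITES.get(host, "404: unknown host")


print(serve("GET / HTTP/1.1\r\nHost: shop.example.net\r\n\r\n"))
```

The server chooses the content purely from the name the client sends, so two requests to the same address can reach entirely different websites.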

Throughout these changes, the Internet Protocol and, separately, the DNS protocol, have not only kept pace, but have remained fundamentally unchanged. They are the very reason that the Internet has been able to scale and evolve, because they are about addresses, reachability, and arbitrary name to IP address relationships.

The designs of IP and DNS are also entirely independent, which only reinforces that names are separate from addresses. A closer inspection of the protocols’ design elements illuminates the misperceptions of policies that lead to today’s common practice of controlling access to content by blocking IP addresses.

By design, IP is for reachability and nothing else

Much like large public civil engineering projects rely on building codes and best practice, the Internet is built using a set of open standards and specifications informed by experience and agreed by international consensus. The Internet standards that connect hardware and applications are published by the Internet Engineering Task Force (IETF) in the form of “Requests for Comments” or RFCs, so named not to suggest incompleteness, but to reflect that standards must be able to evolve with knowledge and experience. The IETF and its RFCs are cemented in the very fabric of communications; the first of them, RFC 1, was published in 1969. The Internet Protocol (IP) specification reached RFC status in 1981.

Alongside the standards organizations, the Internet’s success has been helped by a core idea known as the end-to-end (e2e) principle, codified also in 1981, based on years of trial and error experience. The end-to-end principle is a powerful abstraction that, despite taking many forms, manifests a core notion of the Internet Protocol specification: the network’s only responsibility is to establish reachability, and every other possible feature has a cost or a risk.

The idea of “reachability” in the Internet Protocol is also enshrined in the design of IP addresses themselves. Looking at the Internet Protocol specification, RFC 791, the following excerpt from Section 2.3 is explicit about IP addresses having no association with names, interfaces, or anything else.

Addressing

“A distinction is made between names, addresses, and routes [4]. A name indicates what we seek. An address indicates where it is. A route indicates how to get there. The internet protocol deals primarily with addresses. It is the task of higher level (i.e., host-to-host or application) protocols to make the mapping from names to addresses. The internet module maps internet addresses to local net addresses. It is the task of lower level (i.e., local net or gateways) procedures to make the mapping from local net addresses to routes.”

[ RFC 791, 1981 ]

Just like postal addresses for skyscrapers in the physical world, IP addresses are no more than street addresses written on a piece of paper. And just like a street address on paper, one can never be confident about the entities or organizations that exist behind an IP address. In a network like Cloudflare’s, any single IP address represents thousands of servers, and can have even more websites and services — in some cases numbering into the millions — expressly because the Internet Protocol is designed to enable it.
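One way to see that names play no part at the IP layer is to build the fixed part of an IPv4 header by hand. The sketch below packs a minimal 20-byte header with Python's `struct` module; the function name and the example addresses are illustrative, and the checksum is left at zero rather than computed. Every field is a number or an address, and there is nowhere to put a domain name.

```python
import socket
import struct


def ipv4_header(src, dst, payload_len, protocol=6, ttl=64):
    """Pack a minimal 20-byte IPv4 header (no options, checksum left at 0)."""
    version_ihl = (4 << 4) | 5  # version 4, header length of 5 32-bit words
    total_length = 20 + payload_len
    return struct.pack(
        "!BBHHHBBH4s4s",
        version_ihl,
        0,                       # type of service
        total_length,
        0,                       # identification
        0,                       # flags and fragment offset
        ttl,
        protocol,                # 6 = TCP
        0,                       # header checksum (not computed in this sketch)
        socket.inet_aton(src),   # source address, 4 bytes
        socket.inet_aton(dst),   # destination address, 4 bytes
    )


header = ipv4_header("192.0.2.1", "203.0.113.7", payload_len=0)
print(len(header), header.hex())
```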

Here’s an interesting question: could we, or any content service provider, ensure that every IP address matches to one and only one name? The answer is an unequivocal no, and here too, because of a protocol design — in this case, DNS.

The number of names in DNS always exceeds the available addresses

A one-to-one relationship between names and addresses is impossible given the Internet specifications for the same reasons that it is infeasible in the physical world. Ignore for a moment that people and organizations can change addresses. Fundamentally, the number of people and organizations on the planet exceeds the number of postal addresses. We not only want, but need, the Internet to accommodate more names than addresses.

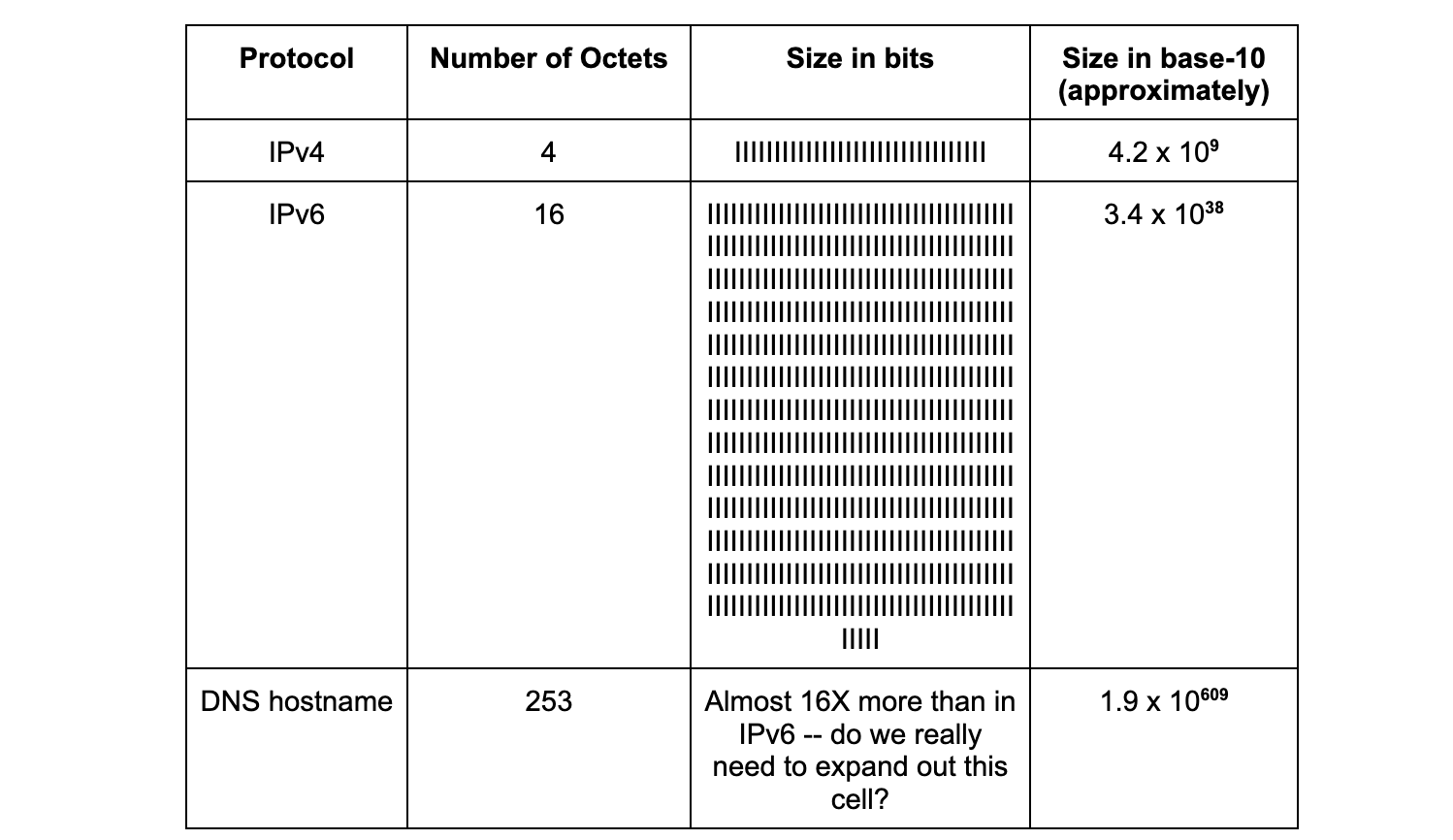

The difference in magnitude between names and addresses is also codified in the specifications. IPv4 addresses are 32 bits, and IPv6 addresses are 128 bits. The size of a domain name that can be queried by DNS is as many as 253 octets, or 2,024 bits (from Section 2.3.4 in RFC 1035, published 1987). The table below helps to put those differences into perspective:

On November 15, 2022, the United Nations announced the population of the Earth surpassed eight billion people. Intuitively, we know that there cannot be anywhere near as many postal addresses. The difference between the number of possible names on the planet, and similarly on the Internet, does and must exceed the number of available addresses.
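The sizes quoted from the specifications can be checked with a few lines of arithmetic. The constant names below are ours; the values are the ones given in the text (32-bit and 128-bit addresses, and names of up to 253 octets).

```python
IPV4_BITS = 32
IPV6_BITS = 128
MAX_NAME_OCTETS = 253                 # maximum queryable name length quoted above
MAX_NAME_BITS = MAX_NAME_OCTETS * 8   # 8 bits per octet

ipv4_addresses = 2 ** IPV4_BITS
ipv6_addresses = 2 ** IPV6_BITS

print(f"IPv4 addresses: {ipv4_addresses:,}")
print(f"IPv6 addresses: a {len(str(ipv6_addresses))}-digit number")
print(f"Bits in a maximum-length name: {MAX_NAME_BITS:,}")
print(f"Name bits per IPv6 address bit: {MAX_NAME_BITS / IPV6_BITS:.1f}")
```

Not every bit pattern is a valid domain name, so this compares field sizes rather than counting usable names, but it shows how much more room the specifications leave for names than for addresses.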

The proof is in the ~~pudding~~ names!

Now that those two relevant principles about IP addresses and DNS names in the international standards are understood – that IP addresses and domain names serve distinct purposes and there is no one-to-one relationship between the two – an examination of a recent case of content blocking using IP addresses can help to show why it is problematic. Take, for example, the IP blocking incident in Austria in late August 2022. The goal was to restrict access to 14 target domains by blocking 11 IP addresses (source: RTR.Telekom.Post via the Internet Archive). The mismatch between those two numbers should have been a warning flag that IP blocking might not have the desired effect.

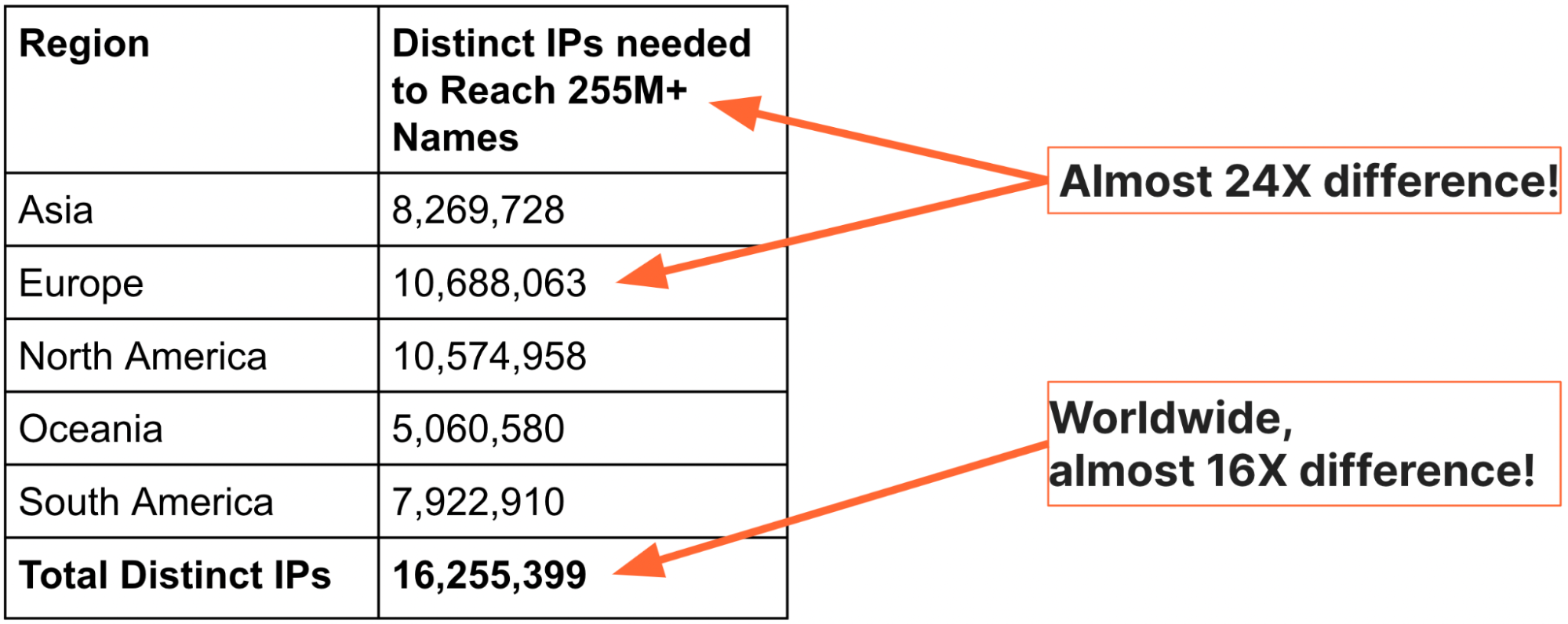

Analogies and international standards may explain the reasons that IP blocking should be avoided, but we can see the scale of the problem by looking at Internet-scale data. To better understand and explain the severity of IP blocking, we decided to generate a global view of domain names and IP addresses (thanks are due to a PhD research intern, Sudheesh Singanamalla, for the effort). In September 2022, we used the authoritative zone files for the top-level domains (TLDs) .com, .net, .info, and .org, together with top-1M website lists, to find a total of 255,315,270 unique names. We then queried DNS from each of five regions and recorded the set of IP addresses returned. The table below summarizes our findings:

The table above makes clear that it takes no more than 10.7 million addresses to reach 255,315,270 names from any region on the planet, and the total set of IP addresses for those names from everywhere is about 16 million. The ratio of names to IP addresses is nearly 24x in Europe and 16x globally.
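The ratios follow directly from the figures quoted in the text. The sketch below uses the rounded address counts (10.7 million and 16 million), so its results are approximate; the variable names are ours, and treating the 10.7 million figure as the European count is an assumption based on the wording above.

```python
TOTAL_NAMES = 255_315_270
REGIONAL_ADDRESSES = 10_700_000   # "no more than 10.7 million", rounded
GLOBAL_ADDRESSES = 16_000_000     # "about 16 million", rounded

regional_ratio = TOTAL_NAMES / REGIONAL_ADDRESSES
global_ratio = TOTAL_NAMES / GLOBAL_ADDRESSES

print(f"Names per address, regional view: {regional_ratio:.1f}")
print(f"Names per address, global view:   {global_ratio:.1f}")
```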

There is one more worthwhile detail about the numbers above: The IP addresses are the combined totals of both IPv4 and IPv6 addresses, meaning that far fewer addresses are needed to reach all 255M websites.

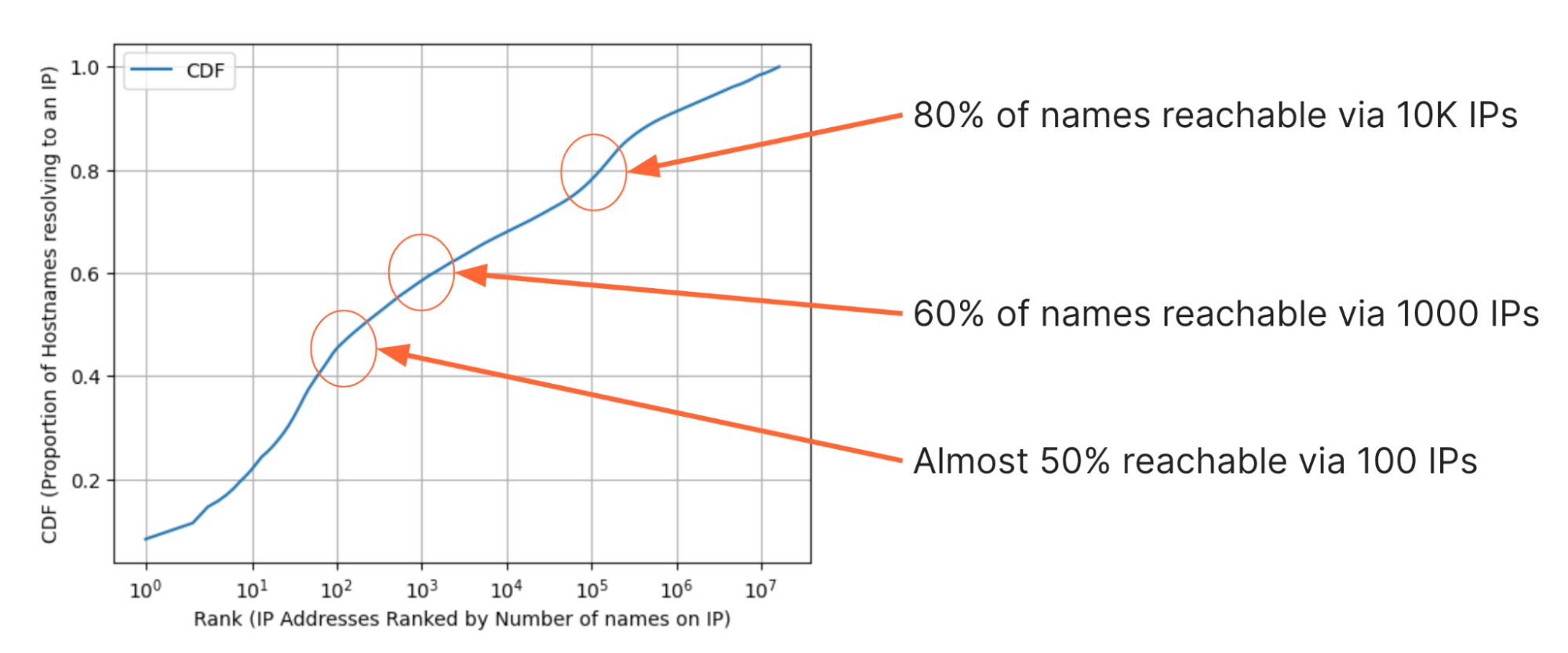

We’ve also inspected the data a few different ways to find some interesting observations. For example, the figure below shows the cumulative distribution (CDF) of the proportion of websites that can be visited with each additional IP address. On the y-axis is the proportion of websites that can be reached given some number of IP addresses. On the x-axis, the 16M IP addresses are ranked from the most domains on the left to the fewest domains on the right. Note that any IP address in this set is a response from DNS, so it must have at least one domain name, but the IP addresses with the most domains each carry an eight-digit number of them, that is, tens of millions.

By looking at the CDF there are a few eye-watering observations:

- Fewer than 10 IP addresses are needed to reach 20% of, or approximately 51 million, domains in the set;

- 100 IPs are enough to reach almost 50% of domains;

- 1000 IPs are enough to reach 60% of domains;

- 10,000 IPs are enough to reach 80%, or about 204 million, domains.
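The shape of such a curve can be reproduced with a short sketch. The counts below are made up and heavily skewed; they are not the measured dataset. The function names are ours, and the calculation makes a simplifying assumption that each domain sits on exactly one address, so that per-address counts can be summed without double counting.

```python
from itertools import accumulate


def coverage_cdf(domains_per_ip):
    """Cumulative share of domains reachable, ranking addresses by domain count.

    Simplification: each domain is assumed to sit on exactly one address.
    """
    ranked = sorted(domains_per_ip, reverse=True)
    total = sum(ranked)
    return [running / total for running in accumulate(ranked)]


def ips_needed(domains_per_ip, share):
    """Smallest number of top-ranked addresses that reaches `share` of domains."""
    for count, covered in enumerate(coverage_cdf(domains_per_ip), start=1):
        if covered >= share:
            return count
    return None


# Made-up, heavily skewed counts for ten addresses.
toy_counts = [500, 300, 100, 50, 20, 10, 5, 5, 5, 5]

for share in (0.25, 0.75, 0.96):
    print(f"{share:.0%} of domains: {ips_needed(toy_counts, share)} address(es)")
```

Even in this tiny example, a couple of addresses account for most of the names, which is the same skew the observations above describe at Internet scale.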

In fact, from the total set of 16 million addresses, fewer than half, 7.1M (43.7%), of the addresses in the dataset had one name. On this ‘one’ point we must be additionally clear: we are unable to ascertain whether those addresses carry only one name, because there are many more domain names than those contained in .com, .org, .info, and .net, so there might very well be other names on those addresses.

In addition to having a number of domains on a single IP address, any IP address may change over time for any of those domains. Changing IP addresses periodically can help with security and performance, and can improve reliability for websites. One common example in use by many operations is load balancing. This means DNS queries may return different IP addresses over time, or in different places, for the same websites. This is a further, and separate, reason why blocking based on IP addresses will not serve its intended purpose over time.
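A toy rotation makes the effect on a block list visible. The sketch below is not how any particular load balancer works: the address pool, the name `site.example`, and the helper `make_resolver` are hypothetical, and the "resolver" simply hands out addresses from the pool in turn.

```python
from itertools import cycle

# Hypothetical address pool for one site (documentation range 198.51.100.0/24).
POOL = ["198.51.100.1", "198.51.100.2", "198.51.100.3"]


def make_resolver(pool):
    """Return a function that hands out addresses from `pool` in rotation."""
    rotation = cycle(pool)
    return lambda name: next(rotation)


resolve = make_resolver(POOL)

# A block list built from a single lookup captures only one address.
blocklist = {resolve("site.example")}

# Later lookups of the same name return addresses the list never saw.
later = [resolve("site.example") for _ in range(3)]
missed = [ip for ip in later if ip not in blocklist]

print("blocked:", sorted(blocklist))
print("still reachable via:", missed)
```

The block list is out of date as soon as the next answer differs, while any other names on the one blocked address remain cut off.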

Ultimately, there is no reliable way to know the number of domains on an IP address without inspecting all names in the DNS, from every location on the planet, at every moment in time — an entirely infeasible proposition.

Any action on an IP address must, by the very definitions of the protocols that rule and empower the Internet, be expected to have collateral effects.

Lack of transparency with IP blocking

So if we have to expect that the blocking of an IP address will have collateral effects, and it’s generally agreed that it’s inappropriate or even legally impermissible to overblock by blocking IP addresses that have multiple domains on them, why does it still happen? That’s hard to know for sure, so we can only speculate. Sometimes it reflects a lack of technical understanding about the possible effects, particularly from entities like judges who are not technologists. Sometimes governments just ignore the collateral damage – as they do with Internet shutdowns – because they see the blocking as in their interest. And when there is collateral damage, it’s not usually obvious to the outside world, so there can be very little external pressure to have it addressed.

It’s worth stressing that point. When an IP is blocked, a user just sees a failed connection. They don’t know why the connection failed, or who caused it to fail. On the other side, the server acting on behalf of the website doesn’t even know it’s been blocked until it starts getting complaints about the fact that it is unavailable. There is virtually no transparency or accountability for the overblocking. And it can be challenging, if not impossible, for a website owner to challenge a block or seek redress for being inappropriately blocked.

Some governments, including Austria, do publish active block lists, which is an important step for transparency. But for all the reasons we’ve discussed, publishing an IP address does not reveal all the sites that may have been blocked unintentionally. And it doesn’t give those affected a means to challenge the overblocking. Again, in the physical world example, it’s hard to imagine a court order on a skyscraper that wouldn’t be posted on the door, but we often seem to jump over such due process and notice requirements in virtual space.

We think talking about the problematic consequences of IP blocking is more important than ever as an increasing number of countries push to block content online. Unfortunately, ISPs often use IP blocks to implement those requirements. It may be that the ISP is newer or less robust than larger counterparts, but larger ISPs engage in the practice, too, and understandably so because IP blocking takes the least effort and is readily available in most equipment.

And as more and more domains are included on the same number of IP addresses, the problem is only going to get worse.

Next steps

So what can we do?

We believe the first step is to improve transparency around the use of IP blocking. Although we’re not aware of any comprehensive way to document the collateral damage caused by IP blocking, we believe there are steps we can take to expand awareness of the practice. We are committed to working on new initiatives that highlight those insights, as we’ve done with the Cloudflare Radar Outage Center.

We also recognize that this is a whole Internet problem, and therefore has to be part of a broader effort. The significant likelihood that blocking by IP address will result in restricting access to a whole series of unrelated (and untargeted) domains should make it a non-starter for everyone. That’s why we’re engaging with civil society partners and like-minded companies to lend their voices to challenge the use of blocking IP addresses as a way of addressing content challenges and to point out collateral damage when they see it.

To be clear, to address the challenges of illegal content online, countries need legal mechanisms that enable the removal or restriction of content in a rights-respecting way. We believe that addressing the content at the source is almost always the best and the required first step. Laws like the EU’s new Digital Services Act or the Digital Millennium Copyright Act provide tools that can be used to address illegal content at the source, while respecting important due process principles. Governments should focus on building and applying legal mechanisms in ways that least affect other people’s rights, consistent with human rights expectations.

Very simply, these needs cannot be met by blocking IP addresses.

We’ll continue to look for new ways to talk about network activity and disruption, particularly when it results in unnecessary limitations on access. Check out Cloudflare Radar for more insights about what we see online.

Introducing Cloudflare’s Third Party Code of Conduct

Post Syndicated from Andria Jones original https://blog.cloudflare.com/introducing-cloudflares-third-party-code-of-conduct/

Cloudflare is on a mission to help build a better Internet, and we are committed to doing this with ethics and integrity in everything that we do. This commitment extends beyond our own actions, to third parties acting on our behalf. Cloudflare has the same expectations of ethics and integrity of our suppliers, resellers, and other partners as we do of ourselves.

Our new code of conduct for third parties

We first publicly shared our Code of Business Conduct and Ethics during Cloudflare’s initial public offering in September 2019. All Cloudflare employees take legal training as part of their onboarding process, as well as an annual refresher course, which includes the topics covered in our Code, and they sign an acknowledgement of our Code and related policies as well.

While our Code of Business Conduct and Ethics applies to all directors, officers and employees of Cloudflare, it has not extended to third parties. Today, we are excited to share our Third Party Code of Conduct, specifically formulated with our suppliers, resellers, and other partners in mind. It covers such topics as:

- Human Rights

- Fair Labor

- Environmental Sustainability

- Anti-Bribery and Anti-Corruption

- Trade Compliance

- Anti-Competition

- Conflicts of Interest

- Data Privacy and Security

- Government Contracting

But why have another Code?

We work with a wide range of third parties in all parts of the world, including countries with a high risk of corruption, potential for political unrest, and also countries that are just not governed by the same laws that we may see as standard in the United States. We wanted a Third Party Code of Conduct that serves as a statement of Cloudflare’s core values and commitments, and a call for third parties who share the same.

The following are just a few examples of how we want to ensure our third parties act with ethics and integrity on our behalf, even when we aren’t watching:

We want to ensure that the servers and other equipment in our supply chain are sourced responsibly, from manufacturers who respect human rights — free of forced or child labor, with environmental sustainability at the forefront.

We want to provide our products and services to customers based on the quality of Cloudflare, not because a third party reseller may bribe a customer to enter into an agreement.

We want to ensure there are no conflicts of interest with our third parties that might give someone an unfair advantage.

As a government contractor, we want to ensure that we do not have telecommunications or video surveillance equipment, systems, or services from prohibited parties in our supply chain to protect national security interests.

Having a Third Party Code of Conduct is also industry standard. As Cloudflare garners an increasing number of Fortune 500 and other enterprise customers, we find ourselves reviewing and committing to their Third Party Codes of Conduct as well.

How it works

Our Third Party Code of Conduct is not meant to replace our terms of service or other contractual agreements. Rather, it is meant to supplement them, highlighting Cloudflare’s ethical commitments and encouraging our suppliers, resellers, and other partners to commit to the same. We will be cascading this new Code to all existing third parties, and will include it at onboarding for all new third parties going forward. A violation of the Code, or any contractual agreements between Cloudflare and our third parties, may result in termination of the relationship.

This Third Party Code of Conduct is only one facet of Cloudflare’s third party due diligence program, and it complements the other work that Cloudflare does in this area. Cloudflare rigorously screens and vets our suppliers and partners at onboarding, and we continue to routinely monitor and audit them over time. We are always looking for ways to communicate with, educate, and learn from our third parties as well.

Join our mission

Are you a supplier, reseller or other partner who shares these values of ethics and integrity? Come work with us and join Cloudflare on its mission to help build a better, more ethical Internet.

Helping build a safer Internet by measuring BGP RPKI Route Origin Validation

Post Syndicated from Carlos Rodrigues original https://blog.cloudflare.com/rpki-updates-data/

The Border Gateway Protocol (BGP) is the glue that keeps the entire Internet together. However, despite its vital function, BGP wasn’t originally designed to protect against malicious actors or routing mishaps. It has since been updated to account for this shortcoming with the Resource Public Key Infrastructure (RPKI) framework, but can we declare it to be safe yet?

If the question needs asking, you might suspect we can’t. There is a shortage of reliable data on how much of the Internet is protected from preventable routing problems. Today, we’re releasing a new method to measure exactly that: what percentage of Internet users are protected by their Internet Service Provider from these issues. We find that there is a long way to go before the Internet is protected from routing problems, though it varies dramatically by country.

Why RPKI is necessary to secure Internet routing

The Internet is a network of independently-managed networks, called Autonomous Systems (ASes). To achieve global reachability, ASes interconnect with each other and determine the feasible paths to a given destination IP address by exchanging routing information using BGP. BGP enables routers with only local network visibility to construct end-to-end paths based on the arbitrary preferences of each administrative entity that operates that equipment. Typically, Internet traffic between a user and a destination traverses multiple AS networks using paths constructed by BGP routers.

BGP, however, lacks built-in security mechanisms to protect the integrity of the exchanged routing information and to provide authentication and authorization of the advertised IP address space. Because of this, AS operators must implicitly trust that the routing information exchanged through BGP is accurate. As a result, the Internet is vulnerable to the injection of bogus routing information, which cannot be mitigated by security measures at the client or server level of the network.

An adversary with access to a BGP router can inject fraudulent routes into the routing system, which can be used to execute an array of attacks, including:

- Denial-of-Service (DoS) through traffic blackholing or redirection,

- Impersonation attacks to eavesdrop on communications,

- Machine-in-the-Middle exploits to modify the exchanged data and subvert reputation-based filtering systems.

Additionally, local misconfigurations and fat-finger errors can be propagated well beyond the source of the error and cause major disruption across the Internet.

Such an incident happened on June 24, 2019. Millions of users were unable to access Cloudflare address space when a regional ISP in Pennsylvania accidentally advertised routes to Cloudflare through their capacity-limited network. This was effectively the Internet equivalent of routing an entire freeway through a neighborhood street.

Traffic misdirections like these, either unintentional or intentional, are not uncommon. The Internet Society’s MANRS (Mutually Agreed Norms for Routing Security) initiative estimated that in 2020 alone there were over 3,000 route leaks and hijacks, and new occurrences can be observed every day through Cloudflare Radar.

The most prominent proposals to secure BGP routing, standardized by the IETF, focus on validating the origin of the advertised routes using Resource Public Key Infrastructure (RPKI) and verifying the integrity of the paths with BGPsec. Specifically, RPKI (defined in RFC 7115) relies on a Public Key Infrastructure to validate that an AS advertising a route to a destination (an IP address space) is the legitimate owner of those IP addresses.

RPKI has been defined for a long time but lacks adoption. It requires network operators to cryptographically sign their prefixes, and routing networks to perform an RPKI Route Origin Validation (ROV) on their routers. This is a two-step operation that requires coordination and participation from many actors to be effective.

The two phases of RPKI adoption: signing origins and validating origins

RPKI has two phases of deployment: first, an AS that wants to protect its own IP prefixes can cryptographically sign Route Origin Authorization (ROA) records, thereby attesting to be the legitimate origin of that signed IP space. Second, an AS can avoid selecting invalid routes by performing Route Origin Validation (ROV, defined in RFC 6483).

With ROV, a BGP route received from a neighbor is validated against the available RPKI records. A route that is valid or missing from RPKI is selected, while a route with RPKI records found to be invalid is typically rejected, thus preventing the use and propagation of hijacked and misconfigured routes.
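The decision described above can be sketched in a few lines of Python. This is a minimal illustration, not the logic of any particular router or validator: the `Roa` record, the function names, and the three state labels (`valid`, `invalid`, `not-found`) are assumptions made for this example, and the prefixes and AS numbers are reserved documentation values.

```python
from dataclasses import dataclass
from ipaddress import ip_network
from typing import Iterable


@dataclass(frozen=True)
class Roa:
    """Hypothetical, simplified ROA record used only for this sketch."""
    prefix: str      # signed address space, e.g. "192.0.2.0/24"
    max_length: int  # longest prefix length the ROA authorizes
    asn: int         # AS authorized to originate the prefix


def validate_origin(route_prefix: str, origin_asn: int, roas: Iterable[Roa]) -> str:
    """Classify a route as 'valid', 'invalid', or 'not-found'."""
    route = ip_network(route_prefix)
    covered = False
    for roa in roas:
        roa_net = ip_network(roa.prefix)
        # A ROA is relevant only if the route's prefix lies inside the ROA's prefix.
        if route.version != roa_net.version or not route.subnet_of(roa_net):
            continue
        covered = True
        # The origin AS must match and the route must not be more specific
        # than the ROA allows.
        if roa.asn == origin_asn and route.prefixlen <= roa.max_length:
            return "valid"
    # Covered by at least one ROA but matched by none: invalid.
    return "invalid" if covered else "not-found"


def accept_route(route_prefix: str, origin_asn: int, roas: Iterable[Roa]) -> bool:
    """Select routes that are valid or missing from RPKI; reject invalid ones."""
    return validate_origin(route_prefix, origin_asn, roas) != "invalid"
```

For example, with a single ROA for `192.0.2.0/24` authorizing AS 64500, the same prefix announced by AS 64501 is classified as invalid and rejected, while an unrelated prefix with no covering ROA is still accepted.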

One issue with RPKI is the fact that implementing ROA is meaningful only if other ASes implement ROV, and vice versa. Therefore, securing BGP routing requires a united effort, and a lack of broader adoption disincentivizes ASes from committing the resources to validate their own routes. Conversely, increasing RPKI adoption can lead to network effects and accelerate RPKI deployment. Projects like MANRS and Cloudflare’s isbgpsafeyet.com are promoting good Internet citizenship among network operators, and making the benefits of RPKI deployment known to the Internet. You can check whether your own ISP is being a good Internet citizen by testing it on isbgpsafeyet.com.

Measuring the extent to which both ROA (signing of addresses by the network that controls them) and ROV (filtering of invalid routes by ISPs) have been implemented is important to evaluating the impact of these initiatives, developing situational awareness, and predicting the impact of future misconfigurations or attacks.

Measuring ROAs is straightforward since ROA data is readily available from RPKI repositories. Querying RPKI repositories for publicly routed IP prefixes (e.g. prefixes visible in the RouteViews and RIPE RIS routing tables) allows us to estimate the percentage of addresses covered by ROA objects. Currently, there are 393,344 IPv4 and 86,306 IPv6 ROAs in the global RPKI system, covering about 40% of the globally routed prefix-AS origin pairs [1].

Measuring ROV, however, is significantly more challenging given it is configured inside the BGP routers of each AS, not accessible by anyone other than each router’s administrator.

Measuring ROV deployment

Although we do not have direct access to the configuration of everyone’s BGP routers, it is possible to infer the use of ROV by comparing the reachability of RPKI-valid and RPKI-invalid prefixes from measurement points within an AS [2].

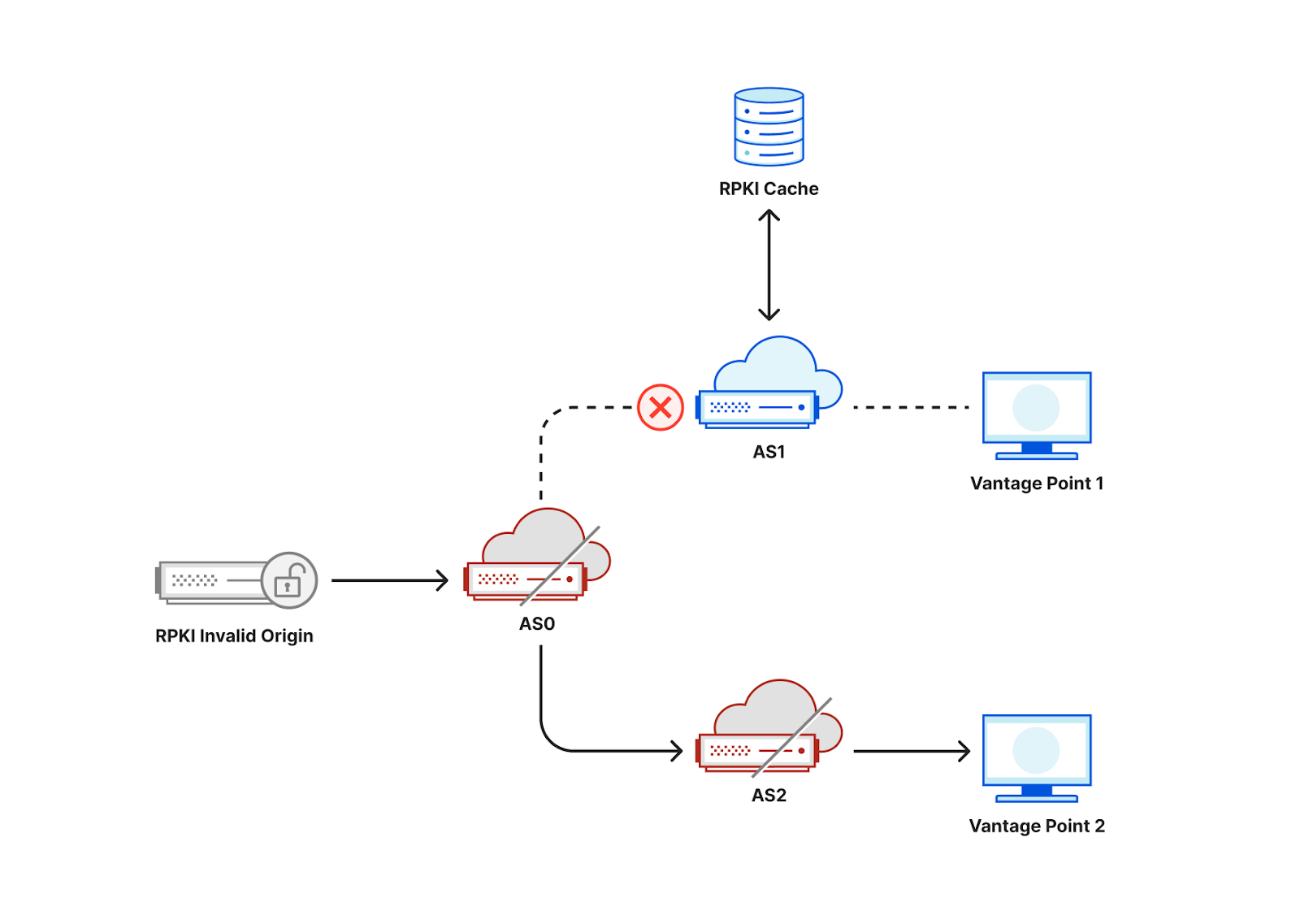

Consider the following toy topology as an example, where an RPKI-invalid origin is advertised through AS0 to AS1 and AS2. If AS1 filters and rejects RPKI-invalid routes, a user behind AS1 would not be able to connect to that origin. By contrast, if AS2 does not reject RPKI invalids, a user behind AS2 would be able to connect to that origin.

While occasionally a user may be unable to access an origin due to transient network issues, if multiple users act as vantage points for a measurement system, we would be able to collect a large number of data points to infer which ASes deploy ROV.

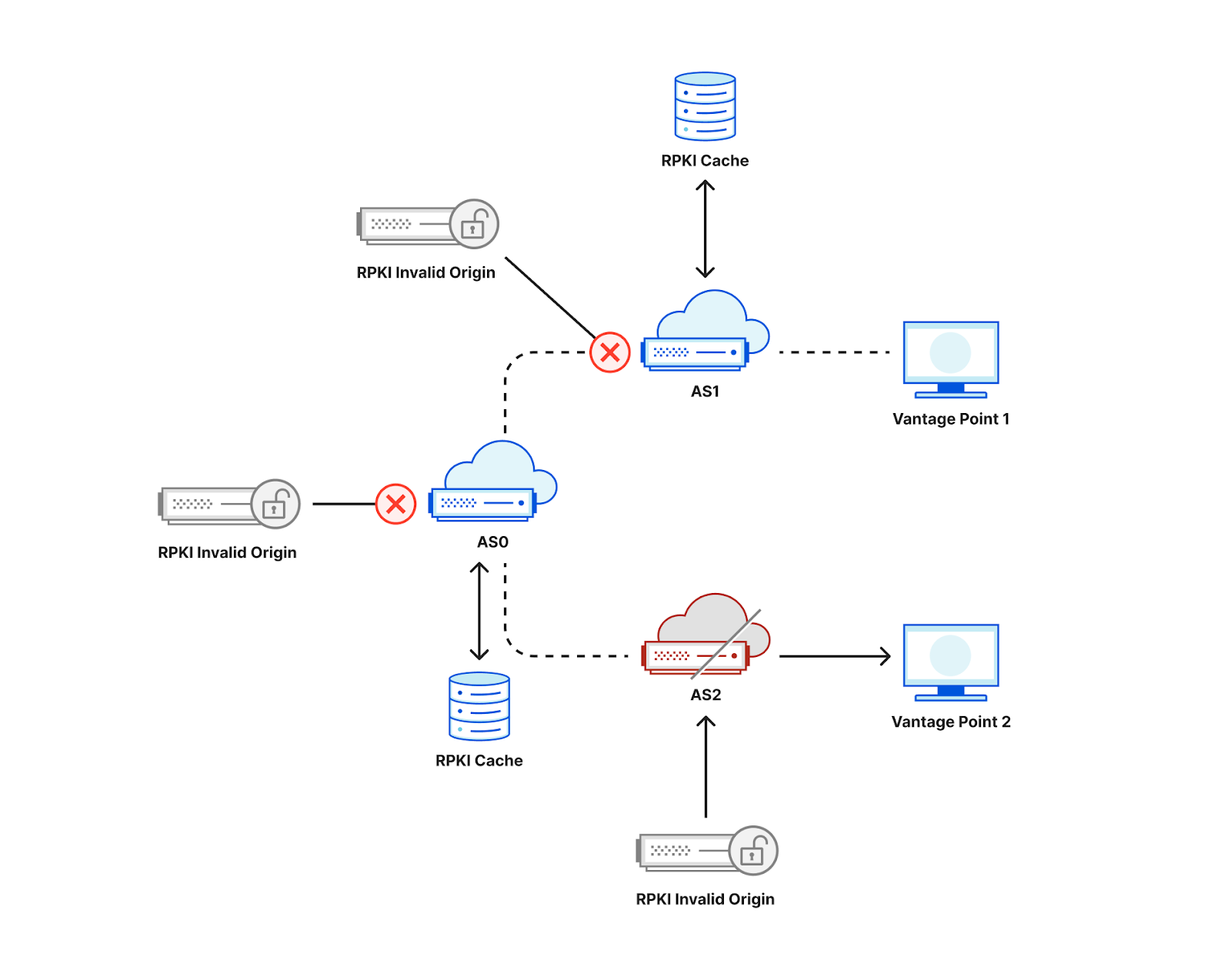

If, in the figure above, AS0 filters invalid RPKI routes, then vantage points in both AS1 and AS2 would be unable to connect to the RPKI-invalid origin, making it hard to distinguish if ROV is deployed at the ASes of our vantage points or in an AS along the path. One way to mitigate this limitation is to announce the RPKI-invalid origin from multiple locations on an anycast network, taking advantage of its direct interconnections to the measurement vantage points, as shown in the figure below. As a result, an AS that does not itself deploy ROV is less likely to observe the benefits of upstream ASes using ROV, and we would be able to accurately infer ROV deployment per AS [3].

Note that it’s also important that the IP address of the RPKI-invalid origin should not be covered by a less specific prefix for which there is a valid or unknown RPKI route, otherwise even if an AS filters invalid RPKI routes its users would still be able to find a route to that IP.
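The covering-prefix caveat can be illustrated with a short Python sketch. It assumes a hypothetical routing table expressed as `(prefix, rpki_state)` pairs and checks whether a target address is still reachable once RPKI-invalid routes are dropped. The function name, the state labels, and the documentation prefixes are illustrative assumptions.

```python
from ipaddress import ip_address, ip_network
from typing import Iterable, Tuple


def reachable_after_filtering(target_ip: str, routes: Iterable[Tuple[str, str]]) -> bool:
    """Return True if some non-invalid route still covers target_ip.

    Each route is a (prefix, rpki_state) pair, where rpki_state is one of
    'valid', 'invalid', or 'not-found' (labels assumed for this sketch).
    """
    addr = ip_address(target_ip)
    for prefix, state in routes:
        net = ip_network(prefix)
        if state == "invalid":
            continue  # a filtering AS drops this route
        if addr.version == net.version and addr in net:
            return True  # a less specific route still leads to the address
    return False


# Only the invalid /24 exists: a filtering AS has no route left.
only_invalid = [("203.0.113.0/24", "invalid")]

# A less specific /22 with unknown RPKI state also covers the address,
# so filtering the /24 would not make the origin unreachable.
with_fallback = only_invalid + [("203.0.112.0/22", "not-found")]
```

In the second case the measurement would wrongly conclude that the AS does not filter, which is why the invalid origin must not sit under a valid or unknown less specific prefix.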

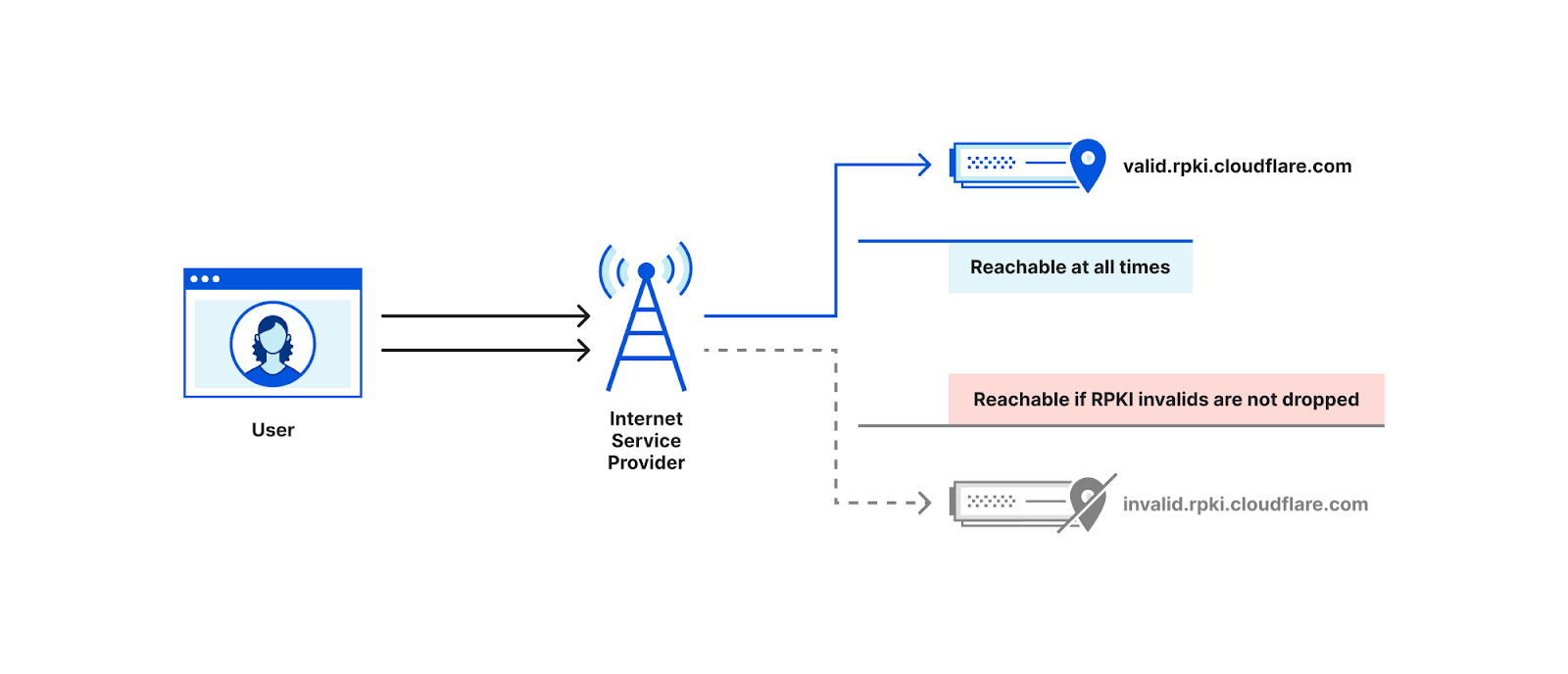

The measurement technique described here is the one implemented by Cloudflare’s isbgpsafeyet.com website, allowing end users to assess whether or not their ISPs have deployed BGP ROV.

The isbgpsafeyet.com website itself doesn’t submit any data back to Cloudflare, but recently we started measuring whether end users’ browsers can successfully connect to invalid RPKI origins when ROV is present. We use the same mechanism as is used for global performance data [4]. In particular, every measurement session (an individual end user at some point in time) attempts a request to both valid.rpki.cloudflare.com, which should always succeed as it’s RPKI-valid, and invalid.rpki.cloudflare.com, which is RPKI-invalid and should fail when the user’s ISP uses ROV.
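A single measurement session of this kind could be sketched as follows. The two hostnames come from the text above; the `https://` scheme, the timeout, the function names, and the three outcome labels are assumptions made for this example. The real probes run in end users' browsers, not in a Python script.

```python
import urllib.error
import urllib.request

# Hostnames from the text; the scheme is an assumption of this sketch.
VALID_URL = "https://valid.rpki.cloudflare.com"
INVALID_URL = "https://invalid.rpki.cloudflare.com"


def can_fetch(url: str, timeout: float = 5.0) -> bool:
    """Return True if an HTTP response of any status came back from url."""
    try:
        with urllib.request.urlopen(url, timeout=timeout):
            return True
    except urllib.error.HTTPError:
        return True   # an error status still proves the origin was reachable
    except OSError:
        return False  # timeouts, DNS failures, unreachable routes


def classify_session(valid_ok: bool, invalid_ok: bool) -> str:
    """Interpret one session's pair of probe results."""
    if not valid_ok:
        # Without the control request we cannot blame ROV for a failure.
        return "inconclusive"
    return "not-filtering" if invalid_ok else "filtering"


def run_session(fetch=can_fetch) -> str:
    """Probe both origins and classify the result."""
    return classify_session(fetch(VALID_URL), fetch(INVALID_URL))
```

Passing the fetch function as a parameter keeps the classification testable without network access. A single `filtering` result is only weak evidence, since transient network issues can also make the second request fail.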

This allows us to have continuous and up-to-date measurements from hundreds of thousands of browsers on a daily basis, and develop a greater understanding of the state of ROV deployment.

The state of global ROV deployment

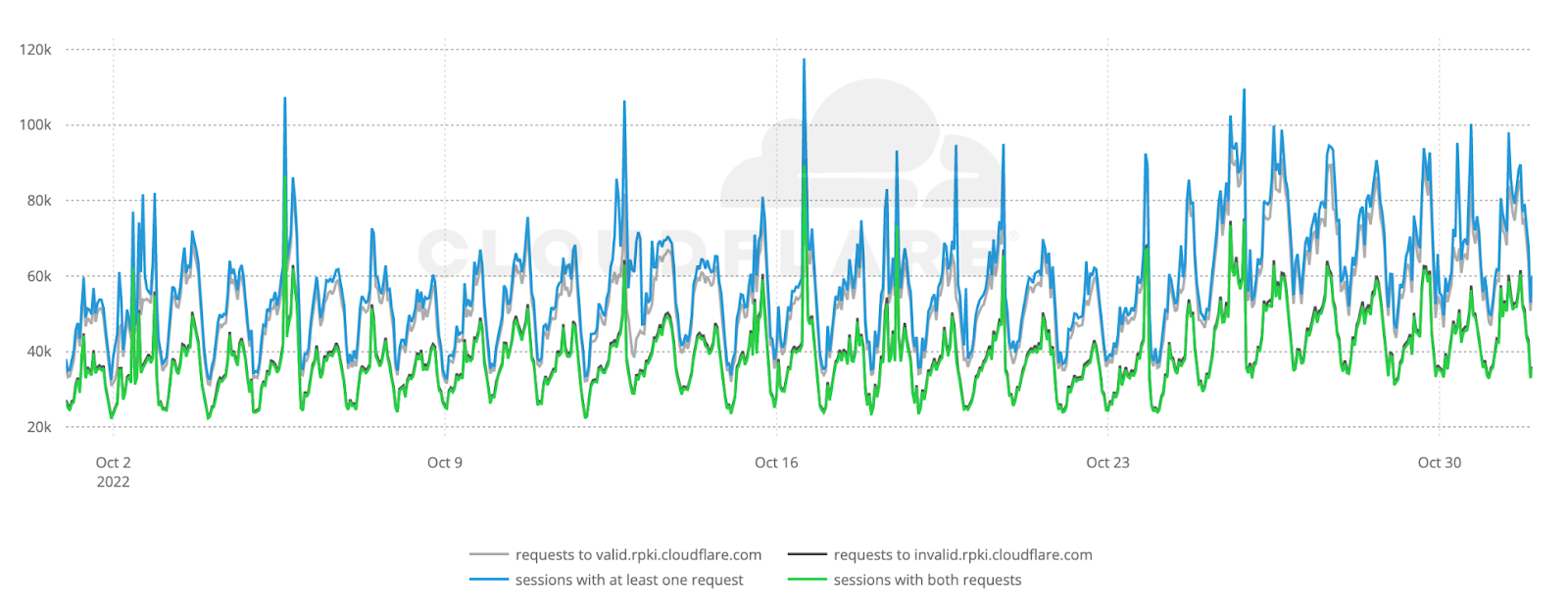

The figure below shows the raw number of ROV probe requests per hour during October 2022 to valid.rpki.cloudflare.com and invalid.rpki.cloudflare.com. In total, we observed 69.7 million successful probes from 41,531 ASNs.

Based on APNIC’s estimates on the number of end users per ASN, our weighted [5] analysis covers 96.5% of the world’s Internet population. As expected, the number of requests follows a diurnal pattern which reflects established user behavior in daily and weekly Internet activity [6].

We can also see that the number of successful requests to valid.rpki.cloudflare.com (gray line) closely follows the number of sessions that issued at least one request (blue line), which works as a smoke test for the correctness of our measurements.

As we don’t store the IP addresses that contribute measurements, we don’t have any way to count individual clients and large spikes in the data may introduce unwanted bias. We account for that by capturing those instants and excluding them.

Overall, we estimate that out of the four billion Internet users, only 261 million (6.5%) are protected by BGP Route Origin Validation, but the true state of global ROV deployment is more subtle than this.

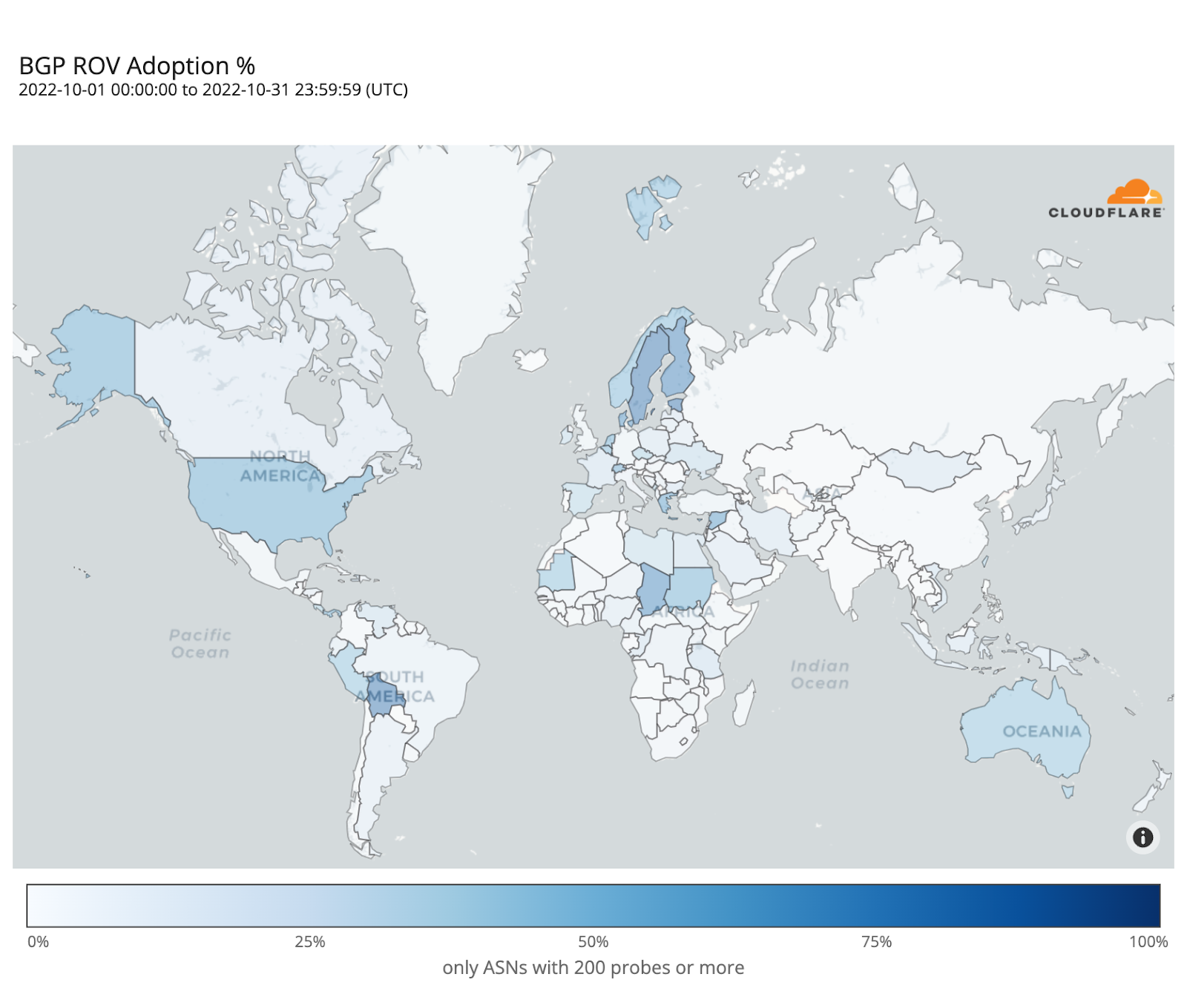

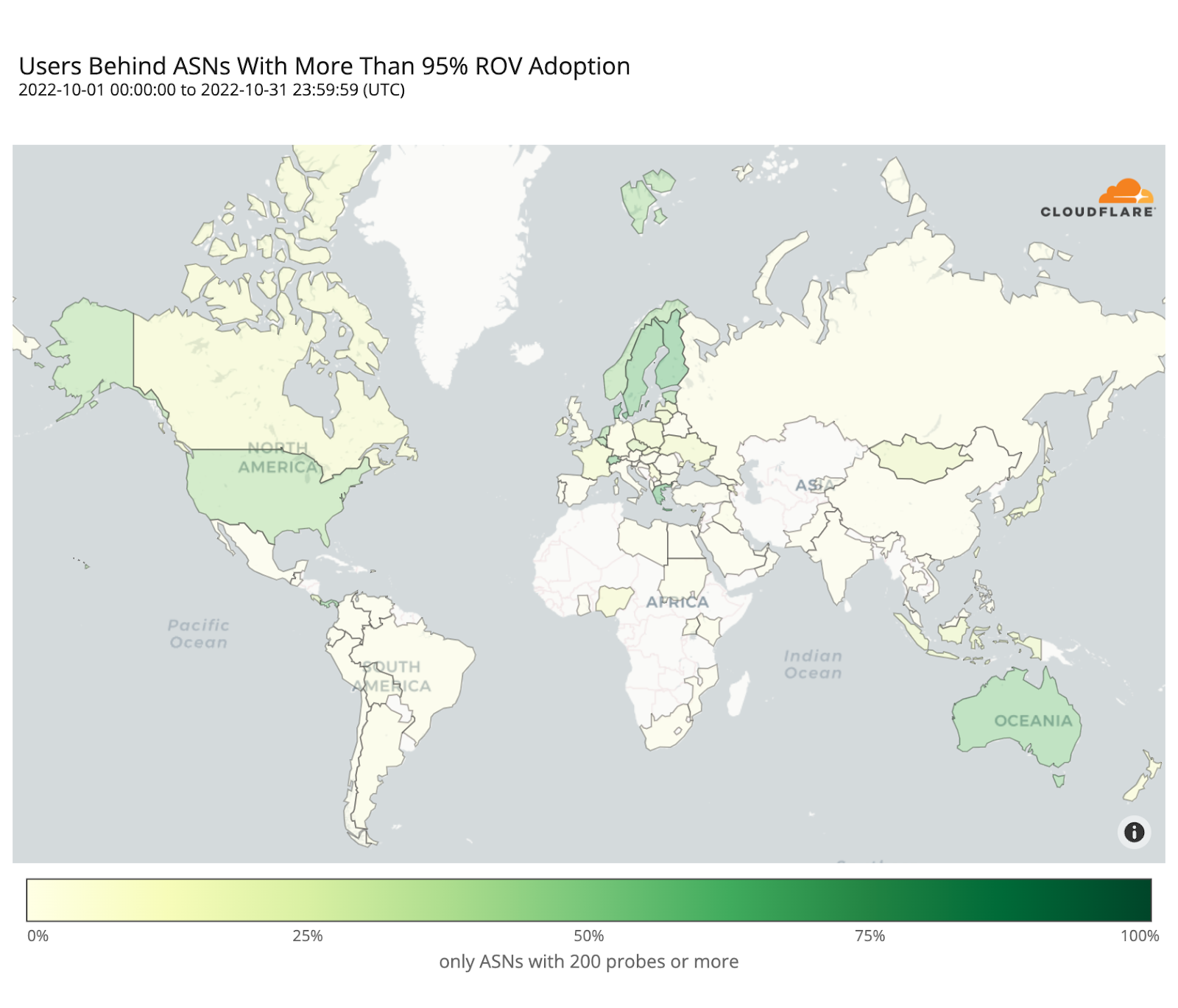

The following map shows the fraction of dropped RPKI-invalid requests from ASes with over 200 probes over the month of October. It depicts how far along each country is in adopting ROV but doesn’t necessarily represent the fraction of protected users in each country, as we will discover.

Sweden and Bolivia appear to be the countries with the highest level of adoption (over 80%), while only a few other countries have crossed the 50% mark (e.g. Finland, Denmark, Chad, Greece, the United States).

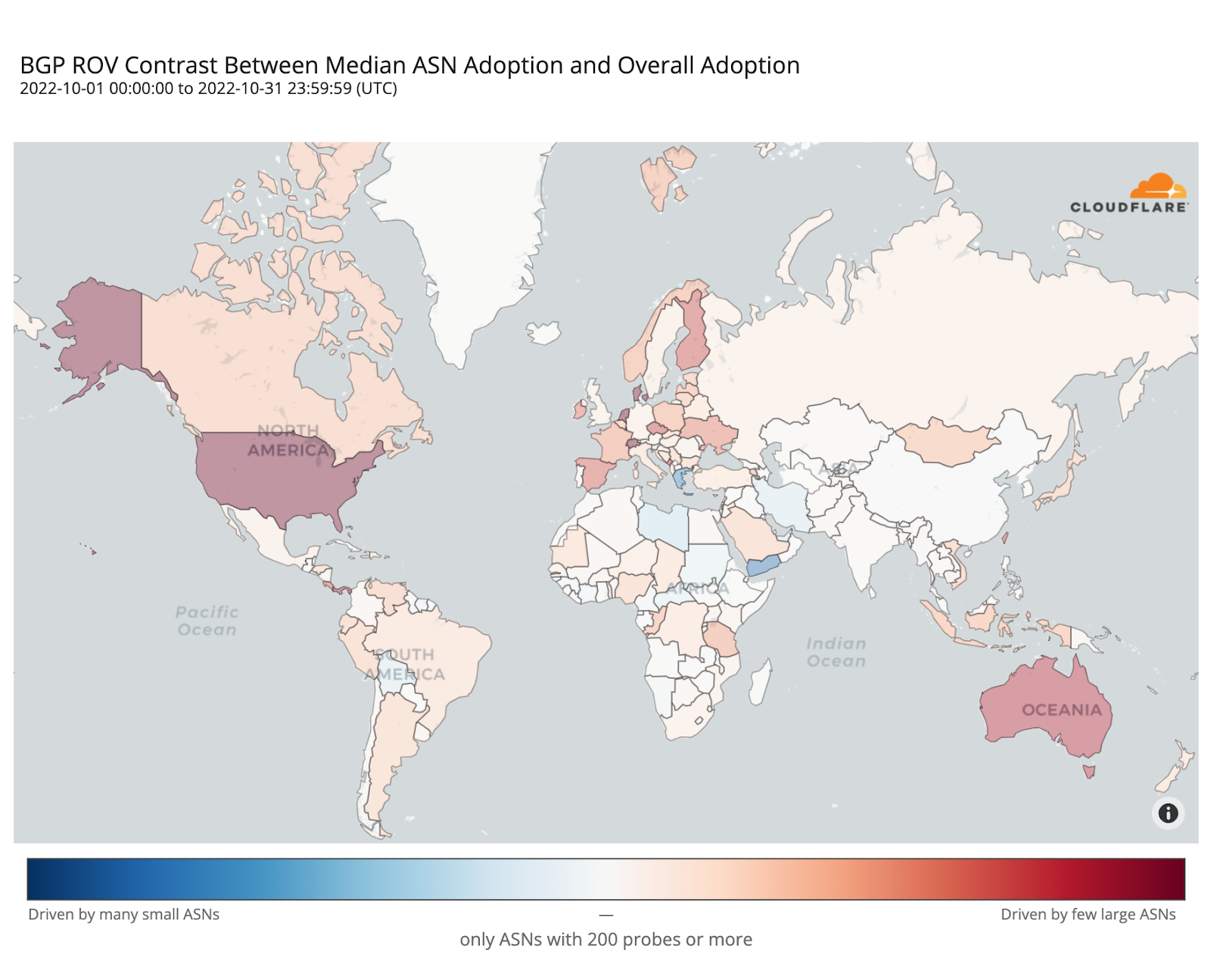

ROV adoption may be driven by a few ASes hosting large user populations, or by many ASes hosting small user populations. To understand such disparities, the map below plots the contrast between overall adoption in a country (as in the previous map) and median adoption over the individual ASes within that country. Countries with stronger reds have relatively few ASes deploying ROV with high impact, while countries with stronger blues have more ASes deploying ROV but with lower impact per AS.

In the Netherlands, Denmark, Switzerland, or the United States, adoption appears mostly driven by their larger ASes, while in Greece or Yemen it’s the smaller ones that are adopting ROV.
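To make the contrast between the two measures concrete, here is a small Python sketch. It assumes the contrast is the simple difference between a country's overall drop rate and the median of its per-AS drop rates. The text does not state the exact formula behind the map, so this difference, the function name, and the sample numbers are all illustrative assumptions.

```python
from statistics import median
from typing import Sequence, Tuple


def adoption_contrast(as_stats: Sequence[Tuple[int, int]]) -> float:
    """Overall adoption minus median per-AS adoption for one country.

    as_stats holds one (invalid_dropped, invalid_total) pair per AS.
    A positive result means a few large ASes drive adoption; a negative
    result means many small ASes adopt while larger ones do not.
    """
    total_dropped = sum(dropped for dropped, _ in as_stats)
    total_probes = sum(total for _, total in as_stats)
    overall = total_dropped / total_probes
    per_as = [dropped / total for dropped, total in as_stats]
    return overall - median(per_as)


# One large AS filters everything, two small ASes filter nothing.
few_large = [(9000, 9000), (0, 500), (0, 500)]

# The large AS filters nothing, two small ASes filter everything.
many_small = [(0, 9000), (500, 500), (500, 500)]
```

With these toy numbers, `few_large` yields a contrast of about +0.9 and `many_small` about -0.9, matching the two ends of the color scale described above.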

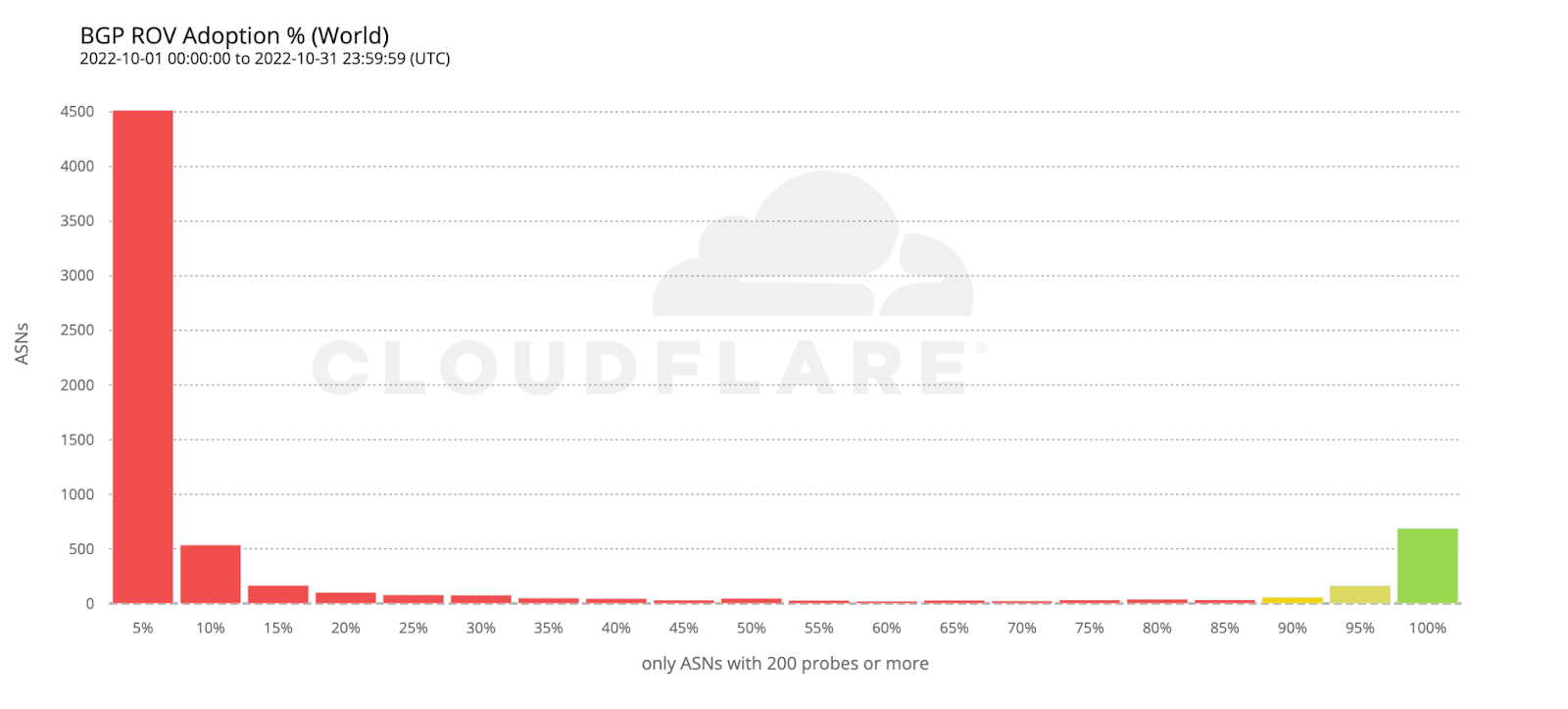

The following histogram summarizes the worldwide level of adoption for the 6,765 ASes covered by the previous two maps.

Most ASes either don’t validate at all, or have close to 100% adoption, which is what we’d intuitively expect. However, it’s interesting to observe that there are small numbers of ASes all across the scale. ASes that exhibit a partial RPKI-invalid drop rate compared to total requests may either implement ROV partially (on some, but not all, of their BGP routers), or appear as dropping RPKI invalids due to ROV deployment by other ASes in their upstream path.

To estimate the number of users protected by ROV we only considered ASes with an observed adoption above 95%, as an AS with an incomplete deployment still leaves its users vulnerable to route leaks from its BGP peers.

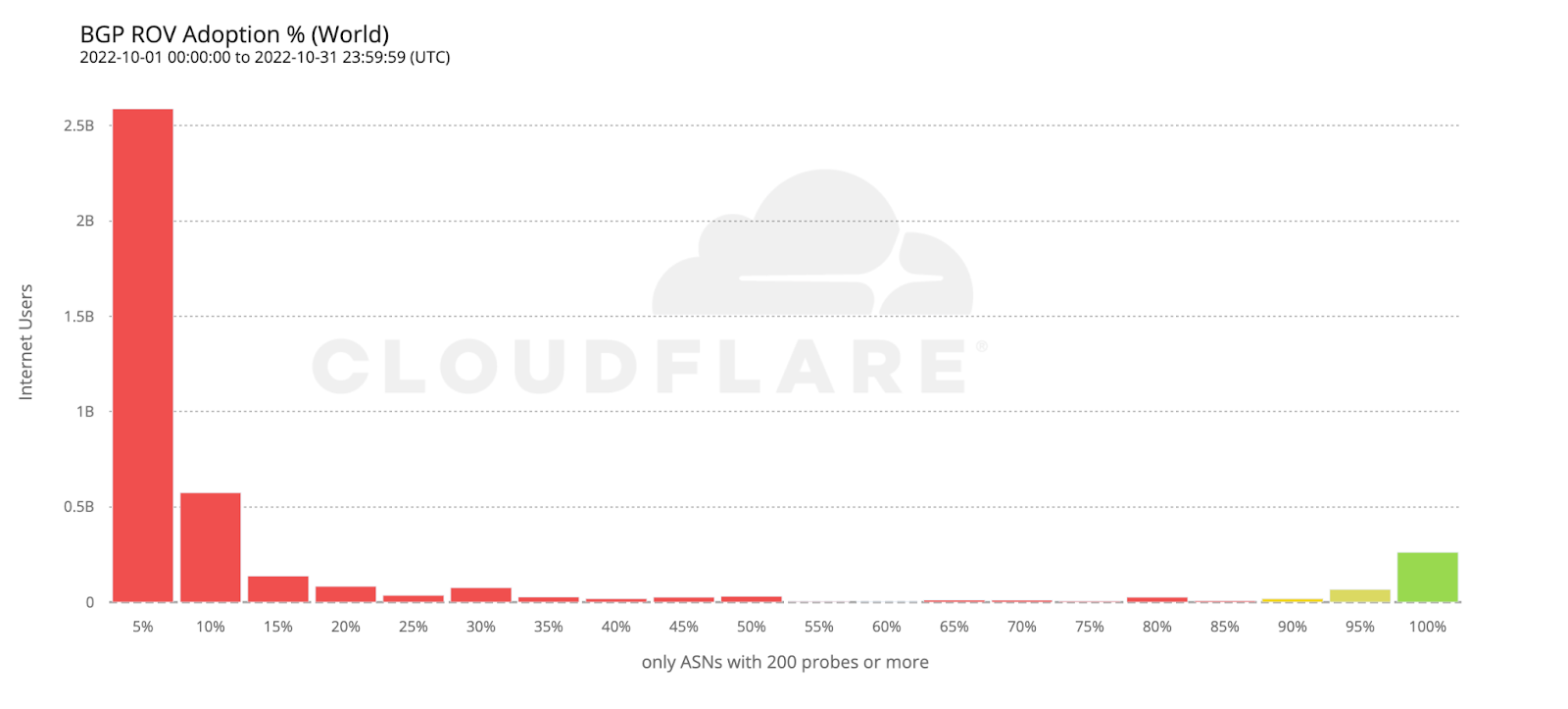

If we take the previous histogram and summarize by the number of users behind each AS, the green bar on the right corresponds to the 261 million users currently protected by ROV according to the above criteria (686 ASes).
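The selection criteria can be sketched in Python as follows. The 95% adoption threshold and the 200-probe minimum come from the text; the record layout, field names, and sample values are hypothetical, and the per-AS user counts stand in for whatever population estimate is used.

```python
from typing import Iterable, Mapping


def protected_users(
    as_records: Iterable[Mapping[str, int]],
    min_probes: int = 200,
    threshold: float = 0.95,
) -> int:
    """Sum the users behind ASes whose observed ROV adoption exceeds threshold.

    Each record needs 'users', 'invalid_total' (probes sent to the
    RPKI-invalid origin) and 'invalid_dropped' (probes that failed).
    """
    total = 0
    for rec in as_records:
        if rec["invalid_total"] <= min_probes:
            continue  # too few probes to judge this AS
        drop_rate = rec["invalid_dropped"] / rec["invalid_total"]
        if drop_rate > threshold:
            total += rec["users"]
    return total


# Hypothetical sample: only the first AS counts as comprehensively protected.
sample = [
    {"asn": 64500, "users": 1_000_000, "invalid_total": 1000, "invalid_dropped": 990},
    {"asn": 64501, "users": 5_000_000, "invalid_total": 1000, "invalid_dropped": 600},
    {"asn": 64502, "users": 2_000_000, "invalid_total": 50, "invalid_dropped": 50},
]

# Share of protected users reported in the text: 261 million out of four billion.
reported_share = 261e6 / 4e9
```

Here the second AS is excluded for partial deployment and the third for having too few probes, so only the first AS's users are counted. The reported figures give a share of roughly 6.5%.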

Looking back at the country adoption map one would perhaps expect the number of protected users to be larger. But worldwide ROV deployment is still mostly partial, lacking larger ASes, or both. This becomes even more clear when compared with the next map, plotting just the fraction of fully protected users.

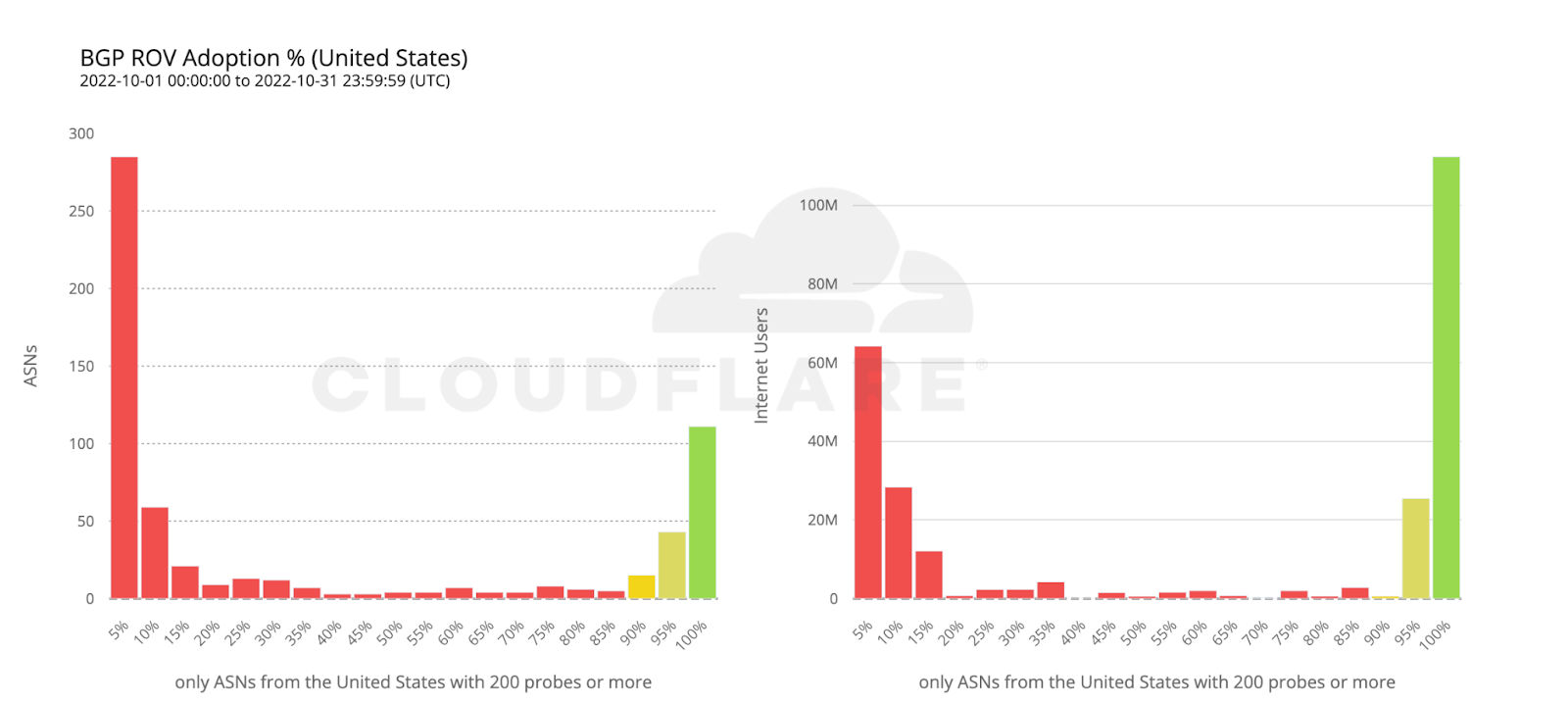

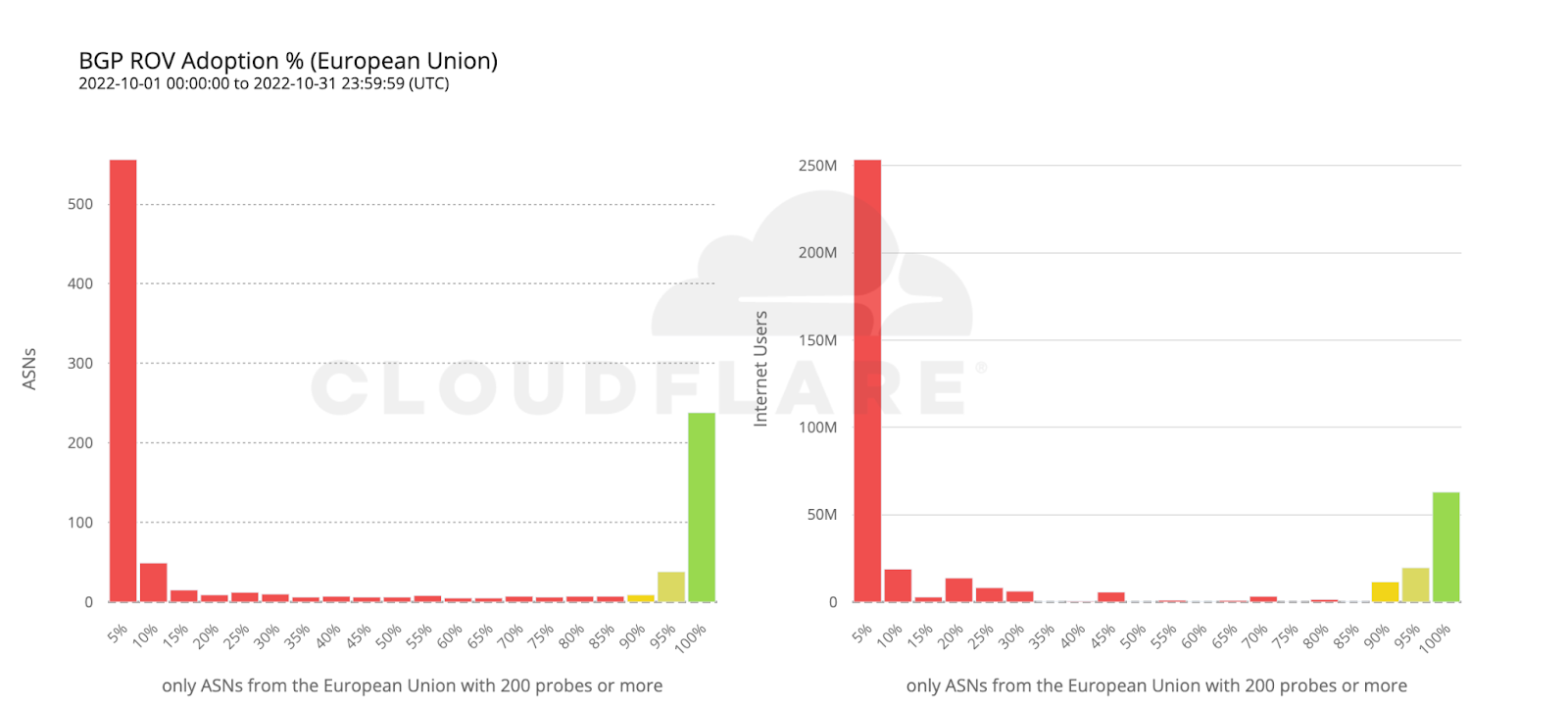

To wrap up our analysis, we look at two world economies chosen for their contrasting, almost symmetrical, stages of deployment: the United States and the European Union.

112 million Internet users are protected by 111 ASes from the United States with comprehensive ROV deployments. Conversely, more than twice as many ASes from countries making up the European Union have fully deployed ROV, but end up covering only half as many users. This can be reasonably explained by end user ASes being more likely to operate within a single country rather than span multiple countries.

Conclusion

Probe requests were performed from end user browsers and very few measurements were collected from transit providers (which have few end users, if any). Also, paths between end user ASes and Cloudflare are often very short (a nice outcome of our extensive peering) and don’t traverse upper-tier networks that they would otherwise use to reach the rest of the Internet.

In other words, the methodology used focuses on ROV adoption by end user networks (e.g. ISPs) and isn’t meant to reflect the eventual effect of indirect validation from (perhaps validating) upper-tier transit networks. While indirect validation may limit the “blast radius” of (malicious or accidental) route leaks, it still leaves non-validating ASes vulnerable to leaks coming from their peers.

As with indirect validation, an AS remains vulnerable until its ROV deployment reaches a sufficient level of completion. We chose to only consider AS deployments above 95% as truly comprehensive, and Cloudflare Radar will soon begin using this threshold to track ROV adoption worldwide, as part of our mission to help build a better Internet.

When considering only comprehensive ROV deployments, some countries such as Denmark, Greece, Switzerland, Sweden, or Australia, already show an effective coverage above 50% of their respective Internet populations, with others like the Netherlands or the United States slightly above 40%, mostly driven by a few large ASes rather than many smaller ones.

Worldwide we observe a very low effective coverage of just 6.5% over the measured ASes, corresponding to 261 million end users currently safe from (malicious and accidental) route leaks, which means there’s still a long way to go before we can declare BGP to be safe.

……

[1] https://rpki.cloudflare.com/

[2] Gilad, Yossi, Avichai Cohen, Amir Herzberg, Michael Schapira, and Haya Shulman. "Are we there yet? On RPKI’s deployment and security." Cryptology ePrint Archive (2016).

[3] Geoff Huston. “Measuring ROAs and ROV”. https://blog.apnic.net/2021/03/24/measuring-roas-and-rov/

[4] Measurements are issued stochastically when users encounter 1xxx error pages from default (non-customer) configurations.

[5] Probe requests are weighted by AS size as calculated from Cloudflare’s worldwide HTTP traffic.

[6] Quan, Lin, John Heidemann, and Yuri Pradkin. "When the Internet sleeps: Correlating diurnal networks with external factors." In Proceedings of the 2014 Conference on Internet Measurement Conference, pp. 87-100. 2014.

The latest from Cloudflare’s seventeen Employee Resource Groups

Post Syndicated from Sofia Good original https://blog.cloudflare.com/the-latest-from-cloudflares-seventeen-employee-resource-groups/

In this blog post, we’ll highlight a few stories from some of our 17 Employee Resource Groups (ERGs), including the most recent, Persianflare. But first, let me start with a personal story.

Do you remember being in elementary school and sitting in a classroom with about 30 other students when the teacher was calling on your classmates to read out loud from a book? The opportunity to read out loud was an exciting moment for many of my peers; one that made them feel proud of themselves. I, on the other hand, was frozen, in a state of panic, worried that I wouldn’t be able to sound out a word or completely embarrass myself by stuttering. I would practice reading the next paragraph in hopes that I wouldn’t mess up when I was called on. What I didn’t know at the time was that I was dyslexic, and I could barely read, especially out loud to a large group of people.

That is where I began to know the feeling of isolation. This feeling compounded year after year, when I wasn’t able to perform the way my peers did. My isolation prevailed from elementary school to middle school, through high school and even into college.

In college, I found a community that changed everything called Eye to Eye – a national non-profit organization that provides mentorship programs for students with learning disabilities. I attended one of their conferences with 200 other students. It was a profound moment when I realized that everyone in the room shared the experience of anxiety and fear around learning. Joining this community made me feel that I was not alone. Community for me is a group of people who have shared experience. Community allowed me to see my learning disability as an asset, not a deficit. This is what I think the author, Nilofer Merchant, meant in the 11 Rules for Creating Value in the Social Era, when she said “The social object that unites people isn’t a company or a product; the social object that most unites people is a shared value or purpose.”

When I came to work at Cloudflare, I decided to become an ERG leader for Flarability, which provides a space for discussing disability and accessibility at Cloudflare. The same deep sense of community that I felt at Eye to Eye was available to me when I joined Flarability.

Globally, 85% of the company participates in ERGs and this year alone they hosted over 54 initiatives and events. As the pandemic persisted over the last year, Cloudflare remained a hybrid workforce which posed many challenges for our company culture. ERGs had to rethink the way they foster connection. Today, we are highlighting Persianflare, Afroflare, Greencloud and Desiflare because they have taken different approaches to building community.

Persianflare is our newest ERG, and in a very short amount of time, ERG Leader Shadnaz Nia has already brought together an entire community of folks who have created lasting impact. Here’s how…

Persianflare: Amplify the voice of Iranian people

Shadnaz Nia, Solutions Engineer, Toronto

“Persianflare is the newest ERG which strives to nurture a community for all Persians and allies, where we perpetuate freedom of speech, proudly celebrate rich Persian heritage, appending growth and Persian convention. We have assembled with a desire to amplify the voice of the Iranian people, and bring awareness to human rights violations there, during unprecedented and unbearable times in history.

Cloudflare’s mission is to build a better Internet and one of our initiatives this year was to support building a better Internet that provides a more private Internet for the people of Iran. At Cloudflare, we are fortunate to have executive leadership that takes action and works tirelessly to provide more uncensored and accessible Internet in countries such as Iran. We’ve done this by offering WARP solutions in native Farsi language for all Persian-speaking users, empowering them to access uncensored information and news, in turn, strengthening the voice of Persian people living in Iran.

This project was accidentally routed to my team by the Product Team when they needed a translator. At the time, myself and other Persian employees were feeling powerless as a result of Mahsa Amini being murdered in the custody of the Iranian morality police. This was the birth of Persianflare. Through this project, I started collaborating with other employees and discovered that many of my colleagues really cared about this cause and wanted to help. Throughout the course of this initiative, we found more Persian employees in other regions and let them know about our progress. Words cannot describe how I felt when the app was released. It was one of the purest moments in my life. By bringing together Persian employees, allies of Persianflare, and the Product Team, this community was able to create real change for the people of Iran. To me, that is the power of community.

We plan to circle together to celebrate the global Human Rights Day on December 10, 2022, to continue discussing and growing our community. As the newest ERG, we are just getting started.”

Afroflare: Sharing experiences

Sieh Johnson, Engineering Manager, Austin

Trudi Bawah-Atalia, Recruiting Coordinator, London

“Afroflare is a community for People of African Diaspora to build connections while learning from each others’ lives, perspectives and experiences. Our initiatives in 2022 centered on creating and cultivating community by supporting and echoing Black voices and achievements within and outside of Cloudflare. Earlier in the year, given that the pandemic was slowing down but still active, we provided curated content that would allow members and allies to create, contribute and learn about cultures of the African diaspora at their own pace.

For our celebration of US Black History Month, we aggregated lists of Black-owned businesses and non-profit communities in various cities to support. We also hosted internal chats called “The Plug,” which highlight the immense talent of our members at Cloudflare. Lastly, Afroflare worked with allies to present an Allyship workshop called “Celebrating Black Joy- An Upskilling Session for Allies.”

As 2022 progressed, we shifted to a hybrid of in-person and virtual events in order to foster more interaction and strengthen bonds. Our celebration of UK Black History Month included a cross-culture party in our Lisbon office. We collaborated with Latinflare & Wineflare on an International Wine tasting event featuring South African wines, and an African + Caribbean food tasting event called “Taste from Home.” We wrapped up the festivities with a Black Woman in Tech panel to discuss bias and how to navigate various obstacles faced by the BIPOC community in tech.

Virtually or in-person, this community has become a family – we laugh together, cry together, teach each other, and continue to grow together year after year. Each member’s experiences and culture are valued as we forge spaces for everyone to feel free to be authentic. Afroflare looks forward to continuing its goals of creating safe spaces, as well as educating, championing, and supporting our members and allies in 2023.”

Greencloud: A more sustainable Internet

Annika Garbers, Product Manager, Georgia

“Greencloud is a coalition of Cloudflare employees who are passionate about the environment. Our vision is to address the climate crisis through an intersectional lens and help Cloudflare become a clear leader in sustainable practices among tech companies. Greencloud was initially founded in 2019 but experienced most of its growth in membership, engagement, and activities after the pandemic started. The group became an outlet for current and new employees to connect on shared passions and channel our COVID-fueled anxieties for the world into productive climate-focused action.

Greencloud’s organizing since 2020 has primarily centered around “two weeks of action.” The first is Impact Week (happening now!), which includes projects driven by Greencloud members to help our customers build a more sustainable Internet using Cloudflare products. The second is Earth Week, scheduled around the global Earth Day celebration in April, which focuses on awareness and education. We’ve leveraged the tools available to Cloudflare employees and our community, like our blog and Cloudflare TV, to share information with a broader audience and on a wider range of topics than would be possible with in-person events. This year, publicly available Earth Week programming included sessions about Cloudflare’s sustainability focus for our hardware lifecycle, an interview with a sustainability-focused Project Galileo participant, a Policy team overview of our sustainability reporting practices, and a conversation about sustainable transportation with our People team. Covering a wide range of topics throughout our events and content created by our members not only helps everyone learn something new, it also reminds us of the importance of embracing and encouraging diverse perspectives in every community. The diversity of the Greencloud collective is a small demonstration of the reality that climate change is only successfully addressed through holistic action by people with many outlooks and skills working together.

As we embrace more flexible modes of work, the Greencloud crew is looking forward to maintaining our virtual events as well as introducing more in-person opportunities to engage with each other and our local communities. Building and maintaining deep connections with each other is key to the momentum and sustainability(!) of this work in the long term.”

Desiflare: South Asian delights

Arwa Ginwala, Solutions Engineering Manager, San Francisco

“Our goal for Desiflare is to build a sense of community among Cloudflare employees using the rich South Asian culture as a platform to bring people together. The Desiflare initiatives that had the most impact were in-person events after the two long years of pandemic. People were longing for a sense of community and belonging after months of Zoom fatigue. It was a fresh breath of air to see fellow Desis in person, across multiple Cloudflare offices. Folks hired during the pandemic got an opportunity to come visit the newly-renovated office in San Francisco. Desis enjoyed South Asian food at the Austin office for the first time since Desiflare’s inception. Diwali was celebrated in Singapore and Sydney offices, and the community in London played cricket, a sport very popular and well-loved in the South Asian community. Regardless of country of origin, gender, age or cultural beliefs, and in the presence of a competitive atmosphere, everyone shared and rejoiced with memories from their childhoods.

We realize that Desiflare members have different levels of comfort regarding meeting people in person or traveling for in-person events. But everyone wants to feel a sense of community and connection with people who share the same interests. Keeping this in mind, it was important to organize events where everyone felt included and had a chance to be part of the community based on their preferences. We met virtually for weekly chai time, monthly lunches, and are now organizing virtual jam sessions for many Desis to showcase their talent and enjoy South Asian music regardless of where they are located. The community has been most engaged on the Desiflare chat room. It has provided a platform for discussing common topics that help people feel supported. Desiflare gives a unique opportunity to employees to connect with their culture and roots regardless of their job title and team. It’s a way to network cross-functionally, and allows you to bring your whole self to work, which is one of the best things about working at Cloudflare.”

Conclusion

The ERGs at Cloudflare have helped us realize the power of community and how critical it is for hybrid work. What I have learned alongside our ERG leaders is that if we as individuals want to feel connected, understood and seen, our ERG communities are essential. You can check out all the incredible ERGs on the Life at Cloudflare page, and I encourage you to consider starting an ERG at your company.

Working to help the HBCU Smart Cities Challenge

Post Syndicated from Nikole Phillips original https://blog.cloudflare.com/working-to-help-the-hbcu-smart-cities-challenge/

Anyone who knows me knows that I am a proud graduate of an HBCU (Historically Black College or University). The HBCU Smart Cities Challenge invites all HBCUs across the United States to build technological solutions to solve real-world problems. When I learned that Cloudflare would be supporting the HBCU Smart Cities Challenge, I was on board immediately for so many personal reasons.

In addition to volunteering mentors as part of this partnership, Cloudflare offered HBCU Smart Cities the opportunity to apply for Project Galileo to protect and accelerate their online presence. Project Galileo provides free cyber security protection to free speech, public interest, and civil society organizations that are vulnerable to cyber attacks. After more than three years working at Cloudflare, I know that we can make the difference in bridging the gap in accessibility to the digital landscape by directly securing the Internet against today’s threats as well as optimizing performance, which plays a bigger role than most would think.

What is an HBCU?

A Historically Black College or University is defined as “any historically black college or university that was established prior to 1964, whose principal mission was, and is, the education of black Americans, and that is accredited by a nationally recognized accrediting agency or association determined by the Secretary of Education.” (Source: What is an HBCU? HBCU Lifestyle). I had the honor of graduating from the nation’s first degree-granting HBCU, Lincoln University of Pennsylvania.

One of the main reasons that I decided to attend an HBCU is that the available data suggests that HBCUs close the socioeconomic gap for Black students more than other higher-education institutions (Source: HBCUs Close Socioeconomic Gap, Here’s How, 2021). This is exemplified by my own experience: I was a student who came from a low-income background, and I became the first college graduate in my family. I believe it is due to HBCUs providing a united, supportive, and safe space for people from the African diaspora, which equips us to be our best.

The HBCU Smart Cities Challenge

There is a wide range of problems the HBCU Smart Cities Challenge invites students to tackle. These problems include water management in Tuskegee, AL; broadband and security access in Raleigh, NC; public health for the City of Columbia, SC; and affordable housing in Winston-Salem, NC, to name just a few. Applying skills with smart technology to real-life problems helps improve upon the existing infrastructure in these cities.

To solve these problems, the challenge brings together students at HBCUs to build smart city applications. Over several months, developers, entrepreneurs, designers, and engineers will develop tech solutions using Internet of Things technology. In October, Cloudflare presented as part of a town hall in the HBCU Smart Cities series. We encouraged local leaders to think about using historic investments in broadband buildout to lay the foundation for Smart Cities infrastructure at the same time. We described how, with solid infrastructure in place, the Smart Cities applications built on top of that infrastructure would be fast, reliable, and secure, which is a necessity for infrastructure that residents rely on.

Here are some quotes from Norma McGowan Jackson, District 1 Councilwoman of City of Tuskegee and HBCU Smart City Fellow Arnold Bhebhe:

“As the council person for District 1 in the City of Tuskegee, which represents Tuskegee University, as the Council liaison for the HBCU Smart Cities Challenge, as a Tuskegee native, and as a Tuskegee Institute, (now University) alumnae, I am delighted to be a part of this collaboration. Since the days of Dr. Booker T. Washington, the symbiotic relationship between the Institute (now University) and the surrounding community has been acknowledged as critical for both entities and this opportunity to further enhance that relationship is a sure win-win!”

– Norma McGowan Jackson, District 1 Councilwoman of City of Tuskegee

“The HBCU Smart Cities Challenge has helped me to better understand that even though we live in an unpredictable world, our ability to learn and adapt to change can make us better innovators. I’m super grateful to have the opportunity to reinforce my problem-solving, creativity, and communication skills alongside like-minded HBCU students who are passionate about making a positive impact in our community.”

– Arnold Bhebhe, Junior at Alabama State University majoring in computer science

How Cloudflare helps

Attending an HBCU was one of the best decisions I have made in my life, and my motivation was seeing what HBCU graduates go on to achieve: the first woman Vice President of the United States, Kamala Harris, is an HBCU graduate from Howard University.

The biggest honor for me is having the opportunity to build on the brilliance of these college students in this partnership, because I was in their shoes almost 25 years ago.

Further, to help protect websites associated with HBCU Smart Cities projects, Cloudflare has invited students in the program to apply for Project Galileo.

Finally, the HBCU Smart Cities Challenge is continually looking for mentors, sponsors and partnerships, as well as support and resources for the students. If you’re interested, please go here to learn more.

How Cloudflare advocates for a better Internet

Post Syndicated from Christiaan Smits original https://blog.cloudflare.com/how-cloudflare-advocates-for-a-better-internet/

We mean a lot of things when we talk about helping to build a better Internet. Sometimes, it’s about democratizing technologies that were previously only available to the wealthiest and most technologically savvy companies; sometimes it’s about protecting the most vulnerable groups from cyber attacks and online persecution. And the Internet does not exist in a vacuum.

As a global company, we see the way that the future of the Internet is affected by governments, regulations, and people. If we want to help build a better Internet, we have to make sure that we are in the room, sharing Cloudflare’s perspective in the many places where important conversations about the Internet are happening. And that is why we believe strongly in the value of public policy.

We thought this week would be a great opportunity to share Cloudflare’s principles and our theories behind policy engagement. Because at its core, a public policy approach needs to reflect who the company is through its actions and rhetoric. And as a company, we believe there is real value in helping governments understand how companies work, and helping our employees understand how governments and lawmakers work. Especially now, during a time in which many jurisdictions are passing far-reaching laws that shape the future of the Internet, from laws on content moderation, to new and more demanding regulations on cybersecurity.

Principled, Curious, Transparent

At Cloudflare, we have three core company values: we are Principled, Curious, and Transparent. By principled, we mean thoughtful, consistent, and long-term oriented about what the right course of action is. By curious, we mean taking on big challenges and understanding the why and how behind things. Finally, by transparent, we mean being clear on why and how we decide to do things both internally and externally.

Our approach to public policy aims to integrate these three values into our engagement with stakeholders. We are thoughtful when choosing the right issues to prioritize, and are consistent once we have chosen to take a position on a particular topic. We are curious about the important policy conversations that governments and institutions around the world are having about the future of the Internet, and want to understand the different points of view in that debate. And we aim to be as transparent as possible when talking about our policy stances, by, for example, writing blogs, submitting comments to public consultations, or participating in conversations with policymakers and our peers in the industry. And, for instance with this blog, we also aim to be transparent about our actual advocacy efforts.

What makes Cloudflare different?

With approximately 20 percent of websites using our service, including those that use our free tier, Cloudflare protects a wide variety of customers from cyberattacks. Our business model relies on economies of scale, and customers choosing to add products and services to our entry-level cybersecurity protections. This means our policy perspective can be broad: we are advocating for a better Internet for our customers who are Fortune 1000 companies, as well as for individual developers with hobby blogs or small business websites. It also means that our perspective is distinct: we have a business model that is unique, and therefore a perspective that often isn’t represented by others.

Strategy

We are not naive: we do not believe that a growing company can command the same attention as some of the Internet giants, or has the capacity to engage on as many issues as those bigger companies. So how do we prioritize? What’s our rule of thumb on how and when we engage?

Our starting point is to think about the policy developments that have the largest impact on our own activities. Which issues could force us to change our model? Cause significant (financial) impact? Skew incentives for stronger cybersecurity? Then we do the exercise again, this time, thinking about whether our perspective on that policy issue is dramatically different from those of other companies in the industry. Is it important to us, but we share the same perspective as other cybersecurity, infrastructure, or cloud companies? We pass. For example, while changing corporate tax rates could have a significant financial impact on our business, we don’t exactly have a unique perspective on that. So that’s off the list. But privacy? There we think we have a distinct perspective, as a company that practices privacy by design, and supports and develops standards that help ensure privacy on the Internet. And crucially: we think privacy will be critical to the future of the Internet. So on public policy ideas related to privacy we engage. And then there is our unique vantage point, derived from our global network. This often gives us important insight and data, which we can use to educate policymakers on relevant issues.

Our engagement channels

Our Public Policy team includes people who have worked in government, law firms and the tech industry before they joined Cloudflare. The informal networks, professional relationships, and expertise that they have built over the course of their careers are instrumental in ensuring that Cloudflare is involved in important policy conversations about the Internet. We do not have a Political Action Committee, and we do not make political contributions.

As mentioned, we try to focus on the issues where we can make a difference, where we have a unique interest, perspective and expertise. Nonetheless, there are many policies and regulations that could affect not only us at Cloudflare, but the entire Internet ecosystem. In order to track policy developments worldwide, and ensure that we are able to share information, we are members of a number of associations and coalitions.

Some of these advocacy groups represent a particular industry, such as software companies, or US-based technology firms, and engage with lawmakers on a wide variety of relevant policy issues for their particular sector. Other groups, in contrast, focus their advocacy on a more specific policy issue.

In addition to formal trade association memberships, we will occasionally join coalitions of companies or civil society organizations assembled for particular advocacy purposes. For example, we periodically engage with the Stronger Internet coalition, to share information about policies around encryption, privacy, and free expression around the world.

It almost goes without saying that, given our commitment to transparency as a company and entirely in line with our own ethics code and legal compliance, we fully comply with all relevant rules around advocacy in jurisdictions across the world. You can also find us in transparency registers of governmental entities, where these exist. Because we want to be transparent about how we advocate for a better Internet, today we have published an overview of the organizations we work with on our website.

[$] The intersection of shadow stacks and CRIU

Post Syndicated from original https://lwn.net/Articles/915728/

Shadow stacks are one of the methods employed to enforce control-flow integrity and thwart attackers; they are a mechanism for fine-grained, backward-edge protection. Most of the time, applications are not even aware that shadow stacks are in use. As is so often the case, though, life gets more complicated when the Checkpoint/Restore in Userspace (CRIU) mechanism is in use. Not breaking CRIU turns out to be one of the big challenges facing developers working to get user-space shadow-stack