Post Syndicated from Nivas Shankar original https://aws.amazon.com/blogs/big-data/easily-manage-your-data-lake-at-scale-using-tag-based-access-control-in-aws-lake-formation/

Thousands of customers are building petabyte-scale data lakes on AWS. Many of these customers use AWS Lake Formation to easily build and share their data lakes across the organization. As the number of tables and users increases, data stewards and administrators are looking for ways to manage permissions on data lakes easily at scale. Customers are struggling with “role explosion” and need to manage hundreds or even thousands of user permissions to control data access. For example, for an account with 1,000 resources and 100 principals, the data steward would have to create and manage up to 100,000 policy statements. Furthermore, as principals and resources are added or deleted, these policies have to be updated to keep the permissions current.

Lake Formation Tag-based access control solves this problem by allowing data stewards to create LF-tags (based on their data classification and ontology) that can then be attached to resources. Instead of specifying policies on named resources, you create policies on a smaller number of logical tags. LF-tags let you categorize and explore data based on taxonomies, which reduces policy complexity and scales permissions management: you create and manage policies with tens of logical tags instead of thousands of resources. LF-tag-based access control decouples policy creation from resource creation, which helps data stewards manage permissions on a large number of databases, tables, and columns by removing the need to update policies every time a new resource is added to the data lake. Finally, it lets you create policies before the resources they govern exist. All you have to do is tag a new resource with the right LF-tags, and the existing policies apply to it.

This post focuses on managing permissions on data lakes at scale using LF-tags in Lake Formation. When it comes to managing data lake catalog tables from AWS Glue and administering permissions in Lake Formation, data stewards within the producing accounts have functional ownership based on the functions they support, and can grant access to various consumers, external organizations, and accounts. You can now define LF-tags, associate them with resources at the database, table, or column level, and then share controlled access across analytics, machine learning (ML), and extract, transform, and load (ETL) services for consumption. LF-tags let you scale governance by replacing policy definitions on thousands of resources with a small number of logical tags.

LF-tag-based access control has three main components:

- Tag ontology and classification – Data stewards can define an LF-tag ontology based on data classification and grant access based on LF-tags to AWS Identity and Access Management (IAM) principals and SAML principals or groups

- Tagging resources – Data engineers can easily create, automate, implement, and track all LF-tags and permissions against AWS Glue catalogs through the Lake Formation API

- Policy evaluation – Lake Formation evaluates the effective permissions based on LF-tags at query time and allows access to data through consuming services such as Amazon Athena, Amazon Redshift Spectrum, Amazon SageMaker Data Wrangler, and Amazon EMR Studio, based on the effective permissions granted across multiple accounts or organization-level data shares

Solution overview

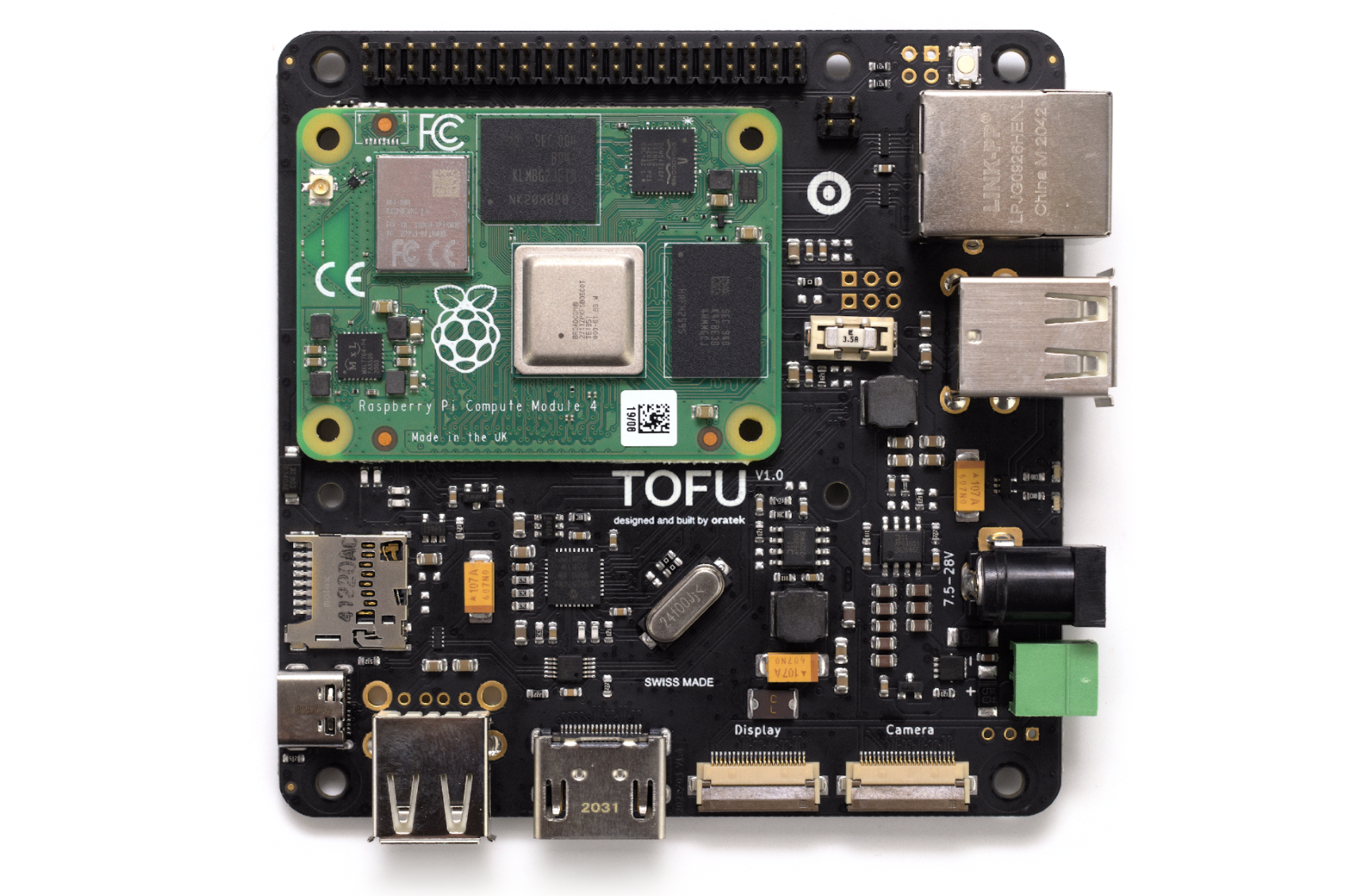

The following diagram illustrates the architecture of the solution described in this post.

In this post, we demonstrate how you can set up a Lake Formation table and create Lake Formation tag-based policies using a single account with multiple databases. We walk you through the following high-level steps:

- The data steward defines the tag ontology with two LF-tags: Confidential and Sensitive. Data with “Confidential = True” has tighter access controls. Data with “Sensitive = True” requires specific analysis from the analyst.

- The data steward assigns different permission levels to the data engineer to build tables with different LF-tags.

- The data engineer builds two databases: tag_database and col_tag_database. All tables in tag_database are configured with “Confidential = True”. All tables in col_tag_database are configured with “Confidential = False”. Some columns of the table in col_tag_database are tagged with “Sensitive = True” for specific analysis needs.

- The data engineer grants the analyst read permission on tables that match the LF-tag expressions “Confidential = True” and “Confidential = False AND Sensitive = True”.

- With this configuration, the data analyst can focus on performing analysis with the right data.

Provision your resources

This post includes an AWS CloudFormation template for a quick setup. You can review and customize it to suit your needs. The template creates three different personas to perform this exercise and copies the nyc-taxi-data dataset to an Amazon Simple Storage Service (Amazon S3) bucket in your account.

To create these resources, complete the following steps:

- Sign in to the AWS CloudFormation console in the us-east-1 Region.

- Choose Launch Stack.

- Choose Next.

- In the User Configuration section, enter passwords for the three personas: DataStewardUserPassword, DataEngineerUserPassword, and DataAnalystUserPassword.

- Review the details on the final page and select I acknowledge that AWS CloudFormation might create IAM resources.

- Choose Create.

The stack takes up to 5 minutes and creates all the required resources, including:

- An S3 bucket

- The appropriate Lake Formation settings

- The appropriate Amazon Elastic Compute Cloud (Amazon EC2) resources

- Three user personas with user ID credentials:

  - Data steward (administrator) – The lf-data-steward user has the following access:

    - Read access to all resources in the Data Catalog

    - Can create LF-tags and grant LF-tag permissions to the data engineer role, with the option to grant them on to other principals

  - Data engineer – The lf-data-engineer user has the following access:

    - Full read, write, and update access to all resources in the Data Catalog

    - Data location permissions in the data lake

    - Can associate LF-tags with Data Catalog resources

    - Can attach LF-tags to resources, which provides access to principals based on any policies created by data stewards

  - Data analyst – The lf-data-analyst user has the following access:

    - Fine-grained access to resources shared by Lake Formation Tag-based access policies

Register your data location and create an LF-tag ontology

We perform this first step as the data steward user (lf-data-steward) to verify the data in Amazon S3 and the Data Catalog in Lake Formation.

- Sign in to the Lake Formation console as lf-data-steward with the password used while deploying the CloudFormation stack.

- In the navigation pane, under Permissions, choose Administrative roles and tasks.

- For IAM users and roles, choose the user lf-data-steward.

- Choose Save to add lf-data-steward as a Lake Formation admin.

Next, we update the Data Catalog settings so that Lake Formation permissions, rather than IAM-based access control, govern access to catalog resources.

- In the navigation pane, under Data catalog, choose Settings.

- Uncheck Use only IAM access control for new databases.

- Uncheck Use only IAM access control for new tables in new databases.

- Choose Save.
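If you prefer to script the admin and catalog-settings steps above, the following is a minimal Python sketch in the style of the boto3 Lake Formation client. It assumes `lf_client` is `boto3.client("lakeformation")` with credentials allowed to change data lake settings, and that `steward_arn` is the IAM ARN of lf-data-steward (check the ARN the stack actually created). The function name `configure_data_lake_settings` is hypothetical. Setting both default-permission lists to empty lists is intended as the API counterpart of unchecking the two "Use only IAM access control" boxes.

```python
from typing import Any, Dict


def configure_data_lake_settings(lf_client: Any, steward_arn: str) -> Dict[str, Any]:
    """Make steward_arn a data lake admin and turn off the IAM-only defaults.

    lf_client is assumed to be boto3.client("lakeformation").
    """
    # put_data_lake_settings replaces the whole settings object, so start
    # from the current settings to avoid dropping existing admins.
    settings = lf_client.get_data_lake_settings()["DataLakeSettings"]
    admins = settings.get("DataLakeAdmins", [])
    if not any(a.get("DataLakePrincipalIdentifier") == steward_arn for a in admins):
        admins.append({"DataLakePrincipalIdentifier": steward_arn})
    settings["DataLakeAdmins"] = admins
    # Empty lists: "Use only IAM access control" unchecked for new databases
    # and for new tables in new databases (assumed mapping to the console).
    settings["CreateDatabaseDefaultPermissions"] = []
    settings["CreateTableDefaultPermissions"] = []
    lf_client.put_data_lake_settings(DataLakeSettings=settings)
    return settings
```

For example, `configure_data_lake_settings(boto3.client("lakeformation", region_name="us-east-1"), "arn:aws:iam::<Account-ID>:user/lf-data-steward")`. Reading the current settings first is the main design choice here, because a blind `put_data_lake_settings` could remove admins you already have.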

Next, we need to register the data location for the data lake.

- In the navigation pane, under Register and ingest, choose Data lake locations.

- For Amazon S3 path, enter s3://lf-tagbased-demo-<<Account-ID>>.

- For IAM role, leave it as the default value AWSServiceRoleForLakeFormationDataAccess.

- Choose Register location.
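The registration step can also be sketched through the API. This assumes a boto3-style Lake Formation client, that the bucket follows the template's naming (lf-tagbased-demo-<Account-ID>), and that `UseServiceLinkedRole=True` corresponds to leaving AWSServiceRoleForLakeFormationDataAccess selected. `register_data_location` is a hypothetical helper name.

```python
from typing import Any


def register_data_location(lf_client: Any, account_id: str) -> str:
    """Register the demo bucket as a data lake location; returns its ARN."""
    # Bucket name follows the CloudFormation template: lf-tagbased-demo-<Account-ID>
    resource_arn = f"arn:aws:s3:::lf-tagbased-demo-{account_id}"
    # Use the Lake Formation service-linked role, the console's default choice
    # (AWSServiceRoleForLakeFormationDataAccess).
    lf_client.register_resource(ResourceArn=resource_arn, UseServiceLinkedRole=True)
    return resource_arn
```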


Next, we create the ontology by defining an LF-tag.

- Under Permissions in the navigation pane, under Administrative roles, choose LF-Tags.

- Choose Add LF-tags.

- For Key, enter Confidential.

- For Values, add True and False.

- Choose Add LF-tag.

- Repeat the steps to create the LF-tag Sensitive with the value True.
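Defining the ontology through the API is a loop over the tag keys. A minimal sketch, assuming `lf_client` is a boto3-style Lake Formation client; `LF_TAG_ONTOLOGY` and `create_lf_tags` are illustrative names, not part of the walkthrough. LF-tag values are strings, so True and False are passed as "True" and "False".

```python
from typing import Any, Dict, List

# The tag ontology used in this walkthrough (key -> allowed values).
LF_TAG_ONTOLOGY: Dict[str, List[str]] = {
    "Confidential": ["True", "False"],
    "Sensitive": ["True"],
}


def create_lf_tags(lf_client: Any,
                   ontology: Dict[str, List[str]] = LF_TAG_ONTOLOGY) -> List[str]:
    """Create one LF-tag per key with its allowed values; returns the keys created."""
    created = []
    for key, values in ontology.items():
        lf_client.create_lf_tag(TagKey=key, TagValues=values)
        created.append(key)
    return created
```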


You have created all the necessary LF-tags for this exercise. Next, we give specific IAM principals the ability to attach newly created LF-tags to resources.

- Under Permissions in the navigation pane, under Administrative roles, choose LF-tag permissions.

- Choose Grant.

- Select IAM users and roles.

- For IAM users and roles, search for and choose the lf-data-engineer role.

- In the LF-tag permission scope section, add the key Confidential with values True and False, and the key Sensitive with value True.

- Under Permissions, select Describe and Associate for LF-tag permissions and Grantable permissions.

- Choose Grant.
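The LF-tag permission grant above can be expressed as one `grant_permissions` call per tag key on an `LFTag` resource. This sketch assumes a boto3-style Lake Formation client and that `principal_arn` is lf-data-engineer's IAM ARN; `TAG_SCOPE` and `grant_lf_tag_permissions` are hypothetical names.

```python
from typing import Any, Dict, List

# LF-tag permission scope from the console steps: both keys and all their values.
TAG_SCOPE: Dict[str, List[str]] = {"Confidential": ["True", "False"], "Sensitive": ["True"]}


def grant_lf_tag_permissions(lf_client: Any, principal_arn: str,
                             scope: Dict[str, List[str]] = TAG_SCOPE) -> int:
    """Grant Describe and Associate (both grantable) on each LF-tag key in scope."""
    for key, values in scope.items():
        lf_client.grant_permissions(
            Principal={"DataLakePrincipalIdentifier": principal_arn},
            Resource={"LFTag": {"TagKey": key, "TagValues": values}},
            Permissions=["DESCRIBE", "ASSOCIATE"],
            # Grantable permissions let lf-data-engineer pass these on.
            PermissionsWithGrantOption=["DESCRIBE", "ASSOCIATE"],
        )
    return len(scope)
```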

Next, we grant lf-data-engineer permissions to create databases in our catalog and to use the underlying S3 bucket created by AWS CloudFormation as a data location.

- Under Permissions in the navigation pane, choose Administrative roles.

- In the Database creators section, choose Grant.

- For IAM users and roles, choose the lf-data-engineer role.

- For Catalog permissions, select Create database.

- Choose Grant.

Next, we grant permissions on the S3 bucket (s3://lf-tagbased-demo-<<Account-ID>>) to the lf-data-engineer user.

- In the navigation pane, choose Data locations.

- Choose Grant.

- Select My account.

- For IAM users and roles, choose the lf-data-engineer role.

- For Storage locations, enter the S3 bucket created by the CloudFormation template (s3://lf-tagbased-demo-<<Account-ID>>).

- Choose Grant.
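The two grants above (Create database on the catalog, and data location access on the bucket) can be sketched as request payloads for `grant_permissions`. This assumes a boto3-style Lake Formation client, an IAM ARN for lf-data-engineer, and the template's bucket naming; `grant_engineer_catalog_and_location` is a hypothetical helper.

```python
from typing import Any, Dict, List


def grant_engineer_catalog_and_location(lf_client: Any, principal_arn: str,
                                        account_id: str) -> List[Dict[str, Any]]:
    """Grant Create database on the catalog and data location access on the demo bucket."""
    principal = {"DataLakePrincipalIdentifier": principal_arn}
    bucket_arn = f"arn:aws:s3:::lf-tagbased-demo-{account_id}"
    requests = [
        # Database creators: Catalog permissions -> Create database
        {"Principal": principal, "Resource": {"Catalog": {}},
         "Permissions": ["CREATE_DATABASE"]},
        # Data locations: storage location s3://lf-tagbased-demo-<Account-ID>
        {"Principal": principal, "Resource": {"DataLocation": {"ResourceArn": bucket_arn}},
         "Permissions": ["DATA_LOCATION_ACCESS"]},
    ]
    for request in requests:
        lf_client.grant_permissions(**request)
    return requests
```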


Next, we grant lf-data-engineer grantable permissions on resources associated with the LF-tag expression Confidential=True.

- In the navigation pane, choose Data permissions.

- Choose Grant.

- Select IAM users and roles.

- Choose the role lf-data-engineer.

- In the LF-tag or catalog resources section, select Resources matched by LF-Tags.

- Choose Add LF-Tag.

- Add the key Confidential with the value True.

- In the Database permissions section, select Describe for Database permissions and Grantable permissions.

- In the Table and column permissions section, select Describe, Select, and Alter for both Table permissions and Grantable permissions.

- Choose Grant.


Next, we grant lf-data-engineer grantable permissions on resources associated with the LF-tag expression Confidential=False.

- In the navigation pane, choose Data permissions.

- Choose Grant.

- Select IAM users and roles.

- Choose the role lf-data-engineer.

- Select Resources matched by LF-tags.

- Choose Add LF-tag.

- Add the key Confidential with the value False.

- In the Database permissions section, select Describe for Database permissions and Grantable permissions.

- In the Table and column permissions section, do not select anything.

- Choose Grant.


Next, we grant lf-data-engineer grantable permissions on resources associated with the LF-tag expression Confidential=False and Sensitive=True.

- In the navigation pane, choose Data permissions.

- Choose Grant.

- Select IAM users and roles.

- Choose the role lf-data-engineer.

- Select Resources matched by LF-tags.

- Choose Add LF-tag.

- Add the key Confidential with the value False.

- Choose Add LF-tag.

- Add the key Sensitive with the value True.

- In the Database permissions section, select Describe for Database permissions and Grantable permissions.

- In the Table and column permissions section, select Describe, Select, and Alter for both Table permissions and Grantable permissions.

- Choose Grant.
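The three LF-tag expression grants to lf-data-engineer follow one pattern: an `LFTagPolicy` resource per resource type (DATABASE or TABLE), with each permission also granted with the grant option. The table-driven sketch below assumes a boto3-style Lake Formation client and lf-data-engineer's IAM ARN; `ENGINEER_TAG_GRANTS` and `grant_tag_policy` are hypothetical names. An empty permission list is skipped, which mirrors leaving the table section unselected for Confidential=False.

```python
from typing import Any, Dict, List, Tuple

Expression = Dict[str, List[str]]

# (LF-tag expression, database permissions, table permissions) for lf-data-engineer.
ENGINEER_TAG_GRANTS: List[Tuple[Expression, List[str], List[str]]] = [
    ({"Confidential": ["True"]}, ["DESCRIBE"], ["DESCRIBE", "SELECT", "ALTER"]),
    ({"Confidential": ["False"]}, ["DESCRIBE"], []),
    ({"Confidential": ["False"], "Sensitive": ["True"]}, ["DESCRIBE"],
     ["DESCRIBE", "SELECT", "ALTER"]),
]


def grant_tag_policy(lf_client: Any, principal_arn: str, expression: Expression,
                     database_perms: List[str], table_perms: List[str],
                     grantable: bool = True) -> int:
    """Grant permissions on resources matched by an LF-tag expression; returns calls made."""
    tag_expression = [{"TagKey": k, "TagValues": v} for k, v in expression.items()]
    calls = 0
    for resource_type, perms in (("DATABASE", database_perms), ("TABLE", table_perms)):
        if not perms:
            continue  # nothing selected for this resource type
        lf_client.grant_permissions(
            Principal={"DataLakePrincipalIdentifier": principal_arn},
            Resource={"LFTagPolicy": {"ResourceType": resource_type,
                                      "Expression": tag_expression}},
            Permissions=perms,
            # Mirrors checking the same boxes under Grantable permissions.
            PermissionsWithGrantOption=perms if grantable else [],
        )
        calls += 1
    return calls
```

Usage is a loop: `for expression, db_perms, table_perms in ENGINEER_TAG_GRANTS: grant_tag_policy(lf, engineer_arn, expression, db_perms, table_perms)`. Within one expression, the keys are combined with AND and the listed values of a key with OR, which is why the third grant only matches resources tagged both Confidential=False and Sensitive=True.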

Create the Lake Formation databases

Now, sign in as lf-data-engineer with the password used while deploying the CloudFormation stack. We create two databases and attach LF-tags to the databases and specific columns for testing purposes.

Create your database and table for database-level access

We first create the database tag_database and the table source_data, and then attach the appropriate LF-tags.

- On the Lake Formation console, choose Databases.

- Choose Create database.

- For Name, enter tag_database.

- For Location, enter the S3 location created by the CloudFormation template (s3://lf-tagbased-demo-<<Account-ID>>/tag_database/).

- Deselect Use only IAM access control for new tables in this database.

- Choose Create database.
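As lf-data-engineer, the database can also be created through the AWS Glue API. A minimal sketch, assuming a boto3-style Glue client and the template's bucket layout; passing an empty `CreateTableDefaultPermissions` list is intended to match deselecting "Use only IAM access control for new tables in this database". `create_lake_database` is a hypothetical name.

```python
from typing import Any, Dict


def create_lake_database(glue_client: Any, name: str, account_id: str) -> Dict[str, Any]:
    """Create a Glue database under the demo bucket without IAM-only table defaults."""
    database_input = {
        "Name": name,
        # Same layout as the console step: s3://lf-tagbased-demo-<Account-ID>/<name>/
        "LocationUri": f"s3://lf-tagbased-demo-{account_id}/{name}/",
        # Empty list: new tables are governed by Lake Formation permissions
        # rather than "Use only IAM access control".
        "CreateTableDefaultPermissions": [],
    }
    glue_client.create_database(DatabaseInput=database_input)
    return database_input
```

The same helper would serve for col_tag_database later in this walkthrough, for example `create_lake_database(boto3.client("glue"), "col_tag_database", "<Account-ID>")`.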

Next, we create a new table within tag_database.

- On the Databases page, select the database tag_database.

- Choose View tables, then choose Create table.

- For Name, enter source_data.

- For Database, choose the database tag_database.

- For Data is located in, select Specified path in my account.

- For Include path, enter the path to tag_database created by the CloudFormation template (s3://lf-tagbased-demo-<<Account-ID>>/tag_database/).

- For Data format, select CSV.

- Under Upload schema, enter the following schema JSON:

[
  {"Name": "vendorid", "Type": "string"},
  {"Name": "lpep_pickup_datetime", "Type": "string"},
  {"Name": "lpep_dropoff_datetime", "Type": "string"},
  {"Name": "store_and_fwd_flag", "Type": "string"},
  {"Name": "ratecodeid", "Type": "string"},
  {"Name": "pulocationid", "Type": "string"},
  {"Name": "dolocationid", "Type": "string"},
  {"Name": "passenger_count", "Type": "string"},
  {"Name": "trip_distance", "Type": "string"},
  {"Name": "fare_amount", "Type": "string"},
  {"Name": "extra", "Type": "string"},
  {"Name": "mta_tax", "Type": "string"},
  {"Name": "tip_amount", "Type": "string"},
  {"Name": "tolls_amount", "Type": "string"},
  {"Name": "ehail_fee", "Type": "string"},
  {"Name": "improvement_surcharge", "Type": "string"},
  {"Name": "total_amount", "Type": "string"},
  {"Name": "payment_type", "Type": "string"}
]

- Choose Upload.

After uploading the schema, the table schema should look like the following screenshot.

- Choose Submit.
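The table definition can be sketched with the Glue `create_table` call, reusing the uploaded schema JSON as the column list. The storage settings here (TextInputFormat, HiveIgnoreKeyTextOutputFormat, and LazySimpleSerDe with a comma delimiter) are common choices for CSV tables and are an assumption; the console may set additional parameters. `create_csv_table` is a hypothetical helper.

```python
import json
from typing import Any, Dict


def create_csv_table(glue_client: Any, database: str, table: str,
                     location: str, schema_json: str) -> Dict[str, Any]:
    """Create an external CSV table whose columns come from the schema JSON."""
    columns = json.loads(schema_json)  # [{"Name": ..., "Type": ...}, ...]
    table_input = {
        "Name": table,
        "TableType": "EXTERNAL_TABLE",
        "Parameters": {"classification": "csv"},
        "StorageDescriptor": {
            "Columns": columns,
            "Location": location,
            "InputFormat": "org.apache.hadoop.mapred.TextInputFormat",
            "OutputFormat": "org.apache.hadoop.hive.ql.io.HiveIgnoreKeyTextOutputFormat",
            "SerdeInfo": {
                "SerializationLibrary": "org.apache.hadoop.hive.serde2.lazy.LazySimpleSerDe",
                "Parameters": {"field.delim": ","},
            },
        },
    }
    glue_client.create_table(DatabaseName=database, TableInput=table_input)
    return table_input
```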

Now we’re ready to attach LF-tags at the database level.

- On the Databases page, find and select tag_database.

- On the Actions menu, choose Edit LF-tags.

- Choose Assign new LF-tag.

- For Assigned keys, choose the Confidential LF-tag you created earlier.

- For Values, choose True.

- Choose Save.

This completes the LF-tag assignment to the tag_database database.
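Assigning an LF-tag to a database maps to `add_lf_tags_to_resource` with a `Database` resource. A sketch assuming a boto3-style Lake Formation client; `assign_database_tag` is a hypothetical name. The response can report per-tag problems in its `Failures` list, so the sketch returns that list for the caller to check.

```python
from typing import Any, Dict, List


def assign_database_tag(lf_client: Any, database: str, key: str,
                        values: List[str]) -> List[Dict[str, Any]]:
    """Attach an LF-tag to a database; tables in it inherit the tag."""
    response = lf_client.add_lf_tags_to_resource(
        Resource={"Database": {"Name": database}},
        LFTags=[{"TagKey": key, "TagValues": values}],
    )
    # An empty Failures list means every tag was attached.
    return response.get("Failures", [])
```

For this step, `assign_database_tag(lf, "tag_database", "Confidential", ["True"])`; the same call with `"col_tag_database"` and `["False"]` would cover the later database-level tag on col_tag_database.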

Create your database and table for column-level access

Now we repeat these steps to create the database col_tag_database and table source_data_col_lvl, and attach LF-tags at the column level.

- On the Databases page, choose Create database.

- For Name, enter col_tag_database.

- For Location, enter the S3 location created by the CloudFormation template (s3://lf-tagbased-demo-<<Account-ID>>/col_tag_database/).

- Deselect Use only IAM access control for new tables in this database.

- Choose Create database.

- On the Databases page, select your new database (col_tag_database).

- Choose View tables, then choose Create table.

- For Name, enter source_data_col_lvl.

- For Database, choose your new database (col_tag_database).

- For Data is located in, select Specified path in my account.

- For Include path, enter the S3 path for col_tag_database (s3://lf-tagbased-demo-<<Account-ID>>/col_tag_database/).

- For Data format, select CSV.

- Under Upload schema, enter the following schema JSON:

[
  {"Name": "vendorid", "Type": "string"},
  {"Name": "lpep_pickup_datetime", "Type": "string"},
  {"Name": "lpep_dropoff_datetime", "Type": "string"},
  {"Name": "store_and_fwd_flag", "Type": "string"},
  {"Name": "ratecodeid", "Type": "string"},
  {"Name": "pulocationid", "Type": "string"},
  {"Name": "dolocationid", "Type": "string"},
  {"Name": "passenger_count", "Type": "string"},
  {"Name": "trip_distance", "Type": "string"},
  {"Name": "fare_amount", "Type": "string"},
  {"Name": "extra", "Type": "string"},
  {"Name": "mta_tax", "Type": "string"},
  {"Name": "tip_amount", "Type": "string"},
  {"Name": "tolls_amount", "Type": "string"},
  {"Name": "ehail_fee", "Type": "string"},
  {"Name": "improvement_surcharge", "Type": "string"},
  {"Name": "total_amount", "Type": "string"},
  {"Name": "payment_type", "Type": "string"}
]

- Choose Upload.

After uploading the schema, the table schema should look like the following screenshot.

- Choose Submit to create the table.

Now you associate the Sensitive=True LF-tag to the columns vendorid and fare_amount.

- On the Tables page, select the table you created (source_data_col_lvl).

- On the Actions menu, choose Edit Schema.

- Select the column vendorid and choose Edit LF-tags.

- For Assigned keys, choose Sensitive.

- For Values, choose True.

- Choose Save.

Repeat these steps to add the Sensitive LF-tag to the fare_amount column.

- Select the column fare_amount and choose Edit LF-tags.

- Add the Sensitive key with value True.

- Choose Save.

- Choose Save as new version to save the new schema version with tagged columns.

The following screenshot shows column properties with the LF-tags updated.
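Column-level tagging uses the same `add_lf_tags_to_resource` call with a `TableWithColumns` resource, so both columns can be tagged in one request. A sketch assuming a boto3-style Lake Formation client; `assign_column_tag` is a hypothetical name.

```python
from typing import Any, Dict, List


def assign_column_tag(lf_client: Any, database: str, table: str, columns: List[str],
                      key: str, values: List[str]) -> List[Dict[str, Any]]:
    """Attach an LF-tag to specific columns of a table; returns any failures."""
    response = lf_client.add_lf_tags_to_resource(
        Resource={"TableWithColumns": {"DatabaseName": database, "Name": table,
                                       "ColumnNames": columns}},
        LFTags=[{"TagKey": key, "TagValues": values}],
    )
    return response.get("Failures", [])
```

For this step: `assign_column_tag(lf, "col_tag_database", "source_data_col_lvl", ["vendorid", "fare_amount"], "Sensitive", ["True"])`.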


Next we associate the Confidential=False LF-tag to col_tag_database. This is required for lf-data-analyst to be able to describe the database col_tag_database when logged in from Athena.

- On the Databases page, find and select col_tag_database.

- On the Actions menu, choose Edit LF-tags.

- Choose Assign new LF-tag.

- For Assigned keys, choose the Confidential LF-tag you created earlier.

- For Values, choose False.

- Choose Save.

Grant table permissions

Now we grant data analysts permissions to consume the databases tag_database and col_tag_database.

- Sign in to the Lake Formation console as lf-data-engineer.

- On the Permissions page, choose Data permissions.

- Choose Grant.

- Under Principals, select IAM users and roles.

- For IAM users and roles, choose lf-data-analyst.

- Select Resources matched by LF-tags.

- Choose Add LF-tag.

- For Key, choose Confidential.

- For Values, choose True.

- For Database permissions, select Describe.

- For Table permissions, choose Select and Describe.

- Choose Grant.

This grants the lf-data-analyst user permission to describe the database associated with the LF-tag Confidential=True (tag_database) and to select from its tables.

Next, we repeat the steps to grant data analysts permissions for the LF-tag expression Confidential=False. This LF-tag is used to describe col_tag_database and the table source_data_col_lvl when lf-data-analyst signs in to Athena, so we grant only Describe access to the resources through this LF-tag expression.

- Sign in to the Lake Formation console as lf-data-engineer.

- On the Databases page, select the database col_tag_database.

- Choose Actions, then choose Grant.

- Under Principals, select IAM users and roles.

- For IAM users and roles, choose lf-data-analyst.

- Select Resources matched by LF-tags.

- Choose Add LF-tag.

- For Key, choose Confidential.

- For Values, choose False.

- For Database permissions, select Describe.

- For Table permissions, do not select anything.

- Choose Grant.

Next, we repeat the steps to grant data analysts permissions for the LF-tag expression Confidential=False and Sensitive=True. This expression is used to describe col_tag_database and the table source_data_col_lvl at the column level when lf-data-analyst signs in to Athena.

- Sign in to the Lake Formation console as lf-data-engineer.

- On the Databases page, select the database col_tag_database.

- Choose Actions, then choose Grant.

- Under Principals, select IAM users and roles.

- For IAM users and roles, choose lf-data-analyst.

- Select Resources matched by LF-tags.

- Choose Add LF-tag.

- For Key, choose Confidential.

- For Values, choose False.

- Choose Add LF-tag.

- For Key, choose Sensitive.

- For Values, choose True.

- For Database permissions, select Describe.


- For Table permissions, select Select and Describe.

- Choose Grant.
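The three analyst grants above can be written as request payloads for `grant_permissions`. Unlike the data engineer's grants, none of them include `PermissionsWithGrantOption`, so lf-data-analyst cannot pass them on. This sketch assumes a boto3-style Lake Formation client and an IAM ARN for lf-data-analyst; the function names are hypothetical.

```python
from typing import Any, Dict, List


def _tag_policy(resource_type: str, expression: Dict[str, List[str]]) -> Dict[str, Any]:
    """Build an LFTagPolicy resource; keys are ANDed, listed values ORed."""
    return {"LFTagPolicy": {
        "ResourceType": resource_type,
        "Expression": [{"TagKey": k, "TagValues": v} for k, v in expression.items()],
    }}


def analyst_grant_requests(principal_arn: str) -> List[Dict[str, Any]]:
    principal = {"DataLakePrincipalIdentifier": principal_arn}
    confidential = {"Confidential": ["True"]}
    non_confidential = {"Confidential": ["False"]}
    sensitive = {"Confidential": ["False"], "Sensitive": ["True"]}
    specs = [
        ("DATABASE", confidential, ["DESCRIBE"]),
        ("TABLE", confidential, ["SELECT", "DESCRIBE"]),
        ("DATABASE", non_confidential, ["DESCRIBE"]),  # Describe only, no table permissions
        ("DATABASE", sensitive, ["DESCRIBE"]),
        ("TABLE", sensitive, ["SELECT", "DESCRIBE"]),
    ]
    # No PermissionsWithGrantOption: the analyst cannot re-grant these.
    return [{"Principal": principal, "Resource": _tag_policy(rt, expr), "Permissions": perms}
            for rt, expr, perms in specs]


def grant_analyst_access(lf_client: Any, principal_arn: str) -> int:
    requests = analyst_grant_requests(principal_arn)
    for request in requests:
        lf_client.grant_permissions(**request)
    return len(requests)
```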

Run a query in Athena to verify the permissions

For this step, we sign in to the Athena console as lf-data-analyst and run SELECT queries against the two tables (source_data and source_data_col_lvl). We use our S3 path as the query result location (s3://lf-tagbased-demo-<<Account-ID>>/athena-results/).

- In the Athena query editor, choose tag_database in the left panel.

- Choose the additional menu options icon (three vertical dots) next to source_data and choose Preview table.

- Choose Run query.

The query should take a few minutes to run. The following screenshot shows our query results.

The first query displays all the columns in the output because the LF-tag is associated at the database level and the source_data table automatically inherited the LF-tag from the database tag_database.

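- Run another query using col_tag_database and source_data_col_lvl.

The second query returns only the two columns tagged Sensitive=True (vendorid and fare_amount). Because the table inherits Confidential=False from col_tag_database, these columns match the expression Confidential=False AND Sensitive=True.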
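To reason about why the two queries return different columns, it can help to model tag matching explicitly. The following Python sketch is a simplified mental model, not Lake Formation's actual evaluation logic. It assumes that a table inherits its database's LF-tags, a column inherits its table's, a tag assigned at a lower level overrides an inherited value for the same key, and an expression matches when every key in it has one of the listed values. All names are hypothetical.

```python
from typing import Dict, List, Optional

Tags = Dict[str, str]              # LF-tag key -> assigned value
Expression = Dict[str, List[str]]  # LF-tag key -> allowed values


def effective_tags(database: Tags, table: Optional[Tags] = None,
                   column: Optional[Tags] = None) -> Tags:
    """Merge tags down the hierarchy; a lower level overrides an inherited key."""
    merged = dict(database)
    merged.update(table or {})
    merged.update(column or {})
    return merged


def matches(expression: Expression, tags: Tags) -> bool:
    """AND across keys, OR across the listed values of each key."""
    return all(tags.get(key) in values for key, values in expression.items())


# Tag assignments from this walkthrough.
TAG_DATABASE = {"Confidential": "True"}
COL_TAG_DATABASE = {"Confidential": "False"}
SENSITIVE_COLUMN = {"Sensitive": "True"}  # vendorid and fare_amount

# Analyst grants that carry table permissions (Select, Describe).
SELECT_EXPRESSIONS: List[Expression] = [
    {"Confidential": ["True"]},
    {"Confidential": ["False"], "Sensitive": ["True"]},
]


def analyst_can_select(tags: Tags) -> bool:
    return any(matches(expr, tags) for expr in SELECT_EXPRESSIONS)
```

Under this model, every source_data column passes through the Confidential=True grant, vendorid and fare_amount pass through the combined expression, and a column such as pulocationid matches only Confidential=False, whose grant carries no table permissions.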

As a thought experiment, you can also check to see the Lake Formation Tag-based access policy behavior on columns to which the user doesn’t have policy grants.

When you select a column of source_data_col_lvl that isn't tagged Sensitive=True, Athena returns an error. For example, you can run the following query against the column pulocationid, which has no column-level tag:

SELECT pulocationid FROM "col_tag_database"."source_data_col_lvl" LIMIT 10;
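To run these checks from code rather than the console, a minimal polling loop over the Athena API might look like the following. It assumes `athena_client` is a boto3-style Athena client created from lf-data-analyst's credentials (for example, through a named profile you configure yourself), and it uses the query result location from this section. `run_athena_query` and its `max_polls` limit are hypothetical. We'd expect a query on a column the analyst can't access to end in the FAILED state, with the error text in `StateChangeReason`.

```python
import time
from typing import Any, Callable, Optional, Tuple

TERMINAL_STATES = ("SUCCEEDED", "FAILED", "CANCELLED")


def run_athena_query(athena_client: Any, sql: str, database: str, output_location: str,
                     poll_seconds: float = 2.0, max_polls: int = 300,
                     sleep: Callable[[float], None] = time.sleep) -> Tuple[str, Optional[str]]:
    """Start a query, poll until it finishes, and return (state, reason)."""
    query_id = athena_client.start_query_execution(
        QueryString=sql,
        QueryExecutionContext={"Database": database},
        ResultConfiguration={"OutputLocation": output_location},
    )["QueryExecutionId"]
    for _ in range(max_polls):
        execution = athena_client.get_query_execution(QueryExecutionId=query_id)
        status = execution["QueryExecution"]["Status"]
        if status["State"] in TERMINAL_STATES:
            # StateChangeReason holds the error message when a query fails.
            return status["State"], status.get("StateChangeReason")
        sleep(poll_seconds)  # QUEUED or RUNNING: wait before polling again
    raise TimeoutError(f"query {query_id} did not finish after {max_polls} polls")
```

For example, `run_athena_query(athena, 'SELECT * FROM "tag_database"."source_data" LIMIT 10', "tag_database", "s3://lf-tagbased-demo-<<Account-ID>>/athena-results/")`. The `sleep` parameter is injectable so the loop can be exercised without waiting.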

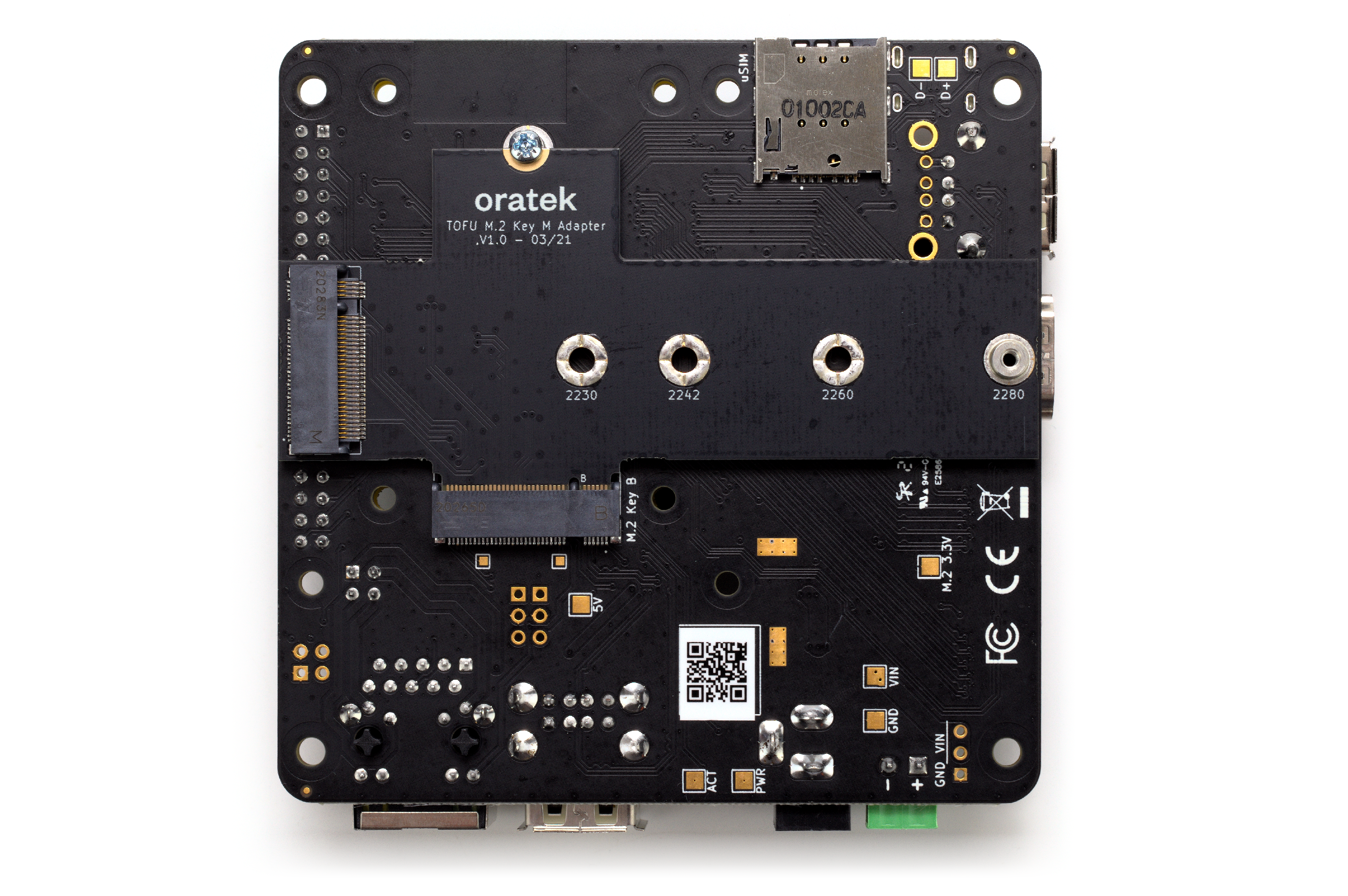

Extend the solution to cross-account scenarios

You can extend this solution to share catalog resources across accounts. The following diagram illustrates a cross-account architecture.

We describe this in more detail in a subsequent post.

Clean up

To help prevent unwanted charges to your AWS account, you can delete the AWS resources that you used for this walkthrough.

- Sign in as lf-data-engineer and delete the databases tag_database and col_tag_database.

- Sign in as lf-data-steward and revoke all the LF-tag permissions, data permissions, and data location permissions that were granted to lf-data-engineer and lf-data-analyst in this walkthrough.

- Sign in to the Amazon S3 console as the account owner (the IAM credentials you used to deploy the CloudFormation stack).

- Delete the following buckets:

  - lf-tagbased-demo-accesslogs-<acct-id>

  - lf-tagbased-demo-<acct-id>

- On the AWS CloudFormation console, delete the stack you created.

- Wait for the stack status to change to DELETE_COMPLETE.

Conclusion

In this post, we explained how to create Lake Formation Tag-based access control policies using an AWS public dataset. In addition, we explained how to query tables, databases, and columns that have Lake Formation Tag-based access policies associated with them.

You can generalize these steps to share resources across accounts. You can also use these steps to grant permissions to SAML identities. In subsequent posts, we highlight these use cases in more detail.

About the Authors

Sanjay Srivastava is a principal product manager for AWS Lake Formation. He is passionate about building products, in particular products that help customers get more out of their data. During his spare time, he loves to spend time with his family and engage in outdoor activities including hiking, running, and gardening.


Nivas Shankar is a Principal Data Architect at Amazon Web Services. He helps and works closely with enterprise customers building data lakes and analytical applications on the AWS platform. He holds a master’s degree in physics and is highly passionate about theoretical physics concepts.


Pavan Emani is a Data Lake Architect at AWS, specialized in big data and analytics solutions. He helps customers modernize their data platforms on the cloud. Outside of work, he likes reading about space and watching sports.

