Post Syndicated from Bryan Dragon original https://github.blog/2023-01-19-unlocking-security-updates-for-transitive-dependencies-with-npm/

Dependabot helps developers secure their software with automated security updates: when a security advisory is published that affects a project dependency, Dependabot will try to submit a pull request that updates the vulnerable dependency to a safe version if one is available. Of course, there’s no rule that says a security vulnerability will only affect direct dependencies—dependencies at any level of a project’s dependency graph could become vulnerable.

Until recently, Dependabot did not address vulnerabilities on transitive dependencies, that is, on the dependencies sitting one or more levels below a project’s direct dependencies. Developers would encounter an error message in the GitHub UI and they would have to manually update the chain of ancestor dependencies leading to the vulnerable dependency to bring it to a safe version.

Internally, this would show up as a failed background job due to an update-not-possible error—and we would see a lot of these errors.

Understanding the challenge

Dependabot offers two strategies for updating dependencies: scheduled version updates and security updates. With version updates, the explicit goal is to keep project dependencies updated to the latest available version, and Dependabot can be configured to widen or increase a version requirement so that it accommodates the latest version. With security updates, Dependabot tries to make the most conservative update that removes the vulnerability while respecting version requirements. In this post we’ll be looking at security updates.

As an example, let’s say we have a repository with security updates enabled that contains an npm project with a single dependency on react-scripts@^4.0.3.

Not all package managers handle version requirements in the same way, so let’s quickly refresh. A version requirement like ^4.0.3 (a “caret range”) in npm permits updates to versions that don’t change the leftmost nonzero element in the MAJOR.MINOR.PATCH semver version number. The version requirement ^4.0.3, then, can be understood as allowing versions greater than or equal to 4.0.3 and less than 5.0.0.
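The caret rule can be made concrete with a small sketch. The helper names below (`parseVersion`, `caretUpperBound`, `satisfiesCaret`) are illustrative and not part of npm; the code assumes plain MAJOR.MINOR.PATCH versions and ignores prerelease tags, which the real `semver` package handles.

```javascript
// Parse "MAJOR.MINOR.PATCH" into an array of three integers.
function parseVersion(version) {
  const parts = version.split('.').map(Number)
  if (parts.length !== 3 || parts.some((n) => !Number.isInteger(n) || n < 0)) {
    throw new Error(`Unsupported version: ${version}`)
  }
  return parts
}

// Compare two parsed versions: negative, zero, or positive.
function compareVersions(a, b) {
  for (let i = 0; i < 3; i++) {
    if (a[i] !== b[i]) return a[i] - b[i]
  }
  return 0
}

// Exclusive upper bound of a caret range: bump the leftmost nonzero
// element and zero out everything to its right.
function caretUpperBound([major, minor, patch]) {
  if (major > 0) return [major + 1, 0, 0]
  if (minor > 0) return [0, minor + 1, 0]
  return [0, 0, patch + 1]
}

// True when `version` falls inside the caret range `range` (e.g. "^4.0.3").
function satisfiesCaret(version, range) {
  const lower = parseVersion(range.replace(/^\^/, ''))
  const candidate = parseVersion(version)
  return (
    compareVersions(candidate, lower) >= 0 &&
    compareVersions(candidate, caretUpperBound(lower)) < 0
  )
}

console.log(satisfiesCaret('4.0.3', '^4.0.3')) // true
console.log(satisfiesCaret('4.9.0', '^4.0.3')) // true
console.log(satisfiesCaret('5.0.0', '^4.0.3')) // false
console.log(satisfiesCaret('1.3.0', '^0.10.0')) // false
```

The last call shows why a requirement such as ^0.10.0 matters for this post: with a leading zero, the caret range stops at the next minor version, so it can never reach a 1.x release.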

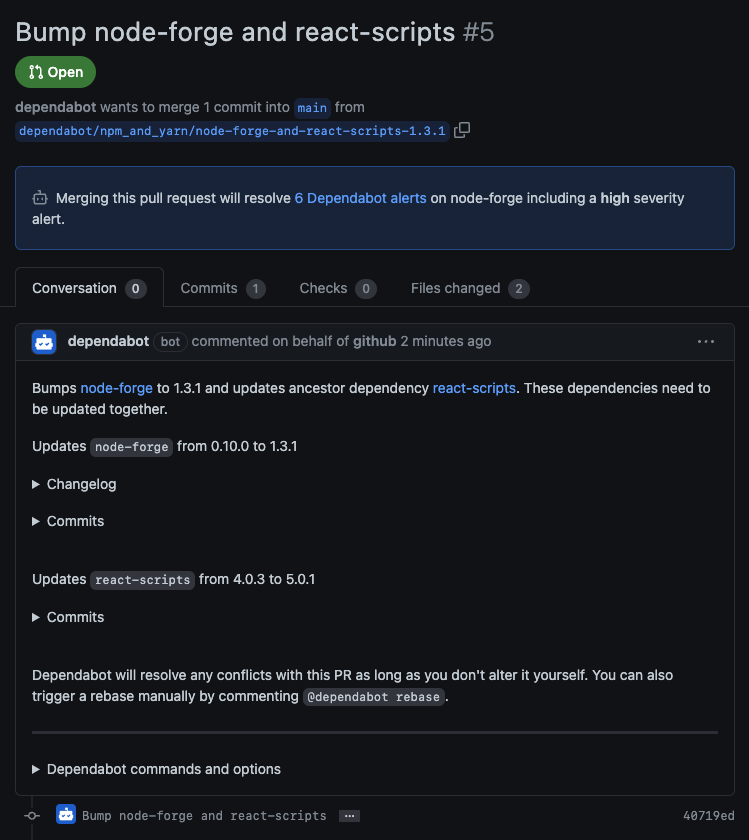

On March 18, 2022, a high-severity security advisory was published for node-forge, a popular npm package that provides tools for writing cryptographic and network-heavy applications. The advisory impacts versions earlier than 1.3.0, the patched version released the day before the advisory was published.

While we don’t have a direct dependency on node-forge, if we zoom in on our project’s dependency tree we can see that we do indirectly depend on a vulnerable version:

- react-scripts@^4.0.3 4.0.3
  - webpack-dev-server@3.11.1 3.11.1
    - selfsigned@^1.10.7 1.10.14
      - node-forge@^0.10.0 0.10.0

In order to resolve the vulnerability, we need to bring node-forge from 0.10.0 to 1.3.0, but a sequence of conflicting ancestor dependencies prevents us from doing so:

- 4.0.3 is the latest version of react-scripts permitted by our project
- 3.11.1 is the only version of webpack-dev-server permitted by react-scripts@4.0.3
- 1.10.14 is the latest version of selfsigned permitted by webpack-dev-server@3.11.1
- 0.10.0 is the latest version of node-forge permitted by selfsigned@1.10.14

This is the point at which the security update would fail with an update-not-possible error. The challenge is in finding the version of selfsigned that permits node-forge@1.3.0, the version of webpack-dev-server that permits that version of selfsigned, and so on up the chain of ancestor dependencies until we reach react-scripts.

How we chose npm

When we set out to reduce the rate of update-not-possible errors, the first thing we did was pull data from our data warehouse in order to identify the greatest opportunities for impact.

JavaScript is the most popular ecosystem that Dependabot supports, both by Dependabot enablement and by update volume. In fact, more than 80% of the security updates that Dependabot performs are for npm and Yarn projects. Given their popularity, improving security update outcomes for JavaScript projects promised the greatest potential for impact, so we focused our investigation there.

npm and Yarn both include an operation that audits a project’s dependencies for known security vulnerabilities, but currently only npm can natively go a step further and make the updates needed to resolve the vulnerabilities that it finds.

After a successful engineering spike to assess the feasibility of integrating with npm’s audit functionality, we set about productionizing the approach.

Tapping into npm audit

When you run the npm audit command, npm collects your project’s dependencies, makes a bulk request to the configured npm registry for all security advisories affecting them, and then prepares an audit report. The report lists each vulnerable dependency, the dependency that requires it, the advisories affecting it, and whether a fix is possible—in other words, almost everything Dependabot should need to resolve a vulnerable transitive dependency.

    node-forge  <=1.2.1
    Severity: high
    Open Redirect in node-forge - https://github.com/advisories/GHSA-8fr3-hfg3-gpgp
    Prototype Pollution in node-forge debug API. - https://github.com/advisories/GHSA-5rrq-pxf6-6jx5
    Improper Verification of Cryptographic Signature in node-forge - https://github.com/advisories/GHSA-cfm4-qjh2-4765
    URL parsing in node-forge could lead to undesired behavior. - https://github.com/advisories/GHSA-gf8q-jrpm-jvxq
    fix available via `npm audit fix --force`
    Will install [email protected], which is a breaking change
    node_modules/node-forge
      selfsigned  1.1.1 - 1.10.14
      Depends on vulnerable versions of node-forge
      node_modules/selfsigned
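The same information is available in machine-readable form from `npm audit --json`. The sketch below summarizes a report of that shape. The `sampleReport` object is hand-written for illustration, the `fixAvailable` target in it is a made-up placeholder, and `summarizeAudit` is a hypothetical helper rather than anything npm or Dependabot ships.

```javascript
// Hand-written sample shaped like `npm audit --json` output (npm 7+).
// The fixAvailable target is a placeholder, not real audit data.
const placeholderFix = {
  name: 'example-direct-dependency',
  version: '2.0.0',
  isSemVerMajor: true,
}

const sampleReport = {
  auditReportVersion: 2,
  vulnerabilities: {
    'node-forge': {
      name: 'node-forge',
      severity: 'high',
      isDirect: false,
      via: [
        {
          title: 'Open Redirect in node-forge',
          url: 'https://github.com/advisories/GHSA-8fr3-hfg3-gpgp',
        },
      ],
      effects: ['selfsigned'],
      range: '<=1.2.1',
      fixAvailable: placeholderFix,
    },
    selfsigned: {
      name: 'selfsigned',
      severity: 'high',
      isDirect: false,
      via: ['node-forge'],
      effects: ['webpack-dev-server'],
      range: '1.1.1 - 1.10.14',
      fixAvailable: placeholderFix,
    },
  },
}

function summarizeAudit(report) {
  return Object.values(report.vulnerabilities).map((vuln) => {
    const fix = vuln.fixAvailable
    return {
      name: vuln.name,
      range: vuln.range,
      severity: vuln.severity,
      isDirect: vuln.isDirect,
      // Objects in `via` are advisories against this package itself.
      advisories: vuln.via.filter((v) => typeof v === 'object').map((v) => v.title),
      // Strings in `via` name vulnerable packages this one depends on.
      causedBy: vuln.via.filter((v) => typeof v === 'string'),
      fixable: Boolean(fix),
      // An object (rather than `true`) means the fix needs `--force`.
      requiresForce: typeof fix === 'object' && fix !== null,
      breaking: Boolean(fix && fix.isSemVerMajor),
    }
  })
}

for (const entry of summarizeAudit(sampleReport)) {
  console.log(entry)
}
```

Note that nothing in this structure records the path from a vulnerable package up to a direct dependency, which is the first gap described next.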

There were two ways in which we had to supplement npm audit to meet our requirements:

- The audit report doesn’t include the chain of dependencies linking a vulnerable transitive dependency, which a developer may not recognize, to a direct dependency, which a developer should recognize. The last step in a security update job is creating a pull request that removes the vulnerability and we wanted to include some context that lets developers know how changes relate to their project’s direct dependencies.

- Dependabot performs security updates for one vulnerable dependency at a time. (Updating one dependency at a time keeps diffs to a minimum and reduces the likelihood of introducing breaking changes.) `npm audit` and `npm audit fix`, however, operate on all project dependencies, which means Dependabot wouldn’t be able to tell which of the resulting updates were necessary for the dependency it’s concerned with.

Fortunately, there’s a JavaScript API for accessing the audit functionality underlying the npm audit and npm audit fix commands via Arborist, the component npm uses to manage dependency trees. Since Dependabot is a Ruby application, we wrote a helper script that uses the Arborist.audit() API and can be invoked in a subprocess from Ruby. The script takes as input a vulnerable dependency and a list of security advisories affecting it and returns as output the updates necessary to remove the vulnerabilities as reported by npm.

To meet our first requirement, the script uses the audit results from Arborist.audit() to perform a depth-first traversal of the project’s dependency tree, starting with direct dependencies. This top-down, recursive approach allows us to maintain the chain of dependencies linking the vulnerable dependency to its top-level ancestor(s) (which we’ll want to mention later when creating a pull request), and its worst-case time complexity is linear in the total number of dependencies.

    function buildDependencyChains(auditReport, name) {
      const helper = (node, chain, visited) => {
        if (!node) {
          return []
        }
        if (visited.has(node.name)) {
          // We've already seen this node; end path.
          return []
        }
        if (auditReport.has(node.name)) {
          const vuln = auditReport.get(node.name)
          if (vuln.isVulnerable(node)) {
            return [{ fixAvailable: vuln.fixAvailable, nodes: [node, ...chain.nodes] }]
          } else if (node.name === name) {
            // This is a non-vulnerable version of the advisory dependency; end path.
            return []
          }
        }
        if (!node.edgesOut.size) {
          // This is a leaf node that is unaffected by the vuln; end path.
          return []
        }
        return [...node.edgesOut.values()].reduce((chains, { to }) => {
          // Only prepend current node to chain/visited if it's not the project root.
          const newChain = node.isProjectRoot ? chain : { nodes: [node, ...chain.nodes] }
          const newVisited = node.isProjectRoot ? visited : new Set([node.name, ...visited])
          return chains.concat(helper(to, newChain, newVisited))
        }, [])
      }
      return helper(auditReport.tree, { nodes: [] }, new Set())
    }
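To see what the traversal returns, here is a self-contained run against hand-built stand-ins. The `makeNode` helper and the mock `auditReport` are assumptions made for this example: they mimic only the properties the function reads (`name`, `isProjectRoot`, `edgesOut`, `tree`, `isVulnerable`, `fixAvailable`) and are not real Arborist objects. The mock report also contains an entry for node-forge only, which is a simplification.

```javascript
// Same traversal as above, condensed so this example runs on its own.
function buildDependencyChains(auditReport, name) {
  const helper = (node, chain, visited) => {
    if (!node || visited.has(node.name)) return []
    if (auditReport.has(node.name)) {
      const vuln = auditReport.get(node.name)
      if (vuln.isVulnerable(node)) {
        return [{ fixAvailable: vuln.fixAvailable, nodes: [node, ...chain.nodes] }]
      } else if (node.name === name) {
        return []
      }
    }
    if (!node.edgesOut.size) return []
    return [...node.edgesOut.values()].reduce((chains, { to }) => {
      const newChain = node.isProjectRoot ? chain : { nodes: [node, ...chain.nodes] }
      const newVisited = node.isProjectRoot ? visited : new Set([node.name, ...visited])
      return chains.concat(helper(to, newChain, newVisited))
    }, [])
  }
  return helper(auditReport.tree, { nodes: [] }, new Set())
}

// Hypothetical stand-in for a dependency tree node.
const makeNode = (name, version, deps = [], isProjectRoot = false) => ({
  name,
  version,
  isProjectRoot,
  edgesOut: new Map(deps.map((dep) => [dep.name, { to: dep }])),
})

// The dependency chain from the example project in this post.
const nodeForge = makeNode('node-forge', '0.10.0')
const selfsigned = makeNode('selfsigned', '1.10.14', [nodeForge])
const devServer = makeNode('webpack-dev-server', '3.11.1', [selfsigned])
const reactScripts = makeNode('react-scripts', '4.0.3', [devServer])
const root = makeNode('my-project', '1.0.0', [reactScripts], true)

// Hypothetical stand-in for the audit report: a Map with a `tree` property.
const auditReport = new Map([
  ['node-forge', { fixAvailable: true, isVulnerable: (node) => node.version === '0.10.0' }],
])
auditReport.tree = root

const chains = buildDependencyChains(auditReport, 'node-forge')
const printable = chains.map((c) => c.nodes.map((n) => n.name).join(' < '))
console.log(printable)
// [ 'node-forge < selfsigned < webpack-dev-server < react-scripts' ]
```

Each chain starts at the vulnerable dependency and ends at its top-level ancestor, and the project root itself is left out.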

To meet our second requirement of operating on one vulnerable dependency at a time, the script takes advantage of the fact that the Arborist constructor accepts a custom audit registry URL to be used when requesting bulk advisory data. We initialize a mock audit registry server using nock that returns only the list of advisories (in the expected format) for the dependency that was passed into the script and we tell the Arborist instance to use it.

    const arb = new Arborist({
      auditRegistry: 'http://localhost:9999',
      // ...
    })

    const scope = nock('http://localhost:9999')
      .persist()
      .post('/-/npm/v1/security/advisories/bulk')
      .reply(200, convertAdvisoriesToRegistryBulkFormat(advisories))
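The post does not show `convertAdvisoriesToRegistryBulkFormat`, so the following is only a guess at what such a conversion could look like. It assumes each input advisory has a `dependency_name` and a list of `affected_versions` ranges (both field names are hypothetical) and that the bulk endpoint responds with an object keyed by package name, each value being a list of advisories with an `id` and a `vulnerable_versions` range. A real registry response carries more fields, such as a title, URL, and severity.

```javascript
// A sketch under stated assumptions, not Dependabot's implementation.
function convertAdvisoriesToRegistryBulkFormat(advisories) {
  // Give every generated entry a distinct id so that entries for the
  // same package cannot overwrite each other.
  let nextAdvisoryId = 1
  const byPackage = {}

  for (const advisory of advisories) {
    if (!byPackage[advisory.dependency_name]) {
      byPackage[advisory.dependency_name] = []
    }
    // Emit one entry per affected version range.
    for (const range of advisory.affected_versions) {
      byPackage[advisory.dependency_name].push({
        id: nextAdvisoryId++,
        vulnerable_versions: range,
      })
    }
  }

  return byPackage
}

const advisories = [
  { dependency_name: 'node-forge', affected_versions: ['< 1.3.0'] },
]

console.log(JSON.stringify(convertAdvisoriesToRegistryBulkFormat(advisories)))
// {"node-forge":[{"id":1,"vulnerable_versions":"< 1.3.0"}]}
```

Because the mock server only ever returns entries for the one dependency passed in, the resulting audit is scoped to that dependency and its ancestors.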

We see both of these use cases—linking a vulnerable dependency to its top-level ancestor and conducting an audit for a single package or a particular set of vulnerabilities—as opportunities to extend Arborist and we’re working on integrating them upstream.

Back in the Ruby code, we parse and verify the audit results emitted by the helper script, accounting for scenarios such as a dependency being downgraded or removed in order to fix a vulnerability, and we incorporate the updates recommended by npm into the remainder of the security update job.

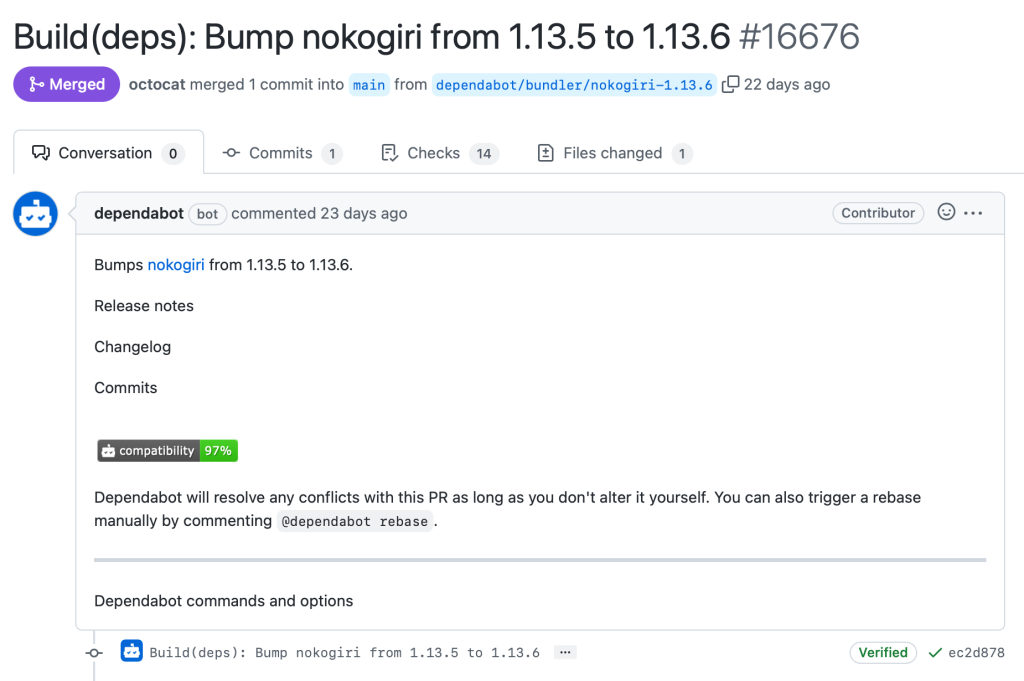

With a viable update path in hand, Dependabot is able to make the necessary updates to remove the vulnerability and submit a pull request that tells the developer about the transitive dependency and its top-level ancestor.

Caveats

When npm audit decides that a vulnerability can only be fixed by changing major versions, it requires use of the force option with npm audit fix. When the force option is used, npm will update to the latest version of a package, even if it means jumping several major versions. This breaks with Dependabot’s previous security update behavior. It also achieves our goal: to unlock conflicting dependencies in order to bring the vulnerable dependency to an unaffected version. Of course, you should still always review the changelog for breaking changes when jumping minor or major versions of a package.

Impact

We rolled out support for transitive security updates with npm in September 2022. Now, having a full quarter of data with the changes in place, we’re able to measure the impact: between Q1 2022 and Q4 2022 we saw a 42% reduction in update-not-possible errors for security updates on JavaScript projects.

If you have Dependabot security updates enabled on your npm projects, there’s nothing extra for you to do—you’re already benefiting from this improvement.

Looking ahead

I hope this post illustrates some of the considerations and trade-offs that are necessary when making improvements to an established system like Dependabot. We prefer to leverage the native functionality provided by package managers whenever possible, but as package managers come in all shapes and sizes, the approach may vary substantially from one ecosystem to the next.

We hope other package managers will introduce functionality similar to npm audit and npm audit fix that Dependabot can integrate with and we look forward to extending support for transitive security updates to those ecosystems as they do.
