Post Syndicated from Max Wagner original https://github.blog/2023-09-26-how-github-uses-github-actions-and-actions-larger-runners-to-build-and-test-github-com/

The Developer Experience (DX) team at GitHub collaborated with a number of other teams to work on moving our continuous integration (CI) system to GitHub Actions to support the development and scaling demands of our engineering team. Our goal as a team is to enable our engineers to confidently and quickly ship software. To that end, we’ve worked on providing paved paths, a suite of automated tools and applications to streamline our development, runtime platforms, and deployments. Recently, we’ve been working to make our CI experience better by leveraging the newly released GitHub feature, Actions larger runners, to run our CI.

Read on to see how we run 15,000 CI jobs within an hour across 150,000 cores of compute!

Brief history of CI at GitHub

GitHub has invested in a variety of different CI systems throughout its history. With each system, our aim has been to enhance the development experience for both GitHub engineers writing and deploying code and for engineers maintaining the systems.

However, with past CI systems we faced two challenges: scaling the system to meet the needs of our engineering team, and providing build environments that were both stable and ephemeral. Both challenges kept us from providing the optimal developer experience.

Then, GitHub released GitHub Actions larger runners. This gave us an opportunity not only to transition to a fully featured CI system, but also to develop, experience, and utilize the systems we are creating for our customers and to drive feedback to help build the product. For the GitHub DX team, this transition was a great opportunity to move away from maintaining our past CI systems while delivering a superior developer experience.

What are larger runners?

Larger runners are GitHub Actions runners that are hosted by GitHub. They are managed virtual machines (VMs) with more RAM, CPU, and disk space than standard GitHub-hosted runners. There are a variety of different machine sizes offered for the runners as well as some additional features compared to the standard GitHub-hosted runners.

Larger runners are available to GitHub Team and GitHub Enterprise Cloud customers. See the GitHub Actions documentation on larger runners to learn more.

Why did we pick larger runners?

Autoscaling and managed

Coming from previous iterations of GitHub’s CI systems, we needed the ability to create CI machines on demand to meet the fast feedback cycles needed by GitHub engineers and to scale with the rate of change of the site.

With larger runners, we maintain the ability to autoscale our CI system because GitHub will automatically create multiple instances of a runner that scale up and down to match the job demands of our engineers. An added benefit is that the GitHub DX team no longer has to worry about the scaling of the runners since all of those complexities are handled by GitHub itself!

We wanted to share some raw numbers on our current peak utilization of larger runners:

- Uses 4,500 concurrent 32-core runners

- Runs 125,000 build minutes per hour

- Queues and runs approximately 15,000 jobs within an hour

- Allocates around 150,000 cores of compute

(Beta) Custom VM image support

GitHub Actions provides runners with a lot of tools already baked in, which is sufficient and convenient for a variety of projects across the company. However, for some complex production GitHub services, the prebuilt runners did not satisfy all our requirements.

To maintain an efficient and fast CI system, the DX team needed the ability to provide machines with all the tools needed to build those production services. We didn’t want to spend extra time installing tools or compiling projects during CI jobs.

We are currently building a feature, called custom images, that lets larger runners be launched from a custom VM image. While this feature is still in beta, using custom images is a huge benefit to GitHub’s CI lifecycle for a couple of reasons.

First, custom images allow GitHub to bundle all the required software and tools needed to build and test complex production-bearing services. Anything that is unique to GitHub or one of our projects can be pre-installed on the image before a GitHub Actions workflow even starts.

Second, custom images enable GitHub to dramatically speed up our GitHub Actions workflows by acting as a bootstrapping cache for some projects. During custom image creation, we bundle a pre-built version of a project’s source code into the image. Subsequently, when the project starts a GitHub Actions workflow, it can utilize a cached version of its source code, and any other build artifacts, to speed up its build process.

The cached project source code on the custom VM image can quickly become out of date due to the rapid rate of development within GitHub. This, in turn, causes workflow durations to increase. The DX team worked with the GitHub Actions engineering team to create an API on GitHub to regularly update the custom image multiple times a day to keep the project source up to date.

In practice, this has reduced the bootstrapping time of our projects significantly. Without custom images, our workflows would take around 50 minutes from start to finish, versus the 12 minutes they take today. This is a game changer for our engineers.

We’re working on a way to offer this functionality at scale. If you are interested in custom images for your CI/CD workflows, please reach out to your account manager to learn more!

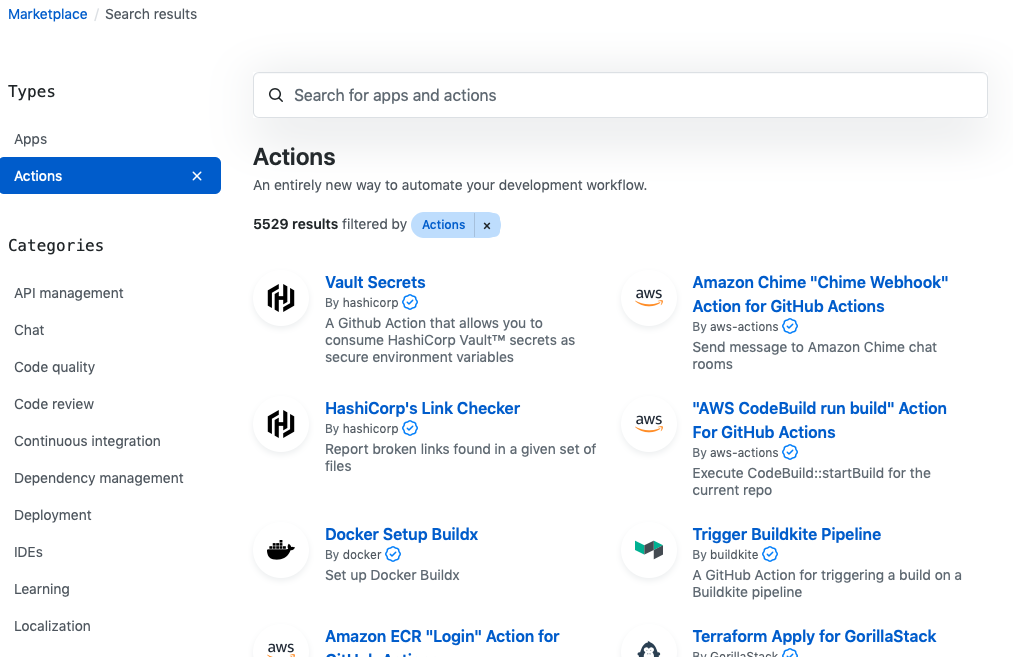

Important GitHub Actions features

There are thousands of projects at GitHub — from services that run production workloads to small tools that need to run CI to perform their daily operations. To make this a reality, GitHub leverages several important features in GitHub Actions that enable us to use the platform efficiently and securely across the company at scale.

Reusable workflows

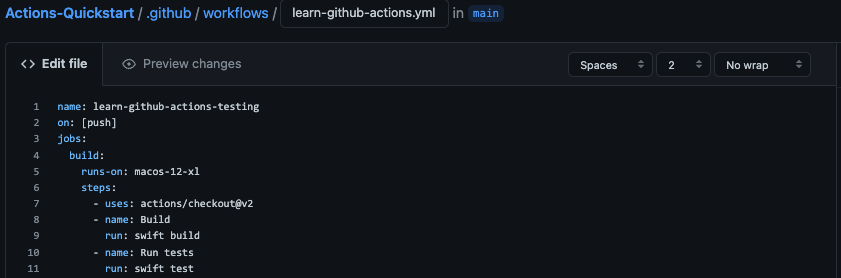

One of the DX team’s driving goals is to pave paths for all repositories to run CI without introducing unnecessary repetition across repositories. Prior to GitHub Actions, we created single job configurations that could be used across multiple projects. In GitHub Actions, this was not as easy because any repository can define its own workflows. Reusable workflows to the rescue!

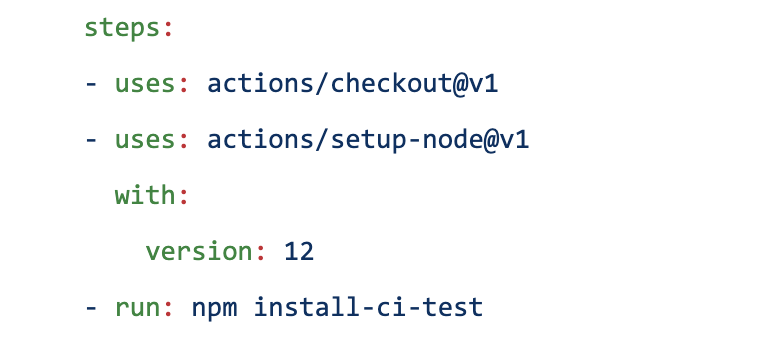

The reusable workflows feature in GitHub Actions provides a way to centrally manage a workflow in a repository that can be utilized by many other repositories in an organization. This was critical in our transition from our previous CI system to GitHub Actions. We were able to create several prebuilt workflows in a single repository, and many repositories could then use those workflows. This makes the process of adding CI to an existing or new project very much plug and play.

In our central repository hosting our reusable workflows, we can have workflows defined like:

    on:
      workflow_call:
        inputs:
          cibuild-script:
            description: 'Which cibuild script to run.'
            type: string
            required: false
            default: "script/cibuild"
        secrets:
          service-api-key:
            required: true

    jobs:
      reusable_workflow_job:
        runs-on: gh-larger-runner-medium
        name: Simple Workflow Job
        timeout-minutes: 20
        steps:
          - name: Checkout Project
            uses: actions/checkout@v3
          - name: Run cibuild script
            run: |
              bash ${{ inputs.cibuild-script }}
            shell: bash

Consuming repositories can then use the reusable workflow with just a few lines of code!

    name: my-new-project
    on:
      workflow_dispatch:
      push:

    jobs:
      call-reusable-workflow:
        uses: github/internal-actions/.github/workflows/default.yml@main
        with:
          cibuild-script: "script/cibuild-my-tests"
        secrets:
          service-api-key: ${{ secrets.SERVICE_API_KEY }}

Another great benefit of the reusable workflows feature is that the runner can be defined in the reusable workflow itself, meaning that we can guarantee all users of the workflow will run on our designated larger runner pool. Now, projects don’t need to worry about which runner they need to use!
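Because `cibuild-script` is declared with `required: false` and a default value, a consuming repository could in principle omit the `with:` block and still run on the shared runner pool. The following is a minimal sketch under that assumption; the project name is hypothetical, and the workflow path is the one from the example above.

```yaml
name: my-other-project   # hypothetical project name
on:
  push:

jobs:
  call-reusable-workflow:
    # No runs-on here: the runner is chosen inside the reusable workflow.
    uses: github/internal-actions/.github/workflows/default.yml@main
    # No `with:` block, so cibuild-script falls back to its default, "script/cibuild".
    secrets:
      service-api-key: ${{ secrets.SERVICE_API_KEY }}
```

The required secret still has to be passed explicitly, since the reusable workflow marks `service-api-key` as `required: true`.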

(Beta) Reusing previous workflow outcomes

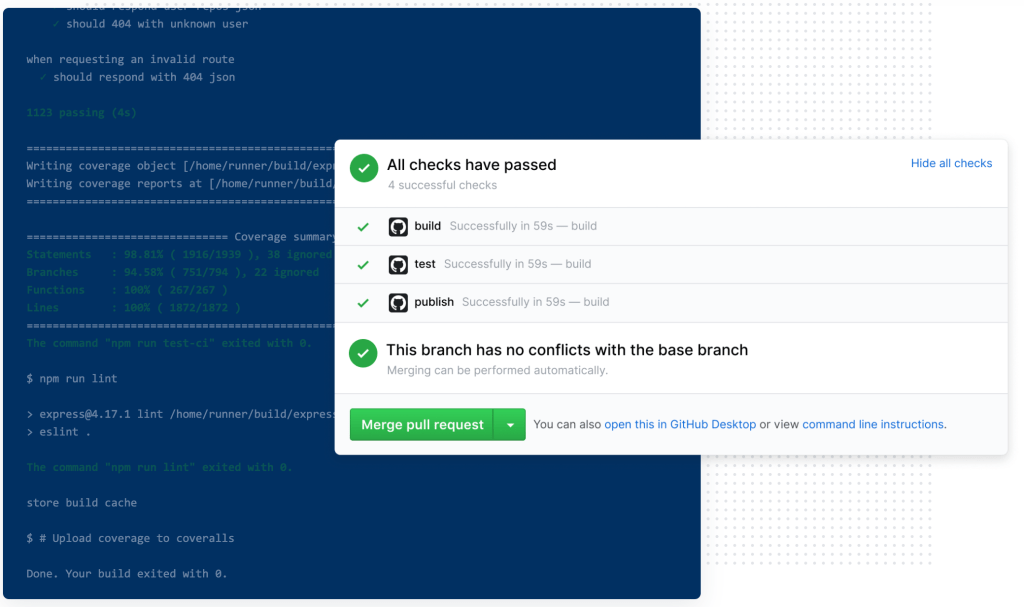

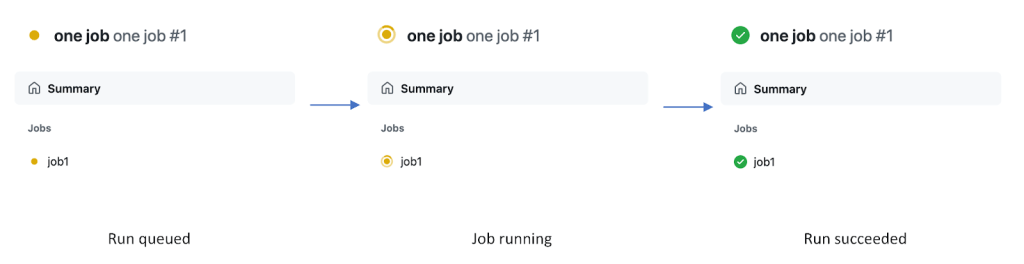

To optimize our developer experience, the DX team worked with our engineering team to create a feature for GitHub Actions that allows workflows to reuse the outcome of a previous workflow run where the outcomes would be the same.

In some cases, the file contents of a repository are exactly the same between workflow runs that run on different commits. That is, the Git tree ID for the current commit is the same as that of the previous commit (there are no file differences). In these cases, we can bypass CI checks by reusing the previous workflow outcomes, so engineers do not have to wait for CI to run again.

This feature saves GitHub engineers from running anywhere from 300 to 500 workflow runs a day!
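The tree ID comparison described above can be illustrated with plain Git commands. The sketch below is not the beta feature itself, and it does not reuse any workflow outcome. It only shows, under the assumption that "previous commit" means the first parent of `HEAD`, how two commits can be checked for identical file contents. The workflow and job names are hypothetical.

```yaml
name: compare-tree-ids   # hypothetical workflow name
on:
  push:

jobs:
  compare-trees:
    runs-on: ubuntu-latest
    steps:
      - name: Checkout with parent commit
        uses: actions/checkout@v3
        with:
          fetch-depth: 2   # fetch HEAD and its parent so both trees can be resolved

      - name: Compare Git tree IDs
        shell: bash
        run: |
          # A commit's tree ID identifies the full file contents of that commit.
          current_tree=$(git rev-parse "HEAD^{tree}")
          previous_tree=$(git rev-parse "HEAD~1^{tree}")
          if [ "$current_tree" = "$previous_tree" ]; then
            echo "Trees match: file contents are identical to the previous commit."
          else
            echo "Trees differ: CI needs to run for this commit."
          fi
```

Note that this step fails on a repository's very first commit, because `HEAD~1` does not exist there.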

Other challenges faced

Private service access

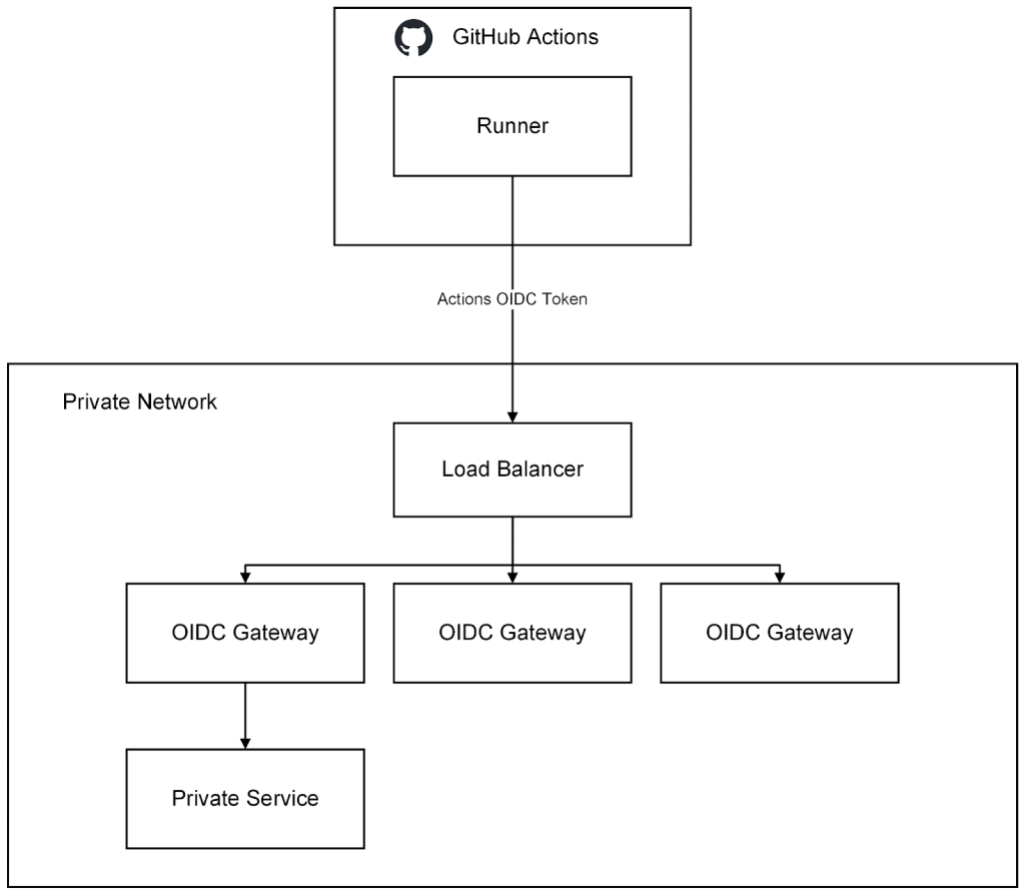

During some internal GitHub Actions workflow runs, the workflows need the ability to access some GitHub private services, within a GitHub virtual private cloud (VPC), over the network. These could be resources such as artifact storage, application metadata services, and other services that enable invocation of our test harness.

When we moved to larger runners, this requirement to access private services became a top-of-mind concern. In previous iterations of our CI infrastructure, these private services were accessible through other cloud and network configurations. However, larger runners are isolated from other production environments, meaning they cannot access our private services.

Like all companies, we need to focus on both the security of our platform as well as the developer experience. To satisfy these two requirements, GitHub developed a remote access solution that allows clients residing outside of our VPCs (larger runners) to securely access select private services.

This remote access solution works on the principle of minting an OIDC token in GitHub Actions, passing the OIDC token to a remote access gateway that authorizes the request by validating the OIDC token, and then proxying the request to the private service residing in a private network.

With this solution, we are able to securely provide remote access from larger runners running GitHub Actions to our private resources within our VPC.

GitHub has open sourced the basic scaffolding of this remote access gateway in the github/actions-oidc-gateway-example repository, so be sure to check it out!
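The client side of the flow described above (mint an OIDC token, then present it to a gateway) might look roughly like the following workflow. This is a sketch under stated assumptions, not GitHub's internal configuration: the gateway URL, the audience value, the request path, and the use of an `Authorization: Bearer` header are all placeholders. The `id-token: write` permission and the `ACTIONS_ID_TOKEN_REQUEST_URL` and `ACTIONS_ID_TOKEN_REQUEST_TOKEN` environment variables are the standard GitHub Actions mechanism for requesting an OIDC token.

```yaml
name: call-private-service   # hypothetical workflow name
on:
  workflow_dispatch:

permissions:
  id-token: write   # lets the job request an OIDC token from GitHub
  contents: read

jobs:
  call-private-service:
    runs-on: ubuntu-latest
    steps:
      - name: Mint OIDC token and call the gateway
        shell: bash
        env:
          GATEWAY_URL: https://gateway.example.com   # assumption: placeholder gateway address
          AUDIENCE: private-gateway                  # assumption: audience the gateway expects
        run: |
          # Request a short-lived OIDC token for the chosen audience.
          OIDC_TOKEN=$(curl -sSf \
            -H "Authorization: bearer ${ACTIONS_ID_TOKEN_REQUEST_TOKEN}" \
            "${ACTIONS_ID_TOKEN_REQUEST_URL}&audience=${AUDIENCE}" | jq -r '.value')
          # Keep the token out of the job logs.
          echo "::add-mask::${OIDC_TOKEN}"
          # Present the token to the gateway, which validates it before proxying.
          # The path and header name are assumptions about a hypothetical gateway.
          curl -sSf -H "Authorization: Bearer ${OIDC_TOKEN}" "${GATEWAY_URL}/status"
```

On the gateway side, validation typically means verifying the token's signature against GitHub's published keys and then checking claims such as the issuer, audience, and repository before forwarding the request.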

Conclusion

GitHub Actions provides a robust and smooth developer experience for GitHub engineers working on GitHub.com. We have been able to accomplish this by using the power of GitHub Actions features, such as reusable workflows and reusable workflow outcomes, and by leveraging the scalability and manageability of the GitHub Actions larger runners. We have also used this effort to enhance the GitHub Actions product. To put it simply, GitHub runs on GitHub.

The post How GitHub uses GitHub Actions and Actions larger runners to build and test GitHub.com appeared first on The GitHub Blog.

Let’s hear more from

Let’s hear more from

Detects when IssueOps commands are used on a pull request.

Detects when IssueOps commands are used on a pull request.  Configurable: choose your command syntax, environment, noop trigger, base branch, reaction, and more.

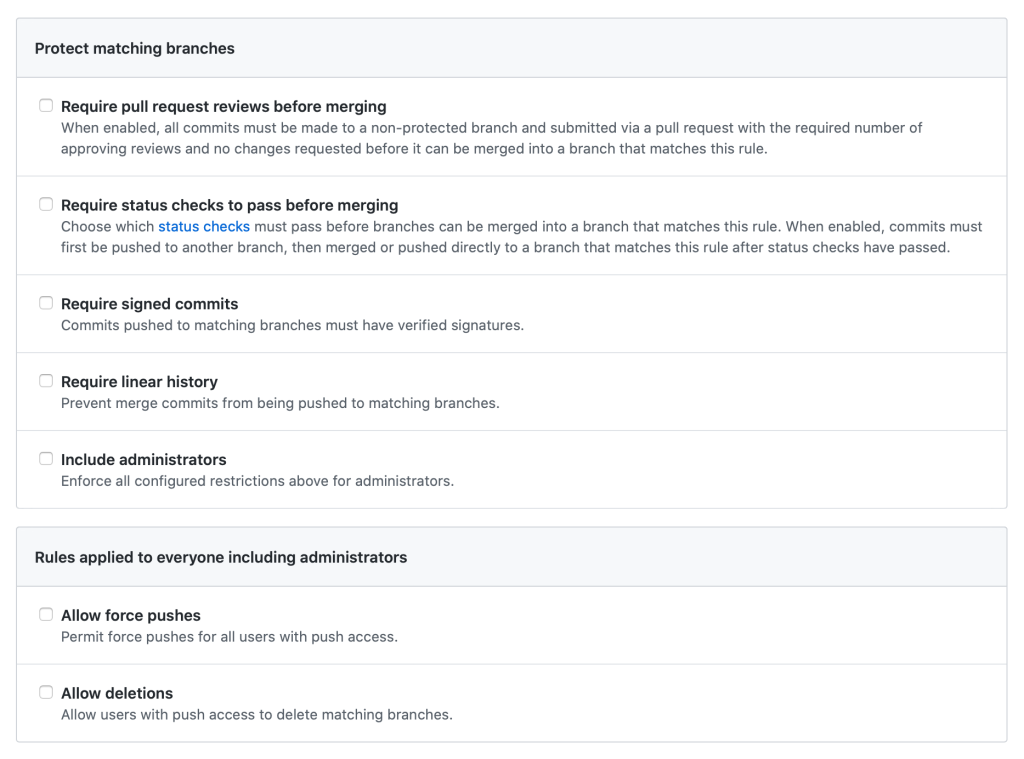

Configurable: choose your command syntax, environment, noop trigger, base branch, reaction, and more. Respects your branch protection settings configured for the repository.

Respects your branch protection settings configured for the repository. Comments and reacts to your IssueOps commands.

Comments and reacts to your IssueOps commands. Triggers GitHub deployments for you with simple configuration.

Triggers GitHub deployments for you with simple configuration. Deploy locks to prevent multiple deployments from clashing.

Deploy locks to prevent multiple deployments from clashing. Configurable environment targets.

Configurable environment targets.

Share company updates to GitHub’s intranet

Share company updates to GitHub’s intranet Create weekly reports on program status updates

Create weekly reports on program status updates Turn weekly team photos into GIFs and upload to README

Turn weekly team photos into GIFs and upload to README

or merge pull requests whilst lounging on the couch.

or merge pull requests whilst lounging on the couch.