Post Syndicated from Annika Garbers original https://blog.cloudflare.com/welcome-to-cio-week/

The world of a CIO has changed. Today’s corporate networks look nothing like those of even five or ten years ago, and these changes have created gaps in visibility and security, introduced high costs and operational burdens, and made networks fragile.

We’re optimistic that CIOs have a brighter future to look forward to. The Internet has evolved from a research project into integral infrastructure companies depend on, and we believe a better Internet is the path forward to solving the most challenging problems CIOs face today. Cloudflare is helping build an Internet that’s faster, more secure, more reliable, more private, and programmable, and by doing so, we’re enabling organizations to build their next-generation networks on ours.

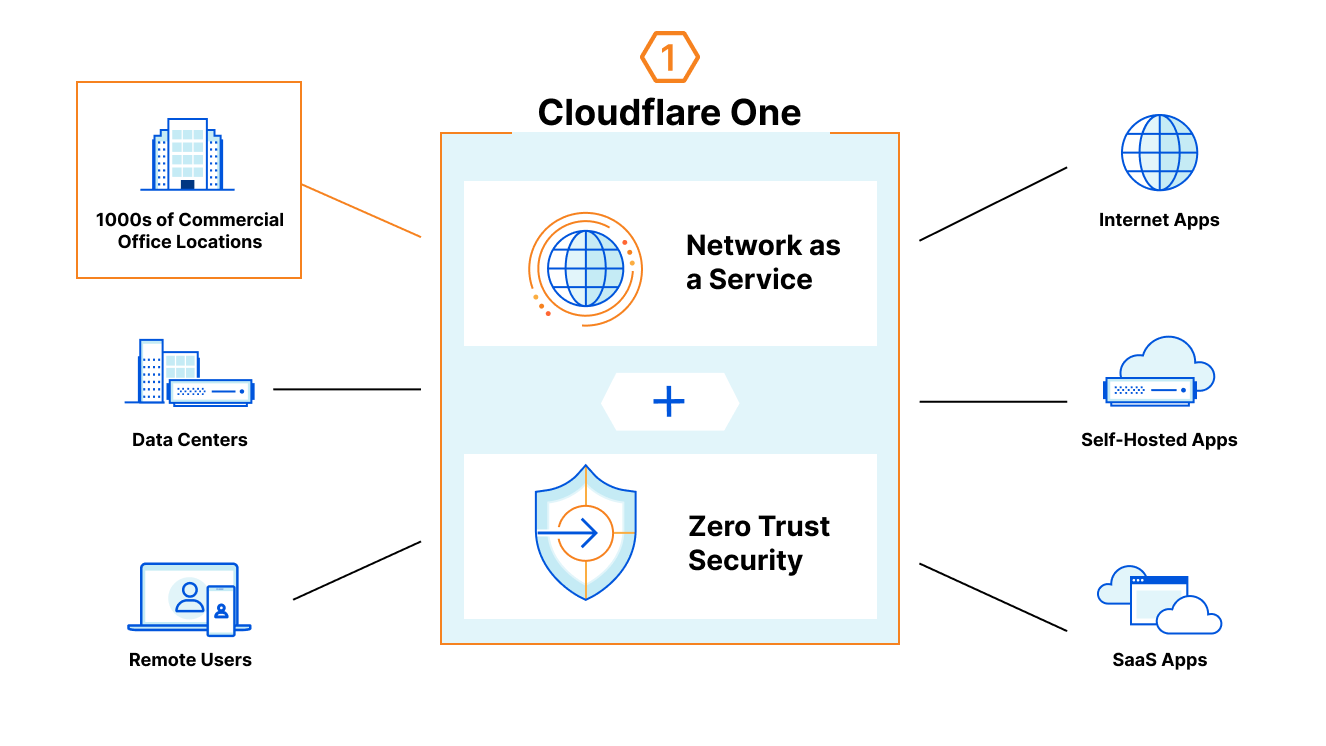

This week, we’ll demonstrate how Cloudflare One, our Zero Trust Network-as-a-Service, is helping CIOs transform their corporate networks. We’ll also introduce new functionality that expands the scope of Cloudflare’s platform to address existing and emerging needs for CIOs. But before we jump into the week, we wanted to spend some time on our vision for the corporate network of the future. We hope this explanation will clarify language and acronyms used by vendors and analysts who have realized the opportunity in this space (what does Zero Trust Network-as-a-Service mean, anyway?) and set context for how our innovative approach is realizing this vision for real CIOs today.

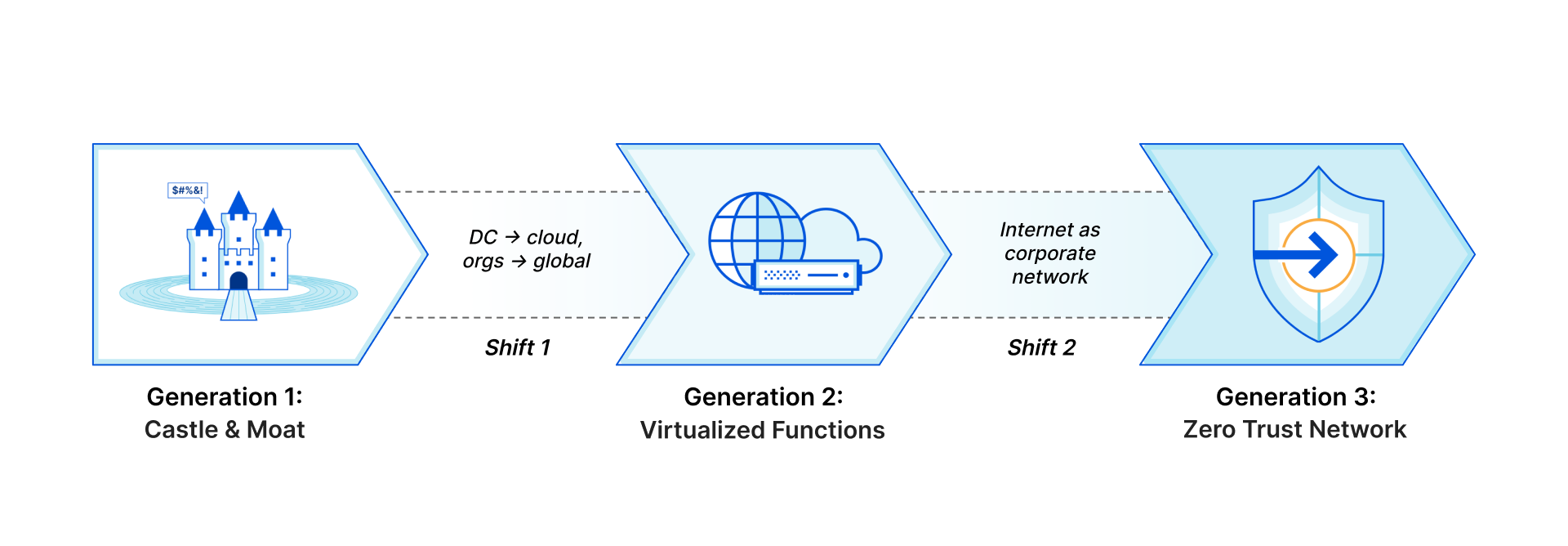

Generation 1: Castle and moat

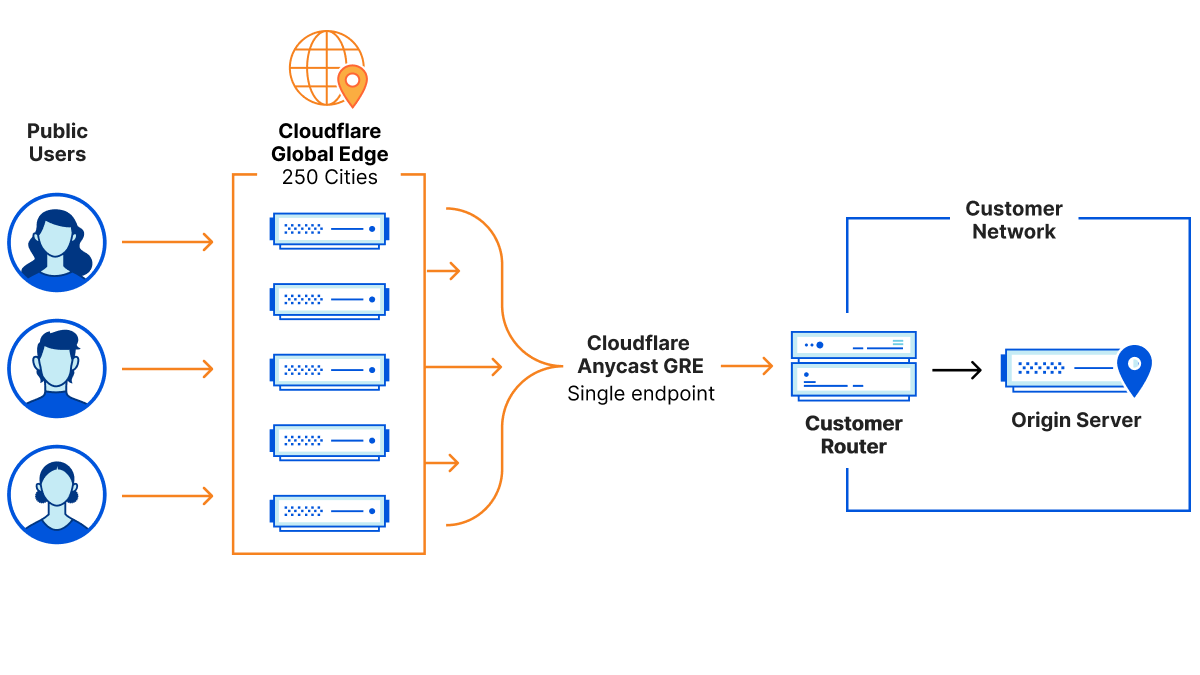

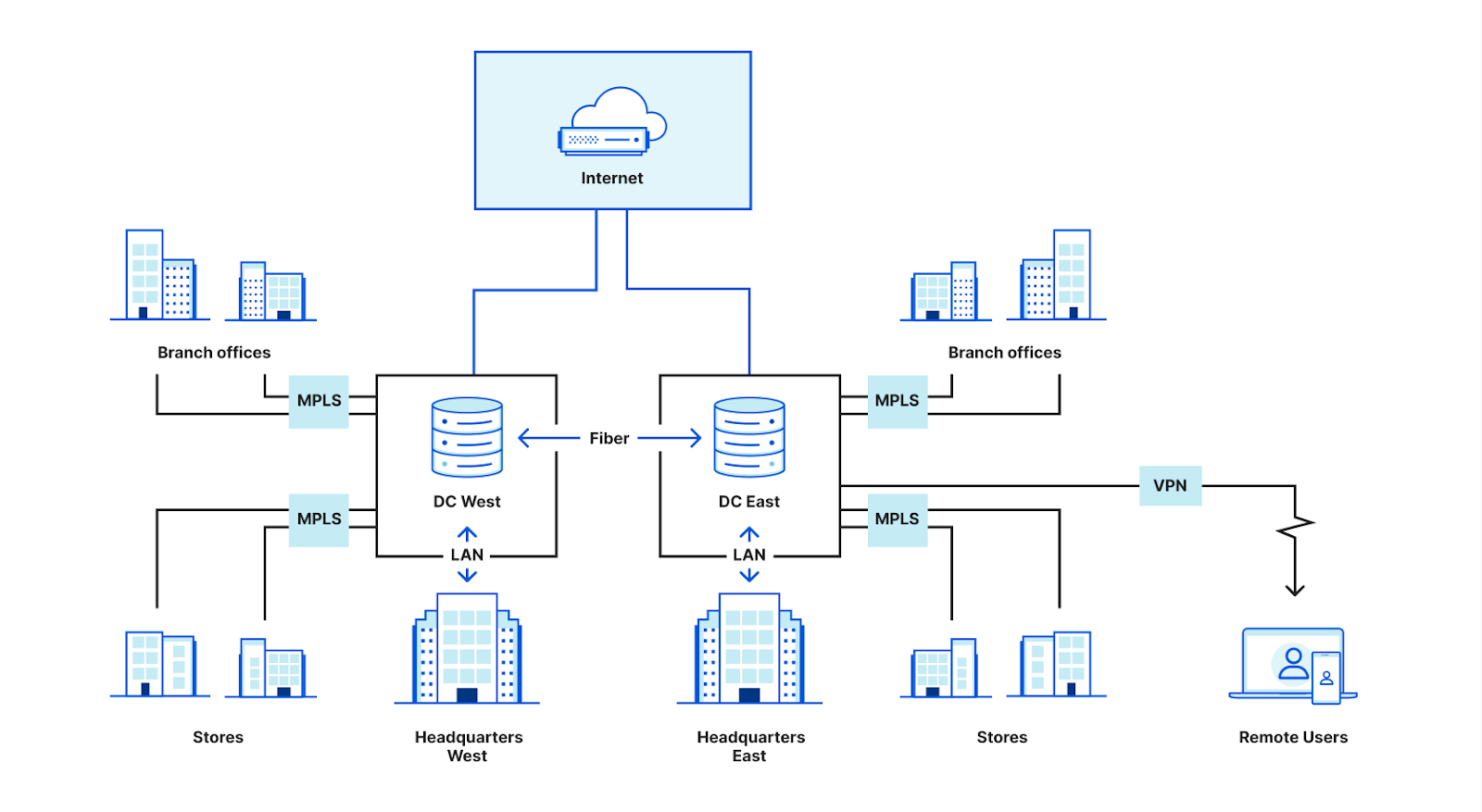

For years, corporate networks looked like this:

Companies built or rented space in data centers that were physically located within or close to major office locations. They hosted business applications — email servers, ERP systems, CRMs, etc. — on servers in these data centers. Employees in offices connected to these applications through the local area network (LAN) or over private wide area network (WAN) links from branch locations. A stack of security hardware (e.g., firewalls) in each data center enforced security for all traffic flowing in and out. Once on the corporate network, users could move laterally to other connected devices and hosted applications, but basic forms of network authentication and physical security controls like employee badge systems generally prevented untrusted users from getting access.

Network Architecture Scorecard: Generation 1

| Characteristic | Score | Description |
|---|---|---|
| Security | ⭐⭐ | All traffic flows through perimeter security hardware. Network access restricted with physical controls. Lateral movement is only possible once on network. |
| Performance | ⭐⭐⭐ | Majority of users and applications stay within the same building or regional network. |
| Reliability | ⭐⭐ | Dedicated data centers, private links, and security hardware present single points of failure. There are cost tradeoffs to purchase redundant links and hardware. |
| Cost | ⭐⭐ | Private connectivity and hardware are high cost capital expenditures, creating a high barrier to entry for small or new businesses. However, a limited number of links/boxes are required (trade off with redundancy/reliability). Operational costs are low to medium after initial installation. |
| Visibility | ⭐⭐⭐ | All traffic is routed through central location, so it’s possible to access NetFlow/packet captures and more for 100% of flows. |
| Agility | ⭐ | Significant network changes have a long lead time. |
| Precision | ⭐ | Controls are primarily exercised at the network layer (e.g., IP ACLs). Accomplishing “allow only HR to access employee payment data” looks like: IP in range X allowed to access IP in range Y (and requires accompanying spreadsheet to track IP allocation). |
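To make the Precision row concrete, here is a minimal Python sketch of a network-layer rule of the form "IP in range X allowed to access IP in range Y". The address ranges and the `acl_allows` helper are hypothetical, chosen only for illustration; they are not taken from any real firewall configuration.

```python
import ipaddress

# Hypothetical address plan; in practice this mapping lives in the
# "accompanying spreadsheet" that tracks IP allocation.
HR_CLIENTS = ipaddress.ip_network("10.20.0.0/24")       # range X: HR desktops
PAYROLL_SERVERS = ipaddress.ip_network("10.50.8.0/28")  # range Y: payroll servers

# Each rule is (source network, destination network). Anything unlisted is denied.
ACL = [(HR_CLIENTS, PAYROLL_SERVERS)]


def acl_allows(src: str, dst: str) -> bool:
    """Return True if some rule permits traffic from src to dst."""
    s = ipaddress.ip_address(src)
    d = ipaddress.ip_address(dst)
    return any(s in src_net and d in dst_net for src_net, dst_net in ACL)
```

Note that the rule only sees addresses. Any device that obtains an address in range X passes the check, regardless of who is using it, which is why the scorecard rates this generation's precision so low.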

Applications and users left the castle

So what changed? In short, the Internet. Faster than anyone expected, the Internet became critical to how people communicate and get work done. The Internet introduced a radical shift in how organizations thought about their computing resources: if any computer can talk to any other computer, why would companies need to keep servers in the same building as employees’ desktops? And even more radical, why would they need to buy and maintain their own servers at all? From these questions, the cloud was born, enabling companies to rent space on other servers and host their applications while minimizing operational overhead. An entire new industry of Software-as-a-Service emerged to simplify things even further, allowing companies to completely abstract away questions of capacity planning, server reliability, and other operational struggles.

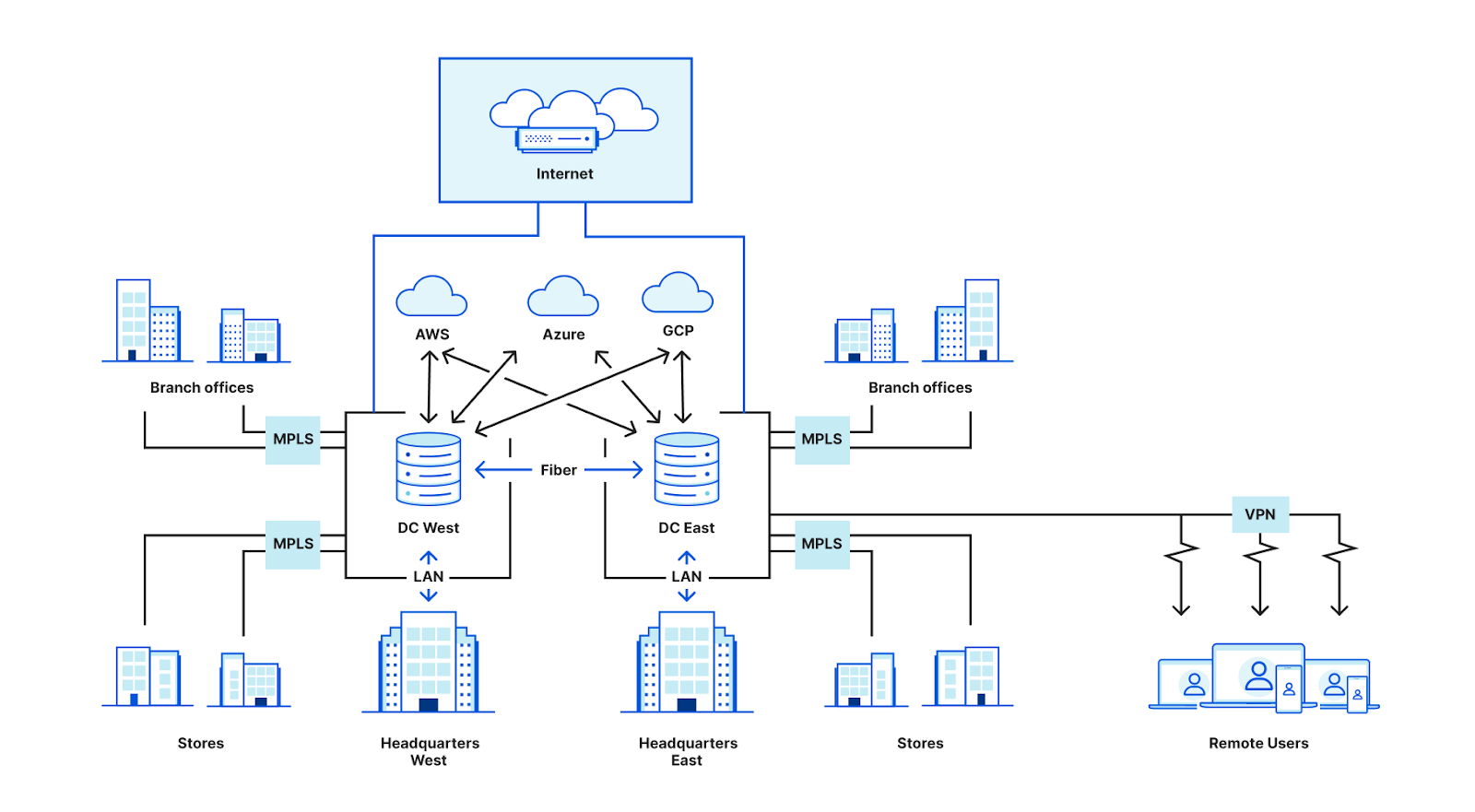

This golden, Internet-enabled future — cloud and SaaS everything — sounds great! But CIOs quickly ran into problems. Established corporate networks with castle-and-moat architecture can’t just go down for months or years during a large-scale transition, so most organizations are in a hybrid state, one foot still firmly in the world of data centers, hardware, and MPLS. And traffic to applications still needs to stay secure, so even if it’s no longer headed to a server in a company-owned data center, many companies have continued to send it there (backhauled through private lines) to flow through a stack of firewall boxes and other hardware before it’s set free.

As more applications moved to the Internet, the volume of traffic leaving branches (and being backhauled over MPLS lines through data centers for security) continued to increase. Many CIOs faced an unpleasant surprise in their bandwidth charges the month after adopting Office 365: with traditional network architecture, more traffic to the Internet meant more traffic over expensive private links.

As if managing this first dramatic shift — which created complex hybrid architectures and brought unexpected cost increases — wasn’t enough, CIOs had another to handle in parallel. The Internet changed the game not just for applications, but also for users. Just as servers don’t need to be physically located at a company’s headquarters anymore, employees don’t need to be on the office LAN to access their tools. VPNs allow people working outside of offices to get access to applications hosted on the company network (whether physical or in the cloud).

These VPNs grant remote users access to the corporate network, but they’re slow, clunky to use, and can only support a limited number of people before performance degrades to the point of unusability. And from a security perspective, they’re terrifying — once a user is on the VPN, they can move laterally to discover and gain access to other resources on the corporate network. It’s much harder for CIOs and CISOs to control laptops with VPN access that could feasibly be brought anywhere — parks, public transportation, bars — than computers used by badged employees in the traditional castle-and-moat office environment.

In 2020, COVID-19 turned these emerging concerns about VPN cost, performance, and security into mission-critical, business-impacting challenges, and they’ll continue to be even as some employees return to offices.

Generation 2: Smörgåsbord of point solutions

Lots of vendors have emerged to tackle the challenges introduced by these major shifts, often focusing on one or a handful of use cases. Some providers offer virtualized versions of hardware appliances, delivered over different cloud platforms; others have cloud-native approaches that address a specific problem like application access or web filtering. But stitching together a patchwork of point solutions has caused even more headaches for CIOs, and most available products focus only on shoring up identity, endpoint, and application security without truly addressing network security.

Gaps in visibility

Compared to the castle and moat model, where traffic all flowed through a central stack of appliances, modern networks have extremely fragmented visibility. IT teams need to piece together information from multiple tools to understand what’s happening with their traffic. Often, a full picture is impossible to assemble, even with the support of tools including SIEM and SOAR applications that consolidate data from multiple sources. This makes troubleshooting issues challenging: IT support ticket queues are full of unsolved mysteries. How do you manage what you can’t see?

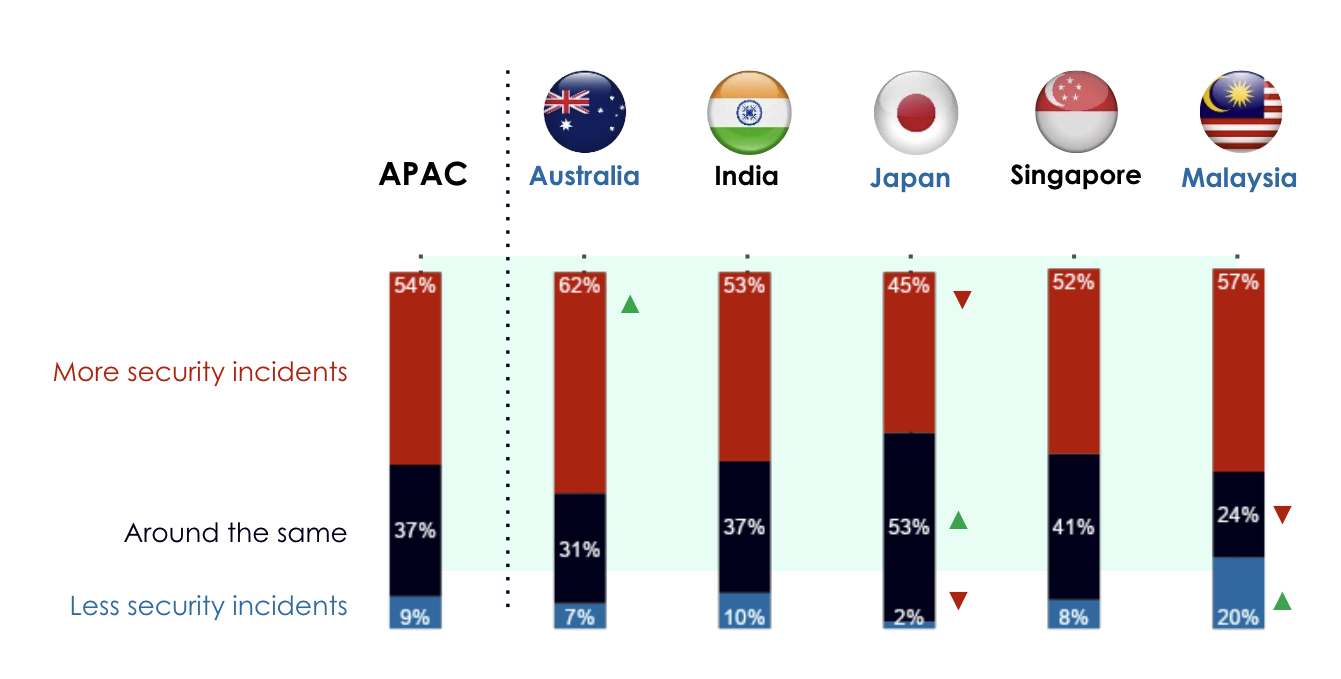

Gaps in security

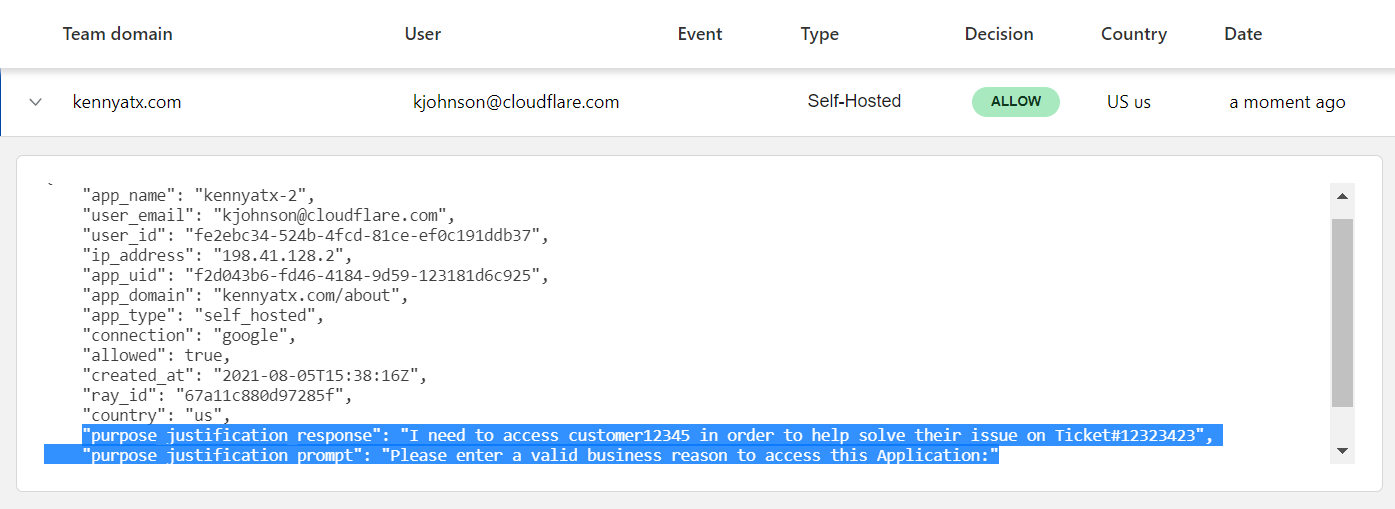

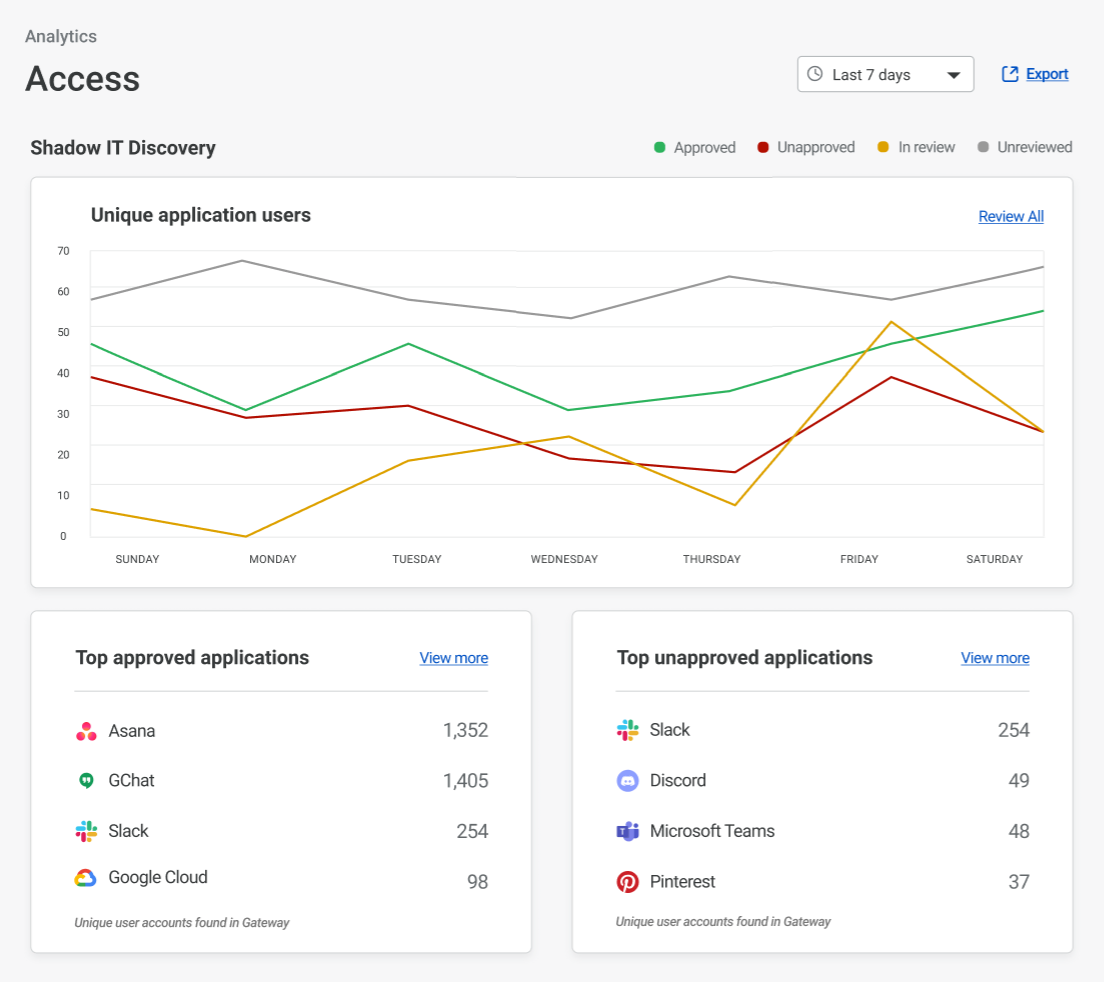

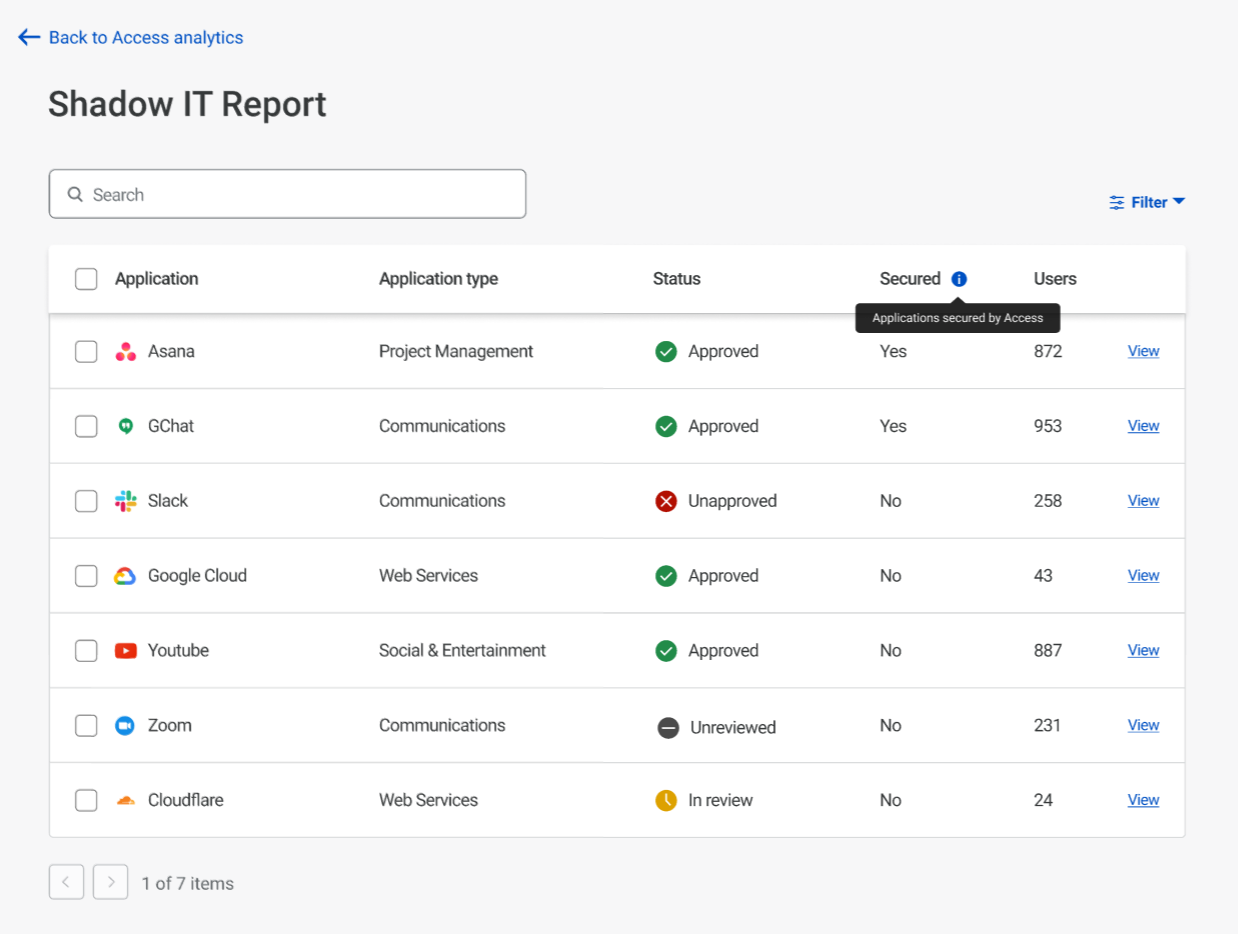

This patchwork architecture — coupled with the visibility gaps it introduced — also creates security challenges. The concept of “Shadow IT” emerged to describe services that employees have adopted and are using without explicit IT permission or integration into the corporate network’s traffic flow and security policies. Exceptions to filtering policies for specific users and use cases have become unmanageable, and our customers have described a general “wild west” feeling about their networks as Internet use grew faster than anyone could have anticipated. And it’s not just gaps in filtering that scare CIOs — the proliferation of Shadow IT means company data can and does now exist in a huge number of unmanaged places across the Internet.

Poor user experience

Backhauling traffic through central locations to enforce security introduces latency for end users, amplified as they work in locations farther and farther away from their former offices. And the Internet, while it’s come a long way, is still fundamentally unpredictable and unreliable, leaving IT teams struggling to ensure availability and performance of apps for users with many factors (even down to shaky coffee shop Wi-Fi) out of their control.

High (and growing) cost

CIOs are still paying for MPLS links and hardware to enforce security across as much traffic as possible, but they’ve now taken on additional costs of point solutions to secure increasingly complex networks. And because of fragmented visibility and security gaps, coupled with performance challenges and rising expectations for a higher quality of user experience, the cost of providing IT support is growing.

Network fragility

All this complexity means that making changes can be really hard. On the legacy side of current hybrid architectures, provisioning MPLS lines and deploying new security hardware come with long lead times, only worsened by recent issues in the global hardware supply chain. And with the medley of point solutions introduced to manage various aspects of the network, a change to one tool can have unintended consequences for another. These effects compound, and IT departments often become the bottleneck for business changes, limiting the flexibility of organizations to adapt to an ever-accelerating rate of change.

Network Architecture Scorecard: Generation 2

| Characteristic | Score | Description |
|---|---|---|
| Security | ⭐ | Many traffic flows are routed outside of perimeter security hardware, Shadow IT is rampant, and controls that do exist are enforced inconsistently and across a hodgepodge of tools. |
| Performance | ⭐ | Traffic backhauled through central locations introduces latency as users move further away; VPNs and a bevy of security tools introduce processing overhead and additional network hops. |
| Reliability | ⭐⭐ | The redundancy/cost tradeoff from Generation 1 is still present; partial cloud adoption grants some additional resiliency but growing use of unreliable Internet introduces new challenges. |
| Cost | ⭐ | Costs from Generation 1 architecture are retained (few companies have successfully deprecated MPLS/security hardware so far), but new costs of additional tools added, and operational overhead is growing. |
| Visibility | ⭐ | Traffic flows and visibility are fragmented; IT stitches partial picture together across multiple tools. |
| Agility | ⭐⭐ | Some changes are easier to make for aspects of business migrated to cloud; others have grown more painful as additional tools introduce complexity. |
| Precision | ⭐⭐ | Mix of controls exercised at network layer and application layer. Accomplishing “allow only HR to access employee payment data” looks like: Users in group X allowed to access IP in range Y (and accompanying spreadsheet to track IP allocation) |

In summary — to reiterate where we started — modern CIOs have really hard jobs. But we believe there’s a better future ahead.

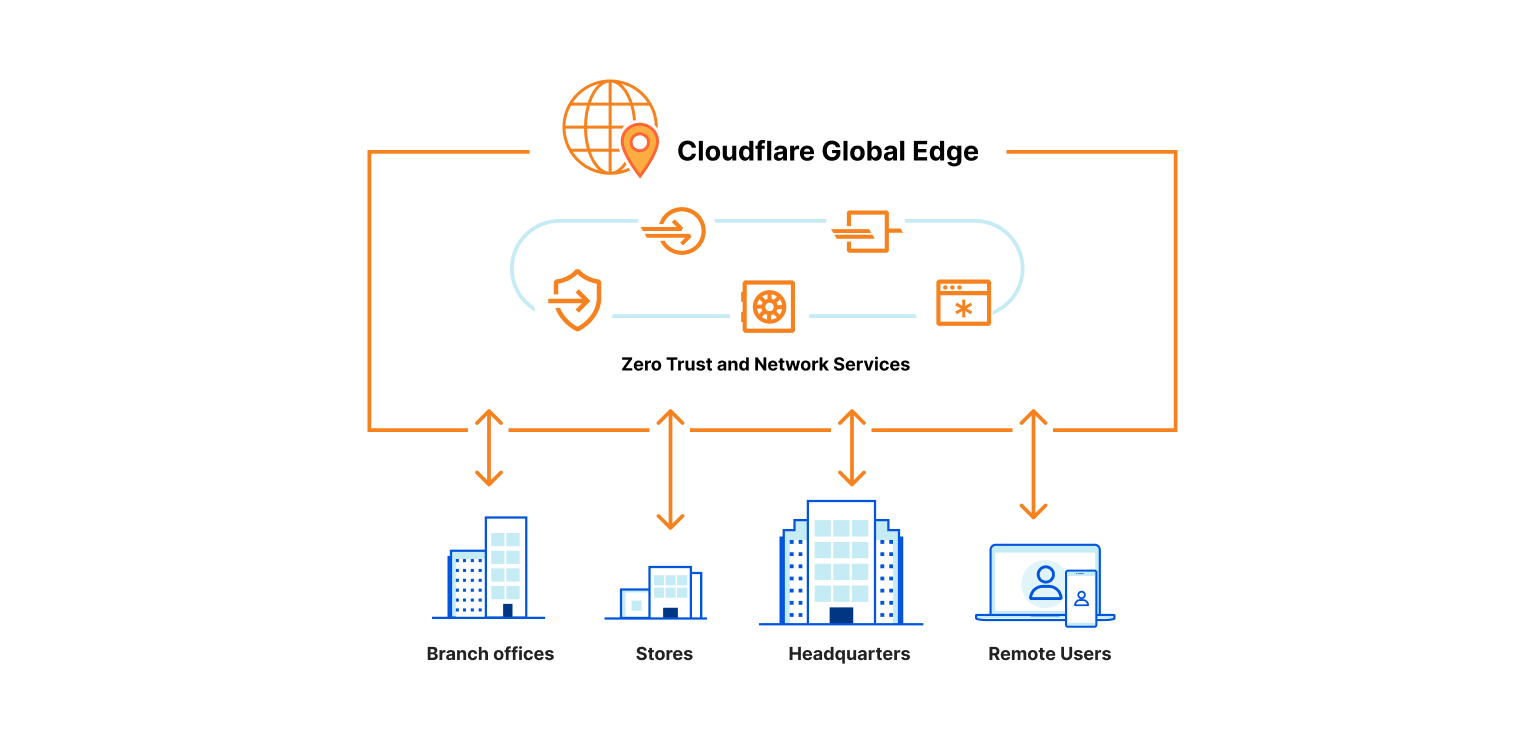

Generation 3: The Internet as the new corporate network

The next generation of corporate networks will be built on the Internet. This shift is already well underway, but CIOs need a platform that can help them get access to a better Internet — one that’s more secure, faster, more reliable, and preserves user privacy while navigating complex global data regulations.

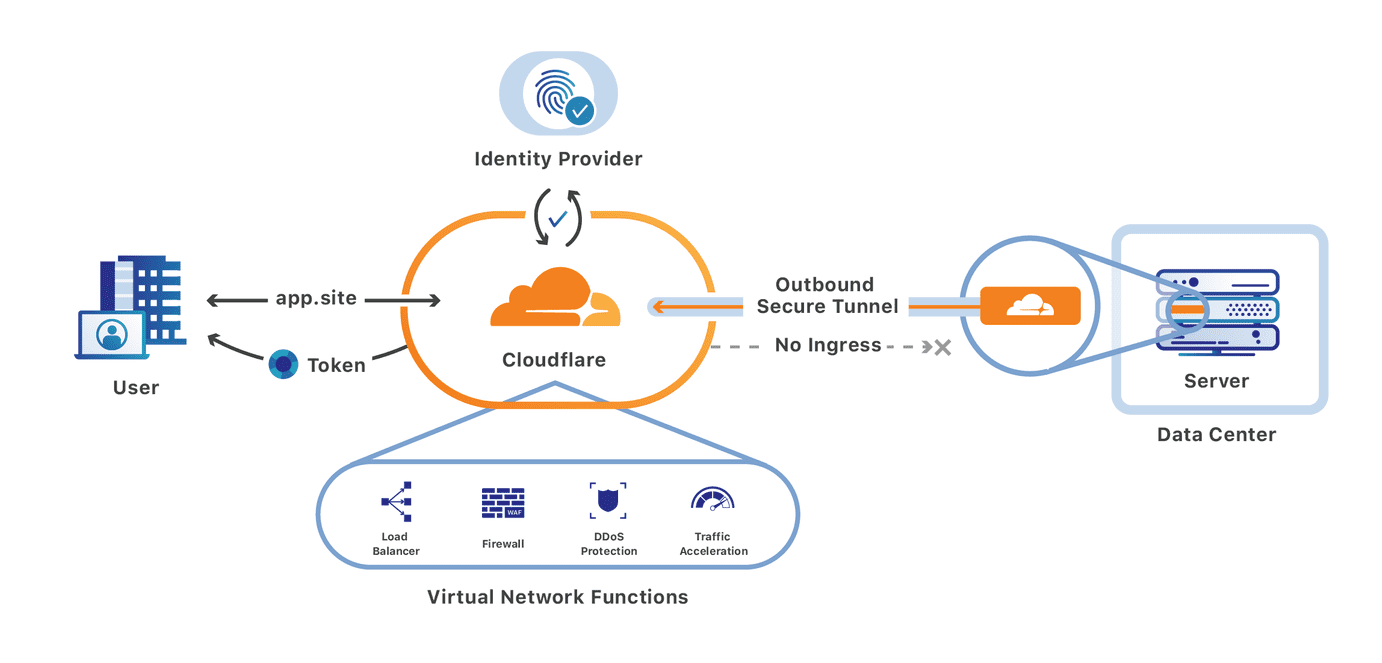

Zero Trust security at Internet scale

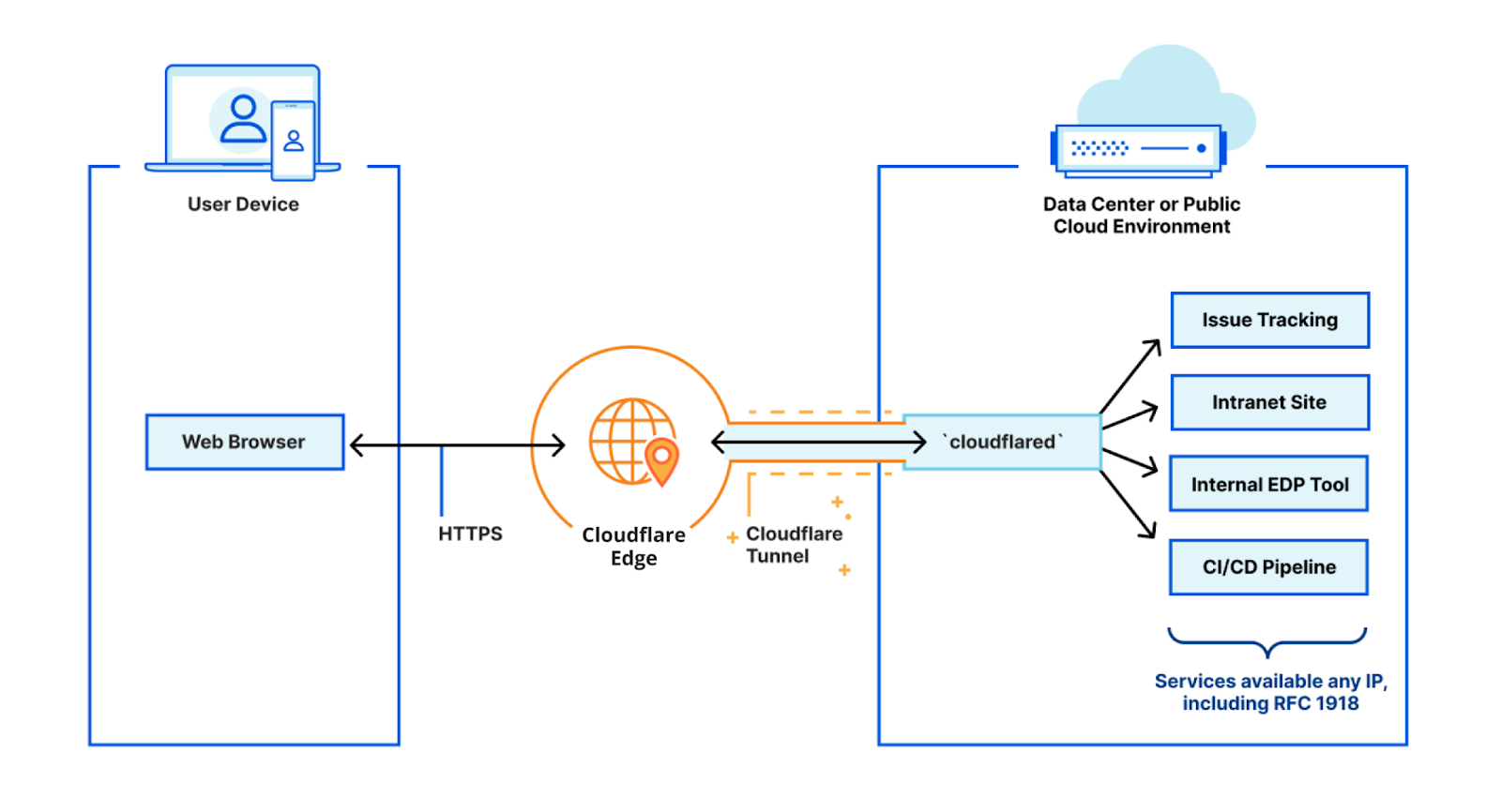

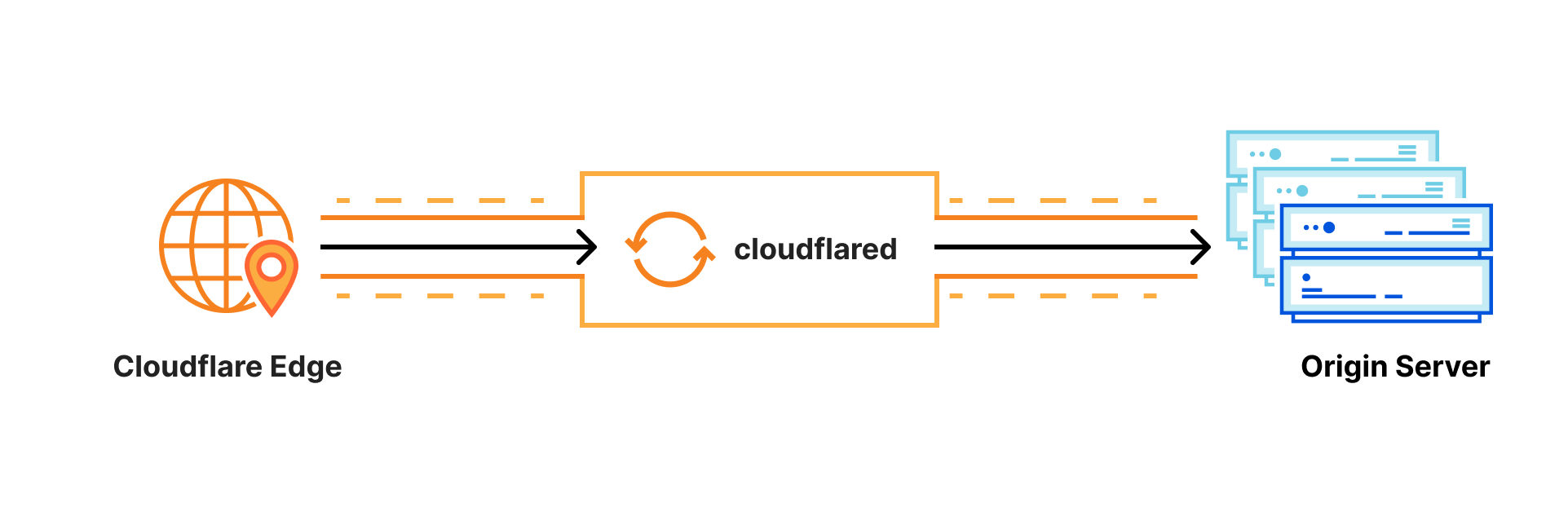

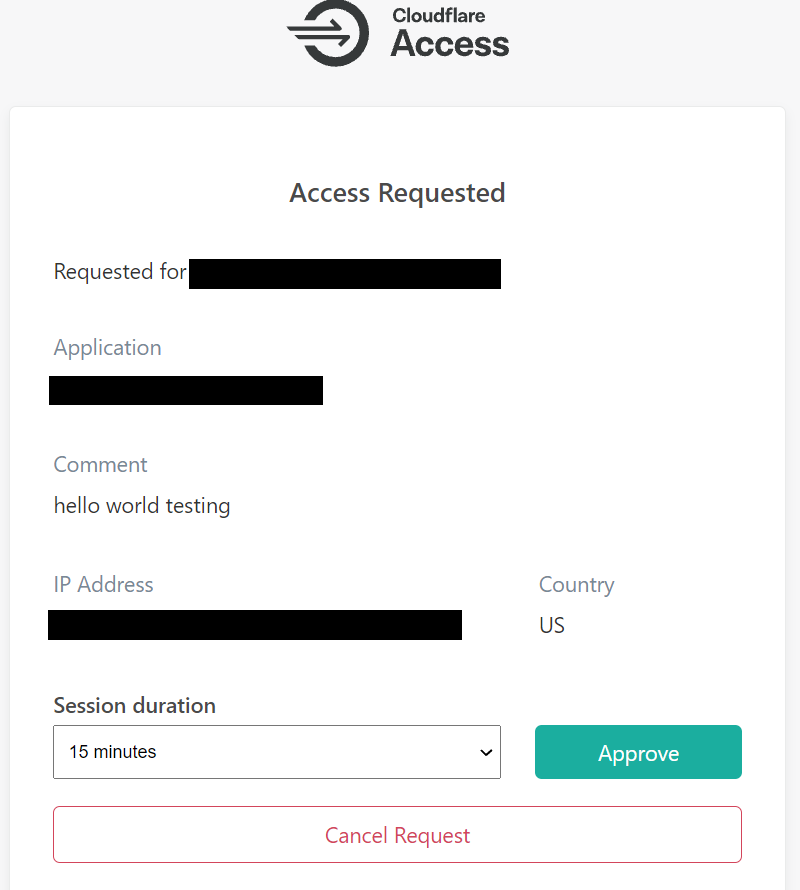

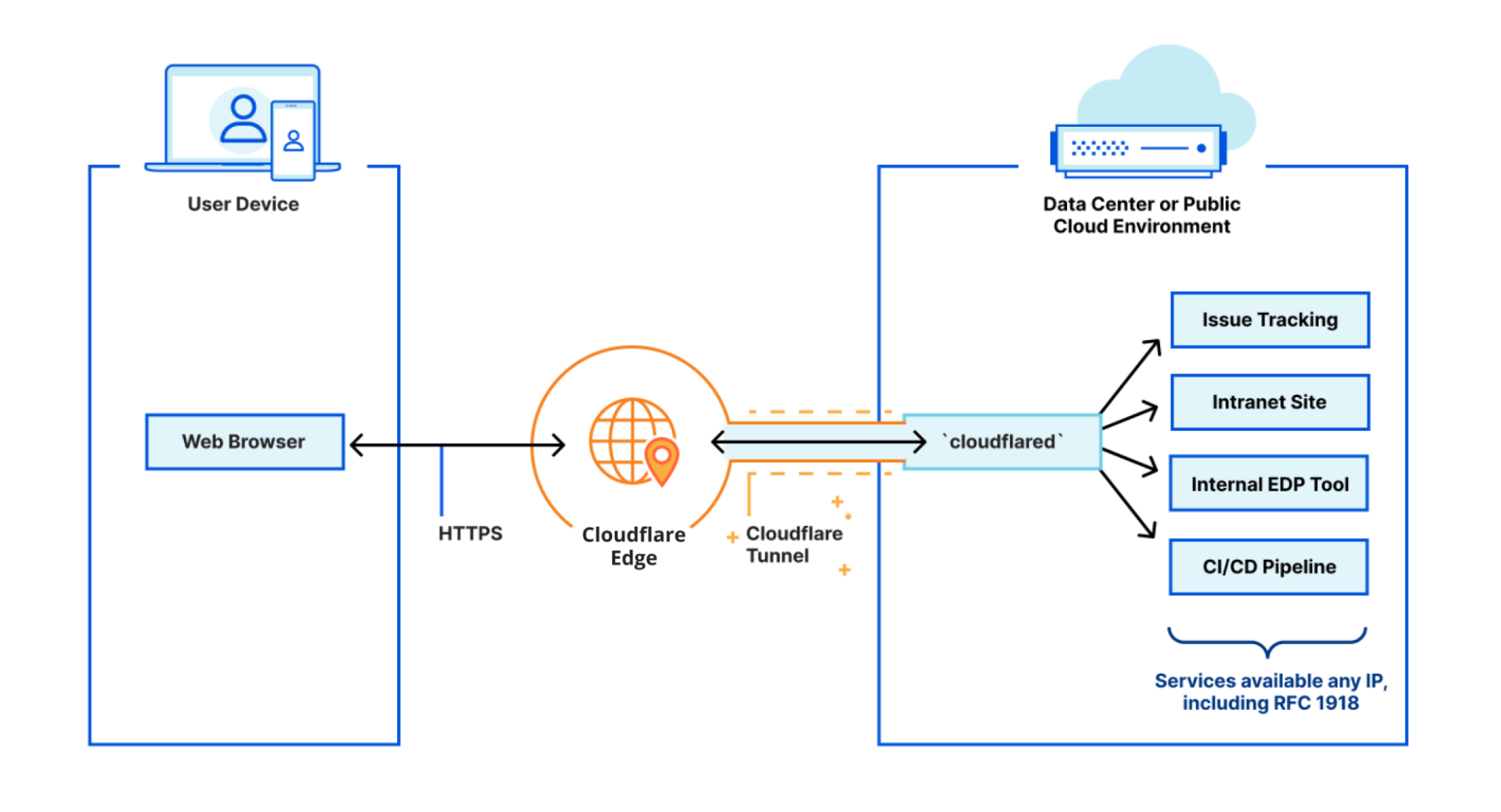

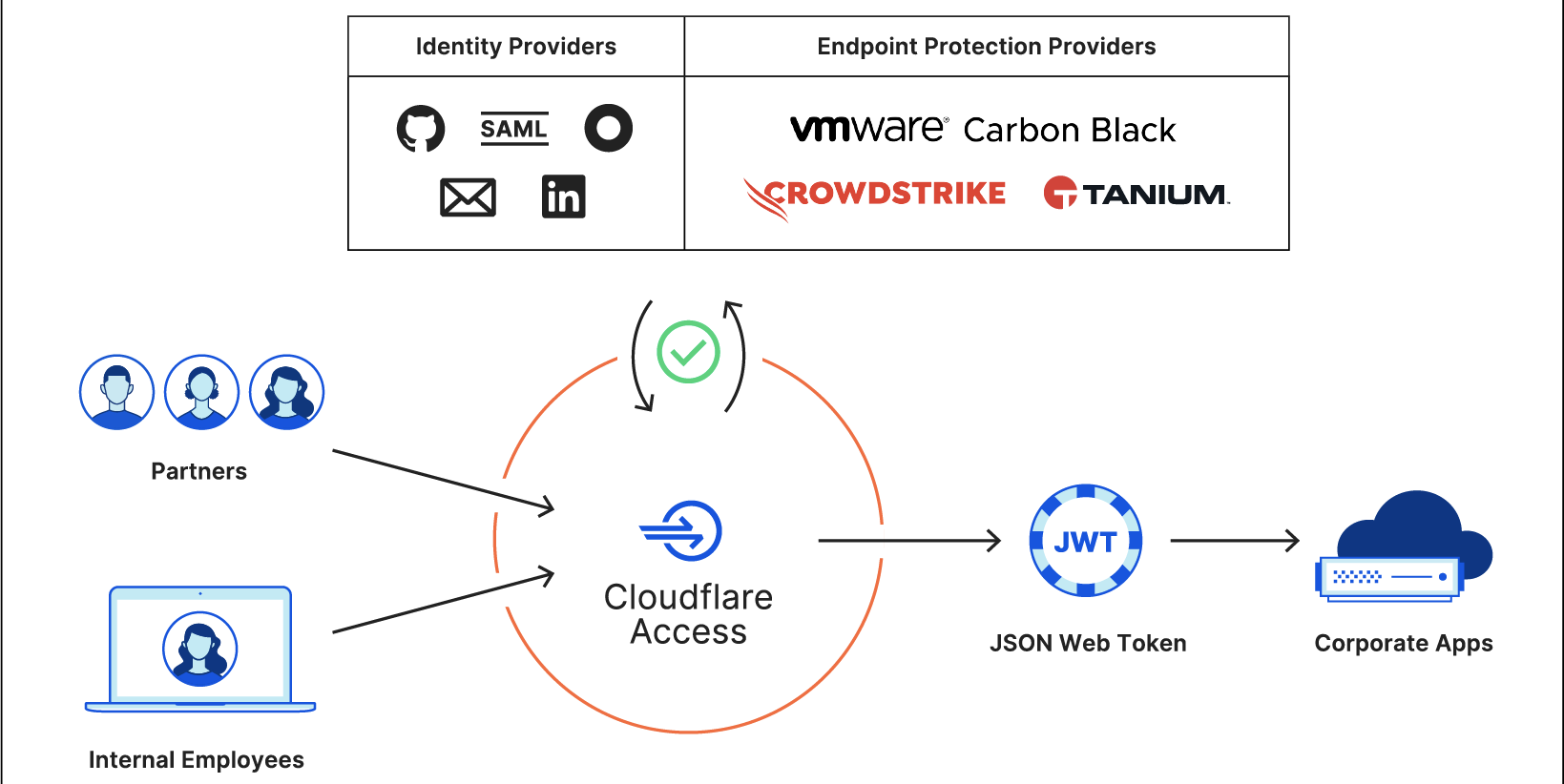

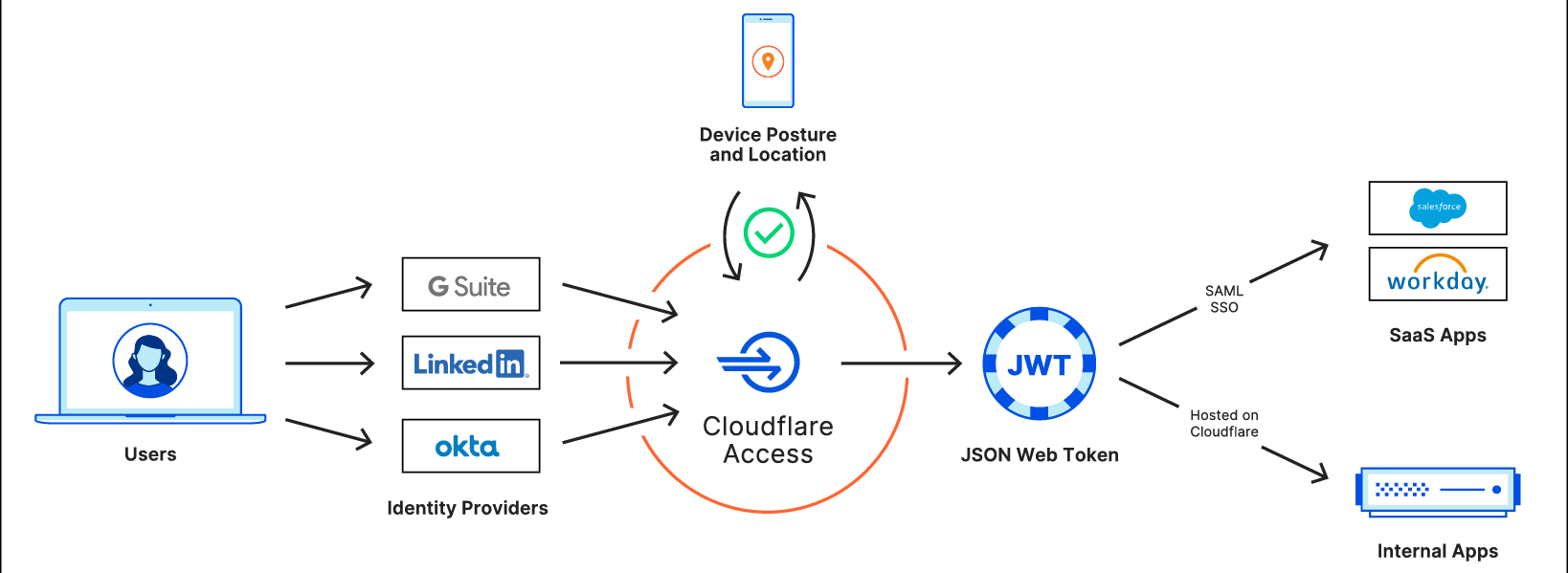

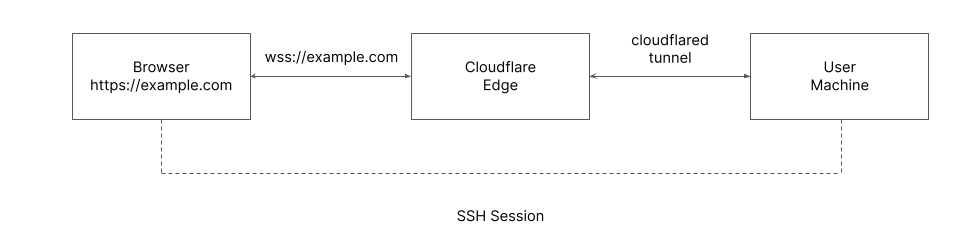

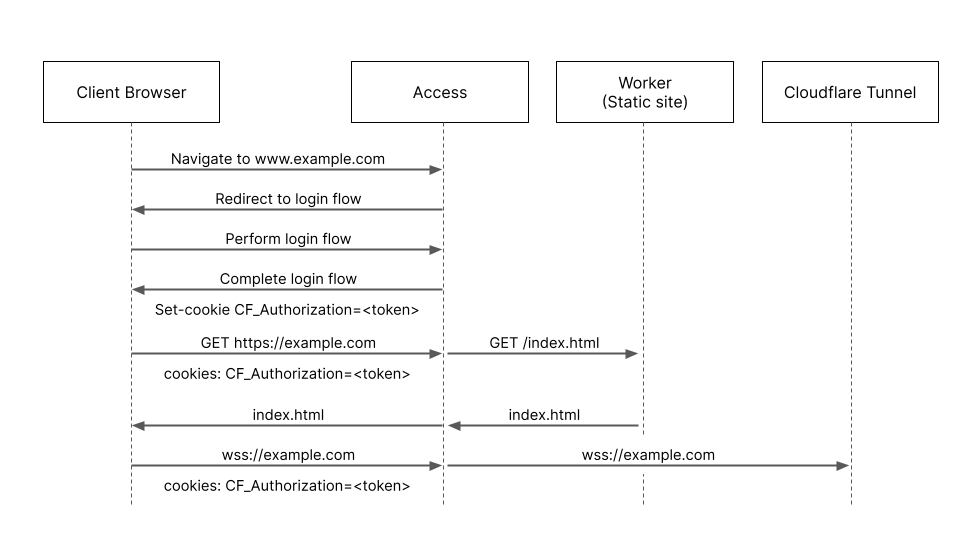

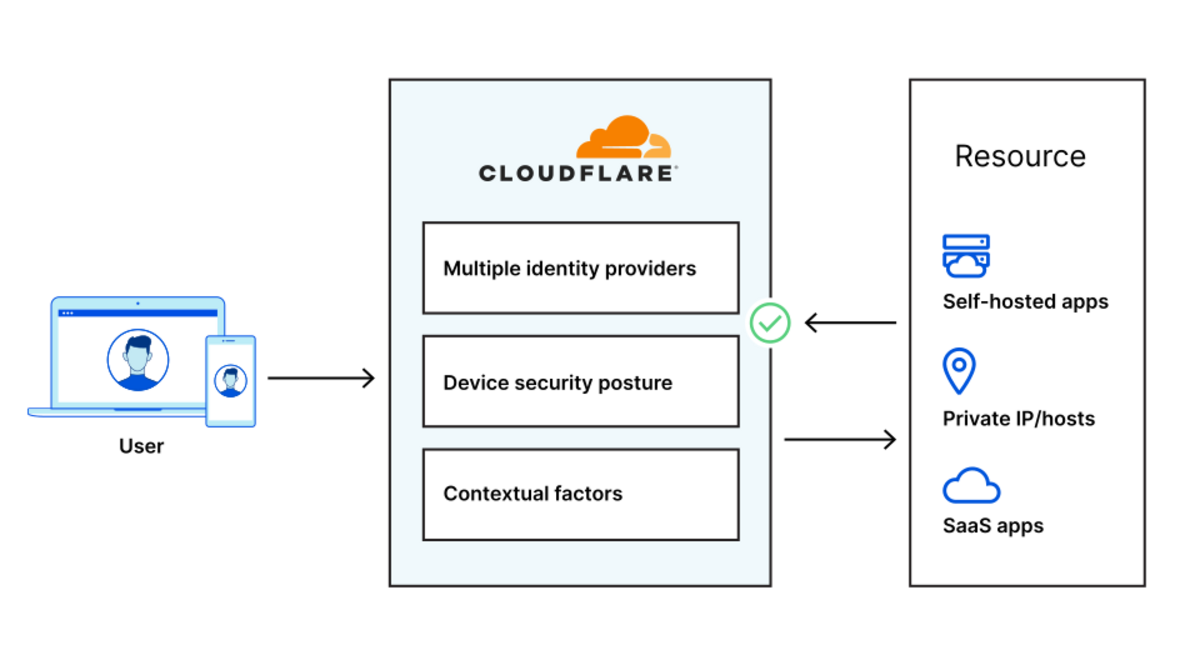

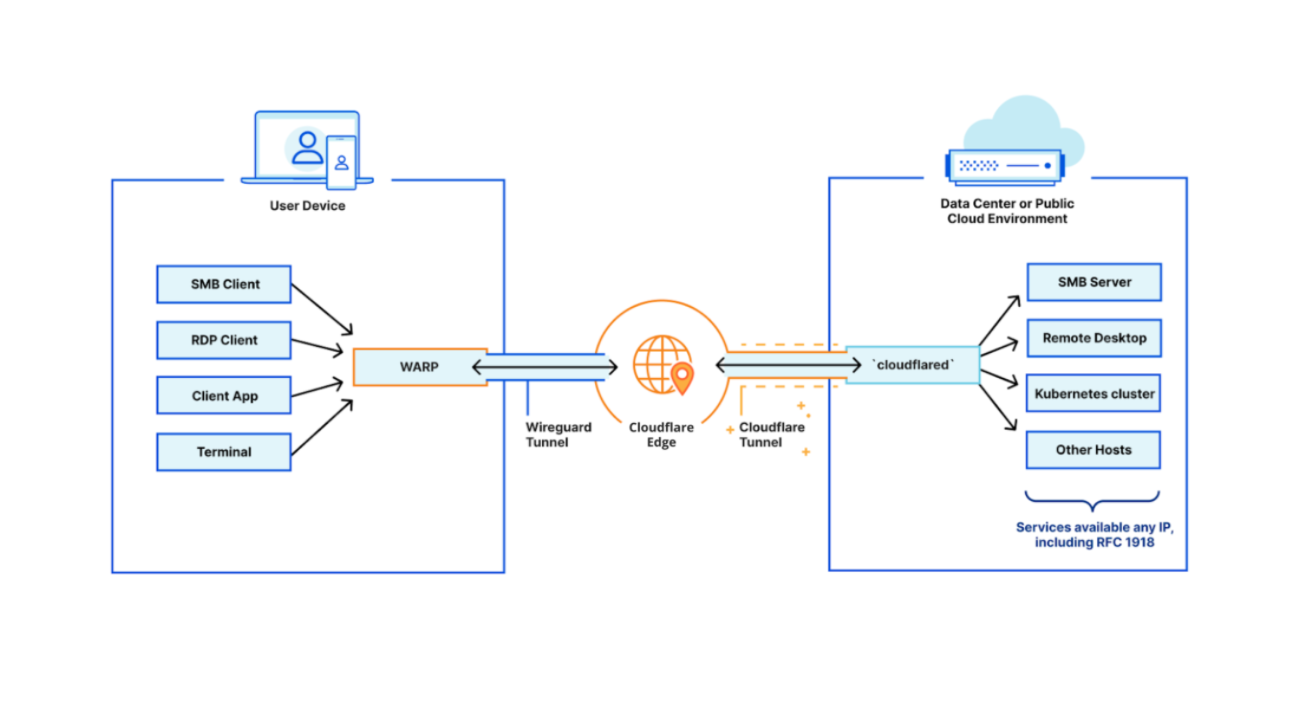

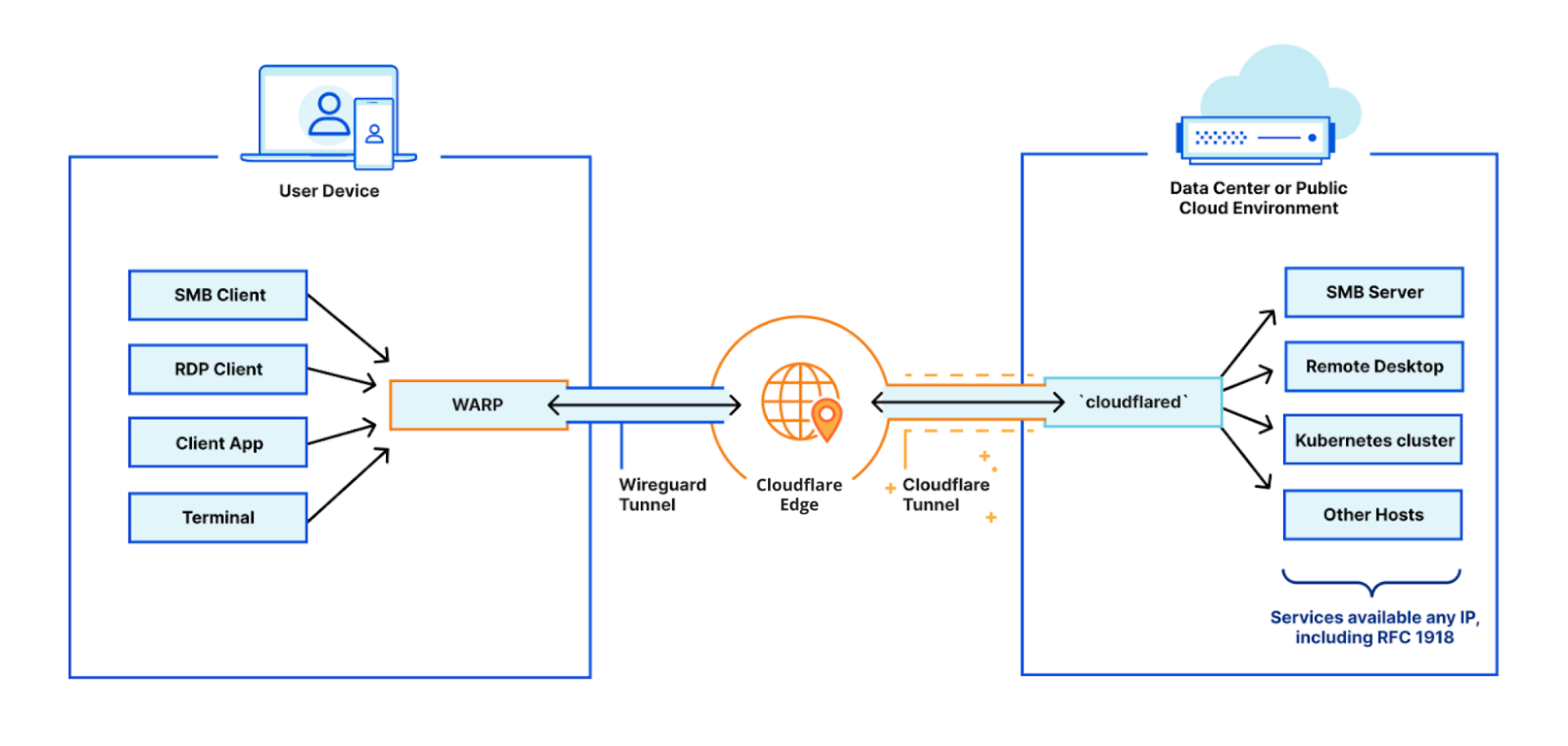

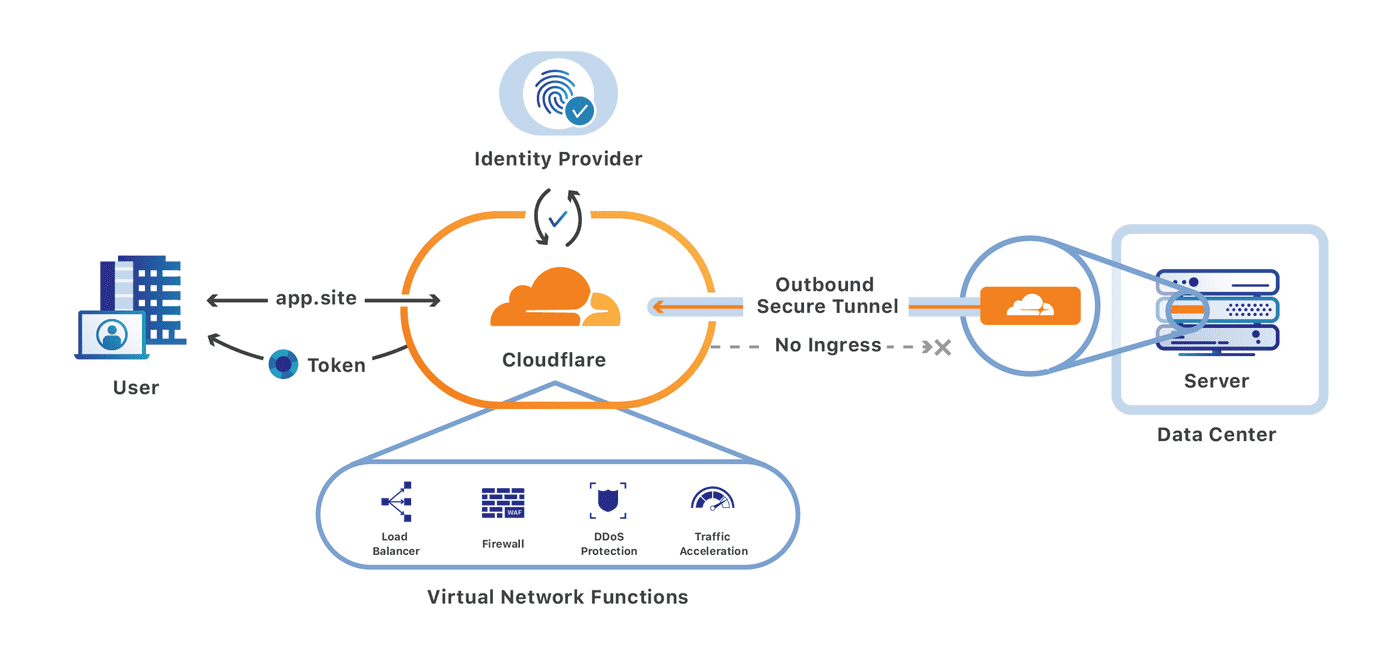

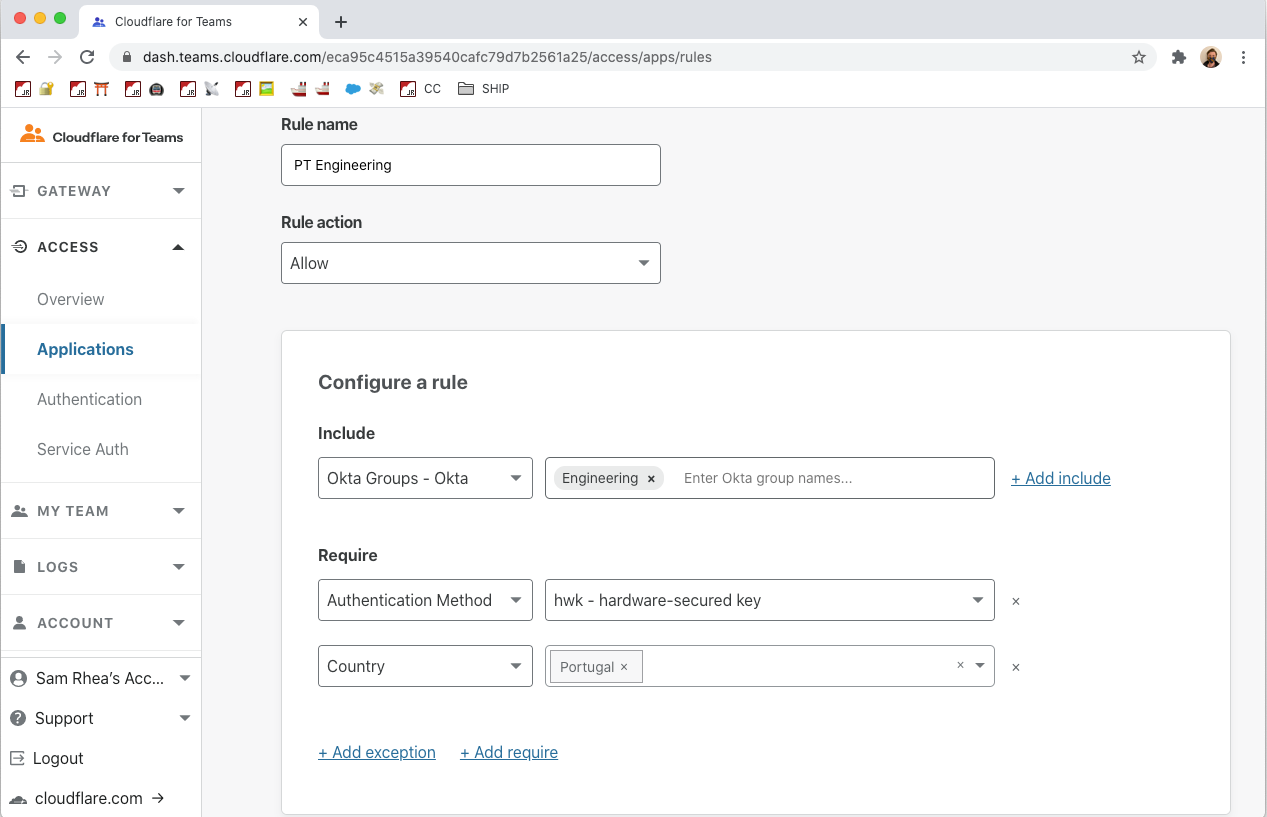

CIOs are hesitant to give up expensive forms of private connectivity because they feel more secure than the public Internet. But a Zero Trust approach, delivered on the Internet, dramatically increases security versus the classic castle and moat model or a patchwork of appliances and point software solutions adopted to create “defense in depth.” Instead of trusting users once they’re on the corporate network and allowing lateral movement, Zero Trust dictates authenticating and authorizing every request into, out of, and between entities on your network, ensuring that visitors can only get to applications they’re explicitly allowed to access. And delivering this authentication and policy enforcement from an edge location close to the user enables radically better performance, rather than forcing traffic to backhaul through central data centers or traverse a huge stack of security tools.
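The idea of authenticating and authorizing every request can be sketched in a few lines of Python. This is an illustrative default-deny check under stated assumptions, not Cloudflare's implementation: the `Request` fields, the `POLICY` table, and the `authorize` function are all hypothetical names.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Request:
    user: str
    groups: frozenset
    authenticated: bool   # assumed to come from an identity provider check
    device_trusted: bool  # assumed to come from an endpoint posture check
    app: str


# Hypothetical policy: application -> groups explicitly allowed to reach it.
POLICY = {
    "payroll": frozenset({"hr"}),
    "wiki": frozenset({"hr", "engineering"}),
}


def authorize(req: Request) -> bool:
    """Evaluate a single request; deny unless every check passes."""
    if not req.authenticated or not req.device_trusted:
        return False
    allowed_groups = POLICY.get(req.app, frozenset())  # unknown app: nobody allowed
    return bool(req.groups & allowed_groups)
```

Because the check runs on each request and names the application explicitly, being "on the network" grants nothing by itself: a request to an application with no matching policy entry is denied.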

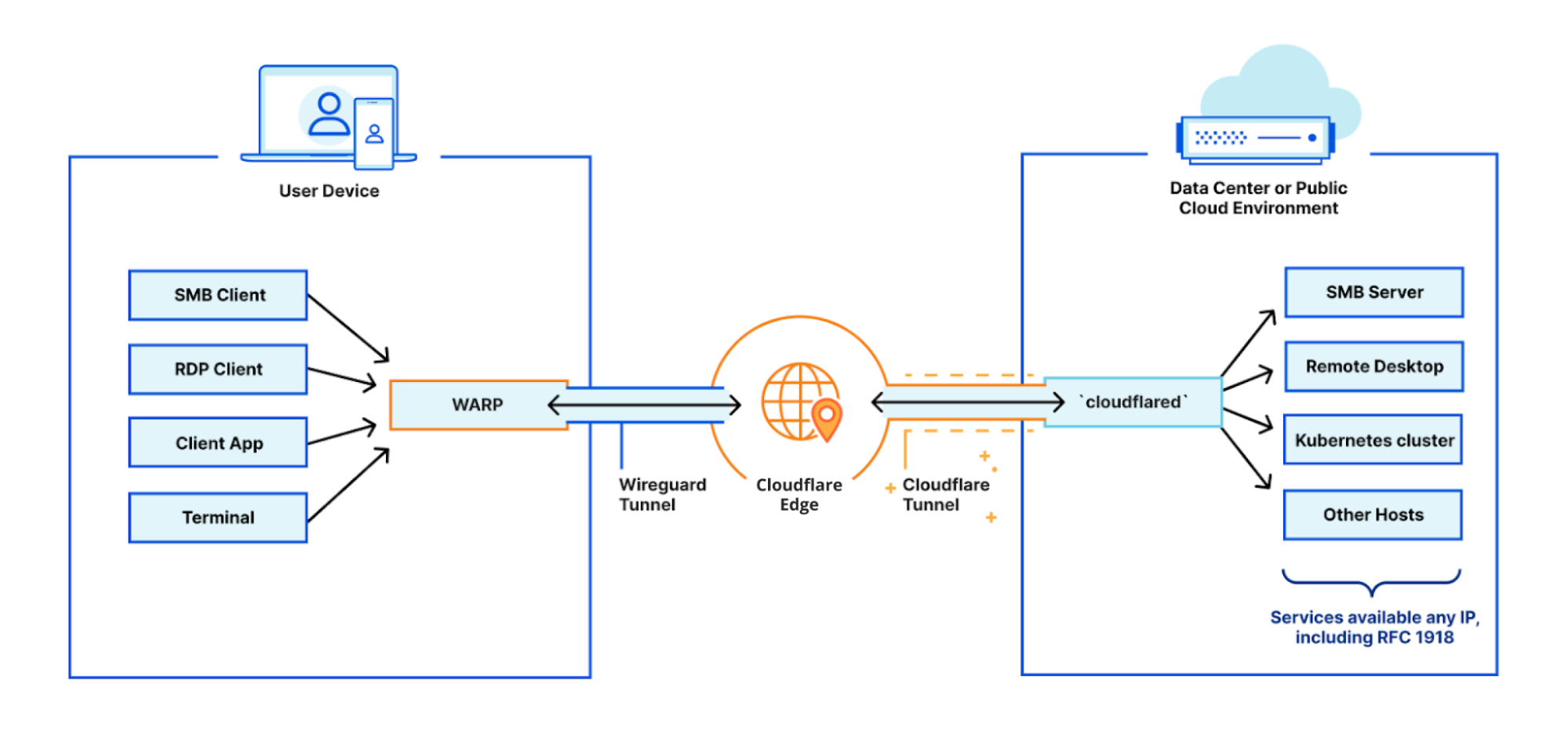

In order to enable this new model, CIOs need a platform that can:

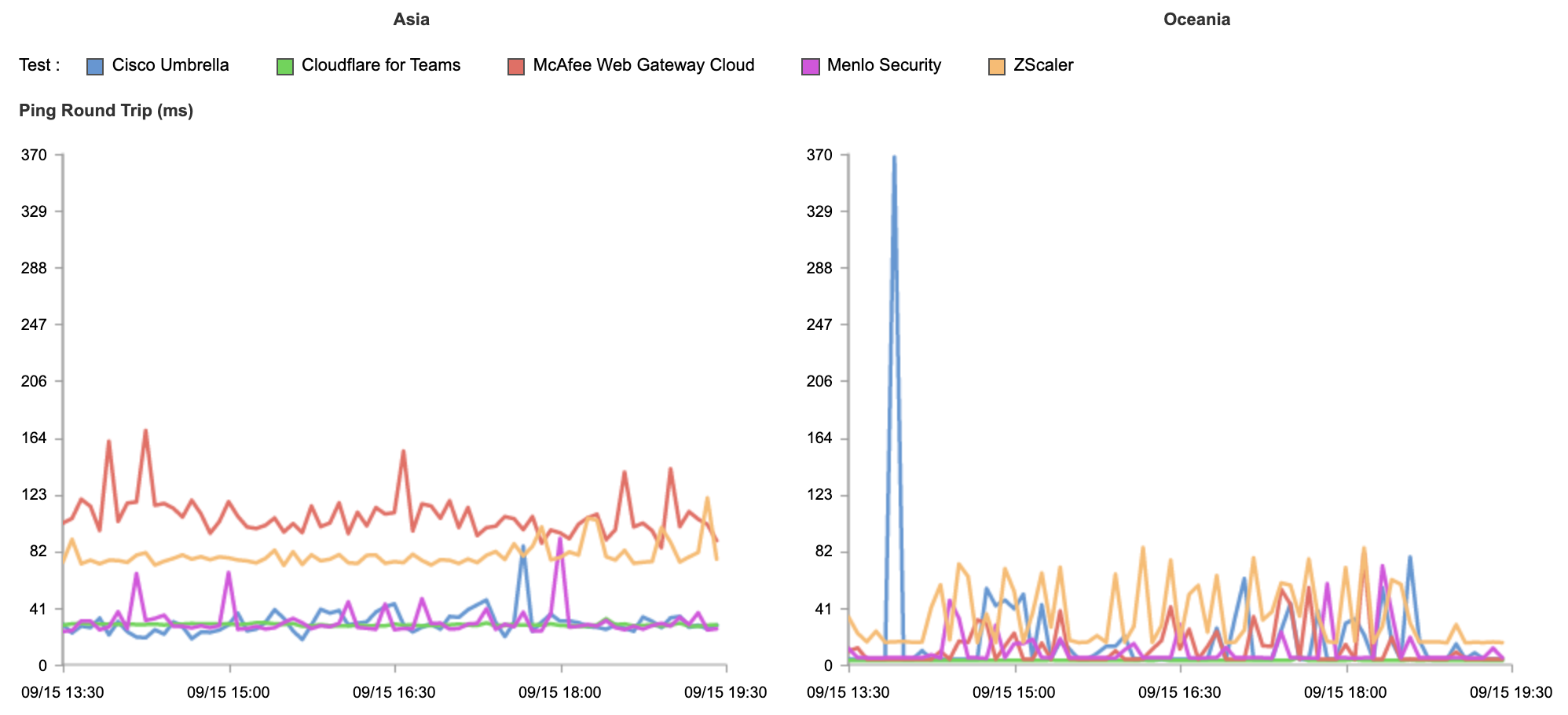

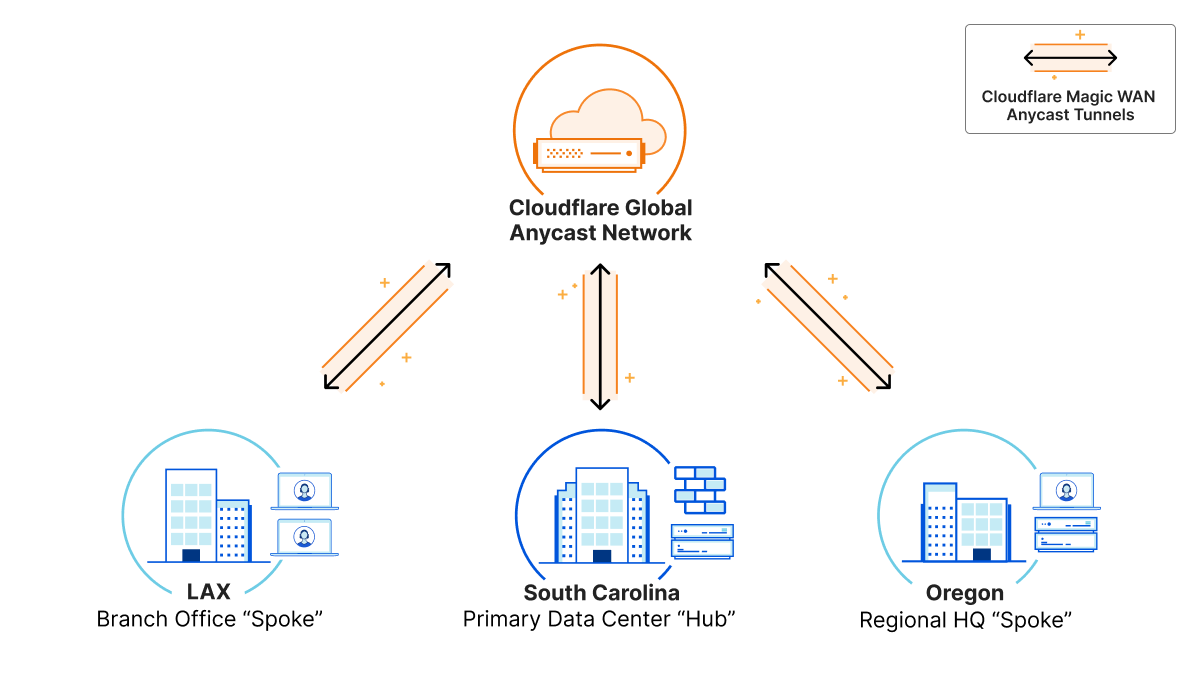

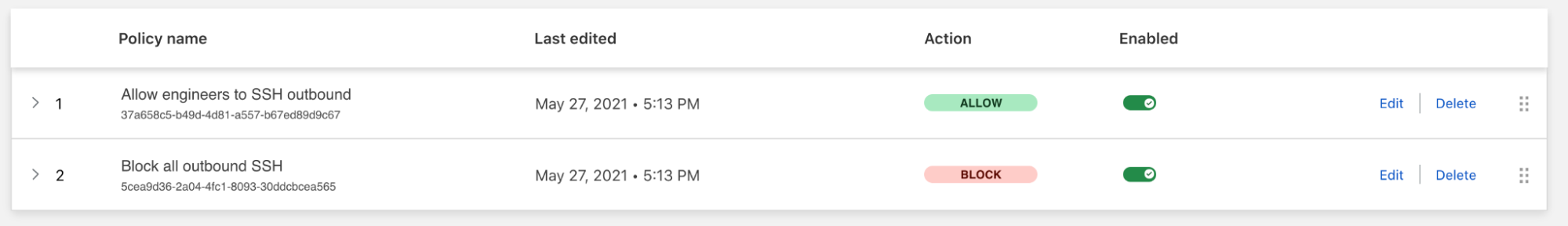

Connect all the entities on their corporate network.

It has to not just be possible, but also easy and reliable to connect users, applications, offices, data centers, and cloud properties to each other as flexibly as possible. This means support for the hardware and connectivity methods customers have today, from enabling mobile clients to operate across OS versions to compatibility with standard tunneling protocols and network peering with global telecom providers.

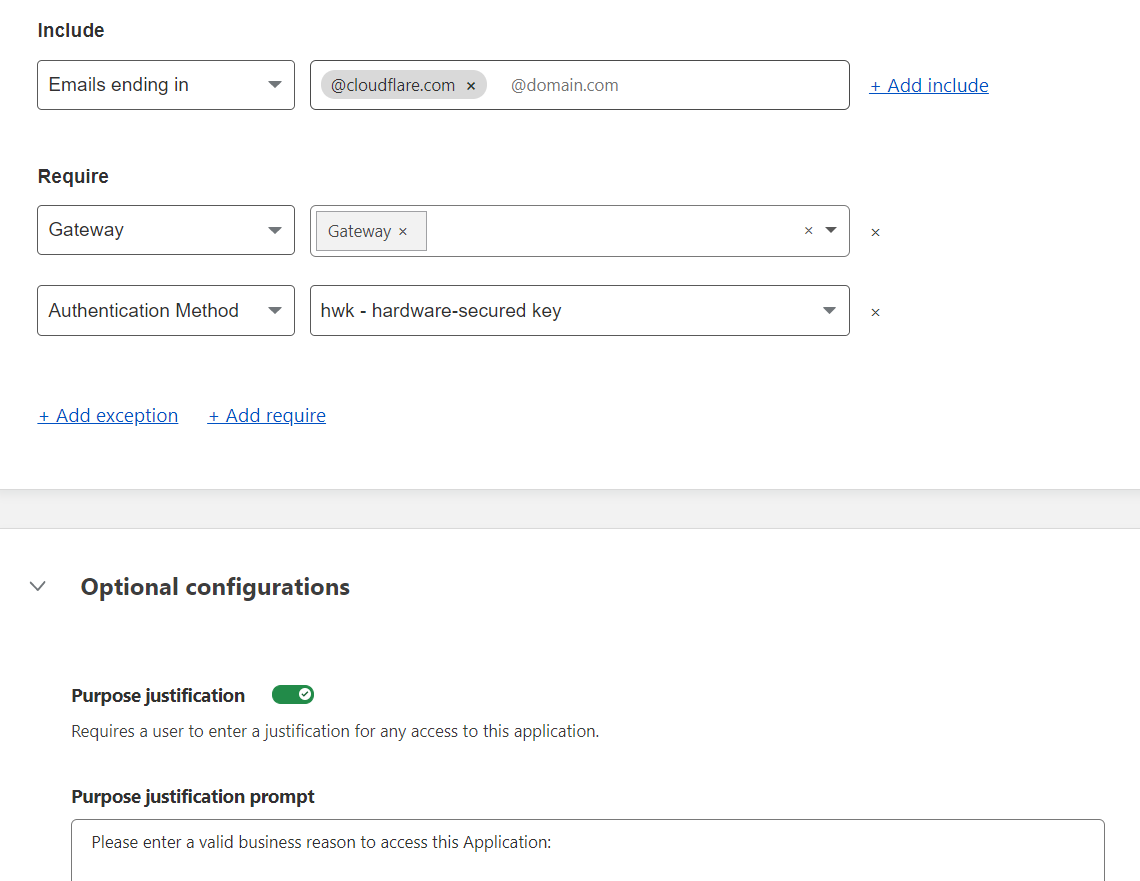

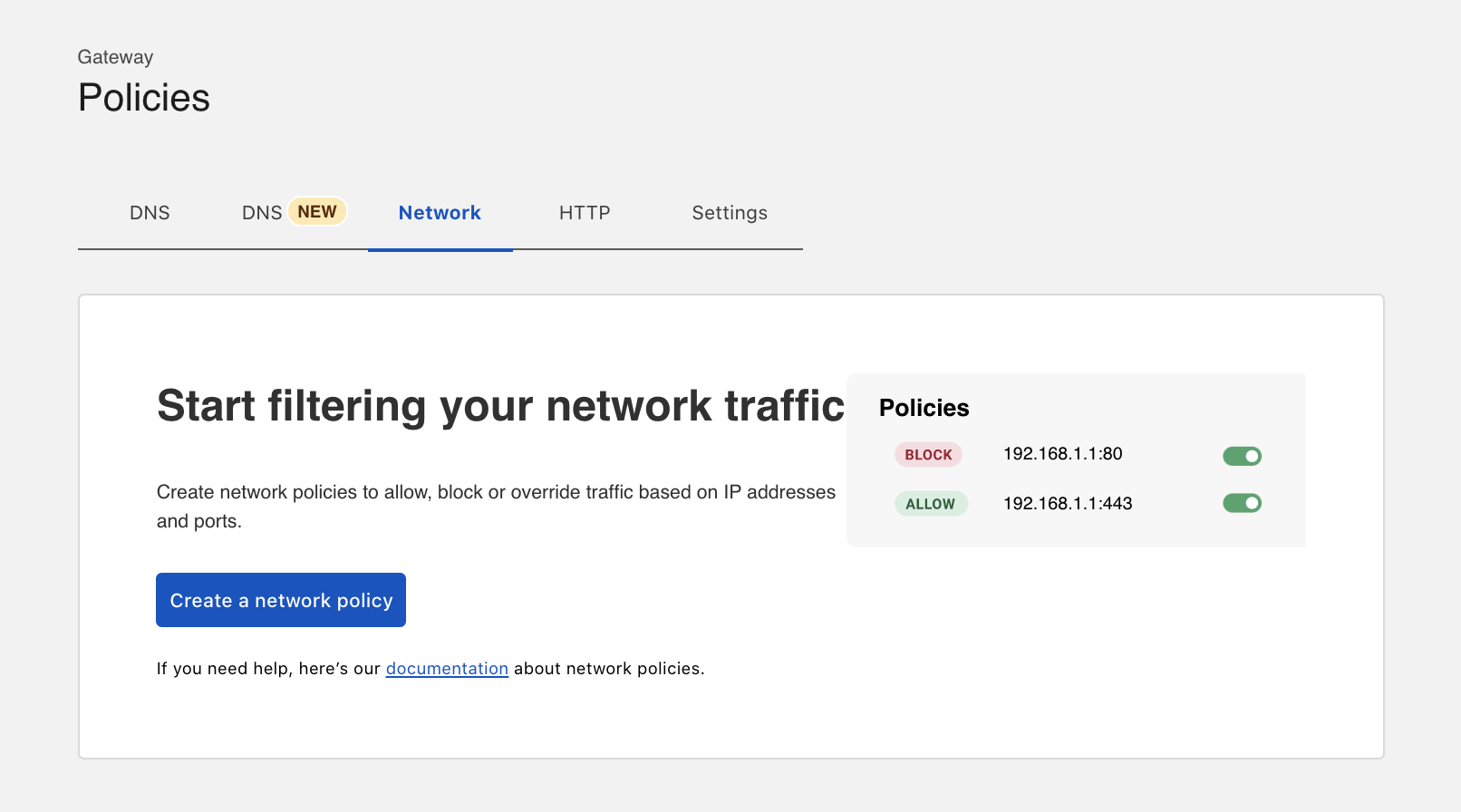

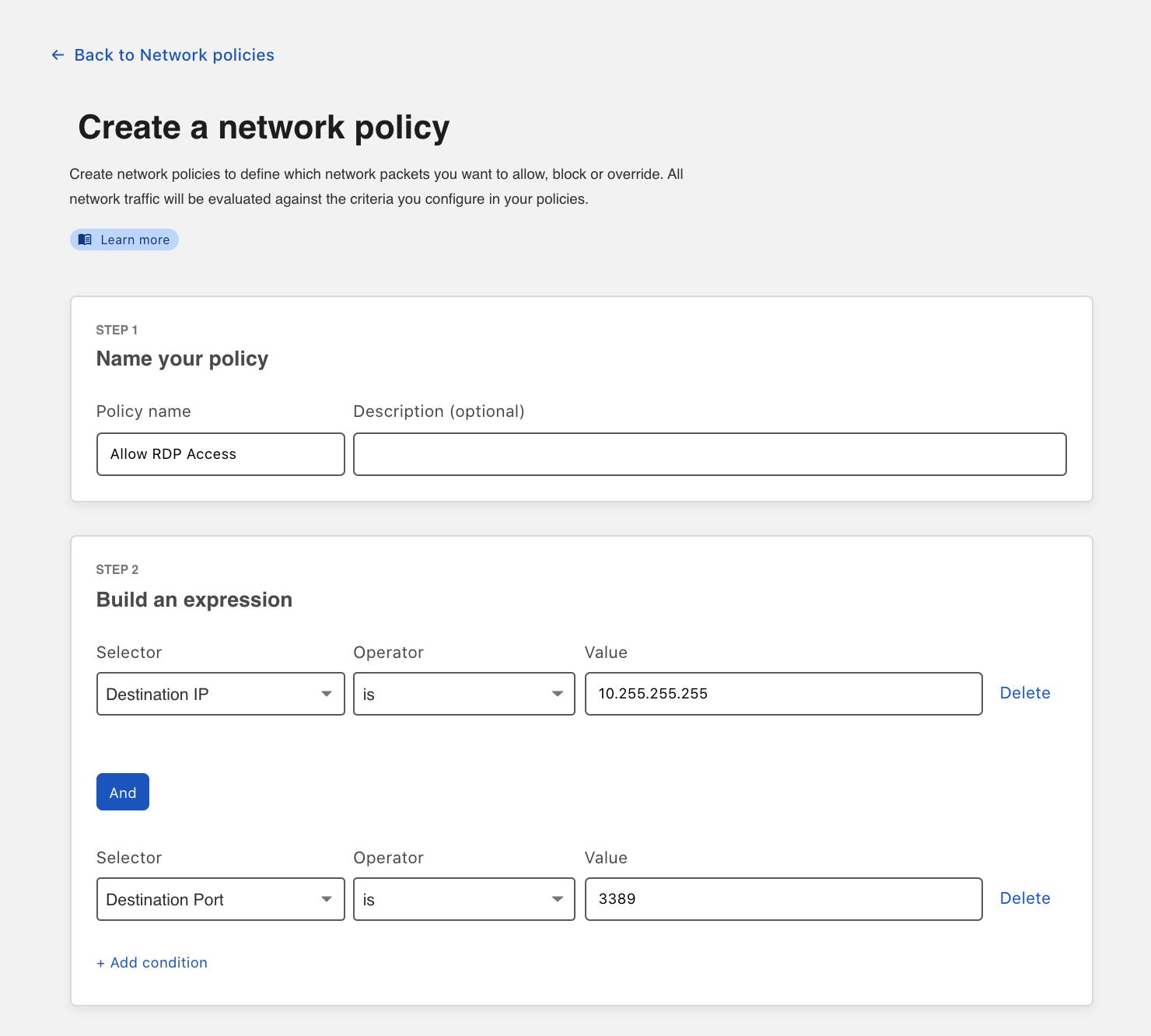

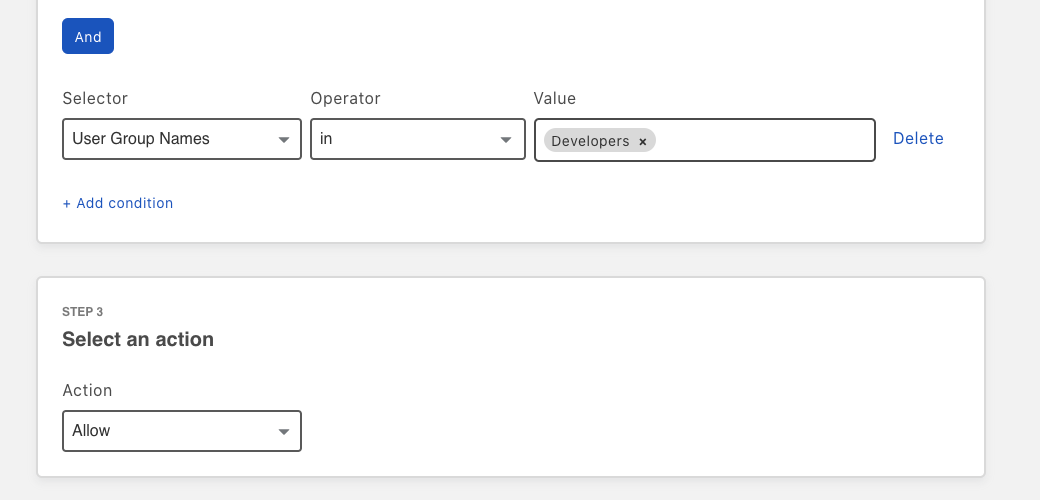

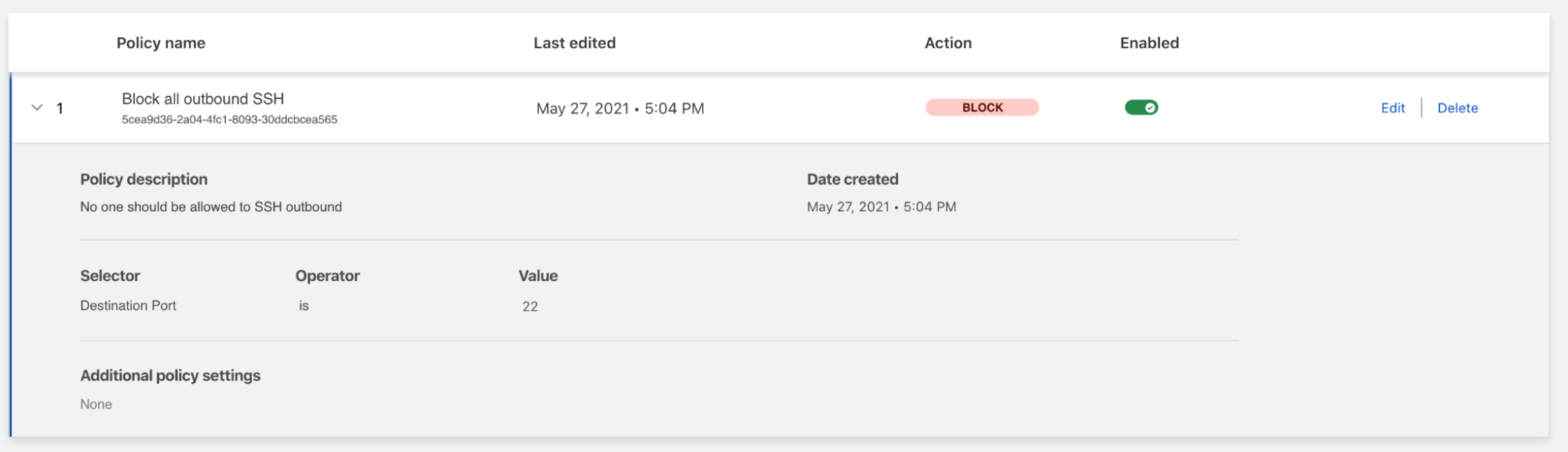

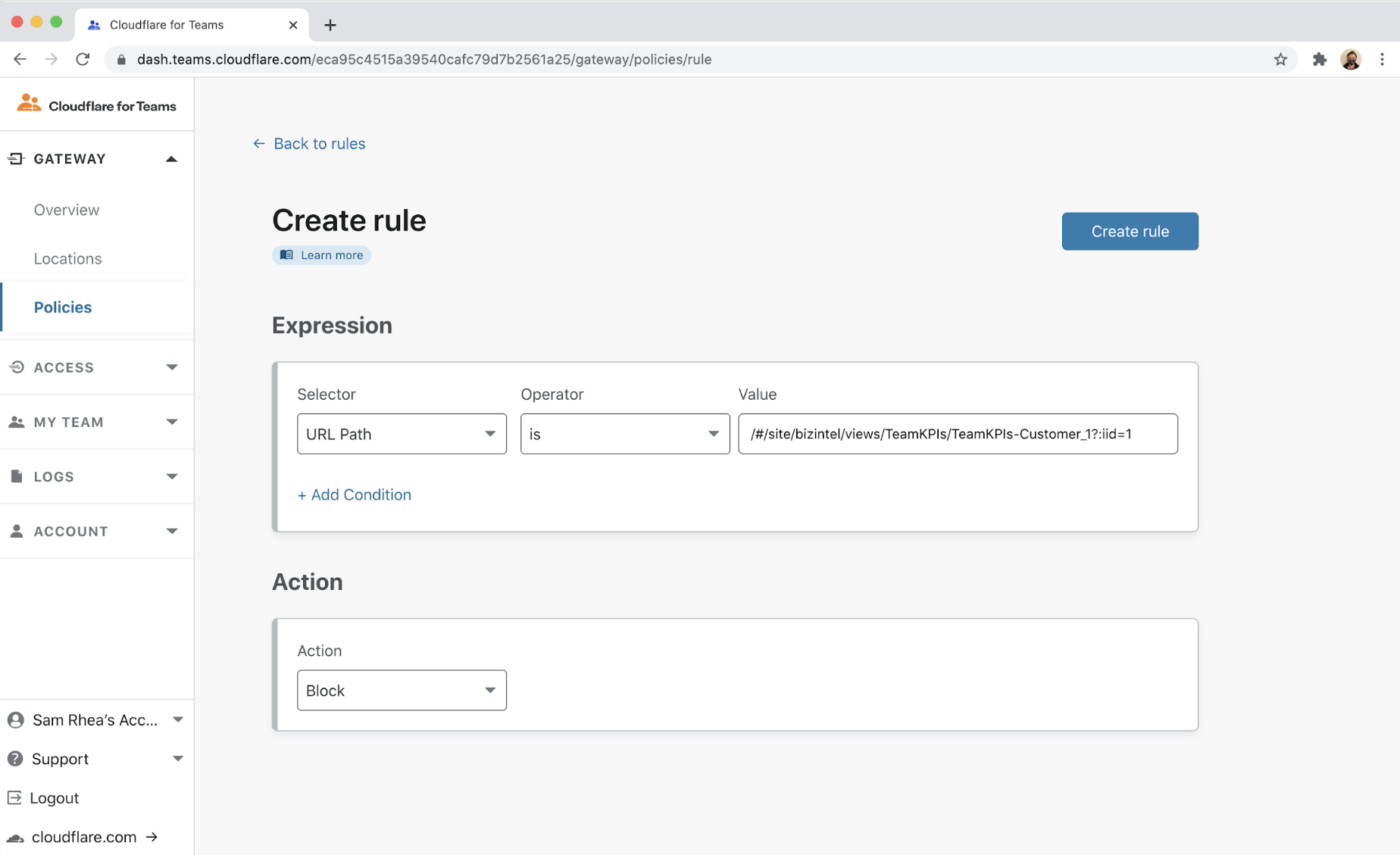

Apply comprehensive security policies.

CIOs need a solution that integrates tightly with their existing identity and endpoint security providers and provides Zero Trust protection at all layers of the OSI stack across traffic within their network. This includes end-to-end encryption, microsegmentation, sophisticated and precise filtering and inspection for traffic between entities on their network (“East/West”) and to/from the Internet (“North/South”), and protection from other threats like DDoS and bot attacks.

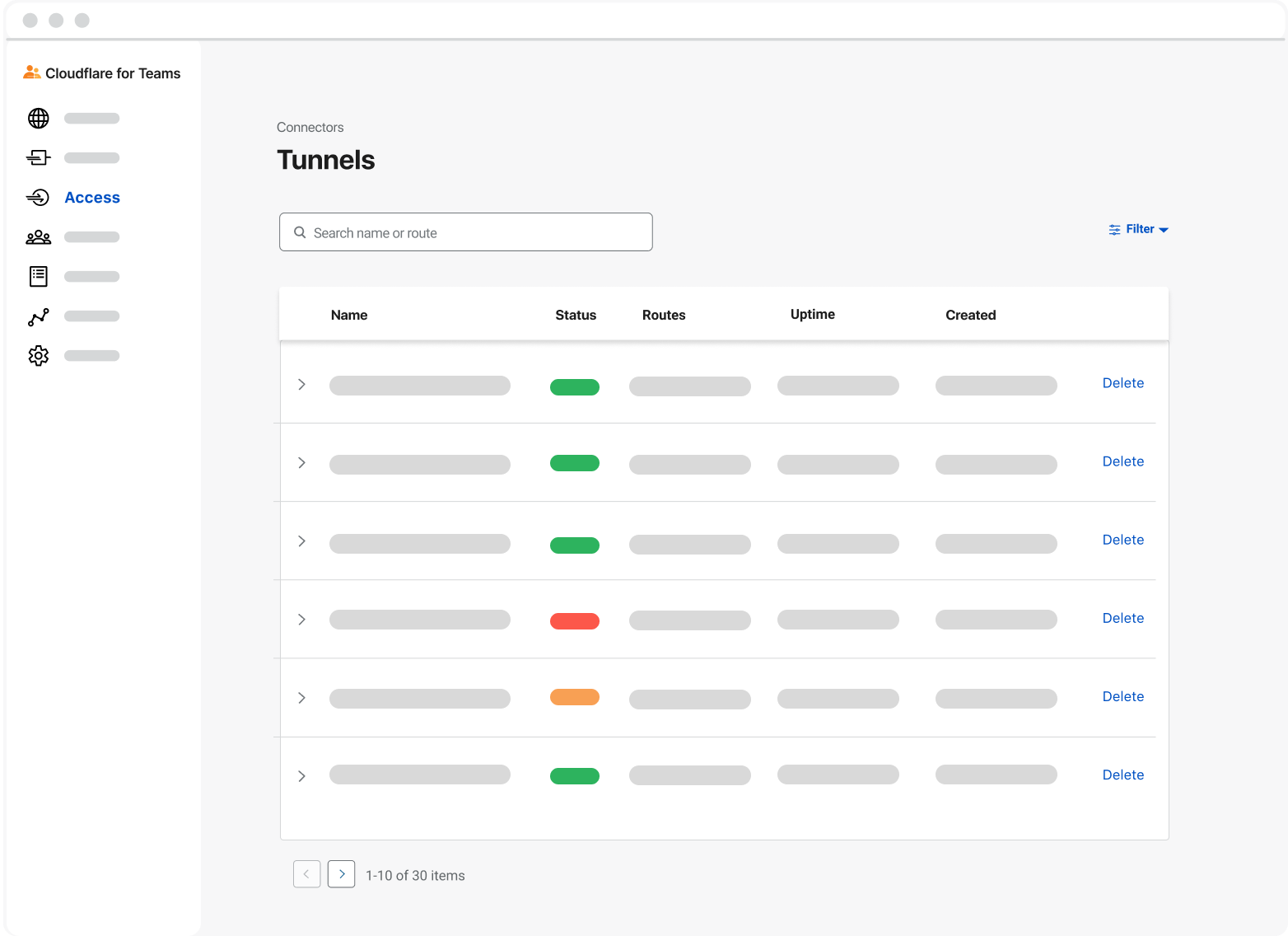

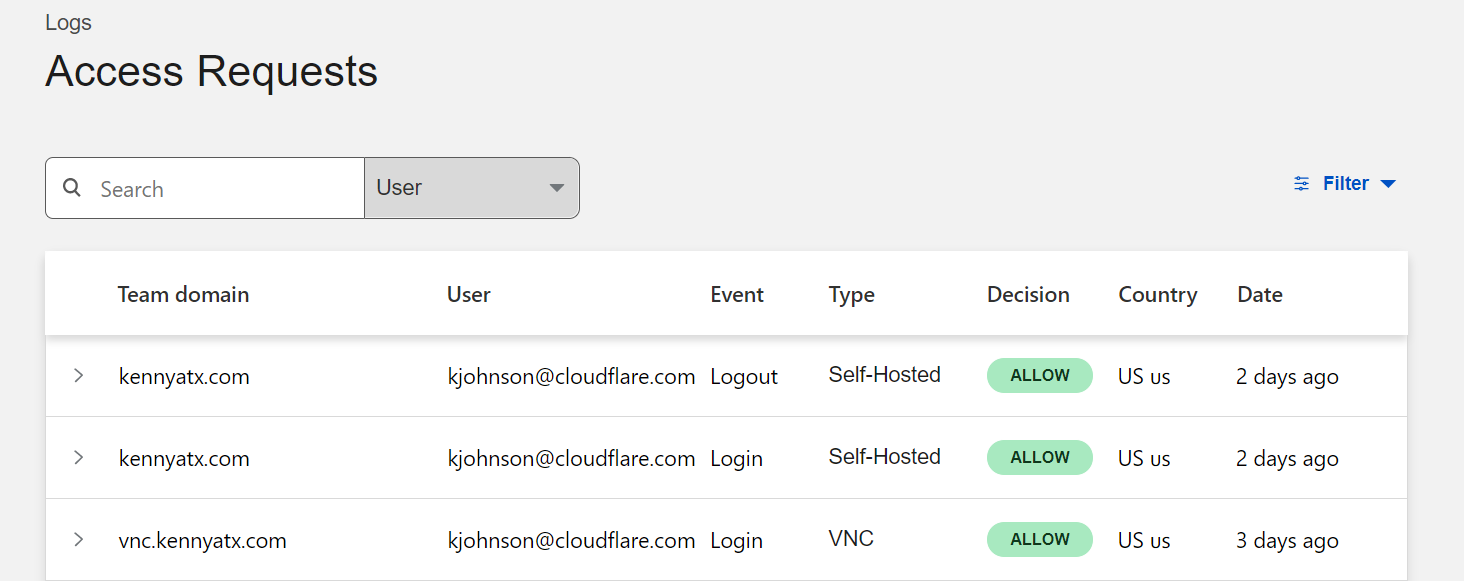

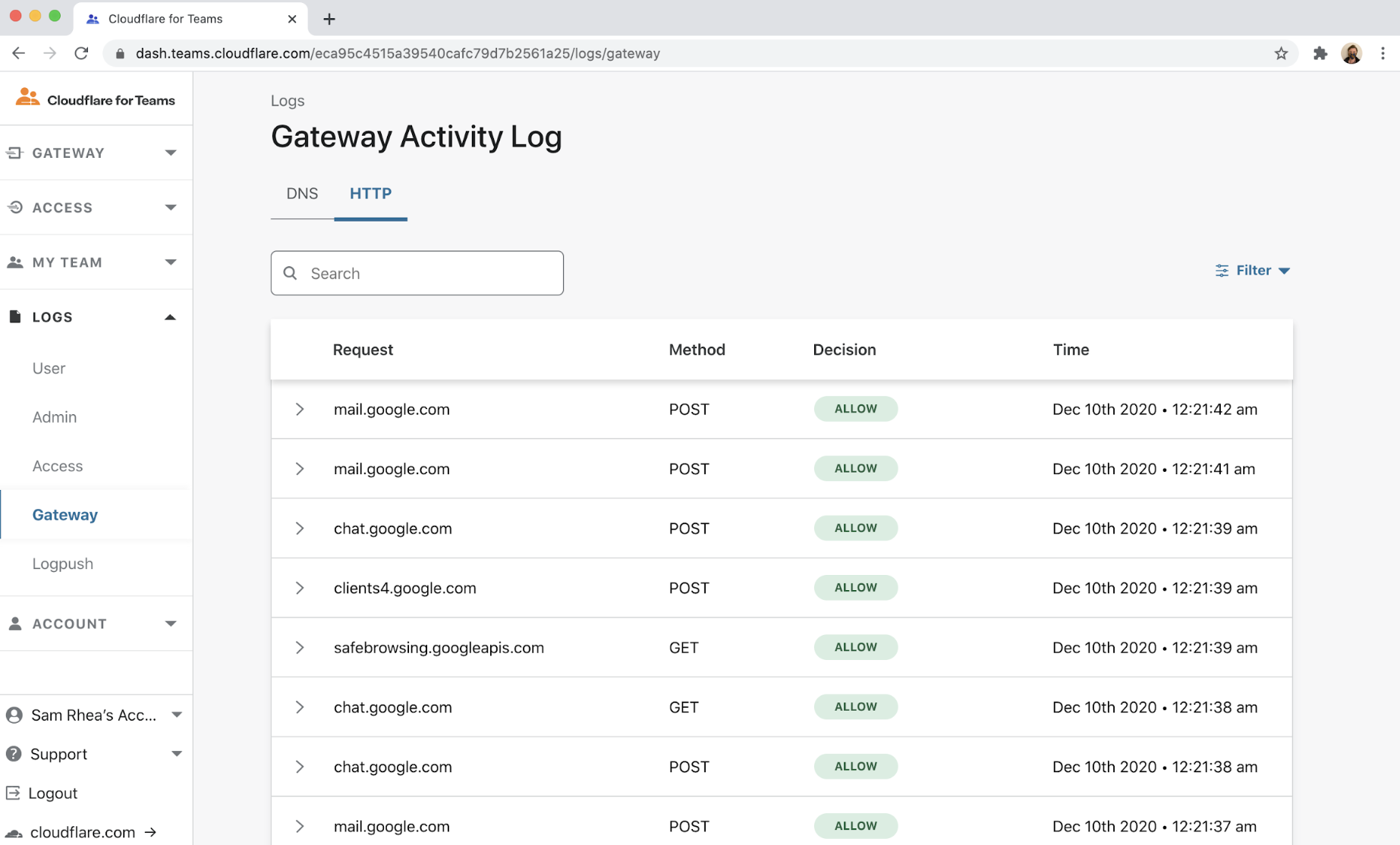

Visualize and provide insight on traffic.

At a base level, CIOs need to understand the full picture of their traffic: who’s accessing what resources and what does performance (latency, jitter, packet loss) look like? But beyond providing the information necessary to answer basic questions about traffic flows and user access, next-generation visibility tools should help users understand trends and highlight potential problems proactively, and they should provide easy-to-use controls to respond to those potential problems. Imagine logging into one dashboard that provides a comprehensive view of your network’s attack surface, user activity, and performance/traffic health, receiving customized suggestions to tighten security and optimize performance, and being able to act on those suggestions with a single click.
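As a rough illustration of the three performance measures named above, the following Python sketch summarizes a series of round-trip probes. The input format (a list of round-trip times in milliseconds, with `None` for a lost probe) and the jitter definition (mean absolute difference between consecutive replies) are assumptions made for this example; other definitions of jitter exist.

```python
from statistics import mean


def summarize_probes(rtts_ms):
    """Summarize round-trip probes; None marks a probe that got no reply."""
    if not rtts_ms:
        raise ValueError("need at least one probe")
    replies = [r for r in rtts_ms if r is not None]
    loss_pct = 100.0 * (len(rtts_ms) - len(replies)) / len(rtts_ms)
    latency = mean(replies) if replies else None
    # Simplified jitter: mean absolute change between consecutive replies.
    diffs = [abs(b - a) for a, b in zip(replies, replies[1:])]
    jitter = mean(diffs) if diffs else 0.0
    return {"latency_ms": latency, "jitter_ms": jitter, "loss_pct": loss_pct}
```

For example, `summarize_probes([20.0, 24.0, None, 22.0])` reports a mean latency of 22.0 ms, jitter of 3.0 ms, and 25% packet loss.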

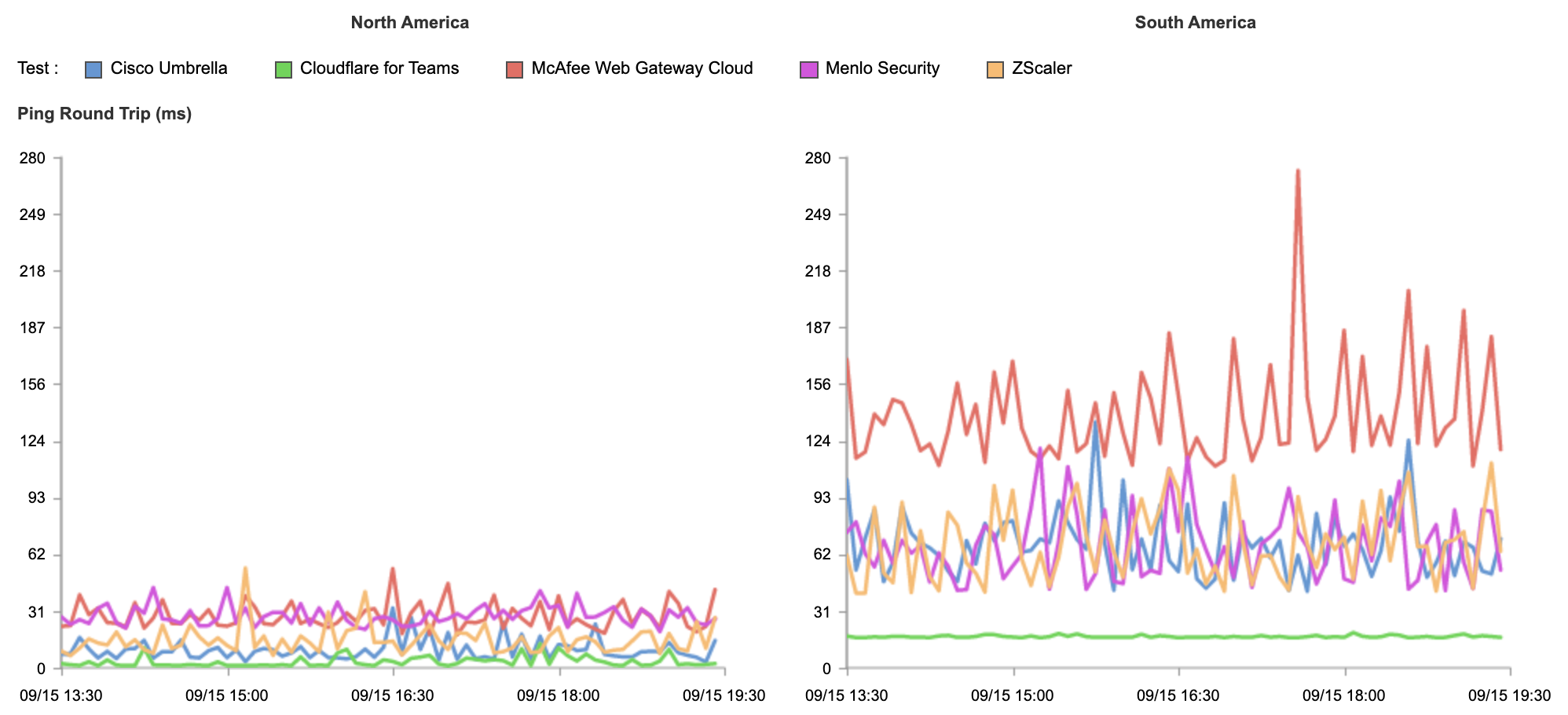

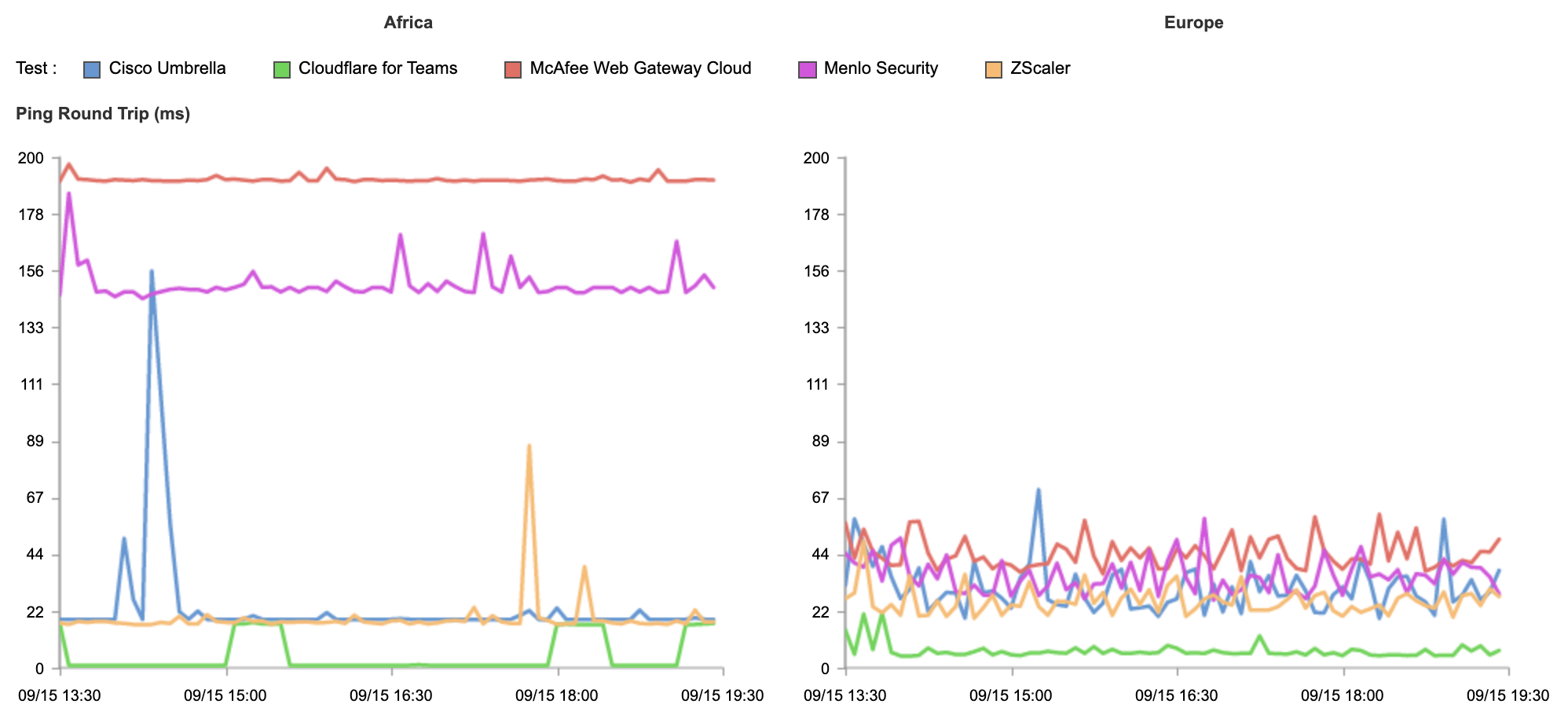

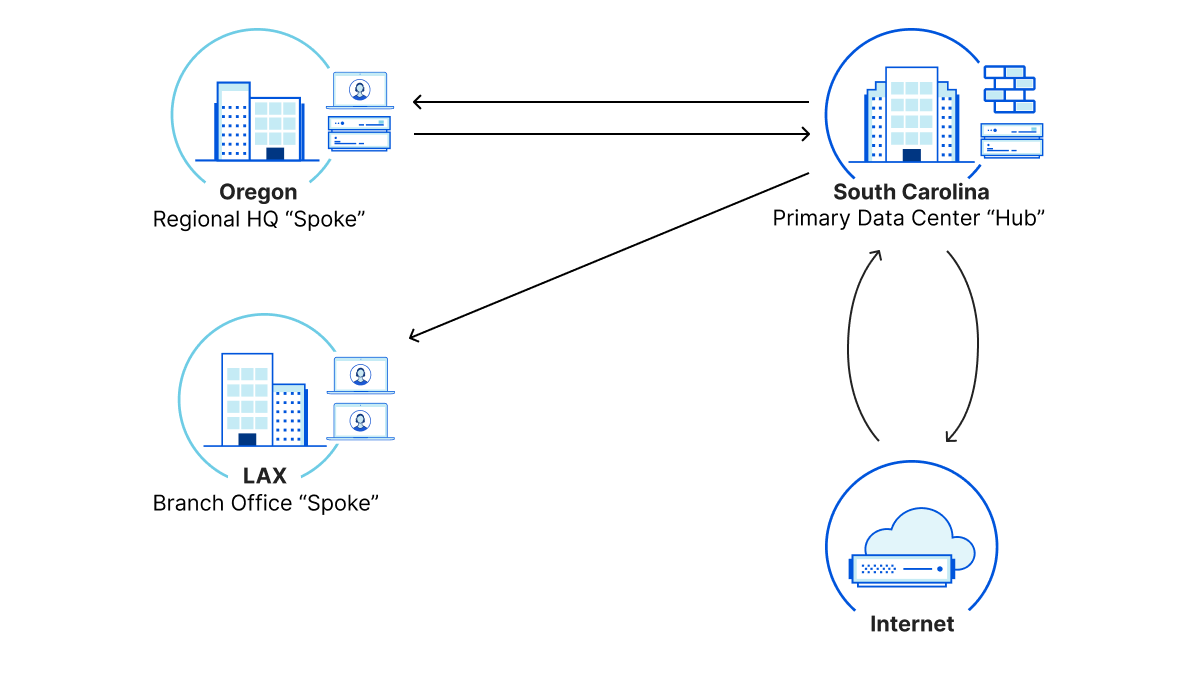

Better quality of experience, everywhere in the world

More classic critiques of the public Internet: it’s slow, unreliable, and increasingly subject to complicated regulations that make operating on the Internet as a CIO of a globally distributed company exponentially more challenging. The platform CIOs need will make intelligent decisions to optimize performance and ensure reliability, while offering flexibility to make compliance easy.

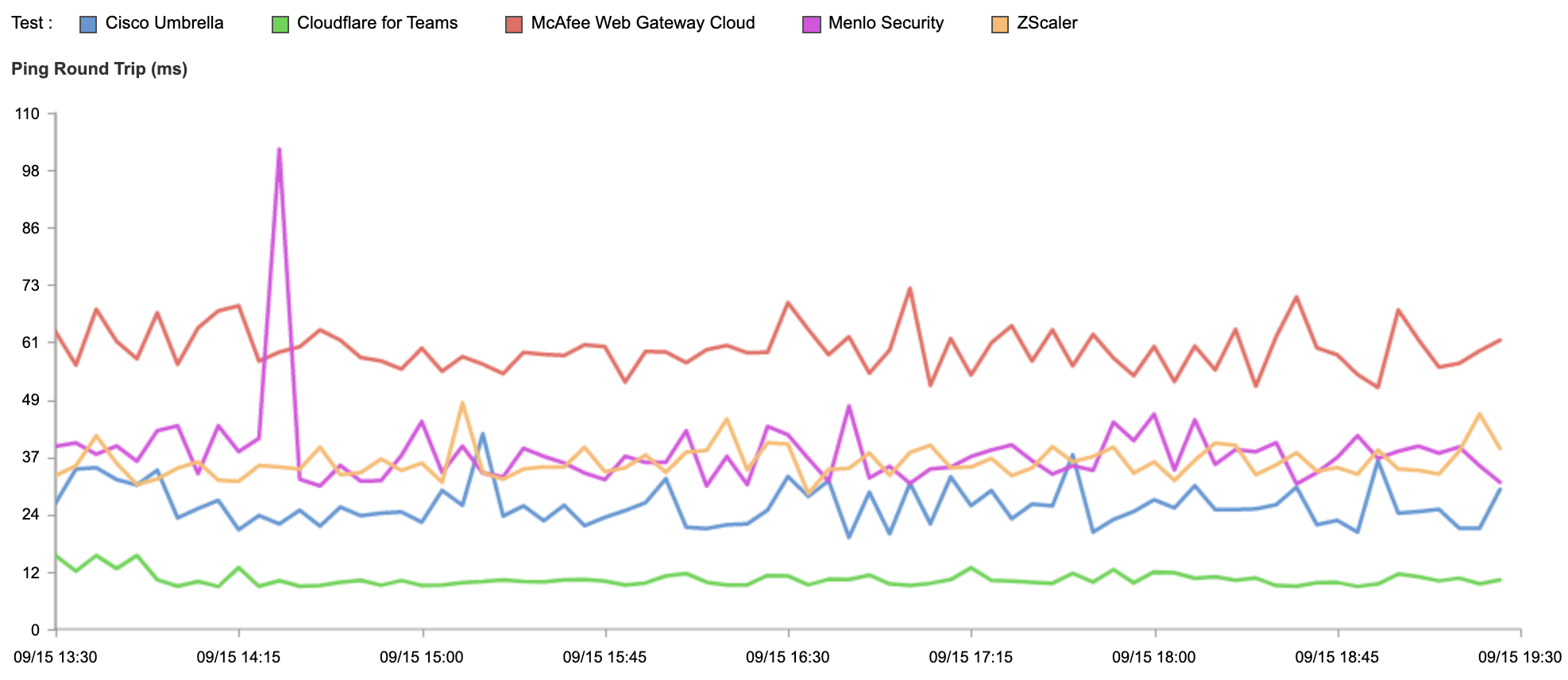

Fast, in the ways that matter most.

Traditional methods of measuring network performance, like speed tests, don’t tell the full story of actual user experience. Next-generation platforms will measure performance holistically and consider application-specific factors, along with using real-time data on Internet health, to optimize traffic end-to-end.

Reliable, despite factors out of your control.

Scheduled downtime is a luxury of the past: today’s CIOs need to operate 24×7 networks with as close as possible to 100% uptime and reachability from everywhere in the world. They need a provider that’s resilient in its own services, but also has the capacity to handle massive attacks with grace and flexibility to route around issues with intermediary providers. Network teams should also not need to take action for their provider’s planned or unplanned data center outages, such as needing to manually configure new data center connections. And they should be able to onboard new locations at any time without waiting for vendors to provision additional capacity close to their network.

Localized and compliant with data privacy regulations.

Data sovereignty laws are rapidly evolving. CIOs need to bet on a platform that will give them the flexibility to adapt as new protections are rolled out across the globe, with one interface to manage their data (not fractured solutions in different regions).

A paradigm shift that’s possible starting today

These changes sound radical and exciting. But they’re also intimidating — wouldn’t a shift this large be impossible to execute, or at least take an unmanageably long time, in complex modern networks? Our customers have proven this doesn’t have to be the case.

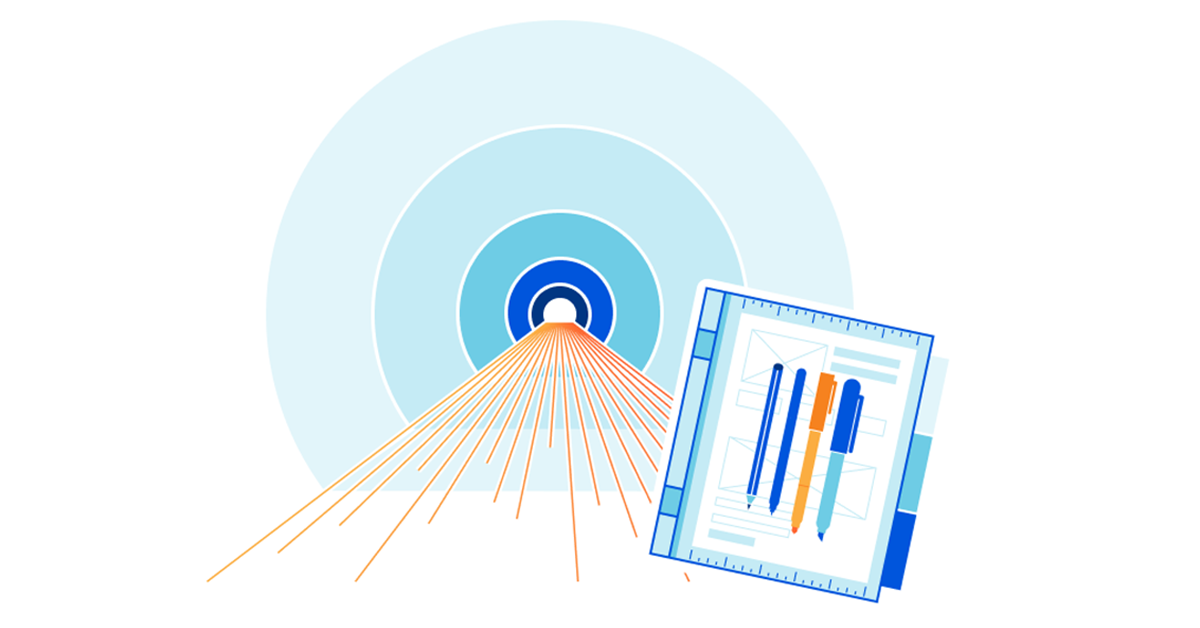

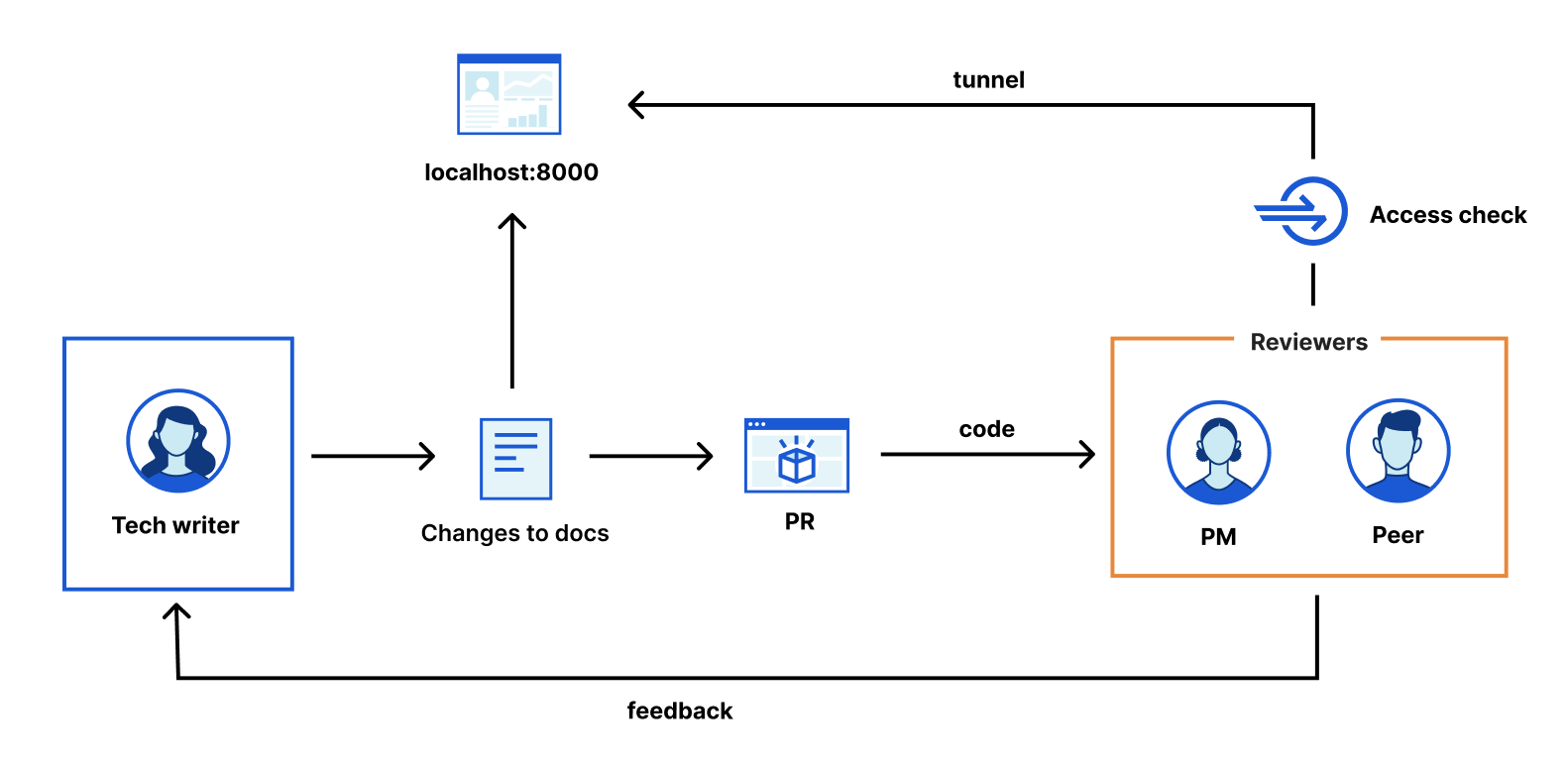

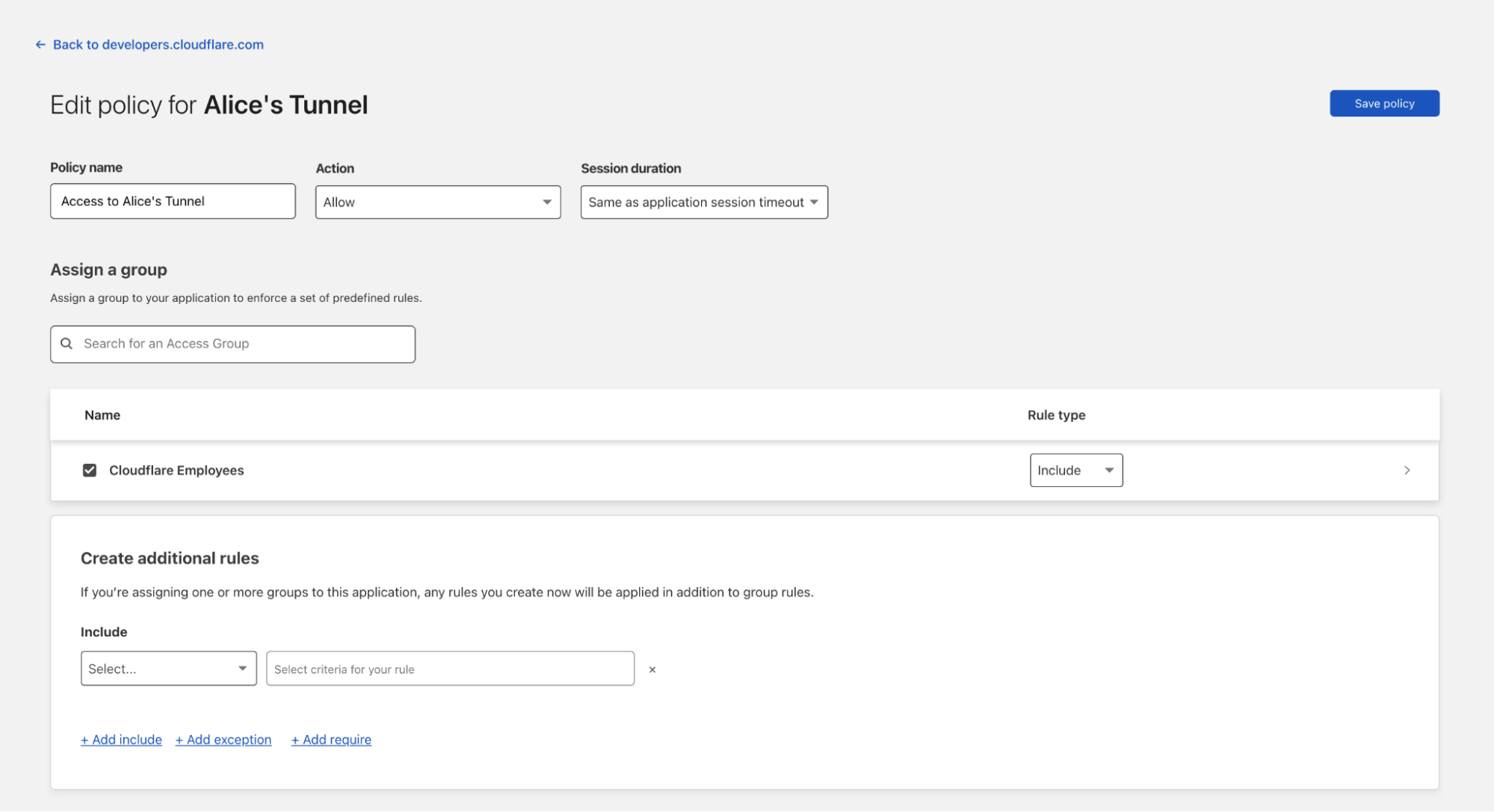

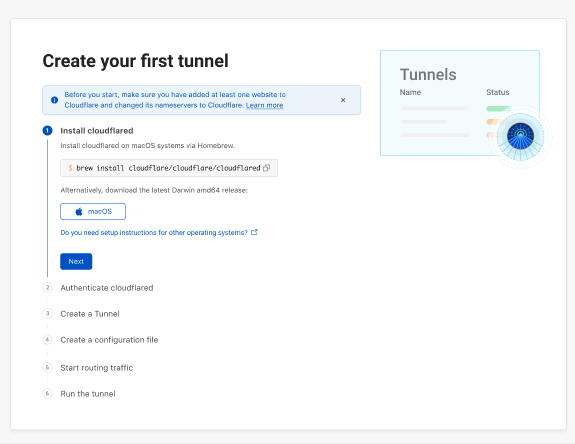

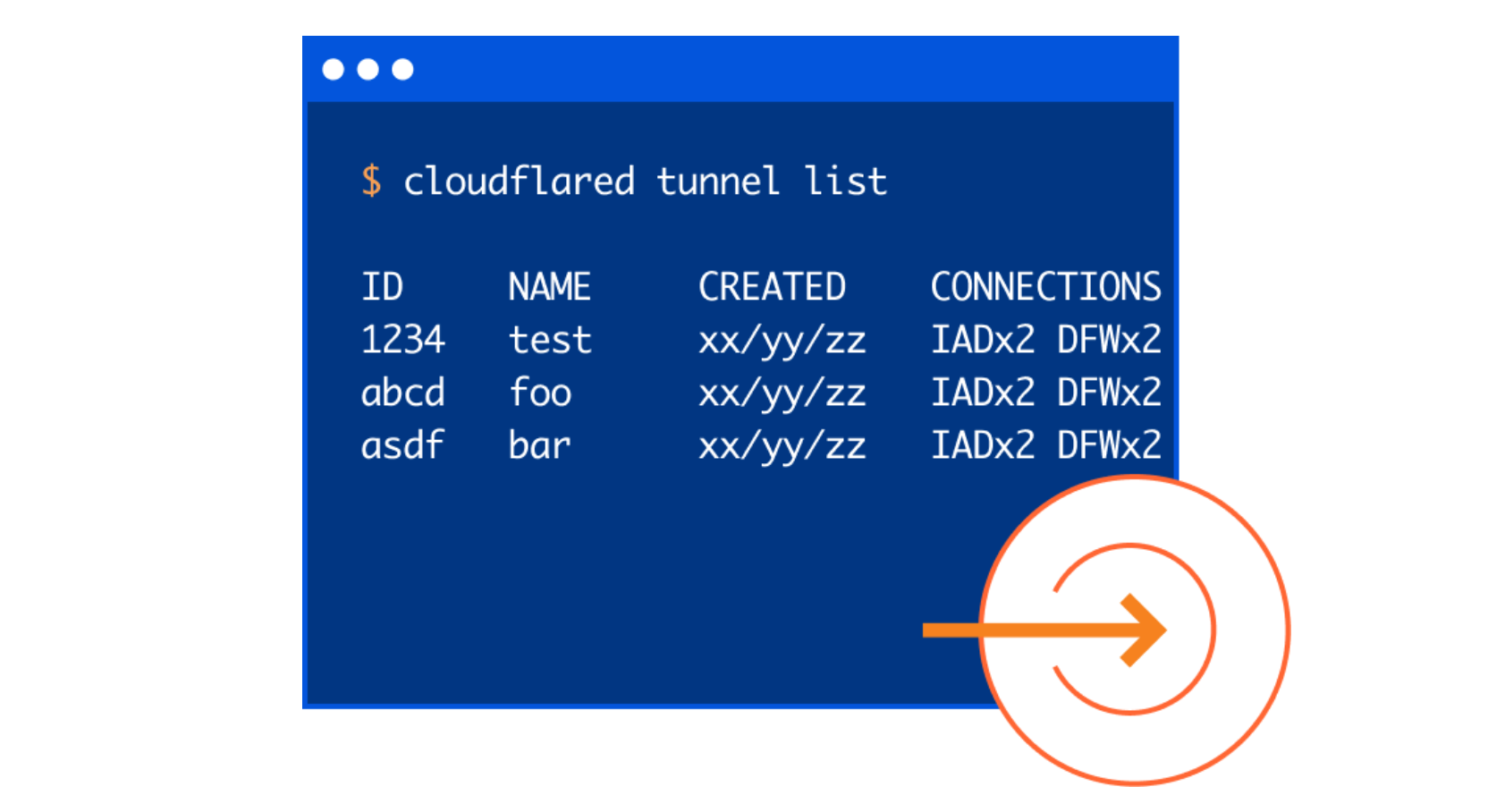

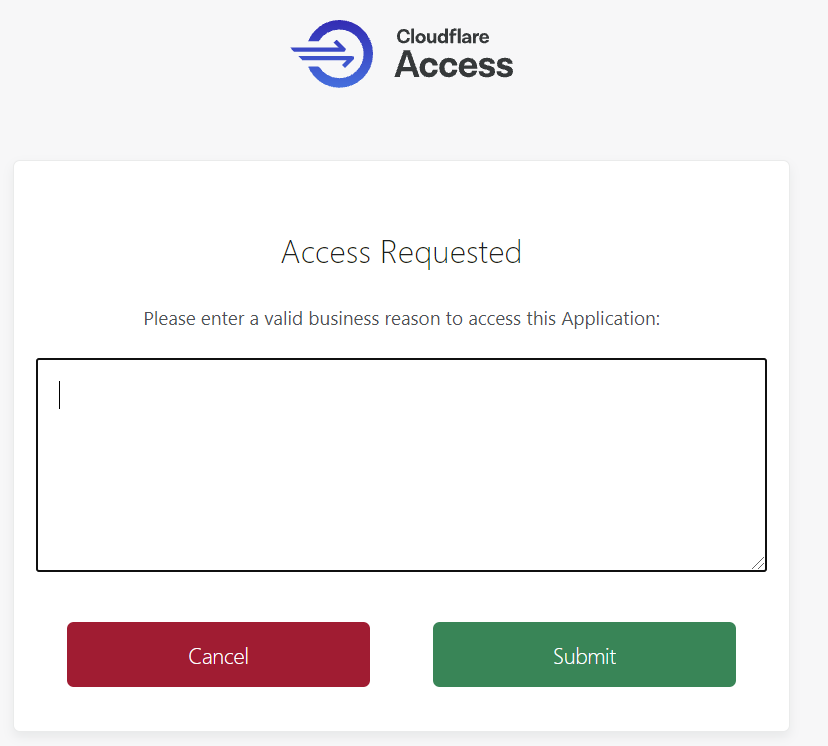

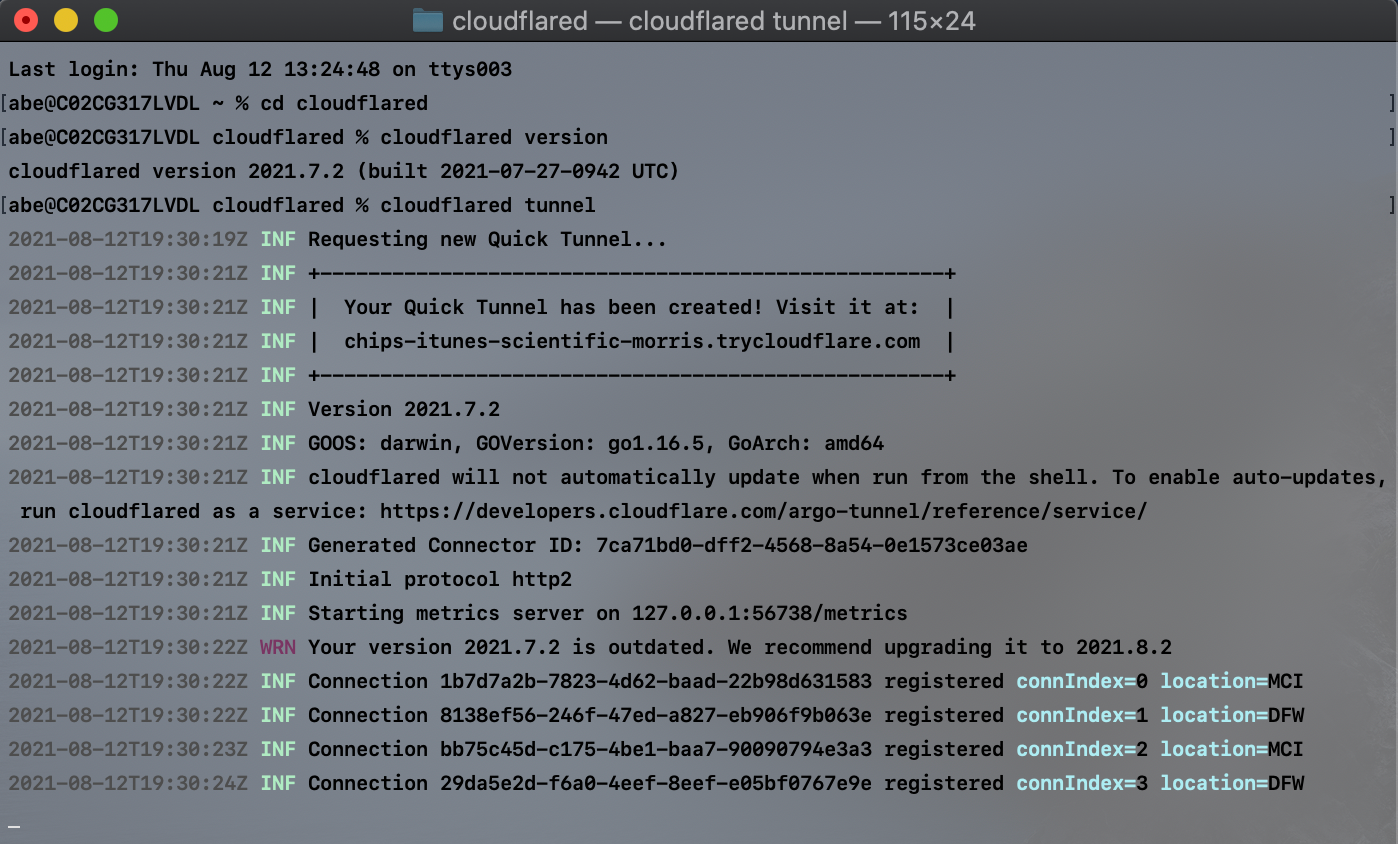

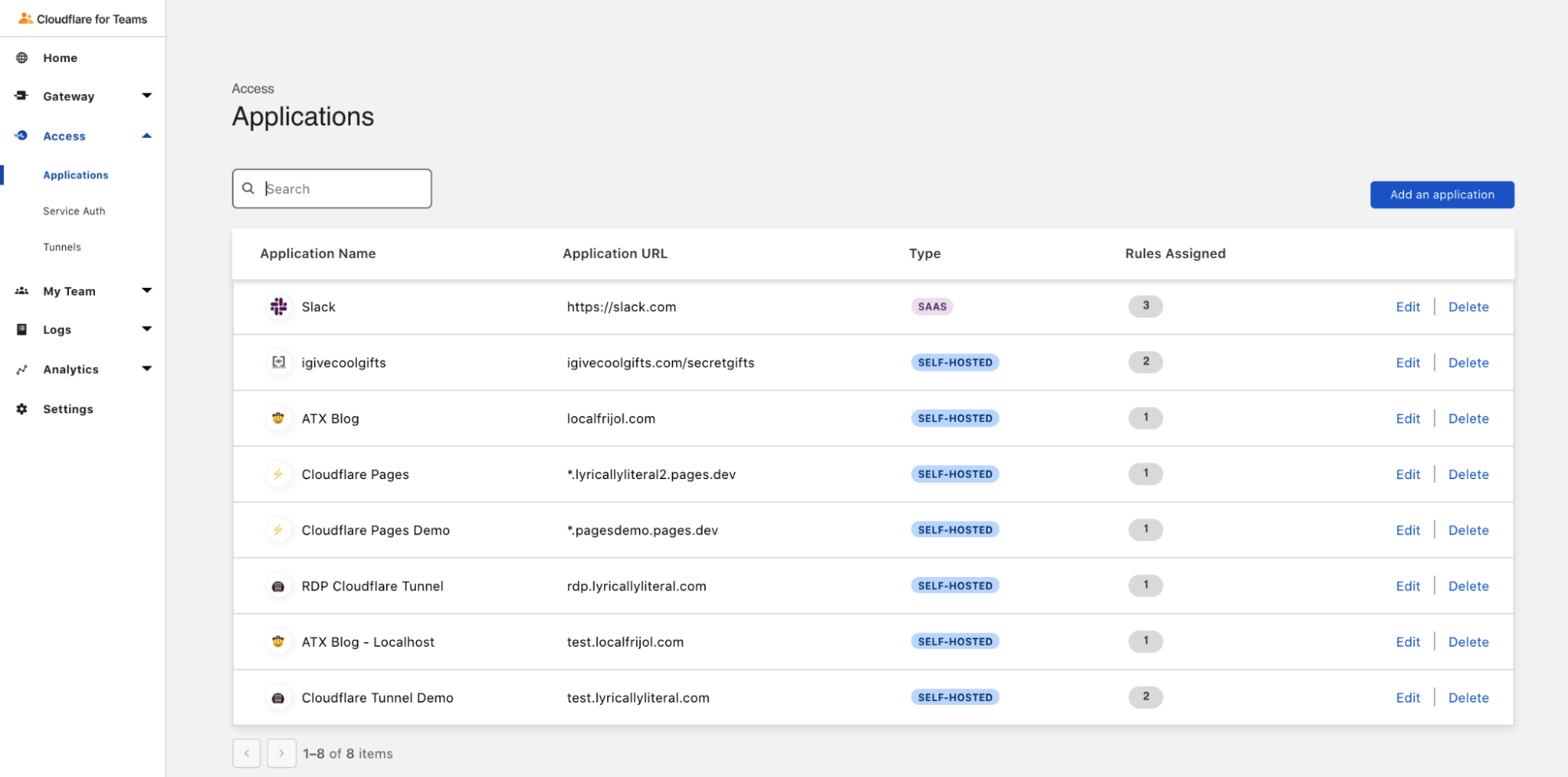

Meaningful change starting with just one flow

Generation 3 platforms should prioritize ease of use. It should be possible for companies to start their Zero Trust journey with just one traffic flow and grow momentum from there. There are many potential angles to start with, but we think one of the easiest is configuring clientless Zero Trust access for one application. Anyone, from the smallest to the largest organizations, should be able to pick an app and prove the value of this approach within minutes.

A bridge between the old & new world

Shifting from network-level access controls (IP ACLs, VPNs, etc.) to application and user-level controls to enforce Zero Trust across your entire network will take time. CIOs should pick a platform that makes it easy to migrate infrastructure over time by allowing:

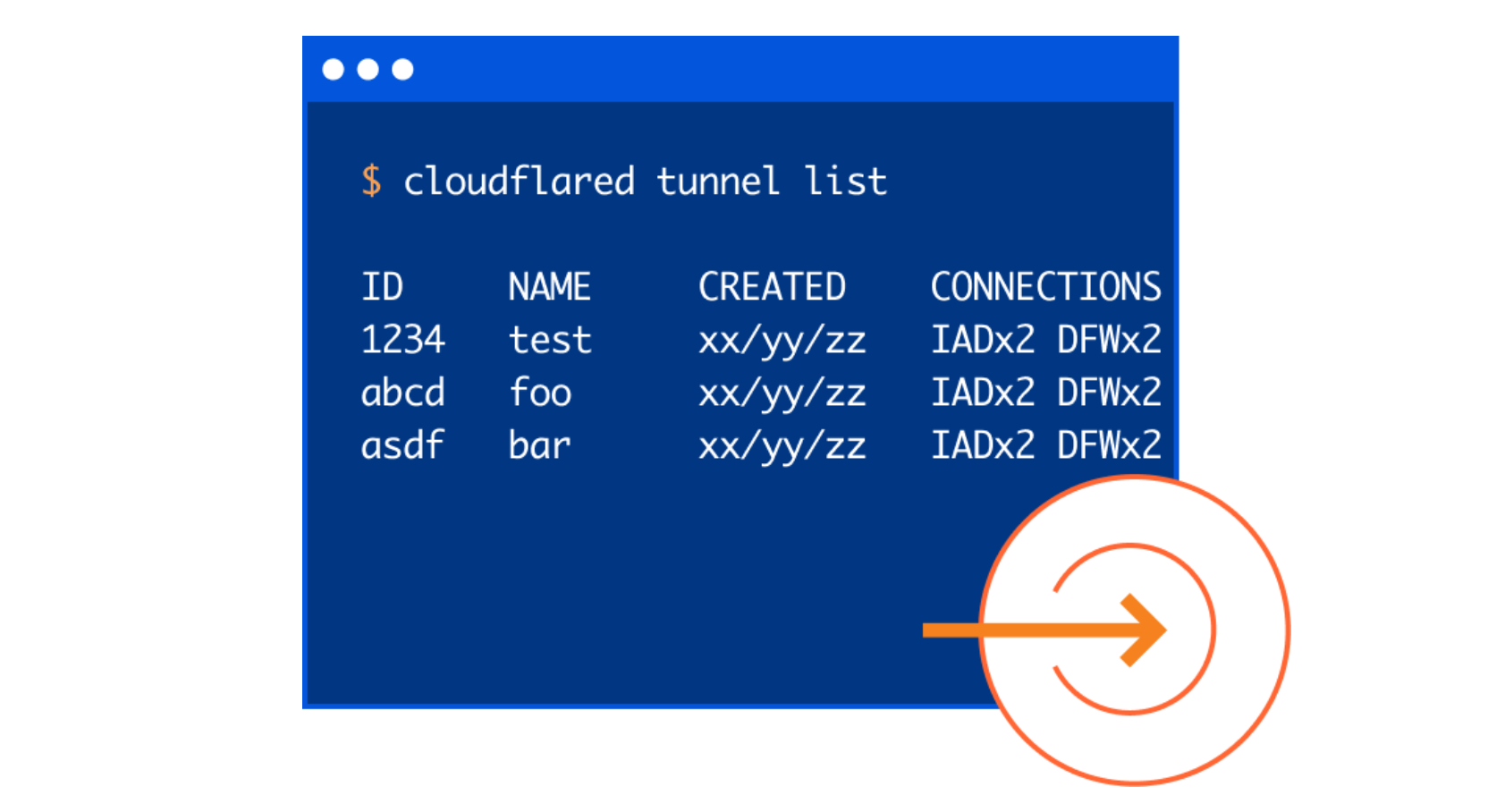

- Upgrading from IP-level to application-level architecture over time: Start by connecting with a GRE or IPsec tunnel, then use automatic service discovery to identify high-priority applications to target for finer-grained connection.

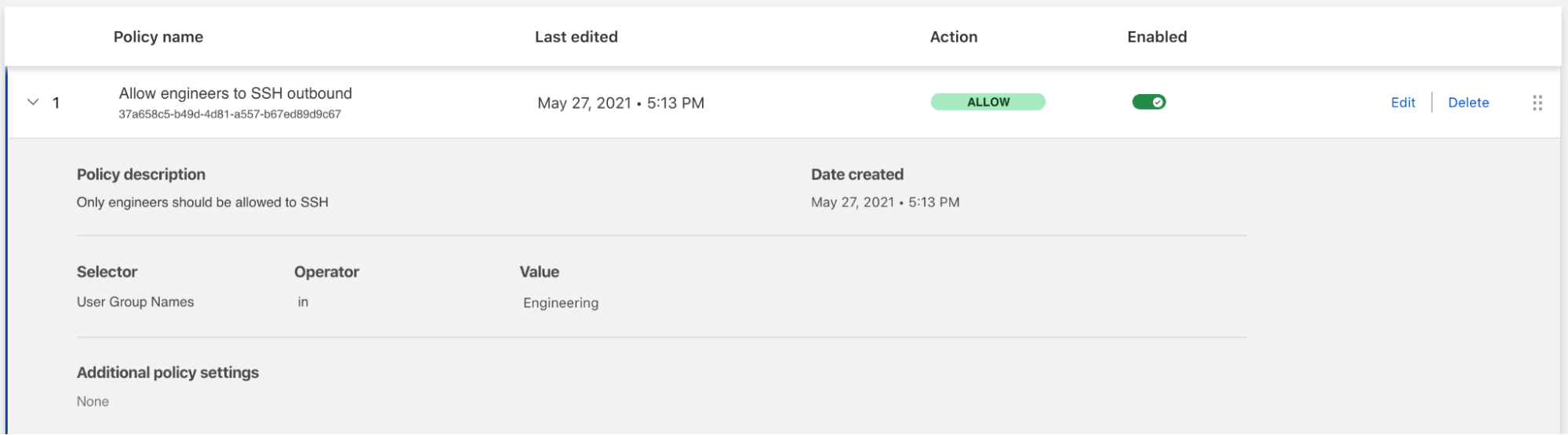

- Upgrading from more open to more restrictive policies over time: Start with security rules that mirror your legacy architecture, then leverage analytics and logs to implement more restrictive policies once you can see who’s accessing what.

- Making changes quick and easy: Design your next-generation network using a modern SaaS interface.
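The "open first, then restrict" step in the list above can be sketched as follows: collect access logs while permissive rules are in place, then propose an allow-list containing only the group and application pairs that were actually observed. The log entry shape (`group` and `app` keys) and the `propose_allow_list` helper are hypothetical; this is not any vendor's log schema, and a real rollout would have a person review the proposal before enforcing it.

```python
from collections import defaultdict


def propose_allow_list(access_log, min_hits=1):
    """Build a candidate allow-list (app -> groups) from observed accesses."""
    counts = defaultdict(int)
    for entry in access_log:
        counts[(entry["group"], entry["app"])] += 1

    proposal = defaultdict(set)
    for (group, app), hits in counts.items():
        # Ignore rare pairs so one-off accesses do not become permanent rules.
        if hits >= min_hits:
            proposal[app].add(group)
    return dict(proposal)
```

Raising `min_hits` makes the proposal stricter, at the cost of possibly excluding legitimate but infrequent access, so the threshold is a judgment call rather than a fixed rule.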

Network Architecture Scorecard: Generation 3

| Characteristic | Score | Description |
|---|---|---|
| Security | ⭐⭐⭐ | Granular security controls are exercised on every traffic flow; attacks are blocked close to their source; technologies like Browser Isolation keep malicious code entirely off of user devices. |
| Performance | ⭐⭐⭐ | Security controls are enforced at location closest to each user; intelligent routing decisions ensure optimal performance for all types of traffic. |
| Reliability | ⭐⭐⭐ | The platform leverages redundant infrastructure to ensure 100% availability; no one device is responsible for holding policy and no one link is responsible for carrying all critical traffic. |
| Cost | ⭐⭐ | Total cost of ownership is reduced by consolidating functions. |
| Visibility | ⭐⭐⭐ | Data from across the edge is aggregated, processed and presented along with insights and controls to act on it. |
| Agility | ⭐⭐⭐ | Making changes to network configuration or policy is as simple as pushing buttons in a dashboard; changes propagate globally within seconds. |
| Precision | ⭐⭐⭐ | Controls are exercised at the user and application layer. Accomplishing “allow only HR to access employee payment data” looks like: Users in HR on trusted devices allowed to access employee payment data |

Cloudflare One is the first built-from-scratch, unified platform for next-generation networks

In order to achieve the ambitious vision we’ve laid out, CIOs need a platform that can combine Zero Trust and network services operating on a world-class global network. We believe Cloudflare One is the first platform to enable CIOs to fully realize this vision.

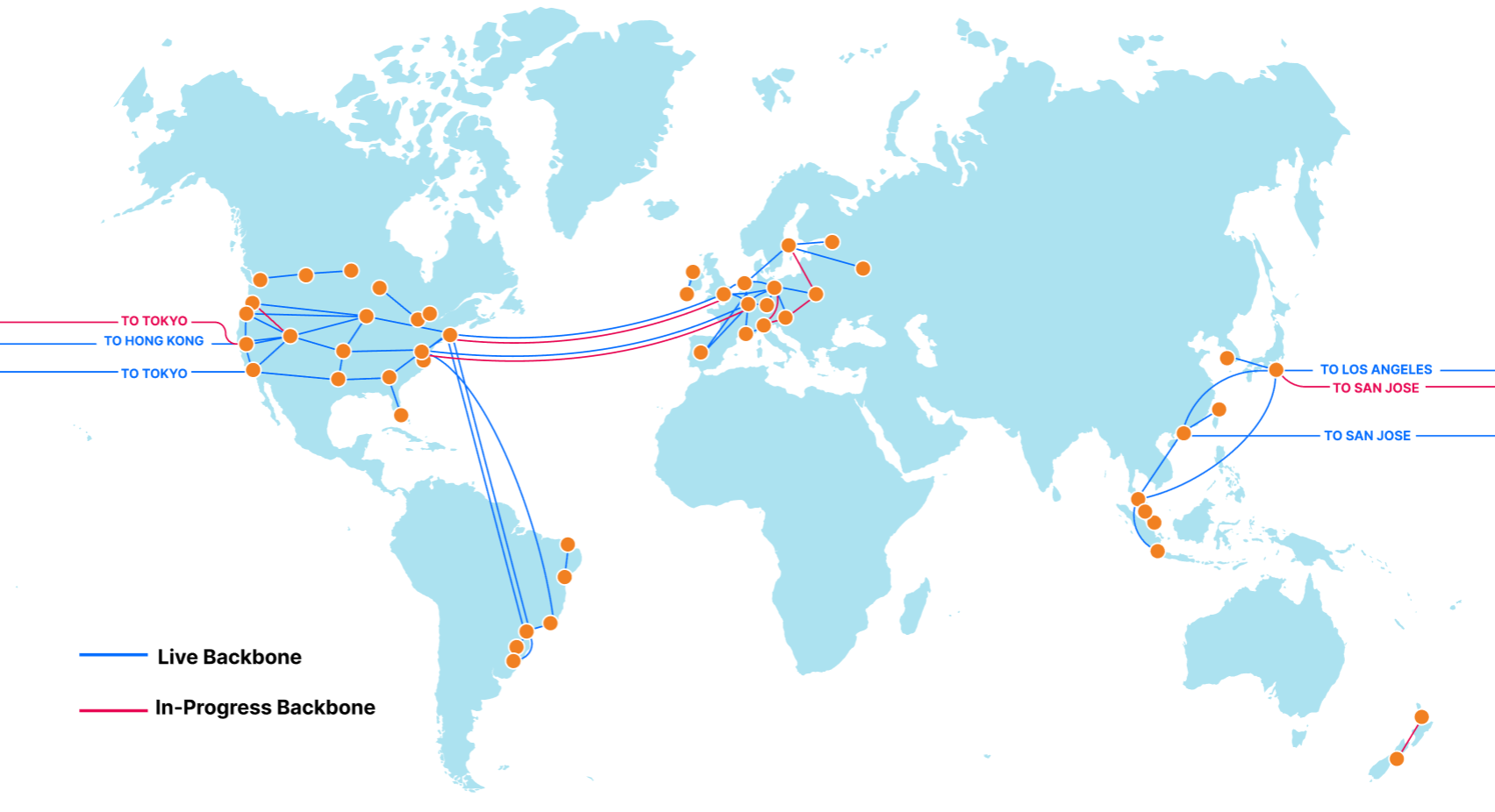

We built Cloudflare One, our combined Zero Trust network-as-a-service platform, on our global network in software on commodity hardware. We initially started on this journey to serve the needs of our own IT and security teams and extended capabilities to our customers over time as we realized their potential to help other companies transform their networks. Every Cloudflare service runs on every server in over 250 cities with over 100 Tbps of capacity, providing unprecedented scale and performance. Our security services themselves are also faster — our DNS filtering runs on the world’s fastest public DNS resolver and identity checks run on Cloudflare Workers, the fastest serverless platform.

We leverage insights from over 28 million requests per second and 10,000+ interconnects to make smarter security and performance decisions for all of our customers. We provide both network connectivity and security services in a single platform with single-pass inspection and single-pane management to fill visibility gaps and deliver exponentially more value than the sum of point solutions could alone. We’re giving CIOs access to our globally distributed, blazing-fast, intelligent network to use as an extension of theirs.

This week, we’ll recap and expand on Cloudflare One, with examples from real customers who are building their next-generation networks on Cloudflare. We’ll dive more deeply into the capabilities that are available today and how they’re solving the problems introduced in Generation 2, as well as introduce some new product areas that will make CIOs’ lives easier by eliminating the cost and complexity of legacy hardware, hardening security across their networks and from multiple angles, and making all traffic routed across our already fast network even faster.

We’re so excited to share how we’re making our dreams for the future of corporate networks a reality. We hope CIOs (and everyone!) reading this are excited to hear about it.