Post Syndicated from Jeff Barr original https://aws.amazon.com/blogs/aws/now-open-aws-asia-pacific-jakarta-region/

The AWS Region in Jakarta, Indonesia, is now open and you can start using it today. The official name is Asia Pacific (Jakarta) and the API name is ap-southeast-3. The AWS Asia Pacific (Jakarta) Region is the tenth active AWS Region in Asia Pacific and mainland China, along with Beijing, Hong Kong, Mumbai, Ningxia, Osaka, Seoul, Singapore, Sydney, and Tokyo. With this launch, AWS now spans 84 Availability Zones within 26 geographic regions around the world. We have also announced plans for 24 more Availability Zones and eight more AWS Regions in Australia, Canada, India, Israel, New Zealand, Spain, Switzerland, and the United Arab Emirates.

Instances and Services

Applications running in this 3-AZ region can use C5, C5d, I3, I3en, M5, M5d, R5, R5d, and T3 instances, and can use a long list of AWS services including Amazon API Gateway, Application Auto Scaling, AWS Certificate Manager (ACM), AWS CloudFormation, Amazon CloudFront, AWS CloudTrail, Amazon CloudWatch, CloudWatch Events, Amazon CloudWatch Logs, AWS CodeDeploy, AWS Config, AWS Database Migration Service, AWS Direct Connect, Amazon DynamoDB, EC2 Auto Scaling, Amazon Elastic Block Store (EBS), Amazon Elastic Compute Cloud (Amazon EC2), Amazon Elastic Container Registry, Amazon Elastic Container Service (Amazon ECS), Elastic Load Balancing (Classic, Network, and Application), Amazon EMR, Amazon ElastiCache, Amazon Elasticsearch Service, Amazon Glacier, AWS Identity and Access Management (IAM), Amazon Kinesis Data Streams, AWS Key Management Service (KMS), AWS Lambda, AWS Marketplace, AWS Organizations, AWS Personal Health Dashboard, Amazon Redshift, Amazon Relational Database Service (RDS), Amazon Aurora, Amazon Route 53 (including Private DNS for VPCs), Amazon Simple Notification Service (SNS), Amazon Simple Queue Service (SQS), Amazon Simple Storage Service (Amazon S3), Amazon Simple Workflow Service (SWF), AWS Step Functions, AWS Support API, AWS Systems Manager, AWS Trusted Advisor, Amazon Virtual Private Cloud (VPC), and VM Import/Export.

Using the Asia Pacific (Jakarta) Region

As is the case with all of the newer AWS Regions, you need to explicitly enable this one in order to be able to create and manage resources within it. To learn how to do this, read Using the Asia Pacific (Hong Kong) Region in my post, Now Open – AWS Asia Pacific (Hong Kong) Region.
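
If you would rather check the opt-in state from code, here is a minimal sketch in Python. It inspects a dictionary shaped like the EC2 DescribeRegions response (requested with all Regions included) and reports whether ap-southeast-3 is usable. The helper names (`opt_in_status`, `is_enabled`, `fetch_regions`) are illustrative and not part of any AWS SDK, the sample data is hand-written, and the live lookup assumes that boto3 is installed and that credentials are configured.

```python
from typing import Any, Dict

JAKARTA = "ap-southeast-3"  # API name given in this post


def opt_in_status(response: Dict[str, Any], region: str = JAKARTA) -> str:
    """Return the OptInStatus for `region` from a DescribeRegions-shaped dict."""
    for entry in response.get("Regions", []):
        if entry.get("RegionName") == region:
            return entry.get("OptInStatus", "unknown")
    return "unknown"


def is_enabled(response: Dict[str, Any], region: str = JAKARTA) -> bool:
    """True when the Region is usable (opted in, or no opt-in required)."""
    return opt_in_status(response, region) in ("opted-in", "opt-in-not-required")


def fetch_regions() -> Dict[str, Any]:
    """Live lookup; assumes boto3 is installed and credentials are configured."""
    import boto3  # imported lazily so the helpers above work without it

    ec2 = boto3.client("ec2", region_name="us-east-1")
    # AllRegions=True includes Regions that are not yet enabled for the account.
    return ec2.describe_regions(AllRegions=True)


# Hand-written sample in the DescribeRegions response shape (not real output).
sample = {
    "Regions": [
        {"RegionName": "ap-southeast-1", "OptInStatus": "opt-in-not-required"},
        {"RegionName": "ap-southeast-3", "OptInStatus": "not-opted-in"},
    ]
}
```

With a real account you would call `is_enabled(fetch_regions())`; a result of `False` means the Region still needs to be enabled before you can create resources in it.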

Connectivity, Edge Locations, and Latency

Jakarta is already home to an Amazon CloudFront edge location that was opened earlier this year, along with two brand-new AWS Direct Connect locations. In addition to this in-country infrastructure, there are more than sixty other edge locations and multiple regional edge caches in Asia, as detailed on the AWS Global Infrastructure page.

The region offers low-latency connections to other AWS regions in the area. Here are the latest numbers:

Many AWS services give you options to replicate your data across multiple AWS regions. You can replicate S3 buckets to multiple destinations (and use Multi-Region Access Points so your users access the closest one), copy EC2 AMIs between regions, set up cross-region Amazon Aurora Read Replicas, replicate container images, and more. You can set up Amazon DynamoDB Global Tables that span any desired regions, and you can set up inter-region VPC peering. To learn more about how to build applications that span regions, be sure to check out our Multi-Region Application Architecture solution.
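
To make one of these options concrete, here is a rough Python sketch of copying an EC2 AMI into the Jakarta Region. It assumes boto3 is installed and credentials are configured; the helper names and the AMI ID used in the usage note are hypothetical placeholders. The one detail worth noting is that the copy request is sent to the destination Region and names the source Region as a parameter.

```python
from typing import Any, Dict

JAKARTA = "ap-southeast-3"  # destination Region (API name from this post)


def copy_image_params(source_region: str, source_image_id: str, name: str) -> Dict[str, Any]:
    """Build the three required keyword arguments for EC2 CopyImage."""
    if source_region == JAKARTA:
        raise ValueError("source and destination Regions are the same")
    return {
        "Name": name,
        "SourceImageId": source_image_id,
        "SourceRegion": source_region,
    }


def copy_ami_to_jakarta(source_region: str, source_image_id: str, name: str) -> str:
    """Start the copy and return the new AMI ID; needs boto3 and credentials."""
    import boto3  # imported lazily so copy_image_params works without it

    # The client targets the destination Region; the source is a parameter.
    ec2 = boto3.client("ec2", region_name=JAKARTA)
    result = ec2.copy_image(**copy_image_params(source_region, source_image_id, name))
    return result["ImageId"]
```

For example, `copy_ami_to_jakarta("ap-southeast-1", "ami-0123456789abcdef0", "web-server-copy")` would start a copy of that (made-up) Singapore AMI into Jakarta, provided the Jakarta Region is enabled for the account.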

AWS in Indonesia

With this launch we are making a long-term commitment to growing our business in Indonesia, and expect to create an average of 24,700 jobs annually over the next 15 years. This includes the direct AWS supply chain (construction, facility maintenance, electricity, and telecommunications) along with the growth that this drives in the broader Indonesian economy.

We have been investing in Southeast Asia and Indonesia for many years. The first AWS office in Jakarta opened in 2018 to help support our customers, and now employs developer advocates, solutions architects, account managers, and partner managers, with hiring for other roles now underway.

Back in 2019 we announced a goal to train and empower hundreds of thousands of Indonesians with proficiency in cloud services by 2025. In collaboration with the Indonesian government and with the help of both AWS partners and educational institutions, we have already trained over 200,000 people. We are doing this through multiple routes and programs including:

Laptops for Builders – This is a free program that teaches cloud fundamentals to high school and vocational students in Bahasa Indonesia.

Scholarship Programs – Working closely with tech-education startup Dicoding, we are offering a free scholarship program for up to 100,000 cloud and back-end developers.

AWS Training & Certification – Attendees are gaining new skills and certifications in areas such as AWS Cloud fundamentals, big data, security, and machine learning, with several training options available.

AWS Customers in Indonesia

We have many amazing customers in Indonesia! Here are a few success stories:

Traveloka is a lifestyle superapp with a focus on Indonesia, Thailand, Vietnam, Singapore, Malaysia, the Philippines, and Australia. They offer customers in those countries an end-to-end solution that spans travel, local services, and financial services, all powered by AWS. The company was born in the cloud, and counts on AWS to let them build apps quickly and with high scalability. The Traveloka app has been downloaded over 60 million times, making it the most popular travel and lifestyle booking app in Southeast Asia.

Halodoc is an Indonesian digital health startup. They are currently running a digital reservation program to help Indonesian citizens book and receive their COVID-19 vaccinations, while also giving the government an easier way to monitor and evaluate the vaccine rollout. During the pandemic, they have also helped to provide testing and telemedicine services, all powered by a digital platform that runs on AWS and that allows them to scale in real time according to market demand.

Under the national movement of Learning Freedom (“Merdeka Belajar”), the Indonesian government is working to allow students to access educational resources from anywhere and at any time. Simak Online allows 300,000 students from 430 schools across Jakarta to access their learning materials and assignments, complete homework, take exams, and participate in online forum discussions. Previously hosted on-premises, Simak Online moved to AWS shortly before COVID-19 broke out in Indonesia. Before the move, they could support exams at just 50 schools simultaneously. Thanks to AWS, they can now scale up and down as needed, support the national movement, and allow students to learn online and on demand.

A translated version of this post is available on the AWS Indonesia Blog.

— Jeff;

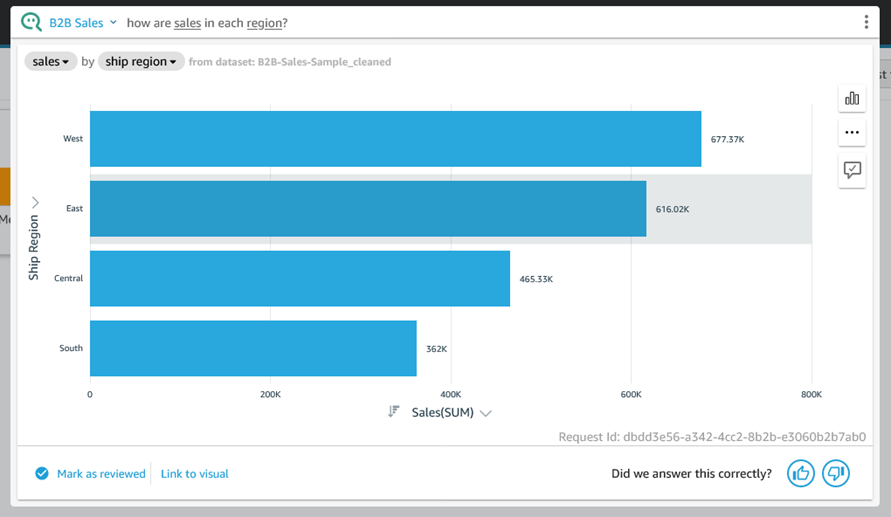

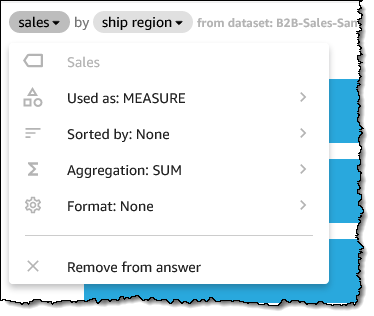

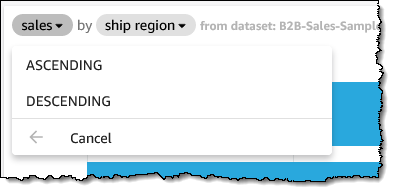

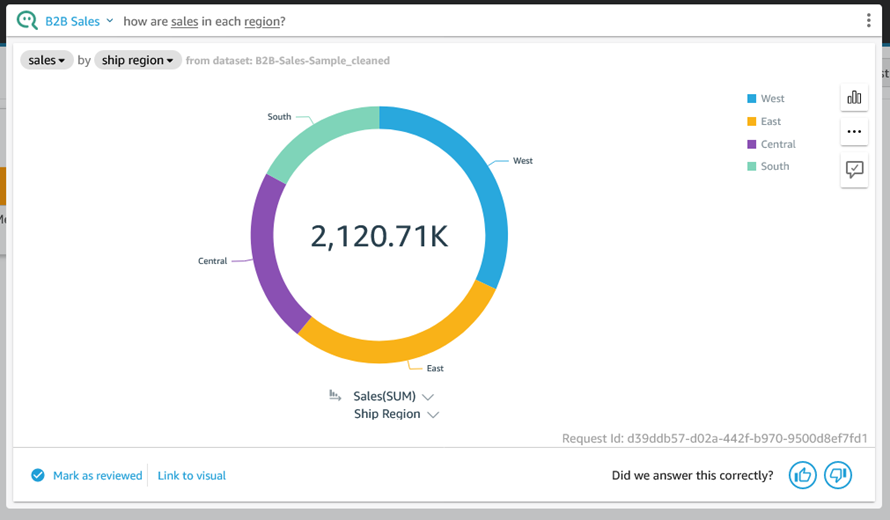

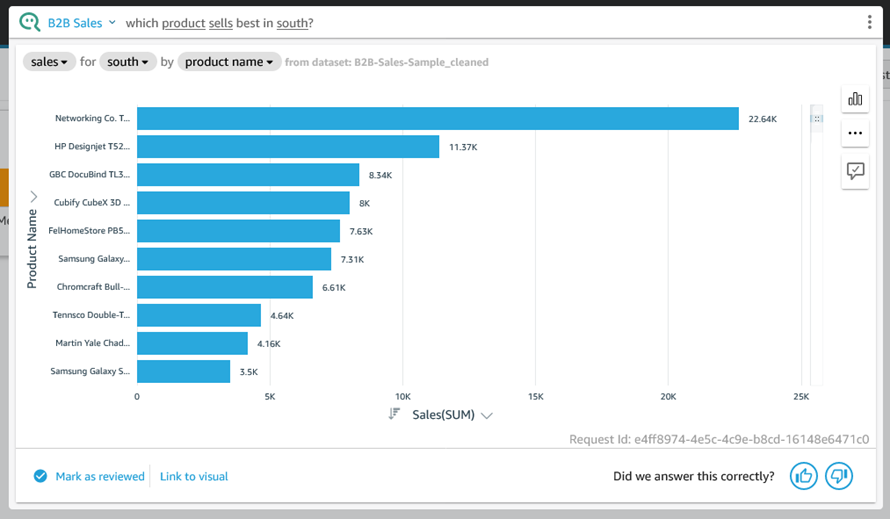

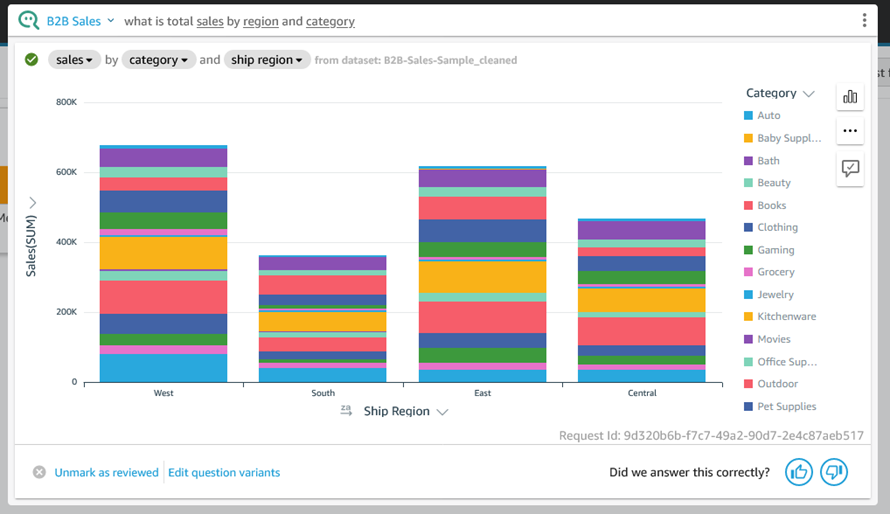

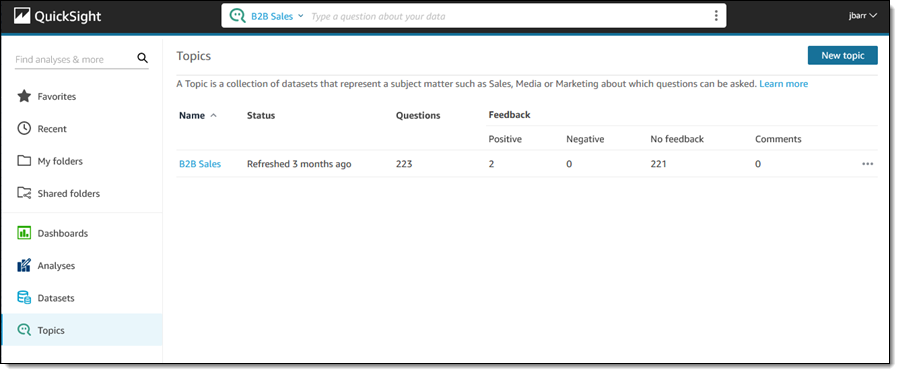

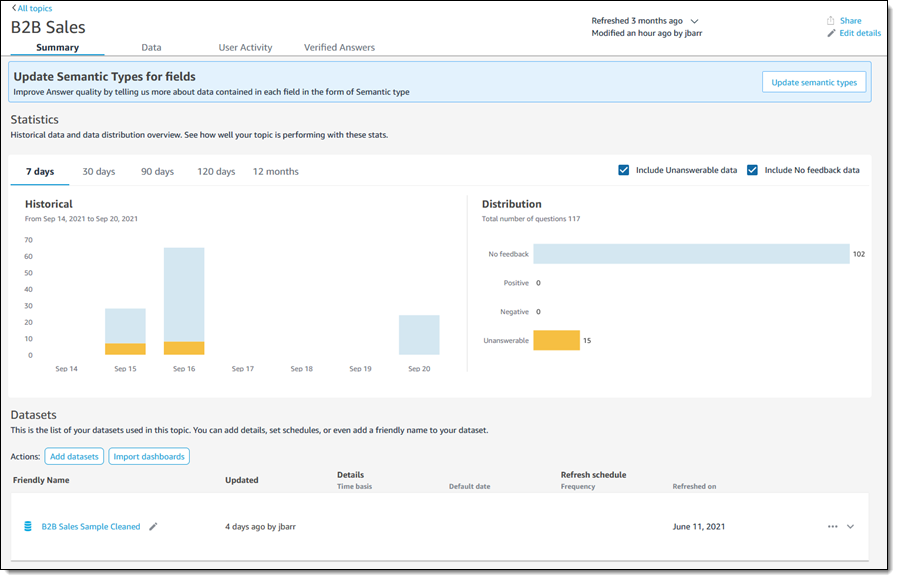

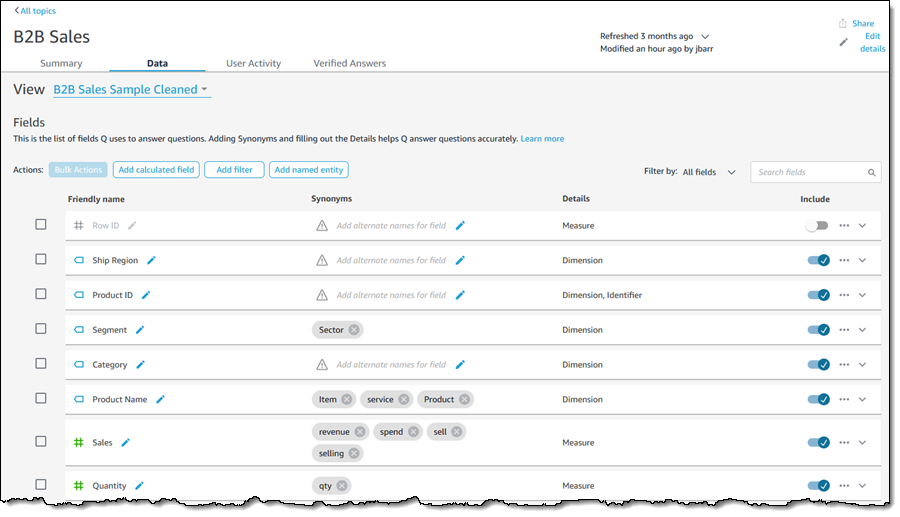

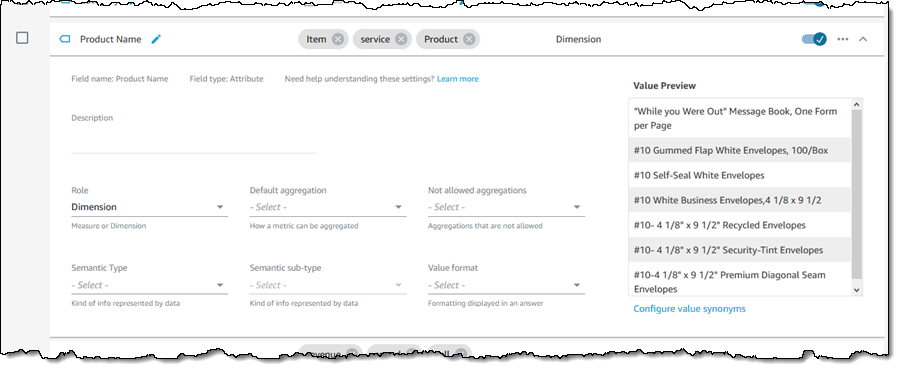

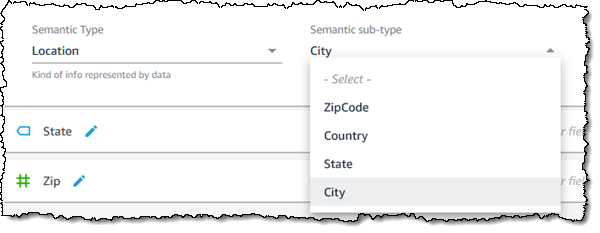

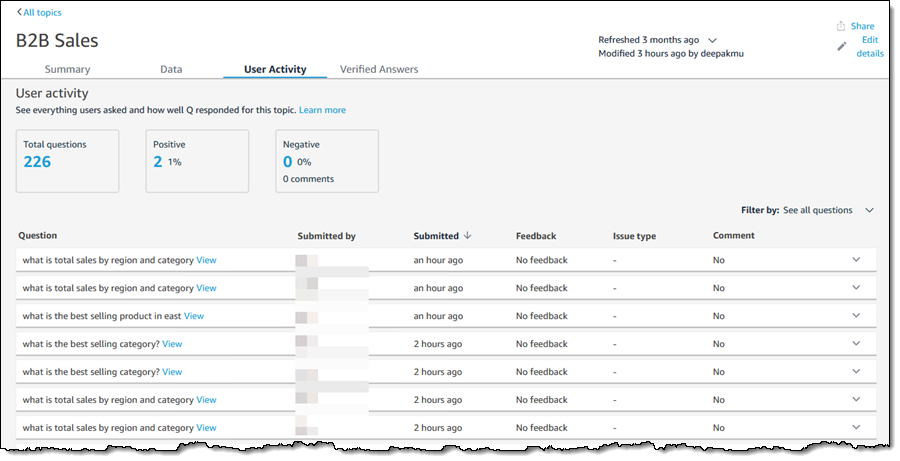

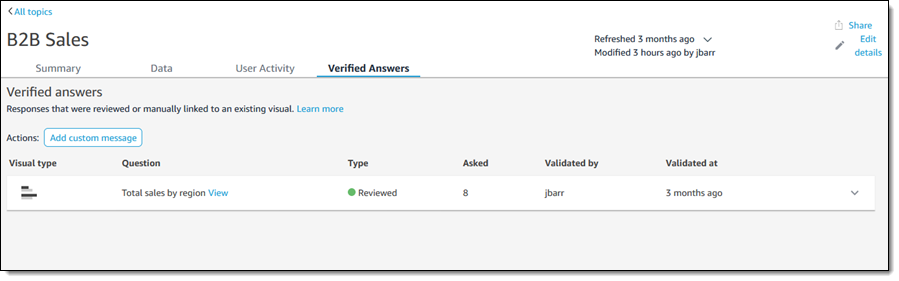

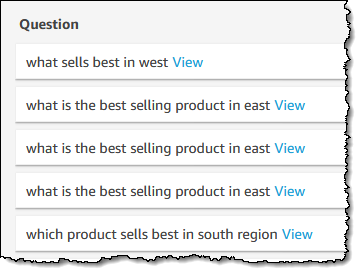
