Post Syndicated from The Hook Up original https://www.youtube.com/watch?v=nO7Yo-Xj-qA

Use Amazon Redshift RA3 with managed storage in your modern data architecture

Post Syndicated from Bhanu Pittampally original https://aws.amazon.com/blogs/big-data/use-amazon-redshift-ra3-with-managed-storage-in-your-modern-data-architecture/

Amazon Redshift is a fully managed, petabyte-scale data warehouse service in the cloud. You can start with just a few hundred gigabytes of data and scale to a petabyte or more. This enables you to use your data to acquire new insights for your business and customers.

Over the years, Amazon Redshift has evolved a lot to meet our customers’ demands. Its journey started as a standalone data warehousing appliance that provided a low-cost, high-performance, cloud-based data warehouse. Support for Amazon Redshift Spectrum compute nodes was later added to extend your data warehouse to data lakes, and the concurrency scaling feature was added to support burst activity and scale your data warehouse to support thousands of queries concurrently. In its latest offering, Amazon Redshift runs on third-generation architecture where storage and compute layers are decoupled and scale independently of each other. This latest generation powers the several modern data architecture patterns our customers are actively embracing to build flexible and scalable analytics platforms.

When spinning up a new instance of Amazon Redshift, you can choose either Amazon Redshift Serverless, for when you need a data warehouse that scales seamlessly and automatically as your demand evolves unpredictably, or an Amazon Redshift provisioned cluster, for steady-state workloads and greater control over your Amazon Redshift cluster’s configuration.

An Amazon Redshift provisioned cluster is a collection of computing resources called nodes, which are organized into a group called a cluster. Each cluster runs the Amazon Redshift engine and contains one or more databases. Creating an Amazon Redshift cluster is the first step in your process of building an Amazon Redshift data warehouse. While launching a provisioned cluster, one option that you specify is the node type. The node type determines the CPU, RAM, storage capacity, and storage drive type for each node.

In this post, we cover the current generation node RA3 architecture, different RA3 node types, important capabilities that are available only on RA3 node types, and how you can upgrade your current Amazon Redshift node types to RA3.

Amazon Redshift RA3 nodes

RA3 nodes with managed storage enable you to optimize your data warehouse by scaling and paying for compute and managed storage independently. RA3 node types are the latest node type for Amazon Redshift. With RA3, you choose the number of nodes based on your performance requirements and only pay for the managed storage that you use. RA3 architecture gives you the ability to size your cluster based on the amount of data you process daily or the amount of data that you want to store in your warehouse; there is no need to account for both storage and processing needs together.

Other node types that we previously offered include the following:

- Dense compute – DC2 nodes enable you to have compute-intensive data warehouses with local SSD storage included. You choose the number of nodes you need based on data size and performance requirements.

- Dense storage (deprecated) – DS2 nodes enable you to create large data warehouses using hard disk drives (HDDs). If you’re using the DS2 node type, we strongly recommend that you upgrade to RA3 to get twice as much storage and improved performance for the same on-demand cost.

When you use the RA3 node size and choose your number of nodes, you can provision compute independently of storage. RA3 nodes are built on the AWS Nitro System and feature high bandwidth networking and large high-performance SSDs as local caches. RA3 nodes use your workload patterns and advanced data management techniques to deliver the performance of local SSD while scaling storage automatically to Amazon Simple Storage Service (Amazon S3).

RA3 node types come in three different sizes to accommodate your analytical workloads. You can quickly start experimenting with the RA3 node type by creating a single-node ra3.xlplus cluster and explore various features that are available. If you’re running a medium-sized data warehouse, you can size your cluster with ra3.4xlarge nodes. For large data warehouses, you can start with ra3.16xlarge. The following table gives more information about RA3 node types and their specifications as of this writing.

| Node Type | vCPU | RAM (GiB) | Default Slices Per Node | Managed Storage Quota Per Node | Node Range with Create Cluster | Total Managed Storage Capacity |

| ra3.xlplus | 4 | 32 | 2 | 32 TB | 1-16 | 1024 TB |

| ra3.4xlarge | 12 | 96 | 4 | 128 TB | 2-32 | 8192 TB |

| ra3.16xlarge | 48 | 384 | 16 | 128 TB | 2-128 | 16384 TB |

Amazon Redshift with managed storage

Amazon Redshift with a managed storage architecture (RMS) still boasts the same resiliency and industry-leading hardware. With managed storage, Amazon Redshift uses intelligent data prefetching and data evictions based on the temperature of your data. This method helps you decide where to store your most-queried data. Most frequently used blocks (hot data) are cached locally on SSD, and infrequently used blocks (cold data) are stored on an RMS layer backed by Amazon S3. The following diagram depicts the leader node, compute node, and Amazon Redshift managed storage.

In the following sections, we discuss the capabilities that Amazon Redshift RA3 with managed storage can provide.

Independently scale compute and storage

As the scale of an organization grows, data continues to grow, reaching petabytes. The amount of data you ingest into your Amazon Redshift data warehouse also grows. You may be looking for ways to cost-effectively analyze all your data and at the same time have control over choosing the right compute or storage resource at the right time. For cost-conscious customers, the RA3 platform provides the option to scale and pay for your compute and storage resources separately.

With RA3 instances with managed storage, you can choose the number of nodes based on your performance requirements, and only pay for the managed storage that you use. This gives you the flexibility to size your RA3 cluster based on the amount of data you process daily without increasing your storage costs. It allows you to pay per hour for the compute and separately scale your data warehouse storage capacity without adding any additional compute resources and paying only for what you use.

Another benefit of RMS is that Amazon Redshift manages which data should be stored locally for fastest access, and data that is slightly colder is still kept within fast-access reach.

Advanced hardware

RA3 instances use high-bandwidth networking built on the AWS Nitro System to further reduce the time taken for data to be offloaded to and retrieved from Amazon S3. Managed storage uses high-performance SSDs for your hot data and Amazon S3 for your cold data, providing ease of use, cost-effective storage, and fast query performance.

Additional security options

Amazon Redshift managed VPC endpoints enable you to set up a private connection to securely access your Amazon Redshift cluster within your virtual private cloud (VPC) from client applications in another VPC within the same AWS account, another AWS account, or a subnet without using public IPs and without requiring the traffic to traverse across the internet.

The following scenarios describe common reasons to allow access to a cluster using an Amazon Redshift managed VPC endpoint:

- AWS account A wants to allow a VPC in AWS account B to have access to a cluster

- AWS account A wants to allow a VPC that is also in AWS account A to have access to a cluster

- AWS account A wants to allow a different subnet in the cluster’s VPC within AWS account A to have access to a cluster

For information about access options to another VPC, refer to Enable private access to Amazon Redshift from your client applications in another VPC.

Further optimize your workload

In this section, we discuss two ways to further optimize your workload.

AQUA

AQUA (Advanced Query Accelerator) is a new distributed and hardware-accelerated cache that enables Amazon Redshift to run up to 10 times faster than other enterprise cloud data warehouses by automatically boosting certain types of queries. AQUA is available with the ra3.16xlarge, ra3.4xlarge, or ra3.xlplus nodes at no additional charge and with no code changes.

AQUA is an analytics query accelerator for Amazon Redshift that uses custom-designed hardware to speed up queries that scan large datasets. AQUA automatically optimizes query performance on subsets of the data that require extensive scans, filters, and aggregation. With this approach, you can use AQUA to run queries that scan, filter, and aggregate large datasets.

For more information about using AQUA, refer to How to evaluate the benefits of AQUA for your Amazon Redshift workloads.

Concurrency scaling for write operations

With RA3 nodes, you can take advantage of concurrency scaling for write operations, such as extract, transform, and load (ETL) statements. Concurrency scaling for write operations is especially useful when you want to maintain consistent response times when your cluster receives a large number of requests. It improves throughput for write operations contending for resources on the main cluster.

Concurrency scaling supports COPY, INSERT, DELETE, and UPDATE statements. In some cases, you might follow DDL statements, such as CREATE, with write statements in the same commit block. In these cases, the write statements are not sent to the concurrency scaling cluster.

When you accrue credit for concurrency scaling, this credit accrual applies to both read and write operations.

Increased agility to scale compute resources

Elastic resize allows you to scale your Amazon Redshift cluster up and down in minutes to get the performance you need, when you need it. However, there are limits on the nodes that you can add to a cluster. With some RA3 node types, you can increase the number of nodes up to four times the existing count. All RA3 node types support a decrease in the number of nodes to a quarter of the existing count. The following table lists growth and reduction limits for each RA3 node type.

| Node Type | Growth Limit | Reduction Limit |

| ra3.xlplus | 2 times (from 4 to 8 nodes, for example) | To a quarter of the number |

| ra3.4xlarge | 4 times (from 4 to 16 nodes, for example) | To a quarter of the number (from 16 to 4 nodes, for example) |

| ra3.16xlarge | 4 times (from 4 to 16 nodes, for example) | To a quarter of the number (from 16 to 4 nodes, for example) |
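The limits in the table above can be expressed as a small range check. The following is a minimal sketch, not an official sizing tool: the function names are hypothetical, and it assumes that a fractional quarter rounds up and that a cluster never drops below one node. It does not model other constraints, such as the per-node-type node ranges listed earlier.

```python
import math
from typing import Tuple

# Growth multipliers taken from the table above. The reduction limit is
# "a quarter of the existing count" for every RA3 node type.
GROWTH_LIMIT = {"ra3.xlplus": 2, "ra3.4xlarge": 4, "ra3.16xlarge": 4}
REDUCTION_DIVISOR = 4


def elastic_resize_range(node_type: str, current_nodes: int) -> Tuple[int, int]:
    """Return (min_nodes, max_nodes) implied by the growth and reduction limits."""
    if node_type not in GROWTH_LIMIT:
        raise ValueError(f"not an RA3 node type: {node_type}")
    if current_nodes < 1:
        raise ValueError("current_nodes must be at least 1")
    # Assumption: a fractional quarter rounds up and never goes below one node.
    min_nodes = max(1, math.ceil(current_nodes / REDUCTION_DIVISOR))
    max_nodes = current_nodes * GROWTH_LIMIT[node_type]
    return min_nodes, max_nodes


def can_elastic_resize(node_type: str, current_nodes: int, target_nodes: int) -> bool:
    """Check whether target_nodes falls inside the range for the current count."""
    low, high = elastic_resize_range(node_type, current_nodes)
    return low <= target_nodes <= high
```

For example, `can_elastic_resize("ra3.xlplus", 4, 16)` is false because ra3.xlplus can only double, while the same request on ra3.4xlarge is within the four-times limit.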

RA3 node types also have a shorter duration of snapshot restoration time because of the separation of storage and compute.

Improved resiliency

Amazon Redshift employs extensive fault detection and auto remediation techniques in order to maximize the availability of a cluster. With the RA3 architecture, you can enable cluster relocation, which provides additional resiliency by having the ability to relocate a cluster across Availability Zones without losing any data (RPO is zero) or having to change your client applications. The cluster’s endpoint remains the same after the relocation occurs so applications can continue operating without modifications. When the existing cluster fails, a new cluster is created on demand in another Availability Zone, so the cost of a standby replica cluster is avoided.

Accelerate data democratization

In this section, we share two techniques to accelerate data democratization.

Data sharing

Data sharing provides instant, granular, and high-performance access without copying or moving data. You can query live data constantly across all consumers on different RA3 clusters in the same AWS account, in a different AWS account, or in a different AWS Region. Data is shared securely and provides governed collaboration. You can provide access at different levels of granularity, including schemas, databases, tables, views, and user-defined functions.

This opens up various new use cases where you may have one ETL cluster that is producing data and have multiple consumers such as ad-hoc querying, dashboarding, and data science clusters to view the same data. This also enables bi-directional collaboration where groups such as marketing and finance can share data with one another. Queries accessing shared data use the compute resources of the consumer Amazon Redshift cluster and don’t impact the performance of the producer cluster.

For more information about data sharing, refer to Sharing Amazon Redshift data securely across Amazon Redshift clusters for workload isolation.
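As an illustration of the producer side of data sharing, the following sketch assembles the SQL statements a producer cluster might run to create a datashare, add objects to it, and grant usage to a consumer namespace in the same account. The helper name, the share, schema, and table names, and the namespace value are all hypothetical placeholders. The generated statements would be run through whatever SQL client you already use.

```python
from typing import List


def producer_datashare_sql(
    share: str, schema: str, tables: List[str], consumer_namespace: str
) -> List[str]:
    """Build the producer-side statements for sharing selected tables."""
    statements = [
        f"CREATE DATASHARE {share};",
        # The schema is added before any of its tables.
        f"ALTER DATASHARE {share} ADD SCHEMA {schema};",
    ]
    for table in tables:
        statements.append(f"ALTER DATASHARE {share} ADD TABLE {schema}.{table};")
    # Grant access to the consumer cluster, identified by its namespace GUID.
    statements.append(
        f"GRANT USAGE ON DATASHARE {share} TO NAMESPACE '{consumer_namespace}';"
    )
    return statements


if __name__ == "__main__":
    for line in producer_datashare_sql(
        "sales_share", "public", ["orders", "customers"], "<consumer-namespace-guid>"
    ):
        print(line)
```

Because queries on shared data run on the consumer cluster, none of these statements add load to the producer beyond the one-time setup.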

AWS Data Exchange for Amazon Redshift

AWS Data Exchange for Amazon Redshift enables you to find and subscribe to third-party data in AWS Data Exchange that you can query in an Amazon Redshift data warehouse in minutes. You can also license your data in Amazon Redshift through AWS Data Exchange. Access is automatically granted when a customer subscribes to your data and is automatically revoked when their subscription ends. Invoices are automatically generated, and payments are automatically collected and disbursed through AWS. This feature empowers you to quickly query, analyze, and build applications with third-party data.

For details on how to publish a data product and subscribe to a data product using AWS Data Exchange for Amazon Redshift, refer to New – AWS Data Exchange for Amazon Redshift.

Cross-database queries for Amazon Redshift

Amazon Redshift supports the ability to query across databases in a Redshift cluster. With cross-database queries, you can seamlessly query data from any database in the cluster, regardless of which database you are connected to. Cross-database queries can eliminate data copies and simplify your data organization to support multiple business groups on the same cluster.

One of many use cases for cross-database queries is when data is organized across multiple databases in a Redshift cluster to support multi-tenant configurations. For example, different business groups and teams that own and manage data sets in their specific database in the same data warehouse need to collaborate with other groups. You might want to perform common ETL staging and processing while your raw data is spread across multiple databases. Organizing data in multiple Redshift databases is also a common scenario when migrating from traditional data warehouse systems. With cross-database queries, you can now access data from any of the databases on the Redshift cluster without having to connect to that specific database. You can also join data sets from multiple databases in a single query.

You can read more about cross-database queries here.
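Cross-database queries refer to objects with three-part notation, `database.schema.object`. The sketch below builds a join across two databases on the same cluster. The database, schema, table, and column names are hypothetical, and the helper functions are illustrative only.

```python
def three_part_name(database: str, schema: str, obj: str) -> str:
    """Return the database.schema.object form used by cross-database queries."""
    return f"{database}.{schema}.{obj}"


def join_across_databases(left: str, right: str, key: str) -> str:
    """Build a join between two fully qualified tables on a shared key column."""
    return (
        f"SELECT l.*, r.* FROM {left} AS l "
        f"JOIN {right} AS r ON l.{key} = r.{key};"
    )


if __name__ == "__main__":
    orders = three_part_name("sales_db", "public", "orders")
    campaigns = three_part_name("marketing_db", "public", "campaigns")
    print(join_across_databases(orders, campaigns, "customer_id"))
```

The query can be issued while connected to either database, or to a third one on the same cluster.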

Upgrade to RA3

You can upgrade to RA3 instances within minutes no matter the size of your current Amazon Redshift clusters. Simply take a snapshot of your cluster and restore it to a new RA3 cluster. For more information, refer to Upgrading to RA3 node types.

You can also simplify your migration efforts with Amazon Redshift Simple Replay. For more information, refer to Simplify Amazon Redshift RA3 migration evaluation with Simple Replay utility.
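The snapshot-and-restore path can be scripted. The following is a minimal sketch that assumes a boto3 Redshift client is passed in (for example, `boto3.client("redshift")`). The cluster and snapshot identifiers are hypothetical, and the target node type and node count are choices you would make from your own sizing exercise, not values prescribed here.

```python
from typing import Any, Dict


def snapshot_request(cluster_id: str, snapshot_id: str) -> Dict[str, Any]:
    """Parameters for taking a manual snapshot of the existing cluster."""
    return {"ClusterIdentifier": cluster_id, "SnapshotIdentifier": snapshot_id}


def ra3_restore_request(
    new_cluster_id: str, snapshot_id: str, node_type: str, number_of_nodes: int
) -> Dict[str, Any]:
    """Parameters for restoring the snapshot into a new RA3 cluster."""
    if not node_type.startswith("ra3."):
        raise ValueError(f"expected an RA3 node type, got {node_type}")
    return {
        "ClusterIdentifier": new_cluster_id,
        "SnapshotIdentifier": snapshot_id,
        "NodeType": node_type,
        "NumberOfNodes": number_of_nodes,
    }


def upgrade_to_ra3(
    client: Any,
    cluster_id: str,
    snapshot_id: str,
    new_cluster_id: str,
    node_type: str,
    number_of_nodes: int,
) -> None:
    """Snapshot the current cluster, wait for it, then restore onto RA3 nodes."""
    client.create_cluster_snapshot(**snapshot_request(cluster_id, snapshot_id))
    # Block until the snapshot is available before restoring from it.
    client.get_waiter("snapshot_available").wait(SnapshotIdentifier=snapshot_id)
    client.restore_from_cluster_snapshot(
        **ra3_restore_request(new_cluster_id, snapshot_id, node_type, number_of_nodes)
    )
```

Keeping the request builders separate from the client calls makes it possible to review or log the exact parameters before anything is created.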

Summary

In this post, we talked about the RA3 node types, the benefits of Amazon Redshift managed storage, and the additional capabilities that you get by using Amazon Redshift RA3 with managed storage. Migrating to RA3 node types isn’t a complicated effort, and you can get started today.

About the Authors

Bhanu Pittampally is an Analytics Specialist Solutions Architect based out of Dallas. He specializes in building analytic solutions. His background is in data warehouses—architecture, development, and administration. He has been in the data and analytics field for over 13 years.


Jason Pedreza is an Analytics Specialist Solutions Architect at AWS with data warehousing experience handling petabytes of data. Prior to AWS, he built data warehouse solutions at Amazon.com. He specializes in Amazon Redshift and helps customers build scalable analytic solutions.


AWS Security Profile: Ely Kahn, Principal Product Manager for AWS Security Hub

Post Syndicated from Maddie Bacon original https://aws.amazon.com/blogs/security/aws-security-profile-ely-kahn-principal-product-manager-for-aws-security-hub/

In the AWS Security Profile series, I interview some of the humans who work in Amazon Web Services Security and help keep our customers safe and secure. This interview is with Ely Kahn, principal product manager for AWS Security Hub. Security Hub is a cloud security posture management service that performs security best practice checks, aggregates alerts, and facilitates automated remediation.

How long have you been at AWS and what do you do in your current role?

I’ve been with AWS just over 4 years. I came to AWS through the acquisition of a company I co-founded called Sqrrl, which then became Amazon Detective. Shortly after the acquisition, I moved from the Sqrrl/Detective team and helped launch AWS Security Hub. In my current role, I’m the head of product for Security Hub, which means I lead our product roadmap and our product strategy, and I translate customer requirements into technical specifications.

How did you get started in the world of security?

My career started inside the U.S. federal government, first inside the Department of Homeland Security and, specifically, inside the Transportation Security Administration (TSA). At the time, the TSA had uncovered a vulnerability concerning boarding passes and the terrorist no-fly list. I was tasked with figuring out how to close that vulnerability, and I came up with a new way to embed a digital signature inside the barcode to help ensure the authenticity of the boarding pass. After that, people thought I was a cybersecurity expert, and I began working on a lot of cybersecurity strategy and policy at the Department of Homeland Security and then at the White House.

How do you explain your job to your non-tech friends?

I actually explain it the same way to technical and non-technical friends. I head up a service called Security Hub, which is designed to help you do a couple of different things. It helps you understand your security posture on AWS—what sort of risks you face and the most urgent security issues that you need to address across your AWS accounts. It also gives you the tools to improve your security posture and help you fix as many of those security issues as possible. We do that through three primary functions. First, we aggregate all of your security alerts into a standardized data format that’s available in one place. Second, we do our own automated security checks. We look at all the resources you’ve enabled on AWS and help check that those resources are configured in accordance with best practices that we define, and in alignment with various regulatory frameworks. Third, we help you auto-remediate and auto-respond to as many of those issues as possible.

What are you currently working on that you’re excited about?

Our number one priority with Security Hub is to expand coverage of the automated security checks that we provide. We have almost 200 automated security checks today covering several dozen AWS services. Over the next few years, we plan to expand this to more AWS services, which will add a large number of additional security checks. This is important because customers don’t want to have to write these security checks themselves. They want the one-click capability to turn on the checks—or controls, as we call them in Security Hub—and they should be automatically on in all of your accounts. They should only run if you’re using resources that are actually in-scope for those checks, and they should produce a security score to help you quickly understand the security posture of different accounts and of your organization as a whole.

What would you say is the coolest feature of Security Hub?

The coolest feature is probably the one that gets the least attention. It’s what we call our AWS Security Finding Format (ASFF). The ASFF is really just a data standard—it consists of over 1,000 JSON fields and objects, and it’s how you normalize all of your different security alerts. We’ve integrated 75 different services and partner products. The real advantage of Security Hub is that we automatically take all of those different alerts from all of those different integration partners and normalize them into this standardized data format, so that when you’re searching the findings you have a common set of fields to search against if you’re trying to do correlations. For example, you can imagine a situation where Amazon GuardDuty detects unusual activity in an Amazon Simple Storage Service (Amazon S3) bucket, one of our Security Hub checks detects that the bucket is open, and Amazon Macie determines that the bucket contains sensitive information. It’s much easier to do correlations for situations like this when the alerts from those different tools are in the same format. Similarly, building auto-response, auto-remediation workflows is much easier when all of your alerts are in the same format. One of our biggest customers at AWS called the ASFF the gold standard for how to normalize security alerts, which is something we’re super proud of.

As you mentioned, Security Hub integrates with a lot of other AWS services, like GuardDuty and Macie. How do you work with other service teams?

We work across AWS in a couple of different ways. We build out these integrations with other AWS services to either send or receive findings from those services. So, we receive findings from services like GuardDuty and Macie, and we send our findings to other services like AWS Trusted Advisor to give them the same view of security that we see in Security Hub. In general, we try to make it as simple and as low impact as possible because every service team is extremely busy. Wherever possible, we do the integration work and don’t put the onus of effort on the other service team.

The other way we work with other service teams is to formally define the best practices for that service. We have a security engineering team on Security Hub, and we partner with AWS Professional Services and their security consultants. Together, we have been working through the list of the most popular AWS services using a standard taxonomy of control categories to define security controls and best practices for that service. We then work with product managers and engineers on those service teams to review the controls we’re proposing, get their feedback, and then finally code them up as AWS Config rules before deploying them in Security Hub. We have a very well-honed process now to partner with the service teams to integrate with and define the security controls for each service.

Where do you suggest customers start with Security Hub if they are newer in their cloud journey?

The first step with Security Hub is just to turn it on across all of your accounts and AWS Regions. When you do, you’re likely going to see a lot of alerts. Don’t get overwhelmed with the number of alerts you see. Focus initially on the critical and high-severity alerts and work them as campaigns. Identify the owners for all open critical and high-severity alerts and start tracking burndown on a weekly basis. Coordinate with the leadership in your organization so you can identify which teams are keeping up with the alerts and which ones aren’t.

What’s your favorite Leadership Principle and why?

My favorite is one that I initially discounted: frugality. When I first joined AWS, what came to mind was Jeff Bezos using doors as desks. Although that’s certainly a component of frugality, I’ve found that for me, this principle means that we need to be frugal with each other’s time. There are so many competing demands on everyone’s time, and it’s extremely important in a place like AWS to be mindful of that. Make sure you’ve done your due diligence on something before you broadly ask the question or escalate.

What’s the thing you’re most proud of in your career?

There are two things. First is the acquisition of Sqrrl by AWS. I couldn’t have picked a better landing spot for Sqrrl and the team. I feel really lucky that I joined AWS through this acquisition. I’ve really learned a lot here in a short amount of time.

The other thing I’m especially proud of is to have been selected to do a stint through the White House National Security Council staff as the Department of Homeland Security representative to the Council. I sat in the cybersecurity directorate from 2009–2010 as part of that detail to the White House and got a chance to work in the West Wing and attend meetings in the Situation Room, which was just such a special experience.

If you had to pick an industry outside of security, what would you want to do?

This is pretty similar to security, but I got very close to going into the military. Out of high school, I was being recruited for lacrosse at the U.S. Air Force Academy. I had convinced myself that I wanted to go fly jets. I have the utmost respect for our military community, and I certainly could’ve seen myself taking that path.


[$] Unique identifiers for NFS

Post Syndicated from original https://lwn.net/Articles/895556/

In a combined filesystem and storage session at the 2022 Linux Storage, Filesystem, Memory-management and BPF Summit (LSFMM), Chuck Lever wanted to discuss the need for a permanent, globally unique ID for network filesystems. He was joined by Hannes Reinecke who has worked on the problem for NVMe storage devices; Lever said something along those lines is needed for NFSv4. He was hoping to find a solution during the session, though it would seem that the solution may lie in user space—and documentation.

Ingest Stripe data in a fast and reliable way using Stripe Data Pipeline for Amazon Redshift

Post Syndicated from Jessica Ho original https://aws.amazon.com/blogs/big-data/ingest-stripe-data-in-a-fast-and-reliable-way-using-stripe-data-pipeline-for-amazon-redshift/

Enterprises typically host a myriad of business applications for varying data needs. As companies grow, so does the demand for insights from a complete set of business data. Having data from various applications that store data in disparate silos can delay the decision-making process. However, building and maintaining an API integration or a third-party extract, transform, and load (ETL) pipeline to move data into a destination data store can be time-consuming and expensive.

Today we’re delighted to introduce Stripe Data Pipeline for Amazon Redshift to help you access your Stripe data and extract insight securely and easily from Amazon Redshift. This data, including billing, issuing, and payment records, can be shared in a consistent and automated fashion. You can integrate your Stripe data with data from other sources in your Amazon Redshift clusters to create a single source of truth.

In this post, we discuss the benefits of Stripe Data Pipeline and some of its use cases.

Solution overview

Amazon Redshift is a fast, fully managed, petabyte-scale cloud data warehousing service that makes it simple and cost-effective to efficiently analyze all your data using your existing business intelligence (BI) tools. It’s optimized for datasets ranging from a few hundred gigabytes to petabytes or more. This columnar data warehouse provides provisioned as well as serverless deployment options and uses an industry-standard SQL interface to analyze structured and semi-structured data with fast query performance.

Stripe Data Pipeline is powered by Amazon Redshift’s latest RA3 instances, which provide cross-account data sharing capability. RA3 takes a performant, cost-effective approach to address rapidly growing data volume by decoupling data processing from managed storage. You can then scale compute and storage independently and only pay for what you use. Data sharing provides read access directly to data stored across Amazon Redshift clusters without data movement. This capability removes the complexity and delays that are often associated with managing large distributed datasets across multiple accounts.

The solution provides the following core features and benefits:

- Scalable and managed data pipeline – You don’t need to build, maintain, and scale custom ETL jobs. You can set up Stripe Data Pipeline in minutes, and it scales automatically to handle increased business activities and data volume.

- Up-to-date financial data – You automatically receive and refresh a complete set of your Stripe data and reports in Amazon Redshift on a low-latency schedule. Stripe Data Pipeline is built into Stripe and always provides accurate data.

- Security and compliance – Data is shared directly from Stripe with your Amazon Redshift cluster, and confidentiality of the data is protected in transit and at rest. Amazon Redshift offers comprehensive security controls and monitoring via native integration with AWS CloudTrail and Amazon CloudWatch (for more information, see Logging Amazon Redshift API calls with AWS CloudTrail and Monitoring Amazon Redshift using CloudWatch metrics, respectively). You can define and audit who has access to what and ensure the compliance requirements are met.

- Extensibility – Once the data is accessible in AWS, you benefit from the breadth of native integrations Amazon Redshift supports. You can join datasets from other data stores in operational databases, build reports and dashboards with BI tools, or identify patterns and generate predictions using Amazon Redshift ML.

The following architecture diagram provides a quick overview of how data sharing works and how other AWS services can be used together. We dive deeper into different use cases in the following sections.

Accept datashares from Stripe

You can configure the solution in a few steps with no code necessary.

Once Stripe creates a datashare from the producer cluster and authorizes your AWS account, you can view this datashare on your Amazon Redshift console. You need to associate it with specific or all clusters in your AWS account as the consumer. Clusters can be specified by namespaces as globally unique identifiers. Next, you create a database from the datashare in order to start querying data.
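On the consumer side, the last step described above, creating a database from the datashare, is a single SQL statement. The sketch below builds that statement for a cross-account datashare, plus a sample query against the new database. The local database name, share name, account ID, namespace, and the schema and table in the sample query are hypothetical placeholders. The real values come from the datashare that Stripe makes available to your account.

```python
def consumer_database_sql(
    local_db: str, share: str, producer_account: str, producer_namespace: str
) -> str:
    """Build the statement that exposes a cross-account datashare as a local database."""
    return (
        f"CREATE DATABASE {local_db} FROM DATASHARE {share} "
        f"OF ACCOUNT '{producer_account}' NAMESPACE '{producer_namespace}';"
    )


def sample_count_sql(local_db: str, schema: str, table: str) -> str:
    """Build a row-count query using three-part notation on the shared database."""
    return f"SELECT COUNT(*) FROM {local_db}.{schema}.{table};"


if __name__ == "__main__":
    print(
        consumer_database_sql(
            "stripe_db", "<share-name>", "<producer-account-id>", "<producer-namespace-guid>"
        )
    )
    print(sample_count_sql("stripe_db", "<schema>", "<table>"))
```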

Query data from the consumer Amazon Redshift cluster

You can now access your Stripe data and schema directly from Amazon Redshift’s web-based query editor. This direct connection enables teams to pull accurate analysis of various functions of the business. For example:

- Finance – “How does my cash flow change based on seasonality?”

- Sales – “How many customers do we have in the US?”

- Product – “How many active users do we have on each subscription plan?”

- Sales operations – “Which customers haven’t paid their invoices?”

The following screenshot shows an example in which the query editor displays the number of charges blocked per Stripe’s connected account.

Use federated queries

The modern data architecture of Amazon Redshift enables you to store data in purpose-built data stores based on specific use cases, and allows querying external databases on Amazon Relational Database Service (Amazon RDS) or datasets in an Amazon Simple Storage Service (Amazon S3) data lake without moving these datasets to Amazon Redshift clusters. You can dive deeper by incorporating data from Amazon RDS, or from an S3 data lake through Amazon Redshift Spectrum. This capability provides a native integration without requiring additional ETL jobs.

The following syntax allows you to create an external schema from an Amazon Aurora MySQL-Compatible Edition database to an Amazon Redshift cluster. Amazon Redshift assumes an AWS Identity and Access Management (IAM) role and uses AWS Secrets Manager to access external data stores. For more information and examples with other supported data stores, refer to Querying data with federated queries in Amazon Redshift.

Coming back to Stripe Data Pipeline, you can now combine the Stripe data with data from an Aurora table for further analysis. For example, you can correlate customer acquisition trends with sales campaigns by region, so you can gain an understanding of campaign effectiveness and make adjustments to marketing strategies.

Create visualizations and dashboards

Now that your complete set of business data is accessible from Amazon Redshift, you can start to explore the data and create visualizations. Amazon QuickSight is a serverless BI service that allows you to easily connect to a data source, create analyses, publish dashboards, and share between teams. QuickSight seamlessly integrates with AWS services such as Amazon Redshift, Amazon S3, and many more.

The following screenshot illustrates how straightforward it is to connect an Amazon Redshift instance to QuickSight as a new data source.

The following screenshot is of a sample QuickSight dashboard pulling data from Amazon Redshift.

Key considerations

When using Stripe Data Pipeline, consider the following:

- Instance type – This solution is available for all RA3 node types. If you run an existing DS2 or DC2 cluster, there are multiple options to migrate to RA3, including elastic resize, snapshot and restore, and classic resize. For more information, including an upgrade sizing reference between different node types, refer to Upgrading to RA3 node types.

- RI migration – If you have Amazon Redshift Reserved Instances (RIs), you can use the RI migration feature to migrate the DS2 RI clusters to equivalent RA3 RI clusters as part of a cross-instance resize or cross-instance snapshot restore operation. The RA3 RI covering the new cluster will be the same cost and on the same calendar terms as the original DS2 RI for supported configurations.

- Encryption – The consumer cluster must be encrypted as part of the enhanced security control for cross-account sharing. You can enable encryption at cluster creation time, or modify an unencrypted cluster with either AWS Key Management Service (AWS KMS) or AWS CloudHSM.

- Federated queries – This capability works with external DB instances, including Amazon RDS for PostgreSQL, Amazon Aurora PostgreSQL-Compatible Edition, Amazon RDS for MySQL, and Aurora MySQL-Compatible Edition. You should also ensure that you have an Amazon Redshift cluster with a cluster maintenance version that supports federated queries.

Conclusion

In this post, we introduced Stripe Data Pipeline for Amazon Redshift and discussed options to further integrate with AWS services. Stripe Data Pipeline removes the need to build custom API integration or adopt a third-party ETL pipeline, making data accessible with a few clicks and with no code required. Businesses can automatically receive up-to-date data from Stripe in their data warehouse on AWS, reduce data silos, and extract deep insights to address business needs.

Check out Stripe Data Pipeline for more information about the solution and how to get started.

About the Authors

Jessica Ho is a Sr. Partner Solutions Architect at AWS supporting ISV partners who build business applications. She is passionate about creating differentiated solutions that promote cloud adoption. Outside of work, she enjoys spoiling her garden into a mini jungle.

Alexander Mahabir is a Sr. Partner Solutions Architect at AWS based in the D.C. metropolitan area. Alex has over 16 years of experience building cloud and on-premises solutions for small, medium, and large enterprises. Alex currently works with ISV partners in the Digital Customer Experience segment.

Coming June 2022: An updated Amazon QuickSight dashboard experience

Post Syndicated from Rushabh Vora original https://aws.amazon.com/blogs/big-data/coming-june-2022-an-updated-amazon-quicksight-dashboard-experience/

Starting June 30, 2022, Amazon QuickSight is introducing the new look and feel for your dashboards. In this post, we walk through the changes to expect with the new look. The new dashboard experience includes the following improvements:

- Simplified toolbar

- Discoverable visual menu

- Polished controls, menu, and submenus

- Non-blocking right pane for secondary experiences like filters, threshold alerts, and downloads

Simplified toolbar

The simplified toolbar experience offers updated icons for key actions for better visual clarity.

The following screenshot shows the old look.

The following screenshot shows the new look with updated icons.

Discoverable visual menu

The visual menu is visible on-hover to improve the discoverability of drills, export, and filter restatements.

To use the visual menu with the old version, you needed to select the visual.

With the new look, you can view the menu by hovering over the visual.

Polished controls, menu, and submenus

The new look features improved controls, menu, submenus, toast notifications, and other dashboard elements to provide a more polished visual experience.

For example, the following screenshot shows the old look for calendar controls.

The following screenshot shows the new look.

The following screenshot shows the old look for the menu and submenus.

The following screenshot shows the new look.

Non-blocking right pane

The new look features a non-blocking right pane for secondary experiences like filters, threshold alerts, and downloads, to improve focus on the content of the dashboard.

The following animation shows the old look for ad-hoc filtering.

The filters are now moved to the right pane.

The following animation shows the old look for creating threshold alerts.

Threshold alert creation is now in the right pane.

The following animation shows the old look for the downloading experience.

The new look offers a downloads pane.

Summary

The new look for the QuickSight dashboard experience will be available starting June 30, 2022. If you have any questions or feedback, please reach out to us by leaving a comment.

About the Author

Rushabh Vora is a Senior Technical Product Manager for Amazon QuickSight, Amazon Web Services’ cloud-native, fully managed BI service. He is passionate about data visualization. Prior to QuickSight, he worked with Amazon Business as a Product Manager.

Douglas Tallamy | Nature’s Best Hope | Talks at Google

Post Syndicated from Talks at Google original https://www.youtube.com/watch?v=XCek-3S__js

Go Serverless with Rising Cloud and Backblaze B2

Post Syndicated from Pat Patterson original https://www.backblaze.com/blog/go-serverless-with-rising-cloud-and-backblaze-b2/

In my last blog post, I explained how to use a Cloudflare Worker to send notifications on Backblaze B2 events. That post focused on how a Worker could proxy requests to Backblaze B2 Cloud Storage, sending a notification to a webhook at Pipedream that logged each request to a Google Spreadsheet.

Developers integrating applications and solutions with Backblaze B2 can use the same technique to solve a wide variety of use cases. As an example, in this blog post, I’ll explain how you can use that same Cloudflare Worker to trigger a serverless function at our partner Rising Cloud that automatically creates thumbnails as images are uploaded to a Backblaze B2 bucket, without incurring any egress fees for retrieving the full-size images.

What is Rising Cloud?

Rising Cloud hosts customer applications on a cloud platform that it describes as Intelligent-Workloads-as-a-Service. You package your application as a Linux executable or a Docker-style container, and Rising Cloud provisions instances as your application receives HTTP requests. If you’re familiar with AWS Lambda, Rising Cloud satisfies the same set of use cases while providing more intelligent auto-scaling, greater flexibility in application packaging, multi-cloud resiliency, and lower cost.

Rising Cloud’s platform uses artificial intelligence to predict when your application is expected to receive heavy traffic volumes and scales up server resources by provisioning new instances of your application in advance of when they are needed. Similarly, when your traffic is low, Rising Cloud spins down resources.

So far, so good, but, as we all know, artificial intelligence is not perfect. What happens when Rising Cloud’s algorithm predicts a rise in traffic and provisions new instances, but that traffic doesn’t arrive? Well, Rising Cloud picks up the tab—you only pay for the resources your application actually uses.

As is common with most cloud platforms, Rising Cloud applications must be stateless—that is, they cannot themselves maintain state from one request to the next. If your application needs to maintain state, you have to bring your own data store. Our use case, creating image thumbnails, is a perfect match for this model. Each thumbnail creation is a self-contained operation and has no effect on any other task.

Creating Image Thumbnails on Demand

As I explained in the previous post, the Cloudflare Worker will send a notification to a configured webhook URL for each operation that it proxies to Backblaze B2 via the Backblaze S3 Compatible API. That notification contains JSON-formatted metadata regarding the bucket, file, and operation. For example, on an image download, the notification looks like this:

    {
      "contentLength": 3015523,
      "contentType": "image/png",
      "method": "GET",
      "signatureTimestamp": "20220224T193204Z",
      "status": 200,
      "url": "https://s3.us-west-001.backblazeb2.com/my-bucket/image001.png"
    }

If the metadata indicates an image upload (i.e. the method is PUT, the content type starts with image, and so on), the Rising Cloud app will retrieve the full-size image from the Backblaze B2 bucket, create a thumbnail image, and write that image back to the same bucket, modifying the filename to distinguish it from the original.
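The upload check described above can be sketched as a small predicate. This is a minimal illustration rather than the code from the GitHub repository: the function name `isImageUpload` and the extra guard on a 2xx status are assumptions, while the field names come from the notification format shown earlier.

```javascript
// Hypothetical helper: decide whether a Worker notification describes
// a successful image upload that should trigger thumbnail creation.
function isImageUpload(notification) {
  const { method, contentType, status } = notification;
  const isPut = method === "PUT";
  const isImage = typeof contentType === "string"
    && contentType.startsWith("image/");
  // Assumption: only successful (2xx) uploads are worth processing
  const succeeded = status >= 200 && status < 300;
  return isPut && isImage && succeeded;
}

// A download notification like the one shown above is ignored...
const download = { method: "GET", contentType: "image/png", status: 200 };
// ...while the equivalent PUT would be processed.
const upload = { ...download, method: "PUT" };
console.log(isImageUpload(download), isImageUpload(upload));
```

Keeping the check in one function makes it easy to add further conditions later, such as skipping keys that already carry the thumbnail suffix.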

Here’s the message flow between the user’s app, the Cloudflare Worker, Backblaze B2, and the Rising Cloud app:

- A user uploads an image in a Backblaze B2 client application.

- The client app creates a signed upload request, exactly as it would for Backblaze B2, but sends it to the Cloudflare Worker rather than directly to Backblaze B2.

- The Worker validates the client’s signature and creates its own signed request.

- The Worker sends the signed request to Backblaze B2.

- Backblaze B2 validates the signature and processes the upload.

- Backblaze B2 returns the response to the Worker.

- The Worker forwards the response to the client app.

- The Worker sends a notification to the Rising Cloud Web Service.

- The Web Service downloads the image from Backblaze B2.

- The Web Service creates a thumbnail for the image.

- The Web Service uploads the thumbnail to Backblaze B2.

These steps are illustrated in the diagram below.

I decided to write the application in JavaScript, since the Node.js runtime environment and its Express web application framework are well-suited to handling HTTP requests. Also, the open-source Sharp Node.js module performs this type of image processing task 4x-5x faster than either ImageMagick or GraphicsMagick. The source code is available on GitHub.

The entire JavaScript application is less than 150 lines of well-commented JavaScript and uses the AWS SDK’s S3 client library to interact with Backblaze B2 via the Backblaze S3 Compatible API. The core of the application is quite straightforward:

    // Get the image from B2 (returns a readable stream as the body)
    console.log(`Fetching image from ${inputUrl}`);
    const obj = await client.getObject({
      Bucket: bucket,
      Key: keyBase + (extension ? "." + extension : "")
    });

    // Create a Sharp transformer into which we can stream image data
    const transformer = sharp()
      .rotate()                // Auto-orient based on the EXIF Orientation tag
      .resize(RESIZE_OPTIONS); // Resize according to configured options

    // Pipe the image data into the transformer
    obj.Body.pipe(transformer);

    // We can read the transformer output into a buffer, since we know
    // that thumbnails are small enough to fit in memory
    const thumbnail = await transformer.toBuffer();

    // Remove any extension from the incoming key and append '_tn.'
    const outputKey = path.parse(keyBase).name + TN_SUFFIX
      + (extension ? "." + extension : "");
    const outputUrl = B2_ENDPOINT + '/' + bucket + '/'
      + encodeURIComponent(outputKey);

    // Write the thumbnail buffer to the same B2 bucket as the original
    console.log(`Writing thumbnail to ${outputUrl}`);
    await client.putObject({
      Bucket: bucket,
      Key: outputKey,
      Body: thumbnail,
      ContentType: 'image/jpeg'
    });

    // We're done - reply with the thumbnail's URL
    response.json({
      thumbnail: outputUrl
    });

One thing you might notice in the above code is that neither the image nor the thumbnail is written to disk. The getObject() API provides a readable stream; the app passes that stream to the Sharp transformer, which reads the image data from B2 and creates the thumbnail in memory. This approach is much faster than downloading the image to a local file, running an image-processing tool such as ImageMagick to create the thumbnail on disk, then uploading the thumbnail to Backblaze B2.

Deploying a Rising Cloud Web Service

With my app written and tested running locally on my laptop, it was time to deploy it to Rising Cloud. There are two types of Rising Cloud applications: Web Services and Tasks. A Rising Cloud Web Service directly accepts HTTP requests and returns HTTP responses synchronously, with the condition that it must return an HTTP response within 44 seconds to avoid a timeout—an easy fit for my thumbnail creator app. If I was transcoding video, on the other hand, an operation that might take several minutes, or even hours, a Rising Cloud Task would be more suitable. A Rising Cloud Task is a queueable function, implemented as a Linux executable, which may not require millisecond-level response times.

Rising Cloud uses Docker-style containers to deploy, scale, and manage apps, so the next step was to package my app as a Docker image to deploy as a Rising Cloud Web Service by creating a Dockerfile.

With that done, I was able to configure my app with its Backblaze B2 Application Key and Key ID, endpoint, and the required dimensions for the thumbnail. As with many other cloud platforms, apps can be configured via environment variables. Using the AWS SDK’s variable names for the app’s Backblaze B2 credentials meant that I didn’t have to explicitly handle them in my code—the SDK automatically uses the variables if they are set in the environment.

Notice also that the RESIZE_OPTIONS value is formatted as JSON, allowing maximum flexibility in configuring the resize operation. As you can see, I set the withoutEnlargement parameter as well as the desired width, so that images already smaller than the width would not be enlarged.
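Reading a JSON-formatted setting such as RESIZE_OPTIONS from the environment might look like the following sketch. The helper name `parseResizeOptions`, the fallback default, and the width values are assumptions made for illustration; only the `width` and `withoutEnlargement` option names come from the text above.

```javascript
// Hypothetical default used when the environment variable is not set
const DEFAULT_RESIZE_OPTIONS = { width: 240, withoutEnlargement: true };

// Hypothetical helper: parse resize options from a JSON string
function parseResizeOptions(raw) {
  if (raw === undefined || raw === "") {
    return DEFAULT_RESIZE_OPTIONS;
  }
  const options = JSON.parse(raw); // throws SyntaxError on malformed JSON
  if (typeof options !== "object" || options === null || Array.isArray(options)) {
    throw new TypeError("RESIZE_OPTIONS must be a JSON object");
  }
  return options;
}

// In the app this would be: parseResizeOptions(process.env.RESIZE_OPTIONS)
const exampleOptions = parseResizeOptions('{"width": 320, "withoutEnlargement": true}');
console.log(exampleOptions.width, exampleOptions.withoutEnlargement);
```

Failing fast on a malformed value at startup is usually preferable to discovering the problem on the first upload.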

Calling a Rising Cloud Web Service

By default, Rising Cloud requires that app clients supply an API key with each request as an HTTP header with the name X-RisingCloud-Auth.

So, to test the Web Service, I used the curl command-line tool to send a POST request containing a JSON payload in the format emitted by the Cloudflare Worker and the API key:

    curl -d @example-request.json \
      -H 'Content-Type: application/json' \
      -H 'X-RisingCloud-Auth:' \
      https://b2-risingcloud-demo.risingcloud.app/thumbnail

As expected, the Web Service responded with the URL of the newly created thumbnail:

    {
      "thumbnail": "https://s3.us-west-001.backblazeb2.com/my-bucket/image001_tn.jpg"
    }

(JSON formatted for clarity)

The final piece of the puzzle was to create a Cloudflare Worker from the Backblaze B2 Proxy template, and add a line of code to include the Rising Cloud API key HTTP header in its notification. The Cloudflare Worker configuration includes its Backblaze B2 credentials, Backblaze B2 endpoint, Rising Cloud API key, and the Web Service endpoint (webhook).
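Adding the API key header to the Worker's notification could look roughly like this. It is a sketch under assumptions, not the Backblaze B2 Proxy template's actual code: `buildNotification` is a hypothetical helper, and the API key is a placeholder.

```javascript
// Hypothetical sketch: build the fetch() arguments for the webhook
// notification, adding the Rising Cloud API key as an HTTP header.
function buildNotification(webhookUrl, apiKey, payload) {
  return {
    url: webhookUrl,
    init: {
      method: "POST",
      headers: {
        "Content-Type": "application/json",
        "X-RisingCloud-Auth": apiKey, // the extra header the Web Service expects
      },
      body: JSON.stringify(payload),
    },
  };
}

const notification = buildNotification(
  "https://b2-risingcloud-demo.risingcloud.app/thumbnail",
  "example-api-key", // placeholder, not a real key
  { method: "PUT", contentType: "image/png", status: 200 }
);
// In a Worker, the request would then be sent with:
//   fetch(notification.url, notification.init)
console.log(notification.url, Object.keys(notification.init.headers));
```

Building the request separately from sending it keeps the header logic easy to check without making a network call.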

This short video shows the application in action, and how Rising Cloud spins up new instances to handle an influx of traffic:

Process Your Own B2 Files in Rising Cloud

You can deploy an application on Rising Cloud to respond to any Backblaze B2 operation(s). You might want to upload a standard set of files whenever a bucket is created, or keep an audit log of Backblaze B2 operations performed on a particular set of buckets. And, of course, you’re not limited to triggering your Rising Cloud application from a Cloudflare worker—your app can respond to any HTTP request to its endpoint.

Submit your details here to set up a free trial of Rising Cloud. If you’re not already building on Backblaze B2, sign up to create an account today—the first 10 GB of storage is free!

The post Go Serverless with Rising Cloud and Backblaze B2 appeared first on Backblaze Blog | Cloud Storage & Cloud Backup.

Use a linear learner algorithm in Amazon Redshift ML to solve regression and classification problems

Post Syndicated from Phil Bates original https://aws.amazon.com/blogs/big-data/use-a-linear-learner-algorithm-in-amazon-redshift-ml-to-solve-regression-and-classification-problems/

Amazon Redshift is the fastest, most widely used, fully managed, and petabyte-scale cloud data warehouse. Tens of thousands of customers use Amazon Redshift to process exabytes of data every day to power their analytics workloads. Amazon Redshift ML, powered by Amazon SageMaker, makes it easy for SQL users such as data analysts, data scientists, and database developers to create, train, and deploy machine learning (ML) models using familiar SQL commands and then use these models to make predictions on new data for use cases such as churn prediction, customer lifetime value prediction, and product recommendations. Redshift ML makes the model available as a SQL function within the Amazon Redshift data warehouse so you can easily use it in queries and reports. Customers across all verticals are using Redshift ML to derive better insights from their data. For example, Jobcase uses Redshift ML to recommend job search at scale. Magellan RX Management uses Redshift ML to predict drug therapeutic use conditions.

Redshift ML supports supervised learning (regression, binary classification, and multi-class classification) as well as unsupervised learning using K-Means. You can optionally specify XGBoost, MLP, and now linear learner model types, which are supervised learning algorithms used for solving either classification or regression problems, and provide a significant increase in speed over traditional hyperparameter optimization techniques. Amazon Redshift also supports bring-your-own-model to invoke remote SageMaker endpoints.

In this post, we show you how to use Redshift ML to solve regression and classification problems using the SageMaker linear learner algorithm, which explores different training objectives and chooses the best solution from a validation set.

Solution overview

We first solve a linear regression problem, followed by a multi-class classification problem.

The following table shows some common use cases and algorithms used.

| Use Case | Algorithm / Problem Type |
| --- | --- |
| Customer churn prediction | Classification |
| Predict if a sales lead will close | Classification |
| Fraud detection | Classification |
| Price and revenue prediction | Linear regression |
| Customer lifetime value prediction | Linear regression |

To use the linear learner algorithm, you need to provide inputs or columns representing dimensional values and also the label or target, which is the value you’re trying to predict. The linear learner algorithm trains many models in parallel, and automatically determines the most optimized model.

Prerequisites

To get started, we need an Amazon Redshift cluster or an Amazon Redshift Serverless endpoint and an AWS Identity and Access Management (IAM) role attached that provides access to SageMaker and permissions to an Amazon Simple Storage Service (Amazon S3) bucket.

For an introduction to Redshift ML and instructions on setting it up, see Create, train, and deploy machine learning models in Amazon Redshift using SQL with Amazon Redshift ML.

To create a simple cluster with a default IAM role, see Use the default IAM role in Amazon Redshift to simplify accessing other AWS services.

Use case 1: Linear regression

In this use case, we analyze the Abalone dataset to determine the relationship between an abalone’s physical measurements and its age. The age of abalone is determined by cutting the shell through the cone, staining it, and counting the number of rings through a microscope, which is a time-consuming task. We want to predict the age using different physical measurements, which are easier to obtain. The age of abalone is (number of rings + 1.5) years.

Prepare the data

Load the Abalone dataset into Amazon Redshift using the following SQL. You can use the Amazon Redshift query editor v2 or your preferred SQL tool to run these commands.

To create the table, use the following commands:

To load data into Amazon Redshift, use the following COPY command:

We use 80% of the data in the abalone table to train the model, and then test the accuracy of that model by checking whether it correctly predicts the rings label on the remaining 20% of the data. Run the following command to create training and validation tables:

Create a model in Redshift ML

To create a model in Amazon Redshift, use the following command:

We define the following parameters in the CREATE MODEL statement:

- Problem type – We use the linear learner problem type, which is newly added to extend upon typical linear models by training many models in parallel, in a computationally efficient manner.

- Objective – We specified MSE (mean square error) as our objective, which is a common metric for evaluation of regression problems.

- Max runtime – This parameter denotes how long the model training can run. Specifying a larger value may help create a better tuned model. The default value for this parameter is 5400 seconds (90 minutes). For this example, we set it to 15000.

The preceding statement takes a few seconds to complete. It initiates an Amazon SageMaker Autopilot process in the background to automatically build, train, and tune the best ML model for the input data. It then uses Amazon SageMaker Neo to deploy that model locally in the Amazon Redshift cluster or Amazon Redshift Serverless as a user-defined function (UDF). You can use the SHOW MODEL command in Amazon Redshift to track the progress of your model creation, which should be in the READY state within the max_runtime parameter you defined while creating the model.

To check the status of the model, use the following command:

The following is the tabular outcome for the preceding command after model training was done. It took approximately 120 minutes to train the model.

| Key | Value |
| --- | --- |
| Model Name | model_abalone_ring_prediction |
| Schema Name | public |
| Owner | awsuser |
| Creation Time | Tue, 10.05.2022 19:42:33 |
| Model State | READY |
| validation:mse | 4.082088 |
| Estimated Cost | 5.423719 |
| TRAINING DATA: | |
| Query | SELECT SEX, LENGTH, DIAMETER, HEIGHT, WHOLE, SHUCKED, VISCERA, SHELL, RINGS AS TARGET_LABEL FROM ABALONE_TRAINING |
| Target Column | TARGET_LABEL |
| PARAMETERS: | |
| Model Type | linear_learner |
| Problem Type | Regression |
| Objective | MSE |
| AutoML Job Name | redshiftml-20220510194233380173 |
| Function Name | f_abalone_ring_prediction |
| Function Parameters | sex length diameter height whole shucked viscera shell |
| Function Parameter Types | bpchar float8 float8 float8 float8 float8 float8 float8 |
| IAM Role | default-aws-iam-role |
| S3 Bucket | poc-generic-bkt |
| Max Runtime | 15000 |

Model validation

We notice from the preceding table that the validation MSE reported by training is 4.08. Now let’s run the prediction query and validate the accuracy of the model on our own validation dataset:

The following is the outcome from the query:

| mse | rmse |
| --- | --- |
| 5.08 | 2.25 |

The MSE value from the preceding query results indicates that our model’s predictions are reasonably close to the actual values in our validation dataset.
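To make the two metrics concrete, here is a minimal sketch of how MSE and RMSE are computed. It is written in JavaScript rather than SQL and uses made-up ring counts, so it illustrates the arithmetic only, not the query used in the post. Because RMSE is expressed in the same unit as the target, an RMSE of 2.25 means the predictions are off by roughly 2.25 rings.

```javascript
// Mean squared error: the average of the squared prediction errors
function meanSquaredError(actual, predicted) {
  if (actual.length !== predicted.length || actual.length === 0) {
    throw new RangeError("inputs must be non-empty and of equal length");
  }
  let sum = 0;
  for (let i = 0; i < actual.length; i++) {
    const error = actual[i] - predicted[i];
    sum += error * error;
  }
  return sum / actual.length;
}

// Root mean squared error: same unit as the target (rings)
function rootMeanSquaredError(actual, predicted) {
  return Math.sqrt(meanSquaredError(actual, predicted));
}

// Made-up ring counts and predictions, for illustration only
const actualRings = [9, 10, 7, 15];
const predictedRings = [10, 9, 9, 12];
console.log(meanSquaredError(actualRings, predictedRings)); // (1 + 1 + 4 + 9) / 4 = 3.75
console.log(rootMeanSquaredError(actualRings, predictedRings));

// The reported figures are consistent with each other:
console.log(Math.sqrt(5.08).toFixed(2)); // "2.25"
```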

We can also observe that Redshift ML is able to identify the right combination of features to come up with a usable prediction model. We can further check the impact of each attribute and its contribution and weightage in the model selection using the following command:

The following is the outcome, where each attribute weightage is representative of its role in model decision-making:

Use case 2: Multi-class classification

For this use case, we use the Covertype dataset (copyright Jock A. Blackard and Colorado State University), which contains information collected by the US Geological Survey and the US Forest Service about wilderness areas in northern Colorado. This has been downloaded to an S3 bucket to make it simple to create the model. You may want to download the dataset description. This dataset contains various measurements such as elevation, distance to waters and roadways, as well as the wilderness area designation and the soil type. Our ML task is to create a model to predict the cover type for a given area.

Prepare the data

To prepare the data for this model, you need to create and populate the table public.covertype_data in Amazon Redshift using the Covertype dataset. You may use the following SQL in Amazon Redshift query editor v2 or your preferred SQL tool:

Now that our dataset is loaded, we run the following SQL statements to split the data into three sets for training (80%), validation (10%), and prediction (10%). Note that Redshift ML Autopilot automatically splits the data into training and validation, but by splitting it here, you’re able to verify the accuracy of your model.

To prepare the dataset, assign random values to split the data:

Use the following code for the training set:

Use the following code for the validation set:

Use the following code for the test set:

Now that we have our datasets, it’s time to create the model.
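The 80/10/10 split by random value can be sketched outside SQL as follows. This is an illustration under assumptions, not the statements used in the post: the function names and the exact thresholds on a uniform random value in [0, 1) are hypothetical.

```javascript
// Hypothetical sketch: map a random value in [0, 1) to one of three sets
function assignSplit(r) {
  if (r < 0.8) return "training";   // first 80%
  if (r < 0.9) return "validation"; // next 10%
  return "test";                    // final 10%
}

// Assign each row to a set using one random draw per row
function splitRows(rows, random = Math.random) {
  const splits = { training: [], validation: [], test: [] };
  for (const row of rows) {
    splits[assignSplit(random())].push(row);
  }
  return splits;
}

// Made-up rows standing in for the Covertype data
const sampleRows = Array.from({ length: 10000 }, (_, id) => ({ id }));
const sampleSplits = splitRows(sampleRows);
console.log(
  sampleSplits.training.length,
  sampleSplits.validation.length,
  sampleSplits.test.length
);
```

Because the assignment is random, the set sizes are only approximately 80%, 10%, and 10% of the rows, but every row lands in exactly one set.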

Create a model in Redshift ML using linear learner

Run the following SQL command to create your model—note our target is cover_type and we use all the inputs from our training set:

You can use the SHOW MODEL command to view the status of the model.

You can see that the model has an accuracy score of .730279 and is in the READY state. Now let’s run a query to do some validation of our own.

Model validation

Run the following SQL query against the validation table, using the function created by our model:

You can see that our accuracy is very close to our score from the SHOW MODEL output.
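For reference, accuracy here is simply the fraction of rows whose predicted class matches the actual label. A minimal sketch with made-up class labels (the real check runs as SQL against the validation table):

```javascript
// Accuracy: the share of rows where the prediction equals the label
function accuracy(labels, predictions) {
  if (labels.length !== predictions.length || labels.length === 0) {
    throw new RangeError("inputs must be non-empty and of equal length");
  }
  let correct = 0;
  for (let i = 0; i < labels.length; i++) {
    if (labels[i] === predictions[i]) {
      correct++;
    }
  }
  return correct / labels.length;
}

// Made-up cover type classes, for illustration only
const actualCoverTypes = [1, 2, 2, 3, 7, 1, 2, 5];
const predictedCoverTypes = [1, 2, 1, 3, 7, 2, 2, 5];
console.log(accuracy(actualCoverTypes, predictedCoverTypes)); // 6 of 8 correct = 0.75
```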

Run a prediction query

Let’s run a prediction query in Amazon Redshift ML using our function against our test dataset to see the most common class of cover type for the Neota Wilderness Area. We can denote this by checking wildnerness_area2 for a value of 1.

The dataset includes the following wilderness areas:

- Rawah Wilderness Area

- Neota Wilderness Area

- Comanche Peak Wilderness Area

- Cache la Poudre Wilderness Area

The cover types are in seven different classes:

- Spruce/Fir

- Lodgepole Pine

- Ponderosa Pine

- Cottonwood/Willow

- Aspen

- Douglas Fir

- Krummholz

There are also 40 different soil type definitions, which you can see in the dataset description, with a value of 0 or 1 to note if it’s applicable for a particular row. The following are a few example soil types:

- Cathedral family – Rock outcrop complex, extremely stony

- Vanet-Ratake families – Rocky outcrop complex, very stony

- Haploborolis family – Rock outcrop complex, rubbly

- Ratake family – Rock outcrop complex, rubbly

- Vanet family – Rock outcrop complex, rubbly

- Vanet-Wetmore families – Rock outcrop complex, stony

Our model has predicted that the majority of cover is Spruce and Fir.
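Finding the most common predicted class amounts to a group-and-count. The sketch below shows the idea in JavaScript with made-up predictions; it assumes the classes are numbered 1 through 7 in the order listed above, and `mostCommonCoverType` is a hypothetical helper, not part of Redshift ML.

```javascript
// Assumption: class numbers 1-7 follow the order of the list above
const COVER_TYPES = [
  "Spruce/Fir", "Lodgepole Pine", "Ponderosa Pine", "Cottonwood/Willow",
  "Aspen", "Douglas Fir", "Krummholz",
];

// Count predictions per class and return the most frequent one
function mostCommonCoverType(predictedClasses) {
  const counts = new Map();
  for (const cls of predictedClasses) {
    counts.set(cls, (counts.get(cls) || 0) + 1);
  }
  let best = null;
  let bestCount = 0;
  for (const [cls, count] of counts) {
    if (count > bestCount) {
      best = cls;
      bestCount = count;
    }
  }
  if (best === null) {
    return null; // no predictions supplied
  }
  return { coverType: COVER_TYPES[best - 1], count: bestCount };
}

// Made-up predictions for rows in one wilderness area
const neotaPredictions = [1, 1, 2, 1, 7, 1, 2];
console.log(mostCommonCoverType(neotaPredictions));
```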

You can experiment with various combinations, such as determining which soil types are most likely to occur in a predicted cover type.

Conclusion

Redshift ML makes it easy for users of all levels to create, train, and tune models using a SQL interface. In this post, we walked you through how to use the linear learner algorithm to create regression and multi-class classification models. You can then use those models to make predictions using simple SQL commands and gain valuable insights.

To learn more about Redshift ML, visit Amazon Redshift ML.

About the Authors

Phil Bates is a Senior Analytics Specialist Solutions Architect at AWS with over 25 years of data warehouse experience.

Sohaib Katariwala is an Analytics Specialist Solutions Architect at AWS. He has 12+ years of experience helping organizations derive insights from their data.

Tahir Aziz is an Analytics Solution Architect at AWS. He has worked with building data warehouses and big data solutions for over 13 years. He loves to help customers design end-to-end analytics solutions on AWS. Outside of work, he enjoys traveling and cooking.

Jiayuan Chen is a Senior Software Development Engineer at AWS. He is passionate about designing and building data-intensive applications, and has been working in the areas of data lake, query engine, ingestion, and analytics. He keeps up with latest technologies and innovates things that spark joy.

Debu Panda, a Senior Manager, Product Management at AWS, is an industry leader in analytics, application platform, and database technologies, and has more than 25 years of experience in the IT world. Debu has published numerous articles on analytics, enterprise Java, and databases and has presented at multiple conferences such as re:Invent, Oracle Open World, and Java One. He is the lead author of EJB 3 in Action (Manning Publications 2007, 2014) and Middleware Management (Packt).

Bosworth Field: The Climactic Final Battle of the Wars of the Roses

Post Syndicated from Geographics original https://www.youtube.com/watch?v=dO1l9cN0dV8

Receive notification and automate YouTube in Home Assistant

Post Syndicated from BeardedTinker original https://www.youtube.com/watch?v=-xmQ16oMik4

openSUSE Leap Micro 5.2 released

Post Syndicated from original https://lwn.net/Articles/895668/

OpenSUSE Leap Micro is a new distribution, described as “an ultra-reliable, lightweight operating system built for containerized and virtualized workloads”. The initial release (5.2) is now available. More information can be found in the 5.2 release notes.

[$] Snapshots, inodes, and filesystem identifiers

Post Syndicated from original https://lwn.net/Articles/895444/

A longstanding problem with Btrfs subvolumes and duplicate inode numbers was the topic of a late-breaking filesystem session at the 2022 Linux Storage, Filesystem, Memory-management and BPF Summit (LSFMM). The problem had cropped up in the bcachefs session but Josef Bacik deferred that discussion to this just-created session, which he led. The problem is not limited to Btrfs, though, since filesystem snapshots for other filesystems can have similar kinds of problems.

Find, Fix, and Report OWASP Top 10 Vulnerabilities in InsightAppSec

Post Syndicated from Adrian Stewart original https://blog.rapid7.com/2022/05/18/find-fix-and-report-owasp-top-10-vulnerabilities-in-insightappsec/

With the release of the new 2021 OWASP Top 10 late last year, OWASP made some fundamental and impactful changes to its ubiquitous reference framework. We published a high-level breakdown of the changes, followed by some deep dives into specific types of threats that made the new Top 10.

But the question remains: How do you apply the latest iteration of the OWASP Top 10 to your application security program?

To help answer this question, we released an OWASP 2021 Attack Template and Report for InsightAppSec. This new feature helps you use the updated categories from OWASP to inform and focus your AppSec program, work closely with development teams to remediate the discovered vulnerabilities, and move toward best practices for achieving compliance.

Let’s take a closer look.

Find

Before we can fix vulnerabilities, we need to find them, and to do that, we need to scan. We may know where to look, but we often lack the specialist knowledge of industry trends and the general threat landscape required to determine what we should be looking for.

Luckily, the OWASP organization has done the hard work for us. The new InsightAppSec OWASP 2021 attack template includes all the relevant attacks for the categories defined in the latest OWASP version.

The new attack module enables you to leverage the knowledge that went into the latest version of the OWASP Top 10 – even with little or no subject matter knowledge – to generate a focused, hopefully small, set of vulnerabilities. Where security and development resources are over-utilized and expensive, using the OWASP scan template ensures we are focusing on the right vulnerabilities.

Fix

Finding vulnerabilities is only part of the journey. If you can’t enable your development teams to remediate vulnerabilities, the entire exercise becomes academic.

That’s why InsightAppSec provides guidance in the form of detailed remediation reports, specifically formatted to provide development teams with all the information and tools required to confirm and remediate the vulnerabilities.

The remediation report includes the Attack Replay feature found in the product that allows developers to quickly and easily validate the vulnerabilities by replaying the traffic used to identify them.

Report

Although OWASP is not a compliance standard, auditors may view the inclusion of Top 10 scanning as an indication of intent toward good practice, which therefore implies adherence to other compliance standards.

To facilitate this and make it easy for organizations to show good practice, InsightAppSec provides an OWASP report that automatically groups vulnerabilities into the relevant OWASP categories but also includes areas where no vulns have been found.

The OWASP 2021 report gives you an excellent overview of the categories you are successfully addressing and those that may require more focus and attention, giving you actionable information to move your security program forward.

By leveraging the analysis and intel of OWASP and providing workflows right in the product, InsightAppSec gives you control over your AppSec program from scan to remediation enabling the right people, at the right time, with the right information.

Additional reading:

- XSS in JSON: Old-School Attacks for Modern Applications

- Cloud-Native Application Protection (CNAPP): What’s Behind the Hype?

- Rapid7 Named a Visionary in 2022 Magic Quadrant™ for Application Security Testing Second Year in a Row

- Let’s Dance: InsightAppSec and tCell Bring New DevSecOps Improvements in Q1

Yet another set of stable kernel updates

Security updates for Wednesday

Post Syndicated from original https://lwn.net/Articles/895642/

Security updates have been issued by Debian (elog, needrestart, openssl, and waitress), Fedora (curl, libxml2, slurm, and vim), Scientific Linux (zlib), SUSE (e2fsprogs, nodejs10, php72, and thunderbird), and Ubuntu (apport, clamav, needrestart, and pcre3).

Internship Experience: Software Development Intern

Post Syndicated from Ulysses Kee original https://blog.cloudflare.com/internship-experience-software-development-intern/

Before we dive into my experience interning at Cloudflare, let me quickly introduce myself. I am currently a master’s student at the National University of Singapore (NUS) studying Computer Science. I am passionate about building software that improves people’s lives and making the Internet a better place for everyone. Back in December 2021, I joined Cloudflare as a Software Development Intern on the Partnerships team to help improve the experience that Partners have when using the platform. I was extremely excited about this opportunity and jumped at the prospect of working on serverless technology to build viable tools for our partners and customers. In this blog post, I detail my experience working at Cloudflare and the many highlights of my internship.

Interview Experience

The process began for me back when I was taking a software engineering module at NUS where one of my classmates had shared a job post for an internship at Cloudflare. I had known about Cloudflare’s DNS service prior and was really excited to learn more about the internship opportunity because I really resonated with the company’s mission to help build a better Internet.

I knew right away that this would be a great opportunity and submitted my application. Soon after, I heard back from the recruiting team and went through the interview process – the entire interview process was extremely accommodating and is definitely the most enjoyable interview experience I have had. Throughout the process, I was constantly asked about the kind of things I would like to work on and the relevance of the work that I would be doing. I felt that this thorough communication carried on throughout the internship and really was a cornerstone of my experience interning at Cloudflare.

My Internship

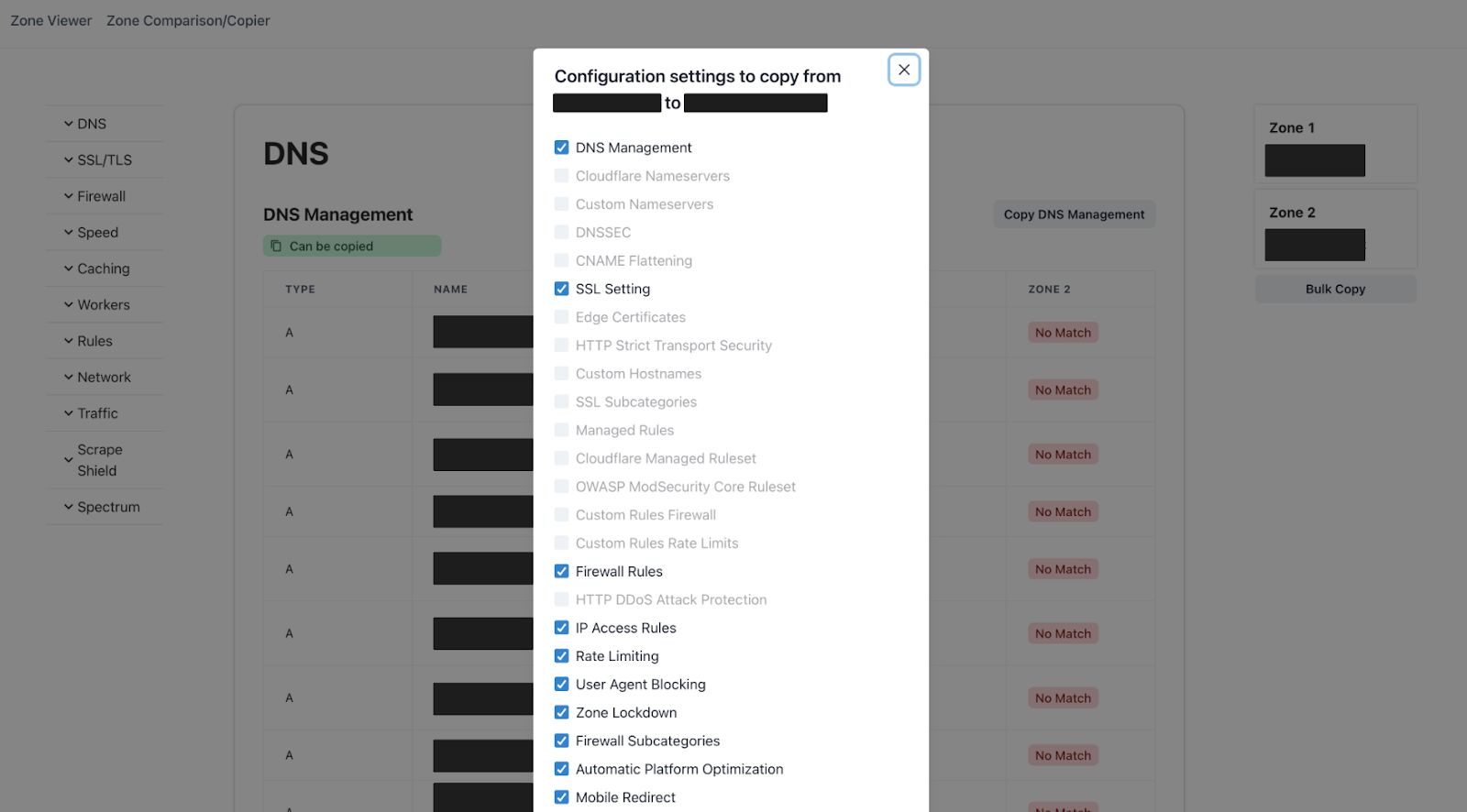

My internship began with onboarding and training. Afterwards, I had discussions with my mentor, Ayush Verma, on the projects we aimed to complete during the internship and the order of objectives. The main issue we wanted to address was the current manual process that our internal teams and partners go through when they want to duplicate the configuration settings on a zone, or when they want to compare one zone to other zones to ensure that there are no misconfigurations. As you can imagine, with the number of different configurations offered on the Cloudflare dashboard for customers, it could take significant time to copy over every setting and rule manually from one zone to another. Additionally, this process, when done manually, carries a risk of misconfiguration due to human error. Furthermore, as more and more customers onboard different zones onto Cloudflare, there needs to be a more automated and improved way for them to set up these configurations.

Initially, we discussed using Terraform, as Cloudflare already supports Terraform automation. However, this approach would only cater to customers and users that have more technical resources and, in true Cloudflare spirit, we wanted to keep it simple so that it could be used by anyone and everyone. Therefore, we decided to leverage the publicly available Cloudflare APIs and create a browser-based application that interacts with these APIs to display configurations and make changes easily from a simple UI.

With the end goal of simplifying the experience for our partners and customers in duplicating zone configurations, we decided to build a Zone Copier web application solely built on Cloudflare Workers. This tool would, in a click of a button, automatically copy over every setting that can be copied from one zone to another, significantly reducing the amount of time and effort required to make the changes.

Alongside the Zone Copier, we would have some auxiliary tools, such as a Zone Viewer and a Zone Comparison tool, where a customer can easily get a full view of their configurations on a single webpage and compare the different zones that they use. These other applications improve upon the existing methods through which Cloudflare users can view their zone configurations, and allow for direct comparison between different zones.
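To give a rough idea of what comparing two zones might involve, here is a minimal TypeScript sketch. It is not the code from the internship project: the `ZoneSettings` shape (a flat map of setting id to value), the `SettingDiff` type, and the `compareZones` function are all hypothetical names chosen for illustration.

```typescript
// Hypothetical shape: a flat map of setting id -> value for one zone.
// This is an assumption for illustration, not the tool's real data model.
type ZoneSettings = Record<string, unknown>;

interface SettingDiff {
  id: string;
  left: unknown;  // value in the first zone (undefined if absent)
  right: unknown; // value in the second zone (undefined if absent)
}

// Return every setting whose value differs between the two zones,
// including settings that exist in only one of them.
function compareZones(left: ZoneSettings, right: ZoneSettings): SettingDiff[] {
  // Union of setting ids from both zones, sorted for stable output.
  const ids = Object.keys({ ...left, ...right }).sort();
  const diffs: SettingDiff[] = [];
  for (const id of ids) {
    // JSON.stringify gives a simple structural comparison; note that it is
    // sensitive to key order inside nested objects.
    if (JSON.stringify(left[id]) !== JSON.stringify(right[id])) {
      diffs.push({ id, left: left[id], right: right[id] });
    }
  }
  return diffs;
}
```

A comparison page could render one row per returned `SettingDiff`, and a copier could use the same list to decide which settings need to be written to the target zone.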

Importantly, these applications are not to replace the Cloudflare Dashboard, but to complement it instead – for deeper dives into a single particular configuration setting, the Cloudflare Dashboard remains the way to go.

To begin building the web application, I spent the first few weeks diving into the publicly available APIs offered by Cloudflare as part of the v4 API to verify the outputs of each endpoint, and the type of data that would be sent as a response from a request. This took much longer than expected as certain endpoints provided different default responses for a zone that has either an empty setting – for example, not having any Firewall Rules created – or uses a nested structure for its relevant response. These different potential responses have to be examined so that when the web application calls the respective API endpoint, the responses are handled appropriately. This process was quite manual as each endpoint had to be verified individually to ensure the output would work seamlessly with the application.
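The kind of response handling described above could be sketched as follows. This is a minimal TypeScript illustration under stated assumptions: the envelope fields (`success`, `errors`, `result`) follow the general shape of Cloudflare v4 API responses, while `normalizeResult` and its `nestedKey` parameter are hypothetical helpers, and the specific empty and nested cases are examples rather than documented behavior of any particular endpoint.

```typescript
// General shape of a v4 API response envelope. The payload under `result`
// varies per endpoint, so it is typed as unknown here.
interface ApiEnvelope {
  success: boolean;
  errors: { code: number; message: string }[];
  result: unknown;
}

// Hypothetical helper: coerce `result` into a list so the UI can treat
// every endpoint the same way. `nestedKey` is an assumed option for
// endpoints that wrap their items inside an object.
function normalizeResult(body: ApiEnvelope, nestedKey?: string): unknown[] {
  if (!body.success) {
    const detail = body.errors.map((e) => `${e.code}: ${e.message}`).join("; ");
    throw new Error(`API request failed (${detail})`);
  }
  let result: unknown = body.result;
  // Unwrap a nested structure when the caller names the key holding the items.
  if (
    nestedKey !== undefined &&
    typeof result === "object" &&
    result !== null &&
    !Array.isArray(result)
  ) {
    result = (result as Record<string, unknown>)[nestedKey];
  }
  // An empty setting becomes an empty list rather than null or undefined.
  if (result === null || result === undefined) return [];
  // A single object is wrapped so callers always receive an array.
  return Array.isArray(result) ? result : [result];
}
```

Centralizing these cases in one function means each endpoint only has to be verified once, and the rest of the application can assume a uniform list.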

Once I completed my research, I was able to start designing the web application. Building the web application was a very interesting experience as the stack rested solely on Workers, a serverless application platform. My prior web applications required deploying a server built using Express and Node.js, whereas for my internship project, I relied completely on a backend built using the itty-router library on Workers to interface with the publicly available Cloudflare APIs. I found this extremely exciting as building a serverless application required less overhead compared to setting up a server and deploying it, and using Workers itself has many other added benefits such as zero cold starts. This introduction to serverless technology and my experience deep-diving into the capabilities of Workers have really opened my eyes to the possibilities that Workers as a platform can offer. With Workers, you can deploy any application on Cloudflare’s global network like I did!

For the frontend of the web application, I used React and the Chakra-UI library to build the user interface on which the Zone Viewer, Zone Comparison, and Zone Copier are based. The routing between different pages was done using React Router and the application is deployed directly through Workers.

Here is a screenshot of the application:

Presenting the prototype application