Post Syndicated from original https://lwn.net/Articles/883697/

The LWN.net Weekly Edition for February 10, 2022 is available.

Post Syndicated from Crosstalk Solutions original https://www.youtube.com/watch?v=tTQkQBpF1BY

Post Syndicated from Netflix Technology Blog original https://netflixtechblog.com/data-pipeline-asset-management-with-dataflow-86525b3e21ca

by Sam Setegne, Jai Balani, Olek Gorajek

The problem of managing scheduled workflows and their assets is as old as the use of the cron daemon in early Unix operating systems. The design of a cron job is simple: you take some system command, pick the schedule to run it on, and you are done. Example:

0 0 * * MON /home/alice/backup.sh

In the above example the system would wake up every Monday at midnight and execute the backup.sh script. Simple, right? But what if the script does not exist in the given path, or what if it existed initially but then Alice let Bob access her home directory and he accidentally deleted it? Or what if Alice wanted to add new backup functionality and she accidentally broke existing code while updating it?

The answers to these questions are what we would like to address in this article, along with a clean solution to the problem.

Let’s define some requirements that we are interested in delivering to the Netflix data engineers or anyone who would like to schedule a workflow with some external assets in it. By external assets we simply mean some executable carrying the actual business logic of the job. It could be a JAR compiled from Scala, a Python script or module, or a simple SQL file. The important thing is that this business logic can be built in a separate repository and maintained independently from the workflow definition. Keeping all that in mind we would like to achieve the following properties for the whole workflow deployment:

While all the above goals are our North Star, we also don’t want to negatively affect fast deployment, high availability and arbitrary life span of any deployed asset.

The basic approach to pulling down arbitrary workflow resources during workflow execution has been known to mankind since the invention of cron, and with the advent of “infinite” cloud storage systems like S3, this approach has served us for many years. Its apparent flexibility and convenience can often fool us into thinking that by simply replacing the asset in the S3 location we can, without any hassle, introduce changes to our business logic. This method often proves very troublesome especially if there is more than one engineer working on the same pipeline and they are not all aware of the other folks’ “deployment process”.

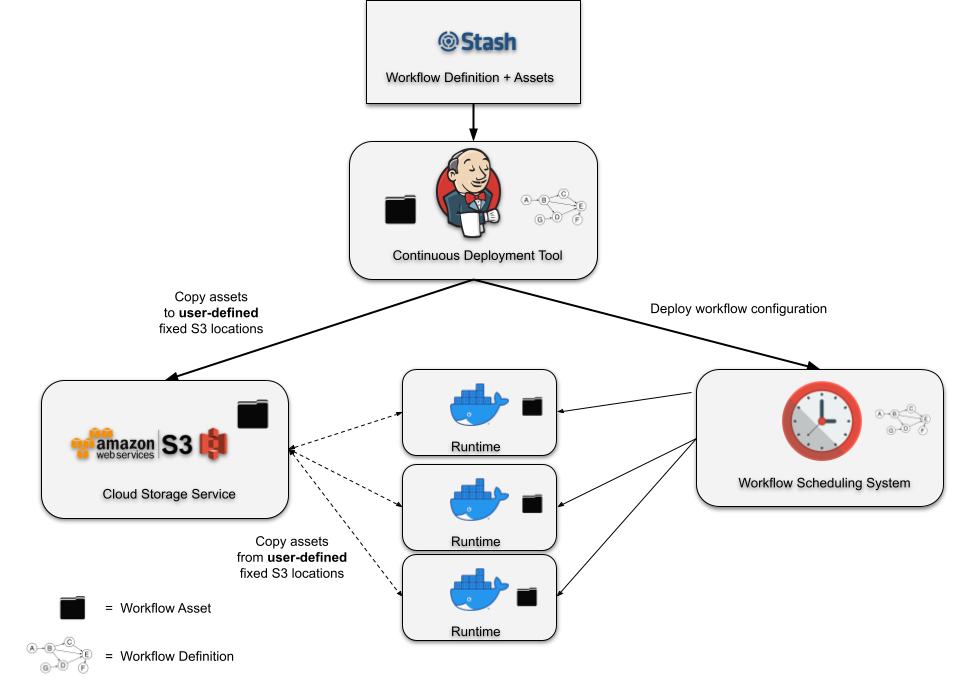

A slightly improved approach is shown in Figure 1.

In Figure 1, you can see an illustration of a typical deployment pipeline manually constructed by a user for an individual project. The continuous deployment tool submits a workflow definition with pointers to assets in fixed S3 locations. These assets are then separately deployed to these fixed locations. At runtime, the assets are retrieved from the defined locations in S3 and executed in the runtime container. Despite requiring users to construct the deployment pipeline manually, often by writing their own scripts from scratch, this design works and has been successfully used by many teams for years. That being said, it does have some drawbacks that are revealed as you try to add any amount of complexity to your deployment logic. Let’s discuss a few of them.

In any production pipeline, you want the flexibility of having a “safe” alternative deployment logic. For example, you may want to build your Scala code and deploy it to an alternative location in S3 while pushing a sandbox version of your workflow that points to this alternative location. Something this simple gets very complicated very quickly and requires the user to consider a number of things. Where should this alternative location be in S3? Is a single location enough? How do you set up your deployment logic to know when to deploy the workflow to a test or dev environment? Answers to these questions often end up being more custom logic inside of the user’s deployment scripts.

When you deploy a workflow, you really want it to encapsulate an atomic and idempotent unit of work. Part of the reason is the desire to be able to roll back to a previous workflow version, knowing that it will always behave as it did in previous runs. There can be many reasons to roll back, but the typical one is a regression in a recent deployment that was not caught during testing. In the current design, reverting to a previous workflow definition in your scheduling system is not enough! You have to rebuild your assets from source and move them to the fixed S3 location that your workflow points to. To enable atomic rollbacks, you can add more custom logic to your deployment scripts to always deploy your assets to a new location and generate new pointers for your workflows to use, but that comes with higher complexity that often just doesn’t feel worth it. More commonly, teams will opt to do more testing to try to catch regressions before deploying to production, and will accept the extra burden of rebuilding all of their workflow dependencies in the event of a regression.

At runtime, the container must reach out to a user-defined storage location to retrieve the assets required. This causes the user-managed storage system to be a critical runtime dependency. If we zoom out to look at an entire workflow management system, the runtime dependencies can become unwieldy if it relies on various storage systems that are arbitrarily defined by the workflow developers!

In an attempt to deliver a simple and robust solution for managed workflow deployments, we created a command-line utility called Dataflow. It is a Python-based CLI and library that can be installed anywhere inside the Netflix environment. This utility can build and configure workflow definitions and their assets during testing and deployment. See the diagram below:

In Figure 2, we show a variation of the typical manually constructed deployment pipeline. Every asset deployment is released to some newly calculated UUID. The workflow definition can then identify a specific asset by its UUID. Deploying the workflow to the scheduling system produces a “Deployment Bundle”. The bundle includes all of the assets that have been referenced by the workflow definition and the entire bundle is deployed to the scheduling system. At every scheduled runtime, the scheduling system can create an instance of your workflow without having to gather runtime dependencies from external systems.
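The design described for Figure 2 can be illustrated with a few lines of Python. This is only a minimal in-memory sketch, not Dataflow’s implementation: `AssetRegistry`, `deploy_asset`, and `build_bundle` are hypothetical names, and a real system would write to durable storage rather than a dictionary.

```python
import uuid


class AssetRegistry:
    """Hypothetical in-memory stand-in for an immutable asset store."""

    def __init__(self):
        self._assets = {}

    def deploy_asset(self, payload: bytes) -> str:
        # Every deployment gets a fresh identifier; nothing is overwritten.
        asset_id = str(uuid.uuid4())
        self._assets[asset_id] = payload
        return asset_id

    def get(self, asset_id: str) -> bytes:
        return self._assets[asset_id]


def build_bundle(workflow: dict, registry: AssetRegistry) -> dict:
    """Copy every referenced asset into the bundle so that running the
    workflow needs no lookup in an external system."""
    assets = {aid: registry.get(aid) for aid in workflow["asset_ids"]}
    return {"workflow": workflow, "assets": assets}


registry = AssetRegistry()
v1 = registry.deploy_asset(b"business logic v1")
v2 = registry.deploy_asset(b"business logic v2")

bundle_v1 = build_bundle({"name": "scala-workflow", "asset_ids": [v1]}, registry)
bundle_v2 = build_bundle({"name": "scala-workflow", "asset_ids": [v2]}, registry)
```

Because the second deployment does not overwrite the first, rolling back in this sketch amounts to redeploying `bundle_v1`, which still carries the exact bytes it was built with.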

The asset management system that we’ve created for Dataflow provides a strong abstraction over this deployment design. Deploying the asset, generating the UUID, and building the deployment bundle are all handled automatically by the Dataflow build logic. The user does not need to be aware of anything that’s happening on S3, nor that S3 is being used at all! Instead, the user is given a flexible UUID referencing system that’s layered on top of our scheduling system’s workflow DSL. Later in the article we’ll cover this referencing system in some detail. But first, let’s look at an example of deploying an asset and a workflow.

Let’s walk through an example of a workflow asset build and deployment. Let’s assume we have a repository called stranger-data with the following structure:

.
├── dataflow.yaml
├── pyspark-workflow
│   ├── main.sch.yaml
│   └── hello_world
│       ├── ...
│       └── setup.py
└── scala-workflow
    ├── build.gradle
    ├── main.sch.yaml
    └── src
        ├── main
        │   └── ...
        └── test
            └── ...

Let’s now use the Dataflow command to see what project components are visible:

stranger-data$ dataflow project list

Python Assets:

-> ./pyspark-workflow/hello_world/setup.py

Summary: 1 found.

Gradle Assets:

-> ./scala-workflow/build.gradle

Summary: 1 found.

Scheduler Workflows:

-> ./scala-workflow/main.sch.yaml

-> ./pyspark-workflow/main.sch.yaml

Summary: 2 found.

Before deploying the assets, and especially if we made any changes to them, we can run unit tests to make sure that we didn’t break anything. In a typical Dataflow configuration this manual testing is optional because Dataflow continuous integration tests will do that for us on any pull-request.

stranger-data$ dataflow project test

Testing Python Assets:

-> ./pyspark-workflow/hello_world/setup.py... PASSED

Summary: 1 successful, 0 failed.

Testing Gradle Assets:

-> ./scala-workflow/build.gradle... PASSED

Summary: 1 successful, 0 failed.

Building Scheduler Workflows:

-> ./scala-workflow/main.sch.yaml... CREATED ./.workflows/scala-workflow.main.sch.rendered.yaml

-> ./pyspark-workflow/main.sch.yaml... CREATED ./.workflows/pyspark-workflow.main.sch.rendered.yaml

Summary: 2 successful, 0 failed.

Testing Scheduler Workflows:

-> ./scala-workflow/main.sch.yaml... PASSED

-> ./pyspark-workflow/main.sch.yaml... PASSED

Summary: 2 successful, 0 failed.

Notice that the test command we use above not only executes the unit test suites defined in our Scala and Python sub-projects, but also renders and statically validates all the workflow definitions in our repo. More on that later.

Assuming all tests passed, let’s now use the Dataflow command to build and deploy a new version of the Scala and Python assets into the Dataflow asset registry.

stranger-data$ dataflow project deploy

Building Python Assets:

-> ./pyspark-workflow/hello_world/setup.py... CREATED ./pyspark-workflow/hello_world/dist/hello_world-0.0.1-py3.7.egg

Summary: 1 successful, 0 failed.

Deploying Python Assets:

-> ./pyspark-workflow/hello_world/setup.py... DEPLOYED AS dataflow.egg.hello_world.user.stranger-data.master.39206ee8.3

Summary: 1 successful, 0 failed.

Building Gradle Assets:

-> ./scala-workflow/build.gradle... CREATED ./scala-workflow/build/libs/scala-workflow-all.jar

Summary: 1 successful, 0 failed.

Deploying Gradle Assets:

-> ./scala-workflow/build.gradle... DEPLOYED AS dataflow.jar.scala-workflow.user.stranger-data.master.39206ee8.11

Summary: 1 successful, 0 failed.

...

Notice that the above command:

We can check the existing assets of any given type deployed to any given namespace using the following Dataflow command:

stranger-data$ dataflow project list eggs --namespace hello_world --deployed

Project namespaces with deployed EGGS:

hello_world

-> dataflow.egg.hello_world.user.stranger-data.master.39206ee8.3

-> dataflow.egg.hello_world.user.stranger-data.master.39206ee8.2

-> dataflow.egg.hello_world.user.stranger-data.master.39206ee8.1

The above list could come in handy, for example if we needed to find and access an older version of an asset deployed from a given branch and commit hash.
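Finding an older version in such a list is easier once the identifiers are split into fields. The sketch below assumes the dotted identifier consists of, in order, the asset type, namespace, repository owner, repository name, branch, commit hash, and build number; that layout is inferred from the examples in this article, and `parse_asset_id` is a hypothetical helper rather than part of Dataflow. It would also fail on branch names that contain dots.

```python
from typing import NamedTuple


class AssetId(NamedTuple):
    asset_type: str
    namespace: str
    repo_owner: str
    repo_name: str
    branch: str
    commit: str
    build: int


def parse_asset_id(identifier: str) -> AssetId:
    # Assumed layout:
    # dataflow.<type>.<namespace>.<owner>.<repo>.<branch>.<commit>.<build>
    parts = identifier.split(".")
    if len(parts) != 8 or parts[0] != "dataflow":
        raise ValueError(f"unexpected asset identifier: {identifier!r}")
    *fields, build = parts[1:]
    return AssetId(*fields, build=int(build))


deployed = [
    "dataflow.egg.hello_world.user.stranger-data.master.39206ee8.3",
    "dataflow.egg.hello_world.user.stranger-data.master.39206ee8.2",
    "dataflow.egg.hello_world.user.stranger-data.master.39206ee8.1",
]
parsed = [parse_asset_id(d) for d in deployed]
oldest = min(parsed, key=lambda a: a.build)
```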

Now let’s have a look at the build and deployment of the workflow definition which references the above assets as part of its pipeline DAG.

Let’s list the workflow definitions in our repo again:

stranger-data$ dataflow project list workflows

Scheduler Workflows:

-> ./scala-workflow/main.sch.yaml

-> ./pyspark-workflow/main.sch.yaml

Summary: 2 found.

And let’s look at part of the content of one of these workflows:

stranger-data$ cat ./scala-workflow/main.sch.yaml

...

dag:
- ddl -> write
- write -> audit
- audit -> publish
jobs:
- ddl: ...
- write:
    spark:
      script: ${dataflow.jar.scala-workflow}
      class: com.netflix.spark.ExampleApp
      conf: ...
      params: ...
- audit: ...
- publish: ...

...

You can see from the above snippet that the write job wants to access some version of the JAR from the scala-workflow namespace. A typical workflow definition, written in YAML, does not need any compilation before it is shipped to the Scheduler API, but Dataflow designates a special step called “rendering” to substitute all of the Dataflow variables and build the final version.

The above expression ${dataflow.jar.scala-workflow} means that the workflow will be rendered and deployed with the latest version of the scala-workflow JAR available at the time of the workflow deployment. It is possible that the JAR is built as part of the same repository in which case the new build of the JAR and a new version of the workflow may be coming from the same deployment. But the JAR may be built as part of a completely different project and in that case the testing and deployment of the new workflow version can be completely decoupled.

We showed above how one would request the latest asset version available during deployment, but Dataflow asset management supports two more asset access patterns. The obvious next one is to specify an asset by all of its attributes: asset type, asset namespace, git repo owner, git repo name, git branch name, commit hash, and consecutive build number. There is also a middle-ground method that picks a specific build for a given namespace and git branch, which can help during testing and development. All of this is part of the user interface for determining how the deployment bundle will be created. See the diagram below for a visual illustration.

In short, using the above variables gives the user full flexibility and allows them to pick any version of any asset in any workflow.
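To make the rendering step concrete, here is a minimal sketch of how the short “latest version” form of a variable could be substituted. It is an illustration under stated assumptions, not Dataflow’s code: the `REGISTRY` dictionary and the `render` function are hypothetical, the location string is copied from the rendered example in this article, and “latest” is assumed to mean the highest build number. The two other access patterns are not handled.

```python
import re

# Hypothetical registry: (asset type, namespace) -> {build number: location}.
# The location imitates the rendered example in this article.
REGISTRY = {
    ("jar", "scala-workflow"): {
        1: "s3://dataflow/jars/scala-workflow/user/stranger-data/master/39206ee8/1.jar",
    },
}

# Matches only the short "latest version" form: ${dataflow.<type>.<namespace>}
VARIABLE = re.compile(r"\$\{dataflow\.(?P<type>[\w-]+)\.(?P<namespace>[\w-]+)\}")


def render(text, registry=REGISTRY):
    def latest(match):
        builds = registry[(match["type"], match["namespace"])]
        return builds[max(builds)]  # assumption: highest build number is the latest

    return VARIABLE.sub(latest, text)


rendered = render("script: ${dataflow.jar.scala-workflow}")
```

Lines without a Dataflow variable pass through unchanged, so the rest of the workflow definition is left as written.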

An example of the workflow deployment with the rendering step is shown below:

stranger-data$ dataflow project deploy

...

Building Scheduler Workflows:

-> ./scala-workflow/main.sch.yaml... CREATED ./.workflows/scala-workflow.main.sch.rendered.yaml

-> ./pyspark-workflow/main.sch.yaml... CREATED ./.workflows/pyspark-workflow.main.sch.rendered.yaml

Summary: 2 successful, 0 failed.

Deploying Scheduler Workflows:

-> ./scala-workflow/main.sch.yaml… DEPLOYED AS https://hawkins.com/scheduler/sandbox:user.stranger-data.scala-workflow

-> ./pyspark-workflow/main.sch.yaml… DEPLOYED AS https://hawkins.com/scheduler/sandbox:user.stranger-data.pyspark-workflow

Summary: 2 successful, 0 failed.

And here you can see what the workflow definition looks like before it is sent to the Scheduler API and registered as the latest version. Notice the value of the script variable of the write job. The original code says ${dataflow.jar.scala-workflow}, and in the rendered version it is translated to a specific file pointer:

stranger-data$ cat ./.workflows/scala-workflow.main.sch.rendered.yaml

...

dag:
- ddl -> write
- write -> audit
- audit -> publish
jobs:
- ddl: ...
- write:
    spark:
      script: s3://dataflow/jars/scala-workflow/user/stranger-data/master/39206ee8/1.jar
      class: com.netflix.spark.ExampleApp
      conf: ...
      params: ...
- audit: ...
- publish: ...

...

The Infrastructure DSE team at Netflix is responsible for providing insights into data that can help the Netflix platform and service scale in a secure and effective way. Our team members partner with business units like Platform, OpenConnect, InfoSec and engage in enterprise level initiatives on a regular basis.

One side effect of such wide engagement is that over the years our repository evolved into a mono-repo with each module requiring a customized build, testing and deployment strategy packaged into a single Jenkins job. This setup required constant upkeep and also meant every time we had a build failure multiple people needed to spend a lot of time in communication to ensure they did not step on each other.

Last quarter we decided to split the mono-repo into separate modules and adopt Dataflow as our asset orchestration tool. Post deployment, the team relies on Dataflow for automated execution of unit tests, management and deployment of workflow related assets.

By the end of the migration process our Jenkins configuration went from:

to:

cd /dataflow_workspace

dataflow project deploy

The simplicity of deployment enabled the team to focus on the problems they set out to solve while the branch based customization gave us the flexibility to be our most effective at solving them.

This new method available to Netflix data engineers makes workflow management easier, more transparent, and more reliable. And while it remains fairly easy and safe to build your business logic code (in Scala, Python, etc.) in the same repository as the workflow definition that invokes it, the new Dataflow versioned asset registry makes it even easier to build that code completely independently and then reference it safely inside data pipelines in any other Netflix repository, thus enabling easy code sharing and reuse.

One more aspect of data workflow development enabled by this functionality is what we call branch-driven deployment. This approach lets multiple versions of your business logic and workflows run at the same time in the scheduler ecosystem. That makes it easy not only for individual users to run isolated versions of the code during development, but also to define isolated staging environments through which the code can pass before it reaches production. For the workflows to be used safely in that configuration, they must comply with a few simple rules with regard to the parametrization of their inputs and outputs, but let’s leave this subject for another blog post.

Special thanks to Peter Volpe, Harrington Joseph and Daniel Watson for the initial design review.

Data pipeline asset management with Dataflow was originally published in Netflix TechBlog on Medium, where people are continuing the conversation by highlighting and responding to this story.

Post Syndicated from Luca Mezzalira original https://aws.amazon.com/blogs/architecture/architecting-for-machine-learning/

Though it seems like something out of a sci-fi movie, machine learning (ML) is part of our day-to-day lives. So often, in fact, that we may not always notice it. For example, social networks and mobile applications use ML to assess user patterns and interactions to deliver a more personalized experience.

AWS services provide many options for the integration of ML. In this post, we will show you some use cases that can enhance your platforms and integrate ML into your production systems.

Performing A/B testing on production traffic to compare a new ML model with the old model is a recommended step after offline evaluation.

This blog post explains how A/B testing works and how it can be combined with multi-armed bandit testing to gradually send traffic to the more effective variants during the experiment. It will teach you how to build it with AWS Cloud Development Kit (AWS CDK), architect your system for MLOps, and automate the deployment of the solutions for A/B testing.
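As a rough illustration of the bandit idea, the sketch below uses an epsilon-greedy policy, which is one simple bandit strategy and not necessarily the one used in the referenced post. The class name, the variant names, and the notion of a “conversion” are all assumptions made for the example.

```python
import random


class EpsilonGreedyRouter:
    """Route requests between model variants with an epsilon-greedy policy."""

    def __init__(self, variants, epsilon=0.1, rng=None):
        self.epsilon = epsilon
        self.rng = rng or random.Random()
        self.shown = {v: 0 for v in variants}
        self.converted = {v: 0 for v in variants}

    def rate(self, variant):
        shown = self.shown[variant]
        return self.converted[variant] / shown if shown else 0.0

    def choose(self):
        # Explore with probability epsilon, otherwise exploit the best variant so far.
        if self.rng.random() < self.epsilon:
            return self.rng.choice(list(self.shown))
        return max(self.shown, key=self.rate)

    def record(self, variant, success):
        self.shown[variant] += 1
        self.converted[variant] += int(success)


# epsilon=0.0 disables exploration, only to keep this usage example deterministic.
router = EpsilonGreedyRouter(["model_a", "model_b"], epsilon=0.0)
router.record("model_a", False)
router.record("model_b", True)
choice = router.choose()
```

With a non-zero epsilon, a small share of traffic keeps going to the weaker variant, so the estimates can recover if the early observations were misleading.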

This diagram shows the iterative process to analyze the performance of ML models in online and offline scenarios

Modularity is a key characteristic for modern applications. You can modularize code, infrastructure, and even architecture.

A modular architecture provides a framework that allows each development role to work on its own part of the system, and hides the complexity of integration, security, and environment configuration. This blog post provides an approach to building a modular ML workload that is easy to evolve and maintain across multiple teams.

A modular architecture allows you to easily assemble different parts of the system and replace them when needed

The accuracy of ML models can deteriorate over time because of model drift or concept drift. This is a common challenge when deploying your models to production. Have you ever experienced it? How would you architect a solution to address this challenge?

Without metrics and automated actions, maintaining ML models in production can be overwhelming. This blog post shows you how to design an MLOps pipeline for model monitoring to detect concept drift. You can then expand the solution to automatically launch a new training job after drift is detected, so that the model learns from the new samples and takes into account the changes in the data distribution.

Concept drift happens when there is a shift in the distribution. In this case, the distribution of the newly collected data (in blue) starts differing from the baseline distribution (in green)

Moving from experimentation to production forces teams to move fast and automate their operations. Adopting scalable solutions for MLOps is a fundamental step to successfully create production-oriented ML processes.

This blog post provides an extended walkthrough of the ML lifecycle and explains how to optimize the process using Amazon SageMaker. Starting from data ingestion and exploration, you will see how to train your models and deploy them for inference. Then, you’ll make your operations consistent and scalable by architecting automated pipelines. This post offers a fraud detection use case so you can see how all of this can be used to put ML in production.

The ML lifecycle involves three macro steps: data preparation, training and tuning, and deployment with continuous monitoring

Thanks for reading! We’ll see you in a couple of weeks when we discuss how to secure your workloads in AWS.


Post Syndicated from Talks at Google original https://www.youtube.com/watch?v=Haalls7kWVI

Post Syndicated from Matt Granger original https://www.youtube.com/watch?v=AOh3RLxm5D8

Post Syndicated from Tom Jin original https://aws.amazon.com/blogs/big-data/doing-more-with-less-moving-from-transactional-to-stateful-batch-processing/

Amazon processes hundreds of millions of financial transactions each day, including accounts receivable, accounts payable, royalties, amortizations, and remittances, from over a hundred different business entities. All of this data is sent to the eCommerce Financial Integration (eCFI) systems, where it is recorded in the subledger.

Ensuring complete financial reconciliation at this scale is critical to day-to-day accounting operations. With transaction volumes exhibiting double-digit percentage growth each year, we found that our legacy transactional-based financial reconciliation architecture proved too expensive to scale and lacked the right level of visibility for our operational needs.

In this post, we show you how we migrated to a batch processing system, built on AWS, that consumes time-bounded batches of events. This not only reduced costs by almost 90%, but also improved visibility into our end-to-end processing flow. The code used for this post is available on GitHub.

Our legacy architecture primarily utilized Amazon Elastic Compute Cloud (Amazon EC2) to group related financial events into stateful artifacts. However, a stateful artifact could refer to any persistent artifact, such as a database entry or an Amazon Simple Storage Service (Amazon S3) object.

We found this approach resulted in deficiencies in the following areas:

The following diagram illustrates our legacy architecture.

Our new architecture needed to address the deficiencies while preserving the core goal of our service: update stateful artifacts based on incoming financial events. In our case, a stateful artifact refers to a group of related financial transactions used for reconciliation. We considered the following as part of the evolution of our stack:

In our transactional system, each ingested event results in an update to a stateful artifact. This became a problem when thousands of events came in all at once for the same stateful artifact.

However, by ingesting batches of data, we had the opportunity to create separate stateless and stateful processing components. The stateless component performs an initial reduce operation on the input batch to group together related events. This meant that the rest of our system could operate on these smaller stateless artifacts and perform fewer write operations (fewer operations means lower costs).

The stateful component would then join these stateless artifacts with existing stateful artifacts to produce an updated stateful artifact.

As an example, imagine an online retailer suddenly received thousands of purchases for a popular item. Instead of updating an item database entry thousands of times, we can first produce a single stateless artifact that summarizes the latest purchases. The item entry can now be updated one time with the stateless artifact, reducing the update bottleneck. The following diagram illustrates this process.
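The retailer example can be sketched in Python. The event fields and function names below are hypothetical; the point is only that the stateless step collapses a batch into one summary per item, and the stateful step then applies a single update per item.

```python
from collections import Counter

# Hypothetical input batch: one event per purchase.
batch = [
    {"item_id": "A1", "quantity": 1},
    {"item_id": "A1", "quantity": 2},
    {"item_id": "B7", "quantity": 1},
    {"item_id": "A1", "quantity": 1},
]


def reduce_batch(events):
    """Stateless step: collapse a batch into one summary per item."""
    summary = Counter()
    for event in events:
        summary[event["item_id"]] += event["quantity"]
    return dict(summary)


def apply_summary(state, summary):
    """Stateful step: one update per item instead of one per event."""
    updated = dict(state)
    for item_id, quantity in summary.items():
        updated[item_id] = updated.get(item_id, 0) + quantity
    return updated


stateless_artifact = reduce_batch(batch)
units_sold = apply_summary({"A1": 10}, stateless_artifact)
```

Here four events touch two items, so the stateful step performs two writes instead of four; the saving grows with the number of events per item.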

Unlike traditional extract, transform, and load (ETL) jobs, we didn’t want to perform daily or even hourly extracts. Our accountants need to be able to access the updated stateful artifacts within minutes of data arriving in our system. For instance, if they had manually sent a correction line, they wanted to be able to check within the same hour that their adjustment had the intended effect on the targeted stateful artifact instead of waiting until the next day. As such, we focused on parallelizing the incoming batches of data as much as possible by breaking down the individual tasks of the stateful component into subcomponents. Each subcomponent could run independently of the others, which allowed us to process multiple batches in an assembly line format.

Both the stateless and stateful components needed to respond to shifting traffic patterns and possible input batch backlogs. We also wanted to incorporate serverless compute to better respond to scale while reducing the overhead of maintaining an instance fleet.

This meant we couldn’t simply have a one-to-one mapping between the input batch and stateless artifact. Instead, we built flexibility into our service so the stateless component could automatically detect a backlog of input batches and group multiple input batches together in one job. Similar backlog management logic was applied to the stateful component. The following diagram illustrates this process.

To meet our needs, we combined multiple AWS products:

The following diagram illustrates our current architecture.

The following diagram shows our stateless and stateful workflow.

The AWS CloudFormation template to render this architecture and corresponding Java code is available in the following GitHub repo.

We used an Apache Spark application on a long-running Amazon EMR cluster to simultaneously ingest input batch data and perform reduce operations to produce the stateless artifacts and a corresponding index file for the stateful processing to use.

We chose Amazon EMR for its proven highly available data-processing capability in a production setting and also its ability to horizontally scale when we see increased traffic loads. Most importantly, Amazon EMR had lower cost and better operational support when compared to a self-managed cluster.

Each stateful workflow performs operations to create or update millions of stateful artifacts using the stateless artifacts. Similar to the stateless workflows, all stateful artifacts are stored in Amazon S3 across a handful of Apache Spark part-files. This alone resulted in a huge cost reduction, because we significantly reduced the number of Amazon S3 writes (while using the same amount of overall storage). For instance, storing 10 million individual artifacts using the transactional legacy architecture would cost $50 in PUT requests alone, whereas 10 Apache Spark part-files would cost only $0.00005 in PUT requests (based on $0.005 per 1,000 requests).
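The arithmetic behind those two figures can be checked in a few lines of Python, using only the per-request rate quoted above. `Decimal` is used so that the small amounts are not distorted by floating-point rounding.

```python
from decimal import Decimal

PUT_PRICE_PER_1000 = Decimal("0.005")  # rate quoted in the text above


def put_cost(requests: int) -> Decimal:
    return Decimal(requests) / 1000 * PUT_PRICE_PER_1000


legacy_cost = put_cost(10_000_000)  # one PUT per stateful artifact
batch_cost = put_cost(10)           # one PUT per Spark part-file
```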

However, we still needed a way to retrieve individual stateful artifacts, because any stateful artifact could be updated at any point in the future. To do this, we turned to DynamoDB. DynamoDB is a fully managed and scalable key-value and document database. It’s ideal for our access pattern because we wanted to index the location of each stateful artifact in the stateful output file using its unique identifier as a primary key. For instance, if our artifact represented orders, we would use the order ID (which has high cardinality) as the partition key, and store the file location, byte offset, and byte length of each order as separate attributes. By passing the byte range in Amazon S3 GET requests, we can now fetch individual stateful artifacts as if they were stored independently. We were less concerned about optimizing the number of Amazon S3 GET requests because GET requests are over 10 times cheaper than PUT requests.
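The indexing scheme can be illustrated without any AWS calls. In the sketch below a `bytearray` stands in for one S3 object and a dictionary stands in for the DynamoDB table; the order data, the object key, and the function names are hypothetical.

```python
import json

# Hypothetical orders standing in for stateful artifacts.
orders = {"order-1": {"total": 20}, "order-2": {"total": 35}, "order-3": {"total": 5}}

part_file = bytearray()  # stands in for one S3 object (a Spark part-file)
index = {}               # stands in for the DynamoDB table, keyed by order ID

for order_id, order in orders.items():
    record = json.dumps(order).encode("utf-8")
    index[order_id] = {
        "file": "part-00000",  # hypothetical object key
        "offset": len(part_file),
        "length": len(record),
    }
    part_file += record


def range_header(entry):
    # HTTP byte ranges are inclusive on both ends.
    start = entry["offset"]
    end = start + entry["length"] - 1
    return f"bytes={start}-{end}"


def fetch(order_id):
    # Slicing the buffer plays the role of a ranged GET request.
    entry = index[order_id]
    start, length = entry["offset"], entry["length"]
    return json.loads(bytes(part_file[start:start + length]))


header = range_header(index["order-2"])
order_2 = fetch("order-2")
```

Against real S3, a string in the format produced by `range_header` is what a client would send as the `Range` value of a GET request, so only the bytes of the requested artifact are transferred.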

Overall, this stateful logic was split across three serial subcomponents, which meant that three separate stateful workflows could be operating at any given time.

The following diagram illustrates our pre-fetcher subcomponent.

The pre-fetcher subcomponent uses the stateless index file to retrieve pre-existing stateful artifacts that should be updated. These might be previous shipments for the same customer order, or past inventory movements for the same warehouse. For this, we turn once again to Amazon EMR to perform this high-throughput fetch operation.

Each fetch required a DynamoDB lookup and an Amazon S3 GET partial byte-range request. Due to the large number of external calls, fetches were highly parallelized using a thread pool contained within an Apache Spark flatMap operation. Pre-fetched stateful artifacts were consolidated into an output file that was later used as input to the stateful processing engine.

The following diagram illustrates the stateful processing engine.

The stateful processing engine subcomponent joins the pre-fetched stateful artifacts with the stateless artifacts to produce updated stateful artifacts after applying custom business logic. The updated stateful artifacts are written out across multiple Apache Spark part-files.

Because stateful artifacts could have been indexed at the same time that they were pre-fetched (also called in-flight updates), the stateful processor also joins recently processed Apache Spark part-files.

We again used Amazon EMR here to take advantage of the Apache Spark operations that are required to join the stateless and stateful artifacts.

The following diagram illustrates the state indexer.

This Lambda-based subcomponent records the location of each stateful artifact within the stateful part-file in DynamoDB. The state indexer also caches the stateful artifacts in an Amazon ElastiCache for Redis cluster to provide a performance boost in the Amazon S3 GET requests performed by the pre-fetcher.

However, even with a thread pool, a single Lambda function isn’t powerful enough to index millions of stateful artifacts within the 15-minute time limit. Instead, we employ a cluster of Lambda functions. The state indexer begins with a single coordinator Lambda function, which determines the number of worker functions that are needed. For instance, if 100 part-files are generated by the stateful processing engine, then the coordinator might assign five part-files for each of the 20 Lambda worker functions to work on. This method is highly scalable because we can dynamically assign more or fewer Lambda workers as required.
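The coordinator’s assignment step could look roughly like the following. How the real coordinator picks the number of workers is not described here, so the worker count is simply passed in, and `assign_part_files` is a hypothetical helper.

```python
def assign_part_files(part_files, workers):
    """Split part-files into `workers` nearly equal, contiguous chunks."""
    base, extra = divmod(len(part_files), workers)
    chunks, start = [], 0
    for i in range(workers):
        # The first `extra` workers take one additional file each.
        size = base + (1 if i < extra else 0)
        chunks.append(part_files[start:start + size])
        start += size
    return chunks


part_files = [f"part-{n:05d}" for n in range(100)]
assignments = assign_part_files(part_files, workers=20)
```

With 100 part-files and 20 workers, each worker receives five files, matching the example in the text.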

Each Lambda worker then performs the ElastiCache and DynamoDB writes for all the stateful artifacts within each assigned part-file in a multi-threaded manner. The coordinator function monitors the health of each Lambda worker and restarts workers as needed.

We used Step Functions to coordinate each of the stateless and stateful workflows, as shown in the following diagram.

Every time a new workflow step ran, the step was recorded in a DynamoDB table via a Lambda function. This table not only maintained the order in which stateful batches should be run, but it also formed the basis of the backlog management system, which directed the stateless ingestion engine to group more or fewer input batches together depending on the backlog.

We chose Step Functions for its native integration with many AWS services (including triggering by an Amazon CloudWatch scheduled event rule and adding Amazon EMR steps) and its built-in support for backoff retries and complex state machine logic. For instance, we defined different backoff retry rates based on the type of error.
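As an illustration of per-error backoff, the sketch below builds a `Retry` list in the shape used by the Amazon States Language and computes the wait before each retry. The field names (`ErrorEquals`, `IntervalSeconds`, `MaxAttempts`, `BackoffRate`) come from the States Language; the specific error names and numbers are assumptions, not the values the team used.

```python
import json

# Hypothetical retry policy: throttling errors retry quickly and often,
# timeouts retry slowly, and everything else gets a moderate default.
RETRY_POLICY = [
    {
        "ErrorEquals": ["Lambda.TooManyRequestsException"],
        "IntervalSeconds": 2,
        "MaxAttempts": 6,
        "BackoffRate": 2.0,
    },
    {
        "ErrorEquals": ["States.Timeout"],
        "IntervalSeconds": 30,
        "MaxAttempts": 2,
        "BackoffRate": 1.5,
    },
    {
        "ErrorEquals": ["States.ALL"],  # catch-all, must be listed last
        "IntervalSeconds": 5,
        "MaxAttempts": 3,
        "BackoffRate": 2.0,
    },
]


def retry_delays(retrier):
    """Return the wait in seconds before each retry attempt."""
    interval = retrier.get("IntervalSeconds", 1)
    attempts = retrier.get("MaxAttempts", 3)
    rate = retrier.get("BackoffRate", 2.0)
    return [interval * rate ** n for n in range(attempts)]


for retrier in RETRY_POLICY:
    print(retrier["ErrorEquals"][0], retry_delays(retrier))

# The list can be embedded as the "Retry" field of a Task state.
print(json.dumps({"Retry": RETRY_POLICY}, indent=2))
```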

Our batch-based architecture helped us overcome the transactional processing limitations we originally set out to resolve:

We are particularly excited about the investments we have made moving from a transactional-based system to a batch processing system, especially our shift from using Amazon EC2 to using serverless Lambda and big data Amazon EMR services. This experience demonstrates that even services originally built on AWS can still achieve cost reductions and improve performance by rethinking how AWS services are used.

Inspired by our progress, our team is moving to replace many other legacy services with serverless components. Likewise, we hope that other engineering teams can learn from our experience, continue to innovate, and do more with less.

Find the code used for this post in the following GitHub repository.

Special thanks to the development team: Ryan Schwartz, Abhishek Sahay, Cecilia Cho, Godot Bian, Sam Lam, Jean-Christophe Libbrecht, and Nicholas Leong.

Post Syndicated from original https://lwn.net/Articles/883073/

The PinePhone is a Linux-based smartphone made by PINE64 that runs free and open-source software (FOSS); it is designed to use a close-to-mainline Linux kernel. While many smartphones already use the Linux kernel as part of Android, few run distributions that are actually similar to those used on desktops and laptops. The PinePhone is different, however; it provides an experience that is much closer to normal desktop Linux, though it probably cannot completely replace a full-featured smartphone, at least not yet.

Post Syndicated from original https://lwn.net/Articles/884264/

Version 2.38 of the GNU Binutils tool set has been released. Changes include new hardware support (including for the LoongArch architecture), various Unicode-handling improvements, a new --thin option to ar for the creation of thin archives, and more.

Post Syndicated from BeardedTinker original https://www.youtube.com/watch?v=iCE5Z43EKpk

Post Syndicated from Geographics original https://www.youtube.com/watch?v=5C3TfFBCs4w

Post Syndicated from Rapid7 original https://blog.rapid7.com/2022/02/09/rapid7-team-members-share-key-takeaways-from-amp-2022/

Each year, Rapid7 hosts AMP, our annual employee kickoff event where leaders from across the organization share their goals for the next 12 months. These goals bring us closer to achieving our mission of closing the security achievement gap.

With the effects of COVID-19 still physically separating us, hosting AMP 2022 virtually allowed our people from around the world to maintain a level of togetherness and focus on our shared vision as we move into the new year. While employees throughout the world eagerly attended, we invited some of our newest hires to share their key takeaways.

Patrick’s role as an Enterprise Cloud Sales Specialist requires him to hone his customer service skills to build and strengthen client relationships.

According to Sonou, “We need to speak and understand the customer language, their needs, concerns, and expectations.” AMP 2022 was the perfect opportunity to learn more about the challenges our customers face and how to be a strategic partner by enabling them to advance securely.

“Beyond the technologies, we need to understand our customers deeply and provide the best user experience throughout the life of the collaboration,” says Sonou on the subject. It’s one thing to know the product and have the skills to sell it – it’s another to have a strong understanding of your relationship with the customers who are utilizing our products and services.

Luke Gadomski joins Rapid7 as Director of North American Sales Operations. In his role, Gadomski is committed to creating value for our customers to drive impact. According to Gadomski, “The key elements to accelerating together are partnerships and building trust while aligning in shared goals.” He is well aware of the strong teamwork necessary to create and develop these important customer connections.

A quote that stood out to Gadomski on the last day of AMP came from Rapid7 President and Chief Operating Officer, Andrew Burton: “When we drive forward together with our customers and fellow Moose, we accelerate toward our mission.” This highlights the emphasis on Rapid7’s customer relationships and how cultivating and nurturing those partnerships is closely tied to our overall goals as a company.

Carlie Bower joins the Rapid7 team as Vice President, Engineering Executive in Residence. Through her experience at AMP, Bower noticed that there was an overwhelming presence of community. She recognized the culture that is key to what makes Rapid7 so special.

“We bring our whole selves to work, and that’s why we see so many aspects of our lives and experiences reflected at AMP,” she said. “It’s so exciting to have the opportunity to connect the learning and growth we experience as people. There are lessons in life, teaming, and connection through all of these facets of ourselves, and those provide the foundation for us to do great things together in the workplace. We have incredible potential to make a difference by closing the security gap for customers while having a fulfilling experience together on the journey.”

Bower, along with all Rapid7 Moose, appreciates the culture that brings Rapid7 together and allows career growth both individually and company-wide. Bower believes this success is most reflected in the opportunities our people have to work together to tackle tough problems for customers: “When we thrive working together as one, our customers feel the impact of that cohesion through the amazing experience they have.”

Another new Moose who was equally impressed by the emphasis put on the community was Nancy Li. Li, the current Director of Platform Software Engineering, expanded on her experience, stating, “Good companies take the time to define core values, great companies champion the values so that the employees can remember and demonstrate the values, but rarely have I seen companies like this one where the people at every level live and breathe the values to our core. I felt that. Even in the short month that I’ve been here, especially during AMP, where we had the opportunity to see and hear from leaders and key influencers from all over the company.”

After being in the software industry for 17 years, Li is not new to forums like this. She explains, “Typically, I have seen leaders tend to reflect on the successes of the past and paint beautiful pictures of the future in forums like this, which leaves employees feeling a disconnected sense of reality. What sets AMP apart from others is that the leaders are all very honest about laying out the successes and failures that got us to where we are today and calling out the challenges we need to tackle down the road to succeed in an ever-changing world.” Li described this as being “authentic to the core.”

“AMP has informed me how we got here and left me excited about the future, as I embrace Andrew’s ‘ever curious, never judgmental’ message for 2022 and beyond.”

As a lead-up to the event, Rapid7 employees were encouraged to share personal stories, photos, and videos through Slack, giving tenured employees and new hires the opportunity to create bonds and get excited. The result was an engaging event that aligned with core values and encouraged learning. AMP 2022 was carried out remarkably well and captured the attention of every single team member by utilizing a user-friendly platform, having sessions that aligned with our core values, and finding ways for our employees to continue to learn during and after the conference.

Interested in exploring a new role? We’re hiring! Click here to browse our open jobs at Rapid7.

Post Syndicated from original https://lwn.net/Articles/884242/

Security updates have been issued by CentOS (aide), Debian (connman), Fedora (perl-App-cpanminus and rust-afterburn), Mageia (glibc), Red Hat (.NET 5.0, .NET 6.0, aide, log4j, ovirt-engine, and samba), SUSE (elasticsearch, elasticsearch-kit, kafka, kafka-kit, logstash, openstack-monasca-agent, openstack-monasca-log-metrics, openstack-monasca-log-persister, openstack-monasca-log-transformer, openstack-monasca-persister-java, openstack-monasca-persister-java-kit, openstack-monasca-thresh, openstack-monasca-thresh-kit, spark, spark-kit, venv-openstack-monasca, zookeeper, zookeeper-kit and elasticsearch, elasticsearch-kit, kafka, kafka-kit, logstash, openstack-monasca-agent, openstack-monasca-persister-java, openstack-monasca-persister-java-kit, openstack-monasca-thresh, openstack-monasca-thresh-kit, spark, spark-kit, storm, storm-kit, venv-openstack-monasca, zookeeper, zookeeper-kit), and Ubuntu (bluez, linux, linux-aws, linux-aws-5.4, linux-gcp, linux-gcp-5.4, linux-hwe-5.4, linux-ibm, linux-kvm, linux-oracle, linux-oracle-5.4, nvidia-graphics-drivers-450-server, nvidia-graphics-drivers-470, nvidia-graphics-drivers-470-server, nvidia-graphics-drivers-510, python2.7, and util-linux).

Post Syndicated from The History Guy: History Deserves to Be Remembered original https://www.youtube.com/watch?v=7Q-ZuzcZO-Q

Post Syndicated from Michael Conterio original https://www.raspberrypi.org/blog/learn-to-code-new-free-online-course-scratch-programming/

Are you curious about coding and computer programming but don’t know how to begin? Do you want to help your children at home, or learners in your school, with their digital skills, but you’re not very confident yet? Then our new, free, on-demand online course Introduction to Programming with Scratch is a fun, creative, and colourful starting point for you.

Being able to code can help you do lots of things — from expressing yourself to helping others practice their skills, and from highlighting real-world issues to controlling a robot. Whether you want to get a taste of what coding is about, or you want to learn so that you can support young people, our Introduction to Programming with Scratch course is the perfect place to start if you’ve never tried any coding before.

On this on-demand course, Mark and Vasu from our team will help you take your very first steps on your programming journey.

On the course, you’ll use the programming language Scratch, a beginner-friendly, visual programming language particularly suitable for creating animations and games. All you need is our course and a computer or tablet with a web browser and internet connection that can access the online Scratch editor.

You can code in Scratch without having to memorise and type in commands. Instead, by snapping blocks together, you’ll take control of ‘sprites’, which are characters and objects on the screen that you can move around with the code you create.

As well as learning what you can do with Scratch, you’ll be learning basic programming concepts that are the same for all programming languages. You’ll see how the order of commands is important (sequencing), you’ll make the computer repeat actions (repetition), and you’ll write programs that do different things in different circumstances, for example responding to your user’s actions (selection). Later on, you’ll also make your own reusable code blocks (abstraction).

Throughout the course you’ll learn to make your own programs step by step. In the final week, Mark and Vasu will show you how you can create musical projects and interact with your program using a webcam.

Vasu and Mark will encourage you to share your programs and join the Scratch online community. You will discover how you can explore other people’s Scratch programs for inspiration and support, and how to build on the code they’ve created.

The course starts for the first time on Monday 14 February, but it is available on demand, so you can join it at any time. You’ll get four weeks’ access to the course no matter when you sign up.

For the first four weeks that the course is available, and every three months after that, people from our team will join in to support you and help answer your questions in the comments sections.

If you’re a teacher in England, get free extended access by signing up through Teach Computing here.

You can find more free resources here! These are the newest Scratch pathways on our project site, which you can also share with the young people in your life:

The post Get an easy start to coding with our new free online course appeared first on Raspberry Pi.

Post Syndicated from Bruce Schneier original https://www.schneier.com/blog/archives/2022/02/breaking-245-bit-elliptic-curve-encryption-with-a-quantum-computer.html

Researchers have calculated the quantum computer size necessary to break 256-bit elliptic curve public-key cryptography:

Finally, we calculate the number of physical qubits required to break the 256-bit elliptic curve encryption of keys in the Bitcoin network within the small available time frame in which it would actually pose a threat to do so. It would require 317 × 10⁶ physical qubits to break the encryption within one hour using the surface code, a code cycle time of 1 μs, a reaction time of 10 μs, and a physical gate error of 10⁻³. To instead break the encryption within one day, it would require 13 × 10⁶ physical qubits.

In other words: no time soon. Not even remotely soon. IBM’s largest ever superconducting quantum computer is 127 physical qubits.
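To put the gap in numbers, here is a quick back-of-the-envelope calculation using only the figures quoted above (317 × 10⁶ and 13 × 10⁶ physical qubits required, against a 127-qubit machine). The variable names are illustrative.

```python
# Figures quoted in the post.
QUBITS_ONE_HOUR = 317 * 10**6   # break the key within one hour
QUBITS_ONE_DAY = 13 * 10**6     # break the key within one day
LARGEST_MACHINE = 127           # IBM's largest superconducting machine

ratio_hour = QUBITS_ONE_HOUR / LARGEST_MACHINE
ratio_day = QUBITS_ONE_DAY / LARGEST_MACHINE

print(f"One-hour attack: about {ratio_hour:,.0f} times today's qubit count")
print(f"One-day attack:  about {ratio_day:,.0f} times today's qubit count")
```

Even the slower one-day attack needs a machine roughly five orders of magnitude larger than the one cited.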
