Post Syndicated from original https://lwn.net/Articles/885893/

The 5.16.11, 5.15.25, 5.10.102, 5.4.181, 4.19.231, 4.14.268, and 4.9.303 stable kernel updates have all been released; each contains another set of important fixes.

Post Syndicated from original https://lwn.net/Articles/885886/

OpenSSH 8.9 has been released. This version includes a fix for a “security near miss” and removes support for MD5-hashed passwords. It also includes a new mechanism to restrict the forwarding of keys in ssh-agent, various FIDO improvements, a new “post-quantum” key-exchange algorithm, and more.

Post Syndicated from original https://lwn.net/Articles/885885/

Security updates have been issued by Debian (expat), Fedora (php and vim), Mageia (cpanminus, expat, htmldoc, nodejs, polkit, util-linux, and varnish), Red Hat (389-ds-base, curl, kernel, kernel-rt, openldap, python-pillow, rpm, sysstat, and unbound), Scientific Linux (389-ds-base, kernel, openldap, and python-pillow), and Ubuntu (cyrus-sasl2, linux-oem-5.14, and php7.0).

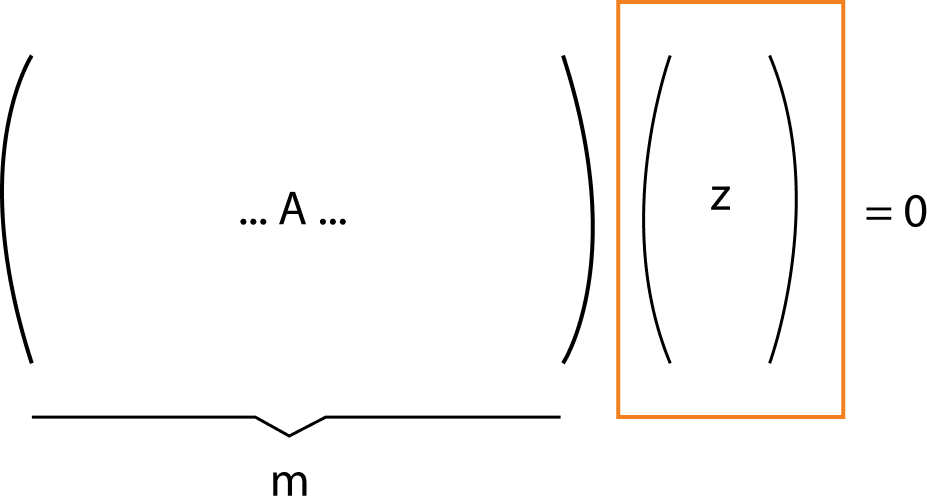

Post Syndicated from Thom Wiggers original https://blog.cloudflare.com/making-protocols-post-quantum/

Ever since the (public) invention of cryptography based on mathematical trap-doors by Whitfield Diffie, Martin Hellman, and Ralph Merkle, the world has had key agreement and signature schemes based on discrete logarithms. Rivest, Shamir, and Adleman invented integer factorization-based signature and encryption schemes a few years later. The core idea, which has perhaps changed the world in ways that are hard to comprehend, is that of public key cryptography. We can give you a piece of information that is completely public (the public key), known to all our adversaries, and yet we can still securely communicate as long as we do not reveal our piece of extra information (the private key). With the private key, we can then efficiently solve mathematical problems that, without the secret information, would be practically unsolvable.

In later decades, there were advancements in our understanding of integer factorization that required us to bump up the key sizes for finite-field based schemes. The cryptographic community largely solved that problem by figuring out how to base the same schemes on elliptic curves. The world has since then grown accustomed to having algorithms where public keys, secret keys, and signatures are just a handful of bytes and the speed is measured in the tens of microseconds. This allowed cryptography to be bolted onto previously insecure protocols such as HTTP or DNS without much overhead in either time or the data transmitted. We previously wrote about how TLS loves small signatures; similar things can probably be said for a lot of present-day protocols.

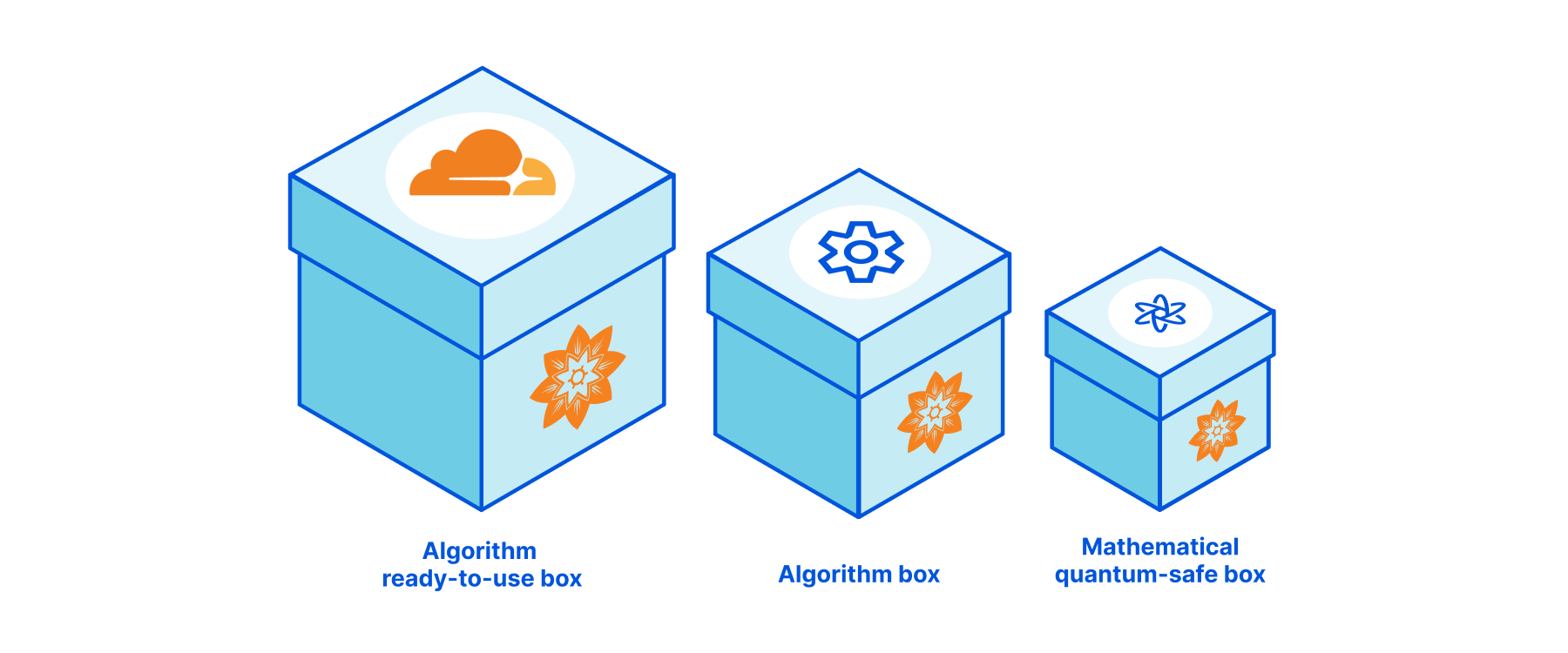

But this blog has “post-quantum” in the title; quantum computers are likely to make our cryptographic lives significantly harder by undermining many of the assurances we previously took for granted. The old schemes are no longer secure because new algorithms can efficiently solve their particular mathematical trapdoors. We, together with everyone on the Internet, will need to swap them out. There are whole suites of quantum-resistant replacement algorithms; however, right now it seems that we need to choose between “fast” and “small”. The new alternatives also do not always have the same properties that we have based some protocols on.

In this blog post, we will discuss how one might upgrade cryptographic protocols to make them secure against quantum computers. We will focus on the cryptography that they use and see what the challenges are in making them secure against quantum computers. We will show how trade-offs might motivate completely new protocols for some applications. We will use TLS here as a stand-in for other protocols, as it is one of the most deployed protocols.

TLS, of SSL and HTTPS fame, gets discussed a lot, so we keep it brief here. TLS 1.3 consists of an ephemeral Elliptic Curve Diffie-Hellman (ECDH) key exchange, which is authenticated by a digital signature that is verified using a public key that the server provides in a certificate. We know that this public key is the right one because the certificate contains another signature by the issuer of the certificate, and our client has a repository of valid issuer (“certificate authority”) public keys that it can use to verify the authenticity of the server’s certificate.

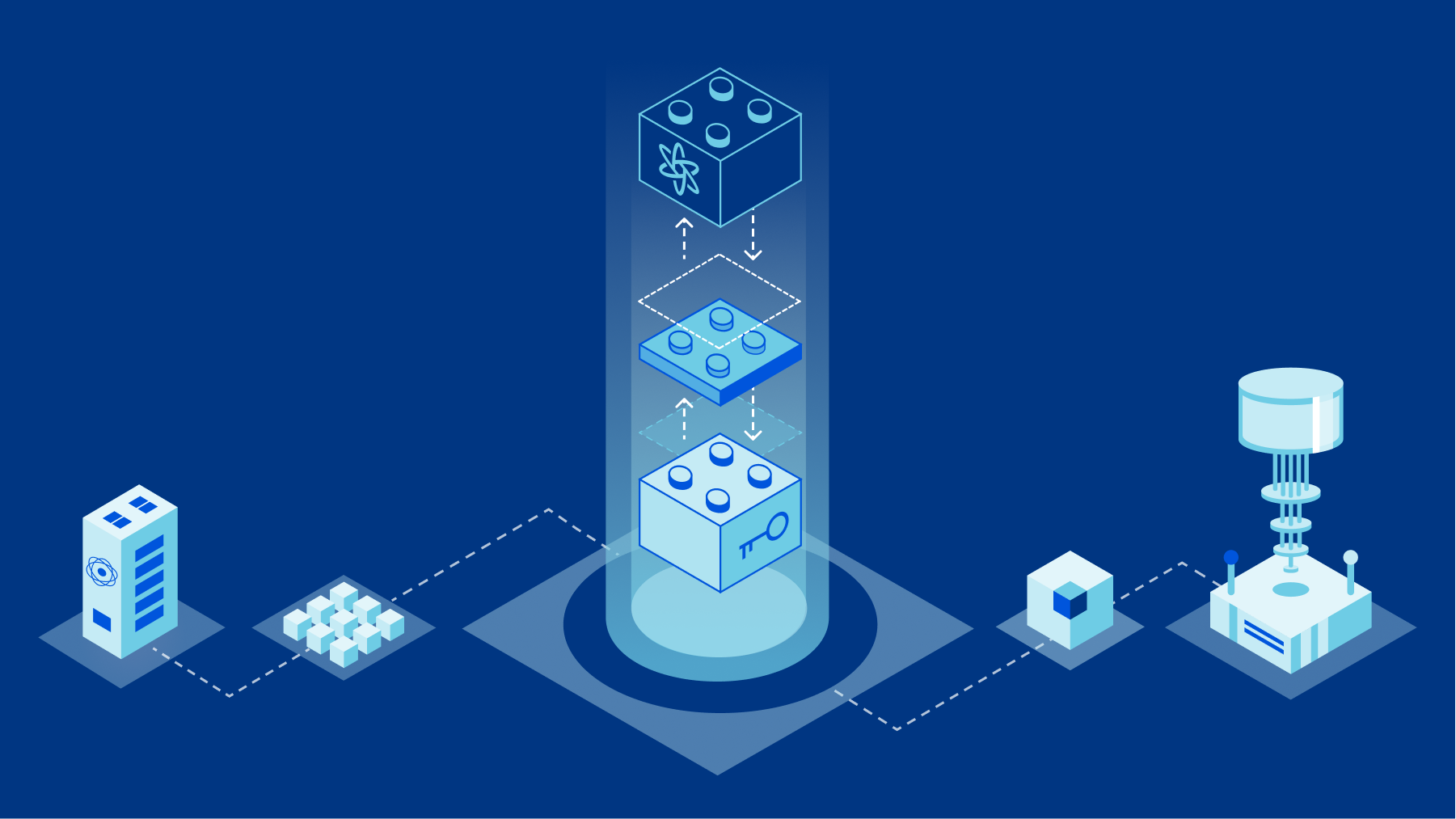

In principle, TLS can become post-quantum straightforwardly: we just write “PQ” in front of the algorithms. We replace ECDH key exchange by post-quantum (PQ) key exchange provided by a post-quantum Key Encapsulation Mechanism (KEM). For the signatures on the handshake, we just use a post-quantum signature scheme instead of an elliptic curve or RSA signature scheme. No big changes to the actual “arrows” of the protocol necessary, which is super convenient because we don’t need to revisit our security proofs. Mission accomplished, cake for everyone, right?

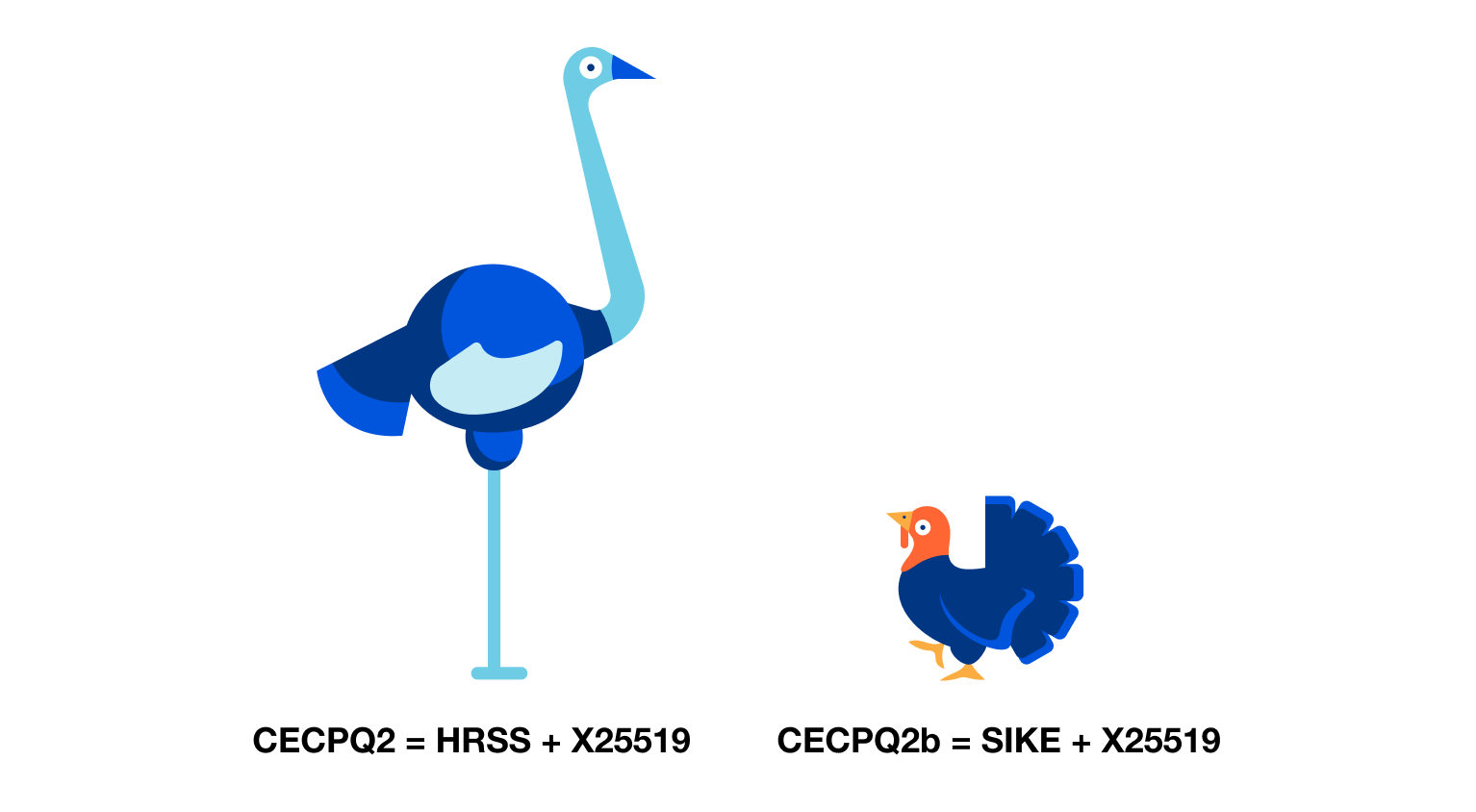

Of course, it’s not so simple. There are nine different suites of post-quantum key exchange algorithms still in the running in round three of the NIST Post-Quantum standardization project: Kyber, SABER, NTRU, and Classic McEliece (the “finalists”); and SIKE, BIKE, FrodoKEM, HQC, and NTRU Prime (“alternates”). These schemes have wildly different characteristics. This means that for step one, replacing the key exchange by post-quantum key exchange, we need to understand the differences between these schemes and decide which one fits best in TLS. Because we’re doing ephemeral key exchange, we consider the size of the public key and the ciphertext since they need to be transmitted for every handshake. We also consider the “speed” of the key generation, encapsulation, and decapsulation operations, because these will affect how many servers we will need to handle these connections.

Table 1: Post-Quantum KEMs at security level comparable with AES128. Sizes in bytes.

| Scheme | Transmission size (pk+ct) | Speed of operations |
|---|---|---|
| Finalists | | |
| Kyber512 | 1,632 | Very fast |
| NTRU-HPS-2048-509 | 1,398 | Very fast |
| SABER (LightSABER) | 1,408 | Very fast |
| Classic McEliece | 261,248 | Very slow |
| Alternate candidates | | |
| SIKEp434 | 676 | Slow |
| NTRU Prime (ntrulpr) | 1,922 | Very fast |
| NTRU Prime (sntru) | 1,891 | Fast |
| BIKE | 5,892 | Slow |
| HQC | 6,730 | Reasonable |
| FrodoKEM | 19,336 | Reasonable |

Fortunately, once we make this table, the landscape for KEMs that are suitable for use in TLS quickly becomes clear. We will have to sacrifice an additional 1,400 to 2,000 bytes, assuming SIKE’s slow runtime is a bigger penalty to the connection establishment (see our previous work here). So we can choose one of the lattice-based finalists (Kyber, SABER or NTRU) and call it a day.¹
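The selection above can be sketched as a small filter over Table 1. The numbers are copied from the table; the type names and the rule that only “Very fast” and “Fast” schemes are acceptable are assumptions made for this illustration, not part of any standard.

```typescript
// Data copied from Table 1 (transmission size = public key + ciphertext, in bytes).
type Speed = "Very fast" | "Fast" | "Reasonable" | "Slow" | "Very slow";

interface Kem {
  name: string;
  transmissionBytes: number;
  speed: Speed;
}

const kems: Kem[] = [
  { name: "Kyber512", transmissionBytes: 1632, speed: "Very fast" },
  { name: "NTRU-HPS-2048-509", transmissionBytes: 1398, speed: "Very fast" },
  { name: "SABER (LightSABER)", transmissionBytes: 1408, speed: "Very fast" },
  { name: "Classic McEliece", transmissionBytes: 261248, speed: "Very slow" },
  { name: "SIKEp434", transmissionBytes: 676, speed: "Slow" },
  { name: "NTRU Prime (ntrulpr)", transmissionBytes: 1922, speed: "Very fast" },
  { name: "NTRU Prime (sntru)", transmissionBytes: 1891, speed: "Fast" },
  { name: "BIKE", transmissionBytes: 5892, speed: "Slow" },
  { name: "HQC", transmissionBytes: 6730, speed: "Reasonable" },
  { name: "FrodoKEM", transmissionBytes: 19336, speed: "Reasonable" },
];

// Assumption for this sketch: an ephemeral TLS key exchange tolerates only
// "Very fast" or "Fast" operations, which rules out SIKE despite its small size.
const tlsCandidates = kems
  .filter((kem) => kem.speed === "Very fast" || kem.speed === "Fast")
  .sort((a, b) => a.transmissionBytes - b.transmissionBytes);

for (const kem of tlsCandidates) {
  console.log(`${kem.name}: ${kem.transmissionBytes} bytes`);
}
```

Under that assumption, what remains are the lattice-based schemes, with per-handshake overheads between 1,398 and 1,922 bytes.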

For our post-quantum signature scheme, we can draw a similar table. In TLS, we generally care about the sizes of public keys and signatures. In terms of runtime, we care about signing and verification times, as key generation is only done once for each certificate, offline. The round three candidates for signature schemes are: Dilithium, Falcon, Rainbow (the three finalists), and SPHINCS+, Picnic, and GeMSS.

Table 2: Post-Quantum signature schemes at security level comparable with AES128 (or smallest parameter set). Sizes in bytes.

| Scheme | Public key size | Signature size | Speed of operations |
|---|---|---|---|
| Finalists | | | |
| Dilithium2 | 1,312 | 2,420 | Very fast |
| Falcon-512 | 897 | 690 | Fast if you have the right hardware |
| Rainbow-I-CZ | 103,648 | 66 | Fast |
| Alternate Candidates | | | |
| SPHINCS+-128f | 32 | 17,088 | Slow |
| SPHINCS+-128s | 32 | 7,856 | Very slow |
| GeMSS-128 | 352,188 | 33 | Very slow |
| Picnic3 | 35 | 14,612 | Very slow |

There are many signatures in a TLS handshake. Aside from the handshake signature that the server creates to authenticate the handshake (with public key in the server certificate), there are signatures on the certificate chain (with public keys for intermediate certificates), as well as OCSP Stapling (1) and Certificate Transparency (2) signatures (without public keys).

This means that if we used Dilithium for all of these, we would require 17 KB of public keys and signatures. Falcon looks very attractive here, requiring only 6 KB, but it might not run fast enough on embedded devices that don’t have special hardware to accelerate 64-bit floating point computations in constant time. SPHINCS+, GeMSS, and Rainbow each have significant deployment challenges, so it seems that there is no one-scheme-fits-all solution.
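To see where these totals come from, here is a rough tally using the sizes in Table 2. The handshake shape is an assumption for this sketch: two public keys on the wire (leaf and one intermediate certificate) and six signatures (handshake, leaf certificate, intermediate certificate, one OCSP staple, and two Certificate Transparency timestamps). Real chains may differ.

```typescript
// Sizes in bytes, copied from Table 2.
interface SignatureScheme {
  name: string;
  publicKeyBytes: number;
  signatureBytes: number;
}

const dilithium2: SignatureScheme = { name: "Dilithium2", publicKeyBytes: 1312, signatureBytes: 2420 };
const falcon512: SignatureScheme = { name: "Falcon-512", publicKeyBytes: 897, signatureBytes: 690 };

// Assumed handshake shape (illustrative, not taken from a specification):
// public keys: leaf certificate + one intermediate certificate
// signatures:  handshake + leaf cert + intermediate cert + 1 OCSP staple + 2 SCTs
const PUBLIC_KEYS_SENT = 2;
const SIGNATURES_SENT = 6;

// Total bytes of authentication data when one scheme is used everywhere.
function handshakeAuthBytes(scheme: SignatureScheme): number {
  return PUBLIC_KEYS_SENT * scheme.publicKeyBytes + SIGNATURES_SENT * scheme.signatureBytes;
}

const dilithiumTotal = handshakeAuthBytes(dilithium2);
const falconTotal = handshakeAuthBytes(falcon512);

console.log(`${dilithium2.name}: ${dilithiumTotal} bytes`); // 17144 bytes, roughly 17 KB
console.log(`${falcon512.name}: ${falconTotal} bytes`);     // 5934 bytes, roughly 6 KB
```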

Picking and choosing specific algorithms for particular use cases, such as using a scheme with short signatures for root certificates, OCSP Stapling, and CT, might help to alleviate the problems a bit. We might use Rainbow for the CA root, OCSP staples, and CT logs, which would mean we only need 66 bytes for each signature. It is very nice that Rainbow signatures are only very slightly larger than 64-byte Ed25519 elliptic curve signatures, and they are significantly smaller than 256-byte RSA-2048 signatures. This gives us a lot of space to absorb the impact of the larger handshake signatures required. For intermediate certificates, where both the public key and the signature are transmitted, we might use Falcon because it’s nice and small, and the client only needs to do signature verification.

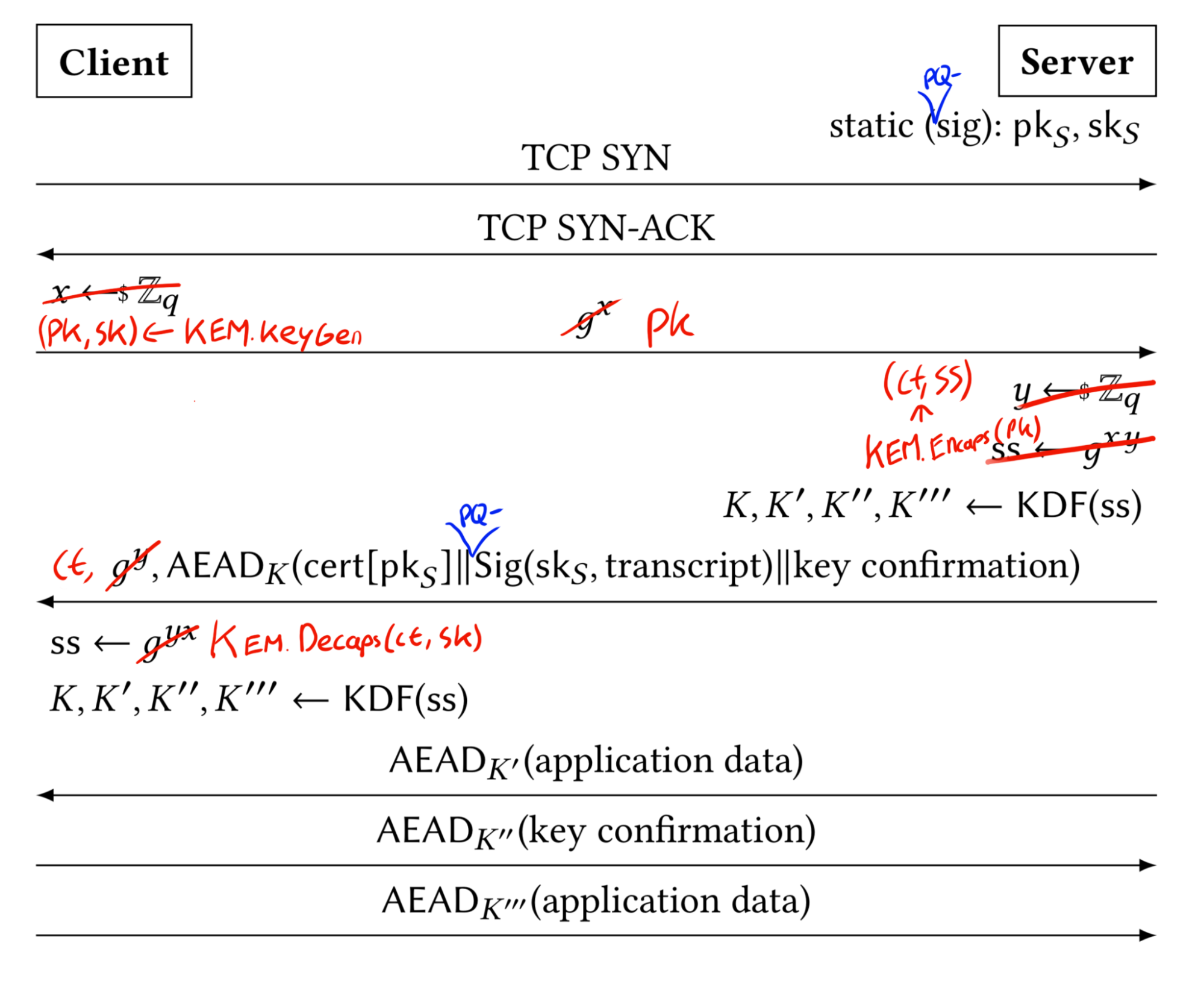

In the pre-quantum world, key exchange and signature schemes used to be roughly equivalent in terms of work required or bytes transmitted. As we saw in the previous section, this doesn’t hold up in the post-quantum world. This means that this might be a good opportunity to also investigate alternatives to the classic “signed key exchange” model. Deploying significant changes to an existing protocol might be harder than just swapping out primitives, but we might gain better characteristics. We will look at such a proposed redesign for TLS here.

The idea is to use key exchange not just for confidentiality, but also for authentication. What a signature in a protocol like TLS actually proves is that the signer possesses the secret key that corresponds to the public key in the certificate. But we can also prove this with a key exchange key, by showing that we can derive the same shared secret (if you want to prove this explicitly, you might do so by computing a Message Authentication Code using the established shared secret).
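A minimal sketch of this idea follows, using Node.js's built-in `node:crypto` module. It makes several assumptions: X25519 Diffie-Hellman stands in for a KEM (so it is not post-quantum), the helper names `encapsulate`, `decapsulate`, and `confirmationTag` are made up for this example, and the raw shared secret is used directly as a MAC key, whereas a real protocol would run it through a key derivation function first.

```typescript
import { createHmac, diffieHellman, generateKeyPairSync, timingSafeEqual, KeyObject } from "node:crypto";

// Stand-in "KEM" built from X25519. Illustration only, NOT post-quantum.
function encapsulate(recipientPublicKey: KeyObject): { ciphertext: KeyObject; sharedSecret: Buffer } {
  const ephemeral = generateKeyPairSync("x25519");
  const sharedSecret = diffieHellman({ privateKey: ephemeral.privateKey, publicKey: recipientPublicKey });
  // The ephemeral public key plays the role of the KEM ciphertext.
  return { ciphertext: ephemeral.publicKey, sharedSecret };
}

function decapsulate(recipientPrivateKey: KeyObject, ciphertext: KeyObject): Buffer {
  return diffieHellman({ privateKey: recipientPrivateKey, publicKey: ciphertext });
}

// Explicit proof of possession: a MAC over the transcript, keyed with the shared secret.
function confirmationTag(sharedSecret: Buffer, transcript: string): Buffer {
  return createHmac("sha256", sharedSecret).update(transcript).digest();
}

// The server's long-term key pair; its public key would be the one in the certificate.
const server = generateKeyPairSync("x25519");
const transcript = "ClientHello|ServerHello|Certificate"; // hypothetical transcript string

// Client: encapsulate to the certified public key and send the ciphertext.
const { ciphertext, sharedSecret: clientSecret } = encapsulate(server.publicKey);

// Server: only the holder of the matching private key derives the same secret.
const serverTag = confirmationTag(decapsulate(server.privateKey, ciphertext), transcript);
const serverAuthenticated = timingSafeEqual(serverTag, confirmationTag(clientSecret, transcript));

// An impostor with a different private key produces a tag that does not verify.
const impostor = generateKeyPairSync("x25519");
const impostorTag = confirmationTag(decapsulate(impostor.privateKey, ciphertext), transcript);
const impostorAuthenticated = timingSafeEqual(impostorTag, confirmationTag(clientSecret, transcript));

console.log({ serverAuthenticated, impostorAuthenticated });
```

No signature is computed during the exchange: the server is authenticated purely by being able to derive the shared secret.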

This isn’t new; many modern cryptographic protocols, such as the Signal messaging protocol, have used such mechanisms. They offer privacy benefits like (offline) deniability. But now we might also use this to obtain a faster or “smaller” protocol.

However, this does not come for free. Because authentication via key exchange (via KEM at least) inherently requires two participants to exchange keys, we need to send more messages. In TLS, this means that the server that wants to authenticate first needs to give the client its public key. The client obviously cannot encapsulate a shared secret to a key it does not know.

We also still need to verify the signatures on the certificate chain, and the signatures for OCSP stapling and Certificate Transparency remain necessary. Because we need to do “offline” verification for those elements of the handshake, it is hard to get rid of those signatures. So we will still need to carefully look at those signatures and pick an algorithm that fits there.

    Client                                        Server
    ClientHello         -------->
                        <--------            ServerHello
                                                   <...>
                        <--------          <Certificate>  ^
    <KEMEncapsulation>                                    | Auth
    {Finished}          -------->                         |
    [Application Data]  -------->                         |
                        <--------             {Finished}  v
    [Application Data]  <------->     [Application Data]

    <msg>: encrypted w/ keys derived from ephemeral KEX (HS)
    {msg}: encrypted w/ keys derived from HS+KEM (AHS)
    [msg]: encrypted w/ traffic keys derived from AHS (MS)

Authentication via KEM in TLS from the AuthKEM proposal

If we put the necessary arrows to authenticate via KEM into TLS it looks something like Figure 2. This is actually a fully-fledged proposal for an alternative to the usual TLS handshake. The academic proposal KEMTLS was published at the ACM CCS conference in 2020; a proposal to integrate this into TLS 1.3 is described in the draft-celi-wiggers-tls-authkem draft RFC.

What this proposal illustrates is that the transition to post-quantum cryptography might motivate, or even require, us to have a brand-new look at what the desired characteristics of our protocol are and what properties we need, like what budget we have for round-trips versus the budget for data transmitted. We might even pick up some properties, like deniability, along the way. For some protocols this is somewhat easy, like TLS; in other protocols there isn’t even a clear idea of where to start (DNSSEC has very tight limits).

We should not wait until NIST has finished standardizing post-quantum key exchange and signature schemes before thinking about whether our protocols are ready for the post-quantum world. For our current protocols, we should investigate how the proposed post-quantum key exchange and signature schemes can be fitted in. At the same time, we might use this opportunity for careful protocol redesigns, especially if the constraints are so tight that it is not easy to fit in post-quantum cryptography. Cloudflare is participating in the IETF and working with partners in both academia and the industry to investigate the impact of post-quantum cryptography and make the transition as easy as possible.

If you want to be a part of the future of cryptography on the Internet, either as an academic or an engineer, be sure to check out our academic outreach or jobs pages.

…..

¹ Of course, it’s not so simple. The performance measurements were done on a beefy MacBook, using AVX2 intrinsics. For stuff like IoT (yes, your smart washing machine will also need to go post-quantum) or a smart card you probably want to add another few columns to this table before making a choice, such as code size, side channel considerations, power consumption, and execution time on your platform.

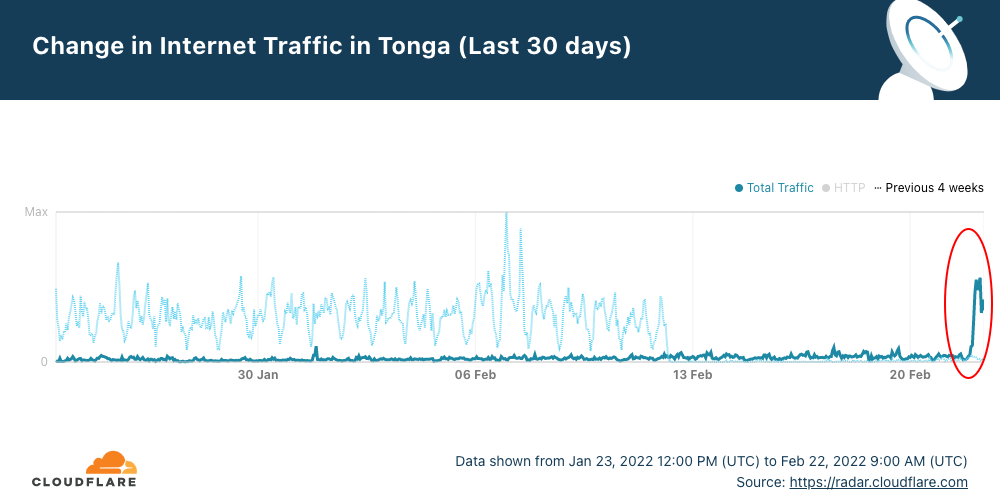

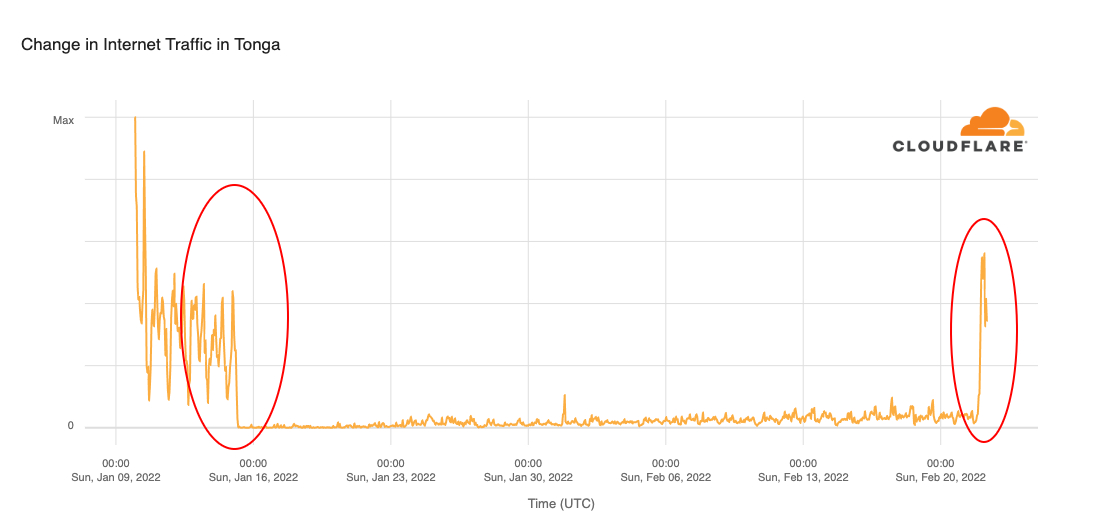

Post Syndicated from Maximilian Wilhelm original https://blog.cloudflare.com/route-leaks-and-confirmation-biases/

This is not what I imagined my first blog article would look like, but here we go.

On February 1, 2022, a configuration error on one of our routers caused a route leak of up to 2,000 Internet prefixes to one of our Internet transit providers. The leak lasted for 32 seconds and, at a later time, recurred for 7 seconds. We did not see any traffic spikes or drops in our network and did not see any customer impact because of this error, but this may have caused an impact to external parties, and we are sorry for the mistake.

All timestamps are UTC.

As part of our efforts to build the best network, we regularly update our Internet transit and peering links throughout our network. On February 1, 2022, we had a “hot-cut” scheduled with one of our Internet transit providers to simultaneously update router configurations on Cloudflare and ISP routers to migrate one of our existing Internet transit links in Newark to a link with more capacity. Doing a “hot-cut” means that both parties will change cabling and configuration at the same time, usually while being on a conference call, to reduce downtime and impact on the network. The migration started off-peak at 10:45 (05:45 local time) with our network engineer entering the bridge call with our data center engineers and remote hands on site as well as operators from the ISP.

At 11:17, we connected the new fiber link and established the BGP sessions to the ISP successfully. We had BGP filters in place on our end to not accept and send any prefixes, so we could evaluate the connection and settings without any impact on our network and services.

As the connection between our router and the ISP, like most Internet connections, was realized over a fiber link, the first item to check is the “light levels” of that link. This shows the strength of the optical signal received by our router from the ISP router and can indicate a bad connection when it’s too low. Low light levels are likely caused by unclean fiber ends or not fully seated connectors, but may also indicate a defective optical transceiver, which connects the fiber link to the router. All of these can degrade service quality.

The next item on the checklist is interface errors. These occur when a network device receives incorrect or malformed network packets; they also indicate a bad connection and would likely degrade service quality.

As light levels were good, and we observed no errors on the link, we deemed it ready for production and removed the BGP reject filters at 11:22.

This immediately triggered the maximum prefix-limit protection the ISP had configured on the BGP session and shut down the session, preventing further impact. The maximum prefix-limit is a safeguard in BGP to prevent the spread of route leaks and to protect the Internet. The limit is usually set just a little higher than the expected number of Internet prefixes from a peer to leave some headroom for growth but also catch configuration errors fast. The configured value was just 40 prefixes short of the number of prefixes we were advertising at that site, so this was considered the reason for the session to be shut down. After checking back internally, we asked the ISP to raise the prefix-limit, which they did.
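The safeguard described above can be modeled in a few lines. This is a simplified sketch, not how any router implements it: the `BgpSession` type, the `receivePrefixes` function, and the numbers (a limit of 100 with 60 expected prefixes) are hypothetical and chosen only for illustration.

```typescript
// Simplified model of a BGP session protected by a maximum prefix-limit.
interface BgpSession {
  maxPrefixes: number;      // limit configured by the receiving side
  receivedPrefixes: number; // prefixes accepted so far
  established: boolean;
}

// Returns the session state after the peer announces `count` more prefixes.
function receivePrefixes(session: BgpSession, count: number): BgpSession {
  if (!session.established) {
    return session; // a session that is already down accepts nothing
  }
  const total = session.receivedPrefixes + count;
  if (total > session.maxPrefixes) {
    // Limit exceeded: tear the session down and drop everything learned from it.
    return { maxPrefixes: session.maxPrefixes, receivedPrefixes: 0, established: false };
  }
  return { maxPrefixes: session.maxPrefixes, receivedPrefixes: total, established: true };
}

// Hypothetical numbers: 60 prefixes expected, limit set slightly higher for headroom.
const fresh: BgpSession = { maxPrefixes: 100, receivedPrefixes: 0, established: true };

const afterNormal = receivePrefixes(fresh, 60); // stays up
const afterLeak = receivePrefixes(fresh, 2000); // torn down immediately

console.log(afterNormal.established, afterLeak.established);
```

The limit reveals that too many prefixes arrived, but not why, which is how a limit that looked too low could be mistaken for the root cause.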

The BGP session was reestablished at 12:08 and immediately shut down again. The problem was identified and fixed at 12:14.

10:45: Start of scheduled maintenance

11:17: New link was connected and BGP sessions went up (filters still in place)

11:22: Link was deemed ready for production and filters removed

11:23: BGP sessions were torn down by ISP router due to configured prefix-limit

12:08: ISP configures higher prefix-limits, BGP sessions briefly come up again and are shut down

12:14: Issue identified and configuration updated

The outage occurred while migrating one of our Internet transits to a link with more capacity. Once the new link and a BGP session had been established, and the link deemed error-free, our network engineering team followed the peer-reviewed deployment plan. The team removed from the BGP sessions the filters that had prevented the Cloudflare router from accepting and sending prefixes via BGP.

Due to an oversight in the deployment plan, which had been peer-reviewed before without noticing this issue, no BGP filters to only export prefixes of Cloudflare and our customers were added. A peer review on the internal chat did not notice this either, so the network engineer performing this change went ahead.

    ewr02# show |compare
    [edit protocols bgp group 4-ORANGE-TRANSIT]
    -  import REJECT-ALL;
    -  export REJECT-ALL;
    [edit protocols bgp group 6-ORANGE-TRANSIT]
    -  import REJECT-ALL;
    -  export REJECT-ALL;

The change resulted in our router sending all known prefixes to the ISP router, which shut down the session as the number of prefixes received exceeded the maximum prefix-limit configured.

As the configured values for the maximum prefix-limits turned out to be rather low for the number of prefixes on our network, this didn’t come as a surprise to our network engineering team and no investigation into why the BGP session went down was started. The prefix-limit being too low seemed to be a perfectly valid reason.

We asked the ISP to increase the prefix-limit, which they did after they received approval on their side. Once the prefix-limit had been increased and the previously shut-down BGP sessions reset, the sessions were reestablished but were shut down immediately as the maximum prefix-limit was triggered again. This is when our network engineer started questioning whether there was another issue at fault, and found and corrected the configuration error previously overlooked.

We made the following change in response to this event: we introduced an implicit reject policy for BGP sessions which will take effect if no import/export policy is configured for a specific BGP neighbor or neighbor group. This change has been deployed.
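The effect of an implicit reject can be illustrated with a small model. It is a sketch of the behavior only, not of any vendor's implementation; `prefixesToAdvertise`, `ExportPolicy`, and the prefixes (taken from the documentation address ranges) are hypothetical.

```typescript
// An export policy decides, per prefix, whether it may be advertised to a neighbor.
type ExportPolicy = (prefix: string) => boolean;

// With an implicit reject, a neighbor without an export policy receives nothing,
// instead of receiving every prefix the router knows about.
function prefixesToAdvertise(knownPrefixes: string[], exportPolicy?: ExportPolicy): string[] {
  if (exportPolicy === undefined) {
    return []; // no policy configured: reject everything by default
  }
  return knownPrefixes.filter(exportPolicy);
}

// Hypothetical routing table: one prefix of our own and two learned from others.
const ownPrefixes = ["192.0.2.0/24"];
const knownPrefixes = ["192.0.2.0/24", "198.51.100.0/24", "203.0.113.0/24"];
const onlyOwn: ExportPolicy = (prefix) => ownPrefixes.indexOf(prefix) !== -1;

const withoutPolicy = prefixesToAdvertise(knownPrefixes);       // nothing leaks
const withPolicy = prefixesToAdvertise(knownPrefixes, onlyOwn); // only our own prefix

console.log(withoutPolicy, withPolicy);
```

Had this default been in effect, removing the REJECT-ALL filters without adding an export policy would have resulted in no advertisements rather than a leak.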

Route leaks aren’t new, and they keep happening. The industry has come up with many approaches to limit the impact or even prevent route-leaks. Policies and filters are used to control which prefixes should be exported to or imported from a given peer. RPKI can help to make sure only allowed prefixes are accepted from a peer and a maximum prefix-limit can act as a last line of defense when everything else fails.

BGP policies and filters are commonly used to ensure only explicitly allowed prefixes are sent out to BGP peers, usually only allowing prefixes owned by the entity operating the network and its customers. They can also be used to tweak some knobs (BGP local-pref, MED, AS path prepend, etc.) to influence routing decisions and balance traffic across links. This is what the policies we have in place for our peers and transits do. As explained above, the maximum prefix-limit is intended to tear down BGP sessions if more prefixes are being sent or received than expected. We have talked about RPKI before: it is the required cryptographic upgrade to BGP routing, and we are still on our path to securing Internet routing.

To improve the overall stability of the Internet even more, in 2017, a new Internet standard was proposed, which adds another layer of protection into the mix: RFC8212 defines Default External BGP (EBGP) Route Propagation Behavior without Policies which pretty much tackles the exact issues we were facing.

This RFC updates the BGP-4 standard (RFC4271) which defines how BGP works and what vendors are expected to implement. On the Juniper operating system, JunOS, this can be activated by setting defaults ebgp no-policy reject-always on the protocols bgp hierarchy level starting with Junos OS Release 20.3R1.

If you are running an older version of JunOS, a similar effect can be achieved by defining a REJECT-ALL policy and setting this as import/export policy on the protocols bgp hierarchy level. Note that this will also affect iBGP sessions, which the solution above will have no impact on.

    policy-options {
        policy-statement REJECT-ALL {
            then reject;
        }
    }
    protocols {
        bgp {
            import REJECT-ALL;
            export REJECT-ALL;
        }
    }

We are sorry for leaking routes of prefixes which did not belong to Cloudflare or our customers and to network engineers who got paged as a result of this.

We have processes in place to make sure that changes to our infrastructure are reviewed before being executed, so potential issues can be spotted before they reach production. In this case, the review process failed to catch this configuration error. In response, we will increase our efforts to further our network automation, to fully derive the device configuration from an intended state.

While this configuration error was caused by human error, it could have been detected and mitigated significantly faster had confirmation bias not kicked in, making the operator think the observed behavior was to be expected. This error underlines the importance of our existing efforts to train our people to be aware of the biases we all have. This also serves as a great example of how confirmation bias can influence and impact our work, and of why we should question our conclusions early.

It also shows how important protocols like RPKI are. Route leaks are something even experienced network operators can cause accidentally, and technical solutions are needed to reduce the impact of leaks whether they are intentional or the result of an error.

Post Syndicated from The History Guy: History Deserves to Be Remembered original https://www.youtube.com/watch?v=3Ej0RFQUMLk

Post Syndicated from Bruce Schneier original https://www.schneier.com/blog/archives/2022/02/bypassing-apples-airtag-security.html

A Berlin-based company has developed an AirTag clone that bypasses Apple’s anti-stalker security systems. Source code for these AirTag clones is available online.

So now we have several problems with the system. Apple’s anti-stalker security only works with iPhones. (Apple wrote an Android app that can detect AirTags, but how many people are going to download it?) And now non-AirTags can piggyback on Apple’s system without triggering the alarms.

Apple didn’t think this through nearly as well as it claims to have. I think the general problem is one that I have written about before: designers just don’t have intimate threats in mind when building these systems.

Post Syndicated from Eric Johnson original https://aws.amazon.com/blogs/compute/building-typescript-projects-with-aws-sam-cli/

This post is written by Dan Fox, Principal Specialist Solutions Architect, and Roman Boiko, Senior Specialist Solutions Architect.

The AWS Serverless Application Model (AWS SAM) CLI provides developers with a local tool for managing serverless applications on AWS. This command line tool allows developers to initialize and configure applications, build and test locally, and deploy to the AWS Cloud. Developers can also use AWS SAM from IDEs like Visual Studio Code, JetBrains, or WebStorm. TypeScript is a superset of JavaScript and adds static typing, which reduces errors during development and runtime.

On February 22, 2022 we announced the beta of AWS SAM CLI support for TypeScript. These improvements simplify TypeScript application development by allowing you to build and deploy serverless TypeScript projects using AWS SAM CLI commands. To install the latest version of the AWS SAM CLI, refer to the installation section of the AWS SAM page.

In this post, I initialize a TypeScript project using an AWS SAM template. Then I build a TypeScript project using the AWS SAM CLI. Next, I use AWS SAM Accelerate to speed up the development and test iteration cycles for your TypeScript project. Last, I measure the impact of bundling, tree shaking, and minification on deployment package size.

This walkthrough requires:

AWS SAM now provides the capability to create a sample TypeScript project using a template. Since this feature is still in preview, you can enable this by one of the following methods:

    [default.build.parameters]
    beta_features = true
    [default.sync.parameters]
    beta_features = true

Use the --beta-features option with sam build and sam sync. I use this approach in the following examples.

To create a new project:

Run sam init.

Open the created project in a text editor. In the root, you see a README.MD file with the project description and a template.yaml. This is the specification that defines the serverless application.

In the hello-world folder is an app.ts file written in TypeScript. This project also includes a unit test in Jest and sample configurations for ESLint, Prettier, and TypeScript compilers.

Project structure

Previously, to use TypeScript with AWS SAM CLI, you needed custom steps. These transform the TypeScript project into a JavaScript project before running the build.

Today, you can use the sam build command to transpile code from TypeScript to JavaScript. This bundles local dependencies and symlinks, and minifies files to reduce asset size.

AWS SAM uses the popular open source bundler esbuild to perform these tasks. This does not perform type checking but you may use the tsc CLI to perform this task. Once you have built the TypeScript project, use the sam deploy command to deploy to the AWS Cloud.

The following shows how this works.

Run sam build. This command uses esbuild to transpile and package app.ts.

Run sam deploy --guided to deploy the application to your AWS account.

AWS SAM Accelerate is a set of features that reduces development and test cycle latency by enabling you to test code quickly against AWS services in the cloud. AWS SAM Accelerate released beta support for TypeScript. Use the template from the last example to use SAM Accelerate with TypeScript.

Use AWS SAM Accelerate to build and deploy your code upon changes.

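Run sam sync --stack-name sam-app --watch. Then update the handler function in app.ts and save the change, for example:

    import { APIGatewayProxyEvent, APIGatewayProxyResult } from 'aws-lambda';

    // Returns a 200 response with a greeting, or a 500 response if anything throws.
    export const lambdaHandler = async (event: APIGatewayProxyEvent): Promise<APIGatewayProxyResult> => {
        let response: APIGatewayProxyResult;
        try {
            response = {
                statusCode: 200,
                body: JSON.stringify({
                    message: 'hello SAM',
                }),
            };
        } catch (err) {
            console.log(err);
            response = {
                statusCode: 500,
                body: JSON.stringify({
                    message: 'some error happened',
                }),
            };
        }
        return response;
    };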

AWS SAM Accelerate output

One additional benefit of the TypeScript build process is that it reduces your deployment package size through bundling, tree shaking, and minification. The bundling process removes dependency files not referenced in the control flow. Tree shaking is the term used for unused code elimination. It is a compiler optimization that removes unreachable code within files.

Minification reduces file size by removing white space, rewriting syntax to be more compact, and renaming local variables to be shorter. The sam build process performs bundling and tree shaking by default. Configure minification, a feature typically used in production environments, within the Metadata section of the template.yaml file.

Measure the impact of these optimizations by the reduced deployment package size. For example, measure the before and after size of an application, which includes the AWS SDK for JavaScript v3 S3 Client as a dependency.

To begin, change the package.json file to include the @aws-sdk/client-s3 as a dependency:

npm install @aws-sdk/client-s3

package.json contents

npm install

du -sh hello-world

The current application is approximately 50 MB.

sam build

du -sh .aws-sam

The new package size is approximately 2.8 MB. That represents a 94% reduction in uncompressed application size.
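As a quick check of that figure, the reduction can be computed from the two approximate sizes reported by du. The `reductionPercent` helper is a made-up name for this sketch.

```typescript
// Percentage by which the package shrank, given sizes before and after the build.
function reductionPercent(beforeMb: number, afterMb: number): number {
  return ((beforeMb - afterMb) / beforeMb) * 100;
}

// Approximate sizes from the walkthrough: 50 MB before, 2.8 MB after sam build.
const reduction = reductionPercent(50, 2.8);

console.log(`${Math.round(reduction)}% smaller`); // prints "94% smaller"
```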

This post reviews several new features that can improve the development experience for TypeScript developers. I show how to create a sample TypeScript project using sam init. I build and deploy a TypeScript project using the AWS SAM CLI. I show how to use AWS SAM Accelerate with your TypeScript project. Last, I measure the impact of bundling, tree shaking, and minification on a sample project. We invite the serverless community to help improve AWS SAM. AWS SAM is an open source project and you can contribute to the repository here.

For more serverless content, visit Serverless Land.

Post Syndicated from original https://xkcd.com/2585/

Post Syndicated from original https://lwn.net/Articles/885682/

Regular expressions are a common feature of computer languages, especially higher-level languages like Ruby, Perl, Python, and others, for doing fairly sophisticated text-pattern matching. Some languages, including Perl, incorporate regular expressions into the language itself, while others have classes or libraries that come with the language installation. Python’s standard library has the re module, which provides facilities for working with regular expressions; as a recent discussion on the python-ideas mailing list shows, though, that module has somewhat fallen by the wayside in recent times.

Post Syndicated from Alexis Robinson original https://aws.amazon.com/blogs/security/aws-achieves-fedramp-p-ato-for-15-services-in-the-aws-us-east-west-and-aws-govcloud-us-regions/

AWS is pleased to announce that 15 additional AWS services have achieved Provisional Authority to Operate (P-ATO) from the Federal Risk and Authorization Management Program (FedRAMP) Joint Authorization Board (JAB).

AWS is continually expanding the scope of our compliance programs to help customers use authorized services for sensitive and regulated workloads. AWS now offers 111 AWS services authorized in the AWS US East/West Regions under FedRAMP Moderate Authorization, and 91 services authorized in the AWS GovCloud (US) Regions under FedRAMP High Authorization.

Figure 1. Newly authorized services list

These additional AWS services now provide the following capabilities for the U.S. federal government and customers with regulated workloads:

The following services are now listed on the FedRAMP Marketplace and the AWS Services in Scope by Compliance Program page.

| Service | FedRAMP Moderate in AWS US East/West | FedRAMP High in AWS GovCloud (US) |
| --- | --- | --- |
| Amazon Detective | ✓ | |
| Amazon FSx for Lustre | ✓ | ✓ |
| Amazon FSx for Windows File Server | ✓ | ✓ |
| Amazon Kendra | ✓ | |
| Amazon Keyspaces (for Apache Cassandra) | ✓ | |
| Amazon Lex | ✓ | |
| Amazon Macie | ✓ | |
| Amazon MQ | ✓ | |
| AWS CloudHSM | ✓ | |
| AWS Cloud Map | ✓ | |
| AWS Glue DataBrew | ✓ | |
| AWS Outposts | ✓ | ✓ |
| AWS Resource Groups | ✓ | |
| AWS Snowmobile | ✓ | |
| AWS Transfer Family | ✓ | ✓ |

To learn what other public sector customers are doing on AWS, see our Government, Education, and Nonprofits Case Studies and Customer Success Stories. Stay tuned for future updates on our Services in Scope by Compliance Program page. Let us know how this post will help your mission by reaching out to your AWS Account Team. Lastly, if you have feedback about this blog post, let us know in the Comments section.

Want more AWS Security news? Follow us on Twitter.

Post Syndicated from Dmitriy Novikov original https://aws.amazon.com/blogs/security/fine-tune-and-optimize-aws-waf-bot-control-mitigation-capability/

A few years ago at Sydney Summit, I had an excellent question from one of our attendees. She asked me to help her design a cost-effective, reliable, and not overcomplicated solution for protection against simple bots for her web-facing resources on Amazon Web Services (AWS). I remember the occasion because with the release of AWS WAF Bot Control, I can now address the question with an elegant solution. The Bot Control feature now makes this a matter of switching it on to start filtering out common and pervasive bots that generate over 50 percent of the traffic against typical web applications.

Reduce Unwanted Traffic on Your Website with New AWS WAF Bot Control introduced AWS WAF Bot Control and some of its capabilities. That blog post covers everything you need to know about where to start and what elements it uses for configuration and protection. This post unpacks closely-related functionalities, and shares key considerations, best practices, and how to customize for common use cases. Use cases covered include:

Before moving on to precise configuration of the bot mitigation capability, it is important to understand the components that go into the process.

Although labels aren’t unique to Bot Control, the feature takes advantage of them, and many configurations use labels as the main input. A label is a string value that is applied to a request based on matching a rule statement. One way of thinking about them is as tags that belong to the specific request. The request acquires them after being processed by a rule statement, and can be used as identification of similar requests in all subsequent rules within the same web ACL. Labels enable you to act on a group of requests that meets specific criteria. That’s because the subsequent rules in the same web ACL have access to the generated labels and can match against them.

Labels go beyond just a mechanism for matching a rule. Labels are independent of a rule’s action, as they can be generated for Block, Allow, and Count. That opens up opportunities to filter or construct queries against records in AWS WAF logs based on labels, and so implement sophisticated analytics.

A label is a string made up of a prefix, optional namespace, and a name delimited by a colon. For example: prefix:[namespace:]name. The prefix is automatically added by AWS WAF.
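As a rough illustration of that structure, the following Python sketch splits a Bot Control label into its parts. The helper name `parse_label` and the `ParsedLabel` type are hypothetical and not part of any AWS SDK, and the default prefix is an assumption taken from the Bot Control label strings quoted in this post.

```python
from typing import List, NamedTuple

# Assumed prefix, taken from the Bot Control labels shown in this post.
BOT_CONTROL_PREFIX = "awswaf:managed:aws:bot-control"


class ParsedLabel(NamedTuple):
    prefix: str
    namespaces: List[str]  # zero or more namespaces between prefix and name
    name: str


def parse_label(label: str, prefix: str = BOT_CONTROL_PREFIX) -> ParsedLabel:
    """Split a 'prefix:[namespace:]name' label into prefix, namespaces and name."""
    if not label.startswith(prefix + ":"):
        raise ValueError(f"label does not start with {prefix!r}: {label!r}")
    parts = label[len(prefix) + 1:].split(":")
    if not parts[-1]:
        raise ValueError(f"label has an empty name: {label!r}")
    return ParsedLabel(prefix, parts[:-1], parts[-1])


parsed = parse_label("awswaf:managed:aws:bot-control:bot:category:search_engine")
print(parsed.namespaces, parsed.name)
```

Splitting labels this way can be handy when you group log records by namespace (for example, everything under `bot:category`) rather than by full label.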

AWS WAF Bot Control includes various labels and namespaces:

By default, verified bots are not blocked by Bot Control, but you can use a label to block them with a custom rule.

These labels are added through managed bot detection logic, and Bot Control uses them to perform the following:

Known bot categorization: Comparing the request user-agent to known bots to categorize and allow customers to block by category. Bots are categorized by their function, such as scrapers, search engines, social media.

Bot confirmation: Most respectable bots provide a way to validate beyond the user-agent, typically by doing a reverse DNS lookup of the IP address to confirm the validity of domain and host names. These automatic checks will help you to ensure that only legitimate bots are allowed, and provide a signal to flag requests to downstream systems for bot detection.

Header validation: Request headers validation is performed against a series of checks to look for missing headers, malformed headers, or invalid headers.

Browser signature matching: TLS handshake data and request headers can be deconstructed and partially recombined to create a browser signature that identifies browser and OS combinations. This signature can be validated against the user-agent to confirm they match, and checked against lists of known-good and known-bad browser signatures.

Below are a few examples of labels that Bot Control has. You can obtain the full list by calling the DescribeManagedRuleGroup API.

awswaf:managed:aws:bot-control:bot:category:search_engine

awswaf:managed:aws:bot-control:bot:name:scrapy

awswaf:managed:aws:bot-control:bot:verified

awswaf:managed:aws:bot-control:signal:non_browser_user_agent

Although Bot Control can be enabled and start protecting your web resources with the default Block action, you can switch all rules in the rule group into a Count action at the beginning. This accomplishes the following:

Labels can be looked up in Amazon CloudWatch metrics and AWS WAF logs, and as soon as you have them, you can start planning whether exceptions or any custom rules are needed to cater for a specific scenario. This blog post explores examples of such use cases in the Common use cases sections below.

Additionally, as AWS WAF processes rules in sequential order, you should consider where the Bot Control rule group is located in your web ACL. To filter out requests that you confidently consider unwanted, you can place AWS Managed Rules rule groups—such as the Amazon IP reputation list—before the Bot Control rule group in the evaluation order. This decreases the number of requests processed by Bot Control, and makes it more cost effective. Simultaneously, Bot Control should be early enough in the rules to:

AWS WAF Bot Control fine-tuning wouldn’t be complete and configurable without a set of recently released features and capabilities of AWS WAF. Let’s unpack them.

Generated labels produce CloudWatch metrics and are recorded in AWS WAF logs. This enables you to see which bots and categories hit your website, along with the associated labels that you can use for fine-tuning.

CloudWatch metrics are generated with the following dimensions and metrics.

AWS WAF includes a shortcut to associated CloudWatch metrics at the top of the Overview page, as shown in Figure 1.

Figure 1: Title and description of the chart in AWS WAF with a shortcut to CloudWatch

Alternatively, you can find them in the WAFV2 service category of the CloudWatch Metrics section.

CloudWatch displays generated labels and the volume across dates and times, so you can evaluate and make informed decisions to structure the rules or address false positives. Figure 2 illustrates what labels were generated for requests from bots that hit my website. In this example, only a couple of explicit Allow actions were configured, so most of the requests were blocked. The top section of Figure 2 shows the load from two selected labels.

Figure 2: WAFV2 CloudWatch metrics for generated Label Namespaces

In AWS WAF logs, generated labels are included in an array under the field labels. Figure 3 shows an example request with the labels array at the bottom.

Figure 3: An example of an AWS WAF log record

This example shows three labels generated for the same request. Uptimerobot follows the monitoring category label, and combining these two labels is useful to provide flexibility for configurations based on them. You can use the whole category, or be laser-focused using the label of the specific bot. You will see how and why that matters later in this blog post. The third label, non_browser_user_agent, is a signal of forwarded requests that have extra headers. For protection from bots in conjunction with labels, you can construct extra scanning in your application for certain requests.
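To make the log-based analysis more concrete, here is a minimal Python sketch that counts how often each label appears across log records. It assumes one JSON object per line and that each entry in the labels array is an object with a `name` key. The function name `count_labels` and the trimmed sample records are hypothetical; real records carry many more fields.

```python
import json
from collections import Counter
from typing import Iterable


def count_labels(log_lines: Iterable[str]) -> Counter:
    """Count label occurrences across AWS WAF log records (one JSON object per line)."""
    counts: Counter = Counter()
    for line in log_lines:
        record = json.loads(line)
        # Requests that matched no labelling rule have no 'labels' field at all.
        for label in record.get("labels", []):
            counts[label["name"]] += 1
    return counts


# Hypothetical, heavily trimmed records for illustration only.
sample_lines = [
    json.dumps({
        "action": "BLOCK",
        "labels": [
            {"name": "awswaf:managed:aws:bot-control:bot:category:monitoring"},
            {"name": "awswaf:managed:aws:bot-control:bot:name:uptimerobot"},
            {"name": "awswaf:managed:aws:bot-control:signal:non_browser_user_agent"},
        ],
    }),
    json.dumps({"action": "ALLOW"}),
]

label_counts = count_labels(sample_lines)
for name, seen in label_counts.most_common():
    print(seen, name)
```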

Given that Bot Control is a premium feature, offered as a paid AWS Managed Rules rule group, the ability to keep your costs under control is crucial. The scope-down statement allows you to optimize for cost by filtering out any traffic that doesn’t require inspection by Bot Control.

To address this goal, you can use scope-down statements, which apply to two broad scenarios.

You can exclude certain parts of your resource from scanning by Bot Control. Think of parts of your website that you don’t mind being accessed by bots. Typically, that would be static content, such as images and CSS files. This leaves protection on everything else, such as APIs and login pages. You can also exclude IP ranges that can be considered safe from bot management. For example, traffic that’s known to come from your organization or viewers that belong to your partners or customers.

Alternatively, you can look at this from a different angle, and only apply bot management to a small section of your resources. For example, you can use Bot Control to protect a login page, or certain sensitive APIs, leaving everything else outside of your bot management.

With all of these tools in our toolkit let’s put them into perspective and dive deep into use cases and scenarios.

There are several methods for fine tuning Bot Control to better meet your needs. In this section, you’ll see some of the methods you can use.

In some cases, it is necessary to allow bots access to your websites. A good example is search engine bots, which crawl the web and create an index. If optimization for search engines is important for your business, but you notice excessive load from too many requests hitting your web resource, you might face a dilemma of how to slow crawlers down without unnecessarily blocking them. You can solve this with a combination of Bot Control detection logic and a rate-based rule with a response status code and header to communicate your intention back to crawlers. Most crawlers that are deemed useful have a built-in mechanism to decrease their crawl rate when you detect and respond to increased load.

Note: The rate limit is the maximum number of requests allowed from a single IP address in a five-minute period.

awswaf:managed:aws:bot-control:bot:verified

awswaf:managed:aws:bot-control:bot:category:scraping_framework

awswaf:managed:aws:bot-control:bot:name:Googlebot

Figure 4: Label match rule statement in a rule builder with a specific match key

Figure 5: AWS WAF rule action with a custom response code

With the preceding configuration, Bot Control sets required labels, which you then use in the scope-down statement in a rate-based rule to not only establish a ceiling of how many requests you will allow from specific bots, but also communicate to bots when their crawling rate is too high. If they don’t respect the response and lower their rate, the rule will temporarily block them, protecting your web resource from being overwhelmed.

Note: If you use a category label, such as scraping_framework, all bots that have that label will be counted by your rate-based rule. To avoid unintentional blocking of bots that use the same label, you can either narrow down to a specific bot with a precise bot:name: label, or select a higher rate limit to allow a greater margin for the aggregate.
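The configuration described above could look roughly like the following rule, built here as a Python dictionary in the shape of the AWS WAF JSON rule format. The rule name, priority, metric name, the limit of 500, the 429 status code, and the Retry-After header are all assumptions chosen for illustration; pick the values that suit your application.

```python
import json


def rate_limit_rule(label_key: str, limit: int, retry_after_seconds: int) -> dict:
    """Build a rate-based rule that only counts requests carrying the given label."""
    return {
        "Name": "rate-limit-labelled-bots",  # hypothetical rule name
        "Priority": 10,  # assumed; must be evaluated after the Bot Control rule group
        "Statement": {
            "RateBasedStatement": {
                "Limit": limit,  # requests per five-minute period, per IP address
                "AggregateKeyType": "IP",
                "ScopeDownStatement": {
                    "LabelMatchStatement": {"Scope": "LABEL", "Key": label_key}
                },
            }
        },
        "Action": {
            "Block": {
                "CustomResponse": {
                    "ResponseCode": 429,  # assumed status code: Too Many Requests
                    "ResponseHeaders": [
                        {"Name": "Retry-After", "Value": str(retry_after_seconds)}
                    ],
                }
            }
        },
        "VisibilityConfig": {
            "SampledRequestsEnabled": True,
            "CloudWatchMetricsEnabled": True,
            "MetricName": "rate-limit-labelled-bots",  # hypothetical metric name
        },
    }


rule = rate_limit_rule(
    "awswaf:managed:aws:bot-control:bot:category:scraping_framework",
    limit=500,
    retry_after_seconds=900,
)
print(json.dumps(rule, indent=2))
```

The printed JSON can serve as a starting point for the rule JSON editor in the console. Swapping the category label for a `bot:name:` label narrows the rule to a single bot.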

As mentioned earlier, excluding parts of your web resource from Bot Control protection is a mechanism to reduce the cost of running the feature by focusing only on a subset of the requests reaching a resource. There are a few common scenarios that take advantage of this approach.

Figure 6: A scope-down statement to match based on a string that a URI path starts with

As an alternative to a string match type, you can use a regex pattern set. If you don’t have a regex pattern set, create one using the following guide.

Note: This pattern matches most common file extensions associated with static files for typical web resources. You can customize the pattern set if you have different file types.

Figure 7: A scope-down statement to match based on a regex pattern set as part of a URI path

Another option is to exclude almost everything, by only enabling the Bot Control on the most sensitive part of your application. For example, a login page.

Note: The actual URI path depends on the structure of your application.

Figure 8: A scope-down statement to match based on a string within a URI path

If you have more than one part to exclude, you can use an OR logical statement to list each part in a scope-down statement.

Figure 9 builds on the previous example of an exact string match by adding an OR statement to protect an API named payment.

Figure 9: A scope-down statement with OR logic for more sophisticated matching

Note: The visual editor on the console supports up to five statements. To add more, edit the JSON representation of the rule on the console or use the APIs.
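When you go beyond the visual editor, it can help to generate the JSON rather than write it by hand. The sketch below builds a scope-down statement for any number of URI fragments and attaches it to the Bot Control managed rule group. The helper names `uri_contains` and `scope_down_for`, the `login` and `payment` fragments, and the LOWERCASE text transformation are assumptions for this example.

```python
import json
from typing import List


def uri_contains(fragment: str) -> dict:
    """Match requests whose URI path contains the given string."""
    return {
        "ByteMatchStatement": {
            "SearchString": fragment,
            "FieldToMatch": {"UriPath": {}},
            "TextTransformations": [{"Priority": 0, "Type": "LOWERCASE"}],
            "PositionalConstraint": "CONTAINS",
        }
    }


def scope_down_for(fragments: List[str]) -> dict:
    """Combine one match per fragment; OR logic is only needed for two or more."""
    if not fragments:
        raise ValueError("at least one URI fragment is required")
    statements = [uri_contains(fragment) for fragment in fragments]
    if len(statements) == 1:
        return statements[0]
    return {"OrStatement": {"Statements": statements}}


# Bot Control inspects only the requests that match the scope-down statement.
bot_control_statement = {
    "ManagedRuleGroupStatement": {
        "VendorName": "AWS",
        "Name": "AWSManagedRulesBotControlRuleSet",
        "ScopeDownStatement": scope_down_for(["login", "payment"]),
    }
}
print(json.dumps(bot_control_statement, indent=2))
```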

Since verified bots aren’t blocked by default, in most cases there is no need to apply extra logic to allow them through. However, there are scenarios where other AWS WAF rules might match some aspects of requests from verified bots and block them. That can hurt some metrics for SEO, or prevent links from your website from properly propagating and displaying in social media resources. If this is important for your business, then you might want to ensure you protect verified bots by explicitly allowing them in AWS WAF.

awswaf:managed:aws:bot-control:bot:verified

Figure 10: Label match rule statement in a Rule builder with a specific match key

Labels also enable you to single out a bot that you don’t want to block from a category that is blocked. A common example is third-party bots that monitor your web resources.

Let’s take a look at a scenario where you want to allow a specific bot, UptimeRobot. The bot falls into a category that’s being blocked by default: bot:category:monitoring. You can either exclude the whole category, which can have a wider impact on your resource than you want, or allow only UptimeRobot.

From the logs, you can also verify which category the bot belongs to, which is useful for configuring scope-down statements.

awswaf:managed:aws:bot-control:bot:category:monitoring

awswaf:managed:aws:bot-control:bot:name:uptimerobot

Figure 11: Label match rule statement with AND logic to single out a specific bot name from a category

Note: This approach is the best practice for analyzing and, if necessary, addressing false positives situations. You can apply exclusion to any bot, or multiple bots, based on the unique bot:name: label.
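One way to express this AND logic is sketched below: block requests that carry the monitoring category label and do not carry the UptimeRobot name label. This exact combination (an AND with a nested NOT) is an assumption about how you might wire the rule. The small `evaluate` function is only a local simulation using exact string comparison, not how AWS WAF evaluates rules.

```python
from typing import Set

CATEGORY = "awswaf:managed:aws:bot-control:bot:category:monitoring"
ALLOWED_BOT = "awswaf:managed:aws:bot-control:bot:name:uptimerobot"


def label_match(key: str) -> dict:
    return {"LabelMatchStatement": {"Scope": "LABEL", "Key": key}}


# Block the monitoring category, except for the one bot we want to let through.
block_statement = {
    "AndStatement": {
        "Statements": [
            label_match(CATEGORY),
            {"NotStatement": {"Statement": label_match(ALLOWED_BOT)}},
        ]
    }
}


def evaluate(statement: dict, labels: Set[str]) -> bool:
    """Simplified local check of label-only statements against a request's labels."""
    if "LabelMatchStatement" in statement:
        return statement["LabelMatchStatement"]["Key"] in labels
    if "NotStatement" in statement:
        return not evaluate(statement["NotStatement"]["Statement"], labels)
    if "AndStatement" in statement:
        return all(evaluate(s, labels) for s in statement["AndStatement"]["Statements"])
    raise ValueError(f"unsupported statement: {list(statement)}")


other_bot = "awswaf:managed:aws:bot-control:bot:name:some_other_monitor"  # made-up label
print(evaluate(block_statement, {CATEGORY, ALLOWED_BOT}))  # UptimeRobot request
print(evaluate(block_statement, {CATEGORY, other_bot}))    # another monitoring bot
```

Running the statement against sample label sets like this is a cheap way to check the logic before you deploy the rule in Count mode.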

There are situations when you want to further process or analyze certain requests, or implement logic that is provided by downstream systems. In such cases, you can use AWS WAF Bot Control to categorize the requests. Applications later in the process can then apply the intended logic on either a broad group of requests, such as all bots within a category, or as narrow as a certain bot.

AWS WAF prefixes your custom header names with x-amzn-waf- when it inserts them, so when you add abc-category, your downstream system sees it as x-amzn-waf-abc-category.

Figure 12: AWS WAF rule action with a custom header inserted by the service

The custom rule located after Bot Control now inserts the header into any request that it labeled as coming from bots within the security category. Then the security appliance that is after AWS WAF acts on the requests based on the header, and processes them accordingly.
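On the receiving side, a downstream system only needs to look for the prefixed header. The following Python sketch shows one way to do that. The function names, the header value `security`, and the route names are assumptions for illustration; only the `x-amzn-waf-` prefix and the `abc-category` header name come from the text above.

```python
from typing import Mapping, Optional

# AWS WAF prepends this prefix to custom header names it inserts.
WAF_HEADER_PREFIX = "x-amzn-waf-"


def waf_header(headers: Mapping[str, str], custom_name: str) -> Optional[str]:
    """Return the value of a WAF-inserted custom header, or None if it is absent."""
    wanted = (WAF_HEADER_PREFIX + custom_name).lower()
    for name, value in headers.items():
        # HTTP header names are case-insensitive.
        if name.lower() == wanted:
            return value
    return None


def route(headers: Mapping[str, str]) -> str:
    """Pick a processing path based on the category that AWS WAF attached."""
    category = waf_header(headers, "abc-category")
    if category == "security":  # assumed header value
        return "security-inspection"  # hypothetical route name
    return "default"


print(route({"X-Amzn-Waf-Abc-Category": "security", "Host": "example.com"}))
print(route({"Host": "example.com"}))
```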

This implementation can serve other scenarios. For example, you can use your custom headers to tell your Origin to append headers that explicitly prevent caching of certain content. That makes bots always get it from the Origin. Inserted headers are also accessible within AWS Lambda@Edge functions and CloudFront Functions, which opens up advanced processing scenarios.

This post describes the primary building blocks for using Bot Control, and how you can combine and customize them to address different scenarios. It’s not an exhaustive list of the use cases that Bot Control can be fine-tuned for, but hopefully the examples provided here inspire and provide you with ideas for other implementations.

If you already have AWS WAF associated with any of your web-facing resources, you can view current bot traffic estimates for your applications based on a sample of requests currently processed by the service. Visit the AWS WAF console to view the bot overview dashboard. That’s a good starting point to consider implementing learnings from this blog to improve your bot protection.

It is early days for the feature, and it will keep gaining more capabilities. Stay tuned!

If you have feedback about this blog post, submit comments in the Comments section below. If you have questions about this blog post, start a new thread on AWS WAF re:Post or contact AWS Support.

Post Syndicated from The Atlantic original https://www.youtube.com/watch?v=nbbC21Wj79I

Post Syndicated from Shveta Shahi original https://www.backblaze.com/blog/crashplan-on-premises-customers-come-on-over/

With CrashPlan sunsetting its On-Premises backup service as of February 28, 2022, customers have some choices to make about how to handle their backups moving forward. As you think about the options—all of which require IT managers to embrace a change—we’d be remiss if we didn’t say Backblaze is ready to help with our Business Backup service for workstations. It’s quick and easy to switch over to, easy to run automatically ongoing, and cost effective.

If you’re a CrashPlan customer but you need a new backup solution, read on to understand your options. If you’re interested in working with us, you can transition from CrashPlan to Backblaze in six simple steps outlined below to protect all employee workstations from accidental data loss or ransomware, automatically and affordably.

CrashPlan customers have two options: transfer to CrashPlan’s Cloud Backup Service or transfer to another vendor. CrashPlan customers have until March 1, 2022 to make the decision and get started. After March 1, CrashPlan customers will lose support for their backup software. If any issues arise with backing up or restoring data, you won’t receive support to help fix the situation from CrashPlan.

CrashPlan’s Cloud Backup Service starts at $10 per endpoint per month for 0-100 endpoints, and is tiered after that. For customers looking for different pricing options or features, some CrashPlan alternatives include Carbonite and iDrive, both of which are offering promotions to attract CrashPlan customers. Keep in mind that once these promotions expire, you’re stuck paying the full price which may be higher than others. And, of course, Backblaze is an option as well.

So, what makes Backblaze a great fit for CrashPlan customers? We’ll share a few reasons. If you are already convinced, you can get started now by following the getting started guide in the next section of this post. If not, here are some of the benefits you’ll get with Backblaze:

Backblaze has been in the backup business for 15 years, and businesses ranging from PagerDuty to Charity: Water to Roush Auto Group rely on us for their data protection. Former CrashPlan customers who recently transitioned to Backblaze are getting the value they expected. Recently, Richard Charbonneau of Clicpomme spoke of the ease and simplicity he gained from switching:

“All our clients are managed by MDM or Munki, so it was really easy for us just to push the uninstaller for CrashPlan and package the new installer for Backblaze for every client.”

– Richard Charbonneau, Founder, Clicpomme

We invite you to join them.

Ready to Get Started?

You can “version off” of CrashPlan and “version on” to Backblaze Business Backup, making for a seamless transition. Simply create and configure an account with Backblaze to start backing up all employee workstations, and let CrashPlan lapse when they sunset On-Premises support on February 28.

You can retain your CrashPlan backups on premises for however long your retention policies stipulate in case you need to restore (or just deprecate those altogether if you’d rather use your on-premises storage servers for something else—it’s up to you!). Then, with Backblaze set up in parallel, you can start relying on Backblaze moving forward.

Here’s how to get started with Backblaze Business Backup.

Backblaze offers a number of different deployment options to give you the most flexibility when deciding how to deploy the Backblaze client to your machines. It can be as simple as sending the invite link via Slack or in a personally crafted email to a handful of users. You can use our Invite Email option to just add email addresses to a canned invite. Or you can deploy via a silent install using RMM tools such as JAMF, SCCM, Munki and others to deploy the software to your end users. Assistance is always available from our solution engineers to help guide you through the deployment process.

With Backblaze Business Backup, you can customize your groups’ administrative access. Specify who has administrator privileges to a group simply by adding an email address to the group settings. As a group administrator, you have the ability to assist your users with restores and be aware of issues when they arise.

You can also integrate with your Single Sign-on provider—either Google or Microsoft—in the settings to improve security, reduce support calls, and free users from having to remember yet another password.

If you are a CrashPlan user looking to transition to a new cloud backup service for your workstations, Backblaze makes moving to the cloud easy. Reach out to us at any time for help transitioning and getting started.

The post CrashPlan On-Premises Customers: Come On Over appeared first on Backblaze Blog | Cloud Storage & Cloud Backup.

Post Syndicated from original https://lwn.net/Articles/885786/

Security updates have been issued by Fedora (java-1.8.0-openjdk-aarch32, radare2, and zsh), openSUSE (ImageMagick and systemd), Red Hat (kpatch-patch, Service Telemetry Framework 1.3 (sg-core-container), and Service Telemetry Framework 1.4 (sg-core-container)), SUSE (ImageMagick, kernel-rt, nodejs12, php74, systemd, ucode-intel, and xerces-j2), and Ubuntu (c3p0, expat, linux, linux-aws, linux-aws-hwe, linux-azure, linux-azure-4.15, linux-dell300x, linux-gcp, linux-gcp-4.15, linux-hwe, linux-oracle, linux-snapdragon, linux, linux-aws, linux-gcp, linux-kvm, linux-oracle, linux-raspi, linux, linux-aws, linux-kvm, linux-lts-xenial, linux-aws, linux-aws-5.4, linux-azure, linux-azure-5.4, linux-azure-fde, linux-gcp, linux-gcp-5.4, linux-gkeop, linux-gkeop-5.4, linux-hwe-5.4, linux-ibm, linux-ibm-5.4, linux-oracle, linux-oracle-5.4, and linux-gke).

Post Syndicated from Bruce Schneier original https://www.schneier.com/blog/archives/2022/02/a-new-cybersecurity-social-contract.html

The US National Cyber Director Chris Inglis wrote an essay outlining a new social contract for the cyber age:

The United States needs a new social contract for the digital age — one that meaningfully alters the relationship between public and private sectors and proposes a new set of obligations for each. Such a shift is momentous but not without precedent. From the Pure Food and Drug Act of 1906 to the Clean Air Act of 1963 and the public-private revolution in airline safety in the 1990s, the United States has made important adjustments following profound changes in the economy and technology.

A similarly innovative shift in the cyber-realm will likely require an intense process of development and iteration. Still, its contours are already clear: the private sector must prioritize long-term investments in a digital ecosystem that equitably distributes the burden of cyberdefense. Government, in turn, must provide more timely and comprehensive threat information while simultaneously treating industry as a vital partner. Finally, both the public and private sectors must commit to moving toward true collaboration — contributing resources, attention, expertise, and people toward institutions designed to prevent, counter, and recover from cyber-incidents.

The devil is in the details, of course, but he’s 100% right when he writes that the market cannot solve this: that the incentives are all wrong. While he never actually uses the word “regulation,” the future he postulates won’t be possible without it. Regulation is how society aligns market incentives with its own values. He also leaves out the NSA — whose effectiveness rests on all of these global insecurities — and the FBI, whose incessant push for encryption backdoors goes against his vision of increased cybersecurity. I’m not sure how he’s going to get them on board. Or the surveillance capitalists, for that matter. A lot of what he wants will require reining in that particular business model.

Good essay — worth reading in full.

Post Syndicated from Amy Hunt original https://blog.rapid7.com/2022/02/22/this-ciso-isnt-real-but-his-problems-sure-are/

In 2021, data breaches soared past 2020 levels. This year, it’s expected to be worse. The odds are stacked against this poor guy (and you) now – but a unified extended detection and response (XDR) and SIEM restacks them in your favor.

Take a few minutes to check out this CISO’s day, and you’ll see how.

Go to this resource-rich page for smart, fast information, and a few minutes of fun too. Don’t miss it.

Still here on this page reading? Fine, let’s talk about you.

Cybersecurity isn’t for the fragile foam flowers among us, people who require shade and soft breezes. A little chaos is fun. Adrenaline and cortisol? They give you heightened physical and mental capacity. But it becomes problematic when it doesn’t stop, when you don’t remember your last 40-hour week, or when weekends and holidays are wrecked.

A lot of your co-workers may be happy, but life in the SOC is its own thing. CISOs average about two years in their jobs. And 40% admit job stress has affected their relationships with their partners and/or children.

A whopping 88% of Rapid7 customers say their detection and response has improved since they started using InsightIDR. And 93% say our unified SIEM and XDR has helped them level up and advance security programs.

You have the power to change your day. See how this guy did.

Post Syndicated from Goutam Tamvada original https://blog.cloudflare.com/post-quantum-key-encapsulation/

The Internet is accustomed to the fact that any two parties can exchange information securely without ever having to meet in advance. This magic is made possible by key exchange algorithms, which are core to certain protocols, such as the Transport Layer Security (TLS) protocol, that are used widely across the Internet.

Key exchange algorithms are an elegant solution to a vexing, seemingly impossible problem. Imagine a scenario where keys are transmitted in person: if Persephone wishes to send her mother Demeter a secret message, she can first generate a key, write it on a piece of paper and hand that paper to her mother, Demeter. Later, she can scramble the message with the key, and send the scrambled result to her mother, knowing that her mother will be able to unscramble the message since she is also in possession of the same key.

But what if Persephone is kidnapped (as the story goes) and cannot deliver this key in person? What if she can no longer write it on a piece of paper because someone (by chance Hades, the kidnapper) might read that paper and use the key to decrypt any messages between them? Key exchange algorithms come to the rescue: Persephone can run a key exchange algorithm with Demeter, giving both Persephone and Demeter a secret value that is known only to them (no one else knows it) even if Hades is eavesdropping. This secret value can be used to encrypt messages that Hades cannot read.

The most widely used key exchange algorithms today are based on hard mathematical problems, such as integer factorization and the discrete logarithm problem. But these problems can be efficiently solved by a quantum computer, as we have previously learned, breaking the secrecy of the communication.

There are other mathematical problems that are hard even for quantum computers to solve, such as those based on lattices or isogenies. These problems can be used to build key exchange algorithms that are secure even in the face of quantum computers. Before we dive into this matter, we first have to look at one kind of algorithm that can be used for key exchange: Key Encapsulation Mechanisms (KEMs).

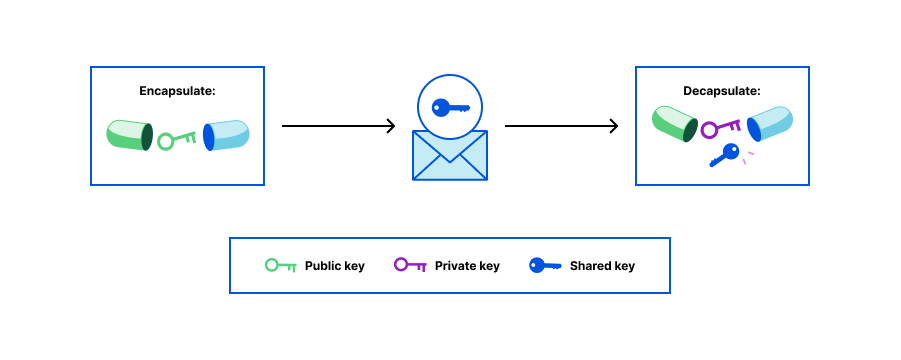

Two people could agree on a secret value if one of them could send the secret in an encrypted form to the other one, such that only the other one could decrypt and use it. This is what a KEM makes possible, through a collection of three algorithms:

A KEM can be seen as similar to a Public Key Encryption (PKE) scheme, since both use a combination of public and private keys. In a PKE, one encrypts a message using the public key and decrypts using the private key. In a KEM, one uses the public key to create an “encapsulation” — giving a randomly chosen shared key — and one decrypts this “encapsulation” with the private key. The reason why KEMs exist is that PKE schemes are usually less efficient than symmetric encryption schemes; one can use a KEM to only transmit the shared/symmetric key, and later use it in a symmetric algorithm to efficiently encrypt data.

Nowadays, in most of our connections, we do not use KEMs or PKEs per se. We either use Key Exchanges (KEXs) or Authenticated Key Exchanges (AKE). The reason for this is that a KEX allows us to use public keys (solving the key exchange problem of how to securely transmit keys) in order to generate a shared/symmetric key which, in turn, will be used in a symmetric encryption algorithm to encrypt data efficiently. A famous KEX algorithm is Diffie-Hellman, but classical Diffie-Hellman based mechanisms do not provide security against a quantum adversary; post-quantum KEMs do.

When using a KEM, Persephone would run Generate and publish the public key. Demeter takes this public key, runs Encapsulate, keeps the generated secret to herself, and sends the encapsulation (the ciphertext) to Persephone. Persephone then runs Decapsulate on this encapsulation and, with it, arrives at the same shared secret that Demeter holds. Hades will not be able to guess even a bit of this secret value even if he sees the ciphertext.
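The flow above can be sketched in a few lines of Python. To keep the example short, this toy KEM is built from classical Diffie-Hellman over a prime field, so it is neither post-quantum nor secure; it is only meant to show the shape of the three algorithms. The parameters `P` and `G`, the helper `_kdf`, and the lowercase function names are assumptions of this sketch.

```python
import hashlib
import secrets
from typing import Tuple

# Toy parameters: classical Diffie-Hellman, NOT post-quantum and NOT secure.
P = 2**255 - 19  # a well-known prime
G = 2


def _kdf(value: int) -> bytes:
    """Derive a 32-byte shared key from a group element."""
    return hashlib.sha256(value.to_bytes(32, "big")).digest()


def generate() -> Tuple[int, int]:
    """Return (public_key, private_key)."""
    sk = secrets.randbelow(P - 2) + 1
    return pow(G, sk, P), sk


def encapsulate(pk: int) -> Tuple[int, bytes]:
    """Return (ciphertext, shared_secret) for the holder of pk."""
    r = secrets.randbelow(P - 2) + 1
    ciphertext = pow(G, r, P)
    return ciphertext, _kdf(pow(pk, r, P))


def decapsulate(sk: int, ciphertext: int) -> bytes:
    """Recover the shared secret from the ciphertext using the private key."""
    return _kdf(pow(ciphertext, sk, P))


# Persephone generates a key pair and publishes the public key.
persephone_pk, persephone_sk = generate()
# Demeter encapsulates against it and sends only the ciphertext.
ct, demeter_secret = encapsulate(persephone_pk)
# Persephone decapsulates and arrives at the same secret.
persephone_secret = decapsulate(persephone_sk, ct)
print(persephone_secret == demeter_secret)
```

A post-quantum KEM such as FrodoKEM exposes the same three operations; what changes is the mathematics inside them.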

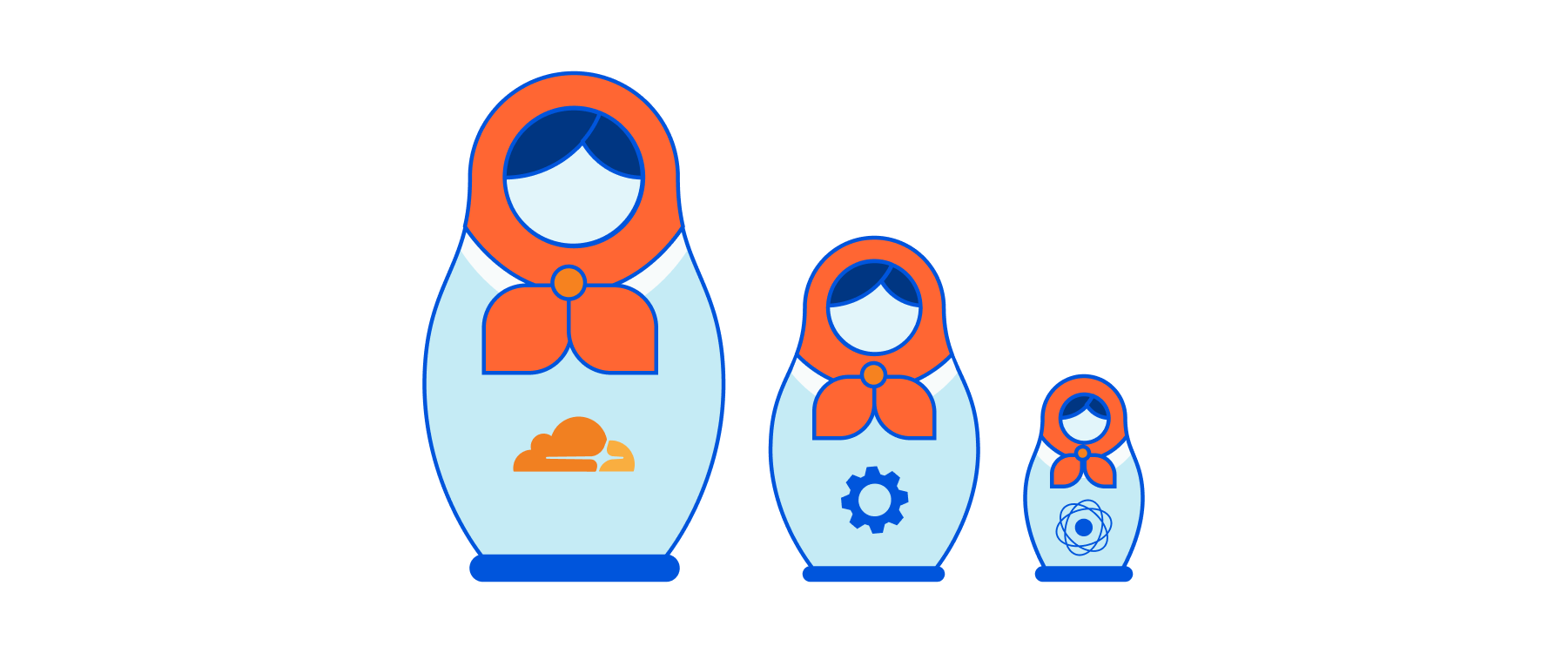

In this post, we go over the construction of one particular post-quantum KEM, called FrodoKEM. Its design is simple, which makes it a good choice to illustrate how a KEM can be constructed. We will look at it from two perspectives:

The core of FrodoKEM is a public-key encryption scheme called FrodoPKE, whose security is based on the hardness of the “Learning with Errors” (LWE) problem over lattices. Let us look now at the first doll of a KEM.

Note to the reader: Some mathematics is coming in the next sections, but do not worry, we will guide you through it.

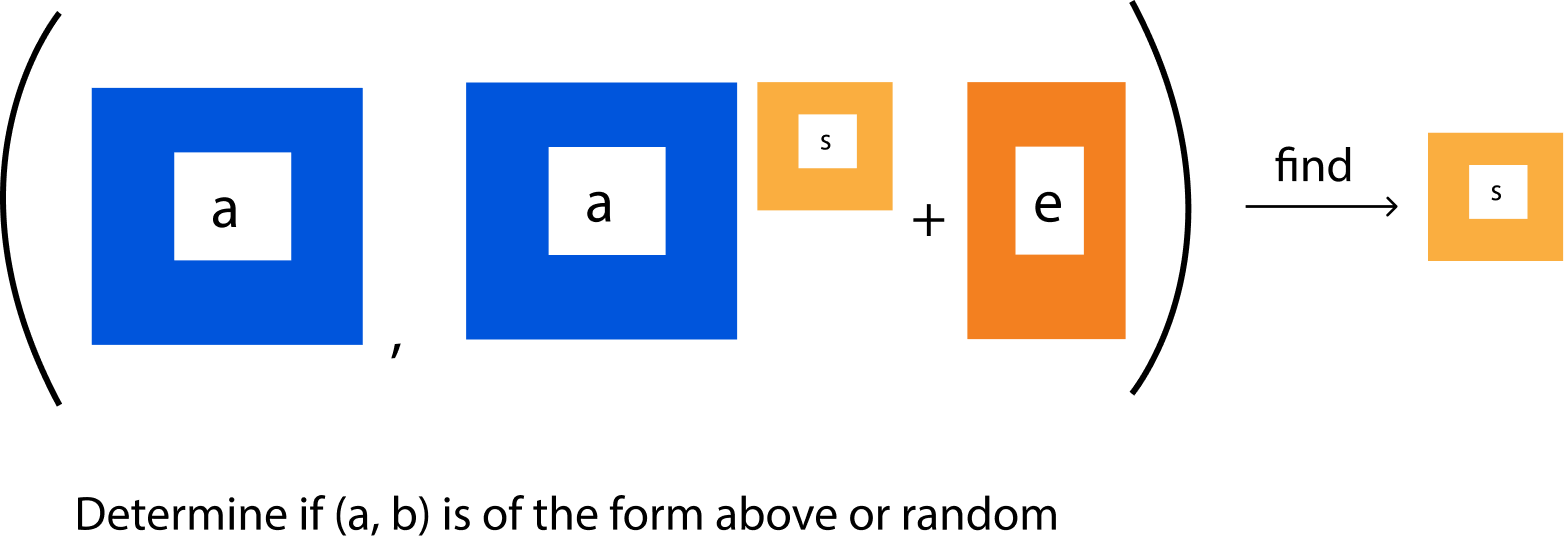

The security (and mathematical foundation) of FrodoKEM relies on the hardness of the Learning With Errors (LWE) problem, a generalization of the classic Learning Parity with Noise problem, first defined by Regev.

In cryptography, specifically in the mathematics underlying it, we often use sets to define our operations. A set is a collection of elements; in this case, we will refer to collections of numbers. In cryptography textbooks and articles, one can often read:

Let $Z_q$ denote the set of integers $\{0, …, q-1\}$ where $q > 2$,

which means that we have the collection of integers from 0 to q-1, where q has to be bigger than 2. In a cryptographic application, q is assumed to be a prime; in the main theorem, it is an arbitrary integer.

Let $Z_q^n$ denote the set of vectors $(v_1, v_2, …, v_n)$ of n elements, each of which belongs to $Z_q$.

The LWE problem asks to recover a secret vector $s = (s_1, s_2, …, s_n)$ in $Z_q^n$ given a sequence of random, “approximate” linear equations on s. For instance, if $q = 23$ the equations might be:

$$
\begin{aligned}
s_1 + s_2 + s_3 + s_4 &\approx 30 \pmod{23} \\
2s_1 + s_3 + s_5 + \dots + s_n &\approx 40 \pmod{23} \\
10s_2 + 13s_3 + 1s_4 &\approx 50 \pmod{23} \\
&\;\;\vdots
\end{aligned}
$$

The left-hand sides of the equations above are not exactly equal to the right-hand sides (note the “≈” sign, approximately equal to, used in place of the equality sign): they are off by a slight, deliberately introduced “error”, which will be defined as the variable e. (In the equations above, the error is, for example, the number 10.) If the error were a known, public value, recovering the hidden s would be easy: after about n equations, we can recover s in a reasonable time using Gaussian elimination. Keeping the error unknown makes it difficult to find s accurately, even for quantum computers.
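To make the role of the error concrete, here is a minimal sketch using the toy modulus q = 23 from the example. Each sample is a pair (a, b) with b = ⟨a, s⟩ + e mod q. The helper names `lwe_samples`, `solve_mod_q`, and `recover_without_noise` are assumptions made for this illustration, and the uniform error in a small interval is a stand-in for the error distributions used in practice.

```python
import random

Q = 23  # toy modulus from the text; real schemes use far larger parameters

def lwe_samples(s, m, noise, rng):
    """Return m samples (a, b) with b = <a, s> + e (mod Q), e in [-noise, noise]."""
    samples = []
    for _ in range(m):
        a = [rng.randrange(Q) for _ in s]
        e = rng.randint(-noise, noise)
        b = (sum(x * y for x, y in zip(a, s)) + e) % Q
        samples.append((a, b))
    return samples

def solve_mod_q(samples, n):
    """Gaussian elimination mod Q; returns None if the system has rank < n."""
    rows = [list(a) + [b] for a, b in samples]
    for col in range(n):
        pivot = next((r for r in range(col, len(rows)) if rows[r][col] % Q), None)
        if pivot is None:
            return None
        rows[col], rows[pivot] = rows[pivot], rows[col]
        inv = pow(rows[col][col], -1, Q)          # modular inverse (Python 3.8+)
        rows[col] = [(x * inv) % Q for x in rows[col]]
        for r in range(len(rows)):
            if r != col and rows[r][col]:
                f = rows[r][col]
                rows[r] = [(x - f * y) % Q for x, y in zip(rows[r], rows[col])]
    return [rows[i][n] for i in range(n)]

def recover_without_noise(s, rng):
    """With e = 0, n independent equations are enough to recover s exactly."""
    n = len(s)
    while True:  # retry in the unlikely event the random system is singular
        guess = solve_mod_q(lwe_samples(s, n, 0, rng), n)
        if guess is not None:
            return guess

rng = random.Random()
secret = [rng.randrange(Q) for _ in range(6)]
recovered = recover_without_noise(secret, rng)   # equals secret
noisy_guess = solve_mod_q(lwe_samples(secret, 6, 1, rng), 6)
```

Without noise, elimination returns the secret exactly. Running the same solver on samples with `noise=1` generally returns a vector unrelated to `secret`, because elimination amplifies even tiny errors.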

An equivalent formulation of the LWE problem is:

Blum, Kalai and Wasserman provided the first subexponential algorithm for solving this problem. It requires $2^{O(n / \log n)}$ equations/time.

There are two main kinds of computational LWE problems that are difficult to solve for quantum computers (given certain choices of both q and χ):

LWE is just noisy linear algebra, and yet it seems to be a very hard problem to solve. In fact, there are many reasons to believe that the LWE problem is hard: the best known algorithms for solving it run in exponential time. It is also closely related to the Learning Parity with Noise (LPN) problem, which is extensively studied in learning theory and is believed to be hard to solve (any progress in breaking LPN will potentially lead to a breakthrough in coding theory). How does this relate to building cryptography? LWE lends itself to public-key cryptography. In this case, the secret value s becomes the private key, and the noisy values bi, together with the public coefficients of the equations that produced them, form the public key; the errors ei stay secret.

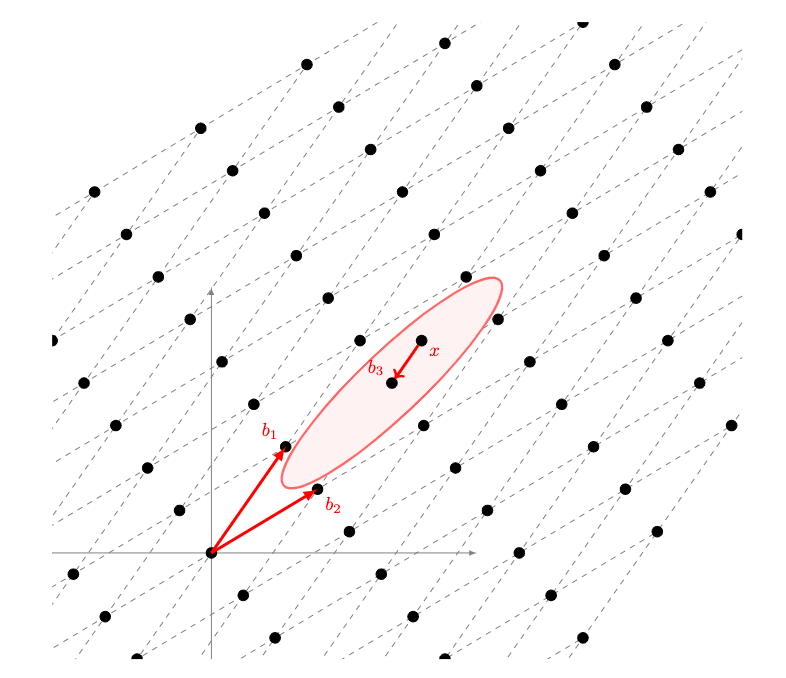

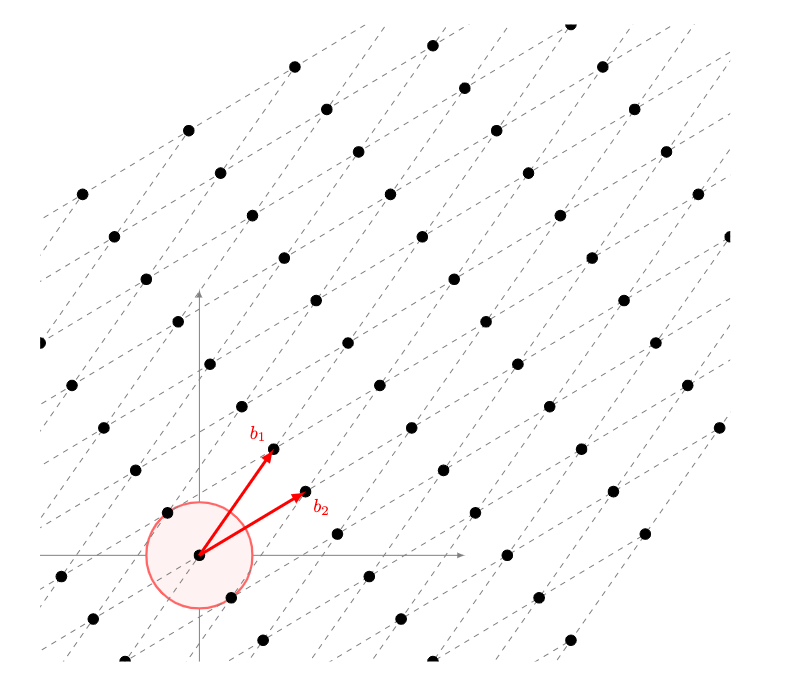

So, why is this problem related to lattices? In other blog posts, we have seen that certain algorithms of post-quantum cryptography are based on lattices. So, how does LWE relate to them? One can view LWE as the problem of decoding from random linear codes, or reduce it to lattices, in particular to problems such as the Shortest Vector Problem (SVP) or the Shortest Independent Vectors Problem (SIVP): an efficient solution to LWE implies a quantum algorithm for SVP and SIVP. In other blog posts, we talk about SVP, so, in this one, we will focus on the random bounded distance decoding problem on lattices.

Lattices (as seen in the image), as regular and periodic arrangements of points in space, have emerged as a foundation of cryptography in the face of quantum adversaries; one modern problem on which they rely is the Bounded Distance Decoding (BDD) problem. In the BDD problem, you are given a lattice with an arbitrary basis (a basis is a list of vectors that generate all the other points in a lattice; in the case of the image, it is the pair of vectors b1 and b2). A lattice point b3 is then chosen and perturbed by adding some noise (or error) to give x. Given x, the goal is to find the nearest lattice point (in this case b3), as seen in the image. In this sense, LWE is an average-case form of BDD (Regev also gave a worst-case to average-case reduction from BDD to LWE, so the security of a cryptographic system built on LWE is related to the worst-case complexity of BDD).

The first doll is built. Now, how do we build encryption from this mathematical base? From LWE, we can build a public key encryption algorithm (PKE), as we will see next with FrodoPKE as an example.

The second doll of the Matryoshka is using a mathematical base to build a Public Key Encryption algorithm from it. Let’s look at FrodoPKE. FrodoPKE is a public-key encryption scheme which is the building block for FrodoKEM. It is made up of three components: key generation, encryption, and decryption. Let’s say again that Persephone wants to communicate with Demeter. They will run the following operations:

Notice that computing v = v1 - b1 * s gives us m + e2 (the message plus the error matrix sampled during encryption). The decryption process performs rounding, which will output the original message m if the error matrix e2 is carefully chosen, that is, small enough to be rounded away. If it is not, decryption can fail.
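The "subtract, then round" idea can be tried out with a much smaller relative of FrodoPKE. The sketch below is a single-bit, LWE-style encryption scheme with toy parameters. It is not FrodoPKE itself (which works on matrices, uses a specific error distribution and far larger parameters), and the names `keygen`, `encrypt`, `decrypt`, and `small` and the constants `Q` and `N` are assumptions made for illustration.

```python
import random

Q, N = 257, 8  # toy parameters, far too small to be secure

def small(rng, k):
    """k small 'error' values drawn from {-1, 0, 1}."""
    return [rng.choice((-1, 0, 1)) for _ in range(k)]

def keygen(rng):
    A = [[rng.randrange(Q) for _ in range(N)] for _ in range(N)]
    s, e = small(rng, N), small(rng, N)
    # b = A*s + e (mod Q): noisy linear equations in the secret s
    b = [(sum(A[i][j] * s[j] for j in range(N)) + e[i]) % Q for i in range(N)]
    return (A, b), s

def encrypt(pk, bit, rng):
    A, b = pk
    r, e1, e2 = small(rng, N), small(rng, N), rng.choice((-1, 0, 1))
    # c1 = r*A + e1 (mod Q)
    c1 = [(sum(r[i] * A[i][j] for i in range(N)) + e1[j]) % Q for j in range(N)]
    # c2 = r*b + e2 + bit * floor(Q/2) (mod Q): the bit sits in the high part
    c2 = (sum(r[i] * b[i] for i in range(N)) + e2 + bit * (Q // 2)) % Q
    return c1, c2

def decrypt(sk, ct):
    c1, c2 = ct
    # c2 - c1*s = bit * floor(Q/2) + (small noise), so rounding recovers the bit
    v = (c2 - sum(c1[j] * sk[j] for j in range(N))) % Q
    return 1 if Q // 4 < v < 3 * Q // 4 else 0

rng = random.Random()
pk, sk = keygen(rng)
assert decrypt(sk, encrypt(pk, 1, rng)) == 1
```

With these parameters the accumulated noise is at most 2N + 1 = 17 in absolute value, well below Q/4, so rounding always succeeds. Letting the errors grow past Q/4 is exactly what would cause a decryption failure.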

What kind of security does this algorithm give? In cryptography, we design algorithms with security notions in mind, notions they have to attain. This algorithm, FrodoPKE (as with many other basic PKEs), satisfies only IND-CPA (Indistinguishability under chosen-plaintext attack) security. Intuitively, this notion means that a passive eavesdropper listening in can get no information about a message from a ciphertext. Even if the eavesdropper knows that a ciphertext is an encryption of just one of two messages of their choice, looking at the ciphertext should not tell the adversary which one was encrypted. We can also think of it as a game:

Imagine a gnome sitting inside a box. This box takes a message and produces a ciphertext. All the gnome has to do is record each message and the ciphertext they see generated. An outside-of-the-box adversary, like a troll, wants to beat this game and know what the gnome knows: what ciphertext is produced if a certain message is given. The troll chooses two messages (m1 and m2) of the same length and sends them to the box. The gnome records the box operations and flips a coin. If the coin lands heads, they send the ciphertext (c1) corresponding to m1. Otherwise, they send c2 corresponding to m2. The troll, knowing the messages and the ciphertext, has to guess which message was encrypted.
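The game can be written down as a small harness. In the sketch below, the box is a deliberately broken, deterministic "encryption" (XOR with a fixed key), chosen only to show what losing the game looks like. A scheme with IND-CPA security should keep the troll's success rate close to one half. All names (`play_ind_cpa`, `xor_box`, `troll_choose`, `troll_guess`) are hypothetical.

```python
import secrets

def play_ind_cpa(box, choose, guess, trials=200):
    """Run the game `trials` times and count how often the troll guesses right."""
    wins = 0
    for _ in range(trials):
        m1, m2 = choose()                      # troll picks two equal-length messages
        coin = secrets.randbelow(2)            # gnome flips a coin
        challenge = box(m1 if coin == 0 else m2)
        if guess(box, m1, m2, challenge) == coin:
            wins += 1
    return wins

# A deliberately broken box: the same message always gives the same ciphertext.
KEY = secrets.token_bytes(4)

def xor_box(message):
    return bytes(k ^ m for k, m in zip(KEY, message))

def troll_choose():
    return b"AAAA", b"BBBB"

def troll_guess(box, m1, m2, challenge):
    # Chosen-plaintext access: the troll may ask the box to encrypt m1 again.
    return 0 if box(m1) == challenge else 1

wins = play_ind_cpa(xor_box, troll_choose, troll_guess)
```

Because `xor_box` is deterministic, the troll wins every round. This is why encryption in schemes such as FrodoPKE samples fresh randomness (the error terms) for every ciphertext.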

IND-CPA security is not enough for all secure communication on the Internet. Adversaries can not only passively eavesdrop, but also mount chosen-ciphertext attacks (CCA): they can actively modify messages in transit and trick the communicating parties into decrypting these modified messages, thereby obtaining a decryption oracle. They can use this decryption oracle to gain information about a desired ciphertext, and so compromise confidentiality. Such attacks are practical: all that an attacker has to do is, for example, send several million test ciphertexts to a decryption oracle, as in Bleichenbacher’s attack and the ROBOT attack.

In the case of Demeter and Persephone, the lack of CCA security means that Hades can generate and send several million test ciphertexts to the decryption oracle and eventually reveal the content of a valid ciphertext that he did not generate. Demeter and Persephone might then not want to use this scheme.

The last figure of the Matryoshka doll is taking a secure-against-CPA scheme and making it secure against CCA. A secure-against-CCA scheme must not leak information about its private key, even when decrypting arbitrarily chosen ciphertexts. It must also be the case that an adversary cannot craft valid ciphertexts without knowing what the plaintext message is; suppose, again, that the adversary knows the messages encrypted could only be either m0 or m1. If the attacker can craft another valid ciphertext, for example, by flipping a bit of the ciphertext in transit, they can send this modified ciphertext, and see whether a message close to m1 or m0 is returned.

To make a CPA scheme secure against CCA, one can use the Hofheinz, Hövelmanns, and Kiltz (HHK) transformations (see this thesis for more information). The HHK transformation constructs an IND-CCA-secure KEM from both an IND-CPA PKE and three hash functions. In the case of the algorithm we are exploring, FrodoKEM, it uses a slightly tweaked version of the HHK transform. It has, again, three functions (some parts of this description are simplified):

Generation:

Encapsulate:

Decapsulate:

What this algorithm achieves is the generation of a shared secret and ciphertext which can be used to establish a secure channel. It also means that no matter how many ciphertexts Hades sends to the decryption oracle, they will never reveal the content of a valid ciphertext that Hades himself did not generate. This is ensured when we run the encryption process again in Decapsulate to check if the ciphertext was computed correctly, which ensures that an adversary cannot craft valid ciphertexts simply by modifying them.
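The re-encryption check can be sketched as follows. This is a heavily simplified illustration of the general idea, wrapped around a toy single-bit LWE-style PKE. It is not FrodoKEM's actual construction: the parameters, the hash usage, the encodings, and every name (`kem_generate`, `kem_encapsulate`, `kem_decapsulate`, `pke_*`, `H`, `encode`) are assumptions made for this sketch.

```python
import hashlib
import random
import secrets

Q, N, MSG_BITS = 257, 8, 16  # toy, insecure parameters

def small(rng, k):
    return [rng.choice((-1, 0, 1)) for _ in range(k)]

def H(*parts):
    return hashlib.sha3_256(b"|".join(parts)).digest()

def encode(obj):
    return repr(obj).encode()  # crude serialization, good enough for a sketch

def pke_keygen():
    rng = random.Random(secrets.token_bytes(16))
    A = [[rng.randrange(Q) for _ in range(N)] for _ in range(N)]
    s, e = small(rng, N), small(rng, N)
    b = [(sum(A[i][j] * s[j] for j in range(N)) + e[i]) % Q for i in range(N)]
    return (A, b), s

def pke_encrypt(pk, bits, seed):
    """Deterministic given `seed`: all encryption randomness is derived from it."""
    A, b = pk
    rng, ct = random.Random(seed), []
    for bit in bits:
        r, e1, e2 = small(rng, N), small(rng, N), rng.choice((-1, 0, 1))
        c1 = tuple((sum(r[i] * A[i][j] for i in range(N)) + e1[j]) % Q for j in range(N))
        c2 = (sum(r[i] * b[i] for i in range(N)) + e2 + bit * (Q // 2)) % Q
        ct.append((c1, c2))
    return ct

def pke_decrypt(s, ct):
    out = []
    for c1, c2 in ct:
        v = (c2 - sum(c1[j] * s[j] for j in range(N))) % Q
        out.append(1 if Q // 4 < v < 3 * Q // 4 else 0)
    return out

def kem_generate():
    pk, s = pke_keygen()
    reject = secrets.token_bytes(16)       # secret value used when a check fails
    return pk, (s, reject, pk)

def kem_encapsulate(pk):
    m = [secrets.randbelow(2) for _ in range(MSG_BITS)]
    seed = H(b"seed", bytes(m), encode(pk))  # randomness is a hash of the message
    ct = pke_encrypt(pk, m, seed)
    return ct, H(b"key", bytes(m), encode(ct))

def kem_decapsulate(sk, ct):
    s, reject, pk = sk
    m = pke_decrypt(s, ct)
    seed = H(b"seed", bytes(m), encode(pk))
    if pke_encrypt(pk, m, seed) == ct:     # re-encrypt and compare
        return H(b"key", bytes(m), encode(ct))
    return H(b"key", reject, encode(ct))   # modified ciphertext: unrelated key

pk, sk = kem_generate()
ct, shared = kem_encapsulate(pk)
assert kem_decapsulate(sk, ct) == shared
```

A ciphertext that was altered in transit no longer matches its re-encryption, so Decapsulate returns a key that is unrelated to the real one and tells Hades nothing about the message.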

With this last doll, the algorithm has been created, and it is safe in the face of a quantum adversary.

While the ring bearer, Frodo, wanders around and transforms, he is not alone in his journey. FrodoKEM is currently designated as an alternative candidate for standardization as part of the NIST post-quantum process. But there are others:

The lattice-based variants have the advantage of being fast, while producing relatively small keys and ciphertexts. However, there are concerns about their security, which still needs to be properly verified. More confidence is found in the security of the Classic McEliece scheme, as its underlying problem has been studied for longer (the scheme is only about a year younger than RSA!). It has a disadvantage: it produces extremely large public keys. Classic-McEliece-348864, for example, produces public keys of size 261,120 bytes, whereas Kyber512, which claims comparable security, produces public keys of size 800 bytes.

They are all Matryoshka dolls (including sometimes non-post-quantum ones). They are all algorithms that are placed one inside the other. They all start with a small but powerful idea: a mathematical problem whose solution is hard to find efficiently. They then build an algorithm on top of it and achieve one cryptographic security notion. And, by the magic of hashes and length preservation, they achieve a stronger one. This just goes to show that cryptographic algorithms are not perfect in themselves; they stack on top of each other to get the best of each one. Facing quantum adversaries with them is the same: not a process of isolation but rather a process of stacking and creating the big picture from the smallest one.

Post Syndicated from Goutam Tamvada original https://blog.cloudflare.com/post-quantum-signatures/

To provide authentication is no more than to assert, and to provide proof of, an identity. We can claim to be who we say we are, but if there is no proof of it (recognition of our face, voice or mannerisms) there is no assurance of that. In fact, we can claim to be someone we are not. We can even claim we are someone that does not exist, as clever Odysseus did once.

The story goes that there was a man named Odysseus who angered the gods and was punished with perpetual wandering. He traveled and traveled the seas meeting people and suffering calamities. On one of his trips, he came across the Cyclops Polyphemus who, in short, wanted to eat him. Clever Odysseus got away (as he usually did) by wounding the cyclops’ eye. Once wounded, the cyclops asked for Odysseus’ name, to which the latter replied:

“Cyclops, you asked for my glorious name, and I will tell it; but do give the stranger’s gift, just as you promised. Nobody I am called. Nobody they called me: by mother, father, and by all my comrades”

(As seen in The Odyssey, book 9. Translation by the authors of the blogpost).

The cyclops believed that was Odysseus’ name (Nobody) and proceeded to tell everyone, which resulted in no one believing him. “How can nobody have wounded you?” they questioned the cyclops. It was a trick, a play of words by Odysseus. Because to give an identity, to tell the world who you are (or who you are pretending to be) is easy. To provide proof of it is very difficult. The cyclops could have asked Odysseus to prove who he was, and the story would have been different. And Odysseus wouldn’t have left the cyclops laughing.

In the digital world, proving your identity is more complex. In face-to-face conversations, we can often attest to the identity of someone by knowing and verifying their face, their voice, or by someone else introducing them to us. From computer to computer, the scenario is a little different. But there are ways. When a user connects to their banking provider on the Internet, they need assurance not only that the information they send is secured, but also that they are sending it to their bank, and not a malicious website masquerading as their provider. The Transport Layer Security (TLS) protocol provides this through digitally signed statements of identity (certificates). Digital signature schemes also play a central role in DNSSEC, an extension to the Domain Name System (DNS) that protects applications from accepting forged or manipulated DNS data, which is what happens during DNS cache poisoning, for example.

A digital signature is a demonstration of authorship of a document, conversation, or message sent using digital means. As with “regular” signatures, digital signatures can be publicly verified by anyone who knows how to recognize the signer’s signature.

A digital signature scheme is a collection of three algorithms:

In the case of Odysseus’ story, what the cyclops could have done to verify his identity (to verify that he indeed was Nobody) was to ask for a proof of identity: for example, for other people to vouch that he is who he claims to be. Or he could have asked for a digital signature (attested by several people or registered as his own) attesting he was Nobody. Nothing like that happened, so the cyclops was fooled.