Post Syndicated from Explosm.net original http://explosm.net/comics/5912/

New Cyanide and Happiness Comic

Post Syndicated from original https://lwn.net/Articles/860820/rss

The LWN.net Weekly Edition for July 1, 2021 is available.

Post Syndicated from Birender Pal original https://aws.amazon.com/blogs/architecture/overview-of-data-transfer-costs-for-common-architectures/

Data transfer charges are often overlooked while architecting a solution in AWS. Considering data transfer charges while making architectural decisions can help save costs. This blog post will help identify potential data transfer charges you may encounter while operating your workload on AWS. Service charges are out of scope for this blog, but should be carefully considered when designing any architecture.

There is no charge for inbound data transfer across all services in all Regions. Data transfer from AWS to the internet is charged per service, with rates specific to the originating Region. Refer to the pricing pages for each service—for example, the pricing page for Amazon Elastic Compute Cloud (Amazon EC2)—for more details.

Data transfer within AWS could be from your workload to other AWS services, or it could be between different components of your workload.

When your workload accesses AWS services, you may incur data transfer charges.

If the internet gateway is used to access the public endpoint of the AWS services in the same Region (Figure 1 – Pattern 1), there are no data transfer charges. If a NAT gateway is used to access the same services (Figure 1 – Pattern 2), there is a data processing charge (per gigabyte (GB)) for data that passes through the gateway.

If your workload accesses services in different Regions (Figure 2), there is a charge for data transfer across Regions. The charge depends on the source and destination Region (as described on the Amazon EC2 Data Transfer pricing page).

Charges may apply if there is data transfer between different components of your workload. These charges vary depending on where the components are deployed.

Data transfer within the same Availability Zone is free. One way to achieve high availability for a workload is to deploy in multiple Availability Zones.

Consider a workload with two application servers running on Amazon EC2 and a database running on Amazon Relational Database Service (Amazon RDS) for MySQL (Figure 3). For high availability, each application server is deployed into a separate Availability Zone. Here, data transfer charges apply for cross-Availability Zone communication between the EC2 instances. Data transfer charges also apply between Amazon EC2 and Amazon RDS. Consult the Amazon RDS for MySQL pricing guide for more information.
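As a rough illustration of how the cross-Availability Zone rule plays out for a layout like Figure 3, the sketch below sums the charge for a list of traffic flows. The rate, the Availability Zone labels, and the flow volumes are all hypothetical placeholders; check the pricing pages for real rates and for how each direction of a flow is billed.

```python
from typing import Iterable, Tuple

# Hypothetical rate in USD per GB; real rates are on the service pricing pages.
ASSUMED_CROSS_AZ_RATE_PER_GB = 0.01

Flow = Tuple[str, str, float]  # (source AZ, destination AZ, gigabytes transferred)


def cross_az_charge(
    flows: Iterable[Flow],
    rate_per_gb: float = ASSUMED_CROSS_AZ_RATE_PER_GB,
) -> float:
    """Sum the charge for flows whose endpoints sit in different Availability Zones."""
    # Traffic that stays inside one Availability Zone is free, so it is skipped.
    billable_gb = sum(gb for src, dst, gb in flows if src != dst)
    return billable_gb * rate_per_gb


# Made-up monthly volumes for a two-AZ deployment.
flows = [
    ("az-a", "az-a", 100.0),  # app server and database in the same AZ
    ("az-a", "az-b", 50.0),   # app server to app server across AZs
    ("az-b", "az-a", 25.0),   # app server in az-b to the database in az-a
]
print(f"Estimated cross-AZ charge: ${cross_az_charge(flows):.2f}")
```

Passing the rate as a parameter keeps the arithmetic separate from any particular Region's pricing.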

To minimize impact of a database instance failure, enable a multi-Availability Zone configuration within Amazon RDS to deploy a standby instance in a different Availability Zone. Replication between the primary and standby instances does not incur additional data transfer charges. However, data transfer charges will apply from any consumers outside the current primary instance Availability Zone. Refer to the Amazon RDS pricing page for more detail.

A common pattern is to deploy workloads across multiple VPCs in your AWS network. Two approaches to enabling VPC-to-VPC communication are VPC peering connections and AWS Transit Gateway. Data transfer over a VPC peering connection that stays within an Availability Zone is free. Data transfer over a VPC peering connection that crosses Availability Zones will incur a data transfer charge for ingress/egress traffic (Figure 4).

Transit Gateway can interconnect hundreds or thousands of VPCs (Figure 5). Cost elements for Transit Gateway include an hourly charge for each attached VPC, AWS Direct Connect, or AWS Site-to-Site VPN. Data processing charges apply for each GB sent from a VPC, Direct Connect, or VPN to Transit Gateway.
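The two Transit Gateway cost elements described above, an hourly charge per attachment plus a per-GB data processing charge, can be combined in a small estimator. This is a minimal sketch: the rates and usage figures are invented for illustration, and real values come from the Transit Gateway pricing page.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class TransitGatewayRates:
    """Hypothetical rates in USD; not actual AWS pricing."""

    attachment_hourly_usd: float
    data_processing_per_gb_usd: float


def transit_gateway_cost(
    rates: TransitGatewayRates,
    attachments: int,
    hours: float,
    gb_sent: float,
) -> float:
    """Hourly charge for every attachment plus processing for each GB sent to the gateway."""
    attachment_cost = attachments * hours * rates.attachment_hourly_usd
    processing_cost = gb_sent * rates.data_processing_per_gb_usd
    return attachment_cost + processing_cost


# Made-up example: three attachments for a 730-hour month, 1,000 GB sent.
assumed_rates = TransitGatewayRates(attachment_hourly_usd=0.05, data_processing_per_gb_usd=0.02)
estimate = transit_gateway_cost(assumed_rates, attachments=3, hours=730, gb_sent=1000)
print(f"Estimated monthly cost: ${estimate:.2f}")
```

Because the attachment charge accrues whether or not traffic flows, it tends to dominate for lightly used attachments, while the processing charge grows with volume.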

If workload components communicate across multiple Regions using VPC peering connections or Transit Gateway, additional data transfer charges apply. If the VPCs are peered across Regions, standard inter-Region data transfer charges will apply (Figure 6).

For peered Transit Gateways, you will incur data transfer charges on only one side of the peer. Data transfer charges do not apply for data sent from a peering attachment to a Transit Gateway. The data transfer for this cross-Region peering connection is in addition to the data transfer charges for the other attachments (Figure 7).

Data transfer will occur when your workload needs to access resources in your on-premises data center. There are two common options to help achieve this connectivity: Site-to-Site VPN and Direct Connect.

One option to connect workloads to an on-premises network is to use one or more Site-to-Site VPN connections (Figure 8 – Pattern 1). Charges include an hourly charge for the connection and a charge for data transferred from AWS. Refer to Site-to-Site VPN pricing for more details. Another option to connect multiple VPCs to an on-premises network is to use a Site-to-Site VPN connection to a Transit Gateway (Figure 8 – Pattern 2). The Site-to-Site VPN will be considered another attachment on the Transit Gateway. Standard Transit Gateway pricing applies.

Direct Connect can be used to connect workloads in AWS to on-premises networks. Direct Connect incurs a fee for each hour the connection port is used and data transfer charges for data flowing out of AWS. Data transfer into AWS is $0.00 per GB in all locations. The data transfer charges depend on the source Region and the Direct Connect provider location. Direct Connect can also connect to the Transit Gateway if multiple VPCs need to be connected (Figure 9). Direct Connect is considered another attachment on the Transit Gateway and standard Transit Gateway pricing applies. Refer to the Direct Connect pricing page for more details.

A Direct Connect gateway can be used to share a Direct Connect across multiple Regions. When using a Direct Connect gateway, there will be outbound data charges based on the source Region and Direct Connect location (Figure 10).

Data transfer charges apply based on the source, destination, and amount of traffic. Here are some general tips for when you start planning your architecture:

AWS provides the ability to deploy across multiple Availability Zones and Regions. With a few clicks, you can create a distributed workload. As you increase your footprint across AWS, it helps to understand various data transfer charges that may apply. This blog post provided information to help you make an informed decision and explore different architectural patterns to save on data transfer costs.

Post Syndicated from original https://lwn.net/Articles/861379/rss

A new project from Mozilla, which is meant to help researchers collect browsing data, but only with the informed consent of the browser-user, is taking a lot of heat, perhaps in part because the company can never seem to do anything right, at least in the eyes of some. Mozilla Rally was announced on June 25 as a joint venture between the company and researchers at Princeton University “to enable crowdsourced science for public good”. The idea is that users can volunteer to give academic studies access to the same kinds of browser data that are being tracked in some browsers today. Whether the privacy safeguards are strong enough, and whether there is sufficient reason for users to sign up, remains to be seen.

Post Syndicated from Curious Droid original https://www.youtube.com/watch?v=fHpsaasL5gM

Post Syndicated from Elyse Lopez original https://aws.amazon.com/blogs/architecture/top-5-featured-architecture-content-for-june/

The AWS Architecture Center provides new and notable reference architecture diagrams, vetted architecture solutions, AWS Well-Architected best practices, whitepapers, and more. This blog post features some of our top picks from the new and newly updated content we released this month.

This episode of the This is My Architecture video series explores how Taco Bell built a serverless data integration platform to create unique menus for more than 7,000 locations. Find out how this menu middleware uses Amazon Aurora combined with several other services, including AWS Amplify, AWS Lambda, Amazon API Gateway, and AWS Step Functions to create a cost-effective, scalable data pipeline.

How do you effectively implement AWS IoT workloads? This IoT Lens Checklist provides insights that we have gathered from real-world case studies. This will help you quickly learn the key design elements of Well-Architected IoT workloads. The checklist also provides recommendations for improvement.

This whitepaper provides insights and design patterns for cloud architects, data scientists, and developers. It shows you how a lake house architecture allows you to query data across your data warehouse, data lake, and operational databases. Learn how you can store data in a data lake and use a ring of purpose-built data services to quickly make decisions.

This AWS Solutions Implementation helps you automatically track resource use and avoid overspending. Managed from a centralized location, this ready-to-deploy solution provides a cost-effective way to stay within service quotas by receiving notifications — even via Slack! — before you reach the limit.

Digital services providers (DSPs) around the world are focusing on 5G development as part of upgrading their digital infrastructure. This whitepaper explains how DSPs can use different AWS tools and services to fully automate their 5G network deployment and testing and allow orchestration, closed loop use cases, edge analytics, and more.

Post Syndicated from Erick Galinkin original https://blog.rapid7.com/2021/06/30/cve-2021-1675-printnightmare-patch-does-not-remediate-vulnerability/

Vulnerability note: Members of the community including Will Dormann of CERT/CC have noted that the publicly available exploits which purport to exploit CVE-2021-1675 may in fact target a new vulnerability in the same function as CVE-2021-1675. Thus, the advisory update published by Microsoft on June 21 does not address these exploits and defenders should be on the lookout for a new patch from Microsoft in the future.

On June 8, 2021, Microsoft released an advisory and patch for CVE-2021-1675 (“PrintNightmare”), a critical vulnerability in the Windows Print Spooler. Although originally classified as a privilege escalation vulnerability, security researchers have demonstrated that the vulnerability allows authenticated users to gain remote code execution with SYSTEM-level privileges. On June 29, 2021, as proof-of-concept exploits for the vulnerability began circulating, security researchers discovered that CVE-2021-1675 is still exploitable on some systems that have been patched. As of this writing, at least 3 different proof-of-concept exploits have been made public.

Rapid7 researchers have confirmed that public exploits work against fully patched Windows Server 2019 installations. The vulnerable service is enabled by default on Windows Server, with the exception of Windows Server Core. Therefore, it is expected that in the vast majority of enterprise environments, all domain controllers, even those that are fully patched, are vulnerable to remote code execution by authenticated attackers.

The vulnerability is in the RpcAddPrinterDriver call of the Windows Print Spooler. A client uses the RPC call to add a driver to the server, storing the desired driver in a local directory or on the server via SMB. The client then allocates a DRIVER_INFO_2 object and initializes a DRIVER_CONTAINER object that contains the allocated DRIVER_INFO_2 object. The DRIVER_CONTAINER object is then used within the call to RpcAddPrinterDriver to load the driver. This driver may contain arbitrary code that will be executed with SYSTEM privileges on the victim server. This command can be executed by any user who can authenticate to the Spooler service.

Since the patch is currently not effective against the vulnerability, the most effective mitigation strategy is to disable the print spooler service itself. This should be done on all endpoints, servers, and especially domain controllers. Dedicated print servers may still be vulnerable if the spooler is not stopped. Microsoft security guidelines do not recommend disabling the service across all domain controllers, since Active Directory has no way to remove old queues that no longer exist unless the spooler service is running on at least one domain controller in each site. However, until this vulnerability is effectively patched, this should have limited impact compared to the risk.

On Windows cmd:

net stop spooler

On PowerShell:

Stop-Service -Name Spooler -Force

Set-Service -Name Spooler -StartupType Disabled

The following PowerShell command can be used to help find exploitation attempts:

Get-WinEvent -LogName 'Microsoft-Windows-PrintService/Admin' | Select-String -InputObject {$_.message} -Pattern 'The print spooler failed to load a plug-in module'

We strongly recommend that all customers disable the Windows Print Spooler service on an emergency basis to mitigate the immediate risk of exploitation. While InsightVM and Nexpose checks for CVE-2021-1675 were released earlier in June, we are currently investigating the feasibility of additional checks to determine whether the print spooler service has been disabled in customer environments.

We will update this blog as further information comes to light.

Post Syndicated from Avik Mukherjee original https://aws.amazon.com/blogs/security/aws-security-reference-architecture-a-guide-to-designing-with-aws-security-services/

Amazon Web Services (AWS) is happy to announce the publication of the AWS Security Reference Architecture (AWS SRA). This is a comprehensive set of examples, guides, and design considerations that you can use to deploy the full complement of AWS security services in a multi-account environment that you manage through AWS Organizations. The architecture and accompanying recommendations are based on our experience here at AWS with enterprise customers. The AWS SRA is built around a single-page architecture that depicts a simple three-tier web architecture, and shows you how the AWS security services help you achieve security objectives; where they are best deployed and managed in your AWS accounts; and, how they interact with other security services. The guidance aligns to AWS security foundations, including the AWS Cloud Adoption Framework (AWS CAF), AWS Well-Architected, and the AWS Shared Responsibility Model.

Security executives, architects, and engineers can use the AWS SRA to gain understanding of AWS security services and features, by seeing a more detailed explanation of the organization of the functional accounts within the architecture and the individual services within individual AWS accounts. The document and accompanying code repository can be used in two ways. First, you can use the AWS SRA as a practical guide to deploying AWS security services—beginning with foundational security guidance, discussing each service and its role in the architecture, and ending with a discussion of implementable code examples. Alternatively, the AWS SRA can serve as a starting point for defining a security architecture for your own multi-account environment. It’s designed to prompt you to consider your own security decisions. For example, you can think about how to leverage virtual private cloud (VPC) endpoints as a layer of security control, or consider which controls are managed in the application account, and how appropriate information can flow to the central security team.

The reference document is accompanied by a GitHub code repository that provides examples of how to deploy the services. The examples use common deployment platforms like Customizations for AWS Control Tower, AWS CloudFormation StackSets, and the AWS Landing Zone solution. All the example solutions are deployed with the recommended configurations and are deliberately very restrictive in order to demonstrate patterns in the AWS SRA guidance. AWS will continue to add additional example solutions for new and existing services on a regular basis.

The AWS SRA document and code repository are living artifacts and will be updated periodically, driven by new service and feature releases, customer feedback, and emerging best practices.

Here’s the core architecture diagram from the guide: the AWS SRA in its simplest form. The architecture is purposefully modular and provides a high-level abstracted view that represents a generic web application. The AWS organization and account structure follows the latest AWS guidance for using multiple AWS accounts.

Figure 1: The AWS Security Reference Architecture

The AWS SRA guidance can be used as either a narrative or a reference. The topics are organized as a story, so you can read them from the beginning (foundational security guidance) to the end (discussion of implementable code examples). Alternatively, you can navigate the document to focus on the security principles, services, account types, guidance, and examples that are most relevant to your needs.

The AWS SRA documentation has five primary sections that guide you from AWS security fundamentals to the deployment of code examples:

The AWS SRA provides a Design Considerations section for each element, which discusses optional features or configurations that might have important security implications or capture common variations in how you implement that element—typically as a result of alternate requirements or constraints.

You can refer to the AWS SRA at various stages of your migration to the AWS Cloud. During the initial phase, you can use this document to architect your own multi-account AWS environment and weave in the various security services that AWS has to offer. If you’ve been using AWS for some time, you can use the AWS SRA to evaluate your current architecture and make adjustments to improve your security posture by using the full potential of various AWS security services. If you’re in a mature stage of AWS Cloud adoption, you can use the AWS SRA to independently validate your security architecture against AWS recommended architecture.

You can’t host your workloads on paper, so the next step is to get started building out the reference architecture. You can consume the architecture and the associated code examples and combine these with your organization’s best practices, in order to start building your production grade architecture. If you need assistance, you can reach out to AWS Professional Services, your AWS account team, or the AWS Partner Network, who can work with you to translate the reference architecture into a customized AWS environment that you can then operate.

If you have feedback about this post, submit comments in the Comments section below. If you need assistance with architecting or implementing a secure AWS environment, reach out to AWS Professional Services.

Want more AWS Security how-to content, news, and feature announcements? Follow us on Twitter.

Post Syndicated from Jeff Barr original https://aws.amazon.com/blogs/aws/prime-day-2021-two-chart-topping-days/

In what has now become an annual tradition (check out my 2016, 2017, 2019, and 2020 posts for a look back), I am happy to share some of the metrics from this year’s Prime Day and to tell you how AWS helped to make it happen.

This year I bought all sorts of useful goodies including a Toshiba 43 Inch Smart TV that I plan to use as a MagicMirror, some watering cans, and a Dremel Rotary Tool Kit for my workshop.

Powered by AWS

As in years past, AWS played a critical role in making Prime Day a success. A multitude of two-pizza teams worked together to make sure that every part of our infrastructure was scaled, tested, and ready to serve our customers. Here are a few examples:

Amazon EC2 – Our internal measure of compute power is an NI, or a normalized instance. We use this unit to allow us to make meaningful comparisons across different types and sizes of EC2 instances. For Prime Day 2021, we increased our number of NIs by 12.5%. Interestingly enough, due to increased efficiency (more on that in a moment), we actually used about 6,000 fewer physical servers than we did on Cyber Monday 2020.

Graviton2 Instances – Graviton2-powered EC2 instances supported 12 core retail services. This was our first peak event that was supported at scale by the AWS Graviton2 instances, and is a strong indicator that the Arm architecture is well-suited to the data center.

An internal service called Datapath is a key part of the Amazon site. It is highly optimized for our peculiar needs, and supports lookups, queries, and joins across structured blobs of data. After an in-depth evaluation and consideration of all of the alternatives, the team decided to port Datapath to Graviton and to run it on a three-Region cluster composed of over 53,200 C6g instances.

At this scale, the price-performance advantage of the Graviton2 of up to 40% versus the comparable fifth-generation x86-based instances, along with the 20% lower cost, turns into a big win for us and for our customers. As a bonus, the power efficiency of the Graviton2 helps us to achieve our goals for addressing climate change. If you are thinking about moving your workloads to Graviton2, be sure to study our very detailed Getting Started with AWS Graviton2 document, and also consider entering the Graviton Challenge! You can also use Graviton2 database instances on Amazon RDS and Amazon Aurora; read about Key Considerations in Moving to Graviton2 for Amazon RDS and Amazon Aurora Databases to learn more.

Amazon CloudFront – Fast, efficient content delivery is essential when millions of customers are shopping and making purchases. Amazon CloudFront handled a peak load of over 290 million HTTP requests per minute, for a total of over 600 billion HTTP requests during Prime Day.

Amazon Simple Queue Service – The fulfillment process for every order depends on Amazon Simple Queue Service (SQS). This year, traffic set a new record, processing 47.7 million messages per second at the peak.

Amazon Elastic Block Store – In preparation for Prime Day, the team added 159 petabytes of EBS storage. The resulting fleet handled 11.1 trillion requests per day and transferred 614 petabytes per day.

Amazon Aurora – Amazon Fulfillment Technologies (AFT) powers physical fulfillment for purchases made on Amazon. On Prime Day, 3,715 instances of AFT’s PostgreSQL-compatible edition of Amazon Aurora processed 233 billion transactions, stored 1,595 terabytes of data, and transferred 615 terabytes of data.

Amazon DynamoDB – DynamoDB powers multiple high-traffic Amazon properties and systems including Alexa, the Amazon.com sites, and all Amazon fulfillment centers. Over the course of the 66-hour Prime Day, these sources made trillions of API calls while maintaining high availability with single-digit millisecond performance, and peaking at 89.2 million requests per second.

Prepare to Scale

As I have detailed in my previous posts, rigorous preparation is key to the success of Prime Day and our other large-scale events. If you are preparing for a similar event of your own, I can heartily recommend AWS Infrastructure Event Management. As part of an IEM engagement, AWS experts will provide you with architectural and operational guidance that will help you to execute your event with confidence.

— Jeff;

Post Syndicated from Cody Penta original https://aws.amazon.com/blogs/big-data/build-a-real-time-streaming-analytics-pipeline-with-the-aws-cdk/

A recurring business problem is achieving the ability to capture data in near-real time to act upon any significant event close to the moment it happens. For example, you may want to tap into a data stream and monitor any anomalies that need to be addressed immediately rather than during a nightly batch. Building these types of solutions from scratch can be complex; you’re dealing with configuring a cluster of nodes as well as onboarding the application to utilize the cluster. Not only that, but maintaining, patching, and upgrading these clusters takes valuable time and effort away from business-impacting goals.

In this post, we look at how we can use managed services such as Amazon Kinesis to handle our incoming data streams while AWS handles the undifferentiated heavy lifting of managing the infrastructure, and how we can use the AWS Cloud Development Kit (AWS CDK) to provision, build, and reason about our infrastructure.

The following diagram illustrates our real-time streaming data analytics architecture.

This architecture has two main modules, a hot and a cold module, both of which build off an Amazon Kinesis Data Streams stream that receives end-user transactions. Our hot module has an Amazon Kinesis Data Analytics app listening in on the stream for any abnormally high values. If an anomaly is detected, Kinesis Data Analytics invokes our AWS Lambda function with the abnormal payload. The function fans out the payload to Amazon Simple Notification Service (Amazon SNS), which notifies anybody subscribed, and stores the abnormal payload into Amazon DynamoDB for later analysis by a custom web application.

Our cold module has an Amazon Kinesis Data Firehose delivery stream that reads the raw data off of our stream, compresses it, and stores it in Amazon Simple Storage Service (Amazon S3) to later run complex analytical queries against our raw data. We use the higher-level abstractions that the AWS CDK provides to help onboard and provision the necessary infrastructure to start processing the stream.

Before we begin, a quick note about the levels of abstraction the AWS CDK provides. The AWS CDK revolves around a fundamental building block called a construct. These constructs have three abstraction levels:

You can mix these levels of abstractions, as we see in the upcoming code.

We can accomplish this architecture with a series of brief steps:

For this walkthrough, you should have the following prerequisites:

We can bootstrap an AWS CDK project by installing the AWS CDK CLI tool through our preferred node dependency manager.

Install the CLI with npm install -g aws-cdk or yarn global add aws-cdk. Then create a project directory, move into it, and initialize the app:

mkdir <project-name>

cd <project-name>

cdk init app --language [javascript|typescript|java|python|csharp|go]

For example, cdk init app --language typescript. Following these steps builds the initial structure of your AWS CDK project.

Let’s get started with the root stream. We use this stream as a baseline to build our hot and cold modules. For this root stream, we use Kinesis Data Streams because it provides us the capability to capture, process, and store data streams in a reliable and scalable manner. Making this stream with the AWS CDK is quite easy. It’s one line of code:

It’s only one line because the AWS CDK has a concept of sensible defaults. If we want to override these defaults, we explicitly pass in a third argument, commonly known as props:

Now that our data stream is defined, we can work on our first objective, the cold module. This module intends to capture, buffer, and compress the raw data flowing through this data stream into an S3 bucket. Putting the raw data in Amazon S3 allows us to run a plethora of analytical tools on top of it to build data visualization dashboards or run ad hoc queries.

We use Kinesis Data Firehose to buffer, compress, and load data streams into Amazon S3, which serves as a data store to persist streaming data for later analysis.
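To make the "buffer, then compress" idea concrete, here is a minimal stdlib-only Python sketch of the concept. It is not how Kinesis Data Firehose is implemented or configured; the class name is hypothetical, and a simple record count stands in for whatever buffering thresholds a delivery stream is set up with.

```python
import gzip
import json
from typing import Dict, List, Optional


class BufferedCompressor:
    """Collects records and emits one gzip-compressed batch per `batch_size` records."""

    def __init__(self, batch_size: int) -> None:
        self.batch_size = batch_size
        self._pending: List[Dict] = []

    def add(self, record: Dict) -> Optional[bytes]:
        """Buffer a record; return a compressed batch once the buffer is full."""
        self._pending.append(record)
        if len(self._pending) < self.batch_size:
            return None
        return self.flush()

    def flush(self) -> bytes:
        """Compress the buffered records as newline-delimited JSON and reset the buffer."""
        body = "\n".join(json.dumps(record) for record in self._pending).encode("utf-8")
        self._pending = []
        return gzip.compress(body)


buffer = BufferedCompressor(batch_size=2)
first = buffer.add({"amount": 10})   # buffer not full yet, nothing emitted
batch = buffer.add({"amount": 20})   # second record fills the buffer
print(first, len(batch))
```

Batching before compression is what makes the stored objects compact: many small records share one gzip stream instead of each carrying its own overhead.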

In the following AWS CDK code, we plug in Kinesis Data Firehose to our stream and configure it appropriately to load data into Amazon S3. One crucial prerequisite we need to address is that services don’t talk to each other without explicit permission. So, we have to first define the IAM roles our services assume to communicate with each other along with the destination S3 bucket.

iam.Role is an L2 construct with a higher-level concept of grants. Grants abstract IAM policies to simple read and write mechanisms with the ability to add individual actions, such as kinesis:DescribeStream, if the default read permissions aren’t enough. The grant family of functions allows us to strike a delicate balance between least privilege and code maintainability. Now that we have the appropriate permission, let’s define our Kinesis Data Firehose delivery stream.

By default, the AWS CDK tries to protect you from deleting valuable data stored in Amazon S3. For development and POC purposes, we override the default with cdk.RemovalPolicy.DESTROY to appropriately clean up leftover S3 buckets:

The Cfn prefix is a good indication that we’re working with an L1 construct (direct mapping to AWS CloudFormation). Because we’re working at a lower-level API, we should be aware of the following:

addDependency() function call

Because of the differences between working with L1 and L2 constructs, it’s best to minimize interactions between them to avoid confusion. One way of doing so is defining an L2 construct yourself, if the project timeline allows it. A template can be found on GitHub.

A general guideline for being explicit about what construct depends on others, like the preceding example, is to recognize where you ask for ARNs. ARNs are only available after a resource is provisioned. Therefore, you need to ensure that resource is created before using it elsewhere.
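The ordering rule can be pictured as a dependency graph: a resource that consumes another resource's ARN has to come after it. The Python sketch below is only an analogy using the standard library's graphlib module (Python 3.9+), not AWS CDK code, and the resource names and edges are hypothetical.

```python
from graphlib import TopologicalSorter

# Hypothetical resources; each key maps to the resources whose ARNs it consumes.
dependencies = {
    "bucket": set(),
    "stream": set(),
    "firehose_role": {"bucket", "stream"},
    "delivery_stream": {"bucket", "stream", "firehose_role"},
}

# static_order() yields every resource after all of the resources it depends on.
creation_order = list(TopologicalSorter(dependencies).static_order())
print(creation_order)
```

Declaring a dependency explicitly amounts to adding an edge like the ones above, so the consumer is provisioned only after the ARN it needs exists.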

That’s it! We’ve constructed our cold pipeline! Now let’s work on the hot module.

In the previous section, we constructed a cold pipeline to capture the raw data in its entirety for ad hoc visualizations and analytics. The purpose of the hot module is to listen to the data stream for any abnormal values as data flows through it. If an odd value is detected, we should log it and alert stakeholders. For our use case, we define “abnormal” as an unusually high transaction (over 9000).
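The rule itself is simple enough to sketch in a few lines of Python. In the architecture described here, detection runs in Kinesis Data Analytics and the follow-up actions run in a Lambda function; the sketch below collapses both into plain functions purely to show the logic. The `amount` field name and the injected `log` and `alert` callables are assumptions made for illustration.

```python
from typing import Callable, Dict

ABNORMAL_THRESHOLD = 9000  # from the text: a transaction over 9000 counts as abnormal


def is_abnormal(transaction: Dict) -> bool:
    """Return True when the (hypothetical) `amount` field exceeds the threshold."""
    return transaction["amount"] > ABNORMAL_THRESHOLD


def handle_transaction(
    transaction: Dict,
    log: Callable[[Dict], None],
    alert: Callable[[str], None],
) -> bool:
    """Log and alert on abnormal transactions; ignore everything else."""
    if not is_abnormal(transaction):
        return False
    log(transaction)  # keep the full payload for later analysis
    alert(f"Abnormal transaction detected: {transaction['amount']}")
    return True


# Usage with in-memory stand-ins for the data store and the notification channel.
stored, sent = [], []
handle_transaction({"amount": 120}, stored.append, sent.append)
handle_transaction({"amount": 9500}, stored.append, sent.append)
print(stored, sent)
```

Injecting the two side effects as callables keeps the rule testable without any AWS resources.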

Databases, and whatever else sits at the end of an architecture diagram, usually appear first in AWS CDK code. Defining them first lets upstream components reference downstream values. For example, the database’s name must exist before we can provision a Lambda function that interacts with that database.

Let’s start provisioning the web app, DynamoDB table, SNS topic, and Lambda function:

In the preceding code, we define our DynamoDB table to store the entire abnormal transaction and a table viewer construct that reads our table and creates a public web app for end-users to consume. We also want to alert operators when an abnormality is detected. We can do this by constructing an SNS topic with a subscription to [email protected]. This email could be your team’s distro. Lastly, we define a Lambda function that serves as the glue between our upcoming Kinesis Data Analytics application, the DynamoDB table and SNS topic. The following is the actual code inside the Lambda function:

The AWS CDK code is similar to the way we defined our Kinesis Data Firehose delivery stream because both CfnApplication and CfnApplicationOutput are L1 constructs. There is one subtle difference here and a core benefit of using the AWS CDK even for L1 constructs: for application code, we can read in a file and render it as a string. This mechanism allows us to separate application code from infrastructure code vs. having both in a single CloudFormation template file. The following is the SQL code we wrote:

That’s it! Now we move on to deployment and testing.

To deploy our AWS CDK code, we can open up a terminal at the root of the AWS CDK project and run cdk deploy. The AWS CDK outputs a list of security-related changes, which you confirm or reject by answering yes or no.

When the AWS CDK finishes deploying, it outputs the data stream name and the Amazon CloudFront URL to our web application. Open the CloudFront URL, the Amazon S3 console (specifically the bucket that our AWS CDK provisioned), and the Python file at scripts/producer.py. The following is the content of that Python file:

The Python script is relatively rudimentary. We take the data stream name as input, construct a Kinesis client, construct a random but realistic payload using the popular faker library, and send that payload to our data stream.
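A minimal sketch of a producer along those lines follows. It is not the post’s script: to stay self-contained it swaps the faker library for the standard library’s random module, and the payload field names (transactionId, name, amount) and the helpers build_payload, send, and main are assumptions made for illustration.

```python
import json
import random
import uuid


def build_payload(rng=random):
    """Return one fake transaction; the field names are hypothetical."""
    return {
        "transactionId": str(uuid.uuid4()),
        "name": rng.choice(["Ana", "Bao", "Chidi", "Dana"]),
        # Uniform in 1..10000, so roughly one in ten exceeds the 9000 threshold.
        "amount": rng.randint(1, 10000),
    }


def send(client, stream_name, payload):
    """Put one JSON-encoded record on the stream and return the response."""
    return client.put_record(
        StreamName=stream_name,
        Data=json.dumps(payload).encode("utf-8"),
        PartitionKey=payload["transactionId"],
    )


def main():
    # Imported here so the helpers above work without the AWS SDK installed.
    import time
    import boto3

    stream_name = input("Data stream name: ")
    kinesis = boto3.client("kinesis")
    while True:
        print(send(kinesis, stream_name, build_payload()))
        time.sleep(1)
```

Calling main() requires boto3 and configured AWS credentials; it prompts for the stream name and then sends one record per second until interrupted.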

We can run this script by running python scripts/producer.py. It boots up our Kinesis Data Analytics application if it hasn’t started already and prompts you for the data stream name. After you enter the name and press Enter, you should start seeing Kinesis’s responses in your terminal.

Make sure to use python3 instead of python if your default Python command defaults to version 2. You can check your version by entering python --version in your terminal.

Leave the script running until it randomly generates a couple of high transactions. After they’re generated, you can visit the web app’s URL and see table entries for all anomalies there (as in the following screenshot).

By this time, Kinesis Data Firehose has buffered and compressed raw data from the stream and put it in Amazon S3. You can visit your S3 bucket and see your data landing inside the destination path.

To clean up any provisioned resources, you can run cdk destroy inside the AWS CDK project and confirm the deletion, and the AWS CDK takes care of cleaning up all the resources.

In this post, we built a real-time application with a secondary cold path that gathers raw data for ad hoc analysis. We used the AWS CDK to provision the core managed services that handle the undifferentiated heavy lifting of a real-time streaming application. We then layered our custom application code on top of this infrastructure to meet our specific needs and tested the flow from end to end.

We covered key code snippets in this post, but if you’d like to see the project in its entirety and deploy the solution yourself, you can visit the AWS GitHub samples repo.

Cody Penta is a Solutions Architect at Amazon Web Services and is based out of Charlotte, NC. He has a focus in security and CDK and enjoys solving the really difficult problems in the technology world. Off the clock, he loves relaxing in the mountains, coding personal projects, and gaming.

Michael Hamilton is a Solutions Architect at Amazon Web Services and is based out of Charlotte, NC. He has a focus in analytics and enjoys helping customers solve their unique use cases. When he’s not working, he loves going hiking with his wife, kids, and their German shepherd.

Post Syndicated from Talks at Google original https://www.youtube.com/watch?v=5FFGfPSsMpo

Post Syndicated from original https://lwn.net/Articles/861420/rss

Security updates have been issued by Debian (fluidsynth), Fedora (libgcrypt and tpm2-tools), Mageia (nettle, nginx, openvpn, and re2c), openSUSE (kernel, roundcubemail, and tor), Oracle (edk2, lz4, and rpm), Red Hat (389-ds:1.4, edk2, fwupd, kernel, kernel-rt, libxml2, lz4, python38:3.8 and python38-devel:3.8, rpm, ruby:2.5, ruby:2.6, and ruby:2.7), and SUSE (kernel and lua53).

Post Syndicated from boB Rudis original https://blog.rapid7.com/2021/06/30/forgerock-openam-pre-auth-remote-code-execution-vulnerability-what-you-need-to-know/

On June 29, 2021, security researcher Michael Stepankin (@artsploit) posted details of CVE-2021-35464, a pre-auth remote code execution (RCE) vulnerability in ForgeRock Access Manager identity and access management software. ForgeRock front-ends web applications and remote access solutions in many enterprises.

ForgeRock has issued Security Advisory #202104 to provide information on this vulnerability and will be updating it if and when patches are available.

The weakness exists due to unsafe object deserialization via the Jato framework, with a disturbingly diminutive proof of concept that requires a single GET/POST request for code execution:

GET /openam/oauth2/..;/ccversion/Version?jato.pageSession=<serialized_object>

ForgeRock versions below 7.0 running on Java 8 are vulnerable, and the weakness also exists in unpatched versions of the Open Identity Platform’s fork of OpenAM. ForgeRock/OIP installations running on Java 9 or higher are unaffected.

As of June 29, 2021, there are no patches for existing versions of ForgeRock Access Manager. Organizations must either upgrade to version 7.x or apply one of the following workarounds:

Option 1

Disable the VersionServlet mapping by commenting out the following section in the AM web.xml file (located in the /path/to/tomcat/webapps/openam/WEB-INF directory):

<servlet-mapping>

<servlet-name>VersionServlet</servlet-name>

<url-pattern>/ccversion/*</url-pattern>

</servlet-mapping>

To comment out the above section, apply the following changes to the web.xml file:

<!--

<servlet-mapping>

<servlet-name>VersionServlet</servlet-name>

<url-pattern>/ccversion/*</url-pattern>

</servlet-mapping>

-->

Option 2

Block access to the ccversion endpoint using a reverse proxy or other method. On Apache Tomcat, ensure that access rules cannot be bypassed using known path traversal issues: Tomcat path traversal via reverse proxy mapping.

The upgrades remove the vulnerable /ccversion HTTP endpoint along with other HTTP paths that used the vulnerable Jato framework.

As of Tuesday, June 29, 2021, Rapid7 Labs has been able to identify just over 1,000 internet-facing systems that appear to be using ForgeRock’s OpenAM solution.

All organizations running ForgeRock OpenAM versions below 7.0 (or using the latest release of the Open Identity Platform’s fork of OpenAM) are urged to prioritize upgrading or applying the mitigations within an accelerated patch window if possible, and at the very least within the 30-day window if you are following the typical 30-60-90 day patch criticality cadence. Furthermore, organizations that are monitoring web application logs and OpenAM server logs should look for anomalous GET or POST request volume to HTTP path endpoints that include /ccversion in them.
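One way to approximate that log check is sketched below. It assumes access logs in the common or combined log format, where the client address is the first field and the request line is quoted; the function name ccversion_hits is hypothetical, and other log layouts would need a different pattern.

```python
import re
from collections import Counter

# Matches the quoted request line of a common/combined-format access log entry.
REQUEST = re.compile(r'"(GET|POST) (\S+) [^"]*"')


def ccversion_hits(lines):
    """Count GET/POST requests per client whose path contains /ccversion."""
    hits = Counter()
    for line in lines:
        match = REQUEST.search(line)
        if match and "/ccversion" in match.group(2).lower():
            client = line.split(" ", 1)[0]  # first field is the client address
            hits[client] += 1
    return hits
```

Passing an open log file to ccversion_hits yields per-client counts, and most_common() on the result surfaces the clients with unusually high request volume to that endpoint.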

For individual vulnerability analysis, see AttackerKB.

This blog post will be updated with new information as warranted.

Header image photo by Hannah Gibbs on Unsplash

Post Syndicated from original https://bivol.bg/%D1%82%D0%B2%D0%BE%D0%B9%D1%82%D0%B0-%D0%BC%D0%B0%D0%B9%D0%BA%D0%B0-%D1%81%D1%8A%D1%89%D0%BE.html

Someone smarter than me once said that nothing is more dangerous than a simple-minded but industrious person, because no one can get more foolish things done than he can. WHO can fit in…

Post Syndicated from BeardedTinker original https://www.youtube.com/watch?v=5MaqrHIS4rc

Post Syndicated from Matt Granger original https://www.youtube.com/watch?v=FvruAiFhPP8

Post Syndicated from Arwa Ginwala original https://blog.cloudflare.com/strength-in-diversity-apac-heritage-month/

In the United States, May is Asian American and Pacific Islander Heritage Month. This year, we wanted to celebrate this occasion in a more inclusive and comprehensive way, which is why we called our celebration APAC Heritage Month. This initiative was a collaboration between Desiflare and Asianflare, Cloudflare’s Employee Resource Groups (ERGs) for employees of Asian descent. We are proud to have run a diverse slate of events and content throughout this month of celebration.

Our priority for APAC Heritage Month has been to share the stories and experiences of those in our community: we hosted several segments with moderated panels to highlight our strong culture and heritage. We also took this opportunity to highlight some of the industry leaders of APAC descent around the world and to talk about their journeys and struggles, so we can learn from each other, grow, and be inspired. Although there has been progress in the past several years in the representation of APAC stories, this small handful of narratives has a hard time representing two thirds of the world. By telling a more diverse set of APAC stories (our own, stories from immigrants, and stories about food, culture, and our careers), we hope to bring visibility to our experiences and our existence.

To keep everyone safe during the pandemic, all of our external events this year have been virtual and televised on Cloudflare TV:

Check out our APAC Heritage Month landing page for the recordings of the events that occurred this month. We wanted to celebrate this month and truly embrace the culture and diversity, so please watch the recordings on Cloudflare TV and beyond. Cloudflare embraces diversity and values diverse teams. We have also taken the recommendations of our teammates and put together a Playlist to inspire you and help you dive deep into the deep-rooted culture and practices we have celebrated this month.

In the spirit of highlighting APAC voices, we also wanted to take this opportunity to share a little about how our Asianflare and Desiflare Employee Resource Groups (ERGs) got started.

In March 2020, I (Jade) was having lunch with my colleague Stanley. We were venting to each other about the family of four who had been attacked in a grocery store parking lot in Texas. A few other co-workers joined the conversation, and we got to talk about how it echoed experiences of growing up as a minority in the US with Asian heritage.

That day turned out to be one of the last times we would physically be together in an office.

Stanley and I created Asianflare, the employee resource group for Asian heritage at Cloudflare, when we realized that we needed a space to serve as a support group. Surely, we weren’t the only ones who needed an emotional outlet about current events that impact our demographic. And we had a feeling that things were going to get worse before they got better. In the beginning, we just needed a safe space to share our experiences with each other. And as lockdowns began at offices around the world, the Asianflare community became a real social hub as casual office chatter vanished into the ether.

The community blossomed with every food photo, every music or movie recommendation, every article discussion. We celebrated festivals together, held Zoom Lo Hei (“Prosperity Toss”) in multiple time zones, and held fireside chats on everything from career advice to public policy. Remember when WeChat and TikTok looked like they might be banned in the U.S.? We organized an internal fireside chat with Alissa Starzak, our public policy expert, to answer our questions on what to expect, especially those of us who feared getting cut off from our friends and family. On the average day, though, about 80% of our conversations are about food.

In March 2021, amidst the background radiation of escalating anti-Asian hate, a shooter killed eight people in Atlanta, six of whom were Asian women. What changed this time was the social support structures we had in place. We have a community that can grieve together, just as much as we celebrate together. Our People Team connected us with group therapy sessions offered by one of our benefits providers. At the BEER meeting our CEO, Matthew Prince, not only brought awareness to the issues and what our community was experiencing, but also offered our physical security team’s help. Even when I went outside, the flags were flying at half-mast. Colleagues I hadn’t heard from in ages reached out to make sure we were OK.

I know now that a kid like me growing up today would not see their families’ experiences swept under the rug, because our experiences are a part of the conversation now.

At the San Francisco office in 2019, we started to notice a sizable number of both folks of South Asian origin and folks with a deep interest in South Asian culture. So during Diwali (a festival of lights), we decided to have a small lunch get-together. The precursor to Desiflare was thus born in a room with 25 people congregating together for a commemorative vegetarian meal. The success of this small event led to monthly lunch meetings and eventually the formalization of the Desiflare ERG. Given the sizable Desi presence across all of our offices and the expansive interest we’ve seen in Desi culture across Cloudflare, today we see our ERG heavily represented around the world, especially in San Francisco, Austin, NYC, London, and Singapore!

Our Mission is to “Foster a sense of belonging and community amongst Cloudflare employees with an interest in the rich South Asian Culture as a platform to bring people together.”

We welcome everyone who identifies with or is otherwise interested in South Asian culture and look forward to welcoming all into our Desi community! While we are bound by the common fabric of South Asia, we realize that South Asia is vast and varied. Our shared body of culture embraces a breadth of diverse traditions, cuisines, habits, and beliefs, which is only magnified by the variation within the Desi diaspora around the world.

We therefore aspire to embrace and learn about each other to make the Desiflare ERG a place where all feel welcome!

Post Syndicated from Jon Rolfe original https://blog.cloudflare.com/ten-new-cities-four-new-countries/

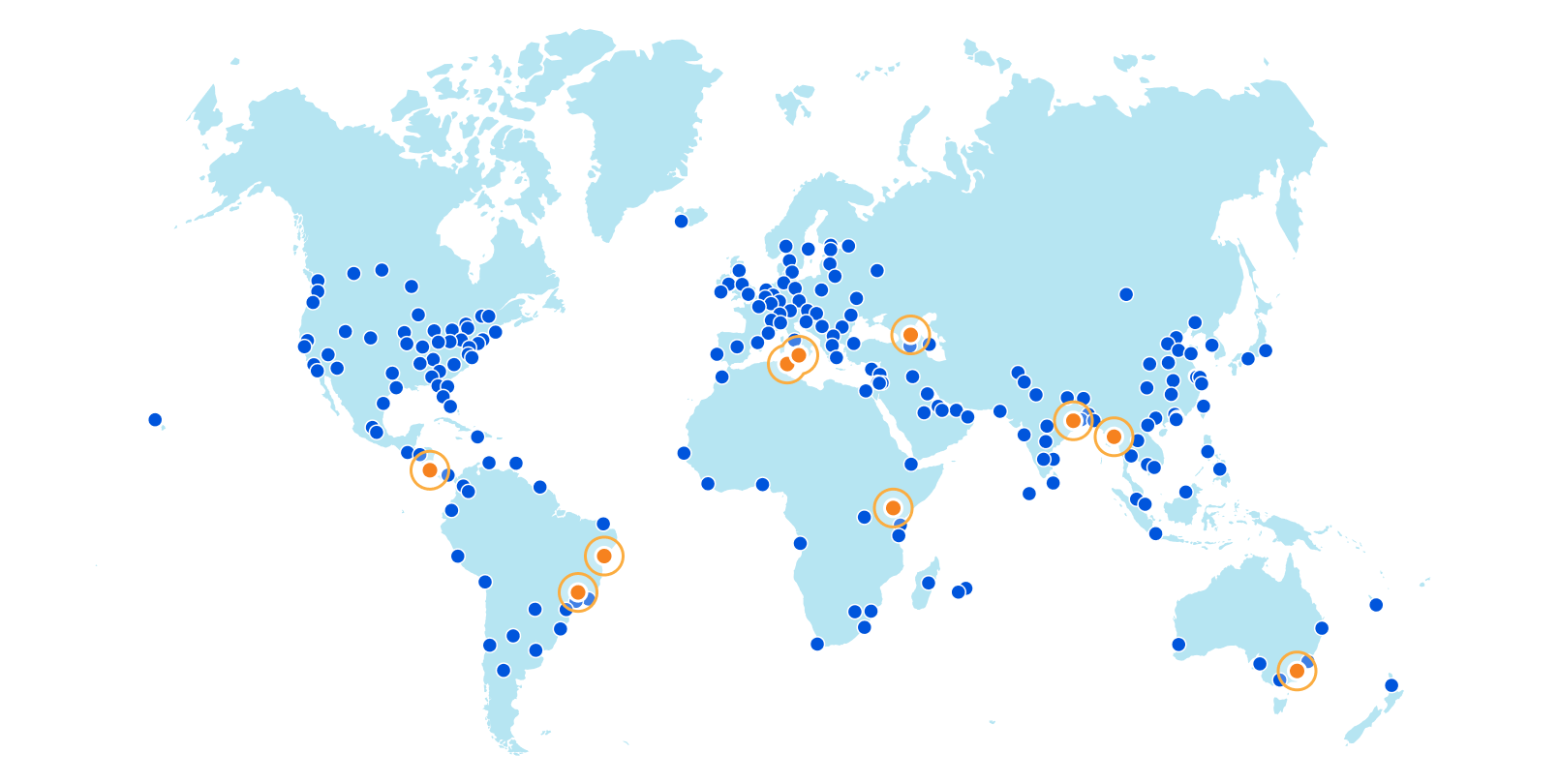

Cloudflare’s global network is always expanding, and 2021 has been no exception. Today, I’m happy to give a mid-year update: we’ve added ten new Cloudflare cities, with four new countries represented among them. And we’ve doubled our computational footprint since the start of pandemic-related lockdowns.

No matter what else we do at Cloudflare, constant expansion of our infrastructure to new places is a requirement to help build a better Internet. 2021, like 2020, has been a difficult time to be a global network — from semiconductor shortages to supply-chain disruptions — but regardless, we have continued to expand throughout the entire globe, experimenting with technologies like ARM, ASICs, and Nvidia all the way.

Without further ado, here are the new Cloudflare cities: Tbilisi, Georgia; San José, Costa Rica; Tunis, Tunisia; Yangon, Myanmar; Nairobi, Kenya; Jashore, Bangladesh; Canberra, Australia; Palermo, Italy; and Salvador and Campinas, Brazil.

These deployments are spread across every continent except Antarctica.

We’ve solidified our presence in every country of the Caucasus with our first deployment in the country of Georgia in the capital city of Tbilisi. And on the other side of the world, we’ve also established our first deployment in Costa Rica’s capital of San José with NIC.CR, run by the Academia Nacional de Ciencias.

In the northernmost country in Africa comes another capital city deployment, this time in Tunis, bringing us one country closer to fully circling the Mediterranean Sea. Wrapping up the new country docket is our first city in Myanmar with our presence in Yangon, the country’s largest city and the capital of the Yangon Region.

Our second Kenyan city is the country’s capital, Nairobi, bringing our city count in sub-Saharan Africa to a total of fifteen. In Bangladesh, Jashore puts us in the capital of its namesake Jashore District and the third largest city in the country after Chittagong and Dhaka, both already Cloudflare cities.

In the land way down under, our Canberra deployment puts us in Australia’s capital city, located, unsurprisingly, in the Australian Capital Territory. In differently warm lands is Palermo, Italy, capital of the island of Sicily, which we already see boosting performance throughout Italy.

Finally, we’ve gone live in Salvador (capital of the state of Bahia) and Campinas, Brazil, the only city announced today that isn’t a capital. These are some of the first few steps in a larger Brazilian expansion — watch this blog for more news on that soon.

This is in addition to the dozens of new cities we’ve added in Mainland China with our partner, JD Cloud, with whom we have been working closely to quickly deploy and test new cities since last year.

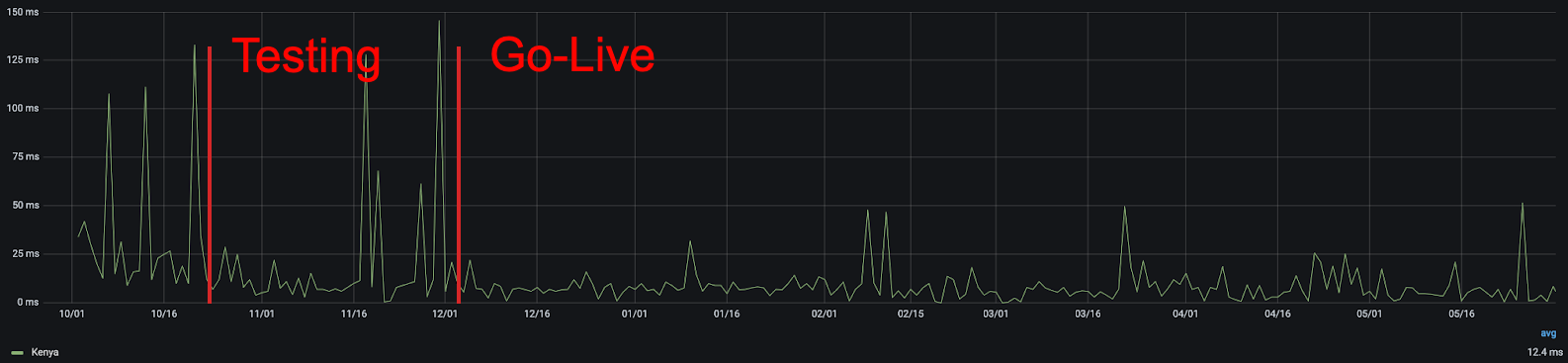

While we’re proud of our provisioning process, the work with new cities begins, not ends, with deployment. Each city is not only a new source of opportunity, but also of risk: Internet routing is fickle, and things that should improve network quality don’t always do so. While we have always had a slew of ways to track performance, we’ve found a significant, constant improvement in the 25th percentile latency of non-bot traffic to be an ideal approximation of latency impacted by only physical distance.
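To make the metric concrete, here is a small sketch of computing a 25th percentile from latency samples with Python’s standard library. The sample values are invented for illustration and are not Cloudflare measurements; the function name p25 and the choice of the inclusive quantile method are assumptions.

```python
import statistics


def p25(latencies_ms):
    """Return the 25th-percentile latency using the inclusive quantile method."""
    return statistics.quantiles(latencies_ms, n=4, method="inclusive")[0]


# Invented samples: latencies before and after a nearby city comes online.
before = [40, 42, 45, 60, 90]
after = [8, 9, 12, 30, 70]
print(p25(before), p25(after))
```

A low percentile like this is less sensitive to slow outliers than the mean, so it tracks the latency of well-connected users, which is mostly a function of distance.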

Using this metric, we can quickly see the improvement that comes from adding new cities. For example, in Kenya, we can see that the addition of our Nairobi presence improved real user performance:

Latency variations in general are expected on the Internet — particularly in countries with high amounts of Internet traffic originating from non-fixed connections, like mobile phones — but in aggregate, the more consistently low latency, the better. From this chart, you can clearly see that not only was there a reduction in latency, but also that there were fewer frustrating variations in user latency. We all get annoyed when a page loads quickly one second and slowly the next, and the lower jitter that comes with being closer to the server helps to eliminate it.

As a reminder, while these measurements are in thousandths of a second, they add up quickly. Popular sites often require hundreds of individual requests for assets, some of which are initiated serially, so the difference between 25 milliseconds and 5 milliseconds can mean the difference between single and multi-second page load times.
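The arithmetic behind that claim can be sketched as follows. The figure of 100 serial round trips is an assumption picked for illustration; real pages mix serial and parallel requests, so this is only the latency-bound portion of a load.

```python
def serial_latency_cost_s(serial_round_trips, latency_ms):
    """Seconds spent waiting on network latency for requests made one after another."""
    return serial_round_trips * latency_ms / 1000


slow = serial_latency_cost_s(100, 25)  # 100 round trips at 25 ms each
fast = serial_latency_cost_s(100, 5)   # the same round trips at 5 ms each
print(slow, fast)
```

Under these assumptions the waiting time drops from 2.5 seconds to 0.5 seconds, which is the single versus multi-second difference the paragraph describes.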

To sum things up, users in the cities or greater areas of these cities should expect an improved Internet experience when using everything from our free, private 1.1.1.1 DNS resolver to the tens of millions of Internet properties that trust Cloudflare with their traffic. We have dozens more cities in the works at any given time, including now. Watch this space for more!

Like our network, Cloudflare continues to rapidly grow. If working at a rapidly expanding, globally diverse company interests you, we’re hiring for scores of positions, including in the Infrastructure group. Or, if you work at a global ISP and would like to improve your users’ experience and be part of building a better Internet, get in touch with our Edge Partners Program and we’ll look into sending some servers your way!
