Post Syndicated from Brian Tokuyoshi original https://blog.cloudflare.com/treating-sase-anxiety

We understand that your VeloCloud deployment may be partially or even fully deployed. You may be experiencing discomfort from SASE anxiety. Symptoms include:

- Sudden vendor whiplash – Since 2017, the ownership and strategic direction of VeloCloud has undergone a series of dramatic changes. VeloCloud was acquired by VMware in 2017, then VMware was spun off from Dell Technologies in 2021, and in 2023 Broadcom completed its acquisition of VMware and VeloCloud.

- Dizziness from product names – VeloCloud helpfully published a list of some of its previous product names, which include VeloCloud, Velo, Velo SD-WAN, VeloCloud SD-WAN, and VMware SD-WAN by VeloCloud. But the list misses other names, such as “VMware NSX SD-WAN by VeloCloud”. Recently, VMware announced yet another name change, renaming VMware SD-WAN to VMware VeloCloud SD-WAN and VMware SASE to VMware VeloCloud SASE, secured by Symantec.

- Irregular priorities and strategies – VMware has repeatedly reorganized its networking and security products into different business units, and it’s now about to embark on yet another reorganization as Broadcom pursues single vendor SASE.

If you’re a VeloCloud customer, we are here to help you with your transition to Magic WAN, with planning, products and services. You’ve experienced the turbulence, and that’s why we are taking steps to help. First, we illustrate what’s fundamentally wrong with the architecture by acquisition model in order to define the right path forward. Second, we document the steps involved in making a transition from VeloCloud to Cloudflare. Third, we are offering a helping hand so VeloCloud customers can get their SASE strategies back on track.

Architecture is the key to SASE

Your IT organization must deliver stability across your information systems, because the future of your business depends on the decisions that you make today. You need to make sure that your SASE journey is backed by vendors that you can depend on. Indecisive vendors and unclear strategies rarely inspire confidence, and they are driving organizations to reconsider their vendor relationships.

It’s not just VeloCloud that’s pivoting. Many vendors are chasing the brass ring to meet the requirement for Single Vendor SASE, and they’re trying to reduce their time to market by acquiring features on their checklist, rather than taking the time to build the right architecture for consistent management and user experience. It’s led to rapid consolidation of both startups and larger product stacks, but now we’re seeing many instances of vendors having to rationalize their overlapping product lines. Strange days indeed.

But the thing is, Single Vendor SASE is not a feature checklist game. It’s not like shopping for PC antivirus software, where the most attractive option was the one with the most checkboxes. It doesn’t matter if you acquire a large stack of product acronyms (ZTNA, SD-WAN, SWG, CASB, DLP, and FWaaS, to name but a few) if the results are just as convoluted as the technology they aim to replace.

If organizations are new to SASE, then it can be difficult to know what to look for. However, one clear sign of trouble is taking an SSE designed by one vendor and combining it with SD-WAN from another, because you can’t get a converged platform out of two fundamentally incongruent technologies.

Why SASE Math Doesn’t Work

The conceptual model for SASE typically illustrates two half circles, with one consisting of cloud-delivered networking and the other being cloud-delivered security. With this picture in mind, it’s easy to see how one might think that combining an implementation of cloud-delivered networking (VeloCloud SD-WAN) and an implementation of cloud-delivered security (Symantec Network Protection – SSE) might satisfy the requirements. Does Single Vendor SASE = SD-WAN + SSE?

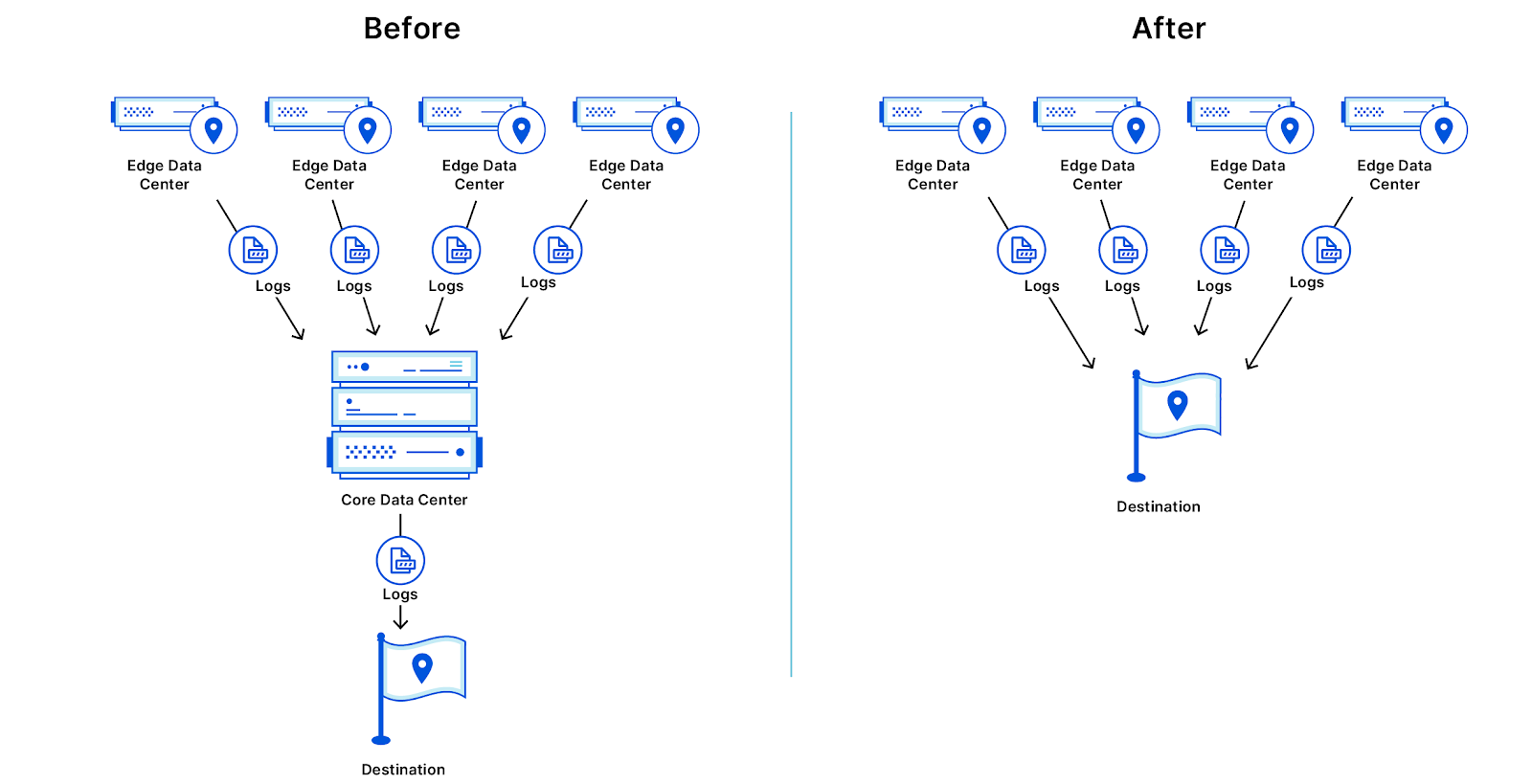

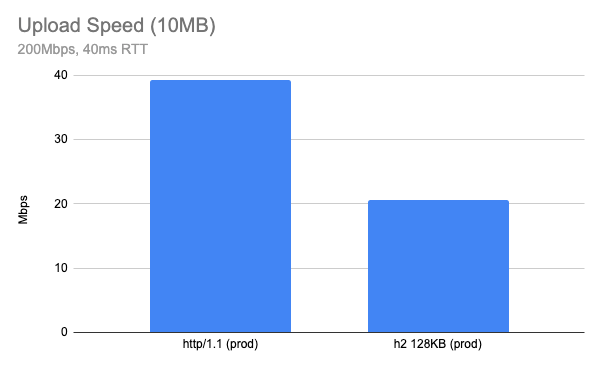

In practice, networking and network security do not exist in separate universes, but SD-WAN and SSE implementations do, especially when they were designed by different vendors. That’s why the math doesn’t work: even when the requisite SASE functionality is present, the implementations don’t fit together. SD-WAN is designed for network connectivity between sites over the SD-WAN fabric, whereas SSE largely focuses on the enforcement of security policy for user->application traffic from remote users or traffic leaving (rather than traversing) the SD-WAN fabric. Therefore, when you bring these two worlds together, you end up with inconsistent security, proxy chains that add latency, or security implemented at the edge rather than in the cloud.
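To make the proxy-chain point concrete, here is a minimal sketch that treats a path as a list of hops and sums their latencies. The hop names and millisecond values are hypothetical placeholders chosen for illustration, not measurements of any vendor’s product.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Hop:
    name: str
    latency_ms: float  # illustrative placeholder, not a measured value


def path_latency_ms(hops: list[Hop]) -> float:
    """One-way latency of a path, modeled as the sum of its hop latencies."""
    return sum(hop.latency_ms for hop in hops)


# Hypothetical chained design: the SD-WAN gateway hands traffic
# to a separately hosted SSE proxy before it reaches the application.
chained = [
    Hop("branch -> SD-WAN gateway", 15.0),
    Hop("SD-WAN gateway -> SSE proxy", 20.0),
    Hop("SSE proxy -> application", 25.0),
]

# Hypothetical single-pass design: networking and security
# are applied at the same location, so one hop disappears.
single_pass = [
    Hop("branch -> nearest data center", 15.0),
    Hop("data center -> application", 25.0),
]

extra_ms = path_latency_ms(chained) - path_latency_ms(single_pass)
print(f"chained: {path_latency_ms(chained)} ms")
print(f"single pass: {path_latency_ms(single_pass)} ms")
print(f"added by the proxy chain: {extra_ms} ms")
```

The model is deliberately simple: every additional handoff between separately hosted functions adds a term to the sum, which is the latency burden described above.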

Why Cloudflare is different

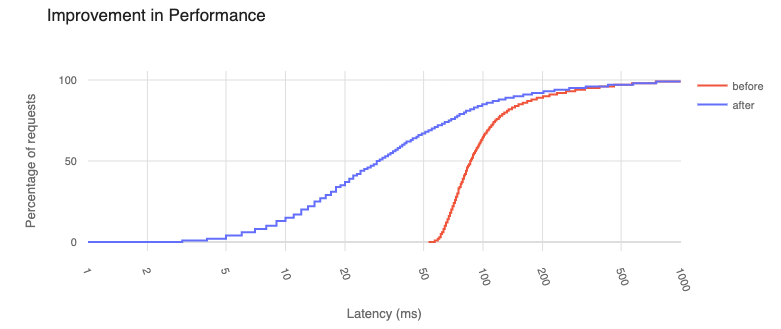

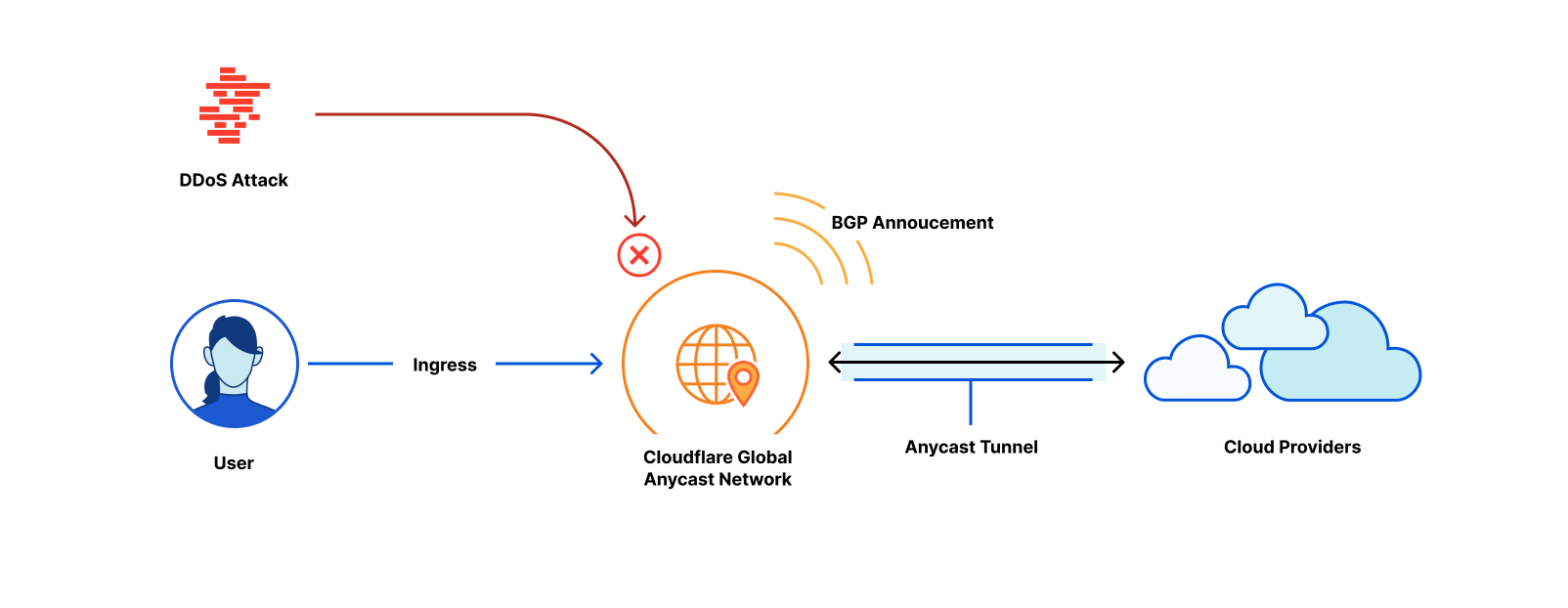

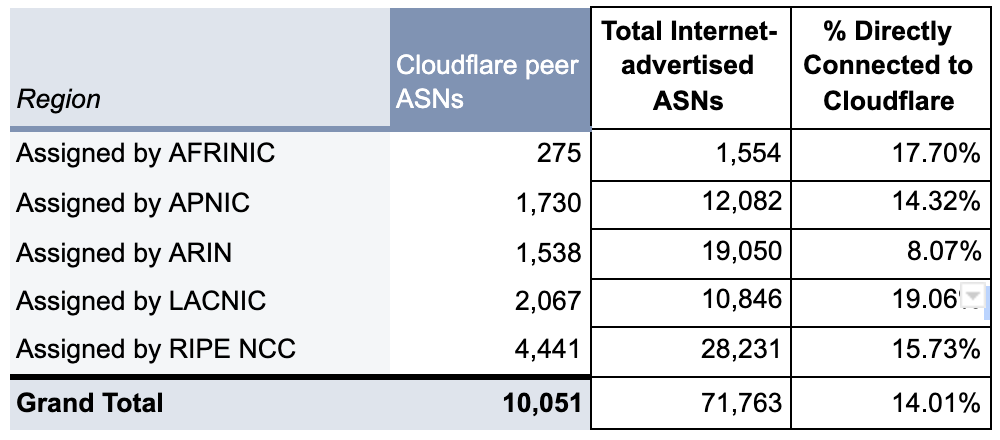

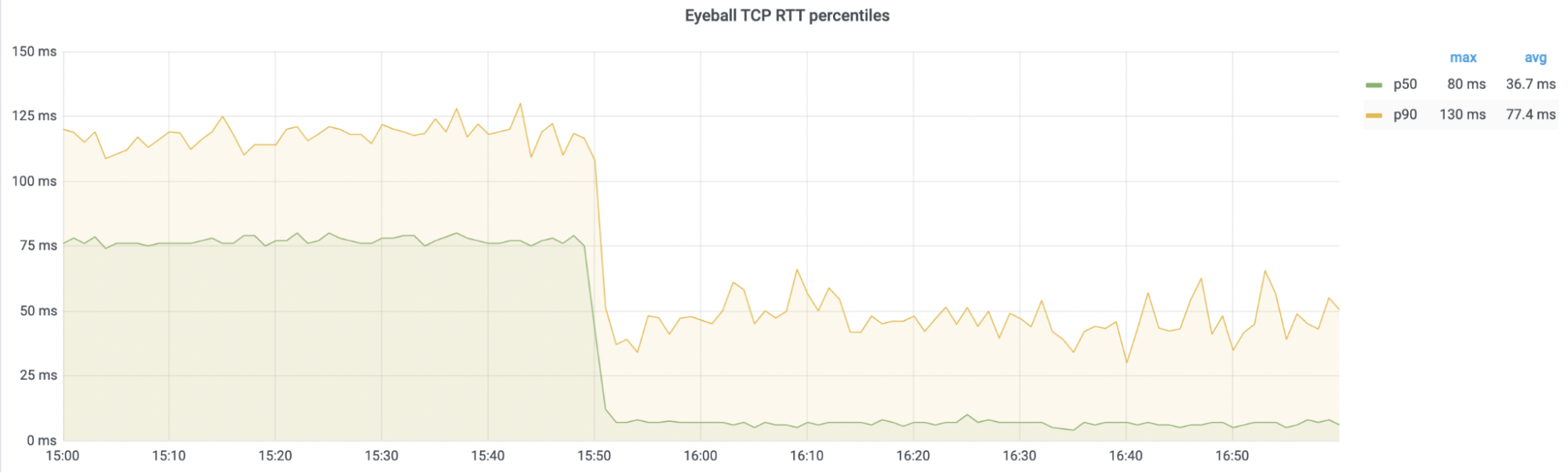

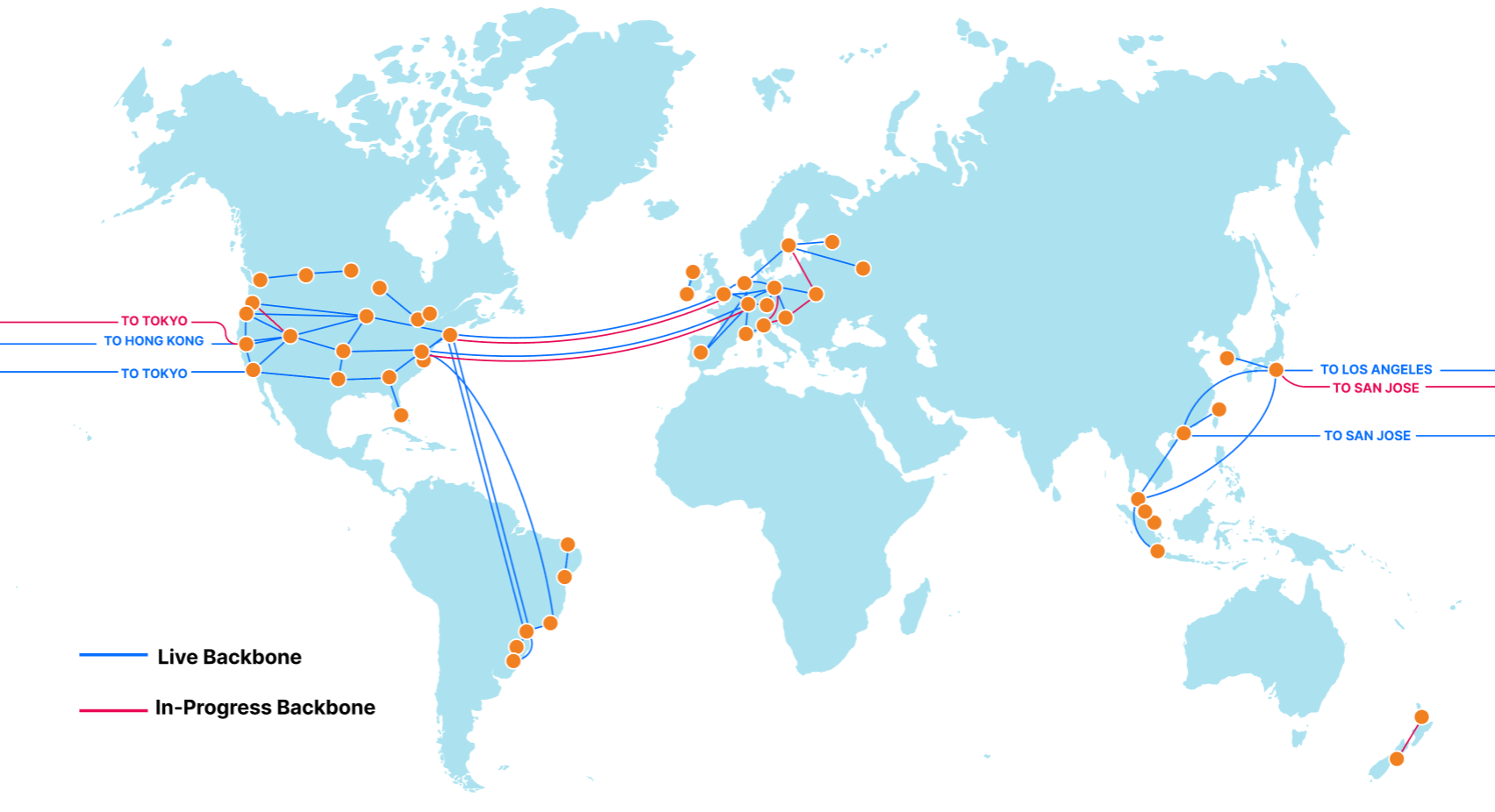

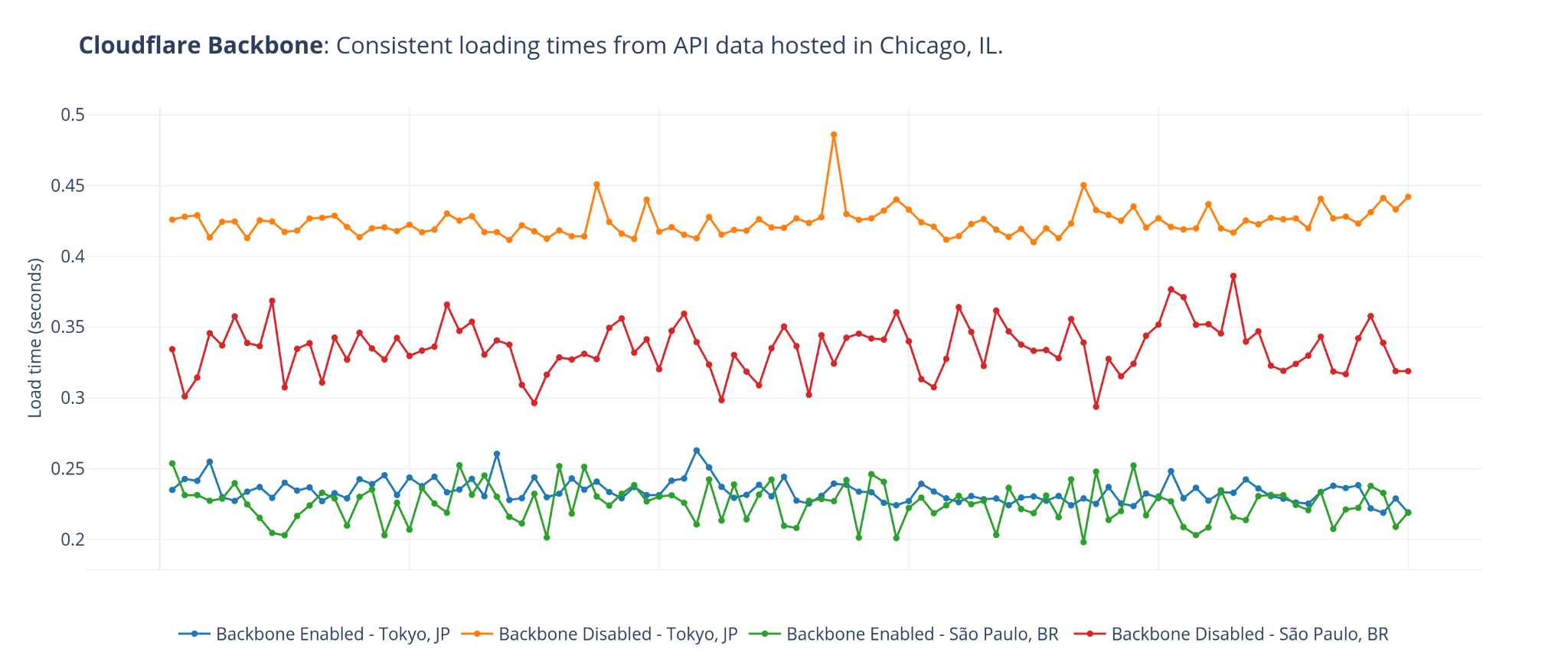

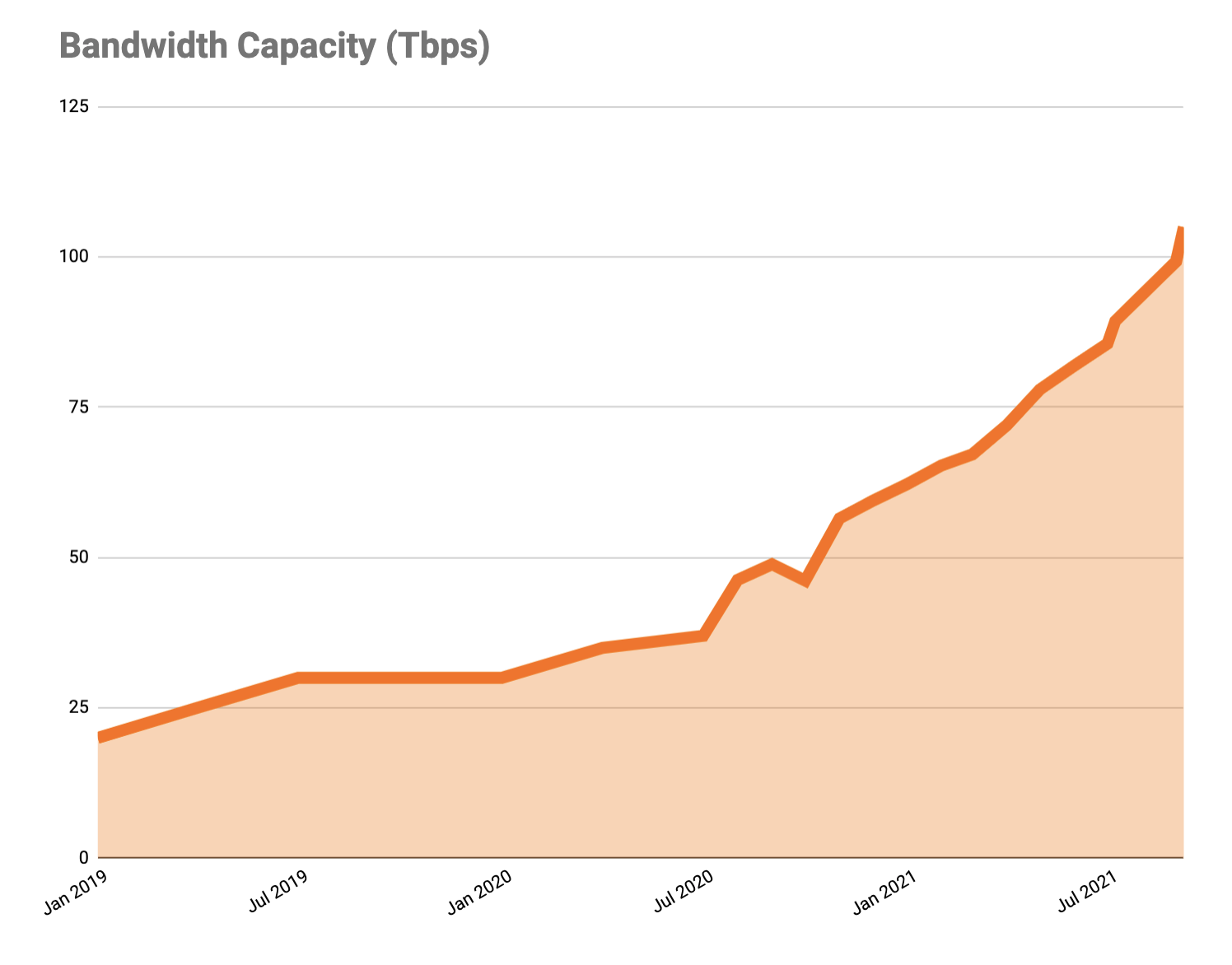

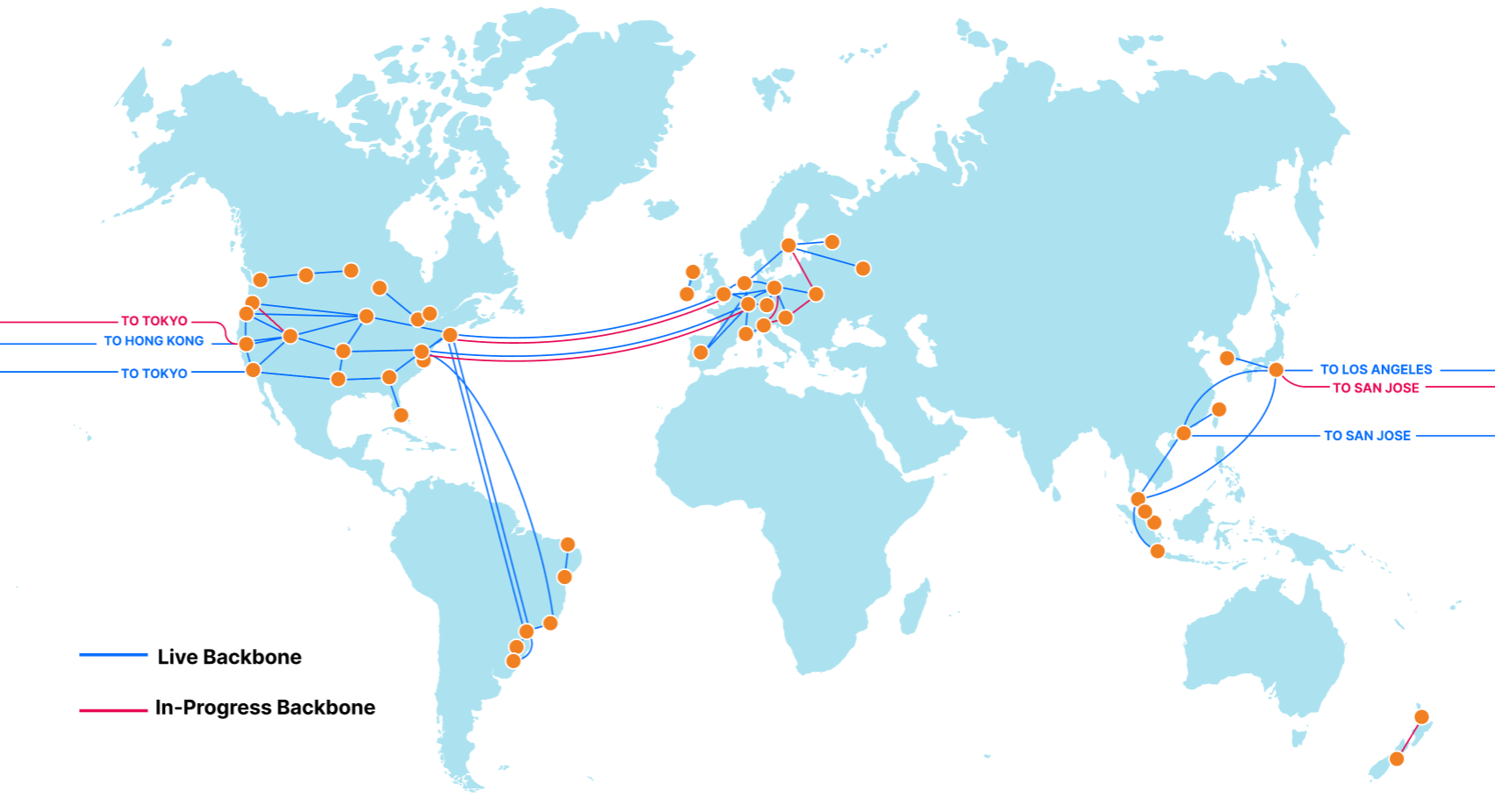

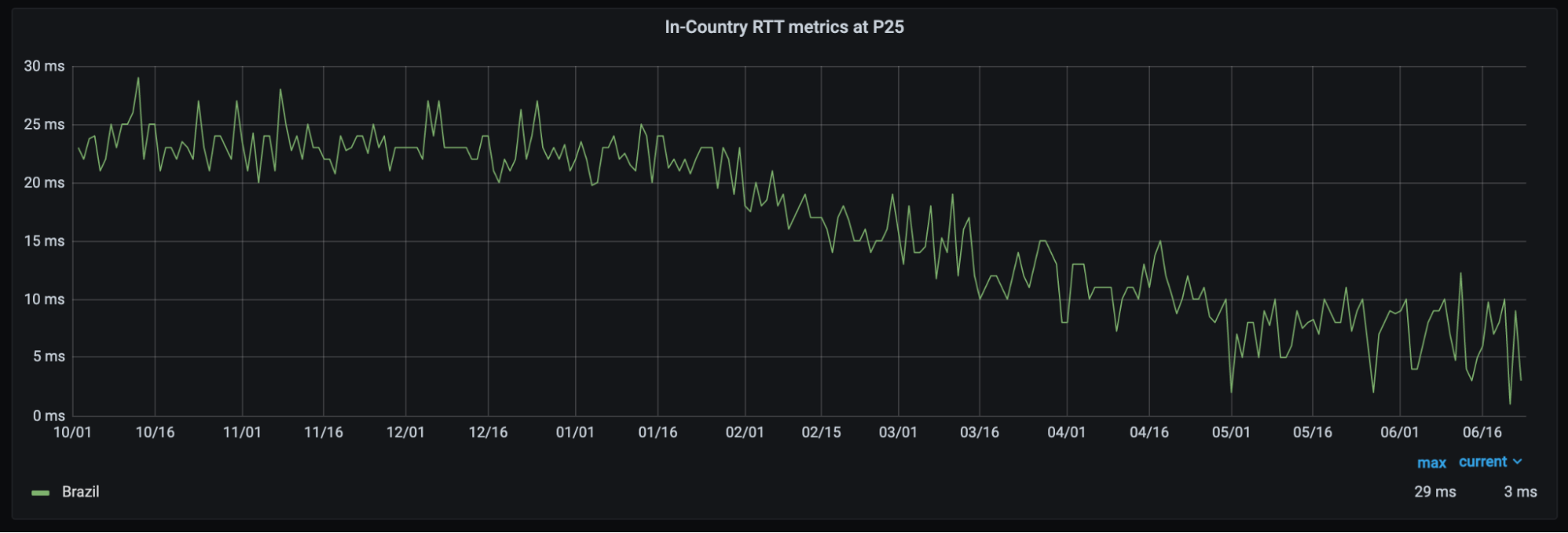

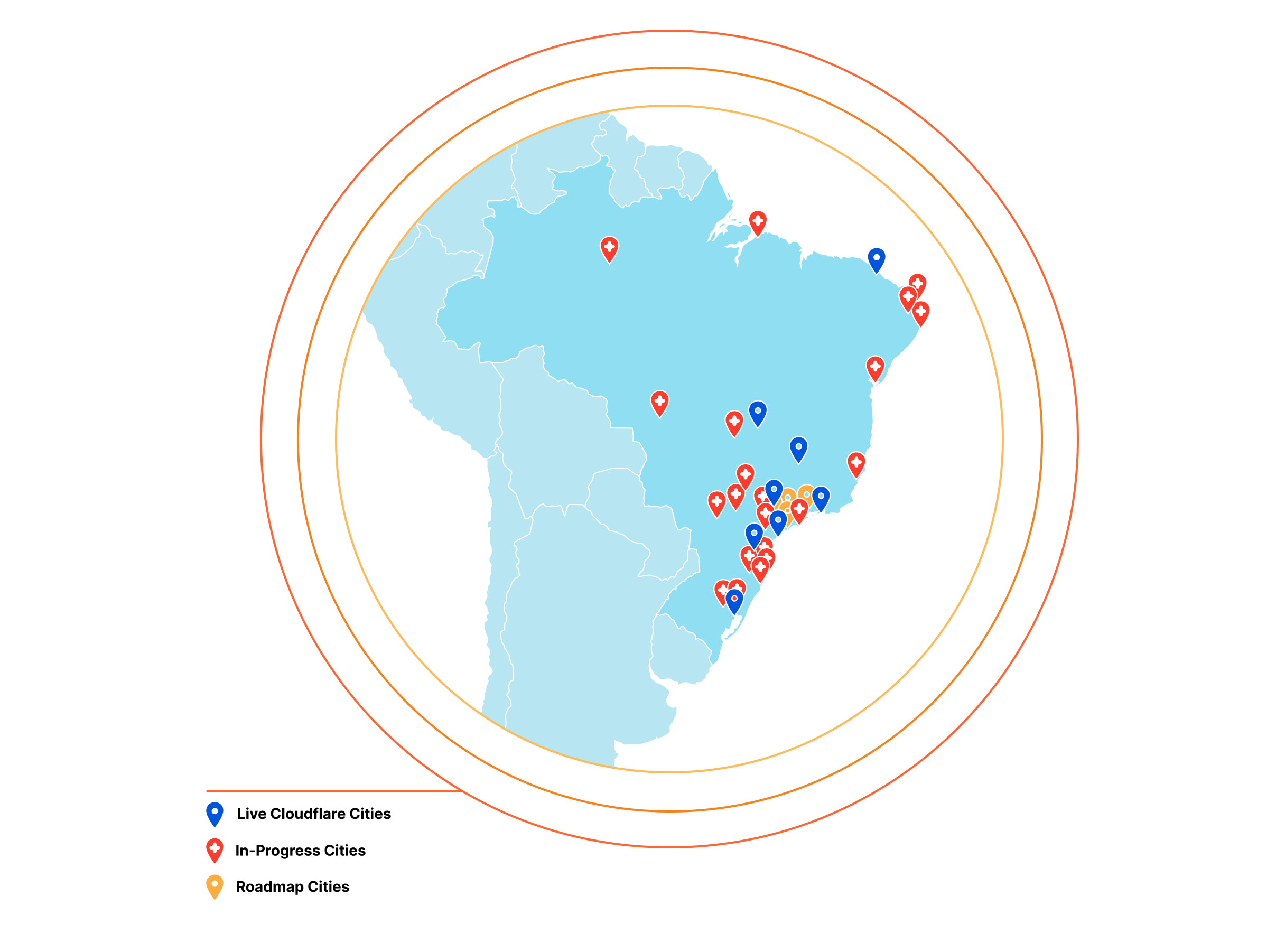

At Cloudflare, our approach to single vendor SASE starts from building a global network designed with private data centers, overprovisioned network and compute capacity, and a private backbone designed to deliver our customers’ traffic to any destination. It’s what we call any-to-any connectivity. We don’t use the public cloud for SASE services, because the public cloud was designed as a destination for traffic rather than being optimized for transit. We are in full control of the design of our data centers and network, and we’re obsessed with making it even better every day.

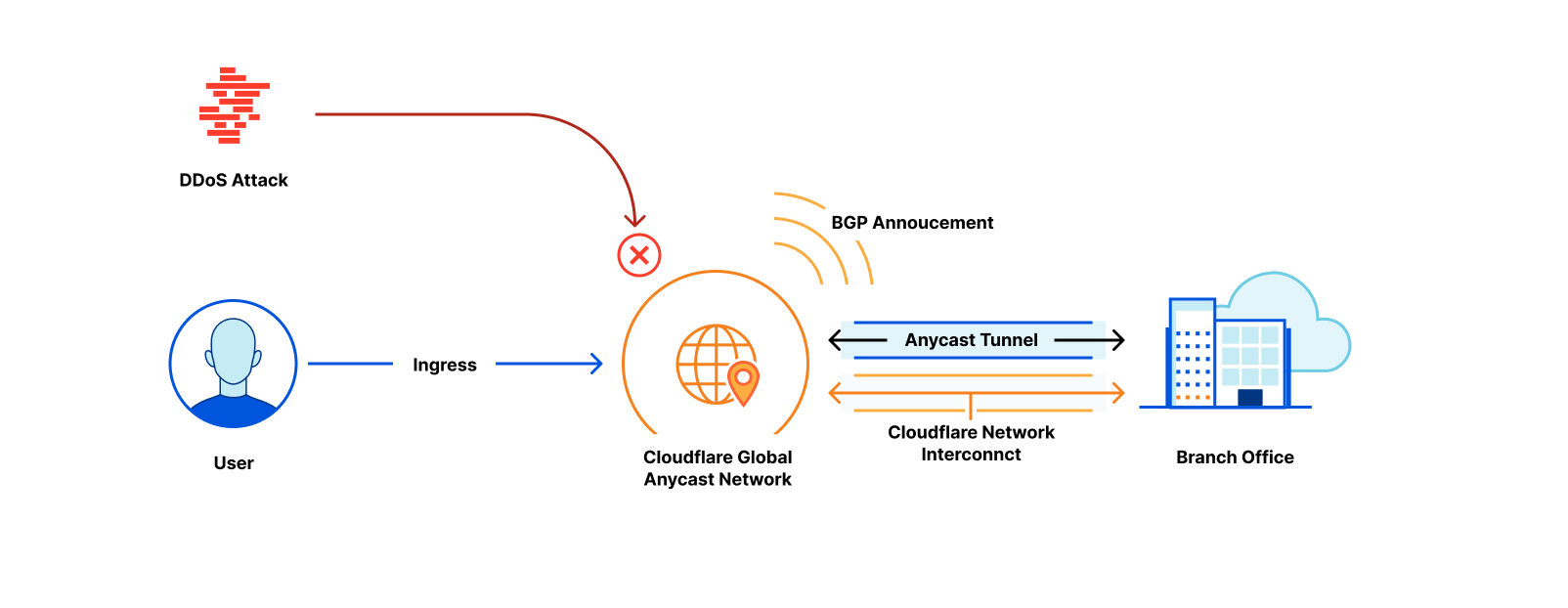

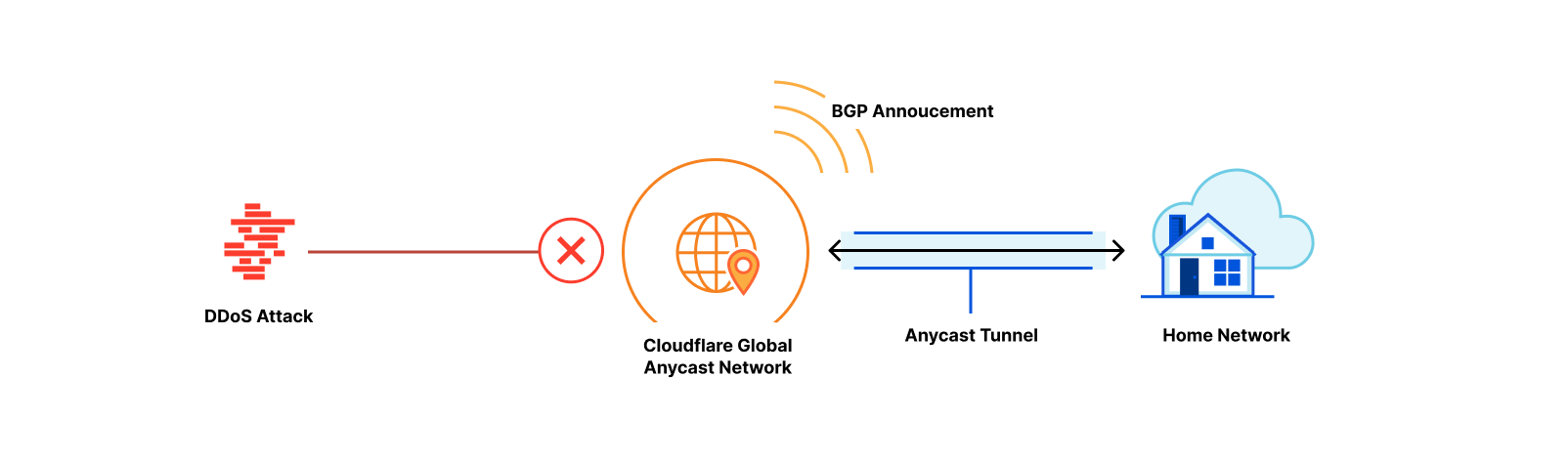

It’s from this network that we deliver networking and security services. Conceptually, we implement a philosophy of composability, where the fundamental network connection between the customer’s site and the Cloudflare data center remains the same across different use cases. In practice, and unlike traditional approaches, it means no downtime for service insertion when you need more functionality, because the connection to Cloudflare remains the same. It’s the services and the onboarding of additional destinations that change as organizations expand their use of Cloudflare.

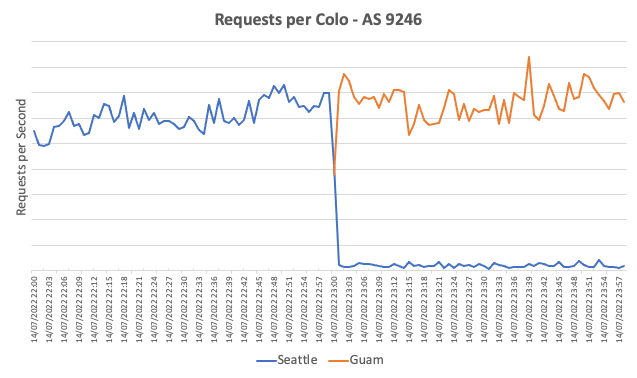

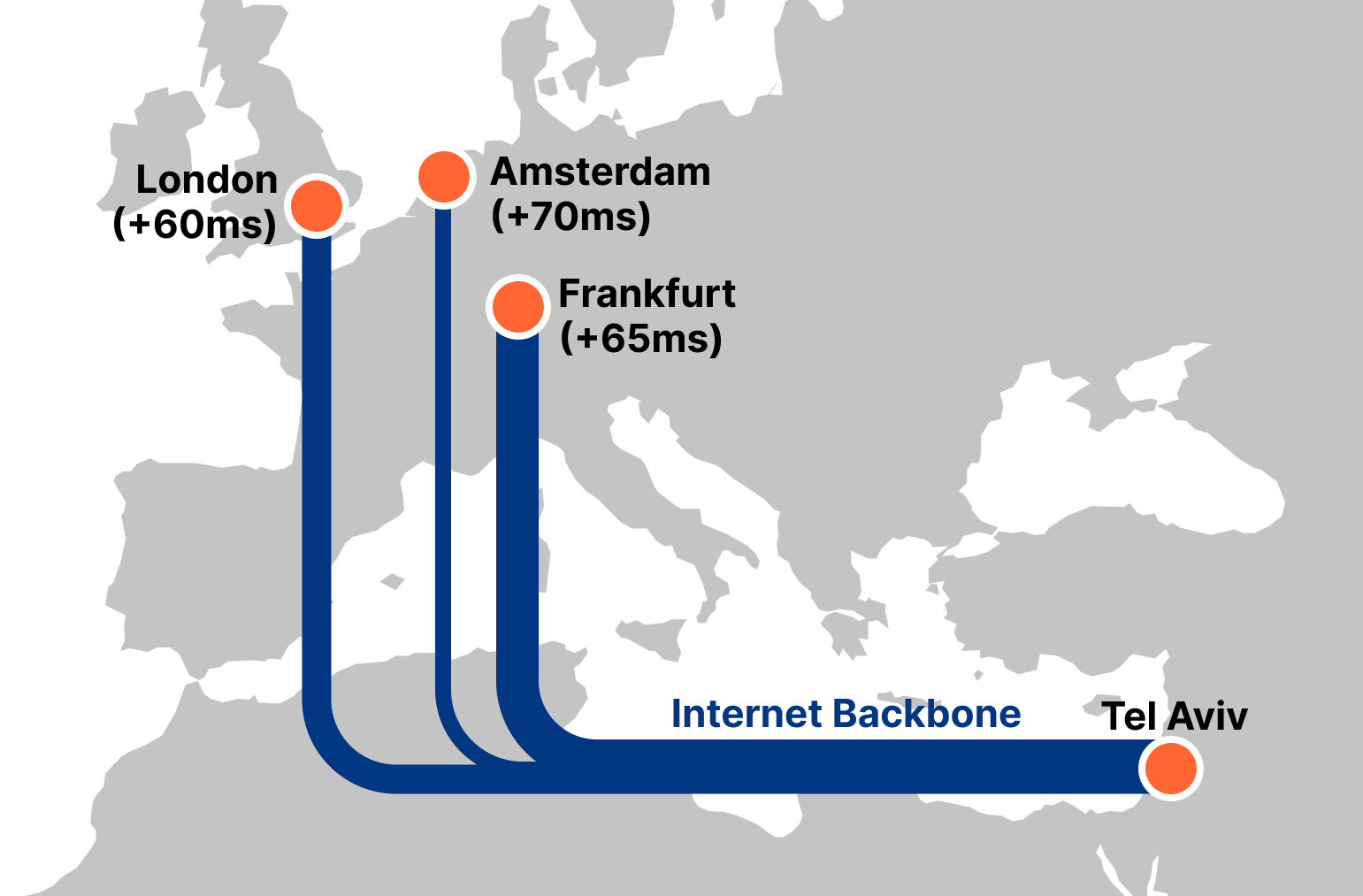

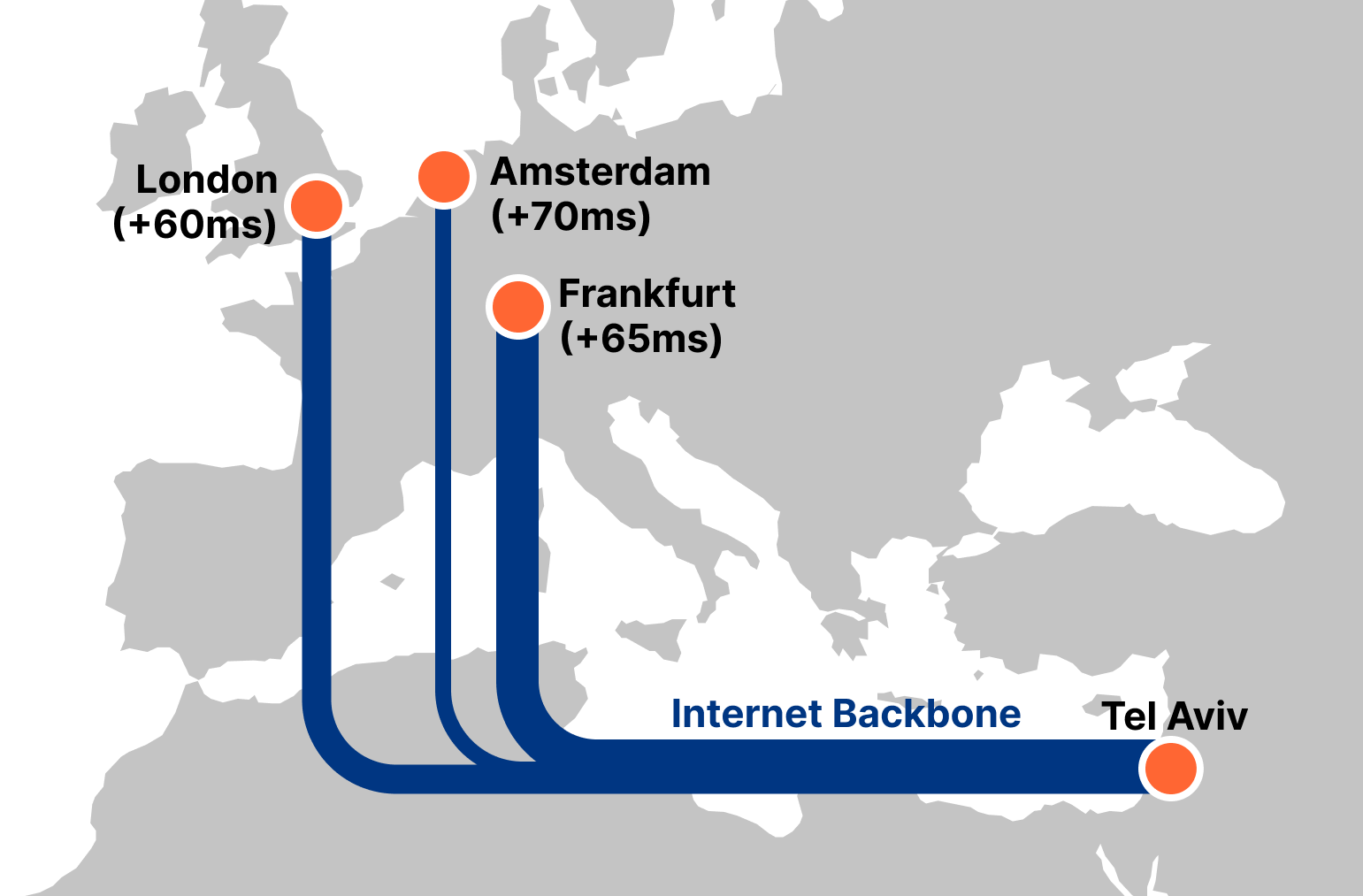

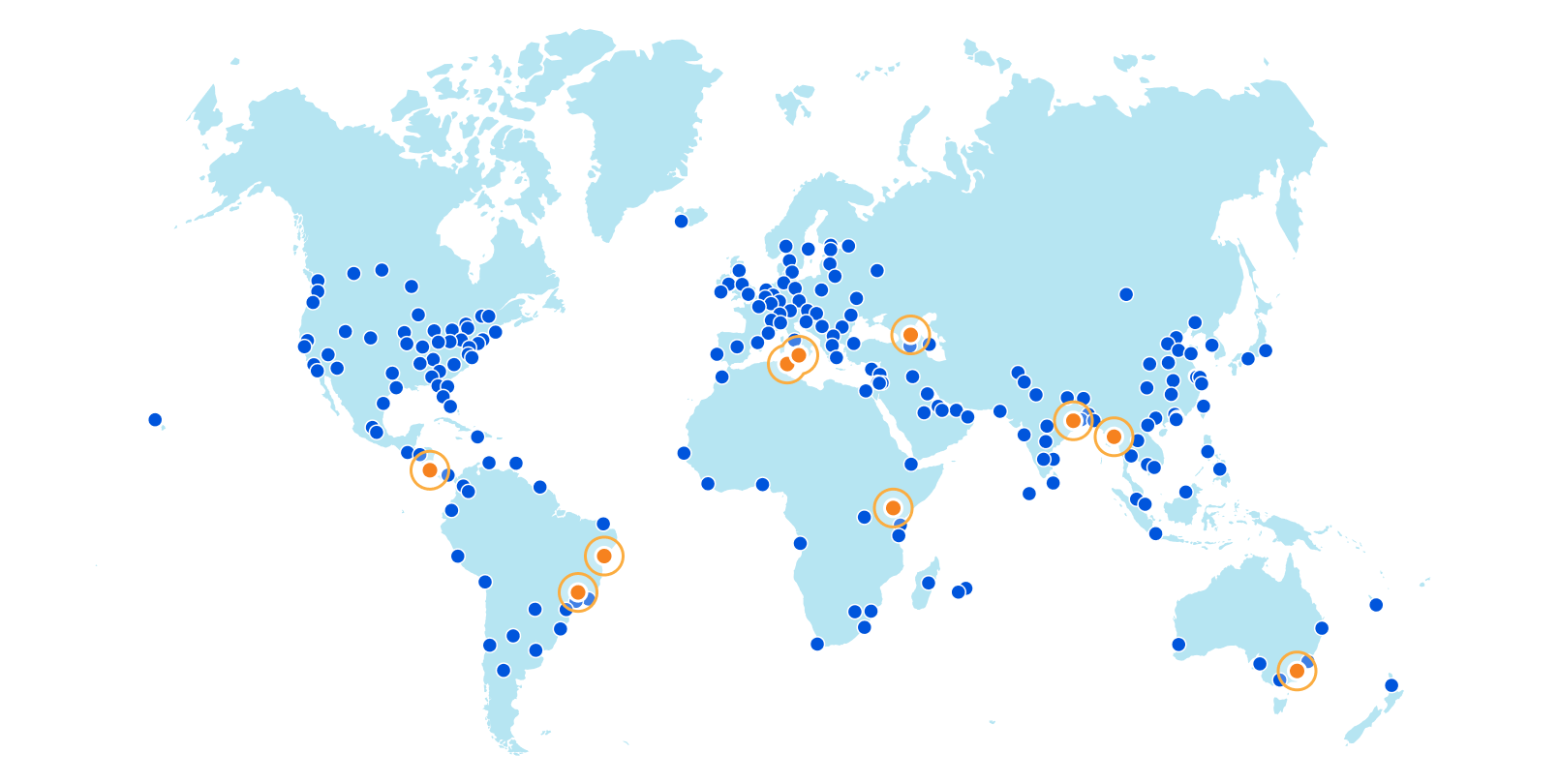

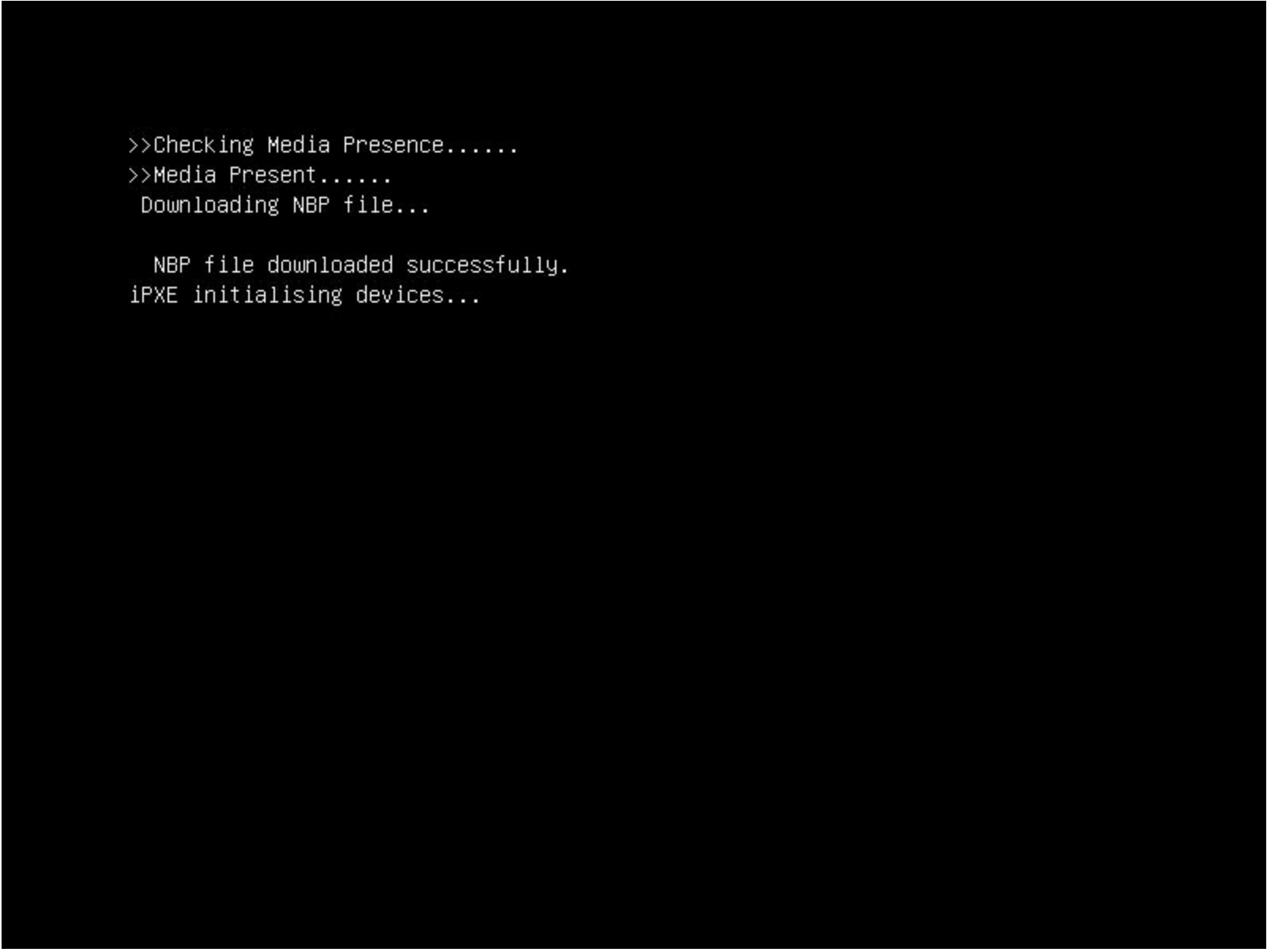

From the perspective of branch connectivity, use Magic WAN for the connectivity that ties your business together, no matter which way traffic passes. That’s because we don’t treat the directions of your network traffic as independent problems. We solve for consistency by on-ramping all traffic (whether inbound, outbound, or east-west) through one of Cloudflare’s 310+ anycasted data centers for enforcement of security policy. We solve for latency by providing full compute services in every data center, which eliminates the need to forward traffic to a separate compute location. We implement SASE using a light edge / heavy cloud model, with services delivered within the Cloudflare connectivity cloud rather than on-prem.
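The consistency argument can be sketched as a single policy function that never looks at traffic direction. This is a toy model under stated assumptions: `Flow`, `Rule`, and `evaluate` are hypothetical names invented for illustration, and nothing here represents Cloudflare’s actual policy engine or API.

```python
from dataclasses import dataclass
from enum import Enum


class Direction(Enum):
    INBOUND = "inbound"
    OUTBOUND = "outbound"
    EAST_WEST = "east-west"


@dataclass(frozen=True)
class Flow:
    direction: Direction
    src: str
    dst: str
    dst_port: int


@dataclass(frozen=True)
class Rule:
    dst_port: int
    action: str  # "allow" or "block"


def evaluate(flow: Flow, rules: list[Rule], default: str = "allow") -> str:
    """Return the action of the first rule matching the flow's port.

    The flow's direction is deliberately never consulted, so one
    rule set yields one verdict regardless of which way traffic moves.
    """
    for rule in rules:
        if rule.dst_port == flow.dst_port:
            return rule.action
    return default


# One hypothetical rule: block Telnet everywhere.
rules = [Rule(dst_port=23, action="block")]

# The same flow seen in each direction gets the same verdict.
flows = [Flow(d, "10.0.0.5", "10.0.1.9", 23) for d in Direction]
verdicts = {f.direction.value: evaluate(f, rules) for f in flows}
print(verdicts)
```

Contrast this with a stitched-together stack, where inbound, outbound, and east-west traffic may each pass through a different product with its own rule set, and keeping the verdicts aligned becomes an operational task.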

How to transition from VeloCloud to Cloudflare

Start by contacting us to get a consultation session with our solutions architecture team. Our architects specialize in network modernization and can map your SASE goals across a series of smaller projects. We’ve worked with hundreds of organizations to achieve their SASE goals with the Cloudflare connectivity cloud and can build a plan that your team can execute on.

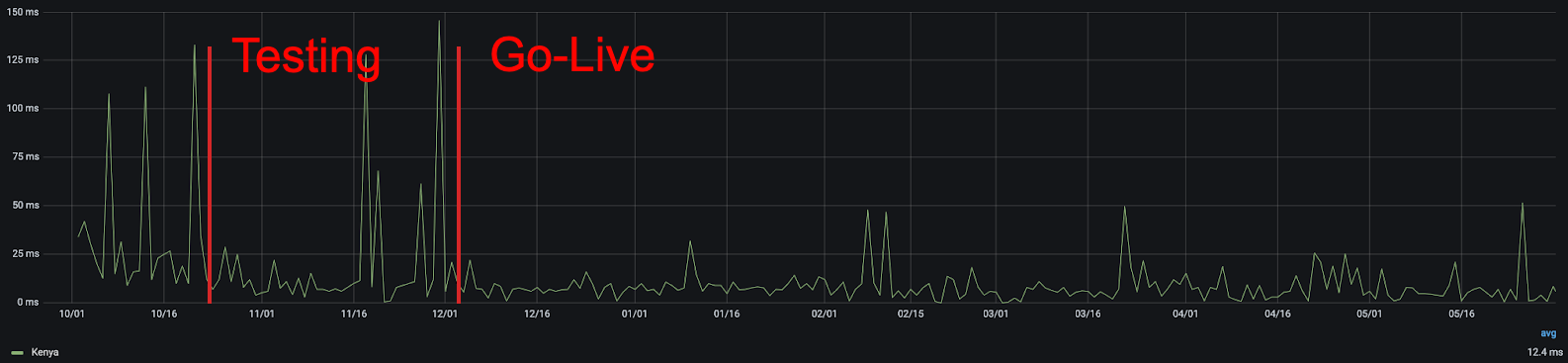

For product education, join one of our product workshops on Magic WAN to get a deep dive into how it’s built and how it can be rolled out to your locations. Magic WAN uses a light edge, heavy cloud model with multiple network insertion models: a tunnel from an existing device, our turnkey Magic WAN Connector, or a virtual appliance. These can work in parallel with, or as a replacement for, your existing branch connectivity, allowing you to migrate at your own pace. Our specialist teams can help you mitigate transitional hardware and license costs as you phase out VeloCloud and accelerate your rollout of Magic WAN.

The Magic WAN technical engineers have a number of resources to help you build product knowledge as well. These include reference architectures and quick start guides that address your organization’s connectivity goals, whether you are sizing down your on-prem network in favor of the emerging “coffee shop networking” philosophy, retiring legacy SD-WAN, or fully replacing conventional MPLS.

For services, our customer success teams are ready to support your transition, with services that are tailored specifically for Magic WAN migrations both large and small.

Your next move

Interested in learning more? Contact us to get started, and we’ll help you with your SASE journey. We’ll show you how to replace VeloCloud with Cloudflare Magic WAN and use our network as an extension of yours.