Post Syndicated from James Allworth original https://blog.cloudflare.com/birthday-week-cloudflare-turns-10/

2020 marks a major milestone for Cloudflare: it’s our 10th birthday.

We’ve always used birthdays as an opportunity to give back to the Internet. But this year — a year in which the Internet has been so central to giving us all some degree of connectedness and normalcy — it feels like giving back to the Internet has been more important than ever.

And while we couldn’t celebrate in person, we were humbled by some of the incredible minds that joined us online to talk about how the Internet has changed over the last ten years — and what we might see over the next ten.

With that, let’s recap the key announcements from Birthday Week 2020.

Day 1, Monday: Workers

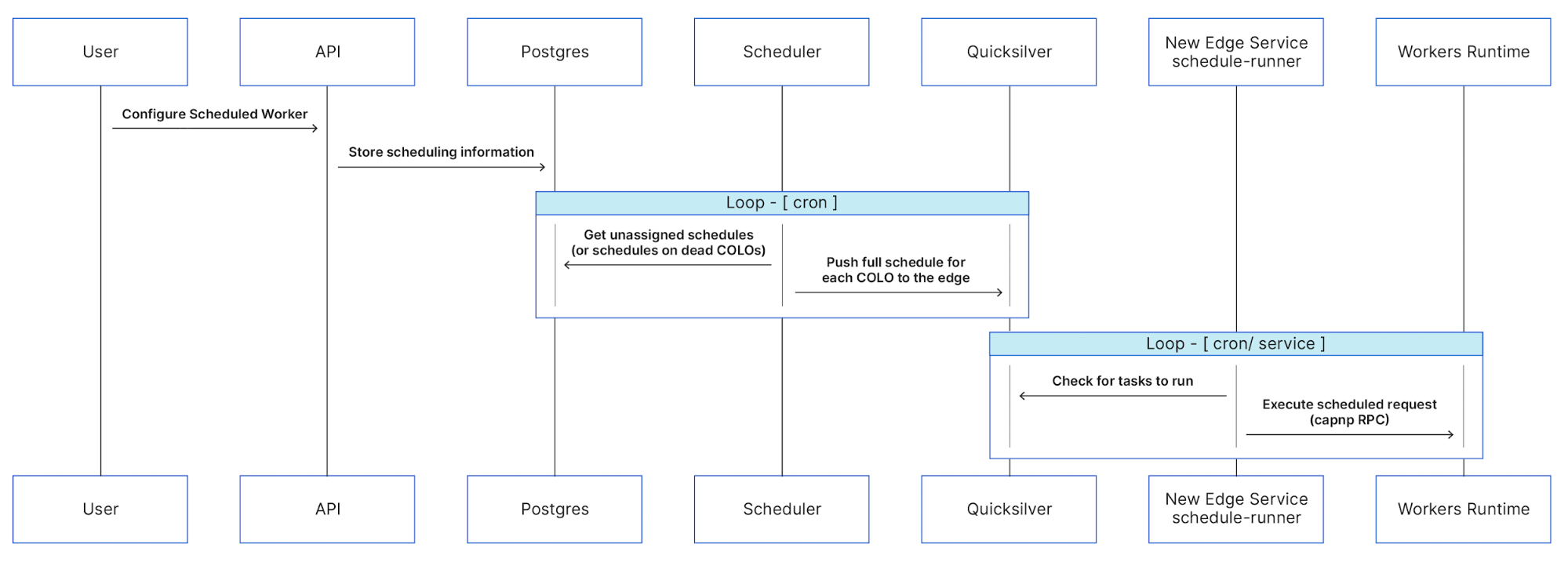

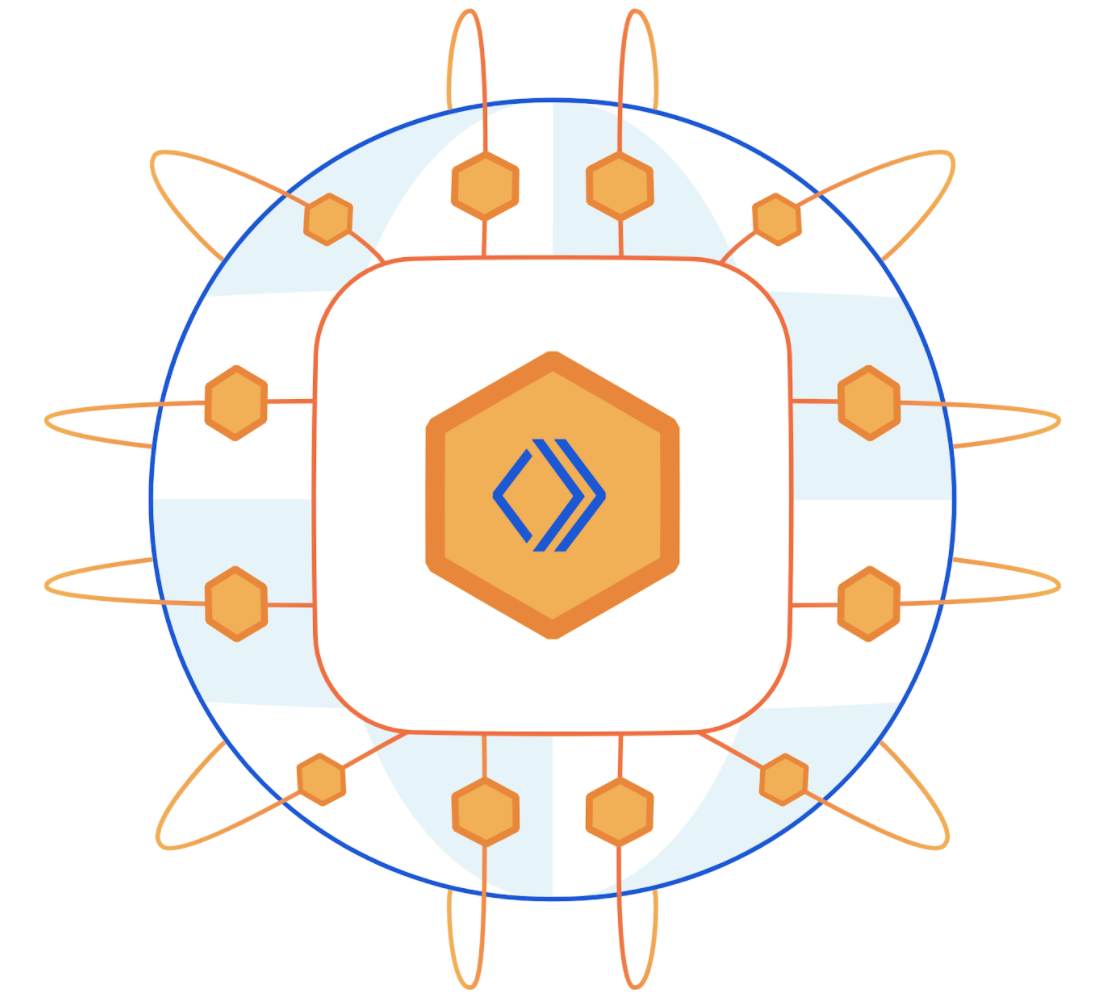

During Birthday Week in 2017, Cloudflare announced Workers — a serverless platform that represented a completely new way to build applications: by writing your code directly onto our network edge. On Monday of this year’s Birthday Week, we announced Durable Objects and Cron Triggers — both of which continue to expand the use cases that Workers can address.

Many folks associate the serverless paradigm with functions as a service, which, at its core, is stateless. Workers KV started down the path of changing this, providing high-availability storage on the edge. However, there are use cases where consistency (every client making a request to a database gets the same view of the data) is more important than availability (a client making a request to a database always receives a response). Say you want to sell tickets to a concert: you don’t want two people to be able to purchase the same ticket. With a traditional application, with a database running in one location, that’s relatively easy to ensure. But with Workers running in Cloudflare’s data centers all over the world, ensuring consistency is a little more challenging. Durable Objects solve this for developers, giving them access to strongly consistent storage when they’re building on the Workers platform.
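
To make the ticket example concrete, here is a minimal conceptual sketch in Python. It is not the Durable Objects API: `TicketObject`, `buy`, and `simulate_rush` are hypothetical names, and a lock within one process stands in for the idea that every request for a given ticket is handled by a single object, one request at a time.

```python
import threading
from typing import List, Optional


class TicketObject:
    """Hypothetical stand-in for the single object that owns one ticket."""

    def __init__(self, ticket_id: str) -> None:
        self.ticket_id = ticket_id
        self.owner: Optional[str] = None
        # The lock models "one request at a time" handling by a single owner.
        self._lock = threading.Lock()

    def buy(self, buyer: str) -> bool:
        """Return True if this buyer got the ticket, False if it was already sold."""
        with self._lock:
            if self.owner is not None:
                return False
            self.owner = buyer
            return True


def simulate_rush(ticket: TicketObject, buyers: List[str]) -> List[str]:
    """Let every buyer try to purchase the same ticket concurrently; return the winners."""
    winners: List[str] = []
    winners_lock = threading.Lock()

    def attempt(buyer: str) -> None:
        if ticket.buy(buyer):
            with winners_lock:
                winners.append(buyer)

    threads = [threading.Thread(target=attempt, args=(b,)) for b in buyers]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return winners
```

However many buyers race for the seat, exactly one of them ends up in the list of winners, because the check and the write happen together in one place.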

Similarly, triggering a Worker has historically needed a user to do something: visiting a URL, for example. But developers have use cases where they want a Worker to run independent of a user doing something right now: syncing, for example, or batch jobs, or perhaps doing something 24 hours after a user has done something. This is where Cron Triggers come in: now, for developers on the Workers platform, there’s no more need to rely on an eyeball to get things rolling.
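
As the name suggests, the schedule for this kind of trigger is written as a cron expression. The sketch below is a deliberately simplified matcher for five-field expressions (minute, hour, day of month, month, day of week), supporting only `*`, `*/n`, and comma-separated numbers. The function names are ours, real cron implementations accept more syntax, and this is not how Cloudflare’s scheduler is implemented.

```python
from datetime import datetime


def field_matches(field: str, value: int, minimum: int) -> bool:
    """Match one cron field; supports '*', '*/n', and comma-separated numbers only."""
    for part in field.split(","):
        if part == "*":
            return True
        if part.startswith("*/"):
            # Steps count from the smallest legal value of the field.
            if (value - minimum) % int(part[2:]) == 0:
                return True
        elif int(part) == value:
            return True
    return False


def cron_matches(expression: str, when: datetime) -> bool:
    """Return True if a five-field cron expression fires at the given minute."""
    minute, hour, day, month, weekday = expression.split()
    # Cron counts Sunday as 0; isoweekday() counts Monday as 1 and Sunday as 7.
    cron_weekday = when.isoweekday() % 7
    return (
        field_matches(minute, when.minute, 0)
        and field_matches(hour, when.hour, 0)
        and field_matches(day, when.day, 1)
        and field_matches(month, when.month, 1)
        and field_matches(weekday, cron_weekday, 0)
    )
```

With this, `"0 0 * * *"` describes a nightly batch job and `"*/15 * * * *"` describes a sync that runs every quarter of an hour, neither of which needs a visitor to kick it off.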

Day 2, Tuesday: Analytics

There are a lot of website analytics products out there on the market. Many of those products are, not surprisingly, very good.

But the way they’ve been implemented often leaves a lot to be desired. Most of them operate by tracking individual users, using client-side state like cookies or localStorage — or even fingerprints. This is increasingly a problem. There’s the principle of it: we don’t want to be tracked individually — why would we want visitors to our web properties to feel tracked either? Beyond that though, because so many people are feeling uncomfortable with how they’re being tracked around the web, they’re simply blocking a lot of these analytics products. As a result, all these analytics products are increasingly becoming less accurate.

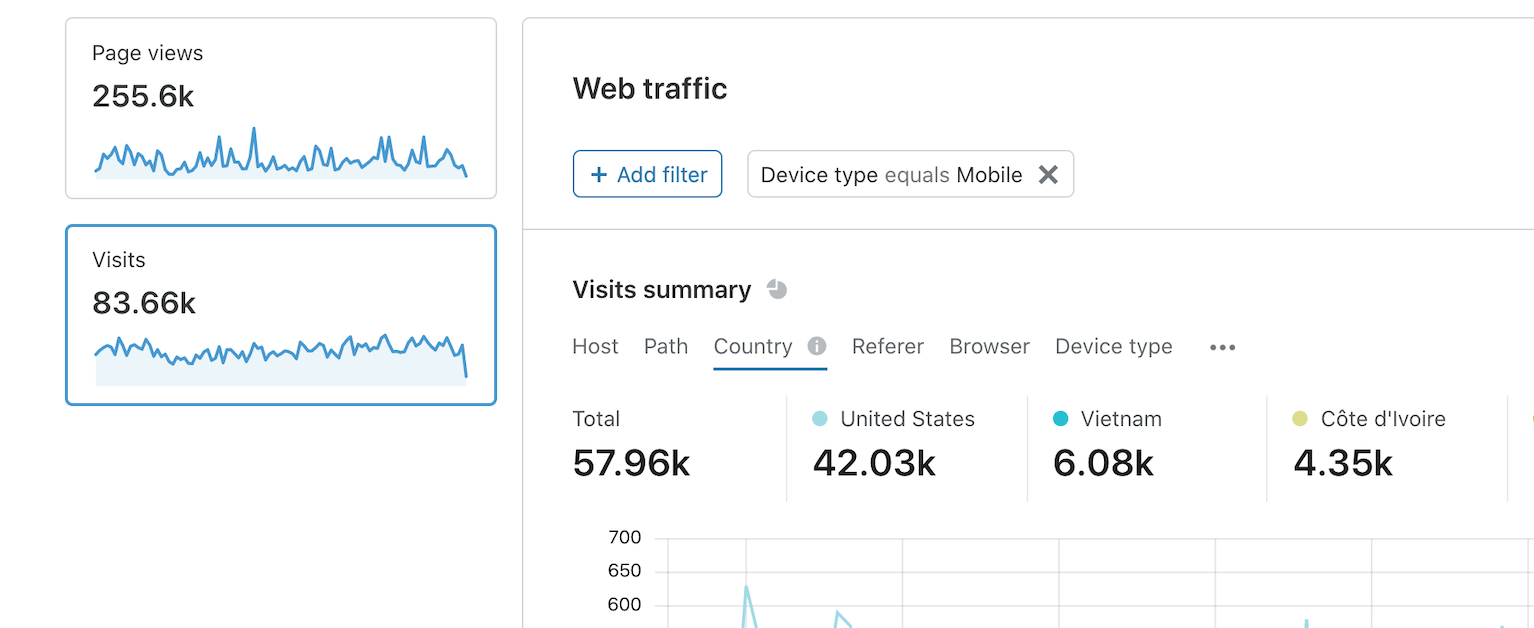

On Tuesday, we announced a new Web Analytics product that allows you to get the best of both worlds — detailed and accurate analytics, without compromising on the privacy of your users. We don’t use any client-side state, like cookies or localStorage, for the purposes of tracking users. And we don’t “fingerprint” individuals via their IP address, User Agent string, or any other data for the purpose of displaying analytics (we consider fingerprinting even more intrusive than cookies, because users have no way to opt out). Because Cloudflare’s business has never been built around tracking users or selling advertising, we don’t do it. Just the metrics, ma’am.

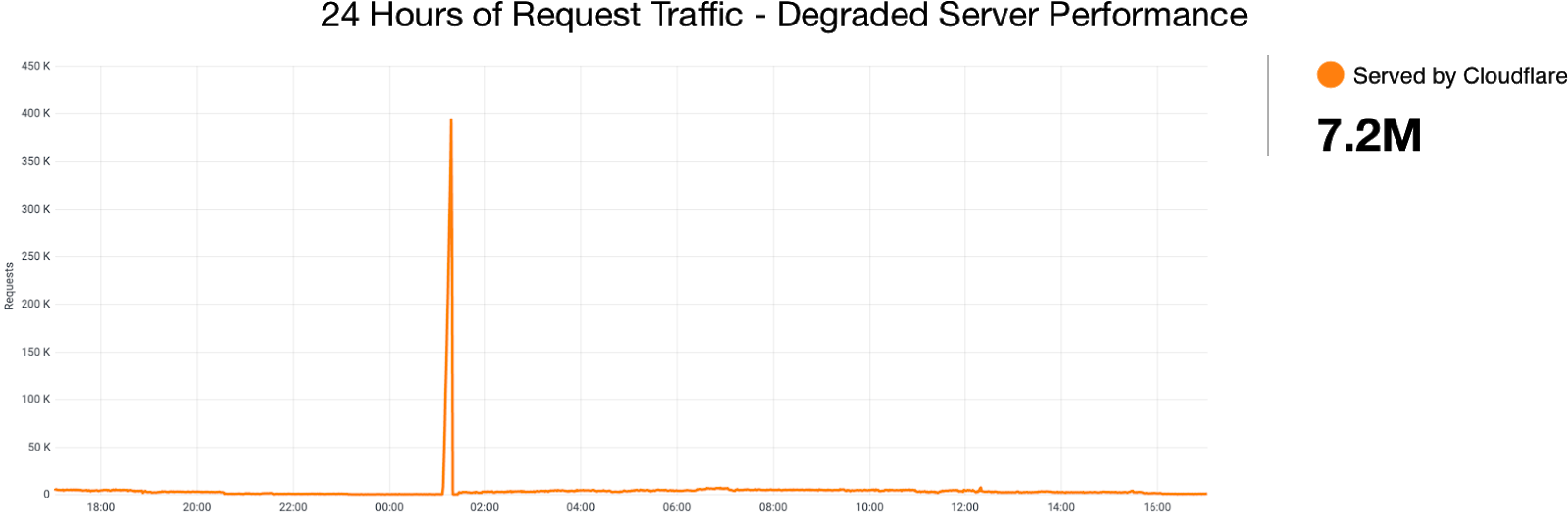

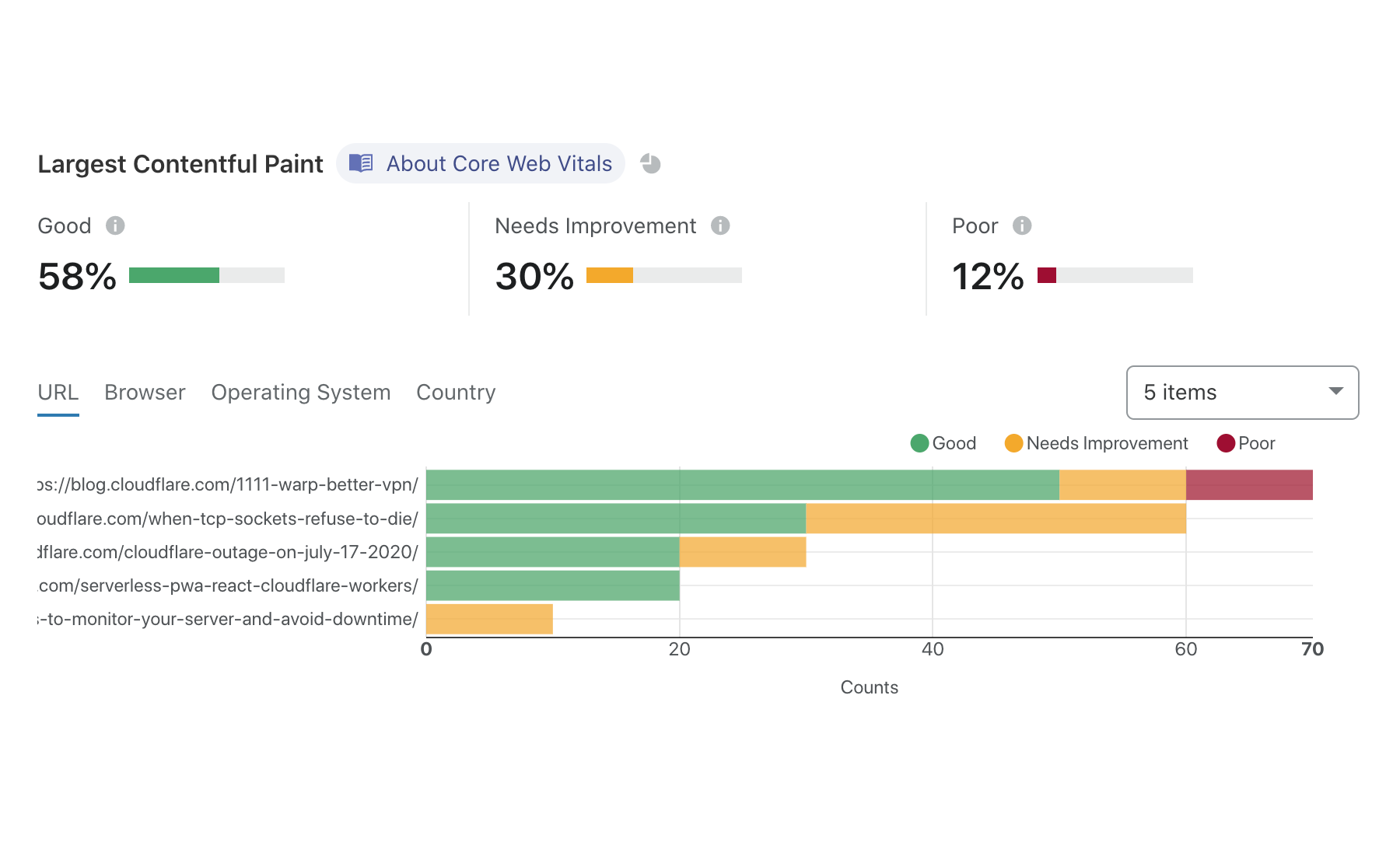

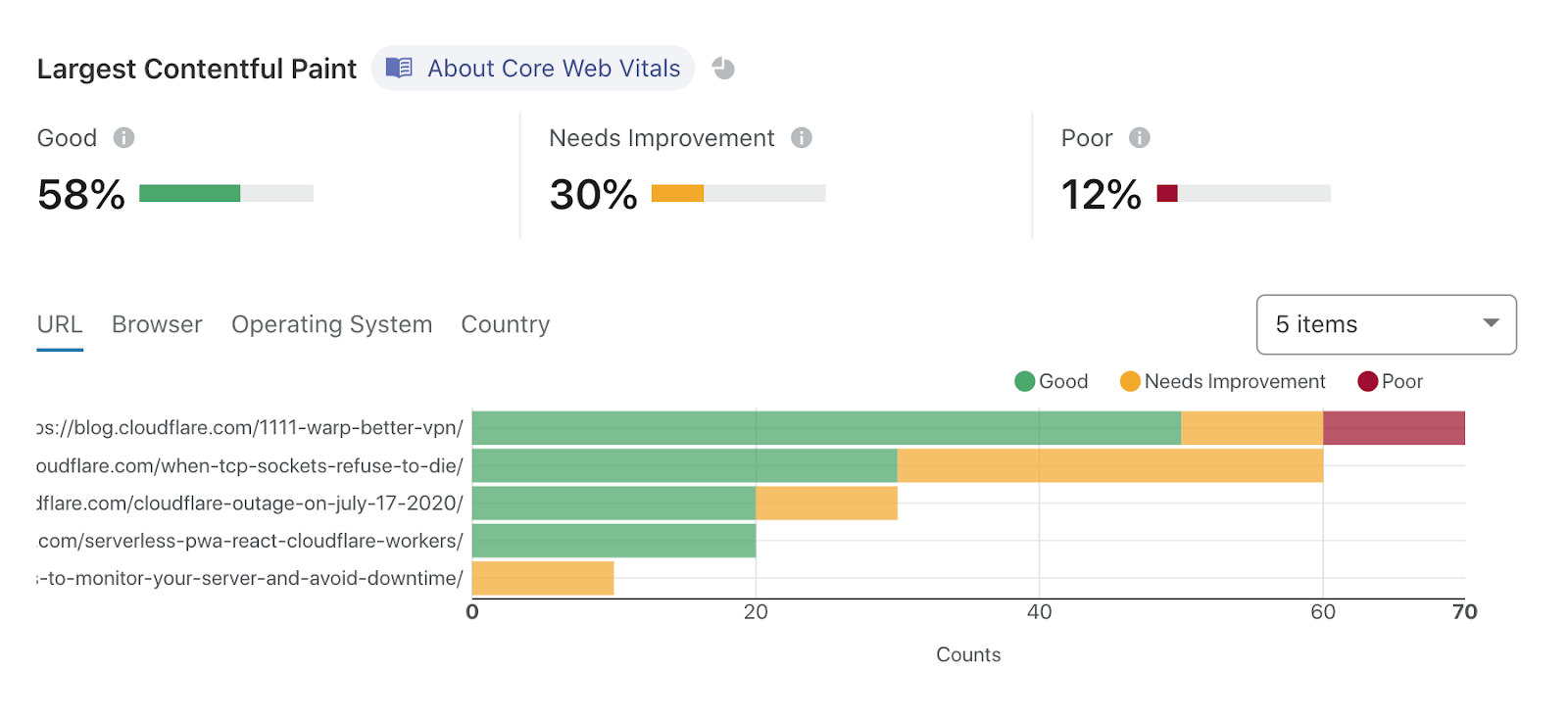

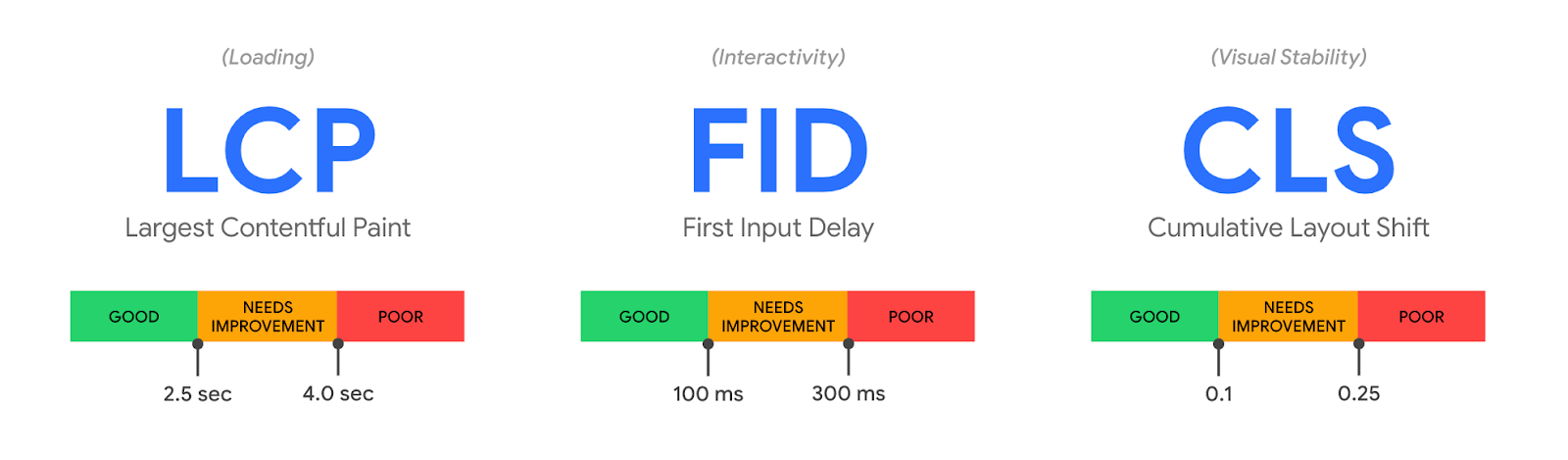

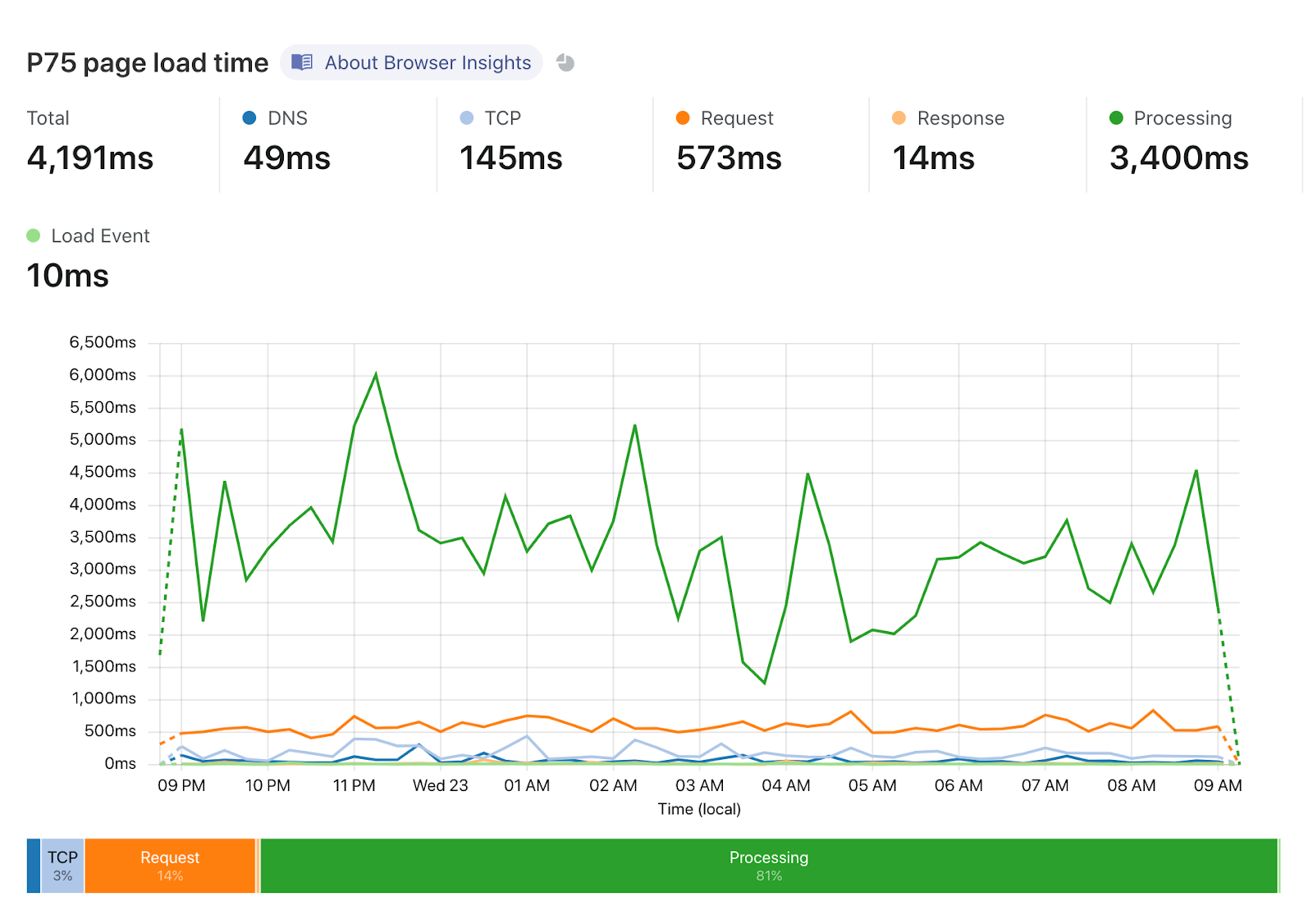

That wasn’t all on Tuesday, though. Another crucial aspect of owning a web property is website performance. Not only does it impact user experience, Google uses a blended measure of performance to inform site ranking in their search results. Google’s Chrome team has been doing some great work on metricizing site performance, and that’s culminated in Web Vitals. We’ve worked with the Chrome team to integrate Web Vitals in our Browser Insights product. You’ve always gotten edge-side performance analytics from Cloudflare, but now, you’re not just seeing the server side view of your web performance: it’s blended with how your users perceive performance, too. We take all that data and present it in a pragmatic way to help you figure out what you need to do to optimize the performance of your site.
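
For a sense of what a Web Vitals measurement means in practice, here is a small sketch that buckets a value using the thresholds Google published for the three 2020 Core Web Vitals. The `rate` function and `THRESHOLDS` table are our own illustration; how Browser Insights itself groups and displays the data is not described here.

```python
# Thresholds published by Google for the 2020 Core Web Vitals.
# Each pair is (upper bound for "good", upper bound for "needs improvement").
THRESHOLDS = {
    "LCP": (2.5, 4.0),   # Largest Contentful Paint, in seconds
    "FID": (100, 300),   # First Input Delay, in milliseconds
    "CLS": (0.1, 0.25),  # Cumulative Layout Shift, unitless score
}


def rate(metric: str, value: float) -> str:
    """Classify one measurement as good, needs improvement, or poor."""
    good, needs_improvement = THRESHOLDS[metric]
    if value <= good:
        return "good"
    if value <= needs_improvement:
        return "needs improvement"
    return "poor"
```

For example, `rate("LCP", 2.1)` returns `"good"`, while `rate("CLS", 0.3)` returns `"poor"`.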

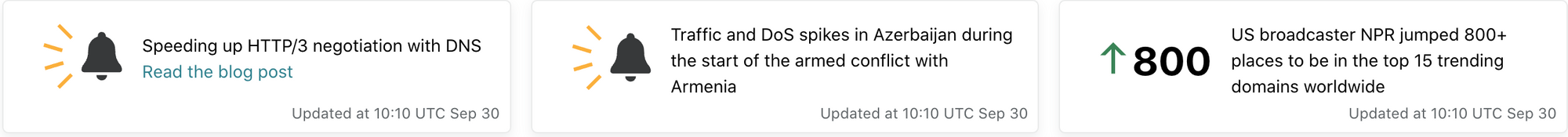

Day 3, Wednesday: Cloudflare Radar and Speeding up HTTPS/HTTP3

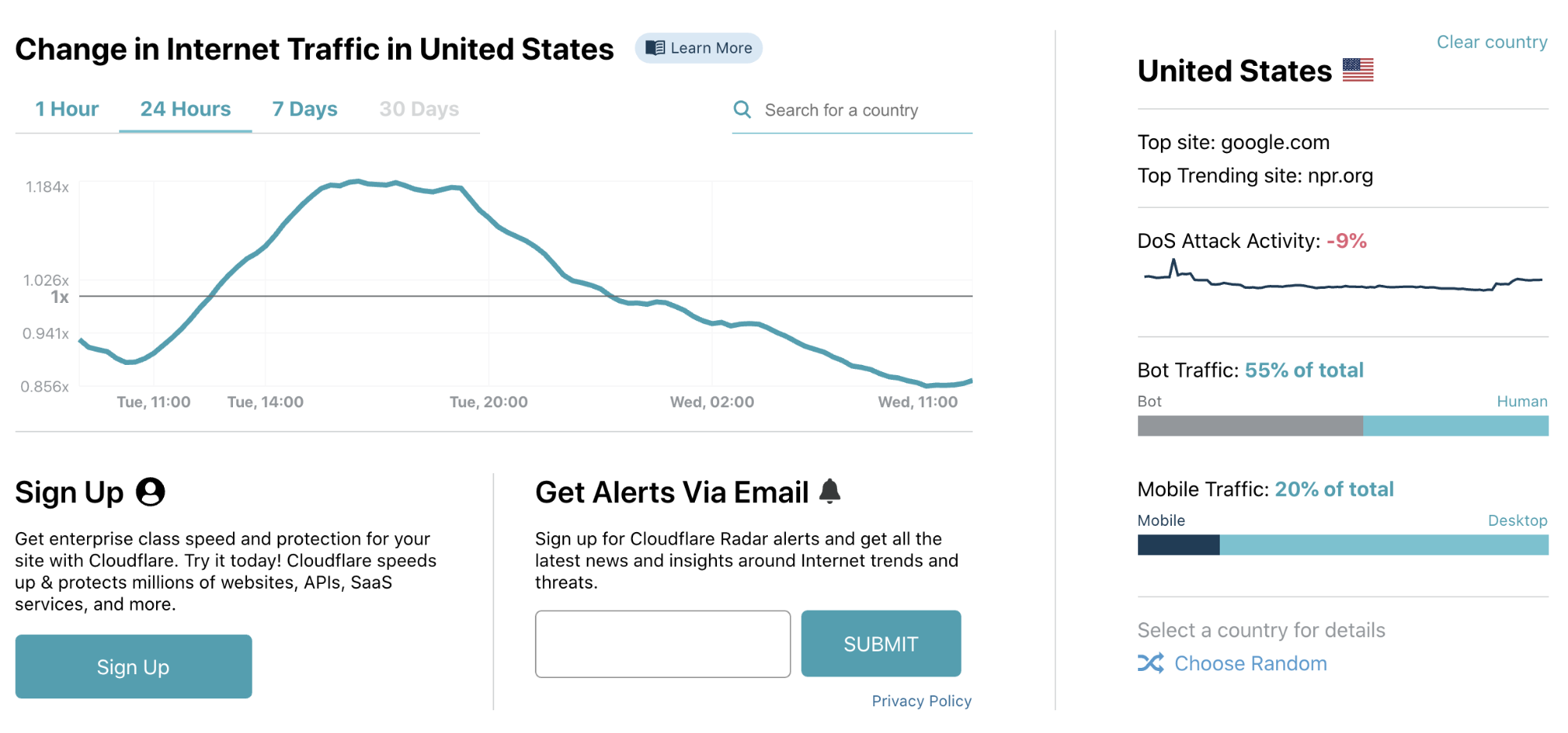

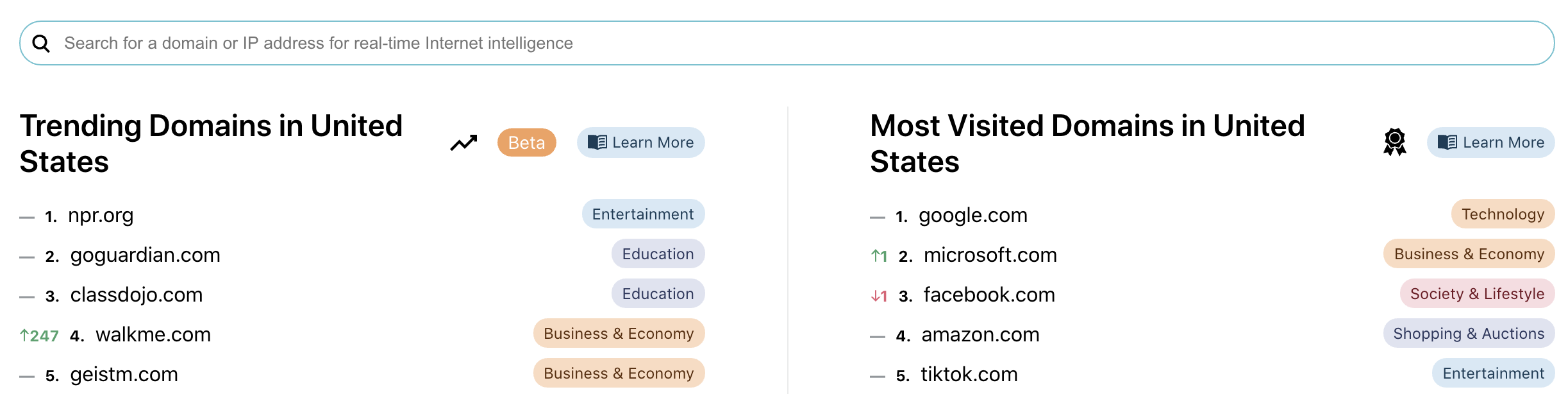

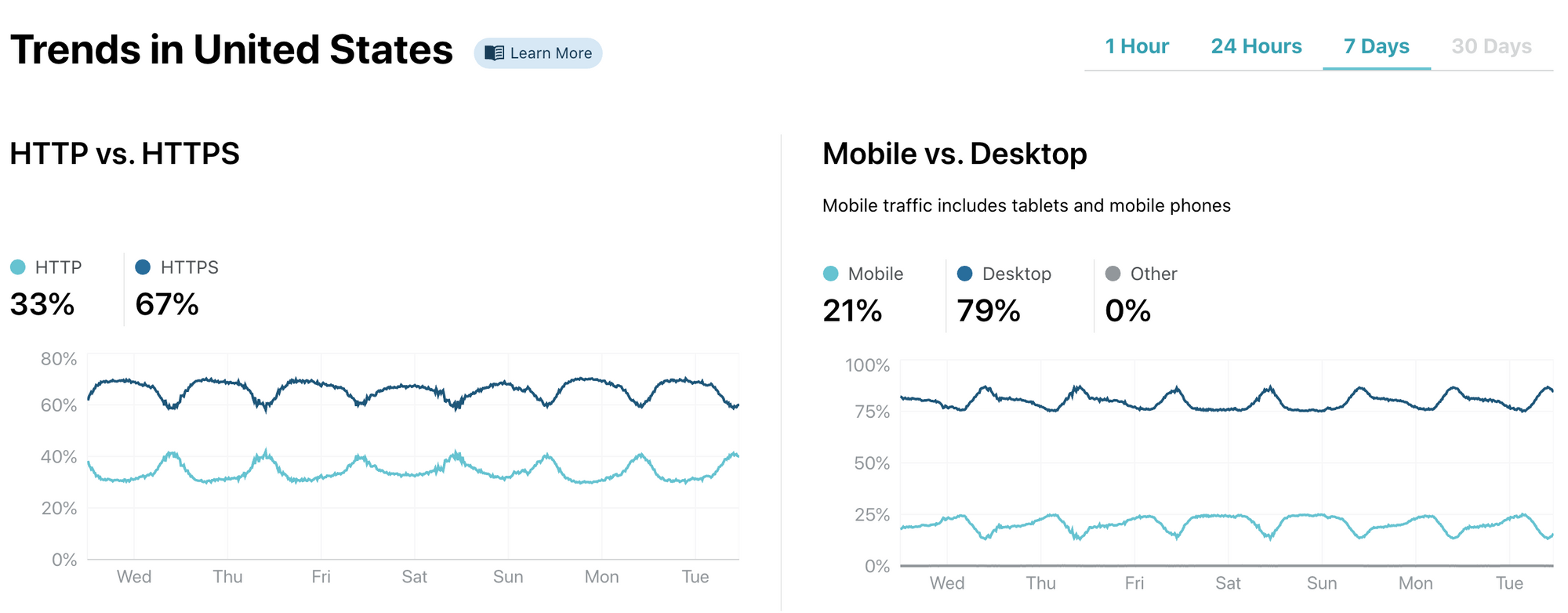

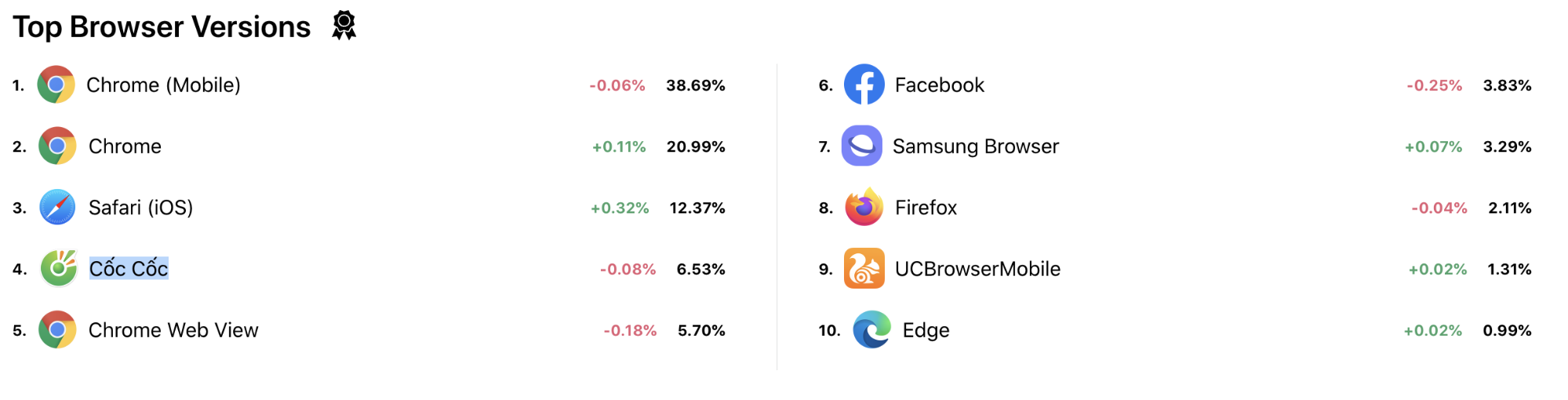

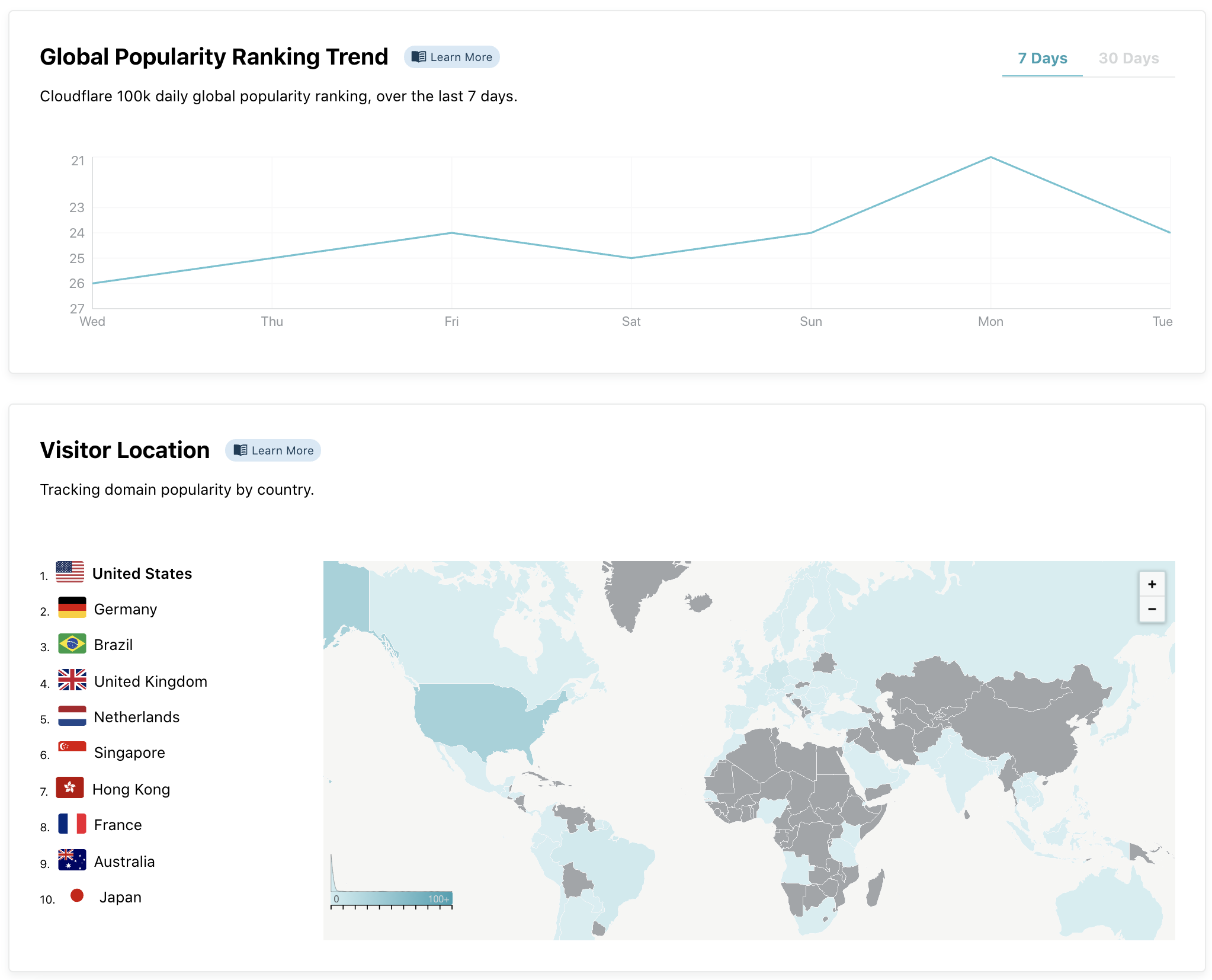

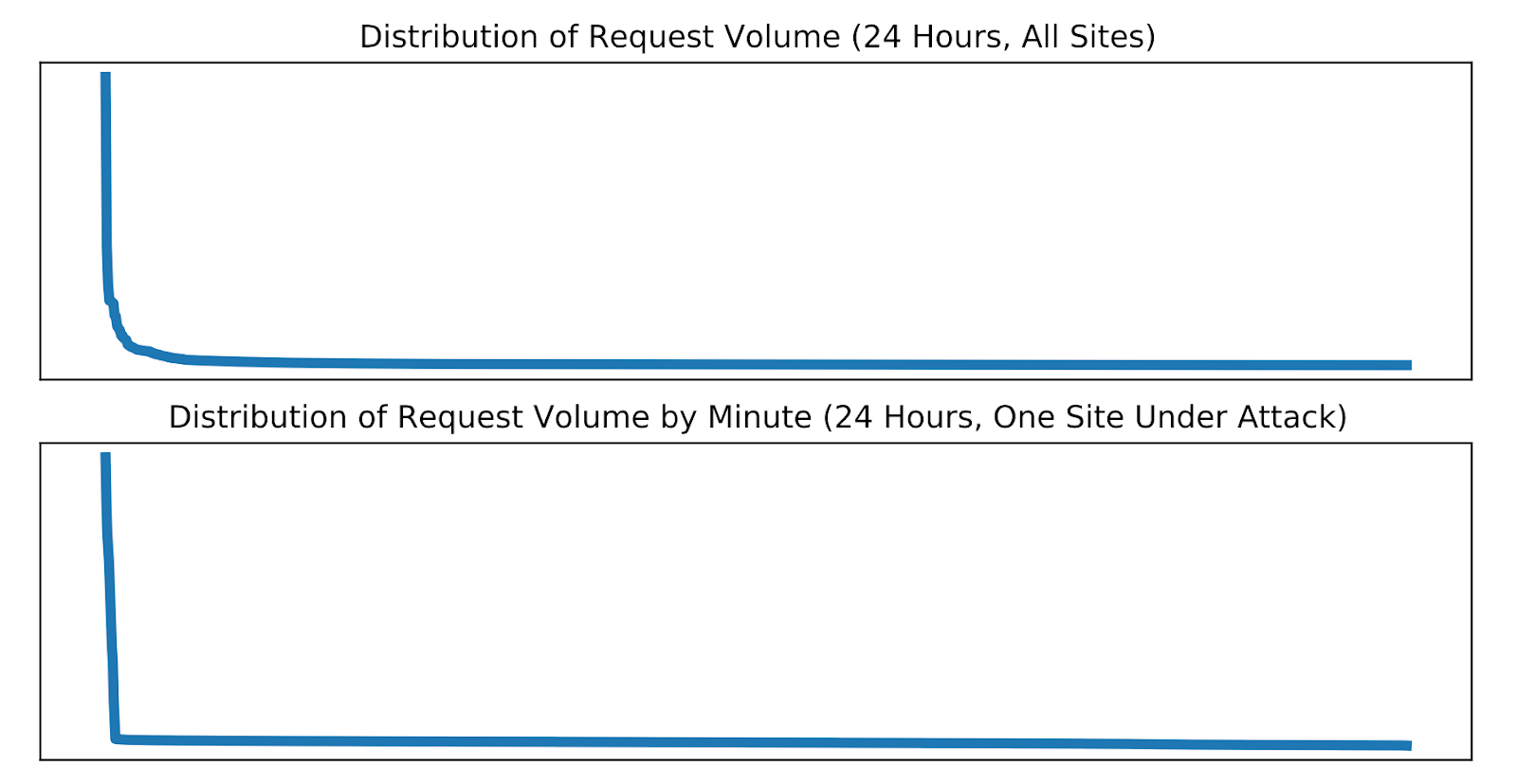

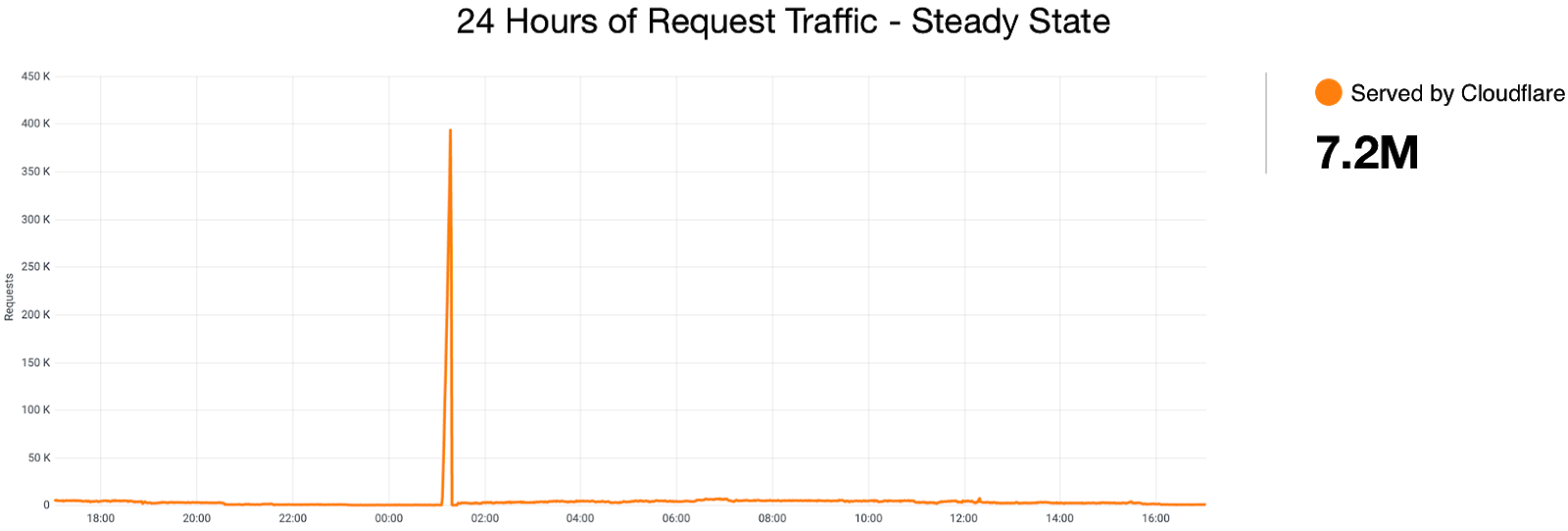

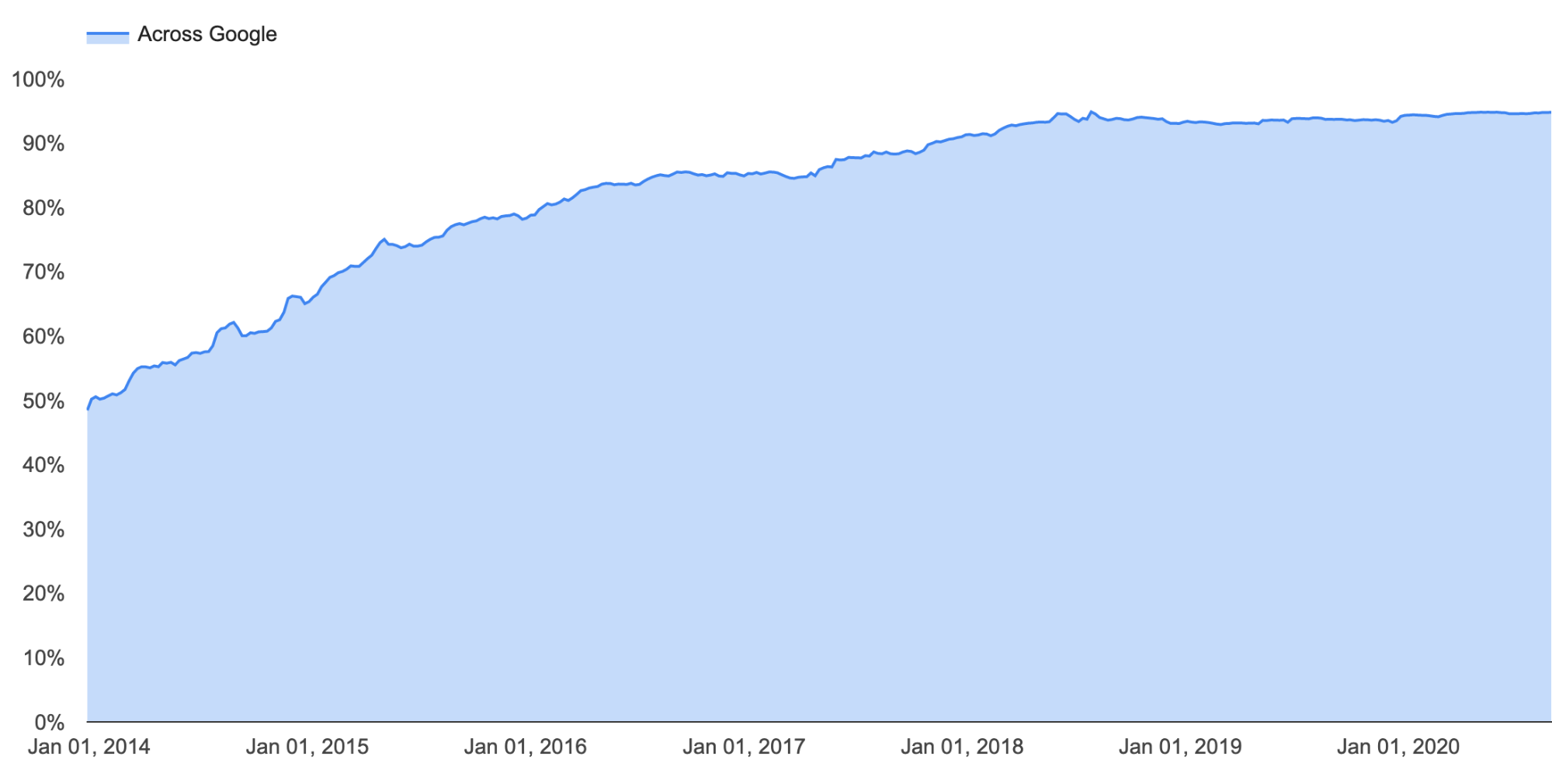

As of today, Cloudflare sits in front of 14.5% of the world’s top 10 million websites. The privilege of getting to serve so many different customers means we get visibility into a lot of things on the web. Wednesday of Birthday Week was about us taking advantage of that for everyone who is out on the web today.

If you think about the traffic flowing through a city at any given time, it’s like a living, breathing creature. It ebbs and flows; it has rhythms that follow the sun and moon. Unusual events can cause traffic jams, as can accidents. Many cities have traffic reporting services for exactly this reason: knowing what’s going on can immensely help those who need to navigate the city streets. The web is like a global version of this, and given the role that the Internet now plays for humanity, understanding what’s going on there is probably as important as all those city traffic reports around the world.

And yet, when you want to get the equivalent of that traffic report, where do you go?

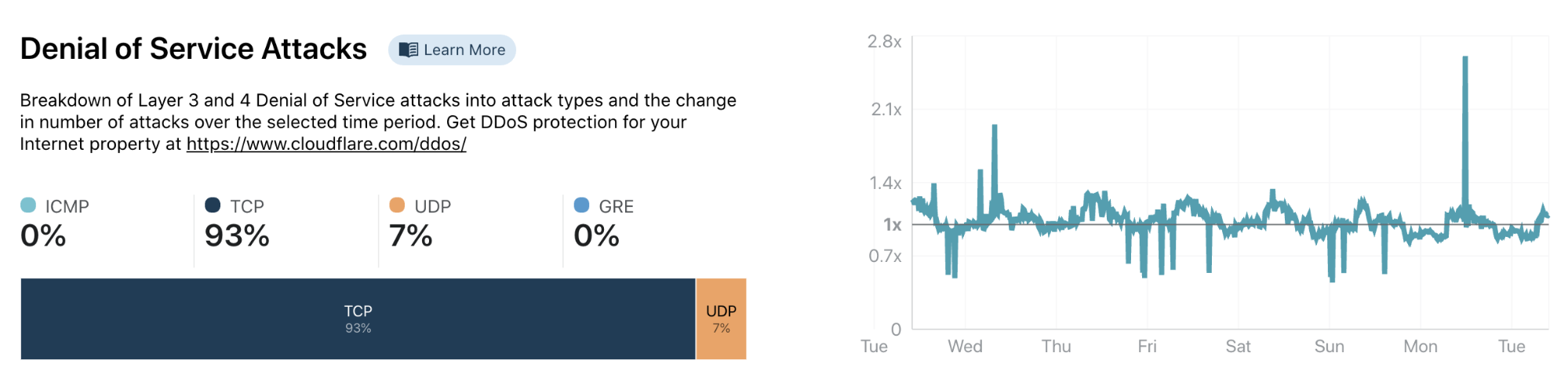

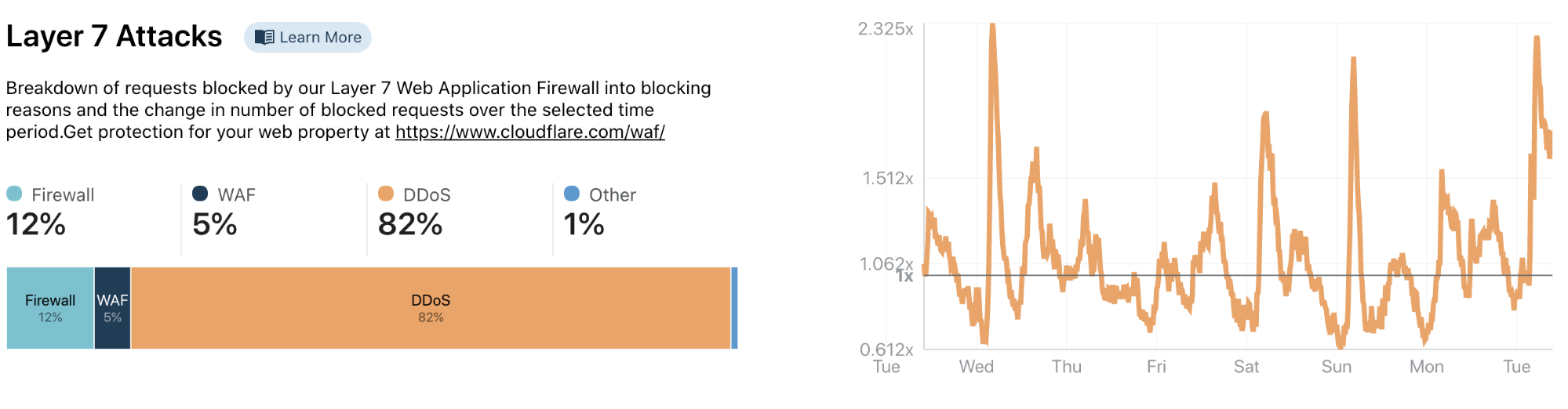

Cloudflare Radar is our answer to that question. Each second, Cloudflare handles on average 18 million HTTP requests and 6 million DNS requests. We block 72 billion cyberthreats every day. Add to that the 1 billion unique IP addresses connecting to Cloudflare’s network, and we have one of the most representative views of Internet traffic worldwide. Before Radar, all this activity, good and bad, was only available internally at Cloudflare: we used it to help improve our service and protect our customers. With the release of Radar, however, we’re exposing it externally: shining a light on the Internet’s patterns for the world to see.

On the subject of spotting interesting patterns: back in late June, our team noticed a weird spike in DNS requests for the 65479 Resource Record. It turns out these spikes were part of Apple’s iOS 14 beta release: Apple were testing out a new SVCB/HTTPS record type. The aim: to patch a limitation that’s been inherent in the HTTPS and HTTP/3 protocols. When a user types in a URL without specifying the protocol (e.g. HTTPS), the initial negotiation happens in plaintext because browsers will start with HTTP. Only once it’s established that an HTTPS or HTTP/3 resource exists will the browser transition over to that. The problem here is twofold: latency and security.

But you know what happens before any HTTP negotiation can happen? A DNS request. And that’s what Apple had implemented that created this interesting pattern: the DNS request was effectively asking whether the site supported HTTPS or HTTP/3. As of Wednesday during Birthday Week, Cloudflare’s DNS servers now automatically generate HTTPS records on the fly to advertise whether a particular zone supports HTTP/3 and/or HTTP/2, based on whether those features are enabled on the zone. The result: better performance and improved security. Who says you need to pick just one?
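
Here is a rough sketch of how a client might use such a record to decide how to open its very first connection. The record strings, the function names, and the hard-coded preference for `h3` over `h2` are illustrative assumptions; a real client follows the full SVCB/HTTPS specification and parses DNS wire format rather than text.

```python
from typing import List, Optional


def parse_alpn(record: str) -> List[str]:
    """Extract the alpn list from a presentation-format HTTPS record, if present."""
    for token in record.split():
        if token.startswith("alpn="):
            return token[len("alpn="):].strip('"').split(",")
    return []


def choose_first_connection(https_record: Optional[str]) -> str:
    """Pick the protocol for the very first connection to a site."""
    if https_record is None:
        # No HTTPS record: fall back to the legacy behaviour of starting in plaintext.
        return "http/1.1 (plaintext)"
    alpn = parse_alpn(https_record)
    # Assumed client preference order: HTTP/3 first, then HTTP/2.
    for protocol in ("h3", "h2"):
        if protocol in alpn:
            return protocol
    # The record exists but advertises nothing newer, so use HTTPS with HTTP/1.1.
    return "http/1.1 over TLS"
```

Given a record such as `example.com. 300 IN HTTPS 1 . alpn="h3,h2"`, the sketch goes straight to `h3`, skipping the plaintext round trip entirely.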

Day 4, Thursday: API day

Nobody has ever doubted the importance of user interfaces. Finding ways for humans and computers to engage each other has been an area of focus since the very first computers were invented. But as the web has grown, data has become the new oil, and applications have proliferated, there’s another interface that has grown in importance: the interface between different types of applications. Day 4 of Birthday Week was all about APIs.

The first announcement was beta support for gRPC: a new type of protocol that’s intended for building APIs at scale. Most REST APIs use HTTPS and JSON to communicate values. The problem with these is that they’re really designed for that other type of interface mentioned above: for humans to talk to computers. The upside is that it makes things human-readable; the downside is that they’re really inefficient, and as the use of APIs continues to explode, this inefficiency proliferates. The gRPC protocol is an answer to this: it’s an efficient protocol for computers to talk to each other. But up until now, that also came at a price: because gRPC uses newer technology (like HTTP/2) under the covers, existing security and performance tools did not support gRPC traffic out of the box. This meant that customers adopting gRPC to power their APIs had to pick between modernity on one hand, and things like security, performance, and reliability on the other.
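
To get a feel for the size difference between a human-readable and a machine-oriented encoding, here is a small comparison. Python’s `struct` module is used purely as a stand-in for a compact binary format; gRPC itself typically uses Protocol Buffers, which work differently, and the `reading` payload is made up for illustration.

```python
import json
import struct

# A made-up payload for illustration.
reading = {"sensor_id": 4242, "temperature": 21.5, "humidity": 40.25}

# Text encoding: field names and digits are spelled out as characters.
as_json = json.dumps(reading).encode("utf-8")

# Binary encoding: both sides agree on the layout up front
# (unsigned 32-bit integer, then two 32-bit floats, little-endian).
as_binary = struct.pack(
    "<Iff", reading["sensor_id"], reading["temperature"], reading["humidity"]
)

print(len(as_json), "bytes as JSON")
print(len(as_binary), "bytes as packed binary")
```

The binary form fits in 12 bytes because the field names never travel over the wire; the trade-off is that you can no longer read the message by eye.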

Cloudflare’s announcement of support for gRPC fixes this trade-off: when you put your gRPC APIs on Cloudflare, you get all the traditional benefits of Cloudflare along with it. Apprehensive about exposing your APIs to bad actors? Need more performance? Turn on Argo Smart Routing to decrease time to first byte. Increase reliability by adding a Load Balancer. Or add security features such as Bot Management and the WAF.

Speaking of the WAF: if you think about the way our WAF works, it secures web applications from attacks by looking for attack patterns (say, bot patterns that try to imitate human patterns, or abuse of how a browser interacts with a site); in both instances, the attack is intended to break something. But because what computers need to talk to each other is different from what computers need to talk to humans, the attack vectors are different. Therefore, protecting APIs isn’t quite the same as protecting websites.

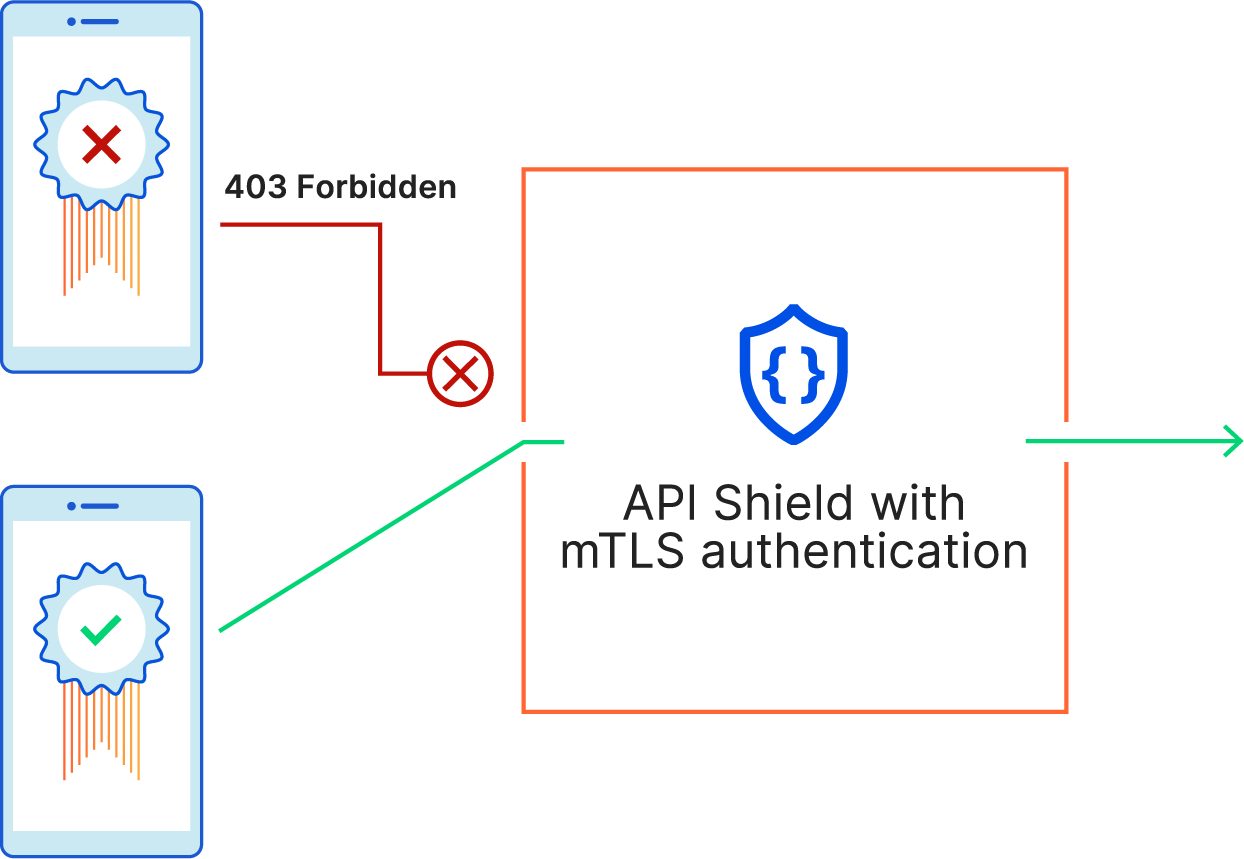

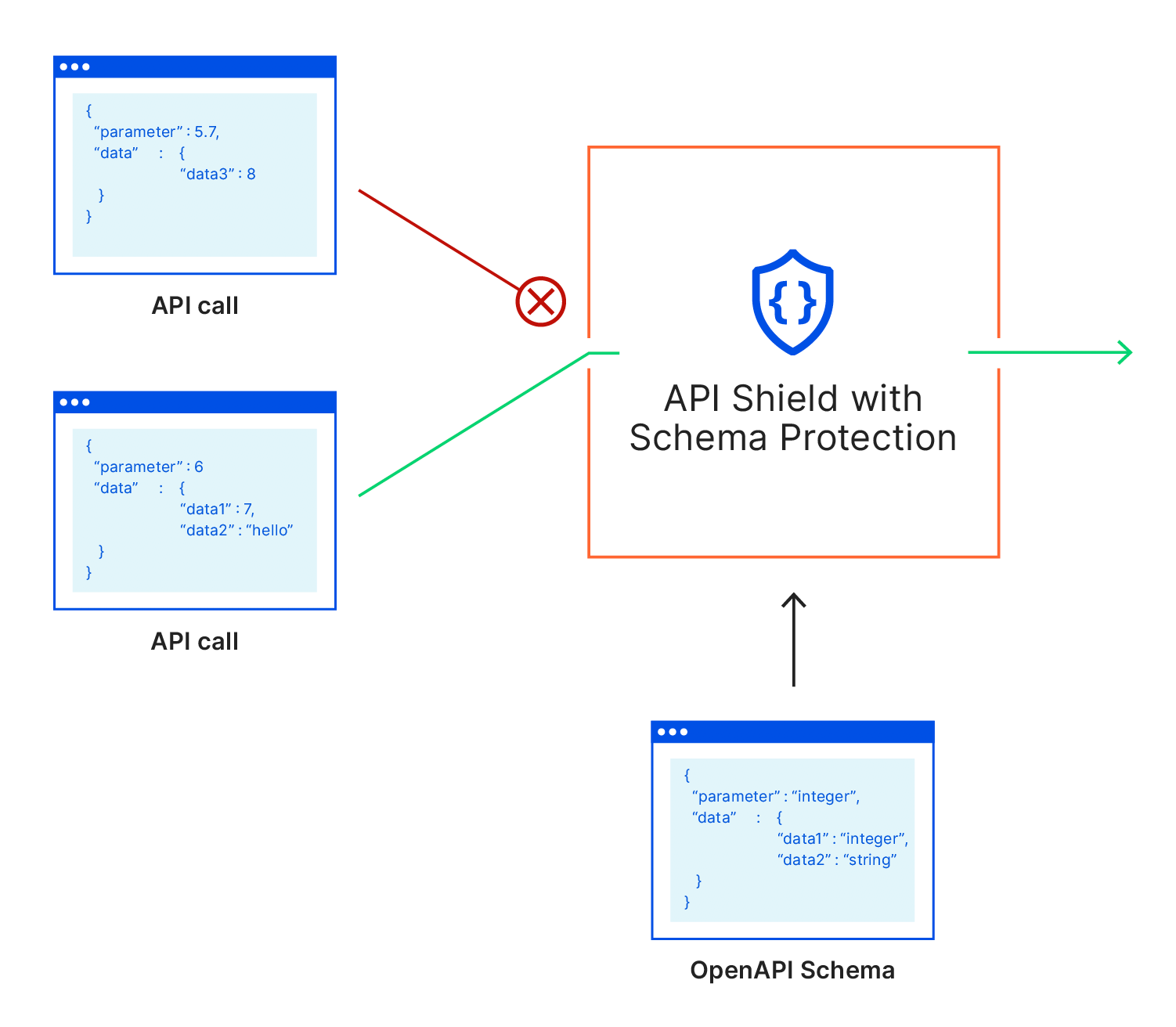

API Shield is purpose-built for just this. It makes it simple to secure APIs through the use of strong client certificate-based authentication and strict schema-based validation. On the authentication side, API Shield uses mutual TLS, which is not vulnerable to the reuse or sharing of passwords or tokens. And once developers can be sure that only legitimate clients (with SSL certificates in hand) are connecting to their APIs, the next step in API Shield is making sure that those clients are making valid requests. It works by matching the contents of API requests (the query parameters that come after the URL and the contents of the POST body) against a contract or “schema” that contains the rules for what is expected. If validation fails, the API call is blocked, protecting the origin from an invalid request or a malicious payload.
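
The validation step can be pictured with a toy example. Everything below is hypothetical: the `ORDER_SCHEMA` format, the field names, and the `"forwarded"` / `"blocked"` results are ours and say nothing about how API Shield represents schemas or responds to clients. The sketch only shows the general idea of checking query parameters and a POST body against declared rules before anything reaches the origin.

```python
from typing import Any, Dict, List

# Hypothetical schema for one endpoint: field name -> expected Python type.
ORDER_SCHEMA: Dict[str, Dict[str, type]] = {
    "query": {"currency": str},
    "body": {"item_id": int, "quantity": int},
}


def validate(section: Dict[str, Any], rules: Dict[str, type]) -> List[str]:
    """Return a list of problems found in one part of the request."""
    errors = []
    for name, expected in rules.items():
        if name not in section:
            errors.append("missing field: " + name)
        elif type(section[name]) is not expected:
            errors.append("wrong type for field: " + name)
    for name in section:
        if name not in rules:
            errors.append("unexpected field: " + name)
    return errors


def handle_request(query: Dict[str, Any], body: Dict[str, Any]) -> str:
    """Forward the request only if both the query and the body match the schema."""
    errors = validate(query, ORDER_SCHEMA["query"]) + validate(body, ORDER_SCHEMA["body"])
    return "blocked" if errors else "forwarded"
```

A well-formed order passes through, while a request that smuggles a string into `quantity` or adds an unknown field never makes it to the origin.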

And, as you’d expect from Cloudflare, gRPC and API Shield support each other out of the box.

Day 5, Friday: Automatic Platform Optimization (starting with WordPress)

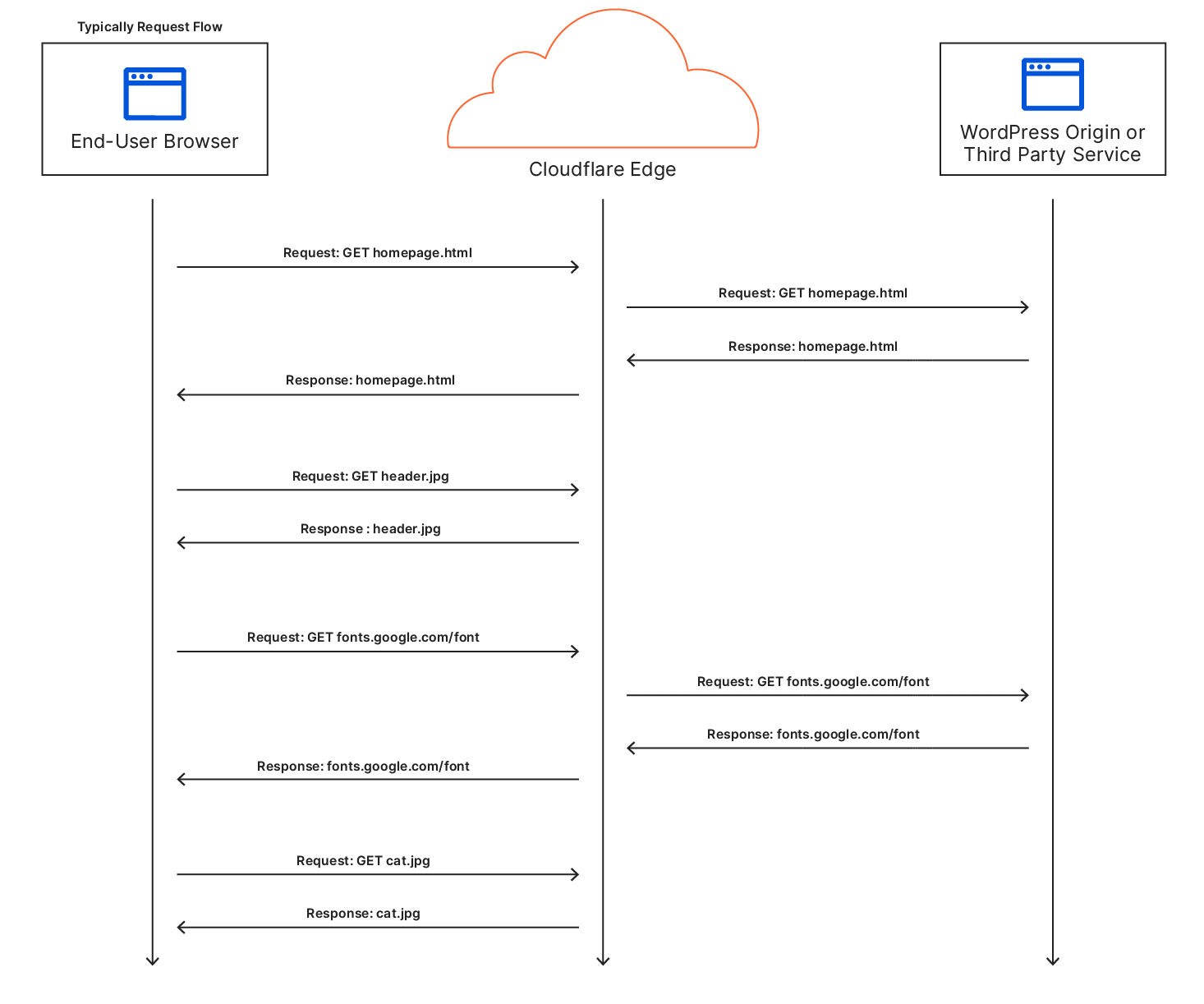

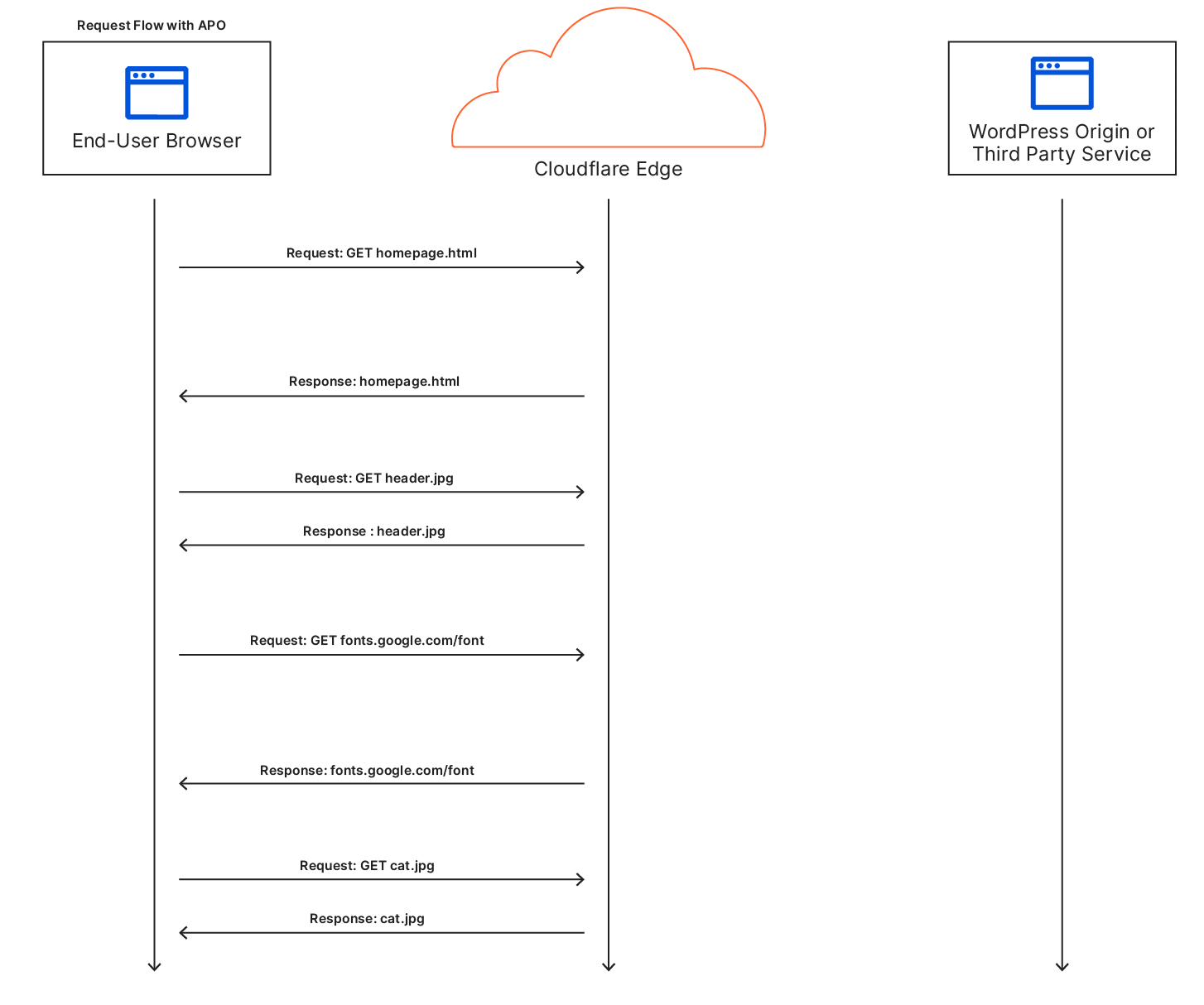

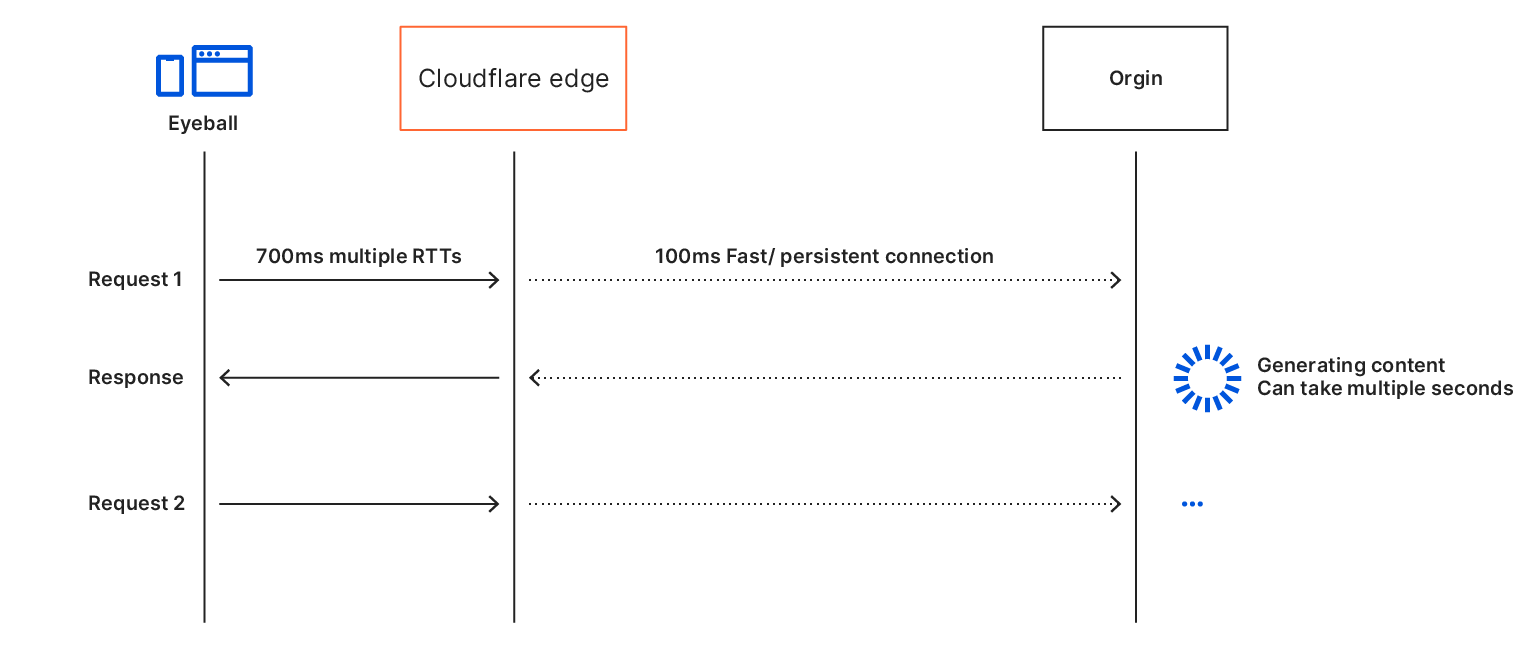

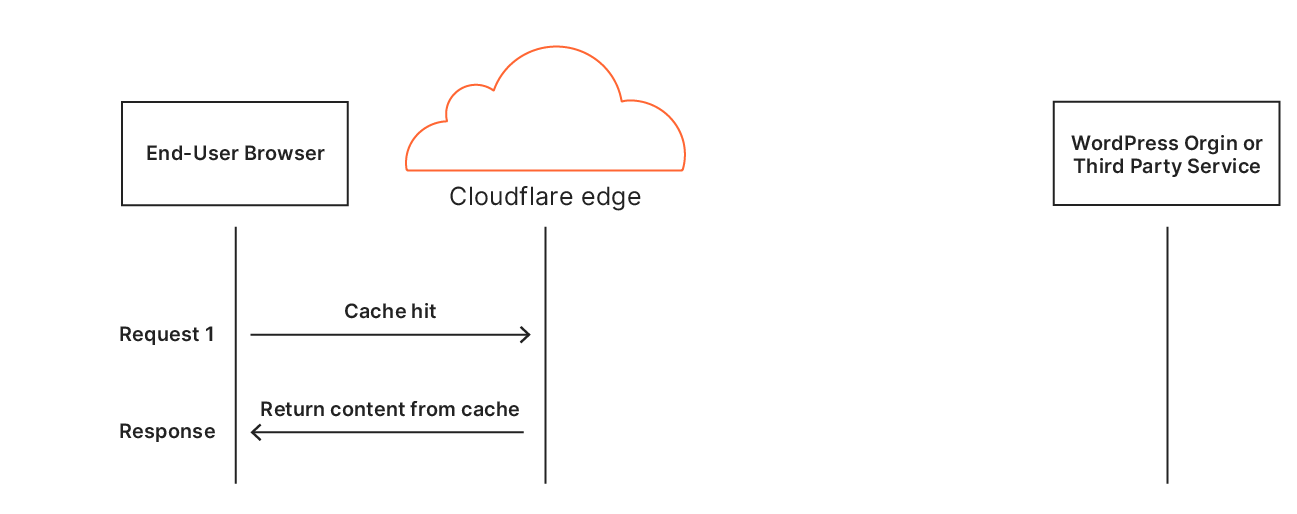

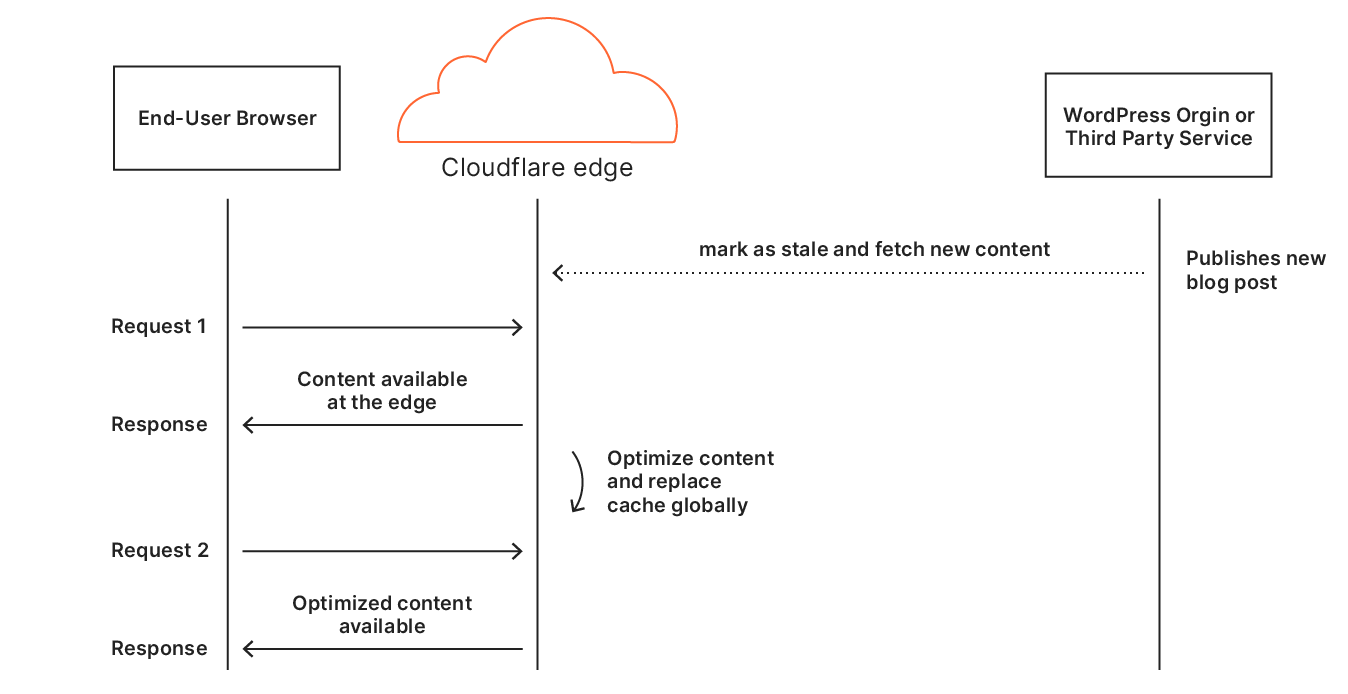

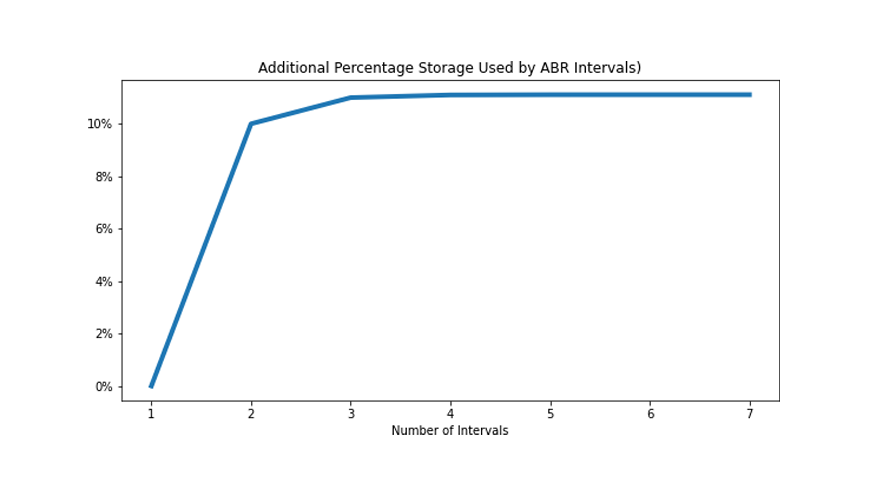

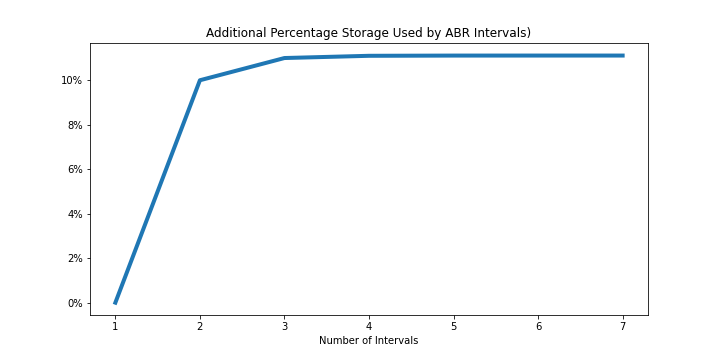

The idea of caching static assets is not new, and it’s something Cloudflare has supported from its inception. It works wonders in speeding up websites, particularly if your origin is slow or your users are far from the origin server, since either one drags down all your performance metrics. Caching also has the added benefit of reducing load on origin servers.

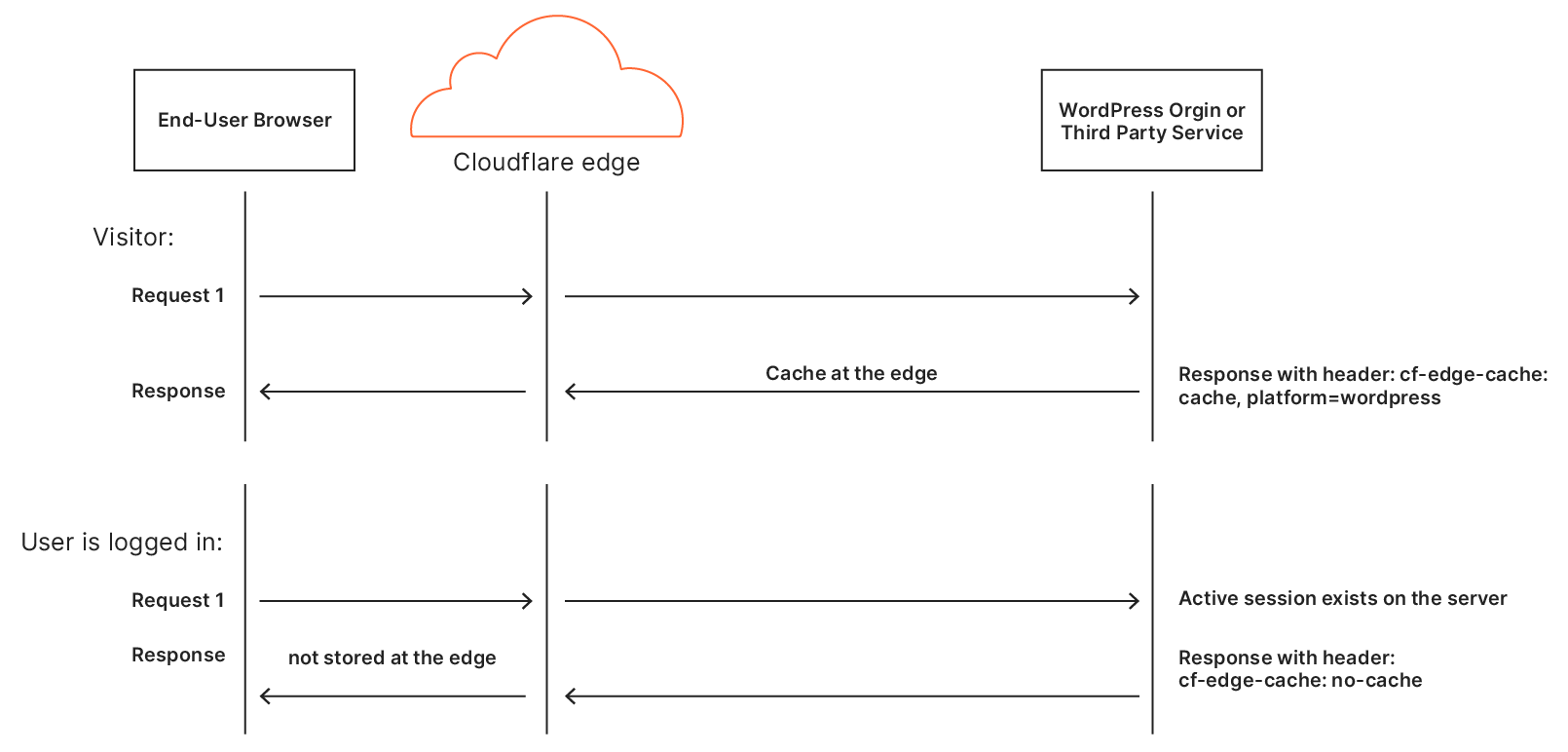

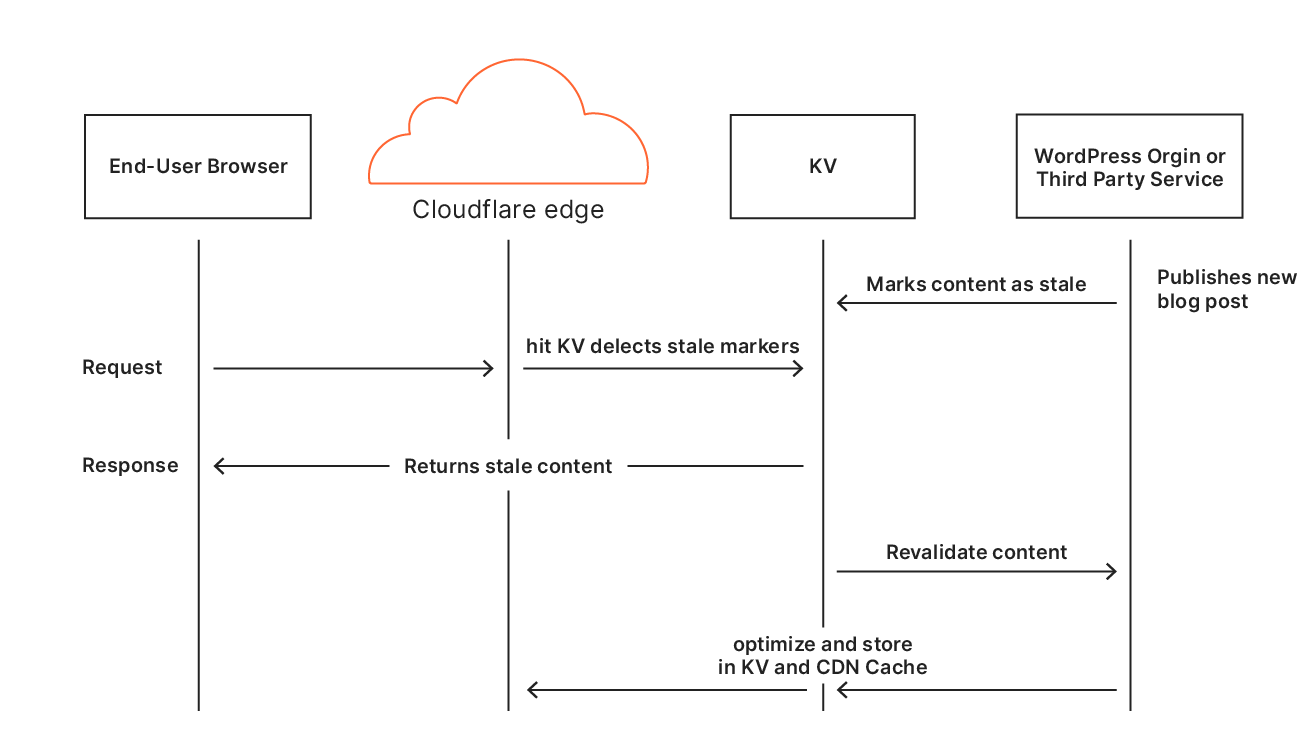

However, things get a little more tricky when it comes to dynamic assets: if the asset could change, shouldn’t you go back to the origin just to make sure? For this reason, by default, Cloudflare doesn’t cache HTML content: there’s a chance it’s going to change for each user. The reality, though, is that most HTML isn’t really dynamic. It needs to be able to change relatively quickly when the site is updated, but for a huge portion of the web, the content is static for months or years at a time. There are special cases, like when a user is logged in (as the admin or otherwise), where the content needs to differ, but the vast majority of visits are from anonymous users.

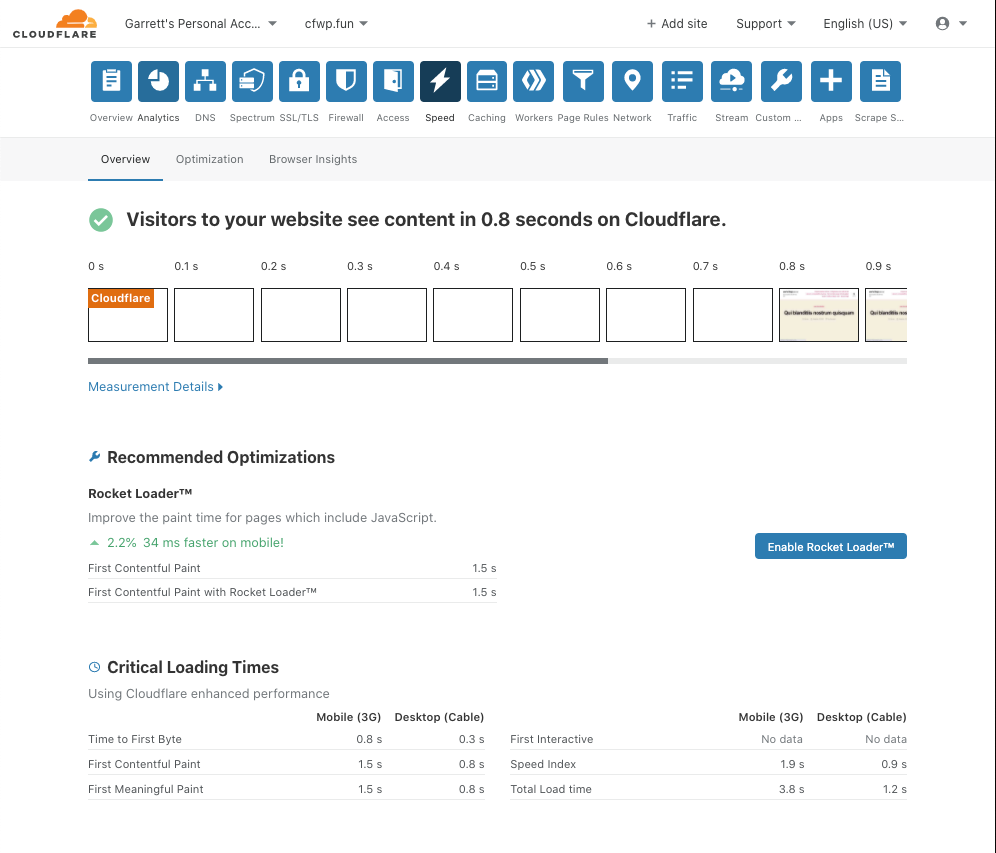

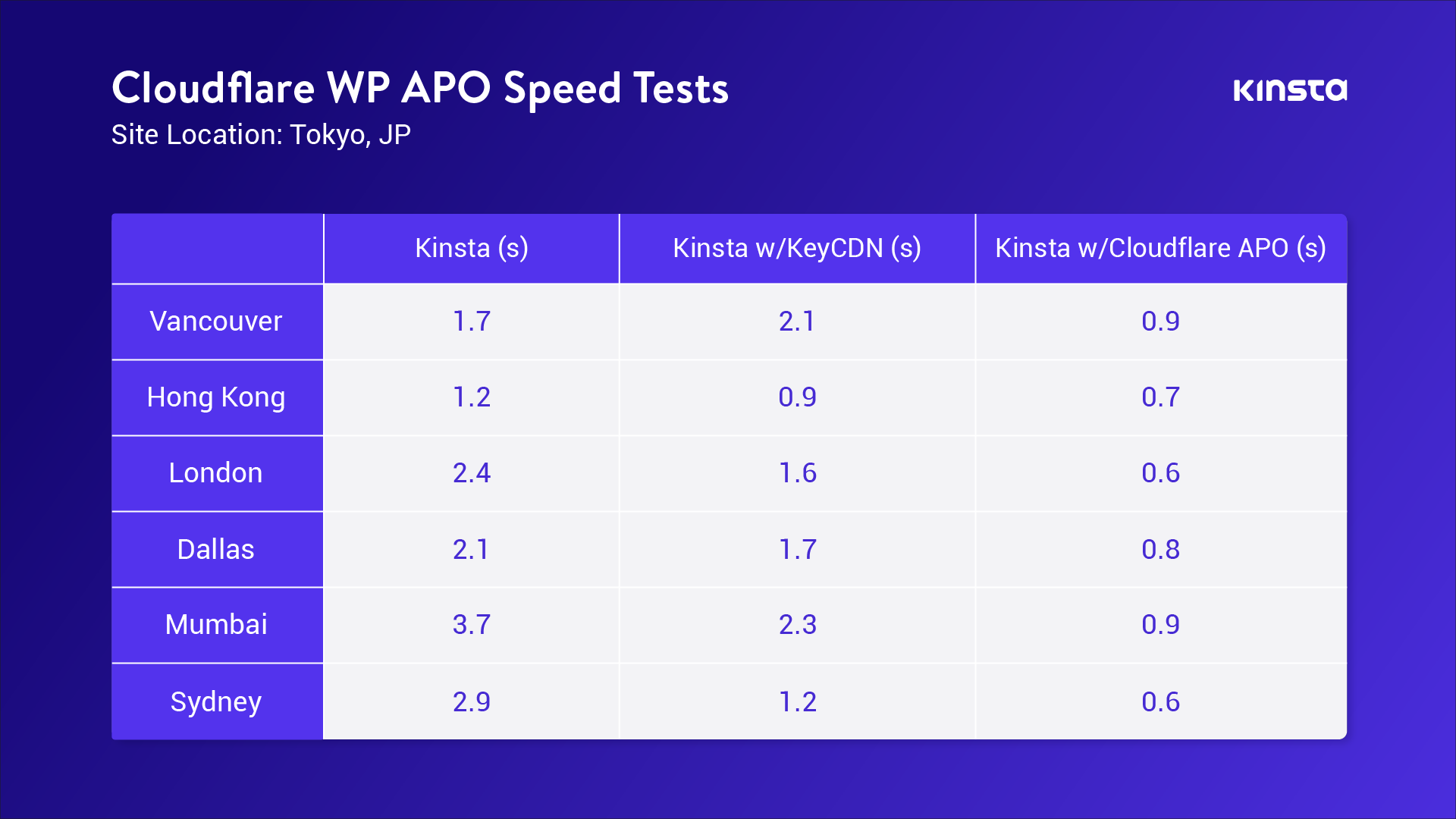

Automatic Platform Optimization, which was announced on Friday, brings more intelligence to this, allowing us to figure out when we should be caching HTML and when we shouldn’t. The advantage is that it moves more content closer to the user, and it does so automagically: there’s no configuration required. The benefits aren’t trivial: a 72% reduction in Time to First Byte (TTFB), a 23% reduction in First Contentful Paint, and a 13% reduction in Speed Index for desktop users at the 90th percentile. We’re starting off with support for WordPress, which powers 38% of all websites, but the plan is to expand this to other platforms in the near future.
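
The core decision can be sketched in a few lines. This is an illustration of the reasoning above, not Automatic Platform Optimization’s actual logic: the function name and the list of cookie prefixes are assumptions chosen to represent “this visitor is logged in or otherwise personalized” on a WordPress site.

```python
from typing import Dict

# Assumption for illustration: cookies with these prefixes mark a logged-in
# or otherwise personalized WordPress session.
PERSONALIZED_COOKIE_PREFIXES = ("wordpress_logged_in_", "wp-", "comment_")


def should_serve_cached_html(method: str, cookies: Dict[str, str]) -> bool:
    """Serve cached HTML only to anonymous visitors making plain GET requests."""
    if method != "GET":
        return False
    # str.startswith accepts a tuple and returns True if any prefix matches.
    return not any(
        name.startswith(PERSONALIZED_COOKIE_PREFIXES) for name in cookies
    )
```

An anonymous visitor gets the page straight from the edge, while an admin with a login cookie still goes back to the origin for fresh, personalized HTML.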

All day, every day: Cloudflare TV

Ten years is a long time. The milestone for Cloudflare seemed to be the perfect opportunity to look back over the last ten years of the Internet — what’s changed, what’s surprised us? And more than that: what’s coming over the next ten years?

To look back and then peer out into the future, we were humbled to be joined by some of the most celebrated names in tech and beyond. Among the highlights: Apple co-founder Steve Wozniak, Zoom CEO Eric Yuan, OpenTable CEO Debby Soo, Stripe co-founder and President John Collison, Former CEO & Executive Chairman of Google and Co-Founder of Schmidt Futures Eric Schmidt, former McAfee CEO Chris Young, former Seal Team 6 Commander Dave Cooper, Project Include CEO Ellen Pao, and so many more. All told, it was 24 hours of live discussions over the course of the week.

And with that, it’s a wrap! To everyone who has been a part of the Cloudflare journey over the past 10 years (our customers, folks on the team, friends and supporters, and our partners all around the world): thank you. It’s been an incredible ride.

And, as our co-founder Michelle likes to say, we’re just getting started.