Post Syndicated from The History Guy: History Deserves to Be Remembered original https://www.youtube.com/watch?v=5SR3DLURelw

Slipping Out, Sneaking In (Измък – примък)

Post Syndicated from original https://bivol.bg/%D0%B8%D0%B7%D0%BC%D1%8A%D0%BA-%D0%BF%D1%80%D0%B8%D0%BC%D1%8A%D0%BA.html

I donated. I did what my modest means allow for the refugees from the war that the vatniks started on the territory of an independent state. The vatniks started the war because they want the world to be…

Build a custom Java runtime for AWS Lambda

Post Syndicated from Marcia Villalba original https://aws.amazon.com/blogs/compute/build-a-custom-java-runtime-for-aws-lambda/

This post is written by Christian Müller, Principal AWS Solutions Architect and Maximilian Schellhorn, AWS Solutions Architect

When running applications on AWS Lambda, you can either use one of the managed runtime versions that AWS provides or bring your own custom runtime. This blog post walks through how to create and optimize a custom runtime for Java-based Lambda functions.

Builders might rely on customized or experimental runtime behavior when creating solutions in the cloud. The Java ecosystem fosters innovation and encourages experiments with the current six-month release schedule for the latest runtime versions.

However, Lambda focuses on providing stable long-term support (LTS) versions. The official Lambda runtimes are built around a combination of operating system, programming language, and software libraries that are subject to maintenance and security updates. For example, the Lambda runtime for Java supports the LTS versions Java 8 Corretto and Java 11 Corretto as of April 2022. The Java 17 Corretto version is pending. In addition, there is no provided runtime for non-LTS versions like Java 15 Corretto, Java 16 Corretto, or Java 18 Corretto.

To use other language versions, Lambda allows you to create custom runtimes. Custom runtimes allow builders to provide and configure their own runtimes for running their application code. To enable communication between your custom runtime and Lambda, you can use the runtime interface client library in Java.

With the introduction of modular runtime images in Java 9 (JEP 220), it is possible to include only the Java runtime modules that your application depends on. This reduces the overall runtime size and increases performance, especially during cold-starts. In addition, there are other techniques in Java, like class data sharing and tiered compilation, which allow you to reduce the startup time of your application even further.

To combine those capabilities, this blog post provides an overview for creating and deploying a minified Java runtime on Lambda by using Java 18 Corretto. For step-by-step instructions and prerequisites, refer to the official GitHub example.

Overview of the example

In the following example, you build a custom runtime for a basic Java application that writes request headers to Amazon DynamoDB and is fronted by Amazon API Gateway.

The following diagram summarizes the steps to create the application and the custom runtime:

1. Download the preferred Java version and take advantage of jdeps, jlink and class data sharing to create a minified and optimized Java runtime based on the application code (function.jar).

2. Create a bootstrap file with optimized starting instructions for the application.

3. Package the application code, the optimized Java runtime, and the bootstrap file as a zip file.

4. Deploy the runtime, including the app, to Lambda, for example by using the AWS Cloud Development Kit (AWS CDK).

Steps 1–3 are automated and abstracted via Docker. The following section provides a high-level walkthrough of the build and deployment process. For the full version, see the Dockerfile in the GitHub example.

Creating the optimized Java runtime

1. Download the desired Java version and copy the local application code to the Docker environment and build it with Maven:

2. This step results in an uber-jar (function.jar) that you can use as an input argument for jdeps. The output is a file containing all the Java modules that the function depends on:

3. Create an optimized Java runtime based on those application modules with jlink. Remove unnecessary information from the runtime, for example header files or man-pages:

4. This creates your own custom Java 18 runtime in the /jre18-slim folder. You can apply additional optimization techniques such as Class-Data-Sharing (CDS) to generate a classes.jsa file to accelerate the class loading time of the JVM.
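The four steps above can be sketched as a small build script. Python is used here only as a scripting layer around the JDK tools. The jdeps, jlink, and java options shown are standard JDK flags, but this particular selection, the `target/function.jar` path in the usage note, and the helper names are assumptions for illustration, not the contents of the Dockerfile in the GitHub example.

```python
import subprocess

JAVA_RELEASE = "18"          # assumption: matches the JDK used for the build
RUNTIME_DIR = "/jre18-slim"  # output folder named in the text


def jdeps_command(jar_path):
    """Command that prints the comma-separated modules the uber-jar depends on."""
    return [
        "jdeps",
        "-q",
        "--ignore-missing-deps",
        "--multi-release", JAVA_RELEASE,
        "--print-module-deps",
        jar_path,
    ]


def jlink_command(modules, output_dir=RUNTIME_DIR):
    """Command that assembles a runtime image containing only `modules`."""
    return [
        "jlink",
        "--add-modules", modules,
        "--no-header-files",              # leave out C header files
        "--no-man-pages",                 # leave out man pages
        "--strip-java-debug-attributes",  # leave out debug information
        "--output", output_dir,
    ]


def cds_command(output_dir=RUNTIME_DIR):
    """Command that dumps the default CDS archive (classes.jsa) of the new runtime."""
    return [f"{output_dir}/bin/java", "-Xshare:dump"]


def build_runtime(jar_path):
    """Run jdeps, jlink, and the CDS dump in order; needs a JDK on PATH."""
    modules = subprocess.run(
        jdeps_command(jar_path), check=True, capture_output=True, text=True
    ).stdout.strip()
    subprocess.run(jlink_command(modules), check=True)
    subprocess.run(cds_command(), check=True)
    return modules
```

Calling `build_runtime("target/function.jar")` inside the build container would then produce the slim runtime folder. Passing the jdeps output straight to `--add-modules` is what keeps the image limited to the modules the function actually uses.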

Adding optimized starting instructions

You must tell the Lambda execution environment how to start the application. You can achieve that with a bootstrap file that includes the necessary instructions. In addition, you can define parameters to improve the performance further. For example, you could use tiered compilation and SerialGC.

The following snippet represents an example of a bootstrap file:
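A bootstrap file along the following lines is one possibility. The sketch writes it from Python and marks it executable, which Lambda requires for custom runtimes. `LAMBDA_TASK_ROOT` and `_HANDLER` are environment variables that Lambda sets; the `jre18-slim` folder and `function.jar` come from the text above. The exact JVM flags, the assumption that the jar's main class is the runtime interface client entry point, and the `write_bootstrap` helper are illustrative choices.

```python
import os
import stat

# Shell script that Lambda runs to start the custom runtime.
BOOTSTRAP = """#!/bin/sh
# Start the JVM from the bundled runtime; the flags are illustrative.
exec "$LAMBDA_TASK_ROOT/jre18-slim/bin/java" \\
    -XX:+TieredCompilation \\
    -XX:TieredStopAtLevel=1 \\
    -XX:+UseSerialGC \\
    -jar "$LAMBDA_TASK_ROOT/function.jar" "$_HANDLER"
"""


def write_bootstrap(directory):
    """Write the bootstrap script into `directory` and make it executable."""
    path = os.path.join(directory, "bootstrap")
    with open(path, "w", newline="\n") as handle:
        handle.write(BOOTSTRAP)
    mode = os.stat(path).st_mode
    os.chmod(path, mode | stat.S_IXUSR | stat.S_IXGRP | stat.S_IXOTH)
    return path
```

Stopping tiered compilation at level 1 trades peak throughput for faster startup, and the serial collector avoids the overhead of a concurrent collector in a small, short-lived process. Both are worth measuring against your own workload.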

Packaging the components

Combine the bootstrap file, the custom Java runtime, and the application code in a zip file for later use as the deployment package:

The GitHub example provides a build.sh script to run the above-mentioned process via Docker. This results in a runtime.zip that you can then use as a deployment package.
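As a rough illustration of the packaging step outside Docker, the following sketch zips the three components with Python's standard `zipfile` module. The `package` helper and its default entry names are assumptions based on the file and folder names used in this post.

```python
import os
import zipfile


def package(build_dir, zip_path,
            entries=("bootstrap", "function.jar", "jre18-slim")):
    """Zip the listed files and folders, keeping paths relative to build_dir."""
    with zipfile.ZipFile(zip_path, "w", zipfile.ZIP_DEFLATED) as archive:
        for entry in entries:
            source = os.path.join(build_dir, entry)
            if os.path.isdir(source):
                # Add every file below the folder, e.g. jre18-slim/bin/java.
                for root, _dirs, files in os.walk(source):
                    for name in files:
                        full = os.path.join(root, name)
                        archive.write(full, os.path.relpath(full, build_dir))
            else:
                archive.write(source, entry)
    return zip_path
```

`ZipFile.write` records each file's Unix permission bits in the archive, so an executable `bootstrap` and `bin/java` should stay executable after Lambda unpacks the deployment package.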

Deploying the application with the custom runtime

To deploy the custom runtime, use AWS CDK. This allows you to define the needed infrastructure as code more easily in your favorite programming language.

The following code snippet shows how to create a Lambda function from a custom runtime:

To deploy the application and output the necessary API Gateway URL to invoke the Lambda function, use the following command or use the provided provision_infrastructure.sh script:

Testing the application and validating the example results

After deployment, you can load test the application with the open-source software project Artillery.

The following command creates 120 concurrent invocations of the Lambda function for a duration of 60 seconds. It uses the API Gateway URL that is exported after the AWS CDK successfully deployed the application:

Use CloudWatch Log Insights to query the Lambda logs and gather information about the cold start (initDuration) and duration percentiles:

The results provide an indication of how your application performs with the custom runtime. This is especially helpful when comparing different versions.

- Invocation time (@duration) for both cold and warm starts plus function initialization time (@initDuration) if it is a cold start:

- Function initialization time (@initDuration) only:
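The two measurements listed above can also be approximated offline from exported Lambda `REPORT` log lines. The sketch below is not the CloudWatch Logs Insights query itself but a local stand-in under stated assumptions: the `summarize` and `percentile` helpers are hypothetical names, and percentiles use the simple nearest-rank method, so values may differ slightly from what CloudWatch reports.

```python
import math
import re

# "Duration:" on its own, not the "Billed Duration:" or "Init Duration:" fields.
DURATION = re.compile(r"(?<!Billed )(?<!Init )Duration: ([\d.]+) ms")
INIT_DURATION = re.compile(r"Init Duration: ([\d.]+) ms")


def percentile(values, p):
    """Nearest-rank percentile: smallest value with at least p% of data at or below it."""
    ordered = sorted(values)
    rank = max(1, math.ceil(p / 100 * len(ordered)))
    return ordered[rank - 1]


def summarize(report_lines, percentiles=(50, 90, 99)):
    """Return percentiles for duration plus init time, and for init time alone."""
    totals, inits = [], []
    for line in report_lines:
        if not line.startswith("REPORT"):
            continue
        duration = DURATION.search(line)
        if duration is None:
            continue
        total = float(duration.group(1))
        init = INIT_DURATION.search(line)
        if init is not None:
            # Cold start: record the init time and add it to the invocation time.
            inits.append(float(init.group(1)))
            total += inits[-1]
        totals.append(total)
    return {
        "total": {p: percentile(totals, p) for p in percentiles} if totals else {},
        "init": {p: percentile(inits, p) for p in percentiles} if inits else {},
    }
```

Running `summarize` over the logs of two builds gives a like-for-like view of how a runtime change affects cold starts.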

Conclusion

In this blog post, you learn how to create your own optimized Java runtime for AWS Lambda by using a variety of Java optimization techniques. This allows you to tailor your Java runtime to your application needs.

See the full example on GitHub and make use of your own preferred Java version. Add additional optimization steps in the Dockerfile or tune the parameters in the bootstrap file to optimize the start of the Java virtual machine.

In case you want to re-use your custom runtime in multiple Lambda functions, you can also distribute it via a Lambda layer.

For more serverless learning resources, visit Serverless Land.

LGPD workbook for AWS customers managing personally identifiable information in Brazil

Post Syndicated from Rodrigo Fiuza original https://aws.amazon.com/blogs/security/lgpd-workbook-for-aws-customers-managing-personally-identifiable-information-in-brazil/

AWS is pleased to announce the publication of the Brazil General Data Protection Law Workbook.

The General Data Protection Law (LGPD) in Brazil was first published on 14 August 2018, and started its applicability on 18 August 2020. Companies that manage personally identifiable information (PII) in Brazil as defined by LGPD will have to comply with and attend to the law.

To better help customers prepare and implement controls that focus on LGPD Chapter VII Security and Best Practices, AWS created a workbook based on industry best practices, AWS service offerings, and controls.

Amongst other topics, this workbook covers information security and AWS controls from:

- CIS Controls v8 – framework covering 18 domain controls

- NIST Cybersecurity Framework (CSF) – additional NIST CSF information available to consult

- NIST Privacy Framework – check additional information on mapping between AWS CAF and NIST Privacy Framework

- AWS Cloud Adoption Framework (AWS CAF) – AWS CAF 3.0 now available

- AWS Well-Architected Framework – read through correlation between AWS Well-Architected Framework, AWS CAF and NIST CSF

In combination with the Brazil General Data Protection Law Workbook, customers can use the detailed Navigating LGPD Compliance on AWS whitepaper.

AWS adheres to a shared responsibility model. Customers will have to observe which services offer privacy features and determine their applicability to their specific compliance requirements. Further information about data privacy at AWS can be found at our Data Privacy Center. Specific information about LGPD and data privacy at AWS in Brazil can be found on our Brazil Data Privacy page.

To learn more about our compliance and security programs, see AWS Compliance Programs. As always, we value your feedback and questions; reach out to the AWS Compliance team through the Contact Us page.

If you have feedback about this post, submit comments in the Comments section below.

Want more AWS Security news? Follow us on Twitter.

Portuguese version (English translation)

LGPD Workbook for AWS Customers Managing Personally Identifiable Information in Brazil

AWS is pleased to announce the publication of the Brazil General Data Protection Law Workbook.

The General Data Protection Law (LGPD) was first published in Brazil on 14 August 2018 and became applicable on 18 August 2020. Companies that manage personally identifiable information (PII) as defined in the LGPD will have to comply with and meet the clauses of the law.

To better help customers prepare and implement controls that focus on LGPD Chapter VII, "Security and Best Practices", AWS created a workbook based on industry best practices and on AWS service offerings and controls.

Among other topics, this workbook covers information security and AWS controls from:

- CIS v8.0 – framework containing 18 control domains

- NIST Cybersecurity Framework (CSF) – additional information on NIST CSF available to consult

- NIST Privacy Framework (PF) – check additional information on the mapping between AWS CAF and NIST PF

- AWS Cloud Adoption Framework (AWS CAF) – AWS CAF 3.0 now available

- AWS Well-Architected Framework – read the correlation between AWS Well-Architected Framework, AWS CAF, and NIST CSF

In combination with the Brazil General Data Protection Law Workbook, customers can use the detailed whitepaper Navigating LGPD Compliance on AWS.

AWS adheres to a shared responsibility model. Customers will have to observe which services offer privacy features and determine their applicability to their specific compliance requirements. More information about data privacy at AWS can be found at our Data Privacy Center. Additional information about LGPD and data privacy at AWS in Brazil can be found on our Brazil Data Privacy page.

To learn more about our compliance and security programs, see AWS Compliance Programs. As always, we value your feedback and questions; reach out to the AWS Compliance team through the Contact Us page.

If you have feedback about this post, submit comments in the Comments section below.

Want more AWS Security news? Follow us on Twitter.

The Experiment Podcast: What Happens to Abortion in a Post-Roe America?

Post Syndicated from The Atlantic original https://www.youtube.com/watch?v=DILWCxkbO5s

AWS Week in Review – April 25, 2022

Post Syndicated from Jeff Barr original https://aws.amazon.com/blogs/aws/aws-week-in-review-april-25-2022/

The first in this year’s series of AWS Summits took place in San Francisco this past week and we had a bunch of great announcements. Let’s take a closer look…

Last Week’s Launches

Here are some launches that caught my eye this week:

AWS Migration Hub Orchestrator – Building on AWS Migration Hub (launched in 2017), this service helps you to reduce migration costs by automating manual tasks, managing dependencies between tools, and providing better visibility into the migration progress. It makes use of workflow templates that you can modify and extend, and includes a set of predefined templates to get you started. We are launching with support for applications based on SAP NetWeaver with HANA databases, along with support for rehosting of applications using AWS Application Migration Service (AWS MGN). To learn more, read Channy’s launch post: AWS Migration Hub Orchestrator – New Migration Orchestration Capability with Customizable Workflow Templates.

Amazon DevOps Guru for Serverless – This is a new capability for Amazon DevOps Guru, our ML-powered cloud operations service which helps you to improve the availability of your application using models informed by years of Amazon.com and AWS operational excellence. This launch helps you to automatically detect operational issues in your Lambda functions and DynamoDB tables, giving you actionable recommendations that help you to identify root causes and fix issues as quickly as possible, often before they affect the performance of your serverless application. Among other insights you will be notified of concurrent executions that reach the account limit, lower than expected use of provisioned concurrency, and reads or writes to DynamoDB tables that approach provisioned limits. To learn more and to see the full list of insights, read Marcia’s launch post: Automatically Detect Operational Issues in Lambda Functions with Amazon DevOps Guru for Serverless.

AWS IoT TwinMaker – Launched in preview at re:Invent 2021 (Introducing AWS IoT TwinMaker), this service helps you to create digital twins of real-world systems and to use them in applications. There’s a flexible model builder that allows you to create workspaces that contain entity models and visual assets, connectors to bring in data from data stores to add context, a console-based 3D scene composition tool, and plugins to help you create Grafana and Amazon Managed Grafana dashboards. To learn more and to see AWS IoT TwinMaker in action, read Channy’s post, AWS IoT TwinMaker is now Generally Available.

AWS Amplify Studio – Also launched in preview at re:Invent 2021 (AWS Amplify Studio: Visually build full-stack web apps fast on AWS), this is a point-and-click visual interface that simplifies the development of frontends and backends for web and mobile applications. During the preview we added integration with Figma to make it easier for designers and front-end developers to collaborate on design and development tasks. As Steve described in his post (Announcing the General Availability of AWS Amplify Studio), you can easily pull component designs from Figma, attach event handlers, and extend the components with your own code. You can modify default properties, override child UI elements, extend collection items with additional data, and create custom business logic for events. On the visual side, you can use Figma’s Theme Editor plugin to match UI components to your organization’s brand and style.

Amazon Aurora Serverless v2 – Amazon Aurora separates compute and storage, and allows them to scale independently. The first version of Amazon Aurora Serverless was launched in 2018 as a cost-effective way to support workloads that are infrequent, intermittent, or unpredictable. As Marcia shared in her post (Amazon Aurora Serverless v2 is Generally Available: Instant Scaling for Demanding Workloads), the new version is ready to run your most demanding workloads, with instant, non-disruptive scaling, fine-grained capacity adjustments, read replicas, Multi-AZ deployments, and Amazon Aurora Global Database. You pay only for the capacity that you consume, and can save up to 90% compared to provisioning for peak load.

Amazon SageMaker Serverless Inference – Amazon SageMaker already makes it easy for you to build, train, test, and deploy your machine learning models. As Antje described in her post (Amazon SageMaker Serverless Inference – Machine Learning Inference without Worrying about Servers), different ML inference use cases pose varied requirements on the infrastructure that is used to host the models. For example, applications that have intermittent traffic patterns can benefit from the ability to automatically provision and scale compute capacity based on the volume of requests. The new serverless inferencing option that Antje wrote about provides this much-desired automatic provisioning and scaling, allowing you to focus on developing your model and your inferencing code without having to manage or worry about infrastructure.

Other AWS News

Here are a few other launches and news items that caught my eye:

AWS Open Source News and Updates – My colleague Ricardo Sueiras writes this weekly open-source newsletter where he highlights new open source projects, tools, and demos from the AWS community. Read edition #109 here.

Amazon Linux AMI – An Amazon Linux 2022 AMI that is optimized for Amazon ECS is now available. Read the What’s New to learn more.

AWS Step Functions – AWS Step Functions now supports over 20 new AWS SDK integrations and over 1000 new AWS API actions. Read the What’s New to learn more.

AWS CloudFormation Registry – There are 35 new resource types in the AWS CloudFormation Registry, including AppRunner, AppStream, Billing Conductor, ECR, EKS, Forecast, Lightsail, MSK, and Personalize. Check out the full list in the What’s New.

Upcoming AWS Events

The AWS Summit season is in full swing – The next AWS Summits are taking place in London (on April 27), Madrid (on May 4-5), Korea (online, on May 10-11), and Stockholm (on May 11). AWS Global Summits are free events that bring the cloud computing community together to connect, collaborate, and learn about AWS. Summits are held in major cities around the world. Besides in-person summits, we also offer a series of online summits across the regions. Find an AWS Summit near you, and get notified when registration opens in your area.

.NET Enterprise Developer Day EMEA – .NET Enterprise Developer Day EMEA 2022 is a free, one-day virtual conference providing enterprise developers with the most relevant information to swiftly and efficiently migrate and modernize their .NET applications and workloads on AWS. It takes place online on April 26. Attendees can also opt-in to attend the free, virtual DeveloperWeek Europe event, taking place April 27-28.

AWS Innovate – Data Edition Americas – AWS Innovate Online Conference – Data Edition is a free virtual event designed to inspire and empower you to make better decisions and innovate faster with your data. You learn about key concepts, business use cases, and best practices from AWS experts in over 30 technical and business sessions. This event takes place on May 11.

That’s all for this week. Check back again next week for another AWS Week in Review!

— Jeff;

AWS welcomes new Trans-Atlantic Data Privacy Framework

Post Syndicated from Michael Punke original https://aws.amazon.com/blogs/security/aws-welcomes-new-trans-atlantic-data-privacy-framework/

Amazon Web Services (AWS) welcomes the new Trans-Atlantic Data Privacy Framework (Data Privacy Framework) that was agreed to, in principle, between the European Union (EU) and the United States (US) last month. This announcement demonstrates the common will between the US and EU to strengthen privacy protections in trans-Atlantic data flows, and will supplement the safeguards AWS and other companies already offer today. AWS commits to undertaking certification in accordance with the Data Privacy Framework as it is adopted, and we look forward to our customers and their end users benefiting from the new safeguards.

The Data Privacy Framework, once finalized, will re-establish a mechanism for certified businesses to conduct trans-Atlantic data transfers between the US and EU. According to the announcement, the new Data Privacy Framework will address the concerns raised by the Court of Justice of the European Union (CJEU) when it invalidated the EU-US Privacy Shield in its Schrems II decision in July 2020. The Data Privacy Framework will adopt new safeguards to ensure that US intelligence activities are limited to what is necessary and proportionate to protect national security, and also create a new redress system to address the complaints of EU citizens.

As one of the architects of the Trusted Cloud Principles (a cloud-industry initiative to help safeguard the interests of organizations and the basic rights of individuals using cloud), AWS fully supports improved rules and regulations that advance privacy and security protections for any organization that wants to use cloud technologies and maintain control of their data.

While organizations using AWS technology have been able to conduct trans-Atlantic data transfers even after Schrems II, the new Data Privacy Framework will ensure further clarity and agility for our customers in their data transfer assessments. This will help our customers unlock value in terms of growth, digital transformation, and global competitive advantage.

Organizations that want to trade with speed and agility to and from the European Economic Area (EEA) need certainty that their goals to innovate and invest in the best technology for growth are supported by international frameworks promoting privacy across borders. Once finalized, the new Data Privacy Framework, coupled with our continued commitment to privacy at AWS, will provide even more simplicity and confidence for customers who choose to transfer data to and from Europe when using AWS services.

More than ever, our collective security requires mutual trust across both sides of the Atlantic and beyond. We therefore look forward to participating in, and remain committed to, the finalization of the Data Privacy Framework. We also support efforts to build broad consensus around the appropriate balance between privacy and security in forums such as the OECD’s workstream on trusted government access to data held by the private sector.

About AWS privacy and security

AWS is committed to protecting customer data. We continue to help customers successfully meet evolving European laws and standards, and achieve the highest levels of security, privacy, and resilience. AWS already offers comprehensive technical, operational, and contractual measures to protect and transfer customer content outside of Europe in compliance with the General Data Protection Regulation (GDPR) and the Schrems II ruling. Customers can also choose to store their content in the European Union by selecting any one or more of our regions in France, Germany, Ireland, Italy, Sweden, and later in 2022, Spain, with the confidence that their data stays in the AWS Region they select. In addition, customers can use an advanced set of access, encryption, and logging features to maintain full control of their content.

Today, AWS customers can also transfer their data outside of the European Economic Area (EEA) by relying on the new Standard Contractual Clauses (SCCs) included in the AWS Data Processing Addendum (DPA), which is supplemented by our strengthened contractual commitments to protect customer data, such as challenging law enforcement requests that conflict with EU law.

We also have a wide variety of tools available to enhance the security of cross-border data transfers for customers with global services. For example, AWS CloudHSM and AWS Key Management Service (AWS KMS) allow customers to encrypt data in transit and at rest, and securely generate and manage control of encryption keys. By building on top of the AWS Nitro System, our answer to confidential computing, which includes the use of specialized hardware and associated firmware to protect customer code and data during processing from outside access, customers can further secure data during processing, and thereby enhance confidentiality and privacy.

AWS has achieved internationally recognized certifications and attestations that demonstrate compliance with rigorous international privacy and security standards, including the Cloud Infrastructure Services in Europe (CISPE) Data Protection Code of Conduct, Cloud Computing Compliance Controls Catalog (C5), ISO27018, and the Esquema Nacional de Seguridad (ENS, Spain).

As well as benefitting from these existing measures, our extensive online resources can help customers more easily complete data-transfer assessments and fulfill their GDPR compliance requirements, in accordance with the European Data Protection Board (EDPB) recommendations. This includes regular Information Request Reports showing requests to access data from governments and our responses.

Further information

Our technical paper Navigating Compliance with EU Data Transfer Requirements and AWS’s Privacy Features for AWS Services provide further information to help customers assess the right services for their individual needs.

If you have questions or need more information, visit our EU Data Protection page.

If you have feedback about this post, submit comments in the Comments section below. If you have questions about this post, contact AWS Support.

Want more AWS Security news? Follow us on Twitter.

Being friendly: Friendly forks 101

Post Syndicated from Lessley Dennington original https://github.blog/2022-04-25-the-friend-zone-friendly-forks-101/

This is the first post in a two-part series describing friendly forks and alternative strategies for managing them. Stay tuned for part two coming in May!

This post covers what a friendly fork is, why they are beneficial, and how they differ from a divergent fork. We’ll also look at some examples from the wild and provide details on three of our favorite friendly forks of the git/git repository.

To fork or not to fork

Most developers are familiar with the concept of working with source code in repositories. However, what should you do when you want to make one or more major changes to a repository that you do not own? Two options are to submit feature requests or to contribute the features you need yourself. This is a very common approach in open source software, and, when it goes well, it can lead to productive collaboration and useful results for all parties.

But what if the proposed features are not accepted into the repository? What if they were never intended to be contributed back to the original project? If you (or your company) have a strong need for these features, creating a friendly fork of the repository could be the right choice.

What is a friendly fork?

A friendly fork is a long-lived fork that complements its upstream repository (i.e., the repository from which it was forked) with customizations targeted to a subset of users. Typically, features from the friendly fork are contributed back to the upstream repository through a process known as upstreaming. If that is the case, developers working in the friendly fork sustain relationships with the maintainers of the upstream repository (this is the friend zone we’re so fond of!) to facilitate this flow of features and to improve the software for both user bases. It is also possible, however, for a friendly fork to simply take regular updates from its upstream repository with no maintainer interaction. Friendly forks may or may not eventually re-merge with the upstream repository.

Below are some examples of existing friendly forks of the git/git repository (which are maintained by folks at GitHub) and their purposes.

- git-for-windows/git: hosts Windows-specific features of Git. It also sometimes receives early features which are subsequently upstreamed into git/git.

- microsoft/git: focuses on features to support monorepos, some of which are subsequently upstreamed into git/git.

- github/git: powers GitHub’s backend. It includes GitHub-specific changes, like extra instrumentation specific to GitHub’s infrastructure, but also serves as a staging ground for new features before they are submitted upstream.

git/git is definitely not the only repository with friendly forks. Examples of friendly forks created from other repos include:

- MSYS2: a fork of Cygwin that provides an environment for building, installing, and running native Windows software.

- CentOS: a fork of Red Hat Enterprise Linux (RHEL) created in 2004 to offer a RHEL-like experience for the community. (Interestingly, Red Hat now owns the CentOS trademarks).

It is important to note that not all forks can be considered friendly. There are also divergent forks, which are typically created due to insurmountable disagreements in a community caused by disparate goals or personality conflicts. Divergent forks often become so different from their upstream repositories that sharing code becomes difficult, if not impossible. While it is good to know that divergent forks are a thing you may encounter in the wild, we emphatically believe in the power of the friend zone and will center our focus for the rest of this series on getting and keeping forks there.

A tale of three forks

Three of the friendly fork examples provided above are based on git/git: git-for-windows/git, microsoft/git, and github/git. Each of these forks has a unique history that contributes to the strategy used to maintain it. We will dedicate the remainder of this post to describing the history and purpose of each fork.

git-for-windows/git

git-for-windows/git is the oldest of the forks we discuss. It was created in 2007 to provide the adjustments Git needed to run on Windows. While it may seem odd that Windows-specific features weren’t just added to the git/git repository, forking was deemed necessary because the git/git project was (and still is) run by experts in the Unix systems domain. And Windows, of course, falls outside the scope of that expertise.

Although this fork was originally intended to be short-lived, it soon became clear that Windows support would be an ongoing community need that would require a permanent fork. Thus, development in git-for-windows/git continues in earnest today.

Because it was created to give Windows users the option of using Git for version control, the main purposes of git-for-windows/git are:

- Provide a seamless, pain-free experience for Windows users.

- Separation of concerns (in other words, Windows-specific and Unix-specific features are contributed in different repositories, while shared features can easily flow between the repositories).

As with each fork we discuss in this post, there are some use cases for which it makes sense for new features to be contributed to the fork before they are added upstream. FS Monitor is an example of this in which the Request for Comments went to the git/git mailing list, but early implementations of the feature were merged into git-for-windows/git for rapid testing.

microsoft/git

microsoft/git began as a private fork of git-for-windows/git, with the initial purpose of supporting Microsoft-internal products. However, it was open-sourced in 2017, and, as a result, its goals today are much more general and community-oriented:

- Facilitate easy dogfooding/quick releases of new features for GitHub’s monorepo customers.

- As with git-for-windows/git, determine which of these features make sense to contribute back upstream and submit/monitor them on the mailing list.

An example feature that was introduced to microsoft/git prior to upstreaming is the sparse index. This was done to speedily get this new feature into the hands of monorepo customers who needed it most. After that was done, the feature was gradually introduced and refined upstream.

github/git

github/git, our final fork for discussion, actually did not begin as a fork. In the early days of GitHub, we carried a handful of changes on top of new Git releases. Because there were only a few, we stored them as *.patch files that got applied on top of each new release before deployment. Over time, however, our custom changes became both more numerous and more applicable to being contributed to git/git. It became clear that a full-fledged fork would be beneficial to improve management, workflows, and our ability to contribute back to upstream. Thus, in 2012, the official github/git fork was born.

Note that github/git differs from the above forks in that it is a private friendly fork, while the other two are public. Private friendly forks can be very beneficial to organizations. For example, they can be an excellent testing ground for new features, as they allow you to be confident code has been battle-tested internally and works before submitting publicly upstream. They can also be less beholden to the upstream release cadence, which helps ensure stability for the product.

To this day, this fork serves the same purpose of powering GitHub’s infrastructure. It fulfills our need to support features specialized to this infrastructure and is also used as a “staging ground” for new features before submitting them to the open source git/git repository. Specific examples of the latter use include bitmaps and multi-pack bitmaps.

That’s all for now!

In this post, we’ve discussed what a friendly fork is and how friendly forks differ from divergent forks. We’ve learned about three different friendly forks of the git/git repository and their purposes for existing. Thanks for sticking with us this far, and be sure to keep your eyes peeled for our second post in the “friend zone” series, in which we’ll talk about how these forks are managed and how you can adapt their strategies to your very own friendly fork!

Ask an Expert – Sustainability

Post Syndicated from Margaret O'Toole original https://aws.amazon.com/blogs/architecture/ask-an-expert-sustainability/

In this first edition of Ask an Expert, we chat with Margaret O’Toole, Worldwide Tech Leader – Environmental Sustainability and Joseph Beer, Worldwide Tech Leader – Power and Utilities about sustainability solutions and tools to implement sustainability practices into IT design.

When putting together an AWS architecture to solve business problems specifically for sustainability-focused customers, what are some of the considerations?

A core idea of sustainability comes down to efficiency: how can we do the most work with the fewest number of resources? In this case, you want efficiency when you design and build the solution and also when you apply and operate it.

In broad strokes, there are two main things to consider. First, you want to optimize technology usage to reduce impact. Second, you want to find and use the best mix of technology to support sustainability. These objectives must also delight your customers, constituents, and stakeholders as you meet your business objectives in the most cost effective and expeditious way possible.

However, to be successful in combining technology and sustainability, you must consider the culture change of the sustainability transformation. Sustainability must become part of each person’s job function. When it comes to responsibility around sustainability at AWS, we think about it through two lenses.

First, we have the sustainability OF the AWS Cloud, which is our responsibility at AWS. This covers the work we do around purchasing renewable energy, operating efficiently, reducing water consumption in the data centers, and so on. There is more information on sustainability of the AWS Cloud on our sustainability page.

Then, there’s sustainability IN the cloud, which focuses on customers and their AWS usage. This is again focused on efficiency, mostly how to optimize existing patterns of user consumption, data access, software and development patterns, and hardware utilization.

In a related but slightly different vein, we also talk about sustainability THROUGH the cloud. This is how our customers use AWS to work on sustainability projects that help them meet their sustainability goals. This can include anything from carbon tracking or accounting to route optimization for fleets to using machine learning (ML) to reduce packaging and anything in between.

What are the general architecture pattern trends for sustainability in the cloud?

Solutions designed with sustainability in mind aim to be highly efficient. An architect wanting to optimize for sustainability looks for opportunities within user patterns, software patterns, development/test patterns, hardware patterns, and data patterns.

There is no one-size-fits-all way to optimize for sustainability, but the core themes are maximizing utilization and reducing waste or duplication. Most customers start with relatively easy things to accomplish. These typically include things like using the AWS Instance Scheduler to turn off compute when it will not be used or comparing cost and utilization reports to find hot spots to reduce utilization.

Another way to optimize for sustainability is to incorporate AWS Managed Services (AMS) as much as possible (many of these are also serverless). AMS not only increases the speed and efficiency of your design and build time and lowers your overhead to run, but also includes automatic scaling as part of the service, which increases compute efficiency. Where AMS is not applicable, you can often configure automatic scaling into the solutions themselves. Automate everything, including your continuous integration and continuous delivery/deployment (CI/CD) code pipeline, data analytics and artificial intelligence (AI)/ML pipelines, and infrastructure builds where you are not using AMS.

And finally, include ongoing AWS Well-Architected reviews and continuously review and optimize your usage of AMS and the size and mix of your compute and storage in your standard operating procedures.

What are the key AWS-based sustainability solutions you are seeing customers ask for across industries and unique to specific industries?

Almost all industries have a set of shared challenges. This generally includes things like facilities or building management, design or optimization, and carbon tracking/footprinting. To help with this, customers must first understand the impact of their facilities, operations, or supply chain. Many customers use AWS services for ingestion, aggregation, and transformation of their real-world data. Once the data is collected and customers understand their relative impact, this data can be used to form models, which act as the basis for optimization. Technologies such as AWS IoT Core, Amazon Managed Blockchain, and AWS Lake Formation are crucial here.

For industries like power and utilities, there are more targeted solutions. Many of these are aimed at supporting the transition to electric vehicles (EVs). Smart EV charging, for example, uses the AWS Cloud and AI/ML to lessen the aggregate impact to the grid that may occur because of EV charging peaks and ramp ups. This helps avoid requiring natural gas at peak times. Amazon Forecast, a fully managed service that delivers highly accurate forecasts, can be useful in the case of short-term electric load forecasting. Grid voltage optimization is another solution that allows utilities to forecast usage requests and more accurately provide the desired voltage to their customers.

Within supply chains, customers use AWS to support traceability and carbon dashboarding to nudge suppliers toward greener energy. Customers commonly look for ways to track and trace throughout their supply chains, either to measure and reduce scope 3 emissions or to optimize their logistics network.

What’s your outlook for sustainability, and what role will the cloud play in future development efforts?

The cloud is critical to solving the sustainability challenges that businesses and governments face right now. It gives you the flexibility to use resources only when you need them, coupled with immense computing power. Thus, the cloud will be an essential tool in solving many data challenges, such as reporting, measuring, predicting, and analyzing trends.

Migration to the cloud is essential to optimizing workloads and handling massive amounts of data. We can see this directly in how Boom used AWS HPC to support the creation of the world’s fastest and most sustainable aircraft. Additionally, FLSmidth is pursuing sustainable, technology-driven productivity under MissionZero. This initiative is working to achieve zero emissions and zero waste in cement production and mining by 2030 with the help of AWS high performance computing (HPC).

Do you see different trends in sustainability in the cloud versus on-premises?

The usage pattern is different. With the cloud you can use what you want, whenever you want, which allows customers to drive utilization up. This type of efficiency is critical. It’s why 451 Research found that the same task can be completed on AWS with an 88% lower carbon footprint compared to the average surveyed US enterprise data center.

The cloud offers technology that wouldn’t be available on premises, such as large GPU-backed instances capable of processing huge amounts of data in hours that would take weeks on premises. It can also ingest massive streams of data from energy- and resource-consuming and producing assets to optimize their performance and environmental impact in near-real-time.

With the cloud, you have the flexibility and the power to move quickly through research and development to solve sustainability challenges. You can accelerate the development process of new ideas and solutions, which will be essential for the transformation to a carbon neutral, climate positive economy.

Yasmin Elhady | Comedy, Representation & Beyond | Talks at Google

Post Syndicated from Talks at Google original https://www.youtube.com/watch?v=nX-kq-9Er3w

What does the James Webb Use to See the Universe?

Post Syndicated from Curious Droid original https://www.youtube.com/watch?v=BVByTTYJF3M

[$] Extending in-kernel TLS support

Post Syndicated from original https://lwn.net/Articles/892216/

The kernel gained support for the TLS protocol in the 4.13 release, which came out in September 2017. That support is incomplete, though, in that it does not provide the kernel with a way to initiate a TLS connection on its own. Instead, user space creates a socket and performs the TLS handshake before handing the socket to the kernel, which can then transfer data using TLS. The situation may be about to change as a result of this patch series from Chuck Lever, though user space will still need to remain in the picture.

Velociraptor Version 0.6.4: Dead Disk Forensics and Better Path Handling Let You Dig Deeper

Post Syndicated from Carlos Canto original https://blog.rapid7.com/2022/04/25/velociraptor-version-0-6-4-dead-disk-forensics-and-better-path-handling-let-you-dig-deeper-2/

Rapid7 is pleased to announce the release of Velociraptor version 0.6.4 – an advanced, open-source digital forensics and incident response (DFIR) tool that enhances visibility into your organization’s endpoints. This release has been in development and testing for several months now and has a lot of new features and improvements.

The main focus of this release is in improving path handling in VQL to allow for more efficient path manipulation. This leads to the ability to analyze dead disk images, which depends on accurate path handling.

Path handling

A path is a simple concept – it’s a string similar to /bin/ls that can be passed to an OS API to have it operate on the file in the filesystem (e.g. read/write it).

However, it turns out that paths are much more complex than they first seem. For one thing, paths have an OS-dependent separator (usually / or \). Some filesystems support path separators inside a filename too! To read about the details, check out Paths and Filesystem Accessors, but one of the most interesting things with the new handling is that stacking filesystem accessors is now possible. For example, it’s possible to open a docx file inside a zip file inside an ntfs drive inside a partition.
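To make the separator problem concrete, here is a minimal Python sketch using the standard library's `pathlib`. It does not show Velociraptor's own path type or VQL; it only illustrates why treating a path as a list of components is more robust than treating it as a string. The example paths are made up for illustration.

```python
from pathlib import PurePosixPath, PureWindowsPath

# The same idea (an ordered list of components) is written with a
# different separator depending on the OS convention.
posix = PurePosixPath("/bin/ls")
windows = PureWindowsPath(r"C:\Windows\System32\cmd.exe")

posix_parts = posix.parts      # ('/', 'bin', 'ls')
windows_parts = windows.parts  # ('C:\\', 'Windows', 'System32', 'cmd.exe')

# On POSIX a backslash is an ordinary filename character, so this path
# has a single final component named 'a\b', not two components.
odd = PurePosixPath("/tmp/a\\b")
odd_parts = odd.parts  # ('/', 'tmp', 'a\\b')
```

Splitting the last path naively on `\` would produce the wrong components, which is the kind of ambiguity that component-based path handling avoids.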

Dead disk analysis

Velociraptor offers top-notch forensic analysis capability, but it’s been primarily used as a live response agent. Many users have asked if Velociraptor can be used on dead disk images. Although dead disk images are rarely used in practice, sometimes we do encounter these in the field (e.g. in cloud investigations).

Previously, Velociraptor couldn’t be used easily on dead disk images without having to carefully tailor and modify each artifact. In the 0.6.4 release, we now have the ability to emulate a live client from dead disk images. We can use this feature to run the exact same VQL artifacts that we normally do on live systems, but against a dead disk image. If you’d like to read more about this new feature, check out Dead Disk Forensics.

Resource control

When collecting artifacts from endpoints, we need to be mindful of the overall load that collection will impose on endpoints. For performance-sensitive servers, our collection can cause operational disruption. For example, running a yara scan over the entire disk would utilize a lot of IO operations and may use a lot of CPU resources. Velociraptor will then compete for these resources with the legitimate server functionality and may cause degraded performance.

Previously, Velociraptor had a setting called Ops Per Second, which could be used to run the collection “low and slow” by limiting the rate at which notional “ops” were utilized. In reality, this setting was only ever used for Yara scans because it was hard to calculate an appropriate setting: Notional ops didn’t correspond to anything measurable like CPU utilization.

In 0.6.4, we’ve implemented a feedback-based throttler that can control VQL queries to a target average CPU utilization. Since CPU utilization is easy to measure, it’s a more meaningful control. The throttler actively measures the Velociraptor process’s CPU utilization, and when the simple moving average (SMA) rises above the limit, the query is paused until the SMA drops below the limit.
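The feedback loop described above can be sketched in a few lines of Python. This is not Velociraptor's implementation (which is not shown in this post); the class name `CpuThrottler`, the window size, and the sample values are all assumptions chosen for illustration. The sketch only shows the idea of pausing while a simple moving average of CPU samples is above a limit.

```python
from collections import deque


class CpuThrottler:
    """Hypothetical throttler: pause while the SMA of CPU samples exceeds a limit."""

    def __init__(self, limit_percent: float, window: int = 5) -> None:
        self.limit_percent = limit_percent
        # A bounded deque keeps only the newest `window` samples.
        self.samples = deque(maxlen=window)

    def add_sample(self, cpu_percent: float) -> None:
        self.samples.append(cpu_percent)

    def sma(self) -> float:
        # Simple moving average over the samples currently in the window.
        return sum(self.samples) / len(self.samples) if self.samples else 0.0

    def should_pause(self) -> bool:
        return self.sma() > self.limit_percent


# Made-up CPU readings: a burst of activity followed by idle time.
throttler = CpuThrottler(limit_percent=50.0, window=3)
decisions = []
for sample in [20.0, 90.0, 90.0, 10.0, 10.0]:
    throttler.add_sample(sample)
    decisions.append(throttler.should_pause())
```

Because the decision is based on an average rather than a single reading, the query in this sketch stays paused for one extra sample after the burst ends and resumes only once the average falls back under the limit.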

The above screenshot shows the latest resource controls dialog. You can now set a target CPU utilization between 0 and 100%. The image below shows how that looks in the Windows task manager.

By reducing the allowed CPU utilization, Velociraptor will be slowed down, so collections will take longer. You may need to increase the collection timeout to correspond with the extra time it takes.

Note that the CPU limit refers to a percentage of the total CPU resources available on the endpoint. So, for example, if the endpoint is a 2-core cloud instance, a 50% utilization refers to 1 full core. But on a 32-core server, a 50% utilization is allowed to use 16 cores!
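The arithmetic is simple but easy to overlook when the same limit is applied across a mixed fleet. A small Python sketch (the helper name `allowed_cores` is hypothetical, not part of Velociraptor) makes the two examples explicit:

```python
def allowed_cores(total_cores: int, cpu_limit_percent: float) -> float:
    """Convert a percentage-of-total CPU limit into an equivalent number of cores."""
    if not 0 <= cpu_limit_percent <= 100:
        raise ValueError("cpu_limit_percent must be between 0 and 100")
    return total_cores * cpu_limit_percent / 100


small_instance = allowed_cores(2, 50)   # 1.0 core
large_server = allowed_cores(32, 50)    # 16.0 cores
```

If the goal is to cap usage at roughly one core everywhere, the percentage would need to be chosen per endpoint rather than fleet-wide.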

IOPS limits

On some cloud resources, IO operations per second (IOPS) are more important than CPU loading since cloud platforms tend to rate limit IOPS. So if Velociraptor uses many IOPS (e.g. in Yara scanning), it may affect the legitimate workload.

Velociraptor now offers limits on IOPS which may be useful for some scenarios. See for example here and here for a discussion of these limits.

The offline collector resource controls

Many people use the Velociraptor offline collector to collect artifacts from endpoints that they’re unable to install a proper client/server architecture on. In previous versions, there was no resource control or time limit imposed on the offline collector, because it was assumed that it would be used interactively by a user.

However, experience shows that many users use automated tools to push the offline collector to the endpoint (e.g. an EDR or another endpoint agent), and therefore it would be useful to provide resource controls and timeouts to control Velociraptor acquisitions. The below screenshot shows the new resource control page in the offline collector wizard.

GUI changes

Version 0.6.4 brings a lot of useful GUI improvements.

Notebook suggestions

Notebooks are an excellent tool for post processing and analyzing the collected results from various artifacts. Most of the time, similar post processing queries are used for the same artifacts, so it makes sense to allow notebook templates to be defined in the artifact definition. In this release, you can define an optional suggestion in the artifact yaml to allow a user to include certain cells when needed.

The following screenshot shows the default suggestion for all hunt notebooks: Hunt Progress. This cell queries all clients in a hunt and shows the ones with errors, running and completed.

Multiple OAuth2 authenticators

Velociraptor has always had SSO support to allow strong two-factor authentication for access to the GUI. Previously, however, Velociraptor only supported one OAuth2 provider at a time. Users had to choose between Google, GitHub, Azure, or OIDC (e.g. Okta) for the authentication provider.

This limitation is problematic for some organizations that need to share access to the Velociraptor console with third parties (e.g. consultants need to provide read-only access to customers).

In 0.6.4, Velociraptor can be configured to support multiple SSO providers at the same time. So an organization can provide access through Okta for their own team members at the same time as Azure or Google for their customers.

The Velociraptor knowledge base

Velociraptor is a very powerful tool. Its flexibility means that it can do things that you might have never realized it can! For a while now, we’ve been thinking about ways to make this knowledge more discoverable and easily available.

Many people ask questions on the Discord channel and learn new capabilities in Velociraptor. We want to try a similar format to help people discover what Velociraptor can do.

The Velociraptor Knowledge Base is a new area on the documentation site that allows anyone to submit small (1-2 paragraphs) tips about how to do a particular task. Knowledge base tips are phrased as questions to help people search for them. Provided tips and solutions are short, but they may refer users to more detailed information.

If you learned something about Velociraptor that you didn’t know before and would like to share your experience to make the next user’s journey a little bit easier, please feel free to contribute a small note to the knowledge base.

Importing previous artifacts

Updating the VQL path handling in 0.6.4 introduces a new column called OSPath (replacing the old FullPath column), which wasn’t present in previous versions. While we attempt to ensure that older artifacts should continue to work on 0.6.4 clients, it’s possible that the new VQL artifacts built into 0.6.4 won’t work correctly on older versions.

To make migration easier, 0.6.4 comes built in with the Server.Import.PreviousReleases artifact. This server artifact will load all the artifacts from a previous release into the server, allowing you to use those older versions with older clients.

Try it out!

If you’re interested in the new features, take Velociraptor for a spin by downloading it from our release page. It’s available for free on GitHub under an open source license.

As always, please file bugs on the GitHub issue tracker or submit questions to our mailing list by emailing [email protected]. You can also chat with us directly on our Discord server.

Täht: The state of fq_codel and sch_cake worldwide

Post Syndicated from original https://lwn.net/Articles/892556/

Dave Täht has put together a summary of the state of fair queuing and the fight against bufferbloat in general.

“On a very positive note, while it might seem the negatives are overwhelming in the list above, I’m confident that there are billions of devices for which fq_codel is doing a good job. I’m confident there is a rising tide of clued system administrators and users applying smart queue management in the right places at the right times. There’s more than enough products on the market already that have the right stuff in them to make better networks a matter of merely recognising the problem and applying the fix.”

Email Routing Insights

Post Syndicated from Joao Sousa Botto original https://blog.cloudflare.com/email-routing-insights/

Have you ever wanted to try a new email service but worried it might lead to you missing any emails? If you have, you’re definitely not alone. Some of us email ourselves to make sure it reaches the correct destination, others don’t rely on a new address for anything serious until they’ve seen it work for a few days. In any case, emails often contain important information, and we need to trust that our emails won’t get lost for any reason.

To help reduce these worries about whether emails are being received and forwarded – and for troubleshooting if needed – we are rolling out a new Overview page to Email Routing. On the Overview tab people now have full visibility into our service and can see exactly how we are routing emails on their behalf.

Routing Status and Metrics

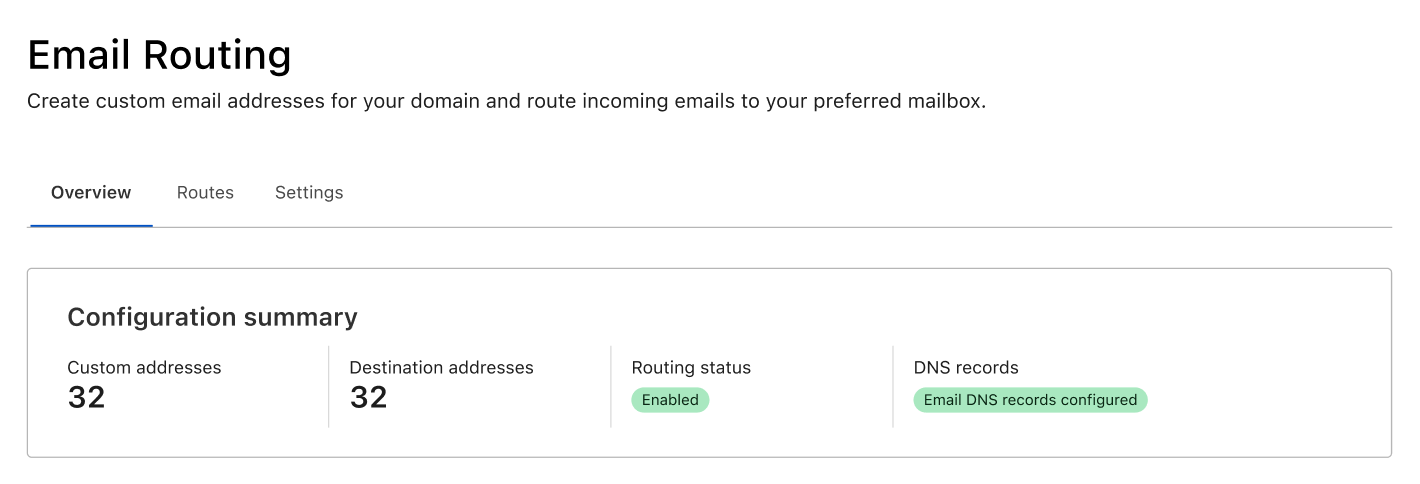

The first thing you will see in the new tab is an at-a-glance view of the service. This includes the routing status (to know if the service is configured and running), whether the necessary DNS records are configured correctly, and the number of custom and destination addresses on the zone.

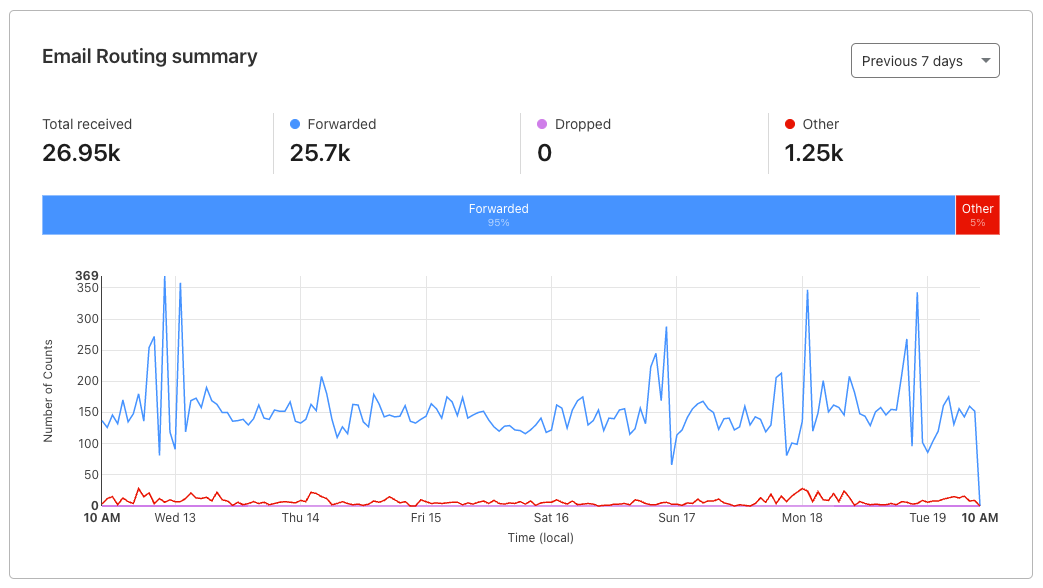

Below the configuration summary, you will see more advanced statistics about the number of messages received on your custom addresses, and what happened to those messages. You will see information about the number of emails forwarded or dropped by Email Routing (based on the rules you created), and the number that fall under other scenarios such as being rejected by Email Routing (due to errors, not passing security checks or being considered spam) or rejected by your destination mailbox. You now have the exact counts and a chart, so that you can track these metrics over time.

Activity Log

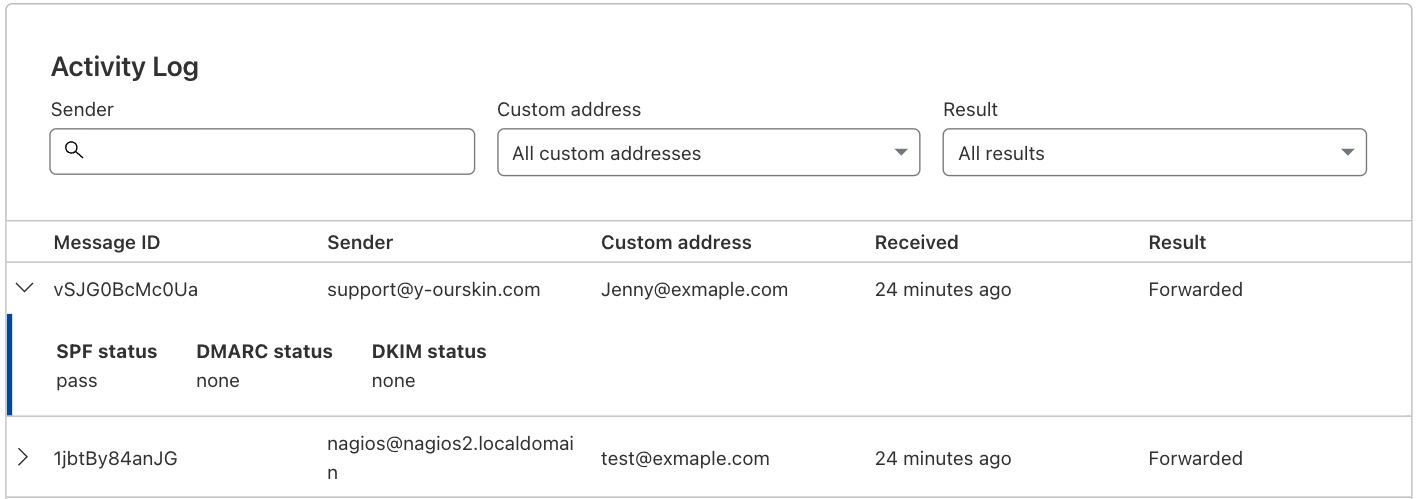

On the Cloudflare Email Routing tab you’ll also see the Activity Log, where you can drill deeper into specific behaviors. These logs show you details about the email messages that reached one of the custom addresses you have configured on your Cloudflare zone.

For each message the logs will show you the Message ID, Sender, Custom Address, when Cloudflare Email Routing received it, and the action that was taken. You can also expand the row to see the SPF, DMARC, and DKIM status of that message along with any relevant error messaging.

And we know looking at every message can be overwhelming, especially when you might be resorting to the logs for troubleshooting purposes, so you have a few options for filtering:

- Search for specific people (email addresses) that have messaged you.

- Filter to show only one of your custom addresses.

- Filter to show only messages where a specific action was taken.

Routes and Settings

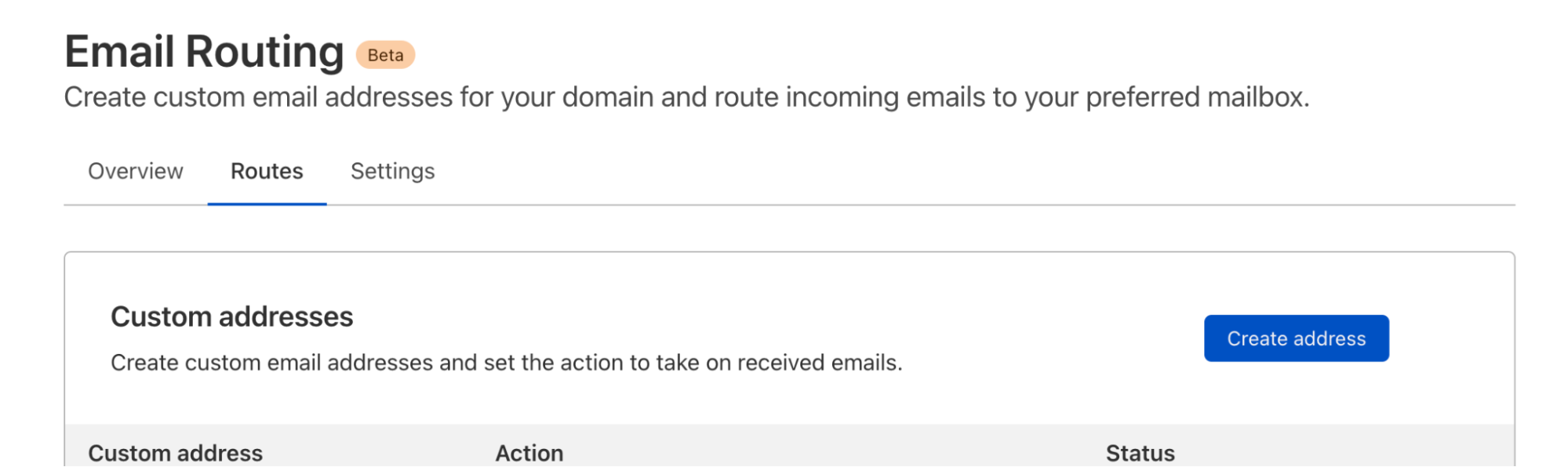

Next to the Overview tab, you will find the Routes tab with the configuration UI that is likely already familiar to you. That’s where you create custom addresses, add and verify destination addresses, and create rules with the relationships between the custom and destination addresses.

Lastly, the Settings tab includes less common actions such as the DNS configuration and the options for offboarding from Email Routing.

We hope you enjoy this update. And if you have any questions or feedback about this product, please come see us in the Cloudflare Community and the Cloudflare Discord.

Security updates for Monday

Post Syndicated from original https://lwn.net/Articles/892536/

Security updates have been issued by Fedora (kernel, kernel-headers, kernel-tools, libinput, podman-tui, and vim), Mageia (git, gzip/xz, libdxfrw, libinput, librecad, and openscad), and SUSE (dnsmasq, git, libinput, libslirp, libxml2, netty, podofo, SDL, SDL2, and tomcat).

The Foundations of the American Mafia: The Murder of David Hennessey.

Post Syndicated from The History Guy: History Deserves to Be Remembered original https://www.youtube.com/watch?v=QmaYCJvyly8

SMS Phishing Attacks are on the Rise

Post Syndicated from Bruce Schneier original https://www.schneier.com/blog/archives/2022/04/sms-phishing-attacks-are-on-the-rise.html

SMS phishing attacks — annoyingly called “smishing” — are becoming more common.

I know that I have been receiving a lot of phishing SMS messages over the past few months. I am not getting the “Fedex package delivered” messages the article talks about. Mine are usually of the form: “Thank you for paying your bill, here’s a free gift for you.”

Air Bud: Last Week Tonight with John Oliver (Web Exclusive)

Post Syndicated from LastWeekTonight original https://www.youtube.com/watch?v=Hk011WMM7t0

Kernel prepatch 5.18-rc4

Post Syndicated from original https://lwn.net/Articles/892481/

The 5.18-rc4 kernel prepatch is out for testing. “Fairly slow and calm week – which makes me just suspect that the other shoe will drop at some point. But maybe things are just going really well this release. It’s bound to happen _occasionally_, after all.”
