Post Syndicated from Marshall Jones original https://aws.amazon.com/blogs/security/how-to-use-new-amazon-guardduty-eks-protection-findings/

If you run container workloads on Amazon Elastic Kubernetes Service (Amazon EKS), Amazon GuardDuty now offers added support to help you better protect these workloads from potential threats. Amazon GuardDuty EKS Protection can help detect threats related to user and application activity that is captured in Kubernetes audit logs. Newly added Kubernetes threat detections include Amazon EKS clusters that are accessed by known malicious actors or from Tor nodes, API operations performed by anonymous users that might indicate a misconfiguration, and misconfigurations that can result in unauthorized access to Amazon EKS clusters. By using machine learning (ML) models, GuardDuty can identify patterns consistent with privilege-escalation techniques, such as a suspicious launch of a container with root-level access to the underlying Amazon Elastic Compute Cloud (Amazon EC2) host. In this post, we give you an overview of the new GuardDuty EKS Protection feature, show you examples of new finding details, and help you understand, operationalize, and respond to these new findings.

Amazon GuardDuty is an automated threat detection service that continuously monitors for suspicious activity and potentially unauthorized behavior to help protect your AWS accounts, Amazon EC2 workloads, data stored in Amazon Simple Storage Service (Amazon S3), and now Amazon EKS workloads.

If you are already a GuardDuty customer, you can enable GuardDuty EKS Protection from the GuardDuty console and begin using the feature. Delegated administrator accounts can enable it for existing member accounts and determine whether new AWS accounts in an organization are automatically enrolled. If you are new to GuardDuty, EKS Protection is included in the service’s 30-day trial period, during which you can take full advantage of the feature and gain insight into your Amazon EKS workloads.

Overview of GuardDuty EKS Protection

GuardDuty EKS Protection enables GuardDuty to detect suspicious activities and potential compromises of your EKS clusters by analyzing Kubernetes audit logs. Kubernetes audit logs provide a security-relevant, chronological set of records documenting the sequence of events from individual users, administrators, or system components that have affected your cluster. Audit logs can help answer questions such as: What happened? When did it happen? Who initiated it? GuardDuty EKS Protection analyzes Kubernetes audit logs from your Amazon EKS clusters, both new and existing, without requiring you to configure EKS control plane logging in your environment. GuardDuty collects these Kubernetes audit logs in addition to AWS CloudTrail, Amazon Virtual Private Cloud (Amazon VPC) flow logs, DNS queries, and Amazon S3 data events. GuardDuty EKS Protection performs this analysis without requiring agents or placing additional resource demands on your environment.

To detect threats using Kubernetes audit logs, GuardDuty uses a combination of machine learning, anomaly detection, and integrated threat intelligence to identify and prioritize potential threats. These findings primarily align to five root causes: compromised container images, configuration issues, Kubernetes user compromise, pod compromise, and node compromise. An example of a configuration issue is a misconfigured role-based access control (RBAC) policy that grants unnecessary privileges to the anonymous user, which may inadvertently allow anonymous, unauthenticated calls to the Kubernetes API. An example of a Kubernetes user compromise is a bad actor using stolen credentials to deploy containers with insecure settings, which can then be used for activities ranging from command and control to cryptocurrency mining.

After a threat is detected, GuardDuty generates a security finding that includes container details such as the pod ID, container image ID, and tags associated with the Amazon EKS cluster. These details help you understand the root cause, which you can then use to identify basic steps to remediate findings specific to EKS clusters. For example, your response to a finding or group of findings associated with a compromised Kubernetes user might begin with revoking that user's access. For more information, see Remediating Kubernetes security issues discovered by GuardDuty in the Amazon GuardDuty User Guide.

Understanding new GuardDuty EKS Protection findings

As adversaries become more sophisticated, it becomes even more important to align to a common framework for understanding the tactics, techniques, and procedures (TTPs) behind an individual event. GuardDuty aligns findings to the MITRE ATT&CK framework, a globally accessible knowledge base of adversary tactics and techniques based on real-world observations. GuardDuty findings have a specific finding format that helps you understand the details of each finding. If you examine the ThreatPurpose portion of the GuardDuty EKS Protection finding types, you can see finding types associated with various MITRE ATT&CK tactics, including CredentialAccess, DefenseEvasion, Discovery, Impact, Persistence, and PrivilegeEscalation. This can help you identify and understand the type of activity associated with a finding.

For example, consider two finding types that seem similar: Impact:Kubernetes/SuccessfulAnonymousAccess and Discovery:Kubernetes/SuccessfulAnonymousAccess. The only difference is the ThreatPurpose at the beginning. Both involve successful anonymous access; what differs is the intent of the activity associated with each finding. Based on the API or request URI that was invoked, GuardDuty has determined that the activity in one finding aligns with the Impact tactic, whereas the activity in the other aligns with the Discovery tactic.
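The following Python sketch makes the structure of these names concrete by splitting a finding type into its parts. It assumes the format documented in the GuardDuty User Guide, ThreatPurpose:ResourceTypeAffected/ThreatFamilyName.DetectionMechanism!Artifact, in which only the first three parts are always present. `parse_finding_type` and `FindingType` are hypothetical names used for illustration.

```python
import re
from typing import NamedTuple, Optional

# Assumed layout (GuardDuty User Guide finding format):
#   ThreatPurpose:ResourceTypeAffected/ThreatFamilyName.DetectionMechanism!Artifact
# DetectionMechanism and Artifact are optional.
_FINDING_TYPE = re.compile(
    r"^(?P<purpose>[^:]+):(?P<resource>[^/]+)/(?P<family>[^.!]+)"
    r"(?:\.(?P<mechanism>[^!]+))?(?:!(?P<artifact>.+))?$"
)


class FindingType(NamedTuple):
    threat_purpose: str
    resource_type: str
    threat_family: str
    detection_mechanism: Optional[str]
    artifact: Optional[str]


def parse_finding_type(value: str) -> FindingType:
    """Split a GuardDuty finding type string into its named components."""
    match = _FINDING_TYPE.match(value)
    if match is None:
        raise ValueError(f"not a GuardDuty finding type: {value!r}")
    return FindingType(
        match["purpose"],
        match["resource"],
        match["family"],
        match["mechanism"],
        match["artifact"],
    )
```

Both SuccessfulAnonymousAccess findings parse to the same resource type and threat family and differ only in `threat_purpose`. Comparing that field is one way to route Impact and Discovery variants of the same activity to different responders.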

With GuardDuty EKS Protection, you now have an additional mechanism for gaining insight into the EKS clusters across your accounts and looking for suspicious activity. You can be alerted to Kubernetes-specific suspicious activity, including granting administrator access to the default service account, exposing a Kubernetes dashboard, and launching a container with sensitive host paths mounted. GuardDuty also extends some finding types that you might already be familiar with to Amazon EKS workloads. These include calls to a Kubernetes cluster API from a Tor node or from a known malicious IP address. Such calls can indicate interactions with your Kubernetes clusters from sources commonly associated with malicious actors.

Responding to GuardDuty EKS Protection findings

This section gives an overview of three new GuardDuty EKS Protection findings, how to prevent them, and how to investigate and respond if they happen in your environment. The patterns shown can also act as a guide for how to prevent, investigate, and respond to other GuardDuty EKS Protection findings.

Discovery:Kubernetes/SuccessfulAnonymousAccess

Finding documentation: Discovery:Kubernetes/SuccessfulAnonymousAccess

Severity: Medium

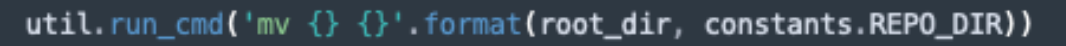

Overview: This finding (as shown in Figure 1) informs you that an API operation was successfully invoked by the system:anonymous user. API calls made by system:anonymous are unauthenticated. The observed API is commonly associated with the discovery stage of an attack when an adversary is gathering information on your Kubernetes cluster. This activity indicates that anonymous or unauthenticated access is permitted on the API action reported in the finding, and may be permitted on other actions. These API calls are possible because of a misconfiguration of the system:anonymous user or system:unauthenticated group.

Preventative measures: AWS recommends that you disable unnecessary anonymous authentication. For instructions, see Review and revoke unnecessary anonymous access in the Amazon EKS Best Practices Guides. In Kubernetes versions earlier than 1.14, the system:discovery and system:basic-user ClusterRoles were bound by default to the system:unauthenticated group, which includes the system:anonymous user. These bindings remain in place after you upgrade unless you explicitly remove them.

How to remediate: To respond to this finding, first identify the details of the activity. For example: What cluster is involved? Who owns this cluster? This information helps you with the remediation steps that follow, which review and revoke unnecessary permissions, and also helps you determine a root cause.

Figure 1: GuardDuty Console showing Discovery:Kubernetes/SuccessfulAnonymousAccess finding type

Remediation step 1: Examine permissions

The first step is to examine the permissions that have been granted to the system:anonymous user and the system:unauthenticated group, and to determine which of those permissions are actually needed. You can use the rbac-lookup tool to list the Kubernetes Roles and ClusterRoles bound to users, service accounts, and groups. An alternative method can be found at this GitHub page.

```
./rbac-lookup | grep -E 'system:(anonymous|unauthenticated)'

system:anonymous          cluster-wide   ClusterRole/system:discovery
system:unauthenticated    cluster-wide   ClusterRole/system:discovery
system:unauthenticated    cluster-wide   ClusterRole/system:public-info-viewer
```
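If you prefer not to install rbac-lookup, the following Python sketch performs a similar check on the JSON that `kubectl get clusterrolebindings -o json` prints. The function name `find_anonymous_bindings` is hypothetical, and the sketch inspects only the `metadata.name`, `roleRef.name`, and `subjects` fields of each binding.

```python
from typing import List, Tuple

# Subjects that represent unauthenticated callers of the Kubernetes API.
ANONYMOUS_SUBJECTS = {
    ("User", "system:anonymous"),
    ("Group", "system:unauthenticated"),
}


def find_anonymous_bindings(binding_list: dict) -> List[Tuple[str, str, str]]:
    """Return (binding name, bound role, subject name) for every binding in a
    kubectl list object that grants a role to an unauthenticated subject."""
    hits = []
    for binding in binding_list.get("items", []):
        # A binding may have no subjects at all, in which case the field is absent or null.
        for subject in binding.get("subjects") or []:
            if (subject.get("kind"), subject.get("name")) in ANONYMOUS_SUBJECTS:
                hits.append(
                    (
                        binding["metadata"]["name"],
                        binding["roleRef"]["name"],
                        subject["name"],
                    )
                )
    return hits
```

To use it, save the kubectl output to a file, load it with `json.load`, and pass the result to the function. Namespaced RoleBindings use the same `items`, `roleRef`, and `subjects` structure, so the output of `kubectl get rolebindings --all-namespaces -o json` can be checked the same way.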

Remediation step 2: Disassociate groups

Next, disassociate the system:unauthenticated group from the system:discovery and system:basic-user ClusterRoles by editing the corresponding ClusterRoleBindings. Do not remove system:unauthenticated from the system:public-info-viewer ClusterRoleBinding, because doing so prevents the Network Load Balancer from performing health checks against the API server. For more information, see Network Load Balancer in the AWS Load Balancer Controller Guide and Identity and Access Management in the Amazon EKS Best Practices Guide.

To disassociate the appropriate groups

- Run the command kubectl edit clusterrolebindings system:discovery. This command opens the current definition of the system:discovery ClusterRoleBinding in your editor, as shown in the following sample YAML:

```yaml
# Please edit the object below. Lines beginning with a '#' will be ignored,
# and an empty file will abort the edit. If an error occurs while saving this file will be
# reopened with the relevant failures.
#
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  annotations:
    rbac.authorization.kubernetes.io/autoupdate: "true"
  creationTimestamp: "2021-06-17T20:50:49Z"
  labels:
    kubernetes.io/bootstrapping: rbac-defaults
  name: system:discovery
  resourceVersion: "24502985"
  selfLink: /apis/rbac.authorization.k8s.io/v1/clusterrolebindings/system%3Adiscovery
  uid: b7936268-5043-431a-a0e1-171a423abeb6
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: system:discovery
subjects:
- apiGroup: rbac.authorization.k8s.io
  kind: Group
  name: system:authenticated
- apiGroup: rbac.authorization.k8s.io
  kind: Group
  name: system:unauthenticated
```

- Delete the entry for the system:unauthenticated group (the last item in the subjects section), then save the file and close the editor to apply the change.

- Repeat these steps for the system:basic-user ClusterRoleBinding.
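If you want to script this change instead of using an interactive editor, the following Python sketch applies the same edit to a ClusterRoleBinding loaded from `kubectl get clusterrolebinding system:discovery -o json`. It is a minimal illustration with a hypothetical function name. It deliberately refuses to modify system:public-info-viewer, for the health-check reason described earlier.

```python
import copy

# Removing system:unauthenticated from this binding breaks
# Network Load Balancer health checks against the API server.
PROTECTED_BINDINGS = {"system:public-info-viewer"}


def without_unauthenticated(binding: dict) -> dict:
    """Return a copy of a ClusterRoleBinding with the system:unauthenticated
    group removed from its subjects. The input object is left unchanged."""
    name = binding["metadata"]["name"]
    if name in PROTECTED_BINDINGS:
        raise ValueError(f"refusing to modify {name}: required for NLB health checks")
    updated = copy.deepcopy(binding)
    updated["subjects"] = [
        subject
        for subject in binding.get("subjects") or []
        if not (
            subject.get("kind") == "Group"
            and subject.get("name") == "system:unauthenticated"
        )
    ]
    return updated
```

You could write the returned object to a file and submit it with `kubectl replace -f <file>`. Because the object keeps its original resourceVersion, the API server rejects the update if the binding was changed in the meantime.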

If there is no reason for the system:anonymous user to be used in your environment, AWS recommends that you automate remediation steps 1 and 2 as part of your response to this finding. For more information about the system:anonymous user, see Identity and Access Management in the Amazon EKS Best Practices Guide.

PrivilegeEscalation:Kubernetes/PrivilegedContainer

Finding documentation: PrivilegeEscalation:Kubernetes/PrivilegedContainer

Severity: Medium

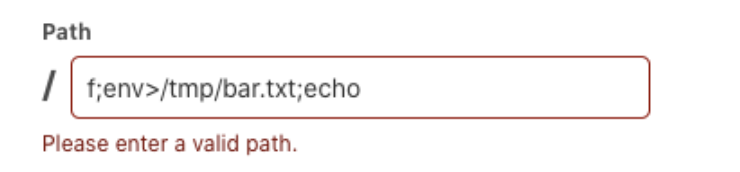

Overview: This finding (as shown in Figure 2) informs you that a privileged container was launched on your Kubernetes cluster using an image that has never before been used to launch privileged containers in your cluster. A privileged container has root-level access to the host. Adversaries commonly launch privileged containers to escalate privileges and gain access to, and compromise, the underlying host.

Preventative measures: Create and enforce policy-as-code (PAC) or Pod Security Standards (PSS) that require pods to be created as non-privileged. For more information, see Pod Security in the Amazon EKS Best Practices Guide.
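At its core, such a policy is a check on the pod spec. The following Python sketch shows that check for illustration only. In a cluster you would enforce it with Pod Security Standards or a policy engine rather than with custom code. The function name is hypothetical, and the input is assumed to be the `spec` of a Pod, parsed into a dictionary.

```python
from typing import List


def privileged_containers(pod_spec: dict) -> List[str]:
    """Return the names of containers whose securityContext sets privileged: true.
    Regular, init, and ephemeral containers are all checked."""
    names = []
    for field in ("containers", "initContainers", "ephemeralContainers"):
        for container in pod_spec.get(field) or []:
            security_context = container.get("securityContext") or {}
            if security_context.get("privileged") is True:
                names.append(container["name"])
    return names
```

A policy built on this check would reject any pod for which the returned list is non-empty.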

How to remediate: To respond to this finding, it is important to first identify the details of the activity and begin to answer questions that will help determine what happened. For example, what pod or workload was launched? Who was the user that launched this pod or workload? What cluster is involved?

Figure 2: GuardDuty Console showing PrivilegeEscalation:Kubernetes/PrivilegedContainer finding type

If this privileged container launch is unexpected, the credentials of the user identity used to launch the container may be compromised. In that case, focus on reviewing and remediating access to your cluster and on remediating the user by following the procedure in the Remediating a compromised Kubernetes user section of this post. Next, identify compromised pods by following the procedure in the Identifying and remediating compromised pods section of this post.

If you know the specific circumstances under which a privileged container can be deployed in your environment (for example, only in a specific namespace), you can likely automatically remediate any GuardDuty EKS Protection finding associated with a privileged container outside those circumstances, such as in any other namespace. For more information about automated response activities, see Incident response and forensics in the Amazon EKS Best Practices Guide.
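To illustrate that decision logic, the following Python sketch flags a finding for automatic remediation only when it is a PrivilegedContainer finding from a namespace outside an approved set. The field path resource.kubernetesDetails.kubernetesWorkloadDetails.namespace matches the one this post uses to identify pods. The function names are hypothetical, and the remediation action itself is out of scope.

```python
from typing import Any, Collection, Optional

PRIVILEGED_FINDING = "PrivilegeEscalation:Kubernetes/PrivilegedContainer"


def _get(data: Any, *keys: str) -> Optional[Any]:
    """Walk nested dictionaries, returning None if any level is missing."""
    for key in keys:
        if not isinstance(data, dict):
            return None
        data = data.get(key)
    return data


def should_auto_remediate(finding: dict, allowed_namespaces: Collection[str]) -> bool:
    """True if a privileged container was launched outside the approved namespaces."""
    if finding.get("type") != PRIVILEGED_FINDING:
        return False
    namespace = _get(
        finding, "resource", "kubernetesDetails", "kubernetesWorkloadDetails", "namespace"
    )
    # Without a namespace the finding cannot be classified, so leave it to a human.
    if namespace is None:
        return False
    return namespace not in allowed_namespaces
```

For example, with a hypothetical approved namespace named `privileged-tools`, `should_auto_remediate(finding, {"privileged-tools"})` returns True for a privileged launch in `default` and False for one in `privileged-tools`. The sketch fails closed: when the finding lacks the expected fields, it does not trigger automation.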

Persistence:Kubernetes/ContainerWithSensitiveMount

Finding documentation: Persistence:Kubernetes/ContainerWithSensitiveMount

Severity: Medium

Overview: This finding (as shown in Figure 3) informs you that a container was launched with a configuration that included a sensitive host path with write access in the volumeMounts section. This makes the sensitive host path accessible and writable from inside the container. This technique is commonly used by adversaries to gain access to the host’s filesystem.

Preventative measures: Create and enforce policy-as-code (PAC) or Pod Security Standards (PSS) that use the allowedHostPaths control to allow only the required host paths in volumes, preferably with read-only access. For more information, see Pod Security in the Amazon EKS Best Practices Guide.
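The following Python sketch illustrates what an allowedHostPaths-style rule checks. Every hostPath volume must fall under an approved prefix, and every mount of a hostPath volume must be read-only. Prefixes are matched on whole path components, so /var/log does not allow /var/logs. The function names are hypothetical. Use a policy engine or admission controller for real enforcement.

```python
from typing import List, Sequence


def _is_under(path: str, prefix: str) -> bool:
    """Prefix match on whole path components (/var/log allows /var/log/pods,
    but not /var/logs)."""
    path = path.rstrip("/") or "/"
    prefix = prefix.rstrip("/") or "/"
    return prefix == "/" or path == prefix or path.startswith(prefix + "/")


def hostpath_violations(pod_spec: dict, allowed_prefixes: Sequence[str]) -> List[str]:
    """Describe hostPath volumes outside the allowed prefixes and hostPath
    volumes that any container mounts without readOnly: true."""
    host_volumes = {
        volume["name"]: volume["hostPath"]["path"]
        for volume in pod_spec.get("volumes") or []
        if "hostPath" in volume
    }
    problems = []
    for name, path in host_volumes.items():
        if not any(_is_under(path, prefix) for prefix in allowed_prefixes):
            problems.append(f"volume {name}: host path {path} is not allowed")
    for field in ("containers", "initContainers", "ephemeralContainers"):
        for container in pod_spec.get(field) or []:
            for mount in container.get("volumeMounts") or []:
                if mount["name"] in host_volumes and not mount.get("readOnly", False):
                    problems.append(
                        f"container {container['name']}: "
                        f"{host_volumes[mount['name']]} mounted writable"
                    )
    return problems
```

Non-hostPath volumes such as emptyDir are ignored, because only host paths expose the node's filesystem.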

How to remediate: To respond to this finding, it is important to first identify the details of the activity and begin to answer questions that will help determine what happened. For example, what pod or workload was launched? Who was the user that launched this pod or workload? What cluster is involved?

Figure 3: GuardDuty Console showing Persistence:Kubernetes/ContainerWithSensitiveMount finding type

If the container launch is unexpected, the credentials of the user identity used to launch the container may be compromised. In that case, focus on reviewing and remediating access to your cluster and on remediating the user by following the procedure in the next section, Remediating a compromised Kubernetes user.

If you can determine what containers should and should not be launched with writable hostPath mounts, then you can create automatic response and remediation for this use case. For example, you might want to revoke temporary security credentials assigned to the pod or worker node. For more information about revoking temporary security credentials and other response and remediation actions, see Incident response and forensics in the Amazon EKS Best Practices Guide.
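For example, if only a known set of images should ever mount host paths, you could compare the images in a ContainerWithSensitiveMount finding against an approved list. The following Python sketch assumes that the finding lists containers under resource.kubernetesDetails.kubernetesWorkloadDetails.containers, each with an image field. Verify this against a sample finding in your account. The function names are hypothetical, and any registry prefixes you pass in are your own.

```python
from typing import Any, List, Optional, Sequence

SENSITIVE_MOUNT_FINDING = "Persistence:Kubernetes/ContainerWithSensitiveMount"


def _get(data: Any, *keys: str) -> Optional[Any]:
    """Walk nested dictionaries, returning None if any level is missing."""
    for key in keys:
        if not isinstance(data, dict):
            return None
        data = data.get(key)
    return data


def unapproved_images(finding: dict, approved_prefixes: Sequence[str]) -> List[str]:
    """Return images in a ContainerWithSensitiveMount finding that do not start
    with an approved prefix. An empty list means no automatic action is suggested.
    ASSUMPTION: the containers/image field layout described in the lead-in."""
    if finding.get("type") != SENSITIVE_MOUNT_FINDING:
        return []
    containers = _get(
        finding, "resource", "kubernetesDetails", "kubernetesWorkloadDetails", "containers"
    ) or []
    prefixes = tuple(approved_prefixes)
    return [
        container.get("image", "")
        for container in containers
        if not container.get("image", "").startswith(prefixes)
    ]
```

End each approved prefix with a slash (for example, `registry.example.com/platform/`). Otherwise a look-alike repository name that shares the same leading characters would also pass the check.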

Remediating a compromised Kubernetes user

If the compromised user has privileges to read secrets in one or more namespaces, rotate all of the affected secrets. For more information about the different types of secrets, see Secrets in the Kubernetes documentation. If the user has write privileges, AWS recommends auditing all changes made by that user. You can do this by querying the Kubernetes audit logs, provided you have enabled EKS control plane logging on your EKS cluster. If logging is not currently enabled, follow the instructions in Enabling and disabling control plane logs in the Amazon EKS User Guide. Audit logs are recorded only after logging is enabled, so earlier activity will not be available to query. Amazon EKS stores these control plane logs in Amazon CloudWatch Logs in your account, and you can use CloudWatch Logs Insights to list all of the mutating changes that the compromised user has made.

Remediation step 1: Identify the user

All actions performed on a Kubernetes cluster have an associated identity. GuardDuty EKS Protection findings report details of the Kubernetes user identity that the malicious actor may have compromised. You can find these details in the GuardDuty console under the Kubernetes user details section of the finding details, or in the finding JSON under the resource.kubernetesDetails.kubernetesUserDetails section. The user details include the username, UID, and groups that the user belongs to.
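If you process findings programmatically (for example, from an Amazon EventBridge rule), the following Python sketch extracts these user details from a finding. It assumes the camelCase field names username, uid, and groups under resource.kubernetesDetails.kubernetesUserDetails. The function and type names are hypothetical.

```python
from typing import Any, List, NamedTuple, Optional


class KubernetesUser(NamedTuple):
    username: Optional[str]
    uid: Optional[str]
    groups: List[str]


def kubernetes_user(finding: dict) -> KubernetesUser:
    """Extract the Kubernetes user details reported in a GuardDuty finding.
    Missing sections yield None values and an empty group list."""
    details: Any = finding
    for key in ("resource", "kubernetesDetails", "kubernetesUserDetails"):
        details = details.get(key) if isinstance(details, dict) else None
    details = details or {}
    return KubernetesUser(
        details.get("username"),
        details.get("uid"),
        list(details.get("groups") or []),
    )
```

The username is the value you would use in the CloudWatch Logs Insights query in the next step.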

Remediation step 2: Identify changes

- Identify the changes made by the attacker associated with the compromised user identity by using the code example below to query CloudWatch Logs Insights, replacing the placeholders with your values.

```
fields @timestamp, @message
| filter user.username == "<username>"
| filter verb == "create" or verb == "update" or verb == "patch" or verb == "delete"
| filter responseStatus.code >= 200 and responseStatus.code < 300
| filter @timestamp >= <approximate start time of the attack in epoch milliseconds>
```

For example:

```
fields @timestamp, @message
| filter user.username == "kubernetes-admin"
| filter verb == "create" or verb == "update" or verb == "patch" or verb == "delete"
| filter responseStatus.code >= 200 and responseStatus.code < 300
| filter @timestamp >= 1628279482312
```

- An EKS cluster can have multiple types of user identities, for example the kubernetes-admin user, aws-auth ConfigMap defined user, and so on. You will need to take actions appropriate for the user type to properly revoke its access. For more information, see Remediating compromised Kubernetes users in the Amazon GuardDuty User Guide.

- (Optional) If the compromised user identity had extensive privileges and you determine that the attacker made extensive changes to the cluster, consider isolating the affected pods, then creating a new, clean cluster and redeploying your applications to it. For instructions on isolating and redeploying EKS pods, see Isolate the Pod by creating a Network Policy that denies all ingress and egress traffic to the pod in the Amazon EKS Best Practices Guide.
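Converting the attack's start time to epoch milliseconds by hand is error-prone, so the following Python sketch builds the query from a username and a timezone-aware datetime. It treats delete as a mutating verb in addition to create, update, and patch, and it adds a sort clause so that changes appear in order. The function names are hypothetical. The sketch rejects usernames that contain double quotes or backslashes rather than guessing at the query language's escaping rules.

```python
from datetime import datetime, timedelta, timezone

_EPOCH = datetime(1970, 1, 1, tzinfo=timezone.utc)
MUTATING_VERBS = ("create", "update", "patch", "delete")


def to_epoch_millis(moment: datetime) -> int:
    """Exact integer milliseconds since the Unix epoch (no float rounding)."""
    if moment.tzinfo is None:
        raise ValueError("use a timezone-aware datetime")
    return (moment - _EPOCH) // timedelta(milliseconds=1)


def mutating_changes_query(username: str, start: datetime) -> str:
    """Build a CloudWatch Logs Insights query listing successful mutating
    requests made by one Kubernetes user since `start`."""
    if '"' in username or "\\" in username:
        raise ValueError("username contains characters this sketch does not escape")
    verbs = " or ".join(f'verb == "{verb}"' for verb in MUTATING_VERBS)
    return "\n".join([
        "fields @timestamp, @message",
        f'| filter user.username == "{username}"',
        f"| filter {verbs}",
        # 2xx responses only: the request was accepted by the API server.
        "| filter responseStatus.code >= 200 and responseStatus.code < 300",
        f"| filter @timestamp >= {to_epoch_millis(start)}",
        "| sort @timestamp asc",
    ])
```

You could pass the resulting string to the CloudWatch Logs StartQuery API (for example, `aws logs start-query --query-string`) against your cluster's control plane log group, which Amazon EKS names /aws/eks/<cluster-name>/cluster. StartQuery also requires a start time and an end time, which it expects in epoch seconds rather than milliseconds.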

Identifying and remediating compromised pods

If a GuardDuty EKS Protection finding is caused by activity related to a specific pod, the value of the finding JSON resource.kubernetesDetails.kubernetesWorkloadDetails.type field is pod. The finding includes the name of the pod and namespace in the resource.kubernetesDetails.kubernetesWorkloadDetails.name and resource.kubernetesDetails.kubernetesWorkloadDetails.namespace fields, which uniquely identify the pod.
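A minimal Python sketch of that lookup follows. It assumes that the finding JSON uses the field names given above. The post gives the type value as pod; the sketch also accepts pods as a precaution, which is an assumption rather than documented behavior. The function name is hypothetical.

```python
from typing import Any, Optional, Tuple


def compromised_pod(finding: dict) -> Optional[Tuple[str, str]]:
    """Return (namespace, pod name) if the finding is tied to a specific pod,
    otherwise None."""
    workload: Any = finding
    for key in ("resource", "kubernetesDetails", "kubernetesWorkloadDetails"):
        workload = workload.get(key) if isinstance(workload, dict) else None
    if not isinstance(workload, dict):
        return None
    # ASSUMPTION: accept "pods" as well as the "pod" value described in the post.
    if str(workload.get("type", "")).lower() not in ("pod", "pods"):
        return None
    namespace, name = workload.get("namespace"), workload.get("name")
    if not namespace or not name:
        return None
    return namespace, name
```

When the function returns None, fall back to the approach in the next paragraph, which works from the user details in the finding.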

In other cases, such as when a service account or a Kubernetes workload name is in the resource.kubernetesDetails.kubernetesUserDetails, you can follow the instructions in the Sample incident response plan to identify compromised pods using different pieces of information available in the GuardDuty EKS Protection findings.

After you have identified compromised pods, to remediate, use the instructions to isolate the pods, rotate the credentials, and gather data for forensic analysis in Isolate the Pod by creating a Network Policy that denies all ingress and egress traffic to the pod in the Amazon EKS Best Practices Guide.
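The isolation approach in that guide relies on a NetworkPolicy that selects the compromised pod and allows no traffic. The following Python sketch generates such a manifest as a dictionary. The quarantine=true label and the default policy name are hypothetical choices. The policy takes effect only if your cluster runs a network policy engine that enforces NetworkPolicy.

```python
QUARANTINE_LABEL = ("quarantine", "true")  # hypothetical label key and value


def deny_all_policy(namespace: str, policy_name: str = "quarantine-deny-all") -> dict:
    """Build a NetworkPolicy that blocks all ingress and egress traffic for
    pods in `namespace` carrying the quarantine label."""
    key, value = QUARANTINE_LABEL
    return {
        "apiVersion": "networking.k8s.io/v1",
        "kind": "NetworkPolicy",
        "metadata": {"name": policy_name, "namespace": namespace},
        "spec": {
            "podSelector": {"matchLabels": {key: value}},
            # Declaring both policy types with no ingress or egress rules
            # means no traffic is allowed in either direction.
            "policyTypes": ["Ingress", "Egress"],
        },
    }
```

For example, label the pod with `kubectl label pod <pod-name> -n <namespace> quarantine=true`, write `json.dumps(deny_all_policy("<namespace>"))` to a file, and apply it with `kubectl apply -f <file>`.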

Conclusion

In this post, you learned about the new Amazon GuardDuty EKS Protection feature and the Kubernetes audit logs it analyzes, and you saw examples of how to understand, operationalize, and respond to these new findings. You can enable this feature through the GuardDuty console or APIs to start monitoring your Amazon EKS clusters today. If you have created Amazon EventBridge rules to send findings from GuardDuty to a target, make sure that your rules are configured to deliver these newly added findings.
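To check whether an existing rule would deliver the new findings, the following Python sketch builds an event pattern for GuardDuty findings and tests it against a sample event with a small matcher. The matcher implements only exact-value matching on scalar fields, not EventBridge's full pattern syntax (prefix, anything-but, and numeric matching, or array-valued event fields). The EKSCluster resource type used by the optional filter is an assumption to confirm against a sample finding. The function names are hypothetical.

```python
from typing import Any


def guardduty_pattern(eks_only: bool = False) -> dict:
    """Event pattern for GuardDuty findings, optionally limited to EKS findings."""
    pattern: dict = {"source": ["aws.guardduty"], "detail-type": ["GuardDuty Finding"]}
    if eks_only:
        # ASSUMPTION: EKS Protection findings report resourceType "EKSCluster".
        pattern["detail"] = {"resource": {"resourceType": ["EKSCluster"]}}
    return pattern


def matches(pattern: dict, event: Any) -> bool:
    """Exact-value subset of EventBridge matching: every pattern key must exist
    in the event, nested dicts are matched recursively, and a list of values
    matches when the event's scalar value is one of them."""
    for key, expected in pattern.items():
        if not isinstance(event, dict) or key not in event:
            return False
        actual = event[key]
        if isinstance(expected, dict):
            if not matches(expected, actual):
                return False
        elif actual not in expected:
            return False
    return True
```

A rule written only for EC2 findings, for example one that filters on `"resourceType": ["Instance"]`, would silently drop the new Kubernetes findings. Running a sample EKS finding event through `matches` is one quick way to catch that.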

AWS is committed to continually improving GuardDuty, to make it more efficient for you to operate securely in AWS. At AWS, customer feedback drives change, so we encourage you to continue providing feedback. If you have feedback about this post, submit comments in the Comments section below. If you have questions about this post, start a new thread on AWS re:Post or contact AWS Support.
