Post Syndicated from Йоанна Елми original https://toest.bg/delyan-delchev-interview-cybersecurity/

A few days after the start of the Russian invasion of Ukraine, e-Government Minister Bozhidar Bozhanov announced that, together with GDBOP (the General Directorate for Combating Organized Crime), action had been taken to "filter or stop traffic from over 45,000 internet addresses from which attempts at malicious interference in electronic systems had been made." Meanwhile, since January a series of cyberattacks has been carried out against Ukraine, with targets ranging from state institutions to banks. Many of these attacks have been attributed to Russia. Given the active information war in Bulgaria and Russia's geopolitical interests in the region, Yoanna Elmi spoke with Delyan Delchev, a telecommunications engineer and IT expert with knowledge and experience in cybersecurity.

Mr. Delchev, we often talk about "hybrid war", and there were warnings about it in connection with the invasion of Ukraine as well. It seems to me, however, that people understand the term differently. What should readers understand by this label?

In principle, the term means the dynamic and/or simultaneous combination of conventional and unconventional military action, diplomacy, and cyberwarfare, including information warfare (or, as we used to call it, propaganda). But as with other terms, the original meaning gets lost over time, and today we mostly mean electronic propaganda, sometimes aided by sensation-seeking hacking.

Can we say, roughly, that hybrid war has two elements: a communication one, such as propaganda, and a technical one, such as cyberattacks on critical infrastructure?

Yes. But I would point out that what we have come to associate the term with lately is mainly propaganda on the internet, and all the other accompanying actions mostly aim to support it.

Are there cyberattacks that are especially popular? What are the common practices?

The world of hacking is interesting and very different from what you see on TV. The overwhelming majority of people engaged in these activities are not soldiers, professionals, or geniuses. They are perfectly ordinary people, for example teenagers in small gangs of friends, who try things they have read about here and there, in most cases without understanding them in depth. They enjoy the thrill of potential success, even a small one, the excitement of doing something forbidden, the same adrenaline rush you get from extreme sports.

There are all kinds of people. Some are also motivated by the chance of small or large profits, or simply by gathering information they believe might be secret, hoping to uncover something new, some big conspiracy. These people are scattered completely at random around the world, and there are astonishingly many of them. In China alone there are millions of teenagers (so-called script kiddies) who open some book, or more likely an online hacking document, for the first time and immediately want to try their luck and see what happens. In parallel there is an unstructured black market: the small gangs interact and help each other with services, scripts, access, and commissions, and they pay each other with money, (stolen) goods, services, program code, resources, and cryptocurrency. Where there are people and demand, there is money and there are rewards.

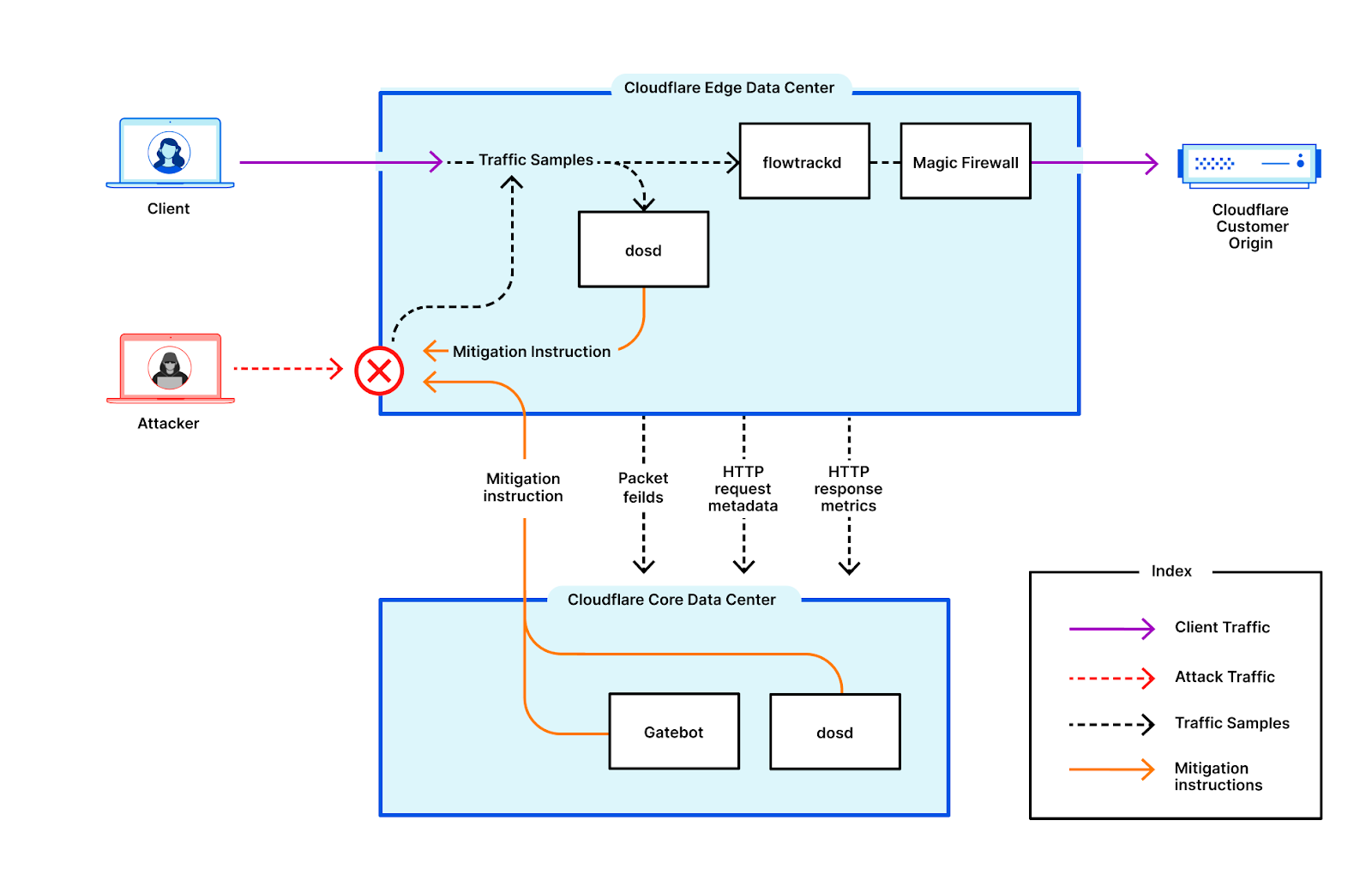

State "cyber armies" actually take advantage of these hackers and their large numbers. They pass commissions down to them through front men, intermediaries, or friends, and reward them if they succeed somewhere. Ordinary criminals, private companies, detectives, and all sorts of others do the same. To borrow an analogy from spaghetti westerns: a cash reward is posted for someone's head, and all the bounty hunters rush to try their luck. There is no guarantee of success, and the work is time-consuming, because reality is not like on TV, where a hacker shows up, complains about something, taps on the keyboard for five seconds, and says: "I'm in." In real life, even small breaches can take years and happen in small steps. That is why, by the time they are discovered, the damage is already great: the breach may not date from yesterday but may have been an open gate for years.

Since most hackers do not understand the craft in depth, some states, or companies that specialize in security and have intelligent and capable resources, often provide ready-made tools, unknown hacks, or insider information to hacking gangs. Sometimes they even start the process and prepare the ground, then leave the hackers to finish the job. The hackers are, in a sense, mules, and even if someone exposes them, establishing a direct link to whoever commissioned the job is very difficult.

And is there a specific signature depending on the state carrying out a cyberattack?

Individual gangs specialize in different areas: flooding (overloading internet connections, which leads, for example, to websites becoming unreachable); bringing down resources and disrupting the operation of infrastructure; hacking and taking control; building and assembling botnets (which are later used to conceal hacks and floods)*; theft of identities, passwords, personal information, and credit card and cryptocurrency data; ransomware (malicious software that encrypts the information on an infected computer and extorts the user into paying a ransom for the decryption key); and so on.

It is hard to tell at first glance who is who. Does someone's behavior arise spontaneously from the chaos, or serve a private interest? Or is someone pulling their strings and motivating them, whether or not the perpetrator knows it? But the world is small, and there are patterns of behavior that are the specific style of various groups and their sponsors. There are also many clues. In reality, nothing on the internet is truly anonymous. So, through various techniques, it is possible to identify who is who and whether they are under the influence of sponsors from one state or another. Cybersecurity keeps evolving, and it is becoming ever harder for ordinary hackers to find new weak points. That is mainly within reach of people who have knowledge, specific access to information (for example, Windows source code, which Microsoft makes available under various programs to several states, including Russia and China), intelligence resources, and the ability to gather and motivate like-minded people or helpers working in various companies.

In the SolarWinds scandal, for example, the hacking toolkit contained a tool with a component written by the hackers but signed as if it came from Microsoft. This component allows code that gives remote control over Windows to be installed easily and invisibly. Ordinary hackers cannot do this: those keys, and the process of signing with them, are supposed to be secret. Microsoft is still investigating how the hackers pulled this off. It is quite likely that it happened in the way described above, through the programs under which Microsoft works directly with some governments, and that is a serious sign of government involvement. Hacking gangs do not have these capabilities, and even if they did, it would all have surfaced publicly and the world would be flooded with similarly signed components. Now, however, the same tools and key have unexpectedly appeared in several hacking campaigns aimed at taking over Ukrainian IT systems, which clearly implicates Russian government interests.

Chinese state hackers, like American and Russian ones, have their own collections of hacking tools, which they develop in secret and do not make public, but which are sometimes handed to affiliated gangs (some of which often work for all services, states, or private companies at once). So the tools can also reveal who is behind an attack. Or the rewards. Or the "mules". Or the method of payment. Or even the propaganda phrases they use (which give away whom they are communicating with). Although the chaotic gangs are in the foreground, the shadows of more serious professionals and campaign organizers can sometimes be seen behind them. Still, over 99% of cyberattacks are entirely chaotic and unrelated to state "cyber armies".

How are cyberattacks punished? Is there an effective legal framework anywhere in the world that treats them as crimes?

There are attempts, but I don't think they are effective. The problem is that the people who usually get punished are teenagers who are practically innocent, or underage and inexperienced both in life and in what they do, and that inexperience is exactly why they get caught. For the most part, the more experienced hackers, or their sponsors if there are any, remain untouchable. I don't think there is any way, in this "ecosystem", to throw out the bathwater without throwing out the baby too. The public backlash would be severe. So whatever is done on the subject is episodic, and in my opinion no one is seriously trying to pursue punishment, at least in the free world.

Another problem is that the visible hackers are often scattered across many countries, and there is simply no way to catch one, use him to find and catch another, and use that one to catch a third, without the support of those countries. That is difficult and sometimes impossible. This is why, outside of financial crimes, no one wants to do anything truly serious and large-scale. For some time now, the relevant police services have been trying, under the pretext of fighting child pornography, to build more coordinated communication between countries, and they often run large transnational campaigns. The infrastructure created this way can later be reused for every kind of cybercrime, from the biggest to, say, copyright infringement (you downloaded some film from the internet). But for now this coordination is still being put in place and focuses on child pornography, a problem the services universally recognize as beyond dispute.

For me, the fact that there are lots of young people who want to learn "hacking" is not a problem. That is how knowledge accumulates. If some hacker has figured out how to get into your email or server, in most cases it is not such a disaster, because the losses are usually small. You can use it as an opportunity to learn to protect yourself better. Because if hackers who are not state-sponsored can breach your systems, then state-sponsored ones have probably been roaming around in there undisturbed for a long time.

The image of the Russian hacker is almost fictional, like something out of a film. In reality, does Russia play a special role in cyberattacks?

It does, but not in the romantic way most people imagine. "Russian hackers" are actually all kinds of hackers, of every nationality. There are quite a few Bulgarians among them, for example. There are also Americans, Chinese, Western Europeans, all sorts. And these people do not necessarily have an ideology, nor do they do this out of love for Putin; many do not even know that Moscow is sponsoring them through intermediaries. Their gangs, circles of friends, and contacts simply put them in a position to receive, sometimes through dozens of intermediaries, commissions that are handed down by Russian sponsors or that serve Russian interests.

Unlike the US, which generally avoids using the gangs (with small exceptions) and has a large in-house, invisible resource entirely separate from the hacker community, Russia is far more pragmatic and, roughly speaking, goes to the open market. It therefore also relies on lower-skilled hackers, who in turn expose themselves more and get caught because they use more visible and cruder approaches. We can fairly describe the Russian practice as "a bull in a china shop". But it works for them, because they enjoy the propaganda effect and the reputation they build. This practice is also cheaper and easier, since it requires neither training people nor creating and developing special state structures with specialized knowledge.

But here and there, more precise Russian operations can be seen, directly involving participants more skilled than the mass of hackers. SolarWinds and the two most recent campaigns in Ukraine are good examples, and many services realized that Russia is also starting to build up this kind of capability.

Did cases like the leak at the National Revenue Agency (NRA) clearly show that Bulgaria is unprepared in cybersecurity? The public was not much moved by the data leak. Why? And how should people be made to understand that the problem affects them personally?

First, most of the hacks I have heard of in Bulgaria, and in particular the one at the NRA, stem from negligence and probably indifference, often bordering on stupidity. But Bulgaria's main problem is that we react after the fact: we wait for something to happen before we act. Then we patch things piecemeal until the next incident comes along and forces us to act again. Cybersecurity is constantly changing, and one-off actions achieve nothing. You have to constantly monitor and respond to what is happening, or to what you hear about as possible risks in other countries. Even if, hypothetically, you had the best protection in the world right now, in a few months that would no longer be true. The state must have procedures (not just strategies), those procedures must be actively carried out, and cybersecurity must be taken very seriously.

My impression is that cyber hygiene in our state institutions is not up to standard and that we are practically still learning the alphabet. It is laughable to hear statements that the NRA thought it was secure because its staff had been trained and had attempted ISO 27000-series certification. As we saw, that did not help. It is equally laughable that some other institutions think that encrypting something automatically makes it secure.

Looking carefully at how the hacks of some financial and state institutions in Ukraine were developed, we can see that if we had been in the crosshairs, none of our simple notions of how to be, or feel, protected would have saved us. Building cybersecurity hygiene at the individual or corporate level is very different from building it at the level of the state, state institutions, and security-sector organizations. For now we are trying to meet at least corporate standards, and even there we have not had much success.

Citizens have no way of keeping track of new cybersecurity vulnerabilities, nor will they constantly maintain cyber hygiene if it is difficult and incomprehensible. Anything that causes discomfort soon gets ignored as if it had never existed. A classic example is the requirement for very complex passwords. At first glance it should minimize the risk of a hacker guessing them, but it forces users either to use the same password everywhere or to write their passwords down and possibly leave them in public places. So instead of improving security, this rule actually lowers it, as statistics show.

Cybersecurity must be taken seriously from the top of the state down, not the other way around, from citizens up to the authorities. Solutions and processes must be simple and organic, ideally invisible to end users, and must not get in their way; then everything will be reasonably effective, even if not perfect. People need to know that nothing in cybersecurity is ever perfect, but it can be good enough to minimize risk and exposure. For example, if the NRA had followed at least the basic GDPR principles for storing personal data, the damage from the vulnerability would not have been so great. Do they follow them at the NRA even now? Or do they think that as long as they do not publish their backups on internet-accessible servers, the backups are safe? Given what we have seen lately, that is a very deceptive feeling.

As for how cybersecurity affects us personally: imagine that all the electronic goods you have today (bank cards, money, the internet, smartphones, personal information, a sense of privacy) could be lost and/or end up in someone else's hands, and that you were sent back, metaphorically speaking, to the 1970s in terms of communication. If that thought makes you uncomfortable, you should take your cyber hygiene seriously.