Post Syndicated from Надежда Цекулова original https://toest.bg/tova-e-prosto-grip/

Tennis player Grigor Dimitrov, Sports Minister Krasen Kralev, TV host Uti Bachvarov, anesthesiologist and radio host Miroslav Nenkov, MP Hasan Ademov, and pop-folk singers Galena and Desi Slava: these are only some of the public figures in Bulgaria who have spoken openly about having COVID-19. Their cases differ. Some were seriously ill and even spent time in intensive care, while others had mild symptoms or none at all. Their shared message is that the virus should not be underestimated, and one of the words that people who have recovered most often use to describe the disease is "strange".

Yet worldwide, and in Bulgaria too, doubt about the seriousness of COVID-19 persists. Some people still do not believe that the virus exists at all.

The new virus is like seasonal flu: disinformation or optimism

The course of COVID-19 is often compared with that of seasonal flu. At the very start of the pandemic such claims predominated, and social networks and forums were full of tables and charts showing the number of deaths from hunger, road accidents, cancer and flu. The data were presented as proof of how unwarranted the strict restrictions introduced to fight the infection were, and as an argument that there was no need to panic. Around the world and in Bulgaria alike, leaders and top-level experts maintained that SARS-CoV-2 causes an ordinary flu and that the measures to contain it should be no different from those used in seasonal epidemics.

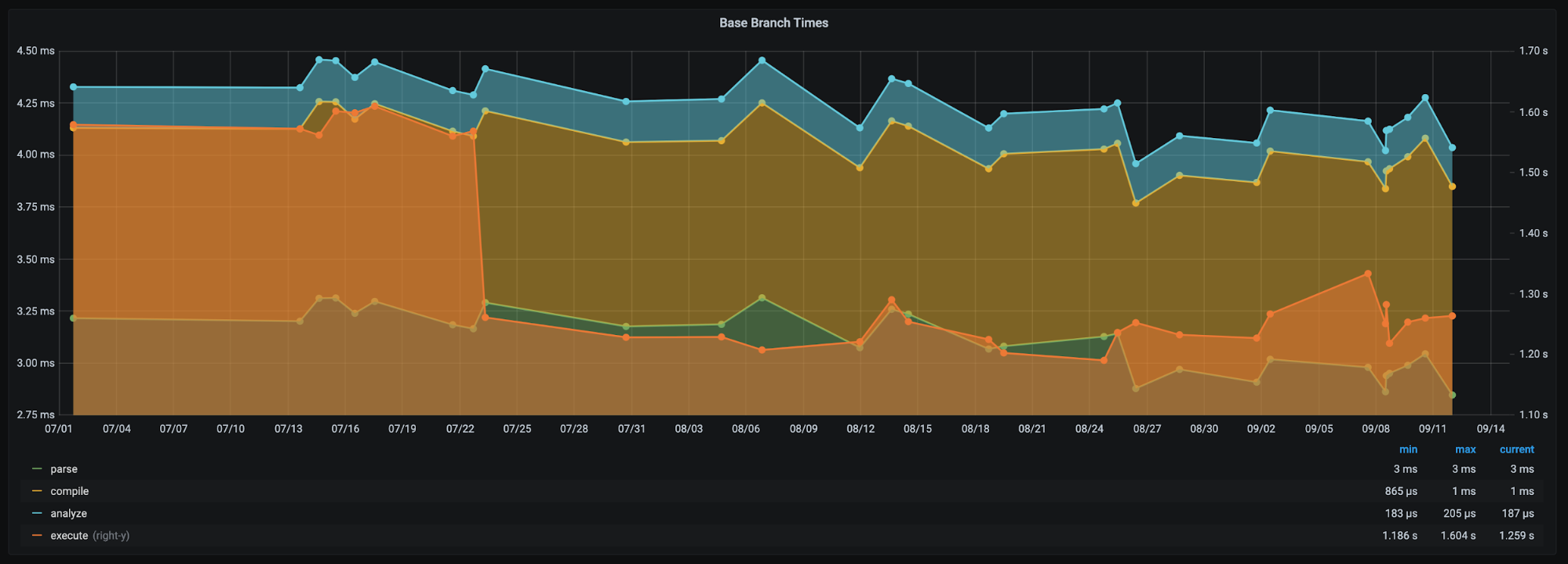

The interactive chart below illustrates well, though not entirely precisely, why the seriousness of the disease should not be underestimated.

On 30 January, the World Health Organization (WHO) declared the COVID-19 outbreak a public health emergency of international concern. As of that date, the disease did indeed rank last as a cause of death among nearly 20 listed causes, a list that does not even include the biggest "killers" of the world's population: ischemic heart disease, stroke, chronic obstructive pulmonary disease and Alzheimer's disease. The lack of any direct sense of threat from the new disease, together with entirely realistic fears of older and more familiar infections, made the claim that the virus was "ordinary" relatable, appealing and seemingly credible. For a short while.

Is COVID-19 a respiratory disease?

Despite initial hypotheses that SARS-CoV-2 was just another coronavirus causing a seasonal respiratory illness, the considerable difficulties in treating severely ill patients led scientists to look for answers elsewhere. A series of studies established that the virus can in fact affect any organ, as well as the circulatory system. In Bulgaria, the theory that COVID-19 is an inflammation of the blood vessels (vasculitis) rather than a respiratory disease was popularized mainly by the pulmonologist Dr. Alexander Simidchiev, whose media appearances on the subject resonated widely. He and other medical experts worked to dismantle the perception of the disease as "mild", "respiratory" and "seasonal".

Despite all the scientific evidence, however, the comparison with seasonal respiratory illnesses continues to circulate as a legitimate point of view, even in the professional debate. One of the main arguments of its proponents is that the data available so far on the spread and mortality of the disease resemble those for flu more than those for severe infections such as rubella and measles. One proponent in Bulgaria is Dr. Stoycho Katsarov, chair of the Center for Protection of Rights in Healthcare, who since the very start of the pandemic has emphasized what he sees as a disproportionate response to the infection, one that in his view creates greater risks than the disease itself.

What distinguishes this reading is that it rests on accurate facts and calls into question the actions of the authorities not only in Bulgaria but worldwide, so it sounds like a well-argued and logical critique. Yet the theory, which often interprets the numbers in a distorted way, overlooks one key detail: from the very start of the crisis, the aggressive measures against the spread of SARS-CoV-2 have been based not on what world science knows about the virus but on what it does not know.

"Case fatality" and "severe illness"

How many of those infected with the new coronavirus become severely ill? And how many die?

Estimating these two parameters is one way to answer the question of how serious a disease is. The Ebola virus, for example, causes exceptional alarm because this year's outbreak alone has killed about half of those affected, and over the years its fatality rate has ranged between 25% and 90%.

It is still hard to make such calculations precisely for the spread, severity and mortality of COVID-19, because the disease is new. In early August, the WHO published a scientific brief explaining in detail how the fatality of a disease is determined. The authors explain that two different ratios are tracked: the IFR (infection fatality ratio), which is the number of deaths divided by the total number of infected people, and the CFR (case fatality ratio), which is the number of deaths divided by the number of confirmed cases. Because the two ratios are calculated differently, reported "mortality" figures range from below 0.1% to over 25%.
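The difference between the two ratios can be sketched in a few lines of Python. This is a minimal illustration, not a method taken from the WHO brief: the function name `fatality_ratio` and all of the numbers are hypothetical and chosen only to show how the same death count yields very different percentages depending on the denominator.

```python
def fatality_ratio(deaths: int, denominator: int) -> float:
    """Return deaths divided by the denominator, as a percentage."""
    if denominator <= 0:
        raise ValueError("denominator must be positive")
    return 100.0 * deaths / denominator


# Hypothetical figures, for illustration only (not real data).
deaths = 500
confirmed_cases = 10_000        # laboratory-confirmed cases
estimated_infections = 100_000  # model-based estimate of all infections

# CFR: deaths relative to confirmed cases.
cfr = fatality_ratio(deaths, confirmed_cases)
# IFR: deaths relative to all infections, confirmed or not.
ifr = fatality_ratio(deaths, estimated_infections)

print(f"CFR: {cfr:.1f}%")
print(f"IFR: {ifr:.1f}%")
```

With these made-up inputs the CFR is ten times the IFR, simply because only one in ten assumed infections was confirmed. The less testing there is, the wider this gap becomes.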

On the other hand, estimating the likely number of infected people is itself the product of assumptions and conjecture, not an exact count. To illustrate the problem, the site Our World in Data published a chart with United States data on confirmed coronavirus cases alongside estimates of the true number of infections from four of the most widely used mathematical models. All four models show that the number of people actually infected is probably far higher than the number of confirmed cases. On the questions of exactly how many are infected and how the disease develops over time, however, the models produce entirely different results.

More than one scientific publication notes that the considerable differences between age groups must be taken into account, because averaging the case fatality ratio across them would also lead to incorrect conclusions.
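A small Python sketch shows why a single averaged figure can mislead. The age bands and counts below are invented for illustration and do not come from any study; the point is only that one overall ratio can hide large differences between groups.

```python
# Hypothetical (cases, deaths) per age band; not real data.
groups = {
    "0-39": (6000, 6),
    "40-69": (3000, 60),
    "70+": (1000, 150),
}


def cfr_percent(cases: int, deaths: int) -> float:
    """Case fatality ratio as a percentage of confirmed cases."""
    return 100.0 * deaths / cases


# CFR computed separately for each age band.
per_group = {name: cfr_percent(c, d) for name, (c, d) in groups.items()}

# Crude CFR: all deaths over all cases, ignoring age.
total_cases = sum(c for c, _ in groups.values())
total_deaths = sum(d for _, d in groups.values())
crude = cfr_percent(total_cases, total_deaths)

for name, value in per_group.items():
    print(f"{name}: {value:.1f}%")
print(f"crude: {crude:.2f}%")
```

In this made-up example the crude figure is about 2%, while the per-group values range from 0.1% to 15%, so the average describes none of the groups well.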

A "severe course" of COVID-19 is defined by specific medical criteria set out by international professional organizations. One of the symptoms most easily recognized by non-specialists is respiratory failure. There is still no firm scientific consensus on the risk of severe illness, because differences are reported not only between age groups but also with other factors, such as underlying conditions, and even between countries.

Despite all these caveats, a recent publication in the medical journal The Lancet estimates that about 4% of the world's population is probably at risk of severe COVID-19, with the risk for men (6%) twice as high as for women (3%). The share of vulnerable groups is largest in countries with aging populations, in African countries with a high prevalence of HIV/AIDS, and in small island states where diabetes is widespread. Diabetes and chronic kidney, cardiovascular and respiratory diseases turn out to be key factors in assessing the risk.

At this stage of the pandemic, mathematical models cannot give precise answers. But the nine months since the start of the coronavirus crisis have been enough to show that the concerns about the new disease may prove to be long-term.

Accounts of illness lasting 10 to 12 weeks, or more than 15, are emerging in different parts of the world. Such cases have been documented by international media and described on social networks. Science has also confirmed some of the long-term complications attributed to COVID-19 and ruled out others. Although we have learned a great deal about the coronavirus since the start of the pandemic, many questions about the course of the disease remain unanswered.

So the information currently available on the share of deaths, and on people who survive a severe course of the disease, may prove insufficient for an overall assessment of how serious it is. One thing is certain, though: it is becoming ever harder to file COVID-19 under "mild cold".

The long-term effects of COVID-19 and why they matter

Mayo Clinic, one of the most prestigious hospital networks in the United States, recently published an article summarizing in accessible language the most serious long-term complications of COVID-19 confirmed so far. It notes that most people who have had the disease recover fully within a few weeks. In others, however, including some who had a mild case, symptoms persist even after the initial recovery. Most often they continue to suffer from fatigue, cough, shortness of breath, headache and joint pain. The Mayo Clinic team also lists the vulnerable organs and systems: the heart, lungs, brain and circulatory system.

The initial belief that healthy young people are not particularly at risk from the disease is also being refuted. Studying mild cases is especially challenging for clinicians, because many patients report that some symptoms persist long after they are officially considered recovered.

Because diagnostics are insufficient, and in some places absent altogether, tracking and studying complications is often hampered. A survey carried out in the Netherlands, for example, found that almost three months after their first symptoms, 9 out of every 10 respondents reported difficulty with ordinary daily activities. A total of 1,622 people with suspected coronavirus infection took part, but 91% had not been hospitalized and 43% had never been diagnosed by a doctor.

A study by King's College London suggests that only 52% of those who have had the disease in the United Kingdom recovered in under 13 days. The study covers both severe cases treated in intensive care and people who went through the illness at home with mild symptoms. Another English study, published in the specialist neurology journal Brain, reports damage to the nervous system caused by COVID-19 and finds that some of the complications are unrelated to whether the illness was mild or severe.

The study included people in whom the disease produced no respiratory symptoms at all and neurological disorders were the first and only symptom of COVID-19. It documents the cases of 43 people aged between 16 and 85 with various forms of brain damage following the infection.

In the summer, a report by the British broadcaster Sky News described the hospital in the Italian city of Bergamo, one of the areas hit hardest by the infection anywhere in the world. Local doctors are following up people treated for COVID-19 during the worst weeks of the epidemic in March and April. Psychosis, insomnia, kidney disease, spinal infections, strokes, chronic fatigue and mobility problems are among the most serious complications that remained after patients were cured, the Italian doctors told the broadcaster. They stress that in their view this is a systemic infection that affects every organ in the body, not a respiratory disease as was thought at the start of the pandemic. The proactive search for patients was prompted by an earlier small study indicating that more than 87% of patients have at least one symptom that does not go away even after they are considered cured.

Like any new phenomenon, this disease is creating a vocabulary of its own. The cases described above have come to be known collectively as long COVID. The term arose spontaneously, because countries with widespread transmission, such as the United Kingdom and the United States, saw many patients who reported prolonged ill health but went untested for a very long time. The medical community has adopted the term as well: in early September the prestigious British Medical Journal even held a webinar for specialists with advice on how to diagnose and treat patients with long COVID.

Serious, but not hopeless

The evidence that many patients have long-lasting symptoms, at a time when no specialized treatment exists, is prompting specialists to look for alternative solutions. A CNN report from the International Congress of the European Respiratory Society describes a study that offers hope of faster recovery through targeted physical and respiratory rehabilitation. One notable detail is that rehabilitation also has a positive effect on psychological and neurological symptoms such as depression and anxiety.

We should not forget, however, that far from all the questions about COVID-19 have been answered, and research may yet again change clinicians' understanding of the nature of the disease, its course and its treatment.

Cover illustration: © Penyu Kiratsov