Post Syndicated from Crosstalk Solutions original https://www.youtube.com/watch?v=YtX9_3sY7GM

Deputy Minister of Agriculture hunts with construction boss Krasi Karoto

Post Syndicated from Николай Марченко original https://bivol.bg/%D0%B7%D0%B0%D0%BC-%D0%BC%D0%B8%D0%BD%D0%B8%D1%81%D1%82%D1%8A%D1%80-%D0%BD%D0%B0-%D0%B7%D0%B5%D0%BC%D0%B5%D0%B4%D0%B5%D0%BB%D0%B8%D0%B5%D1%82%D0%BE-%D0%BB%D0%BE%D0%B2%D1%83%D0%B2%D0%B0-%D1%81%D1%8A.html

The names of the former executive director of “Lukoil Bulgaria” Valentin Zlatev, of the member of the supervisory board of “D Commerce Bank” AD Fuat Güven, and of the co-owner of the construction company “Karo Trading” Krasimir…

Run Faster Log Searches With InsightIDR

Post Syndicated from Teresa Copple original https://blog.rapid7.com/2022/03/11/run-faster-log-searches-with-insightidr/

While it could be true that life is more about seeking than finding, log searches are all about getting results. You need to get the right data back as quickly as possible. In this blog, let’s explore how to make the best use of InsightIDR’s Log Search capabilities to get the correct data returned to you as fast as possible.

First, you need an understanding of how Rapid7’s InsightIDR Log Search feature works. You may even want to review this doc to familiarize yourself with some of the newer search functionality that has been recently released and to understand some of the Log Search nuances discussed here.

The basics

Let’s begin by looking at how the Rapid7 InsightIDR search engine extracts data. The search engine processes both structured and unstructured log data and extracts valuable fields into key-value pairs (KVPs) whenever it can. These normalized fields of data, or KVPs, allow you to search the data more efficiently.

While the normalized fields of data are typically the same for similar types of logs, in InsightIDR they are not normalized across the product. That is, you’ll see the same extracted fields, or keys, pulled out for logs in the same Log Set, but the extracted fields and key names used in other Log Sets may be different.

As everyone who has spent any amount of time looking at log data knows, individual log entries can be all over the place. Some vendors have great logs that contain structured data with all the valuable information that you need, but not all products do this. Sometimes the logs consist, at least in part, of unstructured data. That is, the logs are not in KVP or cannot be easily broken into distinct fields of data.

The Rapid7 search engine automatically identifies keys and KVPs in both structured and unstructured data to make the data searchable. This allows you to search for any value or text appearing in your log lines without creating a dedicated index. That is, you can search by specifying either just text like “ksmith” or “/.*smith.*/”, or you can search with the KVP specified – for example “destination_account=ksmith” – with equal ease in the search engine. However, is one of these searches better than the other? Let’s keep going to answer that question.

As InsightIDR is completely cloud-native, the architecture is designed to take advantage of many cloud-native search optimizations, including shared resources and auto-scaling. Therefore, in terms of search performance, a number of specialized algorithms are used that are designed to search for data across millions of log lines. These include optimizations to find needle-in-a-haystack entries, statistical algorithms for specific functions (e.g. for percentile), parallelization for aggregate operations, and regular expression optimizations. How quickly the results are returned can vary based on the number of logs, the number of (matching) loglines in that time range, the particular query, and the nature of the data – e.g. the number of keys and values in a logline.

Did you know that statistics on the last 100 searches that have been performed in your InsightIDR instance are available in the Settings section of InsightIDR? Go to Settings -> Log Search -> Log Search Statistics to view them. In addition to basic information, such as when the query completed and how long it took, you can also use the “index factor” that is provided to determine how efficient your query is. The index factor is a value from 0 to 100 that represents how much the indexing technology was used during the search. The higher the index value, the more efficient the search is. The Log Search Statistics page is especially helpful if you want to optimize a query you will be running against a large data set or using frequently.

How to improve your searches

As you can see, the Log Search query performance can be influenced by a number of factors. While we discuss some general considerations in this section, keep in mind that for queries that run frequently, you may want to test out different options to find what works best for your logs.

General recommendations

Here are some of the best ways to speed up your log searches:

- Specify smaller time ranges. Longer time ranges add to the amount of time the query will take because the search query is analyzing a larger number of logs. This can easily be several hundred million records, such as with firewall logs.

- Search across a single Log Set at a time. Searching across different Log Sets usually slows down the search, as different types of logs may have different key-value pairs. That is, these searches often cannot be optimally indexed.

- Add functions only as needed. Searches with only a where() search specified are faster than searches with groupby() and calculate().

- Use simple queries when possible. Simple queries return data faster. Complex queries with many parts to calculate are often slower.

- Consider the amount of data being searched. Both the number of log entries that are being searched and the size of the log should be considered. As the logs are stored in key-value pairs, the more keys that the logs have, the slower they are to search.
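One way to act on the "smaller time ranges" recommendation is to break a long investigation window into shorter ones and query each in turn. The sketch below only computes the windows; `split_time_range` is a hypothetical helper, not part of any InsightIDR API, and whether several short searches beat one long search is something to test against your own logs.

```python
from datetime import datetime, timedelta
from typing import Iterator, Tuple


def split_time_range(start: datetime, end: datetime,
                     window: timedelta) -> Iterator[Tuple[datetime, datetime]]:
    """Yield consecutive (from, to) windows that together cover [start, end)."""
    if window <= timedelta(0):
        raise ValueError("window must be positive")
    cursor = start
    while cursor < end:
        # The last window is shortened so it never runs past `end`.
        upper = min(cursor + window, end)
        yield cursor, upper
        cursor = upper


# Hypothetical example: one week of logs searched in two-day slices.
windows = list(split_time_range(datetime(2022, 3, 1),
                                datetime(2022, 3, 8),
                                timedelta(days=2)))
for frm, to in windows:
    print(frm.isoformat(), "->", to.isoformat())
```

If the query uses groupby() or calculate(), the per-window results would still need to be combined afterwards, so this approach fits where()-only searches best.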

The old adage about deciding if you want your result to be fast, cheap, or good applies here, too — except that with log searches, the triad that influences your results is fast, amount of data to be searched, and complexity of the query. With log searches, these tradeoffs are important. If you are searching a Log Set with large logs, such as process start events, then you may have to decide which optimization makes the most sense: Should you run your search against a smaller time range but still use a complex query with functions? Or would you rather search a longer time range but forgo the groupby() or calculate()? Or would you rather search a long time range using a complex query and then just wait for the search to complete?

If you need to search across Log Sets for a user, computer, IP address, etc., then maybe it makes more sense to build a dashboard with cards for the data points that you need instead of using Log Search. Use the filter option on the dashboard to specify the user, computer, etc. on which you need to filter. In fact, a great dashboard collection might just be your iced dirty chai latte, the combination that solves most of your log search challenges all at once. If you haven’t already done so, you may want to check out the new Dashboard Libraries, as more are being added every month, and they can make building out a useful dashboard almost instantaneous.

Specifying keys vs. free text

It is an interesting paradox of Log Search that specifying a key as part of the search does not always improve the search speed. That is, it is sometimes faster to use a key like “destination_account=ksmith,” but not always. When you specify a key-value-pair to search, then the log entries must be parsed for the search to complete, and this can be more time-consuming than just doing a text search.

In general, when the term appears infrequently, running a text-based search (e.g. /ksmith/) is usually faster.

Also, you may get better results searching for only a text value instead of searching a specific key. That is, this query:

where(FAILED_OTHER)

… might be more efficient and run faster than this query:

where(result=FAILED_OTHER)

Of course, this only applies if the value will not be part of any of the other fields in the log entry. If the value might be part of other fields, then you will need to specify the key in order for the results to be accurate.

Expanding on this further, the more specific you can be with the value, the faster the results will be returned. Be specific, and specify as much of the text as possible. A search that contains a very specific value with no key specified is often the fastest way to search, although you should test this with your particular logs to see what works best with them.

The corollary to this is that partial-match searches tend to be slower than searches that specify a full value. That is, searching for /adm_ksmith/ will be faster than /adm_.*/. Case-insensitive searches, by contrast, are only slightly slower than case-sensitive ones. “Loose” searches, those that are both partial and case-insensitive, are slower, largely because of the partial match. However, these types of searches are usually not so slow that you should try to avoid them.

Contradictorily, it is also sometimes the case that specifying a key to search rather than free text can greatly improve indexing and therefore reduce the search times. This is particularly true if the term you are searching appears frequently in the logs.
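The accuracy caveat above (a free-text term can also match inside other fields) is easy to see with a toy model. The sketch below is plain Python over made-up log lines. It does not reflect how the InsightIDR engine parses or indexes data, and the field names and values are hypothetical.

```python
def parse_kvps(line: str) -> dict:
    """Split a 'key=value key=value' line into a dict (toy parser)."""
    return dict(pair.split("=", 1) for pair in line.split())


def text_search(lines, term):
    """Free-text search: a plain substring test, no parsing needed."""
    return [line for line in lines if term in line]


def key_search(lines, key, value):
    """Key search: every line is parsed before the value is compared."""
    return [line for line in lines if parse_kvps(line).get(key) == value]


# Hypothetical sample data.
logs = [
    "user=ksmith result=FAILED_OTHER service=vpn",
    "user=jdoe result=SUCCESS service=vpn",
    "user=adm_ksmith result=SUCCESS service=ldap",
]

text_hits = text_search(logs, "ksmith")        # also matches adm_ksmith
key_hits = key_search(logs, "user", "ksmith")  # matches only the exact user
print(len(text_hits), len(key_hits))
```

The free-text version skips the parsing step but returns the extra adm_ksmith line, which is the trade-off described above: drop the key only when the value cannot appear anywhere else in the entry.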

Additional log search tips

Here are some other ways to improve your search.

- Check to see if a field exists before grouping on it. Some fields (the key part of the key-value pair) do not exist in every log entry. If you run groupby on a field and it doesn’t always exist, the query will run faster if you first verify that the field is part of the logs that are being grouped on. Example:

where(direction)groupby(direction)

- Which logical operators you use can make a big difference in your search results. AND is recommended, as it filters the data, resulting in fewer logs that need to be searched. In other words, AND improves the indexing factor of the search. OR should be avoided if possible, as it will match more data and slow down the search. In general, less data can be indexed when the search includes an OR logical operator. You do need to use common sense, because depending on your search criteria, it may be that you need to use OR.

- Avoid using a not-equal whenever possible. In general, when you are searching for specific text, the indexer is able to skip over chunks of log data and work efficiently. In order to search for a “not equal to,” every entry must be checked. The “not-equal” expressions are NOT, !=, and !==, and they should be avoided whenever possible. Again, use common sense, because your query may not work unless you use a “not-equal.”

- The order that you specify text in the query is not important. That is, the queries are not evaluated left-to-right — rather, the entire query is first evaluated to determine how it can be best indexed.

- Using a regular expression is usually not slower than using a native LEQL search.

For example, a search like

where(/vpn asset.*/i)

… is a perfectly fine search.

However, using logical operators in the regular expression will make the search slower for exactly the same reason that they can make a regular search slower. In addition, logical operators, especially “|” (logical OR), can have a bigger impact in regular expression searches, as they disable the use of the index. For example, a query like this:

where(geoip_country_name=/China|India/)

… should be avoided if possible. Instead, use this query:

where(geoip_country_name=/China/ OR geoip_country_name=/India/)

You could also use the functions IN or IIN:

where(geoip_country_name IN [China,India])
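To picture why an equality or IN test can lean on an index while a not-equal test has to look at every entry, here is a toy value-to-position index in Python. It is a simplified illustration under my own assumptions, not a description of InsightIDR's actual indexing, and the sample entries are made up.

```python
from collections import defaultdict

# Hypothetical sample entries.
entries = [
    {"geoip_country_name": "China"},
    {"geoip_country_name": "India"},
    {"geoip_country_name": "France"},
    {"geoip_country_name": "India"},
]

# Toy index: value -> positions of the entries holding that value.
index = defaultdict(set)
for pos, entry in enumerate(entries):
    index[entry["geoip_country_name"]].add(pos)


def lookup_in(values):
    """IN-style match: a union of index lookups; no entry is inspected."""
    hits = set()
    for value in values:
        hits |= index.get(value, set())
    return sorted(hits)


def lookup_not_equal(value):
    """Not-equal match in this toy model: every entry is inspected."""
    checked = 0
    hits = []
    for pos, entry in enumerate(entries):
        checked += 1
        if entry["geoip_country_name"] != value:
            hits.append(pos)
    return hits, checked


in_hits = lookup_in(["China", "India"])
ne_hits, ne_checked = lookup_not_equal("France")
print(in_hits, ne_hits, ne_checked)
```

Both calls return the same positions here, but the IN version gets there with two dictionary lookups while the not-equal version touches all four entries.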

To summarize how the indexing works, let’s look at a Log Search query that I have constructed:

where(direction=OUTBOUND AND connection_status!=DENY AND destination_port=/21|22|23|25|53|80|110|111|135|139|143|443|445|993|995|1723|3306|3389|5900|8080/)groupby(geoip_organization)

Should this query be optimized? The first thing that the log search evaluator will do is to determine if any of the search can be indexed.

In looking at the components of the search, it has three computations that are all being ANDed together: “direction=OUTBOUND,” “connection_status!=DENY,” and then the port evaluation. Remember, AND is good since it can reduce the amount of data that must be evaluated. “direction=OUTBOUND” can be indexed and will reduce the amount of data against which the other computations must be run. “connection_status!=DENY” cannot be indexed since it contains “not equal” — in other words, every log entry must be checked to determine if it contains this KVP. However, the connection_status computation is vital to how this query works, and it cannot be removed.

Is there a way to optimize this part of the query? The “connection_status” key has only two possible values, so it can easily be changed to an equal statement instead of a not-equal. Also, not all firewall logs have this field, so we can add a check to the query that the field exists. Finally, the destination_port search is not optimal, as it contains a long series of OR computations. This computation is also an important criterion for the search, and it cannot be removed. However, it could be improved by replacing the regular expression with the IN function.

where(direction=OUTBOUND AND connection_status AND connection_status=ACCEPT AND destination_port IN [21,22,23,25,53,80,110,111,135,139,143,443,445,993,995,1723,3306,3389,5900,8080])groupby(geoip_organization)
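If you have many queries with long regular-expression alternations, the rewrite to IN can be done mechanically. The following is a minimal sketch of that string transformation; `alternation_to_in` is a hypothetical helper written for this post, and it deliberately refuses anything that is not a list of plain literal values.

```python
import re


def alternation_to_in(key: str, pattern: str) -> str:
    """Rewrite a /a|b|c/ alternation of literal values as 'key IN [a,b,c]'."""
    body = pattern.strip("/")
    values = body.split("|")
    for value in values:
        # Only plain literals are safe to move into an IN list.
        if not re.fullmatch(r"[A-Za-z0-9_-]+", value):
            raise ValueError(f"not a plain literal: {value!r}")
    return f"{key} IN [{','.join(values)}]"


clause = alternation_to_in("destination_port", "/21|22|23/")
print(clause)
```

Note that the sketch assumes each alternative is meant as a whole value. If the regular expression is evaluated as a partial match, /21|22/ could also match a port such as 2121, whereas the IN list would not, so it is worth comparing the two forms on your own logs.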

Will this change improve the search greatly? The best way to find out is to test the searches with your own log data. However, keep in mind that “direction=OUTBOUND” will be evaluated first, because it can be indexed. In addition, since in these particular logs (firewall logs), this first computation greatly reduces the number of log entries left to be evaluated, other optimizations to the query will not greatly enhance the speed of the search. That is, in this particular case, both queries take about the same amount of time to complete.

However, the search might run faster without any keys specified. Could I remove them and speed up my search? Given the nature of the search, I do need to keep “connection_status” and “destination_port” as the values in these fields can occur in other parts of the logs. However, I could remove “direction” and run this search:

where(OUTBOUND AND connection_status!=DENY AND destination_port IN [21,22,23,25,53,80,110,111,135,139,143,443,445,993,995,1723,3306,3389,5900,8080])groupby(geoip_organization)

In fact, this query runs about 30% faster than those with “direction=” key specified.

Let’s look at a second example. I want to find all the failed authentications for all the workstations on my 10.0.2.0 subnet. I can run one of these three searches:

where(source_asset_address=/10\.0\.2\..*/ AND result!=SUCCESS)groupby(source_asset_address)

where(source_asset_address=/10\.0\.2\..*/ AND result=/FAILED.*/)groupby(source_asset_address)

where(source_asset_address=/10\.0\.2\..*/ AND result IN [FAILED_BAD_LOGIN,FAILED_BAD_PASSWORD,FAILED_ACCOUNT_LOCKED,FAILED_ACCOUNT_DISABLED,FAILED_OTHER])groupby(source_asset_address)

Which one is better? Since the first one uses a “not equal” as part of the computation, the percentage of the search data that can be indexed will be less than the other two searches. However, the second search has a partial match (/FAILED.*/) versus the full match of the first search. Partial searches are slower than specifying all the text to be matched. Finally, the third search avoids both the “no-equal” and a partial match by using the IN function to list all the possible matches that are valid.

As you might have guessed, the third search is the winner, completing slightly faster than the first search but more than twice as fast as the second one. If you are searching a large set of data over a long period of time, the third search is definitely the best one to use.
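One thing to check before swapping one of these searches for another is that they really select the same entries. The sketch below models the three result tests as plain Python predicates; it assumes the result field only ever takes the values listed in the third query plus SUCCESS, which may not hold for your logs.

```python
import re

FAILED_VALUES = {
    "FAILED_BAD_LOGIN",
    "FAILED_BAD_PASSWORD",
    "FAILED_ACCOUNT_LOCKED",
    "FAILED_ACCOUNT_DISABLED",
    "FAILED_OTHER",
}


def not_success(result: str) -> bool:
    """Models result!=SUCCESS."""
    return result != "SUCCESS"


def failed_pattern(result: str) -> bool:
    """Models result=/FAILED.*/ as an unanchored match (an assumption)."""
    return re.search(r"FAILED.*", result) is not None


def in_failed_list(result: str) -> bool:
    """Models result IN [...] with the five listed values."""
    return result in FAILED_VALUES


# With only the expected values, all three predicates agree.
observed = sorted(FAILED_VALUES | {"SUCCESS"})
agree = all(
    not_success(r) == failed_pattern(r) == in_failed_list(r) for r in observed
)

# A hypothetical unexpected value splits them apart.
unknown_differs = not_success("UNKNOWN") != in_failed_list("UNKNOWN")
print(agree, unknown_differs)
```

In other words, the IN version is only a drop-in replacement for the not-equal version if the list of failure values is complete.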

How data is returned to the Log Search UI

Finally, although it is not related to log search speed, you might be curious about how data gets returned into the Log Search UI. As the log search query runs, as long as there are no errors, it will continue to pull back data to be returned for the search. For searches that do not contain groupby() or calculate(), results will be returned to the UI as the search runs. However, if groupby() or calculate() are part of the query, these functions are evaluated against the entire search period. Therefore, partial results are not possible.
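The difference between the two behaviours can be sketched with a generator and an aggregate in Python. This is a toy model of the idea only, with made-up entries, and not a description of how the Log Search backend is implemented.

```python
from collections import Counter
from typing import Callable, Dict, Iterable, Iterator


def where_only(entries: Iterable[dict],
               predicate: Callable[[dict], bool]) -> Iterator[dict]:
    """Yield each match as soon as it is found, so results can stream."""
    for entry in entries:
        if predicate(entry):
            yield entry


def where_groupby(entries: Iterable[dict],
                  predicate: Callable[[dict], bool],
                  key: str) -> Dict[str, int]:
    """Counts are only final once every entry has been read."""
    counts: Counter = Counter()
    for entry in entries:
        if predicate(entry):
            counts[entry[key]] += 1
    return dict(counts)


# Hypothetical sample entries.
entries = [
    {"direction": "OUTBOUND", "geoip_organization": "ExampleNet"},
    {"direction": "INBOUND", "geoip_organization": "ExampleNet"},
    {"direction": "OUTBOUND", "geoip_organization": "OtherOrg"},
    {"direction": "OUTBOUND", "geoip_organization": "ExampleNet"},
]


def is_outbound(entry: dict) -> bool:
    return entry["direction"] == "OUTBOUND"


stream = where_only(entries, is_outbound)
first_match = next(stream)  # available before the rest has been scanned
totals = where_groupby(entries, is_outbound, "geoip_organization")
print(first_match, totals)
```

The first match is usable immediately, while the grouped totals would be wrong if they were reported before the last entry was counted.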

If the search results cannot be returned because of an error, such as a search that cannot be computed or a rate-limiting error with a groupby() or calculate() function, then instead of the data being returned, you will see an error in the Log Search UI.

Hopefully, this blog has given you a better sense of how the Log Search search engine works and provided you with some practical tips, so you can start running faster searches.


Women at AWS – Diverse backgrounds make great solutions architects

Post Syndicated from Jigna Gandhi original https://aws.amazon.com/blogs/architecture/women-at-aws-diverse-backgrounds-make-great-solutions-architects/

This International Women’s Day, we’re featuring more than a week’s worth of posts that highlight female builders and leaders. We’re showcasing women in the industry who are building, creating, and, above all, inspiring, empowering, and encouraging everyone—especially women and girls—in tech.

Thinking about becoming a Solutions Architect, but not sure where to start? Wondering if your work experience and skills qualify you for the role? Let us help!

We’re Solutions Architects at Amazon Web Services (AWS). In this post, we’ll cover what Solutions Architects do, what got us interested in being Solutions Architects, and what skills and resources you might need to be successful in the role.

We also share our different career backgrounds and how we ended up pursuing careers as Solutions Architects. Let our experiences be your guide.

What do Solutions Architects do?

We work with enterprise customers from various industries and bring our unique technical and business knowledge to provide technical solutions that use AWS services.

Being a Solutions Architect is a combination of technical and sales roles; the technical aspect is 60-70% and the sales aspect is 30-40%.

As Solutions Architects, we provide technical guidance to customers on how they can achieve business outcomes by using cloud technology. The role requires strong business acumen to understand each stakeholder’s motivation, as well as technical skills to provide guidance.

What we’re working on right now

Despite having the same job title, we all work with different technologies across different industries.

Jigna works with Digital Native Business customers (cloud native/customers who started in the cloud). She helps them apply best practices for AWS services and guides them in implementing complex workloads on AWS. You can see some of her recent work in action here:

- How to build a persona-centric data platform on AWS for analytics and machine learning with a seven-layered approach

- Scripting Aurora failover tasks for multi-Region applications using Amazon Aurora database

- Reference architecture on building operational analytics on AWS modern data architecture

Jennifer works with enterprise customers to understand their business requirements and provide technical solutions that align with their objectives. See what she’s co-written recently:

- Automate Amazon OpenSearch Service synonym file updates

- Practical Entity Resolution on AWS to Reconcile Data in the Real World

Cheryl works with AWS enterprise customers. Her core area of focus is serverless technologies. Lately, she has presented in AWS She Builds Tech Skills and has co-authored multiple blogs:

- AWS She Builds Tech Skills – Episode 5 – Building Event Driven Architectures

- Using AWS Serverless to Power Event Management Applications

- Implementing Multi-Region Disaster Recovery Using Event-Driven Architecture

Sanjukta works with Greenfield customers (enterprises in early stages of AWS adoption) and helps them accelerate initial workloads and lays the foundations to help them scale their AWS usage to innovate and modernize their applications. She collaborates with AWS internal teams for:

- Adoption of AWS Solutions for US Northeast customers

- Contributing towards mainframe-focused opportunities for Greenfield customers

What got you interested in this role?

We all started in different roles and had limited exposure to cloud technologies, but we all had one thing in common. We were curious and wanted to learn. Being in this career means that you’re continuously learning and researching about current and emerging technologies.

As we progressed in our careers, we expanded our technical knowledge and skills. Most of us had technical depth in a few areas, such as development or architecture.

We all continued on various career paths and received on-the-job training or acquired external certifications that led us to explore Solutions Architecture.

As enterprises adopted cloud technologies, we knew that our ability to adapt to the changing technical landscape along with our industry experience would enable us to better assist customers with their needs to provide the best technical solutions.

How did we get here?

We have prior experience working on analytics, application development, infrastructure, and legacy technologies across financial services, healthcare, retail, and gaming industries. We use this expertise to help AWS customers with similar needs.

Strengthening your individual experience will help you become a Solutions Architect.

Jigna has a Bachelor of Engineering in Information Technology. She has held multiple roles ranging from software engineer, cloud engineer, to technical team lead. She has worked with several enterprise customers on their requirements, technical designs, and implementation.

Driven by her passion for helping clients in their business and technology endeavors, she decided to become a Solutions Architect.

Jennifer has a BS in Information Systems. She worked in the financial services industry, where she held various architecture and implementation roles.

She became a Solutions Architect because she was interested in working with a wide range of technical services to provide complete solutions for business applications.

Cheryl has a BS in Physics, Chemistry, and Mathematics and a Post BS Diploma in Computer Science. She has led several complex, highly robust and massively scalable software solutions for large-scale enterprise applications. She has worked as a software engineer and in several other roles in IT.

She became a Solutions Architect because she wanted to use her technical and communication skills to partner successfully with her business counterparts to meet their objectives.

Sanjukta has a BS in Computer Application and an MS in Software Engineering. She has worked on and led several mainframe projects for financial and healthcare enterprises.

As companies retired their legacy applications, she pursued external trainings and certifications to learn Solutions Architecture to help customers in their migration journey.

What skills do I need?

There is no one set of skills that fits all when it comes to being a Solutions Architect.

You do not need to meet all these requirements right now, but they are good skills to develop over time:

- Technical Knowledge: Good knowledge of how different technical components work together is beneficial. This includes networking, database, storage, analytics, etc.

- Communication: Even though this is a soft skill, learning and practicing how to communicate clearly and confidently will enable you to be successful in customer engagements.

- Domain Knowledge: Developing a command over a few domains like retail, financial services, healthcare, etc., is useful, but you don’t need an in-depth knowledge about all domains, industries, or technologies.

- Architecture Design: System architecture defines its major components, their relationships, and how they interact with each other.

- Resourcefulness: Be curious. Wanting to learn new things is an absolute necessity when you want to be an SA. You may not know all the answers, but being willing and able to find the answers and solve problems is what sets you apart and helps you excel in this field.

Can you give me some resources to get started?

There were various resources we used to develop our skills at AWS:

- AWS Cloud Practitioner Essentials is a good starting point.

- AWS Skill Builder offers online courses and classroom training to learn new topics and sharpen our skills.

- AWS Certifications will help you reinforce knowledge.

Conclusion

Role models and mentors helped us gravitate toward this role. Our colleagues and managers inspired us to broaden our horizons and look beyond our current roles. We encourage you to do the same.

No matter where you’ve started in your career, your talent and experience can be an asset to customers.

Ready to get started?

Interested in applying for a Solutions Architecture role?

We’ve got more content for International Women’s Day!

For more than a week we’re sharing content created by women. Check it out!

- Deploying service-mesh-based architectures using AWS App Mesh and Amazon ECS from Kesha Williams, an AWS Hero and award-winning software engineer.

- A collection of several blog posts written and co-authored by women

- Curated content from the Let’s Architect! team and a live Twitter chat

- Another post on Building your brand as an SA


[$] Random numbers and virtual-machine forks

Post Syndicated from original https://lwn.net/Articles/887207/

One of the key characteristics of a random-number generator (RNG) is its unpredictability; by definition, it should not be possible to know what the next number to be produced will be. System security depends on this unpredictability at many levels. An attacker who knows an RNG’s future output may be able to eavesdrop on (or interfere with) network conversations, compromise cryptographic keys, and more. So it is a bit disconcerting to know that there is a common event that can cause RNG predictability: the forking or duplication of a virtual machine. Linux RNG maintainer Jason Donenfeld is working on a solution to this problem.

The 1994 Smartwatch That Syncs with a CRT – LGR Oddware

Post Syndicated from LGR original https://www.youtube.com/watch?v=GCHHzw4s5W4

Seven new stable kernels

Post Syndicated from original https://lwn.net/Articles/887636/

Greg Kroah-Hartman has announced the release of seven stable kernels; these contain mitigations for the Spectre branch history injection variant: 5.16.14, 5.15.28, 5.10.105, 5.4.184, 4.19.234, 4.14.271, and 4.9.306. Users should upgrade.

Security updates for Friday

Post Syndicated from original https://lwn.net/Articles/887635/

Security updates have been issued by Debian (nbd, ruby-sidekiq, tryton-proteus, and tryton-server), Mageia (shapelib and thunderbird), openSUSE (minidlna, python-libxml2-python, python-lxml, and thunderbird), Oracle (kernel, kernel-container, and python-pip), Red Hat (.NET 5.0, .NET 6.0, .NET Core 3.1, firefox, kernel, and kernel-rt), Scientific Linux (firefox), SUSE (openssh, python-libxml2-python, python-lxml, and thunderbird), and Ubuntu (expat, firefox, and subversion).

The Great Blizzard of 1888

Post Syndicated from The History Guy: History Deserves to Be Remembered original https://www.youtube.com/watch?v=VhTEL1O2D1w

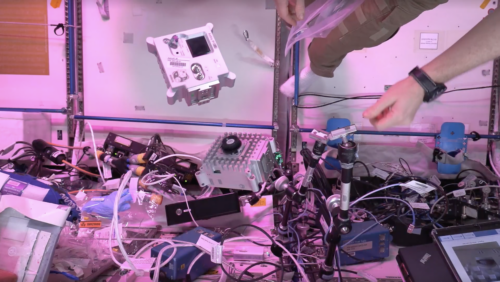

3D print your own replica Astro Pi flight case

Post Syndicated from Richard Hayler original https://www.raspberrypi.org/blog/3d-print-astro-pi-flight-case-mark-ii/

We’ve put together a new how-to guide for 3D printing and assembling your own Astro Pi unit replica, based on the upgraded units we sent to the International Space Station in December.

The Astro Pi case connects young people to the Astro Pi Challenge

It wasn’t long after the first Raspberry Pi computer was launched that people started creating the first cases for it. Over the years, they’ve designed really useful ones, along with some very stylish ones. Without a doubt, the most useful and stylish one has to be the Astro Pi flight case.

This case houses the Astro Pi units, the hardware young people use when they take part in the European Astro Pi Challenge. Designed by the amazing Jon Wells for the very first Astro Pi Challenge, which was part of Tim Peake’s Principia mission to the ISS in 2015, the case has become an iconic part of the Astro Pi journey for young people.

As Jon says: “The design of the original flight case, although functional, formed an emotional connection with the young people who took part in the programme and is an engaging and integral part of the experience of the Astro Pi.”

People love to 3D print Astro Pi cases

Although printing an Astro Pi case is absolutely not essential for participating in the European Astro Pi Challenge, many of the teams of young people who participate in Astro Pi Mission Space Lab, and create experiments to run on the Astro Pi units aboard the ISS, do print Astro Pi cases to house the hardware that we send them for testing their experiments.

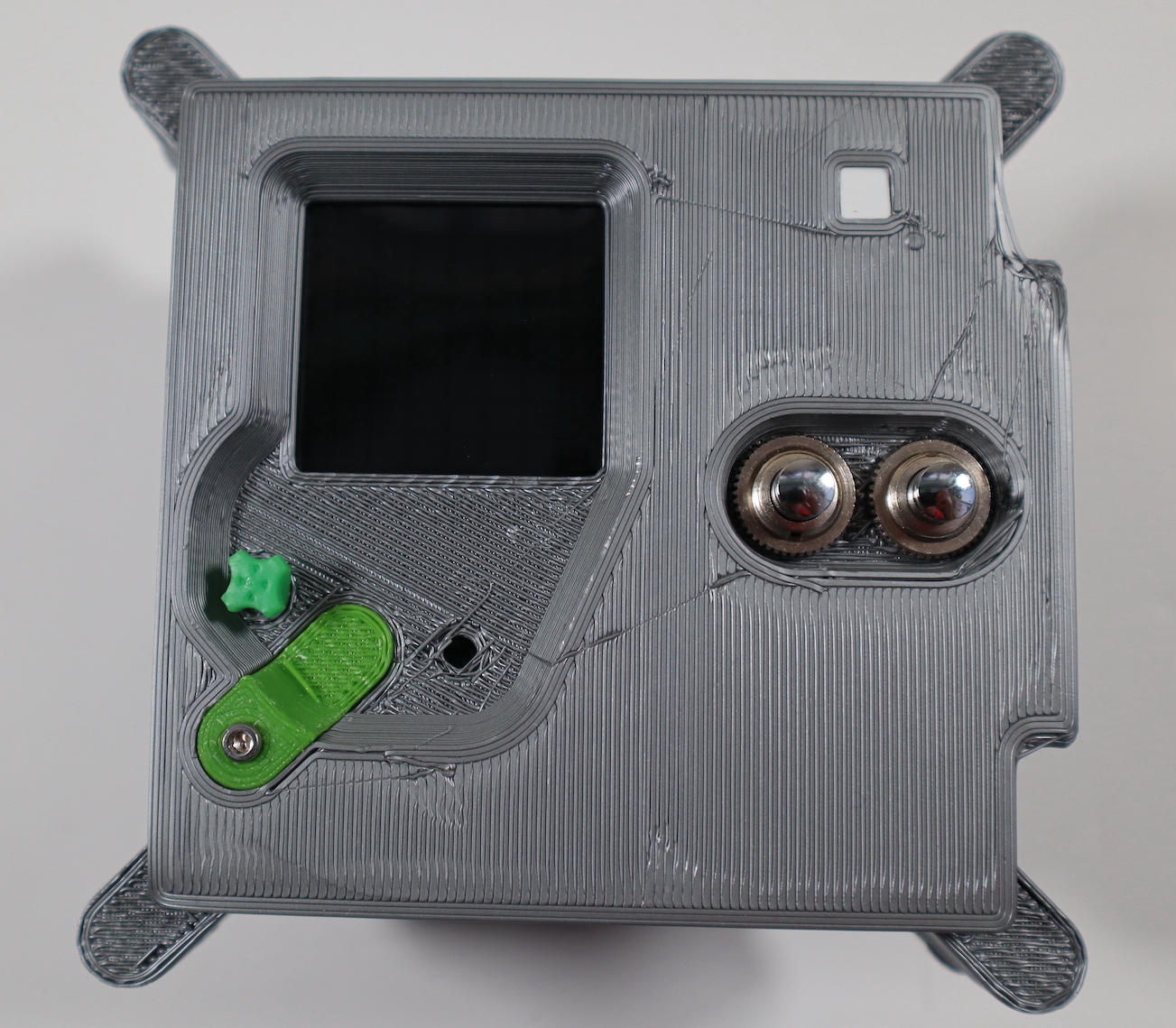

When we published the first how-to guide for 3D printing an Astro Pi case and making a working replica of the unit, it was immediately popular. We saw an exciting range of cases being produced. Some people (such as me) tried to make theirs look as similar as possible to the original aluminium Astro Pi flight unit, even using metallic spray paint to complete the effect. Others chose to go for a multicolour model, or even used glow-in-the-dark filament.

So it wasn’t a huge surprise that when we announced that we were sending upgraded Astro Pi units to the ISS — with cases again designed by Jon Wells — we received a flurry of requests for the files needed to 3D print these new cases.

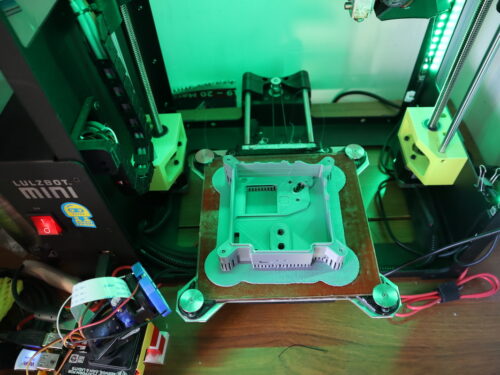

Now that the commissioning of the new Astro Pi units, which arrived on board the International Space Station in December, is complete, we’ve been able to put together an all-new how-to guide to 3D printing your own Mark II Astro Pi case and assembling your own Astro Pi unit replica at home or in the classroom.

The guide also includes step-by-step instructions for completing the internal wiring so you can construct a working Astro Pi unit. We’ve provided a custom version of the self-test software that is used on the official Astro Pis, so you can check that everything is operational.

If you’re new to 3D printing, you might like to try one of our BlocksCAD projects and practice printing a simpler design before you move on to the Astro Pi case.

Changes and improvements to the guide

We’ve made some changes to the original CAD designs to make printing the Mark II case parts and assembling a working Astro Pi replica unit as easy as possible. Unlike the STL files for the Mark I case, we’ve kept the upper and lower body components as single parts, rather than splitting each into two thinner halves. 3D printers have continued to improve since we wrote the first how-to guide. Most now have heated beds, which prevent warping, and we’ve successfully printed the Mark II parts on a range of affordable machines.

The guide contains lots of hints and tips for getting the best results. As usual with 3D printing, be prepared to make some tweaks for the particular printer that you use.

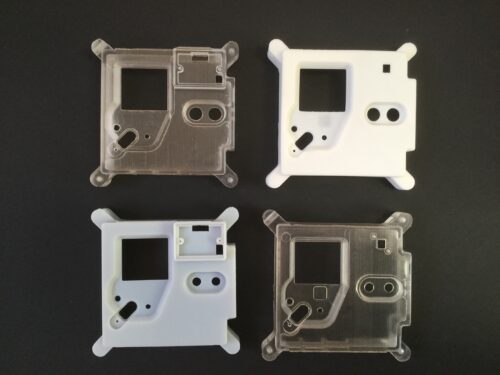

In addition to the upper and lower case parts, there are also some extra components to print this time: the colour sensor window, the joystick cap, the Raspberry Pi High Quality Camera housing, and the legs that protect the lenses and allow the Astro Pi units on the ISS to be safely placed up against the nadir window.

We’ve included files for four variants of the upper case part (see above). In order to keep costs down, the kits that we send to Astro Pi Mission Space Lab teams have a different PIR sensor from the one on the proper Astro Pi units, so we’ve produced files for upper case parts that allow that sensor to be fitted. If you’re not taking part in the European Astro Pi Challenge, this also offers a cheaper way to create an Astro Pi replica that still includes the motion detection capability.

We’ve also provided versions for the upper case part that have smaller holes for the push buttons. So, if you don’t fancy splashing out on the supremely pressable authentic buttons, you can use other colourful alternatives, which typically have a smaller diameter.

Do share photos of your 3D-printed Astro Pi cases with us by tweeting pictures of them to @astro_pi and @RaspberryPi_org.

One week left to help young people make space history with Astro Pi Mission Zero

It’s still not too late for young people to take part in this year’s Astro Pi beginners’ coding activity, Mission Zero, and suggest their ideas for the names for the two new Astro Pi units! Astro Pi Mission Zero is still open until next Friday, 18 March.

The post 3D print your own replica Astro Pi flight case appeared first on Raspberry Pi.

False Dichotomy

Post Syndicated from original https://xkcd.com/2592/

New – Amazon EC2 X2idn and X2iedn Instances for Memory-Intensive Workloads with Higher Network Bandwidth

Post Syndicated from Channy Yun original https://aws.amazon.com/blogs/aws/new-amazon-ec2-x2idn-and-x2iedn-instances-for-memory-intensive-workloads-with-higher-network-bandwidth/

In 2016, we launched Amazon EC2 X1 instances designed for large-scale and in-memory applications in the cloud. The price per GiB of RAM for X1 instances is among the lowest. X1 instances are ideal for high performance computing (HPC) applications and running in-memory databases like SAP HANA and big data processing engines such as Apache Spark or Presto.

The following year, we launched X1e instances with up to 4 TiB of memory designed to run SAP HANA and other memory-intensive, in-memory applications. These instances are certified by SAP to run production environments of the next-generation Business Suite S/4HANA, Business Suite on HANA (SoH), Business Warehouse on HANA (BW), and Data Mart Solutions on HANA on the AWS Cloud.

Today, I am happy to announce the general availability of Amazon EC2 X2idn/X2iedn instances, built on the AWS Nitro system and featuring the third-generation Intel Xeon Scalable (Ice Lake) processors with up to 50 percent higher compute price performance than comparable X1 instances. These improvements result in up to 45 percent higher SAP Application Performance Standard (SAPS) performance than comparable X1 instances.

You might have noticed that we’re now using the “i” suffix in the instance type to specify that the instances are using an Intel processor, “e” in the memory-optimized instance family to indicate extended memory, “d” with local NVMe-based SSDs that are physically connected to the host server, and “n” to support higher network bandwidth up to 100 Gbps.
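As a small illustration of the naming scheme described above, the following Python sketch splits an instance type name into its parts and looks up the suffix letters. The function name and the returned dictionary layout are made up for this example, and the lookup table only covers the four letters explained in this post.

```python
import re

# Suffix meanings as described in this post (other EC2 families use more letters).
SUFFIX_MEANING = {
    "i": "Intel processor",
    "e": "extended memory",
    "d": "local NVMe-based SSD storage",
    "n": "higher network bandwidth",
}


def describe_instance_type(instance_type: str) -> dict:
    """Split a name such as 'x2iedn.32xlarge' into family, generation, features, size."""
    match = re.fullmatch(r"([a-z]+?)(\d+)([a-z]*)\.(\w+)", instance_type)
    if match is None:
        raise ValueError(f"unrecognised instance type: {instance_type!r}")
    family, generation, suffixes, size = match.groups()
    return {
        "family": family,
        "generation": int(generation),
        # Letters not covered by the table are reported rather than guessed.
        "features": [SUFFIX_MEANING.get(s, f"unknown suffix {s!r}") for s in suffixes],
        "size": size,
    }


print(describe_instance_type("x2iedn.32xlarge"))
```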

X2idn instances enable up to 2 TiB of memory, while X2iedn instances enable up to 4 TiB of memory. X2idn and X2iedn instances also support 100 Gbps of network performance with hardware-enabled VPC encryption, and support 80 Gbps of Amazon EBS bandwidth and 260K IOPS with EBS-encrypted volumes.

| Instance Name | vCPUs | RAM (GiB) | Local NVMe SSD Storage (GB) | Network Bandwidth (Gbps) | EBS-Optimized Bandwidth (Gbps) |
| --- | --- | --- | --- | --- | --- |
| x2idn.16xlarge | 64 | 1024 | 1 x 1900 | Up to 50 | Up to 40 |
| x2idn.24xlarge | 96 | 1536 | 1 x 1425 | 75 | 60 |
| x2idn.32xlarge | 128 | 2048 | 2 x 1900 | 100 | 80 |
| x2iedn.xlarge | 4 | 128 | 1 x 118 | Up to 25 | Up to 20 |
| x2iedn.2xlarge | 8 | 256 | 1 x 237 | Up to 25 | Up to 20 |
| x2iedn.4xlarge | 16 | 512 | 1 x 475 | Up to 25 | Up to 20 |
| x2iedn.8xlarge | 32 | 1024 | 1 x 950 | 25 | 20 |
| x2iedn.16xlarge | 64 | 2048 | 1 x 1900 | 50 | 40 |
| x2iedn.24xlarge | 96 | 3072 | 2 x 1425 | 75 | 60 |
| x2iedn.32xlarge | 128 | 4096 | 2 x 1900 | 100 | 80 |

X2idn instances are ideal for running large in-memory databases such as SAP HANA. All of the X2idn instance sizes are certified by SAP for production HANA and S/4HANA workloads. In addition, X2idn instances are ideal for memory-intensive and latency-sensitive workloads such as Apache Spark and Presto, and for generating real-time analytics, processing giant graphs using Neo4j or Titan, or creating enormous caches.

X2iedn instances are optimized for applications that need a high memory-to-vCPU ratio, and they deliver the highest memory capacity per vCPU among all virtualized EC2 instance types. X2iedn is suited to running high-performance databases (such as Oracle DB and SQL Server) and in-memory workloads (such as SAP HANA and Redis). Workloads that are sensitive to per-core licensing, such as Oracle DB, benefit greatly from the higher memory per vCPU (32 GiB per vCPU) offered by X2iedn. X2iedn lets you optimize licensing costs because it provides the same memory with half the number of vCPUs compared to X2idn.
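The memory-per-vCPU claim can be checked against the table above with a few lines of Python. This is only an arithmetic sketch; the dictionary below copies four rows from the table, and the helper name is made up for this example.

```python
# (vCPUs, RAM in GiB) copied from the instance table in this post.
SIZES = {
    "x2idn.16xlarge": (64, 1024),
    "x2idn.32xlarge": (128, 2048),
    "x2iedn.8xlarge": (32, 1024),
    "x2iedn.32xlarge": (128, 4096),
}


def gib_per_vcpu(instance_type: str) -> float:
    """Return the RAM-to-vCPU ratio for one of the sizes listed above."""
    vcpus, ram_gib = SIZES[instance_type]
    return ram_gib / vcpus


for name in SIZES:
    print(f"{name}: {gib_per_vcpu(name):.0f} GiB per vCPU")

# Both of these sizes offer 1024 GiB of RAM, but X2iedn needs half the vCPUs.
same_memory = SIZES["x2idn.16xlarge"][1] == SIZES["x2iedn.8xlarge"][1]
vcpu_ratio = SIZES["x2iedn.8xlarge"][0] / SIZES["x2idn.16xlarge"][0]
print(same_memory, vcpu_ratio)
```

For a workload licensed per core, the second comparison is the relevant one: the same 1024 GiB is available on 32 vCPUs instead of 64.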

These instances offer the same amount of local storage as in X1/X1e, up to 3.8 TB, but the local storage in X2idn/X2iedn is NVMe-based, which will offer an order of magnitude lower latency compared to SATA SSDs in X1/X1e.

Things to Know

Here are some fun facts about the X2idn and X2iedn instances:

Optimizing CPU—You can disable Intel Hyper-Threading Technology for workloads that perform well with single-threaded CPUs, like some HPC applications.

NUMA—You can make use of non-uniform memory access (NUMA) on X2idn and X2iedn instances. This advanced feature is worth exploring if you have a deep understanding of your application’s memory access patterns.

Available Now

X2idn instances are now available in the US East (N. Virginia), Asia Pacific (Mumbai, Singapore, Tokyo), and Europe (Frankfurt, Ireland) Regions.

X2iedn instances are now available in the US East (Ohio, N. Virginia), US West (Oregon), Asia Pacific (Singapore, Tokyo), and Europe (Frankfurt, Ireland) Regions.

You can use On-Demand Instances, Reserved Instances, Savings Plan, and Spot Instances. Dedicated Instances and Dedicated Hosts are also available.

To learn more, visit our EC2 X2i Instances page, and please send feedback to AWS re:Post for EC2 or through your usual AWS Support contacts.

– Channy

The Experiment Podcast: A Jewish Family’s Debt to Ukraine

Post Syndicated from The Atlantic original https://www.youtube.com/watch?v=Pna0ty9CCNo

7Rapid Questions: Growing From BDR to Commercial Sales Manager With Maria Loughrey

Post Syndicated from Rapid7 original https://blog.rapid7.com/2022/03/10/7rapid-questions-growing-from-bdr-to-commercial-sales-manager-with-maria-loughrey/

Welcome back to 7Rapid Questions, our blog series where we hear about the great work happening at Rapid7 from the people who are doing it across our global offices. For this installment, we sat down with Maria Loughrey, Commercial Sales Manager for the UK and Ireland at our Reading, UK office.

What did you want to be when you grew up?

After a brief stint of wanting to go to America to study law at Harvard (thank you, “Legally Blonde”), I ended up studying psychology and wanted to become a forensic psychologist.

So, how did you end up in cybersecurity?

I was approached by a recruitment partner of Rapid7, which prompted me to research what cybersecurity was all about. I found that not only is it a super interesting topic, but people are really passionate about it. It was evident how much Rapid7 cared about their customers’ security and, in turn, how much customers respected them as a vendor. It took a bit of a leap of faith to step away from my career plan and start working for a company I knew very little about, but I’m so glad I did!

What has your career journey been like at Rapid7?

Since the aforementioned leap of faith, Rapid7 haven’t stopped putting their faith in me in return. I started in the business development team and then got promoted into a sales overlay role supporting the Account Executive team. I’ve been in sales ever since — starting with SMB customers, then mid-market accounts, and more recently covering the Enterprise market in the UK.

Last year, I became a team lead alongside my Enterprise AE role, and then at the beginning of this year, I was promoted into a management position to support the Commercial Sales team. The support and belief I have received from Rapid7 and my management team over the last 8 years have been truly humbling.

What has been your proudest moment?

It was bittersweet moving into a management position this year, as it meant not working directly with customers as much, but when I introduced new team members who would be stepping into my role, so many customers had such lovely things to say and let me know that I’d be missed. It’s amazing to hear that you’ve had such a positive impact.

What is a fun fact some people might not know about you?

I have a very mild form of Tourette syndrome, which causes people to have “tics.”

Which of Rapid7’s core values do you embody the most?

Bring You. This is SUCH a difficult question, but I chose Bring You because not only do I strive to be my most authentic self at work, but I also think it’s incredibly important for everyone to bring their own perspectives and style. Businesses thrive on diversity of mindset. Without this, creativity becomes stagnant and growth slows. So, Bring You.

What three words would you use to describe the culture at Rapid7?

Understanding, inclusive, genuine.

Want to join Maria and her team? We’re hiring! Browse our open roles at Rapid7 here.

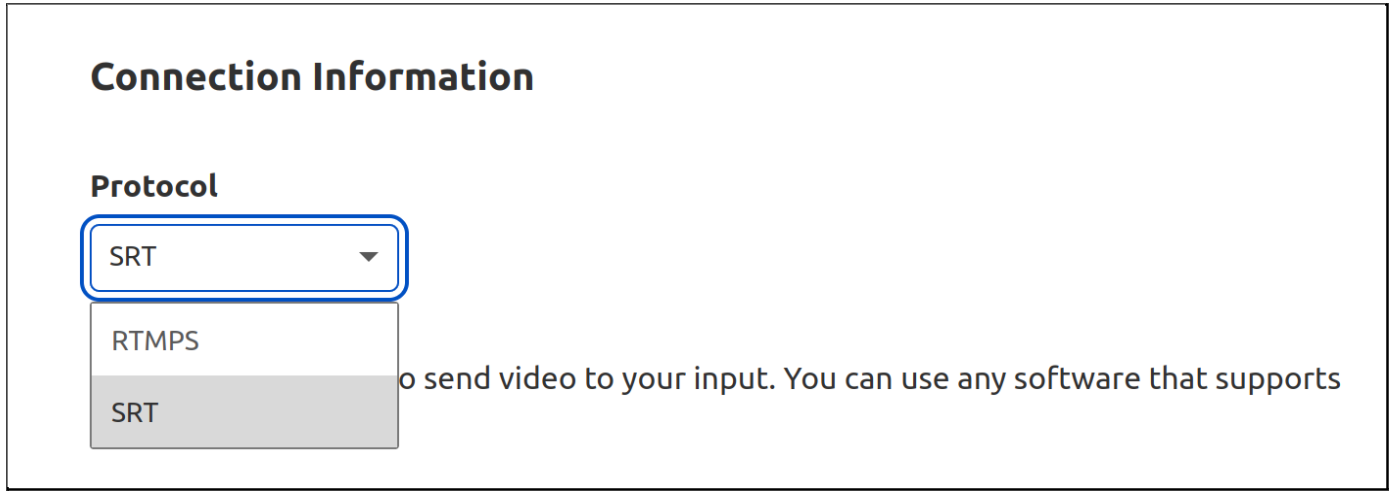

Stream now supports SRT as a drop-in replacement for RTMP

Post Syndicated from Renan Dincer original https://blog.cloudflare.com/stream-now-supports-srt-as-a-drop-in-replacement-for-rtmp/

SRT is a modern live video transport protocol. It features many improvements over the incumbent video ingest protocol, RTMP, such as lower latency and better resilience against unpredictable network conditions on the public Internet. SRT supports newer video codecs and makes it easier to use accessibility features such as captions and multiple audio tracks. While RTMP development has been abandoned since at least 2012, SRT development is maintained by an active community of developers.

We don’t see RTMP use going down anytime soon, but we can do something so authors of new broadcasting software, as well as video streaming platforms, can have an alternative.

Starting today, in open beta, you can use Stream Connect as a gateway to translate SRT to RTMP or RTMP to SRT with your existing applications. This way, you can get the last-mile reliability benefits of SRT and can continue to use the RTMP service of your choice. It’s priced at $1 per 1,000 minutes, regardless of video encoding parameters.

You can also use SRT to go live on Stream Live, our end-to-end live streaming service to get HLS and DASH manifest URLs from your SRT input, and do simulcasting to multiple platforms whether you use SRT or RTMP.

Stream’s SRT and RTMP implementation supports adding or removing RTMP or SRT outputs without having to restart the source stream, scales to tens of thousands of concurrent video streams per customer and runs on every Cloudflare server in every Cloudflare location around the world.

Go live like it’s 2022

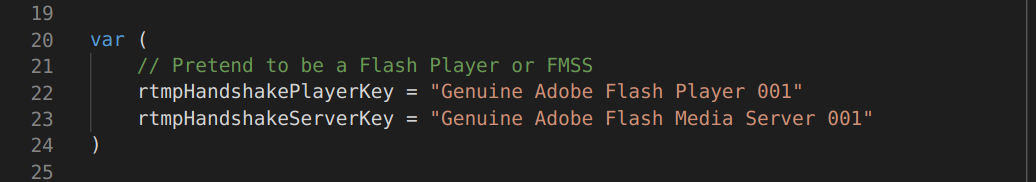

When we first started developing live video features on Cloudflare Stream early last year, we had to decide whether to reimplement an old and unmaintained protocol, RTMP, or focus on the future and start off fresh by using a modern protocol. If we launched with RTMP, we would get instant compatibility with existing clients but would give up features that would greatly improve performance and reliability. Reimplementing RTMP would also mean we’d have to handle the complicated state machine that powers it, demux the FLV container, parse AMF and even write a server that sends the text “Genuine Adobe Flash Media Server 001” as part of the RTMP handshake.

Even though there were a few new protocols to evaluate and choose from in this project, the dominance of RTMP was still overwhelming. We decided to implement RTMP but really don’t want anybody else to do it again.

Eliminate head of line blocking

A common weakness of TCP when it comes to low latency video transfer is head of line blocking. Imagine a camera app sending video to a live streaming server. The camera puts every captured frame into packets and sends them over a reliable TCP connection. Regardless of the diverse set of Internet infrastructure it may be passing through, TCP makes sure all packets get delivered in order (so that your video frames don’t jump around) and reliably (so you don’t see any parts of the frame missing). However, this type of connection comes at a cost. If a single packet is dropped or lost somewhere in the network between the two endpoints, as often happens on mobile networks or Wi-Fi, the entire TCP connection is brought to a halt while the lost packet is detected and retransmitted. This means that if one frame is suddenly missing, everything that comes after the lost video frame needs to wait. This is known as head of line blocking.

RTMP experiences head of line blocking because it uses a TCP connection. Since SRT is a UDP-based protocol, it does not experience head of line blocking. SRT features packet recovery that is aware of the low-latency and high reliability requirements of video. Similar to QUIC, it achieves this by implementing its own logic for a reliable connection on top of UDP, rather than relying on TCP.

SRT solves this problem by waiting only a little bit, because it knows that losing a single frame won’t be noticeable by the end viewer in the majority of cases. The video moves on if the frame is not re-transmitted right away. SRT really shines when the broadcaster is streaming with less-than-stellar Internet connectivity. Using SRT means fewer buffering events, lower latency and a better overall viewing experience for your viewers.
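To make the difference concrete, here is a toy Python model of the two delivery strategies. It is not SRT's actual recovery logic (which also retransmits packets within its latency window); it only contrasts strict in-order delivery with a fixed latency budget after which a late frame is skipped. All timings and function names are assumptions chosen for illustration.

```python
from typing import List, Optional, Set


def arrival_times(n_frames: int, interval_ms: int, delay_ms: int,
                  lost: Set[int], retransmit_ms: int) -> List[int]:
    """Arrival time of each frame; frames in `lost` only arrive after a retransmission."""
    return [
        i * interval_ms + delay_ms + (retransmit_ms if i in lost else 0)
        for i in range(n_frames)
    ]


def in_order_delivery(arrivals: List[int]) -> List[int]:
    """TCP-like: a frame reaches the app only once every earlier frame has arrived."""
    delivered, latest = [], 0
    for t in arrivals:
        latest = max(latest, t)
        delivered.append(latest)
    return delivered


def deadline_delivery(arrivals: List[int], interval_ms: int,
                      budget_ms: int) -> List[Optional[int]]:
    """Deadline-based: frame i plays at i * interval + budget, or is skipped (None) if late."""
    played: List[Optional[int]] = []
    for i, t in enumerate(arrivals):
        deadline = i * interval_ms + budget_ms
        played.append(deadline if t <= deadline else None)
    return played


# Six frames at roughly 30 fps, 50 ms network delay, frame 2 lost and resent 200 ms later.
arrivals = arrival_times(6, interval_ms=33, delay_ms=50, lost={2}, retransmit_ms=200)
tcp_like = in_order_delivery(arrivals)
srt_like = deadline_delivery(arrivals, interval_ms=33, budget_ms=120)
print(tcp_like)  # frames 3, 4 and 5 are all held back behind frame 2
print(srt_like)  # only frame 2 is skipped; later frames keep their schedule
```

In the in-order case, one lost frame delays everything behind it. In the deadline case, the viewer loses a single frame and playback carries on.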

RTMP to SRT and SRT to RTMP

Comparing SRT and RTMP today may not be that useful for the most pragmatic app developers. Perhaps it’s just another protocol that does the same thing for you. It’s important to remember that even though there might not be a big improvement for you today, tomorrow there will be new video use cases that will benefit from a UDP-based protocol that avoids head of line blocking, supports forward error correction and modern codecs beyond H.264 for high-resolution video.

Switching protocols requires effort from both software that sends video and software that receives video. This is a frustrating chicken-or-the-egg problem. A video streaming service won’t implement a protocol not in use and clients won’t implement a protocol not supported by streaming services.

Starting today, you can use Stream Connect to translate between protocols for you and deprecate RTMP without having to wait for video platforms to catch up. This way, you can use your favorite live video streaming service with the protocol of your choice.

Stream is useful if you’re a live streaming platform too! You can start using SRT while maintaining compatibility with existing RTMP clients. When creating a video service, you can have Stream Connect terminate RTMP for you and send SRT to the destination you intend instead.

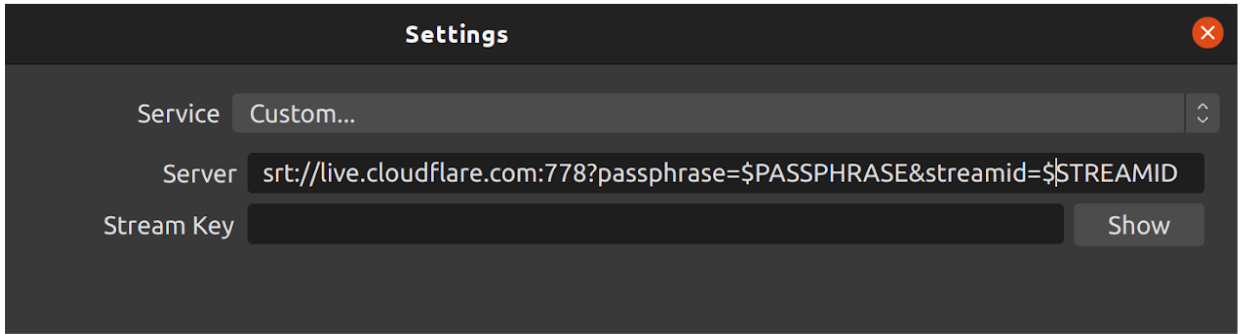

SRT is already implemented in software like FFmpeg and OBS. Here’s how to get it working from OBS:

Get started by signing up for Cloudflare Stream and adding a live input.

Protocol-agnostic Live Streaming

We’re working on adding support for more media protocols in addition to RTMP and SRT. What would you like to see next? Let us know! If this post vibes with you, come work with the engineers building with video and more at Cloudflare!

How Cloudflare verifies the code WhatsApp Web serves to users

Post Syndicated from Matt Silverlock original https://blog.cloudflare.com/cloudflare-verifies-code-whatsapp-web-serves-users/

How do you know the code your web browser downloads when visiting a website is the code the website intended you to run? In contrast to a mobile app downloaded from a trusted app store, the web doesn’t provide the same degree of assurance that the code hasn’t been tampered with. Today, we’re excited to be partnering with WhatsApp to provide a system that assures users that the code run when they visit WhatsApp on the web is the code that WhatsApp intended.

With WhatsApp usage in the browser growing, and the increasing number of at-risk users — including journalists, activists, and human rights defenders — WhatsApp wanted to take steps to provide assurances to browser-based users. They approached us to help dramatically raise the bar for third-parties looking to compromise or otherwise tamper with the code responsible for end-to-end encryption of messages between WhatsApp users.

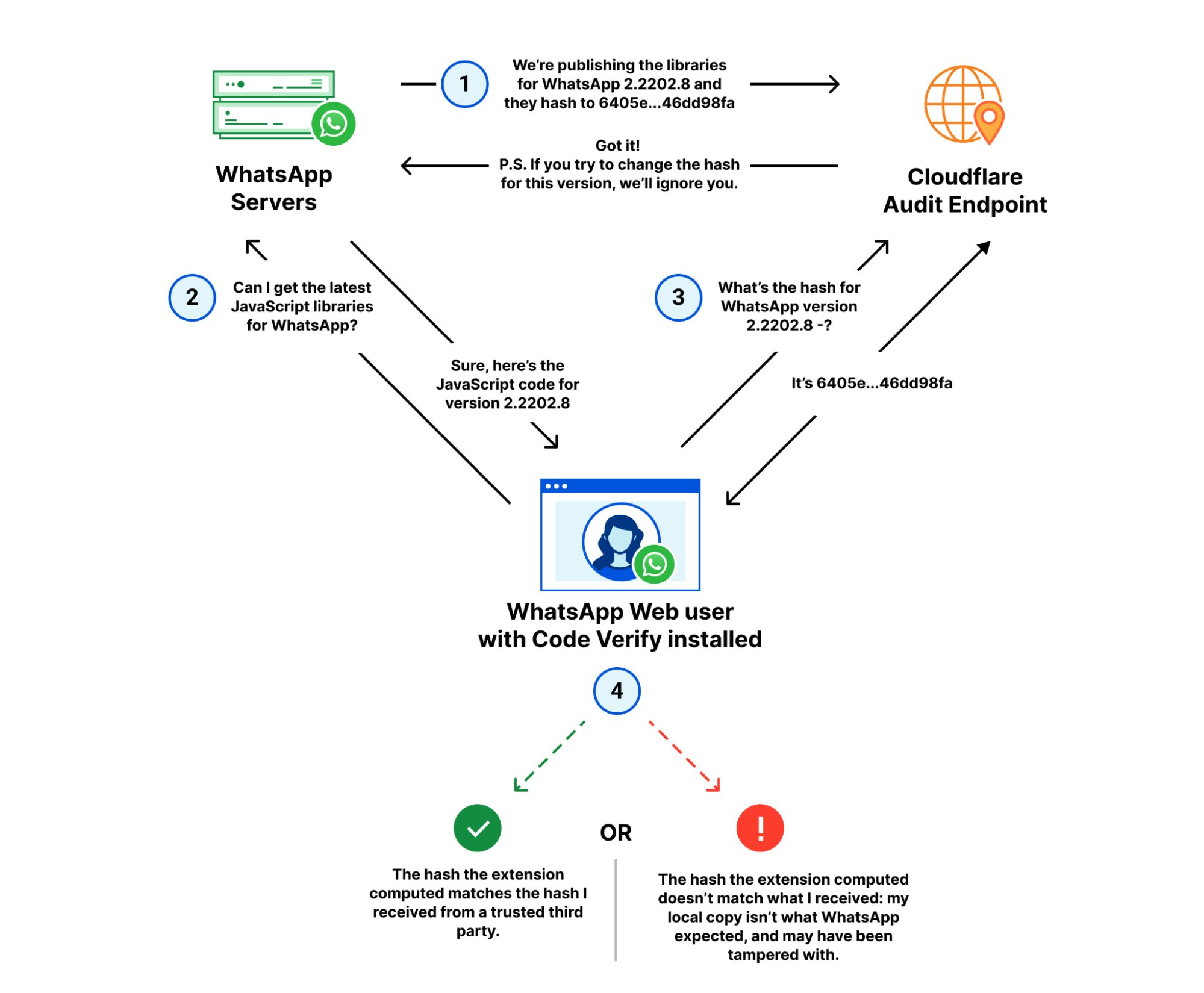

So how will this work? Cloudflare holds a hash of the code that WhatsApp users should be running. When users run WhatsApp in their browser, the WhatsApp Code Verify extension compares a hash of that code that is executing in their browser with the hash that Cloudflare has — enabling them to easily see whether the code that is executing is the code that should be.

The idea itself — comparing hashes to detect tampering or even corrupted files — isn’t new, but automating it, deploying it at scale, and making sure it “just works” for WhatsApp users is. Given the reach of WhatsApp and the implicit trust put into Cloudflare, we want to provide more detail on how this system actually works from a technical perspective.

Before we dive in, there’s one important thing to explicitly note: Cloudflare is providing a trusted audit endpoint to support Code Verify. Messages, chats or other traffic between WhatsApp users are never sent to Cloudflare; those stay private and end-to-end encrypted. Messages or media do not traverse Cloudflare’s network as part of this system, an important property from Cloudflare’s perspective in our role as a trusted third party.

Making verification easier

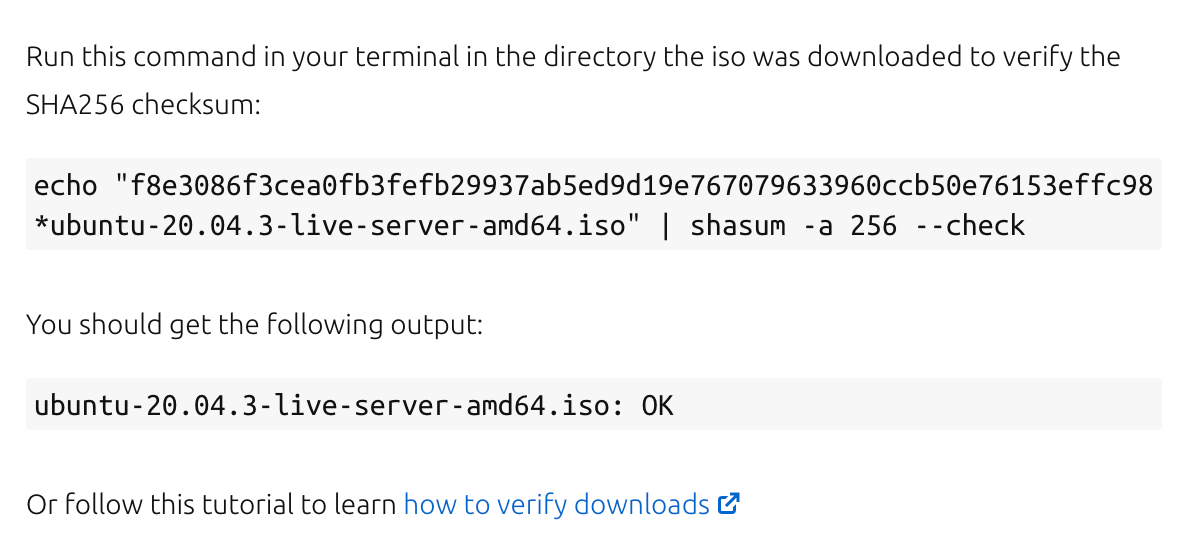

Hark back to 2003: Fedora, a popular Linux distribution based on Red Hat, has just been launched. You’re keen to download it, but want to make sure you have the “real” Fedora, and that the download isn’t a “fake” version that siphons off your passwords or logs your keystrokes. You head to the download page, kick off the download, and see an MD5 hash (considered secure at the time) next to the download. After the download is complete, you run md5 fedora-download.iso and compare the hash output to the hash on the page. They match, life is good, and you proceed to installing Fedora onto your machine.

But hold on a second: if the same website providing the download is also providing the hash, couldn’t a malicious actor replace both the download and the hash with their own values? The md5 check we ran above would still pass, but there’s no guarantee that we have the “real” (untampered) version of the software we intended to download.
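The weakness is easy to demonstrate with a short Python sketch. The byte strings below stand in for the ISO file, and the helper names are made up for this example; MD5 is used only because it matches the 2003 scenario, not because it is still considered secure.

```python
import hashlib


def md5_hex(data: bytes) -> str:
    """Hex digest, comparable to what a command-line md5 tool would print for a file."""
    return hashlib.md5(data).hexdigest()


def download_is_verified(downloaded: bytes, published_hash: str) -> bool:
    """The manual check: hash the download and compare it to the hash on the page."""
    return md5_hex(downloaded) == published_hash


genuine = b"genuine installer image"
tampered = b"installer image with a keylogger"

# Case 1: the attacker swaps the download but not the published hash.
caught = not download_is_verified(tampered, md5_hex(genuine))

# Case 2: the attacker controls the page, so they swap the hash as well.
missed = download_is_verified(tampered, md5_hex(tampered))

print(caught, missed)  # the check only helps when the hash comes from somewhere else
```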

There are other approaches that attempt to improve upon this, such as providing cryptographic signatures that users can verify were made with “well known” public keys hosted elsewhere. Hosting those signatures (or “hashes”) with a trusted third party dramatically raises the bar when it comes to tampering, but now we require the user to know who to trust, and require them to learn tools like GnuPG. That doesn’t help us trust and verify software at the scale of the modern Internet.

This is where the Code Verify extension and Cloudflare come in. The Code Verify extension, published by Meta Open Source, automates this: locally computing the cryptographic hash of the libraries used by WhatsApp Web and comparing that hash to one from a trusted third-party source (Cloudflare, in this case).

We’ve illustrated this to make how it works a little clearer, showing how each of the three parties — the user, WhatsApp and Cloudflare — interact with each other.

Broken down, there are four major steps to verifying the code hasn’t been tampered with:

- WhatsApp publishes the latest version of their JavaScript libraries to their servers, and the corresponding hash for that version to Cloudflare’s audit endpoint.

- A WhatsApp web client fetches the latest libraries from WhatsApp.

- The Code Verify browser extension subsequently fetches the hash for that version from Cloudflare over a separate, secure connection.

- Code Verify compares the “known good” hash from Cloudflare with the hash of the libraries it locally computed.

If the hashes match, as they should under almost any circumstance, the code is “verified” from the perspective of the extension. If the hashes don’t match, it indicates that the code running in the user’s browser is different from the code WhatsApp intended to run in all its users’ browsers.
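The four steps above can be sketched in Python as follows. This is a simplified model, not the Code Verify extension's implementation: the `AuditEndpoint` class, the version keys and the choice of SHA-256 are all assumptions made for illustration, since this post does not specify them.

```python
import hashlib
from typing import Dict, Optional


def sha256_hex(data: bytes) -> str:
    return hashlib.sha256(data).hexdigest()


class AuditEndpoint:
    """Hypothetical in-memory stand-in for the trusted third party's hash store."""

    def __init__(self) -> None:
        self._hashes: Dict[str, str] = {}

    def publish(self, version: str, digest: str) -> None:
        self._hashes[version] = digest

    def lookup(self, version: str) -> Optional[str]:
        return self._hashes.get(version)


def code_is_verified(version: str, served_code: bytes, audit: AuditEndpoint) -> bool:
    """Steps 3 and 4: fetch the known-good hash, then compare it to a local hash."""
    expected = audit.lookup(version)
    return expected is not None and sha256_hex(served_code) == expected


# Step 1: the publisher registers the hash of a release with the third party.
audit = AuditEndpoint()
release = b"console.log('hello');"
audit.publish("v1", sha256_hex(release))

# Step 2 onwards: the client receives code, and the check runs locally.
ok = code_is_verified("v1", release, audit)
tampered = code_is_verified("v1", release + b"steal();", audit)
print(ok, tampered)
```

The important design point is that the hash and the code travel over separate channels, so an attacker would need to compromise both to go unnoticed.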

Security needs to be convenient

It’s this process, and the fact that it is automated on behalf of the user, that helps provide transparency in a scalable way. If users had to manually fetch, compute and compare the hashes themselves, detecting tampering would only be for the small fraction of technical users. For a service as large as WhatsApp, that wouldn’t have been a particularly accessible or user-friendly approach.

This approach also has parallels to a number of technologies in use today. One of them is Subresource Integrity in web browsers: when you fetch a third-party asset (such as a script or stylesheet), the browser validates that the returned asset matches the hash described. If it doesn’t, it refuses to load that asset, preventing potentially compromised scripts from siphoning off user data. Another is Certificate Transparency and the related Binary Transparency projects. Both of these provide publicly auditable transparency for critical assets, including WebPKI certificates and other binary blobs. The system described in this post doesn’t scale to arbitrary assets – yet – but we are exploring ways in which we could extend this offering for something more general and usable like Binary Transparency.
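For comparison, a Subresource Integrity value is the hash algorithm name, a hyphen, and the base64-encoded digest of the asset. The Python sketch below computes one and repeats the comparison a browser would make. The function names are made up for this example, and real `integrity` attributes may list several hashes, which this sketch does not handle.

```python
import base64
import hashlib

SRI_ALGORITHMS = {"sha256", "sha384", "sha512"}


def sri_value(content: bytes, algorithm: str = "sha384") -> str:
    """Build an integrity string of the form '<algorithm>-<base64 digest>'."""
    if algorithm not in SRI_ALGORITHMS:
        raise ValueError(f"unsupported algorithm: {algorithm}")
    digest = hashlib.new(algorithm, content).digest()
    return f"{algorithm}-{base64.b64encode(digest).decode('ascii')}"


def matches_integrity(content: bytes, integrity: str) -> bool:
    """Recompute the hash of the fetched asset and compare it to the declared value."""
    algorithm, _, _ = integrity.partition("-")
    return sri_value(content, algorithm) == integrity


script = b"alert('hi');"
declared = sri_value(script)
print(declared)
print(matches_integrity(script, declared))                # asset unchanged
print(matches_integrity(script + b"evil();", declared))   # asset modified
```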

Our collaboration with the team at WhatsApp is just the beginning of the work we’re doing to help improve privacy and security on the web. We’re aiming to help other organizations verify the code delivered to users is the code they’re meant to be running. Protecting Internet users at scale and enabling privacy are core tenets of what we do at Cloudflare, and we look forward to continuing this work throughout 2022.

How to set up federated single sign-on to AWS using Google Workspace

Post Syndicated from Wei Chen original https://aws.amazon.com/blogs/security/how-to-set-up-federated-single-sign-on-to-aws-using-google-workspace/

Organizations who want to federate their external identity provider (IdP) to AWS will typically do it through AWS Single Sign-On (AWS SSO), AWS Identity and Access Management (IAM), or use both. With AWS SSO, you configure federation once and manage access to all of your AWS accounts centrally. With AWS IAM, you configure federation to each AWS account, and manage access individually for each account. AWS SSO supports identity synchronization through the System for Cross-domain Identity Management (SCIM) v2.0 for several identity providers. For IdPs not currently supported, you can provision users manually. Otherwise, you can choose to federate to AWS from Google Workspace through IAM federation, which this post will cover below.

Google Workspace offers a single sign-on service based on the Security Assertion Markup Language (SAML) 2.0. Users can use this service to access your AWS resources by using their existing Google credentials. Users to whom you grant access will see an additional SAML app in their Google Workspace console. When your users choose this SAML app, they will be redirected to the AWS Management Console.

Solution Overview

In this solution, you will create a SAML identity provider in IAM. This establishes a trusted communication channel over which your Google IdP can securely pass user authentication information, so that your Google Workspace users can access the AWS Management Console. You, as the AWS administrator, delegate responsibility for user authentication to a trusted IdP, in this case Google Workspace. Google Workspace uses SAML 2.0 messages to communicate user authentication information between Google and your AWS account. The information contained within the SAML 2.0 messages allows an IAM role to grant the federated user permissions to sign in to the AWS Management Console and access your AWS resources. The IAM policy attached to the role they select determines which permissions the federated user has in the console.

Figure 1: Login process for IAM federation

Figure 1 illustrates the login process for IAM federation. From the federated user’s perspective, this process happens transparently: the user starts at the Google Workspace portal and ends up at the AWS Management Console, without having to supply yet another user name and password.

- The user begins by browsing to your organization’s portal and selects the option to go to the AWS Management Console. In your organization, the portal is typically a function of your IdP that handles the exchange of trust between your organization and AWS. In Google Workspace, you navigate to https://myaccount.google.com/ and select the nine dots icon on the top right corner. This will show you a list of apps, one of which will log you in to AWS. This blog post will show you how to configure this custom app.

Figure 2: Google Account page

- The portal verifies the user’s identity in your organization.

- The portal generates a SAML authentication response that includes assertions that identify the user and include attributes about the user. The portal sends this response to the client browser. Although not discussed here, you can also configure your IdP to include a SAML assertion attribute called SessionDuration that specifies how long the console session is valid. You can also configure the IdP to pass attributes as session tags.

- The client browser is redirected to the AWS single sign-on endpoint and posts the SAML assertion.

- The endpoint requests temporary security credentials on behalf of the user, and creates a console sign-in URL that uses those credentials.

- AWS sends the sign-in URL back to the client as a redirect.

- The client browser is redirected to the AWS Management Console. If the SAML authentication response includes attributes that map to multiple IAM roles, the user is first prompted to select the role for accessing the console.

The list below is a high-level view of the specific step-by-step procedures needed to set up federated single sign-on access via Google Workspace.

The setup

Follow these top-level steps to set up federated single sign-on to your AWS resources by using Google Workspace:

- Download the Google identity provider (IdP) information.

- Create the IAM SAML identity provider in your AWS account.

- Create roles for your third-party identity provider.

- Assign the user’s role in Google Workspace.

- Set up Google Workspace as a SAML identity provider (IdP) for AWS.

- Test the integration between Google Workspace and AWS IAM.

- Roll out to a wider user base.

Detailed procedures for each of these steps compose the remainder of this blog post.

Step 1. Download the Google identity provider (IdP) information

First, let’s get the SAML metadata that contains essential information to enable your AWS account to authenticate the IdP and locate the necessary communication endpoint locations:

- Log in to the Google Workspace Admin console

- From the Admin console Home page, select Security > Settings > Set up single sign-on (SSO) with Google as SAML Identity Provider (IdP).

Figure 3: Accessing the “single sign-on for SAML applications” setting

- Choose Download Metadata under IdP metadata.

Figure 4: The “SSO with Google as SAML IdP” page

Step 2. Create the IAM SAML identity provider in your account

Now, create an IAM IdP for Google Workspace in order to establish the trust relationship between Google Workspace and your AWS account. The IAM IdP you create is an entity within your AWS account that describes the external IdP service whose users you will configure to assume IAM roles.

- Sign in to the AWS Management Console and open the IAM console at https://console.aws.amazon.com/iam/.

- In the navigation pane, choose Identity providers and then choose Add provider.

- For Configure provider, choose SAML.

- Type a name for the identity provider (such as GoogleWorkspace).

- For Metadata document, select Choose file then specify the SAML metadata document that you downloaded in Step 1–c.

- Verify the information that you have provided. When you are done, choose Add provider.

Figure 5: Adding an Identity provider

- Document the Amazon Resource Name (ARN) by viewing the identity provider you just created in step f. The ARN should look similar to this:

arn:aws:iam::123456789012:saml-provider/GoogleWorkspace

Step 3. Create roles for your third-party Identity Provider

For users accessing the AWS Management Console, the IAM role that the user assumes allows access to resources within your AWS account. The role is where you define what you allow a federated user to do after they sign in.

- To create an IAM role, go to the AWS IAM console. Select Roles > Create role.

- Choose the SAML 2.0 federation role type.

- For SAML Provider, select the provider which you created in Step 2.

- Choose Allow programmatic and AWS Management Console access to create a role that can be assumed programmatically and from the AWS Management Console.

- Review your SAML 2.0 trust information and then choose Next: Permissions.

Figure 6: Reviewing your SAML 2.0 trust information

GoogleSAMLPowerUserRole:

- For this walkthrough, you are going to create two roles that can be assumed by SAML 2.0 federation. For GoogleSAMLPowerUserRole, you will attach the PowerUserAccess AWS managed policy. This policy provides full access to AWS services and resources, but does not allow management of users and groups. Choose Filter policies, then select AWS managed – job function from the dropdown. This will show a list of AWS managed policies designed around specific job functions.

Figure 7: Selecting the AWS managed job function

- To attach the policy, select PowerUserAccess. Then choose Next: Tags, then Next: Review.

Figure 8: Attaching the PowerUserAccess policy to your role

- Finally, choose Create role to finalize creation of your role.

Figure 9: Creating your role

GoogleSAMLViewOnlyRole

Repeat steps a to g for the GoogleSAMLViewOnlyRole, attaching the ViewOnlyAccess AWS managed policy.

Figure 10: Creating the GoogleSAMLViewOnlyRole

Figure 11: Attaching the ViewOnlyAccess permissions policy

- Document the ARN of both roles. The ARN should be similar to

arn:aws:iam::123456789012:role/GoogleSAMLPowerUserRole and

arn:aws:iam::123456789012:role/GoogleSAMLViewOnlyAccessRole.
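The SAML 2.0 trust information reviewed in Step 3 is stored as the role's trust policy. The Python sketch below builds a policy of the shape commonly used for SAML federation to the console. Treat it as an assumption about what the console generates rather than an exact copy, and compare it with the trust relationship shown on your own role. The provider ARN is the example value from Step 2.

```python
import json


def saml_trust_policy(provider_arn: str) -> dict:
    """Trust policy letting principals federated through `provider_arn` assume the role."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Effect": "Allow",
                "Principal": {"Federated": provider_arn},
                "Action": "sts:AssumeRoleWithSAML",
                # Restricts the assertion's audience to the AWS sign-in endpoint.
                "Condition": {
                    "StringEquals": {"SAML:aud": "https://signin.aws.amazon.com/saml"}
                },
            }
        ],
    }


policy = saml_trust_policy("arn:aws:iam::123456789012:saml-provider/GoogleWorkspace")
print(json.dumps(policy, indent=2))
```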

Step 4. Assign the user’s role in Google Workspace

Here you will specify the role or roles that this user can assume in AWS.

- Log in to the Google Admin console.

- From the Admin console Home page, go to Directory > Users, select Manage custom attributes from the More dropdown, and then choose Add Custom Attribute.

- Configure the custom attribute as follows:

Category: AWS

Description: Amazon Web Services Role Mapping

For Custom fields, enter the following values:

Name: AssumeRoleWithSaml

Info type: Text

Visibility: Visible to user and admin

No. of values: Multi-value

- Choose Add. The new category should appear in the Manage user attributes page.

Figure 12: Adding the custom attribute

- Navigate to Users, and find the user you want to allow to federate into AWS. Select the user’s name to open their account page, then choose User Information.

- Select the custom attribute you just created, named AWS. Add two rows, each of which will include the values you recorded earlier, using the format below for each AssumeRoleWithSaml row.

Row 1:

arn:aws:iam::123456789012:role/GoogleSAMLPowerUserRole,arn:aws:iam::123456789012:saml-provider/GoogleWorkspace

Row 2:

arn:aws:iam::123456789012:role/GoogleSAMLViewOnlyRole,arn:aws:iam::123456789012:saml-provider/GoogleWorkspace

Each AssumeRoleWithSaml value is constructed as the role ARN (from Step 3-h) + “,” + the identity provider ARN (from Step 2-g). This value is passed as the SAML attribute value for the attribute named https://aws.amazon.com/SAML/Attributes/Role. The final result will look similar to the following:

Figure 13: Adding the roles that the user can assume
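The row format described above can be sketched in a few lines of Python. The function name assume_role_with_saml_value is hypothetical and the ARNs are the example values from this walkthrough. The whitespace check is an added convenience, because a stray space inside an ARN is easy to introduce when copying and pasting.

```python
def assume_role_with_saml_value(role_arn, provider_arn):
    """Join a role ARN and an identity provider ARN into one attribute row.

    Hypothetical helper: it only does basic sanity checks and does not
    verify that the role or provider actually exists.
    """
    for arn in (role_arn, provider_arn):
        if not arn.startswith("arn:aws:iam::"):
            raise ValueError(f"not an IAM ARN: {arn!r}")
        if any(ch.isspace() for ch in arn):
            raise ValueError(f"ARN contains whitespace: {arn!r}")
    # Role ARN first, then a comma, then the identity provider ARN.
    return f"{role_arn},{provider_arn}"


row_1 = assume_role_with_saml_value(
    "arn:aws:iam::123456789012:role/GoogleSAMLPowerUserRole",
    "arn:aws:iam::123456789012:saml-provider/GoogleWorkspace",
)
print(row_1)
```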

Step 5. Set up Google Workspace as a SAML identity provider (IdP) for AWS

Now you’ll set up the SAML app in your Google Workspace account. This includes adding the SAML attributes that the AWS Management Console expects in order to allow a SAML-based authentication to take place.

- Log in to the Google Admin console.

- From the Admin console Home page, go to Apps > Web and mobile apps.

- Choose Add custom SAML app from the Add App dropdown.

- Enter AWS Single-Account Access for App name, optionally upload an App icon to identify your SAML application, and select Continue.

Figure 14: Naming the custom SAML app and setting the icon

- Fill in the following values:

ACS URL: https://signin.aws.amazon.com/saml

Entity ID: urn:amazon:webservices

Name ID format: EMAIL

Name ID: Basic Information > Primary email

Note: Your primary email will become your role’s AWS session name.

- Choose CONTINUE.

Figure 15: Adding the custom SAML app

- AWS requires the IdP to issue a SAML assertion with some mandatory attributes (known as claims). The AWS documentation explains how to configure the SAML assertion. In short, you need to create an assertion with the following:

- An attribute of name https://aws.amazon.com/SAML/Attributes/Role. This element contains one or more AttributeValue elements that list the IAM identity provider and role to which the user is mapped by your IdP. The IAM role and IAM identity provider are specified as a comma-delimited pair of ARNs in the same format as the RoleArn and PrincipalArn parameters that are passed to AssumeRoleWithSAML.

- An attribute of name https://aws.amazon.com/SAML/Attributes/RoleSessionName (this is just a definition of type, not an actual URL) with a string value. This is the federated user’s role session name in AWS.

- A name identifier (NameId) that is used to identify the subject of a SAML assertion.

| Google Directory attributes | App attributes |
| --- | --- |
| AWS > AssumeRoleWithSaml | https://aws.amazon.com/SAML/Attributes/Role |
| Basic Information > Primary email | https://aws.amazon.com/SAML/Attributes/RoleSessionName |

Figure 16: Mapping between Google Directory attributes and SAML attributes

- Choose FINISH and save the mapping.
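To make the mapping concrete, the attribute statement that ends up inside the assertion can be sketched with Python's standard library. This is illustrative only: Google Workspace generates and signs the real assertion, the helper name build_attribute_statement is hypothetical, and user@example.com stands in for the user's primary email.

```python
import xml.etree.ElementTree as ET

SAML_NS = "urn:oasis:names:tc:SAML:2.0:assertion"
ROLE_ATTR = "https://aws.amazon.com/SAML/Attributes/Role"
SESSION_ATTR = "https://aws.amazon.com/SAML/Attributes/RoleSessionName"


def build_attribute_statement(role_pairs, session_name):
    """Build an unsigned AttributeStatement carrying the two AWS attributes.

    role_pairs: list of "role ARN,provider ARN" strings (one per allowed role).
    session_name: value for RoleSessionName (the primary email in this post).
    """
    ET.register_namespace("saml", SAML_NS)
    statement = ET.Element(f"{{{SAML_NS}}}AttributeStatement")

    # One Role attribute with one AttributeValue per role the user may assume.
    role_attr = ET.SubElement(
        statement, f"{{{SAML_NS}}}Attribute", {"Name": ROLE_ATTR}
    )
    for pair in role_pairs:
        ET.SubElement(role_attr, f"{{{SAML_NS}}}AttributeValue").text = pair

    # One RoleSessionName attribute with a single string value.
    session_attr = ET.SubElement(
        statement, f"{{{SAML_NS}}}Attribute", {"Name": SESSION_ATTR}
    )
    ET.SubElement(session_attr, f"{{{SAML_NS}}}AttributeValue").text = session_name
    return statement


statement = build_attribute_statement(
    [
        "arn:aws:iam::123456789012:role/GoogleSAMLPowerUserRole,"
        "arn:aws:iam::123456789012:saml-provider/GoogleWorkspace"
    ],
    "user@example.com",
)
print(ET.tostring(statement, encoding="unicode"))
```

Because the custom attribute is multi-value, each row stored on the user becomes its own AttributeValue element under the Role attribute.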

Step 6. Test the integration between Google Workspace and AWS IAM

- Log in to the Google Admin console.

- From the Admin console Home page, go to Apps > Web and mobile apps.

- Select the Application you created in Step 5-i.

- At the top left, select TEST SAML LOGIN, then choose ALLOW ACCESS within the popup box.

Figure 18: Testing the SAML login

- Select ON for everyone in the Service status section, and choose SAVE. This will allow every user in Google Workspace to see the new SAML custom app.

Figure 19: Saving the custom app settings

- Now navigate to Web and mobile apps and choose TEST SAML LOGIN again. Amazon Web Services should open in a separate tab and display two roles for users to choose from:

Figure 20: Testing SAML login again

Figure 21: Selecting the IAM role you wish to assume for console access

- Select the desired role and select Sign in.

- You should now be redirected to the AWS Management Console home page.

- Google Workspace users should now be able to access the AWS application from their workspace:

Figure 22: Viewing the AWS custom app
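If the role selection page does not show the roles you expect, it can help to inspect the SAML response that the browser posts to https://signin.aws.amazon.com/saml (the SAMLResponse form field, visible in the browser's developer tools). The sketch below is a troubleshooting aid under stated assumptions, not part of the original walkthrough: the function name roles_in_saml_response is hypothetical, it performs no signature validation, and the sample input is a hand-written stand-in for a real response.

```python
import base64
import xml.etree.ElementTree as ET

ASSERTION_NS = "urn:oasis:names:tc:SAML:2.0:assertion"
ROLE_ATTR = "https://aws.amazon.com/SAML/Attributes/Role"


def roles_in_saml_response(saml_response_b64):
    """Return the raw Role attribute values from a base64-encoded SAML response.

    For debugging only: the XML is parsed but its signature is not checked.
    """
    root = ET.fromstring(base64.b64decode(saml_response_b64))
    values = []
    for attr in root.iter(f"{{{ASSERTION_NS}}}Attribute"):
        if attr.get("Name") == ROLE_ATTR:
            for value in attr.iter(f"{{{ASSERTION_NS}}}AttributeValue"):
                values.append((value.text or "").strip())
    return values


# Tiny hand-written stand-in for a real response, for illustration only.
sample_xml = (
    f'<Response><Assertion xmlns="{ASSERTION_NS}"><AttributeStatement>'
    f'<Attribute Name="{ROLE_ATTR}"><AttributeValue>'
    "arn:aws:iam::123456789012:role/GoogleSAMLPowerUserRole,"
    "arn:aws:iam::123456789012:saml-provider/GoogleWorkspace"
    "</AttributeValue></Attribute></AttributeStatement></Assertion></Response>"
)
sample_b64 = base64.b64encode(sample_xml.encode("utf-8"))
found_roles = roles_in_saml_response(sample_b64)
print(found_roles)
```

Each returned string should match one of the AssumeRoleWithSaml rows set on the user in Step 4.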

Conclusion

By following the steps in this blog post, you’ve configured your Google Workspace directory and AWS accounts to allow SAML-based federated sign-on for selected Google Workspace users. Using federation instead of IAM users helps centralize identity management, making it easier to adopt a multi-account strategy.


Make data available for analysis in seconds with Upsolver low-code data pipelines, Amazon Redshift Streaming Ingestion, and Amazon Redshift Serverless

Post Syndicated from Roy Hasson original https://aws.amazon.com/blogs/big-data/make-data-available-for-analysis-in-seconds-with-upsolver-low-code-data-pipelines-amazon-redshift-streaming-ingestion-and-amazon-redshift-serverless/

Amazon Redshift is the most widely used cloud data warehouse. Amazon Redshift makes it easy and cost-effective to perform analytics on vast amounts of data. Amazon Redshift launched Streaming Ingestion for Amazon Kinesis Data Streams, which enables you to load data into Amazon Redshift with low latency and without having to stage the data in Amazon Simple Storage Service (Amazon S3). This new capability enables you to build reports and dashboards and perform analytics using fresh and current data, without needing to manage custom code that periodically loads new data.

Upsolver is an AWS Advanced Technology Partner that enables you to ingest data from a wide range of sources, transform it, and load the results into your target of choice, such as Kinesis Data Streams and Amazon Redshift. Data analysts, engineers, and data scientists define their transformation logic using SQL, and Upsolver automates the deployment, scheduling, and maintenance of the data pipeline. It’s pipeline ops simplified!

There are multiple ways to stream data to Amazon Redshift. In this post, we cover two options that Upsolver can help you with. First, we show you how to configure Upsolver to stream events to Kinesis Data Streams that are consumed by Amazon Redshift using Streaming Ingestion. Second, we demonstrate how to write event data to your data lake and consume it using Amazon Redshift Serverless so you can go from raw events to analytics-ready datasets in minutes.

Prerequisites

Before you get started, you need to install Upsolver. You can sign up for Upsolver and deploy it directly into your VPC to securely access Kinesis Data Streams and Amazon Redshift.

Configure Upsolver to stream events to Kinesis Data Streams

The following diagram represents the architecture to write events to Kinesis Data Streams and Amazon Redshift.

To implement this solution, you complete the following high-level steps:

- Configure the source Kinesis data stream.

- Execute the data pipeline.

- Create an Amazon Redshift external schema and materialized view.

Configure the source Kinesis data stream

For the purpose of this post, you create an Amazon S3 data source that contains sample retail data in JSON format. Upsolver ingests this data as a stream; as new objects arrive, they’re automatically ingested and streamed to the destination.

- On the Upsolver console, choose Data Sources in the navigation sidebar.

- Choose New.

- Choose Amazon S3 as your data source.

- For Bucket, you can use the bucket with the public dataset or a bucket with your own data.

- Choose Continue to create the data source.

- Create a data stream in Kinesis Data Streams, as shown in the following screenshot.

This is the output stream Upsolver uses to write events that are consumed by Amazon Redshift.

Next, you create a Kinesis connection in Upsolver. Creating a connection enables you to define the authentication method Upsolver uses—for example, an AWS Identity and Access Management (IAM) access key and secret key or an IAM role.

- On the Upsolver console, choose More in the navigation sidebar.

- Choose Connections.

- Choose New Connection.

- Choose Amazon Kinesis.

- For Region, enter your AWS Region.

- For Name, enter a name for your connection (for this post, we name it upsolver_redshift).

- Choose Create.

Before you can consume the events in Amazon Redshift, you must write them to the output Kinesis data stream.

- On the Upsolver console, navigate to Outputs and choose Kinesis.

- For Data Sources, choose the data source you created earlier (the Amazon S3 data source in this post).

- Depending on the structure of your event data, you have two choices:

- If the event data you’re writing to the output doesn’t contain any nested fields, select Tabular. Upsolver automatically flattens nested data for you.

- To write your data in a nested format, select Hierarchical.

- Because we’re working with Kinesis Data Streams, select Hierarchical.
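The difference between the two output formats can be illustrated with a small sketch. This is not Upsolver's implementation: the flatten helper, the dot-separated column naming, and the sample order event are all assumptions made for illustration.

```python
def flatten(event, parent_key="", sep="."):
    """Collapse nested dictionaries into a single level of columns.

    Nested keys are joined with `sep`, so {"customer": {"email": ...}}
    becomes {"customer.email": ...}. Lists are left untouched.
    """
    flat = {}
    for key, value in event.items():
        name = f"{parent_key}{sep}{key}" if parent_key else key
        if isinstance(value, dict):
            flat.update(flatten(value, name, sep))
        else:
            flat[name] = value
    return flat


# Hypothetical retail event in its hierarchical (nested) form.
order = {
    "orderId": "A-1",
    "customer": {"email": "a@example.com", "address": {"city": "Austin"}},
}

# Tabular form: one flat record whose keys can map to table columns.
flat_order = flatten(order)
print(flat_order)
```

A hierarchical output keeps the event shaped like `order`, while a tabular output corresponds to something like `flat_order`.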

Execute the data pipeline

Now that the stream is connected from the source to an output, you must select which fields of the source event you wish to pass through. You can also choose to apply transformations to your data—for example, adding correct timestamps, masking sensitive values, and adding computed fields. For more information, refer to Quick guide: SQL data transformation.

After adding the columns you want to include in the output and applying transformations, choose Run to start the data pipeline. As new events arrive in the source, Upsolver automatically transforms them and forwards the results to the output stream. There is no need to schedule or orchestrate the pipeline; it’s always on.

Create an Amazon Redshift external schema and materialized view

First, create an IAM role with the appropriate permissions (for more information, refer to Streaming ingestion). Now you can use the Amazon Redshift query editor, AWS Command Line Interface (AWS CLI), or API to run the following SQL statements.

- Create an external schema that is backed by Kinesis Data Streams. The following command requires you to include the IAM role you created earlier:

- Create a materialized view that allows you to run a SELECT statement against the event data that Upsolver produces:

- Instruct Amazon Redshift to materialize the results to a table called mv_orders:

- You can now run queries against your streaming data, such as the following:
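The statements for the steps above could look roughly like the following sketch, which builds them as strings that you can paste into the query editor. It is not the exact SQL from the original post: the schema name kds, the stream name orders-stream, the role ARN, and the payload column alias are hypothetical, and the approximate_arrival_timestamp and kinesis_data column names follow the current Amazon Redshift documentation for Kinesis streaming ingestion, so check them against the version you run.

```python
def streaming_ingestion_sql(schema, stream, iam_role_arn, view="mv_orders"):
    """Return the four statements for Kinesis streaming ingestion, in order.

    Values are interpolated directly, so pass only trusted configuration
    values, never user input.
    """
    return [
        # 1. External schema backed by Kinesis Data Streams.
        f"CREATE EXTERNAL SCHEMA {schema} FROM KINESIS "
        f"IAM_ROLE '{iam_role_arn}';",
        # 2. Materialized view over the stream; JSON_PARSE yields a SUPER value.
        f"CREATE MATERIALIZED VIEW {view} AS "
        f"SELECT approximate_arrival_timestamp, "
        f"JSON_PARSE(kinesis_data) AS payload "
        f'FROM {schema}."{stream}";',
        # 3. Pull the latest records from the stream into the view.
        f"REFRESH MATERIALIZED VIEW {view};",
        # 4. Query the streamed data.
        f"SELECT * FROM {view} LIMIT 10;",
    ]


statements = streaming_ingestion_sql(
    schema="kds",  # hypothetical schema name
    stream="orders-stream",  # hypothetical Kinesis data stream name
    iam_role_arn="arn:aws:iam::123456789012:role/redshift-streaming",  # assumed
)
for statement in statements:
    print(statement)
```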

Use Upsolver to write data to a data lake and query it with Amazon Redshift Serverless

The following diagram represents the architecture to write events to your data lake and query the data with Amazon Redshift.

To implement this solution, you complete the following high-level steps:

- Configure the source Kinesis data stream.

- Connect to the AWS Glue Data Catalog and update the metadata.

- Query the data lake.

Configure the source Kinesis data stream

We already completed this step earlier in the post, so you don’t need to do anything different.

Connect to the AWS Glue Data Catalog and update the metadata

To update the metadata, complete the following steps:

- On the Upsolver console, choose More in the navigation sidebar.

- Choose Connections.

- Choose the AWS Glue Data Catalog connection.

- For Region, enter your Region.

- For Name, enter a name (for this post, we call it redshift serverless).

- Choose Create.

- Create a Redshift Spectrum output, following the same steps from earlier in this post.

- Select Tabular, because we’re writing table-formatted output to Amazon Redshift.

- Map the data source fields to the Redshift Spectrum output.

- Choose Run.

- On the Amazon Redshift console, create an Amazon Redshift Serverless endpoint.

- Make sure you associate your Upsolver role to Amazon Redshift Serverless.

- When the endpoint launches, open the new Amazon Redshift query editor to create an external schema that points to the AWS Glue Data Catalog (see the following screenshot).

This enables you to run queries against data stored in your data lake.
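The external schema statement can be sketched as follows. The schema name, AWS Glue database name, and role ARN are hypothetical placeholders, because the screenshot with the original values is not reproduced here. The role must be one that is associated with the Amazon Redshift Serverless endpoint.

```python
def glue_external_schema_sql(schema, glue_database, iam_role_arn):
    """Return a CREATE EXTERNAL SCHEMA statement backed by the Glue Data Catalog.

    Values are interpolated directly, so pass only trusted configuration
    values, never user input.
    """
    return (
        f"CREATE EXTERNAL SCHEMA IF NOT EXISTS {schema} "
        f"FROM DATA CATALOG DATABASE '{glue_database}' "
        f"IAM_ROLE '{iam_role_arn}';"
    )


glue_schema_sql = glue_external_schema_sql(
    schema="upsolver_lake",  # hypothetical local schema name
    glue_database="upsolver",  # hypothetical AWS Glue database name
    iam_role_arn="arn:aws:iam::123456789012:role/upsolver-role",  # assumed
)
print(glue_schema_sql)
```

After the schema exists, tables registered in that Glue database can be queried as `schema.table` from the query editor.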

Query the data lake

Now that your Upsolver data is being automatically written and maintained in your data lake, you can query it using your preferred tool and the Amazon Redshift query editor, as shown in the following screenshot.

Conclusion

In this post, you learned how to use Upsolver to stream event data into Amazon Redshift using streaming ingestion for Kinesis Data Streams. You also learned how you can use Upsolver to write the stream to your data lake and query it using Amazon Redshift Serverless.

Upsolver makes it easy to build data pipelines using SQL and automates the complexity of pipeline management, scaling, and maintenance. Upsolver and Amazon Redshift enable you to quickly and easily analyze data in real time.

If you have any questions, or wish to discuss this integration or explore other use cases, start the conversation in our Upsolver Community Slack channel.

About the Authors