Post Syndicated from Explosm.net original http://explosm.net/comics/5965/

New Cyanide and Happiness Comic

Post Syndicated from Explosm.net original http://explosm.net/comics/5965/

New Cyanide and Happiness Comic

Post Syndicated from Elyse Lopez original https://aws.amazon.com/blogs/architecture/top-5-featured-architecture-content-for-august/

The AWS Architecture Center provides new and notable reference architecture diagrams, vetted architecture solutions, AWS Well-Architected best practices, whitepapers, and more. This blog post features some of our best picks from the new and newly updated content we released in the past month.

The travel and hospitality industries are facing new challenges when generating, accessing, and analyzing data at scale. This new reference architecture diagram shows how to implement a travel and hospitality-related data mesh that you can use to work with data such as travel reservations, loyalty programs, and digital revenue.

This new whitepaper discusses architecture considerations and recommended practices that systems architects and IT managers can apply to build highly available on-premises application environments with AWS Outposts.

This reference architecture is designed for manufacturers that want to enhance their Internet of Things (IoT) infrastructure by using computer vision for product quality analysis. It shows how to detect and act on product defect classification using AWS IoT and artificial intelligence/machine learning (AI/ML) services.

Data tampering is costly for manufacturing companies, and quality data can be particularly vulnerable. This new AWS Solutions Implementation protects quality data by using cryptographic hashing in Amazon Quantum Ledger Database (Amazon QLDB) to maintain an accurate history of data changes.

Many developers are realizing the benefits of moving from centralized to distributed architectures. Because of this, information on how to establish communication between the application services in those environments is very popular. This episode of Back to Basics covers best practices to establish communication, including trade-offs, common anti-patterns, and how to handle errors in less than 5 minutes!

Post Syndicated from Grab Tech original https://engineering.grab.com/multi-armed-bandit-system-recommendation

A/B testing is an experiment where a random e-commerce platform user is given two versions of a variable: a control group and a treatment group, to discover the optimal version that maximizes conversion. When running A/B testing, you can take the Multi-Armed Bandit optimisation approach to minimise the loss of conversion due to low performance.

In the traditional software development process, Multi-Armed Bandit (MAB) testing and rolling out a new feature are usually separate processes. The novel Multi-Armed Bandit System for Recommendation solution, hereafter the Multi-Armed Bandit Optimiser, proposes automating the Multi-Armed Bandit testing simultaneously while rolling out the new feature.

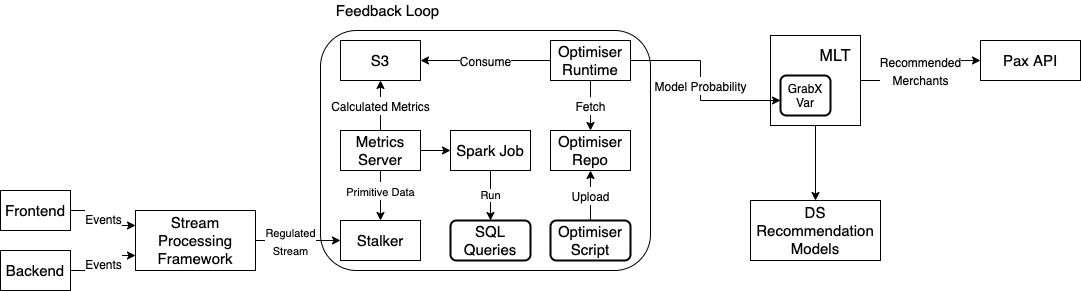

The following diagram illustrates the system architecture.

The novel Multi-Armed Bandit System for Recommendation solution contains three building blocks.

A lightweight system that performs basic operations on Kafka Streams, such as aggregation, filtering, and mapping. The proposed solution relies on this framework to pre-process raw events published by mobile apps and backend processes into the proper format that can be fed into the feedback loop.

A system that calculates the goal metrics and optimises the model traffic distribution. It runs a metrics server which pulls the data from Stalker, which is a time series database that stores the processed events in the last one hour. The metrics server invokes a Spark Job periodically to run the SQL queries that computes the pre-defined goal metrics: the Clickthrough Rate, Conversion Rate and so on, provided by users. The output of the job is dumped into an S3 bucket, and is picked up by optimiser runtime. It runs the Multi-Armed Bandit Optimiser to optimise the model traffic distribution based on the latest goal metrics.

The Multi-Armed Bandit Optimiser consists of the following modules:

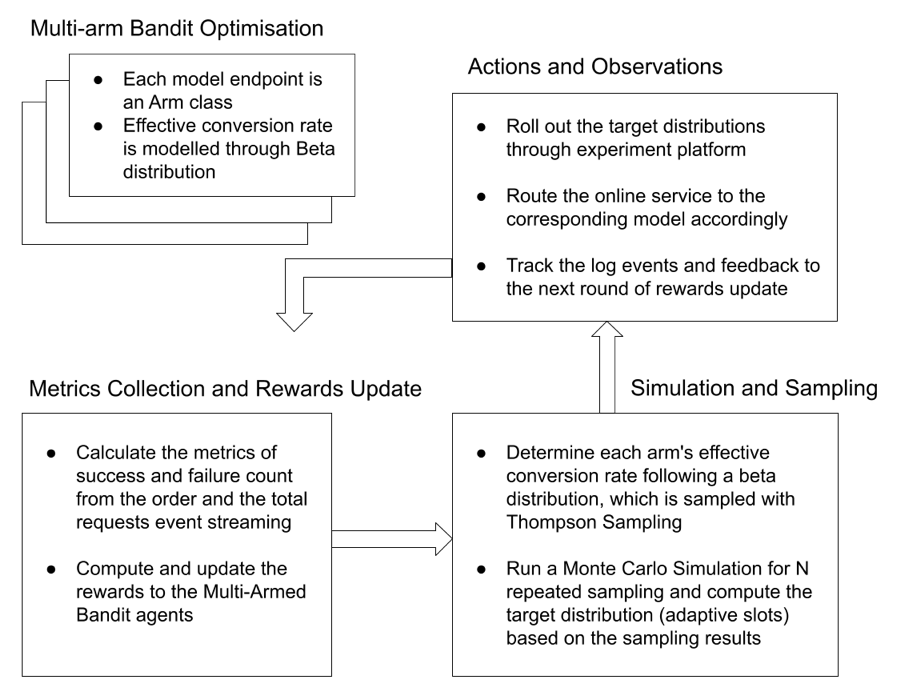

The goal of the Multi-Armed Bandit Optimisation is to find the optimal Arm that results in the best predefined metrics, and then allocate the maximum traffic to that Arm.

The solution can be illustrated in the following problem. For K Arm, in which the action space A={1,2,…,K}, the Multi-Arm-Bandit Optimiser goal is to solve the one-shot optimisation problem of  .

.

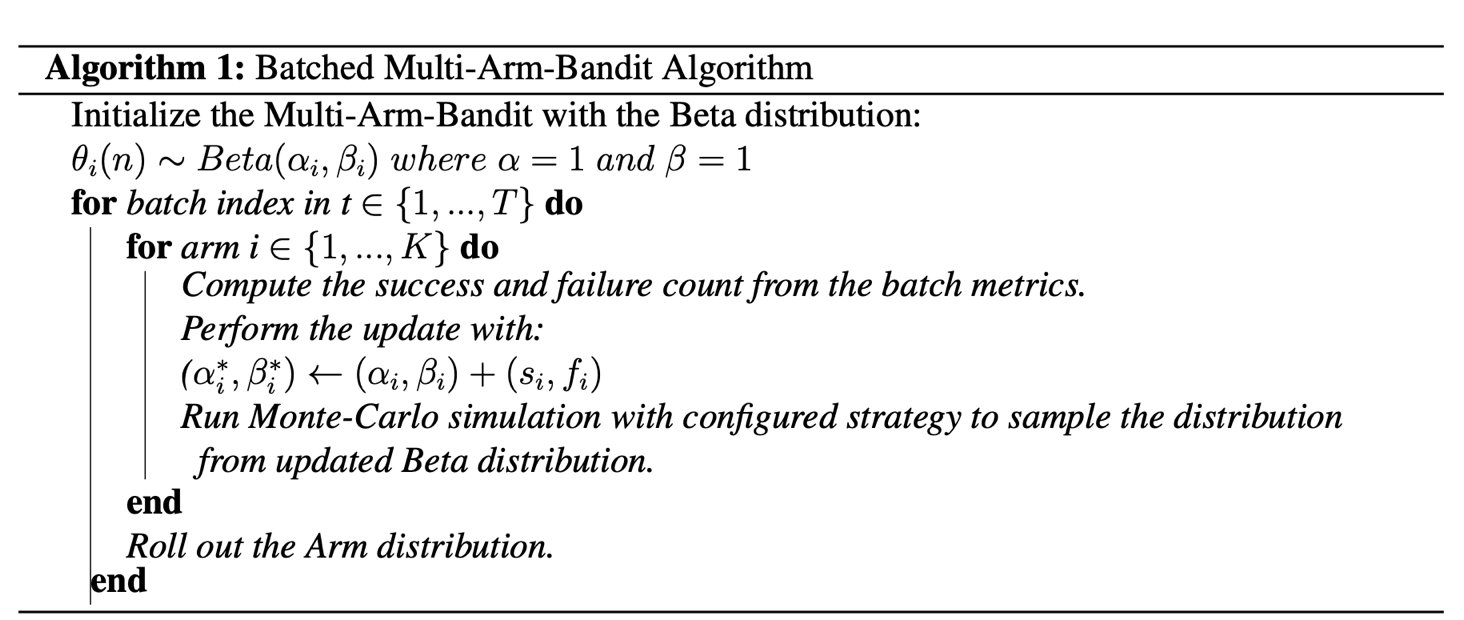

The Reward Update module collects a batch of the metrics. It calculates the Success and Failure counts, then updates the Beta distribution of each Arm with the Batched Multi-Armed Bandit algorithm.

In the Multi-Armed Bandit Agent module, each Arm’s metrics are modelled as a Beta distribution which is sampled with Thompson Sampling. The Beta distribution formula is:

.

.

The Batched Multi-Armed Bandit algorithm updates the Beta distribution with the batch metrics. The optimisation algorithm can be described in the following method.

The Monte-Carlo Simulation module runs the simulation for N repeated times to find the best Arm over a configurable simulation window. Then, it applies the simulated results as each Arm’s distribution percentage for the next round.

To handle different scenarios, we designed two strategies.

The Adaptive Rollout module rolls out the sampled distribution of each Multi-Armed Bandit Arm, in the form of Multi-Armed Bandit Arm Model ID and distribution, to the experimentation platform’s configuration variable. The resulting variable is then read from the online service. The process repeats as it collects feedback from the Adaptive Rollout metrics’ results in the feedback loop.

In the GrabFood Recommended for You widget, there are several food recommendation models that categorise lists of merchants. The choice of the model is controlled through experiments at rollout, and the results of the experiments are analysed offline. After the analysis, data scientists and product managers rectify the model choice based on the experiment results.

The Multi-Armed Bandit System for Recommendation solution improves the process by speeding up the feedback loop with the Multi-Armed Bandit system. Instead of depending on offline data which comes out at T+N, the solution responds to minute-level metrics, and adjusts the model faster.

This results in an optimal solution faster. The proposed Multi-Armed Bandit for Recommendation solution workflow is illustrated in the following diagram.

The GrabFood recommendation uses the Effective Conversion Rate metrics as the optimisation objective. The Effective Conversion Rate is defined as the total number of checkouts through the Recommended for You widget, divided by the total widget viewed and multiplied by the coverage rate.

The events of views, clicks, and checkouts are collected over a 30-minute aggregation window and the coverage. A request with a checkout is considered as a success event, while a non-converted request is considered as a failure event.

With the Multi-Armed Bandit Optimiser, the Beta distribution is selected to model the Effective Conversion Rate. The use of the mean strategy in the Monte-Carlo Simulation results in a more stable distribution.

The Multi-Armed Bandit Optimiser uses the eater ID as the unique entity, applies a policy and assigns different percentages of eaters to each model, based on computed distribution at the beginning of each loop.

The Multi-Armed Bandit Optimiser first runs model validation to ensure all candidates are suitable for rolling out. If the scheduled MAB job fails, it falls back to a default distribution that is set to 50-50% for each model.

Grab is more than just the leading ride-hailing and mobile payments platform in Southeast Asia. We use data and technology to improve everything from transportation to payments and financial services across a region of more than 620 million people. We aspire to unlock the true potential of Southeast Asia and look for like-minded individuals to join us on this ride.

If you share our vision of driving South East Asia forward, apply to join our team today.

Post Syndicated from Danilo Poccia original https://aws.amazon.com/blogs/aws/amazon-managed-grafana-is-now-generally-available-with-many-new-features/

In December, we introduced the preview of Amazon Managed Grafana, a fully managed service developed in collaboration with Grafana Labs that makes it easy to use the open-source and the enterprise versions of Grafana to visualize and analyze your data from multiple sources. With Amazon Managed Grafana, you can analyze your metrics, logs, and traces without having to provision servers, or configure and update software.

During the preview, Amazon Managed Grafana was updated with new capabilities. Today, I am happy to announce that Amazon Managed Grafana is now generally available with additional new features:

Let’s do a quick walkthrough to see how this works in practice.

Using Amazon Managed Grafana

In the Amazon Managed Grafana console, I choose Create workspace. A workspace is a logically isolated, highly available Grafana server. I enter a name and a description for the workspace, and then choose Next.

I can use AWS Single Sign-On (AWS SSO) or an external identity provider via SAML to authenticate the users of my workspace. For simplicity, I select AWS SSO. Later in the post, I’ll show how SAML authentication works. If this is your first time using AWS SSO, you can see the prerequisites (such as having AWS Organizations set up) in the documentation.

Then, I choose the Service managed permission type. In this way, Amazon Managed Grafana will automatically provision the necessary IAM permissions to access the AWS Services that I select in the next step.

In Service managed permission settings, I choose to monitor resources in my current AWS account. If you use AWS Organizations to centrally manage your AWS environment, you can use Grafana to monitor resources in your organizational units (OUs).

I can optionally select the AWS data sources that I am planning to use. This configuration creates an AWS Identity and Access Management (IAM) role that enables Amazon Managed Grafana to access those resources in my account. Later, in the Grafana console, I can set up the selected services as data sources. For now, I select Amazon CloudWatch so that I can quickly visualize CloudWatch metrics in my Grafana dashboards.

Here I also configure permissions to use Amazon Managed Service for Prometheus (AMP) as a data source and have a fully managed monitoring solution for my applications. For example, I can collect Prometheus metrics from Amazon Elastic Kubernetes Service (EKS) and Amazon Elastic Container Service (Amazon ECS) environments, using AWS Distro for OpenTelemetry or Prometheus servers as collection agents.

In this step I also select Amazon Simple Notification Service (SNS) as a notification channel. Similar to the data sources before, this option gives Amazon Managed Grafana access to SNS but does not set up the notification channel. I can do that later in the Grafana console. Specifically, this setting adds SNS publish permissions to topics that start with grafana to the IAM role created by the Amazon Managed Grafana console. If you prefer to have tighter control on permissions for SNS or any data source, you can edit the role in the IAM console or use customer-managed permissions for your workspace.

Finally, I review all the options and create the workspace.

After a few minutes, the workspace is ready, and I find the workspace URL that I can use to access the Grafana console.

I need to assign at least one user or group to the Grafana workspace to be able to access the workspace URL. I choose Assign new user or group and then select one of my AWS SSO users.

By default, the user is assigned a Viewer user type and has view-only access to the workspace. To give this user permissions to create and manage dashboards and alerts, I select the user and then choose Make admin.

Back to the workspace summary, I follow the workspace URL and sign in using my AWS SSO user credentials. I am now using the open-source version of Grafana. If you are a Grafana user, everything is familiar. For my first configurations, I will focus on AWS data sources so I choose the AWS logo on the left vertical bar.

Here, I choose CloudWatch. Permissions are already set because I selected CloudWatch in the service-managed permission settings earlier. I select the default AWS Region and add the data source. I choose the CloudWatch data source and on the Dashboards tab, I find a few dashboards for AWS services such as Amazon Elastic Compute Cloud (Amazon EC2), Amazon Elastic Block Store (EBS), AWS Lambda, Amazon Relational Database Service (RDS), and CloudWatch Logs.

I import the AWS Lambda dashboard. I can now use Grafana to monitor invocations, errors, and throttles for Lambda functions in my account. I’ll save you the screenshot because I don’t have any interesting data in this Region.

Using SAML Authentication

If I don’t have AWS SSO enabled, I can authenticate users to the Amazon Managed Grafana workspace using an external identity provider (IdP) by selecting the SAML authentication option when I create the workspace. For existing workspaces, I can choose Setup SAML configuration in the workspace summary.

First, I have to provide the workspace ID and URL information to my IdP in order to generate IdP metadata for configuring this workspace.

After my IdP is configured, I import the IdP metadata by specifying a URL or copying and pasting to the editor.

Finally, I can map user permissions in my IdP to Grafana user permissions, such as specifying which users will have Administrator, Editor, and Viewer permissions in my Amazon Managed Grafana workspace.

Availability and Pricing

Amazon Managed Grafana is available today in ten AWS Regions: US East (N. Virginia), US East (Ohio), US West (Oregon), Europe (Ireland), Europe (Frankfurt), Europe (London), Asia Pacific (Singapore), Asia Pacific (Tokyo), Asia Pacific (Sydney), and Asia Pacific (Seoul). For more information, see the AWS Regional Services List.

With Amazon Managed Grafana, you pay for the active users per workspace each month. Grafana API keys used to publish dashboards are billed as an API user license per workspace each month. You can upgrade to Grafana Enterprise to have access to enterprise plugins, support, and on-demand training directly from Grafana Labs. For more information, see the Amazon Managed Grafana pricing page.

— Danilo

Post Syndicated from original https://xkcd.com/2510/

Post Syndicated from Curious Droid original https://www.youtube.com/watch?v=680S6UbCT_w

Post Syndicated from Николай Марченко original https://bivol.bg/%D0%B1%D1%8A%D0%BB%D0%B3%D0%B0%D1%80%D1%81%D0%BA%D0%B0%D1%82%D0%B0-%D1%82%D0%B5%D0%BB%D0%B5%D0%B3%D1%80%D0%B0%D1%84%D0%BD%D0%B0-%D0%B0%D0%B3%D0%B5%D0%BD%D1%86%D0%B8%D1%8F-%D1%84%D1%83%D0%BD%D0%BA.html

Николай Марченко от “Биволъ” анализира за Balkan Free Media Initiative (BFMI) чрез няколко нашумели факти от световната хроника, отразени от Българската национална информационна агенция БТА. Националната новинарска агенция на България…

Post Syndicated from original http://www.gatchev.info/blog/?p=2376

Бе и аз преди мислех, дека туй със злите мафии е приказка некаква. Ама излезна, че не е, от мене да знаете! Има ги, и вършат секакви злини на човечеството. И най-големата от сички – де що има умен човек и кадърен, и полезен, затварят го в едни специални затвори. И там ги дрогират и им промиват мозъците, да забравят кои и какви са. И да престанат да помагат на хората. Видех го с очите си!

Като ме пенсионираха от ДАП-а, тръгнах да търсим да чистим некаде другаде, да добавям към пенсията. Лесно намерих – съвременен човек съм, имам туйто кампютър дома, внучката ми купи, да се чуваме и видиме по него с нея. Малко ми е криво на дъщерята, че стана безродничка, заряза хубавата ни родина и се дигна у Канада. Ама внучето вина нема. Ем и български понаучи покрай мене. Понекога кани съученици, превежда им какво си говориме, те много се радват. И за тех е хубаво, да видят българин и да научат колко е убава България… Та, по кампютъра намерих и обявата. Де да знам аз, че е за такъв затвор! Отидох, говорихме, назначиха ме.

Та, почнах аз работа у едно от отделенията на затвора. За шизо… шизо… абе се я забравям тая дума, сложна е. И там колко таланти и благодетели на човечеството видех затворени и измъчвани, думи немам!

Имаше за начало не един, а цели двама Исус Христосовци! Дошли да спасяват човечеството. На зор ще да е работата, щом Господ си праща синовете на по двойки. То единият беше дъщеря де, ама кои сме ние, че да ограничаваме Господа кого да праща? Пък и има логика, връзва се, като са двама! Те постоянно се караха кой е истинският, ама аз си мисля, че ще да са и двамата. Само един на тия пропаднали времена надали стига, прав е Господ да прати двама!

По-обикновените бяха още повече. Имаше един Никола Тесла – не знаех кой е, ама в кампютъра има един фейсбук, дето пише всичко за всичко. Та, там прочетох – ако не знаете, той е един уж сърбин, ама всъщност българин, дето избягал в Америка и там изобретил лъчите на смъртта и пренасянето на енергия по въздуха. Сигурно хамериканците са го хванали и са ни го върнали да го държим затворен, те така всички свестни хора ги хващат и затварят, и това го пише във фейсбука. Аз да си кажа, отначало не му повярвах. Във фейсбука пишеше кога е роден, връзваше се да е на към сто и педесе години, па наглед и трийсе нема. Ама той ми обясни, че бил изобретил и лъчи за вечна младост… Е такъв гений значи, в затвора го държат! И го тъпчат с дроги да му промият мозъка, да спре да помага на хората!

Имаше и един Нострадамус. Прероден – той като умирал, се прераждал, понеже било важно на света да има пророк. И за него прочетох у фейсбука кой е. Отначало не вервах, че пророкува – той да не е Ванга, я! Ама той ми обясни, че затова се бил преродил българин, понеже ние сме свещен народ и на нас е дадено да сме пророци. Аз пак не вервах, ама той само като ме изгледа, все едно мерка ми взима, и от раз позна, че съм с незавършено основно! И ми рече – затова не са могли да ти промият мозъка, чиче. На тия, дето са учили висши, са им налели у главите толкова лъжи, че никога не могат да видят истината. Ама неучилите не са промити и могат! Знаех си аз, че съм по-така от всякакви чантаджии с дипломи, ама пророк си требва да го обясни, че да можеш го разбра! И логиката му да видиш, и всичко.

Тия с Нобелови награди са сигурно къде десет. (Това са едни награди за най-велики учени, пише го във фейсбука.) Половината са наизобретили вечни двигатели, да освободят човечеството от петролната и машинната мафии. Ама били наивни, и мафиите ги хванали и ги назатворили там. (Официално му викат болница, за не помня какви заболявания беше, пак некаква сложна дума. Ама като ги гледам, болни вътре не видях. Лъжа на мафиите си е.) От другите един открил средство за безсмъртие, ама като почнал да го раздава на хората, мафиите го усетили и го затворили. И всички, на които го раздавал, още същия час ги или изтровили, или разболели лошо. Нали постоянно ръсят с кемтрейлсове от самолетите, в тях има едни чипове за управление от разстояние, и през тях… И други имаше, ама то можеш ли ги запомни всичките! Страдалци за доброто на човечеството!

Персонала го гледам – ходят дегизирани с престилки, все едно наистина са лекари. От по-младите може и да имаше един-двама истински, де. Младички, наивнички, мислят си, че реално помагат на някого. Горките… Ама по-дъртите до един си бяха мафиоти. Шефът примерно, уж професор бил, един с едни очила, постоянно само нарежда. Нема как да не е билдерберг, те нали са едни такива важни. Или поне илюминат – един от изобретателите вътре ми обясни по какво се познавало, че иска лампите все да са светнати. Главната сестра пък, каквато е усойница, нема как да не е рептил. (Това рептилите били некакви марсиански змии.) Като ме види, все – измий тука, що не си измел там… Не може ли човек да дремне малко бе? Мафиоти с мафиоти…

А, забравих – от затворниците има и един лекар. С две Нобелови награди. Казва се Хипократ. Та, той ми обясни за сегашната епидемия. Никакъв вирус нямало, микробите били измислици на мафиите. Хората умирали не от вируса, а само със него. Всъщност от кемтрейлсовете, дето ги ръсят Сорос и Бил Гейтс от самолетите. Това било тръгнало още от четиринайсети век, тогава измрели две трети от хората. Уж че имало голяма чума, ама всъщност никаква чума нямало, хората умирали само с нея, а не от нея, а от кемтрейлсовете. Тогава самолети нямало още, та ги ръсели от хеликоптери… Маските по начало били направени, за да запушват устата на хората, да не могат да говорят истината, ама после Иван Костов ги усъвършенствал, направил вируси и ги сложил в тях, и като си сложиш маската, се заразяваш и умираш. Затова толкова напират да карат децата в училище да носят маски – да изтребят българския народ, за да заселят тук на освободената земя талибани с чалми и ятагани, по заповед на Меркел. Хипократ го знаел със сигурност, бил виждал лично заповедта с подписите на Меркел и Костов. Затова и го затворили.

И ако знаете какво ги правят, горките! Те повечето си мълчат, имат достойнство хората, ама един не издържа и ми се оплака. Той е изобретил едно хапче, дето го слагаш в един резервоар чешмяна вода, и тя става на бензин, по-качествен от тоя по бензиностанциите. И цяла опаковка хапчета струва жълти стотинки. Получил за тях Нобелова награда и златен медал, по-голям от длан. Ама петролната мафия го хванала и го затворила тук, да не си изгуби печалбите. И го тъпчели с разни отрови, да забрави кой е и какъв е. А като минали две седмици и видели, че отровите не действат, една нощ му откраднали Нобеловия медал, докато спал. Подменили му го с ръждива капачка за буркан, да му се подиграят. И да го разколебаят… Мъчители проклети!

Цялата Вселена ни жали нас, и сума ти извънземни са се събрали да ни помагат. Избират си разни хора и говорят на тях и чрез тях, дават ни мъдрост и знание. А мафията ги хваща тия хора и също ги затваря. Има вътре четири или пет такива затворени. Трудно е да се каже точно, понеже единият е всъщност двама. И постоянно се кара със себе си, на кой от двамата какво са казали извънземните. Ама и двамата бяха съгласни, че единственото ни спасение е майчица Русия. Тя е най-свещената страна на цялата вселена, и двамата извънземни го били потвърдили. А пък разните еврогейове са педераси, и искат да направят и нас такива. Да приемем закон, че се разрешават само хомо… абе, педераски бракове. А ако нормални хора се срещат по любов, да им вземат децата и да ги дадат за осиновяване на черни норвежки педераси, те чакали на опашка и плащали много за бели деца. Особено ако са от свещен народ. Пиели им кръвта, за да станат и те свещени.

(Това последното ако знаете колко ме ядоса, думи немам. На децата да нямат свян да посягат! Още същата вечер го казах на внучката по кампютъра, и я предупредих да внимава. Тя беше с няколко други деца, съученици от класа. И им преведе и на тях, да са предупредени. Ако знаете колко се радваха всичките! Щерката по едно време влезе в стаята и ме чу, и нещо се понамуси, не разбрах защо. Ама внучката нещо й каза там на някакъв език, не знам кой, нали в Канада имат над сто езика, и щерката се успокои. Даже май я похвали, сигурно че предупреждава и другите деца, да знаят от какво да се пазят. И какви ужасии има по този свят. Че и у нас даже, нищо че сме свещена земя българска…)

А пък във фейсбука има някакви хора, дето казват, че не било така. Ами! Хубаво им го каза един там, професор академик, абе много умен човек. Че са платени агенти на мафията и взимат пари, за да лъжат хората, че микробите съществуват. И че станиолените шапки не пазели от пет-джито, и че гемеото нямало да ни изяде… Лъжци! А кажете сега, има ли нещо невярно от дето ви го разказах? Кое не е така?

Post Syndicated from original https://lwn.net/Articles/867944/rss

The Free Software Foundation (FSF) clarifies

the purpose of its copyright policies and examines the impact of

potential alternatives.

For some GNU packages, the ones that are FSF-copyrighted, we ask contributors for two kinds of legal papers: copyright assignments, and employer copyright disclaimers. We drew up these policies working with lawyers in the 1980s, and they make possible our steady and continuing enforcement of the GNU General Public License (GPL).

These papers serve four different but related legal purposes, all of which help ensure that the GNU Project’s goals of freedom for the community are met.

Post Syndicated from David Zhang original https://aws.amazon.com/blogs/big-data/get-started-with-the-amazon-redshift-data-api/

Amazon Redshift is a fast, scalable, secure, and fully managed cloud data warehouse that enables you to analyze your data at scale. Tens of thousands of customers use Amazon Redshift to process exabytes of data to power their analytical workloads.

The Amazon Redshift Data API is an Amazon Redshift feature that simplifies access to your Amazon Redshift data warehouse by removing the need to manage database drivers, connections, network configurations, data buffering, credentials, and more. You can run SQL statements using the AWS Software Development Kit (AWS SDK), which supports different languages such as C++, Go, Java, JavaScript, .Net, Node.js, PHP, Python, and Ruby.

| Since you’re reading this post, you may also be interested in the following AWS Online Tech Talks video for more info: |

With the Data API, you can programmatically access data in your Amazon Redshift cluster from different AWS services such as AWS Lambda, Amazon SageMaker notebooks, AWS Cloud9, and also your on-premises applications using the AWS SDK. This allows you to build cloud-native, containerized, serverless, web-based, and event-driven applications on the AWS Cloud.

The Data API also enables you to run analytical queries on Amazon Redshift’s native tables, external tables in your data lake via Amazon Redshift Spectrum, and also across Amazon Redshift clusters, which is known as data sharing. You can also perform federated queries with external data sources such as Amazon Aurora.

In an earlier, post, we shared in great detail on how you can use the Data API to interact with your Amazon Redshift data warehouse. In this post, we learn how to get started with the Data API in different languages and also discuss various use cases in which customers are using this to build modern applications combining modular, serverless, and event-driven architectures. The Data API was launched in September 2020, and thousands of our customers are already using it for a variety of use cases:

In this section, we discuss the key features of the Data API.

The Data API integrates with the AWS SDK to run queries. Therefore, you can use any language supported by the AWS SDK to build your application with it, such as C++, Go, Java, JavaScript, .NET, Node.js, PHP, Python, and Ruby.

With the Data API, you can run individual queries from your application or submit a batch of SQL statements within a transaction, which is useful to simplify your workload.

With the Data API, you can run parameterized SQL queries, which brings the ability to write reusable code when developing ETL code by passing parameters into a SQL template instead of concatenating parameters into each query on their own. This also makes it easier to migrate code from existing applications that needs parameterization. In addition, parameterization also makes code secure by eliminating malicious SQL injection.

With the Data API, you can interact with Amazon Redshift without having to configure JDBC or ODBC drivers. The Data API eliminates the need for configuring drivers and managing database connections. You can run SQL commands to your Amazon Redshift cluster by calling a Data API secured API endpoint.

The Data API doesn’t need a persistent connection with Amazon Redshift. Instead, it provides a secure HTTP endpoint, which you can use to run SQL statements. Therefore, you don’t need to set up and manage a VPC, security groups, and related infrastructure to access Amazon Redshift with the Data API.

The Data API provides two options to provide credentials:

You can also use the Data API when working with federated logins through IAM credentials. You don’t have to pass database credentials via API calls when using identity providers such as Okta, Azure Active Directory, or database credentials stored in Secrets Manager. If you’re using Lambda, the Data API provides a secure way to access your database without the additional overhead of launching Lambda functions in Amazon Virtual Private Cloud (Amazon VPC).

The Data API is asynchronous. You can run long-running queries without having to wait for it to complete, which is key in developing a serverless, microservices-based architecture. Each query results in a query ID, and you can use this ID to check the status and response of the query. In addition, query results are stored for 24 hours.

You can monitor Data API events in Amazon EventBridge, which delivers a stream of real-time data from your source application to targets such as Lambda. This option is available when you’re running your SQL statements in the Data API using the WithEvent parameter set to true. When a query is complete, the Data API can automatically send event notifications to EventBridge, which you may use to take further actions. This helps you design event-driven applications with Amazon Redshift. For more information, see Monitoring events for the Amazon Redshift Data API in Amazon EventBridge.

It’s easy to get started with the Data API using the AWS SDK. You can use the Data API to run your queries on Amazon Redshift using different languages such as C++, Go, Java, JavaScript, .Net, Node.js, PHP, Python and Ruby.

All API calls from different programming languages follow similar parameter signatures. For instance, you can run the ExecuteStatement API to run individual SQL statements in the AWS Command Line Interface (AWS CLI) or different languages such as Python and JavaScript (NodeJS).

The following code is an example using the AWS CLI:

The following example code uses Python:

The following code uses JavaScript (NodeJS):

We have also published a GitHub repository showcasing how to get started with the Data API in different languages such as Go, Java, JavaScript, Python, and TypeScript. You may go through the step-by-step process explained in the repository to build your custom application in all these languages using the Data API.

You can use the Data API to modernize and simplify your application architectures by creating modular, serverless, event-driven applications with Amazon Redshift. In this section, we discuss some common use cases.

When performing ETL workflows, you have to complete a number of steps. As your business scales, the steps and dependencies often become complex and difficult to manage. With the Data API and Step Functions, you can easily orchestrate complex ETL workflows. You can explore the following example use case and AWS CloudFormation template demonstrating ETL orchestration using the Data API and Step Functions.

If you’re designing your custom application in any programming language that is supported by the AWS SDK, the Data API simplifies data access from your applications, which may be an application hosted on Amazon Elastic Compute Cloud (Amazon EC2) or Amazon Elastic Container Service (Amazon ECS) and other compute services or a serverless application built with Lambda. You can explore an example use case and CloudFormation template showcasing how to easily work with the Data API from Amazon EC2 based applications.

SageMaker notebooks are very popular among the data science community to analyze and solve machine learning problems. The Data API makes it easy to access and visualize data from your Amazon Redshift data warehouse without troubleshooting issues on password management or VPC or network issues. You can learn more about this use case along with a CloudFormation template showcasing how to use the Data API to interact from a SageMaker Jupyter notebook.

With the AWS SDK, you can use the Data APIs to directly invoke them as REST API calls such as GET or POST methods. For more information, see REST for Redshift Data API.

Event–driven applications are popular with many customers, where applications run in response to events. A primary benefit of this architecture is the decoupling of producer and consumer processes, which allows greater flexibility in application design and building decoupled processes. For more information, see Building an event-driven application with AWS Lambda and the Amazon Redshift Data API. The following CloudFormation template demonstrates the same.

Similar to event-driven ELT applications, event-driven web applications are also becoming popular, especially if you want to avoid long-running database queries, which create bottlenecks for the application servers. For example, you may be running a web application that has a long-running database query taking a minute to complete. Instead of designing that web application with long-running API calls, you can use the Data API and Amazon API Gateway WebSockets, which creates a lightweight websocket connection with the browser and submits the query to Amazon Redshift using the Data API. When the query is finished, the Data API sends a notification to EventBridge about its completion. When the data is available in the Data API, it’s pushed back to this browser session and the end-user can view the dataset. You can explore an example use case along with a CloudFormation template showcasing how to build an event-driven web application using the Data API and API Gateway WebSockets.

With the Data API, you can design a serverless data processing workflow, where you can design an end-to-end data processing pipeline orchestrated using serverless AWS components such as Lambda, EventBridge, and the Data API client. Typically, a data pipeline involves multiple steps, for example:

The example use case Serverless Data Processing Workflow using Amazon Redshift Data Api demonstrates how to chain multiple Lambda functions in a decoupled fashion and build an end-to-end data pipeline. In that code sample, a Lambda function is run through a scheduled event that loads raw data from Amazon Simple Storage Service (Amazon S3) to Amazon Redshift. On its completion, the Data API generates an event that triggers an event rule in EventBridge to invoke another Lambda function that prepares and transforms raw data. When that process is complete, it generates another event triggering a third EventBridge rule to invoke another Lambda function and unloads the data to Amazon S3. The Data API enables you to chain this multi-step data pipeline in a decoupled fashion.

The Data API offers many additional benefits when integrating Amazon Redshift into your analytical workload. The Data API simplifies and modernizes current analytical workflows and custom applications. You can perform long-running queries without having to pause your application for the queries to complete. This enables you to build event-driven applications as well as fully serverless ETL pipelines.

The Data API functionalities are available in many different programming languages to suit your environment. As mentioned earlier, there are a wide variety of use cases and possibilities where you can use the Data API to improve your analytical workflow. To learn more, see Using the Amazon Redshift Data API.

David Zhang is an AWS Solutions Architect who helps customers design robust, scalable, and data-driven solutions across multiple industries. With a background in software engineering, David is an active leader and contributor to AWS open-source initiatives. He is passionate about solving real-world business problems and continuously strives to work from the customer’s perspective.

David Zhang is an AWS Solutions Architect who helps customers design robust, scalable, and data-driven solutions across multiple industries. With a background in software engineering, David is an active leader and contributor to AWS open-source initiatives. He is passionate about solving real-world business problems and continuously strives to work from the customer’s perspective.

Bipin Pandey is a Data Architect at AWS. He loves to build data lake and analytics platform for his customers. He is passionate about automating and simplifying customer problems with the use of cloud solutions.

Bipin Pandey is a Data Architect at AWS. He loves to build data lake and analytics platform for his customers. He is passionate about automating and simplifying customer problems with the use of cloud solutions.

Manash Deb is a Senior Analytics Specialist Solutions Architect at AWS. He has worked on building end-to-end data-driven solutions in different database and data warehousing technologies for over 15 years. He loves to learn new technologies and solving, automating, and simplifying customer problems with easy-to-use cloud data solutions on AWS.

Manash Deb is a Senior Analytics Specialist Solutions Architect at AWS. He has worked on building end-to-end data-driven solutions in different database and data warehousing technologies for over 15 years. He loves to learn new technologies and solving, automating, and simplifying customer problems with easy-to-use cloud data solutions on AWS.

Bhanu Pittampally is Analytics Specialist Solutions Architect based out of Dallas. He specializes in building analytical solutions. His background is in data warehouse – architecture, development and administration. He is in data and analytical field for over 13 years. His Linkedin profile is here.

Bhanu Pittampally is Analytics Specialist Solutions Architect based out of Dallas. He specializes in building analytical solutions. His background is in data warehouse – architecture, development and administration. He is in data and analytical field for over 13 years. His Linkedin profile is here.

Chao Duan is a software development manager at Amazon Redshift, where he leads the development team focusing on enabling self-maintenance and self-tuning with comprehensive monitoring for Redshift. Chao is passionate about building high-availability, high-performance, and cost-effective database to empower customers with data-driven decision making.

Chao Duan is a software development manager at Amazon Redshift, where he leads the development team focusing on enabling self-maintenance and self-tuning with comprehensive monitoring for Redshift. Chao is passionate about building high-availability, high-performance, and cost-effective database to empower customers with data-driven decision making.

Debu Panda, a Principal Product Manager at AWS, is an industry leader in analytics, application platform, and database technologies, and has more than 25 years of experience in the IT world.

Debu Panda, a Principal Product Manager at AWS, is an industry leader in analytics, application platform, and database technologies, and has more than 25 years of experience in the IT world.

Post Syndicated from original https://lwn.net/Articles/867657/rss

A longstanding tug-of-war between system package managers and Python’s own

installation mechanisms (primarily pip, but there are others) looks

on its way to being resolved—or at least regularized. PEP 668

(“Graceful cooperation between external and Python package

managers“) has been created to provide ways for the two types of package installation

to

work together, rather than at cross-purposes at times.

Since many operating systems depend on Python tools, with package versions

that may differ from those of users’ Python applications, making them play together

nicely should result in more stable systems.

Post Syndicated from Raj Jain original https://aws.amazon.com/blogs/security/how-to-use-acm-private-ca-for-enabling-mtls-in-aws-app-mesh/

Securing east-west traffic in service meshes, such as AWS App Mesh, by using mutual Transport Layer Security (mTLS) adds an additional layer of defense beyond perimeter control. mTLS adds bidirectional peer-to-peer authentication on top of the one-way authentication in normal TLS. This is done by adding a client-side certificate during the TLS handshake, through which a client proves possession of the corresponding private key to the server, and as a result the server trusts the client. This prevents an arbitrary client from connecting to an App Mesh service, because the client wouldn’t possess a valid certificate.

In this blog post, you’ll learn how to enable mTLS in App Mesh by using certificates derived from AWS Certificate Manager Private Certificate Authority (ACM Private CA). You’ll also learn how to reuse AWS CloudFormation templates, which we make available through a companion open-source project, for configuring App Mesh and ACM Private CA.

You’ll first see how to derive server-side certificates from ACM Private CA into App Mesh internally by using the native integration between the two services. You’ll then see a method and code for installing client-side certificates issued from ACM Private CA into App Mesh; this method is needed because client-side certificates aren’t integrated natively.

You’ll learn how to use AWS Lambda to export a client-side certificate from ACM Private CA and store it in AWS Secrets Manager. You’ll then see Envoy proxies in App Mesh retrieve the certificate from Secrets Manager and use it in an mTLS handshake. The solution is designed to ensure confidentiality of the private key of a client-side certificate, in transit and at rest, as it moves from ACM to Envoy.

The solution described in this blog post simplifies and allows you to automate the configuration and operations of mTLS-enabled App Mesh deployments, because all of the certificates are derived from a single managed private public key infrastructure (PKI) service—ACM Private CA—eliminating the need to run your own private PKI. The solution uses Amazon Elastic Container Services (Amazon ECS) with AWS Fargate as the App Mesh hosting environment, although the design presented here can be applied to any compute environment that is supported by App Mesh.

ACM Private CA provides a highly available managed private PKI service that enables creation of private CA hierarchies—including root and subordinate CAs—without the investment and maintenance costs of operating your own private PKI service. The service allows you to choose among several CA key algorithms and key sizes and makes it easier for you to export and deploy private certificates anywhere by using API-based automation.

App Mesh is a service mesh that provides application-level networking across multiple types of compute infrastructure. It standardizes how your microservices communicate, giving you end-to-end visibility and helping to ensure transport security and high availability for your applications. In order to communicate securely between mesh endpoints, App Mesh directs the Envoy proxy instances that are running within the mesh to use one-way or mutual TLS.

TLS provides authentication, privacy, and data integrity between two communicating endpoints. The authentication in TLS communications is governed by the PKI system. The PKI system allows certificate authorities to issue certificates that are used by clients and servers to prove their identity. The authentication process in TLS happens by exchanging certificates via the TLS handshake protocol. By default, the TLS handshake protocol proves the identity of the server to the client by using X.509 certificates, while the authentication of the client to the server is left to the application layer. This is called one-way TLS. TLS also supports two-way authentication through mTLS. In mTLS, in addition to the one-way TLS server authentication with a certificate, a client presents its certificate and proves possession of the corresponding private key to a server during the TLS handshake.

The following sections describe one-way and mutual TLS integrations between App Mesh and ACM Private CA in the context of an example application. This example application exposes an API to external clients that returns a text string name of a color—for example, “yellow”. It’s an extension of the Color App that’s used to demonstrate several existing App Mesh examples.

The example application is comprised of two services running in App Mesh—ColorGateway and ColorTeller. An external client request enters the mesh through the ColorGateway service and is proxied to the ColorTeller service. The ColorTeller service responds back to the ColorGateway service with the name of a color. The ColorGateway service proxies the response to the external client. Figure 1 shows the basic design of the application.

Figure 1: App Mesh services in the Color App example application

The two services are mapped onto the following constructs in App Mesh:

Let’s review running the example application in one-way TLS mode. Although optional, starting with one-way TLS allows you to compare the two methods and establish how to look at certain Envoy proxy statistics to distinguish and verify one-way TLS versus mTLS connections.

For practice, you can deploy the example application project in your own AWS account and perform the steps described in your own test environment.

Note: In both the one-way TLS and mTLS descriptions in the following sections, we’re using a flat certificate hierarchy for demonstration purposes. The root CAs are issuing end-entity certificates. The AWS ACM Private CA best practices recommend that root CAs should only be used to issue certificates for intermediate CAs. When intermediate CAs are involved, your certificate chain has multiple certificates concatenated in it, but the mechanisms are the same as those described here.

Because this is a one-way TLS authentication scenario, you need only one Private CA—ColorTeller—and issue one end-entity certificate from it that’s used as the server-side certificate for the ColorTeller virtual node.

Figure 2, following, shows the architecture for this setup, including notations and color codes for certificates and a step-by-step process that shows how the system is configured and functions. Because this architecture uses a server-side certificate only, you use the native integration between App Mesh and ACM Private CA and don’t need an external mechanism for certificate integration.

Figure 2: One-way TLS in App Mesh integrated with ACM Private CA

The steps in Figure 2 are:

Step 1: A Private CA instance—ColorTeller—is created in ACM Private CA. Next, an end-entity certificate is created and signed by the CA. This certificate is used as the server-side certificate in ColorTeller.

Step 2: The CloudFormation templates configure the ColorGateway to validate server certificates against the ColorTeller private CA certificate chain. As the App Mesh endpoints are starting up, the ColorTeller CA certificate trust chain is ingested into the ColorGateway Envoy instance. The TLS configuration for ColorGateway in App Mesh is shown in Figure 3.

Figure 3: One-way TLS configuration in the client policy of ColorGateway

Figure 3 shows that the client policy attributes for outbound transport connections for ColorGateway have been configured as follows:

This configuration ensures that ColorGateway makes outbound TLS-only connections towards ColorTeller, extracts the CA trust chain from ACM-PCA, and validates the server certificate presented by the ColorTeller virtual node against the configured CA ARN.

Step 3: The CloudFormation templates configure the ColorTeller virtual node with the ColorTeller end-entity certificate ARN in ACM Private CA. While the App Mesh endpoints are started, the ColorTeller end-entity certificate is ingested into the ColorTeller Envoy instance.

The TLS configuration for the ColorTeller virtual node in App Mesh is shown in Figure 4.

Figure 4: One-way TLS configuration in the listener configuration of ColorTeller

Figure 4 shows that various TLS-related attributes are configured as follows:

Note: Figure 4 shows an annotation where the certificate ARN has been superimposed by the cert icon in green color. This icon follows the color convention from Figure 2 and can help you relate how the individual resources are configured to construct the architecture shown in Figure 2. The cert shown (and the associated private key that is not shown) in the diagram is necessary for ColorTeller to run the TLS stack and serve the certificate. The exchange of this material happens over the internal communications between App Mesh and ACM Private CA.

Step 4: The ColorGateway service receives a request from an external client.

Step 5: This step includes multiple sub-steps:

To verify that a TLS connection was established and that it is one-way TLS authenticated, run the following command on your bastion host:

This command queries the runtime statistics that are maintained in ColorTeller Envoy and filters the output for certain SSL-related counts. The count for ssl.handshake should be one. If the ssl.handshake count is more than one, that means there’s been more than one TLS handshake. The count for ssl.no_certificate should also be one, or equal to the count for ssl.handshake. The ssl.no_certificate count tracks the total successful TLS connections with no client certificate. Since this is a one-way TLS handshake that doesn’t involve client certificates, this count is the same as the count of ssl.handshake.

The preceding statistics verify that a TLS handshake was completed and the authentication was one-way, where the ColorGateway authenticated the ColorTeller but not vice-versa. You’ll see in the next section how the ssl.no_certificate count differs when mTLS is enabled.

In the one-way TLS discussion in the previous section, you saw that App Mesh and ACM Private CA integration works without needing external enhancements. You also saw that App Mesh retrieved the server-side end-entity certificate in ColorTeller and the root CA trust chain in ColorGateway from ACM Private CA internally, by using the native integration between the two services.

However, a native integration between App Mesh and ACM Private CA isn’t currently available for client-side certificates. Client-side certificates are necessary for mTLS. In this section, you’ll see how you can issue and export client-side certificates from ACM Private CA and ingest them into App Mesh.

The solution uses Lambda to export the client-side certificate from ACM Private CA and store it in Secrets Manager. The solution includes an enhanced startup script embedded in the Envoy image to retrieve the certificate from Secrets Manager and place it on the Envoy file system before the Envoy process is started. The Envoy process reads the certificate, loads it in memory, and uses it in the TLS stack for the client-side certificate exchange of the mTLS handshake.

The choice of Lambda is based on this being an ephemeral workflow that needs to run only during system configuration. You need a short-lived, runtime compute context that lets you run the logic for exporting certificates from ACM Private CA and store them in Secrets Manager. Because this compute doesn’t need to run beyond this step, Lambda is an ideal choice for hosting this logic, for cost and operational effectiveness.

The choice of Secrets Manager for storing certificates is based on the confidentiality requirements of the passphrase that is used for encrypting the private key (PKCS #8) of the certificate. You also need a higher throughput data store that can support secrets retrieval from large meshes. Secrets Manager supports a higher API rate limit than the API for exporting certificates from ACM Private CA, and thus serves as a high-throughput front end for ACM Private CA for serving certificates without compromising data confidentiality.

The resulting architecture is shown in Figure 5. The figure includes notations and color codes for certificates—such as root certificates, endpoint certificates, and private keys—and a step-by-step process showing how the system is configured, started, and functions at runtime. The example uses two CA hierarchies for ColorGateway and ColorTeller to demonstrate an mTLS setup where the client and server belong to separate CA hierarchies but trust each other’s CAs.

Figure 5: mTLS in App Mesh integrated with ACM Private CA

The numbered steps in Figure 5 are:

Step 1: A Private CA instance representing the ColorGateway trust hierarchy is created in ACM Private CA. Next, an end-entity certificate is created and signed by the CA, which is used as the client-side certificate in ColorGateway.

Step 2: Another Private CA instance representing the ColorTeller trust hierarchy is created in ACM Private CA. Next, an end-entity certificate is created and signed by the CA, which is used as the server-side certificate in ColorTeller.

Step 3: As part of running CloudFormation, the Lambda function is invoked. This Lambda function is responsible for exporting the client-side certificate from ACM Private CA and storing it in Secrets Manager. This function begins by requesting a random password from Secrets Manager. This random password is used as the passphrase for encrypting the private key inside ACM Private CA before it’s returned to the function. Generating a random password from Secrets Manager allows you to generate a random password with a specified complexity.

Step 4: The Lambda function issues an export certificate request to ACM, requesting the ColorGateway end-entity certificate. The request conveys the private key passphrase retrieved from Secrets Manager in the previous step so that ACM Private CA can use it to encrypt the private key that’s sent in the response.

Step 5: The ACM Private CA responds to the Lambda function. The response carries the following elements of the ColorGateway end-entity certificate.

Step 6: The Lambda function processes the response that is returned from ACM. It extracts individual fields in the JSON-formatted response and stores them in Secrets Manager. The Lambda function stores the following four values in Secrets Manager:

Step 7: The App Mesh services—ColorGateway and ColorTeller—are started, which then start their Envoy proxy containers. A custom startup script embedded in the Envoy docker image fetches a certificate from Secrets Manager and places it on the Envoy file system.

Note: App Mesh publishes its own custom Envoy proxy Docker container image that ensures it is fully tested and patched with the latest vulnerability and performance patches. You’ll notice in the example source code that a custom Envoy image is built on top of the base image published by App Mesh. In this solution, we add an Envoy startup script and certain utilities such as AWS Command Line Interface (AWS CLI) and jq to help retrieve the certificate from Secrets Manager and place it on the Envoy file system during Envoy startup.

Step 8: The CloudFormation scripts configure the client policy for mTLS in ColorGateway in App Mesh, as shown in Figure 6. The following attributes are configured:

Figure 6: Client-side mTLS configuration in ColorGateway

At the end of the custom Envoy startup script described in step 7, the core Envoy process in ColorGateway service is started. It retrieves the ColorTeller CA root certificate from ACM Private CA and configures it internally as a trusted CA. This retrieval happens due to native integration between App Mesh and ACM Private CA. This allows ColorGateway Envoy to validate the certificate presented by ColorTeller Envoy during the TLS handshake.

Step 9: The CloudFormation scripts configure the listener configuration for mTLS in ColorTeller in App Mesh, as shown in Figure 7. The following attributes are configured:

Figure 7: Server-side mTLS configuration in ColorTeller

At the end of the Envoy startup script described in step 7, the core Envoy process in ColorTeller service is started. It retrieves its own server-side end-entity certificate and corresponding private key from ACM Private CA. This retrieval happens internally, driven by the native integration between App Mesh and ACM Private CA. This allows ColorTeller Envoy to present its server-side certificate to ColorGateway Envoy during the TLS handshake.

The system startup concludes with this step, and the application is ready to process external client requests.

Step 10: The ColorGateway service receives a request from an external client.

Step 11: The ColorGateway Envoy initiates a TLS handshake with the ColorTeller Envoy. During the first half of the TLS handshake protocol, the ColorTeller Envoy presents its server-side certificate to the ColorGateway Envoy. The ColorGateway Envoy validates the certificate. Because the ColorGateway Envoy has been configured with the ColorTeller CA trust chain in step 8, the validation succeeds.

Step 12: During the second half of the TLS handshake, the ColorTeller Envoy requests the ColorGateway Envoy to provide its client-side certificate. This step is what distinguishes an mTLS exchange from a one-way TLS exchange.

The ColorGateway Envoy responds with its end-entity certificate that had been placed on its file system in step 7. The ColorTeller Envoy validates the received certificate with its CA trust chain, which contains the ColorGateway root CA that was placed on its file system (in step 7). The validation succeeds, and so an mTLS session is established.

You can now verify that an mTLS exchange happened by running the following command on your bastion host.

The count for ssl.handshake should be one. If the ssl.handshake count is more than one, that means that you’ve gone through more than one TLS handshake. It’s important to note that the count for ssl.no_certificate—the total successful TLS connections with no client certificate—is zero. This shows that mTLS configuration is working as expected. Recall that this count was one or higher—equal to the ssl.handshake count—in the previous section that described one-way TLS. The ssl.no_certificate count being zero indicates that this was an mTLS authenticated connection, where the ColorGateway authenticated the ColorTeller and vice-versa.

The ACM Private CA certificates that are imported into App Mesh are not eligible for managed renewal, so an external certificate renewal method is needed. This example solution uses an external renewal method as recommended in Renewing certificates in a private PKI that you can use in your own implementations.

The certificate renewal mechanism can be broken down into six steps, which are outlined in Figure 8.

Figure 8: Certificate renewal process in ACM Private CA and App Mesh on ECS integration

Here are the steps illustrated in Figure 8:

Step 1: ACM generates an Amazon CloudWatch Events event when a certificate is close to expiring.

Step 2: CloudWatch triggers a Lambda function that is responsible for certificate renewal.

Step 3: The Lambda function renews the certificate in ACM and exports the new certificate by calling ACM APIs.

Step 4: The Lambda function writes the certificate to Secrets Manager.

Step 5: The Lambda function triggers a new service deployment in an Amazon ECS cluster. This will cause the ECS services to go through a graceful update process to acquire a renewed certificate.

Step 6: The Envoy processes in App Mesh fetch the client-side certificate from Secrets Manager using external integration, and the server-side certificate from ACM using native integration.

In this post, you learned a method for enabling mTLS authentication between App Mesh endpoints based on certificates issued by ACM Private CA. mTLS enhances security of App Mesh deployments due to its bidirectional authentication capability. While server-side certificates are integrated natively, you saw how to use Lambda and Secrets Manager to integrate client-side certificates externally. Because ACM Private CA certificates aren’t eligible for managed renewal, you also learned how to implement an external certificate renewal process.

This solution enhances your App Mesh security posture by simplifying configuration of mTLS-enabled App Mesh deployments. It achieves this because all mTLS certificate requirements are met by a single, managed private PKI service—ACM Private CA—which allows you to centrally manage certificates and eliminates the need to run your own private PKI.

If you have feedback about this post, submit comments in the Comments section below. If you have questions about this post, start a new thread on the AWS Certificate Manager forum or contact AWS Support.

Want more AWS Security how-to content, news, and feature announcements? Follow us on Twitter.

Post Syndicated from The History Guy: History Deserves to Be Remembered original https://www.youtube.com/watch?v=CFivx5bgfJY

Post Syndicated from Harley Geiger original https://blog.rapid7.com/2021/08/31/cybersecurity-in-the-infrastructure-bill/

On August 10, 2021, the U.S. Senate passed the Infrastructure Investment and Jobs Act of 2021 (H.R.3684). The bill comes in at 2,700+ pages, provides for $1.2T in spending, and includes several cybersecurity items. We expect this legislation to become law around late September and do not expect significant changes to the content. This post provides highlights on cybersecurity from the legislation.

(Check out our joint letter calling for cybersecurity in infrastructure legislation here.)

Cybersecurity is essential to ensure modern infrastructure is safe, and Rapid7 commends Congress and the Administration for including cybersecurity in the Infrastructure Investment and Jobs Act. Rapid7 led industry calls to include cybersecurity in the bill, and we are encouraged that several priorities identified by industry are reflected in the text, such as cybersecurity-specific funding for state and local governments and the electrical grid.

On the other hand, cybersecurity will be competing with natural disasters and extreme weather for funding in many (not all) grants created under the bill. In addition, not all critical infrastructure sectors receive cybersecurity resources through the legislation, with healthcare being a notable exclusion. Congress should address these gaps in the upcoming budget reconciliation package.

Below is a brief-ish summary of cybersecurity-related items in the bill. The infrastructure sectors with the most allocations appear to be energy, water, transportation, and state and local governments. Many of these funding opportunities take the form of federal grants for infrastructure resilience, which includes cybersecurity as well as natural hazards. Other funds are dedicated solely to cybersecurity.

Please note that this list aims to include major infrastructure cybersecurity funding items, but is not comprehensive. (For example, the bill also provides funding for the National Cyber Director.) Citations to the Senate-passed legislation are included.

State and local governments: $1B over 4 years for the State, Local, Tribal, and Territorial (SLTT) Grant Program. This new grant program will help SLTT governments to develop or implement cybersecurity plans. FEMA will administer the program. This is also known as The State and Local Cybersecurity Improvement Act. [Sec. 70611]

Energy: $250M over five years for the Rural and Municipal Utility Advanced Cybersecurity Grant and Technological Assistance Program. The Department of Energy (DOE) must create a new program to provide grants and technical assistance to improve electric utilities’ ability to detect, respond to, and recover from cybersecurity threats. [Sec. 40124]

Energy: Enhanced grid security. The DOE must create a program to develop advanced cybersecurity applications and technologies for the energy sector, among other things. Over a period of five years, this section authorizes $250M for the Cybersecurity for the Energy Sector RD&D program, $50M for the Energy Sector Operational Support for Cyberresilience Program, and $50M for Modeling and Assessing Energy Infrastructure Risk. [Sec. 40125]

Energy: State energy security plans. This creates federal financial and technical assistance for states to develop or implement an energy security plan that secures state energy infrastructure against cybersecurity threats, among other things. [Sec. 40108]

Water: $250M over 5 years for the Midsize and Large Drinking Water System Infrastructure Resilience and Sustainability Program. This creates a new grant program to assist midsize and large drinking water systems with increasing resilience to cybersecurity vulnerabilities, as well as natural hazards. [Sec. 50107]

Water: $175M over five years for technical assistance and grants for emergencies affecting public water systems. This extends an expired fund to help mitigate threats and emergencies to drinking water. This includes, among other things, emergency situations caused by a cybersecurity incident. [Sec. 50101]

Water: $25M over five years for the Clean Water Infrastructure Resiliency and Sustainability Program. This creates a new program providing grants to owners/operators of publicly owned treatment works to increase the resiliency of water systems against cybersecurity vulnerabilities, as well as natural hazards. [Sec. 50205]

Transportation: Cybersecurity eligible for National Highway Performance Program (NHPP). This expands on the existing NHPP grant program to allow states to use funds for resiliency of the National Highway System. "Resiliency" includes cybersecurity, as well as natural hazards. [Sec. 11105]

Transportation: Cybersecurity eligible for Surface Transportation Block Grant Program. This expands the existing grant program to allow funding measures to protect transportation facilities from cybersecurity threats, among other things. [Sec. 11109]

General: $100M over five years for the Cyber Response and Recovery Fund. This creates a fund for CISA to provide direct support to public or private entities that respond and recover from cyberattacks and breaches designated as a “significant incident.” The support can include technical assistance and response activities, such as vulnerability assessment, threat detection, network protection, and more. The program ends in 2028. [Sec. 70602, Div. J]

These cybersecurity items are significant down payments to safeguard the nation’s investment in infrastructure modernization. Combined with the recent Executive Order and memorandum on industrial control systems security, the Biden Administration is demonstrating that cybersecurity is a high priority.

However, more work must be done to address cybersecurity weaknesses in critical infrastructure. While the Infrastructure Investment and Jobs Act provides cybersecurity resources for some sectors, most of the 16 critical infrastructure sectors are excluded. Healthcare is an especially notable example, as the sector faces a serious ransomware problem in the middle of a deadly pandemic.

Congress is now preparing a larger budget reconciliation bill, to be advanced at roughly the same time as the infrastructure legislation. We encourage Congress and the Administration to take this opportunity to boost cybersecurity for other sectors, especially healthcare. As with the infrastructure bill, we suggest providing grants dedicated to cybersecurity, and requiring that grant funds be used to adopt or implement standards-based security safeguards and risk management practices.

Congress’ activity during the COVID-19 crisis continues to be punctuated by large, ambitious bills. To secure the modern economy and essential services, we hope the Infrastructure Investment and Jobs Act sets a precedent that sound cybersecurity policies will be integrated into transformative legislation to come.

Post Syndicated from Sam Palani original https://aws.amazon.com/blogs/architecture/field-notes-how-to-boost-your-search-results-using-amazon-kendra-relevance-tuning/

One challenge enterprises face when they implement an intelligent search solution for their large data sources, is the ability to quickly provide relevant search results. When working with large data sources, not all features or attributes within your data will be equally relevant to all your users. We want to prioritize identifying and boosting specific attributes for your users to provide the most relevant search results.

Relevance in Amazon Kendra tuning allows you to give a boost to a result in the response when the query includes terms that match the attribute. For example, you might have two similar documents but one is created more recently. A good practice is to boost the relevance for the newer (or earlier) document.

Relevance tuning in Amazon Kendra can be performed manually at the Index level or at the query level. In this blog post, we show how to tune an existing index that is connected to external data sources, and ultimately optimize your internal search results.

We will walk through how you can manually tune your index using boosting techniques to achieve the best results. This enables you to prioritize the results from a specific data source so your users get the most relevant results when they perform searches.

Figure 1. Amazon Kendra setup

Figure 1 illustrates a standard Amazon Kendra setup. An Amazon Kendra index is connected to different Amazon Simple Storage Service (Amazon S3) buckets with multiple data sources.

There are two types of user personas. The first persona is administrators who are responsible for managing the index and performing administrative tasks such as access control, index tuning, and so forth. The second persona is users who access Amazon Kendra either directly or through a custom application that can make API search requests on an Amazon Kendra index. You can use relevance tuning to boost results from one of these data sources to provide a more relevant search result.

This solution requires the following:

If you do not have these prerequisites set up, you might check out Create and query an index that walks you through how to create and query an index in Amazon Kendra.

Furthermore, the AWS services you use in this tutorial are within the AWS Free Tier under a 30-day trial.

First, review your facet definition and confirm it is facetable, displayable, searchable, and sortable.

In the Amazon Kendra console, select your Amazon Kendra index, then select Facet definition in the Data management panel. Confirm that _data_source_id has all of its attributes checked.

Figure 2. Check facet definition

Next, verify that you have at least two data sources for your Amazon Kendra index.

In the Amazon Kendra console, select your Amazon Kendra index, and then select Data sources in the Data management panel. Confirm that your data sources are correctly synced and available. In our example, data-source-2 is an earlier version and contains unprocessed documents compared to sample-datasource that has newer versions and has more relevant content.

Figure 3. Verify data sources

Next, we will test a regular search without any relevance tuning. Select Search console, and enter the search term Amazon Kendra VPC. Review your search results.

Figure 4. Regular Amazon Kendra search

In our example search results, the document from the second data source 39_kendra-dg_kendra-dg appears as the third result.

Now we will boost the first data source so documents from the first data source are displayed ahead of the other data sources.

Select data source, and boost the first data source sample-datasource to 8. Press the Save button to save your tuning. Wait several seconds for the changes to propagate.

Figure 5. Boost sample-datasource

Next, we will test the search with relevance tuning applied. In the search text box enter the search term Amazon Kendra VPC. Review your search results.

Figure 6. Searching after boosting

Notice that the search result no longer contains the document from the second data source.

To avoid incurring any future charges, remove any index created specifically for this tutorial. In the Amazon Kendra console, select your index. Then select Actions, and choose Delete.

Figure 7. Delete index

In this blog post, we showed you how relevance tuning can be used to produce results ranked by their relevance. We also walked you through an example regarding how to manually perform relevance tuning at the index level in Amazon Kendra to boost your search results.

In addition to relevance tuning at the index level, you can also perform relevance tuning at the query level. Finally, check out the What is Amazon Kendra? and Relevance tuning with Amazon Kendra blog posts to learn more.

Post Syndicated from original https://lwn.net/Articles/867919/rss

The 5.15 merge window is off to a fast start; stay tuned for our usual full

summary. It is worth mentioning, though, that the realtime preemption

locking code has been pulled into the

mainline with little fanfare. This

work began in 2004 and has fundamentally

changed many parts of the core kernel. With this pull, the sleepable locks

that make deterministic realtime response possible have finally joined all

of that other work (though the kernel must be built with the

REALTIME configuration option to use them).

Congratulations are due to all of the realtime developers who pushed this

project forward for nearly two decades.

Post Syndicated from original https://lwn.net/Articles/867917/rss

Security updates have been issued by CentOS (libsndfile and libX11), Debian (ledgersmb, libssh, and postgresql-9.6), Fedora (squashfs-tools), openSUSE (389-ds, nodejs12, php7, spectre-meltdown-checker, and thunderbird), Oracle (kernel, libsndfile, and libX11), Red Hat (bind, cloud-init, edk2, glibc, hivex, kernel, kernel-rt, kpatch-patch, microcode_ctl, python3, and sssd), SUSE (bind, mysql-connector-java, nodejs12, sssd, and thunderbird), and Ubuntu (apr, squashfs-tools, thunderbird, and uwsgi).

Post Syndicated from Julian Wood original https://aws.amazon.com/blogs/compute/building-well-architected-serverless-applications-optimizing-application-performance-part-3/

This series of blog posts uses the AWS Well-Architected Tool with the Serverless Lens to help customers build and operate applications using best practices. In each post, I address the serverless-specific questions identified by the Serverless Lens along with the recommended best practices. See the introduction post for a table of contents and explanation of the example application.

This post continues part 2 of this security question. Previously, I look at designing your function to take advantage of concurrency via asynchronous and stream-based invocations. I cover measuring, evaluating, and selecting optimal capacity units.

Consider using native integrations between managed services as opposed to AWS Lambda functions when no custom logic or data transformation is required. This can enable optimal performance, requires less resources to manage, and increases security. There are also a number of AWS application integration services that enable communication between decoupled components with microservices.

When using Amazon API Gateway APIs, you can use the AWS integration type to connect to other AWS services natively. With this integration type, API Gateway uses Apache Velocity Template Language (VTL) and HTTPS to directly integrate with other AWS services.

Timeouts and errors must be managed by the API consumer. For more information on using VTL, see “Amazon API Gateway Apache Velocity Template Reference”. For an example application that uses API Gateway to read and write directly to/from Amazon DynamoDB, see “Building a serverless URL shortener app without AWS Lambda”.

There is also a tutorial available, Build an API Gateway REST API with AWS integration.

When using AWS AppSync, you can use VTL, direct integration with Amazon Aurora, Amazon Elasticsearch Service, and any publicly available HTTP endpoint. AWS AppSync can use multiple integration types and can maximize throughput at the data field level. For example, you can run full-text searches on the orderDescription field against Elasticsearch while fetching the remaining data from DynamoDB. For more information, see the AWS AppSync resolver tutorials.

In the serverless airline example used in this series, the catalog service uses AWS AppSync to provide a GraphQL API for searching flights. AWS AppSync uses DynamoDB as a database, and all compute logic is contained in the Apache Velocity Template (VTL).

AWS Step Functions integrates with multiple AWS services using service Integrations. For example, this allows you to fetch and put data into DynamoDB, or run an AWS Batch job. You can also publish messages to Amazon Simple Notification Service (SNS) topics, and send messages to Amazon Simple Queue Service (SQS) queues. For more details on the available integrations, see “Using AWS Step Functions with other services”.

Using Amazon EventBridge, you can connect your applications with data from a variety of sources. You can connect to various AWS services natively, and act as an event bus across multiple AWS accounts to ease integration. You can also use the API destination feature to route events to services outside of AWS. EventBridge handles the authentication, retries, and throughput. For more details on available EventBridge targets, see the documentation.

Consider caching when clients may not require up to date data. Optimize access patterns to only fetch data that is necessary to end users. This improves the overall responsiveness of your workload and makes more efficient use of compute and data resources across components.

For REST APIs, you can use API Gateway caching to reduce the number of calls made to your endpoint and also improve the latency of requests to your API. When you enable caching for a stage or method, API Gateway caches responses for a specified time-to-live (TTL) period. API Gateway then responds to the request by looking up the endpoint response from the cache, instead of making a request to your endpoint.

For more information, see “Enabling API caching to enhance responsiveness”.