
Protect public clients for Amazon Cognito by using an Amazon CloudFront proxy

Post Syndicated from Mahmoud Matouk original https://aws.amazon.com/blogs/security/protect-public-clients-for-amazon-cognito-by-using-an-amazon-cloudfront-proxy/

In Amazon Cognito user pools, an app client is an entity that has permission to call unauthenticated API operations (that is, operations that don’t have an authenticated user), such as operations to sign up, sign in, and handle forgotten passwords. In this post, I show you a solution designed to protect these API operations from unwanted bots and distributed denial of service (DDoS) attacks.

To protect Amazon Cognito services and customers, Amazon Cognito applies request rate quotas on all API categories, and throttles rapid calls that exceed the assigned quota. For that reason, you must ensure your applications control who can call unauthenticated API operations and at what rate, so that user calls aren’t throttled because of unwanted or misconfigured clients that call these API operations at high rates.

App clients fall into one of two categories: public clients (used from web or mobile applications) and private or confidential clients (used from a secured backend). Public clients shouldn’t have secrets, because it isn’t possible to protect secrets in these types of clients. Confidential clients, on the other hand, use a secret to authorize calls to unauthenticated operations. In these clients, the secret can be protected in the backend.

The benefit of using a confidential app client with a secret in Amazon Cognito is that unauthenticated API operations will accept only the calls that include the secret hash for this client, and will drop calls with an invalid or missing secret. In this way, you control who calls these API operations. Public applications can use a confidential app client by implementing a lightweight proxy layer in front of the Amazon Cognito endpoint, and then using this proxy to add a secret hash in relevant requests before passing the requests to Amazon Cognito.

There are multiple options that you can use to implement this proxy. One option is to use Amazon CloudFront and Lambda@Edge to add the secret hash to the incoming requests. When you use a CloudFront proxy, you can also use AWS WAF, which gives you tools to detect and block unwanted clients. From Lambda@Edge, you can also integrate with other services (like Amazon Fraud Detector or third-party bot detection services) to help you detect possible fraudulent requests and block them. The CloudFront proxy, with the right set of security tools, helps protect your Amazon Cognito user pool from unwanted clients.

Solution overview

To implement this lightweight proxy pattern, you need to create an application client with a secret. Unauthenticated API calls to this client must include the secret hash which is added to the request from the proxy layer. Client applications use an SDK like AWS Amplify, the Amazon Cognito Identity SDK, or a mobile SDK to communicate with Amazon Cognito. By default, the SDK sends requests to the Regional Amazon Cognito endpoint. Your application must override the default endpoint by manually adding an “Endpoint” property in the app configuration. See the Integrate the client application with the proxy section later in this post for more details.

Figure 1 shows how this works, step by step.

Figure 1: A proxy solution to the Amazon Cognito Regional endpoint

The workflow is as follows:

- You configure the client application (mobile or web client) to use a CloudFront endpoint as a proxy to an Amazon Cognito Regional endpoint. You also create an application client in Amazon Cognito with a secret. This means that any unauthenticated API call must have the secret hash.

- Clients that send unauthenticated API calls to the Amazon Cognito endpoint directly are blocked and dropped because of the missing secret.

- You use Lambda@Edge to add a secret hash to the relevant incoming requests before passing them on to the Amazon Cognito endpoint.

- Lambda@Edge needs the app client secret to calculate the secret hash and add it to the request. It’s recommended that you keep the secret in AWS Secrets Manager and cache it for the lifetime of the function.

- You use AWS WAF with the CloudFront distribution to enforce rate limiting, allow and deny lists, and other rule groups according to your security requirements.
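The caching recommendation in the workflow above can be sketched in Python as follows. This is a minimal illustration, not the solution's actual function code: `fetch_secret` is a hypothetical callable that stands in for a Secrets Manager lookup, and `fake_fetch` exists only to show that the lookup happens once.

```python
from typing import Callable, Optional

# Module-level cache: it survives across invocations while the execution
# environment stays warm, so the secret store is queried only on a cold start.
_cached_secret: Optional[str] = None


def get_client_secret(fetch_secret: Callable[[], str]) -> str:
    """Return the app client secret, fetching it at most once per environment."""
    global _cached_secret
    if _cached_secret is None:
        _cached_secret = fetch_secret()
    return _cached_secret


# Stand-in fetcher (hypothetical) that counts how often it is called.
calls = {"count": 0}


def fake_fetch() -> str:
    calls["count"] += 1
    return "example-secret"


first = get_client_secret(fake_fetch)
second = get_client_secret(fake_fetch)
```

In a deployed function, the fetcher would wrap a real Secrets Manager call, and the cached value is lost whenever the execution environment is recycled.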

When to use this pattern

It’s a best practice to use this proxy pattern with clients that use SDKs to integrate with Amazon Cognito user pools. Examples include mobile applications that use the iOS or Android SDK, or web applications that use client-side libraries like Amplify or the Amazon Cognito Identity SDK to integrate with Amazon Cognito.

You don’t need to use a proxy pattern with server-side applications that use an AWS SDK to integrate with Amazon Cognito user pools from a protected backend, because server-side applications can natively use confidential clients and protect the secret in the backend.

You can’t use this solution with applications that use Hosted UI and OAuth 2.0 endpoints to integrate with Amazon Cognito user pools. This includes federation scenarios where users sign in with an external identity provider (IdP).

Implementation and deployment details

Before you deploy this solution, you need a user pool and an application client that has the client secret. When you have these in place, launch the solution’s CloudFormation stack in your account to deploy the proxy solution.

Note: The CloudFormation stack must be created in the us-east-1 AWS Region, but the user pool itself can exist in any supported Region.

The template takes the parameters shown in Figure 2 below.

Figure 2: CloudFormation stack creation with initial parameters

The parameters in Figure 2 include:

- AdvancedSecurityEnabled is a flag that indicates whether advanced security is enabled in the user pool or not. This flag determines which version of the Lambda function is deployed. Notice that if you change this flag as part of a stack update, it overrides the function code, so if you have any manual changes, make sure to back up your changes.

- AppClientSecret is the secret for your application client. This secret is stored in Secrets Manager and accessed from Lambda@Edge as needed.

- LambdaS3BucketName is the bucket that hosts the Lambda code package. You don’t need to change this parameter unless you have a requirement to modify or extend the solution with your own Lambda function.

- RateLimit is the maximum number of calls from a single IP address that are allowed within a 5-minute period. Values between 100 requests and 20 million requests are valid for RateLimit.

- UserPoolId is the ID of your user pool. This value is used by Lambda@Edge when needed (for example, to call admin APIs, which require the user pool ID).

- UserPoolRegion is the AWS Region where you created your user pool. This value is used to determine which Amazon Cognito Regional endpoint to proxy the calls to.

Important: Provide a RateLimit value that is suitable for your application and security requirements.

This template creates several resources in your AWS account, as follows:

- A CloudFront distribution that serves as a proxy to an Amazon Cognito Regional endpoint.

- An AWS WAF web access control list (ACL) with rules for the allow list, deny list, and rate limit.

- A Lambda function to be deployed at the edge and assigned to the origin request event.

- A secret in Secrets Manager, to hold the values of the application client secret and user pool ID.

After you create the stack, the CloudFront distribution domain name is available on the Outputs tab in the CloudFormation console, as shown in Figure 3. This is the value that’s used as the Endpoint property in your client-side application. You can optionally add an alternative domain name to the CloudFront distribution if you prefer to use your own custom domain.

Figure 3: The output of the CloudFormation stack creation, displaying the CloudFront domain name

Use Lambda@Edge to add a secret hash to the request

As explained earlier, the purpose of having this proxy is to be able to inject the secret hash in unauthenticated API calls before passing them to the Amazon Cognito endpoint. This injection is achieved by a Lambda function that intercepts incoming requests at the edge (the CloudFront distribution) before passing them to the origin (the Amazon Cognito Regional endpoint).
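Per the Amazon Cognito documentation, the secret hash is a Base64-encoded HMAC-SHA256 of the user name concatenated with the app client ID, keyed with the app client secret. The following is a minimal Python sketch of that calculation. The deployed function's code may differ, and the helper names and sample values here are illustrative only.

```python
import base64
import hashlib
import hmac


def secret_hash(username: str, client_id: str, client_secret: str) -> str:
    """Compute Base64(HMAC-SHA256(key=client_secret, msg=username + client_id))."""
    message = (username + client_id).encode("utf-8")
    key = client_secret.encode("utf-8")
    digest = hmac.new(key, message, hashlib.sha256).digest()
    return base64.b64encode(digest).decode("ascii")


def add_secret_hash(body: dict, client_secret: str) -> dict:
    """Return a copy of a SignUp-style request body with SecretHash added."""
    updated = dict(body)
    updated["SecretHash"] = secret_hash(body["Username"], body["ClientId"], client_secret)
    return updated


# Illustrative values only; a real proxy reads these from the incoming request.
request = {"ClientId": "example-client-id", "Username": "jane", "Password": "example-password"}
signed = add_secret_hash(request, "example-client-secret")
```

This sketch covers operations such as SignUp, where the hash is a top-level SecretHash field. For InitiateAuth, the same value goes into AuthParameters as SECRET_HASH.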

The Lambda function that is deployed to the edge has two versions. One is a simple pass-through proxy that only adds the secret hash, and this version is used if Amazon Cognito advanced security isn’t enabled. The other version is a proxy that uses the AdminInitiateAuth and AdminRespondToAuthChallenge API operations instead of unauthenticated API operations for the user authentication and challenge response. This allows the proxy layer to propagate the client IP address to the Amazon Cognito endpoint, which guides the adaptive authentication features of advanced security. The version that is deployed by the stack is determined by the AdvancedSecurityEnabled flag when you create or update the CloudFormation stack.

You can extend this solution by manually modifying the Lambda function with your own processing logic. For example, you can integrate with fraud detection or bot detection services to evaluate the request and decide to proceed or reject the call. Note that after making any change to the Lambda function code, you must deploy a new version to the edge location. To do that from the Lambda console, navigate to Actions, choose Deploy to Lambda@Edge, and then choose Use existing CloudFront trigger on this function.

Important: If you update the stack from CloudFormation and change the value of the AdvancedSecurityEnabled flag, the new value overrides the Lambda code with the default version for the choice. In that case, all manual changes are lost.

Allow or block requests

The template that is provided in this blog post creates a web ACL with three rules: AllowList, DenyList, and RateLimit. These rules are evaluated in order and determine which requests are allowed or blocked. The template also creates four IP sets, as shown in Figure 4, to hold the values of allowed or blocked IPs for both IPv4 and IPv6 address types.

Figure 4: The CloudFormation template creates IP sets in the AWS WAF console for allow and deny lists

If you want to always allow requests from certain clients, for example, trusted enterprise clients or server-side clients in cases where a large volume of requests is coming from the same IP address like a VPN gateway, add these IP addresses to the corresponding AllowList IP set. Similarly, if you want to always block traffic from certain IPs, add those IPs to the corresponding DenyList IP set.

Requests from sources that aren’t on the allow list or deny list are evaluated based on the volume of calls within 5 minutes, and sources that exceed the defined rate limit within 5 minutes are automatically blocked. If you want to change the defined rate limit, you can do so by updating the CloudFormation stack and providing a different value for the RateLimit parameter. Or you can modify this value directly in the AWS WAF console by editing the RateLimit rule.
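To make the evaluation order concrete, here is a toy Python model of the three rules. It is a simplified simulation for illustration, not how AWS WAF is implemented: the class name, the in-memory sliding window, and the sample IP addresses are all assumptions.

```python
from collections import defaultdict, deque

WINDOW_SECONDS = 300  # the 5-minute window described above


class ProxyRules:
    """Toy model of the AllowList -> DenyList -> RateLimit evaluation order."""

    def __init__(self, rate_limit, allow_list=(), deny_list=()):
        self.rate_limit = rate_limit
        self.allow_list = set(allow_list)
        self.deny_list = set(deny_list)
        self._hits = defaultdict(deque)  # IP -> timestamps of recent requests

    def evaluate(self, ip, now):
        # Rule 1: allow-listed sources are always allowed.
        if ip in self.allow_list:
            return "ALLOW"
        # Rule 2: deny-listed sources are always blocked.
        if ip in self.deny_list:
            return "BLOCK"
        # Rule 3: everything else is counted over the trailing window.
        hits = self._hits[ip]
        while hits and hits[0] <= now - WINDOW_SECONDS:
            hits.popleft()
        hits.append(now)
        return "BLOCK" if len(hits) > self.rate_limit else "ALLOW"


rules = ProxyRules(rate_limit=3, allow_list={"198.51.100.7"}, deny_list={"198.51.100.9"})
decisions = [rules.evaluate("203.0.113.5", t) for t in (0, 1, 2, 3)]
```

With a limit of 3, the fourth request inside the window is blocked, and the source is allowed again once its earlier requests age out of the window.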

Note: You can also use AWS Managed Rules for AWS WAF to add additional protection according to your security needs.

Integrate the client application with the proxy

You can integrate the client application with the proxy by changing the Endpoint in your client application to use the CloudFront distribution domain name. The domain name is located in the Outputs section of the CloudFormation stack.

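You then need to edit your client-side code to forward calls to Amazon Cognito through the proxy endpoint. For example, if you’re using the Identity SDK, set the endpoint property of the pool data object that you pass to CognitoUserPool to the proxy URL (https:// followed by the CloudFront distribution domain name).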

If you’re using AWS Amplify, you can change the endpoint in the aws-exports.js file by overriding the property aws_cognito_endpoint. Or, if you configure Amplify Auth in your code, you can provide the same URL as the endpoint property of the Auth configuration that you pass to Amplify.configure.

If you have a mobile application that uses the Amplify mobile SDK, you can override the endpoint by adding an Endpoint property to the user pool section of your configuration (don’t include the AppClientSecret parameter in your configuration). Note that the Endpoint value contains the domain name only, not the full URL. This feature is available in the latest releases of the iOS and Android SDKs.

Warning: The Amplify CLI overwrites customizations to the awsconfiguration.json and amplifyconfiguration.json files if you do an amplify push or amplify pull operation. You must manually re-apply the Endpoint customization and remove the AppClientSecret if you use the CLI to modify your cloud backend.
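Because the customization has to be re-applied after each CLI push or pull, a small script can help. The following Python sketch assumes the awsconfiguration.json layout in which the user pool settings sit under CognitoUserPool and Default. In amplifyconfiguration.json the same block is nested more deeply, so the path would need adjusting. The function name and sample values are hypothetical.

```python
import json


def apply_proxy_endpoint(config: dict, proxy_domain: str) -> dict:
    """Return a copy of the config with Endpoint set and AppClientSecret removed."""
    patched = json.loads(json.dumps(config))  # deep copy of JSON-compatible data
    pool = patched["CognitoUserPool"]["Default"]  # assumed layout, see note above
    pool["Endpoint"] = proxy_domain  # domain name only, not a full URL
    pool.pop("AppClientSecret", None)  # the secret must not ship with the app
    return patched


# Illustrative configuration as the CLI might regenerate it.
example = {
    "CognitoUserPool": {
        "Default": {
            "PoolId": "us-east-1_EXAMPLE",
            "AppClientId": "example-client-id",
            "AppClientSecret": "should-not-ship",
            "Region": "us-east-1",
        }
    }
}
patched = apply_proxy_endpoint(example, "d111111abcdef8.cloudfront.net")
print(json.dumps(patched, indent=2))
```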

Solution limitations

This solution has these limitations:

- If advanced security features are enabled for the user pool, Amazon Cognito calculates risk for user events. If you use this proxy pattern, the IP address that is propagated in user events is the proxy IP address, which causes risk calculation for SignUp, ForgotPassword, and ResendCode events to be inaccurate. On the other hand, Sign-In events still have the client IP address propagated correctly, and risk calculation and adaptive authentication for Sign-In events aren’t affected by the use of this proxy.

- This solution is not applicable to Hosted UI, OAuth 2.0 endpoints, and federation flows.

- Authenticated and admin API operations (which require developer credentials or an access token) aren’t covered in this solution. These API operations don’t require a secret hash, and they use other authentication mechanisms.

- Using this proxy solution with mobile apps requires an update to the application. The update might take time to be available in the relevant app store, and you must depend on end users to update their app. Plan ahead of time to use the solution with mobile apps.

How to detect unusual behavior

In this section, I share with you the steps to detect, quickly analyze and respond to unwanted clients. It’s a best practice to configure monitoring and alarms that help you to detect unexpected spikes in activity. Additionally, I show you how to be ready to quickly identify clients that are calling your resources at a higher-than-usual rate.

Monitor utilization compared to quotas

Amazon Cognito integrates with Service Quotas, which monitors service utilization compared to quotas. These metrics help you detect unexpected spikes and be alerted if you’re approaching your quota for a certain API category. Approaching your quota indicates that there is a risk that calls from legitimate users will be throttled.

To view utilization versus quota metrics

- In the Service Quotas console, choose Service Quotas, choose AWS Services, and then choose Amazon Cognito User Pools.

- Under Service quotas, enter the search term rate of. This shows you the list of API categories and the assigned quotas for each category.

Figure 5: The Service Quotas console showing Amazon Cognito API category rate quotas

- Choose any of the API categories to see utilization versus quota metrics.

Figure 6: The Service Quotas console showing utilization vs quota metrics for Amazon Cognito UserCreation APIs

- You can also create alarms from this page to alert you if utilization is above a pre-defined threshold. You can create alarms starting at 50 percent utilization. It’s recommended that you create multiple alarms, for example at the 50 percent, 70 percent, and 90 percent thresholds, and configure CloudWatch alarms as appropriate.

Figure 7: Creating an alarm for the utilization of the UserCreation API category
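The arithmetic behind tiered alarms is simple. The following Python sketch shows which of the suggested thresholds a given call rate would breach. The function names and the sample quota of 50 requests per second are assumptions for illustration, not values from your account.

```python
ALARM_THRESHOLDS = (50, 70, 90)  # percent of quota, as suggested above


def utilization_percent(observed_rate: float, quota: float) -> float:
    """Utilization of an API category quota, as a percentage."""
    if quota <= 0:
        raise ValueError("quota must be positive")
    return 100.0 * observed_rate / quota


def breached_thresholds(observed_rate: float, quota: float, thresholds=ALARM_THRESHOLDS):
    """Return every alarm threshold that the current utilization meets or exceeds."""
    percent = utilization_percent(observed_rate, quota)
    return [t for t in thresholds if percent >= t]


# 36 requests per second against a hypothetical quota of 50 is 72 percent.
breached = breached_thresholds(observed_rate=36, quota=50)
```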

Analyze CloudTrail logs with Athena

If you detect an unexpected spike in traffic to a certain API category, the next step is to identify the sources of this spike. You can do that by using CloudTrail logs or, after you deploy and use this proxy solution, CloudFront logs as sources of information. You can then analyze these logs by using Amazon Athena queries.

The first step is to create Athena tables from CloudTrail and CloudFront logs. You can do that by following these steps for CloudTrail and similar steps for CloudFront. After you have these tables created, you can create a set of queries that help you identify unwanted clients. Here are a couple of examples:

- Use the following query to identify clients with the highest call rate to the InitiateAuth API operation within the timeframe you noticed the spike (change the eventtime value to reflect the attack window).

- Use the following query to identify clients that come through CloudFront with the highest error rate.
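Queries of this kind can be sketched as follows, here built as strings in Python so the time window is easy to change. The table names (cloudtrail_logs, cloudfront_logs) and the CloudFront column names (request_ip, status) are assumptions based on the example table definitions in the AWS documentation, so adjust them to match the tables you created. The inputs are interpolated directly, so pass only trusted constants.

```python
def top_callers_query(event_name: str, start: str, end: str,
                      table: str = "cloudtrail_logs", limit: int = 10) -> str:
    """Build a query that ranks source IPs by calls to one API operation."""
    return (
        "SELECT sourceipaddress, count(*) AS calls\n"
        f"FROM {table}\n"
        f"WHERE eventname = '{event_name}'\n"
        f"  AND eventtime >= '{start}' AND eventtime < '{end}'\n"
        "GROUP BY sourceipaddress\n"
        "ORDER BY calls DESC\n"
        f"LIMIT {limit}"
    )


def top_error_sources_query(table: str = "cloudfront_logs", limit: int = 10) -> str:
    """Build a query that ranks client IPs by CloudFront error responses."""
    return (
        "SELECT request_ip, count(*) AS errors\n"
        f"FROM {table}\n"
        "WHERE status >= 400\n"
        "GROUP BY request_ip\n"
        "ORDER BY errors DESC\n"
        f"LIMIT {limit}"
    )


# Example window; replace it with the period in which you noticed the spike.
initiate_auth_sql = top_callers_query(
    "InitiateAuth", "2021-03-01T00:00:00Z", "2021-03-02T00:00:00Z"
)
error_sql = top_error_sources_query()
print(initiate_auth_sql)
print(error_sql)
```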

After you identify sources that are calling your service with a higher-than-usual rate, you can block these clients by adding them to the DenyList IP set that was created in AWS WAF.

Analyze CloudTrail events with CloudWatch Logs Insights

It’s a best practice to configure your trail to send events to CloudWatch Logs. After you do this, you can interactively search and analyze your Amazon Cognito CloudTrail events with CloudWatch Logs Insights to identify errors, unusual activity, or unusual user behavior in your account.

Conclusion

In this post, I showed you how to implement a lightweight proxy to an Amazon Cognito endpoint, which can be used with an application client secret to control access to unauthenticated API operations. This approach, together with security tools such as AWS WAF, helps provide protection for these API operations from unwanted clients. I also showed you strategies to help detect an ongoing attack and quickly analyze, identify, and block unwanted clients.

For more strategies for DDoS mitigation, see the AWS Best Practices for DDoS Resiliency.


Why the Robot Hackers Aren’t Here (Yet)

Post Syndicated from Erick Galinkin original https://blog.rapid7.com/2021/07/14/why-the-robot-hackers-arent-here-yet/

“Estragon: I’m like that. Either I forget right away or I never forget.” – Samuel Beckett, Waiting for Godot

Hacking and Automation

As hackers, we spend a lot of time making things easier for ourselves.

For example, you might be aware of a tool called Metasploit, which can be used to make getting into a target easier. We’ve also built internet-scale scanning tools, allowing us to easily view data about open ports across the internet. Some of our less ethical comrades-in-arms build worms and botnets to automate the process of doing whatever they want to do.

If the heart of hacking is making things do what they shouldn’t, then perhaps the lungs are automation.

Over the years, we’ve seen security in general and vulnerability discovery in particular move from a risky, shady business to massive corporate-sponsored activities with open marketplaces for bug bounties. We’ve also seen a concomitant improvement in the techniques of hacking.

If hackers had known in 1996 that we’d go from stack-based buffer overflows to chaining ROP gadgets, perhaps we’d have asserted “no free bugs” earlier on. This maturity has allowed us to find a number of bugs that would have been unbelievable in the early 2000s, and exploits for those bugs are quickly packaged into tools like Metasploit.

Now that we’ve automated the process of running our exploits once they’ve been written, why is it so hard to get machines to find the bugs for us?

This is, of course, not for lack of trying. Fuzzing is a powerful technique that turns up a ton of bugs in an automated way. In fact, fuzzing is powerful enough that loads of folks turn up 0-days while they’re learning how to do fuzzing!

However, the trouble with fuzzing is that you never know what you’re going to turn up, and once you get a crash, there is a lot of work left to understand how and why the crash occurred. That’s on top of all the work needed to craft a reliable exploit.
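For readers who haven't seen one, here is a deliberately tiny mutation fuzzer in Python. It is a sketch against a made-up target: `parse_record` is a hypothetical parser with a planted bug, not real-world code. It does show the basic loop of mutate, run, and record crashes.

```python
import random


def parse_record(data: bytes) -> bytes:
    """Toy target: the first byte is a length, the rest is the payload."""
    length = data[0]
    payload = data[1:]
    # Planted bug: the declared length is trusted, so a too-large value
    # indexes past the end of the payload and raises IndexError.
    return bytes(payload[i] for i in range(length))


def mutate(seed: bytes, rng: random.Random) -> bytes:
    """Overwrite one randomly chosen byte with a random value."""
    data = bytearray(seed)
    data[rng.randrange(len(data))] = rng.randrange(256)
    return bytes(data)


def fuzz(seed: bytes, iterations: int, rng: random.Random) -> list:
    """Run mutated inputs through the target and collect the ones that crash."""
    crashes = []
    for _ in range(iterations):
        candidate = mutate(seed, rng)
        try:
            parse_record(candidate)
        except IndexError:
            crashes.append(candidate)
    return crashes


crashes = fuzz(b"\x04ABCD", 2000, random.Random(0))
print(f"{len(crashes)} crashing inputs found")
```

Every crashing input here has a corrupted length byte. The fuzzer reports that the parser crashed, but working out why, and whether the crash is exploitable, is still left to a human.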

Automated bug finding, like we saw in the DARPA Cyber Grand Challenge, takes this to another level by combining fuzzing and symbolic execution with other program analysis techniques, like reachability and input dependence. But fuzzers and SMT solvers (programs that solve particular types of logic problems) haven’t found all the bugs, so what are we missing?

As with many problems in the last few years, organizations are hoping the answer lies in artificial intelligence and machine learning. The trouble with this hope is that AI is good at some tasks, and bug finding may simply not be one of them — at least not yet.

Learning to Find Bugs

Academic literature is rich with papers aiming to find bugs with machine learning. A quick Google Scholar search turns up over 140,000 articles on the topic as of this writing, and many of these articles seem to promise that, any day now, machine learning algorithms will turn up bugs in your source code.

There are a number of developer tools that suggest this could be true. Tools like Codota, Tabnine, and Kite will help auto-complete your code and are quite good. In fact, Microsoft has used GPT-3 to write code from natural language.

But creating code and finding bugs are sadly entirely different problems. A 2017 paper written by Chappell et al., a collaboration between Australia’s Queensland University of Technology and the technology giant Oracle, found that a variety of machine learning approaches vastly underperformed Oracle’s Parfait system, which uses more traditional symbolic analysis techniques on the intermediate representations used by the compiler.

Another paper, out of the University of Oslo in Norway, simulated SQL injection using Q-learning, a form of reinforcement learning. This paper caused a stir in the MLSec community and especially within the DEF CON AI Village (full disclosure: I am an officer for the AI Village and helped cause the stir). The possibility of using a Roomba-like method to find bugs was deeply enticing, and Erdodi et al. did great work.

However, their method requires a very particular environment, and although the agent learned to exploit the specific simulation, the method does not seem to generalize well. So, what’s a hacker to do?

Blaming Our Boots

“Vladimir: There’s man all over for you, blaming on his boots the faults of his feet.” – Samuel Beckett, Waiting for Godot

One of the fundamental problems with throwing machine learning at security problems is that many ML techniques have been optimized for particular types of data. This is particularly important for deep learning techniques.

Images are tensors — a sort of matrix with not just height and width but also color channels — of rectangular bit maps with a range of possible values for each pixel. Natural language is tokenized, and those tokens are mapped into a word embedding, like GloVe or Word2Vec.

This is not to downplay the tremendous accomplishments of these machine learning techniques but to demonstrate that, in order for us to repurpose them, we must understand why they were built this way.

Unfortunately, the properties we find important for computer vision — shift invariance and orientation invariance — are not properties that are important for tasks like vulnerability detection or malware analysis. There is, likewise, a heavy dependence in log analysis and similar tasks on tokens that are unlikely to be in our vocabulary — oddly encoded strings, weird file names, and unusual commands. This makes these techniques unsuitable for many of our defensive tasks and, for similar reasons, mostly useless for generating net-new exploits.

Why doesn’t this work? A few issues are at play here. First, the machine does not understand what it is learning. Machine learning algorithms are ultimately function approximators — systems that see some inputs and some outputs, and figure out what function generated them.

For example, if our dataset is:

X = {1, 3, 7, 11, 2}

Y = {3, 7, 15, 23, 5}

Our algorithm might see the first input and output: 3 = f(1) and guess that f(x) = 3x.

By the second input, it would probably be able to figure out that y = f(x) = 2x + 1.

By the fifth, there would be a lot of good evidence that f(x) = 2x + 1. But this is a simple linear model, with one weight term and one bias term. Once we have to account for a large number of dimensions and a function that turns a 32 x 32 image with 3 color channels into a label like “cat”, approximating that function becomes much harder.
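The toy dataset above can be fit in a few lines. This Python sketch uses ordinary least squares in closed form, which is one simple way to approximate the function and an assumption on my part rather than a method the text prescribes. Exact fractions are used so the recovered weight and bias are not blurred by floating-point rounding.

```python
from fractions import Fraction


def fit_line(xs, ys):
    """Ordinary least squares fit of y = weight * x + bias."""
    n = len(xs)
    mean_x = Fraction(sum(xs), n)
    mean_y = Fraction(sum(ys), n)
    covariance = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    variance = sum((x - mean_x) ** 2 for x in xs)
    weight = covariance / variance
    bias = mean_y - weight * mean_x
    return weight, bias


X = [1, 3, 7, 11, 2]
Y = [3, 7, 15, 23, 5]
weight, bias = fit_line(X, Y)
print(weight, bias)  # prints "2 1", that is, f(x) = 2x + 1
```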

It stands to reason then that the function which maps a few dozen lines of code that spread across several files into a particular class of vulnerability will be harder still to approximate.

Ultimately, the problem is neither the technology on its own nor the data representation on its own. It is that we are trying to use the data we have to solve a hard problem without addressing the underlying difficulties of that problem.

In our case, the challenge is not identifying vulnerabilities that look like others we’ve found before. The challenge is in capturing the semantic meaning of the code and the code flow at a point, and using that information to generate an output that tells us whether or not a certain condition is met.

This is what SAT solvers are trying to do. It is worth noting then that, from a purely theoretical perspective, this is the Boolean satisfiability problem (SAT), the canonical NP-complete problem. That explains why the problem is so hard: we’re trying to solve one of the most challenging problems in computer science!

Waiting for Godot

The Samuel Beckett play, Waiting for Godot, centers around the characters of Vladimir and Estragon. The two characters are, as the title suggests, waiting for a character named Godot. To spoil a roughly 70-year-old play, I’ll give away the punchline: Godot never comes.

Today, security researchers who are interested in using artificial intelligence and machine learning to move the ball forward are in a similar position. We sit or stand by the leafless tree, waiting for our AI Godot. As with Vladimir and Estragon, our Godot will never come if we simply wait.

If we want to see more automation and applications of machine learning to vulnerability discovery, it will not suffice to repurpose convolutional neural networks, gradient-boosted decision trees, and transformers. Instead, we need to think about the way we represent data and how to capture the relevant details of that data. Then, we need to develop algorithms that can capture, learn, and retain that information.

We cannot wait for Godot — we have to find him ourselves.

Auto scaling Amazon Kinesis Data Streams using Amazon CloudWatch and AWS Lambda

Post Syndicated from Matthew Nolan original https://aws.amazon.com/blogs/big-data/auto-scaling-amazon-kinesis-data-streams-using-amazon-cloudwatch-and-aws-lambda/

This post is co-written with Noah Mundahl, Director of Public Cloud Engineering at UnitedHealth Group.

In this post, we cover a solution to add auto scaling to Amazon Kinesis Data Streams. Whether you have one stream or many streams, you often need to scale them up when traffic increases and scale them down when traffic decreases. Scaling your streams manually can create a lot of operational overhead. If you leave your streams overprovisioned, costs can increase. If you want the best of both worlds (increased throughput and reduced costs), then auto scaling is a great option. This was the case for UnitedHealth Group. Their Director of Public Cloud Engineering, Noah Mundahl, joins us later in this post to talk about how adding this auto scaling solution impacted their business.

Overview of solution

In this post, we showcase a lightweight serverless architecture that can auto scale one or many Kinesis data streams based on throughput. It uses Amazon CloudWatch, Amazon Simple Notification Service (Amazon SNS), and AWS Lambda. A single SNS topic and Lambda function process the scaling of any number of streams. Each stream requires one scale-up and one scale-down CloudWatch alarm. For an architecture that uses Application Auto Scaling, see Scale Amazon Kinesis Data Streams with AWS Application Auto Scaling.

The workflow is as follows:

- Metrics flow from the Kinesis data stream into CloudWatch (bytes/second, records/second).

- Two CloudWatch alarms, scale-up and scale-down, evaluate those metrics and decide when to scale.

- When one of these scaling alarms triggers, it sends a message to the scaling SNS topic.

- The scaling Lambda function processes the SNS message:

- The function scales the data stream up or down using UpdateShardCount:

- Scale-up events double the number of shards in the stream

- Scale-down events halve the number of shards in the stream

- The function updates the metric math on the scale-up and scale-down alarms to reflect the new shard count.


Implementation

The scaling alarms rely on CloudWatch alarm metric math to calculate a stream’s maximum usage factor. This usage factor is a percentage calculation from 0.00–1.00, with 1.00 meaning the stream is 100% utilized in either bytes per second or records per second. We use the usage factor for triggering scale-up and scale-down events. Our alarms use the following usage factor thresholds to trigger scaling events: >= 0.75 for scale-up and < 0.25 for scale-down. We use 5-minute data points (period) on all alarms because they’re more resistant to Kinesis traffic micro spikes.
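The thresholds and the doubling and halving behavior can be sketched in Python as follows. This is an illustration, not the repository's Lambda code. Rounding the halved count up is an assumption, made because UpdateShardCount does not allow a target below half of the current shard count.

```python
SCALE_UP_THRESHOLD = 0.75    # scale up when the usage factor is >= 0.75
SCALE_DOWN_THRESHOLD = 0.25  # scale down when the usage factor is < 0.25


def scaling_action(usage_factor: float) -> str:
    """Map a usage factor to the scaling event it would trigger."""
    if usage_factor >= SCALE_UP_THRESHOLD:
        return "scale-up"
    if usage_factor < SCALE_DOWN_THRESHOLD:
        return "scale-down"
    return "none"


def target_shard_count(current_shards: int, action: str) -> int:
    """Double on scale-up, halve (rounding up, never below 1) on scale-down."""
    if action == "scale-up":
        return current_shards * 2
    if action == "scale-down":
        return max(1, (current_shards + 1) // 2)
    return current_shards


# A stream with 4 shards at 80 percent utilization would go to 8 shards.
new_count = target_shard_count(4, scaling_action(0.80))
```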

Scale-up usage factor

The following screenshot shows the metric math on a scale-up alarm.

The scale-up max usage factor for a stream is calculated as follows:
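As a hedged reconstruction, the usage factor can be derived from the per-shard write limits of Kinesis Data Streams (1 MB per second, taken here as 1,048,576 bytes, and 1,000 records per second). The Python function below is a sketch under those assumptions and is not a copy of the alarm's metric math. The inputs correspond to the Sum of IncomingBytes and IncomingRecords over one alarm period.

```python
BYTES_PER_SHARD_PER_SECOND = 1024 * 1024  # 1 MB/s write limit per shard (assumed as MiB)
RECORDS_PER_SHARD_PER_SECOND = 1000       # 1,000 records/s write limit per shard


def usage_factor(incoming_bytes: float, incoming_records: float,
                 shard_count: int, period_seconds: int = 300) -> float:
    """Max of byte and record utilization over one period, where 1.0 means 100%."""
    byte_capacity = BYTES_PER_SHARD_PER_SECOND * period_seconds * shard_count
    record_capacity = RECORDS_PER_SHARD_PER_SECOND * period_seconds * shard_count
    return max(incoming_bytes / byte_capacity, incoming_records / record_capacity)


# 450,000 records in 5 minutes on 2 shards is 75% of record capacity.
factor = usage_factor(incoming_bytes=0, incoming_records=450_000, shard_count=2)
```

Taking the maximum of the two ratios means the stream scales up as soon as either limit, bytes or records, is close to being reached.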

Scale-down usage factor

We calculate the scale-down usage factor the same way as the scale-up usage factor, with some additional metric math to (optionally) take into account the iterator age of the stream and block scale-downs when stream processing is falling behind. This is useful if you’re using multiple concurrent Lambda invocations per shard, known as the Parallelization Factor, to process your streams. If you have a backlog of data, scaling down reduces the number of Lambda invocations available to process that backlog.

The following screenshot shows the metric math on a scale-down alarm.

The scale-down max usage factor for a stream is calculated as follows:
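One way to fold iterator age into the scale-down factor is sketched below in Python. The exact metric math is not reproduced here, so treat this as an assumption: the `max_iterator_age_minutes` parameter and the 0.5 scaling constant are hypothetical choices. They are picked so that a backlog at the limit pushes the factor well above the 0.25 scale-down threshold.

```python
def scale_down_usage_factor(usage_factor: float, iterator_age_minutes: float,
                            max_iterator_age_minutes: float = 30.0) -> float:
    """Usage factor for the scale-down alarm, raised when consumers fall behind."""
    # Hypothetical mapping: an iterator age at the limit yields 0.5, which is
    # above the 0.25 threshold and therefore blocks a scale-down.
    iterator_age_factor = 0.5 * iterator_age_minutes / max_iterator_age_minutes
    return max(usage_factor, iterator_age_factor)


idle_stream = scale_down_usage_factor(0.10, iterator_age_minutes=0)
backlogged_stream = scale_down_usage_factor(0.10, iterator_age_minutes=30)
```

With no backlog, the low usage factor passes through and a scale-down can proceed. With a 30-minute iterator age, the result is 0.5 and the scale-down alarm does not fire.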

Deployment

You can deploy this solution via AWS CloudFormation. For more information, see the GitHub repo.

If you need to generate traffic on your streams for testing, consider using the Amazon Kinesis Data Generator. For more information, see Test Your Streaming Data Solution with the New Amazon Kinesis Data Generator.

Optum’s story

As the health services innovation arm of UnitedHealth Group, Optum has been on a multi-year journey towards advancing maturity and capabilities in the public cloud. Our multi-cloud strategy includes using many cloud-native services offered by AWS. The elasticity and self-healing features of the public cloud are among its many strengths, and we use the automation provided natively by AWS through auto scaling capabilities. However, some services don’t natively provide those capabilities, such as Kinesis Data Streams. That doesn’t mean that we’re complacent and accept inelasticity.

Reducing operational toil

At the scale Optum operates at in the public cloud, monitoring for errors or latency related to our Kinesis data stream shard count and manually adjusting those values in response could become a significant source of toil for our public cloud platform engineering teams. Rather than engaging in that toil, we prefer to engineer automated solutions that respond much faster than humans and help us maintain performance, data resilience, and cost-efficiency.

Serving our mission through engineering

Optum is a large organization with thousands of software engineers. Our mission is to help people live healthier lives and help make the health system work better for everyone. To accomplish that mission, our public cloud platform engineers must act as force multipliers across the organization. With solutions such as this, we ensure that our engineers can focus on building and not on responding to needless alerts.

Conclusion

In this post, we presented a lightweight auto scaling solution for Kinesis Data Streams. Whether you have one stream or many streams, this solution can handle scaling for you. The benefits include less operational overhead, increased throughput, and reduced costs. Everything you need to get started is available on the Kinesis Auto Scaling GitHub repo.

About the authors

Matthew Nolan is a Senior Cloud Application Architect at Amazon Web Services. He has over 20 years of industry experience and over 10 years of cloud experience. At AWS he helps customers rearchitect and reimagine their applications to take full advantage of the cloud. Matthew lives in New England and enjoys skiing, snowboarding, and hiking.

Paritosh Walvekar is a Cloud Application Architect with AWS Professional Services, where he helps customers build cloud native applications. He has a Master’s degree in Computer Science from University at Buffalo. In his free time, he enjoys watching movies and is learning to play the piano.

Noah Mundahl is Director of Public Cloud Engineering at UnitedHealth Group.

Upcoming Speaking Engagements

Post Syndicated from Schneier.com Webmaster original https://www.schneier.com/blog/archives/2021/07/upcoming-speaking-engagements-10.html

This is a current list of where and when I am scheduled to speak:

- I’m speaking at Norbert Wiener in the 21st Century, a virtual conference hosted by The IEEE Society on Social Implications of Technology (SSIT), July 23-25, 2021.

- I’m speaking at DEFCON 29, August 5-8, 2021.

- I’m speaking (via Internet) at SHIFT Business Festival in Finland, August 25-26, 2021.

- I’ll be speaking at an Informa event on September 14, 2021. Details to come.

- I’m keynoting CIISec Live—an all-online event—September 15-16, 2021.

- I’m speaking at the Cybersecurity and Data Privacy Law Conference in Plano, Texas, USA, September 22-23, 2021.

The list is maintained on this page.

Depth of Field & Focus – COMPLETE GUIDE

Post Syndicated from Matt Granger original https://www.youtube.com/watch?v=CFkgjnmMyGQ

Some massive stable kernel updates

Post Syndicated from original https://lwn.net/Articles/862872/rss

The 5.13.2, 5.12.17, 5.10.50, and 5.4.132 stable kernel updates are out. They are huge; when asked why, Greg Kroah-Hartman responded:

They show the problem that we currently have where maintainers wait at the end of the -rc cycle and keep valid fixes from being sent to Linus. They “bunch up” and come out only in -rc1 and so the first few stable releases after -rc1 comes out are huge. It’s been happening for the past few years and only getting worse. These stable releases are proof of that, the 5.13.2-rc release was the largest we have ever done and it broke one of my scripts because of it 🙁

There has been more than the usual amount of discussion about patches that perhaps should not have been included; the probability of regressions in these releases may be a bit above average. They also, of course, contain a lot of important bug fixes.

Intelligently Search Media Assets with Amazon Rekognition and Amazon ES

Post Syndicated from Sridhar Chevendra original https://aws.amazon.com/blogs/architecture/intelligently-search-media-assets-with-amazon-rekognition-and-amazon-es/

Media assets have become increasingly important to industries like media and entertainment, manufacturing, education, social media applications, and retail. This is largely due to innovations in digital marketing, mobile, and ecommerce.

Successfully locating a digital asset like a video, graphic, or image reduces costs related to reproducing or re-shooting. An efficient search engine is critical to quickly delivering something like the latest fashion trends. This in turn increases customer satisfaction, builds brand loyalty, and helps increase businesses’ online footprints, ultimately contributing towards revenue.

This blog post shows you how to build automated indexing and search functions using AWS serverless managed artificial intelligence (AI)/machine learning (ML) services. This architecture provides high scalability, reduces operational overhead, and scales out/in automatically based on the demand, with a flexible pay-as-you-go pricing model.

Automatic tagging and rich metadata with Amazon ES

Asset libraries for images and videos are growing exponentially. With Amazon Elasticsearch Service (Amazon ES), this media is indexed and organized, which is important for efficient search and quick retrieval.

Adding correct metadata to digital assets based on enterprise standard taxonomy will help you narrow down search results. This includes information like media formats, but also richer metadata like location, event details, and so forth. With Amazon Rekognition, an advanced ML service, you do not need to manually tag and index these media assets. This automatic tagging and organization frees you up to gain insights like sentiment analysis from social media.

Figure 1 is tagged using Amazon Rekognition. You can see how rich metadata (Apparel, T-Shirt, Person, and Pills) is extracted automatically. Without Amazon Rekognition, you would have to manually add tags and categorize the image. This means you could only do a keyword search on what’s manually tagged. If the image was not tagged, then you likely wouldn’t be able to find it in a search.

Data ingestion, organization, and storage with Amazon S3

As shown in Figure 2, use Amazon Simple Storage Service (Amazon S3) to store your static assets. It provides high availability and scalability, along with unlimited storage. When you choose Amazon S3 as your content repository, multiple data providers are configured for data ingestion for future consumption by downstream applications. In addition to providing storage, Amazon S3 lets you organize data into prefixes based on the event type and captures S3 object mutations through S3 event notifications.

S3 event notifications are invoked for a specific prefix, suffix, or combination of both. They integrate with Amazon Simple Queue Service (Amazon SQS), Amazon Simple Notification Service (Amazon SNS), and AWS Lambda as targets. (Refer to the Amazon S3 Event Notifications user guide for best practices). S3 event notification targets vary across use cases. For media assets, Amazon SQS is used to decouple the new data objects ingested into S3 buckets and downstream services. Amazon SQS provides flexibility over the data processing based on resource availability.
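To make the prefix/suffix filtering concrete, here is a minimal Python sketch that builds a notification configuration routing object-created events to an SQS queue. The helper name, the queue ARN, and the prefix and suffix values are hypothetical. The dictionary layout follows the S3 notification configuration structure, which could then be passed as `NotificationConfiguration` to the boto3 S3 client's `put_bucket_notification_configuration` call.

```python
def build_queue_notification(queue_arn, prefix=None, suffix=None,
                             events=("s3:ObjectCreated:*",)):
    """Build an S3 notification configuration that targets an SQS queue."""
    rules = []
    if prefix:
        rules.append({"Name": "prefix", "Value": prefix})
    if suffix:
        rules.append({"Name": "suffix", "Value": suffix})

    queue_config = {"QueueArn": queue_arn, "Events": list(events)}
    if rules:
        # Only add a filter when at least one rule is present.
        queue_config["Filter"] = {"Key": {"FilterRules": rules}}
    return {"QueueConfigurations": [queue_config]}


config = build_queue_notification(
    "arn:aws:sqs:us-east-1:123456789012:media-assets-queue",  # hypothetical ARN
    prefix="fashion-show/",   # hypothetical prefix
    suffix=".jpg",            # hypothetical suffix
)
```

Keeping the builder free of any AWS calls makes it easy to unit test before the configuration is applied to a bucket.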

Data processing with Amazon Rekognition

Once media assets are ingested into Amazon S3, they are ready to be processed. Amazon Rekognition determines the entities within each asset. Amazon Rekognition then extracts the entities in JSON format and assigns a confidence score.

If the confidence score is below the defined threshold, use Amazon Augmented AI (A2I) for further review. A2I is an ML service that helps you build the workflows required for human review of ML predictions.
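The threshold check can be sketched in a few lines of Python. This is an illustration only: the function name and the threshold of 80 are assumptions, and the actual hand-off to an A2I human review workflow is left out.

```python
def split_by_confidence(labels, threshold=80.0):
    """Split label names into accepted ones and ones needing human review."""
    accepted, needs_review = [], []
    for label in labels:
        target = accepted if label["Confidence"] >= threshold else needs_review
        target.append(label["Name"])
    return accepted, needs_review


# Labels shaped like the entries Amazon Rekognition returns (values shortened).
labels = [
    {"Name": "Clothing", "Confidence": 99.98},
    {"Name": "Shirt", "Confidence": 97.01},
    {"Name": "T-Shirt", "Confidence": 76.37},
]
accepted, needs_review = split_by_confidence(labels)
# accepted     -> ["Clothing", "Shirt"]
# needs_review -> ["T-Shirt"]
```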

Amazon Rekognition also supports custom modeling to help identify entities within the images for specific business needs. For instance, a campaign may need images of products worn by a brand ambassador at a marketing event. Then they may need to further narrow their search down by the individual’s name or age demographic.

Using our solution, a Lambda function invokes Amazon Rekognition to extract the entities from the ingested assets. Lambda continuously polls the SQS queue for any new messages. Once a message is available, the Lambda function invokes the Amazon Rekognition endpoint to extract the relevant entities.

The following is a sample output from the detect_labels API call in Amazon Rekognition, which is transformed and written to the downstream search engine:

{'Labels': [{'Name': 'Clothing', 'Confidence': 99.98137664794922, 'Instances': [], 'Parents': []}, {'Name': 'Apparel', 'Confidence': 99.98137664794922, 'Instances': [], 'Parents': []}, {'Name': 'Shirt', 'Confidence': 97.00833129882812, 'Instances': [], 'Parents': [{'Name': 'Clothing'}]}, {'Name': 'T-Shirt', 'Confidence': 76.36670684814453, 'Instances': [{'BoundingBox': {'Width': 0.7963646650314331, 'Height': 0.6813027262687683, 'Left': 0.09593021124601364, 'Top': 0.1719706505537033}, 'Confidence': 53.39663314819336}], 'Parents': [{'Name': 'Clothing'}]}], 'LabelModelVersion': '2.0', 'ResponseMetadata': {'RequestId': '3a561e82-badc-4ba0-aa77-39a13f1bb3a6', 'HTTPStatusCode': 200, 'HTTPHeaders': {'content-type': 'application/x-amz-json-1.1', 'date': 'Mon, 17 May 2021 18:32:27 GMT', 'x-amzn-requestid': '3a561e82-badc-4ba0-aa77-39a13f1bb3a6', 'content-length': '542', 'connection': 'keep-alive'}, 'RetryAttempts': 0}}

As shown, the Lambda function submits an API call to Amazon Rekognition, where a T-shirt image in .jpeg format is provided as the input. Based on your confidence score threshold preference, Amazon Rekognition will prompt you to initiate a human review using Amazon A2I. It will also prompt you to use Amazon Rekognition Custom Labels to train the custom models. Lambda then identifies and arranges the labels and updates the specified index.
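One way the Lambda function might arrange the labels before updating the index is sketched below. The function name and the `min_confidence` parameter are assumptions. The `fileName` and `objectTags` field names are taken from the sample search output shown in this post, and the actual indexing request is omitted.

```python
def to_index_document(file_name, response, min_confidence=0.0):
    """Turn a detect_labels-style response into a flat search document."""
    tags = []
    for label in response.get("Labels", []):
        name = label["Name"]
        # Keep each tag once, and only if it meets the confidence floor.
        if label["Confidence"] >= min_confidence and name not in tags:
            tags.append(name)
    return {"fileName": file_name, "objectTags": tags}


# Trimmed version of the sample response (bounding boxes and metadata removed).
response = {
    "Labels": [
        {"Name": "Clothing", "Confidence": 99.98},
        {"Name": "Apparel", "Confidence": 99.98},
        {"Name": "Shirt", "Confidence": 97.01},
        {"Name": "T-Shirt", "Confidence": 76.37},
    ]
}
document = to_index_document("tshirt.jpeg", response)  # hypothetical file name
```

A flat list of tag names keeps the document small and lets a simple query-string search match on any tag.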

Indexing with Amazon ES

Amazon ES is a managed search engine service that provides enterprise-grade search engine capability for applications. In our solution, assets are searched based on entities that are used as metadata to update the index. Amazon ES is hosted as a public endpoint or a VPC endpoint for secure access within the specified AWS account.

Labels are identified and marked as tags, which are assigned to .jpeg formatted images. The following sample output shows the query on one of the tags issued on an Amazon ES cluster.

Query:

curl -XGET https://<ElasticSearch Endpoint>/<_IndexName>/_search?q=T-Shirt

Output:

{"took":140,"timed_out":false,"_shards":{"total":5,"successful":5,"skipped":0,"failed":0},"hits":{"total":{"value":1,"relation":"eq"},"max_score":0.05460011,"hits":[{"_index":"movies","_type":"_doc","_id":"15","_score":0.05460011,"_source":{"fileName":"s7-1370766_lifestyle.jpg","objectTags":["Clothing","Apparel","Sailor Suit","Sleeve","T-Shirt","Shirt","Jersey"]}}]}}
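For readers who prefer to script the search, the following Python sketch builds the same kind of query URL and pulls the file names and tags out of a response shaped like the sample output. The helper names and the endpoint are hypothetical, and sending the HTTP request (including any authentication your domain requires) is left out.

```python
import json
from urllib.parse import quote, urlencode


def build_search_url(endpoint, index, term):
    """Build a query-string search URL for a given index and search term."""
    return f"https://{endpoint}/{quote(index)}/_search?{urlencode({'q': term})}"


def extract_hits(raw_response):
    """Return (fileName, objectTags) pairs from a search response body."""
    body = json.loads(raw_response)
    return [(hit["_source"]["fileName"], hit["_source"]["objectTags"])
            for hit in body["hits"]["hits"]]


url = build_search_url("search-example.us-east-1.es.amazonaws.com",  # hypothetical
                       "movies", "T-Shirt")

# Shortened response with the same structure as the sample output.
sample = ('{"hits":{"hits":[{"_source":{"fileName":"s7-1370766_lifestyle.jpg",'
          '"objectTags":["Clothing","T-Shirt"]}}]}}')
results = extract_hits(sample)
```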

In addition to photos, Amazon Rekognition also detects the labels on videos. It can recognize labels and identify characters and entities. These are then added to Amazon ES to enhance search capability. This allows users to skip to specific parts of a video for quick searchability. For instance, a marketer may need images of cashmere sweaters from a fashion show that was streamed and recorded.

Once the raw video clip is identified, it is then converted using Amazon Elastic Transcoder to play back on mobile devices, tablets, web browsers, and connected televisions. Elastic Transcoder is a highly scalable and cost-effective media transcoding service in the cloud. Segmented output renditions are created for delivery using the multiple protocols to compatible devices.

Conclusion

This blog describes AWS services that can be applied to a diverse set of use cases for tagging and efficient search of images and videos. You can build automated indexing and search using AWS serverless managed AI/ML services. They provide high scalability, reduce operational overhead, and scale out/in automatically based on the demand, with a flexible pay-as-you-go pricing model.

To get started, use these references to create your own sample architectures:

Stephen Walker | Beyond | Talks at Google

Post Syndicated from Talks at Google original https://www.youtube.com/watch?v=LnG8QUEwR8k

Data preparation using an Amazon RDS for MySQL database with AWS Glue DataBrew

Post Syndicated from Dhiraj Thakur original https://aws.amazon.com/blogs/big-data/data-preparation-using-an-amazon-rds-for-mysql-database-with-aws-glue-databrew/

With AWS Glue DataBrew, data analysts and data scientists can easily access and visually explore any amount of data across their organization directly from their Amazon Simple Storage Service (Amazon S3) data lake, Amazon Redshift data warehouse, or Amazon Aurora and Amazon Relational Database Service (Amazon RDS) databases. You can choose from over 250 built-in functions to merge, pivot, and transpose the data without writing code.

Now, with added support for JDBC-accessible databases, DataBrew also supports additional data stores, including PostgreSQL, MySQL, Oracle, and Microsoft SQL Server. In this post, we use DataBrew to clean data from an RDS database, store the cleaned data in an S3 data lake, and build a business intelligence (BI) report.

Use case overview

For our use case, we use three datasets:

- A school dataset that contains school details like school ID and school name

- A student dataset that contains student details like student ID, name, and age

- A student study details dataset that contains student study time, health, country, and more

The following diagram shows the relation of these tables.

For our use case, this data is collected by a survey organization after an annual exam, and updates are made in Amazon RDS for MySQL using a JavaScript-based frontend application. We join the tables to create a single view and create aggregated data through a series of data preparation steps, and the business team uses the output data to create BI reports.

Solution overview

The following diagram illustrates our solution architecture. We use Amazon RDS to store data, DataBrew for data preparation, Amazon Athena for data analysis with standard SQL, and Amazon QuickSight for business reporting.

- Create a JDBC connection for RDS and a DataBrew project. DataBrew does the transformation to find the top performing students across all the schools considered for analysis.

- The DataBrew job writes the final output to our S3 output bucket.

- After the output data is written, we can create external tables on top of it with Athena CREATE TABLE statements and load partitions with MSCK REPAIR TABLE commands.

- Business users can use QuickSight for BI reporting, which fetches data through Athena. Data analysts can also use Athena to analyze the complete refreshed dataset.
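The Athena step above can be sketched as a small Python helper that generates the two kinds of statements. Everything specific here is an assumption for illustration: the helper name, the database and table names, the column list, the partition column, and the S3 path. The post does not state the output schema or whether the job output is partitioned.

```python
def athena_statements(database, table, s3_location, columns,
                      partition_columns=()):
    """Return the DDL (and optional partition repair) statements as strings."""
    cols = ",\n  ".join(f"`{name}` {dtype}" for name, dtype in columns)
    ddl = (f"CREATE EXTERNAL TABLE IF NOT EXISTS {database}.{table} (\n"
           f"  {cols}\n)")
    if partition_columns:
        parts = ", ".join(f"`{name}` {dtype}" for name, dtype in partition_columns)
        ddl += f"\nPARTITIONED BY ({parts})"
    ddl += f"\nSTORED AS PARQUET\nLOCATION '{s3_location}'"

    statements = [ddl]
    if partition_columns:
        # Run with `database` selected as the query context.
        statements.append(f"MSCK REPAIR TABLE {table}")
    return statements


statements = athena_statements(
    "student_db", "top_performer_student",           # hypothetical names
    "s3://example-output-bucket/top-performers/",     # hypothetical path
    columns=[("student_id", "int"), ("full_name", "string"), ("marks", "int")],
    partition_columns=[("school_id", "int")],
)
```

The partition repair statement is only emitted for partitioned tables, because an unpartitioned table has no partitions to load.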

Prerequisites

To complete this solution, you should have an AWS account.

Prelab setup

Before beginning this tutorial, make sure you have the required permissions to create the resources required as part of the solution.

For our use case, we use three mock datasets. You can download the DDL code and data files from GitHub.

- Create the RDS for MySQL instance to capture the student health data.

- Make sure you have set up the correct security group for Amazon RDS. For more information, see Setting Up a VPC to Connect to JDBC Data Stores.

- Create three tables: student_tbl, study_details_tbl, and school_tbl. You can use DDLsql to create the database objects.

- Upload the student.csv, study_details.csv, and school.csv files in their respective tables. You can use student.sql, study_details.sql, and school.sql to insert the data in the tables.

Create an Amazon RDS connection

To create your Amazon RDS connection, complete the following steps:

- On the DataBrew console, choose Datasets.

- On the Connections tab, choose Create connection.

- For Connection name, enter a name (for example, student_db-conn).

- For Connection type, select JDBC.

- For Database type, choose MySQL.

- Provide other parameters like RDS endpoint, port, database name, and database login credentials.

- In the Network options section, choose the VPC, subnet, and security group of your RDS instance.

- Choose Create connection.
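The endpoint, port, and database name entered in these steps together identify the same target that a MySQL JDBC URL describes. The Python sketch below shows how those pieces fit together in the standard `jdbc:mysql://host:port/database` form. The helper name, the endpoint, and the database name are hypothetical, and 3306 is the default MySQL port.

```python
def mysql_jdbc_url(endpoint, database, port=3306):
    """Combine an RDS endpoint, port, and database name into a JDBC URL."""
    return f"jdbc:mysql://{endpoint}:{port}/{database}"


url = mysql_jdbc_url(
    "student-db.abc123example.us-east-1.rds.amazonaws.com",  # hypothetical endpoint
    "student_db",                                            # hypothetical database
)
```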

Create your datasets

We have three tables in Amazon RDS: school_tbl, student_tbl, and study_details_tbl. To use these tables, we first need to create a dataset for each table.

To create the datasets, complete the following steps (we walk you through creating the school dataset):

- On the Datasets page of the DataBrew console, choose Connect new dataset.

- For Dataset name, enter school-dataset.

- Choose the connection you created (AwsGlueDatabrew-student-db-conn).

- For Table name, enter school_tbl.

- Choose Create dataset.

- Repeat these steps for the student_tbl and study_details_tbl tables, and name the new datasets student-dataset and study-detail-dataset, respectively.

All three datasets are available to use on the Datasets page.

Create a project using the datasets

To create your DataBrew project, complete the following steps:

- On the DataBrew console, choose Projects.

- Choose Create project.

- For Project Name, enter my-rds-proj.

- For Attached recipe, choose Create new recipe.

The recipe name is populated automatically.

- For Select a dataset, select My datasets.

- For Dataset name, select study-detail-dataset.

- For Role name, choose your AWS Identity and Access Management (IAM) role to use with DataBrew.

- Choose Create project.

You can see a success message along with our RDS study_details_tbl table with 500 rows.

After the project is opened, a DataBrew interactive session is created. DataBrew retrieves sample data based on your sampling configuration selection.

Open an Amazon RDS project and build a transformation recipe

In a DataBrew interactive session, you can cleanse and normalize your data using over 250 built-in transforms. In this post, we use DataBrew to identify top performing students by performing a few transforms and finding students who got marks greater than or equal to 60 in the last annual exam.

First, we use DataBrew to join all three RDS tables. To do this, we perform the following steps:

- Navigate to the project you created.

- Choose Join.

- For Select dataset, choose student-dataset.

- Choose Next.

- For Select join type, select Left join.

- For Join keys, choose student_id for Table A and deselect student_id for Table B.

- Choose Finish.

Repeat the steps for school-dataset based on the school_id key.

- Choose MERGE to merge first_name and last_name.

- Enter a space as a separator.

- Choose Apply.

We now filter the rows based on marks value greater than or equal to 60 and add the condition as a recipe step.

- Choose FILTER.

- Provide the source column and filter condition and choose Apply.

The final data shows the top performing students’ data who had marks greater than or equal to 60.
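The recipe steps above (two left joins, a name merge, and a filter) can be approximated in plain Python to show what the output looks like. This is a sketch of equivalent logic, not what DataBrew runs internally. The function name, the `marks` and `full_name` column names, and the assumption that the study details rows carry both `student_id` and `school_id` are illustrative.

```python
def top_performers(study_details, students, schools, min_marks=60):
    """Left-join study details to students and schools, then filter by marks."""
    students_by_id = {s["student_id"]: s for s in students}
    schools_by_id = {s["school_id"]: s for s in schools}

    result = []
    for row in study_details:
        # Left join: unmatched rows are kept, with the joined fields missing.
        student = students_by_id.get(row["student_id"], {})
        school = schools_by_id.get(row.get("school_id"), {})
        joined = {**school, **student, **row}

        # MERGE step: first_name and last_name separated by a space.
        names = (student.get("first_name"), student.get("last_name"))
        joined["full_name"] = " ".join(part for part in names if part)

        # FILTER step: keep marks greater than or equal to the threshold.
        if joined["marks"] >= min_marks:
            result.append(joined)
    return result


study = [
    {"student_id": 1, "school_id": 10, "marks": 72},
    {"student_id": 2, "school_id": 10, "marks": 55},
    {"student_id": 3, "school_id": 99, "marks": 60},
]
students = [
    {"student_id": 1, "first_name": "Ana", "last_name": "Lee"},
    {"student_id": 2, "first_name": "Raj", "last_name": "Shah"},
]
schools = [{"school_id": 10, "school_name": "North High"}]
result = top_performers(study, students, schools)
```

In this mock data, student 2 is dropped by the filter, while student 3 is kept even though no matching student or school row exists, which is the behavior a left join implies.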

Run the DataBrew recipe job on the full data

Now that we have built the recipe, we can create and run a DataBrew recipe job.

- On the project details page, choose Create job.

- For Job name, enter top-performer-student.

For this post, we use Parquet as the output format.

- For File type, choose PARQUET.

- For S3 location, enter the S3 path of the output folder.

- For Role name, choose an existing role or create a new one.

- Choose Create and run job.

- Navigate to the Jobs page and wait for the top-performer-student job to complete.

- Choose the Destination link to navigate to Amazon S3 to access the job output.

Run an Athena query

Let’s validate the aggregated table output in Athena by running a simple SELECT query. The following screenshot shows the output.

Create reports in QuickSight

Now let’s do our final step of the architecture, which is creating BI reports through QuickSight by connecting to the Athena aggregated table.

- On the QuickSight console, choose Athena as your data source.

- Choose the database and catalog you have in Athena.

- Select your table.

- Choose Select.

Now you can create a quick report to visualize your output, as shown in the following screenshot.

If QuickSight is using SPICE storage, you need to refresh the dataset in QuickSight after you receive notification about the completion of the data refresh. We recommend using SPICE storage to get better performance.

Clean up

Delete the following resources that might accrue cost over time:

- The RDS instance

- The recipe job top-performer-student

- The job output stored in your S3 bucket

- The IAM roles created as part of projects and jobs

- The DataBrew project my-rds-proj and its associated recipe my-rds-proj-recipe

- The DataBrew datasets

Conclusion

In this post, we saw how to create a JDBC connection for an RDS database. We learned how to use this connection to create a DataBrew dataset for each table, and how to reuse this connection multiple times. We also saw how we can bring data from Amazon RDS into DataBrew and seamlessly apply transformations and run recipe jobs that refresh transformed data for BI reporting.

About the Author

Dhiraj Thakur is a Solutions Architect with Amazon Web Services. He works with AWS customers and partners to provide guidance on enterprise cloud adoption, migration, and strategy. He is passionate about technology and enjoys building and experimenting in the analytics and AI/ML space.

Trigger ID’s in Home Assistant Automation

Post Syndicated from BeardedTinker original https://www.youtube.com/watch?v=MTOAnpqxb-4

Learn the Internet of Things with “IoT for Beginners” and Raspberry Pi

Post Syndicated from Ashley Whittaker original https://www.raspberrypi.org/blog/learn-the-internet-of-things-with-iot-for-beginners-and-raspberry-pi/

Want to dabble in the Internet of Things but don’t know where to start? Well, our friends at Microsoft have developed something fun and free just for you. Here’s Senior Cloud Advocate Jim Bennett to tell you all about their brand new online curriculum for IoT beginners.

IoT — the Internet of Things — is one of the biggest growth areas in technology, and one that, to me, is very exciting. You start with a device like a Raspberry Pi, sprinkle some sensors, dust with code, mix in some cloud services and poof! You have smart cities, self-driving cars, automated farming, robotic supermarkets, or devices that can clean your toilet after you shout at Alexa for the third time.

It feels like every week there is another survey out on what tech skills will be in demand in the next five years, and IoT always appears somewhere near the top. This is why loads of folks are interested in learning all about it.

In my day job at Microsoft, I work a lot with students and lecturers, and I’m often asked for help with content to get started with IoT. Not just how to use whatever cool-named IoT services come from your cloud provider of choice to enable digital whatnots to add customer value via thingamabobs, but real beginner content that goes back to the basics.

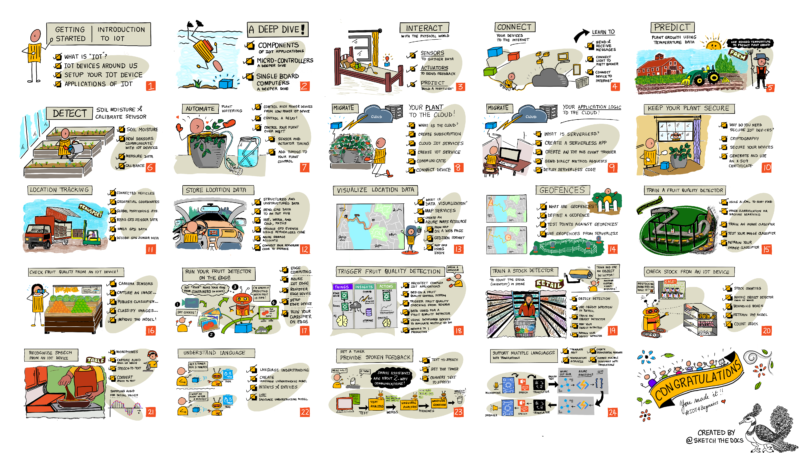

This is why a few of us have spent the last few months locked away building IoT for Beginners. It’s a free, open source, 24-lesson university-level IoT curriculum designed for teachers and students, and built by IoT experts, education experts and students.

What will you learn?

The lessons are grouped into projects that you can build with a Raspberry Pi so that you can deep-dive into use cases of IoT, following the journey of food from farm to table.

You’ll build projects as you learn the concepts of IoT devices, sensors, actuators, and the cloud, including:

- An automated watering system, controlling a relay via a soil moisture sensor. This starts off running just on your device, then moves to a free MQTT broker to add cloud control. It then moves on again to cloud-based IoT services to add features like security to stop Farmer Giles from hacking your watering system.

- A GPS-based vehicle tracker plotting the route taken on a map. You get alerts when a vehicle full of food arrives at a location by using cloud-based mapping services and serverless code.

- AI-based fruit quality checking using a camera on your device. You train AI models that can detect if fruit is ripe or not. These start off running in the cloud, then you move them to the edge running directly on your Raspberry Pi.

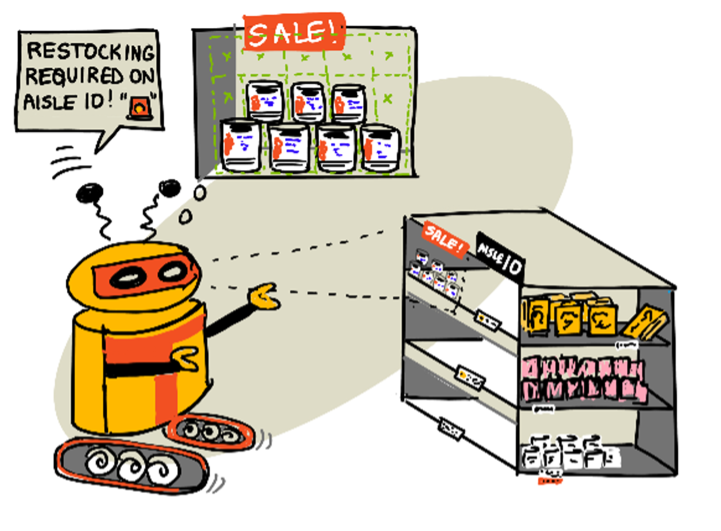

- Smart stock checking so you can see when you need to restack the shelves, again powered by AI services.

- A voice-controlled smart timer so you have more devices to shout at when cooking your food! This one uses AI services to understand what you say into your IoT device. It gives spoken feedback and even works in many different languages, translating on the fly.

Grab your Raspberry Pi and some sensors from our friends at Seeed Studio and get building. Without further ado, please meet IoT For Beginners: A Curriculum!

The post Learn the Internet of Things with “IoT for Beginners” and Raspberry Pi appeared first on Raspberry Pi.

Security updates for Wednesday

Post Syndicated from original https://lwn.net/Articles/862855/rss

Security updates have been issued by CentOS (xstream), Debian (linuxptp), Fedora (glibc and krb5), Gentoo (pillow and thrift), Mageia (ffmpeg and libsolv), openSUSE (kernel and qemu), SUSE (kernel), and Ubuntu (php5, php7.0).

STOP using Tuya Integrations! How To transplant an ESP8266 100% LOCAL – Tuya-Convert F.0

Post Syndicated from digiblurDIY original https://www.youtube.com/watch?v=d_HpkIiWC3Y

Live Teardown Unboxing 25 amp Sonoff POWR3 with Guest Tediore

Post Syndicated from digiblurDIY original https://www.youtube.com/watch?v=dUFNt8lr_PE

The Forgotten History of Ice

Post Syndicated from The History Guy: History Deserves to Be Remembered original https://www.youtube.com/watch?v=tLwyfFT5VF8

China Taking Control of Zero-Day Exploits

Post Syndicated from Bruce Schneier original https://www.schneier.com/blog/archives/2021/07/china-taking-control-of-zero-day-exploits.html

China is making sure that all newly discovered zero-day exploits are disclosed to the government.

Under the new rules, anyone in China who finds a vulnerability must tell the government, which will decide what repairs to make. No information can be given to “overseas organizations or individuals” other than the product’s manufacturer.

No one may “collect, sell or publish information on network product security vulnerabilities,” say the rules issued by the Cyberspace Administration of China and the police and industry ministries.

This just blocks the cyber-arms trade. It doesn’t prevent researchers from telling the products’ companies, even if they are outside of China.

Your WiFi Sucks, Fix It.

Post Syndicated from The Hook Up original https://www.youtube.com/watch?v=UBtZMoqIwR4

Bad Map Projection: The Greenland Special

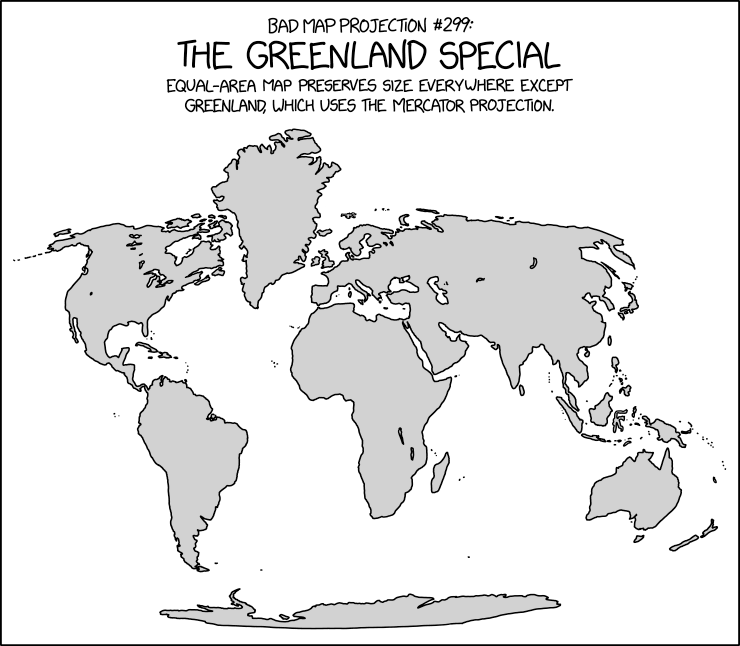

Post Syndicated from original https://xkcd.com/2489/

[$] Copyleft-next and the kernel

Post Syndicated from original https://lwn.net/Articles/862611/rss

The Linux kernel is, as a whole, licensed under the GPLv2, but various parts and pieces are licensed under other compatible licenses and/or dual-licensed. That picture was much murkier only a few years back, before the SPDX in the kernel project cleaned up the licensing information in most of the kernel source by specifying the licenses, by name rather than boilerplate text, directly in the files. A recent move to add yet another license into the mix is encountering some headwinds, but the license in question has already been used in a few kernel files, and has been for four years at this point.