Post Syndicated from David Belson original http://blog.cloudflare.com/traffic-anomalies-notifications-radar/

We launched the Cloudflare Radar Outage Center (CROC) during Birthday Week 2022 as a way of keeping the community up to date on Internet disruptions, including outages and shutdowns, visible in Cloudflare’s traffic data. While some of the entries have their genesis in information from social media posts made by local telecommunications providers or civil society organizations, others are based on an internal traffic anomaly detection and alerting tool. Today, we’re adding this alerting feed to Cloudflare Radar, showing country and network-level traffic anomalies on the CROC as they are detected, as well as making the feed available via API.

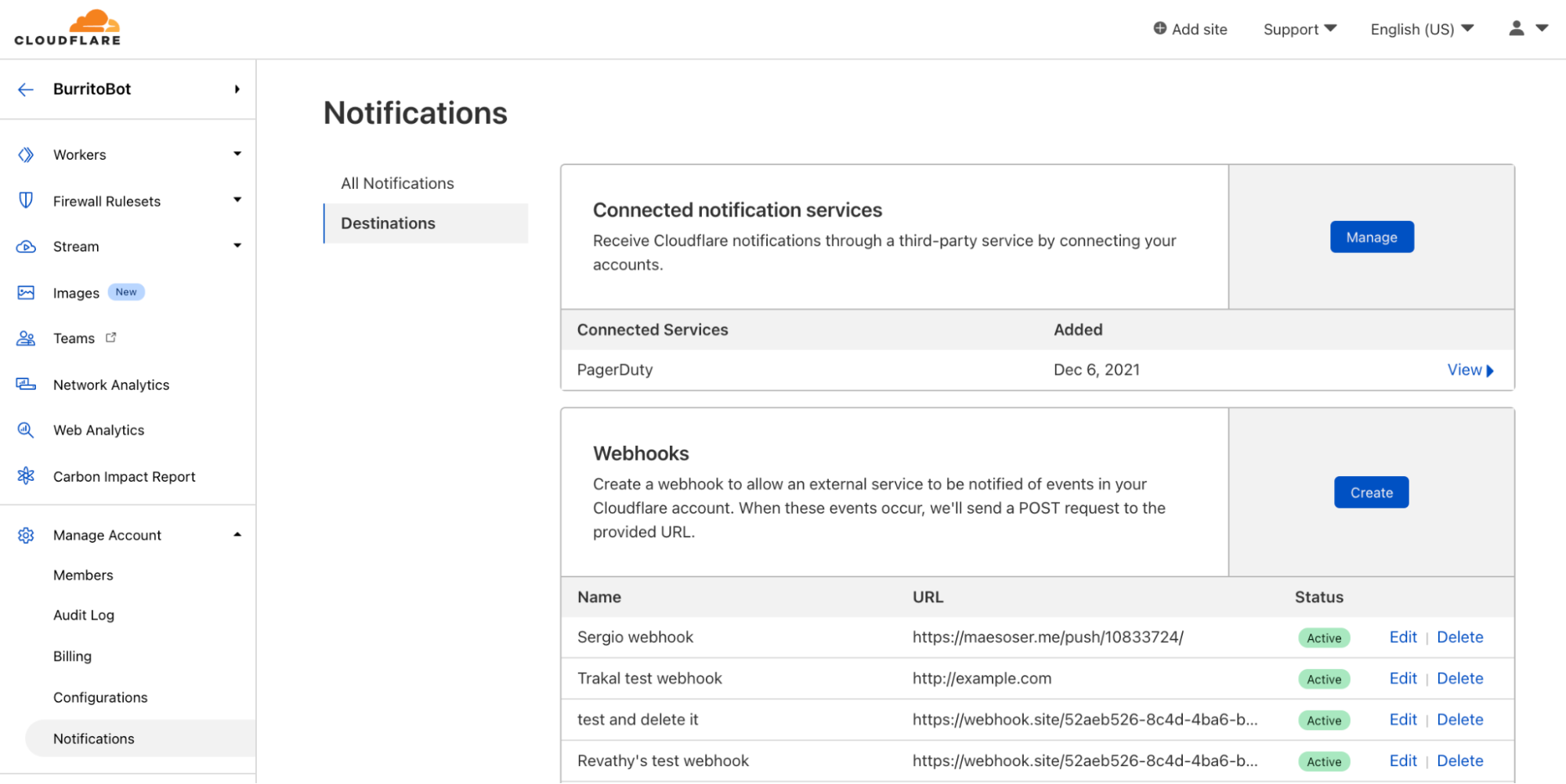

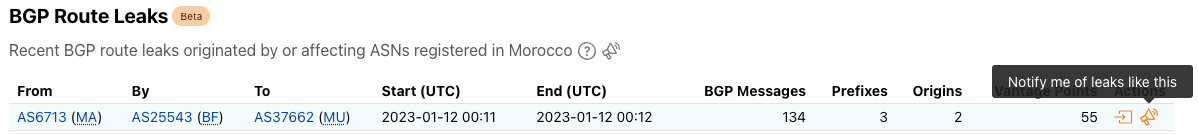

Building on this new functionality, as well as the route leaks and route hijacks insights that we recently launched on Cloudflare Radar, we are also launching new Radar notification functionality, enabling you to subscribe to notifications about traffic anomalies, confirmed Internet outages, route leaks, or route hijacks. Using the Cloudflare dashboard’s existing notification functionality, users can set up notifications for one or more countries or autonomous systems, and receive notifications when a relevant event occurs. Notifications may be sent via e-mail or webhooks — the available delivery methods vary according to plan level.

Traffic anomalies

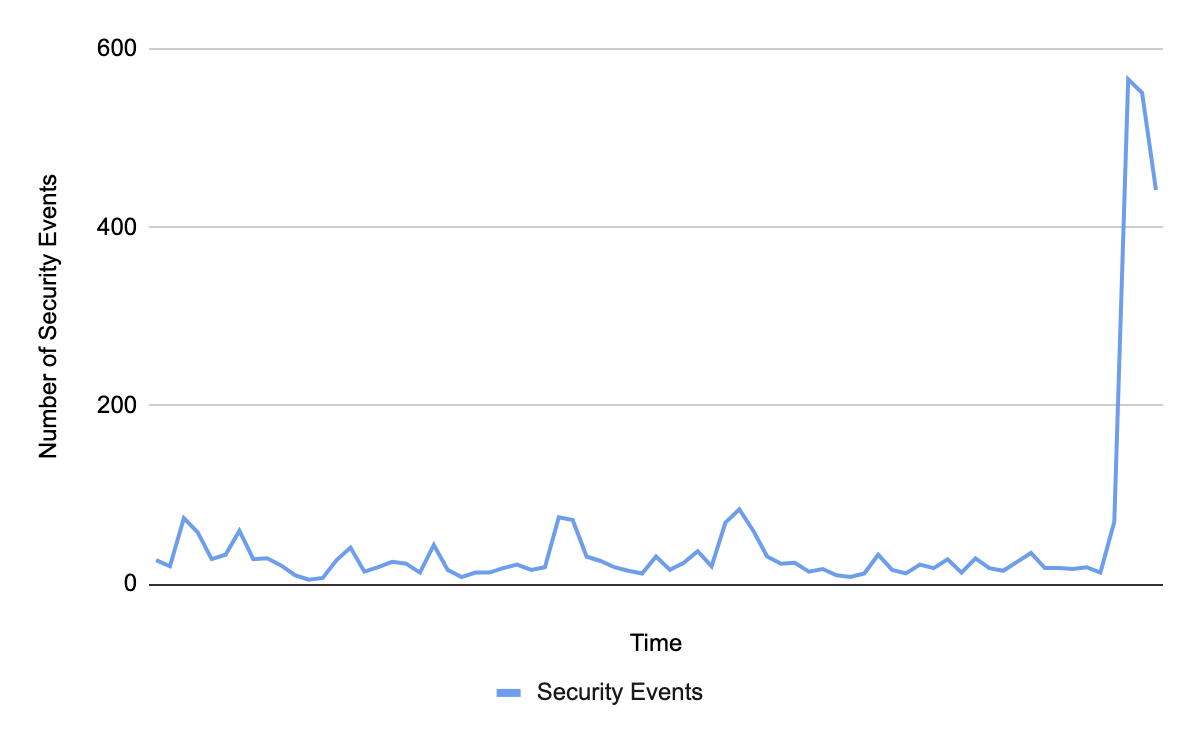

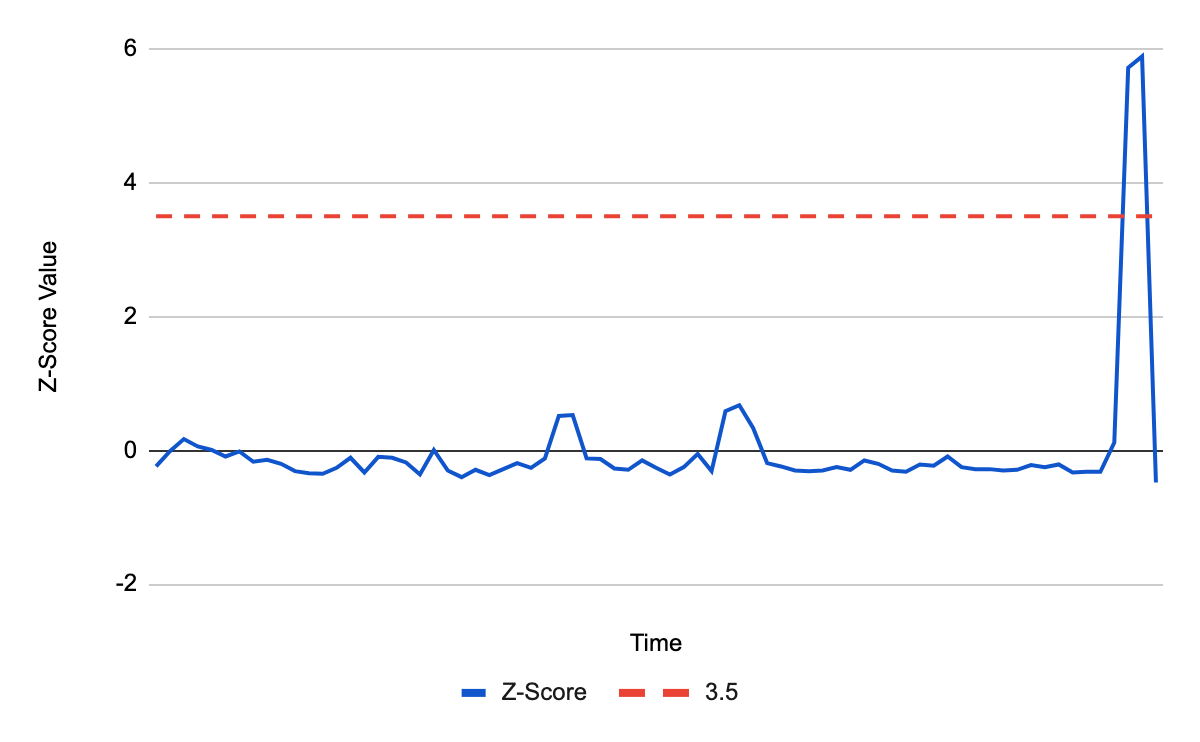

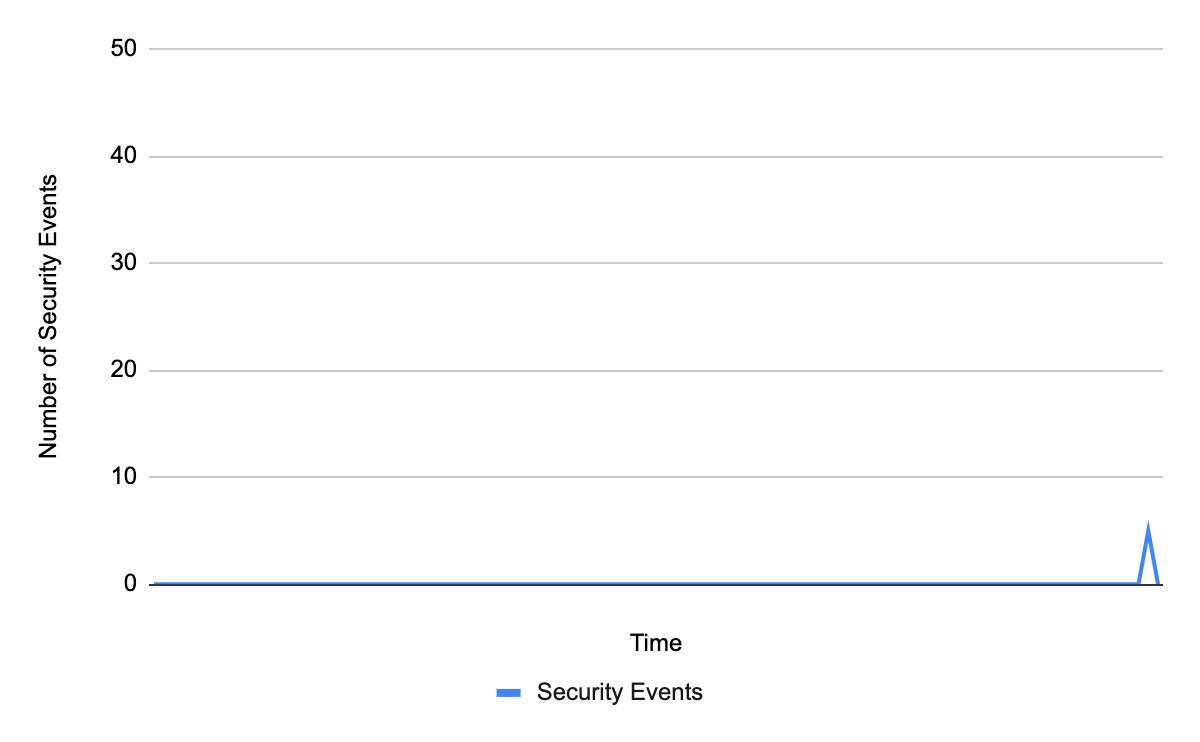

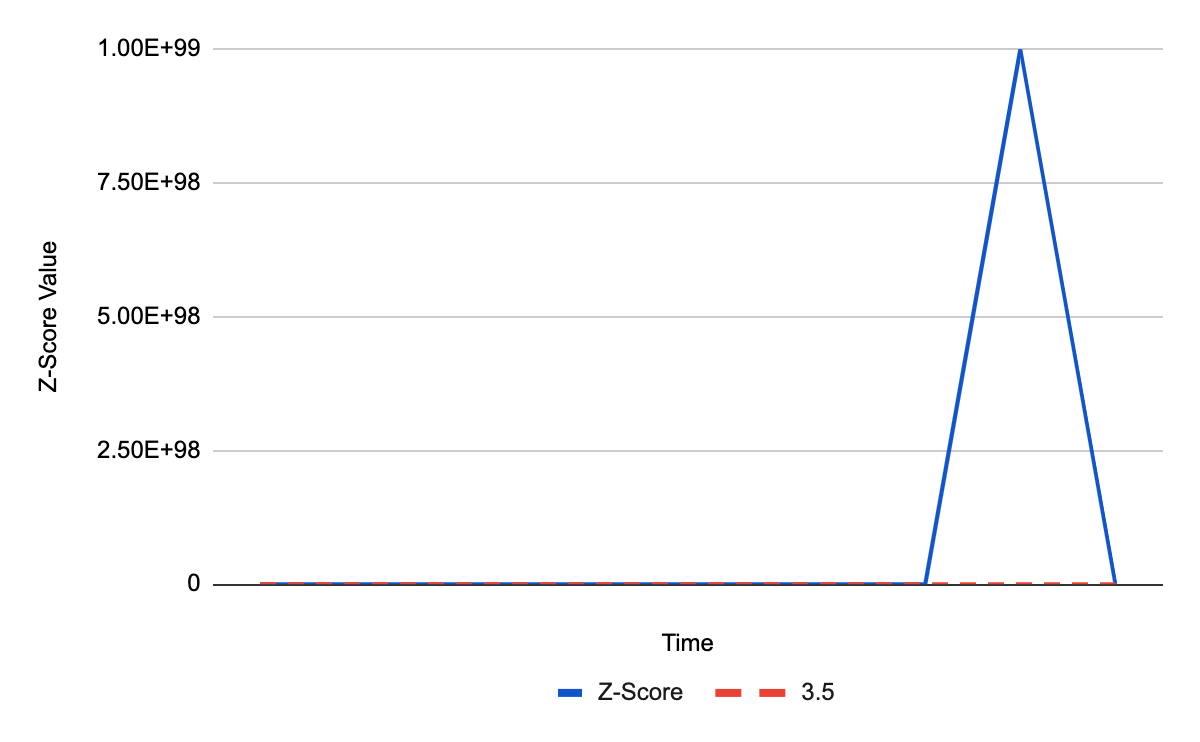

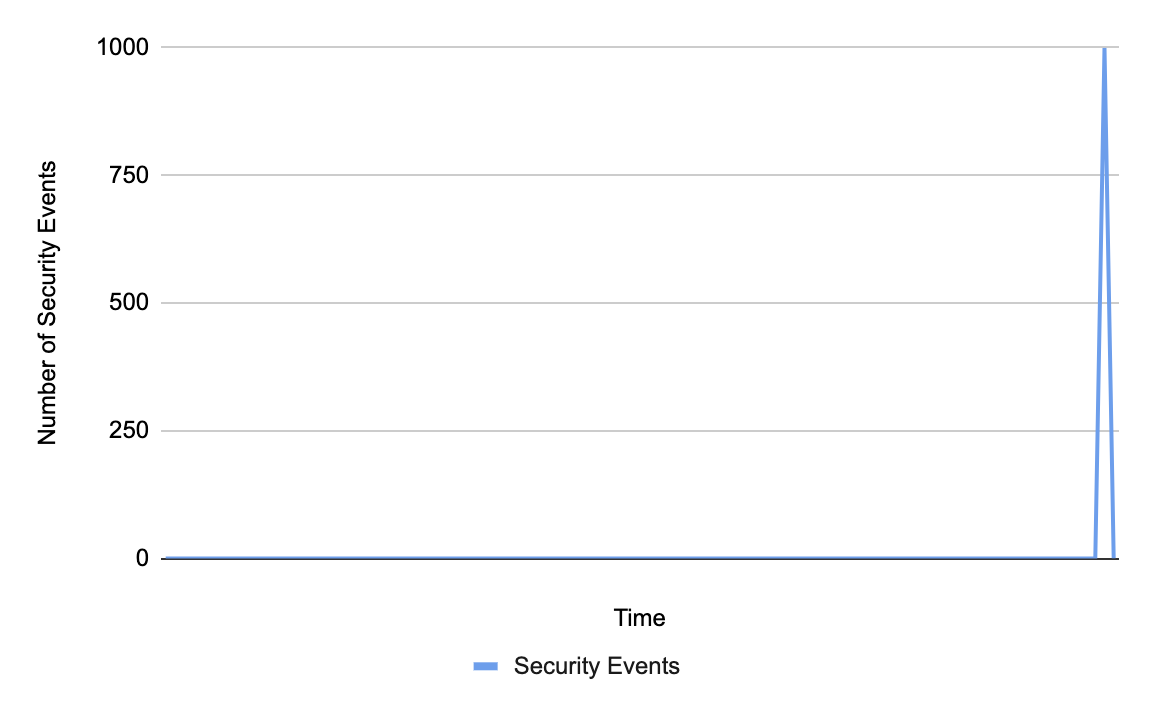

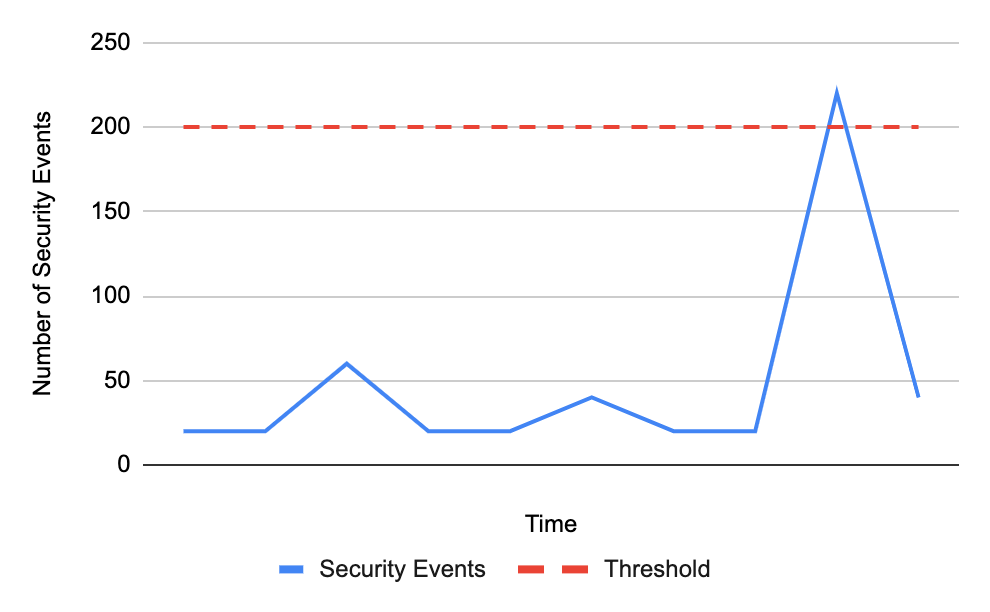

Internet traffic generally follows a fairly regular pattern, with daily peaks and troughs at roughly the same volumes of traffic. However, while weekend traffic patterns may look similar to weekday ones, their traffic volumes are generally different. Similarly, holidays or national events can also cause traffic patterns and volumes to differ significantly from “normal”, as people shift their activities and spend more time offline, or as people turn to online sources for information about, or coverage of, the event. These traffic shifts can be newsworthy, and we have covered some of them in past Cloudflare blog posts (King Charles III coronation, Easter/Passover/Ramadan, Brazilian presidential elections).

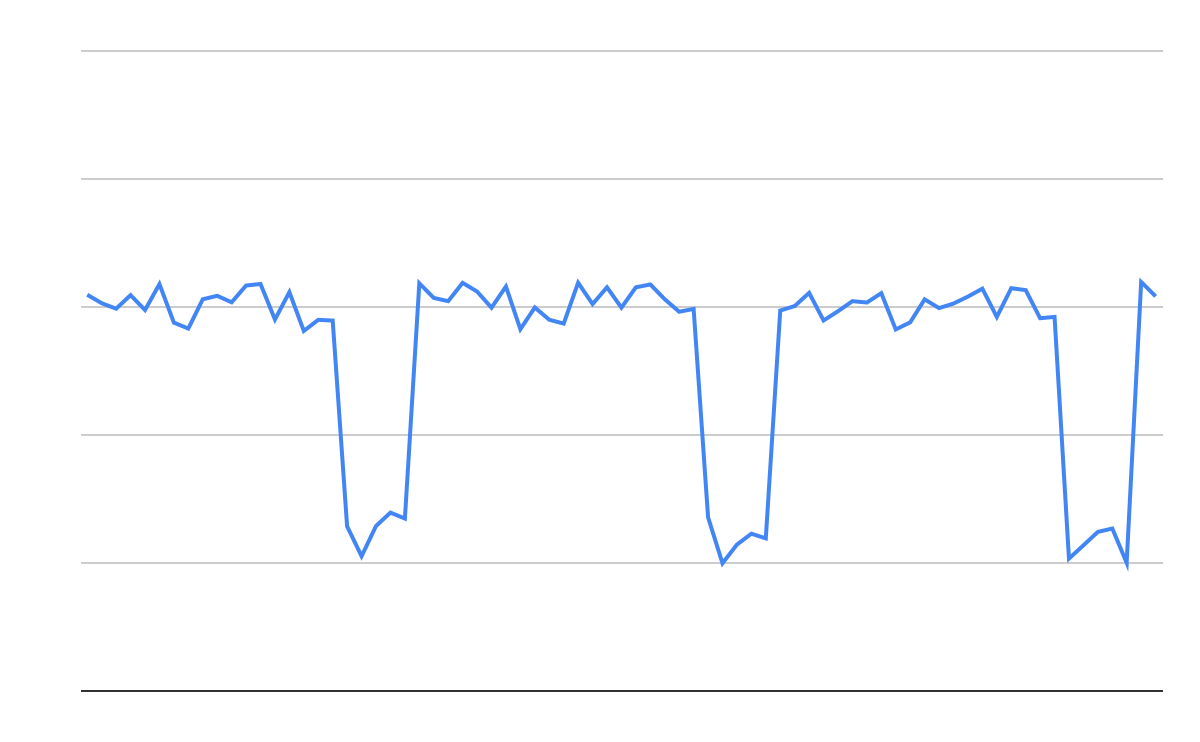

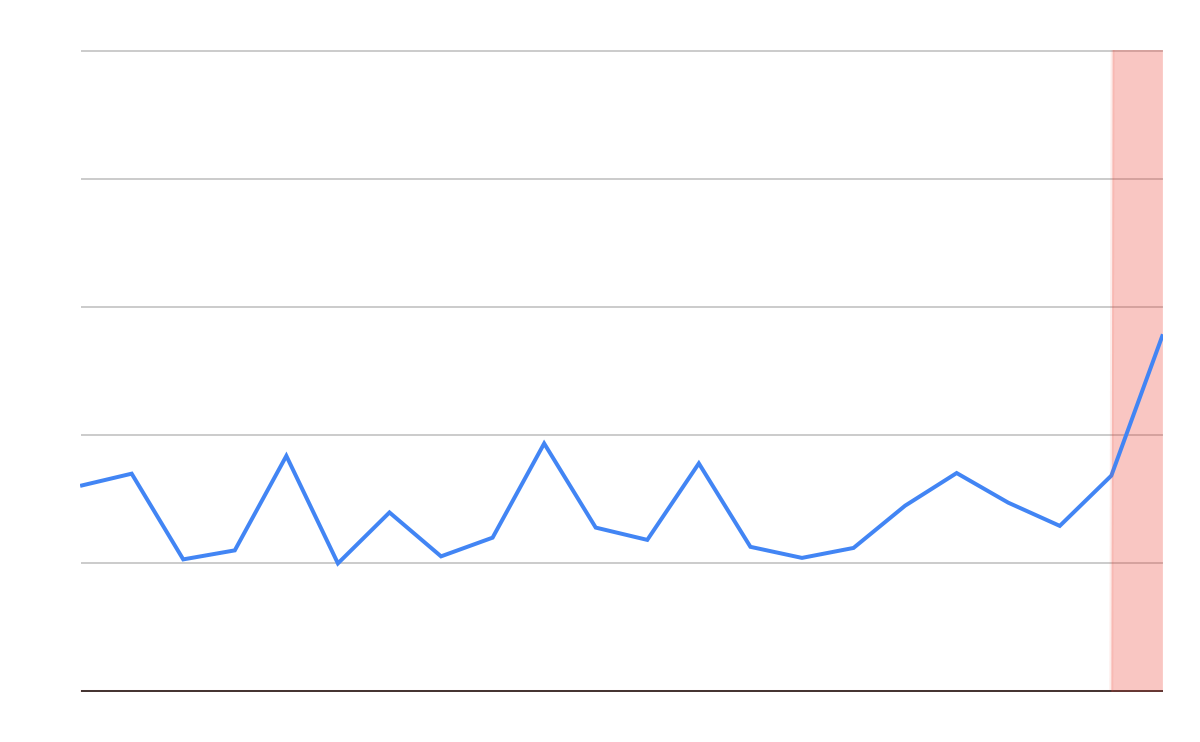

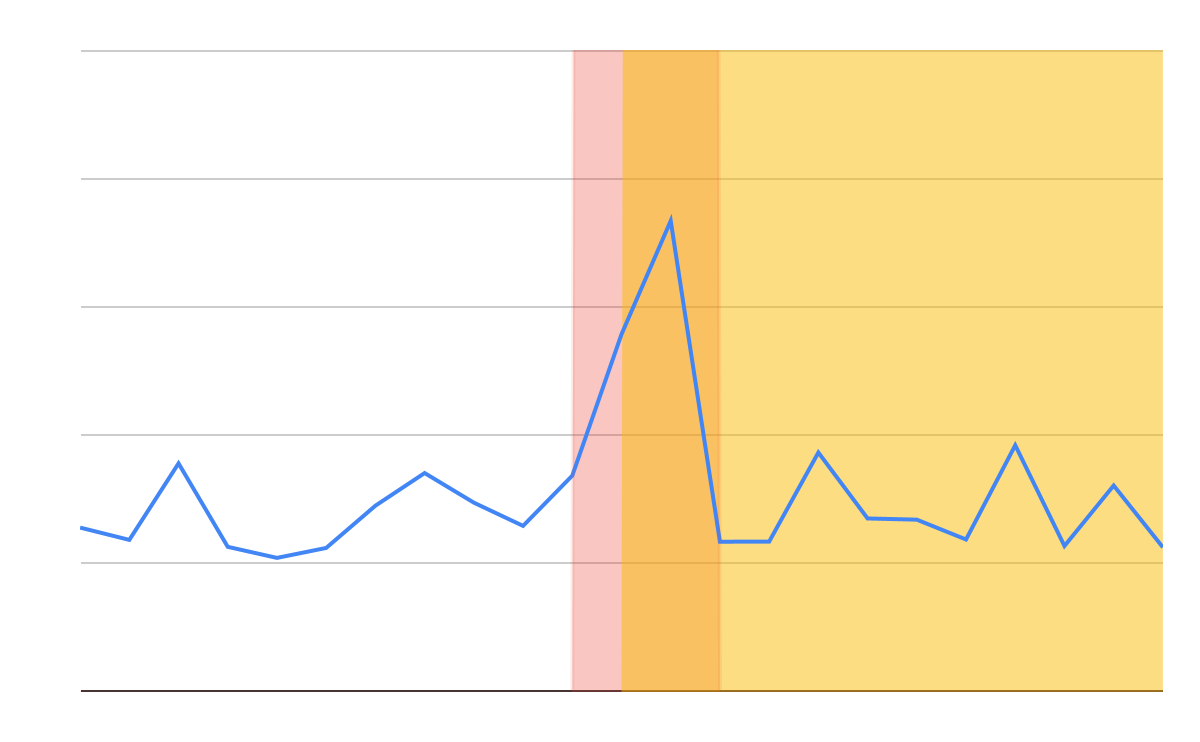

However, as you also know from reading our blog posts and following Cloudflare Radar on social media, it is the more drastic drops in traffic that are a cause for concern. Some are the result of infrastructure damage from severe weather or a natural disaster like an earthquake and are effectively unavoidable, but getting timely insights into the impact of these events on Internet connectivity is valuable from a communications perspective. Other traffic drops have occurred when an authoritarian government orders mobile Internet connectivity to be shut down, or shuts down all Internet connectivity nationwide. Timely insights into these types of anomalous traffic drops are often critical from a human rights perspective, as Internet shutdowns are often used as a means of controlling communication with the outside world.

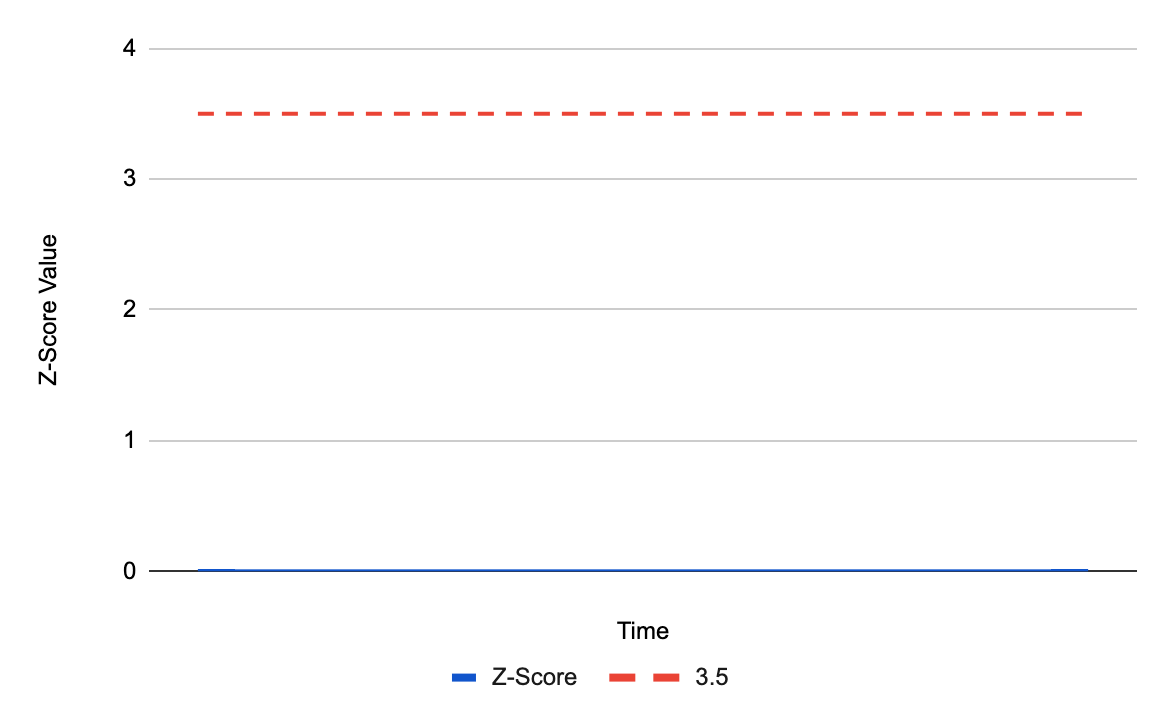

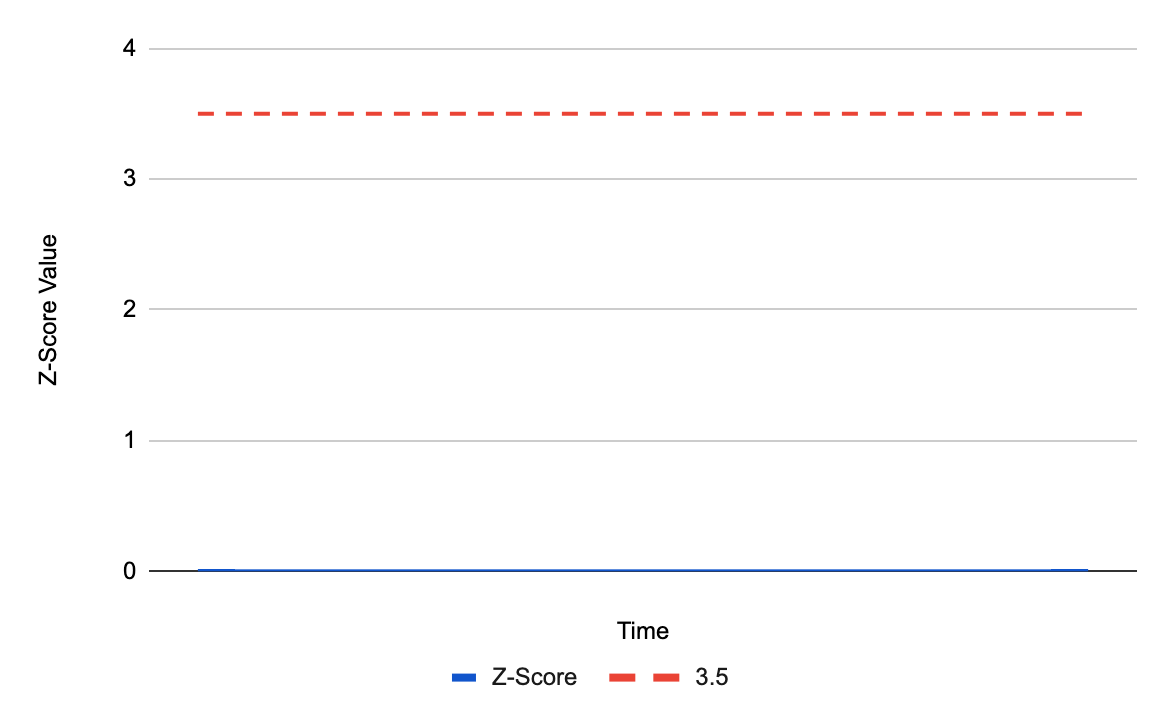

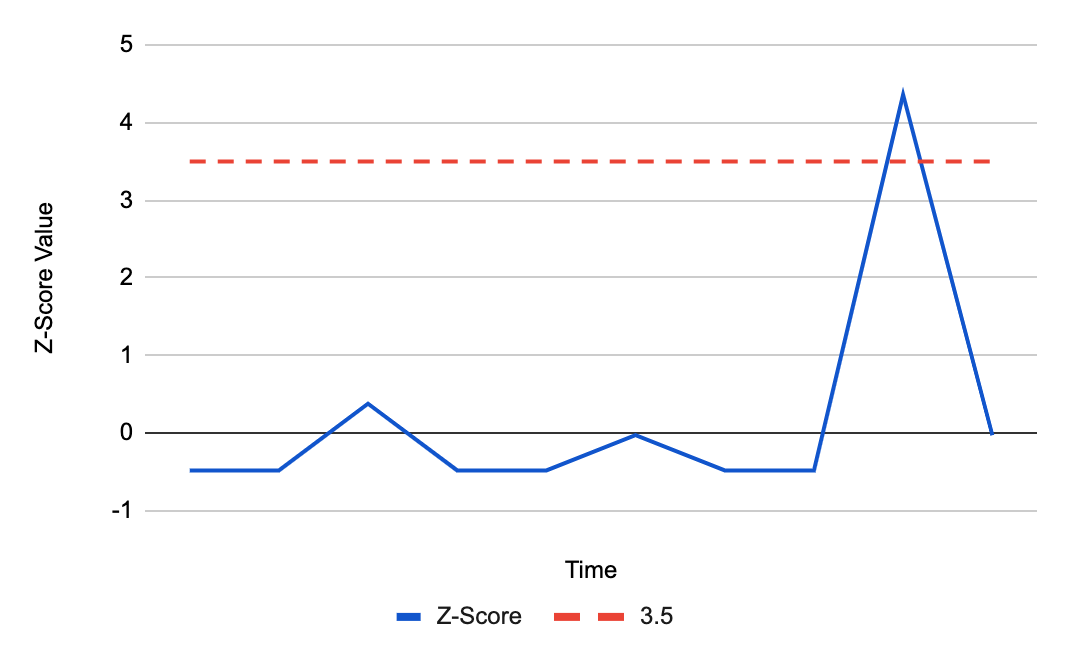

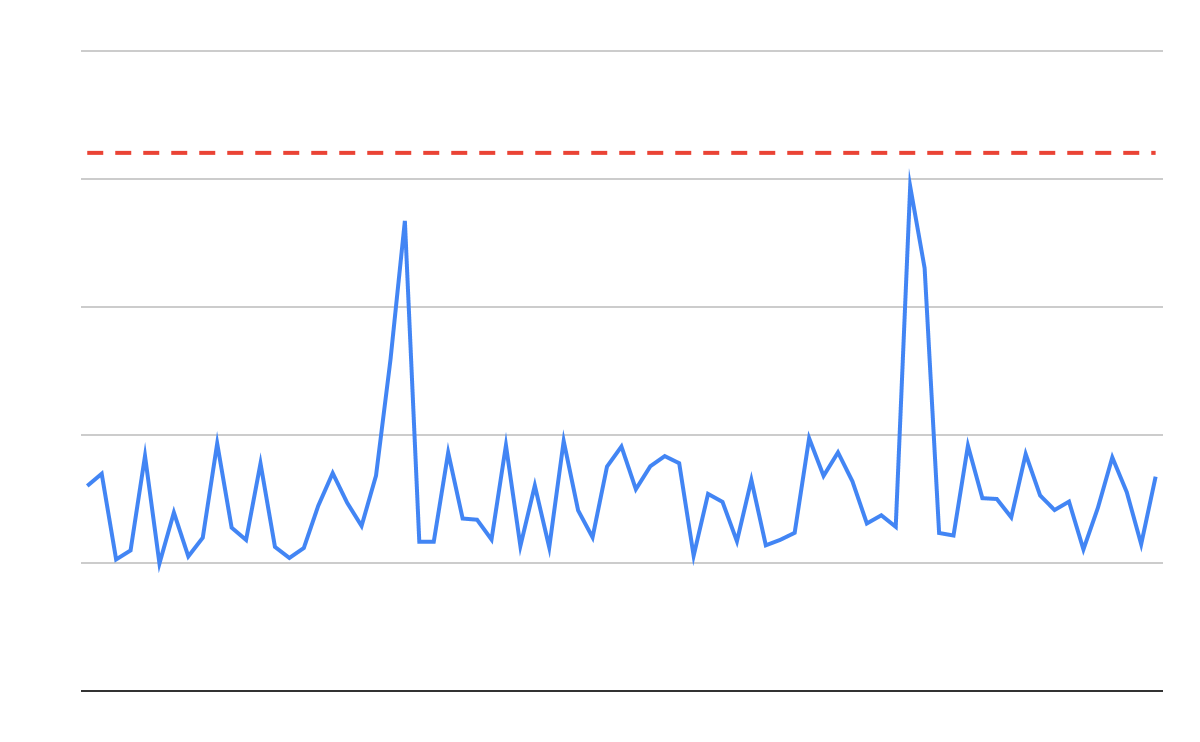

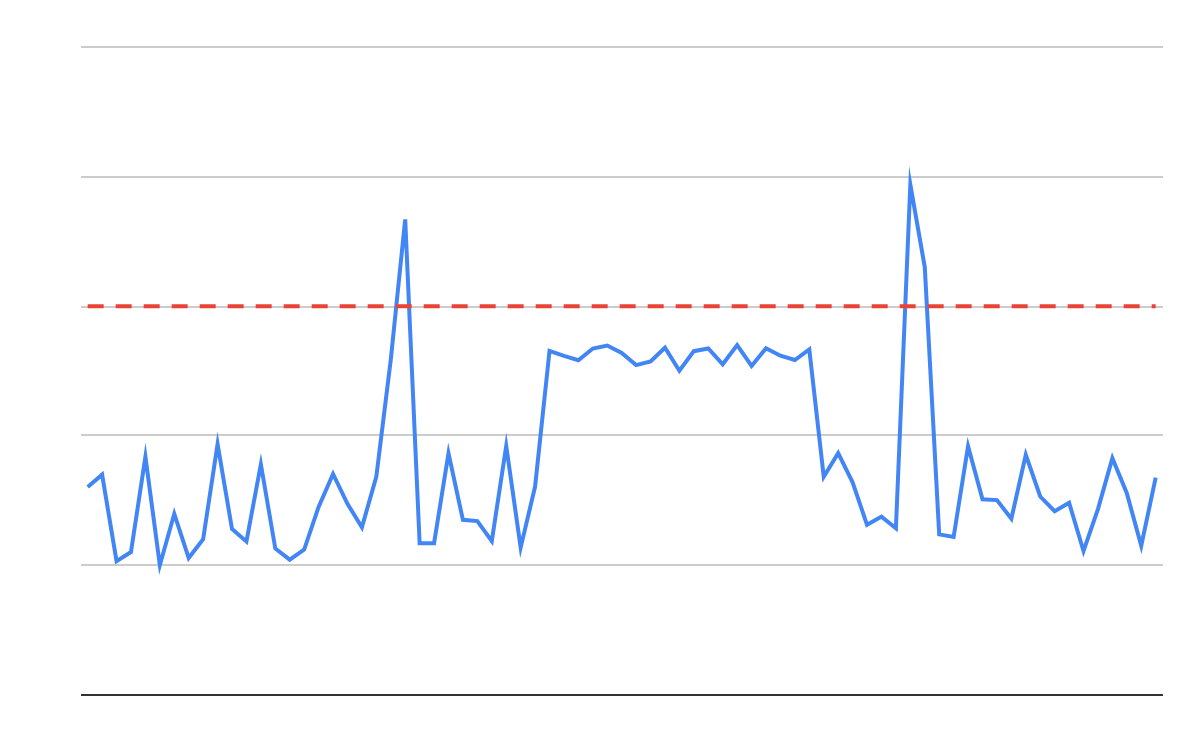

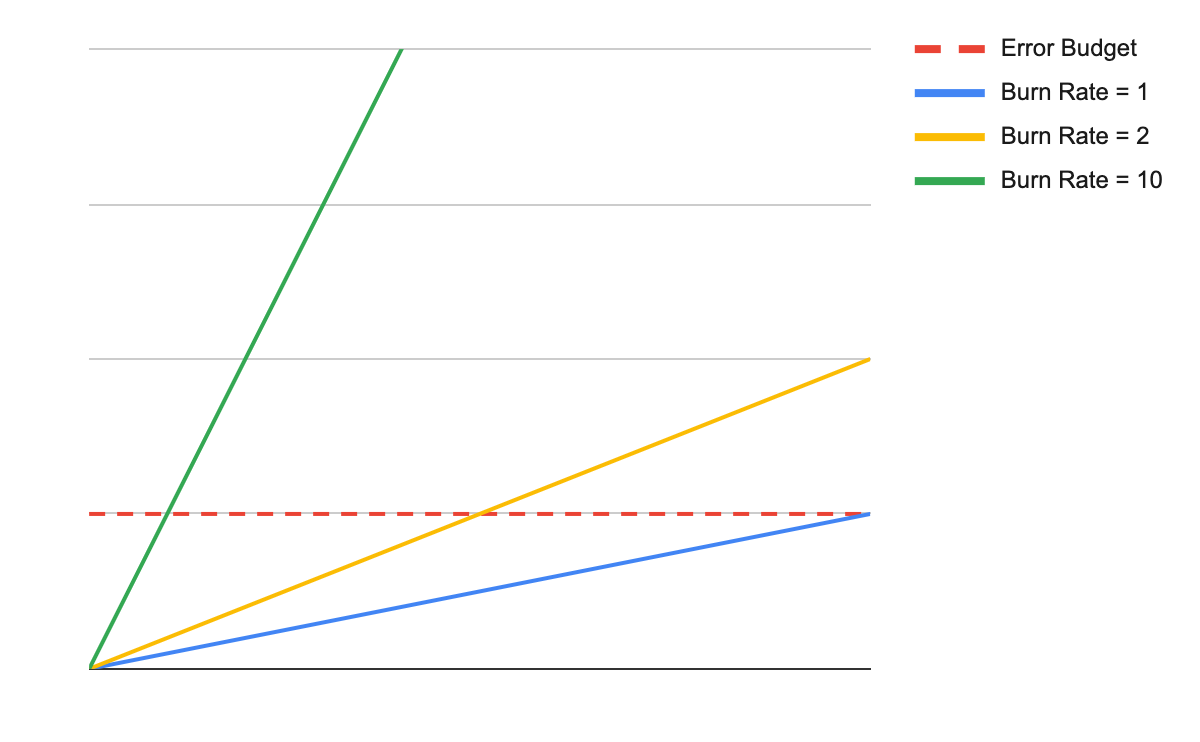

Over the last several months, the Cloudflare Radar team has been using an internal tool to identify traffic anomalies and post alerts for followup to a dedicated chat space. The companion blog post Gone Offline: Detecting Internet Outages goes into deeper technical detail about our traffic analysis and anomaly detection methodologies that power this internal tool.

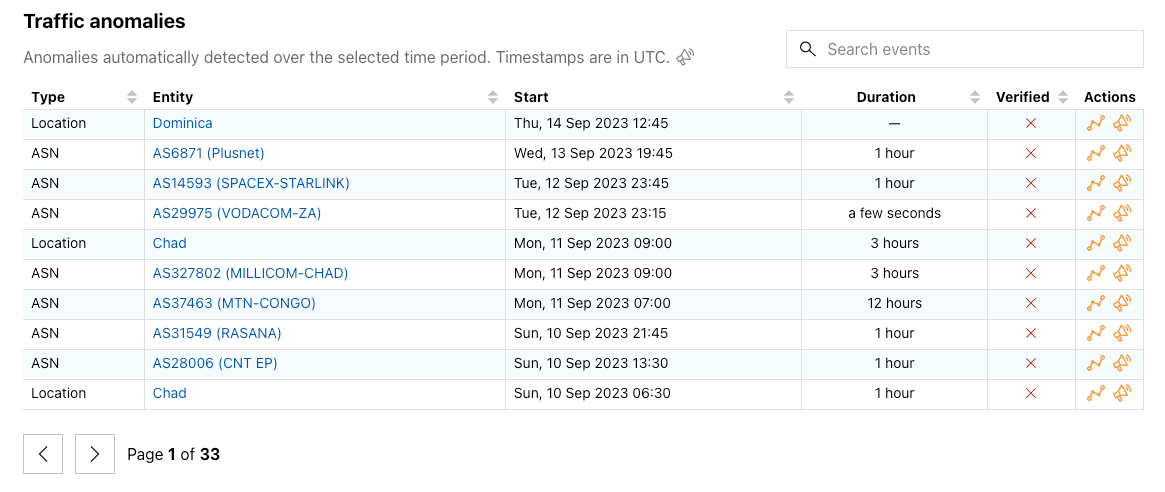

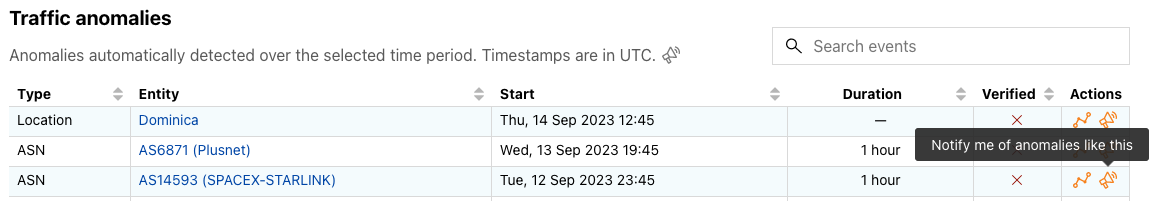

Many of these internal traffic anomaly alerts ultimately result in Outage Center entries and Cloudflare Radar social media posts. Today, we’re extending the Cloudflare Radar Outage Center and publishing information about these anomalies as we identify them. As shown in the figure below, the new Traffic anomalies table includes the type of anomaly (location or ASN), the entity where the anomaly was detected (country/region name or autonomous system), the start time, duration, verification status, and an “Actions” link, where the user can view the anomaly on the relevant entity traffic page or subscribe to a notification. (If manual review of a detected anomaly finds that it is present in multiple Cloudflare traffic datasets and/or is visible in third-party datasets, such as Georgia Tech’s IODA platform, we will mark it as verified. Unverified anomalies may be false positives, or related to Netflows collection issues, though we endeavor to minimize both.)

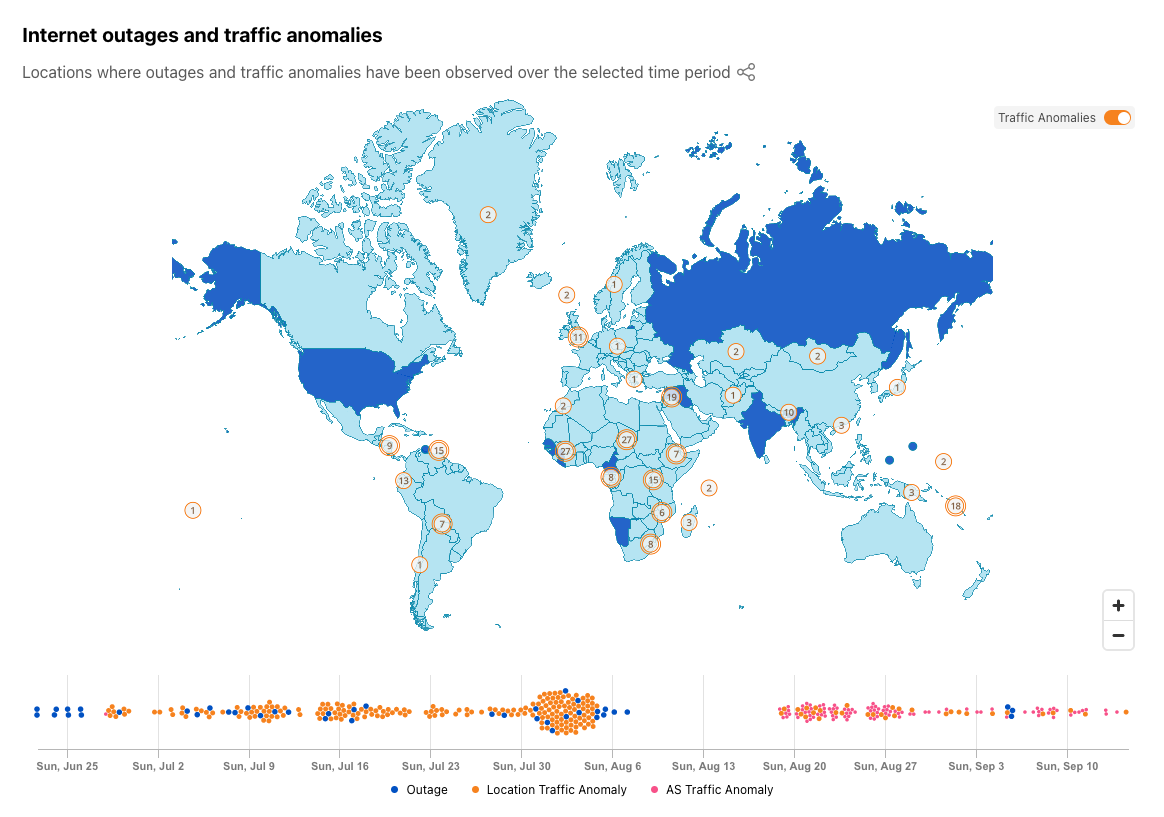

In addition to this new table, we have updated the Cloudflare Radar Outage Center map to highlight where we have detected anomalies, as well as placing them into a broader temporal context in a new timeline immediately below the map. Anomalies are represented as orange circles on the map, and can be hidden with the toggle in the upper right corner. Double-bordered circles represent an aggregation across multiple countries, and zooming in to that area will ultimately show the number of anomalies associated with each country that was included in the aggregation. Hovering over a specific dot in the timeline displays information about the outage or anomaly with which it is associated.

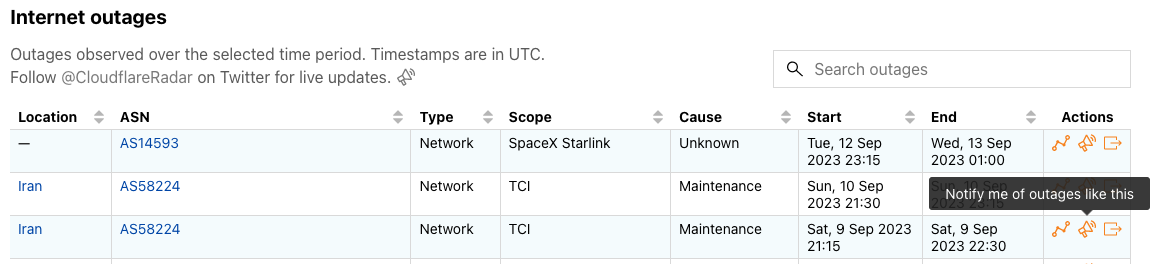

Internet outage information has been available via the Radar API since we launched the Outage Center and API in September 2022, and traffic anomalies are now available through a Radar API endpoint as well. An example traffic anomaly API request and response are shown below.

Example request:

curl --request GET \ --url https://api.cloudflare.com/client/v4/radar/traffic_anomalies \ --header 'Content-Type: application/json' \ --header 'X-Auth-Email: '

Example response:

{

"result": {

"trafficAnomalies": [

{

"asnDetails": {

"asn": "189",

"locations": {

"code": "US",

"name": "United States"

},

"name": "LUMEN-LEGACY-L3-PARTITION"

},

"endDate": "2023-08-03T23:15:00Z",

"locationDetails": {

"code": "US",

"name": "United States"

},

"startDate": "2023-08-02T23:15:00Z",

"status": "UNVERIFIED",

"type": "LOCATION",

"uuid": "55a57f33-8bc0-4984-b4df-fdaff72df39d",

"visibleInDataSources": [

"string"

]

}

]

},

"success": true

}

Notifications overview

Timely knowledge about Internet “events”, such as drops in traffic or routing issues, are potentially of interest to multiple audiences. Customer service or help desk agents can use the information to help diagnose customer/user complaints about application performance or availability. Similarly, network administrators can use the information to better understand the state of the Internet outside their network. And civil society organizations can use the information to inform action plans aimed at maintaining communications and protecting human rights in areas of conflict or instability. With the new notifications functionality also being launched today, you can subscribe to be notified about observed traffic anomalies, confirmed Internet outages, route leaks, or route hijacks, at a country or autonomous system level. In the following sections, we discuss how to subscribe to and configure notifications, as well as the information contained within the various types of notifications.

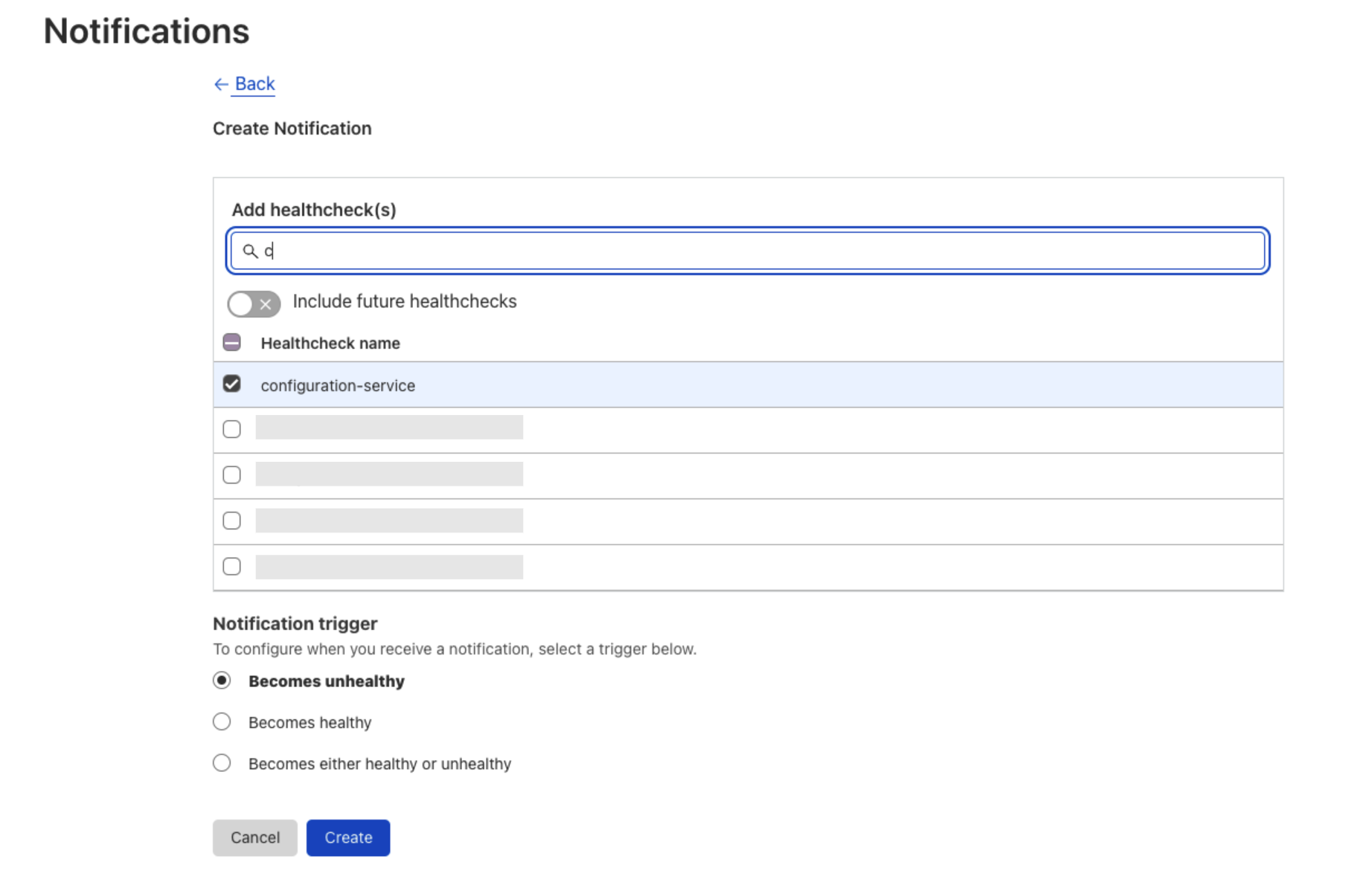

Subscribing to notifications

Note that you need to log in to the Cloudflare dashboard to subscribe to and configure notifications. No purchase of Cloudflare services is necessary — just a verified email address is required to set up an account. While we would have preferred to not require a login, it enables us to take advantage of Cloudflare’s existing notifications engine, allowing us to avoid having to dedicate time and resources to building a separate one just for Radar. If you don’t already have a Cloudflare account, visit https://dash.cloudflare.com/sign-up to create one. Enter your username and a unique strong password, click “Sign Up”, and follow the instructions in the verification email to activate your account. (Once you’ve activated your account, we also suggest activating two-factor authentication (2FA) as an additional security measure.)

Once you have set up and activated your account, you are ready to start creating and configuring notifications. The first step is to look for the Notifications (bullhorn) icon – the presence of this icon means that notifications are available for that metric — in the Traffic, Routing, and Outage Center sections on Cloudflare Radar. If you are on a country or ASN-scoped traffic or routing page, the notification subscription will be scoped to that entity.

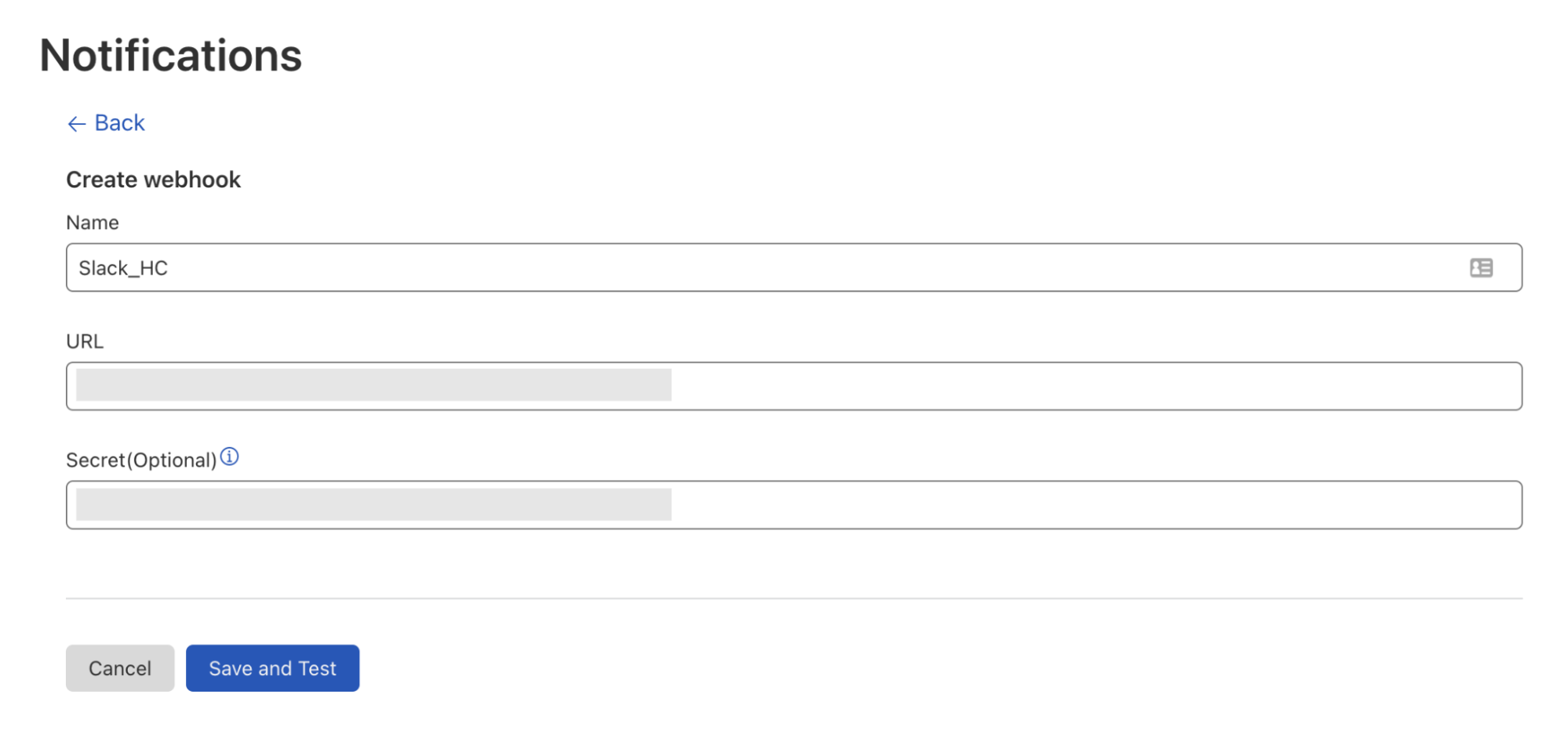

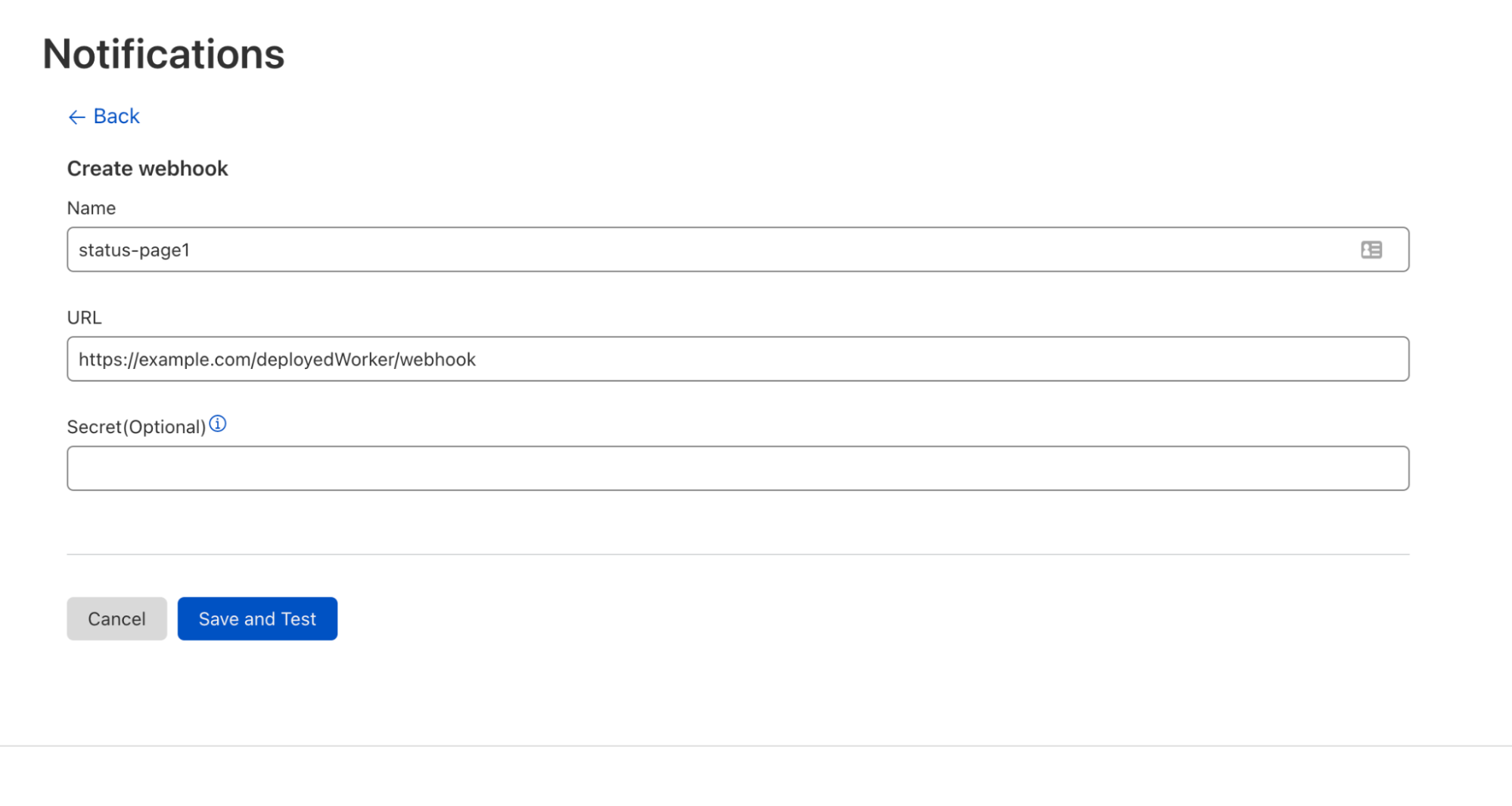

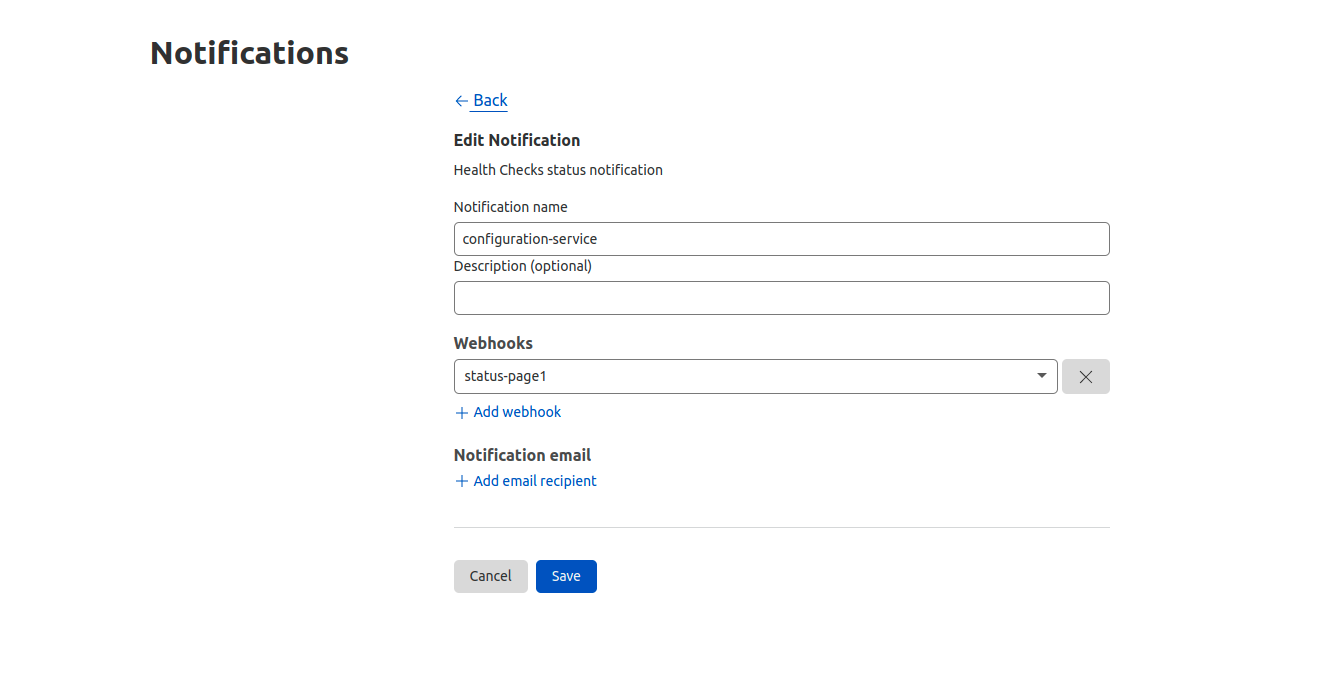

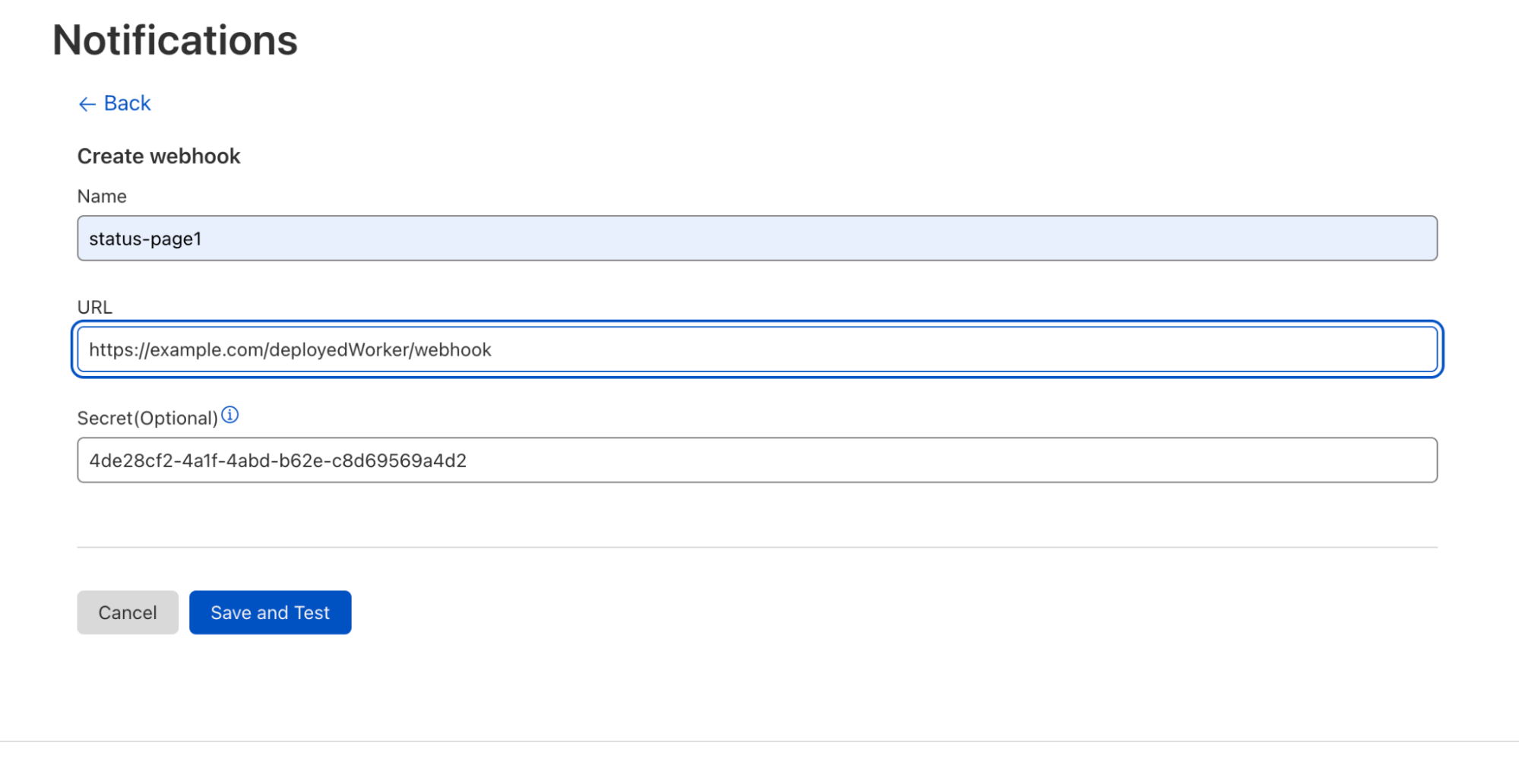

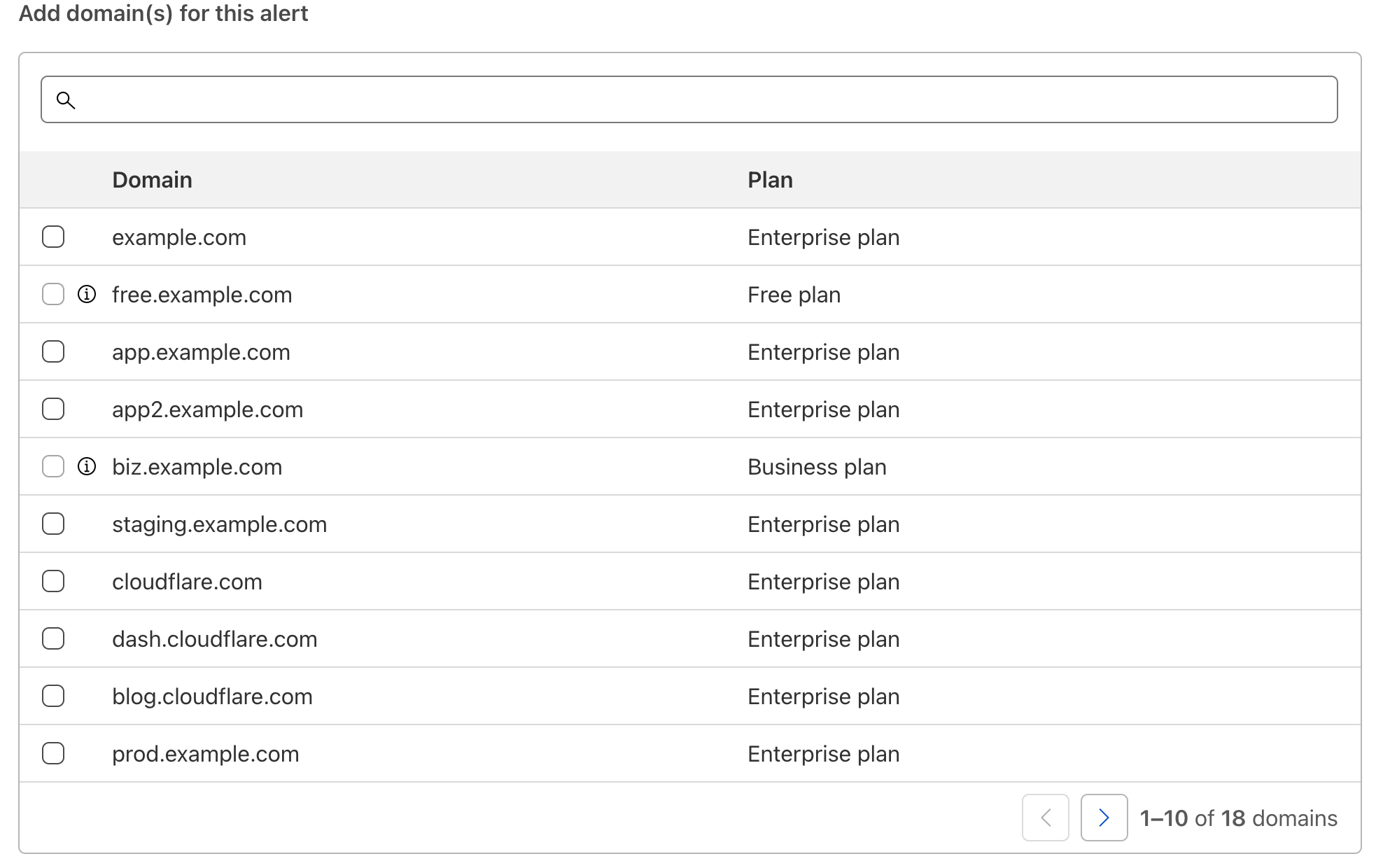

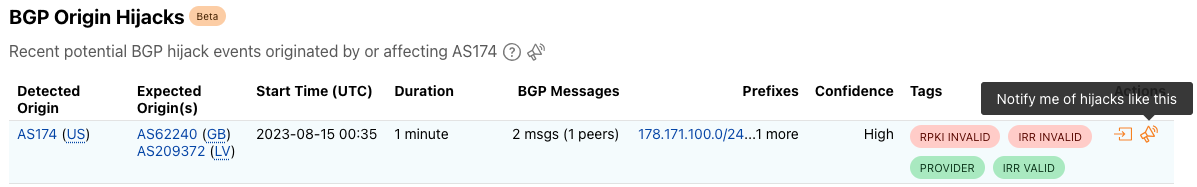

After clicking a notification icon, you’ll be taken to the Cloudflare login screen. Enter your username and password (and 2FA code if required), and once logged in, you’ll see the Add Notification page, pre-filled with the key information passed through from the referring page on Radar, including relevant locations and/or ASNs. (If you are already logged in to Cloudflare, then you’ll be taken directly to the Add Notification page after clicking a notification icon on Radar.) On this page, you can name the notification, add an optional description, and adjust the location and ASN filters as necessary. Enter an email address for notifications to be sent to, or select an established webhook destination (if you have webhooks enabled on your account).

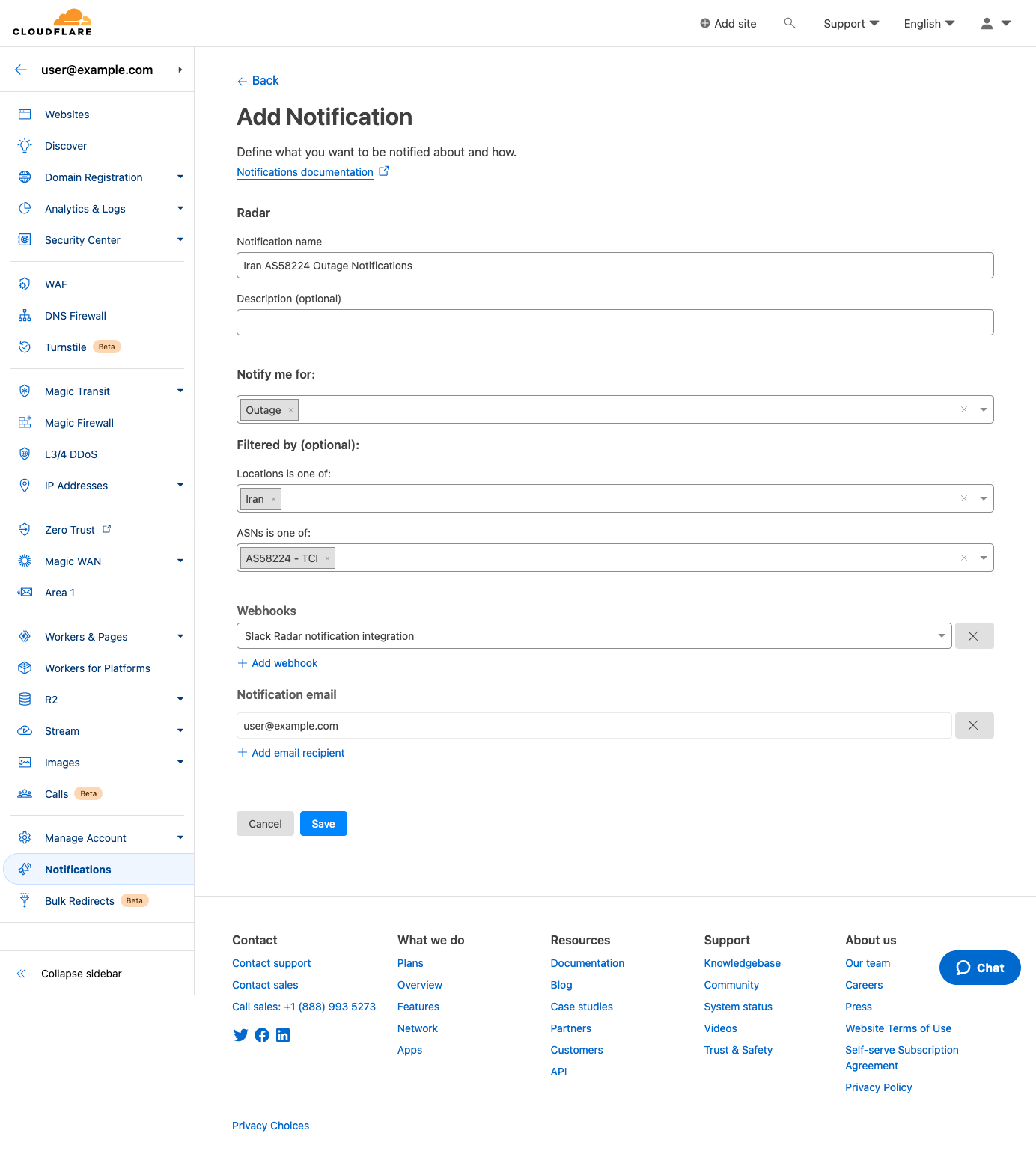

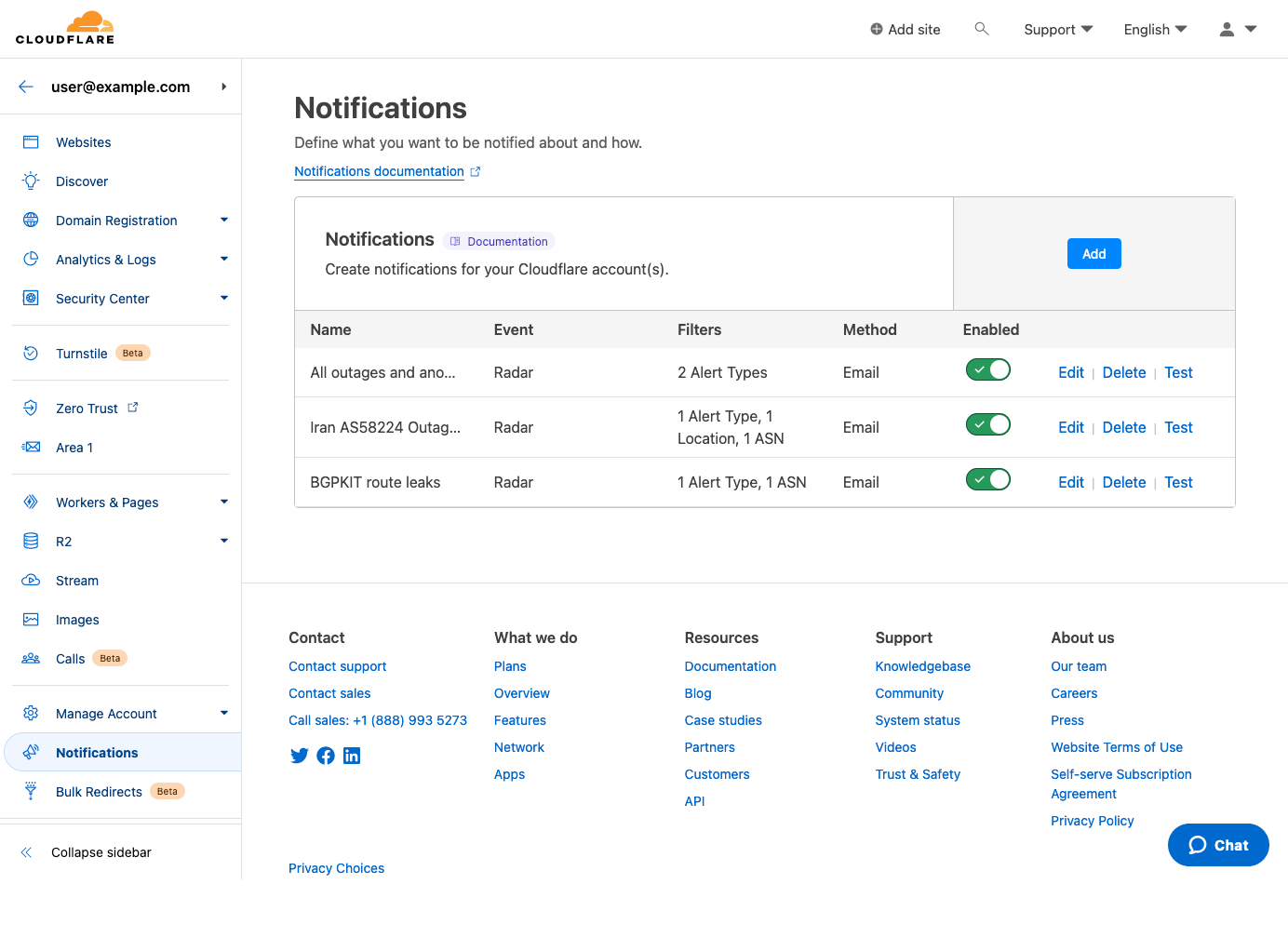

Click “Save”, and the notification is added to the Notifications Overview page for the account.

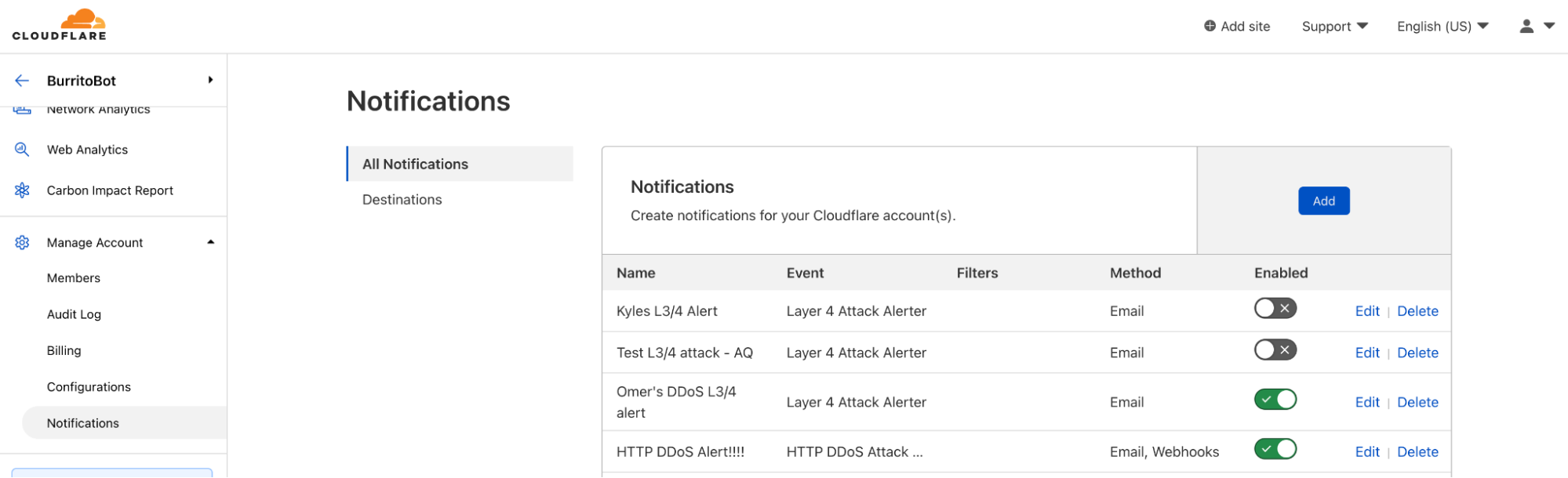

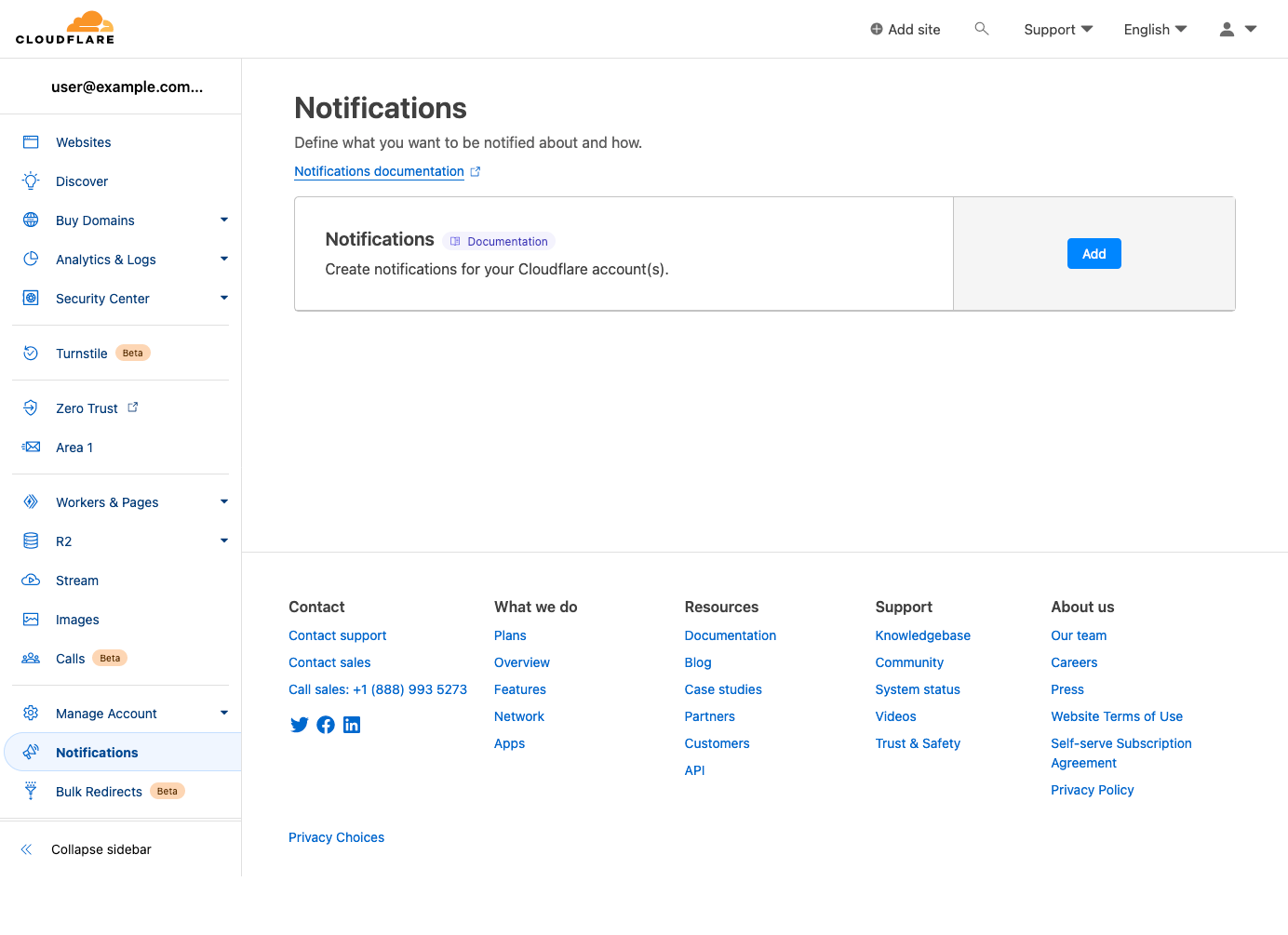

You can also create and configure notifications directly within Cloudflare, without starting from a link on Radar a Radar page. To do so, log in to Cloudflare, and choose “Notifications” from the left side navigation bar. That will take you to the Notifications page shown below. Click the “Add” button to add a new notification.

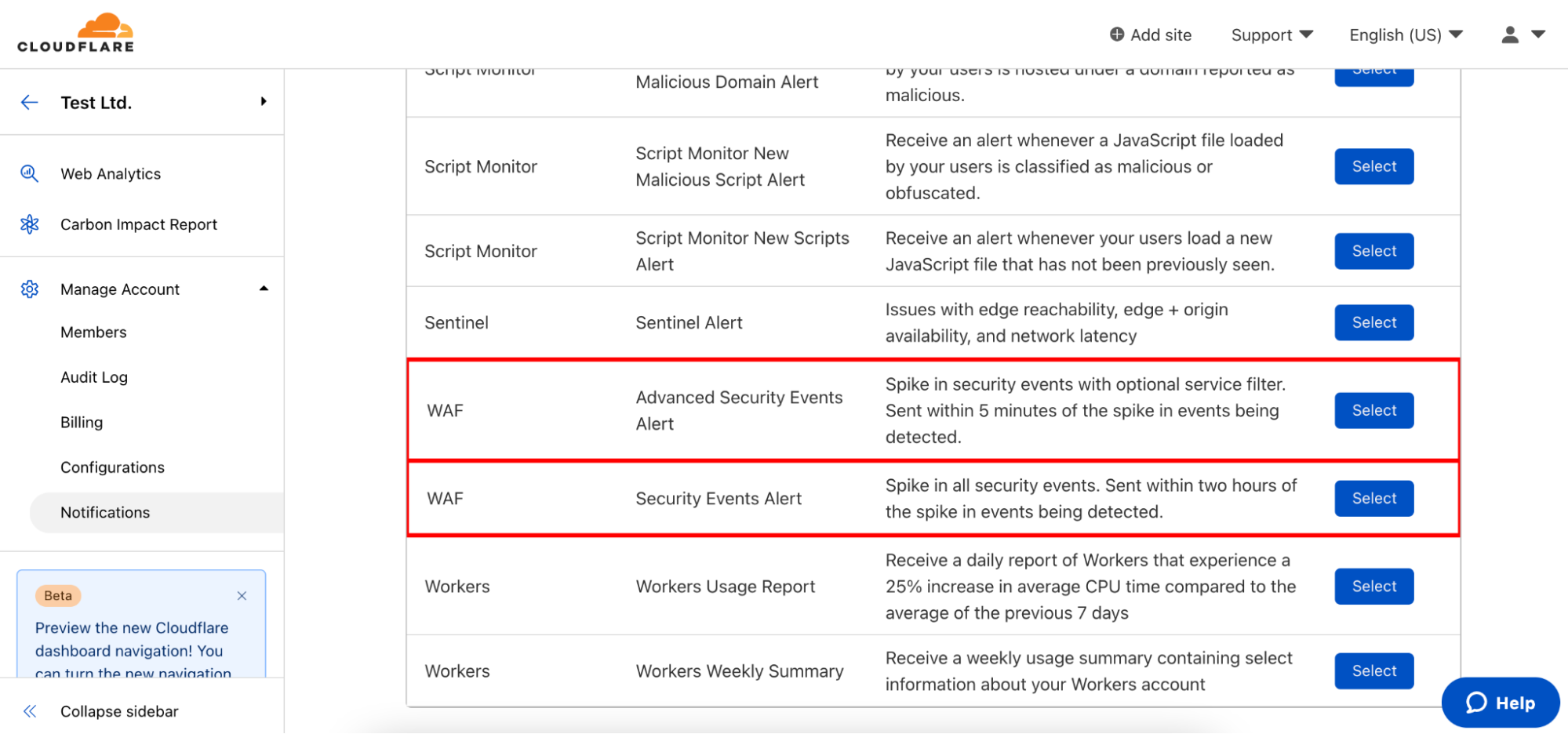

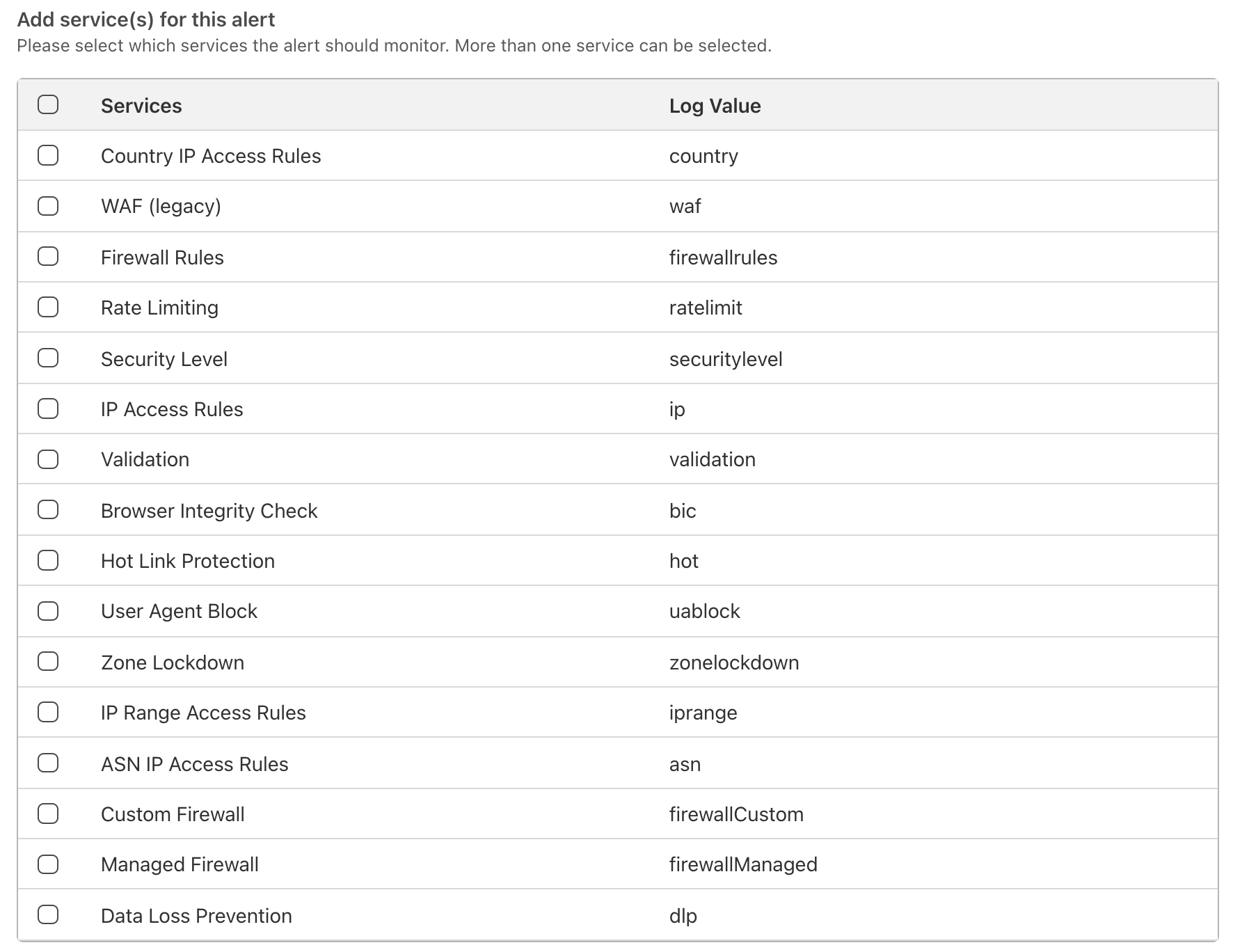

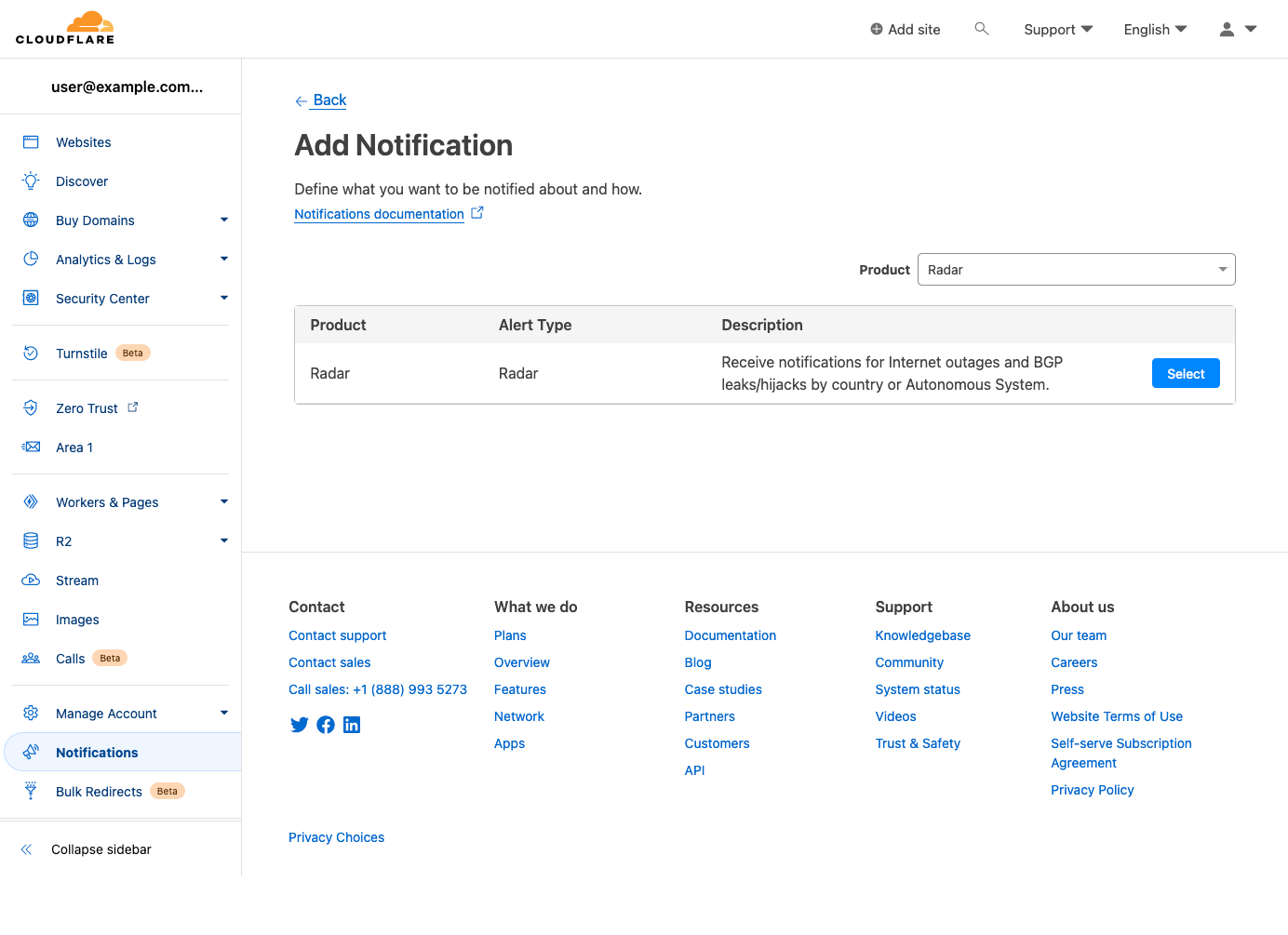

On the next page, search for and select “Radar” from the list of Cloudflare products for which notifications are available.

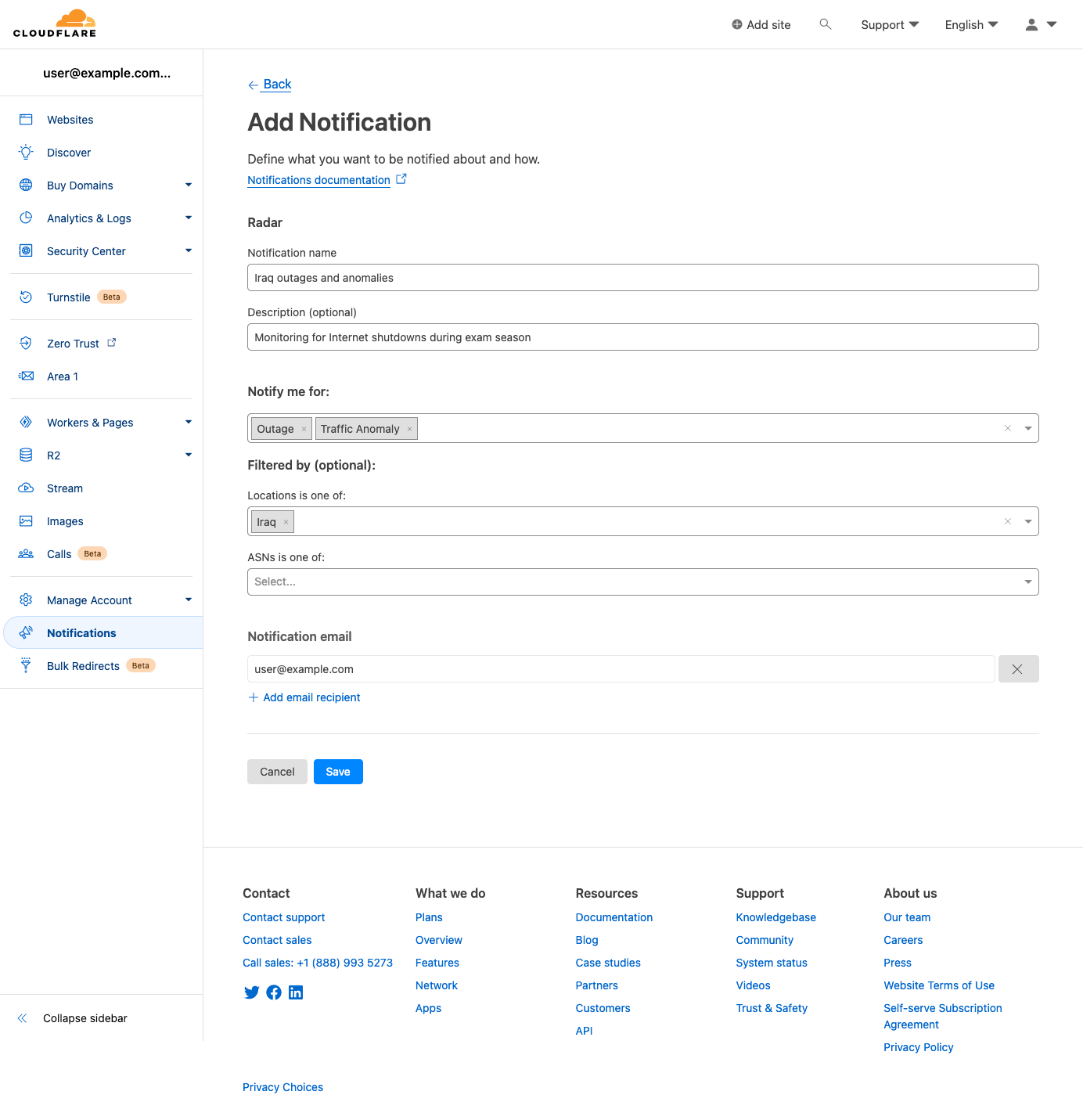

On the subsequent “Add Notification” page, you can create and configure a notification from scratch. Event types can be selected in the “Notify me for:” field, and both locations and ASNs can be searched for and selected within the respective “Filtered by (optional)” fields. Note that if no filters are selected, then notifications will be sent for all events of the selected type(s). Add one or more emails to send notifications to, or select a webhook target if available, and click “Save” to add it to the list of notifications configured for your account.

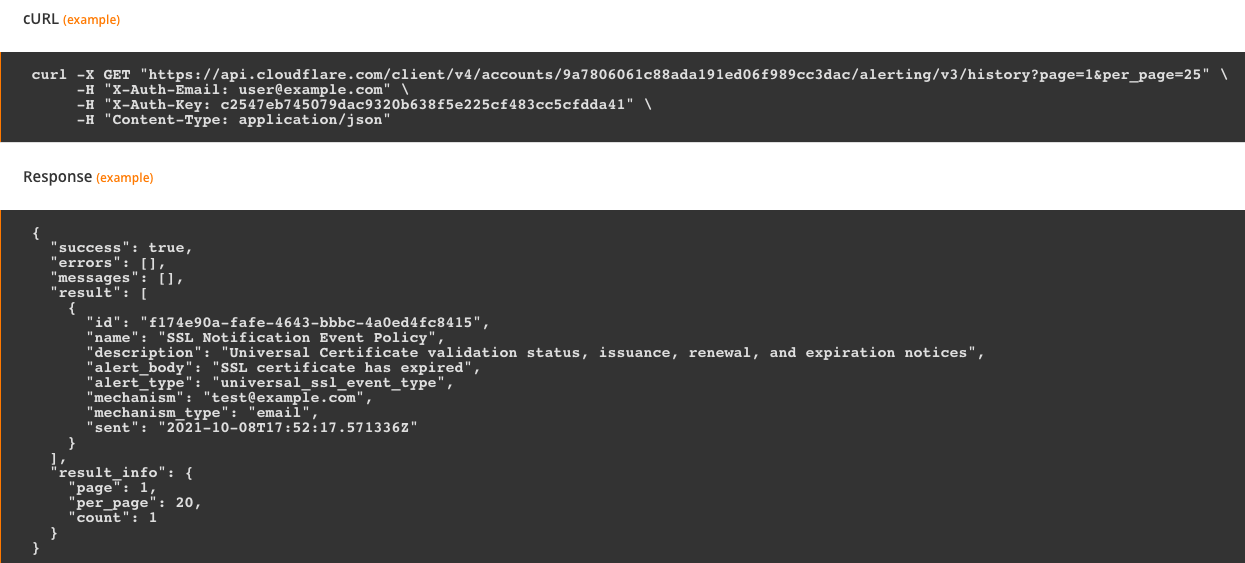

It is worth mentioning that advanced users can also create and configure notifications through the Cloudflare API Notification policies endpoint, but we will not review that process within this blog post.

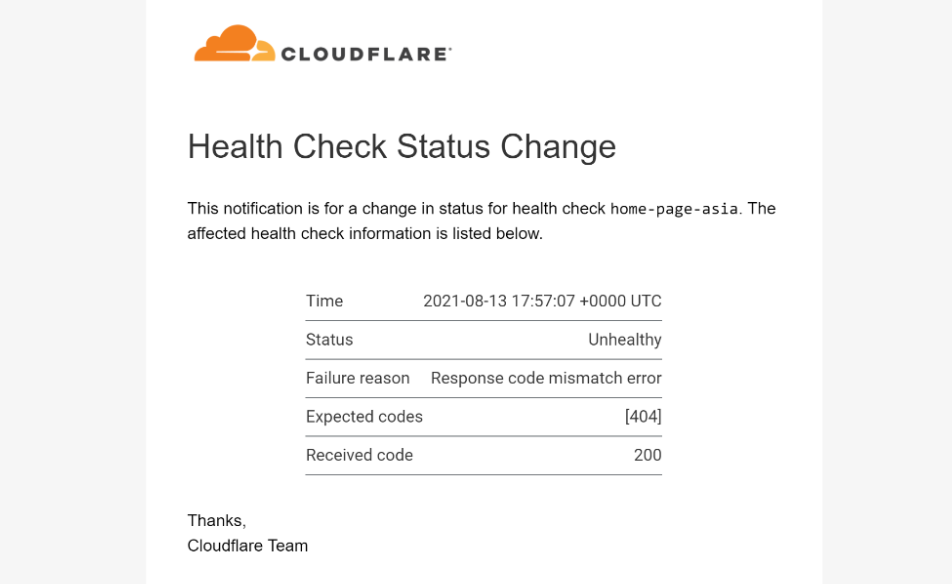

Notification messages

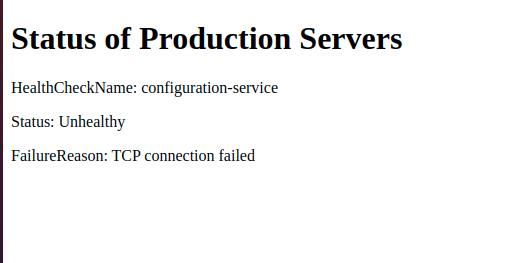

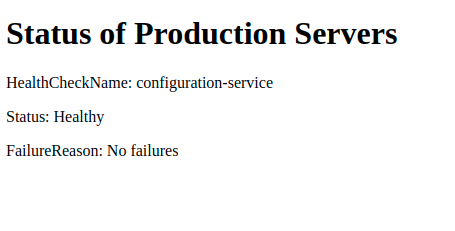

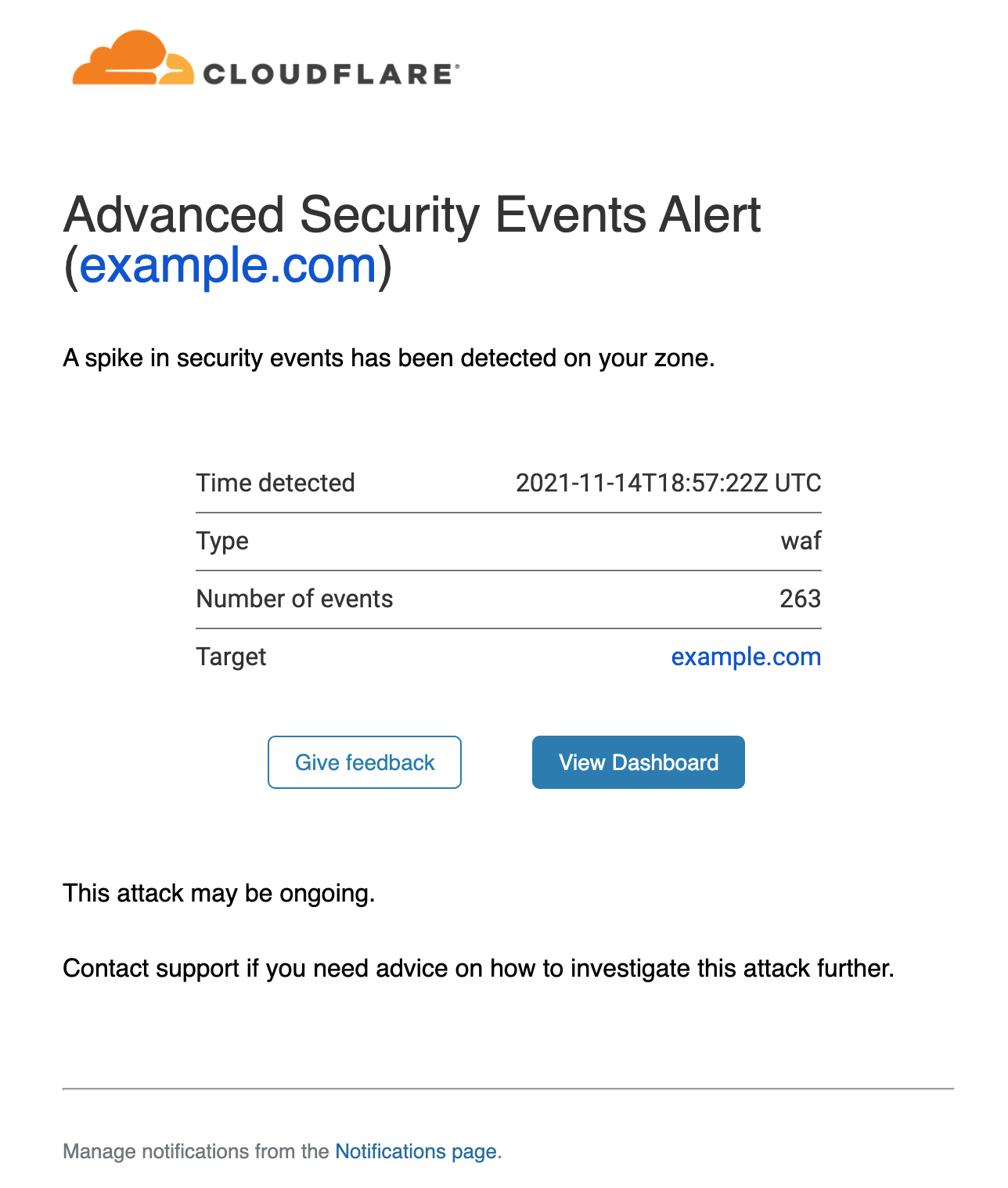

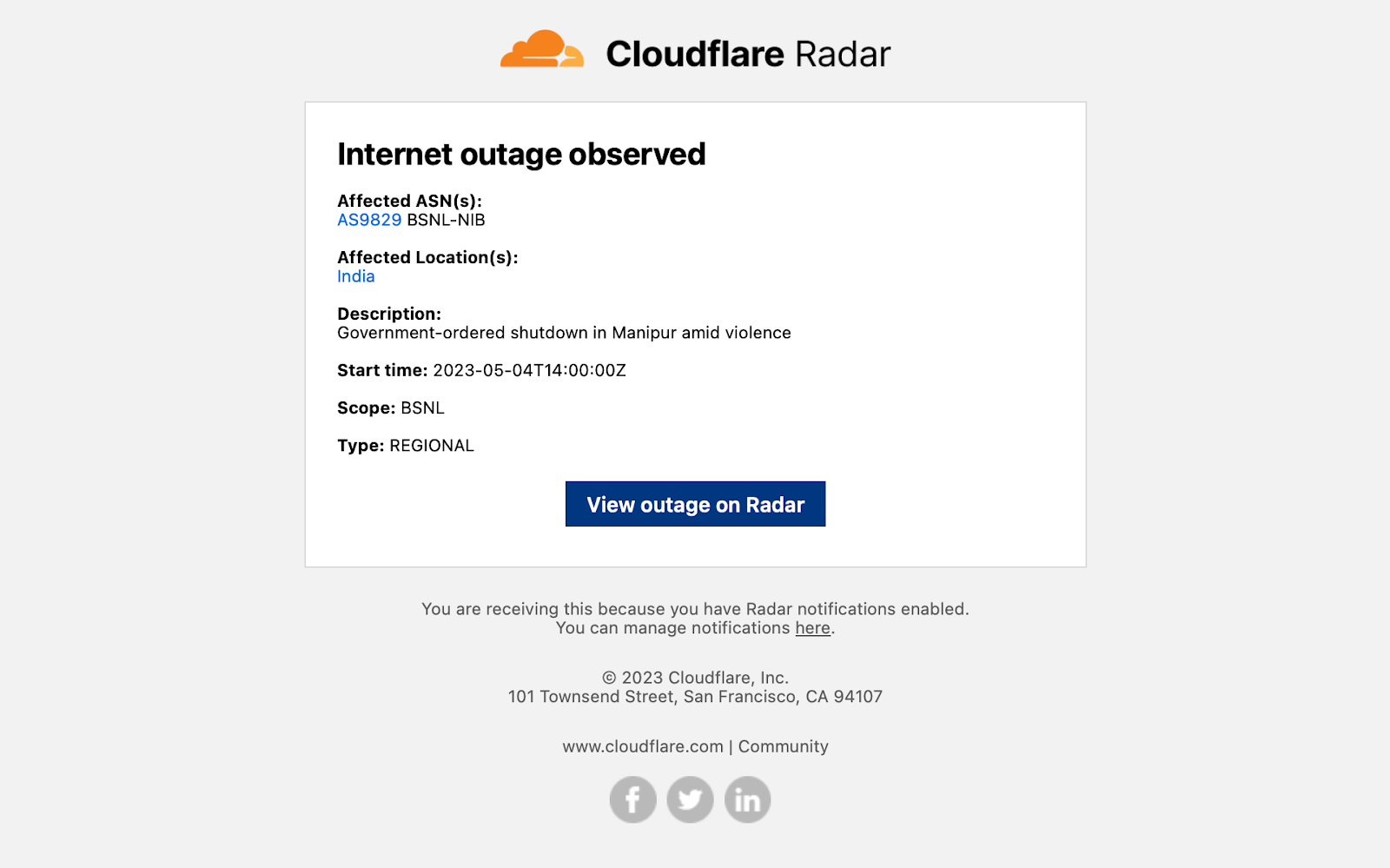

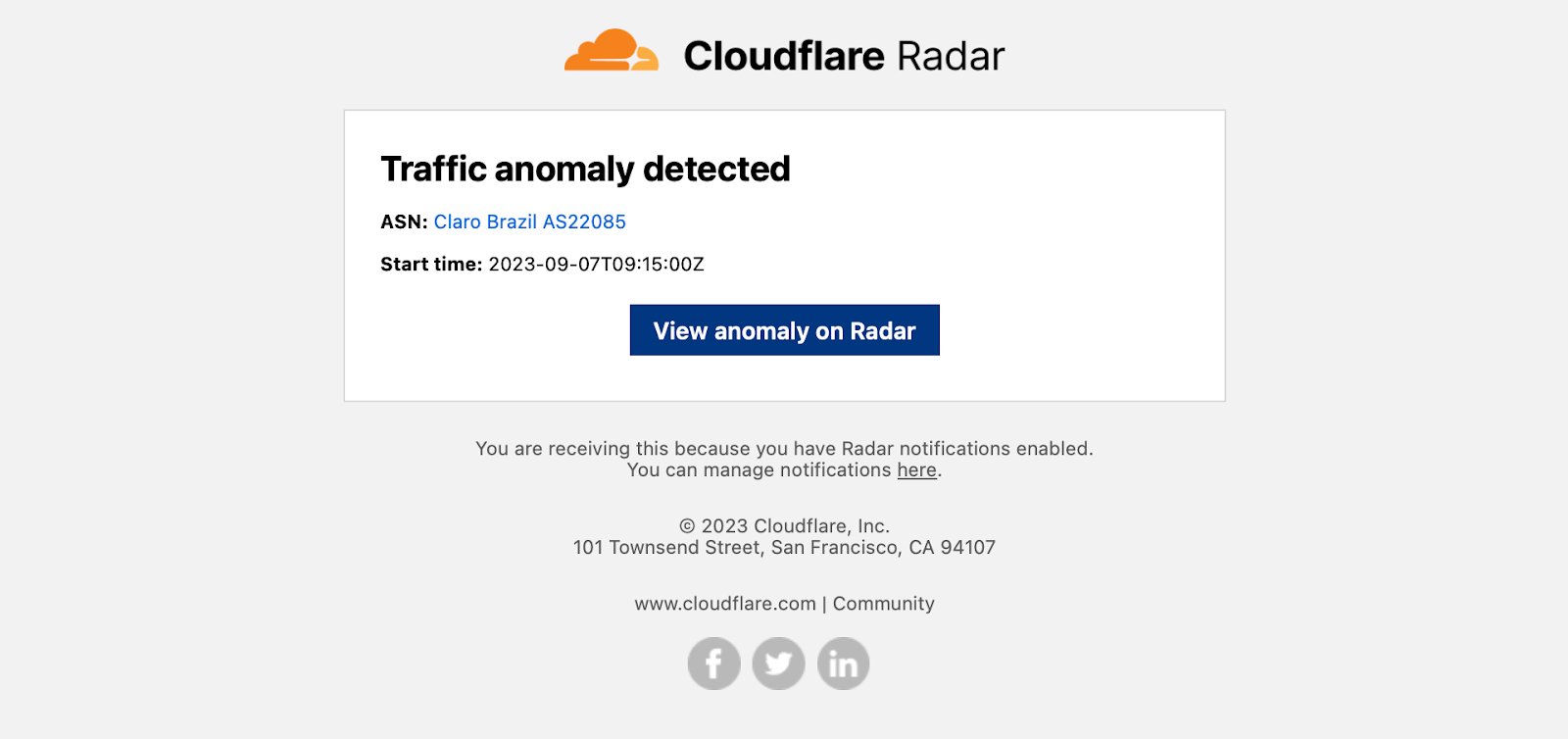

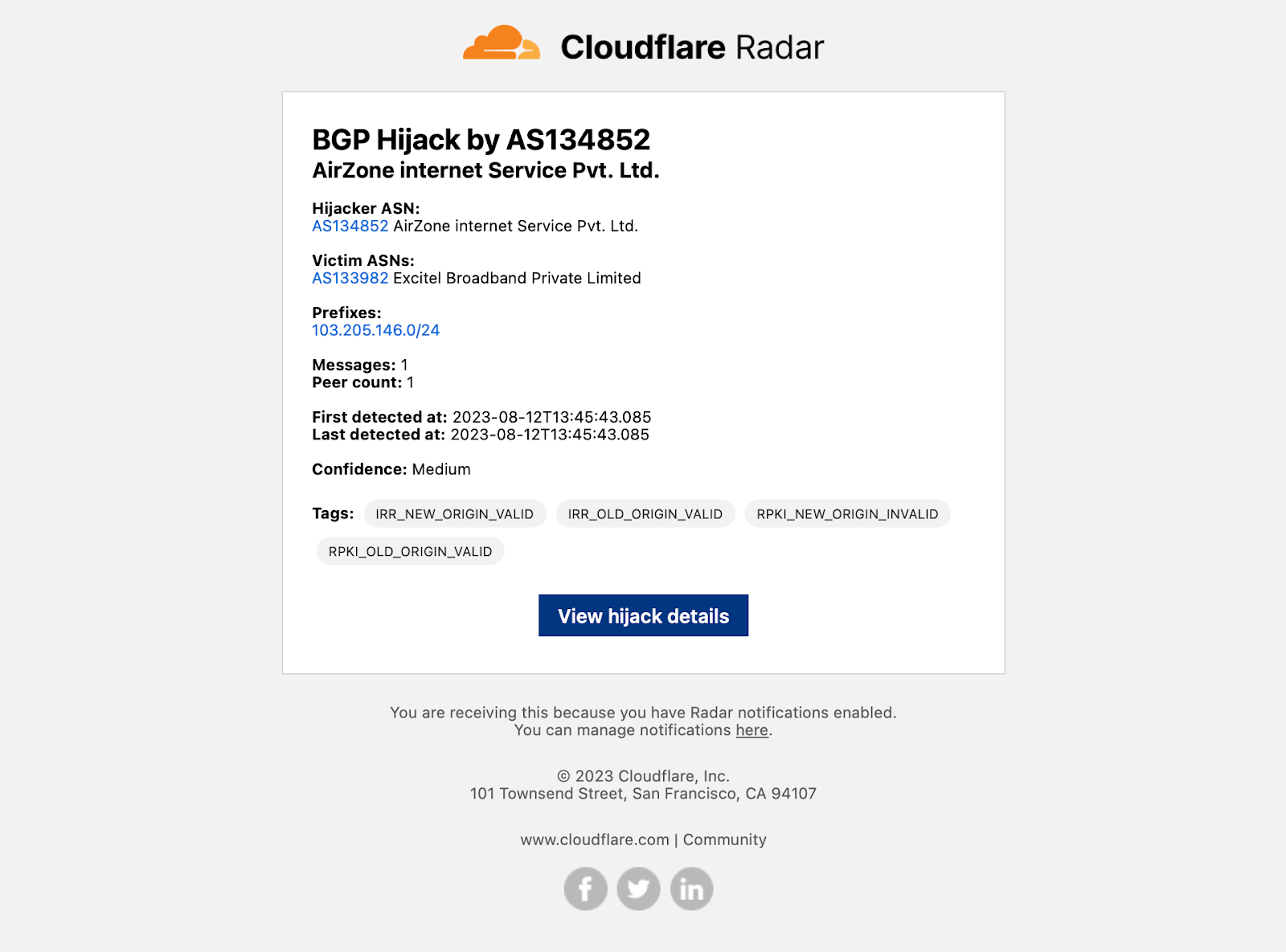

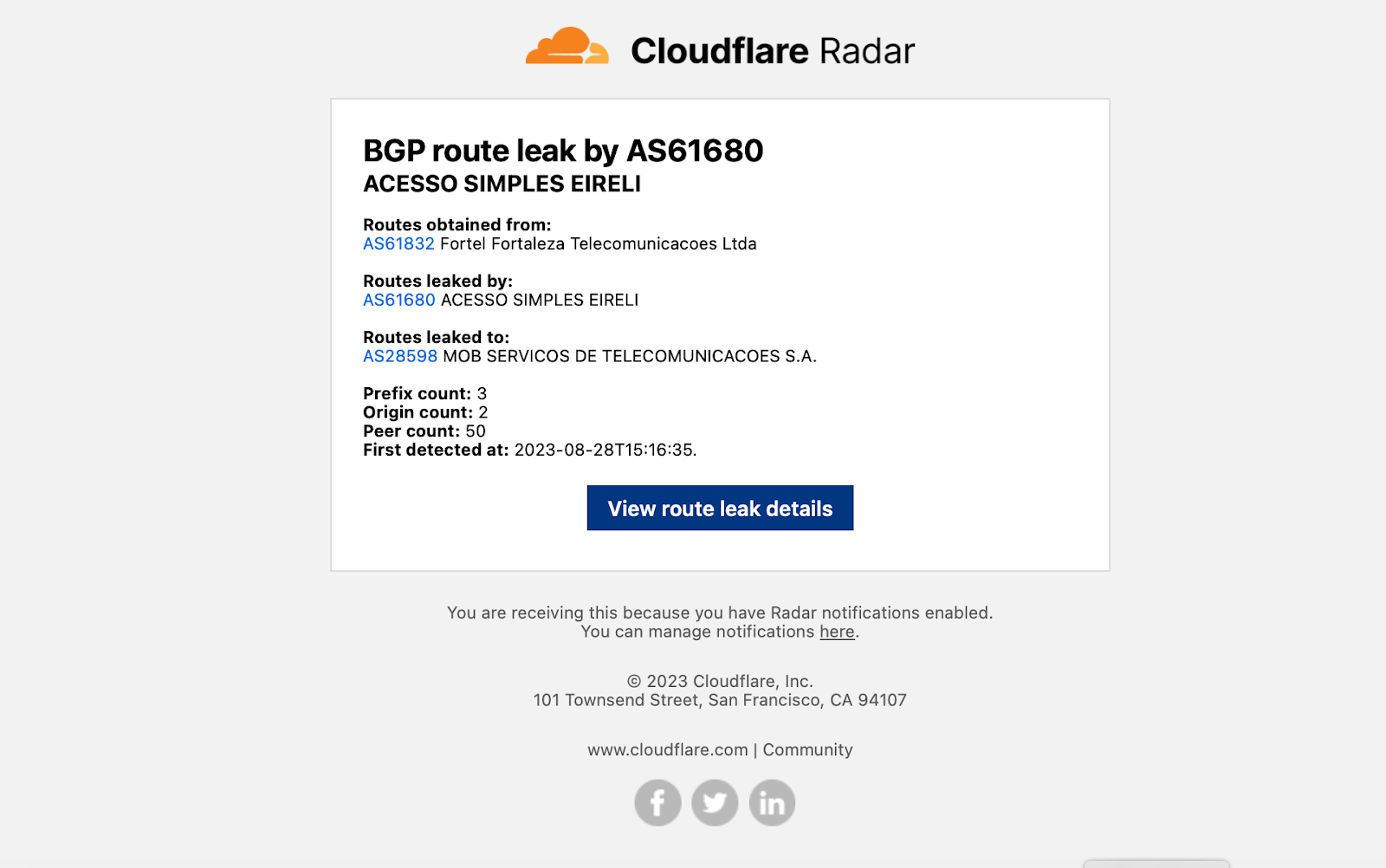

Example notification email messages are shown below for the various types of events. Each contains key information like the type of event, affected entities, and start time — additional relevant information is included depending on the event type. Each email includes both plaintext and HTML versions to accommodate multiple types of email clients. (Final production emails may vary slightly from those shown below.)

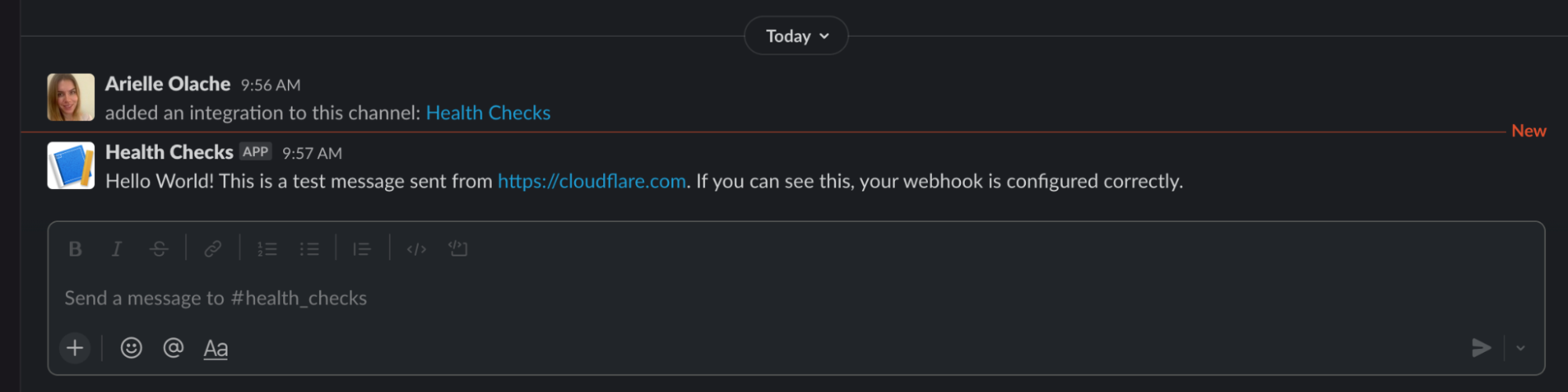

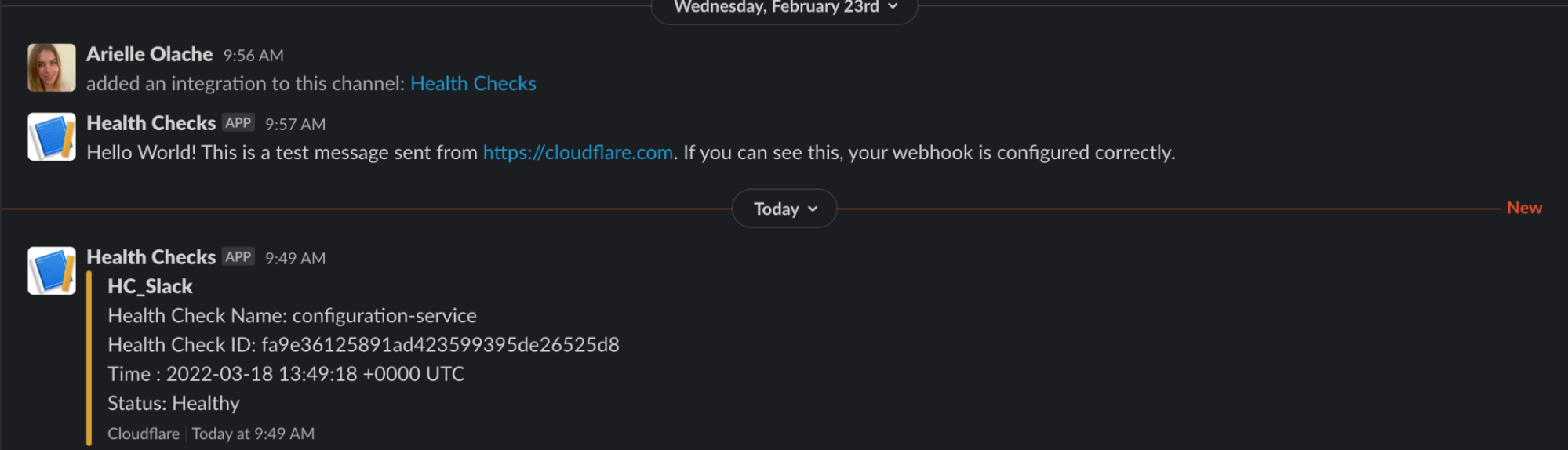

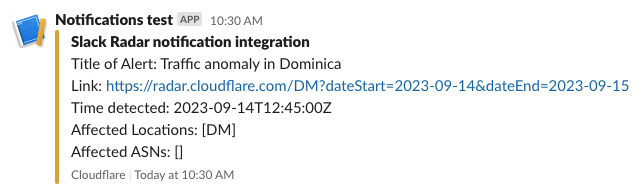

If you are sending notifications to webhooks, you can integrate those notifications into tools like Slack. For example, by following the directions in Slack’s API documentation, creating a simple integration took just a few minutes and results in messages like the one shown below.

Conclusion

Cloudflare’s unique perspective on the Internet provides us with near-real-time insight into unexpected drops in traffic, as well as potentially problematic routing events. While we’ve been sharing these insights with you over the past year, you had to visit Cloudflare Radar to figure out if there were any new “events”. With the launch of notifications, we’ll now automatically send you information about the latest events that you are interested in.

We encourage you to visit Cloudflare Radar to familiarize yourself with the information we publish about traffic anomalies, confirmed Internet outages, BGP route leaks, and BGP origin hijacks. Look for the notification icon on the relevant graphs and tables on Radar, and go through the workflow to set up and subscribe to notifications. (And don’t forget to sign up for a Cloudflare account if you don’t have one already.) Please send us feedback about the notifications, as we are constantly working to improve them, and let us know how and where you’ve integrated Radar notifications into your own tools/workflows/organization.

Follow Cloudflare Radar on social media at @CloudflareRadar (Twitter), cloudflare.social/@radar (Mastodon), and radar.cloudflare.com (Bluesky).