Post Syndicated from Matt Granger original https://www.youtube.com/watch?v=1F_4AC9_oSE

Common questions when evolving your VM program

Post Syndicated from Rapid7 original https://blog.rapid7.com/2022/11/02/common-questions-when-evolving-your-vm-program/

Authored by Natalie Hurd

Perhaps your organization is in the beginning stages of planning a digital transformation, and it’s time to start considering how the security team will adapt. Or maybe your digital transformation is well underway, and the security team is struggling to keep up with the pace of change. Either way, you’ve likely realized that the approach you’ve used with traditional infrastructure will need to evolve as you think about managing risk in your modern ecosystem. After all, a cloud instance running Kubernetes clusters to support application development is quite different from an on-premises Exchange server!

A recent webinar led by two of Rapid7’s leaders, Peter Scott (VP, Product Marketing) and Cindy Stanton (SVP, Product and Customer Marketing), explored the specific challenges of managing the evolution of risk across traditional and cloud environments. The challenges may be plentiful, but the strategies for success are just as numerous!

Over the course of several years, Rapid7 has helped many customers evolve their security programs to keep pace with the evolution of technology, and Peter and Cindy have noticed some common themes in what makes these organizations successful. They advise working with your team & other stakeholders to find answers to the following questions:

- What sorts of resources does your organization run in the cloud, and who owns them?

- What does “good” look like when securing your cloud assets, and how will you measure success?

- Which standards and frameworks is your company subject to, compliance or otherwise?

Gathering answers to these questions as early as possible will not only aid in the efficacy of your security program, it will also help to establish strong relationships & understanding amongst key stakeholders.

Establishing Ownership

Proactively identifying teams and individuals that own the assets in your environment will go a long way towards ensuring speed of resolution when risk is present. Peter strongly suggests working with your organization’s Product or Project Development teams to figure out who owns what and get it documented. This way, when you see a misconfiguration, vulnerability or threat that needs to be dealt with, you know exactly whom to contact to get it resolved, saving important time.

The owners you identify will not only play a role in fixing problems; they can also help make the changes needed to “shift left” and prevent problems in the first place. The sooner you can identify these stakeholders and build relationships with them, the more successful you’ll be in the long run.

Defining “Good” and Tracking Achievement

Since we’ve established that securing traditional environments is not the same as securing modern environments, we can also agree that the definition of success may not be the same either! After you’ve established ownership, Cindy notes that it’s also important to define what “good” looks like, and how you plan to measure & report on it. Once you’ve created a definition of “good” within your immediate team, it’s also important to socialize that with stakeholders across your organization and track progress towards achieving that state. Tracking & sharing progress is valuable whether your organization meets, exceeds or falls short of your goals; celebrating the wins is just as important as seeking to understand the losses!

Aligning to Standards and Frameworks

Every industry comes with its own set of compliance and regulatory standards that must be adhered to, and it’s important to understand how security fits in. Your team can use these frameworks as a North Star of sorts when considering how to secure your environment, and the cloud aspects of your environment are no exception. Ben Austin, the moderator of the webinar, provides some perspective on the utility of compliance as a method for demonstrating progress in risk reduction. If your assets are more compliant today than they were 3 months ago, that’s a win for every stakeholder involved. If assets are getting less compliant, then you can work with your already-identified asset owners to make a plan to turn the ship around, and contextualize the importance of remaining compliant with them.

Check out our two previous blogs in the series to learn more about Addressing the Evolving Attack Surface and Adapting your VM Program to Regain Control, and watch the full webinar replay any time!

Security updates for Wednesday

Post Syndicated from original https://lwn.net/Articles/913504/

Security updates have been issued by Debian (ffmpeg and linux-5.10), Fedora (libksba, openssl, and php), Gentoo (openssl), Mageia (curl, gdk-pixbuf2.0, libksba, nbd, php, and virglrenderer), Red Hat (kernel, kernel-rt, libksba, and openssl), SUSE (gnome-desktop, hdf5, hsqldb, kernel, nodejs10, openssl-3, php7, podofo, python-Flask-Security, python-lxml, and xorg-x11-server), and Ubuntu (backport-iwlwifi-dkms, firefox, ntfs-3g, and openssl).

NIKKOR Z 600mm – Faster, Sharper, Lighter and EXPENSIVE-ER…

Post Syndicated from Matt Granger original https://www.youtube.com/watch?v=km-WjZZ2aDk

The Ghost Ship of Diamond Shoals

Post Syndicated from The History Guy: History Deserves to Be Remembered original https://www.youtube.com/watch?v=S6XPAYjCCAc

Cloudflare is not affected by the OpenSSL vulnerabilities CVE-2022-3602 and CVE-2022-37

Post Syndicated from Evan Johnson original https://blog.cloudflare.com/cloudflare-is-not-affected-by-the-openssl-vulnerabilities-cve-2022-3602-and-cve-2022-37/

Yesterday, November 1, 2022, OpenSSL released version 3.0.7 to patch CVE-2022-3602 and CVE-2022-3786, two HIGH risk vulnerabilities in the OpenSSL 3.0.x cryptographic library. Cloudflare is not affected by these vulnerabilities because we use BoringSSL in our products.

These vulnerabilities are memory corruption issues through which attackers may be able to execute arbitrary code on a victim’s machine. CVE-2022-3602 was initially announced as a CRITICAL severity vulnerability, but it was downgraded to HIGH because exploiting it for remote code execution (RCE) was deemed difficult. Unlike previous situations where users of OpenSSL were almost universally vulnerable, software that uses other versions of OpenSSL (such as 1.1.1) is not vulnerable to this attack.

How do these issues affect clients and servers?

These vulnerabilities reside in the code responsible for X.509 certificate verification, which most often runs on the client side to authenticate the server and the certificate it presents. For a victim (client or server) to be affected, several conditions must be true:

- A malicious certificate needs to be signed by a Certificate Authority that the victim trusts.

- The victim needs to validate the malicious certificate or ignore a series of warnings from the browser.

- The victim needs to be running OpenSSL 3.0.x before 3.0.7.
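The version condition is the easiest one to check mechanically. The following is a minimal Python sketch, not an official tool: it assumes the affected range is exactly 3.0.0 through 3.0.6 as described here, parses a version banner such as the one `openssl version` prints, and, when run directly, reports on the OpenSSL build that the Python interpreter itself uses, which may differ from the system `libssl` loaded by other programs. The function names are hypothetical.

```python
import re
import ssl

# Affected range as described in this post: OpenSSL 3.0.0 up to, but not
# including, the fixed release 3.0.7. The 1.1.1 branch is not affected.
FIRST_AFFECTED = (3, 0, 0)
FIRST_FIXED = (3, 0, 7)


def parse_openssl_version(banner):
    """Extract (major, minor, patch) from a banner like 'OpenSSL 3.0.5 5 Jul 2022'.

    Returns None when the banner is not an OpenSSL one (for example LibreSSL).
    A 1.1.1 letter suffix such as '1.1.1f' is ignored, giving (1, 1, 1).
    """
    match = re.search(r"\bOpenSSL (\d+)\.(\d+)\.(\d+)", banner)
    if match is None:
        return None
    return tuple(int(part) for part in match.groups())


def is_affected(version):
    """True if a (major, minor, patch) tuple falls in 3.0.0 through 3.0.6."""
    return version is not None and FIRST_AFFECTED <= version < FIRST_FIXED


if __name__ == "__main__":
    # ssl.OPENSSL_VERSION is the banner of the library this interpreter uses,
    # not necessarily the system libssl that other programs load.
    version = parse_openssl_version(ssl.OPENSSL_VERSION)
    status = "affected" if is_affected(version) else "not affected"
    print(f"{ssl.OPENSSL_VERSION}: {status}")
```

The sketch parses the human-readable banner rather than a numeric field because the banner reads the same way across the 1.1.1 and 3.x branches; tuple comparison then handles the range check.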

For a client to be affected by this vulnerability, they would have to visit a malicious site that presents a certificate containing an exploit payload. In addition, this malicious certificate would have to be signed by a trusted certificate authority (CA).

Servers with a vulnerable version of OpenSSL can be attacked if they support mutual authentication, a scenario where both the client and the server provide a valid, signed X.509 certificate, and the client presents a certificate with an exploit payload to the server.

How should you handle this issue?

If you’re managing services that run OpenSSL: you should patch vulnerable OpenSSL packages. On a Linux system you can determine if you have any processes dynamically loading OpenSSL with the lsof command. Here’s an example of finding OpenSSL being used by NGINX.

root@55f64f421576:/# lsof | grep libssl.so.3

nginx 1294 root mem REG 254,1 925009 /usr/lib/x86_64-linux-gnu/libssl.so.3 (path dev=0,142)
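Where `lsof` is not installed, the same information can be read from `/proc/<pid>/maps`, which lists every file a process has mapped into memory. The sketch below is an illustrative Python alternative that assumes a Linux `/proc` filesystem; the helper names are hypothetical, and like `lsof` it only sees processes you have permission to inspect.

```python
import os

SONAME = "libssl.so.3"  # OpenSSL 3.x shared library name, as in the lsof example


def mapped_paths(maps_text, soname=SONAME):
    """Return mapped file paths in /proc/<pid>/maps text whose name contains soname.

    Each maps line is: address perms offset dev inode [pathname]. Anonymous
    mappings have no pathname and are skipped.
    """
    paths = set()
    for line in maps_text.splitlines():
        fields = line.split(maxsplit=5)
        if len(fields) == 6 and soname in fields[5]:
            paths.add(fields[5].strip())
    return paths


def scan_processes(soname=SONAME, proc_root="/proc"):
    """Yield (pid, paths) for every readable process that maps soname."""
    if not os.path.isdir(proc_root):
        return  # not Linux, or /proc is not mounted
    for entry in os.listdir(proc_root):
        if not entry.isdigit():
            continue
        maps_file = os.path.join(proc_root, entry, "maps")
        try:
            with open(maps_file, encoding="utf-8", errors="replace") as f:
                found = mapped_paths(f.read(), soname)
        except OSError:
            # The process exited, or we lack permission (run as root to see all).
            continue
        if found:
            yield int(entry), found


if __name__ == "__main__":
    for pid, paths in scan_processes():
        print(pid, ", ".join(sorted(paths)))
```

A path ending in ` (deleted)` means the process still has the old library mapped after the package was upgraded, so that service needs a restart before the fix takes effect.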

Once the package maintainers for your Linux distribution release OpenSSL 3.0.7, you can patch by updating your package sources and upgrading the libssl3 package. On Debian and Ubuntu this can be done with apt-get:

root@55f64f421576:/# apt-get --only-upgrade install libssl3

That said, you could be running a vulnerable version of OpenSSL that lsof can’t find because the program is statically linked. It’s important to update any statically linked software that you are responsible for maintaining, and over the coming days to update your operating system and other installed software that might contain the vulnerable OpenSSL versions.
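For statically linked programs, one rough heuristic is to search the binary for the version banner that OpenSSL compiles in (the `OPENSSL_VERSION_TEXT` string). This Python sketch assumes that banner survives in the binary; a stripped or heavily optimized build may omit it, so a miss is not proof that OpenSSL is absent, and a hit only shows that a matching string is present. The function names are illustrative.

```python
import re
import sys

# OpenSSL builds a version banner (OPENSSL_VERSION_TEXT) into the library,
# e.g. b"OpenSSL 3.0.5 5 Jul 2022" or b"OpenSSL 1.1.1s  1 Nov 2022".
BANNER = re.compile(rb"OpenSSL (\d+)\.(\d+)\.(\d+)[a-z]? +\d{1,2} [A-Z][a-z]{2} \d{4}")


def embedded_versions(data):
    """Return the set of (major, minor, patch) tuples for banners found in data."""
    return {tuple(int(g) for g in m.groups()) for m in BANNER.finditer(data)}


def report(path):
    """Print each OpenSSL version found in the file at path, flagging 3.0.0-3.0.6."""
    with open(path, "rb") as f:
        data = f.read()  # whole file in memory; acceptable for a one-off check
    for version in sorted(embedded_versions(data)):
        flag = "AFFECTED" if (3, 0, 0) <= version < (3, 0, 7) else "ok"
        print(f"{path}: OpenSSL {'.'.join(map(str, version))} {flag}")


if __name__ == "__main__":
    for path in sys.argv[1:]:
        report(path)
```

Pass one or more binary paths on the command line; each distinct banner found is printed once.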

Key takeaways

Cloudflare’s use of BoringSSL helped us be confident that the issue would not impact us prior to the release date of the vulnerabilities.

More generally, the vulnerability is a reminder that memory safety is still an important issue. This issue may be difficult to exploit because it requires a maliciously crafted certificate that is signed by a trusted CA, and certificate issuers are likely to begin validating that the certificates they sign don’t contain payloads that exploit these vulnerabilities. However, it’s still important to patch your software and upgrade your vulnerable OpenSSL packages to OpenSSL 3.0.7 given the severity of the issue.


Addiction in Bulgaria: a brief first-hand account

Post Syndicated from Юлия Георгиева original https://toest.bg/zavisimostite-v-bulgaria/

Traditionally, the topic of addiction in Bulgaria has been heavily mythologized, which leads to it being swept under the rug. Just as the fearsome karakondzhuli and samodivi are not spoken of aloud or after dark, this topic has been placed in the realm of mystical evil. According to the popular mythology, addiction is a problem of the new era, of the time of the Transition, and under socialism there was no such thing. In fact, our country has a rather rich culture of psychoactive substance use, rooted far back in our prehistory.

Let us recall that among the first wine producers in the world were the Thracians, who lived in these lands and began making versions of wine more than 5,000 years ago. Back then it was regarded as a sacred drink, a bridge to the divine, a way to communicate with higher powers. Access to it was a privilege of the chosen few. But the Thracians were far from limiting themselves to alcohol. In her paper titled “On the Rhodopes, Alkaloid-Containing Plants, and Their Use in Religious Rituals and Medicine,” Lidia Kirilova writes:

There is no doubt whatsoever that the Thracians knew, and used in their magical rituals, some of the many medicinal plants growing on the Balkan Peninsula. According to Herodotus, they “threw the tops of hemp into the fire and breathed the smoke through hollow reed stalks, which made them as if drunk until they finally fell asleep.” There are vague accounts that along the Struma valley, priests prepared an intoxicating drink from jimsonweed.

Later, wine also found its place in the Christian religious tradition. In Orthodoxy, Holy Communion involves the giving of bread and wine, which symbolize the body and blood of Jesus, and wine is present in practically every church ritual across a person’s life cycle, from baptism through marriage to burial. One of the most beloved and revered Bulgarian saints is Trifon Zarezan, heir to Dionysus. Historically, wine has also been a symbol of strength and power, a privilege of the victors: Krum drank wine from the skull of the defeated Nikephoros.

In more recent times rakia also became popular and was quickly embraced as a traditional drink. Interestingly, it is so important to Bulgarians that even under Ottoman rule no one interfered with its home production, even though Islam forbids alcohol. The tradition of producing and drinking homemade rakia continues to this day, and politicians cannot muster the courage to bring its production under controls that would guarantee the drink’s safety. Over the past fifteen or so years, the issue has been raised regularly and tossed like a hot potato between institutions trying to avoid responsibility. Curiously, in 2015 one attempt to regulate rakia production led small producers to file a complaint with the Ombudsman, Maya Manolova, who even referred the case to the Constitutional Court.

All of this history gives a clear picture of how important alcohol and certain other psychoactive substances (tobacco is still extremely popular in the country) are to Bulgarians. Naturally, beyond the cultural and traditional context there is also a purely health-related one. In 2019 Bulgaria ranked ninth in the world in alcohol consumption, with an impressive 12.46 liters per person per year. For comparison, Russia stands at a modest 10.5 liters, while Serbs drink only 8.85 liters a year. Clearly, drinking is widely accepted in Bulgarian society and is seen as normal, even obligatory.

At the same time, it is strange that public discussion of drug use (drugs are also psychoactive substances with similar health risks and effects) remains an absolute taboo.

And drugs are by no means a new phenomenon

Admittedly, psychoactive substance use under socialism was far less widespread than in later years, but it did exist. Substances such as omnopon, lidol, morphine, and various opiate-containing preparations were available only to a small circle of people. They obtained them through doctors and medical staff they knew, with forged prescriptions, or by theft from pharmacies and hospitals.

In the 1960s the problem became so visible that the People’s Republic established an Office for the Treatment of Alcoholism and Tobacco Smoking, which in the 1970s was transformed into the Center for Prevention and Treatment of Addictions. In the 1980s the center became a leading treatment facility for people with addictions and admitted patients from other Balkan countries, such as Greece, where such care did not yet exist. In 2004 the center was registered as the State Psychiatric Hospital for the Treatment of Drug Addiction and Alcoholism, funded from the state budget, with a capacity of 40 beds (in the 1970s there were 160).

It is also worth noting that in the 1980s Bulgaria was known for exporting captagon (a type of amphetamine) to the Middle East, a significant source of the hard currency that the People’s Republic of Bulgaria badly needed. This export laid the foundations of one of the largest drug-trafficking channels between Europe and the Middle East, which remains key to this day.

The 1990s were when Bulgaria came face to face with the enormous problem of illegal drugs. While the heroin epidemic hit Western Europe in the 1980s and countries there began taking urgent measures, in Bulgaria everything at first seemed calm. The opening of the country, however, brought in heroin and cocaine from the Middle East (mainly through the same captagon export channel), while domestic marijuana became hugely popular and spread like wildfire.

The unprecedented heroin epidemic took the lives of thousands of young people. Heroin quickly became a way of life, a kind of rebellion against the system. It was used mainly by educated people who wanted freedom and difference, and who were angry at the previous regime and at the poverty and aimlessness of that period.

Initiatives in the field of addiction

In response to this extremely serious problem, the National Center for Addictions was established in 1994, responsible for developing and coordinating prevention, treatment, rehabilitation, harm reduction, and related activities. In 1997 the first non-governmental organization working on reducing the harms of drug use was founded: the Initiative for Health Foundation. The following year two similar organizations were created, Dose of Love in Burgas and Panacea in Plovdiv. Their funding came from international sources.

These NGOs and their work deserve special attention, because they underpin the state’s subsequent response to the issue. Their activities focused mainly on preventing blood-borne infections such as AIDS, hepatitis C, hepatitis B, and syphilis, but their most important function was in fact maintaining good contact with young people who use drugs and providing an environment where they feel safe and can share their difficulties, which also helps them gather the courage to start changing. Crucially, the organizations work in the field, in the places where people gather and feel safe.

A little later, the country’s first free substitution program was created, supporting the most severely opiate-dependent patients. The first private therapeutic communities were also established, following high international standards. Unfortunately, this was also when so-called communes appeared and gained popularity; they treated addicted young people as free labor and an opportunity for bigger profits.

Steps in the right direction

In 2003 the Global Fund to Fight AIDS, Tuberculosis and Malaria arrived in Bulgaria, and its funding enabled the Ministry of Health to offer truly comprehensive HIV prevention for people who use drugs. Besides the three organizations already operating, many new ones were formed, covering almost the entire country. Teams were built, staff were trained, and equipment was purchased. Thanks to these funds, Bulgaria became a model country in the fight against AIDS and other blood-borne infections.

It is striking, however, that addiction prevention and treatment somehow lagged behind HIV prevention. State institutions did not fund proven, evidence-based prevention methods, even though teachers from across the country had been trained in them, and naturally schools and teachers lost interest. Free treatment remained confined to psychiatric care, where only a handful of enthusiastic specialists have experience and knowledge of addiction, working mainly at the State Psychiatric Hospital for the Treatment of Drug Addiction and Alcoholism in Sofia’s Suhodol district and in the Sixth Men’s Ward of the State Psychiatric Hospital in Radnevo. Private therapeutic communities became the majority and their prices soared, even though far from all of them achieve good results. Teachers were left to decide for themselves how to deliver drug-use prevention during homeroom classes.

Meanwhile, Ministry of Health officials reported excellent results in the fight against HIV and took part in all kinds of international forums, presenting their good practices. Many experts built successful international careers and stayed abroad.

After the Global Fund’s departure

In 2017 the Global Fund’s financing came to an end. Although every government since 2003 had signed an agreement committing Bulgaria to continue the activities after the Global Fund withdrew, there is still no adequate funding. The closure of organizations working on the prevention of HIV and blood-borne infections had already begun in 2014, with the Global Fund’s first funding cuts. That was also when a serious campaign began to “restructure” (in other words, close) the National Center for Addictions, a campaign that achieved its goal in 2019.

The last attempt by expert NGOs to continue HIV prevention work ran from July 2019 to July 2020, a period in which twice as many new seropositive individuals were identified as in previous periods. After that, fieldwork with people who actively use drugs stopped. No one does prevention of blood-borne infections, no one tests in the field, and no one works on motivating people with addiction to seek treatment, as was done over the years.

In this difficult situation, in 2019 the Center for Humane Policies took over the only day center for people who use drugs, the Pink House. It is a place where people suffering from addiction can get food, clothes, hygiene supplies, and medicines, and can seek help obtaining documents, registering with a general practitioner, applying for a disability assessment (TELK), or getting treatment for their addiction or another illness. It is a place where they can find support in their fight for a more dignified life. The Pink House is funded mainly by private individuals and organizations.

To close this overview, I want to point out something very important that we as a society often seem to forget:

addiction is first and foremost a disease.

The more precise scientific definition is that addiction is “a chronic relapsing disease that causes lasting changes in brain biochemistry.” Diabetes mellitus, incidentally, belongs to the same group.

Successful treatment of addiction is not just a matter of the patient’s personal decision and willpower. A person with an addiction needs support and therapy that are free and accessible. And we need more open conversations about this social problem and about the stigma with which we condemn part of our society to marginalization.

Header photo: A note left by the users of the Pink House themselves © Mila Bobadova

Gretchen Parlato and the power of delicate musical expression

Post Syndicated from original https://toest.bg/gretchen-parlato-plovdiv-jazz-fest/

Gretchen Parlato is one of the finest contemporary jazz singers. She has four solo albums and more than 85 collaborative albums. She has sung with Herbie Hancock, Wayne Shorter, Marcus Miller, Airto Moreira, and many other big names in music. Her third album, The Lost and Found (2011), received more than 30 national and international awards, and her latest, Flor, earned the singer her second Grammy nomination, for Best Jazz Vocal Album (the first was for the live album Live in NYC from 2015).

Flor was released in March 2021, after a long maternity break, which is also one of the album’s main themes. For the recording, the American singer worked with established names such as Marcel Camargo, Artyom Manukyan, Léo Costa, Airto Moreira, Gerald Clayton, and Mark Guiliana. Gretchen Parlato set out on her world tour precisely to present this latest album. As part of the tour she will come to the stage of the upcoming Plovdiv Jazz Fest with her band, including the impressive jazz pianist Taylor Eigsti, this year’s Grammy winner for Best Contemporary Instrumental Album.

The program of the eighth Plovdiv Jazz Fest includes six concerts over four evenings, from November 4 to 7. This year we will be able to enjoy the music of the legendary Charles Mingus, presented by four pianists: Antoni Donchev (Bulgaria), Danny Grissett (USA), Piotr Wyleżoł (Poland), and Roberto Tarenzi (Italy); hear the young British-Bahraini jazz trumpeter Yazz Ahmed; celebrate together the 70th birthday of the great Yildiz Ibrahimova, who will take the stage with Zhivko Petrov, Veselin Veselinov (Eko), and Hristo Yotsov; immerse ourselves in the work of the American jazz pianist and composer Emmet Cohen; and hear perhaps the best-known flamenco-jazz pianist, David Peña Dorantes. The concert by Gretchen Parlato and her quartet closes the festival and will take place on November 7 at 8 p.m. at the Boris Hristov House of Culture in Plovdiv.

We asked the renowned Bulgarian jazz singer Miroslava Katsarova, who is also the organizer of this prestigious festival, to conduct this short interview with Gretchen Parlato especially for the readers of Toest.

You are one of my favorite singers, and honestly, one of the main reasons I value you so highly as a vocalist and artist is your ability to shape your vocal phrasing intelligently, and your choice not to put on vocal displays but to create smart, quiet climaxes in your songs. Could you tell us about the path you took to arrive at this delicate musical expression?

Thank you for these compliments; they mean a lot to me. My goal has always been to sing the way I speak, to remain authentic, real, honest, and pure with my voice and artistry. Once you find your own voice, there is no need to sound like anyone else. It should sound natural and effortless. In my art, working deeply, technically, emotionally, and spiritually means rising high in my artistic expression and in my sense of purpose as a creator.

You surely have your favorite vocalists, but which of them have inspired you most?

I have countless favorite singers, especially if we go beyond the boundaries of jazz. But I’ll mention just a few: Bobby McFerrin, João Gilberto, Ella Fitzgerald, Sarah Vaughan… And those are truly just a small part of them. Bobby and João in particular taught me that nuance, detail, and intimacy can be extremely powerful, and helped me develop a deep sense of rhythmic phrasing.

Your latest album, Flor, came after a long break for motherhood. What did that period teach you, and did it influence your music and your choices as an artist?

Motherhood definitely transformed my sense of perspective, purpose, and meaning in art and in life. Flor is a tribute to that time, to my son, to my blossoming again as an artist, and to finding the balance between motherhood and creativity. Being a mother lets me ask how this affects my son and my family, and even more, how it affects the other people who will hear it now and in the future. Our art outlives us, and that helps us think about the quality of our work, its impact, and its capacity to heal.

What are you working on now? Are you making new recordings, and what are your new musical directions? And in that context, what do you think the future of jazz holds?

Next spring we are set to record our duo album with guitarist Lionel Loueke. We plan to tour with this project, so keep an eye out for our upcoming concert dates. As for the future of jazz, it is open and infinite, constantly expanding and growing… but at the same time deeply rooted in tradition and heritage. Anything is possible in the future of jazz; that’s why I’m here.

Header photo: © Lauren Desberg

Canon R6ii – Reality Check… 🤔

Post Syndicated from Matt Granger original https://www.youtube.com/watch?v=DdQB0v4tMhU

digiblurDIY LIVE AMA with the Inovelli Founder

Post Syndicated from digiblurDIY original https://www.youtube.com/watch?v=1F8zaivp6tQ

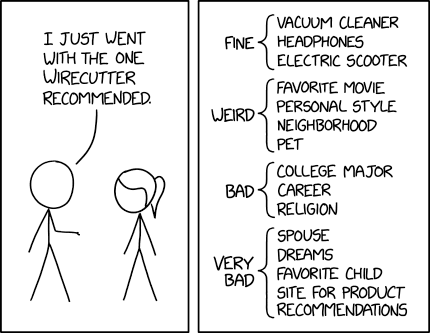

Wirecutter Recommendation

Post Syndicated from original https://xkcd.com/2693/

Your guide to Amazon Communication Developer Services (CDS) at re:Invent 2022

Post Syndicated from Lauren Cudney original https://aws.amazon.com/blogs/messaging-and-targeting/your-guide-to-amazon-communication-developer-services-cds-at-reinvent-2022/


AWS Communication Developer Services has an exciting lineup at re:Invent this year, with 12 sessions spanning communication solutions through Amazon Pinpoint, Amazon Simple Email Service, and Amazon Chime SDK. With breakouts, workshops, and an in-person customer reception, there will be something for everyone. Here is a detailed guide to help you build your agenda and get the most out of your re:Invent 2022 experience.

In addition to AWS executive keynotes, like Adam Selipsky’s on Tuesday morning, there are several sessions you don’t want to miss:

Topic – CPaaS and AWS Communication Services

Improving multichannel communications (BIZ201) – Breakout session

Wednesday, November 30, 11:30 AM – 12:30 PM PST

Your communication strategy directly impacts customer satisfaction, revenue, growth, and retention. This session covers how the customer communication landscape is changing and how you can shift your product strategies to impact business outcomes. Learn how AWS Communication Developer Services (CDS) improve customer communication through CPaaS channels like email, SMS, push notifications, telephony, audio, and video. Explore how building a multichannel architecture can shift user habits and increase satisfaction. This session is tailored for chief marketing officers, chief product officers, chief innovation officers, marketing technologists, and communication builders.

Speaker: Siddhartha Rao, GM, Amazon Chime SDK, Amazon Web Services

Topic – Email (Amazon Simple Email Service)

Improve email inbox deliverability with innovation from Amazon SES (BIZ306) – Chalk Talk

Wednesday, November 30, 4:00 – 5:00 PM PST

This chalk talk demonstrates how to improve email deliverability, leading to increased email ROI. Learn how to build new features in Amazon SES that increase deliverability through system and configuration suggestions, and then automatically implement those changes for more seamless sending. This chalk talk is designed for email data engineers, marketing operations professionals, and marketing engineers.

Topic – Messaging and Orchestration through SMS, Push, In App (Amazon Pinpoint)

Successfully engage customers using Amazon Pinpoint’s SMS channel (BIZ301) – Chalk Talk

Monday, November 28, 1:00 – 2:00 PM PST

Developing or upgrading an SMS program can be challenging for any enterprise undergoing digital transformation. In this chalk talk, Amazon Pinpoint experts demonstrate how to build a two-way SMS solution and how to monitor global message deliverability. This talk is designed for communication builders, marketing technologists, and IT decision-makers.

Integrate AI and ML into customer engagement (BIZ309) – Chalk Talk

Thursday, December 1, 3:30 – 4:30 PM PST

In this chalk talk, learn how to use Amazon Lex AI and Amazon Personalize ML to create two-way engagements with customers in Amazon Pinpoint. Amazon Pinpoint is an omni-channel messaging platform on AWS, supporting SMS, push notifications, email, voice, and custom channels. Follow along as Amazon Pinpoint specialists walk through sample architecture and code that uses Amazon Lex AI chatbots to check order status, share updates via the customer’s preferred channel, and personalize responses to the customer, taking customer engagement to the next level.

Topic – Voice and Video (Amazon Chime SDK)

Use AI to live transcribe and translate multiparty video calls – Workshop

Thursday, December 1, 2:00 – 4:00 PM PST

Harness the power of real-time transcription in web-based voice or video calls through the Amazon Chime SDK. Join this interactive workshop to learn how to build transcription and translation into multiparty video calls requiring additional compliance and language accessibility, such as telehealth appointments, government services, parent-teacher interactions, or financial services. Experts guide attendees in a hands-on demo that combines the power of Amazon Chime SDK, Amazon Transcribe, and Amazon Translate into one simple interface. This workshop is designed for communication developers. You must bring your laptop to participate.

We look forward to seeing you at re:Invent!

A few stable kernel updates

Post Syndicated from original https://lwn.net/Articles/913392/

The 5.4.222, 4.19.263, and 4.14.297 stable kernel updates have been released. The first two contain a single patch for a Clang compilation error; 4.14.297, instead, has a number of fixes and speculative-execution mitigations.

[$] Moving past TCP in the data center, part 1

Post Syndicated from original https://lwn.net/Articles/913260/

At the recently concluded Netdev 0x16 conference, which was held both in Lisbon, Portugal, and virtually, Stanford professor John Ousterhout gave his personal views on where networking in data centers needs to be headed. To solve the problems that he sees, he suggested some “fairly significant changes” to those environments, including leaving behind the venerable, ubiquitous TCP transport protocol. While LWN was unable to attend the conference itself, due to scheduling and time-zone conflicts, we were able to view the video of Ousterhout’s keynote talk to bring you this report.

Your guide to AWS Analytics at re:Invent 2022

Post Syndicated from Imtiaz Sayed original https://aws.amazon.com/blogs/big-data/your-guide-to-aws-analytics-at-reinvent-2022/

Join the global cloud community at AWS re:Invent this year to meet, get inspired, and rethink what’s possible!

Reserved seating is available for registered attendees to secure seats in the sessions of their choice. You can reserve a seat in your favorite sessions by signing in to the attendee portal and navigating to Event > Sessions. If you can’t make it in person, you can get a free online pass to watch live keynotes and leadership sessions by registering for virtual-only access. This curated attendee guide helps data and analytics enthusiasts manage their schedule*, as well as navigate the AWS analytics and business intelligence tracks to get the best out of re:Invent.

For additional session details, visit the AWS Analytics splash page.

#AWSanalytics, #awsfordata, #reinvent22

Keynotes

KEY002 | Adam Selipsky (CEO, Amazon Web Services) | Tuesday, November 29 | 8:30 AM – 10:30 AM

Join Adam Selipsky, CEO of Amazon Web Services, as he looks at the ways that forward-thinking builders are transforming industries and even our future, powered by AWS.

KEY003 | Swami Sivasubramanian (Vice President, AWS Data and Machine Learning) | Wednesday, November 30 | 8:30 AM – 10:30 AM

Join Swami Sivasubramanian, Vice President of AWS Data and Machine Learning, as he reveals the latest AWS innovations that can help you transform your company’s data into meaningful insights and actions for your business.

Leadership sessions

ANT203-L | Unlock the value of your data with AWS analytics | G2 Krishnamoorthy, VP of AWS Analytics | Wednesday, November 30 | 2:30 PM – 3:30 PM

G2 addresses the current state of analytics on AWS, covers the latest service innovations around data, and highlights customer successes with AWS analytics. Also, learn from FINRA and other organizations that have turned to AWS for their digital transformation journey.

Breakout sessions

AWS re:Invent breakout sessions are one-hour, lecture-style sessions delivered by AWS experts, customers, and partners.

Monday, Nov 28

- 10:00 AM – 11:00 AM ANT326 | How BMW, Intuit, and Morningstar are transforming with AWS and Amazon Athena
- 11:30 AM – 12:30 PM ANT206 | What’s new in Amazon OpenSearch Service
- 12:15 PM – 1:15 PM ANT337 | Migrating to Amazon EMR to reduce costs and simplify operations
- 1:00 PM – 2:00 PM ANT341 | How Riot Games processes 20 TB of analytics data daily on AWS
- 1:45 PM – 2:45 PM BSI207 | Get clarity on your data in seconds with Amazon QuickSight Q
- 3:15 PM – 4:15 PM ANT302 | What’s new with Amazon EMR
- 4:45 PM – 5:45 PM ANT335 | How Disney Parks uses AWS Glue to replace thousands of Hadoop jobs
- 5:30 PM – 6:30 PM ANT220 | Using Amazon AppFlow to break down data silos for analytics and ML

Tuesday, Nov 29

- 11:00 AM – 12:00 PM ANT301 | Democratizing your organization’s data analytics experience
- 12:15 PM – 1:15 PM ANT334 | Simplify and accelerate data integration and ETL modernization with AWS Glue
- 1:15 PM – 2:15 PM ANT205 | Achieving your modern data architecture
- 2:00 PM – 3:00 PM BSI201 | Reinvent how you derive value from your data with Amazon QuickSight
- 2:45 PM – 3:45 PM ANT339 | How Samsung modernized architecture for real-time analytics
- 3:30 PM – 4:30 PM ANT204 | Enabling agility with data governance on AWS
- 5:00 PM – 6:00 PM ANT338 | Scaling data processing with Amazon EMR at the speed of market volatility
- 5:45 PM – 6:45 PM ANT325 | Simplify running Apache Spark and Hive apps with Amazon EMR Serverless

Wednesday, Nov 30

- 10:00 AM – 11:00 AM ANT212 | How JPMC and LexisNexis modernize analytics with Amazon Redshift
- 10:00 AM – 11:00 AM ANT209 | Build interactive analytics applications
- 10:45 AM – 11:45 AM ANT218 | Leveling up computer vision and artificial intelligence development
- 11:30 AM – 12:30 PM ANT340 | How Sony Orchard accelerated innovation with Amazon MSK
- 1:00 PM – 2:00 PM ANT201 | What’s new with Amazon Redshift
- 2:30 PM – 3:30 PM BSI202 | Migrate to cloud-native business analytics with Amazon QuickSight
- 4:45 PM – 5:45 PM ANT324 | Modernize your data warehouse
- 5:30 PM – 6:30 PM ANT317 | Self-service analytics with Amazon Redshift Serverless

Thursday, Dec 1

- 12:30 PM – 1:30 PM ANT207 | What’s new in AWS streaming
- 12:30 PM – 1:30 PM BSI203 | Differentiate your apps with Amazon QuickSight embedded analytics
- 1:15 PM – 2:15 PM ANT336 | Building data mesh architectures on AWS
- 2:00 PM – 3:00 PM ANT342 | How Poshmark accelerates growth via real-time analytics and personalization
- 3:30 PM – 4:30 PM ANT219 | Dow Jones and 3M: Observability with Amazon OpenSearch Service

Friday, Dec 2

- 8:30 AM – 9:30 AM ANT311 | Building security operations with Amazon OpenSearch Service

Chalk talks

Chalk talks are one-hour, highly interactive sessions with a small audience. Each begins with a short lecture delivered by an AWS expert, followed by a Q&A session with the audience.

Monday, Nov 28

- 12:15 PM – 1:15 PM ANT303 | Security and data access controls in Amazon EMR
- 12:15 PM – 1:15 PM BSI401 | Insightful dashboards through advanced calculations with QuickSight
- 1:00 PM – 2:00 PM ANT323 [Repeat] | Break through data silos with Amazon Redshift
- 1:45 PM – 2:45 PM ANT319 | Democratizing ML for data analysts
- 1:45 PM – 2:45 PM ANT322 [Repeat] | Simplifying ETL migration and data integration with AWS Glue
- 5:30 PM – 6:30 PM ANT315 | Optimizing Amazon OpenSearch Service domains for scale and cost

Tuesday, Nov 29

- 11:00 AM – 12:00 PM ANT318 [Repeat] | Build event-based microservices with AWS streaming services
- 11:45 AM – 12:45 PM BSI302 | Deploy your BI assets at scale to thousands with Amazon QuickSight
- 1:15 PM – 2:15 PM ANT327 | Modernize your analytics architecture with Amazon Athena
- 2:45 PM – 3:45 PM ANT320 [Repeat] | Get better price performance in cloud data warehousing with Amazon Redshift
- 2:45 PM – 3:45 PM BSI301 | Architecting multi-tenancy for your apps with Amazon QuickSight
- 4:15 PM – 5:15 PM ANT304 | Run serverless Spark workloads with AWS analytics
- 5:00 PM – 6:00 PM ANT328 | Build transactional data lakes using open-table formats in Amazon Athena

Wednesday, Nov 30

- 9:15 AM – 10:15 AM ANT320 [Repeat] | Get better price performance in cloud data warehousing with Amazon Redshift
- 10:45 AM – 11:45 AM ANT330 [Repeat] | Run Apache Spark on Kubernetes with Amazon EMR on Amazon EKS
- 12:15 PM – 1:15 PM ANT323 [Repeat] | Break through data silos with Amazon Redshift
- 4:00 PM – 5:00 PM ANT314 [Repeat] | Why and how to migrate to Amazon OpenSearch Service
- 4:45 PM – 5:45 PM ANT333 [Repeat] | Build a serverless data streaming workload with Amazon Kinesis
- 4:45 PM – 5:45 PM ANT331 | Understanding TCO for different Amazon EMR deployment models
- 4:45 PM – 5:45 PM ANT321 | What’s new in AWS Lake Formation
- 7:00 PM – 8:00 PM ANT318 [Repeat] | Build event-based microservices with AWS streaming services

Thursday, Dec 1

- 11:45 AM – 12:45 PM ANT329 | Turn data to insights in seconds with secure and reliable Amazon Redshift
- 1:15 PM – 2:15 PM ANT401 | Ingest machine-generated data at scale with Amazon OpenSearch Service
- 2:00 PM – 3:00 PM ANT333 [Repeat] | Build a serverless data streaming workload with Amazon Kinesis
- 2:00 PM – 3:00 PM ANT330 [Repeat] | Run Apache Spark on Kubernetes with Amazon EMR on Amazon EKS

Friday, Dec 2

- 9:15 AM – 10:15 AM ANT314 [Repeat] | Why and how to migrate to Amazon OpenSearch Service
- 10:00 AM – 11:00 AM ANT322 [Repeat] | Simplifying ETL migration and data integration with AWS Glue

Builders’ sessions

These are one-hour small-group sessions with up to nine attendees per table and one AWS expert. Each builders’ session begins with a short explanation or demonstration of what you’re going to build. Once the demonstration is complete, bring your laptop to experiment and build with the AWS expert.

Tuesday, Nov 29

- 11:00 AM – 12:00 PM ANT402 | Human vs. machine: Amazon Redshift ML inferences

Wednesday, Nov 30

- 1:00 PM – 2:00 PM ANT332 | Build a data pipeline using Apache Airflow and Amazon EMR Serverless
- 7:00 PM – 8:00 PM ANT316 [Repeat] | How to build dashboards for machine-generated data

Thursday, Dec 1

- 11:00 AM – 12:00 PM ANT316 [Repeat] | How to build dashboards for machine-generated data

Workshops

Workshops are two-hour interactive sessions where you work in teams or individually to solve problems using AWS services. Each workshop starts with a short lecture, and the rest of the time is spent working the problem. Bring your laptop to build along with AWS experts.

Monday, Nov 28

- 10:00 AM – 12:00 PM ANT306 [Repeat] | Beyond monitoring: Observability with operational analytics
- 4:00 PM – 6:00 PM ANT309 [Repeat] | Build analytics applications using Apache Spark with Amazon EMR Serverless

Tuesday, Nov 29

- 11:45 AM – 1:45 PM ANT313 | Using Apache Spark for data science and ML workflows with Amazon EMR
- 2:45 PM – 4:45 PM ANT310 [Repeat] | Build a data mesh with AWS Lake Formation and AWS Glue

Wednesday, Nov 30

- 8:30 AM – 10:30 AM ANT307 | Improve search relevance with ML in Amazon OpenSearch Service
- 12:15 PM – 2:15 PM ANT306 [Repeat] | Beyond monitoring: Observability with operational analytics
- 12:15 PM – 2:15 PM ANT312 | Near real-time ML inferences with Amazon Redshift
- 5:30 PM – 7:30 PM ANT310 [Repeat] | Build a data mesh with AWS Lake Formation and AWS Glue
- 5:30 PM – 7:30 PM BSI303 | Seamlessly embed analytics into your apps with Amazon QuickSight

Thursday, Dec 1

- 11:00 AM – 1:00 PM ANT403 | Event detection with Amazon MSK and Amazon Kinesis Data Analytics
- 11:45 AM – 1:45 PM BSI205 | Build stunning customized dashboards with Amazon QuickSight
- 2:45 PM – 4:45 PM ANT308 | Seamless data sharing using Amazon

Friday, Dec 2

- 8:30 AM – 10:30 AM ANT309 [Repeat] | Build analytics applications using Apache Spark with Amazon EMR Serverless

* All times are Pacific Time.

AWS Analytics & Business Intelligence kiosks

Join us at the AWS Analytics Kiosk in the AWS Village at the Expo. Dive deep into AWS Analytics with AWS subject matter experts, see the latest demos, ask questions, or just drop by to socially connect with your peers.

About the author


Imtiaz (Taz) Sayed is the WW Tech Leader for Analytics at AWS. He enjoys engaging with the community on all things data and analytics. He can be reached via LinkedIn.

Hands-On IoT Hacking: Rapid7 at DEF CON 30 IoT Village, Pt. 3

Post Syndicated from Deral Heiland original https://blog.rapid7.com/2022/11/01/hands-on-iot-hacking-rapid7-at-def-con-30-iot-village-pt-3/

Welcome back to our blog series on Rapid7’s IoT Village exercise from DEF CON 30. In our previous posts, we covered how to achieve access to flash memory and how to extract file system data from the device. In this post, we’ll cover how to modify the data we’ve extracted.

Modify extracted file system data

Now that you have extracted the Squash file system with unsquashfs, the next step is to look at the extracted file system and its general structure. In our example, the extracted file system is located at /root/Desktop/Work/squashfs-root. To see the structure, while in the folder ~/Desktop/Work, run the following commands to change directory and then list the files and folders:

- cd squashfs-root

- ls -al

As you can see, we have unpacked a copy of the squash file system containing the embedded Linux root file system that was installed on the cable modem for the ARM processor.

The next goal will be to make the following three changes so we can eventually gain access to the cable modem via SSH:

- Add a dropbear_rsa_key file to the extracted squashfs.

- Remove the symbolic link to passwd and recreate it as a regular file.

- Modify the inittab file to launch dropbear on startup.

To make these changes, you will first need to change to the etc directory inside the squashfs-root folder. In our IoT Village exercise example, that folder was “~/Desktop/Work/squashfs-root/etc”, and attendees used the following command:

- cd ~/Desktop/Work/squashfs-root/etc

It is critical that you are in the correct directory, and not in the etc directory of your local host, before running any of the following commands; otherwise you risk damaging your laptop’s or desktop’s configuration. You can validate this by running the command “pwd” and examining the returned path:

- pwd
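Running these commands in the host's own /etc could damage it, so you may want to make the pwd check explicit in a script. The following Python sketch is an illustration and is not part of the original exercise. The function names are hypothetical, and the sketch assumes the extracted tree is named squashfs-root, as in the example above.

```python
import os
from pathlib import Path

def in_extracted_etc(path: str, root_name: str = "squashfs-root") -> bool:
    """Return True only if `path` is the etc folder directly inside the extracted tree.

    The host's own /etc fails this check, because its parent is "/" rather
    than a folder named `root_name`.
    """
    resolved = Path(os.path.realpath(path))  # resolve symlinks and ".." first
    return resolved.name == "etc" and resolved.parent.name == root_name

def require_extracted_etc() -> None:
    """Stop instead of letting later commands touch the wrong etc folder."""
    cwd = os.getcwd()
    if not in_extracted_etc(cwd):
        raise SystemExit(f"Refusing to continue: {cwd} is not squashfs-root/etc")
```

Call require_extracted_etc() at the top of any script that edits the passwd, inittab, or key files.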

The next thing we need to do is locate a copy of the dropbear_rsa_key file and copy it to “~/Desktop/Work/squashfs-root/etc”. This RSA key is needed to support dropbear services, which allow SSH communication to the cable modem. It turns out that a usable copy of the dropbear_rsa_key file is located within the etc folder on Partition 12, which in our example was mounted at /media/root/disk/etc. You can use the Disks application to confirm the mount point for Partition 12, similar to the method we used for Partition 5 shown in Figure 4.

By running the following command during the IoT Village exercise, attendees were successfully able to copy the dropbear_rsa_key from Partition 12 into “~/Desktop/Work/squashfs-root/etc/”:

- cp /media/root/disk/etc/dropbear_rsa_key .

Next, we had participants remove the symbolically linked passwd file and create a new passwd file that points to the correct shell. Originally, the symbolic link pointed to a passwd file that assigned the root user a shell that prevented root from accessing the system. By replacing the passwd file, we can assign a usable shell environment to the root user.

As before, the first step is to make sure you are in the correct folder:

“~/Desktop/Work/squashfs-root/etc”

Once you have validated that you are in the correct folder, you can run the following command to delete the passwd file from the squash file system:

- rm passwd

Once it is deleted, create a new passwd file using vi and add the data shown below to it.

Note: Here is a list of common vi commands that are useful when working within vi:

- i enters insert mode, which allows you to enter data in the file.

- The Esc key exits insert mode.

- Esc followed by :wq and then the Enter key writes the file and exits vi.

- Esc followed by dd deletes the line you are on.

- Esc followed by x deletes the single character under the cursor.

- Esc followed by Shift+A places you in insert mode at the end of the line.

- Esc followed by a places you in insert mode just after the cursor.

- vi passwd

Once in vi, add the following line:

root:x:0:0:root:/:/bin/sh
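Each of the seven colon-separated fields in that line has a fixed meaning. The seventh field, the login shell, is the one this change is really about. The following illustrative Python sketch uses a hypothetical parse_passwd_line helper to split the entry into its standard fields:

```python
from typing import NamedTuple

class PasswdEntry(NamedTuple):
    name: str      # login name
    password: str  # "x" conventionally means the hash is not stored in this file
    uid: int       # 0 = root
    gid: int       # primary group ID
    gecos: str     # free-form comment / full name
    home: str      # home directory ("/" here)
    shell: str     # program started at login; /bin/sh gives a usable shell

def parse_passwd_line(line: str) -> PasswdEntry:
    fields = line.strip().split(":")
    if len(fields) != 7:
        raise ValueError(f"expected 7 fields, got {len(fields)}")
    name, password, uid, gid, gecos, home, shell = fields
    return PasswdEntry(name, password, int(uid), int(gid), gecos, home, shell)

entry = parse_passwd_line("root:x:0:0:root:/:/bin/sh")
print(entry.uid, entry.shell)  # 0 /bin/sh
```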

Next, we need to alter the inittab file. Again, make sure you are currently in the folder “/root/Desktop/Work/squashfs-root/etc”. Once this is validated, you can use vi to open and edit the inittab file.

- vi inittab

The file should originally look like this:

You will need to add the following line to the file:

::respawn:/usr/sbin/dropbear -p 1337 -r /etc/dropbear_rsa_key
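That line follows the inittab format <id>:<runlevels>:<action>:<process>. The exercise does not state which init the modem runs. Assuming it is BusyBox init, which the empty id and runlevel fields suggest, the line can be unpacked as in this Python sketch. parse_inittab_line is a hypothetical helper.

```python
import shlex
from typing import NamedTuple

class InittabEntry(NamedTuple):
    id: str         # BusyBox: controlling tty; empty means init's own stdin/stdout
    runlevels: str  # ignored by BusyBox init
    action: str     # "respawn" restarts the process whenever it exits
    process: str    # command line to run

def parse_inittab_line(line: str) -> InittabEntry:
    # Split on the first three colons only, so a command containing colons stays intact.
    parts = line.strip().split(":", 3)
    if len(parts) != 4:
        raise ValueError("expected <id>:<runlevels>:<action>:<process>")
    return InittabEntry(*parts)

entry = parse_inittab_line("::respawn:/usr/sbin/dropbear -p 1337 -r /etc/dropbear_rsa_key")
argv = shlex.split(entry.process)
# In dropbear's argv, -p sets the listening port (1337) and -r names the host key file.
print(entry.action, argv)
```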

Add the line as shown below, and save the file:

Once you’ve completed all these changes, double-check them to make sure they are correct before moving on to the next sections. This will help avoid the need to redo everything if you made an incorrect alteration.
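One way to do this double-check is to run a small script against the extracted tree. The sketch below is an illustration only. check_modifications is a hypothetical helper, and the expected lines are the exact ones used in this exercise.

```python
from pathlib import Path
from typing import List

ROOT_ENTRY = "root:x:0:0:root:/:/bin/sh"
DROPBEAR_LINE = "::respawn:/usr/sbin/dropbear -p 1337 -r /etc/dropbear_rsa_key"

def _lines(path: Path) -> List[str]:
    return [line.strip() for line in path.read_text().splitlines()]

def check_modifications(etc_dir: str) -> List[str]:
    """Return problems found in the modified etc folder; an empty list means all three changes look right."""
    etc = Path(etc_dir)
    problems: List[str] = []
    # 1. The host key that dropbear needs must be present.
    if not (etc / "dropbear_rsa_key").is_file():
        problems.append("dropbear_rsa_key is missing")
    # 2. passwd must now be a regular file (not the old symlink) with the new root entry.
    passwd = etc / "passwd"
    if passwd.is_symlink() or not passwd.is_file():
        problems.append("passwd is still a symbolic link or is missing")
    elif ROOT_ENTRY not in _lines(passwd):
        problems.append("passwd lacks the expected root entry")
    # 3. inittab must start dropbear at boot.
    inittab = etc / "inittab"
    if not inittab.is_file() or DROPBEAR_LINE not in _lines(inittab):
        problems.append("inittab lacks the dropbear respawn line")
    return problems
```

For example, print(check_modifications("/root/Desktop/Work/squashfs-root/etc") or "all changes present").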

If everything looks correct, it’s time to repack the squash file system and write the data back to Partition 5 on the cable modem.

Repacking the squash file system and writing it back to the modem

In this step, you will repack the squash file system and use the Linux dd command to write the image back to the cable modem’s NAND flash memory.

The first thing you will need to do is change back to the working folder, in our example “~/Desktop/Work”. From the current location of “~/Desktop/Work/squashfs-root/etc”, you can do this by running the following command:

- cd ../../

Next, use the “mksquashfs” command to repack the squashfs-root folder into a binary file called new-P5.bin. To do this, run the following command from the “~/Desktop/Work” folder, which you should currently be in:

- mksquashfs squashfs-root/ new-P5.bin

Once the above command has completed, you should have a file called new-P5.bin. This binary file contains the squashfs-root folder, properly packed and ready to be copied back onto the cable modem’s Partition 5.

Note: If you think you have made a mistake and need to rerun the “mksquashfs” command, delete the new-P5.bin file first. “mksquashfs” does not overwrite an existing file; it appends the data to it. The resulting new-P5.bin image would then contain duplicates of all the files, which will cause your attempt to gain root access to fail.
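If you script the repack step, you can build the delete-first rule into it. This Python sketch uses a hypothetical build_repack_command helper. It removes any stale image and also passes -noappend, which is mksquashfs's own option for creating a fresh image instead of appending. Either safeguard alone would prevent the duplicate-file problem described above.

```python
import subprocess
from pathlib import Path
from typing import List

def build_repack_command(src: str = "squashfs-root/", out: str = "new-P5.bin") -> List[str]:
    """Delete any stale output image, then return the mksquashfs command line."""
    image = Path(out)
    if image.exists():
        # Without this, mksquashfs would append to the file and duplicate every entry.
        image.unlink()
    # -noappend makes mksquashfs create a new image even if `out` already exists.
    return ["mksquashfs", src, out, "-noappend"]

def repack(src: str = "squashfs-root/", out: str = "new-P5.bin") -> None:
    # check=True raises CalledProcessError if mksquashfs fails.
    subprocess.run(build_repack_command(src, out), check=True)
```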

Once you have run mksquashfs and have a new-P5.bin file containing the repacked squashfs, use the Linux dd command to write the binary file back to the cable modem’s Partition 5.

To complete this step, first make sure you have identified the correct “Device:” location using the method shown in Figure 7 from part 2 of this blog series.

In this example, the “Device:” was determined to be sdd5, so we can write the binary image by running the following dd command:

- dd if=new-P5.bin of=/dev/sdd5
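Before you unplug anything, you may want to confirm that dd wrote what you intended. This is a minimal Python sketch that assumes you run it as root right after dd, and after running sync so that pending writes are flushed. It hashes the image and the same number of bytes read back from the partition. The helper names are hypothetical, and /dev/sdd5 is only the device from this example.

```python
import hashlib
import os

def sha256_prefix(path: str, length: int, chunk_size: int = 1 << 20) -> str:
    """SHA-256 of the first `length` bytes of `path` (a regular file or a block device)."""
    digest = hashlib.sha256()
    remaining = length
    with open(path, "rb") as f:
        while remaining > 0:
            data = f.read(min(chunk_size, remaining))
            if not data:  # source is shorter than expected, so the hashes will differ
                break
            digest.update(data)
            remaining -= len(data)
    return digest.hexdigest()

def write_matches(image: str, device: str) -> bool:
    """True if the device starts with exactly the bytes of the image."""
    size = os.path.getsize(image)  # size of the image file, not of the partition
    return sha256_prefix(image, size) == sha256_prefix(device, size)
```

For example, write_matches("new-P5.bin", "/dev/sdd5") should return True after a successful write.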

Once the dd command completes, the modified squash file system image should now be written on the modem’s NAND flash memory chip. Before proceeding, disconnect the SD Card reader from the cable modem as shown below.

Note: Attaching the SD Card Reader and 12V power supply to the cable modem at the same time will damage the cable modem and render it nonfunctional.

In our next and final post in this series, we’ll cover how to gain root access over the device’s secure shell protocol (SSH). Check back with us next week!

Export historical Security Hub findings to an S3 bucket to enable complex analytics

Post Syndicated from Jonathan Nguyen original https://aws.amazon.com/blogs/security/export-historical-security-hub-findings-to-an-s3-bucket-to-enable-complex-analytics/

AWS Security Hub is a cloud security posture management service that you can use to perform security best practice checks, aggregate alerts, and automate remediation. Security Hub has out-of-the-box integrations with many AWS services and over 60 partner products. Security Hub centralizes findings across your AWS accounts and supported AWS Regions into a single delegated administrator account in your aggregation Region of choice, creating a single pane of glass to consolidate and view individual security findings.

Because there are a large number of possible integrations across accounts and Regions, your delegated administrator account in the aggregation Region might have hundreds of thousands of Security Hub findings. To perform complex analytics or machine learning across the existing (historical) findings that are maintained in Security Hub, you can export findings to an Amazon Simple Storage Service (Amazon S3) bucket. To export new findings that have recently been created, you can implement the solution in the aws-security-hub-findings-export GitHub repository. However, Security Hub has data export API rate quotas, which can make exporting a large number of findings challenging.

In this blog post, we provide an example solution to export your historical Security Hub findings to an S3 bucket in your account, even if you have a large number of findings. We walk you through the components of the solution and show you how to use the solution after deployment.

Prerequisites

To deploy the solution, complete the following prerequisites:

- Enable Security Hub.

- If you want to export Security Hub findings for multiple accounts, designate a Security Hub administrator account.

- If you want to export Security Hub findings across multiple Regions, enable cross-Region aggregation.

Solution overview and architecture

In this solution, you use the following AWS services and features:

- Security Hub export orchestration
  - AWS Step Functions helps you orchestrate automation and long-running jobs, which are integral to this solution. You need the ability to run a workflow for hours due to the Security Hub API rate limits and the number of findings and objects.
  - AWS Lambda functions handle the logic for exporting and storing findings in an efficient and cost-effective manner. You can customize the Lambda functions to fit most use cases.

- Storage of exported findings
  - Amazon Simple Storage Service (Amazon S3) helps you share the exported findings and use them in a standardized format for multiple use cases across AWS services.

- Job status tracking
  - Amazon EventBridge tracks changes in the status of the Step Functions workflow. The solution can run for over 100 hours; by using EventBridge, you don’t have to manually check the status.
  - Amazon Simple Notification Service (Amazon SNS) sends you notifications when the long-running jobs are complete or when they might have issues.
  - AWS Systems Manager Parameter Store provides a quick way to track overall status by maintaining a numeric count of successfully exported findings that you can compare with the number of findings shown in the Security Hub dashboard.

Figure 1 shows the architecture for the solution, deployed in the Security Hub delegated administrator account in the aggregation Region. The figure shows multiple Security Hub member accounts to illustrate how you can export findings for an entire AWS Organizations organization from a single delegated administrator account.

Figure 1: High-level overview of process and resources deployed in the Security Hub account

As shown in Figure 1, the workflow after deployment is as follows:

- The Step Functions workflow for the Security Hub export is invoked.

- The Step Functions workflow invokes a single Lambda function that does the following:
  - Retrieves Security Hub findings that have an Active status and puts them in a temporary file.
  - Pushes the file as an object to Amazon S3.
  - Adds the global count of exported findings from the Step Functions workflow to a Systems Manager parameter for validation and tracking purposes.
  - Repeats the preceding steps for about 10 minutes to export as many findings as possible while preventing the Lambda function from timing out.
  - If a nextToken is present, pushes the nextToken to the output of the Step Functions workflow.

  Note: If more findings are available than were returned by the API call, the response includes a nextToken, which can be passed to a subsequent call to retrieve the next set of items.

- The Step Functions workflow goes through a Choice state as follows:
  - If a Security Hub nextToken is present, Step Functions invokes the Lambda function again.
  - If a Security Hub nextToken isn’t present, Step Functions ends the workflow successfully.

- An EventBridge rule tracks changes in the status of the Step Functions workflow and sends events to an SNS topic. Subscribers to the SNS topic receive a notification when the status of the Step Functions workflow changes.
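Together, the Lambda loop and the Choice state form a simple pagination pattern. The following Python sketch simulates that pattern locally and is not the solution's code. export_batch and run_workflow are hypothetical names. fetch stands in for a call such as boto3's Security Hub get_findings, which accepts Filters, MaxResults, and NextToken, and store stands in for the S3 upload and Parameter Store update. The 600-second budget mirrors the roughly 10 minutes described above.

```python
import time
from typing import Callable, Dict, List, Optional, Tuple

# Hypothetical page fetcher: given a token (None for the first page), it returns
# (findings, next_token), with next_token None once no findings remain.
Fetcher = Callable[[Optional[str]], Tuple[List[dict], Optional[str]]]
Store = Callable[[List[dict]], None]

def export_batch(fetch: Fetcher, store: Store, token: Optional[str],
                 budget_s: float = 600.0,
                 clock: Callable[[], float] = time.monotonic) -> Dict[str, object]:
    """One Lambda invocation: export pages until findings or the time budget run out."""
    start = clock()
    exported = 0
    while True:
        findings, token = fetch(token)  # always fetch at least one page, so each run makes progress
        store(findings)                 # stands in for the temporary file + S3 upload
        exported += len(findings)       # stands in for the Parameter Store counter
        if token is None or clock() - start >= budget_s:
            break
    return {"exported": exported, "nextToken": token}

def run_workflow(fetch: Fetcher, store: Store, budget_s: float = 600.0) -> int:
    """Mimics the Choice state: re-invoke export_batch while a nextToken is returned."""
    total = 0
    token: Optional[str] = None
    while True:
        out = export_batch(fetch, store, token, budget_s)
        total += int(out["exported"])
        token = out["nextToken"]
        if token is None:
            return total
```

Because the budget check comes after each page, a zero or very small budget still makes progress. In that case the workflow simply takes more invocations.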

Deploy the solution

You can deploy the solution through either the AWS Management Console or the AWS Cloud Development Kit (AWS CDK).

To deploy the solution (console)

- In your delegated administrator Security Hub account, launch the AWS CloudFormation template by choosing the following Launch Stack button. It will take about 10 minutes for the CloudFormation stack to complete.

Note: The stack will launch in the US East (N. Virginia) Region (us-east-1). If you are using cross-Region aggregation, deploy the solution into the Region where Security Hub findings are consolidated. You can download the CloudFormation template for the solution, modify it, and deploy it to your selected Region.

To deploy the solution (AWS CDK)

- Download the code from our aws-security-hub-findings-historical-export GitHub repository, where you can also contribute to the sample code. The CDK initializes your environment and uploads the Lambda assets to Amazon S3. Then, you deploy the solution to your account.

- While you are authenticated in the security tooling account, run the following commands in your terminal. Make sure to replace <AWS_ACCOUNT> with the account number, and replace <REGION> with the AWS Region where you want to deploy the solution.

cdk bootstrap aws://<AWS_ACCOUNT>/<REGION>

cdk deploy SechubHistoricalPullStack

Solution walkthrough and validation

Now that you’ve successfully deployed the solution, you can see each aspect of the automation workflow in action.

Before you start the workflow, you need to subscribe to the SNS topic so that you’re notified of status changes within the Step Functions workflow. For this example, you will use email notification.

To subscribe to the SNS topic

- Open the Amazon SNS console.

- Go to Topics and choose the Security_Hub_Export_Status topic.

- Choose Create subscription.

- For Protocol, choose Email.

- For Endpoint, enter the email address where you want to receive notifications.

- Choose Create subscription.

- After you create the subscription, go to your email and confirm the subscription.

You’re now subscribed to the SNS topic, so any time that the Step Functions status changes, you will receive a notification. Let’s walk through how to run the export solution.

To run the export solution

- Open the AWS Step Functions console.

- In the left navigation pane, choose State machines.

- Choose the new state machine named sec_hub_finding_export.

- Choose Start execution.

- On the Start execution page, for Name – optional and Input – optional, leave the default values and then choose Start execution.

Figure 2: Example input values for execution of the Step Functions workflow

- This will start the Step Functions workflow and redirect you to the Graph view. If successful, you will see that the overall Execution status and each step have a status of Successful.

- For long-running jobs, you can view the CloudWatch log group associated with the Lambda function to view the logs.

- To track the number of Security Hub findings that have been exported, open the Systems Manager console, choose Parameter Store, and then select the /sechubexport/findingcount parameter. Under Value, you will see the total number of Security Hub findings that have been exported, as shown in Figure 3.

Figure 3: Systems Manager Parameter Store value for the number of Security Hub findings exported

Depending on the number of Security Hub findings, this process can take some time. This is primarily due to the GetFindings quota of 3 requests per second. Each GetFindings request can return a maximum of 100 findings, so this means that you can get up to 300 findings per second. On average, the solution can export about 1 million findings per hour. If you have a large number of findings, you can start the finding export process and wait for the SNS topic to notify you when the process is complete.
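You can quickly check the arithmetic behind these figures. The following sketch uses only the quota and page size stated above:

```python
GET_FINDINGS_RPS = 3      # GetFindings request quota quoted above (requests/second)
MAX_PER_REQUEST = 100     # maximum findings returned per GetFindings call
SECONDS_PER_HOUR = 3600

def ceiling_per_hour(rps: int = GET_FINDINGS_RPS, page: int = MAX_PER_REQUEST) -> int:
    """Theoretical upper bound on findings exported per hour."""
    return rps * page * SECONDS_PER_HOUR

def hours_to_export(n_findings: int, per_hour: float) -> float:
    return n_findings / per_hour

print(ceiling_per_hour())                       # 1080000
print(hours_to_export(100_000_000, 1_000_000))  # 100.0
```

The theoretical ceiling of about 1.08 million findings per hour is only slightly above the observed average of about 1 million. At that average rate, 100 million findings take roughly 100 hours, which is consistent with the long run times mentioned earlier.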

How to customize the solution

The solution provides a general framework to help you export your historical Security Hub findings. There are many ways that you can customize this solution based on your needs. The following are some enhancements that you can consider.

Change the Security Hub finding filter

The solution currently pulls all findings with RecordState: ACTIVE, which pulls the active Security Hub findings in the AWS account. You can update the Lambda function code, specifically the finding_filter JSON value within the create_filter function, to pull findings for your use case. For example, to get all active Security Hub findings from the AWS Foundational Security Best Practices standard, add a condition for that standard to finding_filter in the Lambda function code.
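The following is an illustration only, not the post's own code. A filter like finding_filter follows the GetFindings Filters structure, in which each field maps to a list of conditions, each with a Value and a Comparison. The sketch assumes that the findings for this standard carry a GeneratorId beginning with aws-foundational-security-best-practices. Confirm that prefix against a sample finding in your account before relying on it.

```python
import json
from typing import Dict, List

# Assumed prefix; check the GeneratorId of a real finding in your account.
FSBP_PREFIX = "aws-foundational-security-best-practices"

def create_filter(generator_prefix: str = FSBP_PREFIX) -> Dict[str, List[Dict[str, str]]]:
    """GetFindings Filters value: active findings whose GeneratorId starts with the prefix."""
    return {
        "RecordState": [{"Value": "ACTIVE", "Comparison": "EQUALS"}],
        "GeneratorId": [{"Value": generator_prefix, "Comparison": "PREFIX"}],
    }

print(json.dumps(create_filter(), indent=2))
```

The returned dictionary would be passed as the Filters argument of the GetFindings call.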

Export more than 100 million Security Hub findings

The example solution can export about 100 million Security Hub findings. This number is primarily determined by the speed at which findings can be exported, due to the following factors:

If you want to export more than 100 million Security Hub findings, do one of the following:

- Use nested Step Functions workflows. For instructions, see Start Workflow Executions from a Task State.

- Implement a pattern by using a Lambda function to start a new execution of your state machine to split ongoing work across multiple workflow executions. For more information, see the tutorial Continuing Ongoing Work as a New Execution.

Note: If you implement either of these solutions, make sure that the nextToken also gets passed to the new Step Functions execution by updating the Lambda function code to parse and pass the nextToken received in the last request.
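With either approach, the new execution needs the last nextToken in its input, and the Lambda function needs to read it back. The following minimal Python sketch uses hypothetical names. The nextToken key matches the term used above, and exportedSoFar is an assumption. The JSON string would be passed as the input of the Step Functions StartExecution API, for example through boto3's start_execution(stateMachineArn=..., input=...).

```python
import json
from typing import Any, Dict, Optional, Tuple

def continuation_input(next_token: Optional[str], exported_so_far: int) -> Optional[str]:
    """JSON input for a fresh execution, or None when no findings remain."""
    if next_token is None:
        return None
    return json.dumps({"nextToken": next_token, "exportedSoFar": exported_so_far})

def resume_state(event: Dict[str, Any]) -> Tuple[Optional[str], int]:
    """Read the token and running count from an execution's input (defaults for a first run)."""
    return event.get("nextToken"), int(event.get("exportedSoFar", 0))
```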

Speed up the export

One way to increase the export bandwidth, and reduce the overall execution time, is to run the export job in parallel across the individual Security Hub member accounts rather than from the single delegated administrator account.

You could use CloudFormation StackSets to deploy this solution in each Security Hub member account and send the findings to a centralized S3 bucket. You would need to modify the solution to allow an S3 bucket to be provided as an input, and the AWS Identity and Access Management (IAM) roles for all the Lambda functions would need cross-account access to the S3 bucket and the corresponding AWS Key Management Service (AWS KMS) key. You would also need to make updates in each member account to iterate through the various Regions in which the Security Hub findings exist.

Next steps

The solution in this post is designed to assist in the retrieval and export of all existing findings currently in Security Hub. After you successfully run this solution to export historical findings, you can continuously export new Security Hub findings by using the sample solution in the aws-security-hub-findings-export GitHub repository.

Now that you’ve exported the Security Hub findings, you can set up and run custom complex reporting or queries against the S3 bucket by using Amazon Athena and AWS Glue. Additionally, you can run machine learning and analytics capabilities by using services like Amazon SageMaker or Amazon Lookout for Metrics.

Conclusion

In this post, you deployed a solution to export the existing Security Hub findings in your account to a central S3 bucket, so that you can apply complex analytics and machine learning to those findings. We walked you through how to use the solution and apply it to some example use cases after you successfully exported existing findings across your AWS environment. Now your security team can use the data in the S3 bucket for predictive analytics and determine whether there are Security Hub findings and specific resources that might need to be prioritized for review due to a deviation from normal behavior. Additionally, you can use this solution to enable more complex analytics on multiple fields by querying large and complex datasets with Amazon Athena.

If you have feedback about this post, submit comments in the Comments section below. If you have questions about this post, start a thread on AWS Security Hub re:Post.

Want more AWS Security news? Follow us on Twitter.

CVE-2022-3786 and CVE-2022-3602: Two High-Severity Buffer Overflow Vulnerabilities in OpenSSL Fixed

Post Syndicated from Rapid7 original https://blog.rapid7.com/2022/11/01/cve-2022-3786-and-cve-2022-3602-two-high-severity-buffer-overflows-in-openssl-fixed/

The Rapid7 research team will update this blog post as we learn more details about this vulnerability and its attack surface area. We expect to update this page next by 3 PM EDT on November 1, 2022.

The OpenSSL project released version 3.0.7 on November 1, 2022, to address CVE-2022-3786 and CVE-2022-3602, two high-severity vulnerabilities affecting OpenSSL’s 3.0.x version stream that were discovered and reported by Polar Bear and Viktor Dukhovni. OpenSSL is a widely used open-source cryptography library for implementing secure communications, including public/private key generation and the SSL and TLS protocols. (Currently, only the 1.1.1 and 3.0 version streams of OpenSSL are supported.) On October 25, the OpenSSL team warned maintainers and users that a fix for a critical flaw was on the way, which would have been only the second critical vulnerability ever to affect the product. Upon release, however, neither vulnerability carried a critical severity rating.

CVE-2022-3786 and CVE-2022-3602 are buffer overflow vulnerabilities in OpenSSL versions below 3.0.7, and both rely on a maliciously crafted email address in a certificate. They differ in two crucial ways. CVE-2022-3786 can overflow an arbitrary number of bytes on the stack with the "." character (a period), leading to denial of service. CVE-2022-3602 allows a crafted email address to overflow exactly four attacker-controlled bytes on the stack. OpenSSL has also published a blog post about the vulnerabilities.
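To make "exactly four bytes" concrete, the following generic illustration shows how a bounds check that is off by one can let exactly one 32-bit value (four bytes) land past the end of a fixed buffer, no matter how long the input is. This is not OpenSSL's code. The function and its names are hypothetical, and Python is used only to simulate the memory layout, with one extra slot standing in for adjacent stack memory.

```python
import struct

WORD = 4  # bytes per decoded value (one 32-bit unsigned int)


def write_values(values, capacity, off_by_one):
    """Copy 32-bit values into a buffer with room for `capacity` of them.

    `memory` has one extra slot after the buffer that stands in for
    adjacent stack memory, so an overflow is observable rather than fatal.
    Returns (ok, memory).
    """
    memory = bytearray((capacity + 1) * WORD)  # last WORD bytes = "adjacent memory"
    written = 0
    for value in values:
        # The buggy guard only rejects written > capacity, so the slot at
        # index == capacity (one past the end) is still accepted.
        full = written > capacity if off_by_one else written >= capacity
        if full:
            return False, memory
        struct.pack_into("<I", memory, written * WORD, value)
        written += 1
    return True, memory
```

With the buggy check, a longer input is still rejected, but only after one extra value has been written. The overflow is therefore capped at a single four-byte slot whose contents come from the input.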

According to the OpenSSL advisory, the vulnerabilities are triggered after certificate verification. Exploitation requires either that a CA has signed the malicious certificate or that the application continues certificate verification despite failing to construct a path to a trusted issuer. In other words, exploitability is significantly limited:

- In the case where a server is the target (a web server, database server, mail server, etc.): The server must first request client authentication as part of a mutual authentication configuration. This is an unusual configuration, typically reserved for higher-security use cases.

- In the case where a client is the target (web browser, email reader, database connector, etc.): The attacker would first need to coerce a vulnerable client into connecting to a malicious server. This could be done through impersonation (MitM on the network, hijacking an existing resource, etc.) or by giving a person an incentive to click a link (through phishing, watering holes, etc.).

For both scenarios, these kinds of attacks do not lend themselves well to widespread exploitation.
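For Python services, the server-side precondition can be checked in code. The following minimal sketch assumes the service builds its own `ssl.SSLContext`. On a server-side context, `CERT_OPTIONAL` and `CERT_REQUIRED` both cause the server to request a client certificate, which is the mutual-authentication setup that the first scenario depends on. `requests_client_certs` is a hypothetical helper. It says nothing about which OpenSSL version is linked, and a True result means "in scope for review", not "exploitable".

```python
import ssl


def requests_client_certs(server_ctx: ssl.SSLContext) -> bool:
    """Return True if a server-side context asks clients for a certificate.

    Only meaningful for server contexts: there, CERT_OPTIONAL and
    CERT_REQUIRED both send a client certificate request (mutual TLS),
    while CERT_NONE does not.
    """
    return server_ctx.verify_mode in (ssl.CERT_OPTIONAL, ssl.CERT_REQUIRED)


if __name__ == "__main__":
    plain = ssl.SSLContext(ssl.PROTOCOL_TLS_SERVER)  # server default is CERT_NONE
    mtls = ssl.SSLContext(ssl.PROTOCOL_TLS_SERVER)
    mtls.verify_mode = ssl.CERT_REQUIRED             # request and require client certs
    print(requests_client_certs(plain), requests_client_certs(mtls))
```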

Once again, these vulnerabilities only affect the OpenSSL 3.0.x version stream, which has not yet been widely adopted. We are not aware of any exploitation in the wild at the time of the vulnerabilities’ disclosure on November 1, 2022.

Affected products

- OpenSSL versions 3.0.0 to 3.0.6 (fixed in 3.0.7)

A broad array of popular distributions and technologies use OpenSSL, including many widely used Linux distributions. OpenSSL 1.x, which is unaffected, is still the most popular version stream in use. Major distribution maintainers will likely release individual updates quickly, but we expect a long tail of advisories and trailing fixes as vendors update additional implementations. Community tracking efforts, such as the one maintained by Royce Williams, and government tracking efforts, such as the one from NCSC-NL, may also help you follow individual vendors' impact statements and remediation communications.

Mitigation guidance

Organizations that are running an affected version of OpenSSL should update to 3.0.7 when practical, prioritizing operating system-level updates and public-facing shared services with direct dependencies on OpenSSL. Emergency patching is not indicated.
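To check a host, the following minimal sketch parses OpenSSL version strings and tests them against the affected range. It assumes the version appears in the form `OpenSSL X.Y.Z`, as printed by `openssl version` and exposed by Python's `ssl.OPENSSL_VERSION`. The function names are illustrative.

```python
import re
import ssl
import subprocess

_VERSION_RE = re.compile(r"\bOpenSSL (\d+)\.(\d+)\.(\d+)")


def parse_openssl_version(text):
    """Extract (major, minor, patch) from e.g. 'OpenSSL 3.0.2 15 Mar 2022', else None."""
    match = _VERSION_RE.search(text)
    return tuple(int(part) for part in match.groups()) if match else None


def is_affected(version):
    """True for OpenSSL 3.0.0 through 3.0.6, the range fixed in 3.0.7."""
    return version is not None and (3, 0, 0) <= version < (3, 0, 7)


if __name__ == "__main__":
    # The OpenSSL library this Python interpreter is linked against
    v = parse_openssl_version(ssl.OPENSSL_VERSION)
    print("python ssl:", ssl.OPENSSL_VERSION, "affected:", is_affected(v))
    # The `openssl` CLI on PATH, if any (it may link a different library)
    try:
        out = subprocess.run(["openssl", "version"], capture_output=True,
                             text=True, check=False).stdout
        print("openssl CLI:", out.strip(), "affected:", is_affected(parse_openssl_version(out)))
    except FileNotFoundError:
        print("openssl CLI: not found on PATH")
```

Treat a match as a prompt to check your vendor's advisory, not as proof of exposure. Distributions often backport fixes without changing the upstream version string, and applications that bundle or statically link their own copy of OpenSSL will not show up here.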

Rapid7 customers

Our engineering team is in the process of developing both authenticated and unauthenticated vulnerability checks to allow InsightVM and Nexpose customers to assess their exposure to CVE-2022-3786 and CVE-2022-3602. We expect these checks to be available in a content release today (November 1, 2022).

In the meantime, InsightVM customers can use Query Builder with the query software.description CONTAINS OpenSSL 3 to find potentially affected assets. Nexpose and InsightVM customers can create a Dynamic Asset Group with a filtered asset search looking for Software name contains OpenSSL 3.

Additionally, Nexpose and InsightVM customers can use the following SQL query in a SQL Query Export (Security Console -> Reports -> SQL Query Export) to identify whether they have (any version of) OpenSSL in their environments. This query will produce a CSV file with a list of assets containing installed software with “openssl” in its title, and the corresponding version previously found in scans or Insight Agent-based assessments:

    SELECT da.sites          AS "Site_Name",
           da.ip_address     AS "IP_Address",
           da.mac_address    AS "MAC_Address",
           da.host_name      AS "DNS_Hostname",
           ds.vendor         AS "Vendor",
           ds.name           AS "Software_Name",
           ds.family         AS "Software_Family",
           ds.version        AS "Software_Version",
           ds.software_class AS "Software_Class"
    FROM dim_asset_software das
    JOIN dim_software ds USING (software_id)
    JOIN dim_asset da ON da.asset_id = das.asset_id
    WHERE ds.software_class LIKE '%'
      AND ds.name ILIKE '%openssl%'
    ORDER BY ds.name ASC

The Software_Version column of the CSV can be used to narrow the scope to OpenSSL 3.x. Note that this query may also return packages that are not OpenSSL itself, such as libgnutls-openssl27, whose version numbers start with 3 but do not correspond to OpenSSL 3.0.x.
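The CSV export can be post-processed to keep only rows that look like OpenSSL 3.0.0 through 3.0.6 and to skip look-alike packages. The following minimal sketch takes its column names from the aliases in the query above. It assumes that upstream-style versions appear at the start of Software_Version (for example, `3.0.2` or `3.0.2-0ubuntu1.6`). The deny-list is hypothetical and seeded only with the libgnutls-openssl27 example.

```python
import csv
import io
import re

# Upstream 3.0.x version at the start of Software_Version, e.g. "3.0.2" or "3.0.2-0ubuntu1.6"
_V30 = re.compile(r"^3\.0\.(\d+)")
# Hypothetical deny-list for packages that merely contain "openssl" in their name
_NOT_OPENSSL = ("gnutls",)


def candidate_rows(csv_text):
    """Yield export rows that look like OpenSSL 3.0.0 through 3.0.6."""
    for row in csv.DictReader(io.StringIO(csv_text)):
        name = row["Software_Name"].lower()
        if any(word in name for word in _NOT_OPENSSL):
            continue  # skip look-alikes such as libgnutls-openssl27
        match = _V30.match(row["Software_Version"].strip())
        if match and int(match.group(1)) < 7:
            yield row
```

As with any version-based check, a vendor build that reports 3.0.x may already include a backported fix, so use the output as a review list rather than a final verdict.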

OpenSSL 3.0.7 released

Post Syndicated from original https://lwn.net/Articles/913370/

The much-anticipated OpenSSL 3.0.7 release, which fixes some high-risk security problems, is available. The release notes list two vulnerabilities (CVE-2022-3786 and CVE-2022-3602) that have not yet been documented on the OpenSSL vulnerabilities page. LWN commenter mat2 has provided the relevant information, though. It is worth updating quickly, but many sites do not appear to be at immediate risk.

Karen MacNeil | The Wine Bible: 3rd Edition | Talks at Google

Post Syndicated from Talks at Google original https://www.youtube.com/watch?v=Dh284bcMP9U