Post Syndicated from Crosstalk Solutions original https://www.youtube.com/watch?v=Sjo4Y6YjCi8

Elections 2021: applications for voting abroad

Post Syndicated from original https://yurukov.net/blog/2021/izbori2021-zaqvleniq/

Exactly three days ago the Central Election Commission (CIK) launched the electronic form for applications to vote abroad. Minutes later, Da, Bulgaria published a link on its page with advice on how to vote. Many volunteers and organizations also urged Bulgarians abroad to submit such applications so that more polling stations can be opened. It is precisely thanks to the preparation and the several parallel information campaigns of these groups that a record number of applications was reached in the very first hours.

Tips for the applications

Soon after the launch I sent an email with instructions to about 2,000 active Bulgarians abroad who had subscribed to news from the Glasuvam.org portal. Here they are in brief:

- Applications are submitted electronically on the website of the Central Election Commission (CIK) until March 9, 2021

- In the electronic form, enter your details exactly as they appear on your ID card, including the address and your name in Cyrillic. The exact address abroad is not required; it is enough to indicate a city

- From the list of places you can choose one near you or add a new one. This table or this map shows more clearly where they are.

- It is strongly recommended to support the opening of an already added place, so that there are not many nearby places with a few applications each, none of which becomes a polling station. If you add a new one, write the name of the city in Cyrillic or exactly as it is spelled in the local language

- When you submit an application to vote abroad, you are removed from the voter list in Bulgaria. Submit such an application only if you know that you will vote outside Bulgaria

- If the site rejects the application, check for your name in this list. It will contain information about the reason and the error that was found. Usually it is due to the spelling of the names or the address as on the ID card.

- If you have a problem with the application, write to [email protected]

Another important point is that you can submit an application and vote with expired identity documents. According to the Law on Measures and Actions during the State of Emergency, identity documents that expired between March 2020 and the end of January 2021 remain valid. In this sense, anyone with a document that expired in that window can use it for the elections. I have already confirmed that the electronic application form accepts them.

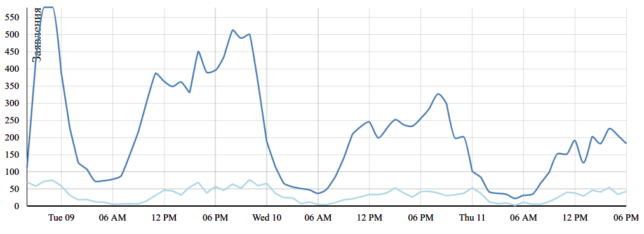

Activity so far

Soon after the link to the form was shared on social networks, applications poured in. In the first 90 minutes there were 450 applications, and in less than a day, 5,000. At this moment there are already 15,000 and the number is growing fast. At least 60 applications have already been collected for each of 64 polling stations outside our diplomatic missions.

This activity is surprising given what we have seen in previous elections. During the European elections there were 8,673 applications in total. At the time I warned that there was a serious problem with turnout. In the previous parliamentary elections in 2017 there were about 45 thousand applications over all 4 weeks of the collection campaign. Now we have passed one third of that in just three days.

The chart above is part of the dynamic table with which I track how the polling stations are filling up. It is a useful tool for seeing how many stations have been secured where, and where more applications are needed. It was made precisely to be of use to those organizing such stations locally.

It is updated every 5 minutes by downloading the data from the table of the Central Election Commission (CIK), then cleaning and processing it into an understandable format. You will see the same data on the map of applications that I have been publishing for years. It will be followed by a map of the polling stations once they are known.
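
The cleaning and counting step described above can be sketched roughly as follows. This is a minimal illustration and not the actual code behind the table: the input rows, the `normalize_place` helper, and the threshold constant are assumptions made for the example (the figure of 60 is taken from the count quoted earlier in this post).

```python
from collections import Counter

# Assumed threshold, taken from the "at least 60 applications" figure above.
REQUIRED_APPLICATIONS = 60


def normalize_place(raw: str) -> str:
    """Collapse whitespace and case so trivially different spellings match."""
    return " ".join(raw.split()).casefold()


def count_by_place(rows):
    """Count applications per (country, place) pair.

    `rows` is a hypothetical iterable of (country, place) string tuples,
    standing in for whatever the downloaded table contains.
    """
    counts = Counter()
    for country, place in rows:
        counts[(normalize_place(country), normalize_place(place))] += 1
    return counts


def places_still_short(counts, required=REQUIRED_APPLICATIONS):
    """Return how many applications each under-threshold place still needs."""
    return {key: required - n for key, n in counts.items() if n < required}
```

Normalizing only whitespace and case is deliberately conservative; merging genuinely different spellings of the same place is a judgment call that the sketch does not attempt.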

The risk of applications being "diluted"

One of the questions that comes up regularly is about the loss of applications. This happens because the Central Election Commission (CIK) allows the voting place to be entered in the application as free text. This leads to entries such as "somewhere around the … stop". So, on the one hand, the exact location of some requests cannot be determined, and on the other, an overly precise description of others can scatter them across the outskirts of a city, so that there are not enough for any single point and no polling station is opened.

The reason for the free text is that this was an option many insisted on at the very beginning, when the electronic form was introduced. The benefit is that volunteers and organizations can coordinate efforts for a station at a precisely defined place. If the CIK determined in which cities an application could be submitted, this would practically stop such initiatives by people wanting to organize.

Still, before any of us can choose the "enter it yourself" option, we are shown all places added up to that moment. So there is first the choice to support them. There were some problems in this part of the CIK form, with some cities listed under the wrong countries. This was quickly fixed, however, and did not lead to lost votes. There was a short period today in which the form itself stopped opening, but that too was resolved quickly. The very fact that someone noticed shows how much activity we are seeing at the moment.

Indeed, there is a risk that some applications will be lost, but according to my analyses so far, in the vast majority of cases organizing stations close to our communities has been successful. That is exactly why, even before the campaign begins, we always advise those wishing to vote abroad to find out who is organizing a station near them and, if possible, to join as volunteers. The map above also helps in this regard.

Separately, the CIK also merges some applications for evidently identical places spelled differently. This is done by an explicit decision of the commission and helps to bring together even applicants who asked to vote close to one another.

The actual opening of polling stations

The main goal of the campaign is to provide grounds for opening polling stations in places with a large concentration of Bulgarians. While in consulates and embassies this will happen automatically, elsewhere local communities have to organize everything, including commissions, premises, and rent.

Collecting enough applications for a place suggests, but does not oblige, the Central Election Commission (CIK) to open a station there. Apart from some restrictions in the Electoral Code, the host country may not grant permission, or the organizational capacity may be lacking. The latter means that there are not enough officials to take part in every station. This, however, is quite rare.

A greater risk for the stations, however, is the ongoing pandemic. Social distancing requirements, closures of restaurants, schools, and clubs, as well as movement restrictions may make it difficult or even impossible to organize stations in some places. This may also be a problem for our diplomatic missions, which traditionally lack enough space, so fewer stations with fewer ballot boxes may be opened than in previous elections.

Voting itself will be made even more difficult, a task the CIK has so far pursued actively by introducing ever more confusing and complicated bureaucratic hurdles. Crowding in front of the stations will create a problem with local authorities, and distancing requirements will slow down the voting of each individual. That is why it is even more important to have more stations in more places: to avoid the hours-long queues we saw in cities such as Berlin, London, and Frankfurt.

Next steps

It is important to get in touch with your friends and relatives abroad and urge them to support the opening of polling stations near them. An important subtlety of these applications is that by submitting them you are removed from the voter lists in Bulgaria. Those who worry that their vote will be misused can thereby make sure this will not happen. It is important, however, to know for certain that you will vote abroad on April 4, because after the application you will not be able to exercise your right to vote in the country.

On the other hand, submitting an application for one station abroad does not necessarily mean you must vote there. A station may not be opened at the place you chose. Even if there is one, you can go to any other station and vote there. You simply fill in a declaration that you have not voted elsewhere and you are added to the list. Be careful, though, because the data from the lists abroad are checked and sanctions follow for multiple voting.

We have yet to learn about the anti-epidemic measures planned for the vote. I will again send details to everyone subscribed at Glasuvam.org. In the meantime you can still contact the local Bulgarian association or consulate to join as a volunteer in organizing the vote. You can also sign up as a defender with Ti Broish (Ти Броиш), to be able to be present at the count and make sure there are no accidental or deliberate swaps.

The post Elections 2021: applications for voting abroad first appeared on Блогът на Юруков.

[$] kcmp() breaks loose

Post Syndicated from original https://lwn.net/Articles/845448/rss

Given the large set of system calls implemented by the Linux kernel, it would not be surprising for most people to be unfamiliar with a few of them. Not everybody needs to know the details of setresgid(), modify_ldt(), or lookup_dcookie(), after all. But even developers who have a wide understanding of the Linux system-call set may be surprised by kcmp(), which is not enabled by default in the kernel build. It would seem, though, that the word has gotten out, leading to an effort to make kcmp() more widely available.

‘Oumuamua: From Beyond the Stars

Post Syndicated from Geographics original https://www.youtube.com/watch?v=MarE0gP5-hw

Security updates for Thursday

Post Syndicated from original https://lwn.net/Articles/845750/rss

Security updates have been issued by Debian (firejail and netty), Fedora (java-1.8.0-openjdk, java-11-openjdk, rubygem-mechanize, and xpdf), Mageia (gstreamer1.0-plugins-bad, nethack, and perl-Email-MIME and perl-Email-MIME-ContentType), openSUSE (firejail, java-11-openjdk, python, and rclone), Red Hat (dotnet, dotnet3.1, dotnet5.0, and rh-nodejs12-nodejs), SUSE (firefox, kernel, python, python36, and subversion), and Ubuntu (gnome-autoar, junit4, openvswitch, postsrsd, and sqlite3).

Rust 1.50.0 released

Post Syndicated from original https://lwn.net/Articles/845748/rss

Version 1.50.0 of the Rust language has been released. “For this release, we have improved array indexing, expanded safe access to union fields, and added to the standard library.”

Ultimate 50mm Showdown – Nikon F vs Z (pt1)

Post Syndicated from Matt Granger original https://www.youtube.com/watch?v=tGQBYQ33WX0

The chips the state will put on cars are the wrong solution

Post Syndicated from Bozho original https://blog.bozho.net/blog/3691

The IAAA, the Executive Agency Automobile Administration (better known as DAI), will put chips on cars (more specifically, chips in the roadworthiness inspection stickers). This is a rather worrying approach with many problems and few benefits.

Let's start with the purely procedural aspects: the IAAA website has no information about a public consultation on the matter. There is one on strategy.bg, but its rationale does not touch on the chips at all. This happened last year, but I do not recall an adequate discussion of the topic. That is why I am only now learning about it.

The amendments to the ordinance aim to mark the environmental performance of vehicles, which in itself is good, but I would not implement the measures through a mandatory RFID sticker. The ordinance speaks of a "sticker", and only in an annex at the end of the ordinance does it become clear that there will be a chip. What are the problems (and you will excuse the technical details, but they matter too)?

- Anyone can read at least part of the chip's contents. UHF RFID can be read at 5-6 meters, but depending on the size this can reach 30 meters, so with relatively simple equipment anyone can record the numbers of cars passing through certain places.

- The chosen standard is not intended for such purposes. EPCGlobal is a standard that can replace barcodes for product labeling. It does not provide for data encryption. According to the ordinance some data in the chip will be "encoded", but it does not say how. Encoding is a non-cryptographic transformation, i.e. no key is needed to decode the recorded information. Even if the wrong term was used, the ordinance does not say who creates and keeps the keys, or how. In practice, then, even the "encoded" data is accessible to everyone, or at least to "certain people". This data includes technical parameters but also administrative ones: registration number, registration date, and others. It also includes quite a bit of data potentially useful to car thieves, for example.

- Reading license plates is done with cameras, which must be marked and are visible on the road. Reading an RFID chip happens silently, without you knowing that someone has read it.

- Such chips can usually be cloned (the standard defines various protection options, but the ordinance does not say which will be used). That is, counterfeit chips will appear on the black market.

- Nothing is provided regarding shielding of the chip or damage to it. That is, at some point half the chips in the stickers could quite easily stop working.

- According to the agency's executive director (who previously worked at KAT and has a reputation as a good professional), the chip will contain data about the car's owner, not just technical parameters of the car. That would be extremely worrying, but according to the ordinance it is not true. So is Mr. Ranovski mistaken, did the media misreport his words, or does the IAAA plan to include personal data?

- There is no need for this. Information about the environmental class and the other technical parameters should reside in a centralized database, not be scattered around on chips. The authorities that need it will be able to obtain it by the vehicle's registration number (through manual entry or automatic checks via cameras), and each lookup will leave the audit trail needed to protect against abuse. If a municipality wants to control which cars are permitted on days with elevated pollution, let it do so with cameras, not with chip readers.
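
To illustrate the point above about "encoded" data: an encoding such as Base64 can be reversed by anyone, with no key involved. The sketch below is only an illustration; the record contents and field layout are made up and are not taken from the ordinance or from the EPCGlobal standard.

```python
import base64

# Hypothetical record; the real layout of the sticker data is not known here.
record = "CA1234AB;2015-06-01"

# "Encoding" the record: a reversible transformation that uses no key.
encoded = base64.b64encode(record.encode("utf-8"))

# Anyone who reads the encoded bytes can recover the original without a secret.
decoded = base64.b64decode(encoded).decode("utf-8")
```

Encryption, by contrast, would require a secret key to reverse, which is exactly where the questions of who creates and stores the keys come in.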

I believe the chosen solution is ill-considered, wrong, potentially expensive, of questionable security, carrying risks of unregulated surveillance (both by private individuals and by state bodies), open to manipulation, and unnecessary.

The post The chips the state will put on cars are the wrong solution was first published on БЛОГодаря.

Machine learning and depth estimation using Raspberry Pi

Post Syndicated from David Plowman original https://www.raspberrypi.org/blog/machine-learning-and-depth-estimation-using-raspberry-pi/

One of our engineers, David Plowman, describes machine learning and shares news of a Raspberry Pi depth estimation challenge run by ETH Zürich (Swiss Federal Institute of Technology).

Spoiler alert – it’s all happening virtually, so you can definitely make the trip and attend, or maybe even enter yourself.

What is Machine Learning?

Machine Learning (ML) and Artificial Intelligence (AI) are some of the top engineering-related buzzwords of the moment, and foremost among current ML paradigms is probably the Artificial Neural Network (ANN).

ANNs involve millions of tiny calculations, merged together in a giant biologically inspired network (hence the name). These networks typically have millions of parameters that control the calculations, and those parameters must be optimised for each task at hand.

This process of optimising the parameters so that a given set of inputs correctly produces a known set of outputs is known as training, and is what gives rise to the sense that the network is “learning”.
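
As a toy illustration of what "training" means, the sketch below fits a single-parameter model y = w * x to known input/output pairs by gradient descent. Real networks have millions of parameters and are trained with a framework; the data, learning rate, and step count here are arbitrary assumptions chosen for the example.

```python
def train(samples, learning_rate=0.01, steps=500):
    """Fit y = w * x to (x, y) samples by minimising mean squared error."""
    w = 0.0
    for _ in range(steps):
        # Gradient of mean((w*x - y)^2) with respect to w.
        grad = sum(2 * (w * x - y) * x for x, y in samples) / len(samples)
        w -= learning_rate * grad
    return w


samples = [(1.0, 2.0), (2.0, 4.0), (3.0, 6.0)]  # generated by y = 2x
w = train(samples)  # converges towards 2.0
```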

Machine Learning frameworks

A number of well-known companies produce free ML frameworks that you can download and use on your own computer. Training a network runs best on machines with powerful CPUs and GPUs, but even running an already-trained network (known as inference) can be computationally expensive.

One of the most popular frameworks is Google’s TensorFlow (TF), and since this is rather resource intensive, they also produce a cut-down version optimised for less powerful platforms. This is TensorFlow Lite (TFLite), which can be run effectively on Raspberry Pi.

Depth estimation

ANNs have proven very adept at a wide variety of image processing tasks, most notably object classification and detection, but also depth estimation. This is the process of taking one or more images and working out how far away every part of the scene is from the camera, producing a depth map.

Here’s an example:

The image on the right shows, by the brightness of each pixel, how far away the objects in the original (left-hand) image are from the camera (darker = nearer).

We distinguish between stereo depth estimation, which starts with a stereo pair of images (taken from marginally different viewpoints; here, parallax can be used to inform the algorithm), and monocular depth estimation, working from just a single image.
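
For the stereo case, the role of parallax can be made concrete with the standard pinhole relation for a rectified stereo pair, Z = f * B / d, where d is the disparity in pixels. The sketch below is illustrative only; the focal length and baseline values are assumptions and do not describe any particular camera.

```python
def depth_from_disparity(disparity_px, focal_length_px, baseline_m):
    """Depth in metres for a rectified stereo pair: Z = f * B / d."""
    if disparity_px <= 0:
        return float("inf")  # no measurable parallax: treat as infinitely far
    return focal_length_px * baseline_m / disparity_px


# A large shift between the two views means a near object, a small shift a far one.
near = depth_from_disparity(40.0, focal_length_px=800.0, baseline_m=0.1)
far = depth_from_disparity(4.0, focal_length_px=800.0, baseline_m=0.1)
```

Monocular estimation has no disparity to measure, which is why it relies on a trained network instead.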

The applications of such techniques should be clear, ranging from robots that need to understand and navigate their environments, to the fake bokeh effects beloved of many modern smartphone cameras.

Depth Estimation Challenge

We were very interested then to learn that, as part of the CVPR (Computer Vision and Pattern Recognition) 2021 conference, Andrey Ignatov and Radu Timofte of ETH Zürich were planning to run a Monocular Depth Estimation Challenge. They are specifically targeting the Raspberry Pi 4 platform running TFLite, and we are delighted to support this effort.

For more information, or indeed if any technically minded readers are interested in entering the challenge, please visit:

- CVPR 2021 – conference homepage

- Mobile AI Workshop 2021

- Monocular Depth Estimation Challenge

The conference and workshops are all taking place virtually in June, and we’ll be sure to update our blog with some of the results and models produced for Raspberry Pi 4 by the competing teams. We wish them all good luck!

The post Machine learning and depth estimation using Raspberry Pi appeared first on Raspberry Pi.

[$] LWN.net Weekly Edition for February 11, 2021

Post Syndicated from original https://lwn.net/Articles/845091/rss

The LWN.net Weekly Edition for February 11, 2021 is available.

[$] Python cryptography, Rust, and Gentoo

Post Syndicated from original https://lwn.net/Articles/845535/rss

There is always a certain amount of tension between the goals of those using older, less-popular architectures and the goals of projects targeting more mainstream users and systems. In many ways, our community has been spoiled by the number of architectures supported by GCC, but a lot of new software is not being written in C, and existing software is migrating away from it. The Rust language is often the choice these days for both new and existing code bases, but it is built with LLVM, which supports fewer architectures than GCC supports (and Linux runs on). So the question that arises is how much these older, non-Rusty architectures should be able to hold back future development; the answer, in several places now, has been “not much”.

Scaling Neuroscience Research on AWS

Post Syndicated from Konrad Rokicki original https://aws.amazon.com/blogs/architecture/scaling-neuroscience-research-on-aws/

HHMI’s Janelia Research Campus in Ashburn, Virginia has an integrated team of lab scientists and tool-builders who pursue a small number of scientific questions with potential for transformative impact. To drive science forward, we share our methods, results, and tools with the scientific community.

Introduction

Our neuroscience research application involves image searches that are computationally intensive but have unpredictable and sporadic usage patterns. The conventional on-premises approach is to purchase a powerful and expensive workstation, install and configure specialized software, and download the entire dataset to local storage. With 16 cores, a typical search of 50,000 images takes 30 seconds. A serverless architecture using AWS Lambda allows us to do this job in seconds for a few cents per search, and is capable of scaling to larger datasets.

Parallel Computation in Neuroscience Research

Basic research in neuroscience is often conducted on fruit flies. This is because their brains are small enough to study in a meaningful way with current tools, but complex enough to produce sophisticated behaviors. Conducting such research nonetheless requires an immense amount of data and computational power. Janelia Research Campus developed the NeuronBridge tool on AWS to accelerate scientific discovery by scaling computation in the cloud.

Figure 1: A “mask image” (on the left) is compared to many different fly brains (on the right) to find matching neurons. (Janelia Research Campus)

The fruit fly (Drosophila melanogaster) has about 100,000 neurons and its brain is highly stereotyped. This means that the brain of one fruit fly is similar to the next one. Using electron microscopy (EM), the FlyEM project has reconstructed a wiring diagram of a fruit fly brain. This connectome includes the structure of the neurons and the connectivity between them. But EM is only half of the picture. Once scientists know the structure and connectivity, they must perform experiments to find what purpose the neurons serve.

Flies can be genetically modified to reproducibly express a fluorescent protein in certain neurons, causing those neurons to glow under a light microscope (LM). By iterating through many modifications, the FlyLight project has created a vast genetic driver library. This allows scientists to target individual neurons for experiments. For example, blocking a particular neuron of a fly from functioning, and then observing its behavior, allows a scientist to understand the function of that neuron. Through the course of many such experiments, scientists are currently uncovering the function of entire neuronal circuits.

We developed NeuronBridge, a tool available for use by neuroscience researchers around the world, to bridge the gap between the EM and LM data. Scientists can start with EM structure and find matching fly lines in LM. Or they may start with a fly line and find the corresponding neuronal circuits in the EM connectome.

Both EM and LM produce petabytes of 3D images. Image processing and machine learning algorithms are then applied to discern neuron structure. We also developed a computational shortcut called color depth MIP to represent depth as color. This technique compresses large 3D image stacks into smaller 2D images that can be searched efficiently.
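
The idea of representing depth as color can be sketched in a few lines. This is not the authors' implementation: the hue mapping, the intensity range, and the nested-list data layout are assumptions chosen to keep the example dependency-free.

```python
import colorsys


def color_depth_mip(volume):
    """Collapse a 3D stack volume[z][y][x] (intensities in 0..1) to a 2D RGB image.

    For each (y, x) the brightest voxel along z is kept; its z index picks the
    hue and its intensity picks the brightness.
    """
    depth = len(volume)
    height = len(volume[0])
    width = len(volume[0][0])
    image = []
    for y in range(height):
        row = []
        for x in range(width):
            # Index of the brightest voxel along the z axis.
            z_max = max(range(depth), key=lambda z: volume[z][y][x])
            intensity = volume[z_max][y][x]
            # Map depth to hue; 0.75 keeps the far end from wrapping back to red.
            hue = 0.75 * z_max / max(depth - 1, 1)
            row.append(colorsys.hsv_to_rgb(hue, 1.0, intensity))
        image.append(row)
    return image
```

The result has one RGB triple per (y, x) position, so a stack of any depth shrinks to a single 2D image while the color still records roughly where along z the signal was.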

Image search is an embarrassingly parallel problem ideally suited to parallelization with simple functions. In a typical search, the scientist will create a “mask image,” which is a color depth image featuring only the neuron they want to find. The search algorithm must then compare this image to hundreds of thousands of other images. The paradigm of launching many short-lived cloud workers, termed burst-parallel compute, was originally suggested by a group at UCSD. To scale NeuronBridge, we decided to build a serverless AWS-native implementation of burst-parallel image search.

The Architecture

Our main reason for using a serverless approach was that our usage patterns are unpredictable and sporadic. The total number of researchers who are likely to use our tool is not large, and only a small fraction of them will need the tool at any given time. Furthermore, our tool could go unused for weeks at a time, only to get a flood of requests after a new dataset is published. A serverless architecture allows us to cope with this unpredictable load. We can keep costs low by only paying for the compute time we actually use.

One challenge of implementing a burst-parallel architecture is that each Lambda invocation requires a network call, with the ensuing network latency. Spawning several thousands of functions from a single manager function can take many seconds. The trick to minimizing this latency is to parallelize these calls by recursively spawning concurrent managers in a tree structure. Each leaf in this tree spawns a set of worker functions to do the work of searching the imagery. Each worker reads a small batch of images from Amazon Simple Storage Service (S3). They are then compared to the mask image, and the intermediate results are written to Amazon DynamoDB, a serverless NoSQL database.
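
The tree-shaped fan-out can be sketched without any AWS calls by computing which manager would own which range of batches. The function name, parameters, and numbers below are hypothetical; in a real deployment each recursive call would be an asynchronous function invocation rather than a local call.

```python
def plan_dispatch(start, end, max_workers_per_leaf, branching=2, depth=0):
    """Recursively split the batch range [start, end) into a tree of managers.

    Returns a list of (depth, start, end) leaves; each leaf would launch one
    worker per batch in its range. Assumes branching >= 2 and
    max_workers_per_leaf >= 1 so that the recursion terminates.
    """
    count = end - start
    if count <= max_workers_per_leaf:
        return [(depth, start, end)]
    leaves = []
    # Ceiling division so the children cover the whole range.
    step = -(-count // branching)
    for child_start in range(start, end, step):
        child_end = min(child_start + step, end)
        leaves.extend(
            plan_dispatch(child_start, child_end, max_workers_per_leaf,
                          branching, depth + 1)
        )
    return leaves


leaves = plan_dispatch(0, 3000, max_workers_per_leaf=100, branching=4)
```

Because every level of the tree invokes its children concurrently, the number of sequential network round trips grows with the depth of the tree rather than with the total number of workers.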

Figure 2: Serverless architecture for burst-parallel search

Search state is monitored by an AWS Step Functions state machine, which checks DynamoDB once per second. When all the results are ready, the Step Functions state machine runs another Lambda function to combine and sort the results. The state machine addresses error conditions and timeouts, and updates the browser when the asynchronous search is complete. We opted to use AWS AppSync to notify a React web client, providing an interactive user experience while remaining entirely serverless.

As we scaled to 3,000 concurrent Lambda functions reading from our data bucket, we reached Amazon S3’s limit of 5,500 GETs per second per prefix. The fix was to create numbered prefix folders and then randomize our key list. Each worker could then search a random list of images across many prefixes. This change distributed the load from our highly parallel functions across a number of S3 shards, and allowed us to run with much higher parallelism.
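
A rough sketch of the prefix fix follows, under assumed naming: the four-digit prefix format, the round-robin assignment, and the batch size are illustrative choices, not details from the actual implementation.

```python
import random


def assign_prefixes(image_names, num_prefixes):
    """Spread objects across numbered prefix folders, round-robin."""
    return [f"{i % num_prefixes:04d}/{name}" for i, name in enumerate(image_names)]


def make_batches(keys, batch_size, seed=None):
    """Shuffle the key list and cut it into per-worker batches."""
    shuffled = list(keys)
    random.Random(seed).shuffle(shuffled)
    return [shuffled[i:i + batch_size] for i in range(0, len(shuffled), batch_size)]


names = [f"img_{n}.png" for n in range(1000)]
keys = assign_prefixes(names, num_prefixes=10)
batches = make_batches(keys, batch_size=50, seed=0)
```

With the keys shuffled, each worker's batch mixes many prefixes, so no single prefix receives all of the concurrent reads.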

We also addressed cold-start latency. Infrequently used Lambda functions take longer to start than recently used ones, and our unpredictable usage patterns meant that we were experiencing many cold starts. In our Java functions, we found that most of the cold-start time was attributed to JVM initialization and class loading. Although many mitigations for this exist, our worker logic was small enough that rewriting the code to use Node.js was the obvious choice. This immediately yielded a huge improvement, reducing cold starts from 8-10 seconds down to 200 ms.

With all of these insights, we developed a general-purpose parallel computation framework called burst-compute. This AWS-native framework runs as a serverless application to implement this architecture. It allows you to massively scale your own custom worker functions and combiner functions. We used this new framework to implement our image search.

Conclusion

The burst-parallel architecture is a powerful new computation paradigm for scientific computing. It takes advantage of the enormous scale and technical innovation of the AWS Cloud to provide near-interactive on-demand compute without expensive hardware maintenance costs. As scientific computing capability matures for the cloud, we expect this kind of large-scale parallel computation to continue becoming more accessible. In the future, the cloud could open doors to entirely new types of scientific applications, visualizations, and analysis tools.

We would like to express our thanks to AWS Solutions Architects Scott Glasser and Ray Chang, for their assistance with design and prototyping, and to Geoffrey Meissner for reviewing drafts of this write-up.

Source Code

All of the application code described in this article is open source and licensed for reuse:

The data and imagery are shared publicly on the Registry of Open Data on AWS.

Mitigate data leakage through the use of AppStream 2.0 and end-to-end auditing

Post Syndicated from Chaim Landau original https://aws.amazon.com/blogs/security/mitigate-data-leakage-through-the-use-of-appstream-2-0-and-end-to-end-auditing/

Customers want to use AWS services to operate on their most sensitive data, but they want to make sure that only the right people have access to that data. Even when the right people are accessing data, customers want to account for what actions those users took while accessing the data.

In this post, we show you how you can use Amazon AppStream 2.0 to grant isolated access to sensitive data and decrease your attack surface. In addition, we show you how to achieve end-to-end auditing, which is designed to provide full traceability of all activities around your data.

To demonstrate this idea, we built a sample solution that provides a data scientist with access to an Amazon SageMaker Studio notebook using AppStream 2.0. The solution deploys a new Amazon Virtual Private Cloud (Amazon VPC) with isolated subnets, where the SageMaker notebook and AppStream 2.0 instances are set up.

Why AppStream 2.0?

AppStream 2.0 is a fully-managed, non-persistent application and desktop streaming service that provides access to desktop applications from anywhere by using an HTML5-compatible desktop browser.

Each time you launch an AppStream 2.0 session, a freshly-built, pre-provisioned instance is provided, using a prebuilt image. As soon as you close your session and the disconnect timeout period is reached, the instance is terminated. This allows you to carefully control the user experience and helps to ensure a consistent, secure environment each time. AppStream 2.0 also lets you enforce restrictions on user sessions, such as disabling the clipboard, file transfers, or printing.

Furthermore, AppStream 2.0 uses AWS Identity and Access Management (IAM) roles to grant fine-grained access to other AWS services such as Amazon Simple Storage Service (Amazon S3), Amazon Redshift, and Amazon SageMaker. This gives you both control over the access and an accounting, via AWS CloudTrail, of what actions were taken and when.

These features make AppStream 2.0 uniquely suitable for environments that require high security and isolation.

Why SageMaker?

Developers and data scientists use SageMaker to build, train, and deploy machine learning models quickly. SageMaker does most of the work of each step of the machine learning process to help users develop high-quality models. SageMaker access from within AppStream 2.0 provides your data scientists and analysts with a suite of common and familiar data-science packages to use against isolated data.

Solution architecture overview

This solution allows a data scientist to work with a data set while connected to an isolated environment that doesn’t have an outbound path to the internet.

First, you build an Amazon VPC with isolated subnets and with no internet gateways attached. This ensures that any instances stood up in the environment don’t have access to the internet. To provide the resources inside the isolated subnets with a path to commercial AWS services such as Amazon S3, SageMaker, and AWS Systems Manager, you build VPC endpoints and attach them to the VPC, as shown in Figure 1.

Figure 1: Network Diagram

You then build an AppStream 2.0 stack and fleet, and attach a security group and IAM role to the fleet. The purpose of the IAM role is to provide the AppStream 2.0 instances with access to downstream AWS services such as Amazon S3 and SageMaker. The IAM role design follows the least privilege model, to ensure that only the access required for each task is granted.

While building the stack, you enable AppStream 2.0 Home Folders. This feature builds an S3 bucket where users can store files from inside their AppStream 2.0 session. The bucket is designed with a dedicated prefix for each user, to which only that user has access. We use this prefix to store the user’s pre-signed SageMaker URLs, ensuring that no user can access another user’s SageMaker notebook.

You then deploy a SageMaker notebook for the data scientist to use to access and analyze the isolated data.

To confirm that the user ID on the AppStream 2.0 session hasn’t been spoofed, you create an AWS Lambda function that compares the user ID of the data scientist against the AppStream 2.0 session ID. If the user ID and session ID match, this indicates that the user ID hasn’t been impersonated.

Once the session has been validated, the Lambda function generates a pre-signed SageMaker URL that gives the data scientist access to the notebook.
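
The validate-then-issue flow can be sketched as below. This is a simplified illustration, not the solution's actual Lambda code: the session dictionaries with `Id` and `UserId` keys and the injected `presign` callable are assumptions made so the example runs without AWS access.

```python
def validate_session(user_id, session_id, active_sessions):
    """True if `session_id` is an active session that belongs to `user_id`."""
    return any(
        s.get("Id") == session_id and s.get("UserId") == user_id
        for s in active_sessions
    )


def issue_notebook_url(user_id, session_id, active_sessions, presign):
    """Return a pre-signed URL only when the claimed user owns the session.

    `presign` is a hypothetical callable standing in for whatever service
    call creates the pre-signed notebook URL.
    """
    if not validate_session(user_id, session_id, active_sessions):
        raise PermissionError("user ID does not match the streaming session")
    return presign(user_id)
```

Keeping the URL generation behind the check means a request with a mismatched user ID fails before any URL is created.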

Finally, you enable AppStream 2.0 usage reports to ensure that you have end-to-end auditing of your environment.

To help you easily deploy this solution into your environment, we’ve built an AWS Cloud Development Kit (AWS CDK) application and stacks, using Python. To deploy this solution, go to the Deploy the solution section in this blog post.

Note: This solution was built with all resources in a single AWS Region. Multi-Region support is possible but isn’t covered in this blog post.

Solution requirements

Before you build a solution, you must know your security requirements. The solution in this post assumes a set of standard security requirements that you typically find in an enterprise environment:

- User authentication is provided by a Security Assertion Markup Language (SAML) identity provider (IdP).

- IAM roles are used to access AWS services such as Amazon S3 and SageMaker.

- AWS IAM access keys and secret keys are prohibited.

- IAM policies follow the least privilege model so that only the required access is granted.

- Windows clipboard, file transfer, and printing to local devices are prohibited.

- Auditing and traceability of all activities is required.

Note: before you will be able to integrate SAML with AppStream 2.0, you will need to follow the AppStream 2.0 Integration with SAML 2.0 guide. There are quite a few steps and it will take some time to set up. SAML authentication is optional, however. If you just want to prototype the solution and see how it works, you can do that without enabling SAML integration.

Solution components

This solution uses the following technologies:

- Amazon VPC – provides an isolated network where the solution will be deployed.

- VPC endpoints – provide access from the isolated network to commercial AWS services such as Amazon S3 and SageMaker.

- AWS Systems Manager – stores parameters such as S3 bucket names.

- AppStream 2.0 – provides hardened instances to run the solution on.

- AppStream 2.0 home folders – store users’ session information.

- Amazon S3 – stores application scripts and pre-signed SageMaker URLs.

- SageMaker notebook – provides data scientists with tools to access the data.

- AWS Lambda – runs scripts to validate the data scientist’s session, and generates pre-signed URLs for the SageMaker notebook.

- AWS CDK – deploys the solution.

- PowerShell – processes scripts on AppStream 2.0 Microsoft Windows instances.

Solution high-level design and process flow

The following figure is a high-level depiction of the solution and its process flow.

Figure 2: Solution process flow

The process flow—illustrated in Figure 2—is:

- A data scientist clicks on an AppStream 2.0 federated or a streaming URL.

  - If it’s a federated URL, the data scientist authenticates using their corporate credentials, as well as MFA if required.

  - If it’s a streaming URL, no further authentication is required.

- The data scientist is presented with a PowerShell application that’s been made available to them.

- When the data scientist starts the application, it runs the PowerShell script on an AppStream 2.0 instance.

- The script then:

  - Downloads a second PowerShell script from an S3 bucket.

  - Collects local AppStream 2.0 environment variables:

    - AppStream_UserName

    - AppStream_Session_ID

    - AppStream_Resource_Name

  - Stores the variables in the session.json file and copies the file to the home folder of the session on Amazon S3.

- The PUT event of the JSON file into the Amazon S3 bucket triggers an AWS Lambda function that performs the following:

  - Reads the session.json file from the user’s home folder on Amazon S3.

  - Performs a describe action against the AppStream 2.0 API to ensure that the session ID and the user ID match. This helps to prevent the user from manipulating the local environment variable to pretend to be someone else (spoofing), and potentially gain access to unauthorized data.

  - If the session ID and user ID match, a pre-signed SageMaker URL is generated and stored in session_url.txt, and copied to the user’s home folder on Amazon S3.

  - If the session ID and user ID do not match, the Lambda function ends without generating a pre-signed URL.

- When the PowerShell script detects the session_url.txt file, it opens the URL, giving the user access to their SageMaker notebook.
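The validation step in the flow above can be sketched roughly as follows. This is an illustration under stated assumptions, not the code shipped with the solution: the function name, the session.json key names, and the idea that AppStream_Resource_Name holds the fleet name are all assumptions. The two client arguments stand in for boto3.client("appstream") and boto3.client("sagemaker"), whose describe_sessions and create_presigned_notebook_instance_url calls are used here.

```python
def validate_and_sign(session_info, appstream, sagemaker, stack_name,
                      notebook_name, auth_type="API"):
    """Return a pre-signed notebook URL, or None if the session can't be verified.

    session_info is the parsed session.json; its key names are assumed to
    mirror the AppStream 2.0 environment variables listed in the post.
    """
    user_id = session_info["AppStream_UserName"]
    session_id = session_info["AppStream_Session_ID"]
    fleet_name = session_info["AppStream_Resource_Name"]  # assumed: fleet name

    # Ask AppStream 2.0 which sessions it has for this user instead of
    # trusting values that the user could have edited locally.
    response = appstream.describe_sessions(
        StackName=stack_name,
        FleetName=fleet_name,
        UserId=user_id,
        AuthenticationType=auth_type,
    )
    matches = any(
        s.get("Id") == session_id and s.get("UserId") == user_id
        for s in response.get("Sessions", [])
    )
    if not matches:
        # Mismatch: end without generating a URL.
        return None

    signed = sagemaker.create_presigned_notebook_instance_url(
        NotebookInstanceName=notebook_name
    )
    return signed["AuthorizedUrl"]
```

Passing the clients in as arguments keeps the check easy to exercise with stand-in objects before wiring it to real AWS calls. A real handler would also page through describe_sessions results and write the URL to session_url.txt in the user's home folder.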

Code structure

To help you deploy this solution in your environment, we’ve built a set of code that you can use. The code consists of an AWS CDK application, written mostly in Python, and some PowerShell scripts.

Note: We have chosen the default settings on many of the AWS resources our code deploys. Before deploying the code, you should conduct a thorough code review to ensure the resources you are deploying meet your organization’s requirements.

AWS CDK application – ./app.py

To make this application modular and portable, we’ve structured it in separate AWS CDK nested stacks:

- vpc-stack – deploys a VPC with two isolated subnets, along with three VPC endpoints.

- s3-stack – deploys an S3 bucket, copies the AppStream 2.0 PowerShell scripts, and stores the bucket name in an SSM parameter.

- appstream-service-roles-stack – deploys AppStream 2.0 service roles.

- appstream-stack – deploys the AppStream 2.0 stack and fleet, along with the required IAM roles and security groups.

- appstream-start-fleet-stack – builds a custom resource that starts the AppStream 2.0 fleet.

- notebook-stack – deploys a SageMaker notebook, along with IAM roles, security groups, and an AWS Key Management Service (AWS KMS) encryption key.

- saml-stack – deploys a SAML role as a placeholder for SAML authentication.

PowerShell scripts

The solution uses the following PowerShell scripts inside the AppStream 2.0 instances:

- sagemaker-notebook-launcher.ps1 – This script is part of the AppStream 2.0 image and downloads the sagemaker-notebook.ps1 script.

- sagemaker-notebook.ps1 – starts the process of validating the session and generating the SageMaker pre-signed URL.

Note: Having the second script reside on Amazon S3 provides flexibility. You can modify this script without having to create a new AppStream 2.0 image.

Deployment Prerequisites

To deploy this solution, your deployment environment must meet the following prerequisites:

- A machine with Python, Git, and AWS Command Line Interface (AWS CLI) installed, to be used for the deployment.

- The solution code, downloaded from GitHub.

- (Optional) A SAML federation solution that’s tied to your corporate directory.

Note: We used AWS Cloud9 with Amazon Linux 2 to test this solution, as it comes preinstalled with most of the prerequisites for deploying this solution.

Deploy the solution

Now that you know the design and components, you’re ready to deploy the solution.

Note: In our demo solution, we deploy two stream.standard.small AppStream 2.0 instances, using Windows Server 2019. This gives you a reasonable example to work from. In your own environment you might need more instances, a different instance type, or a different version of Windows. Likewise, we deploy a single SageMaker notebook instance of type ml.t3.medium. To change the AppStream 2.0 and SageMaker instance types, you will need to modify the stacks/data_sandbox_appstream.py and stacks/data_sandbox_notebook.py respectively.

Step 1: AppStream 2.0 image

An AppStream 2.0 image contains applications that you can stream to your users. It’s what allows you to curate the user experience by preconfiguring the settings of the applications you stream to your users.

To build an AppStream 2.0 image:

- Build an image following the Create a Custom AppStream 2.0 Image by Using the AppStream 2.0 Console tutorial.

Note: In Step 1: Install Applications on the Image Builder in this tutorial, you will be asked to choose an Instance family. For this example, we chose General Purpose. If you choose a different Instance family, you will need to make sure the appstream_instance_type specified under Step 2: Code modification is of the same family.

In Step 6: Finish Creating Your Image in this tutorial, you will be asked to provide a unique image name. Note down the image name as you will need it in Step 2 of this blog post.

- Copy notebook-launcher.ps1 to a location on the image. We recommend that you copy it to C:\AppStream.

- In Step 2—Create an AppStream 2.0 Application Catalog—of the tutorial, use C:\Windows\System32\Windowspowershell\v1.0\powershell.exe as the application, and the path to notebook-launcher.ps1 as the launch parameter.

Note: While testing your application during the image building process, the PowerShell script will fail because the underlying infrastructure is not present. You can ignore that failure during the image building process.

Step 2: Code modification

Next, you must modify some of the code to fit your environment.

Make the following changes in the cdk.json file:

- vpc_cidr – Supply your preferred CIDR range to be used for the VPC.

Note: VPC CIDR ranges are your private IP space and thus can consist of any valid RFC 1918 range. However, if the VPC you are planning on using for AppStream 2.0 needs to connect to other parts of your private network (on premises or other VPCs), you need to choose a range that does not conflict or overlap with the rest of your infrastructure.

- appstream_Image_name – Enter the image name you chose when you built the AppStream 2.0 image in Step 1.a.

- appstream_environment_name – The environment name is strictly cosmetic and drives the naming of your AppStream 2.0 stack and fleet.

- appstream_instance_type – Enter the AppStream 2.0 instance type. The instance type must be part of the same instance family you used in Step 1 of the To build an AppStream 2.0 image section. For a list of AppStream 2.0 instances, visit https://aws.amazon.com/appstream2/pricing/.

- appstream_fleet_type – Enter the fleet type. Allowed values are ALWAYS_ON or ON_DEMAND.

- Idp_name – If you have integrated SAML with this solution, you will need to enter the IdP name you chose when creating the SAML provider in the IAM Console.
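Before deploying, it can help to sanity-check the values you entered. The sketch below is not part of the solution code: the function name and the existing_cidrs argument are hypothetical, and it assumes you have already loaded the relevant cdk.json values into a dictionary. It checks only the two constraints stated above, namely that vpc_cidr falls inside an RFC 1918 range without overlapping your other networks, and that appstream_fleet_type is one of the two allowed values.

```python
import ipaddress

RFC1918_BLOCKS = [
    ipaddress.ip_network(block)
    for block in ("10.0.0.0/8", "172.16.0.0/12", "192.168.0.0/16")
]
ALLOWED_FLEET_TYPES = {"ALWAYS_ON", "ON_DEMAND"}


def check_context(context, existing_cidrs=()):
    """Return a list of human-readable problems found in the settings."""
    problems = []

    try:
        vpc = ipaddress.ip_network(context.get("vpc_cidr", ""))
    except ValueError as err:
        problems.append(f"vpc_cidr is not a valid CIDR range: {err}")
        vpc = None

    if vpc is not None:
        # The range must sit entirely inside one of the private IPv4 blocks.
        if vpc.version != 4 or not any(vpc.subnet_of(b) for b in RFC1918_BLOCKS):
            problems.append(f"vpc_cidr {vpc} is not inside an RFC 1918 range")
        # It must not collide with networks the VPC will connect to.
        for other in existing_cidrs:
            other_net = ipaddress.ip_network(other)
            if other_net.version == vpc.version and vpc.overlaps(other_net):
                problems.append(f"vpc_cidr {vpc} overlaps existing network {other_net}")

    fleet_type = context.get("appstream_fleet_type")
    if fleet_type not in ALLOWED_FLEET_TYPES:
        problems.append(f"appstream_fleet_type must be ALWAYS_ON or ON_DEMAND, got {fleet_type!r}")

    return problems


if __name__ == "__main__":
    settings = {"vpc_cidr": "10.10.0.0/16", "appstream_fleet_type": "ON_DEMAND"}
    print(check_context(settings, existing_cidrs=["10.0.0.0/16"]))
```

An empty list means none of these checks failed; it doesn't guarantee that the deployment will succeed.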

Step 3: Deploy the AWS CDK application

The CDK application deploys the CDK stacks.

The stacks include:

- VPC with isolated subnets

- VPC Endpoints for S3, SageMaker, and Systems Manager

- S3 bucket

- AppStream 2.0 stack and fleet

- Two AppStream 2.0 stream.standard.small instances

- A single SageMaker ml.t2.medium notebook

Run the following commands to deploy the AWS CDK application:

- Install the AWS CDK Toolkit.

- Create and activate a virtual environment.

- Change directory to the root folder of the code repository.

- Install the required packages.

- If you haven’t used AWS CDK in your account yet, run:

- Deploy the AWS CDK stack.

Step 4: Test the solution

After the stack has successfully deployed, allow approximately 25 minutes for the AppStream 2.0 fleet to reach a running state. Testing will fail if the fleet isn’t running.

Without SAML

If you haven’t added SAML authentication, use the following steps to test the solution.

- In the AWS Management Console, go to AppStream 2.0 and then to Stacks.

- Select the stack, and then select Action.

- Select Create streaming URL.

- Enter any user name and select Get URL.

- Enter the URL in another tab of your browser and test your application.

With SAML

If you are using SAML authentication, you will have a federated login URL that you need to visit.

If everything is working, your SageMaker notebook will be launched as shown in Figure 3.

Figure 3: SageMaker Notebook

Note: if you receive a web browser timeout, verify that the SageMaker notebook instance “Data-Sandbox-Notebook” is currently in InService status.

Auditing

Auditing for this solution is provided through AWS CloudTrail and AppStream 2.0 Usage Reports. Though CloudTrail is enabled by default, to collect and store the CloudTrail logs, you must create a trail for your AWS account.

The following logs are available to you for auditing:

- Login – CloudTrail

  - User ID

  - IAM SAML role

  - AppStream stack

- S3 – CloudTrail

  - IAM role

  - Source IP

  - S3 bucket

  - Amazon S3 object

- AppStream 2.0 instance IP address – AppStream 2.0 usage reports

  - User ID

  - Session ID

  - Elastic network interface

Connecting the dots

To get an accurate idea of your users’ activity, you have to correlate some logs from different services. First, you collect the login information from CloudTrail. This gives you the user ID of the user who logged in. You then collect the Amazon S3 put from CloudTrail, which gives you the IP address of the AppStream 2.0 instance. And finally, you collect the AppStream 2.0 usage report which gives you the IP address of the AppStream 2.0 instance, plus the user ID. This allows you to connect the user ID to the activity on Amazon S3. For auditing & controlling exploration activities with SageMaker, please visit this GitHub repository.

Though the logs are automatically being collected, what we have shown you here is a manual way of sifting through those logs. For a more robust solution on querying and analyzing CloudTrail logs, visit Querying AWS CloudTrail Logs.
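The manual correlation described above can be sketched as a small join between two record sets. All field names here (source_ip, time, instance_ip, start, end, user_id) are hypothetical stand-ins for whatever you extract from CloudTrail and the AppStream 2.0 usage reports. The time-window check is an added assumption, included because an instance IP address may be reused by later sessions.

```python
from datetime import datetime


def attribute_s3_events(s3_events, sessions):
    """Attach a user ID to each S3 event by matching instance IP and time window."""
    attributed = []
    for event in s3_events:
        user_id = None
        for session in sessions:
            same_ip = session["instance_ip"] == event["source_ip"]
            in_window = session["start"] <= event["time"] <= session["end"]
            if same_ip and in_window:
                user_id = session["user_id"]
                break
        # Events with no matching session keep user_id=None for follow-up.
        attributed.append({**event, "user_id": user_id})
    return attributed


if __name__ == "__main__":
    sessions = [{
        "user_id": "alice",
        "instance_ip": "10.0.1.15",
        "start": datetime(2021, 2, 1, 9, 0),
        "end": datetime(2021, 2, 1, 17, 0),
    }]
    events = [{
        "source_ip": "10.0.1.15",
        "time": datetime(2021, 2, 1, 10, 30),
        "object_key": "session.json",
    }]
    print(attribute_s3_events(events, sessions))
```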

Costs of this Solution

The cost for running this solution will depend on a number of factors, such as the instance size, the amount of data you store, and how many hours you use the solution.

- AppStream 2.0 – charged per instance hour; this example solution deploys two instances. You can see details on the AppStream 2.0 pricing page.

- VPC endpoints – charged by the hour and by how much data passes through them. There are three VPC endpoints in this solution (S3, Systems Manager, and SageMaker). VPC endpoint pricing is described on the PrivateLink pricing page.

- SageMaker notebooks – charged based on the number of instance hours and the instance type. There is one SageMaker instance in this solution, which may be eligible for free tier pricing. See the SageMaker pricing page for more details.

- Amazon S3 – storage pricing depends on how much data you store, what kind of storage you use, and how much data transfers in and out of S3. The use in this solution may be eligible for free tier pricing. You can see details on the S3 pricing page.

Before deploying this solution, make sure to calculate your cost using the AWS Pricing Calculator, and the AppStream 2.0 pricing calculator.

Conclusion

Congratulations! You have deployed a solution that provides your users with access to sensitive and isolated data in a secure manner using AppStream 2.0. You have also implemented a mechanism that is designed to prevent user impersonation, and enabled end-to-end auditing of all user activities.

To learn about how Amazon is using AppStream 2.0, visit the blog post How Amazon uses AppStream 2.0 to provide data scientists and analysts with access to sensitive data.


New global ID format coming to GraphQL

Post Syndicated from Wissam Abirached original https://github.blog/2021-02-10-new-global-id-format-coming-to-graphql/

The GitHub GraphQL API has been publicly available for over 4 years now. Its usage has grown immensely over time, and we’ve learned a lot from running one of the largest public GraphQL APIs in the world. Today, we are introducing a new format for object identifiers and a roll out plan that brings it to our GraphQL API this year.

What is driving the change?

As GitHub grows and reaches new scale milestones, we have come to realize that the current format of Global IDs in our GraphQL API will not support our projected growth over the coming years. The new format gives us more flexibility and scalability, allowing us to handle your requests even faster.

What exactly is changing?

We are changing the Global ID format in our GraphQL API. As a result, all object identifiers in GraphQL will change and some identifiers will become longer than they are now. Since you can get an object’s Global ID via the REST API, these changes will also affect an object’s node_id returned via the REST API. Object identifiers will continue to be opaque strings and should not be decoded.

How will this be rolled out?

We understand that our APIs are a critical part of your engineering workflows, and our goal is to minimize the impact as much as possible. In order to give you time to migrate your implementations, caches, and data records to the new Global IDs, we will go through a gradual rollout and thorough deprecation process that includes three phases.

- Introduce new format: This phase will introduce the new Global IDs into the wild on a type by type basis, for newly created objects only. Existing objects will continue to have the same ID. We will start by migrating the least requested object types, working our way towards the most popular types. Note that the new Global IDs may be longer and, in case you were storing the ID, you should ensure you can store the longer IDs. During this phase, as long as you can handle the potentially longer IDs, no action is required by you. The expected duration of this phase is 3 months.

- Migrate: In this phase you should look to update your caches and data records. We will introduce migration tools allowing you to toggle between the two formats making it easy for you to update your caches to the new IDs. The migration tools will be detailed in a separate blog post, closer to launch date. You will be able to use the old or new IDs to refer to an object throughout this phase. The expected duration of this phase is 3 months.

- Deprecate: In this phase, all REST API requests and GraphQL queries will return the new IDs. Requests made with the old IDs will continue to work, but the response will only include the new ID as well as a deprecation warning. The expected duration of this phase is 3 months.

Once the three migration phases are complete, we will sunset the old IDs. All requests made using the old IDs will result in an error. Overall, the whole process should take 9 months, with the goal of giving you plenty of time to adjust and migrate to the new format.

Tell us what you think

If you have any concerns about the rollout of this change impacting your app, please contact us and include information such as your app name so that we can better assist you.

[$] Visiting another world

Post Syndicated from original https://lwn.net/Articles/845446/rss

The world wide web is truly a wondrous invention, but it is not without flaws. There are massive privacy woes that stem from its standards and implementation; it is also so fiendishly complex that few can truly grok all of its expanse. That complexity affords enormous flexibility, for good or ill. Those who are looking for a simpler way to exchange information, or who hearken back to web prehistory, may find the Gemini project worth a look.

Lali Espósito | Libra y más allá | Talks at Google

Post Syndicated from Talks at Google original https://www.youtube.com/watch?v=9T9bJL9LPyM

Finding a 1Up When Free Cloud Credits Run Out

Post Syndicated from Amrit Singh original https://www.backblaze.com/blog/finding-a-1up-when-free-cloud-credits-run-out/

For people in the early stages of development, a cloud storage provider that offers free credits might seem like a great deal. And diversified cloud providers do offer these kinds of promotions to help people get started with storing data: Google Cloud Free Tier and AWS Free Tier offer credits and services for a limited time, and both providers also have incentive funds for startups which can be unlocked through incubators that grant additional credits of up to tens of thousands of dollars.

Before you run off to give them a try though, it’s important to consider the long-term realities that await you on the far side of these promotions.

The reality is that once they’re used up, budget items that were zeros yesterday can become massive problems tomorrow. Twitter is littered with countless experiences of developers finding themselves surprised with an unexpected bill and the realization that they need to figure out how to navigate the complexities of their cloud provider—fast.

we made the unfortunate mistake (and I'm sure this is how they get you) of not watching our cloud costs so when the generous credits ran out we were hit with big bills until we did major refactoring. Lessons learned early on

— Yuan Gao (@mesetatron) December 5, 2020

What to Do When You Run Out of Free Cloud Storage Credits

So, what do you do once you’re out of credits? You could try signing up with different emails to game the system, or look into getting into a different incubator for more free credits. If you plan on your app being around for a few years and succeeding, the solution of finding more credits isn’t scalable, and the process of applying to another incubator would take too long. You can always switch from Google Cloud Platform to AWS to get free credits elsewhere, but transferring data between providers almost always incurs painful egress charges.

Because chasing free credits won’t work forever, this post offers three paths for navigating your cloud bills after free tiers expire. It covers:

- Staying with the same provider. Once you run out of free credits, you can optimize your storage instances and continue using (and paying for) the same provider.

- Exploring multi-cloud options. You can port some of your data to another solution and take advantage of the freedom of a multi-cloud strategy.

- Choosing another provider. You can transfer all of your data to a different cloud that better suits your needs.

Path 1: Stick With Your Current Cloud Provider

If you’re running out of promotional credits with your current provider, your first path is to just continue using their storage services. Many people see this as their only option because of the frighteningly high egress fees they’d face if they tried to leave. If you choose to stay with the same provider, be sure to review and account for all of the instances you’ve spun up.

Here’s an example of a bill that one developer faced after their credits expired: This user found themselves locked into an unexpected $2,700 bill because of egress costs. Looking closer at their experience, the spike in charges was due to a data transfer of 30TB of data. The first 1GB of data transferred out is free, followed by egress costing $0.09 per gigabyte for the first 10TB and $0.085 per gigabyte for the next 40TB. Doing the math, that’s:

$0.090/GB x 10,239 GB = $921

$0.085/GB x 20,414 GB = $1,735

Together, that comes to about $2,656, which lines up with the roughly $2,700 bill.
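The tiered calculation above can be reproduced with a few lines of Python. This is a sketch, not a provider's billing formula: it uses only the rates quoted in this post, and it assumes 1 TB = 1,024 GB and that the free first gigabyte counts toward the first 10TB, which is what the 10,239 GB figure implies. The function name is hypothetical.

```python
GB_PER_TB = 1024  # assumption implied by the post's 10,239 GB figure

# (tier size in GB, price per GB) in the order the tiers are consumed
TIERS = [
    (1, 0.0),                     # first 1GB is free
    (10 * GB_PER_TB - 1, 0.09),   # rest of the first 10TB
    (40 * GB_PER_TB, 0.085),      # next 40TB
]


def egress_cost(total_gb):
    """Return the egress charge in dollars for total_gb transferred out."""
    remaining = total_gb
    cost = 0.0
    for size_gb, rate in TIERS:
        billed = min(remaining, size_gb)
        cost += billed * rate
        remaining -= billed
        if remaining <= 0:
            break
    if remaining > 0:
        # The post doesn't quote rates beyond these tiers, so don't guess.
        raise ValueError("volume exceeds the tiers described in the post")
    return cost


if __name__ == "__main__":
    total_gb = 1 + 10_239 + 20_414  # the volumes used in the example above
    print(f"${egress_cost(total_gb):,.2f}")  # prints $2,656.70
```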

Choosing to stay with your current cloud provider is a straightforward path, but it’s not necessarily the easiest or least expensive option, which is why it’s important to conduct a thorough audit of the current cloud services you have in use to optimize your cloud spend.

Optimizing Your Current Cloud Storage Solution

Over time, cloud infrastructure tends to become more complex and varied, and your cloud storage bills follow the same pattern. Cloud pricing transparency in general is an issue with most diversified providers—in short: It’s hard to understand what you’re paying for, and when. If you haven’t seen a comparison yet, a breakdown contrasting storage providers is shared in this post.

Many users find that AWS and Google Cloud are so complex that they turn to services that can help them monitor and optimize their cloud spend. These cost management services charge based on a percentage of your AWS spend. For a startup with limited resources, paying for these professional services can be challenging, but manually predicting cloud costs and optimizing spending is also difficult, as well as time consuming.

The takeaway for sticking with your current provider: Be a budget hawk for every fee you may be at risk of incurring, and ensure your development keeps you from unwittingly racking up heavy fees.

Path 2: Take a Multi-cloud Approach

Some developers may want to switch to a different cloud after their free credits expire, but their code can’t be easily separated from their cloud provider. In this case, a multi-cloud approach can achieve the necessary price point while maintaining the required level of service.

Short term, you can mitigate your cloud bill by immediately beginning to port any data you generate going forward to a more affordable solution. Even if the process of migrating your existing data is challenging, this move will stop your current bill from ballooning.

Beyond mitigation, a multi-cloud strategy gives companies the freedom to use the best possible cloud service for each workload. There are other benefits as well:

- Redundancy: Some major providers have faced outages recently. A multi-cloud strategy allows you to have a backup of your data to continue serving your customers even if your primary cloud provider goes down.

- Functionality: With so many providers introducing new features and services, it’s unlikely that a single cloud provider will meet all of your needs. With a multi-cloud approach, you can pick and choose the best services from each provider. Multinational companies can also optimize for their particular geographical regions.

- Flexibility: A diverse cloud infrastructure helps you avoid vendor lock-in if you outgrow a single cloud provider.

- Cost: You may find that one cloud provider offers a lower price for compute and another for storage. A multi-cloud strategy allows you to pick and choose which works best for your budget.

The takeaway for pursuing multi-cloud: It might not solve your existing bill, but it will mitigate your exposure to additional fees going forward. And it offers the side benefit of providing a best-of-breed approach to your development tech stack.

Path 3: Find a New Cloud Provider

Finally, you can choose to move all of your data to a different cloud storage provider. We recommend taking a long-term approach: Look for cloud storage that allows you to scale with the least amount of friction while continuing to support everything you need for a good customer experience in your app. You’ll want to consider cost, usability, and integrations when looking for a new provider.

Cost

Many cloud providers use a multi-tier approach, which can become complex as your business starts to scale its cloud infrastructure. Switching to a provider that has single-tier pricing helps businesses planning for growth predict their cloud storage costs and optimize their spend, saving time and money for use on future opportunities. You can use this pricing calculator to check storage costs of Backblaze B2 Cloud Storage against AWS, Azure, and Google Cloud.

One example of a startup that saved money and was able to grow their business by switching to another storage provider is CloudSpot, a SaaS photography platform. They had initially gotten their business off the ground with the help of a startup incubator. Then in 2019, their AWS storage costs skyrocketed, but their team felt locked in to using Amazon.

When they looked at other cloud providers and eventually transferred their data out of AWS, the savings on storage costs allowed them to reintroduce services they had previously been forced to shut down due to their AWS bill. Reviving these services made an immediate impact on customer acquisition and recurring revenue.

Usability

Time spent trying to navigate a complicated platform is a significant cost to business. Aiden Korotkin of AK Productions, a full-service video production company based in Washington, D.C., experienced this first hand. Korotkin initially stored his client data in Google Cloud because the platform had offered him a promotional credit. When the credits ran out in about a year, he found himself frustrated with the inefficiency, privacy concerns, and overall complexity of Google Cloud.

Korotkin chose to switch to Backblaze B2 Cloud Storage with the help of solution engineers that helped him figure out the best storage solution for his business. After quickly and seamlessly transferring his first 12TB in less than a day, he noticed a significant difference from using Google Cloud. “If I had to estimate, I was spending between 30 minutes to an hour trying to figure out simple tasks on Google (e.g. setting up a new application key, or syncing to a third-party source). On Backblaze it literally takes me five minutes,” he emphasized.

Integrations

Workflow integrations can make cloud storage easier to use and provide additional features. By selecting multiple best-of-breed providers, you can achieve better functionality with significantly reduced price and complexity.

Content delivery network (CDN) partnerships with Cloudflare and Fastly allow developers using services like Backblaze B2 to take advantage of free egress between the two services. Game developers can serve their games to users without paying egress between their origin source and their CDN, and media management solutions can integrate directly with cloud storage to make media assets easy to find, sort, and pull into a new project or editing tool. Take a look at other solutions integrated with cloud storage that can support your workflows.

Cloud to Cloud Migration

After choosing a new cloud provider, you can plan your data migration. Your data may be spread out across multiple buckets, service providers, or different storage tiers—so your first task is discovering where your data is and what can and can’t move. Once you’re ready, there is a range of solutions for moving your data, but when it comes to moving between cloud services, a data migration tool like Flexify.IO can help make things a lot easier and faster.

Instead of manually offloading static and production data from your current cloud storage provider and reuploading it into your new provider, Flexify.IO reads the data from the source storage and writes it to the destination storage via inter-cloud bandwidth. Flexify.IO achieves fast and secure data migration at cloud-native speeds because the data transfer happens within the cloud environment.

For developers with customer-facing applications, it’s especially important that customers still retain access to data during the migration from one cloud provider to another. When CloudSpot moved about 700TB of data from AWS to Backblaze B2 in just six days with help from Flexify.IO, customers were actually still uploading images to their Amazon S3 buckets. The migration process was able to support both environments and allowed them to ensure everything worked properly. It was also necessary because downtime was out of the question—customers access their data so frequently that one of CloudSpot’s galleries is accessed every one or two seconds.

What’s Next?

If you’re interested in exploring a different cloud storage service for your solution, you can easily sign up today, or contact us for more information on how to run a free POC or just to begin transferring your data out of your current cloud provider.

The post Finding a 1Up When Free Cloud Credits Run Out appeared first on Backblaze Blog | Cloud Storage & Cloud Backup.

Hawkins: Diving into the Reasoning Behind our Design System

Post Syndicated from Netflix Technology Blog original https://netflixtechblog.com/hawkins-diving-into-the-reasoning-behind-our-design-system-964a7357547

by Hawkins team member Joshua Godi; with art contributions by Wiki Chaves

Hawkins may be the name of a fictional town in Indiana, most widely known as the backdrop for one of Netflix’s most popular TV series “Stranger Things,” but the name is so much more. Hawkins is the namesake that established the basis for a design system used across the Netflix Studio ecosystem.

Have you ever used a suite of applications that had an inconsistent user experience? It can be a nightmare to work efficiently. The learning curve can be immense for each and every application in the suite, as the user is essentially learning a new tool with each interaction. Aside from the burden on these users, the engineers responsible for building and maintaining these applications must keep reinventing the wheel, starting from scratch with toolsets, component libraries and design patterns. This investment is repetitive and costly. A design system, such as the one we developed for the Netflix Studio, can help alleviate most of these headaches.

We have been working on our own design system that is widely used across the Netflix Studio’s growing application catalogue, which consists of 80+ applications. These applications power the production of Netflix’s content, from pitch evaluation to financial forecasting and completed asset delivery. A typical day for a production employee could require using a handful of these applications to entertain our members across the world. We wanted a way to ensure that we can have a consistent user experience while also sharing as much code as possible.

In this blog post, we will highlight why we built Hawkins, as well as how we got buy-in across the engineering organization and our plans moving forward. We recently presented a talk on how we built Hawkins; so if you are interested in more details, check out the video.

What is a design system?

Before we can dive into the importance of having a design system, we have to define what a design system means. It can mean different things to different people. For Hawkins, our design system is composed of two main aspects.

First, we have the design elements that form the foundational layer of Hawkins. These consist of Figma components that are used throughout the design team. These components are used to build out mocks for the engineering team. Being the foundational layer, it is important that these assets are consistent and intuitive.

Second, we have our component library, built with React, a JavaScript library for building user interfaces. The engineering team uses this component library to ensure that every component is reusable, conforms to the design assets and is highly configurable for different situations. We also make sure that each component is composable and can be used in many different combinations. We made the decision to keep our components very atomic; this keeps them small, lightweight and easy to combine into larger components.
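To make "atomic" and "composable" concrete, here is a minimal sketch. It is not Hawkins code: to stay framework-free, components are modelled as plain functions returning markup strings instead of real React components, and the names `Label`, `Input` and `TextField` are hypothetical.

```typescript
// Hypothetical stand-ins: real design-system components would be React
// components. Here a component is just a function from props to markup.
// No HTML escaping is done; this is an illustration only.
type Component<P> = (props: P) => string;

interface LabelProps { text: string; htmlFor: string; }
interface InputProps { id: string; value: string; disabled?: boolean; }

// Atomic components: each one does a single small job.
const Label: Component<LabelProps> = ({ text, htmlFor }) =>
  `<label for="${htmlFor}">${text}</label>`;

const Input: Component<InputProps> = ({ id, value, disabled = false }) =>
  `<input id="${id}" value="${value}"${disabled ? " disabled" : ""}>`;

// A larger component built only by combining the atoms above.
interface TextFieldProps { id: string; label: string; value: string; disabled?: boolean; }

const TextField: Component<TextFieldProps> = ({ id, label, value, disabled = false }) =>
  `<div class="field">${Label({ text: label, htmlFor: id })}${Input({ id, value, disabled })}</div>`;

const rendered = TextField({ id: "title", label: "Title", value: "Stranger Things" });
```

Because `TextField` adds only layout on top of `Label` and `Input`, a fix to either atom reaches every composed component that uses it.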

At Netflix, we have two teams composed of six people who work together to make Hawkins a success, but that doesn’t always need to be the case. A successful design system can be created with just a small team. The key aspects are that it is reusable, configurable and composable.

Why is a design system important?

Having a solid design system can help to alleviate many issues that come from maintaining so many different applications. A design system can bring cohesion across your suite of applications and drastically reduce the engineering burden for each application.

Quality user experience can be hard to come by as your suite of applications grows. A design system should be there to help ease that burden, acting as the blueprint on how you build applications. Having a consistent user experience also reduces the training required. If users know how to fill out forms, access data in a table or receive notifications in one application, they will intuitively know how to do so in the next application.

The design system acts as a language that both designers and engineers can speak to align on how applications are built out. It also helps with onboarding new team members due to the documentation and examples outlined in your design system.

The last and arguably biggest win for design systems is the reduction of burden on engineering. There will only be one implementation of buttons, tables, forms, etc. This greatly reduces the number of bugs and improves the overall health and performance of every application that uses the design system. The entire engineering organization is working to improve one set of components vs. each using their own individual components. When a component is improved, whether through additional functionality or a bug fix, the benefit is shared across the entire organization.

Taking a wide view of the Netflix Studio landscape, we saw many opportunities where Hawkins could bring value to the engineering organization.

Build vs. buy

The first question we asked ourselves is whether we wanted to build out an entire design system from scratch or leverage an existing solution. There are pros and cons to each approach.

Building it yourself — The benefit of DIY is that you are in control every step of the way. You get to decide what will be included in the design system and what is better left out. The downside is that because you are responsible for it all, it will likely take longer to complete.

Leveraging an existing solution — When you leverage an existing solution, you can still customize certain elements of that solution, but ultimately you are getting a lot out of the box for free. Depending on which solution you choose, you could be inheriting a ton of issues or something that is battle tested. Do your research and don’t be afraid to ask around!

For Hawkins, we decided to take both approaches. On the design side, we decided to build it ourselves. This gave us complete creative control over the user experience throughout the design language. On the engineering side, we decided to build on top of an existing solution by utilizing Material-UI. Leveraging Material-UI gave us a ton of components out of the box that we can configure and style to meet the needs of Hawkins. We also chose to hide a number of the customizations that come from the library to ensure upgrading or replacing components will be smoother.
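One way to picture hiding a library's customizations is a thin wrapper that exposes a narrow set of props and translates them into whatever the underlying library expects. The sketch below is an assumption about the general pattern, not Hawkins source: `UnderlyingButtonProps` is a simplified stand-in for a third-party button's props, and `DsButtonProps` and `toUnderlyingProps` are hypothetical names.

```typescript
// Simplified stand-in for the props of a third-party button component
// (assumed shape for illustration, not the library's real typings).
interface UnderlyingButtonProps {
  variant: "text" | "outlined" | "contained";
  color: "primary" | "secondary";
  size: "small" | "medium" | "large";
  disabled: boolean;
}

// The narrower surface the design system chooses to expose (hypothetical).
interface DsButtonProps {
  emphasis?: "high" | "low";
  compact?: boolean;
  disabled?: boolean;
}

// Applications only ever see DsButtonProps. If the underlying library is
// upgraded or replaced, only this mapping has to change.
function toUnderlyingProps(props: DsButtonProps): UnderlyingButtonProps {
  const { emphasis = "high", compact = false, disabled = false } = props;
  return {
    variant: emphasis === "high" ? "contained" : "outlined",
    color: "primary", // fixed by the design language, not configurable by apps
    size: compact ? "small" : "medium",
    disabled,
  };
}

const defaultButton = toUnderlyingProps({});
const quietButton = toUnderlyingProps({ emphasis: "low", compact: true });
```

The trade-off is that applications lose direct access to some library options, in exchange for a smaller surface to keep stable across upgrades.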

Generating users and getting buy-in

The single biggest question that we had when building out Hawkins was how to obtain buy-in across the engineering organization. We decided to track the number of uses of each component, the number of installs of the packages themselves, and how many applications were using Hawkins in production as metrics to determine success.
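As a rough illustration of two of these metrics, the sketch below counts uses per component and the number of distinct applications in production. The `UsageRecord` shape and the sample data are invented for the example; how usage was actually collected is not described here, and package install counts (which would typically come from a package registry) are not modelled.

```typescript
// Hypothetical record: one row per place an app uses a component.
interface UsageRecord {
  app: string;
  component: string;
  inProduction: boolean;
}

interface AdoptionSummary {
  usesPerComponent: Map<string, number>;
  productionApps: number;
}

function summarizeAdoption(records: UsageRecord[]): AdoptionSummary {
  const usesPerComponent = new Map<string, number>();
  const productionApps = new Set<string>();
  for (const record of records) {
    const previous = usesPerComponent.get(record.component) || 0;
    usesPerComponent.set(record.component, previous + 1);
    // An app counts once, no matter how many components it uses.
    if (record.inProduction) productionApps.add(record.app);
  }
  return { usesPerComponent, productionApps: productionApps.size };
}

// Made-up sample data, for illustration only.
const summary = summarizeAdoption([
  { app: "budgeting", component: "Button", inProduction: true },
  { app: "budgeting", component: "Table", inProduction: true },
  { app: "scheduling", component: "Button", inProduction: false },
]);
```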

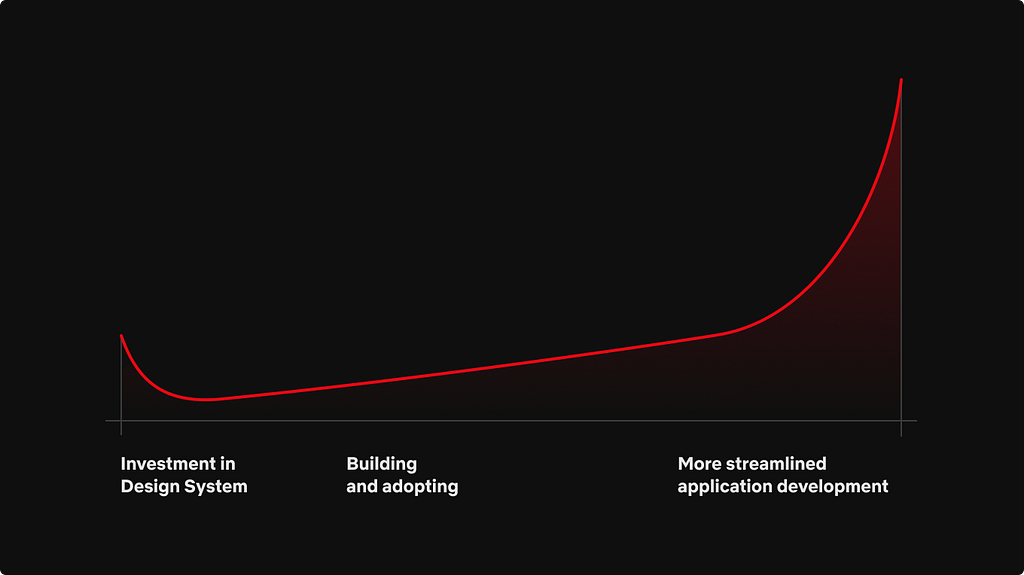

There is a definite cost that comes with building out a design system no matter the route you take. The initial cost is very high: research, building out the design tokens, and building the component library. Then, developers have to begin consuming the libraries inside of applications, either with full re-writes or feature by feature.

A good representation of this is the graph above. While an organization may spend a lot of time initially making the design system, it will benefit greatly once it is fully implemented and trusted across the organization. With Hawkins, our initial build phase took about two quarters: Q1 was spent creating the design language and Q2 was the implementation phase. Engineering and Design worked closely during the entire build phase. The end result was a significant number of components in Figma and a large component library leveraging Material-UI. Only then could we start to look for engineering teams to start using Hawkins.

When building out the component library, we focused on four key efforts that we felt would help drive support for Hawkins:

Document components — First, we ensured that each component was fully documented and had examples using Storybook.

On-call rotation for support — Next, we set up an on-call rotation in Slack, where engineers could not only seek guidance, but report any issues they may have encountered. It was extremely important to be responsive in our communication channels. The more support engineers feel they have, the more receptive they will be to using the design library.

Demonstrate Hawkins usefulness — Next, we started to do “road shows,” where we would join team meetings to demonstrate the value that Hawkins could bring to each and every team. This also provided an opportunity for the engineers to ask questions in person and for us to gather feedback to ensure our plans for Hawkins would meet their needs.

Bootstrap features for proof of concept — Finally, we helped bootstrap out features or applications for teams as a proof of concept. All of these together helped to foster a relationship between the Hawkins team and engineering teams.

Even today, as the Hawkins team, we run through all of the above exercises and more to ensure that the design system is robust and has the level of support the engineering organization can trust.

Handling the outliers

The Hawkins libraries all consist of basic components that are the building blocks for the applications across the Netflix Studio. When engineers increased their usage of Hawkins, it became clear that many folks were using the atomic components to build more complex experiences that were common across multiple applications, like in-app chat, data grids, and file uploaders, to name a few. We did not want to put these components straight into Hawkins because of the complexity and because they weren’t used across the entire Studio. So, we were tasked with identifying a way to share these complex components while still being able to benefit from all the work we accomplished on Hawkins.

To meet this challenge, developers decided to spin up a parallel library that sits right next to Hawkins. This library builds on top of the existing design system to provide a home for all the complex components that didn’t fit into the original design system.

This library was set up as a Lerna monorepo with tooling to quickly jumpstart a new package. We followed the same steps as Hawkins with Storybook and communication channels. The benefit of using a monorepo was that it gave engineering a single place to discover what components are available when building out applications. We also decided to version each package independently, which helped avoid issues with updating Hawkins or in downstream applications.
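Lerna supports an independent mode (set `"version": "independent"` in `lerna.json`) in which each package carries its own version number. The team's actual configuration is not shown here, so the following is only a sketch of the idea: a release bumps the packages that changed and leaves the rest untouched. The package names and the `release` helper are hypothetical.

```typescript
type Bump = "major" | "minor" | "patch";

// Apply a semantic-version bump to a "major.minor.patch" string.
function bumpVersion(version: string, bump: Bump): string {
  const parts = version.split(".").map(Number);
  const major = parts[0] || 0;
  const minor = parts[1] || 0;
  const patch = parts[2] || 0;
  if (bump === "major") return `${major + 1}.0.0`;
  if (bump === "minor") return `${major}.${minor + 1}.0`;
  return `${major}.${minor}.${patch + 1}`;
}

// Independent versioning: only packages listed in `changes` get a new version.
function release(
  versions: Record<string, string>,
  changes: Record<string, Bump>
): Record<string, string> {
  const next: Record<string, string> = { ...versions };
  for (const pkg of Object.keys(changes)) {
    const current = versions[pkg];
    const bump = changes[pkg];
    if (current !== undefined && bump !== undefined) {
      next[pkg] = bumpVersion(current, bump);
    }
  }
  return next;
}

// Hypothetical packages: a new feature lands in "chat" only.
const afterRelease = release(
  { chat: "1.2.3", "data-grid": "0.4.0", "file-uploader": "2.0.1" },
  { chat: "minor" }
);
// afterRelease.chat is "1.3.0"; the other two versions are unchanged.
```

Applications that depend only on the data grid see no new version to adopt, which is one way independent versioning can limit churn downstream.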

With so many components that will go into this parallel library, we decided on taking an “open source” approach to share the burden of responsibility for each component. Every engineer is welcome to contribute new components and help fix bugs or release new features in existing components. This model helps spread the ownership out from just a single engineer to a team of developers and engineers working in tandem.

The goal is to eventually migrate these components into the Hawkins library. That is why we took the time to ensure that each repository follows the same rules for development, testing and building. This would allow for an easy migration.

Wrapping up

We still have a long way to go on Hawkins. There is still a plethora of improvements that we can make to enhance performance and developer ergonomics, and to make it easier to work with Hawkins in general, especially as we start to use Hawkins outside of just the Netflix Studio!

We are very excited to share our work on Hawkins and dive into some of the nuances that we came across.

Hawkins: Diving into the Reasoning Behind our Design System was originally published in Netflix TechBlog on Medium, where people are continuing the conversation by highlighting and responding to this story.

Security updates for Wednesday

Post Syndicated from original https://lwn.net/Articles/845602/rss

Security updates have been issued by Debian (connman, firejail, libzstd, slirp, and xcftools), Fedora (chromium, jackson-databind, and privoxy), openSUSE (chromium), Oracle (kernel and kernel-container), Slackware (dnsmasq), SUSE (java-11-openjdk, kernel, and python), and Ubuntu (linux, linux-aws, linux-azure, linux-gcp, linux-hwe-5.8, linux-kvm, linux-oem-5.6, linux-oracle, linux-raspi, linux, linux-gke-5.0, linux-gke-5.3, linux-hwe, linux-raspi2-5.3, openjdk-8, openjdk-lts, and snapd).

"Fix" for Eclipse Mosquitto MQTT version 2.x running in Docker

Post Syndicated from BeardedTinker original https://www.youtube.com/watch?v=KKw3tUrX3d8