Post Syndicated from Home Assistant original https://www.youtube.com/watch?v=-B4WWevd2JI

Share Matter device from Apple Home to Home Assistant

Post Syndicated from Home Assistant original https://www.youtube.com/watch?v=nyGyZv90jnQ

Add Matter device via iOS app in Home Assistant

Post Syndicated from Home Assistant original https://www.youtube.com/watch?v=8y79Kq3QfCQ

Using GitHub Actions with Amazon CodeCatalyst

Post Syndicated from Dr. Rahul Sharad Gaikwad original https://aws.amazon.com/blogs/devops/using-github-actions-with-amazon-codecatalyst/

An Amazon CodeCatalyst workflow is an automated procedure that describes how to build, test, and deploy your code as part of a continuous integration and continuous delivery (CI/CD) system. You can use GitHub Actions alongside native CodeCatalyst actions in a CodeCatalyst workflow.

Introduction

In a prior post in this series, Using Workflows to Build, Test, and Deploy with Amazon CodeCatalyst, I discussed creating CI/CD pipelines in CodeCatalyst and how that relates to The Unicorn Project’s main protagonist, Maxine. CodeCatalyst workflows help you reliably deliver high-quality application updates frequently, quickly, and securely. CodeCatalyst allows you to quickly assemble and configure actions to compose workflows that automate your CI/CD pipeline, test reporting, and other manual processes. Workflows use provisioned compute, Lambda compute, custom container images, and a managed build infrastructure to scale execution easily without sacrificing flexibility. In this post, I will return to workflows and discuss running GitHub Actions alongside native CodeCatalyst actions.

Prerequisites

If you would like to follow along with this walkthrough, you will need to:

- Have an AWS Builder ID for signing in to CodeCatalyst.

- Belong to a space and have the space administrator role assigned to you in that space. For more information, see Creating a space in CodeCatalyst, Managing members of your space, and Space administrator role.

- Have an AWS account associated with your space and have the IAM role in that account. For more information about the role and role policy, see Creating a CodeCatalyst service role.

Walkthrough

As with the previous posts in the CodeCatalyst series, I am going to use the Modern Three-tier Web Application blueprint. Blueprints provide sample code and CI/CD workflows to help you get started easily across different combinations of programming languages and architectures. To follow along, you can re-use a project you created previously, or you can refer to a previous post that walks through creating a project using the Three-tier blueprint.

As the team has grown, I have noticed that code quality has decreased. Therefore, I would like to add a few additional tools to validate code quality when a new pull request is submitted. In addition, I would like to create a Software Bill of Materials (SBOM) for each pull request so I know what components are used by the code. In the previous post on workflows, I focused on the deployment workflow. In this post, I will focus on the OnPullRequest workflow. You can view the OnPullRequest pipeline by expanding CI/CD from the left navigation, and choosing Workflows. Next, choose OnPullRequest and you will be presented with the workflow shown in the following screenshot. This workflow runs when a new pull request is submitted and currently uses Amazon CodeGuru to perform an automated code review.

Figure 1. OnPullRequest Workflow with CodeGuru code review

While CodeGuru provides intelligent recommendations to improve code quality, it does not check style. I would like to add a linter to ensure developers follow our coding standards. While CodeCatalyst supports a rich collection of native actions, this does not currently include a linter. Fortunately, CodeCatalyst also supports GitHub Actions. Let’s use a GitHub Action to add a linter to the workflow.

Select Edit in the top right corner of the Workflow screen. If the editor opens in YAML mode, switch to Visual mode using the toggle above the code. Next, select “+ Actions” to show the list of actions. Then, change from Amazon CodeCatalyst to GitHub using the dropdown. At the time this blog was published, CodeCatalyst includes about a dozen curated GitHub Actions. Note that you are not limited to the list of curated actions. I’ll show you how to add GitHub Actions that are not on the list later in this post. For now, I am going to use Super-Linter to check coding style in pull requests. Find Super-Linter in the curated list and click the plus icon to add it to the workflow.

Figure 2. Super-Linter action with add icon

This will add a new action to the workflow and open the configuration dialog box. There is no further configuration needed, so you can simply close the configuration dialog box. The workflow should now look like this.

Figure 3. Workflow with the new Super-Linter action

Notice that the actions are configured to run in parallel. In the previous post, when I discussed the deployment workflow, the steps were sequential. This made sense since each step built on the previous step. For the pull request workflow, the actions are independent, and I will allow them to run in parallel so they complete faster. I select Validate, and assuming there are no issues, I select Commit to save my changes to the repository.
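The sketch below makes the difference between parallel and sequential actions concrete. It is a conceptual model in TypeScript, not CodeCatalyst's scheduler. It assumes each action lists the actions it depends on, similar in spirit to the DependsOn property of a CodeCatalyst action, and the action names are hypothetical. Actions are grouped into stages: every action in a stage has all of its dependencies satisfied by earlier stages, so the actions in a stage can run in parallel.

```typescript
// Conceptual model only: each action lists the actions it depends on.
type ActionGraph = Record<string, string[]>;

// Groups actions into stages. Every action in a stage has all of its
// dependencies satisfied by earlier stages, so a stage can run in parallel.
function planStages(graph: ActionGraph): string[][] {
  const names = new Set(Object.keys(graph));
  const remaining = new Map<string, Set<string>>();
  for (const name of Object.keys(graph)) {
    for (const dep of graph[name]) {
      if (!names.has(dep)) {
        throw new Error(`${name} depends on unknown action ${dep}`);
      }
    }
    remaining.set(name, new Set(graph[name]));
  }

  const stages: string[][] = [];
  while (remaining.size > 0) {
    // Actions whose dependencies have all finished are ready to run together.
    const ready = Array.from(remaining.keys())
      .filter((name) => remaining.get(name)!.size === 0)
      .sort();
    if (ready.length === 0) {
      throw new Error("Cycle detected in action dependencies");
    }
    for (const name of ready) remaining.delete(name);
    // Mark the ready actions as finished for every action still waiting.
    remaining.forEach((deps) => ready.forEach((name) => deps.delete(name)));
    stages.push(ready);
  }
  return stages;
}
```

With no dependencies, independent pull request checks land in a single stage. A build, test, and deploy chain produces one stage per action, which matches the sequential deployment workflow.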

While CodeCatalyst will start the workflow when a pull request is submitted, I do not have a pull request to submit. Therefore, I select Run to test the workflow. A notification at the top of the screen includes a link to view the run. As expected, Super-Linter fails because it has found issues in the application code. I click on the Super-Linter action and review the logs. Here are a few issues that Super-Linter reported regarding app.py used by the backend application. Note that the log has been modified slightly to fit on a single line.

With Super-Linter working, I turn my attention to creating a Software Bill of Materials (SBOM). I am going to use OWASP CycloneDX to create the SBOM. While there is a GitHub Action for CycloneDX, at the time I am writing this post, it is not available from the list of curated GitHub Actions in CodeCatalyst. Fortunately, CodeCatalyst is not limited to the curated list; I can use almost any GitHub Action in CodeCatalyst. To add a GitHub Action that is not in the curated list, I return to edit mode, find the generic GitHub Actions entry in the list of curated actions, and click the plus icon to add it to the workflow.

Figure 4. GitHub Action with add icon

CodeCatalyst will add a new action to the workflow and open the configuration dialog box. I choose the Configuration tab and use the pencil icon to change the Action Name to Software-Bill-of-Materials. Then, I scroll down to the configuration section, and change the GitHub Action YAML. Note that you can copy the YAML from the GitHub Actions Marketplace, including the latest version number. In addition, the CycloneDX action expects you to pass the path to the Python requirements file as an input parameter.

Figure 5. GitHub Action YAML configuration

Since I am using the generic GitHub Action, I must tell CodeCatalyst which artifacts are produced by the action and should be collected after execution. CycloneDX creates an XML file called bom.xml which I configure as an artifact. Note that a CodeCatalyst artifact is the output of a workflow action, and typically consists of a folder or archive of files. You can share artifacts with subsequent actions.

Figure 6. Artifact configuration with the path to bom.xml

Once again, I select Validate, and assuming there are no issues, I select Commit to save my changes to the repository. I now have three actions that run in parallel when a pull request is submitted: CodeGuru, Super-Linter, and Software Bill of Materials.

Figure 7. Workflow including the software bill of materials

As before, I select Run to test my workflow and click the view link in the notification. As expected, the workflow fails because Super-Linter is still reporting issues. However, the new Software Bill of Materials has completed successfully. From the artifacts tab I can download the SBOM.

Figure 8. Artifacts tab listing code review and SBOM

The artifact is a zip archive that includes the bom.xml created by CycloneDX. This includes, among other information, a list of components used in the backend application.

The workflow is now enforcing code quality and generating a SBOM like I wanted. Note that while this is a great start, there is still room for improvement. First, I could collect reports generated by the actions in my workflow, and define success criteria for code quality. Second, I could scan the SBOM for known security vulnerabilities using a Software Composition Analysis (SCA) solution. I will be covering this in a future post in this series.

Cleanup

If you have been following along with this workflow, you should delete the resources you deployed so you do not continue to incur charges. First, delete the two stacks that CDK deployed using the AWS CloudFormation console in the AWS account you associated when you launched the blueprint. These stacks will have names like mysfitsXXXXXWebStack and mysfitsXXXXXAppStack. Second, delete the project from CodeCatalyst by navigating to Project settings and choosing Delete project.

Conclusion

In this post, you learned how to add GitHub Actions to a CodeCatalyst workflow. I used GitHub Actions alongside native CodeCatalyst actions in my workflow. I also discussed adding actions from both the curated list of actions and others not in the curated list. Read the documentation to learn more about using GitHub Actions in CodeCatalyst.


Welcome to Wildebeest: the Fediverse on Cloudflare

Post Syndicated from Celso Martinho original https://blog.cloudflare.com/welcome-to-wildebeest-the-fediverse-on-cloudflare/

The Fediverse has been a hot topic of discussion lately, with thousands, if not millions, of new users creating accounts on platforms like Mastodon to either move entirely to “the other side” or experiment and learn about this new social network.

Today we’re introducing Wildebeest, an open-source, easy-to-deploy ActivityPub and Mastodon-compatible server built entirely on top of Cloudflare’s Supercloud. If you want to run your own spot in the Fediverse you can now do it entirely on Cloudflare.

The Fediverse, built on Cloudflare

Today you’re left with two options if you want to join the Mastodon federated network: either you join one of the existing servers (servers are also called communities, and each one has its own infrastructure and rules), or you run your own self-hosted server.

There are a few reasons why you’d want to run your own server:

- You want to create a new community and attract other users over a common theme and usage rules.

- You don’t want to have to trust third-party servers or abide by their policies and want your server, under your domain, for your personal account.

- You want complete control over your data, personal information, and content and visibility over what happens with your instance.

The Mastodon gGmbH non-profit organization provides a server implementation using Ruby, Node.js, PostgreSQL and Redis. Running the official server can be challenging, though. You need to own or rent a server or VPS somewhere; you have to install and configure the software, set up the database and public-facing web server, and configure and protect your network against attacks or abuse. And then you have to maintain all of that and deal with constant updates. It’s a lot of scripting and technical work before you can get it up and running; definitely not something for less technical enthusiasts.

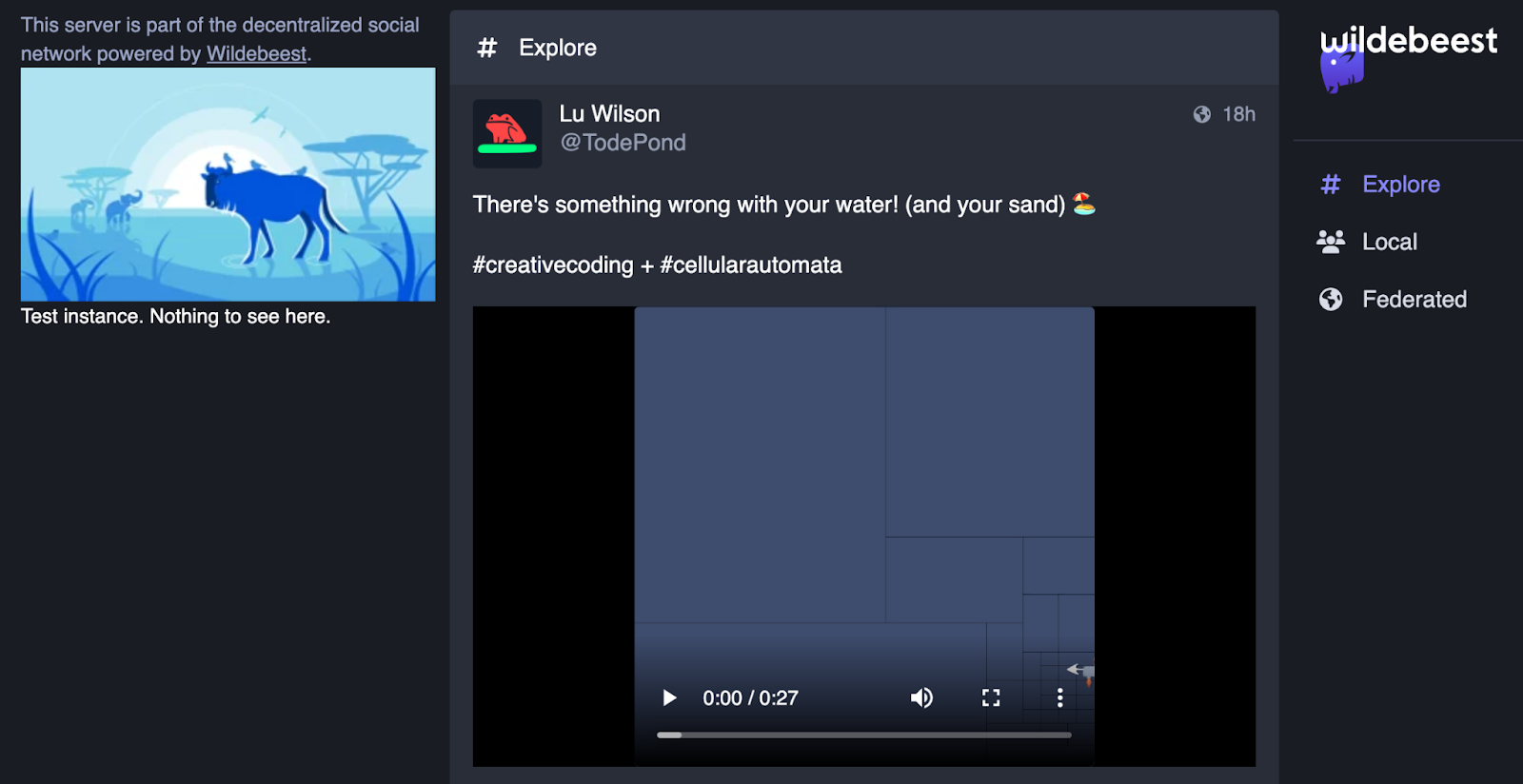

Wildebeest serves two purposes: you can quickly deploy your Mastodon-compatible server on top of Cloudflare and connect it to the Fediverse in minutes, and you don’t need to worry about maintaining or protecting it from abuse or attacks; Cloudflare will do it for you automatically.

Wildebeest is not a managed service. It’s your instance, data, and code running in our cloud under your Cloudflare account. Furthermore, it’s open source, so it can keep evolving with more features, and anyone can extend and improve it.

Here’s what we support today:

- ActivityPub, WebFinger, NodeInfo, WebPush and Mastodon-compatible APIs. Wildebeest can connect to or receive connections from other Fediverse servers.

- Compatible with the most popular Mastodon web (like Pinafore), desktop, and mobile clients. We also provide a simple read-only web interface to explore the timelines and user profiles.

- You can publish, edit, boost, or delete posts, sorry, toots. We support text, images, and (soon) video.

- Anyone can follow you; you can follow anyone.

- You can search for content.

- You can register one or multiple accounts under your instance. Authentication can be email-based or use any Cloudflare Access-compatible IdP, like GitHub or Google.

- You can edit your profile information, avatar, and header image.

How we built it

Our implementation is built entirely on top of our products and APIs. Building Wildebeest was another excellent opportunity to showcase our technology stack’s power and versatility and prove how anyone can also use Cloudflare to build larger applications that involve multiple systems and complex requirements.

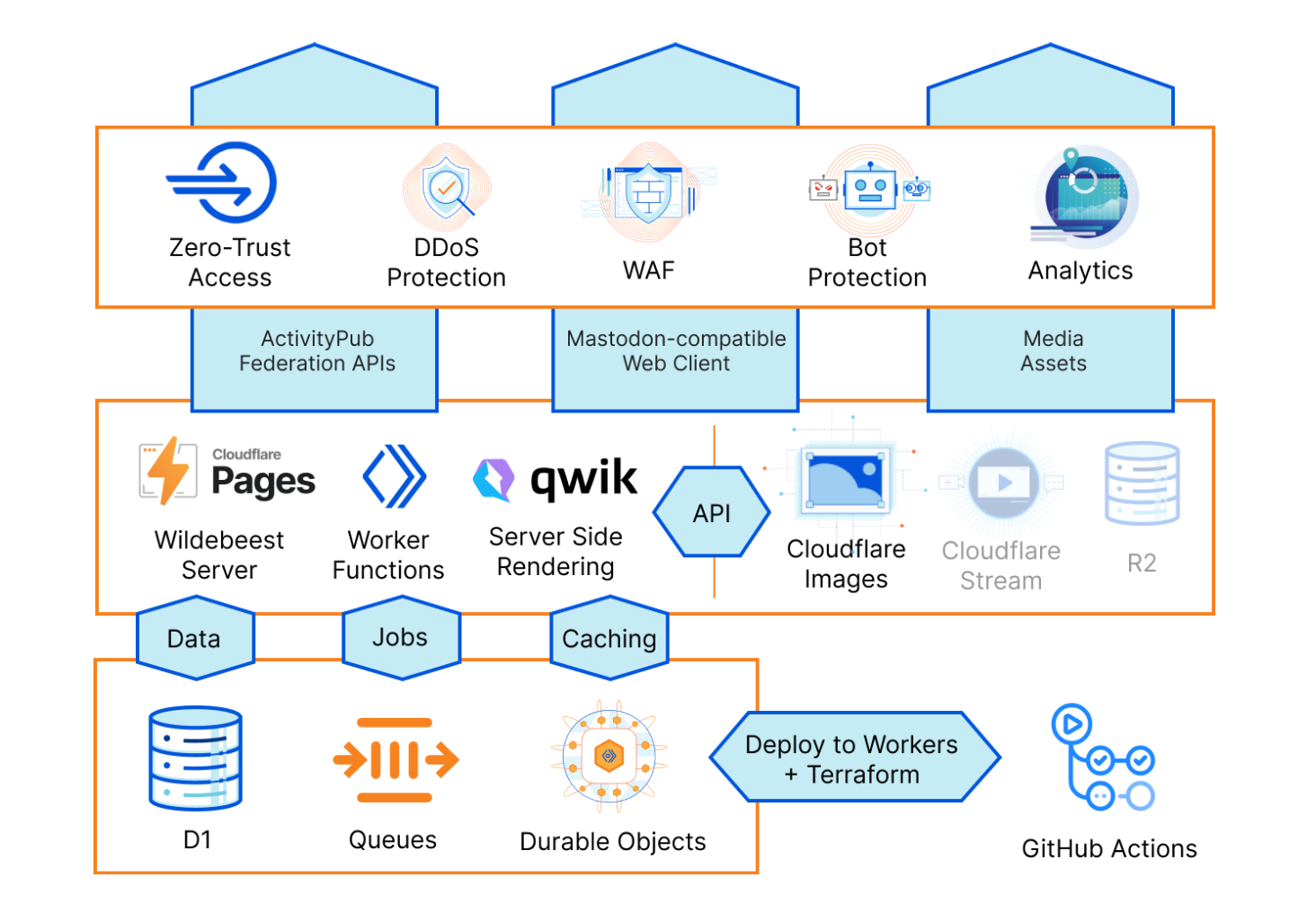

Here’s a birds-eye diagram of Wildebeest’s architecture:

Let’s get into the details and get technical now.

Cloudflare Pages

At the core, Wildebeest is a Cloudflare Pages project running its code using Pages Functions. Cloudflare Pages provides an excellent foundation for building and deploying your application and serving your bundled assets; Functions gives you full access to the Workers ecosystem, where you can run any code.

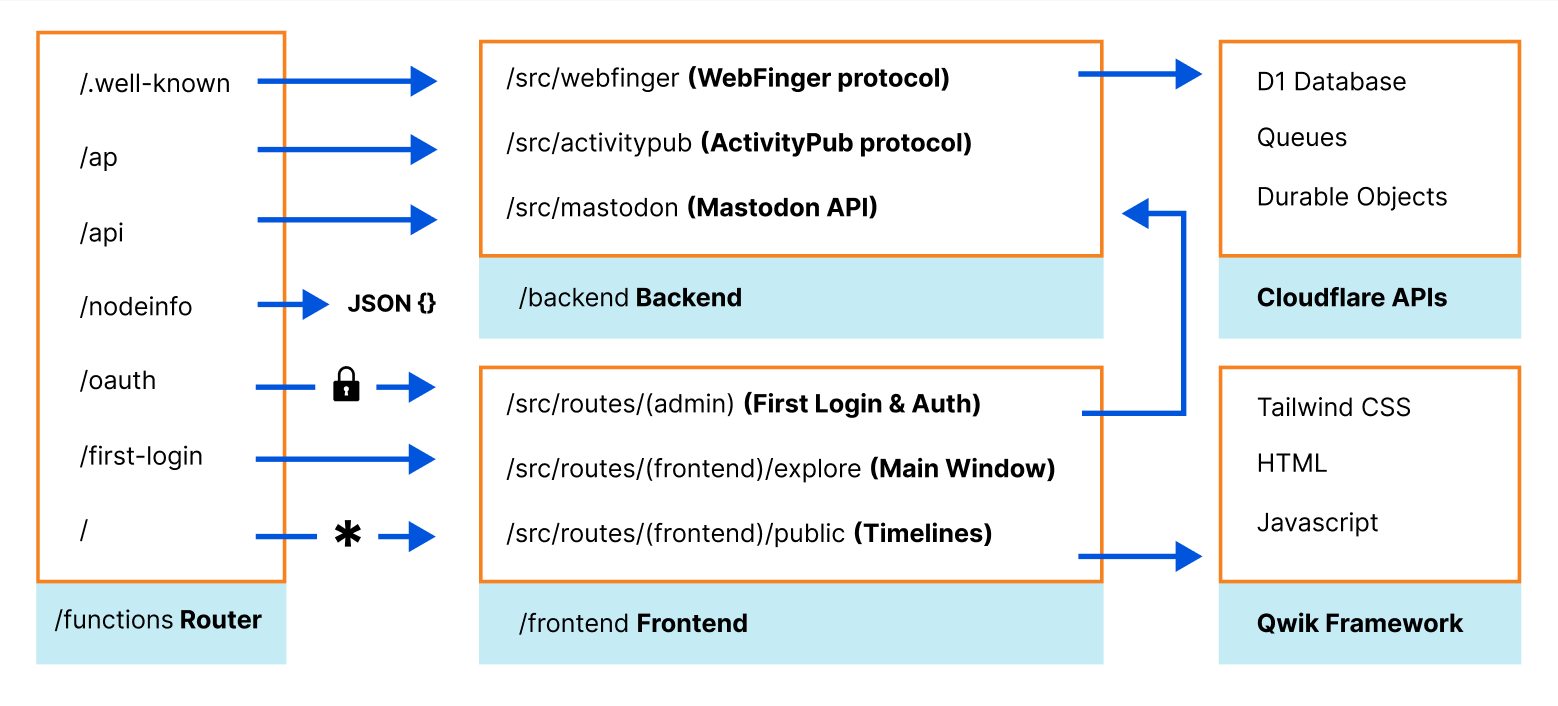

Functions has a built-in file-based router. The /functions directory structure, which is uploaded by Wildebeest’s continuous deployment builds, defines your application routes and what files and code will process each HTTP endpoint request. This routing technique is similar to what other frameworks like Next.js use.

For example, Mastodon’s /api/v1/timelines/public API endpoint is handled by /functions/api/v1/timelines/public.ts with the onRequest method.

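```typescript
// Pages Functions calls onRequest for requests of any HTTP method on this route.
export const onRequest = async ({ request, env }) => {
  const { searchParams } = new URL(request.url)
  const domain = new URL(request.url).hostname
  ...
  return handleRequest(domain, env.DATABASE, {})
}

// The actual logic lives in a plain function that tests can call directly.
export async function handleRequest(
  …
): Promise<Response> {
  …
}
```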

Unit testing these endpoints becomes easier too, since we only have to call the handleRequest() function from the testing framework. Check one of our Jest tests, mastodon.spec.ts:

```typescript
import * as v1_instance from 'wildebeest/functions/api/v1/instance'

describe('Mastodon APIs', () => {
  describe('instance', () => {
    test('return the instance infos v1', async () => {
      const res = await v1_instance.handleRequest(domain, env)
      assert.equal(res.status, 200)
      assertCORS(res)

      const data = await res.json<Data>()
      assert.equal(data.rules.length, 0)
      assert(data.version.includes('Wildebeest'))
    })
  })
})
```

As with any other regular Worker, Functions also lets you set up bindings to interact with other Cloudflare products and features like KV, R2, D1, Durable Objects, and more. The list keeps growing.

We use Functions to implement a large portion of the official Mastodon API specification, making Wildebeest compatible with the existing ecosystem of other servers and client applications, and also to run our own read-only web frontend under the same project codebase.

Wildebeest’s web frontend uses Qwik, a general-purpose web framework that is optimized for speed, uses modern concepts like the JSX JavaScript syntax extension, and supports server-side rendering (SSR) and static site generation (SSG).

Qwik provides a Cloudflare Pages Adaptor out of the box, so we use that (check our framework guide to know more about how to deploy a Qwik site on Cloudflare Pages). For styling we use the Tailwind CSS framework, which Qwik supports natively.

Our frontend website code and static assets can be found under the /frontend directory. The application is handled by the /functions/[[path]].js dynamic route, which basically catches all the non-API requests, and then invokes Qwik’s own internal router, Qwik City, which takes over everything else after that.
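The routing behavior described above can be modeled with a small, hypothetical resolver. This is a simplified sketch, not the Pages Functions router. It ignores single-segment [param] files and method-specific handlers, and it assumes route files are listed relative to /functions without their extensions. It shows why a specific API file such as api/v1/timelines/public wins over the [[path]] catch-all, which only receives requests that nothing more specific matches.

```typescript
// Hypothetical, simplified model of file-based routing; not Cloudflare's router.
// Route files are listed relative to /functions, without their extensions.
function resolveRoute(pathname: string, routes: Set<string>): string | null {
  const segments = pathname.split("/").filter((segment) => segment.length > 0);
  const exact = segments.join("/");

  // 1. A file whose path mirrors the URL, e.g. api/v1/timelines/public.
  if (exact && routes.has(exact)) return exact;

  // 2. An index file for directory-style URLs ("/" maps to "index").
  const index = exact ? `${exact}/index` : "index";
  if (routes.has(index)) return index;

  // 3. The deepest [[path]] catch-all whose directory is a prefix of the URL.
  for (let depth = segments.length; depth >= 0; depth--) {
    const dir = segments.slice(0, depth).join("/");
    const catchAll = dir ? `${dir}/[[path]]` : "[[path]]";
    if (routes.has(catchAll)) return catchAll;
  }
  return null;
}
```

With a layout like Wildebeest’s, a request for /api/v1/timelines/public lands on the API handler. A page URL with no matching file falls through to the root catch-all and is handed to Qwik City.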

The power and versatility of Pages and Functions routes make it possible to run both the backend APIs and a server-side-rendered dynamic client, effectively a full-stack app, under the same project.

Let’s dig even deeper now, and understand how the server interacts with the other components in our architecture.

D1

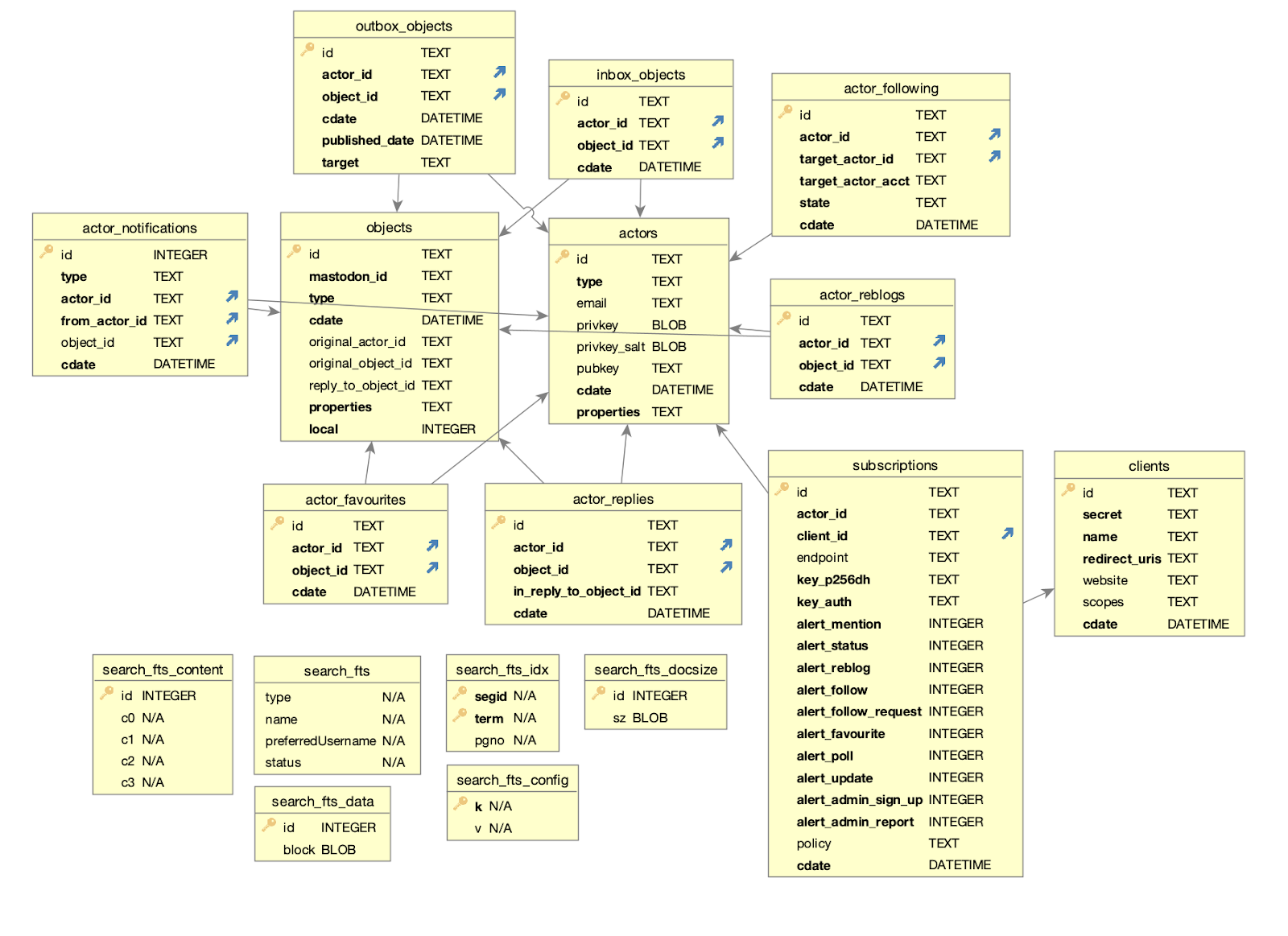

Wildebeest uses D1, Cloudflare’s first SQL database for the Workers platform built on top of SQLite, now open to everyone in alpha, to store and query data. Here’s our schema:

The schema will probably change in the future as we add more features. That’s fine: D1 supports migrations, which are great when you need to update your database schema without losing your data. With each new Wildebeest version, we can create a new migration file if it requires database schema changes.

```sql
-- Migration number: 0001 2023-01-16T13:09:04.033Z
CREATE UNIQUE INDEX unique_actor_following ON actor_following (actor_id, target_actor_id);
```
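Deciding which migration files still need to run is simple bookkeeping. The hypothetical sketch below is not wrangler’s implementation. It assumes migration files carry a zero-padded numeric prefix, as in the 0001 header above, and that the set of already-applied files is known. It returns the remaining files in the order they should be applied.

```typescript
// Hypothetical bookkeeping sketch; not how wrangler or D1 track migrations.
// Files are assumed to be named like "0001_add_following_index.sql".
const MIGRATION_FILE = /^(\d{4})_[\w-]+\.sql$/;

function pendingMigrations(files: string[], applied: Set<string>): string[] {
  return files
    .filter((file) => MIGRATION_FILE.test(file)) // ignore non-migration files
    .filter((file) => !applied.has(file)) // skip what already ran
    .sort(); // zero-padded prefixes sort in numeric order as plain strings
}
```

Each returned file would then be executed in order and recorded as applied. Existing tables and rows are left untouched, which is why schema changes do not require recreating the database.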

D1 exposes a powerful client API that developers can use to manipulate and query data from Worker scripts, or in our case, Pages Functions.

Here’s a simplified example of how we interact with D1 when you start following someone on the Fediverse:

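```typescript
export async function addFollowing(db, actor, target, targetAcct): Promise<UUID> {
  // Generate the row ID in the Worker (see the UUID discussion below).
  const id = crypto.randomUUID()

  const query = `INSERT OR IGNORE INTO actor_following (id, actor_id, target_actor_id, state, target_actor_acct) VALUES (?, ?, ?, ?, ?)`

  // Values are bound in the same order as the column list above.
  const out = await db
    .prepare(query)
    .bind(id, actor.id.toString(), target.id.toString(), STATE_PENDING, targetAcct)
    .run()
  return id
}
```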

Cloudflare’s culture of dogfooding and building on top of our own products means that we sometimes experience their shortcomings before our users. We did face a few challenges using D1, which is built on SQLite, to store our data. Here are two examples.

ActivityPub uses UUIDs extensively to identify objects and reference them in URIs. These objects need to be stored in the database. Other databases, like PostgreSQL, provide built-in functions to generate unique identifiers. SQLite and D1 don’t have that yet, but it’s on our roadmap.

Worry not though, the Workers runtime supports Web Crypto, so we use crypto.randomUUID() to get our unique identifiers. Check the /backend/src/activitypub/actors/inbox.ts:

```typescript
export async function addObjectInInbox(db, actor, obj) {
  // Web Crypto in the Workers runtime generates the UUID before the insert.
  const id = crypto.randomUUID()
  const out = await db
    .prepare('INSERT INTO inbox_objects(id, actor_id, object_id) VALUES(?, ?, ?)')
    .bind(id, actor.id.toString(), obj.id.toString())
    .run()
}
```

Problem solved.

The other example is that we need to store dates with sub-second resolution. Again, databases like PostgreSQL have that:

```
psql> select now();
2023-02-01 11:45:17.425563+00
```

However, SQLite falls short with:

```
sqlite> select datetime();
2023-02-01 11:44:02
```

We worked around this problem with a small hack using strftime():

```
sqlite> select strftime('%Y-%m-%d %H:%M:%f', 'NOW');
2023-02-01 11:49:35.624
```

See our initial SQL schema, and look for the cdate defaults.
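Worker code may sometimes need to write timestamps explicitly instead of relying on the column default. In that case it helps to produce the same layout as SQLite’s %f format, so that values compare correctly as strings. The sketch below assumes UTC timestamps with millisecond precision, which is what strftime('%Y-%m-%d %H:%M:%f', 'NOW') returns. The helper name is hypothetical.

```typescript
// Formats a Date in UTC using the layout produced by SQLite's
// strftime('%Y-%m-%d %H:%M:%f', ...): "YYYY-MM-DD HH:MM:SS.SSS".
function toSqliteTimestamp(date: Date): string {
  // toISOString() always returns UTC as "YYYY-MM-DDTHH:MM:SS.sssZ".
  const iso = date.toISOString();
  // Keep the date, swap "T" for a space, and drop the trailing "Z".
  return iso.slice(0, 10) + " " + iso.slice(11, 23);
}

// Usage: the instant from the sqlite> example above.
console.log(toSqliteTimestamp(new Date(Date.UTC(2023, 1, 1, 11, 49, 35, 624))));
// prints "2023-02-01 11:49:35.624"
```

Every value has the same fixed-width layout, so lexicographic order matches chronological order and ORDER BY on the text column sorts correctly.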

Images

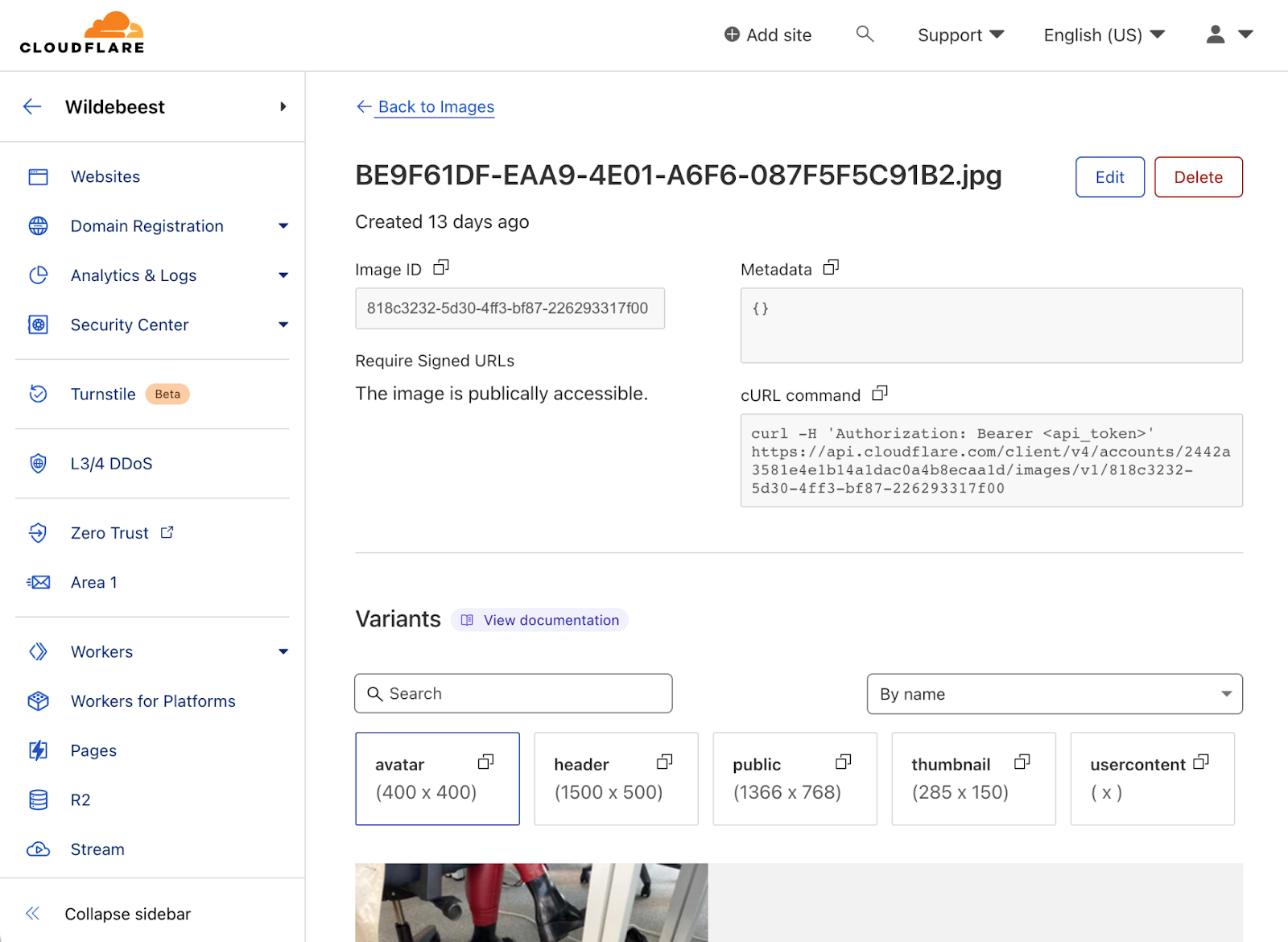

Mastodon content has a lot of rich media. We don’t need to reinvent the wheel and build an image pipeline; Cloudflare Images provides APIs to upload, transform, and serve optimized images from our global CDN, so it’s the perfect fit for Wildebeest’s requirements.

Content images, profile avatars, and header images all use the Images APIs. See /backend/src/media/image.ts to understand how we interface with Images.

```typescript
async function upload(file: File, config: Config): Promise<UploadResult> {
  const formData = new FormData()
  const url = `https://api.cloudflare.com/client/v4/accounts/${config.accountId}/images/v1`
  formData.set('file', file)

  // Upload the file to the Cloudflare Images API, authenticating with an API token.
  const res = await fetch(url, {
    method: 'POST',
    body: formData,
    headers: {
      authorization: 'Bearer ' + config.apiToken,
    },
  })

  // The API wraps its payload in a `result` field.
  const data = await res.json()
  return data.result
}
```

If you’re curious about Images for your next project, here’s a tutorial on how to integrate Cloudflare Images on your website.

Cloudflare Images is also available from the dashboard. You can use it to browse or manage your catalog quickly.

Queues

The ActivityPub protocol is chatty by design. Depending on the size of your social graph, there might be a lot of back-and-forth HTTP traffic. We can’t have the clients blocked waiting for hundreds of Fediverse message deliveries every time someone posts something.

We needed a way to work asynchronously and launch background jobs to offload data processing away from the main app and keep the clients snappy. The official Mastodon server has a similar strategy using Sidekiq to do background processing.

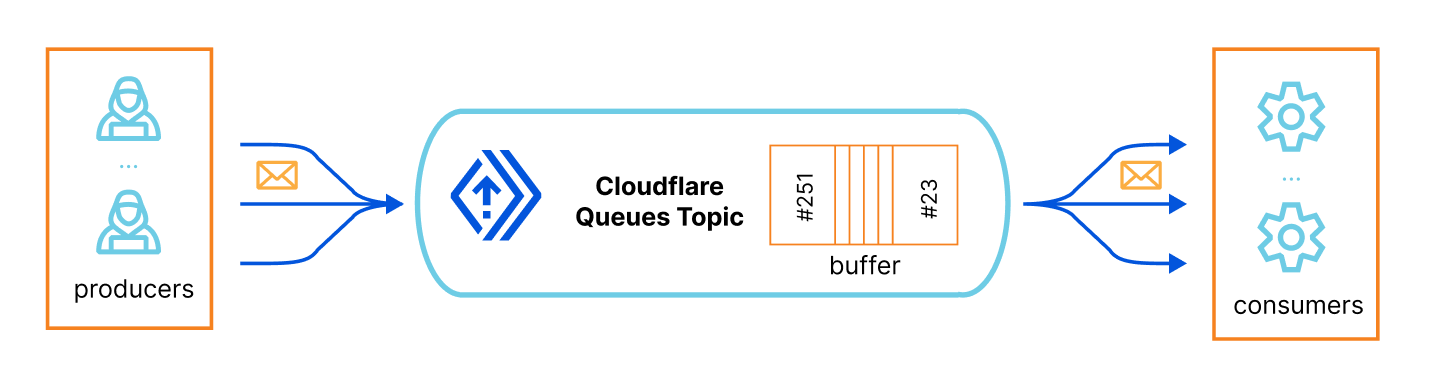

Fortunately, we don’t need to worry about any of this complexity either. Cloudflare Queues allows developers to send and receive messages with guaranteed delivery, and offload work from your Workers’ requests, effectively providing you with asynchronous batch job capabilities.

To put it simply, you have a queue topic identifier, which is basically a buffered list that scales automatically. Then you have one or more producers that, well, produce structured messages (JSON objects in our case, with a schema you define) and put them in the queue. Finally, you have one or more consumers that subscribe to that queue, receive its messages, and process them at their own speed.

Here’s the How Queues works page for more information.
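The message schema is up to you, and TypeScript’s discriminated unions are a natural way to describe it. This hypothetical sketch uses string literal types where Wildebeest’s code uses a MessageType enum. The field names mirror the examples in this section but are assumptions. The consumer’s switch narrows each message to its variant, and the never check turns a forgotten case into a compile-time error.

```typescript
// Hypothetical message schema for the job queue.
interface InboxMessage {
  type: "inbox";
  actorId: string;
  activity: unknown;
}
interface DeliverMessage {
  type: "deliver";
  actorId: string;
  toActorId: string;
  activity: unknown;
}
type QueueMessage = InboxMessage | DeliverMessage;

function summarizeMessage(message: QueueMessage): string {
  switch (message.type) {
    case "inbox":
      // Narrowed to InboxMessage here.
      return `inbox activity for ${message.actorId}`;
    case "deliver":
      // Narrowed to DeliverMessage, so toActorId is available.
      return `deliver from ${message.actorId} to ${message.toActorId}`;
    default: {
      // Exhaustiveness check: adding a message type without a case
      // here makes this assignment a type error.
      const unhandled: never = message;
      throw new Error(`Unhandled message: ${JSON.stringify(unhandled)}`);
    }
  }
}
```

In a real consumer, each case would call a handler instead of returning a string. The structure is the same as the switch on message.body.type later in this section.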

In our case, the main application produces queue jobs whenever any incoming API call requires long, expensive operations. For example, when someone posts, sorry, toots something, we need to broadcast that to their followers’ inboxes, potentially triggering many requests to remote servers. Here we are queueing a job for that, thus freeing the APIs to keep responding:

```typescript
export async function deliverFollowers(
  db: D1Database,
  from: Actor,
  activity: Activity,
  queue: Queue
) {
  const followers = await getFollowers(db, from)

  // One queue message per follower; each job delivers the activity to one inbox.
  const messages = followers.map((id) => {
    const body = {
      // Round-trip through JSON to produce a plain-object copy of the activity.
      activity: JSON.parse(JSON.stringify(activity)),
      actorId: from.id.toString(),
      toActorId: id,
    }
    return { body }
  })

  await queue.sendBatch(messages)
}
```

Similarly, we don’t want to block the main APIs when remote servers deliver messages to our instance inboxes. Here’s Wildebeest creating asynchronous jobs when it receives messages in the inbox:

```typescript
export async function handleRequest(
  domain: string,
  db: D1Database,
  id: string,
  activity: Activity,
  queue: Queue,
): Promise<Response> {
  const handle = parseHandle(id)
  const actorId = actorURL(domain, handle.localPart)
  const actor = await actors.getPersonById(db, actorId)

  // creates job
  await queue.send({
    type: MessageType.Inbox,
    actorId: actor.id.toString(),
    activity,
  })

  // frees the API
  return new Response('', { status: 200 })
}
```

And the final piece of the puzzle: our queue consumer runs in a separate Worker, independently from the Pages project. The consumer listens for new messages and processes them sequentially, at its own pace, so nothing else is blocked. When things get busy, the queue grows its buffer. Still, things keep running, and the jobs eventually get dispatched, freeing the main APIs for the critical stuff: responding to remote servers and clients as quickly as possible.

```typescript
export default {
  async queue(batch, env, ctx) {
    for (const message of batch.messages) {
      …
      switch (message.body.type) {
        case MessageType.Inbox: {
          await handleInboxMessage(...)
          break
        }
        case MessageType.Deliver: {
          await handleDeliverMessage(...)
          break
        }
      }
    }
  },
}
```

If you want to get your hands dirty with Queues, here’s a simple example on Using Queues to store data in R2.
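The fan-out in deliverFollowers has one practical detail. An account with many followers produces one message per follower, and a single sendBatch call has a maximum batch size. The sketch below splits the messages into chunks before sending. The limit of 100 messages per batch is an assumption, so check the current Cloudflare Queues limits. BatchSender is a minimal hypothetical interface, not the Workers Queue type.

```typescript
// Splits items into batches no larger than maxBatchSize.
// The default of 100 is an assumption; check the current Queues limits.
function chunk<T>(items: T[], maxBatchSize = 100): T[][] {
  if (!Number.isInteger(maxBatchSize) || maxBatchSize < 1) {
    throw new RangeError("maxBatchSize must be a positive integer");
  }
  const batches: T[][] = [];
  for (let i = 0; i < items.length; i += maxBatchSize) {
    batches.push(items.slice(i, i + maxBatchSize));
  }
  return batches;
}

// Minimal structural stand-in for anything with a sendBatch method (hypothetical).
interface BatchSender<T> {
  sendBatch(messages: { body: T }[]): Promise<void>;
}

// Sends every body, one sendBatch call per chunk; returns how many were sent.
async function sendInBatches<T>(queue: BatchSender<T>, bodies: T[], maxBatchSize = 100): Promise<number> {
  let sent = 0;
  for (const batch of chunk(bodies, maxBatchSize)) {
    await queue.sendBatch(batch.map((body) => ({ body })));
    sent += batch.length;
  }
  return sent;
}
```

Sending the chunks one after another keeps the sketch simple. The trade-off is that a failure partway through leaves earlier batches already enqueued.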

Caching and Durable Objects

Caching repetitive operations is yet another strategy for improving performance in complex applications that require data processing. A famous Netscape developer, Phil Karlton, once said: “There are only two hard things in Computer Science: cache invalidation and naming things.”

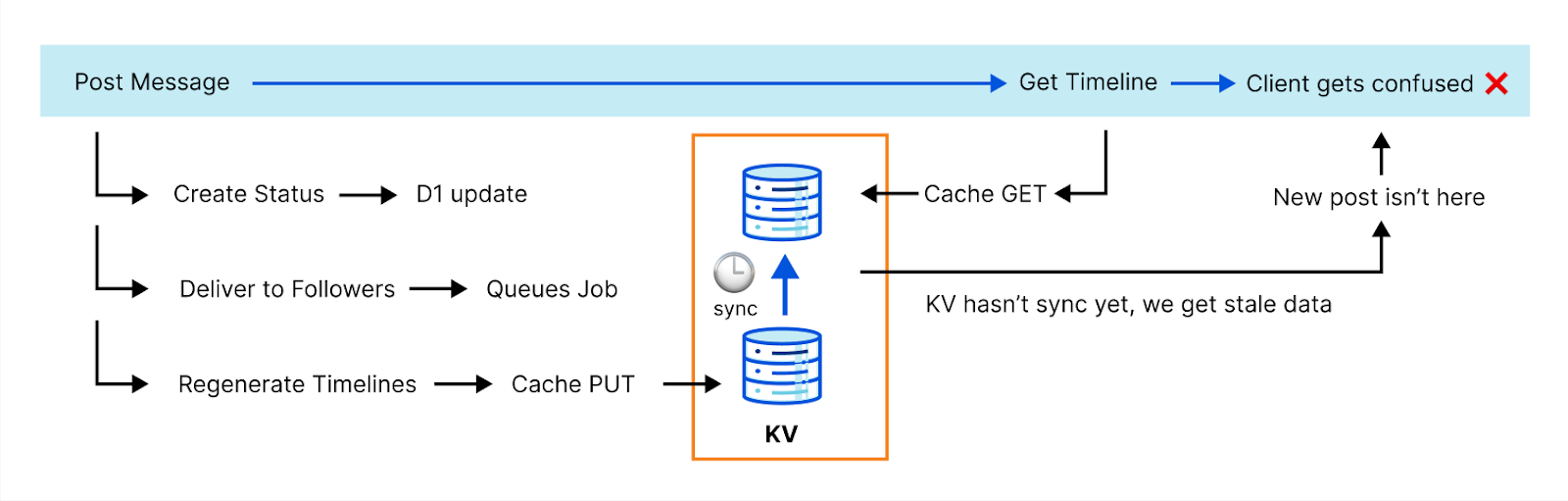

Cloudflare obviously knows a lot about caching since it’s a core feature of our global CDN. We also provide Workers KV to our customers, a global, low-latency, key-value data store that anyone can use to cache data objects in our data centers and build fast websites and applications.

However, KV achieves its performance by being eventually consistent. While this is fine for many applications and use cases, it’s not ideal for others.

The ActivityPub protocol is highly transactional and can’t afford eventual consistency. Here’s an example: generating complete timelines is expensive, so we cache that operation. However, when you post something, we need to invalidate that cache before we reply to the client. Otherwise, the new post won’t be in the timeline and the client can fail with an error because it doesn’t see it. This actually happened to us with one of the most popular clients.

We needed to get clever. The team discussed a few options. Fortunately, our API catalog has plenty of options. Meet Durable Objects.

Durable Objects are single-instance Workers that provide a transactional storage API. They’re ideal when you need central coordination, strong consistency, and state persistence. You can use Durable Objects in cases like handling the state of multiple WebSocket connections, coordinating and routing messages in a chatroom, or even running a multiplayer game like Doom.

You know where this is going now. Yes, we implemented our key-value caching subsystem for Wildebeest on top of a Durable Object. By taking advantage of the DO’s native transactional storage API, we can have strong guarantees that whenever we create or change a key, the next read will always return the latest version.

The idea is so simple and effective that it took us literally a few lines of code to implement a key-value cache with two primitives: HTTP PUT and GET.

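```typescript
export class WildebeestCache {
  storage: DurableObjectStorage

  constructor(state: DurableObjectState) {
    // The runtime passes each Durable Object its state;
    // state.storage is the transactional storage API.
    this.storage = state.storage
  }

  async fetch(request: Request) {
    if (request.method === 'GET') {
      const { pathname } = new URL(request.url)
      const key = pathname.slice(1) // "/my-key" -> "my-key"
      const value = await this.storage.get(key)
      return new Response(JSON.stringify(value))
    }

    if (request.method === 'PUT') {
      const { key, value } = await request.json()
      await this.storage.put(key, value)
      return new Response('', { status: 201 })
    }

    // Any other method is not part of this tiny protocol.
    return new Response('Method not allowed', { status: 405 })
  }
}
```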
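On the caller’s side, a Worker reaches the cache through a Durable Object namespace binding. idFromName() maps a name to an object ID, get() returns a stub, and the stub’s fetch() delivers the request to the object’s fetch handler. The sketch below assumes a single instance named "cache". It uses minimal hypothetical interfaces in place of the Workers runtime types, so the binding, the instance name, and the URL are all assumptions.

```typescript
// Minimal structural stand-ins for the Workers runtime types (hypothetical).
interface CacheResponse {
  status: number;
  text(): Promise<string>;
}
interface CacheStub {
  fetch(input: string, init?: { method?: string; body?: string }): Promise<CacheResponse>;
}
interface CacheNamespace {
  idFromName(name: string): unknown;
  get(id: unknown): CacheStub;
}

const CACHE_ORIGIN = "http://cache/"; // any origin works; the object only reads the path

function cacheStub(ns: CacheNamespace): CacheStub {
  // Every caller addresses the same named instance, so all reads and
  // writes go through one object ("cache" is a hypothetical name).
  return ns.get(ns.idFromName("cache"));
}

async function cachePut(ns: CacheNamespace, key: string, value: unknown): Promise<void> {
  const res = await cacheStub(ns).fetch(CACHE_ORIGIN, {
    method: "PUT",
    body: JSON.stringify({ key, value }),
  });
  if (res.status !== 201) throw new Error(`Cache PUT failed with status ${res.status}`);
}

async function cacheGet<T>(ns: CacheNamespace, key: string): Promise<T | undefined> {
  // Assumes keys contain only URL-path-safe characters, because the
  // WildebeestCache GET handler reads the key straight from the pathname.
  const res = await cacheStub(ns).fetch(CACHE_ORIGIN + key);
  const text = await res.text();
  // A missing key comes back as an empty body.
  return text ? (JSON.parse(text) as T) : undefined;
}
```

Routing every read and write through one named instance provides the read-after-write guarantee described above. The cost is that all cache traffic funnels through a single object. In the timeline example, a handler would cachePut the updated timeline, or overwrite the stale entry, before replying to the client.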

Strong consistency it is. Let’s move to user registration and authentication now.

Zero Trust Access

The official Mastodon server handles user registrations, typically using email, before you can choose your local user name and start using the service. Handling user registration and authentication can be daunting and time-consuming if we were to build it from scratch though.

Furthermore, people don’t want to create new credentials for every new service they want to use and instead want more convenient OAuth-like authorization and authentication methods so that they can reuse their existing Apple, Google, or GitHub accounts.

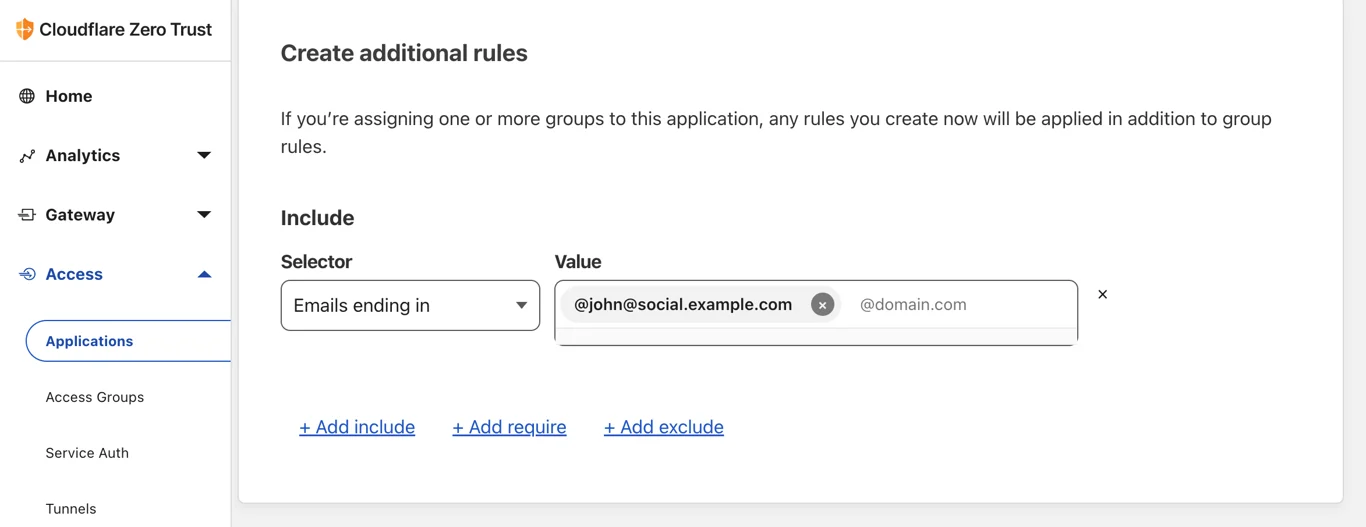

We wanted to simplify things using Cloudflare’s built-in features. Needless to say, we have a product that handles user onboarding, authentication, and access policies to any application behind Cloudflare; it’s called Zero Trust. So we put Wildebeest behind it.

Zero Trust Access can either do one-time PIN (OTP) authentication using email or single sign-on (SSO) with many identity providers (for example, Google, Facebook, GitHub, and LinkedIn), including any generic provider that supports SAML 2.0.

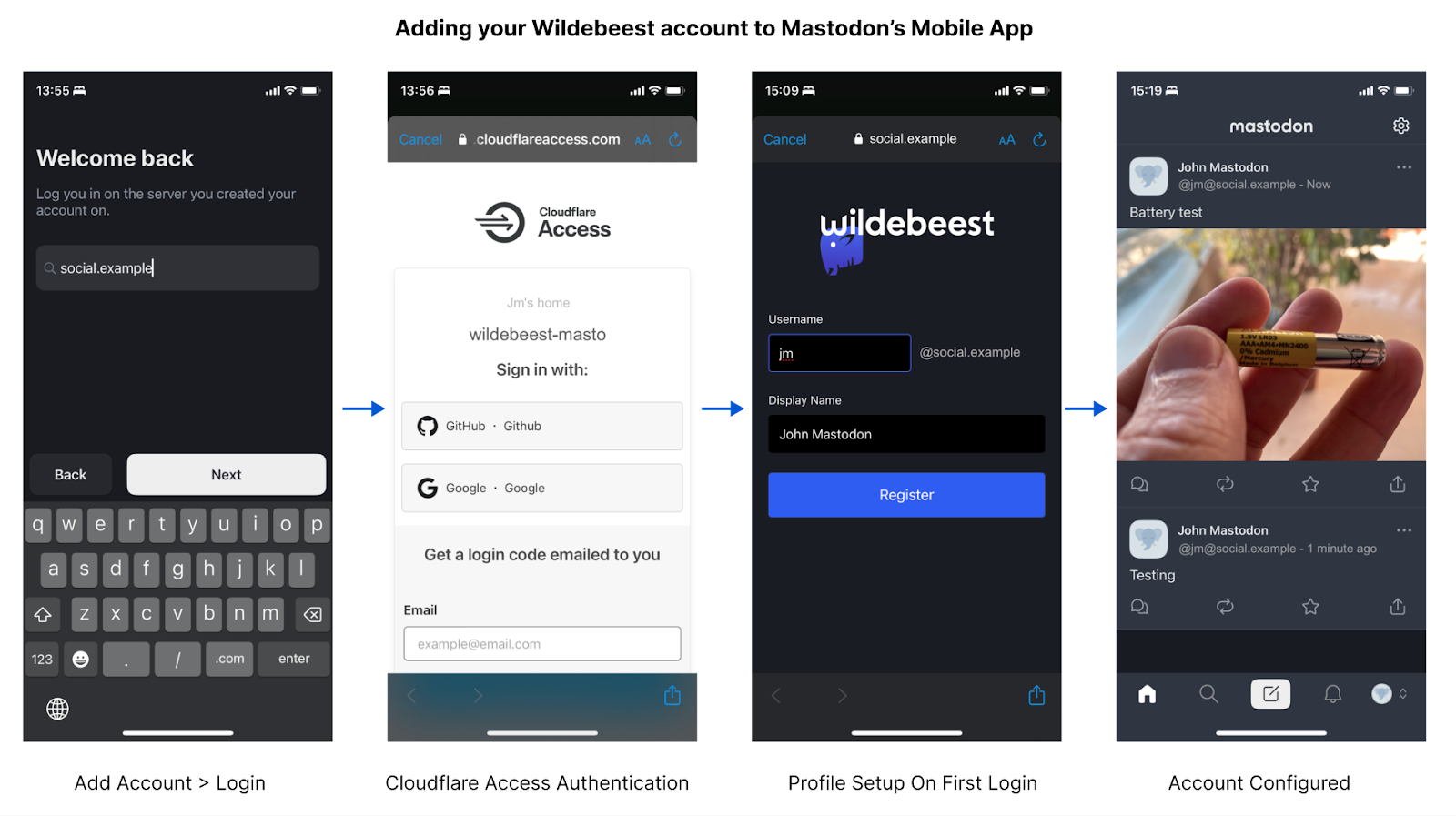

When you start using Wildebeest with a client, you don’t need to register at all. Instead, you go straight to log in, which will redirect you to the Access page and handle the authentication according to the policy that you, the owner of your instance, configured.

The policy defines who can authenticate, and how.

When authenticated, Access will redirect you back to Wildebeest. The first time this happens, we detect that we don’t have information about the user and ask for your Username and Display Name. You are asked only once, and these values are used to create your public Mastodon profile.

Technically, Wildebeest implements the OAuth 2 specification. Zero Trust protects the /oauth/authorize endpoint and issues a valid JWT token in the request headers when the user is authenticated. Wildebeest then reads and verifies the JWT and returns an authorization code in the URL redirect.

Once the client has an authorization code, it can use the /oauth/token endpoint to obtain an API access token. Subsequent API calls inject a bearer token in the Authorization header:

```
Authorization: Bearer access_token
```
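On the server side, each API request has to pull the access token out of that header before the token can be validated. The sketch below shows only that first step; the function name is hypothetical, and validating the token is out of scope. The scheme name is matched case-insensitively, as HTTP authentication schemes are.

```typescript
// Extracts the token from an "Authorization: Bearer <token>" header value.
// Returns null when the header is missing, malformed, or uses another scheme.
function parseBearerToken(header: string | null): string | null {
  if (!header) return null;
  const match = /^Bearer\s+(\S+)\s*$/i.exec(header.trim());
  return match ? match[1] : null;
}
```

In a Pages Function this would typically be called as parseBearerToken(request.headers.get('Authorization')), since Headers.get() returns null when the header is absent.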

Deployment and Continuous Integration

We didn’t want to run a managed service for Mastodon as it would somewhat diminish the concepts of federation and data ownership. Also, we recognize that ActivityPub and Mastodon are emerging, fast-paced technologies that will evolve quickly and in ways that are difficult to predict just yet.

For these reasons, we thought the best way to help the ecosystem right now would be to provide an open-source software package that anyone could use, customize, improve, and deploy on top of our cloud. Cloudflare will obviously keep improving Wildebeest and support the community, but we want to give our Fediverse maintainers complete control and ownership of their instances and data.

The remaining question was, how do we distribute the Wildebeest bundle and make it easy to deploy into someone’s account when it requires configuring so many Cloudflare features, and how do we facilitate updating the software over time?

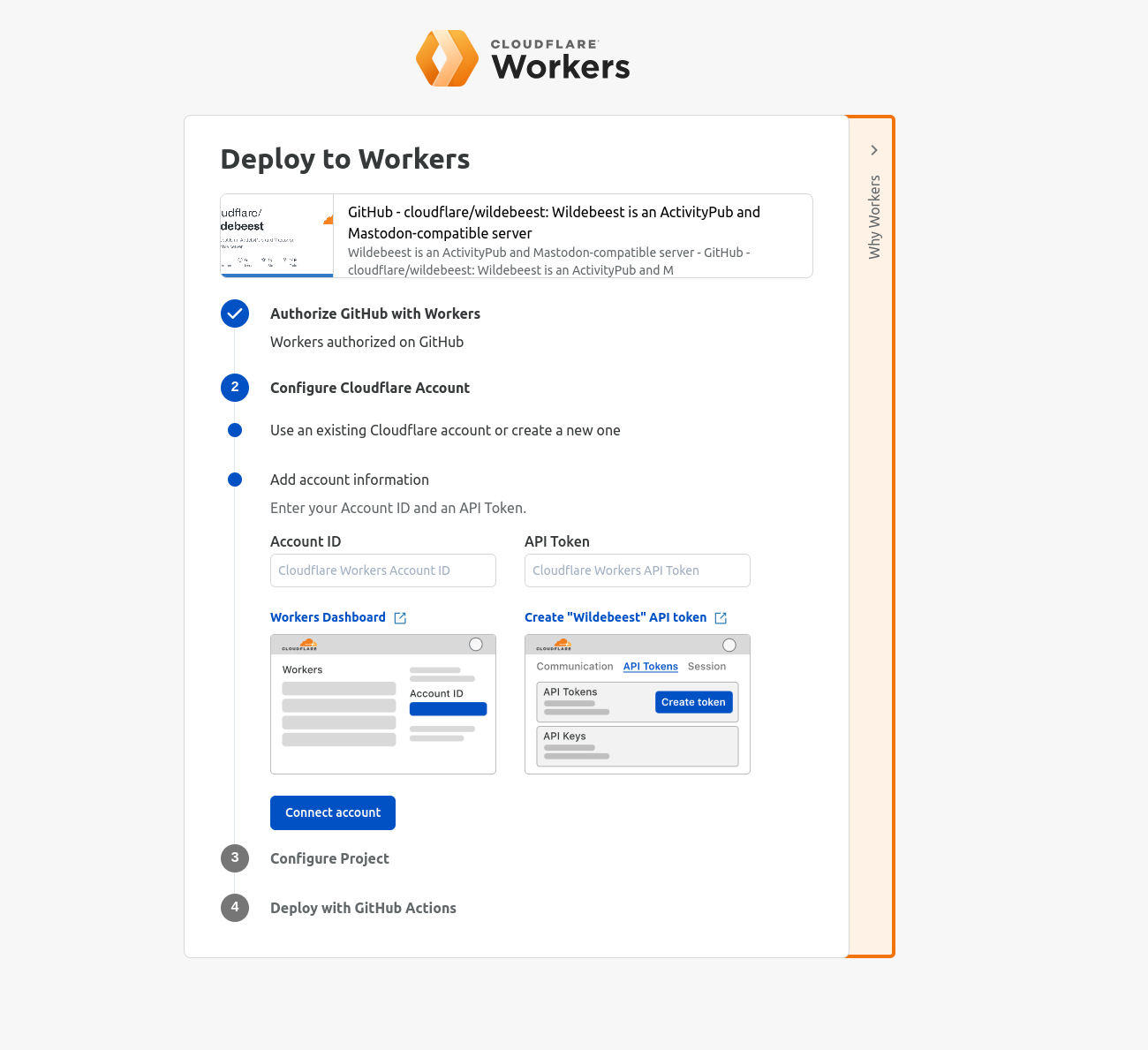

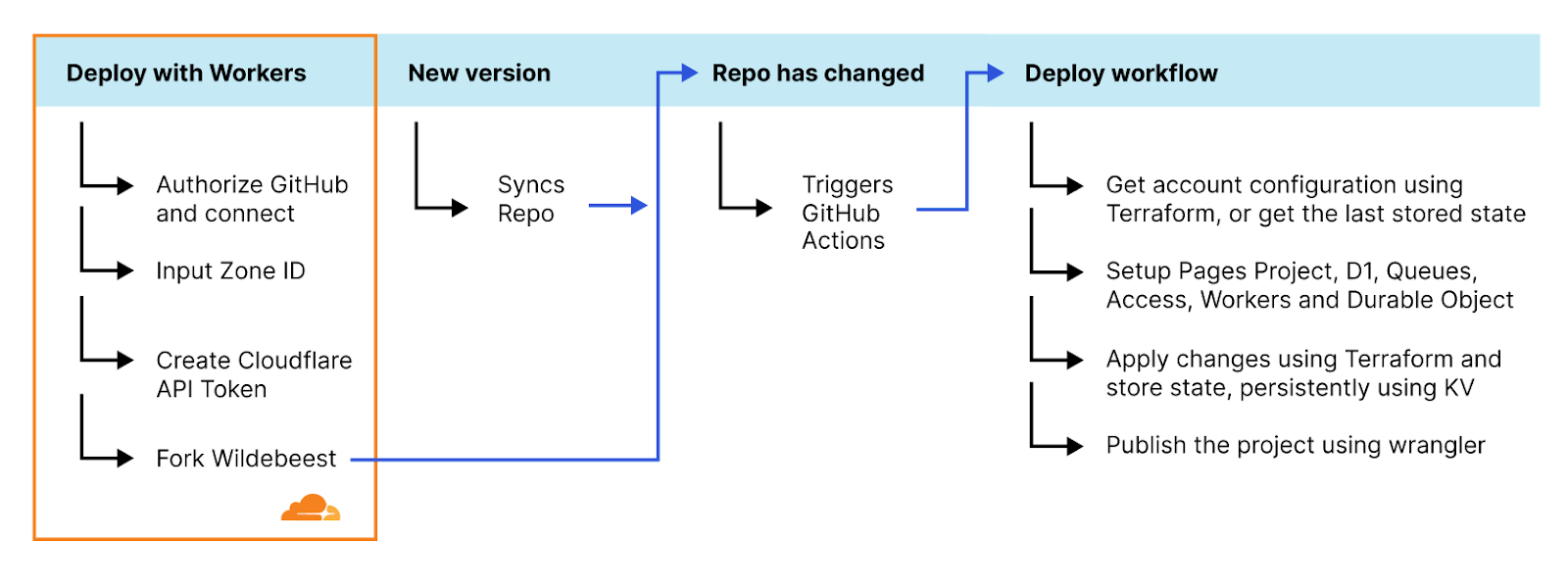

The solution ended up being a clever mix of using GitHub with GitHub Actions, Deploy with Workers, and Terraform.

The Deploy with Workers button is a specially crafted link that auto-generates a workflow page where the user gets asked some questions, and Cloudflare handles authorizing GitHub to deploy to Workers, automatically forks the Wildebeest repository into the user’s account, and then configures and deploys the project using a GitHub Actions workflow.

A GitHub Actions workflow is a YAML file that declares what to do in every step. Here’s the Wildebeest workflow (simplified):

```yaml
name: Deploy
on:
  push:
    branches:
      - main
  repository_dispatch:
jobs:
  deploy:
    runs-on: ubuntu-latest
    timeout-minutes: 60
    steps:
      - name: Ensure CF_DEPLOY_DOMAIN and CF_ZONE_ID are defined
        ...
      - name: Create D1 database
        uses: cloudflare/wrangler-action@2.0.0
        with:
          command: d1 create wildebeest-${{ env.OWNER_LOWER }}
        ...
      - name: retrieve Zero Trust organization
        ...
      - name: retrieve Terraform state KV namespace
        ...
      - name: download VAPID keys
        ...
      - name: Publish DO
      - name: Configure
        run: terraform plan && terraform apply -auto-approve
      - name: Create Queue
        ...
      - name: Publish consumer
        ...
      - name: Publish
        uses: cloudflare/wrangler-action@2.0.0
        with:
          command: pages publish --project-name=wildebeest-${{ env.OWNER_LOWER }} .
```

Updating Wildebeest

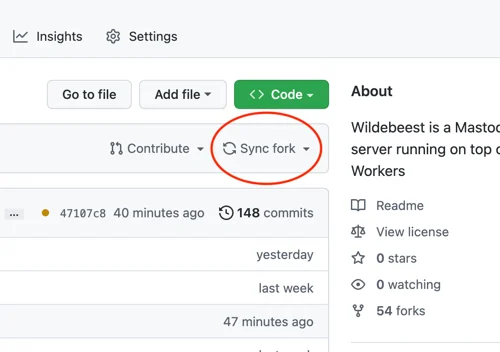

This workflow runs automatically every time the main branch changes, so updating Wildebeest is as easy as synchronizing your fork with the upstream official repository. You don’t even need to use git commands for that; GitHub provides a convenient Sync button in the UI that you can simply click.

What’s more? Updates are incremental and non-destructive. When the GitHub Actions workflow redeploys Wildebeest, we only make the necessary changes to your configuration and nothing else. You don’t lose your data; we don’t need to delete your existing configurations. Here’s how we achieved this:

We use Terraform, a declarative configuration language and tool that interacts with our APIs to query and configure your Cloudflare features. Here’s the trick: whenever we apply a new configuration, we keep a copy of the Terraform state for Wildebeest in a Cloudflare KV key. When a new deployment is triggered, we read that state back from KV, calculate the differences, and then change only what’s necessary.
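The state-diffing idea can be illustrated with a toy Python sketch. This is not Terraform's real planning logic: a plain dict stands in for the KV key that holds the saved state, and the key name, resource names, and values are hypothetical. The point is that only new, changed, or removed resources produce a change, so redeploying an unchanged configuration is a no-op.

```python
import json

# Stand-in for the Cloudflare KV key that stores the last applied state (assumption).
kv_store = {}
STATE_KEY = "terraform_state"  # hypothetical key name


def plan(current, desired):
    """Return the (action, resource, value) changes needed to move current -> desired."""
    changes = []
    for resource, value in desired.items():
        if resource not in current:
            changes.append(("create", resource, value))
        elif current[resource] != value:
            changes.append(("update", resource, value))
    for resource, value in current.items():
        if resource not in desired:
            changes.append(("delete", resource, value))
    return changes


def deploy(desired):
    """Load the saved state, compute the diff, 'apply' it, and save the new state."""
    current = json.loads(kv_store.get(STATE_KEY, "{}"))
    changes = plan(current, desired)
    # A real deployment would call the provider API for each change here.
    kv_store[STATE_KEY] = json.dumps(desired)
    return changes


config = {"dns_record.example": "CNAME target", "access_app.admin": "allow admins"}
print(deploy(config))  # first run: two "create" changes
print(deploy(config))  # unchanged config: []
```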

Data loss is not a problem either because, as you read above, D1 supports migrations. If we need to add a new table, or a new column to an existing table, we don’t need to destroy the database and create it again; we just apply the SQL needed for that change.
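Because D1 is built on SQLite, the non-destructive migration pattern can be sketched with Python's built-in sqlite3 module. This is a hypothetical illustration, not Wildebeest's migration tooling: the table names and migrations are made up. Each migration is recorded once applied, so a redeploy runs only the new ones and existing rows survive.

```python
import sqlite3

# Hypothetical, append-only list of migrations. Each one only adds to the
# schema, so applying it never drops existing tables or rows.
MIGRATIONS = [
    ("0001_create_actors", "CREATE TABLE actors (id TEXT PRIMARY KEY, username TEXT)"),
    ("0002_add_actor_type", "ALTER TABLE actors ADD COLUMN type TEXT DEFAULT 'Person'"),
]


def apply_migrations(conn, migrations):
    """Run only the migrations not yet recorded in the database; return their names."""
    conn.execute("CREATE TABLE IF NOT EXISTS applied_migrations (name TEXT PRIMARY KEY)")
    done = {name for (name,) in conn.execute("SELECT name FROM applied_migrations")}
    applied = []
    for name, sql in migrations:
        if name in done:
            continue
        conn.execute(sql)
        conn.execute("INSERT INTO applied_migrations (name) VALUES (?)", (name,))
        applied.append(name)
    conn.commit()
    return applied


conn = sqlite3.connect(":memory:")
apply_migrations(conn, MIGRATIONS[:1])  # first deployment creates the table
conn.execute("INSERT INTO actors (id, username) VALUES ('a1', 'alice')")
apply_migrations(conn, MIGRATIONS)      # later deployment adds only the new column
print(conn.execute("SELECT id, username, type FROM actors").fetchall())
# [('a1', 'alice', 'Person')]
```

In SQLite, ALTER TABLE ... ADD COLUMN with a DEFAULT makes existing rows report the default value, which is why alice's row comes back with type 'Person' instead of being lost.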

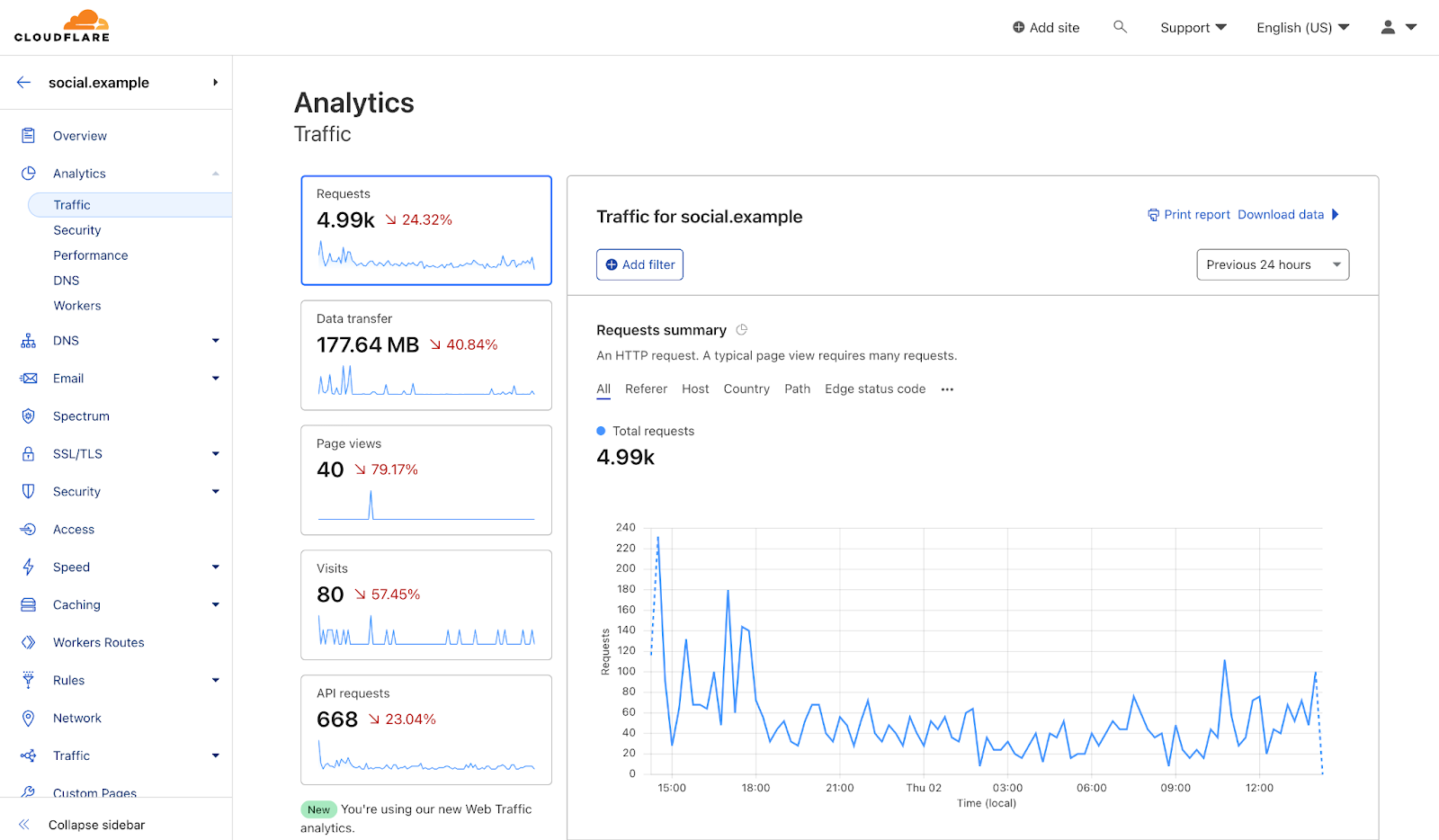

Protection, optimization and observability, naturally

Once Wildebeest is up and running, you can protect it from bad traffic and malicious actors. Cloudflare offers DDoS, WAF, and Bot Management protection out of the box, just a click away.

Likewise, you’ll get instant network and content delivery optimizations from our products, along with analytics on how your Wildebeest instance is performing and being used.

ActivityPub, WebFinger, NodeInfo and Mastodon APIs

Mastodon popularized the Fediverse concept, but many of the underlying technologies used have been around for quite a while. This is one of those rare moments when everything finally comes together to create a working platform that answers an actual use case for Internet users. Let’s quickly go through the protocols that Wildebeest had to implement:

ActivityPub

ActivityPub is a decentralized social networking protocol that has been a W3C Recommendation since 2018. It defines a client-to-server API for creating and manipulating content and a server-to-server API for content exchange and notifications, also known as federation. ActivityPub uses ActivityStreams, an even older W3C specification, for its vocabulary.

Actors (profiles), Objects or messages (the toots), the inbox (where you receive toots from the people you follow), and the outbox (where you publish your toots for the people who follow you) are all defined in the ActivityPub specification, along with many other actions and activities.

Here’s our folder with the ActivityPub implementation.

import type { APObject } from 'wildebeest/backend/src/activitypub/objects'
import type { Actor } from 'wildebeest/backend/src/activitypub/actors'

// Records that `obj` was delivered to `actor`'s inbox.
// D1Database is the D1 binding type from @cloudflare/workers-types.
export async function addObjectInInbox(db: D1Database, actor: Actor, obj: APObject): Promise<void> {
    const id = crypto.randomUUID()
    await db
        .prepare('INSERT INTO inbox_objects(id, actor_id, object_id) VALUES(?, ?, ?)')
        .bind(id, actor.id.toString(), obj.id.toString())
        .run()
}
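To make the ActivityStreams vocabulary above more concrete, here is a minimal Python sketch of the JSON a server might place in an actor's outbox when a user posts a toot: a Create activity wrapping a Note. The @context and Public collection URIs are the standard ActivityStreams ones; the URL layout for note IDs and the followers collection is a hypothetical choice, not Wildebeest's.

```python
import json
import uuid

AS_CONTEXT = "https://www.w3.org/ns/activitystreams"
PUBLIC = "https://www.w3.org/ns/activitystreams#Public"  # special "everyone" collection


def create_note_activity(actor_id, content):
    """Wrap a new Note (a toot) in a Create activity attributed to actor_id."""
    note_id = f"{actor_id}/notes/{uuid.uuid4()}"  # hypothetical URL layout
    followers = f"{actor_id}/followers"           # hypothetical followers collection
    note = {
        "id": note_id,
        "type": "Note",
        "attributedTo": actor_id,
        "content": content,
        "to": [PUBLIC],
        "cc": [followers],
    }
    return {
        "@context": AS_CONTEXT,
        "id": f"{note_id}/activity",
        "type": "Create",
        "actor": actor_id,
        "object": note,
        "to": note["to"],
        "cc": note["cc"],
    }


activity = create_note_activity("https://example.com/ap/users/user", "<p>Hello, Fediverse!</p>")
print(json.dumps(activity, indent=2))
```

The receiving servers deliver this activity to the inboxes of the addressed actors, which is where a function like addObjectInInbox comes into play.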

WebFinger

WebFinger is a simple HTTP protocol used to discover information about any entity, like a profile, a server, or a specific feature. It resolves URIs to resource objects.

Mastodon uses WebFinger lookups to discover information about remote users. For example, say you want to interact with @user@example.com. Your local server would request https://example.com/.well-known/webfinger?resource=acct:user@example.com (using the acct scheme) and get something like this:

{
    "subject": "acct:user@example.com",
    "aliases": [
        "https://example.com/ap/users/user"
    ],
    "links": [
        {
            "rel": "self",
            "type": "application/activity+json",
            "href": "https://example.com/ap/users/user"
        }
    ]
}

Now we know how to interact with @user@example.com, using the https://example.com/ap/users/user endpoint.
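The lookup described above can be sketched in Python from the client side: build the WebFinger URL from a handle, then pick the self link with the application/activity+json type out of the response. The function names are hypothetical. urlencode is told to leave : and @ unescaped so the URL matches the example above; the percent-encoded form used in RFC 7033's own examples is equivalent.

```python
from urllib.parse import urlencode


def webfinger_url(handle):
    """Build the WebFinger lookup URL for a handle such as '@user@example.com'."""
    user, _, domain = handle.lstrip("@").partition("@")
    if not user or not domain:
        raise ValueError(f"expected a user@domain handle, got {handle!r}")
    query = urlencode({"resource": f"acct:{user}@{domain}"}, safe=":@")
    return f"https://{domain}/.well-known/webfinger?{query}"


def actor_url(webfinger_response):
    """Return the ActivityPub actor URL from a WebFinger JSON response, if present."""
    for link in webfinger_response.get("links", []):
        if link.get("rel") == "self" and link.get("type") == "application/activity+json":
            return link.get("href")
    return None


response = {
    "subject": "acct:user@example.com",
    "aliases": ["https://example.com/ap/users/user"],
    "links": [{"rel": "self", "type": "application/activity+json",
               "href": "https://example.com/ap/users/user"}],
}
print(webfinger_url("@user@example.com"))
# https://example.com/.well-known/webfinger?resource=acct:user@example.com
print(actor_url(response))  # https://example.com/ap/users/user
```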

Here’s our WebFinger response:

export async function handleRequest(request, db): Promise<Response> {
    …
    const jsonLink = /* … link to actor */
    const res: WebFingerResponse = {
        subject: `acct:...`,
        aliases: [jsonLink],
        links: [
            {
                rel: 'self',
                type: 'application/activity+json',
                href: jsonLink,
            },
        ],
    }
    return new Response(JSON.stringify(res), { headers })
}

Mastodon API

Finally, features such as setting your server and profile information, generating timelines, notifications, and searches are all handled by Mastodon-specific APIs. The Mastodon open-source project defines a catalog of REST APIs, and you can find all the documentation for them on their website.

Our Mastodon API implementation can be found here (REST endpoints) and here (backend primitives). Here’s an example of Mastodon’s server information /api/v2/instance implemented by Wildebeest:

export async function handleRequest(domain, db, env) {
    const res: InstanceConfigV2 = {
        domain,
        title: env.INSTANCE_TITLE,
        version: getVersion(),
        source_url: 'https://github.com/cloudflare/wildebeest',
        description: env.INSTANCE_DESCR,
        thumbnail: {
            url: DEFAULT_THUMBNAIL,
        },
        languages: ['en'],
        registrations: {
            enabled: false,
        },
        contact: {
            email: env.ADMIN_EMAIL,
        },
        rules: [],
    }
    return new Response(JSON.stringify(res), { headers })
}

Wildebeest also implements WebPush for client notifications and NodeInfo for server information.
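NodeInfo discovery works in two steps: a client fetches /.well-known/nodeinfo, which links to the actual NodeInfo document. A minimal Python sketch of both bodies follows, assuming NodeInfo schema 2.0; the /nodeinfo/2.0 path and the software name are hypothetical choices, not taken from Wildebeest.

```python
import json

NODEINFO_2_0 = "http://nodeinfo.diaspora.software/ns/schema/2.0"


def nodeinfo_discovery(domain):
    """Body for /.well-known/nodeinfo: links clients to the NodeInfo document."""
    return {"links": [{"rel": NODEINFO_2_0, "href": f"https://{domain}/nodeinfo/2.0"}]}


def nodeinfo_document(software_version, open_registrations=False):
    """A minimal NodeInfo 2.0 document containing the schema's required top-level keys."""
    return {
        "version": "2.0",
        "software": {"name": "wildebeest", "version": software_version},
        "protocols": ["activitypub"],
        "services": {"inbound": [], "outbound": []},
        "openRegistrations": open_registrations,
        "usage": {"users": {}},
        "metadata": {},
    }


print(json.dumps(nodeinfo_discovery("example.com")))
```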

Other Mastodon-compatible servers had to implement all these protocols too, and Wildebeest is one of them. The community is very active in discussing future enhancements; we will keep improving our compatibility and adding support for more features over time, so that Wildebeest plays well with the emerging Fediverse ecosystem of servers and clients.

Get started now

Enough about technology; let’s get you into the Fediverse. We have tried to document every step needed to deploy your server. To start using Wildebeest, head to the public GitHub repository and check our Get Started tutorial.

Most of Wildebeest’s dependencies offer a generous free plan that lets you try them for personal or hobby projects that aren’t business-critical. However, you will need to subscribe to an Images plan (the lowest tier should be enough for most needs) and, depending on your server load, Workers Unbound (again, the minimum cost should be plenty for most use cases).

Following our dogfooding mantra, Cloudflare is also officially joining the Fediverse today. You can start following our Mastodon accounts and get the same experience of having regular updates from Cloudflare as you get from us on other social platforms, using your favorite Mastodon apps. These accounts are entirely running on top of a Wildebeest server:

- @[email protected] – Our main account

- @[email protected] – Cloudflare Radar

Wildebeest is compatible with most client apps; it is confirmed to work with the official Mastodon Android and iOS apps, Pinafore, Mammoth, and tooot, and we are looking into others like Ivory. If your favorite isn’t working, please submit an issue here, and we’ll do our best to help support it.

Final words

Wildebeest was built entirely on top of our Supercloud stack. It is one of the most complete and complex projects we have created, and it uses a wide range of Cloudflare products and features.

We hope this write-up inspires you not only to try deploying Wildebeest and joining the Fediverse, but also to build your next application, however demanding it is, on top of Cloudflare.

Wildebeest is a minimally viable Mastodon-compatible server right now, but we will keep improving it with more features and supporting it over time; after all, we’re using it for our official accounts. It is also open-sourced, meaning you are more than welcome to contribute with pull requests or feedback.

In the meantime, we opened a Wildebeest room on our Developers Discord Server and are keeping an eye on the issues tab of the GitHub repo. Feel free to engage with us; the team is eager to learn how you use Wildebeest and to answer your questions.

PS: The code snippets in this blog were simplified to benefit readability and space (the TypeScript types and error handling code were removed, for example). Please refer to the GitHub repo links for the complete versions.

Use fuzzy string matching to approximate duplicate records in Amazon Redshift

Post Syndicated from Sean Beath original https://aws.amazon.com/blogs/big-data/use-fuzzy-string-matching-to-approximate-duplicate-records-in-amazon-redshift/

Amazon Redshift is a fully managed, petabyte-scale data warehouse service in the cloud. Amazon Redshift enables you to run complex SQL analytics at scale and performance on terabytes to petabytes of structured and unstructured data, and make the insights widely available through popular business intelligence (BI) and analytics tools.

It’s common to ingest multiple data sources into Amazon Redshift to perform analytics. Often, each data source will have its own processes of creating and maintaining data, which can lead to data quality challenges within and across sources.

One challenge you may face when performing analytics is the presence of imperfect duplicate records within the source data. Answering questions as simple as “How many unique customers do we have?” can be very challenging when the data you have available is like the following table.

| Name | Address | Date of Birth |
| --- | --- | --- |
| Cody Johnson | 8 Jeffery Brace, St. Lisatown | 1/3/1956 |
| Cody Jonson | 8 Jeffery Brace, St. Lisatown | 1/3/1956 |

Although humans can identify that Cody Johnson and Cody Jonson are most likely the same person, it can be difficult to distinguish this using analytics tools. This identification of duplicate records also becomes nearly impossible when working on large datasets across multiple sources.

This post presents one possible approach to addressing this challenge in an Amazon Redshift data warehouse. We import an open-source fuzzy matching Python library to Amazon Redshift, create a simple fuzzy matching user-defined function (UDF), and then create a procedure that weights multiple columns in a table to find matches based on user input. This approach allows you to use the created procedure to approximately identify your unique customers, improving the accuracy of your analytics.

This approach doesn’t solve data quality issues in source systems, and doesn’t remove the need for a holistic data quality strategy. For addressing data quality challenges in Amazon Simple Storage Service (Amazon S3) data lakes and data pipelines, AWS has announced AWS Glue Data Quality (preview). You can also use AWS Glue DataBrew, a visual data preparation tool that makes it easy for data analysts and data scientists to clean and normalize data to prepare it for analytics.

Prerequisites

To complete the steps in this post, you need the following:

- An AWS account.

- An Amazon Redshift cluster or Amazon Redshift Serverless endpoint.

- An S3 bucket.

- The open-source Python package TheFuzz. From this link, you need all files in the thefuzz folder, zipped and uploaded to the S3 bucket.

- An AWS Identity and Access Management (IAM) role that provides read access to the created S3 bucket. This role will need to be set as the default role on the Amazon Redshift cluster or endpoint for the following steps to work.

The following AWS CloudFormation stack will deploy a new Redshift Serverless endpoint and an S3 bucket for use in this post.

All SQL commands shown in this post are available in the following notebook, which can be imported into the Amazon Redshift Query Editor V2.

Overview of the dataset being used

The dataset we use mimics a source that holds customer information. This source has a manual process for inserting and updating customer data, which has led to multiple instances of non-unique customers being represented by duplicate records.

The following examples show some of the data quality issues in the dataset being used.

In this first example, all three customers are the same person but have slight differences in the spelling of their names.

| id | name | age | address_line1 | city | postcode | state |
| --- | --- | --- | --- | --- | --- | --- |
| 1 | Cody Johnson | 80 | 8 Jeffrey Brace | St. Lisatown | 2636 | South Australia |
| 101 | Cody Jonson | 80 | 8 Jeffrey Brace | St. Lisatown | 2636 | South Australia |
| 121 | Kody Johnson | 80 | 8 Jeffrey Brace | St. Lisatown | 2636 | South Australia |

In this next example, the two customers are the same person with slightly different addresses.

| id | name | age | address_line1 | city | postcode | state |
| --- | --- | --- | --- | --- | --- | --- |
| 7 | Angela Watson | 59 | 3/752 Bernard Follow | Janiceberg | 2995 | Australian Capital Territory |
| 107 | Angela Watson | 59 | 752 Bernard Follow | Janiceberg | 2995 | Australian Capital Territory |

In this example, the two customers are different people with the same address. This simulates multiple different customers living at the same address who should still be recognized as different people.

| id | name | age | address_line1 | city | postcode | state |
| --- | --- | --- | --- | --- | --- | --- |
| 6 | Michael Hunt | 69 | 8 Santana Rest | St. Jessicamouth | 2964 | Queensland |
| 106 | Sarah Hunt | 69 | 8 Santana Rest | St. Jessicamouth | 2964 | Queensland |

Load the dataset

First, create a new table in your Redshift Serverless endpoint and copy the test data into it by doing the following:

- Open the Query Editor V2 and log in using the admin user name and details defined when the endpoint was created.

- Run the following CREATE TABLE statement:

- Run the following COPY command to copy data into the newly created table:

- Confirm the COPY succeeded and there are 110 records in the table by running the following query:

Fuzzy matching

Fuzzy string matching, more formally known as approximate string matching, is the technique of finding strings that match a pattern approximately rather than exactly. Commonly (and in this solution), the Levenshtein distance is used to measure the distance between two strings, and therefore their similarity: it counts the minimum number of single-character insertions, deletions, or substitutions needed to turn one string into the other. The smaller the Levenshtein distance between two strings, the more similar they are.
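A minimal Python sketch of the Levenshtein distance, using the standard dynamic-programming recurrence and keeping only one row of the table at a time. It is for illustration only; the solution in this post relies on TheFuzz rather than on this function.

```python
def levenshtein(a, b):
    """Minimum number of single-character insertions, deletions, and substitutions."""
    if len(a) < len(b):
        a, b = b, a  # keep the DP row as short as possible
    previous = list(range(len(b) + 1))  # distances from "" to each prefix of b
    for i, ca in enumerate(a, start=1):
        current = [i]
        for j, cb in enumerate(b, start=1):
            current.append(min(
                previous[j] + 1,               # delete ca
                current[j - 1] + 1,            # insert cb
                previous[j - 1] + (ca != cb),  # substitute (free if equal)
            ))
        previous = current
    return previous[-1]


print(levenshtein("Cody Johnson", "Cody Jonson"))  # 1
print(levenshtein("kitten", "sitting"))            # 3
```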

In this solution, we exploit this property of the Levenshtein distance to estimate if two customers are the same person based on multiple attributes of the customer, and it can be expanded to suit many different use cases.

This solution uses TheFuzz, an open-source Python library that implements the Levenshtein distance in a few different ways. We use the partial_ratio function to compare two strings and produce a score between 0 and 100. If one of the strings matches a portion of the other perfectly, partial_ratio returns 100.
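The partial-matching idea can be approximated in a few lines of Python. This simplified sketch is not TheFuzz's implementation: it slides a window the length of the shorter string across the longer one, scores each window with the standard library's difflib SequenceMatcher ratio, and keeps the best score. Like partial_ratio, it returns 100 when the shorter string appears exactly inside the longer one.

```python
from difflib import SequenceMatcher


def partial_ratio(a, b):
    """Best 0-100 similarity between the shorter string and any equal-length
    window of the longer string (a simplified stand-in for TheFuzz)."""
    short, longer = (a, b) if len(a) <= len(b) else (b, a)
    if not short:
        return 0
    best = 0.0
    for start in range(len(longer) - len(short) + 1):
        window = longer[start:start + len(short)]
        best = max(best, SequenceMatcher(None, short, window).ratio())
    return round(100 * best)


print(partial_ratio("Johnson", "Cody Johnson"))  # 100
print(partial_ratio("abc", "xyz"))               # 0
```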

Weighted fuzzy matching

By adding a scaling factor to each of our column fuzzy matches, we can create a weighted fuzzy match for a record. This is especially useful in two scenarios:

- We have more confidence in some columns of our data than others, and therefore want to prioritize their similarity results.

- One column is much longer than the others. A single character difference in a long string will have much less impact on the Levenshtein distance than a single character difference in a short string. Therefore, we want to prioritize long string matches over short string matches.
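Weighted matching can be sketched as a weighted sum of per-column scores. In this hypothetical Python example, difflib's SequenceMatcher ratio stands in for TheFuzz, and the weights echo the 0.5 (address_line1) and 0.1 (city, postcode) values used in this post, with 0.3 on name as our own assumption so that the weights sum to 1. The records are the Michael Hunt and Sarah Hunt rows from the dataset shown earlier.

```python
import math
from difflib import SequenceMatcher

# Hypothetical weights: 0.5 and 0.1 mirror the values this post uses,
# and 0.3 on "name" is an assumption so that the weights sum to 1.
WEIGHTS = {"name": 0.3, "address_line1": 0.5, "city": 0.1, "postcode": 0.1}


def similarity(a, b):
    """0-100 similarity of two values, compared as strings with difflib."""
    return 100 * SequenceMatcher(None, str(a), str(b)).ratio()


def weighted_similarity(record_a, record_b, weights):
    """Weighted sum of per-column similarities; the weights must add up to 1."""
    if not math.isclose(sum(weights.values()), 1.0):
        raise ValueError("weights must add up to 1")
    return sum(w * similarity(record_a[col], record_b[col]) for col, w in weights.items())


michael = {"name": "Michael Hunt", "address_line1": "8 Santana Rest",
           "city": "St. Jessicamouth", "postcode": "2964"}
sarah = dict(michael, name="Sarah Hunt")
print(round(weighted_similarity(michael, sarah, WEIGHTS), 1))  # 86.4
```

The shared address, city, and postcode carry 70% of the weight, but the different first names keep the score below a 90% threshold. math.isclose is used for the weight check because summing floats such as 0.1 and 0.3 rarely yields exactly 1.0.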

The solution in this post applies weighted fuzzy matching based on user input defined in another table.

Create a table for weight information

This reference table holds two columns: the table name and the column mapping with weights. The column mapping is held in a SUPER data type, which allows JSON semistructured data to be inserted and queried directly in Amazon Redshift. For examples of how to query semistructured data in Amazon Redshift, refer to Querying semistructured data.

In this example, we apply the largest weight to the column address_line1 (0.5) and the smallest weight to the city and postcode columns (0.1).

Using the Query Editor V2, create a new table in your Redshift Serverless endpoint and insert a record by doing the following:

- Run the following CREATE TABLE statement:

- Run the following INSERT statement:

- Confirm the mapping data has inserted into the table correctly by running the following query:

- To check that all weights for the customer table add up to 1 (100%), run the following query:

User-defined functions

With Amazon Redshift, you can create custom scalar user-defined functions (UDFs) using a Python program. A Python UDF incorporates a Python program that runs when the function is called and returns a single value. In addition to using the standard Python functionality, you can import your own custom Python modules, such as the module described earlier (TheFuzz).

In this solution, we create a Python UDF to take two input values and compare their similarity.

Import external Python libraries to Amazon Redshift

Run the following code snippet to import the TheFuzz module into Amazon Redshift as a new library. This makes the library available within Python UDFs in the Redshift Serverless endpoint. Make sure to provide the name of the S3 bucket you created earlier.

Create a Python user-defined function

Run the following code snippet to create a new Python UDF called unique_record. This UDF will do the following:

- Take two input values that can be of any data type as long as they are the same data type (such as two integers or two varchars).

- Import the newly created thefuzz Python library.

- Return an integer value comparing the partial ratio between the two input values.

You can test the function by running the following code snippet:

The result shows that these two strings have a similarity value of 91%.

Now that the Python UDF has been created, you can test the response of different input values.

Alternatively, you can follow the amazon-redshift-udfs GitHub repo to install the f_fuzzy_string_match Python UDF.

Stored procedures

Stored procedures are commonly used to encapsulate logic for data transformation, data validation, and business-specific logic. By combining multiple SQL steps into a stored procedure, you can reduce round trips between your applications and the database.

In this solution, we create a stored procedure that applies weighting to multiple columns. Because this logic is common and repeatable regardless of the source table or data, it allows us to create the stored procedure once and use it for multiple purposes.

Create a stored procedure

Run the following code snippet to create a new Amazon Redshift stored procedure called find_unique_id. This procedure will do the following:

- Take one input value. This value is the table you would like to create a golden record for (in our case, the customer table).

- Declare a set of variables to be used throughout the procedure.

- Check to see if weight data is in the staging table created in previous steps.

- Build a query string for comparing each column and applying weights using the weight data inserted in previous steps.

- For each record in the input table that doesn’t have a unique record ID (URID) yet, it will do the following:

  - Create a temporary table to stage results. This temporary table will have all potential URIDs from the input table.

  - Allocate a similarity value to each URID. This value specifies how similar this URID is to the record in question, weighted with the inputs defined.

  - Choose the closest matched URID, but only if there is a >90% match.

  - If there is no URID match, create a new URID.

  - Update the source table with the new URID and move to the next record.
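The loop above can be sketched in Python to show how the 90% threshold and the "only records without a URID" rule interact. This is a simplified, hypothetical model of the stored procedure, not its actual code: it compares each unassigned record with records that already have a URID, uses difflib's ratio in place of TheFuzz, and uses hypothetical weights. The sample rows come from the example tables earlier in this post.

```python
from difflib import SequenceMatcher

THRESHOLD = 90  # reuse an existing URID only on a >90% weighted match
# Hypothetical weights that sum to 1; the post reads its weights from a mapping table.
WEIGHTS = {"name": 0.3, "address_line1": 0.5, "city": 0.1, "postcode": 0.1}


def score(a, b, weights):
    """Weighted 0-100 similarity of two records, using difflib's ratio per column."""
    return sum(
        w * 100 * SequenceMatcher(None, str(a[col]), str(b[col])).ratio()
        for col, w in weights.items()
    )


def assign_urids(records, weights, threshold=THRESHOLD):
    """Give each record without a 'urid' the URID of its closest match, or a new one."""
    existing = [r["urid"] for r in records if r.get("urid") is not None]
    next_urid = max(existing, default=0) + 1
    for record in records:
        if record.get("urid") is not None:
            continue  # already allocated, so rerunning leaves it untouched
        candidates = [r for r in records if r is not record and r.get("urid") is not None]
        best = max(candidates, key=lambda r: score(record, r, weights), default=None)
        if best is not None and score(record, best, weights) > threshold:
            record["urid"] = best["urid"]
        else:
            record["urid"] = next_urid  # no close enough match: start a new identity
            next_urid += 1
    return records


def row(id_, name, address, city, postcode):
    return {"id": id_, "name": name, "address_line1": address, "city": city, "postcode": postcode}


customers = [
    row(1, "Cody Johnson", "8 Jeffrey Brace", "St. Lisatown", "2636"),
    row(6, "Michael Hunt", "8 Santana Rest", "St. Jessicamouth", "2964"),
    row(7, "Angela Watson", "3/752 Bernard Follow", "Janiceberg", "2995"),
    row(101, "Cody Jonson", "8 Jeffrey Brace", "St. Lisatown", "2636"),
    row(106, "Sarah Hunt", "8 Santana Rest", "St. Jessicamouth", "2964"),
    row(107, "Angela Watson", "752 Bernard Follow", "Janiceberg", "2995"),
    row(121, "Kody Johnson", "8 Jeffrey Brace", "St. Lisatown", "2636"),
]
for customer in assign_urids(customers, WEIGHTS):
    print(customer["id"], customer["urid"])
```

On these rows, the sketch groups IDs 1, 101, and 121 and IDs 7 and 107, while 6 and 106 get different URIDs. Calling it a second time changes nothing, because every record already has a URID.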

This procedure will only ever look for new URIDs for records that don’t already have one allocated. Therefore, rerunning the URID procedure multiple times will have no impact on the results.

Now that the stored procedure has been created, create the unique record IDs for the customer table by running the following in the Query Editor V2. This will update the urid column of the customer table.

When the procedure has completed its run, you can identify what duplicate customers were given unique IDs by running the following query:

From this you can see that IDs 1, 101, and 121 have all been given the same URID, as have IDs 7 and 107.

The procedure has also correctly identified that IDs 6 and 106 are different customers, and they therefore don’t have the same URID.

Clean up

To avoid incurring future recurring charges, delete all files in the S3 bucket you created. After you delete the files, go to the AWS CloudFormation console and delete the stack deployed in this post. This will delete all created resources.

Conclusion

In this post, we showed one approach to identifying imperfect duplicate records by applying a fuzzy matching algorithm in Amazon Redshift. This solution allows you to identify data quality issues and apply more accurate analytics to your dataset residing in Amazon Redshift.

We showed how you can use open-source Python libraries to create Python UDFs, and how to create a generic stored procedure to identify imperfect matches. This solution is extendable to provide any functionality required, including adding as a regular process in your ELT (extract, load, and transform) workloads.

Test the created procedure on your datasets to investigate the presence of any imperfect duplicates, and use the knowledge learned throughout this post to create stored procedures and UDFs to implement further functionality.

If you’re new to Amazon Redshift, refer to Getting started with Amazon Redshift for more information and tutorials on Amazon Redshift. You can also refer to the video Get started with Amazon Redshift Serverless for information on starting with Redshift Serverless.

About the Author

Sean Beath is an Analytics Solutions Architect at Amazon Web Services. He has experience in the full delivery lifecycle of data platform modernisation using AWS services and works with customers to help drive analytics value on AWS.

Extract data from SAP ERP using AWS Glue and the SAP SDK

Post Syndicated from Siva Manickam original https://aws.amazon.com/blogs/big-data/extract-data-from-sap-erp-using-aws-glue-and-the-sap-sdk/

This is a guest post by Siva Manickam and Prahalathan M from Vyaire Medical Inc.

Vyaire Medical Inc. is a global company, headquartered in suburban Chicago, focused exclusively on supporting breathing through every stage of life. Established from legacy brands with a 65-year history of pioneering breathing technology, the company’s portfolio of integrated solutions is designed to enable, enhance, and extend lives.

At Vyaire, our team of 4,000 pledges to advance innovation and evolve what’s possible to ensure every breath is taken to its fullest. Vyaire’s products are available in more than 100 countries and are recognized, trusted, and preferred by specialists throughout the respiratory community worldwide. Vyaire has a 65-year history of clinical experience and leadership, with over 27,000 unique products and 370,000 customers worldwide.

Vyaire Medical’s applications landscape has multiple ERPs, such as SAP ECC, JD Edwards, Microsoft Dynamics AX, SAP Business One, Pointman, and Made2Manage. Vyaire uses Salesforce as our CRM platform and the ServiceMax CRM add-on for managing field service capabilities. Vyaire developed a custom data integration platform, iDataHub, powered by AWS services such as AWS Glue, AWS Lambda, and Amazon API Gateway.

In this post, we share how we extracted data from SAP ERP using AWS Glue and the SAP SDK.

Business and technical challenges

Vyaire is working on deploying the field service management solution ServiceMax (SMAX, built natively on the Salesforce (SFDC) ecosystem), which offers features and services that help Vyaire’s Field Services team improve asset uptime with optimized in-person and remote service, boost technician productivity with the latest mobile tools, and deliver metrics for confident decision-making.

A major challenge with the ServiceMax implementation is building a data pipeline between the ERP and the ServiceMax application, specifically integrating pricing, orders, and primary data (product, customer) from SAP ERP into ServiceMax using Vyaire’s custom-built integration platform, iDataHub.

Solution overview

Vyaire’s iDataHub powered by AWS Glue has been effectively used for data movement between SAP ERP and ServiceMax.

AWS Glue is a serverless data integration service that makes it easy to discover, prepare, and combine data for analytics, machine learning (ML), and application development. It’s used in Vyaire’s Enterprise iDataHub Platform to facilitate data movement across different systems; however, this post focuses on the integration between SAP ERP and Salesforce SMAX.

The following diagram illustrates the integration architecture between Vyaire’s Salesforce ServiceMax and SAP ERP system.

In the following sections, we walk through setting up a connection to SAP ERP using AWS Glue and the SAP SDK through remote function calls. The high-level steps are as follows:

- Clone the PyRFC module from GitHub.

- Set up the SAP SDK on an Amazon Elastic Compute Cloud (Amazon EC2) machine.

- Create the PyRFC wheel file.

- Merge SAP SDK files into the PyRFC wheel file.

- Test the connection with SAP using the wheel file.

Prerequisites

For this walkthrough, you should have the following:

- An AWS account.

- The Linux version of NW RFC SDK from a SAP licensed source. For more information, refer to Download and Installation of NW RFC SDK.

- The AWS Command Line Interface (AWS CLI) configured. For instructions, refer to Configuration basics.

Clone the PyRFC module from GitHub

For instructions for creating and connecting to an Amazon Linux 2 AMI EC2 instance, refer to Tutorial: Get started with Amazon EC2 Linux instances.

We chose Amazon Linux on EC2 so that the SDK and PyRFC are compiled in a Linux environment that is compatible with AWS Glue.

At the time of writing this post, AWS Glue’s latest supported Python version is 3.7. Ensure that the Amazon EC2 Linux Python version and AWS Glue Python version are the same. In the following instructions, we install Python 3.7 in Amazon EC2; we can follow the same instructions to install future versions of Python.

- In the bash terminal of the EC2 instance, run the following command:

- Log in to the Linux terminal, install git, and clone the PyRFC module using the following commands:

Set up the SAP SDK on an Amazon EC2 machine

To set up the SAP SDK, complete the following steps:

- Download the nwrfcsdk.zip file from a licensed SAP source to your local machine.

- In a new terminal, run the following command on the EC2 instance to copy the nwrfcsdk.zip file from your local machine to the aws_to_sap folder.

- Unzip the nwrfcsdk.zip file in the current EC2 working directory and verify the contents:

unzip nwrfcsdk.zip

- Configure the SAP SDK environment variable SAPNWRFC_HOME and verify the contents:

Create the PyRFC wheel file

Complete the following steps to create your wheel file:

- On the EC2 instance, install Python modules cython and wheel for generating wheel files using the following command:

- Navigate to the PyRFC directory you created and run the following command to generate the wheel file:

Verify that the pyrfc-2.5.0-cp37-cp37m-linux_x86_64.whl wheel file is created in the PyRFC/dist folder, as in the following screenshot. Note that you may see a different wheel file name depending on the latest PyRFC version on GitHub.
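Because a wheel's file name encodes the CPython version it was compiled for (cp37 means CPython 3.7), a quick check of the name can catch the EC2/AWS Glue Python version mismatch warned about earlier before you upload anything. A minimal Python sketch following the PEP 427 naming convention; the helper names are hypothetical.

```python
def parse_wheel_filename(filename):
    """Split a wheel file name into its PEP 427 components."""
    if not filename.endswith(".whl"):
        raise ValueError(f"not a wheel file: {filename}")
    parts = filename[: -len(".whl")].split("-")
    if len(parts) == 6:  # optional build tag present
        name, version, build, python_tag, abi_tag, platform_tag = parts
    elif len(parts) == 5:
        name, version, python_tag, abi_tag, platform_tag = parts
        build = None
    else:
        raise ValueError(f"unexpected wheel file name: {filename}")
    return {"name": name, "version": version, "build": build,
            "python": python_tag, "abi": abi_tag, "platform": platform_tag}


def built_for(filename, major, minor):
    """True if the wheel's CPython tag matches the given Python version, e.g. (3, 7)."""
    # A python tag may list several tags separated by dots, such as "cp37.cp38".
    return f"cp{major}{minor}" in parse_wheel_filename(filename)["python"].split(".")


print(built_for("pyrfc-2.5.0-cp37-cp37m-linux_x86_64.whl", 3, 7))  # True
```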

Merge SAP SDK files into the PyRFC wheel file

To merge the SAP SDK files, complete the following steps:

- Unzip the wheel file you created:

- Copy the contents of lib (the SAP SDK files) to the pyrfc folder:

Now you can update the rpath of the SAP SDK binaries using the PatchELF utility, a simple utility for modifying existing ELF executables and libraries.

- Install the supporting dependencies (gcc, gcc-c++, python3-devel) for the Linux utility function PatchELF:

- Download and install PatchELF:

- Run patchelf:

- Update the wheel file with the modified pyrfc and dist-info folders:

- Copy the wheel file pyrfc-2.5.0-cp37-cp37m-linux_x86_64.whl from Amazon EC2 to Amazon Simple Storage Service (Amazon S3):

Test the connection with SAP using the wheel file

The following is working sample code to test the connectivity between the SAP system and AWS Glue using the wheel file.

- On the AWS Glue Studio console, choose Jobs in the navigation pane.

- Select Spark script editor and choose Create.

- Overwrite the boilerplate code with the following code on the Script tab:

- On the Job details tab, fill in mandatory fields.

- In the Advanced properties section, provide the S3 URI of the wheel file in the Job parameters section as a key value pair:

- Key – --additional-python-modules

- Value – s3://<bucket_name>/ec2-dump/pyrfc-2.5.0-cp37-cp37m-linux_x86_64.whl (provide your S3 bucket name)

- Key –

- Save the job and choose Run.
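As a rough idea of what such a connectivity test can look like, here is a hypothetical Python sketch. It uses PyRFC's Connection class and the standard RFC_READ_TABLE function module instead of the custom RFC the post's job calls; the connection parameters are placeholders, and pyrfc is imported inside the function so the parsing helper can be exercised without an SAP system.

```python
# Placeholder connection parameters (assumption); keep real credentials out of
# the script, for example in a secrets store.
SAP_CONN = {
    "ashost": "<sap-host>",
    "sysnr": "00",
    "client": "100",
    "user": "<user>",
    "passwd": "<password>",
}


def parse_read_table(result, delimiter="|"):
    """Convert an RFC_READ_TABLE result into a list of {field name: value} dicts."""
    names = [field["FIELDNAME"] for field in result["FIELDS"]]
    return [
        dict(zip(names, (value.strip() for value in row["WA"].split(delimiter))))
        for row in result["DATA"]
    ]


def read_sap_table(conn_params, table, fields, rows=5, delimiter="|"):
    """Open an RFC connection, read up to `rows` rows of `fields` from `table`, then close it."""
    from pyrfc import Connection  # provided by the wheel passed in --additional-python-modules

    conn = Connection(**conn_params)
    print(f"SAP Connection successful - connection object: {conn}")
    try:
        result = conn.call(
            "RFC_READ_TABLE",
            QUERY_TABLE=table,
            DELIMITER=delimiter,
            ROWCOUNT=rows,
            FIELDS=[{"FIELDNAME": name} for name in fields],
        )
    finally:
        conn.close()
    return parse_read_table(result, delimiter)
```

In the job script you would then call, for example, read_sap_table(SAP_CONN, "MARA", ["MATNR", "MTART"]) and print the returned rows. RFC_READ_TABLE returns each row in a 512-character work area, which is one reason to request only the fields you need.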

Verify SAP connectivity

Complete the following steps to verify SAP connectivity:

- When the job run is complete, navigate to the Runs tab on the Jobs page and choose Output logs in the logs section.

- Choose the job_id and open the detailed logs.

- Observe the message SAP Connection successful – connection object: <connection object>, which confirms a successful connection with the SAP system.

- Observe the message Successfully extracted data from SAP using custom RFC – Printing the top 5 rows, which confirms successful access of data from the SAP system.

Conclusion

AWS Glue facilitated the data extraction, transformation, and loading process from different ERPs into Salesforce SMAX, improving the visibility of Vyaire’s products and related information for service technicians and tech support users.

In this post, you learned how you can use AWS Glue to connect to SAP ERP utilizing SAP SDK remote functions. To learn more about AWS Glue, check out AWS Glue Documentation.

About the Authors

Siva Manickam is the Director of Enterprise Architecture, Integrations, Digital Research & Development at Vyaire Medical Inc. In this role, Mr. Manickam is responsible for the company’s corporate functions (Enterprise Architecture, Enterprise Integrations, Data Engineering) and product function (Digital Innovation Research and Development).

Prahalathan M is the Data Integration Architect at Vyaire Medical Inc. In this role, he is responsible for end-to-end enterprise solutions design, architecture, and modernization of integrations and data platforms using AWS cloud-native services.

Deenbandhu Prasad is a Senior Analytics Specialist at AWS, specializing in big data services. He is passionate about helping customers build modern data architecture on the AWS Cloud. He has helped customers of all sizes implement data management, data warehouse, and data lake solutions.

NVIDIA A16 with 4x Ampere 16GB GPUs Onboard Quick Look

Post Syndicated from John Lee original https://www.servethehome.com/nvidia-a16-with-4x-ampere-16gb-gpus-onboard-quick-look/

We take a look at the NVIDIA A16 GPU, which puts 4x low-power Ampere GPUs on a single PCIe card, each with 16GB of GDDR6.

The post NVIDIA A16 with 4x Ampere 16GB GPUs Onboard Quick Look appeared first on ServeTheHome.

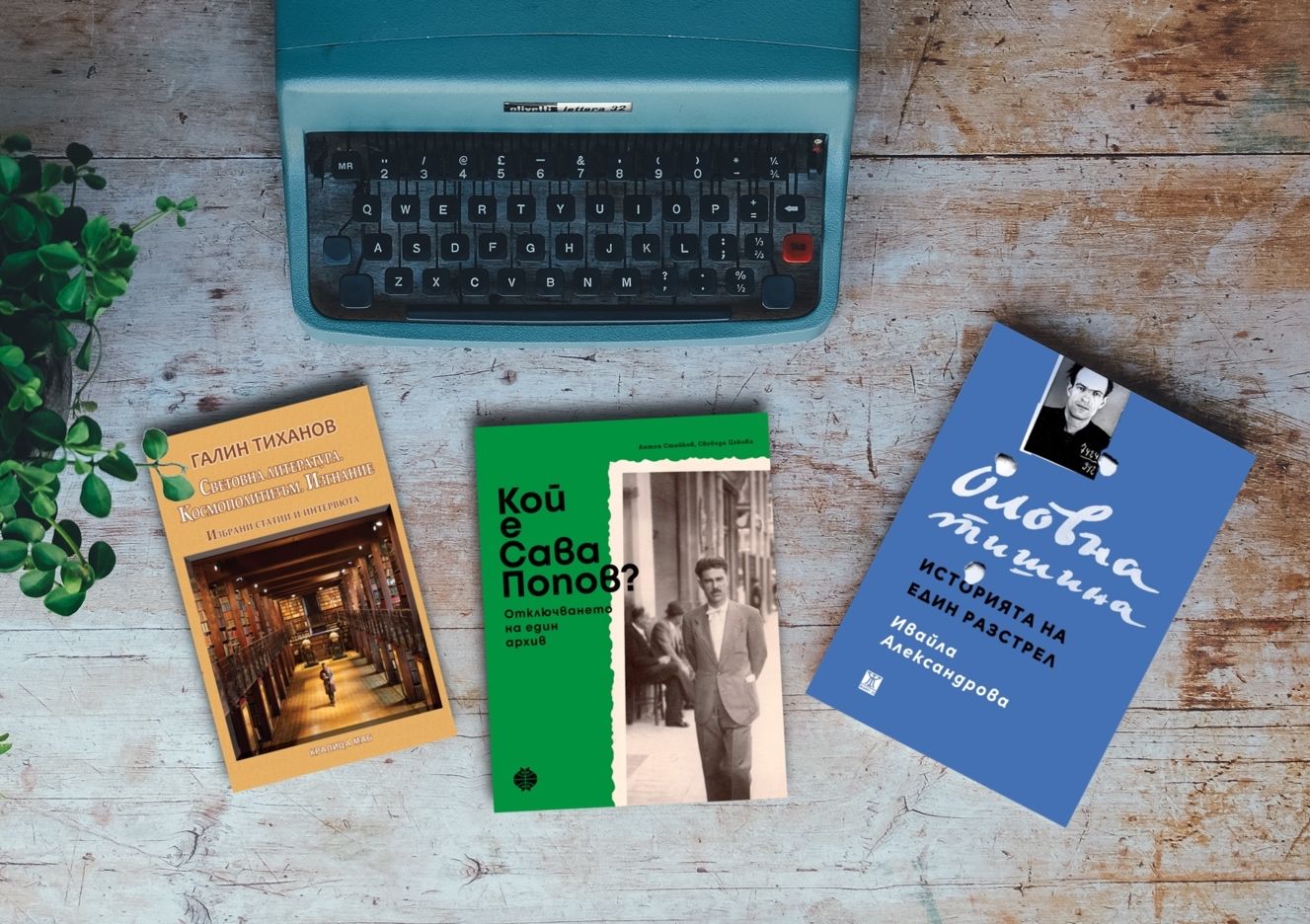

By the Letters: Staykov/Tsekova, Alexandrova, Tihanov

Post Syndicated from Zornitsa Hristova original https://www.toest.bg/po-bukvite-staykov-tsekova-alexandrova-tihanov/

A classroom. An inscription above the blackboard: “The wheel of history turns and will keep turning until the final victory of communism.” On the wall, since the school is “elite” and can afford murals, there are hexagonal cells with drawings from Bulgarian history. Levski swearing in fellow conspirators. The Flying Band. Paisiy. I don’t remember anything else.

We don't really know our history at all, because we are sated with its surrogate: the short ideological-historical narrative. That is why I chose two books that challenge this short (and wrong) version in the only possible way, with evidence. They are two stories "from the archives," each focused on a specific person, a writer, born at the very beginning of the last century. One of them is forgotten. The other is known to everyone, because he is tied to that same "wheel of history." Both books also have another, non-book existence: one as an exhibition, the other as a film.

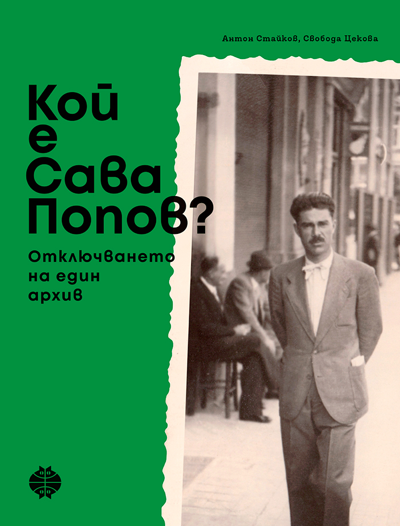

"Who Is Sava Popov? Unlocking an Archive" by Anton Staykov and Svoboda Tsekova

Gabrovo: Museum of Humor and Satire, 2022

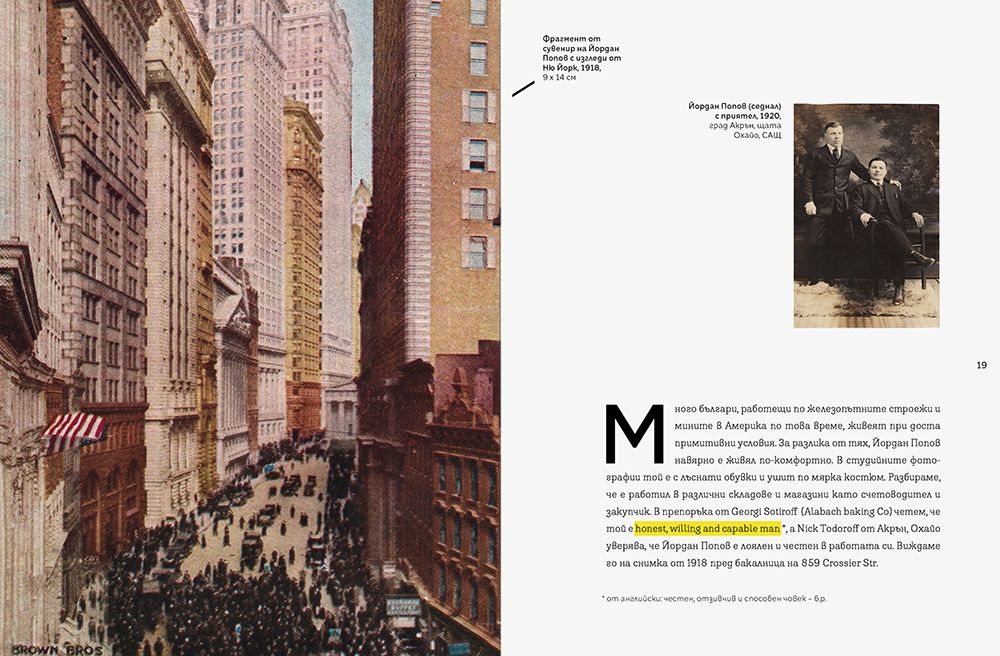

Sava Popov's story holds rich raw material for a novel. It is a classic novel, in which the hero starts from nothing and goes through many vicissitudes that at one point could have taken him to Venezuela and at another could have landed him in serious political trouble. The narrative sometimes leans on the familiar (the newspaper Starshel, the Hitar Petar stories) and sometimes shows a supposedly familiar world in a completely new light.

Anton Staykov and Svoboda Tsekova are not writing a novel. They present a documentary narrative drawn entirely from the writer's archive, with the appeal of authentic material but also with palpable storytelling skill. In fact, this kind of work with archives resembles collage in the visual arts: the end result depends on the taste and choices of the artist.

What kind of story emerges? The story of a person like us: not especially heroic or self-sacrificing, gifted with talent but without pretensions to romantic, titanic heights. And this story is adorned with facts that in themselves sound like literature.

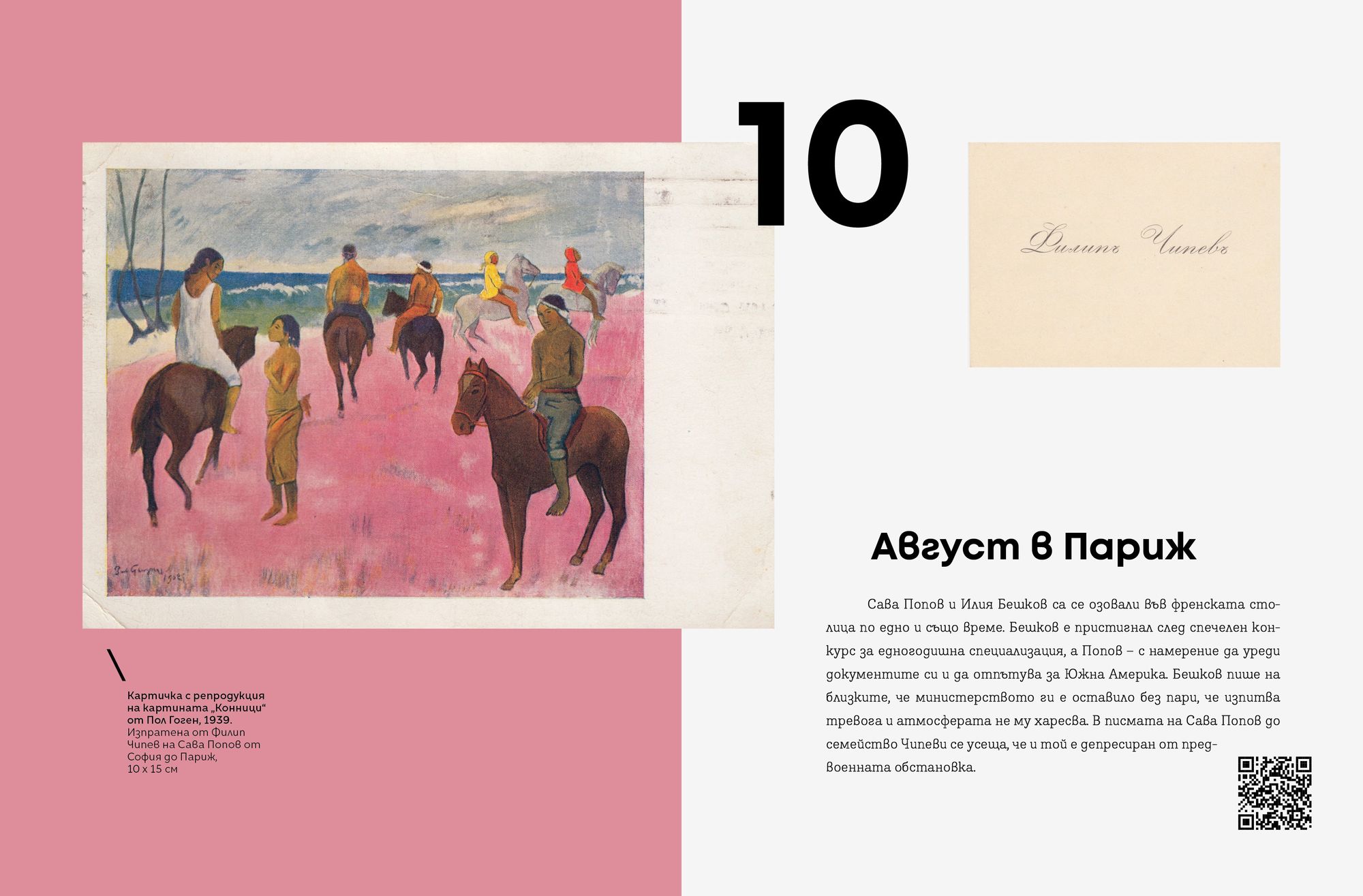

For a start, the titles from the Bulgarian temperance press of the 1930s have always provoked intemperate laughter in me. "Trezvache" (roughly, "Little Teetotaler")! Sava Popov, if only briefly, also had dealings with this kind of writing. Or take the people who toured the villages to lure would-be emigrants across the ocean, another splendid and little-studied subject. Sava Popov's father goes, and comes back. Or the humor press run by the authors themselves: Sava Popov founded the newspaper Starshel together with Iliya Beshkov, and at the time it lasted only a few months; Elin Pelin and Aleksandar Bozhinov, for their part, founded the children's paper Chavche, which lasted a year.

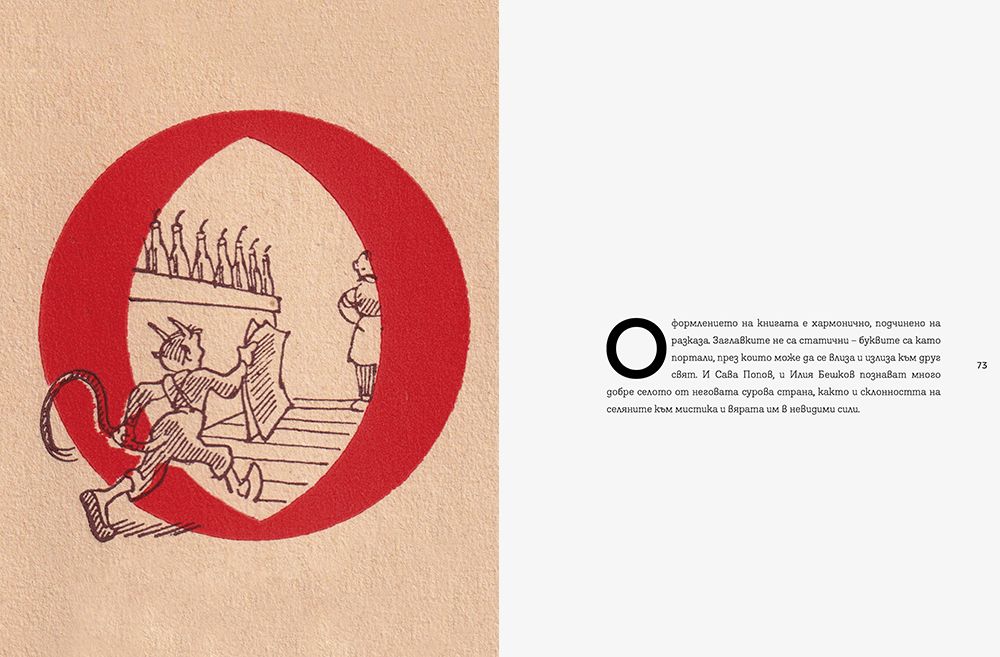

Svoboda Tsekova and Anton Staykov turn Sava Popov's archive into literary prose without altering it. The visible criterion for choosing what to include is either functional (what important thing it says about the protagonist) or artistic (how it enriches the reading, whether it is vivid, striking, and so on). A hidden echo can also be detected, for example between the father's grocery accounts and the son's bills for animal glue (after 9 September 1944, the son was forced to make a living by boiling glue). There is no wavering, however, in depicting the main trajectory of Sava Popov's life, compromises and all, nor in the close tracing of his books and their illustrations. The authors' comments on the illustrations are brief but expertly point the reader to what to notice: here the dynamism, there the static, "almost medieval" depiction; here the "rough" Stoyan Venev, there the calligraphic drawings of Iliya Beshkov.

Incidentally, Beshkov is also connected with what I consider the most powerful image in the book. Sava Popov goes to his old friend and asks him to illustrate Hitar Petar, but the ailing artist cannot get his old pen nib to draw properly. So he splits a matchstick, dips it in the ink, and with it draws the illustrations we all know.

"Who Is Sava Popov?" is a model of how to tell a story "from scratch," that is, on a subject unfamiliar to the reader. It shows how such a story can be made literary enough to be easy on the eye without sacrificing the truth to do so.

Today's second book is in a different position: that of a "counter-history," which has to contend with a dominant version imposed for years.

"Leaden Silence: The Story of an Execution" by Ivayla Alexandrova

Plovdiv: Janet 45 Publishing, 2022

If Sava Popov's story intrigues with its refusal of heroism and the predictability of human choice, the biography of the man at the center of "Leaden Silence" is its opposite. The story of Vaptsarov, familiar to us from those same classrooms, fits the typical template of the Bulgarian literary-historiographical narrative: the poet-revolutionary, self-sacrifice, and so on.

As I remember it, the template ran from Botev to Yavorov to Vaptsarov, and the communist ideal was presented as a mirror image of the national one. It is an extraordinarily durable myth. It is so durable that bringing it down to reality, which is what publishing the documents about its hero amounts to, provoked a preemptive and stormy reaction. At the Book Fair I witnessed a reader who was outraged, before she had even seen the book, that someone wanted to "take Vaptsarov away" from her. This is the other side of the short (and untrue) versions of history: they inevitably become entwined with a person's emotional relationship with themselves and with others. The very idea of parting with them can trigger a crisis, including an identity crisis.

"Leaden Silence" is not a trial of Vaptsarov, least of all as a poet.

The book is analogous to the one about Sava Popov, though much more imposing in size. Its main character, too, is swept along by the elemental force of his life's circumstances, despite the idea of the hero, of personal choice, of self-sacrifice. Or precisely because of them. For this paradigm of thinking can turn you into a victim faster than you ever imagined.

But Vaptsarov's options for a quiet life were few to begin with. Popov's father is a hardworking grocer who supports his family from the New World and, after returning, looks after its well-being at home. Vaptsarov's father, by contrast, is described as a "komita" (a revolutionary guerrilla), whatever that means. In practice, however, his figure is our first head-on encounter with violence in this book, because the testimonies of his fellow villagers describe him more as a cutthroat. A chain of guilt seems to spiral: Yonko Vaptsarov admits into his home the "White Guard officer" Myoler, who turns out to be a Red agent and sows a split in IMRO; to atone for this, Yonko (brutally) kills communists; to atone for his father's guilt, the son takes their side.

Meanwhile, communist operations in Bulgaria (especially the one in which Vaptsarov ultimately becomes involved) are planned from Moscow at the dictate of Georgi Dimitrov. Dimitrov lives in paranoid terror that Bulgarian political émigrés are plotting to kill him, and he regularly sends them to Siberia (see the harrowing memoirs of Blagoy Popov, his co-defendant at the Leipzig trial) or on doomed operations like this one. This is the book's real debunking. Its target is not Vaptsarov but the other "portrait on the wall": the author of the sentence about the wheel of history, the owner of a little notebook with Stalin's and Beria's phone numbers, the privileged participant in the harshest Stalinist repressions, including those against his own people, above all against his own. Recall Svetlana Alexievich's descriptions of the Stalinist camps, for example. Well, now link them directly to the portrait of Georgi Dimitrov.

Against the backdrop of all this cynicism, Vaptsarov seems to live in a movie, which is paradoxical given his poem "Cinema." He proposes to his wife that they be "like Lorca and Petrarch," "Yavorov and Lora," and so on. He hangs Yavorov's death mask above their marriage bed, and at their child's funeral he says: "… he would have become a shock worker…" Throughout the operation, Vaptsarov behaves like the hero of a resistance film: he uses no code name and gathers people in his own home… and, of course, he easily becomes a victim. After 9 September, he also becomes a myth convenient for that very same party machine.

From there begins the second layer that is hard to swallow. Posthumous immortalization may give him the poetic fame he dreamed of, but at what price? Your biography is rewritten, including what your son died of. Your family quarrels over the rights to your memory. Your poetry is pressed into the unambiguous service of the regime. Incidentally, the one thing this book does not touch is Vaptsarov's poetry. On the contrary, it reads as if written on the understanding that he is an important poet, and that this is precisely why his story must be known.

This is even more unmistakable in the film based on the same notes (Kostadin Bonev's "Five Stories About an Execution"), whose parts are separated by Vaptsarov's poems read by Tsvetana Maneva. What stands out in them in this reading is the emphasis on vulnerability. It seems to me that Vaptsarov is closest to the contemporary reader precisely in his vulnerability, in the existential uncertainty beneath the apparent categorical certainty of his words.

A few words about the length of this book. "Who Is Sava Popov?" can afford to be readable and "made to measure" in terms of reading time. It can follow the rules of good storytelling, because it tells an unfamiliar, new story. "Leaden Silence" functions as a folder of evidence presented in court. Had it been 100 pages long, it would have stood no chance against the long-standing tradition of teaching Vaptsarov in schools or against the established clichés about him. In its present form and size, the book's physical body is a visible, tangible objection. Whether it will actually change the "received knowledge" on the subject remains to be seen.

"World Literature. Cosmopolitanism. Exile" by Galin Tihanov

translated from English by Grisha Atanasov, Filip Stoilov, and Kiril Vasilev, with the participation of Marin Bodakov; Sofia: Kralitsa Mab, 2022

In both books discussed above, the characters are people… but also ideas, without which what happens cannot be understood. The history of ideas is the very core of the book by Prof. Galin Tihanov, a literary scholar and Fellow of the British Academy. If, however, we playfully treat an idea as a character, we find ourselves in a tragicomic plot of confused and swapped identities.

Take "cosmopolitanism." The word can conceal both the personal ethical choice to be a citizen of a polis coextensive with the world and the purely political idea of a world without wars, undivided into nations. Aha, so our character is at least a positive hero? Hannah Arendt did not think so. For her, a world without wars could be the world of a total police machine from which there is nowhere to escape. In another episode we see "cosmopolitanism" as a persecuted hero. In the USSR after the Second World War, when mere knowledge of life in Western and Central Europe was considered a threat to internal order, the word "cosmopolitan" was a humiliating and marginalizing stigma, including for scholars such as Eikhenbaum and Propp. And one more thing: we see our hero in symbiosis with its supposed antagonist, nationalism, not as primordial essences but as dangerously reversible modes of behavior.

In fact, every redefinition of the concept of cosmopolitanism also involves recalibrating the idea of nationalism, something whose political significance is becoming ever more evident.

Overall, the political potential of this book is more than evident. It is relevant to attempts to establish an identity midway between the national and the cosmopolitan (such as the EU) and to the context of Brexit (discussed in one of the interviews). More broadly, it is relevant to our world of what Prof. Tihanov aptly calls the commodification of difference.

Several times in the book, the discussion turns to legacy, including the legacy of literary theory, which is supposedly "dead" by now. How can that legacy be useful to us? What price will we pay if we give up using it? Probably the price of what happens when someone else manages the legacy of history. The Vaptsarov case shows this, as does, in a different key, the distortion of the images of the Russian Formalists during the Cold War that Galin Tihanov cites. In other words, an unused legacy will be appropriated, broken up, and used by others. Beyond all the practical consequences of this, I will quote Prof. Tihanov's words, cited by Marin Bodakov in the book's final interview:

A person carries their past life with them, and it is a matter of inner dignity to pass it on whole.

Active donors to Toest receive a permanent 20% discount on the cover price of all titles in the catalog of Janet 45 Publishing, as well as those of several other Bulgarian publishers, through the Toest Readers' Club partner program. For more information, see toest.bg/club.

In his emblematic column, begun back in 2008 in the newspaper Kultura, Marin Bodakov presented new literary titles and asked how exactly these books change us. We believe it is important for this column to continue. From person to person, with a new book in hand.

OMG – Tuya local HACS integration for Tuya devices in Home Assistant

Post Syndicated from BeardedTinker original https://www.youtube.com/watch?v=apWfjargTpA

Rustproofing Linux (nccgroup)

Post Syndicated from original https://lwn.net/Articles/922638/

The nccgroup blog is carrying a four-part series by Domen Puncer Kugler on how vulnerabilities can make their way into device drivers written in Rust.
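Some background on the CONFIG_INIT_STACK_ALL_ZERO warning in this item may help. In C kernel builds, that option has the compiler zero-initialize stack variables on function entry. This masks leaks of uninitialized bytes, such as struct padding copied out to user space. The user-space Rust sketch below is not taken from the nccgroup series, and the `Reply` struct and `encode` helper are hypothetical. It shows two things under that assumption. First, a `#[repr(C)]` struct carries padding bytes that no field assignment ever writes. Second, one defensive pattern is to serialize into a buffer that starts out zeroed, so the padding is zero by construction rather than by build option.

```rust
use std::mem::size_of;

// Hypothetical reply a driver might hand to user space (illustration only).
// With #[repr(C)], `tag` sits at offset 0 and `value` at offset 4 when u32
// is 4-byte aligned, leaving 3 padding bytes that no field write touches.
#[repr(C)]
struct Reply {
    tag: u8,
    value: u32,
}

// Number of padding bytes the compiler inserted into `Reply`.
fn padding_bytes() -> usize {
    size_of::<Reply>() - (size_of::<u8>() + size_of::<u32>())
}

// Serialize into a buffer that starts fully zeroed, so the padding slots
// are zero explicitly instead of relying on a compiler/kernel option.
fn encode(reply: &Reply) -> [u8; size_of::<Reply>()] {
    let mut buf = [0u8; size_of::<Reply>()];
    buf[0] = reply.tag;
    // Assumes `value` lives at offset 4, as in the repr(C) layout above.
    buf[4..8].copy_from_slice(&reply.value.to_ne_bytes());
    buf
}

fn main() {
    let reply = Reply { tag: 7, value: 0x0102_0304 };
    println!("size = {}, padding = {}", size_of::<Reply>(), padding_bytes());
    println!("bytes = {:02x?}", encode(&reply));
}
```

On a typical x86-64 target, this reports a size of 8 bytes, 3 of them padding. The design choice is to make the zeroing explicit in the code path that produces user-visible bytes, rather than depending on how the kernel happened to be configured.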

In other words, the CONFIG_INIT_STACK_ALL_ZERO build option does nothing for Rust code! Developers must be cautious to avoid shooting themselves in the foot when porting a driver from C to Rust, especially if they previously relied on this config option